1

Preliminaries: Software Testing

CONTENTS

1.1 Humans, errors, and testing

1.3 Requirements, behavior, and correctness

1.4 Correctness versus reliability

1.7 Software and hardware testing

1.10 Test generation strategies

The purpose of this introductory chapter is to familiarize the reader with the basic concepts related to software testing. Here a framework is set up for the remainder of this book. Specific questions, answered in substantial detail in subsequent chapters, are raised here. After reading this chapter, you are likely to be able to ask meaningful questions related to software testing and reliability.

1.1 Humans, Errors, and Testing

Errors are a part of our daily life. Humans make errors in their thoughts, in their actions, and in the products that might result from their actions. Errors occur almost everywhere. For example, humans make errors in speech, in medical prescription, in surgery, in driving, in observation, in sports, and certainly in love and software development. Table 1.1 provides examples of human errors. The consequences of human errors vary significantly. An error might be insignificant in that it leads to a gentle friendly smile, such as when a slip of the tongue occurs. Or, an error may lead to a catastrophe, such as when an operator fails to recognize that a relief valve on the pressurizer was stuck open and this resulted in a disastrous radiation leak.

Table 1.1 Examples of errors in various fields of human endeavor.

Area |

Error |

|---|---|

|

Hearing |

Spoken: He has a garage for repairing foreign cars. Heard: He has a garage for repairing falling cars. |

|

Medicine |

Incorrect antibiotic prescribed. |

|

Music performance |

Incorrect note played. |

|

Numerical analysis |

Incorrect algorithm for matrix inversion. |

|

Observation |

Operator fails to recognize that a relief valve is stuck open. |

|

Software |

Operator used: ≠, correct operator: >. Identifier used: new_line, correct identifier: next_line. Expression used: a∧(b∨c), correct expression: (a∧b)∨c. Data conversion from 64-bit floating point to 16-bit integer not protected (resulting in a software exception). |

|

Speech |

Spoken: waple malnut, intent: maple walnut. Spoken: We need a new refrigerator, intent: We need a new washing machine. |

|

Sports |

Incorrect call by the referee in a tennis match. |

|

Writing |

Written: What kind of pans did you use ? Intent: What kind of pants did you use ? |

Errors are a part of our daily life.

To determine whether there are any errors in our thought, actions, and the products generated, we resort to the process of testing. The primary goal of testing is to determine if the thoughts, actions, and products are as desired, that is, they conform to the requirements. Testing of thoughts is usually designed to determine if a concept or method has been understood satisfactorily. Testing of actions is designed to check if a skill that results in the actions has been acquired satisfactorily. Testing of a product is designed to check if the product behaves as desired. Note that both syntax and semantic errors arise during programming. Given that most modern compilers are able to detect syntactic errors, testing focuses on semantic errors, also known as faults, that cause the program under test to behave incorrectly.

The process of testing offers an opportunity to discover any errors in the product under test.

Example 1.1 An instructor administers a test to determine how well the students have understood what the instructor wanted to convey. A tennis coach administers a test to determine how well the understudy makes a serve. A software developer tests the program developed to determine if it behaves as desired. In each of these three cases there is an attempt by a tester to determine if the human thoughts, actions, and products behave as desired. Behavior that deviates from the desirable is possibly due to an error.

Example 1.2 “Deviation from the expected” may not be due to an error for one or more reasons. Suppose that a tester wants to test a program to sort a sequence of integers. The program can sort an input sequence in both descending or ascending orders depending on the request made. Now suppose that the tester wants to check if the program sorts an input sequence in ascending order. To do so, she types in an input sequence and a request to sort the sequence in descending order. Suppose that the program is correct and produces an output that is the input sequence in descending order.

Upon examination of the output from the program, the tester hypothesizes that the sorting program is incorrect. This is a situation where the tester made a mistake (an error) that led to her incorrect interpretation (perception) of the behavior of the program (the product).

1.1.1 Errors, faults, and failures

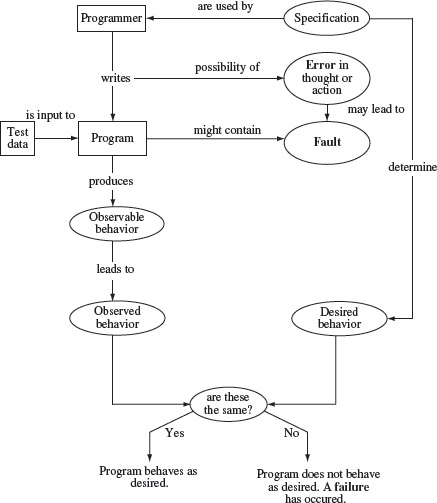

There is no widely accepted and precise definition of the term “error.” Figure 1.1 illustrates one class of meanings for the terms error, fault, and failure. A programmer writes a program. An error occurs in the process of writing a program. A fault is the manifestation of one or more errors. A failure occurs when a faulty piece of code is executed leading to an incorrect state that propagates to the program’s output. The programmer might misinterpret the requirements and consequently write incorrect code. Upon execution, the program might display behavior that does not match with the expected behavior implying thereby that a failure has ocurred. A fault in the program is also commonly referred to as a bug or a defect. The terms error and bug are by far the most common ways of referring to something “wrong” in the program text that might lead to a failure. In this text, we often use the terms “error” and “fault” as synonyms. Faults are sometimes referred to as defects.

Figure 1.1 Errors, faults, and failures in the process of programming and testing.

In Figure 1.1, notice the separation of “observable” from “observed” behavior. This separation is important because it is the observed behavior that might lead one to conclude that a program has failed. Certainly, as explained earlier, this conclusion might be incorrect due to one or more reasons.

1.1.2 Test automation

Testing of complex systems, embedded and otherwise, can be a human intensive task. Often one needs to execute thousands of tests to ensure that, for example, a change made to a component of an application does not cause a previously correct code to malfunction. Execution of many tests can be tiring as well error prone. Hence, there is a tremendous need for automating testing tasks.

Test automation aids in reliable and faster completion of routine tasks. However, not all tasks involved in testing are prone to automation.

Most software development organizations automate test related tasks such as regression testing, GUI testing, and I/O device driver testing. Unfortunately the process of test automation cannot be generalized. For example, automating regression tests for an embedded device such as a pacemaker is quite different from that for an I/O device driver that connects to the USB port of a PC. Such lack of generalization often leads to specialized test automation tools developed in-house.

Nevertheless, there do exist general purpose tools for test automation. While such tools might not be applicable in all test environments, they are useful in many. Examples of such tools include Eggplant, Marathon, and Pounder for GUI testing; eLoadExpert, DBMonster, JMeter, Dieseltest, WAPT, LoadRunner, and Grinder for performance or load testing; and Echelon, TestTube, WinRunner, and XTest for regression testing. Despite the existence of a large number and a variety of test automation tools, large software-development organizations develop their own test automation tools primarily due to the unique nature of their test requirements.

AETG is an automated test generator that can be used in a variety of applications. It uses combinatorial design techniques, which we will discuss in Chapter 6. Random testing is often used for the estimation of reliability of products with respect to specific events. For example, one might test an application using randomly generated tests to determine how frequently does it crash or hang. DART is a tool for automatically extracting an interface of a program and generating random tests. While such tools are useful in some environments, they are dependent on the programming language used and the nature of the application interface. Therefore, many organizations develop their own tools for random testing.

1.1.3 Developer and tester as two roles

In the context of software engineering, a developer is one who writes code and a tester is one who tests code. We prefer to treat developer and tester as two distinct but complementary roles. Thus, the same individual could be a developer and a tester. It is hard to imagine an individual who assumes the role of a developer but never that of a tester, and vice versa. In fact, it is safe to assume that a developer assumes two roles, that of a “developer” and of a “tester,” though at different times. Similarly, a tester also assumes the same two roles but at different times.

A developer is also a tester and vice-versa.

Certainly, within a software development organization, the primary role of an individual might be to test and hence this individual assumes the role of a “tester.” Similarly, the primarily role of an individual who designs applications and writes code is that of a “developer.”

A reference to “tester” in this book refers to the role someone assumes when testing a program. Such an individual could be a developer testing a class she has coded, or a tester who is testing a fully-integrated set of components. A “programmer” in this book refers to an individual who engages in software development and often assumes the role of a tester, at least temporarily. This also implies that the contents of this book are valuable not only to those whose primary role is that of a tester, but also to those who work as developers.

1.2 Software Quality

We all want high-quality software. There exist several definitions of software quality. Also, one quality attribute might be more important to a user than another. In any case, software quality is a multidimensional quantity and is measurable. So, let us look at what defines the quality of a software.

1.2.1 Quality attributes

There exist several measures of software quality. These can be divided into static and dynamic quality attributes. Static quality attributes refer to the actual code and related documentation. Dynamic quality attributes relate to the behavior of the application while in use.

Static quality attributes include structured, maintainable, testable code as well as the availability of correct and complete documentation. You might have come across complaints such as “Product X is excellent, I like the features it offers, but its user manual stinks!” In this case, the user manual brings down the overall product quality. If you are a maintenance engineer and have been assigned the task of doing corrective maintenance on an application code, you will most likely need to understand portions of the code before you make any changes to it. This is where attributes such as code documentation, understandability, and structure come into play. A poorly-documented piece of code will be harder to understand and hence difficult to modify. Further, poorly-structured code might be harder to modify and difficult to test.

Dynamic quality attributes include software reliability, correctness, completeness, consistency, usability, and performance. Reliability refers to the probability of failure-free operation and is considered in the following section. Correctness refers to the correct operation of an application and is always with reference to some artifact. For a tester, correctness is with respect to the requirements; for a user, it is often with respect to a user manual.

Dynamic quality attributes are generally determined through multiple executions of a program. Correctness is one such attribute though one can rarely determine the correctness of a software application via testing.

Completeness refers to the availability of all features listed in the requirements, or in the user manual. An incomplete software is one that does not fully implement all features required. Of course, one usually encounters additional functionality with each new version of an application. This does not mean that a given version is incomplete because its next version has few new features. Completeness is defined with respect to a set of features that might itself be a subset of a larger set of features that are to be implemented in some future version of the application. Of course, one can easily argue that every piece of software that is correct is also complete with respect to some feature set.

Completeness refers to the availability in software of features planned and their correct implementation. Given the near impossibility of exhaustive testing, completeness is often a subjective measure.

Consistency refers to adherence to a common set of conventions and assumptions. For example, all buttons in the user interface might follow a common color coding convention. An example of inconsistency would be when a database application displays the date of birth of a person in the database; however, the date of birth is displayed in different formats, without any regard for the user’s preferences, depending on which feature of the database is used.

Usability refers to the ease with which an application can be used. This is an area in itself and there exist techniques for usability testing. Psychology plays an important role in the design of techniques for usability testing. Usability testing also refers to the testing of a product by its potential users. The development organization invites a selected set of potential users and asks them to test the product. Users in turn test for ease of use, functionality as expected, performance, safety, and security. Users thus serve as an important source of tests that developers or testers within the organization might not have conceived. Usability testing is sometimes referred to as user-centric testing.

Performance refers to the time the application takes to perform a requested task. Performance is considered as a non-functional requirement. It is specified in terms such as “This task must be performed at the rate of X units of activity in one second on a machine running at speed Y, having Z gigabytes of memory.” For example, the performance requirement for a compiler might be stated in terms of the minimum average time to compile of a set of numerical applications.

1.2.2 Reliability

People want software that functions correctly every time it is used. However, this happens rarely, if ever. Most softwares that are used today contain faults that cause them to fail on some combination of inputs. Thus, the notion of total correctness of a program is an ideal and applies mostly to academic and textbook programs.

Correctness and reliability are two dynamic attributes of software. Reliability can be considered as a statistical measure of correctness.

Given that most software applications are defective, one would like to know how often a given piece of software might fail. This question can be answered, often with dubious accuracy, with the help of software reliability, hereafter referred to as reliability. There are several definitions of software reliability, a few are examined below.

ANSI/IEEE STD 729-1983: RELIABILITY

Software reliability is the probability of failure-free operation of software over a given time interval and under given conditions.

The probability referred to in the definition above depends on the distribution of the inputs to the program. Such input distribution is often referred to as operational profile. According to this definition, software reliability could vary from one operational profile to another. An implication is that one user might say “this program is lousy” while another might sing praise for the same program. The following is an alternate definition of reliability.

Reliability

Software reliability is the probability of failure-free operation of software in its intended environment.

This definition is independent of “who uses what features of the software and how often.” Instead, it depends exclusively on the correctness of its features. As there is no notion of operational profile, the entire input domain is considered as uniformly distributed. The term “environment” refers to the software and hardware elements needed to execute the application. These elements include the operating system, hardware requirements, and any other applications needed for communication.

Both definitions have their pros and cons. The first of the two definitions above requires the knowledge of the use profile of its users, which might be difficult or impossible to estimate accurately. However, if an operational profile can be estimated for a given class of users, then an accurate estimate of the reliability can be found for this class of users. The second definition is attractive in that one needs only one number to denote the reliability of a software application that is applicable to all its users. However, such estimates are difficult to arrive at.

1.3 Requirements, Behavior, and Correctness

Products, software in particular, are designed in response to requirements. Requirements specify the functions that a product is expected to perform. Once the product is ready, it is the requirements that determine the expected behavior. Of course, during the development of the product, the requirements might have changed from what was stated originally. Regardless of any change, the expected behavior of the product is determined by the tester’s understanding of the requirements during testing.

Example 1.3 Here are the two requirements, each of which leads to a different program.

Requirement 1:

It is required to write a program that inputs two integers and outputs the maximum of these.

Requirement 2:

It is required to write a program that inputs a sequence of integers and outputs the sorted version of this sequence.

Suppose that program max is developed to satisfy Requirement 1 above. The expected output of max when the input integers are 13 and 19, can be easily determined to be 19. Now suppose that the tester wants to know if the two integers are to be input to the program on one line followed by a carriage return, or on two separate lines with a carriage return typed in after each number. The requirement as stated above fails to provide an answer to this question. This example illustrates the incompleteness of Requirement 1.

The second requirement in the above example is ambiguous. It is not clear from this requirement whether the input sequence is to be sorted in ascending or descending order. The behavior of the sort program, written to satisfy this requirement, will depend on the decision taken by the programmer while writing sort.

Testers are often faced with incomplete and/or ambiguous requirements. In such situations, a tester may resort to a variety of ways to determine what behavior to expect from the program under test. For example, forth above program max, one way to determine how the input should be typed in is to actually examine the program text. Another way is to ask the developer of max as to what decision was taken regarding the sequence in which the inputs are to be typed in. Yet another method is to execute max on different input sequences and determine what is acceptable to max.

Regardless of the nature of the requirements, testing requires the determination of the expected behavior of the program under test. The observed behavior of the program is compared with the expected behavior to determine if the program functions as desired.

1.3.1 Input domain

A program is considered correct if it behaves as desired on all possible test inputs. Usually, the set of all possible inputs is too large for the program to be executed on each input. For example, suppose that the max program above is to be tested on a computer in which the integers range from –32,768 to 32,767. To test max on all possible integers would require it to be executed on all pairs of integers in this range. This will require a total of 232 executions of max. It will take approximately 4.3 seconds to complete all executions assuming that testing is done on a computer that will take 1 nanosecond (=10–9 seconds), to input a pair of integers, execute max, and check if the output is correct. Testing a program on all possible inputs is known as exhaustive testing.

According to one view, the input domain of a program consists of all possible inputs as derived from the program specification. According to another view, it is the set of all possible inputs that a program could be subjected, i.e., legal and illegal inputs.

A tester often needs to determine what constitutes “all possible inputs.” The first step in determining all possible inputs is to examine the requirements. If the requirements are complete and unambiguous, it should be possible to determine the set of all possible inputs. A definition is in order before we provide an example to illustrate how to determine the set of all program inputs.

Input domain

The set of all possible inputs to a program Ρ is known as the input domain, or input space, of P.

Example 1.4 Using Requirement 1 from Example 1.3, we find the input domain of max to be the set of all pairs of integers where each element in the pair is in the range –32,768 till 32,767.

Example 1.5 Using Requirement 2 from Example 1.3, it is not possible to find the input domain for the sort program. Let us therefore assume that the requirement was modified to be the following:

Based on the above modified requirement, the input domain for sort is a set of pairs. The first element of the pair is a character. The second element of the pair is a sequence of zero or more integers ending with a period. For example, following are three elements in the input domain of sort:

< A -3 15 12 55.>

< D 23 78.>

< A .>

The first element contains a sequence of four integers to be sorted in ascending order, the second element has a sequence to be sorted in descending order, and the third element has an empty sequence to be sorted in ascending order.

We are now ready to give the definition of program correctness.

Correctness

A program is considered correct if it behaves as expected on each element of its input domain.

1.3.2 Specifying program behavior

There are several ways to define and specify program behavior. The simplest way is to specify the behavior in a natural language such as English. However, this is more likely subject to multiple interpretations than a more formally specified behavior. Here, we explain how the notion of program “state” can be used to define program behavior and how the “state transition diagram,” or simply “state diagram,” can be used to specify program behavior.

A collection of the current values of program variables and the location of control, is considered as a state vector for that program.

The “state” of a program is the set of current values of all its variables and an indication of which statement in the program is to be executed next. One way to encode the state is by collecting the current values of program variables into a vector known as the “state vector.” An indication of where the control of execution is at any instant of time can be given by using an identifier associated with the next program statement. In the case of programs in assembly language, the location of control can be specified more precisely by giving the value of the program counter.

Each variable in the program corresponds to one element of this vector. Obviously, for a large program, such as the Unix operating system, the state vector might have thousands of elements. Execution of program statements causes the program to move from one state to the next. A sequence of program states is termed as program behavior.

Example 1.6 Consider a program that inputs two integers into variables X and Y, compares these values, sets the value of Ζ to the larger of the two, displays the value of Ζ on the screen, and exits. Program P1.1 shows the program skeleton. The state vector for this program consists of four elements. The first element is the statement identifier where the execution control is currently at. The next three elements are, respectively, the values of the three variables X, Y, and Z.

Program P1.1

1 integer X, Y, Z;

2 input (X, Y);

3 if (X < Y)

4 {Z=Y;}

5 else

6 {Z=X;}

7 endif

8 output (Z);

9 end

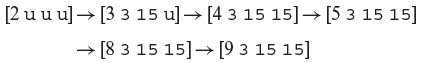

The letter u as an element of the state vector stands for an “undefined” value. The notation si → sj is an abbreviation for “The program moves from state si to sj.” The movement from si to sj is caused by the execution of the statement whose identifier is listed as the first element of state si. A possible sequence of states that the max program may go through is given below.

Upon the start of its execution, a program is in an “initial state.” A (correct) program terminates in its “final state.” All other program states are termed as “intermediate states.” In Example 1.6, the initial state is [2 u u u], the final state is [9 3 15 15], and there are four intermediate states as indicated.

Program behavior can be modeled as a sequence of states. With every program one can associate one or more states that need to be observed to decide whether or not the program behaves according to its requirements. In some applications it is only the final state that is of interest to the tester. In other applications a sequence of states might be of interest. More complex patterns might also be needed.

A sequence of states is representative of program behavior.

Example 1.7 For the max program (P1.1), the final state is sufficient to decide if the program has successfully determined the maximum of two integers. If the numbers input to max are 3 and 15, then the correct final state is [9 3 15 15]. In fact, it is only the last element of the state vector, 15, which may be of interest to the tester.

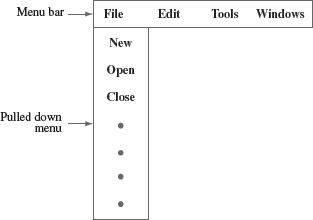

Example 1.8 Consider a menu-driven application named myapp. Figure 1.2 shows the menu bar for this application. It allows a user to position and click the mouse on any one of a list of menu items displayed in the menu bar on the screen. This results in the “pulling down” of the menu and a list of options is displayed on the screen. One of the items on the menu bar is labeled File. When File is pulled down, it displays Open as one of several options. When the Open option is selected, by moving the cursor over it, it should be highlighted. When the mouse is released, indicating that the selection is complete, a window displaying names of files in the current directory should be displayed.

Figure 1.2 Menu bar displaying four menu items when application myapp is started.

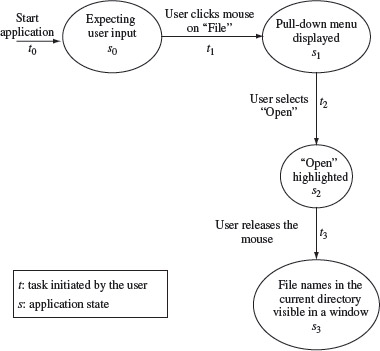

Figure 1.3 depicts the sequence of states that myapp is expected to enter when the user actions described above are performed. When started, the application enters the initial state wherein it displays the menu bar and waits for the user to select a menu item. This state diagram depicts the expected behavior of myapp in terms of a state sequence. As shown in Figure 1.3, myapp moves from state s0 to state s3 after the sequence of actions t0, t1, t2, and t3 has been applied. To test myapp, the tester could apply the sequence of actions depicted in this state diagram and observe if the application enters the expected states.

Figure 1.3 A state sequence for myapp showing how the application is expected to behave when the user selects the open option under the File menu item.

As you might observe from Figure 1.3, a state sequence diagram can be used to specify the behavioral requirements of an application. This same specification can then be used during testing to ensure if the application conforms to the requirements.

1.3.3 Valid and invalid inputs

In the examples above, the input domains are derived from the requirements. However, due to the incompleteness of requirements, one might have to think a bit harder to determine the input domain. To illustrate why, consider the modified requirement in Example 1.5. The requirement mentions that the request characters can be “A” or “D”, but it fails to answer the question “What if the user types a different character ?” When using sort it is certainly possible for the user to type a character other than “A” or “D”. Any character other than “A” or “D” is considered as invalid input to sort. The requirement for sort does not specify what action it should take when an invalid input is encountered.

A program ought to be tested based on representatives from the set of valid as well as invalid inputs. The latter set is used to determine the robustness of a program.

Identifying the set of invalid inputs and testing, the program against these inputs is an important part of the testing activity. Even when the requirements fail to specify the program behavior on invalid inputs, the programmer does treat these in one way or another. Testing a program against invalid inputs might reveal errors in the program.

Example 1.9 Suppose that we are testing the sort program. We execute it against the following input: < Ε 7 19 . >. The requirements in Example 1.5 are insufficient to determine the expected behavior of sort on the above input. Now suppose that upon execution on the above input, the sort program enters into an infinite loop and neither asks the user for any input nor responds to anything typed by the user. This observed behavior points to a possible error in sort.

The argument above can be extended to apply to the sequence of integers to be sorted. The requirements for the sort program do not specify how the program should behave if, instead of typing an integer, a user types in a character, such as “?”. Of course, one would say, the program should inform the user that the input is invalid. But this expected behavior from sort needs to be tested. This suggests that the input domain for sort should be modified.

Example 1.10 Considering that sort may receive valid and invalid inputs, the input domain derived in Example 1.5 needs modification. The modified input domain consists of pairs of values. The first value in the pair is any ASCII character that can be typed by a user as a request character. The second element of the pair is a sequence of integers, interspersed with invalid characters, terminated by a period. Thus, for example, the following are sample elements from the modified input domain:

< A 7 19.>

< D 7 9F 19.>

In the example above, we assumed that invalid characters are possible inputs to the sort program. This, however, may not be the case in all situations. For example, it might be possible to guarantee that the inputs to sort will always be correct as determined from the modified requirements in Example 1.5. In such a situation, the input domain need not be augmented to account for invalid inputs if the guarantee is to be believed by the tester.

In cases where the input to a program is not guaranteed to be correct, it is convenient to partition the input domain into two subdomains. One subdomain consists of inputs that are valid and the other consists of inputs that are invalid. A tester can then test the program on selected inputs from each subdomain.

1.4 Correctness Versus Reliability

1.4.1 Correctness

Though correctness of a program is desirable, it is almost never the objective of testing. To establish correctness via testing would imply testing a program on all elements in the input domain. In most cases that are encountered in practice, this is impossible to accomplish. Thus, correctness is established via mathematical proofs of programs. A proof uses the formal specification of requirements and the program text to prove or disprove that the program will behave as intended. While a mathematical proof is precise, it too is subject to human errors. Even when the proof is carried out by a computer, the simplification of requirements specification and errors in tasks that are not fully automated might render the proof useless.

Program correctness is established via mathematical proofs. Program reliability is established via testing. However, neither approach is foolproof.

While correctness attempts to establish that the program is error free, testing attempts to find if there are any errors in it. Completeness of testing does not necessarily demonstrate that a program is error free. However, as testing progresses, errors might be revealed. Removal of errors from the program usually improves the chances, or the probability, of the program executing without any failure. Also, testing, debugging, and the error removal processes together increase our confidence in the correct functioning of the program under test.

Testing a program for completeness does not ensure its correctness.

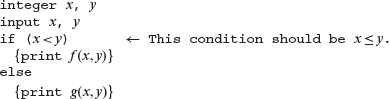

Example 1.11 This example illustrates why the probability of program failure might not change upon error removal. Consider the following program that inputs two integers x and y and prints the value of f (x, y) or g(x, y) depending on the condition x < y.

The above program uses two functions f and g not defined here. Let us suppose that function f produces an incorrect result whenever it is invoked with x = y and that f (x, y) ≠ g(x, y), x = y. In its present form, the program fails when tested with equal input values because function g is invoked instead of function f. When the error is removed by changing the condition x < y to x ≤ y, the program fails again when the input values are the same. The latter failure is due to the error in function f. In this program, when the error in f is also removed, the program will be correct assuming that all other code is correct.

1.4.2 Reliability

The probability of a program failure is captured more formally in the term “reliability.” Consider the second of the two definitions examined earlier: “The reliability of a program Ρ is the probability of its successful execution on a randomly selected element from its input domain.”

Reliability is a statistical attribute. According to one view, it is the probability of failure free execution of a program under given conditions.

A comparison of program correctness and reliability reveals that while correctness is a binary metric, reliability is a continuous metric over a scale from 0 to 1. A program can be either correct or incorrect; its reliability can be anywhere between 0 and 1. Intuitively, when an error is removed from a program, the reliability of the program so obtained is expected to be higher than that of the one that contains the error. As illustrated in the example above, this may not be always true. The next example illustrates how to compute program reliability in a simplistic manner.

Example 1.12 Consider a program Ρ that takes a pair of integers as input. The input domain of this program is the set of all pairs of integers. Suppose now that in actual use there are only three pairs that will be input to P. These are

{<(0, 0) (–l, l) (l, –l)>}

The above set of three pairs is a subset of the input domain of Ρ and is derived from a knowledge of the actual use of P, and not solely from its requirements.

Suppose also that each pair in the above set is equally likely to occur in practice. If it is known that Ρ fails on exactly one of the three possible input pairs then the frequency with which Ρ will function correctly is

This number is an estimate of the probability of the successful operation of Ρ and hence is the reliability of P.

1.4.3 Operational profiles

As per the definition above, the reliability of a program depends on how it is used. Thus, in Example 1.12, if Ρ is never executed on input pair (0, 0), then the restricted input domain becomes {< (–1, 1) (1, –1) >} and the reliability of Ρ is 1. This leads us to the definition of operational profile.

An operational profile is a statistical summary of how a program would be used in practice.

Operational profile

An operational profile is a numerical description of how a program is used.

In accordance with the above definition, a program might have several operational profiles depending on its users.

Example 1.13 Consider a sort program that, on any given execution, allows any one of two types of input sequences. One sequence consists of numbers only and the other consists of alphanumeric strings. One operational profile for sort is specified as follows:

Operational profile #1

Sequence

Probability

Numbers only

0.9

Alphanumeric strings

0.1

Another operational profile for sort is specified as follows:

Operational profile #2

Sequence

Probability

Numbers only

0.1

Alphanumeric strings

0.9

The two operational profiles above suggest significantly different uses of sort. In one case, it is used mostly for sorting sequences of numbers, and in the other case, it is used mostly for sorting alphanumeric strings.

1.5 Testing and Debugging

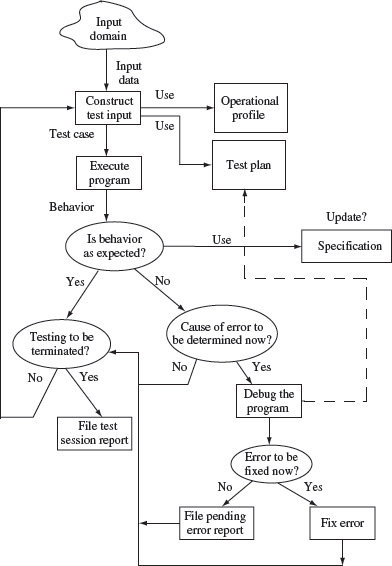

Testing is the process of determining if a program behaves as expected. In the process, one may discover errors in the program under test. However, when testing reveals an error, the process used to determine the cause of this error and to remove it is known as debugging. As illustrated in Figure 1.4, testing and debugging are often used as two related activities in a cyclic manner.

Figure 1.4 A test and debug cycle.

Testing and debugging are two distinct though intertwined activities. Testing generally leads to debugging though both activities might not be always performed by the same individual.

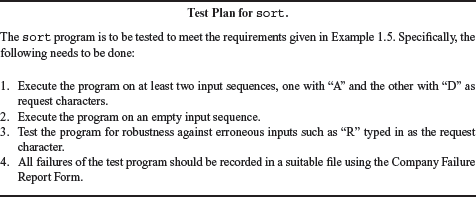

1.5.1 Preparing a test plan

A test cycle is often guided by a test plan. When relatively small programs are being tested, a test plan is usually informal and in the tester’s mind, or there may be no plan at all. A sample test plan for testing the sort program is shown in Figure 1.5.

Figure 1.5 A sample test plan for the sort program.

The sample test plan in Figure 1.5 is often augmented by items such as the method used for testing, method for evaluating the adequacy of test cases, and method to determine if a program has failed or not.

1.5.2 Constructing test data

A test case is a pair of input data and the corresponding program output. The test data are a set of values: one for each input variable. A test set is a collection of test cases. A test set is sometimes referred to as a test suite. The notion of “one execution” of a program is rather tricky and is elaborated later in this chapter. Test data is an alternate term for test set.

A test case is a pair of input data and the corresponding program output; a test set is a collection of test cases.

Program requirements and the test plan help in the construction of test data. Execution of the program on test data might begin after all or a few test cases have been constructed. While testing, relatively small programs testers often generate a few test cases and execute the program against these. Based on the results obtained, the tester decides whether to continue the construction of additional test cases or to enter the debugging phase.

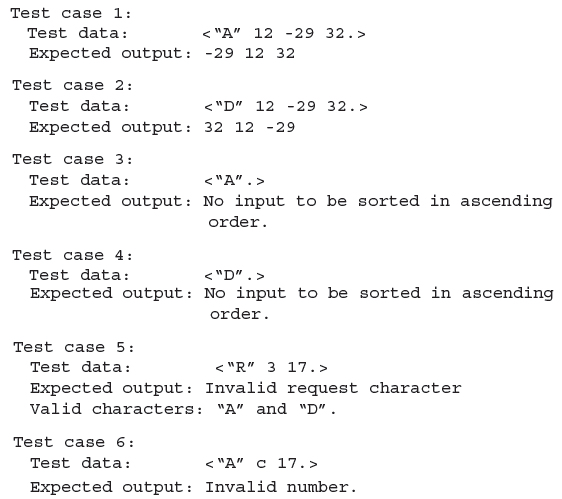

Example 1.14 The following test cases are generated for the sort program using the test plan in Figure 1.5.

Test cases 1 and 2 are derived in response to item 1 in the test plan; 3 and 4 are in response to item 2. Notice that we have designed two test cases in response to item 2 of the test plan even though the plan calls for only 1 test case. Notice also that the requirements for the sort program as in Example 1.5 do not indicate what should be the output of sort when there is nothing to be sorted. We therefore took an arbitrary decision while composing the “Expected output” for an input that has no numbers to be sorted. Test cases 5 and 6 are in response to item 3 in the test plan.

As is evident from the above example, one can select a variety of test sets to satisfy the test plan requirements. Questions such as “Which test set is the best?” and “Is a given test set adequate?” are answered in Part III of this book.

1.5.3 Executing the program

Execution of a program under test is the next significant step in testing. Execution of this step for the sort program is most likely a trivial exercise. However, this may not be so for large and complex programs. For example, to execute a program that controls a digital cross connect switch used in telephone networks, one may first need to follow an elaborate procedure to load the program into the switch and then yet another procedure to input the test cases to the program. Obviously, the complexity of actual program execution is dependent on the program itself.

A test harness is an aid to testing a program.

Often a tester might be able to construct a test harness to aid in program execution. The harness initializes any global variables, inputs a test case, and executes the program. The output generated by the program may be saved in a file for subsequent examination by a tester. The next example illustrates a simple test harness.

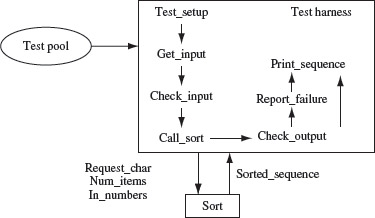

Example 1.15 The test harness in Figure 1.6 reads an input sequence, checks for its correctness, and then calls sort. The sorted array sorted_sequence returned by sort is printed using the print_sequence procedure. Test cases are assumed to be available in the Test pool file shown in the figure. In some cases, the tests might be generated from within the harness.

Figure 1.6 A simple test harness to test the sort program.

In preparing this test harness we assume that (a) sort is coded as a procedure, (b) the get_input procedure reads the request character and the sequence to be sorted into variables request_char, num_items, and in_numbers, and (c) the input is checked prior to calling sort by the check_input procedure.

The test_setup procedure is usually invoked first to set up the test that, in this example, includes identifying and opening the file containing tests. The check_output procedure serves as the oracle that checks if the program under test behaves correctly. The report_failure procedure is invoked in case the output from sort is incorrect. A failure might be simply reported via a message on the screen or saved in a test report file (not shown in Figure 1.6). The print_sequence procedure prints the sequence generated by the sort program. The output generated by print_sequence can also be piped into a file for subsequent examination.

1.5.4 Assessing program correctness

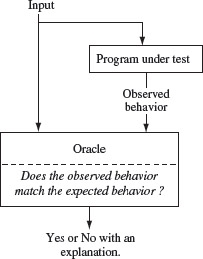

An important step in testing a program is the one wherein the tester determines if the observed behavior of the program under test is correct or not. This step can be further divided into two smaller steps. In the first step one observes the behavior and in the second step analyses the observed behavior to check if it is correct or not. Both these steps can be trivial for small programs, such as for max in Example 1.3, or extremely complex as in the case of a large distributed software system. The entity that performs the task of checking the correctness of the observed behavior is known as an oracle. Figure 1.7 shows the relationship between the program under test and the oracle.

Figure 1.7 Relationship between the program under test and the oracle. The output from an oracle can be binary such as “Yes” or “No” or more complex such as an explanation as to why the oracle finds the observed behavior to be same or different from the expected behavior.

A tester often assumes the role of an oracle and thus serves as a “human oracle.” For example, to verify if the output of a matrix multiplication program is correct or not, a tester might input two 2 × 2 matrices and check if the output produced by the program matches the results of hand calculation. As another example, consider checking the output of a text processing program. In this case, a human oracle might visually inspect the monitor screen to verify whether or not the italicize command works correctly when applied to a block of text.

An oracle is something that checks the behavior of a program. An oracle could itself be a program or a human being.

Checking program behavior by humans has several disadvantages. First, it is error prone as the human oracle might make an error in analysis. Second, it may be slower than the speed with which the program computed the results. Third, it might result in the checking of only trivial input–output behaviors. However, regardless of these disadvantages, a human oracle can often be the best available oracle.

Oracles can also be programs designed to check the behavior of other programs. For example, one might use a matrix multiplication program to check if a matrix inversion program has produced the correct output. In this case, the matrix inversion program inverts a given matrix A and generates Β as the output matrix. The multiplication program can check to see if Α × Β = I within some bounds on the elements of the identity matrix I. Another example is an oracle that checks the validity of the output from a sort program. Assuming that the sort program sorts input numbers in ascending order, the oracle needs to check if the output of the sort program is indeed in ascending order.

Using programs as oracles has the advantage of speed, accuracy, and the ease with which complex computations can be checked. Thus, a matrix multiplication program, when used as an oracle for a matrix inversion program, can be faster, accurate, and check very large matrices when compared to the same function performed by a human oracle.

1.5.5 Constructing an oracle

Construction of an automated oracle, such as the one to check a matrix multiplication or a sort program, requires the determination of input–output relationship. For matrix inversion, and for sorting, this relation is rather simple and expressed precisely in terms of a mathematical formula or a simple algorithm as explained above. Also, when tests are generated from models such as finite state machines or statecharts, both inputs and the corresponding outputs are available. This makes it possible to construct an oracle while generating the tests. However, in general, the construction of an automated oracle is a complex undertaking. The next example illustrates one method for constructing an oracle.

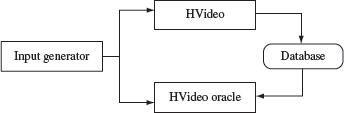

Example 1.16 Consider a program named HVideo that allows one to keep track of old home videos. The program operates in two modes: data entry and search. In the date entry mode, it displays a screen into which the user types in information about a video cassette. This information includes data such as the title, the comments, and the date when the video was prepared. Once the information is typed the user clicks on the Enter button, which results in this information being added to a database. In the search mode, the program displays a screen into which a user can type some attribute of the video being searched for and sets up a search criterion. A sample criterion is “Find all videos that contain the name Sonia in the title field.” In response, the program returns all videos in the database that match the search criteria or displays an appropriate message if no match is found.

To test HVideo, we need to create an oracle that checks whether or not the program functions correctly in data entry and search modes. In addition, an input generator needs to be created. As shown in Figure 1.8, the input generator generates inputs for HVideo. To test the data entry operation of HVideo, the input generator generates a data entry request. This request consists of an operation code, Data Entry, and the data to be entered that includes the title, the comments, and the date on which the video was prepared. Upon completion of execution of the Enter request, HVideo returns control to the input generator. The input generator now requests the oracle to test if HVideo performed its task correctly on the input given for data entry. The oracle uses the input to check if the information to be entered into the database has been entered correctly or not. The oracle returns a Pass or No Pass to the input generator.

Figure 1.8 Relationship between an input generator, HVideo, and its oracle.

To test if HVideo correctly performs the search operation, the input generator formulates a search request with the search data the same as the one given previously with the Enter command. This input is passed on to HVideo that performs the search and returns the results of the search to the input generator. The input generator passes these results to the oracle to check for their correctness. There are at least two ways in which the oracle can check for the correctness of the search results. One is for the oracle to actually search the database. If its findings are the same as that of HVideo, then the search results are assumed to be correct and incorrect otherwise.

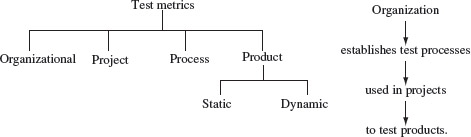

1.6 Test Metrics

The term “metric” refers to a standard of measurement. In software testing, there exist a variety of metrics. Figure 1.9 shows a classification of various types of metrics briefly discussed in this section. Metrics can be computed at the organizational, process, project, and product levels. Each set of measurements has its value in monitoring, planning, and control.

Figure 1.9 Types of metrics used in software testing and their relationships.

A test metric measures some aspect of the test process. Test metrics could be at various levels such as at the level of an organization, a project, a process or a product.

Regardless of the level at which metrics are defined and collected, there exist four general core areas that assist in the design of metrics. These are schedule, quality, resources, and size. Schedule-related metrics measure actual completion times of various activities and compare these with estimated time to completion. Quality-related metrics measure the quality of a product or a process. Resource-related metrics measure items such as cost in dollars, manpower, and tests executed. Size-related metrics measure the size of various objects such as the source code and the number of tests in a test suite.

1.6.1 Organizational metrics

Metrics at the level of an organization are useful in overall project planning and management. Some of these metrics are obtained by aggregating compatible metrics across multiple projects. Thus, for example, the number of defects reported after product release, averaged over a set of products developed and marketed by an organization, is a useful metric of product quality at the organizational level.

Computing this metric at regular intervals and overall products released over a given duration shows the quality trend across the organization. For example, one might say “The number of defects reported in the field over all products and within three months of their shipping, has dropped from 0.2 defects per KLOC (thousand lines of code) to 0.04 defects KLOC.” Other organizational level metrics include testing cost per KLOC, delivery schedule slippage, and time to complete system testing.

Defect density is the number of defects per line of code. Defect density is a widely used metric at the level of a project.

Organizational level metrics allow senior management to monitor the overall strength of the organization and points to areas of weaknesses. These metrics help the senior management in setting new goals and plan for resources needed to realize these goals.

Example 1.17 The average defect density across all software projects in a company is 1.73 defects per KLOC. Senior management has found that for the next generation of software products, which they plan to bid, they need to show that product density can be reduced to 0.1 defects per KLOC. The management thus sets a new goal.

Given the time frame from now until the time to bid, the management needs to do a feasibility analysis to determine whether or not this goal can be met. If a preliminary analysis shows that it can be met, then a detailed plan needs to be worked out and put into action. For example, management might decide to train its employees in the use of new tools and techniques for defect prevention and detection using sophisticated static analysis techniques.

1.6.2 Project metrics

Project metrics relate to a specific project, for example, the I/O device testing project or a compiler project. These are useful in the monitoring and control of a specific project. The ratio of actual to planned system test effort is one project metric. Test effort could be measured in terms of the tester-man-months. At the start of the system test phase, for example, the project manager estimates the total system test effort. The ratio of actual to estimated effort is zero prior to the system test phase. This ratio builds up over time. Tracking the ratio assists the project manager in allocating testing resources.

Another project metric is the ratio of the number of successful tests to the total number of tests in the system test phase. At any time during the project, the evolution of this ratio from the start of the project could be used to estimate the time remaining to complete the system test process.

1.6.3 Process metrics

Every project uses some test process. The “big bang” approach is one process sometimes used in relatively small single person projects. Several other well-organized processes exist. The goal of a process metric is to assess the “goodness” of the process.

When a test process consists of several phases, for example, unit test, integration test, system test, etc, one can measure how many defects were found in each phase. It is well known that the later a defect is found, the costlier it is to fix. Hence, a metric that classifies defects according to the phase in which they are found assists in evaluating the process itself.

The purpose of a process metric is to assess the “goodness” of a process.

Example 1.18 In one software development project, it was found that 15% of the total defects were reported by customers, 55% of the defects prior to shipping were found during system test, 22% during integration test, and the remaining during unit test. The large number of defects found during the system test phase indicates a possibly weak integration and unit test process. The management might also want to reduce the fraction of defects reported by customers.

1.6.4 Product metrics: generic

Product metrics relate to a specific product such as a compiler for a programming language. These are useful in making decisions related to the product, for example, “Should this product be released for use by the customer ?”

Product quality-related metrics abound. We introduce two types of metrics here: the cyclomatic complexity and the Halstead metrics. The cyclomatic complexity proposed by Thomas McCabe in 1976 is based on the control flow of a program. Given the CFG G (see Chapter 2.2 for details) of program Ρ containing Ν nodes, Ε edges, and p connected procedures, the cyclomatic complexity V(G) is computed as follows:

The cyclomatic complexity is a measure of program complexity; higher values imply higher complexity. This is a product metric.

Note that Ρ might consist of more than one procedure. The term p in V(G) counts only procedures that are reachable from the main function. V(G) is the complexity of a CFG G that corresponds to a procedure reachable from the main procedure. Also, V(G) is not the complexity of the entire program, instead it is the complexity of a procedure in Ρ that corresponds to G (see Exercise 1.12). Larger values of V(G) tend to imply higher program complexity and hence a program more difficult to understand and test than one with a smaller values. V(G) is 5 or less are recommended.

The now well-known Halstead complexity measures were published by late Professor Maurice Halstead in a book titled “Elements of Software Science.” Table 1.2 lists some of the software science metrics. Using program size (S) and effort (E), the following estimator has been proposed for the number of errors (B) found during a software development effort:

Extensive empirical studies have been reported to validate Halstead’s software science metrics. An advantage of using an estimator such as Β is that it allows the management to plan for testing resources. For example, a larger value of the number of expected errors will lead to a larger number of testers and testing resources to complete the test process over a given duration. Nevertheless, modern programming languages such as Java and C++ do not lend themselves well to the application of the software science metrics. Instead, one uses specially devised metrics for object-oriented languages described next (also see Exercise 1.16).

Table 1.2 Halstead measures of program complexity and effort.

Measure |

Notation |

Definition |

|---|---|---|

|

Operator count |

N1

|

Number of operators in a program |

|

Operand count |

N2

|

Number of operands in a program. |

|

Unique operators |

η1

|

Number of unique operators in a program |

|

Unique operands |

η2

|

Number of unique operands in a program |

|

Program vocabulary |

η

|

η1 + η2 |

|

Program size |

N

|

N1 + N2 |

|

Program volume |

V

|

N × log2η |

|

Difficulty |

D

|

2/η1 × η2/N2 |

|

Effort |

E

|

D × V |

1.6.5 Product metrics: OO software

A number of empirical studies have investigated the correlation between product complexity metric application quality. Table 1.3 lists a sample of product metrics for object-oriented and other applications. Product reliability is a quality metric and refers to the probability of product failure for a given operational profile. As explained in Section 1.4.2, product reliability of software truly measures the probability of generating a failure causing test input. If for a given operational profile and in a given environment this probability is 0, then the program is perfectly reliable despite the possible presence of errors. Certainly, one could define other metrics to assess software reliability. A number of other product quality metrics, based on defects, are listed in Table 1.3.

Table 1.3 A sample of product metrics.

Metric |

Meaning |

|---|---|

|

Reliability

Defects density Defect severity Test coverage |

Probability of failure of a software product with respect to a given operational profile in a given environment. Number of defects per KLOC. Distribution of defects by their level of severity Fraction of testable items, e.g., basic blocks, covered. Also, a metric for test adequacy or "goodness of tests." |

|

Cyclomatic complexity Weighted methods per class Class coupling Response set Number of children |

Measures complexity of a program based on its CFG.

Measures the number of classes to which a given class is coupled. Set of all methods that can be invoked, directly and indirectly, when a message is sent to object O. Number of immediate descendants of a class in the class hierarchy. |

The OO metrics in the table are due to Shyam Chidamber and Chris Kemerer. They measure program or design complexity. They are of direct relevance to testing in that a product with a complex design will likely require more test effort to obtain a given level of defect density than a product with less complexity.

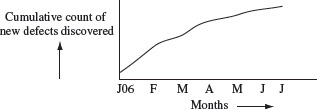

1.6.6 Progress monitoring and trends

Metrics are often used for monitoring progress. This requires making measurements on a regular basis over time. Such measurements offer trends. For example, suppose that a browser has been coded, unit tested, and its components integrated. It is now in the system testing phase. One could measure the cumulative number of defects found and plot these over time. Such a plot will rise over time. Eventually, it will likely show a saturation indicating that the product is reaching a stability stage. Figure 1.10 shows a sample plot of new defects found over time.

Figure 1.10 A sample plot of cumulative count of defects found over seven consecutive months in a software project.

1.6.7 Static and dynamic metrics

Static metrics are those computed without having to execute the product. Number of testable entities in an application is an example of a static product metric. Dynamic metrics require code execution. For example, the number of testable entities actually covered by a test suite is a dynamic product metric.

Product metrics could be classified as static or dynamic. Computing a dynamic metric will likely require program execution.

One could apply the notions of static and dynamic metrics to organization and project. For example, the average number of testers working on a project is a static project metric. Number of defects remaining to be fixed could be treated as a dynamic metric as it can be computed accurately only after a code change has been made and the product retested.

1.6.8 Testability

According to IEEE, testability is the “degree to which a system or component facilitates the establishment of test criteria and the performance of tests to determine whether those criteria have been met.” Different ways to measure testability of a product can be categorized into static and dynamic testability metrics. Software complexity is one static testability metric. The more complex an application, the lower the testability, that is, the higher the effort required to test it. Dynamic metrics for testability include various code-based coverage criteria. For example, a program for which it is difficult to generate tests that satisfy the statement coverage criterion is considered to have low testability than one for which it is easier to construct such tests.

The testability of a program is the degree to which a program facilitates the establishment and measurement of some performance criteria.

High testability is a desirable goal. This is done best by knowing what needs to be tested and how, well in advance. It is recommended that features to be tested and how they are to be tested must be identified during the requirements stage. This information is then updated during the design phase and carried over to the coding phase. A testability requirement might be met through the addition of some code to a class. In more complex situations, it might require special hardware and probes in addition to special features aimed solely at meeting a testability requirement.

Example 1.19 Consider an application Ε required to control the operation of an elevator. Ε must pass a variety of tests. It must also allow a tester to perform a variety of tests. One test checks if the elevator hoists motor and the brakes are working correctly. Certainly one could do this test when the hardware is available. However, for concurrent hardware and software development, one needs a simulator for the hoist motor and brake system.

To improve the testability of E, one must include a component that allows it to communicate with a hoist motor and brake simulator and display the status of the simulated hardware devices. This component must also allow a tester to input tests such as “start the motor.”

Another test requirement for E is that it must allow a tester to experiment with various scheduling algorithms. This requirement can be met by adding a component to Ε that offers a palette of scheduling algorithms to choose from and whether or not they have been implemented. The tester selects an implemented algorithm and views the elevator movement in response to different requests. Such testing also requires a “random request generator” and a display of such requests and the elevator response (also see Exercise 1.14).

Testability is a concern in both hardware and software designs. In hardware design, testability implies that there exist tests to detect any fault with respect to a fault model in a finished product. Thus, the aim is to verify the correctness of a finished product. Testability in software focuses on the verification of design and implementation.

1.7 Software and Hardware Testing

There are several similarities and differences between techniques used for testing software and hardware. It is obvious that a software application does not degrade over time, any fault present in the application will remain, and no new faults will creep in unless the application is changed. This is not true for hardware, such as a VLSI chip, that might fail over time due to a fault that did not exist at the time the chip was manufactured and tested.

This difference in the development of faults during manufacturing or over time leads to built-in self test (BIST) techniques applied to hardware designs and rarely, if at all, to software designs and code. BIST can be applied to software but will only detect faults that were present when the last change was made. Note that internal monitoring mechanisms often installed in software are different from BIST intended to actually test or the correct functioning of a circuit.

Fault models: Hardware testers generate tests based on fault models. For example, using a stuck-at fault model a set of input test patterns can be used to test whether or not a logic gate is functioning as expected. The fault being tested for is a manufacturing flaw or might have developed due to degradation over time. Software testers generate tests to test for correct functionality. Sometimes such tests do not correspond to any general fault model. For example, to test whether or not there is a memory leak in an application, one performs a combination of stress testing and code inspection. A variety of faults could lead to memory leaks.

Hardware testers use a variety of fault models at different levels of abstraction. For example, at the lower level there are transistor level faults. At higher levels there are gate level, circuit level, and function level fault models. Software testers might or might not use fault models during test generation even though the models exist. Mutation testing described in Chapter 8 is a technique based on software fault models. Other techniques for test generation such as condition testing, finite state model-based testing, and combinatorial designs are also based on well-defined fault models which shall be discussed in Chapters 4–6, respectively. Techniques for automatic generation of tests, as described in several chapters in Part II of this book are based on precise fault models.

Test domain: A major difference between tests for hardware and software is in the domain of tests. Tests for a VLSI chip, for example, take the form of a bit pattern. For combinational circuits, for example, a multiplexer, a finite set of bit patterns will ensure the detection of any fault with respect to a circuit level fault model. For sequential circuits that use flip-flops, a test may be a sequence of bit patterns that move the circuit from one state to another and a test suite is a collection of such tests. For software, the domain of a test input is different than that for hardware. Even for the simplest of programs, the domain could be an infinite set of tuples with each tuple consisting of one or more basic data types such as integers and reals.

Test domains differ between hardware and software tests. However, mutation testing of software is motivated by concepts in hardware testing.

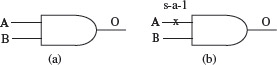

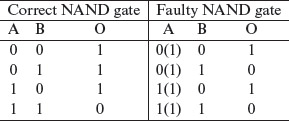

Example 1.20 Consider a simple 2-input NAND gate in Figure 1.11(a). The stuck-at fault model defines several faults at the input and output of a logic gate. Figure 1.11(b) shows a 2-input NAND gate with a stuck-at-1 fault, abbreviated as s-a-1, at input A. The truth tables for the correct and the faulty NAND gates are shown below.

Figure 1.11 (a) A 2-input NAND gate, (b) A NAND gate with a stuck-at 1 fault in input A.

Stuck-at fault models are common in hardware.

A test bit vector v: (A = 0, Β = 1) leads to output 0 whereas the correct output should be 1. Thus, v detects a single s-a-1 fault in the A input of the NAND gate. There could be multiple stuck-at faults. Exercise 1.15 asks you to determine whether or not multiple stuck-at faults in a 2-input NAND gate can always be detected.

Test coverage: It is practically impossible to completely test a large piece of software, for example, an operating system as well as a complex integrated circuit such as a modern 32 or 64-bit microprocessor. This leads to the notion of acceptable test coverage. In VLSI testing, such coverage is measured using a fraction of the faults covered to the total that might be present with respect to a given fault model.

The idea of fault coverage in hardware is also used in software testing using program mutation. A program is mutated by injecting a number of faults using a fault model that corresponds to mutation operators. The adequacy of a test set is assessed as a fraction of the mutants covered to the total number mutants. Details of this technique are described in Chapter 8.

Test set efficiency: There are multiple definitions of test set efficiency. Here we give one. Let T be a test set developed to test program P that must meet requirements R. Let f be the number of faults in Ρ and f′ the number faults detected when Ρ is executed against test cases in Τ. The efficiency of Τ is measured as the ratio ![]() Note that the efficiency is a number between 0 and 1. This definition of test set efficiency is also referred to as the effectiveness of a test set.

Note that the efficiency is a number between 0 and 1. This definition of test set efficiency is also referred to as the effectiveness of a test set.

In most practical situations, one would not know the number of faults in a program under test. Hence, in such situations it is not possible to measure precisely the efficiency of a test set. Nevertheless, empirical studies are generally used to measure the efficiency of a test set by using well-known and understandable programs that are seeded with known faults.

Note that the size of a test set is not accounted for in the above definition of efficiency. However, given two test sets with equal efficiency, it is easy to argue that one would prefer to use the one that contains fewer tests.

The term test efficiency is different from test set or test suite efficiency. While the latter refers to the efficiency of a test suite, test efficiency refers to the efficiency of the test process. The efficiency of a test process is often defined as follows: Let D denote the total number of defects found during the various phases of testing excluding the user acceptance phase. Let Du be the number of defects found in user acceptance testing and D′ = D + Du. Then, test efficiency is defined as ![]()

Notice that test suite efficiency and test efficiency are two quite different metrics. Test set efficiency is an indicator of the goodness of the techniques used to develop the tests. Test efficiency is an indicator of the goodness of the entire test process, and hence it includes in some ways, test set metrics.

1.8 Testing and Verification

Program verification aims at proving the correctness of programs by showing that it contains no errors. This is very different from testing that aims at uncovering errors in a program. While verification aims at showing that a given program works for all possible inputs that satisfy a set of conditions, testing aims to show that the given program is reliable in that no errors of any significance were found.

Testing aims to find if there are any errors in the program. It does so by sampling the input domain and checking the program behavior against the samples. Verification aims at proving the correctness of a program or a specific part of it.

Program verification and testing are best considered as complementary techniques. In practice, one often sheds program verification, but not testing. However, in the development of critical applications, such as smart cards, or control of nuclear plants, one often makes use of verification techniques to prove the correctness of some artifact created during the development cycle, not necessarily the complete program. Regardless of such proofs, testing is used invariably to obtain confidence in the correctness of the application.

Testing is not a perfect process in that a program might contain errors despite the success of a set of tests. However, it is a process with direct impact on our confidence in the correctness of the application under test. Our confidence in the correctness of an application increases when an application passes a set of thoroughly designed and executed tests.

Verification might appear to be a perfect process as it promises to verify that a program is free from errors. However, a close look at verification reveals that it has its own weaknesses. The person who verified a program might have made mistakes in the verification process; there might be an incorrect assumption on the input conditions; incorrect assumptions might be made regarding the components that interface with the program, and so on. Thus, neither verification nor testing are perfect techniques for proving the correctness of programs.

It is often stated that programs are mathematical objects and must be verified using mathematical techniques of theorem proving. While one could treat a program as a mathematical object, one must also realize the tremendous complexity of this object that exists within the program and also in the environment in which it operates. It is this complexity that has prevented formal verification of programs such as the 5ESS switch software from AT&T, the various versions of the Windows operating system, and other monstrously complex programs. Of course, we all know that these programs are defective, but the fact remains that they are usable and provide value to users.

1.9 Defect Management

Defect management is an integral part of a development and test process in many software development organizations. It is a subprocess of the development process. It entails the following: defect prevention, discovery, recording and reporting, classification, resolution, and prediction.

Defect management is a practice used in several organizations. Several methods, e.g., Orthogonal Defect Classification, are available to categorize defects.

Defect prevention is achieved through a variety of processes and tools. For example, good coding techniques, unit test plans, and code inspections are all important elements of any defect prevention process. Defect discovery is the identification of defects in response to failures observed during dynamic testing or found during static testing. Discovering a defect often involves debugging the code under test.

Defects found are classified and recorded in a database. Classification becomes important in dealing with the defects. For example, defects classified as “high severity” will likely be attended to first by the developers than those classified as “low severity.” A variety of defect classification schemes exist. Orthogonal defect classification, popularly known as ODC, is one such scheme. Defect classification assists an organization in measuring statistics such as the types of defects, their frequency, and their location in the development phase and document. These statistics are then input to the organization’s process improvement team that analyzes the data, identifies areas of improvement in the development process, and recommends appropriate actions to higher management.

Each defect, when recorded, is marked as “open” indicating that it needs to be resolved. One or more developers are assigned to resolve the defect. Resolution requires careful scrutiny of the defect, identifying a fix if needed, implementing the fix, testing the fix, and finally closing the defect indicating that it has been resolved. It is not necessary that every recorded defect be resolved prior to release. Only defects that are considered critical to the company’s business goals, which include quality goals, are resolved, others are left unresolved until later.

Defect prediction is another important aspect of defect management. Organizations often do source code analysis to predict how many defects an application might contain before it enters the testing phase. Despite the imprecise nature of such early predictions, they are used to plan for testing resources and release dates. Advanced statistical techniques are used to predict defects during the test process. The predictions tend to be more accurate than early predictions due to the availability of defect data and the use of sophisticated models. The defect discovery data, including time of discovery and type of defect, are used to predict the count of remaining defects. Once again this information is often imprecise, though nevertheless used in planning.

Several tools exist for recording defects, and computing and reporting defect-related statistics. Bugzilla, open source, and FogBugz, commercially available, are three such tools. They provide several features for defect management including defect recording, classification, and tracking. Several tools that compute complexity metrics also predict defects using code complexity.

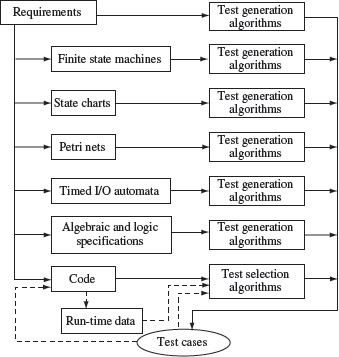

1.10 Test Generation Strategies

One of the key tasks in any software test activity is the generation of test cases. The program under test is executed against the test cases to determine whether or not it conforms to the requirements. The question How to generate test cases ? is answered in significant detail in Part II: Test Generation of this book. Here, we provide a brief overview of the various test generation strategies.

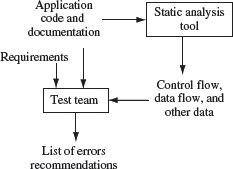

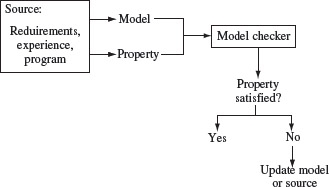

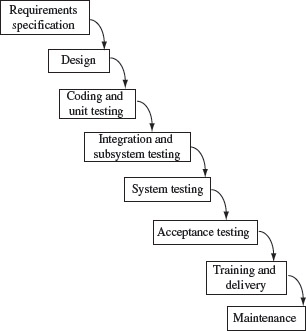

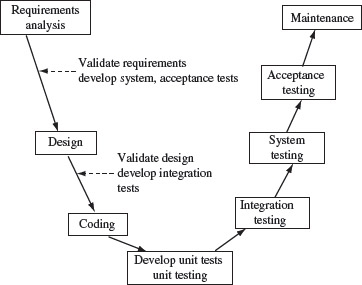

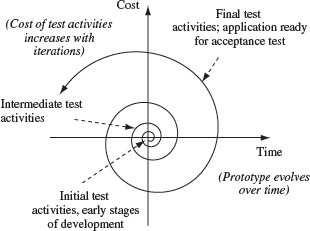

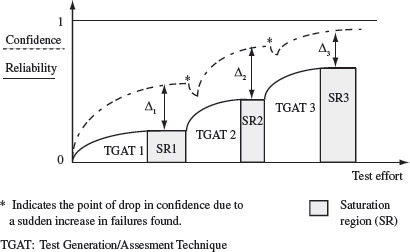

Strategies for test generation abound. Very broadly these are classified as either black box or white box.