Tunneling

Introduction

Or “Where Are We Going, and Why Am I in This Handbasket?”

“Behold the beast, for which I have turned back;

Do thou protect me from her, famous Sage,

For she doth make my veins and pulses tremble.”

“Thee it behoves to take another road,”

Responded he, when he beheld me weeping,

“If from this savage place thou wouldst escape;

Because this beast, at which thou criest out,

Suffers not any one to pass her way,

But so doth harass him, that she destroys him …”

—Dante’s Inferno, Canto I, as Dante meets Virgil (trans. Henry Wadsworth Longfellow)

It is a universal rule of computer science (indeed, of management itself) that no solution is perfectly scalable: a process built to handle a small load can rarely, if ever, scale up to an arbitrarily large one, and vice versa. Databases built to handle tens of thousands of entries struggle mightily to handle millions; a word processor built to manage full-length books becomes too baroque and unwieldy to tap out a simple e-mail. More than mere artifacts of programming skill (or lack thereof), such limitations are generally and unavoidably a consequence of design decisions regarding exactly how the system might be used. Presumptions are made in design that lead to systemic assumptions in implementation. The best designs have presumptions flexible enough to handle unimaginably diverse implementations, but everything assumes.

Transmission Control Protocol/Internet Protocol (TCP/IP) has been an astonishing success; over the course of the late 1990s, the suite of communication protocols did more than just supplant its competition—it eradicated it. This isn’t always appreciated for the cataclysmic event that it was: Windows 95 supported TCP/IP extraordinarily well, but not by default—by far the dominant networking protocols of the time were Novell’s IPX and Microsoft/IBM’s NetBIOS. A scant three years later, neither IPX nor NetBIOS was installed by default. Windows 98 had gone TCP/IP only, reflecting the networks it was being installed upon.

The TCP/IP protocol suite didn’t take over simply because Microsoft decided to “get” the “Net,” that much is obvious. Some might credit the widespread deployment of the protocol among the UNIX servers found throughout corporations and universities, or the fact that the World Wide Web, built upon TCP/IP, grew explosively during this time. Both answers ignore the underlying question: Why? Why was it widespread among UNIX servers? Why couldn’t the Web be deployed with anything else? In short, why TCP/IP?

Of course, many factors contributed to the success of the protocol suite (notably, the protocol and the reference BSD implementation were quite free), but certainly one of the most critical in a networking context can be summarized as “Think Globally, Route Locally.”

NetBIOS had no concept of an outside world beyond what was directly on your LAN. IPX had the concept of other networks that data needed to get to, but required each individual client to discover and specify in advance the complete route to the destination. TCP/IP, by contrast, allowed each host to simply know the next machine to send data along to—the full path was just assumed to eventually work itself out. If TCP/IP can be thought of as simply mailing a letter with the destination address, IPX was the equivalent of needing to give the mailman driving directions. That didn’t scale too well.
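The “mail a letter” model can be sketched in a few lines of Python. This is a toy illustration rather than real routing code: the host names and the NEXT_HOP tables are invented for the example, and each node knows only which neighbor to hand a packet to, never the whole path.

```python
# Toy model of hop-by-hop forwarding: every node holds only a next-hop
# table, never the full path. All names here are hypothetical.
NEXT_HOP = {
    "laptop": {"default": "office-router"},
    "office-router": {"default": "isp-router"},
    "isp-router": {"server": "server", "default": "office-router"},
}


def deliver(source, destination, max_hops=16):
    """Forward a packet one hop at a time and return the path it took."""
    path = [source]
    node = source
    while node != destination:
        if len(path) > max_hops:
            raise RuntimeError("hop limit exceeded (routing loop?)")
        table = NEXT_HOP[node]
        # Each node decides only the next step, using a specific entry
        # if it has one and its default route otherwise.
        node = table.get(destination, table["default"])
        path.append(node)
    return path


print(deliver("laptop", "server"))
```

The sender never computes the route; the path only exists in hindsight, as the list of nodes that each made a local decision.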

That being said, reasonably large scale networks were still built before TCP/IP, often using various solutions that made it appear that a far-away server was actually quite close and easy to access. Such systems were referred to as tunnels. The name is apt: one enters, passes through normally impenetrable terrain, and finds oneself in a completely different place afterwards. They’re nontrivial to build, but generally are point-to-point pathways that prevent you from jumping anywhere else between the two endpoints. Their capacity varies, but it is generally less than might be built if there were no barriers in the first place.

TCP/IP, requiring much less central coordination and allowing for far more localized knowledge, obviated the need for “band-aid” tunnels spanning the vast gaps in networks and protocols. Something the scale of the Internet really couldn’t be built with much else, but the protocol was still light enough to scale down for LAN traffic. It worked well—then security happened.

Disturbingly quickly, the massively interconnected graph that was the Internet became a liability: the protections once afforded by network locality and limited interest were vastly overtaken by global connectivity and the Venture Capital Feeding Frenzy. The elegant presumptions of TCP/IP (how sessions can be initiated, how flexible port selection might be, the administrative trust that could be assumed to exist in any directly network-connected host) started falling apart. Eventually, global addressability itself was weakened, as Network Address Translation (NAT), which hides arbitrary numbers of backend clients behind a single network-layer server/firewall, was deployed in response to both a critical need for effective connection interrogation/limitation and a bureaucratic boondoggle in gaining access to IP address space.

And suddenly, old problems involving the interconnection of separated hosts popped up again. As always, old problems call for old solutions … and tunneling was reborn.

It’s not the same as it used to be. More than anything else, tunneling in the 21st century is about virtualizing the lack of connectivity through the judicious use of cryptography. We’ve gone through somewhat of a pendulum shift—first there was very limited global network access, then global network access was everywhere, then there was a clampdown on that connectivity, and finally holes are poked in the clampdown for those systems engineered well enough to be cryptographically secure. It’s this engineering that this chapter hopes to teach. These methods aren’t perfect, and they aren’t claimed to be—at times they’re down and dirty, but they work. The job is to get us from here to there and back again. We mostly use SSH and the paradigm of gateway cryptography to do it.

Strategic Constraints of Tunnel Design

Determining an appropriate method of tunneling between networks is far from trivial. Choosing from the wide range of available protocols, packages, and possible configurations can be a daunting task. The purpose of this chapter is to describe some of the more cutting-edge mechanisms available for establishing connectivity across any network architecture, but equally important is to understand just what makes a tunneling solution viable. Uncountable techniques could be implemented; the following helps you know what should be implemented … or else.

Make no bones about it: Tunneling is quite often a technique of bypassing overly restrictive security controls. This is not always a bad thing; remember, no organization exists merely for the purpose of being secure, and a bankrupt company is particularly insecure (especially when it comes to customer records). But it’s difficult to argue against security restrictions when your own solution is blisteringly insecure! Particularly in the corporate realm, the key to getting permission (or forgiveness) for a firewall-busting tunnel is to preemptively absorb the security concerns the firewall was meant to address, thus blunting the accusation that you’re responsible for making systems vulnerable.

Privacy: “Where Is My Traffic Going?”

Primary questions for privacy of communications include the following:

Can anyone else monitor the traffic within this tunnel? Read access, addressed by encryption.

Can anyone else modify the traffic within this tunnel, or surreptitiously gain access to it? Write access, addressed primarily through authentication.

Privacy of communications is the bedrock of any secure tunnel design; in a sense, if you don’t know who is participating in the tunnel, you don’t know where you’re going or whether you’ve even gotten there. Some of the hardest problems in tunnel design involve achieving large scale n-to-n level security, and it turns out that unless a system is almost completely trusted as a private solution, no other trait will convince people to actually use it.

Routability: “Where Can This Go Through?”

Primary questions regarding routability are:

How well can this tunnel fit with my limited ability to route packets through my network? Ability to morph packet characteristics to something the network is permeable to.

How obvious is it going to be that I’m “repurposing” some network functionality? Ability to exploit masking noise to blend with surrounding network environment.

The tunneling analogy is quite apropos for this trait, for sometimes you’re tunneling through the network equivalent of soft soil, and sometimes you’re trying to bore straight through the side of a mountain. Routability is a concept that normally refers to whether a path can be found at all; in this case, it refers to whether a data path can be established that does not violate any restrictions on types of traffic allowed. For example, many firewalls allow Web traffic and little else. It is a point of some humor in the networking world that the vast permeability of firewalls to HTTP traffic has led to all traffic eventually getting encapsulated into the capabilities allowed for the protocol.

Routability is divided into two separate but highly related concepts: First, the capability of the tunnel to exploit the permeability of a given network (as in, a set of paths from source to destination and back again) to a specific form of traffic, and to encapsulate traffic within that form regardless of its actual nature. Second, and very important for long-term availability of the tunneling solution in possibly hostile networks, is the capability of that encapsulated traffic to exploit the masking noise of similar but nontunneled data flows surrounding it.

For example, consider the difference between encapsulating traffic within HTTP and HTTPS, which is nothing more than HTTP wrapped in SSL. While most networks will pass through both types of traffic, on the basis of the large amount of legitimate traffic both streams may contain, illegitimate unencrypted HTTP traffic stands out—the tunnel, if you will, is transparent and open for investigation. By contrast, the HTTPS tunnel doesn’t even need to really run HTTP—because SSL renders the tunnel quite opaque to an inquisitive administrator, anything can be moving over it, and there’s no way to know someone isn’t just checking their bank statement.

Or is there? If nothing else, HTTP is not a protocol that generally has traffic in keystroke-like bursts. It is a stateless, quick protocol driven by short requests, with much higher download rates than upload rates. Traffic analysis can render even an encryption-shielded tunnel vulnerable to some degree of awareness of what’s going on. During periods of wartime, simply knowing who is talking to whom can often lead to a great deal of knowledge about what moves the enemy will make: many calls in a short period of time to an ammunition depot very likely means ammo supplies are running dry.
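A rough sketch of the kind of heuristic an inquisitive administrator might apply follows. It assumes the observer can see only the direction and size of each encrypted packet; the function name and every threshold are invented for illustration and are not measured values.

```python
# Classify an encrypted flow from packet metadata alone. The observer is
# assumed to see only (direction, payload_bytes) per packet; the
# thresholds below are illustrative guesses, not measured values.
def looks_interactive(packets, small=128, min_small_fraction=0.8):
    """Return True if the flow resembles keystroke-style traffic."""
    if not packets:
        return False
    up = sum(size for direction, size in packets if direction == "up")
    down = sum(size for direction, size in packets if direction == "down")
    small_count = sum(1 for _, size in packets if size <= small)
    mostly_small = small_count / len(packets) >= min_small_fraction
    # Web browsing downloads far more than it uploads; a typed session
    # is closer to balanced.
    download_heavy = down > 10 * max(up, 1)
    return mostly_small and not download_heavy


web_like = [("up", 400)] + [("down", 1400)] * 30
shell_like = [("up", 48), ("down", 48)] * 40
print(looks_interactive(web_like), looks_interactive(shell_like))
```

Nothing here touches the payload, which is the point: the encryption is intact, and the shape of the traffic still gives the game away.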

The connection to routability, of course, is that a connection discovered to be undesirable can quickly be made unroutable pending an investigation. Traffic analysis can significantly contribute to such determinations, but it is not all powerful. Networks with large amounts of unclassifiable traffic provide the perfect cover for any sort of tunneling system; there is no need to be excessively covert when there’s someone, somewhere, legitimately doing exactly what you’re doing.

Deployability: “How Painful Is This to Get Up and Running?”

Primary questions involving deployment and installation include the following:

What needs to be installed on clients that want to participate in the tunnel?

What needs to be installed on servers that want to participate in the tunnel?

Software installation stinks. It does. The code has to be retrieved from somewhere—and there’s always a risk such code might be exchanged for a Trojan—it has to be run on a computer that was probably working just fine before, it might break a production system, and so on. There is always a cost; luckily, there’s often a benefit to offset it. Tunnels add connectivity, which can very well be the difference between a system being useful/profitable and a system not being worth the electricity needed to keep it running. Still, there is a question of who bears the cost… .

Client upgrades can have the advantage that they’re highly localized in exactly the right place: those who most need additional capabilities are often most motivated to upgrade their software, whereas server-level upgrades require those most detached from users to do work that only benefits others. (The fact that upgrading stable servers is generally a good way to fix something that wasn’t broken for the vast majority of users can’t be ignored either.)

Other tunneling solutions take advantage of software already deployed on the client side and provide server support for them. This usually empowers an even greater set of clients to take advantage of new tunneling capabilities, and provides the opportunity for administrators to significantly increase security by using only a few simple configurations, such as automatically redirecting all HTTP traffic through an HTTPS gateway, or forcing all wireless clients to tunnel in through the PPTP implementation that shipped standard in their operating system.

Generally, the most powerful but least convenient tunneling solutions require special software installation on both the client and server side. It should be emphasized that the operative word here is special—truly elegant solutions use what’s available to achieve the impossible, but sometimes it’s just not feasible to achieve certain results without spreading the “cost” of the tunnel across both the client and the server.

The obvious corollary is that the most convenient but least powerful systems require no software installation on either side—this happens most often when default systems installed on both sides for one purpose are suddenly found to be co-optable for completely different ones. By breaking past the perception of fixed functions for fixed applications, we can achieve results that can be surprising indeed.

Flexibility: “What Can We Use This for, Anyway?”

Primary questions in ensuring flexible usage are

“Sometimes you’re the windshield, sometimes you’re the bug.” In this case, sometimes you’ve got the Chunnel, but other times you’ve got a rickety rope bridge. Not all tunneling solutions carry identical traffic.

Many solutions, both hand-rolled and reasonably professionally done, simply encapsulate a bitstream in a crypto layer. TCP, being a system for reliably exchanging streams of data from one host to another, is accessed by software through the structure known as sockets. One gets the feeling that SSL, the Secure Sockets Layer, was originally intended to be a drop-in replacement for standard sockets, but various incompatibilities prevented this from being possible. (One also gets the feeling there will eventually be an SSL “function interposer,” that is, a system that will automatically convert all socket calls to Secure Socket calls.)

Although its best performance comes when forwarding TCP sessions, SSH is built to forward a wide range of traffic, from TCP to shell commands to X applications, in a generic but extraordinarily flexible manner. This flexibility makes it the weapon of choice for all sorts of tunneling solutions, but it can come at a cost.

To wit: Highly flexible tunneling solutions can suffer from the problem of “excess capacity”—in other words, if a tunnel is established to serve one purpose, could either side exploit the connection to achieve greater access than it’s trusted for?

X-Windows on the UNIX platform is a moderately hairy but reasonably usable architecture for graphical applications to display themselves within, and one of its big selling points is its network transparency: A given window doesn’t necessarily need to be displayed on the computer that’s running it. The idea was that slow and inexpensive hardware could be deployed all over the place for users, but each of the applications running on them would “seem” fast because they were really running on a very fast and expensive server sitting in the back room. (Business types like this, because it’s much easier to get higher profit margins on large servers than small desktops. This specific “carousel revolution” was most recently repeated with the Web, Java/network computers, and of course, .NET, to various degrees of success.)

One of the bigger problems with stock X-Windows is that encryption is non-existent and, worse, authentication is both difficult to use and not very secure (in the end, it’s a simple “Ability To Respond” check). Tatu Ylonen, in his development of the excellent Secure Shell (SSH) package for highly flexible secure networking, included a very elegant implementation of X-Forwarding. By tunneling all X traffic over a virtual display carried over SSH, it replaced a complex and ultimately useless procedure of managing DISPLAY variables and xhost/xauth arguments with simply typing ssh user@host and running an X application from the shell that came up. Security is nice, but let’s be blunt: Unlike before, it just worked!

The solution was and still is quite brilliant; it ranks as one of the better examples of the most obvious but often impossible to follow law of upgrade design: “Don’t make it worse.” Even some of the best security or tunneling solutions can be somewhat awkward to use. At a minimum, they require an extra step, a slight hesitation, perhaps a noticeable processing hit or reduced networking performance (in terms of either latency or bandwidth). This is part of the usually unavoidable exchange between security and liberty that extends quite a bit outside the realm of computer security. Even simply locking the door to your home obligates you to remember your keys, delays entry into your own home, and imposes an inordinately large cost should keys be forgotten (like, for example, the ever-compounding cost of leaving your keys in the possession of a friend or administrator, and what may indeed become an emergency call to that person to regain access to one’s own property). And of course, in the end a simple locked door is only a minor deterrent to a determined burglar! Overall, difficult to use and not too effective: this is a story we’ve heard before.

There was a problem, though, an instructive one at that: X Windows is a system that connects programs running in one place to displays running anywhere. To do so, it required the capability to channel images to the display and receive mouse motions and keystrokes in return.

And what if the server was compromised?

Suddenly, that capability to monitor keystrokes could be subverted for a completely different purpose—monitoring activity on the remote client. Type a password? Captured. Write a letter? Captured. And, of course, this sensitive information would tunnel quite nicely through the very elegantly encrypted and authenticated connection. Oh. The security of a tunnel can never be higher than that of the two endpoints.

The eventual solution was to disable X-Forwarding by default. ssh -X user@host in OpenSSH will now enable it, provided the server is willing to support it as well. (No, this isn’t a complete solution: a compromised server can still abuse any client that really does need to forward X traffic. But at some level the problem becomes inherent to X itself, and with most SSH sessions having nothing to do with X, most sessions could be made secure simply by disabling the feature by default. Moving X traffic over VNC is a much more secure solution, and in many slower network topologies is faster, easier to set up, and much more stable; check www.tightvnc.org for details.)

In summary, the problem illustrated is simple: Flexibility can sometimes come back to bite you; the less you trust your endpoints, the more you must lock down the capabilities of your tunneling solutions.

Quality: “How Painful Will This System Be to Maintain?”

Primary questions to face regarding systems quality include

There are some things you’d think were obvious; some basic concepts so plainly true that nobody would ever assume otherwise. One of the most inherent of these regards usability: If a system is unusable, nobody is going to use it. You’d think that whole “not able to be used” thing might be a tip-off, but it really isn’t. Too many systems are out there that, by dint of their extraordinary complexity, cannot be upgraded, hacked upon, played with, specialized to the needs of a given site, or whatnot because all energy is being put towards making them work at all. Such systems suffer even in the realm of security, for those who are too afraid they’ll break something are loath to fix anything. (Many, many servers remain unpatched against basic security holes on the simple logic that a malicious attack might be a possibility but a broken patch is guaranteed.) So a real question for any tunnel system is whether it can be reasonably built and maintained by those using it, and whether it is so precariously configured that any necessary modifications run the risk of causing production downtime.

Less important in some cases but occasionally the defining factor, particularly on server-side aggregators of many cryptographic tunnels, is the issue of speed. All designs have their performance requirements; no solution can efficiently meet all possible needs. When designing your tunneling systems, you need to make sure they have the necessary carrying capacity for your load.

Designing End-to-End Tunneling Systems

There are many types of tunnels one could implement; the study of gateway cryptography tends to focus on which tunneling methodologies should be implemented. One simple rule specifies that whenever possible, tunnels ought to be end-to-end secure. Only the client and the server will be able to decrypt and access the traffic traveling over the tunnel; though firewalls, routers, and even other servers may be involved in passing the encrypted streams of ciphertext around, only the endpoints should be able to participate in the tunnel. Of course, it’s always possible to request that an endpoint give you access to the network visible to it, rather than just services running on that specific host, but that is outside the scope of the tunnel itself—once you pass through the Chunnel from England to France, you’re quite free to travel on to Spain or Germany. What matters is that you do not drown underneath the English Channel!

End-to-end tunnels execute the following three functions without fail:

Create a valid path from client to server.

Independently authenticate and encrypt over this new valid path.

These functions can be collapsed into a single step—such as accessing an SSL encrypted Web site over a permeable network. They can also be expanded upon and recombined; for example, authenticating (and being authenticated by) intermediate hosts before being allowed to even attempt to authenticate against the final destination. But these are the three inherent functions to be built, and that’s what we’re going to do now.

Drilling Tunnels Using SSH

So we’re left with a bewildering set of constraints on our behavior, with little more than a sense that an encapsulating approach might be a method of going about satisfying our requirements. What to use? IPSec, for all its hype, is so extraordinarily difficult to configure correctly that even Bruce Schneier, practically the patron saint of computer security and author of Applied Cryptography, was compelled to state “Even though the protocol is a disappointment—our primary complaint is with its complexity—it is the best IP security protocol available at the moment.” (My words on the subject were something along the lines of “I’d rather stick red-hot kitchen utensils in my eyes than administer an IPSec network,” but that’s just me.)

SSL is nice, and well trusted—and there’s even a nonmiserable command-line implementation called Stunnel (www.stunnel.org) with a decent amount of functionality—but the protocol itself is limited and doesn’t facilitate many of the more interesting tunneling systems imaginable. SSL is encrypted TCP—in the end, little more than a secure bitstream with a nice authentication system. But SSL extends only to the next upstream host and becomes progressively unwieldy the more you try to encapsulate within. Furthermore, standard SSL implementations fail to be perfectly forward-secure, essentially meaning that a key compromise in the future will expose data sent today. This is unnecessary and honestly embarrassing.

We need something more powerful, yet still trusted. We need OpenSSH.

Security Analysis: OpenSSH 3.02

The de facto standard for secure remote connectivity, OpenSSH, is best known for being an elegant and secure replacement for both Telnet and the r* series of applications. It is an incredibly flexible implementation of one of the three trusted secure communication protocols (the other two being SSL and IPSec).

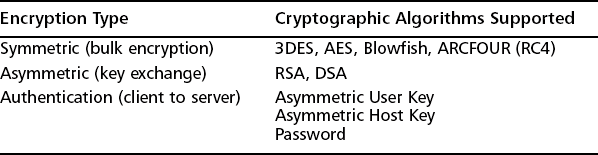

Security

One of the mainstays of open source security, OpenSSH is often the only point of entry made available to some of the most paranoid networks around. Trust in the first version of the SSH protocol is eroding in the face of years of intensive analysis; OpenSSH’s complete implementation of the SSH2 protocol, its completely free code, and its unique position as the only reliable migration path from SSH1 to SSH2 (this was bungled miserably by the original creators of SSH), have made this the de facto standard SSH implementation on the Internet. See Table 13.1 for a list of the encryption types and algorithms OpenSSH supports.

Routability

All traffic is multiplexed over a single outgoing TCP session, and most networks allow outgoing SSH traffic (on 22/tcp) to pass. ProxyCommand functionality provides a convenient interface for traffic maskers and redirectors to be applied, such as a SOCKS redirector or an HTTP encapsulator.
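As a minimal sketch of how a redirector plugs in, the Python helper below assembles an ssh command line that hands the connection to an external program through the ProxyCommand option. The helper name, the proxy address, and the choice of an OpenBSD-style netcat as the redirector are all assumptions made for the example; any program that relays its standard input and output to the destination would fill the same slot.

```python
def ssh_via_proxy(target, proxy_command):
    """Build an ssh argument list that reaches `target` through a helper.

    ssh substitutes %h and %p in ProxyCommand with the destination host
    and port, then speaks the SSH protocol over the helper's stdin/stdout.
    """
    return ["ssh", "-o", "ProxyCommand=" + proxy_command, target]


# Assumed helper: an OpenBSD-style netcat asked to issue an HTTP CONNECT
# through a hypothetical proxy at proxy.example.com:8080.
argv = ssh_via_proxy("user@host", "nc -X connect -x proxy.example.com:8080 %h %p")
print(argv)
```

The resulting list can be passed to something like subprocess.run; the SSH session itself is unchanged, and only the way its bytes reach the server differs.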

Deployability

Both client and server code is installed by default on most modern UNIX systems, and the system has been ported to a large number of platforms, including Win32.

Flexibility

Having the ability to seamlessly encapsulate a wide range of traffic (see Table 13.2) means that more care needs to be taken to prevent partially trusted clients from appropriating unexpected resources. Very much an embarrassment of riches. One major limitation is the inability to internally convert from one encapsulation context to another, that is, directly connecting the output of a command to a network port.

Table 13.2

Encapsulation Primitives of OpenSSH

| Encapsulation Type | Possible Uses |

| UNIX shell | Interactive remote administration |

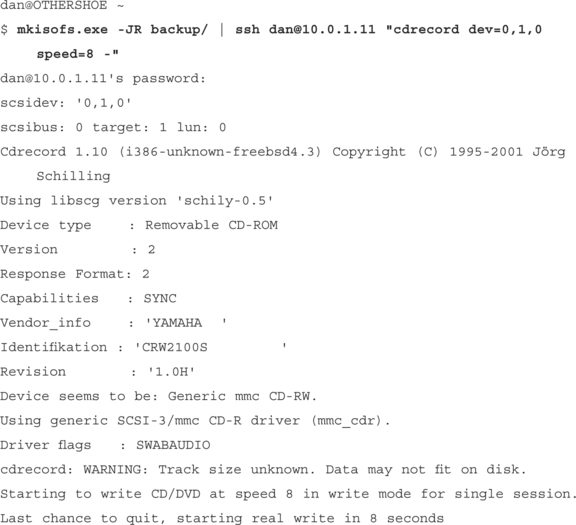

| Command FORWARDING | Remote CD burning, automated backup, cluster management, toolchain interposition |

| Static TCP port forwarding | Single-host network services, like IRC, Mail, VNC, and (very) limited Web traffic |

| Dynamic TCP port forwarding | Multihost and multiport network services, like Web surfing, P2P systems, and Voice over IP |

| X forwarding | Remote access to graphical UNIX applications |
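For orientation, the encapsulation types in Table 13.2 correspond roughly to the client invocations sketched below. Treat this as an illustration under assumptions: user@host, the port numbers, the IRC server name, and the remote command are placeholders, and the -D dynamic forwarding option is assumed to be available in the installed build.

```python
# Map each encapsulation type to an illustrative ssh argument list.
# Hosts, ports, and commands are placeholders, not recommendations.
def ssh_argv(kind, target="user@host"):
    options = {
        "shell": [],
        "command": [],
        # -L local_port:destination_host:destination_port
        "static": ["-L", "6667:irc.example.net:6667"],
        # -D local_port opens a local SOCKS listener
        "dynamic": ["-D", "1080"],
        "x11": ["-X"],
    }
    argv = ["ssh"] + options[kind] + [target]
    if kind == "command":
        argv += ["uptime"]  # the remote command follows the target
    return argv


for kind in ("shell", "command", "static", "dynamic", "x11"):
    print(" ".join(ssh_argv(kind)))
```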

Quality

OpenSSH is very much a system that “just works.” Syntax is generally good, though network port forwarding does tend to confuse those new to the platform. Speed can be an issue on certain platforms; the one-to-ten MB/s level appears to be the present performance ceiling for default builds of OpenSSH. Some issues with command forwarding can lead to zombie processes. Forked from Tatu Ylonen’s original implementation of SSH and expanded upon by Theo de Raadt, Markus Friedl, Damien Miller, and Ben “Mouring” Lindstrom of the highly secure OpenBSD project, it is under constant, near-obsessive development.

Setting Up OpenSSH

The full procedure for setting up OpenSSH is mostly outside the scope of this chapter, but you can find a good guide for Linux at www.helpdesk.umd.edu/linux/security/ssh_install.shtml. Windows is slightly more complicated; those using the excellent UNIX-On-Windows Cygwin environment can get guidance at http://tech.erdelynet.com/cygwin-sshd.asp; those who simply seek a daemon that will work and be done with it should grab Network Simplicity’s excellent SSHD build at www.networksimplicity.com/openssh/.

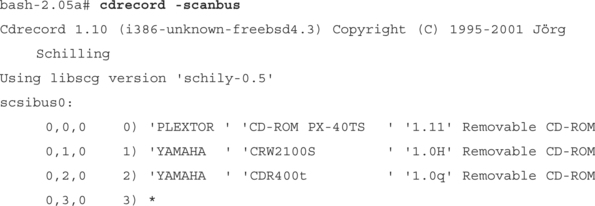

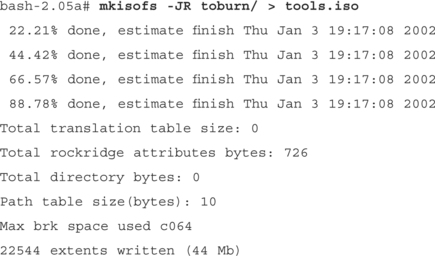

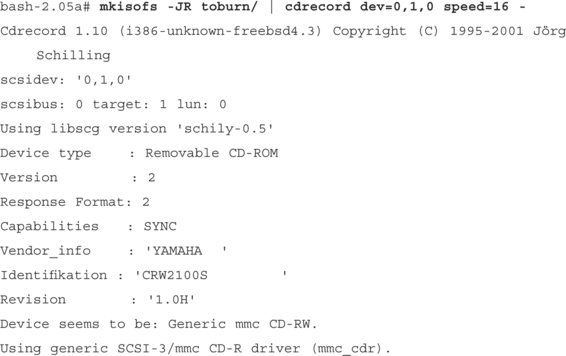

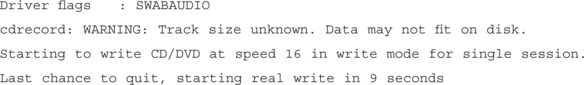

Note this very important warning about versions: Modern UNIX distributions all have SSH daemons installed by default, including Apple’s Mac OS X; unfortunately, a disturbing number of these daemons are either SSH 1.2.27 or OpenSSH 2.2.0p2 or earlier. The SSH1 implementations in these packages are highly vulnerable to a remote root compromise, and must be upgraded as soon as possible. If it is not feasible to upgrade the daemon on a machine using the latest available at www.openssh.com (or even the official SSH2 from ssh.com), you can secure builds of OpenSSH that support both SSH1 and SSH2 by editing /etc/sshd_config and changing Protocol 2,1 to Protocol 2. (This has the side effect of disabling SSH1 support entirely, which is a problem for older clients.) Obscurity is no defense in this situation either: the version of any SSH server can be easily queried remotely, because the server announces it in the banner it sends as soon as a client connects.
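A small Python sketch shows how little effort such a query takes. The function names are ours, the sample banner is made up for the demonstration, and fetch_banner assumes the identification string is the first line the server sends, which is the common case but not guaranteed.

```python
import socket


def parse_banner(line):
    """Split an SSH identification string into (protocol, software)."""
    if not line.startswith("SSH-"):
        raise ValueError("not an SSH identification string")
    _, proto, software = line.strip().split("-", 2)
    return proto, software


def protocols_offered(proto):
    """Interpret the protocol field of the banner."""
    if proto == "1.99":
        return {"SSH1", "SSH2"}  # server speaks both
    if proto.startswith("2."):
        return {"SSH2"}
    return {"SSH1"}


def fetch_banner(host, port=22, timeout=5.0):
    """Read the first line an SSH server sends after the TCP connect."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        return conn.makefile("r", encoding="ascii", errors="replace").readline()


sample = "SSH-1.99-OpenSSH_2.2.0p1\r\n"  # made-up example banner
proto, software = parse_banner(sample)
print(proto, software, sorted(protocols_offered(proto)))
```

A protocol field of 1.99 is the giveaway that a daemon still accepts SSH1 connections; after the switch to Protocol 2 it should read 2.0.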

Another important note is that the SSH server does not necessarily require root permissions to execute the majority of its functionality. Any user may execute sshd on an alternate port and even authenticate himself against it. The SSH client in particular may be installed and executed by any normal user—this is particularly important when some of the newer features of OpenSSH, like ProxyCommand, are required but unavailable in older builds.

Open Sesame: Authentication

The first step to accessing a remote system in SSH is authenticating yourself to it. All systems that travel over SSH begin with this authentication process.

Basic Access: Authentication by Password

“In the beginning, there was the command line.” The core encapsulation of SSH is and will always be the command line of a remote machine. The syntax is simple:

$ ssh user@host

user@host's password:

FreeBSD 4.3-RELEASE (CURRENT-12-2-01) #1: Mon Dec 3 13:44:59 GMT 2001

$

Throw on a -X option, and if an X-Windows application is executed, it will automatically tunnel. SSH’s password handling is interesting: no matter where in the chain of commands ssh is, if a password is required, ssh will almost always manage to query for it. This isn’t trivial, but is quite useful.

However, passwords have their issues. Primarily, if a user’s password is shared between hosts A and B, host A can spoof being the user to host B, and vice versa. Chapter 12 goes into significantly more detail about the weaknesses of passwords; to sidestep them, SSH supports a more advanced mechanism for authenticating the client to the server.

Transparent Access: Authentication by Private Key

Asymmetric key systems offer a powerful method of allowing one host to authenticate itself to many—much like many people can recognize a face but not copy its effect on other people, many hosts can recognize the private key referenced by their public component, but not copy the private component itself. So SSH generates private components—one for the SSH1 protocol, another for SSH2—which hosts all over may recognize.

Server to Client Authentication

Although it is optional for the client to authenticate using a public/private keypair, the server must provide key material such that the client, having trusted the host once, may recognize it in the future. This diverges from SSL, which presumes that the client trusts some certificate authority like VeriSign and then can transfer that trust to any arbitrary host. SSH instead accepts the risks of first introductions to a host and then tries to take that first risk and spread it over all future sessions. This has a much lower management burden, but presents a much weaker default model for server authentication. (It’s a tradeoff—one of many. Unmanageable systems aren’t deployed, and undeployed security systems generally are awfully insecure.) First connections to an SSH server generally look like this:

$ ssh effugas@10.0.1.11

The authenticity of host ‘10.0.1.11 (10.0.1.11)’ can’t be established.

RSA key fingerprint is 6b:77:c8:4f:e1:ce:ab:cd:30:b2:70:20:2e:64:11:db.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added ‘10.0.1.11’ (RSA) to the list of known hosts.

effugas@10.0.1.11’s password:

FreeBSD 4.3-RELEASE (CURRENT-12-2-01) #1: Mon Dec 3 13:44:59 GMT 2001

$

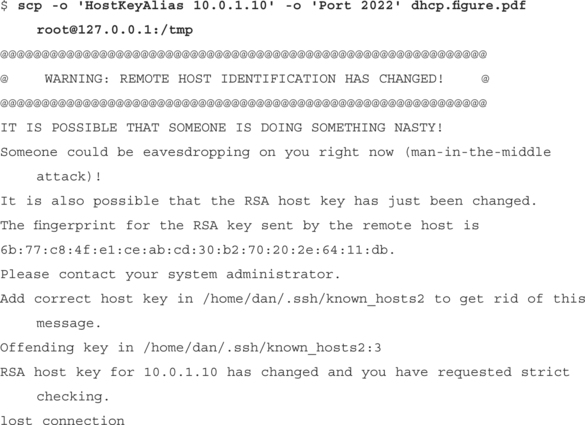

The Host Key, as it’s known, is generated automatically upon installation of the SSH server. This often poses a problem: because the installation routines are pretty dumb, they’ll sometimes overwrite or misplace existing key material. This leads to a very scary error on clients, proclaiming that somebody might be faking the server, when usually it just means that the original key was legitimately lost. As a result, users just go ahead and accept the new, possibly spoofed key. This is problematic and is being worked on. For systems that need to be very secure, the most important thing is to come up with decent methods for securely distributing ~/.ssh/known_hosts and ~/.ssh/known_hosts2, the files that contain the list of keys the client may recognize. Much of this chapter is devoted to discussing exactly how to distribute files of this type through arbitrarily disroutable networks; upon finding a technique that will work in your network, a “pull” design having each client go to a central host, query for a new known-hosts file, and pull it down might work well.
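To make the known-hosts comparison concrete, here is a rough Python sketch of the check a client performs. It assumes SSH2-style entries of the form "names keytype base64-blob", with plaintext host names, and it assumes the colon-separated fingerprint is the MD5 digest of the decoded key blob. The function names are hypothetical, and the keys in the demonstration are dummy values rather than real key material.

```python
import base64
import hashlib


def md5_fingerprint(key_line: str) -> str:
    """Fingerprint a '<keytype> <base64 blob> [comment]' line as aa:bb:cc:..."""
    fields = key_line.split()
    blob = base64.b64decode(fields[1])
    digest = hashlib.md5(blob).hexdigest()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


def check_host(known_hosts_text: str, host: str, offered_key_line: str) -> str:
    """Classify an offered host key as 'match', 'MISMATCH', or 'unknown'."""
    offered = md5_fingerprint(offered_key_line)
    for raw in known_hosts_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # First field: comma-separated host names; remainder: the key itself.
        names, key_line = line.split(None, 1)
        if host in names.split(","):
            return "match" if md5_fingerprint(key_line) == offered else "MISMATCH"
    return "unknown"


if __name__ == "__main__":
    known = "10.0.1.11,freebsd ssh-dss YWJj\n"  # dummy blob, not a real key
    print(check_host(known, "10.0.1.11", "ssh-dss YWJj"))  # stored key offered again
    print(check_host(known, "10.0.1.11", "ssh-dss eHl6"))  # different key offered
```

A "MISMATCH" result corresponds to the scary warning described above; "unknown" corresponds to the first-connection prompt.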

Client to Server Authentication

Client asymmetric keying is useful but optional. The two main steps are to generate the keys on the client, and then to inform the server that they’re to be accepted. First, key generation executed using ssh-keygen for SSH1 and ssh-keygen –t dsa for SSH2:

$ ssh-keygen

Generating public/private rsa1 key pair.

Enter file in which to save the key (/home/effugas/.ssh/identity):

Enter passphrase (empty for no passphrase): <ENTER>

Enter same passphrase again: <ENTER>

Your identification has been saved in /home/effugas/.ssh/identity.

Your public key has been saved in /home/effugas/.ssh/identity.pub.

c7:d9:12:f8:b4:7b:f2:94:2c:87:43:14:5a:cf:11:1d effugas@OTHERSHOE

$ ssh-keygen -t dsa

Generating public/private dsa key pair.

Enter file in which to save the key (/home/effugas/.ssh/id_dsa):

Enter passphrase (empty for no passphrase): <ENTER>

Enter same passphrase again: <ENTER>

Your identification has been saved in /home/effugas/.ssh/id_dsa.

Your public key has been saved in /home/effugas/.ssh/id_dsa.pub.

e0:e2:a7:1b:02:ad:5b:0a:7f:f8:9c:d1:f8:3b:97:bd effugas@OTHERSHOE

Now, you need to inform the server to check connecting clients for possession of the private key (.ssh/identity for SSH1, .ssh/id_dsa for SSH2). This is done by sending the server the key’s public element and adding it to a file in some given user’s home directory: .ssh/authorized_keys for SSH1, .ssh/authorized_keys2 for SSH2. There’s no really elegant way to do this built into SSH, and it is by far the biggest weakness in the toolkit and very arguably the protocol itself. William Stearns has done some decent work cleaning this up; his script is available at www.stearns.org/ssh-keyinstall/ssh-keyinstall-0.1.3.tar.gz. It’s messy and doesn’t try to hide that. But the following process will remove the need for password authentication using your newly generated keys, with the added advantage of not needing any special external applications (note that you need to enter a password):

effugas@10.0.1.10’s password:

Last login: Mon Jan 14 05:38:05 2002 from 10.0.1.56

Okay, deep breath. Now you need to read in the key generated using ssh-keygen and pipe it out through ssh to 10.0.1.10, username effugas. On the remote side, make sure you’re in the home directory, set file modes so nobody else can read what you’re about to create, create the directory if needed (the -p option means an already existing directory is not an error), then receive whatever you’re being piped and append it to ~/.ssh/authorized_keys, which the SSH daemon will use to authenticate remote private keys. Why there isn’t standardized functionality for this is a great mystery; this extended multi-part command, however, will get the job done reasonably well:

$ cat ~/.ssh/identity.pub | ssh -1 effugas@10.0.1.10 "cd ~ && umask 077 && mkdir -p .ssh && cat >> ~/.ssh/authorized_keys"

effugas@10.0.1.10’s password:

Look ma, no password requested:

Last login: Mon Jan 14 05:44:22 2002 from 10.0.1.56

The equivalent process for SSH2, the default protocol for OpenSSH:

$ cat ~/.ssh/id_dsa.pub | ssh effugas@10.0.1.10 "cd ~ && umask 077 && mkdir -p .ssh && cat >> ~/.ssh/authorized_keys2"

effugas@10.0.1.10’s password:

Last login: Mon Jan 14 05:47:30 2002 from 10.0.1.56

[effugas@localhost effugas]$
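For readers who prefer to see the remote half of that pipeline spelled out, the following Python sketch mirrors its three steps (umask 077, mkdir -p .ssh, append to the authorization file). It is an illustration under POSIX assumptions, not a replacement for the one-liner; install_public_key is a hypothetical name, and the key text in the demonstration is a placeholder.

```python
import os
import tempfile


def install_public_key(home: str, public_key_line: str,
                       filename: str = "authorized_keys") -> str:
    """Append one public key line to <home>/.ssh/<filename>, creating both
    the directory and the file without group/other permissions."""
    ssh_dir = os.path.join(home, ".ssh")
    old_umask = os.umask(0o077)               # like `umask 077`
    try:
        os.makedirs(ssh_dir, exist_ok=True)   # like `mkdir -p .ssh`
        path = os.path.join(ssh_dir, filename)
        with open(path, "a") as handle:       # like `cat >> file`
            handle.write(public_key_line.rstrip("\n") + "\n")
    finally:
        os.umask(old_umask)                   # restore the caller's umask
    return path


if __name__ == "__main__":
    # Demonstrate against a throwaway directory instead of a real home.
    scratch_home = tempfile.mkdtemp()
    written = install_public_key(scratch_home, "ssh-dss AAAAplaceholder effugas@OTHERSHOE")
    print(written, oct(os.stat(written).st_mode & 0o777))
```

As with the shell version, the umask only affects files the call creates; a pre-existing authorization file keeps whatever permissions it already had.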

Passwords were avoided because we didn’t trust servers, but who says our clients are much better? Great crypto is nice, but we’re essentially taking something that was stored in the mind of the user and putting it on the hard drive of the client for possible grabbing. Remember that there is no secure way to store a password on a client without another password to protect it. Solutions to this problem aren’t great. One system supported by SSH involves passphrases: passwords that are parsed client-side and used to decrypt the private key that the remote server wishes to verify possession of. You can add passphrases to both SSH1 and SSH2 keys:

Enter file in which the key is (/home/effugas/.ssh/identity):

Key has comment ‘effugas@OTHERSHOE’

Enter new passphrase (empty for no passphrase):

Your identification has been saved with the new passphrase.

Enter file in which the key is (/home/effugas/.ssh/id_dsa):

Key has comment ‘/home/effugas/.ssh/id_dsa’

Enter new passphrase (empty for no passphrase):

Your identification has been saved with the new passphrase.

# Note the new request for passphrases

$ ssh effugas@10.0.1.11

Enter passphrase for key ‘/home/effugas/.ssh/id_dsa’:

FreeBSD 4.3-RELEASE (CURRENT-12-2-01) #1: Mon Dec 3 13:44:59 GMT 2001

$

Of course, now we’re back where we started—we have to enter a password every time we want to log into a remote host! What now?

Well, the dark truth is that most people just trust their clients and stay completely passphrase-free, much to the annoyance of IT administrators who think disabling passwords entirely will drive people towards a really nice crypto solution that has no huge wide-open holes. SSH does have a system that tries to address the problem of passphrases being no better than passwords, by allowing a single entry of the passphrase to spread among many authentication attempts. This is done through an agent, which sits around and serves private key computations to SSH clients run under it. (This means, importantly, that only SSH clients running under the shell of the agent get access to its key.) Passphrases are given to the agent, which then decrypts the private key and lets clients access it password-free. A sample implementation of this, assuming keys created as in the earlier example and authorized on both 10.0.1.11 and 10.0.1.10:

First, we start the agent. Note that a child shell is named as the agent’s argument. If you don’t name a shell, you’ll get an error along the lines of “Could not open a connection to your authentication agent.”

Now, add the keys. If there’s no argument, the SSH1 key is added:

Enter passphrase for effugas@OTHERSHOE:

Identity added: /home/effugas/.ssh/identity (effugas@OTHERSHOE)

With an argument, the SSH2 key is tossed on:

Enter passphrase for /home/effugas/.ssh/id_dsa:

Identity added: /home/effugas/.ssh/id_dsa (/home/effugas/.ssh/id_dsa)

Now, let’s try to connect to a couple hosts that have been programmed to accept both keys:

$ ssh -1 effugas@10.0.1.10

Last login: Mon Jan 14 06:20:21 2002 from 10.0.1.56

[effugas@localhost effugas]$ ^D

$ ssh -2 effugas@10.0.1.11

FreeBSD 4.3-RELEASE (CURRENT-12-2-01) #1: Mon Dec 3 13:44:59 GMT 2001

Having achieved a connection to a remote host, we now have to figure out what to do. For any given SSH connection, we may execute commands on the remote server or establish various forms of network connectivity. We may even do both, sometimes providing ourselves a network path to the very server we just initiated.

Command Forwarding: Direct Execution for Scripts and Pipes

One of the most useful features of SSH derives from its heritage as a replacement for the r* series of UNIX applications. SSH possesses the capability to cleanly execute remote commands, as if they were local. For example, instead of typing:

$ ssh effugas@10.0.1.11

effugas@10.0.1.11’s password:

FreeBSD 4.3-RELEASE (CURRENT-12-2-01) #1: Mon Dec 3 13:44:59 GMT 2001

$ uptime

3:19AM up 18 days, 8:48, 5 users, load averages: 2.02, 2.04, 1.97

We could just type:

$ ssh effugas@10.0.1.11 uptime

effugas@10.0.1.11’s password:

3:20AM up 18 days, 8:49, 4 users, load averages: 2.01, 2.03, 1.97

Indeed, we can pipe output between hosts, such as in this trivial example:

Such functionality is extraordinarily useful for tunneling purposes. The basic concept of a tunnel is something that creates a data flow across a normally impenetrable boundary; there is little that is generically as impenetrable as the separation between two independent pieces of hardware. (A massive amount of work has been done in process compartmentalization, where a failure in one piece of code is almost absolutely positively not going to cause a failure somewhere else, due to absolute memory protection, CPU scheduling, and whatnot. Meanwhile, simply running your Web server and mail server code on different systems, possibly many different systems, possibly geographically spread over the globe, provides a completely different class of process separation.) SSH turns pipes into an inter-host communication subsystem. The rule becomes: Almost any time you’d use a pipe to transfer data between processes, SSH allows the processes to be located on other hosts.

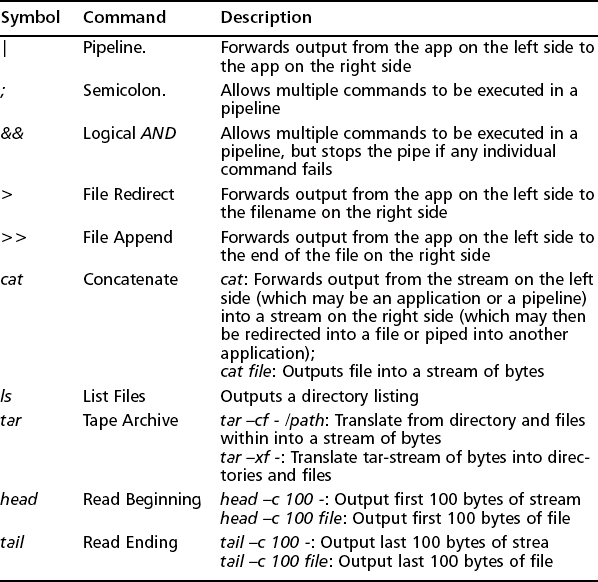

Remote pipe execution can be used to great effect—very simple command pipelines, suddenly able to cross server boundaries, can have extraordinarily useful effects. For example, most file transfer operations can be built using little more than a few basic tools that ship with almost all UNIX and Cygwin distributions. Some base elements are listed in Table 13.3:

From such simple beginnings, we can actually implement the basic elements of a file transfer system (see Table 13.4).

One of the very nice things about SSH is that, when it executes commands remotely, it does so in an extraordinarily restricted context. Trusted paths are actually compiled into the SSH daemon, and the only binaries SSH will execute without an absolute path are those in /usr/local/bin, /usr/bin, and /bin. (SSH also has the capability to forward environment variables, so if the client shell has any interesting paths, their names will be sent to the server as well. This is a slight sacrifice of security for a pretty decent jump in functionality.)

Port Forwarding: Accessing Resources on Remote Networks

Once we’ve got a link, SSH gives us the capability to create a “portal” of limited network connectivity from the client to the server, or vice versa. The portal is not total—simply running SSH does not magically encapsulate all network traffic on your system, any more than the existence of airplanes means you can flap your arms and fly. However, there do exist methods and systems for making SSH an extraordinarily useful network tunneling system.

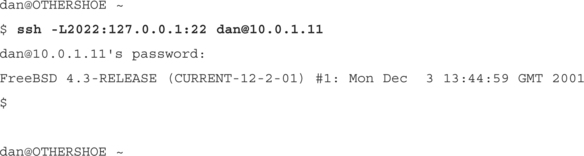

Local Port Forwards

A local port forward is essentially a request for SSH to listen on one client TCP port (UDP is not supported, for good reason but greater annoyance), and should any traffic come to it, to pipe it through the SSH connection into some specified machine visible from the server. Such local traffic could be sent to the external IP address of the machine, but for convenience purposes “127.0.0.1” and usually “localhost” refer to “this host”, no matter the external IP address.

The syntax for a Local Port Forward is pretty simple:

ssh –L listening_port:destination_host:destination_port
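The three colon-separated fields of that argument can be illustrated with a short Python sketch. It assumes the simple three-field form shown above (no bind address, no IPv6 literals); LocalForward, parse_local_forward, and ssh_command are hypothetical helper names, and user@host is a placeholder login.

```python
from typing import List, NamedTuple


class LocalForward(NamedTuple):
    listening_port: int      # TCP port the ssh client opens on the local machine
    destination_host: str    # host name as resolved by the SSH server's side
    destination_port: int    # port on that destination host


def parse_local_forward(spec: str) -> LocalForward:
    """Parse 'listening_port:destination_host:destination_port'."""
    parts = spec.split(":")
    if len(parts) != 3:
        raise ValueError("expected port:host:port, got %r" % spec)
    forward = LocalForward(int(parts[0]), parts[1], int(parts[2]))
    for port in (forward.listening_port, forward.destination_port):
        if not 0 < port < 65536:
            raise ValueError("port out of range: %d" % port)
    return forward


def ssh_command(forward: LocalForward, login: str) -> List[str]:
    """Build an argument vector for an ssh client with one local forward."""
    return ["ssh", "-L", "%d:%s:%d" % forward, login]


if __name__ == "__main__":
    fwd = parse_local_forward("6667:newyork.ny.us.undernet.org:6667")
    print(ssh_command(fwd, "user@host"))
```

The point worth noticing is which side interprets each field: the listening port belongs to the client, while the destination host and port are resolved from the server’s vantage point.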

Let’s walk through the effects of starting up a port forward, using IRC as an example.

This is the port we want to access from within another network—very useful when IRC doesn’t work from behind your firewall due to identd. This is the raw traffic that arrives when the port is connected to:

$ telnet newyork.ny.us.undernet.org 6667

Connected to newyork.ny.us.undernet.org.

NOTICE AUTH :*** Looking up your hostname

NOTICE AUTH :*** Found your hostname, cached

NOTICE AUTH :*** Checking Ident

We connect to a remote server and tell our SSH client to listen for localhost IRC connection attempts. If any are received, they are to be sent to what the remote host sees as newyork.ny.us.undernet.org, port 6667.

$ ssh effugas@libertiee.net -L6667:newyork.ny.us.undernet.org:6667

Last login: Mon Jan 14 06:22:19 2002 from some.net on pts/0

Linux libertiee.net 2.4.17 #2 Mon Dec 31 21:28:05 PST 2001 i686 unknown

Last login: Mon Jan 14 06:23:45 2002 from some.net

Let’s see if the forwarding worked—do we get the same output from localhost that we used to be getting from a direct connection? Better—identd is timing out, so we’ll actually be able to talk on IRC.

NOTICE AUTH :*** Looking up your hostname

NOTICE AUTH :*** Found your hostname, cached

NOTICE AUTH :*** Checking Ident

NOTICE AUTH :*** No ident response

Establishing a port forward is not enough; we must configure our systems to actually use the forwards we’ve created. This means going through localhost instead of direct to the final destination. The first method is to simply inform the app of the new address—quite doable when addressing is done “live,” that is, is not stored in configuration files:

*** Connecting to port 6667 of server 127.0.0.1

*** Found your hostname, cached

*** Welcome to the Internet Relay Network Effugas (from newyork.ny.us.undernet.org)

More difficult is when configurations are down a long tree of menus that are annoying to modify each time a simple server change is desired. For these cases, we actually need to remap the name: instead of the name newyork.ny.us.undernet.org returning its actual IP address to the application, it needs to return 127.0.0.1. For this, we modify the hosts file. This file is almost always checked before a DNS lookup is issued, and allows a user to manually map names to IP addresses. The syntax is trivial:

bash-2.05a$ tail -n1 /etc/hosts

Instead of sending IRC to 127.0.0.1 directly, we can modify the hosts file to contain the line:

effugas@OTHERSHOE /cygdrive/c/windows/system32/drivers/etc

127.0.0.1 newyork.ny.us.undernet.org

Now, when we run IRC, we can connect to the host using the original name—and it’ll still route correctly through the port forward!
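The effect of that hosts entry can be modeled in a few lines of Python. This is a simplified sketch of resolver behavior, assuming that the hosts file is consulted before DNS and that the first matching entry wins; parse_hosts and resolve are illustrative names, and the DNS fallback is a stand-in function rather than a real lookup.

```python
from typing import Callable, Dict


def parse_hosts(text: str) -> Dict[str, str]:
    """Map each name in hosts-file text to its address."""
    mapping: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()   # drop comments and blank lines
        if not line:
            continue
        address, *names = line.split()
        for name in names:
            mapping.setdefault(name.lower(), address)  # assumed: first entry wins
    return mapping


def resolve(name: str, hosts: Dict[str, str],
            dns_lookup: Callable[[str], str]) -> str:
    """Return the hosts-file address if present, else fall back to DNS."""
    return hosts.get(name.lower()) or dns_lookup(name)


if __name__ == "__main__":
    hosts = parse_hosts("127.0.0.1 localhost\n"
                        "127.0.0.1 newyork.ny.us.undernet.org\n")
    fake_dns = lambda name: "192.0.2.1"       # placeholder for a real DNS query
    print(resolve("newyork.ny.us.undernet.org", hosts, fake_dns))
    print(resolve("irc.slashnet.org", hosts, fake_dns))
```

The second lookup shows the catch: any name not listed in the file still resolves to its real address, so its traffic bypasses the forward.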

effugas@OTHERSHOE /cygdrive/c/windows/system32/drivers/etc

$ irc Timmy newyork.ny.us.undernet.org

*** Connecting to port 6667 of server newyork.ny.us.undernet.org

*** Found your hostname, cached

*** Welcome to the Internet Relay Network Timmy

Note that the location of the hosts file varies by platform. Almost all UNIX systems use /etc/hosts; Win9x uses \WINDOWS\HOSTS; WinNT uses \WINNT\SYSTEM32\DRIVERS\ETC\HOSTS; and WinXP uses \WINDOWS\SYSTEM32\DRIVERS\ETC\HOSTS. Considering that Cygwin supports symlinks (using Windows Shortcut files, no less!), it would probably be good for your sanity to execute something like ln –s HOSTSPATH HOSTS /etc/hosts.

Note that SSH Port Forwards aren’t really that flexible. They require destinations to be declared in advance, have a significant administrative expense, and have all sorts of limitations. Among other things, although it’s possible to forward one port for a listener and another for the sender (for example, -L16667:irc.slashnet.org:6667), you can’t address different port forwards by name, because they all end up resolving back to 127.0.0.1. You also need to know exactly what hosts need to get forwarded; attempting to browse the Web, for example, is a dangerous proposition. Besides the fact that it’s impossible to adequately deal with pages that are served off multiple addresses (each of the port 80 HTTP connections is sent to the same server), any servers that aren’t included in the hosts file will “leak” onto the outside network.

Mind you, SSL has similar weaknesses for Web traffic; it’s just that HTTPS (HTTP-over-SSL) pages are generally engineered to not spread themselves across multiple servers (indeed, it’s a violation of the spec, because the lock and the address would refer to multiple hosts).

Local forwards, however, are far from useless. They’re amazingly useful for forwarding all single-port, single-host services. SSH itself is a single-port, single-host service, and as we show a bit later, that makes all the difference.

Dynamic Port Forwards

That local port forwards are a bit unwieldy doesn’t mean that SSH can’t be used to tunnel many different types of traffic. It just means that a more elegant solution needs to be employed, and indeed, one has been found. Some examination of the SSH protocols themselves revealed that, while the listening port began awaiting connections at the beginning of the session, the client didn’t actually inform the server of the destination of a given forwarding until the connection was actually established. Furthermore, this destination information could change from TCP session to TCP session, with one listener being redirected, through the SSH tunnel, to several different endpoints. If only there were a simple way for applications to dynamically inform SSH of where they intended a given socket to point to, the client could create the appropriate forwarding on demand. Enter SOCKS4.

An ancient protocol, the SOCKS4 protocol was designed to provide the absolute simplest way for a client to inform a proxy of which server it actually intended to connect to. Proxies are little more than servers with a network connection clients wish to access; the client issues to the proxy a request for the server it really wanted to connect to, and the proxy actually issues the network request and sends the response back to the client. That’s exactly what we need for the dynamic directing of SSH port forwards, so perhaps we could use a proxy control protocol like SOCKS4? Composed of but a few bytes back and forth at the beginning of a TCP session, the protocol has zero per-packet overhead, is already integrated into large numbers of pre-existing applications, and even has mature wrappers available to make any (non-suid) network-enabled application proxy-aware.
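Those “few bytes back and forth” are small enough to show in full. The Python sketch below builds a SOCKS4 CONNECT request and checks the eight-byte reply, following the published SOCKS4 layout (version 4, command 1, two-byte port, four-byte IPv4 address, NUL-terminated user ID; reply code 90 means granted). The function names are illustrative and are not taken from OpenSSH or any SOCKS library.

```python
import socket
import struct


def build_socks4_connect(dest_ip: str, dest_port: int, user_id: bytes = b"") -> bytes:
    """Return the request a client sends first on a new connection to the proxy."""
    # VN=4, CD=1 (CONNECT), DSTPORT (big-endian), DSTIP, USERID, terminating NUL
    header = struct.pack(">BBH", 4, 1, dest_port)
    return header + socket.inet_aton(dest_ip) + user_id + b"\x00"


def parse_socks4_reply(reply: bytes) -> bool:
    """True if the proxy granted the request (reply version 0, code 90)."""
    if len(reply) != 8:
        raise ValueError("a SOCKS4 reply is exactly 8 bytes")
    return reply[0] == 0 and reply[1] == 90


def open_via_socks4(proxy_host: str, proxy_port: int,
                    dest_ip: str, dest_port: int) -> socket.socket:
    """Connect through a SOCKS4 proxy; afterwards the socket carries raw data."""
    sock = socket.create_connection((proxy_host, proxy_port))
    sock.sendall(build_socks4_connect(dest_ip, dest_port))
    reply = b""
    while len(reply) < 8:
        chunk = sock.recv(8 - len(reply))
        if not chunk:
            break
        reply += chunk
    if len(reply) != 8 or not parse_socks4_reply(reply):
        sock.close()
        raise ConnectionError("SOCKS4 request was rejected")
    return sock


if __name__ == "__main__":
    print(build_socks4_connect("10.0.1.11", 22).hex())
```

After the nine-byte request and eight-byte reply, nothing further is added to the stream, which is where the zero per-packet overhead comes from.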

It was a perfect fit. The applications could request and the protocol could respond; all that was needed was for the client to understand. And so we built support for it into OpenSSH, with first public release in 2.9.2p2 (only the client needs to be upgraded, though newer servers are much more stable when used for this purpose), and suddenly, the poor man’s VPN was born. Starting up a dynamic forwarder is trivial; the syntax merely requires a port to listen on: ssh -Dlistening_port user@host. For example:

$ ssh effugas@10.0.1.10 -D1080

Enter passphrase for key ‘/home/effugas/.ssh/id_dsa’:

Last login: Mon Jan 14 12:08:15 2002 from localhost.localdomain

This will cause all connections to 127.0.0.1:1080 to be sent encrypted through 10.0.1.10 to any destination requested by an application. Getting applications to make these requests is a bit inelegant, but is much simpler than the contortions required for static local port forwards. We’ll provide some sample configurations now.

Internet Explorer 6: Making the Web Safe for Work

Though simple Web pages can easily be forwarded over a simple, static local port forward, complex Web pages just fail miserably over SSH—or at least, they used to. Configuring a Web browser to use the dynamic forwarder described earlier is pretty trivial. The process for Internet Explorer involves the following steps:

1. Select Tools | Internet Options.

2. Choose the Connections tab.

3. Click LAN Settings. Check Use a Proxy Server and click Advanced.

4. Go to the text box for SOCKS. Fill in 127.0.0.1 as the host, and 1080 (or whatever port you chose for the dynamic forward) for the port.

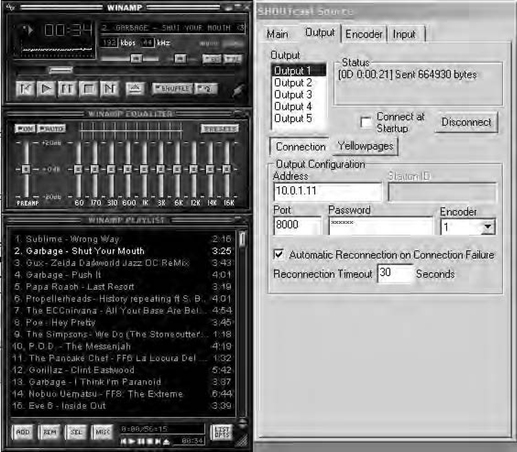

Now go access the Web—if it works at all, it’s most likely being proxied over SSH. Assuming everything worked, you’ll see something like Figure 13.2.

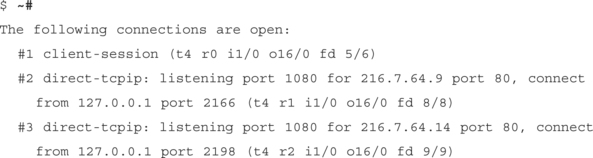

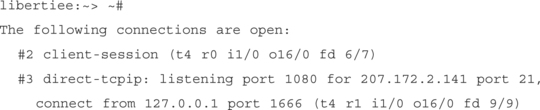

To verify that the link is indeed traveling over SSH, type ~# in your SSH window. This will bring up a live view of which port forwards are active:

Speak Freely: Instant Messaging over SSH

Though there will probably be a few old-school hackers who might howl about this, instant messaging is one of the killer applications of the Net. There are two major things that are incredibly annoying about public-level (as opposed to corporate/internal) instant messaging circa early 2002: First, to be blunt, there’s really very little privacy. Messages are generally sent in plaintext from your desktop to the central servers and back out, and anyone in your school or your work might very well sniff the messages along the way.

The other major annoying thing is the lack of decent standards for instant messaging. Though the IETF is working on something known as SIMPLE (an extension on SIP), everyone has their own protocol, and nobody can interact. We don’t need four phones to communicate voice across the world, yet we need up to four clients to communicate words across the Internet.

But such has been the cost of centralized instant messaging, which has significantly more reliability and firewall penetration than a peer-to-peer system like ICQ (which eventually absorbed some amount of centralization). Still, it’d be nice if there were some way to mitigate the downsides of chat.

One Ring To Bind Them: Trillian over SSH

Trillian, a free and absolutely brilliant piece of Win32 code, is an extraordinarily elegant and full-featured chat client with no ads but support for Yahoo, MSN, ICQ, AOL, and even IRC. It provides a unified interface to all five services as well as multiuser profiles for shared systems.

It also directly supports SOCKS4 proxies—meaning that although we can’t easily avoid raw plaintext hitting the servers (although there is a SecureIM mode that allows two Trillian users to communicate more securely), we can at least export our plaintext outside our own local networks, where eyes pry hardest if the traffic can pass through at all. Setting up SOCKS4 support in Trillian is pretty simple:

1. Click on the big globe in the lower left-hand corner and select Preferences.

2. Select Proxy from the list of items on the left side—it’s about nine entries down.

3. Check off Use Proxy and SOCKS4.

4. Insert 127.0.0.1 as the host and 1080 (or whatever other port you used) for the port.

5. Click OK and start logging into your services. They’ll all go over SSH now.

You Who? Yahoo IM 5.0 over SSH

Yahoo should just work automatically when Internet Explorer is configured for the localhost SOCKS proxy, but it tries to use SOCKS version 5 instead of 4, which isn’t supported yet. Setting up Yahoo over SOCKS4/SSH is pretty simple anyway:

1. Select Login | Preferences before logging in.

4. Use Server Name 127.0.0.1 and Port 1080 (or whatever else you used).

Just make sure you actually have a dynamic forward bouncing off an SSH server somewhere and you’ll be online. Remember to disable the proxy configuration later if you lose the dynamic forward.

Cryptokiddies: AOL Instant Messenger 5.0 over SSH

Setting this up is also pretty trivial. Remember—without that dynamic forward bouncing off somewhere, like your server at home or school, you’re not going anywhere.

1. Select My AIM | Edit Options | Edit Preferences.

2. Click Sign On/Off along the bar on the left.

3. Click Connection to “configure AIM for your proxy server”.

4. Check Connect Using Proxy, and select SOCKS4 as your protocol.

5. Use 127.0.0.1 as your host and 1080 (or whatever else you used) for your port.

6. Click OK on both windows that are up. You’ll now be able to log in—just remember to disable the proxy configuration if you want to directly connect through the Internet once again.

That’s a Wrap: Encapsulating Arbitrary Win32 Apps within the Dynamic Forwarder

Pretty much any application that runs on outgoing TCP messages can be pretty easily run through Dynamic Forwarding. The standard tool on Win32 (we discuss UNIX in a bit) for SOCKS Encapsulation is SocksCap, available from the company that brought you the TurboGrafx-16: NEC. NEC invented the SOCKS protocol, so this isn’t too surprising. Found at www.socks.nec.com/reference/sockscap.html, SocksCap provides an alternate launcher for apps that may on occasion need to travel through the other side of a SOCKS proxy without necessarily having the benefit of the 10 lines of code needed to support the SOCKS4 protocol (sigh).

SocksCap is trivial to use. The first thing to do upon launching it is go to File | Settings, put 127.0.0.1 into the Server field and 1080 for the port. After you click OK, simply drag shortcuts of apps you’d rather run through the SSH tunnel onto the SocksCap window—you can actually drag entries straight off the Start menu into SocksCap Control (see Figure 13.3). These entries can either be run directly or can be added as a “profile” for later execution.

Most things “just work;” one thing in particular is good to see going fast through SSH: FTP.

File This: FTP over SSH Using LeechFTP

FTP support for SSH has long been a bit of an albatross for it; the need to somehow manage a highly necessary but completely inelegant protocol has long haunted the package. SSH.com and MindTerm both implemented special FTP translation layers for their latest releases to address this need; OpenSSH by contrast treats FTP as any other nontrivial protocol and handles it well.

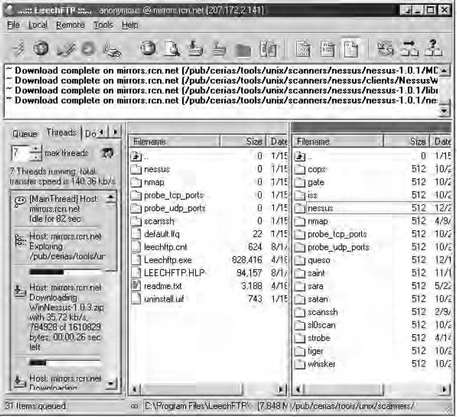

The preeminent FTP client for Windows is almost certainly Jan Debis’ LeechFTP, available at http://stud.fh-heilbronn.de/~jdebis/leechftp/files/lftp13.zip. Free, multithreaded, and simple to use, LeechFTP encapsulates beautifully within SocksCap and OpenSSH. The one important configuration it requires is to switch from Active FTP (where the server initiates additional TCP connections to the client, within which individual files will be transferred) to Passive FTP (where the server names TCP ports to which the client connects in order to receive an individual file); this is done as follows:


4. Click OK and connect to some server. The lightning bolt in the upper left-hand corner (see Figure 13.4) is a good start.
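Passive mode works through a proxy because the client, not the server, opens every data connection. As a rough illustration, this Python sketch decodes the standard “227 Entering Passive Mode (h1,h2,h3,h4,p1,p2)” reply into the host and port the client would then connect to (through the SOCKS forwarder, in this setup). The function name is hypothetical and the sample reply text is made up for the example.

```python
import re
from typing import Tuple

# Six comma-separated decimal numbers inside parentheses: h1,h2,h3,h4,p1,p2
_PASV_NUMBERS = re.compile(r"\((\d+),(\d+),(\d+),(\d+),(\d+),(\d+)\)")


def parse_pasv_reply(reply: str) -> Tuple[str, int]:
    """Extract (host, port) from an FTP 227 passive-mode reply."""
    if not reply.startswith("227"):
        raise ValueError("not a passive-mode reply: %r" % reply)
    match = _PASV_NUMBERS.search(reply)
    if match is None:
        raise ValueError("no address found in reply: %r" % reply)
    numbers = [int(group) for group in match.groups()]
    if any(number > 255 for number in numbers):
        raise ValueError("address field out of range: %r" % reply)
    host = ".".join(str(number) for number in numbers[:4])
    port = numbers[4] * 256 + numbers[5]      # high byte first
    return host, port


if __name__ == "__main__":
    print(parse_pasv_reply("227 Entering Passive Mode (10,0,1,11,19,137)"))
```

In Active FTP the roles are reversed and the server would try to connect back to the client, which a client-side SOCKS forwarder has no way to accept.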

And how well does it do? Take a look at Figure 13.4. Seven threads are sucking data at full speed using dynamically specified ports—works for me:

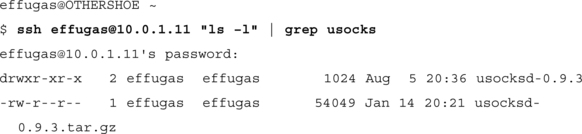

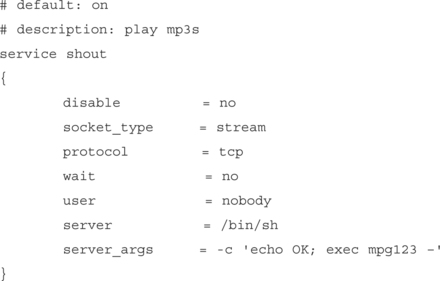

Summoning Virgil: Using Dante’s Socksify to Wrap UNIX Applications

Though some UNIX tools directly support SOCKS for firewall traversal, the vast majority don’t. Luckily, we can add support for SOCKS at runtime to all dynamically linked applications using the client component of Dante, Inferno Nettverks’ industrial-strength implementation of SOCKS4/SOCKS5. You can find Dante at ftp://ftp.inet.no/pub/socks/dante-1.1.11.tar.gz; though complex, it compiles on most platforms.

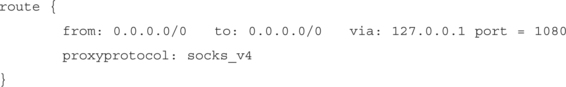

After installation, the first thing to do is set up the system-level SOCKS configuration. It’s incredibly annoying that we have to do this, but there’s no other way (for now). Create a file named /etc/socks.conf and place this into it:

Now, when you execute applications, prefacing them with socksify will cause them to communicate over a dynamic forwarder set up on 1080. Because we’re stuck with a centralized SOCKS configuration file, we need to both have root access to the system we’re working on and restrict ourselves to only one dynamic forwarder at a time—check www.doxpara.com/tradecraft or the book’s Web site www.syngress.com/solutions for updates on this annoying limitation. Luckily, a few applications—Mozilla and Netscape, most usefully—do have internal SOCKS support and can be configured much like Internet Explorer could. Unluckily, setuid apps (ssh often included, though it doesn’t need setuid anymore) cannot be generically forwarded in this manner. All in all, though, most things work. After SSHing into libertiee with –D1080, this works:

Of course, we verify the connection is going through our SSH forward like so:

Remote Port Forwards

The final type of port forward that SSH supports is known as the remote port forward. Although both local and dynamic forwards effectively imported network resources—an IRC server on the outside world became mapped to localhost, or every app under the sun started talking through 127.0.0.1:1080—remote port forwards actually export connectivity available to the client onto the server it’s connected to. Syntax is as follows:

ssh -R listening_port:destination_host:destination_port

It’s just the same as a local port forward, except now the listening port is on the remote machine, and the destination ports are the ones normally visible to the client.

One of the more useful services to forward, especially on the Windows platform (we talk about UNIX style forwards later) is WinVNC. WinVNC, available at www.tightvnc.com, provides a simple to configure remote desktop management interface—in other words, I see your desktop and can fix what you broke. Remote port forwarding lets you export that desktop interface outside your firewall into mine.

Do we have the VNC server running? Yup:

Connect to another machine, forwarding its port 5900 to our own port 5900.

$ ssh -R5900:127.0.0.1:5900 effugas@10.0.1.11

effugas@10.0.1.11’s password:

FreeBSD 4.3-RELEASE (CURRENT-12-2-01) #1: Mon Dec 3 13:44:59 GMT 2001

Test if the remote machine sees its own port 5900 just like we did when we tested our own port:

Note that remote forwards are not particularly public; other machines on 10.0.1.11’s network can’t see this port 5900. The GatewayPorts option in SSHD must be set to allow this—however, such a setting is unnecessary, as later sections of this chapter will show.

When in Rome: Traversing the Recalcitrant Network

You have a server running sshd and a client with ssh. They want to communicate, but the network isn’t permeable enough to allow it—packets are getting dropped on the floor, and the link isn’t happening. What to do? Permeability, in this context, is usually determined by one of two things: What’s being sent, and who’s sending. Increasing permeability then means either changing the way SSH is perceived on the network, or changing the path the data takes through the network itself.

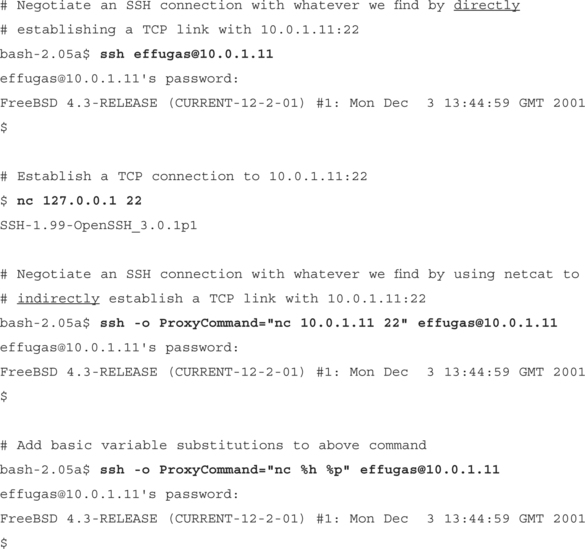

Crossing the Bridge: Accessing Proxies through ProxyCommands

It is actually a pretty rare network that doesn’t directly permit outgoing SSH connectivity; when such access isn’t available, it is often because the network restricts all outgoing connectivity, forcing it through application-layer proxies. This isn’t completely misguided: proxies are a much simpler method of providing back-end network access than modern NAT solutions, and for certain protocols they have the added benefit of being much more amenable to caching. So proxies aren’t useless. There are many, many different proxy methodologies, but because they generally add little or nothing to the cause of outgoing connection security, the OpenSSH developers had no desire to place support for any of them directly inside the SSH client. Implementing each of these proxying methodologies directly into SSH would be a Herculean task.

So instead of direct integration, OpenSSH added a general–purpose option known as ProxyCommand. Normally, SSH directly establishes a TCP connection to some port on a given host and negotiates an SSH protocol link with whatever daemon it finds there. ProxyCommand disables this TCP connection, instead routing the entire session through a standard I/O stream passed into and out of some arbitrary application. This application would apply whatever transformations were necessary to get the data through the proxy, and as long as the end result was a completely clean link to the SSH daemon, the software would be happy. The developers even added a minimal amount of variable completion with a %h and %p flag, corresponding to the host and port that the SSH client would be expecting, if it was actually initiating the TCP session itself. (Host authentication, of course, matches this expectation.)
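The %h and %p substitution is simple enough to mimic. The sketch below only illustrates what the client does with a ProxyCommand template before launching it; the function name expand_proxy_command is hypothetical (it is not part of OpenSSH), and it handles only the two tokens mentioned above.

```shell
#!/bin/sh
# Illustrative only: mimic how the ssh client fills in a ProxyCommand template.
# expand_proxy_command is a hypothetical helper, not part of OpenSSH.
#   $1 = template containing %h and/or %p
#   $2 = host the client was asked to reach
#   $3 = port the client was asked to reach
expand_proxy_command() {
    printf '%s\n' "$1" | sed -e "s/%h/$2/g" -e "s/%p/$3/g"
}

# The proxy address is the one used in this chapter's examples.
expand_proxy_command 'connect -H 10.0.1.11:20080 %h %p' 10.0.1.10 22
```

The expanded command is then run with its standard input and output wired to the SSH session, so any program that ends up as a clean byte pipe to the daemon will do.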

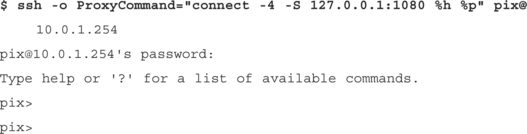

The most flexible ProxyCommand developed has been Shun-ichi Goto’s connect.c. You can find this elegant little application at www.imasy.or.jp/~gotoh/connect.c, or www.doxpara.com/tradecraft/connect.c. It supports SOCKS4 and SOCKS5 with authentication, and HTTP without:

$ ssh -o ProxyCommand="connect.exe -4 -S [email protected]:20080 %h %p"

[email protected]’s password:

Last login: Mon Jan 14 03:24:06 2002 from 10.0.1.11

$ ssh -o ProxyCommand="connect.exe -5 -S foo@10.0.1.11:20080 %h %p" effugas@10.0.1.10

[email protected]’s password:

Last login: Mon Jan 14 03:24:06 2002 from 10.0.1.11

SSH over HTTP (HTTP CONNECT, using connect.c)

$ ssh -o ProxyCommand="connect.exe -H 10.0.1.11:20080 %h %p" effugas@10.0.1.10

[email protected]’s password:

No Habla HTTP? Permuting thy Traffic

ProxyCommand functionality depends on the capability to redirect the necessary datastream through standard input/output: essentially, what comes from the “keyboard” and is sent to the “screen” (though these concepts get abstracted). Not all systems support this level of communication. One in particular, nocrew.org’s httptunnel (available at www.nocrew.org/software/httptunnel.html), is extraordinarily useful, for it allows SSH connectivity over a network that will pass genuine HTTP traffic and nothing else. Any proxy that supports Web traffic will support httptunnel, although, to be frank, you’ll certainly stick out even if your traffic is encrypted.

Httptunnel operates much like a local port forward: a port on the local machine is set to point at a port on a remote machine, though in this case the remote port must be specially configured to support the server side of the httptunnel connection. Furthermore, whereas with local port forwards the client may specify the destination, httptunnel’s destination is configured at server launch time. This isn’t a problem for us, though, because we’re using httptunnel as a method of establishing a link to a remote SSH daemon.

Start the httptunnel server on 10.0.1.10 that will listen on port 10080 and forward all httptunnel requests to its own port 22:

[effugas@localhost effugas]$ hts 10080 -F 127.0.0.1:22

Start an httptunnel client on the client machine that will listen on port 10022 and bounce any traffic that arrives through the HTTP proxy on 10.0.1.11:8888 into whatever is being hosted by the httptunnel server at 10.0.1.10:10080:

$ htc -F 10022 -P 10.0.1.11:8888 10.0.1.10:10080

Connect ssh to the local listener on port 10022, making sure that we end up at 10.0.1.10:

$ ssh -o HostKeyAlias=10.0.1.10 -o Port=10022 [email protected]

Enter passphrase for key '/home/effugas/.ssh/id_dsa':

Last login: Mon Jan 14 08:45:40 2002 from 10.0.1.10
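Typing the HostKeyAlias and Port options every time gets old. One way to keep them together, sketched here under the assumption that the htc listener above is already running, is a client configuration stanza. The alias work-via-http and the temporary file are made up for the example; HostName, Port, and HostKeyAlias are standard ssh_config keywords.

```shell
#!/bin/sh
# Write a throwaway ssh client config describing the httptunnel hop.
# "work-via-http" is a made-up alias; the addresses are the ones used above.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
Host work-via-http
    HostName 127.0.0.1
    Port 10022
    HostKeyAlias 10.0.1.10
EOF

# Print the command instead of running it; htc must already be listening.
echo "ssh -F $cfg work-via-http"
```

HostKeyAlias is what keeps host authentication honest here: the TCP connection goes to 127.0.0.1, but the key that comes back is checked against the entry stored for 10.0.1.10.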

Latency suffers a bit (everything is going over standard GETs and POSTs), but it works. Sometimes, however, the problem is less in the protocol and more in the fact that there’s just no route to the other host. For these issues, we use path–based hacks.

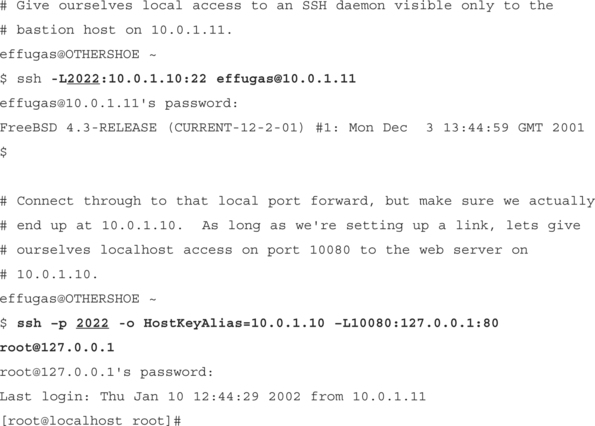

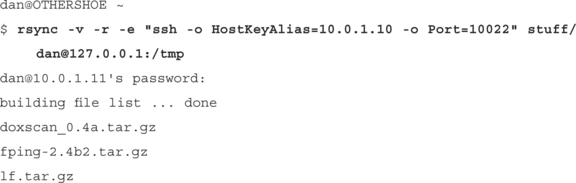

Show Your Badge: Restricted Bastion Authentication

Many networks are set up as follows: One server is publicly accessible on the global Internet, and provides firewall, routing, and possibly address translation services for a set of systems behind it. Such a server is known as a bastion host: it is the interface between the private network and the real world.

Administrators very commonly need to remotely administer one of the systems behind the bastion. This is usually done like this:

[email protected]’s password:

FreeBSD 4.3-RELEASE (CURRENT-12-2-01) #1: Mon Dec 3 13:44:59 GMT 2001

[email protected]’s password:

Last login: Thu Jan 10 12:43:40 2002 from 10.0.1.11

Sometimes it’s even summarized nicely as ssh [email protected] “ssh [email protected]”. However it’s done, this method is brutally insecure and leads to horribly effective mass penetrations of backend systems. The reason is simple: Which host is legitimately trusted to access the private destination? The original client, generally with the user physically sitting in front of its CPU. What host is actually accessing the private destination? Whose SSH client is accessing the final SSH server? The bastion’s! It is the bastion host that receives and retransmits the plaintext password. It is the bastion host that decrypts the private traffic and may or may not choose to retransmit it unmolested to the original client. It is only by choice that the bastion host may or may not decide to permanently retain that root access to the backend host. (Even one-time passwords will not protect you from a corrupted server that simply does not report the fact that it never logged out.) These threats are not merely theoretical: major compromises on Apache.org and Sourceforge, two critical services in the Open Source community, were traced back to Trojan horses in SSH clients on prominent servers.

These threats can, however, be almost completely eliminated.

Bastion hosts provide the means to access hosts that are otherwise inaccessible from the global Internet. People authenticate against them so as to gain access to these pathways. This authentication is completed using an SSH client, against an SSH daemon on the server. Because we already have one SSH client that we (have to) trust, why are we depending on someone else’s as well? Using port forwarding, we can parlay the trust the bastion has in us into a direct connection into the host we wanted to connect to in the first place. We can even gain end–to–end secure access to network resources available on the private host, from the middle of the public Net!

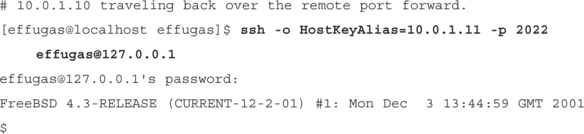

Like any static port forward, this works great for one or two hosts when the user can remember which local ports map to which remote destinations, but usability begins to suffer terribly as the need for connectivity increases. Dynamic forwarding provides the answer: We’ll have OpenSSH dynamically specify the tunnels it requires to administer the private hosts behind the bastion. Because OpenSSH lacks the SOCKS4 Client support necessary to direct its own Dynamic Forwards, we’ll once again use Goto’s connect as a ProxyCommand—only this time, we’re bouncing off our own SSH client instead of some open proxy on the network.
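As a rough sketch of the two steps, assuming Goto’s connect is installed as connect on the client and that local port 1080 is free: the first command opens the dynamic forward through the bastion, and the second bounces an SSH session for a private host off it. The helper names and the logins passed to them are placeholders, and the functions only print the commands rather than run them.

```shell
#!/bin/sh
# Hypothetical helpers that print, rather than run, the two commands.

# Step 1: authenticate to the bastion and open a SOCKS listener locally.
#   $1 = login on the bastion, $2 = local port for the dynamic forward
bastion_socks_cmd() {
    printf 'ssh -N -D %s %s\n' "$2" "$1"
}

# Step 2: reach a private host through that listener with connect (SOCKS4).
#   $1 = login on the private host, $2 = same local port as in step 1
via_bastion_cmd() {
    printf 'ssh -o ProxyCommand="connect -4 -S 127.0.0.1:%s %%h %%p" %s\n' "$2" "$1"
}

bastion_socks_cmd admin@bastion.example 1080
via_bastion_cmd root@10.0.1.10 1080
```

Because the second session is negotiated end to end by our own client, the bastion relays only ciphertext, and pointing at a different private host means changing nothing but the final argument.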

Access another host without reconfiguring the bastion link. Note that nothing at all changes except for the final destination:

Still, it is honestly inconvenient to have to set up a forwarding connection in advance. One solution would be to, by some method, have the bastion SSH daemon pass you, via standard I/O, a direct link to the SSH port on the destination host. With this capability, SSH could act as its own ProxyCommand: The connection attempt to the final destination would proxy through the connection attempt to the intermediate bastion.

This can actually be implemented, with some inelegance. SSH, as of yet, does not have the capacity to translate between encapsulation types—port forwarders can’t point to executed commands, and executed commands can’t directly travel to TCP ports. Such functionality would be useful, but we can do without it by installing, server side, a translator from standard I/O to TCP. Netcat, by Hobbit (Windows port by Chris Wysopal), exists as a sort of “Network Swiss Army Knife” and provides this exact service.

$ ssh -o ProxyCommand="ssh [email protected] nc %h %p" [email protected]

[email protected]’s password:

[email protected]’s password:

Last login: Thu Jan 10 15:10:41 2002 from 10.0.1.11

Such a solution is moderately inelegant: the client should really be able to do this translation internally, and in the near future there may well be a patch to ssh providing a -W host:port option that does this translation client side instead of server side. But at least using netcat works, right?
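For readers on newer software: later OpenSSH releases did gain a -W host:port option that forwards the client’s standard I/O to the given host and port over the secure channel. A minimal sketch, assuming a client recent enough to have -W; the helper name and logins are placeholders, and the function only prints the command.

```shell
#!/bin/sh
# Hypothetical helper: print an ssh command that proxies through a bastion
# using the client's own -W host:port mode instead of netcat on the server.
#   $1 = login on the bastion, $2 = login on the final destination
netcat_mode_cmd() {
    printf 'ssh -o ProxyCommand="ssh -W %%h:%%p %s" %s\n' "$1" "$2"
}

netcat_mode_cmd admin@bastion.example root@10.0.1.10
```

This still requires the bastion’s sshd to permit TCP forwarding, but nothing beyond sshd has to be installed there.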

There is a problem. Some obscure cases of remote command execution have commands leaving file descriptors open even after the SSH connection dies. The daemon, wishing to serve these descriptors, refuses to kill either the app or itself. The end result is zombified processes—and unfortunately, command forwarding nc can cause this case to occur. As of the beginning of 2002, these issues are a point of serious discord among OpenSSH developers, for the same code that obsessively prevents data loss from forwarded commands also quickly forms zombie processes out of slightly quirky forwarded commands. Caveat Hacker!

Network administrators wishing to enforce safe bastion activity may go to such lengths as to remove all network client code from the server, including Telnet, ssh, even lynx. As a choke point running user-supplied software, the bastion host makes for a uniquely attractive and vulnerable concentration of connectivity to attack. If it weren’t even less secure (or technically infeasible) to trust every backend host to completely manage its own security, the bastion concept would be more dangerous than it was worth.

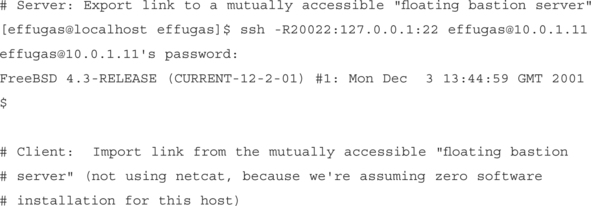

Bringing the Mountain: Exporting SSHD Access

A bastion host is quite useful, for it allows a network administrator to centrally authenticate mere access to internal hosts. Using the standards discussed in the previous chapter, without providing strong authentication to the host in the middle, the ability to even transmit connection attempts to backend hosts is suppressed. But centralization has its own downsides, as Apache.org and Sourceforge found—catastrophic and widespread failure is only a single Trojan horse away. We got around this by restricting our use of the bastion host: As soon as we had enough access to connect to the one unique resource the bastion host offered—network connectivity to hosts behind the firewall—we immediately combined it with our own trusted resources and refused to unnecessarily expose ourselves any further.

End result? We are left as immune to corruption of the bastion host as we are to corruption of the dozens of routers that may stand between us and the hosts we seek. This isn’t unexpected—we’re basically treating the bastion host as an authenticating router and little more. Quite useful.

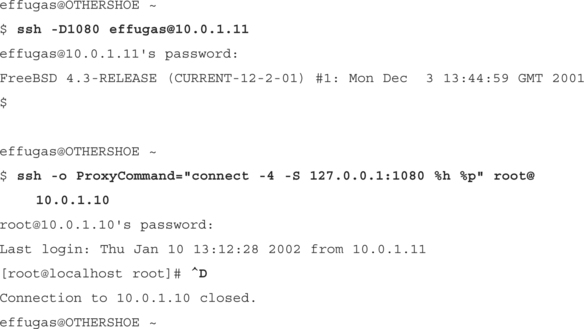

But what if there is no bastion host?

What if the machine to manage is at home, on a DSL line, behind one of LinkSys’s excellent Cable/DSL NAT Routers (the only devices known that can NAT IPSec reliably), and there’s no possibility of an SSH daemon showing up directly on an external interface?

What if, possibly for good reason, there’s a desire to expose no services to the global Internet? Older versions of SSH and OpenSSH ended up developing severe issues in their SSH1 implementations, so even the enormous respect the Internet community has for SSH doesn’t necessarily justify the risk of being penetrated.

What if the need for remote management is far too fleeting to justify the hardware or even the administration cost of a permanent bastion host?

No problem. Just don’t have a permanent server. A bastion host is little more than a system through which the client can successfully communicate with the server; although it is convenient to have permanent infrastructure and user accounts set up to manage this communication, it’s not particularly necessary. SSH can quite effectively export access to its own daemon through the process of setting up Remote Port Forwards. Let’s suppose that the server can access the client, but not vice versa—a common occurrence in the realm of multilayered security, where higher levels can communicate down:

bash-2.05a$ ssh -R2022:10.0.1.11:22 [email protected]

[email protected]’s password:

So even though the host at work that we are sitting on is firewalled from the outside world, we can SSH to our box at home, and give it a local port to connect to, which will give it access to the SSH daemon on our work machine.
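From the home machine, the return trip might look like the following sketch. It assumes the remote forward above is still up and that 2022 is the port passed to -R; the helper name and the login are placeholders, and the function only prints the command.

```shell
#!/bin/sh
# Hypothetical helper: print the command run on the HOME box to reach the
# work machine's sshd through the port opened by the remote forward.
#   $1 = forwarded port on the home box
#   $2 = login on the work machine
#   $3 = name under which the work machine's host key is stored
connect_back_cmd() {
    printf 'ssh -p %s -o HostKeyAlias=%s %s@127.0.0.1\n' "$1" "$3" "$2"
}

connect_back_cmd 2022 user 10.0.1.11
```

As with the httptunnel example, HostKeyAlias makes the client check the key it receives against the work machine’s entry rather than against whatever is stored for 127.0.0.1.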

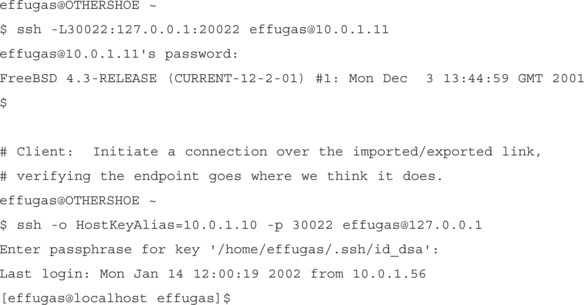

Echoes in a Foreign Tongue: Cross–Connecting Mutually Firewalled Hosts