Chapter 8. HCI Business Benefits and Use Cases

This chapter covers the following key topics:

Hyperconverged Infrastructure (HCI) Business Benefits: Covers HCI business benefits as seen by chief information officers (CIOs) and IT managers who want justification for moving from a legacy storage area network (SAN) and converged environment to hyperconverged. This includes faster deployment cycles, easier scalability with pay as you grow, a better operational model inside the IT organization, easier system management, cloud-like agility, better availability at lower costs, better entry point costs, and better total cost of ownership (TCO).

HCI Use Cases: Discusses the multitude of HCI use cases ranging from simple server virtualization to more complex environments. It includes details about sample applications such as DevOps, virtual desktops, remote office branch office, edge computing, tier-1 enterprise class applications, backup, and disaster recovery.

So, what does hyperconvergence bring to the table? Hyperconvergence promises to bring simplicity, agility, and cost reduction in building future data centers. Hyperconvergence is often described as a “webscale” architecture, an industry term meaning that it brings the benefits and scale of provider cloud–based architectures to the enterprise. Although convergence eliminated some of the headaches that IT professionals had in integrating multiple technologies from different vendors, it didn’t simplify the data center setup or reduce its size, complexity, and time of deployment. And although software-defined storage (SDS) applied to a legacy environment can improve the storage experience and efficiency, it does not address the fact that traditional storage area networks (SANs) remain expensive and hard to scale in the face of the data explosion and the changing needs of storage. This chapter discusses the business benefits of hyperconvergence and its use cases.

HCI Business Benefits

Hyperconverged infrastructure (HCI) brings a wealth of business benefits that cannot be overlooked by CIOs and IT managers. As the focus shifts to the speed of application deployment for faster customer growth, IT managers do not want to be bogged down by the complexity of the infrastructure; rather, they want to focus on expanding the business. As such, HCI brings many business benefits in the areas of faster deployment, scalability, simplicity, and agility at much lower costs than traditional deployments. The HCI business benefits can be summarized as follows: fast deployment, easier-to-scale infrastructure, enhanced IT operational model, easier system management, public cloud agility in a private cloud, higher availability at lower costs, low-entry cost structure, and reduced total cost of ownership.

Fast Deployment

Compared to existing data centers that take weeks and months to be provisioned, HCI deployments are provisioned in minutes. When HCI systems are shipped to the customer, they come in preset configurations. Typical HCI systems come in a minimum configuration of three nodes to allow for a minimum level of hardware redundancy. Some vendors are customizing sets of two nodes or even one node for environments like remote offices. The system normally has all the virtualization software preinstalled, such as ESXi, Hyper-V, or kernel-based virtual machine (KVM) hypervisors. The distributed file system that delivers the SDS functionality is also preinstalled.

Once the hardware is installed and the system is initiated, it is automatically configured through centralized management software. The system discovers all its components and presents the user with a set of compute and storage resources to be used. The administrator can immediately allocate a chunk of storage to an application and increase or decrease the size of that storage as needed.

The environment is highly flexible and agile because the starting point is the application. The networking and storage policies are assigned based on the application needs.

Enterprises benefit greatly from the shorter deployment times by spending less time on the infrastructure and more time on customizing revenue-generating applications.

Easier-to-Scale Infrastructure

Scaling SANs to support a higher number of applications and storage requirements was traditionally painful. Storage arrays come in a preset configuration with a certain number of disks, memory, and central processing units (CPUs). Different systems are targeted to different markets, such as small and medium-sized businesses (SMBs) and large enterprises (LEs). Storage arrays were traditionally scale-up systems, meaning that the system can grow to a maximum configuration, at which point it runs out of storage capacity, memory, or CPU horsepower. At that point, enterprises must either get another storage array to handle the extra load or go through a painful refresh cycle of the existing system. Storage arrays are not cheap; the smallest configuration can run hundreds of thousands of dollars. Enterprises must go through major planning before adding or replacing a storage array. A large storage array could leave a lot of resources idle, and a small array could mean a shorter upgrade cycle. Also, because the storage array architecture is based on rigid logical unit numbers (LUNs), you always have the bin-packing problem: trying to match storage requirements with physical storage constraints. When a storage array's capacity is exceeded, the data of an application might have to be split between storage arrays or moved from the older array to the newer, larger one.

HCI systems are scale-out because they leverage a multitude of nodes. The system starts with two or three nodes and can scale out to hundreds of nodes depending on the implementation. So, the capacity and processing power of the system scales linearly with the number of nodes. This benefits the enterprise by starting small and expanding as required. There are no rigid boundaries on where the data should be in the storage pool. Once more nodes are added to the system, the data can be automatically reallocated to evenly use the existing resources. This results in smoother scalability of the overall system.
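The linear growth and automatic rebalancing described above can be illustrated with a minimal sketch. It assumes identical nodes and a perfectly even spread of data, and it ignores replication overhead; the function names and the per-node capacity figure are hypothetical and do not come from any vendor's implementation.

```python
def cluster_capacity_tb(nodes: int, tb_per_node: float) -> float:
    """Raw capacity grows linearly with the node count (replication ignored)."""
    return nodes * tb_per_node


def rebalance(used_tb: float, nodes: int) -> list[float]:
    """Spread the stored data evenly across all nodes in the cluster."""
    if nodes < 1:
        raise ValueError("a cluster needs at least one node")
    return [used_tb / nodes] * nodes


# Hypothetical cluster: 10 TB per node, 24 TB of data in use.
print(cluster_capacity_tb(3, 10), rebalance(24, 3))
print(cluster_capacity_tb(4, 10), rebalance(24, 4))  # after adding a node
```

Adding a fourth node raises the raw capacity by one node's worth and lowers the share of data each node holds, which is the behavior the text attributes to scale-out systems.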

Enhanced IT Operational Model

Another paradigm shift from an operational point of view is that HCI evolves the IT operation from single-function IT resources to a multifunctional operational model. Today’s IT operations have hard boundaries between storage engineers, networking engineers, virtualization engineers, and network management engineers. HCI can change this model because its ease of operation and embedded automation reduce the specialized skills required for each individual function.

With HCI, virtualization administrators can take control of the storage required for their applications. That is something that storage administrators do not like to hear because they are supposed to be the masters of their storage domain. With HCI, the virtualization administrator can decide at virtual machine (VM) creation what storage policies to attach to the VM and request that those policies be delivered by the underlying storage pool. The storage pool itself and its capabilities are under the control of the same administrator. The beauty of this model is that virtualization administrators do not need the deep knowledge of storage that storage administrators had because policy-based management and automation hide many of the storage nuts-and-bolts settings that used to keep storage administrators busy.

In the same way, virtualization engineers can move their applications around without much disruption to the network. In traditional SAN setups, anytime an application is moved around, the virtualization administrator has to worry about both the storage networking and the data networking. Moving an application can disrupt how certain paths are set in the fibre channel network and how a VLAN is set in the data network. With HCI, the fibre channel storage network disappears altogether, and the setup of the data network can be automated to allow application mobility.

HCI promotes the notion of cross skills so that an enterprise can take better advantage of its resources across all functional areas. Instead of having storage engineers, virtualization engineers, and networking engineers, an enterprise can eventually have multifunctional engineers because many of the tasks are automated by the system itself. HCI implementations that will rise above the others are those that understand the delicate mix of IT talent and how to transition the operational model from a segmented and function-oriented model to an integrated and application-oriented model.

Easier System Management

HCI systems offer extremely simple management via graphical user interfaces (GUIs). Many implementations use an HTML5 interface to present the user with a single pane of glass dashboard across all functional areas. Converged systems tried to simplify management by integrating between components from different vendors. The beauty of HCI is that a single vendor builds the whole system. The integration between storage, compute, and networking is built into the architecture. The same network management system provides the ability to manage and monitor the system and summarizes information about VMs and capacity usage. Sample summary information shows the used and unused storage capacity of the whole cluster, how much savings are obtained by using functions such as deduplication and compression, the status of the software health and the hardware health, system input/output operations per second (IOPS), system latency, and more. The management software drills down to the details of every VM or container, looking at application performance and latency. The provisioning of storage is a point-and-click exercise, creating, mounting, and expanding volumes.

It is important to note here that not all HCI implementations are created equal. Some implementations start from existing virtualization management systems such as vCenter and blend into them. One example is Cisco, which leverages vCenter and uses plug-ins to add management functionality for the HyperFlex (HX) product. This is a huge benefit for Cisco’s Unified Computing System (UCS) installed base, which already uses vCenter. Other implementations start with new management GUIs, which is okay for greenfield deployments but creates management silos in already established data centers.

Public Cloud Agility in a Private Cloud

One of the main dilemmas for IT managers and CIOs is whether to move applications and storage to the public cloud or to keep them in house. There are many reasons for such decisions; cost is a major element, but not the only one. The agility and flexibility of the public cloud are attractive because an enterprise can launch applications or implement a DevOps model for a faster release of features and functionality. An enterprise can be bogged down in its own processes to purchase hardware and software and integrate all the components to get to the point where the applications are implemented. HCI gives the enterprises this type of agility in house. Because the installation and provisioning times are extremely fast, an enterprise can use its HCI setup as a dynamic pool of resources used by many departments. Each department can have its own resources to work with, and the resources can be released back into the main pool as soon as the project is done. This constitutes enormous cost savings by avoiding the hefty public cloud fees, which can be leveraged to invest in internal, multifunctional IT resources.

Higher Availability at Lower Costs

HCI systems are architected for high availability, meaning that the architecture anticipates that components will fail and, as such, data and applications must be protected. HCI availability is many-to-many rather than one-to-one as in traditional storage arrays. A traditional array has dual controllers; if one fails, the input/output (I/O) is moved to the other. Also, traditional RAID 5 or RAID 6 protects the disks. However, in traditional SANs, failures also affect the paths that I/O takes from the host to the array, so those paths must be protected as well. This means that high availability (HA) requires dual fibre channel fabrics and possibly multipath I/O software on the hosts to leverage multiple paths. Also, when a controller fails, 100% of the I/O load goes to the other controller. During a failure, applications can take a major performance hit if individual controllers are running at more than 50% load. Basically, achieving high availability in storage array designs is expensive.

Because HCI is a distributed architecture, the data is protected by default by being replicated to multiple nodes and disks. The protection is many-to-many, meaning that upon a node failure, the rest of the nodes absorb the load and have access to the same data. HCI systems automatically rebalance themselves when a node or capacity is added or removed. Therefore, HCI systems are highly available and have a better cost structure.
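The difference between one-to-one and many-to-many protection can be put into numbers with a minimal sketch. It assumes that every unit (controller or node) carries the same load and that a failed unit's load is redistributed evenly among the survivors; the function names are hypothetical.

```python
def load_after_failure(units: int, load_per_unit: float) -> float:
    """Load on each surviving unit after one unit fails.

    Loads are fractions of a unit's capacity (0.6 means 60%).
    Assumes the failed unit's load is spread evenly over the survivors.
    """
    if units < 2:
        raise ValueError("need at least two units to survive a failure")
    return load_per_unit * units / (units - 1)


def max_safe_load(units: int) -> float:
    """Highest per-unit load that still fits on the survivors after one failure."""
    if units < 2:
        raise ValueError("need at least two units to survive a failure")
    return (units - 1) / units


# Dual controllers versus an eight-node cluster, each unit running at 60%.
print(load_after_failure(2, 0.6), max_safe_load(2))
print(load_after_failure(8, 0.6), max_safe_load(8))
```

Under these assumptions, a dual-controller array cannot run each controller above 50% without overloading the survivor, whereas a larger cluster can run each node considerably hotter and still absorb a single failure.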

Low-Entry Cost Structure

HCI systems start with two or three nodes and grow to hundreds of nodes. This eliminates the decision-making that IT managers and CIOs must do in choosing how big or small a storage array needs to be. The low-entry cost structure encourages enterprises to experiment with new applications and grow as they need. When converged architectures such as the Vblock were introduced to the market, the systems were marketed with small, medium, and large configurations with huge upward steps between the different configurations. Bear in mind that the prices ranged from expensive to very expensive to ridiculously expensive, with a big chunk of the cost going to the bundled storage arrays.

This is not to say that storage arrays cannot be upgraded, but the long upgrade cycles and the cost of the upgrade could sway CIOs to just get another bigger array. IT would overprovision the systems and invest in extra capacity that might remain idle for years just to avoid the upgrade cycles. With HCI, the systems are a lot more cost effective by nature because they are built on open x86 architectures. In addition, the system can start small and be smoothly upgraded by adding more nodes. When nodes are added, the system rebalances itself to take advantage of the added storage, memory, and CPUs.

Reduced Total Cost of Ownership

TCO is a measure of spending on capital expenditures (CAPEX) and operational expenditures (OPEX) over a period of time. Normally in the data center business, this calculation is based on a 3- to 5-year average period, which is the traditional period of hardware and software refresh cycles.

CAPEX for a data center storage environment is calculated based on hardware purchases for servers, storage arrays, networking equipment, software licenses, spending on power, facilities, and so on. Some studies by analysts have shown that savings in CAPEX when using HCI versus traditional SAN storage arrays are in the range of 30%, considering savings in hardware, software, and power. Also, the reduced deployment times, ease of deployment and operation, and reliance on general IT knowledge in HCI could result in about 50% savings in IT OPEX. These numbers vary widely among studies depending on what products are compared, so take analyst numbers with a grain of salt. However, the takeaway should be that HCI runs on x86-based systems that are more cost effective than closed hardware storage array solutions. The benefits of simpler IT, shorter deployment cycles, and added revenue from efficiently rolling out applications make HCI a product offering that needs to be seriously considered.
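As a rough illustration of how the TCO comparison works, the following sketch applies the analyst figures quoted above (about 30% CAPEX savings and about 50% OPEX savings) to a baseline. The dollar amounts and function names are hypothetical, and the model is deliberately simple: one up-front CAPEX figure plus a flat annual OPEX over the refresh period.

```python
def tco(capex: float, annual_opex: float, years: int) -> float:
    """Total cost of ownership: up-front CAPEX plus OPEX over the period."""
    return capex + annual_opex * years


def hci_tco(capex: float, annual_opex: float, years: int,
            capex_saving: float = 0.30, opex_saving: float = 0.50) -> float:
    """TCO after applying fractional savings to a baseline's CAPEX and OPEX."""
    return tco(capex * (1 - capex_saving),
               annual_opex * (1 - opex_saving),
               years)


# Hypothetical baseline: $1,000,000 CAPEX and $200,000 OPEX per year, 5 years.
san_total = tco(1_000_000, 200_000, 5)
hci_total = hci_tco(1_000_000, 200_000, 5)
print(f"SAN: ${san_total:,.0f}  HCI: ${hci_total:,.0f}")
```

Because the savings percentages vary widely between studies, they are exposed as parameters so that other assumptions can be substituted.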

HCI Use Cases

It helps to look at the different HCI use cases in comparison to traditional storage architectures. After all, HCI needs to deliver on the simplicity and agility of deploying multiple business applications and at the same time exceed the performance of traditional architectures. Some of the main business use cases for HCI are these:

Server virtualization

DevOps

Virtual desktop infrastructure (VDI)

Remote office branch office (ROBO)

Edge computing

Tier-1 enterprise class applications

Data protection and disaster recovery (DR)

Server Virtualization

Server virtualization is not specific to hyperconvergence because it mainly relies on hypervisors and container daemons to allow multiple VMs and containers on the same hardware. However, hyperconverged systems complement the virtualization layer with the SDS layer to make server virtualization simpler to adopt inside data centers. The adoption of server virtualization in the data center, whether in deploying VMs or containers, will see a major shift from traditional SAN environments and converged systems to hyperconverged infrastructures. Enterprises benefit from the fact that storage and backup are already integrated from day one without having to plan and design for a separate storage network.

DevOps

To explain DevOps in layman’s terms, it is the lifecycle of an application from its planning and design stages to its engineering implementation, test, rollout, operation, and maintenance. This involves many departments working together to create, deploy, and operate the application. This lifecycle sees constant changes and refresh cycles because a change in any part of the cycle affects the others. With the need to validate and roll out hundreds of applications, enterprises need to minimize the duration of this lifecycle as much as possible.

Performing DevOps with traditional compute and storage architectures is challenging. Every department needs its own servers, data network, storage network, and storage. Many duplications occur between the departments, and IT needs to create different silo networks. Some of these networks end up overutilized, whereas others are underutilized.

Hyperconverged infrastructure creates the perfect setup for DevOps environments. One pool of resources is deployed and divided into virtual networks. Each network has its own virtual compute, network, and storage sharing the same physical resources. Adding, deleting, or resizing storage volumes or initiating and shutting down VMs and containers is done via the click of a button. Once any resource is utilized and is no longer needed, it goes back to the same pool. The network can start with a few nodes and scale as needed.

For this environment to work efficiently, HCI implementations need to support multitenancy for compute, storage, and the network. Because each department needs its own storage policies and network policies, multitenancy support at all layers, including the network, is a must.
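The shared-pool model for DevOps can be sketched as a simple data structure in which each department (tenant) draws resources from one pool and returns them when its project ends. This is a minimal illustration only; the class, its fields, and the figures are hypothetical and do not represent any HCI product's API.

```python
from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """One shared pool of compute and storage, divided among tenants."""
    vcpus: int
    storage_gb: int
    allocations: dict[str, tuple[int, int]] = field(default_factory=dict)

    def allocate(self, tenant: str, vcpus: int, storage_gb: int) -> None:
        """Reserve resources for a tenant if the pool has enough free."""
        if tenant in self.allocations:
            raise ValueError(f"{tenant} already holds an allocation")
        if vcpus > self.vcpus or storage_gb > self.storage_gb:
            raise ValueError("not enough free resources in the pool")
        self.vcpus -= vcpus
        self.storage_gb -= storage_gb
        self.allocations[tenant] = (vcpus, storage_gb)

    def release(self, tenant: str) -> None:
        """Return a tenant's resources to the shared pool."""
        vcpus, storage_gb = self.allocations.pop(tenant)
        self.vcpus += vcpus
        self.storage_gb += storage_gb


pool = ResourcePool(vcpus=64, storage_gb=10_000)
pool.allocate("dev", vcpus=16, storage_gb=2_000)
pool.allocate("test", vcpus=8, storage_gb=1_000)
pool.release("dev")  # project done: resources go back to the pool
print(pool.vcpus, pool.storage_gb)
```

A real multitenant implementation would also need per-tenant storage and network policies, which this sketch leaves out.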

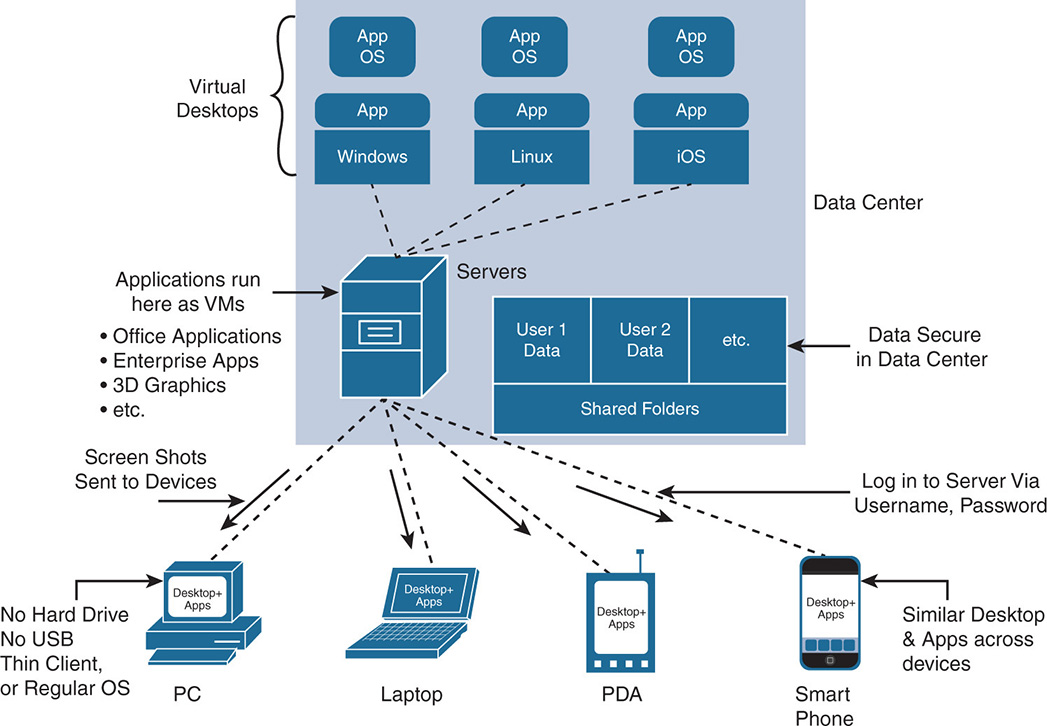

Virtual Desktop Infrastructure

Virtual desktop infrastructure (VDI) was one of the first business applications that resulted from the virtualization of the data center. VDI allows users to use their desktops, laptops, personal digital assistants (PDAs), and smartphones as basically dumb terminals, while the actual application and data run securely on servers in the data center. The terminal just receives screen updates over the network, while all heavy lifting and processing are done on the server. Any type of end device can be used as a terminal because the end device is not doing any of the processing and does not need to have the application or data stored locally. Figure 8-1 shows a high-level description of VDI.

Figure 8-1 VDI High-Level Description

As you can see, the virtual desktops run on the servers as VMs. Each VM has its own OS and applications that are associated with the user plus the data associated with the VM. The terminals themselves could run thin clients that operate a simple browser or can operate a regular OS such as Microsoft Windows, Apple iOS, or Linux. The client accesses the server via a URL and is prompted for a user ID and a password to log in. After login, each user receives a personal desktop, which has the same look and feel as a regular desktop on a PC or laptop. The terminals also receive a preconfigured set of applications. The desktops have their CPU, memory, and storage resources tailored to the type of user. For example, light users can have one vCPU and 2 GB of RAM, whereas power users can have two or three vCPUs and 32 GB of RAM, and so on. There are normally two types of desktops:

Persistent desktops: These desktops maintain user-specific information when the user logs off from the terminal. Examples of user data that are maintained are screen savers, background and wallpaper, and desktop customization settings.

Nonpersistent desktops: These desktops lose user-specific information upon logout and start with a default desktop template. Such desktops are usually used for general-purpose applications, such as student labs or hospital terminals, where multiple users employ the same device for a short period of time.

There are many business cases behind VDI. Here are a few:

VDI eliminates expensive PC and laptop refresh cycles. Enterprises spend a lot of money on purchasing PC and laptop hardware for running office and business applications. These desktops go through a three-year refresh cycle, where either the CPUs become too slow or the storage capacity becomes too small. Add to this that operating systems such as Microsoft Windows and Apple iOS go through major releases every year; the OS on its own cripples the system due to OS size and added functionality. By using VDI, the desktop hardware remains unchanged because the application and storage are not local to the desktop and reside on the server.

Because the end user device acts as a dumb terminal, users can bring in their own personal devices for work purposes. The user logs in and has access to the same desktop environment and folders.

With VDI, access to USB devices, CD-ROMs, and other peripherals can be disabled on the end user device. When an application is launched on the server, the data is accessed inside the data center and is saved in the data center. Enterprises can relax knowing that their data is secure and will not end up in the wrong hands, whether on purpose or by accident.

Any application can be accessed from any end user device. Microsoft Windows office applications are accessed from an iOS device, and iOS applications are accessed from a device running Android. Business-specific applications that usually required dedicated stations are now accessed from home or an Internet cafe.

Problems of VDI with Legacy Storage

Initially, vendors promoted converged systems as a vehicle to sell the VDI application. However, this failed miserably. Other than the huge price tag, converged systems failed to deliver on the performance, capacity, and availability needs of VDI.

With VDI, administrators must provision hundreds of virtual desktops on virtualized servers. Each virtual desktop contains an OS, the set of applications needed by the user, desktop customization data that is specific to the user for persistent desktops, and an allocated disk space per user. Each virtual desktop could consume a minimum of 30 GB just to house the OS and applications. Add to this the allocated storage capacity for the user folders. From a storage perspective, if you multiply 30 GB per user times 100 users, you end up with 3 TB just to store the OS and applications. Now imagine an enterprise that has 3,000 to 5,000 users and wants to move that environment to VDI. The centralized storage needed in that case is enormous. The storage that normally is distributed among thousands of physical desktops now has to be delivered by expensive storage arrays.
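The capacity arithmetic can be checked with a short sketch. It uses the 30 GB per desktop figure from the text, decimal units (1 TB = 1,000 GB), and counts only the OS and application footprint, not user folders; the function name is hypothetical.

```python
GB_PER_TB = 1000  # decimal units, an assumption made for this sketch


def vdi_base_storage_tb(users: int, gb_per_desktop: float = 30) -> float:
    """Storage needed for OS and applications only, before any data optimization."""
    return users * gb_per_desktop / GB_PER_TB


for users in (100, 3000, 5000):
    print(users, "users:", vdi_base_storage_tb(users), "TB")
```

At 3,000 to 5,000 users, the same per-desktop footprint adds up to roughly 90 to 150 TB before any user data is stored.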

On the I/O performance side, things get a lot worse. As soon as thousands of users log in to access their desktops at the start of a business day, thousands of OSs and desktops are loaded, creating a boot storm with hundreds of thousands of I/O requests that cripple the storage controllers. Also, any storage array failures, even in redundant systems, would overload the storage controllers, affecting user experience in a negative way.

IT managers really struggled with how to tailor converged systems to their user base from a cost and performance perspective. They had to decide between small, medium, or large systems, with big-step functions in cost and performance.

How HCI Helps VDI

The HCI distributed architecture, when used for VDI, helps eliminate many of the deficiencies of converged systems and legacy storage arrays. Because HCI is a scale-out architecture, enterprises can scale compute and storage on a per-need basis. The more nodes that are added, the higher the CPU power of the system and the higher the I/O performance. The I/O processing that was done via two storage controllers is now distributed over many nodes, each having powerful CPUs and memory. Also, because the growth in storage capacity is linear, with the use of thin provisioning, enterprises can start with small storage and expand as needed. This transforms the cost structure of VDI and allows enterprises to start with a small number of users and expand.

Also, because VDI uses many duplicate components, data optimization techniques help minimize the number of duplicated operating systems and user applications. With HCI, the use of data optimization with inline deduplication and compression, erasure coding, and linked clones drastically reduces the amount of storage needed for the VDI environment.
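To see why deduplication is so effective for VDI, consider a minimal sketch of block-level deduplication in which each fixed-size block is identified by its hash and stored only once. This is an illustration under simplifying assumptions (fixed 4 KB blocks, SHA-256 fingerprints, tiny synthetic "desktop images"); it is not how any particular HCI product implements inline deduplication.

```python
import hashlib


def dedup_store(images: list[bytes], block_size: int = 4096) -> tuple[int, int]:
    """Return (logical_bytes, stored_bytes) after block-level deduplication."""
    unique: dict[str, int] = {}  # block fingerprint -> block length
    logical = 0
    for image in images:
        for offset in range(0, len(image), block_size):
            block = image[offset:offset + block_size]
            logical += len(block)
            # Keep only the first copy of each distinct block.
            unique.setdefault(hashlib.sha256(block).hexdigest(), len(block))
    return logical, sum(unique.values())


# Synthetic data: two desktops share the same "OS" blocks, differ in user data.
os_image = b"A" * 4096 + b"B" * 4096
desktops = [os_image + b"user1".ljust(4096, b"\0"),
            os_image + b"user2".ljust(4096, b"\0")]
logical, stored = dedup_store(desktops)
print(f"logical={logical} bytes, stored={stored} bytes")
```

The shared OS blocks are stored once regardless of how many desktops reference them, so the savings grow with the number of desktops built from the same template.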

Remote Office Branch Office (ROBO)

Managing ROBO environments is challenging for enterprises. Remote offices and branch offices can grow to hundreds of locations, with each location having its own specific networking needs. The main challenges facing ROBO deployments follow:

Deployments are not uniform. No two ROBOs are the same. Some require more compute power and less storage, and some require more storage than compute. Some need to run services such as Exchange servers, databases, and enterprise applications, and others just need a few terminals.

There is no local IT. Most deployments are managed from headquarters (HQ), except for some large branch offices. Adding IT professionals to hundreds of offices is not practical or economical.

Management tools are designed for different technologies: compute, storage, and networking. Keeping track of a variety of management tools for the different offices is extremely challenging.

WAN bandwidth limitations to remote offices create challenges for data backup over slow links.

IT managers are challenged to find one solution that fits all. Converged compute and storage architectures used in the main data center are too complicated and too expensive for remote offices. HCI can handle them, though. The benefits that HCI brings to ROBO follow:

Small configurations starting from two nodes, with a one-to-one redundancy.

Ability to run a multitude of applications on the same platform with integrated storage.

Ability to remotely back up with data optimization. Data is deduplicated and compressed; only delta changes are sent over the WAN. This efficiently utilizes the WAN links because it minimizes the required bandwidth.

Centrally managed with one pane of glass management application that provides management as well as monitoring for the health of the system. It is worth noting here that with HCI, central management no longer refers to the network management systems sitting in the HQ data center. The public cloud plays a major role in the management and provisioning because management software is deployed as virtual software appliances in the cloud. This allows the administrators to have a uniform view of the whole network as if it is locally managed from every location.

Periodic snapshots of VMs, where applications can be restored to previous points in time.

Consistent architecture between the main data center and all remote offices that leverage the same upgrade and maintenance procedures.

Similar hardware between all remote offices, but with different numbers of nodes depending on the use case.
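The remote backup behavior described in the list above (deduplicate, compress, and send only the delta over the WAN) can be illustrated with a minimal Python sketch. It is not any vendor's implementation: the fixed 4 KB chunk size, the SHA-256 fingerprints, and the function names `plan_backup`, `apply_backup`, and `restore` are all assumptions chosen for illustration.

```python
import hashlib
import zlib

CHUNK_SIZE = 4096  # hypothetical fixed chunk size in bytes


def chunk_digests(data: bytes, chunk_size: int = CHUNK_SIZE):
    """Split data into fixed-size chunks and yield (digest, chunk) pairs."""
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        yield hashlib.sha256(chunk).hexdigest(), chunk


def plan_backup(data: bytes, remote_index: set):
    """Return (recipe, payload) for one backup run.

    recipe  -- ordered list of chunk digests needed to rebuild the data
    payload -- digest -> compressed chunk, only for chunks the remote
               site does not already hold (the delta sent over the WAN)
    """
    recipe = []
    payload = {}
    for digest, chunk in chunk_digests(data):
        recipe.append(digest)
        # Deduplication: skip chunks already stored remotely or already queued.
        if digest not in remote_index and digest not in payload:
            payload[digest] = zlib.compress(chunk)  # compression
    return recipe, payload


def apply_backup(remote_store: dict, payload: dict) -> None:
    """Simulate the remote site storing the chunks it received."""
    remote_store.update(payload)


def restore(remote_store: dict, recipe: list) -> bytes:
    """Rebuild the original data from stored chunks and a recipe."""
    return b"".join(zlib.decompress(remote_store[d]) for d in recipe)


if __name__ == "__main__":
    remote_store = {}
    day1 = b"A" * 8192 + b"B" * 4096
    recipe1, payload1 = plan_backup(day1, set(remote_store))
    apply_backup(remote_store, payload1)

    day2 = day1 + b"C" * 4096  # one new chunk of data since the last backup
    recipe2, payload2 = plan_backup(day2, set(remote_store))
    apply_backup(remote_store, payload2)

    print("chunks sent on day 1:", len(payload1))
    print("chunks sent on day 2:", len(payload2))
    print("restore matches:", restore(remote_store, recipe2) == day2)
```

In this sketch the second backup transfers only the chunk that changed, which is the property that keeps bandwidth low on slow WAN links. Real products typically add details this sketch omits, such as variable-size chunking and metadata handling.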

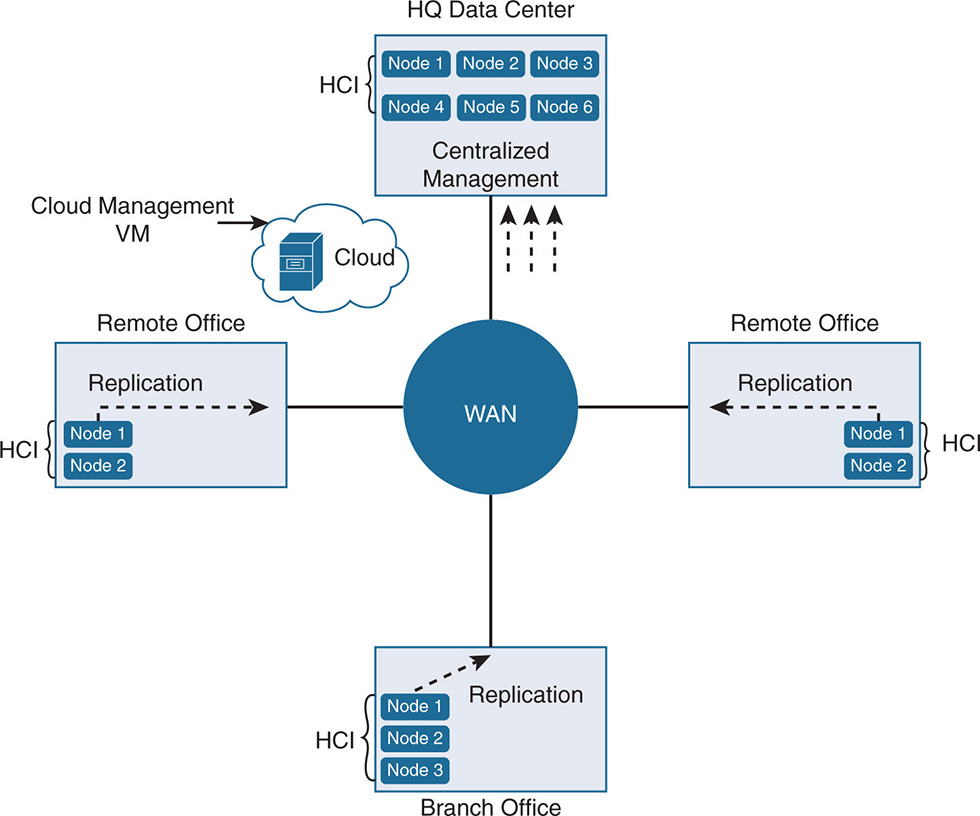

Figure 8-2 shows a sample ROBO deployment.

Figure 8-2 HCI ROBO Application

As you can see, the hardware configuration in all offices is uniform and varies only in the number of nodes. Replication and data optimization ensure efficient backups over slow links. Notice also that management is not restricted to centralized management in HQ but can be extended to management servers in the cloud that run as virtual software appliances. Cloud management allows easy and fast configuration as well as unified management across all locations.

Edge Computing

The trend of edge computing is growing because enterprises need to put applications closer to end users. Some applications that require high performance and low latency, such as databases and video delivery, should be as close to the end user as possible. Other applications, such as the collection of telemetry data from the Internet of Things (IoT), are driving the use of compute and storage in distributed locations rather than large centralized data centers.

Similar to the case of ROBO, edge computing requires the deployment of infrastructure in what are now called private edge clouds. These are mini data centers spread over different locations that act as collection points for processing the data. This greatly reduces latency and bandwidth consumption by avoiding constantly shipping raw data back and forth between endpoints and large centralized data centers.
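As a rough illustration of why processing at the edge saves bandwidth, the following Python sketch aggregates raw telemetry readings into one summary record per sensor before anything is sent to the central data center. The sensor name, the JSON encoding, and the helper names `summarize` and `wire_size` are hypothetical and chosen only for this example.

```python
import json
from collections import defaultdict
from statistics import mean


def summarize(readings):
    """Aggregate raw (sensor_id, value) readings into one record per sensor."""
    by_sensor = defaultdict(list)
    for sensor_id, value in readings:
        by_sensor[sensor_id].append(value)
    return {
        sensor_id: {
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "mean": mean(values),
        }
        for sensor_id, values in by_sensor.items()
    }


def wire_size(obj) -> int:
    """Approximate bytes on the wire, assuming a JSON encoding."""
    return len(json.dumps(obj).encode("utf-8"))


if __name__ == "__main__":
    # 1,000 synthetic readings from one hypothetical temperature sensor.
    raw = [("temp-1", 20.0 + (i % 5)) for i in range(1000)]
    summary = summarize(raw)
    print("raw bytes:", wire_size(raw))
    print("summary bytes:", wire_size(summary))
```

The edge site keeps the raw readings locally and forwards only the summary, so the volume crossing the WAN no longer grows with the number of readings per reporting interval.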

As with ROBO, the mini data centers lack on-site IT resources and require a unified architecture that is tailored to their size. HCI provides such a unified architecture: it scales according to the deployment and is easily managed from centralized management systems located in main data centers or from virtual software appliances in the public cloud.

Tier-1 Enterprise Class Applications

Enterprise class applications such as enterprise resource planning (ERP) and customer relationship management (CRM) from companies such as Oracle and SAP are essential for the operation of the enterprise. Such applications work with relational databases (RDBs), which have high-performance requirements for CPU processing and for memory. For such enterprise applications, although application servers run in a virtualized environment, the databases traditionally run on dedicated servers because they consume most of the resources on their own. Even with the introduction of virtualization, some of these databases still run on dedicated bare-metal servers. Enterprise applications and Structured Query Language (SQL) databases enjoy stability on traditional SANs. These applications are surrounded by a fleet of experts, such as application engineers, system software engineers, and storage administrators, because they sustain the income of the enterprise.

Some of these applications evolved dramatically over the years to handle real-time analytics, in which a user can access large amounts of historical data and perform data crunching in real time. An example is a sales manager who wants to base her sales projections for a particular region on that region's sales figures for the past 10 years. Traditionally, such an exercise required loading large amounts of data from tape drives, and crunching the data took days. Today, the same exercise needs to happen in minutes. As such, the historical data must be available in easily accessible storage, and powerful processing and memory are needed to shorten the time.

Some implementations, such as Oracle Database In-Memory, require moving the data into fast flash disks or dynamic random-access memory (DRAM). Other implementations, such as SAP HANA, require the whole database to be moved into DRAM. Traditional storage arrays and converged systems easily run out of processing power when supporting such large amounts of data and such processing requirements. To handle these requirements, converged systems require millions of dollars to be spent on storage controllers and storage arrays.

HCI vendors have moved quickly to certify such applications on the new distributed data architecture. The modular nature of HCI allows the system to grow or shrink as the enterprise application requires. Also, regardless of whether HCI comes in hybrid or all-flash configurations, the use of flash solid-state drives (SSDs) in every node accelerates the reads and writes of sequential data as the applications need.

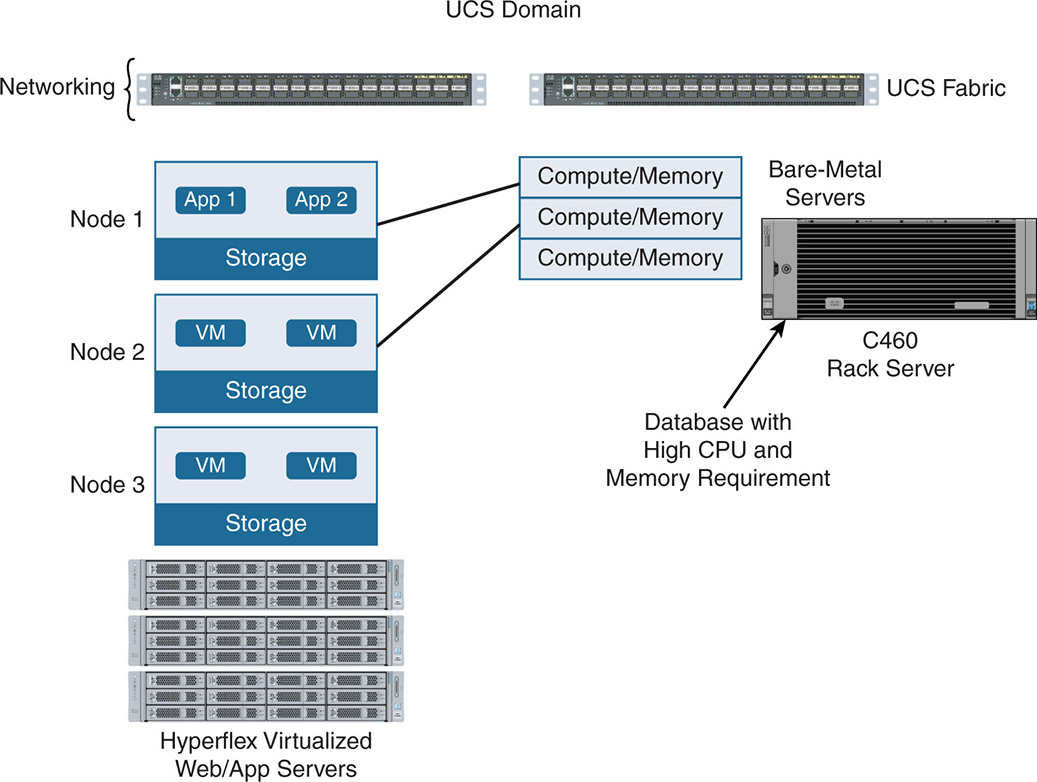

However, the HCI implementations that stand out are the ones that can mix virtualized servers with bare-metal servers under the same management umbrella.

An HCI implementation must be able to scale the compute and memory regardless of the storage needs. Figure 8-3 shows an example of a Cisco HX/UCS implementation, where application servers such as SAP Business Warehouse (BW) are running on an HX cluster. The SAP HANA in-memory database is running on a separate Cisco C460 rack server that offers expandable compute and memory. The virtualized servers and bare-metal servers are running under one Cisco UCS domain.

Figure 8-3 Enterprise Class Applications—Virtualization and Bare-Metal

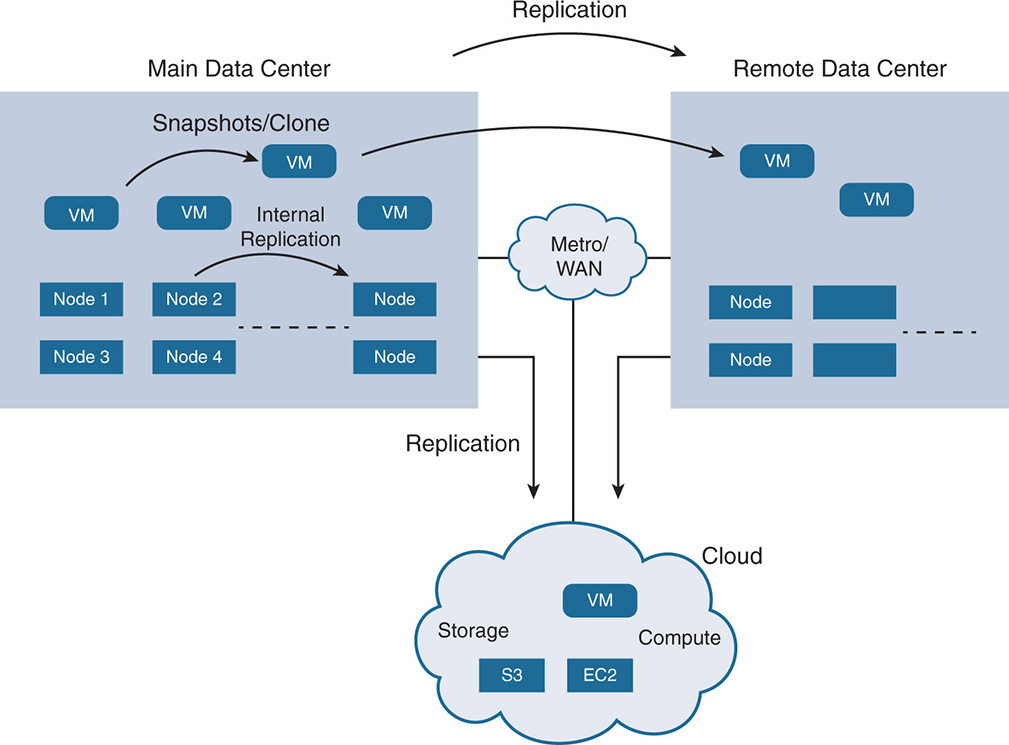

Data Protection and Disaster Recovery

Data protection and DR are essential for the continuous nonstop operation of any enterprise application. However, the complexity and cost of building multiple data centers to protect the data and applications are prohibitive. Building SANs with traditional Fibre Channel switching and storage arrays in multiple data centers and backing up the data over WAN links is an expensive proposition. HCI presents a cost-effective solution by handling data protection and DR natively as part of the architecture. Let’s look at the different aspects of data protection and DR that HCI offers:

Local data protection: HCI replicates and stripes data in a distributed fashion across many nodes and many disks. Data is replicated two times, three times, or more, depending on how many node failures or disk failures must be tolerated. Data optimization techniques such as deduplication, compression, and erasure coding ensure space optimization.

Local snapshots: HCI allows for periodic snapshots of VMs without affecting performance. Techniques such as redirect on write are used to eliminate performance hits when copying the data. Recovery time objective (RTO) and recovery point objective (RPO) can be customized depending on the frequency of the snapshots.

Active-active data centers: DR is provided while making full use of the resources of both the primary and the backup data centers. A pair of data centers can run in different locations, with both being active. Data can be replicated locally or to the remote data center (DC). VMs are protected in the local DC as well as the remote DC in case of total DC failure. Data optimization such as deduplication and compression is used, and only the delta difference between data is replicated to the remote site.

Cloud replication: HCI also allows data protection and replication to be done between an on-premises setup and a cloud provider. This capability can be built into the HCI offering so that an HCI cluster can interface using APIs with cloud providers such as AWS, Azure, and Google Cloud Platform. The level of interaction between the on-premises setup and the cloud depends on whether the customer wants data protection only or full disaster recovery. In the case of full DR, VMs are launched in the cloud as soon as a VM cannot be recovered on premises.
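The trade-offs in the list above can be made concrete with some back-of-the-envelope arithmetic. The Python sketch below assumes a deliberately simplified model: usable capacity is raw capacity divided by the number of copies, a cluster keeping N copies tolerates N - 1 simultaneous failures, and the worst-case data loss window equals the snapshot interval. It ignores deduplication, compression, erasure coding, metadata overhead, and any vendor-specific minimum node counts, and all function names are hypothetical.

```python
def usable_capacity_tb(nodes: int, raw_tb_per_node: float,
                       replication_factor: int) -> float:
    """Usable capacity when every block is stored replication_factor times."""
    if replication_factor < 1:
        raise ValueError("replication_factor must be at least 1")
    if nodes < replication_factor:
        # Assumption: each copy of a block lives on a different node.
        raise ValueError("need at least as many nodes as copies")
    return nodes * raw_tb_per_node / replication_factor


def failures_tolerated(replication_factor: int) -> int:
    """Simplified model: N copies survive the loss of N - 1 of them."""
    return replication_factor - 1


def worst_case_rpo_minutes(snapshot_interval_minutes: int) -> int:
    """Simplified model: at most one snapshot interval of data is lost."""
    return snapshot_interval_minutes


if __name__ == "__main__":
    for rf in (2, 3):
        print(
            f"RF{rf}: usable {usable_capacity_tb(6, 10.0, rf):.1f} TB, "
            f"tolerates {failures_tolerated(rf)} failure(s)"
        )
    print("hourly snapshots, worst-case RPO (min):", worst_case_rpo_minutes(60))
```

Under these assumptions, moving from two copies to three on the same hardware trades usable capacity for one additional tolerated failure, and shortening the snapshot interval tightens the RPO.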

Figure 8-4 shows a high-level data protection and DR mechanism with HCI.

Figure 8-4 HCI Data Protection and DR

Looking Ahead

This chapter discussed the main architecture and functionality of HCI and the different storage services it offers. It also examined the business benefits of HCI and a sample of its many use cases.

Future chapters take a much deeper dive into HCI and the specific functionality of some leading HCI implementations. Part IV of this book is dedicated to the Cisco HyperFlex HCI product line and its ecosystem. Part V discusses other implementations, such as VMware vSAN and Nutanix Enterprise Cloud Platform, and touches on some other HCI implementations.