Software support

This chapter lists the minimum operating system requirements and support considerations for the IBM z13 (z13) and its features. It addresses z/OS, z/VM, z/VSE, z/TPF, and Linux on z Systems. Because this information is subject to change, see the Preventive Service Planning (PSP) bucket for 2964DEVICE for the most current information. Also included is generic software support for IBM z BladeCenter Extension (zBX) Model 004.

Support of z13 functions depends on the operating system, its version, and release.

This chapter includes the following sections:

•IOCP

7.1 Operating systems summary

Table 7-1 lists the minimum operating system levels that are required on the z13. For similar information about the IBM z BladeCenter Extension (zBX) Model 004, see 7.15, “IBM z BladeCenter Extension (zBX) Model 004 software support” on page 298.

End-of-service operating systems: Operating system levels that are no longer in service are not covered in this publication. These older levels might provide support for some features.

Table 7-1 z13 minimum operating systems requirements

| Operating systems | ESA/390 (31-bit mode) | z/Architecture (64-bit mode) | Notes |
|---|---|---|---|
| z/OS V1R12 (1) | No | Yes | Service is required. See the following box, which is titled “Features”. |
| z/VM V6R2 (2) | No | Yes (3) | |
| z/VSE V5R1 | No | Yes | |
| z/TPF V1R1 | Yes | Yes | |
| Linux on z Systems | No (4) | | |

1 Regular service support for z/OS V1R12 ended in September 2014. However, by ordering the IBM Lifecycle Extension for z/OS V1R12 product, fee-based corrective service can be obtained up to September 2017.

2 z/VM V6R2 with PTF provides compatibility support (CEX5S with enhanced crypto domain support).

3 z/VM supports both 31-bit and 64-bit mode guests.

4 64-bit distributions include the 31-bit emulation layer to run 31-bit software products.

Features: Usage of certain features depends on the operating system. In all cases, PTFs might be required with the operating system level that is indicated. Check the z/OS, z/VM, z/VSE, and z/TPF subsets of the 2964DEVICE PSP buckets. The PSP buckets are continuously updated, and contain the latest information about maintenance.

Hardware and software buckets contain installation information, hardware and software service levels, service guidelines, and cross-product dependencies.

For Linux on z Systems distributions, consult the distributor’s support information.

7.2 Support by operating system

IBM z13 introduces several new functions. This section addresses support of those functions by the current operating systems. Also included are some of the functions that were introduced in previous z Systems and carried forward or enhanced in z13. Features and functions that are available on previous servers but no longer supported by z13 have been removed.

For a list of supported functions and the z/OS and z/VM minimum required support levels, see Table 7-3 on page 232. For z/VSE, z/TPF, and Linux on z Systems, see Table 7-4 on page 237. The tabular format is intended to help you determine, by a quick scan, which functions are supported and the minimum operating system level that is required.

7.2.1 z/OS

z/OS Version 1 Release 13 is the earliest in-service release that supports z13. After September 2016, a fee-based Extended Service for defect support (for up to three years) can be obtained for z/OS V1R13. Although service support for z/OS Version 1 Release 12 ended in September 2014, a fee-based extension for defect support (for up to three years) can be obtained by ordering the IBM Lifecycle Extension for z/OS V1R12. Also, z/OS.e is not supported on z13, and z/OS.e Version 1 Release 8 was the last release of z/OS.e.

z13 capabilities differ depending on the z/OS release. Toleration support is provided on z/OS V1R12. Exploitation support is provided only on z/OS V2R1 and higher. For a list of supported functions and their minimum required support levels, see Table 7-3 on page 232.

7.2.2 z/VM

At general availability, z/VM V6R2 and V6R3 provide compatibility support with limited use of new z13 functions.

For a list of supported functions and their minimum required support levels, see Table 7-3 on page 232.

|

Capacity: For the capacity of any z/VM logical partition (LPAR), and any z/VM guest, in terms of the number of Integrated Facility for Linux (IFL) processors and central processors (CPs), real or virtual, you might want to adjust the number to accommodate the processor unit (PU) capacity of the z13.

z/VM V6R3 and IBM z Unified Resource Manager: In light of the IBM cloud strategy and adoption of OpenStack, the management of z/VM environments in zManager is now stabilized and will not be further enhanced. Accordingly, zManager does not provide systems management support for z/VM V6R2 on IBM z13 or for z/VM V6.3 and later releases. However, zManager continues to play a distinct and strategic role in the management of virtualized environments that are created by integrated firmware hypervisors (IBM Processor Resource/Systems Manager (PR/SM), PowerVM, and System x hypervisor based on a kernel-based virtual machine (KVM)) of the z Systems.

Statements of Direction1:

•Removal of support for Expanded Storage (XSTORE): z/VM V6.3 is the last z/VM release that supports Expanded Storage (XSTORE) for either host or guest use. The z13 will be the last high-end server to support Expanded Storage (XSTORE).

•Stabilization of z/VM V6.2 support: The IBM z13 server family is planned to be the last z Systems server supported by z/VM V6.2 and the last z Systems server that will be supported where z/VM V6.2 is running as a guest (second level). This is in conjunction with the statement of direction that the IBM z13 server family will be the last to support ESA/390 architecture mode, which z/VM V6.2 requires. z/VM V6.2 will continue to be supported until December 31, 2016, as announced in announcement letter # 914-012.

•Product Delivery of z/VM on DVD/Electronic only: z/VM V6.3 is the last release of z/VM that is available on tape. Subsequent releases will be available on DVD or electronically.

•Enhanced RACF password encryption algorithm for z/VM: In a future deliverable, an enhanced RACF/VM password encryption algorithm is planned. This support is designed to provide improved cryptographic strength by using AES-based encryption in RACF/VM password algorithm processing. This planned design is intended to provide better protection for encrypted RACF password data if a copy of RACF database becomes inadvertently accessible

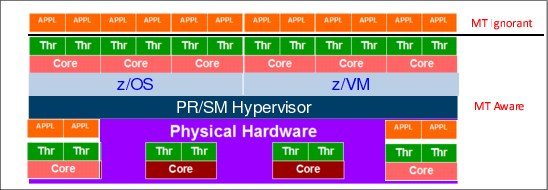

•z/VM V6.3 Multi-threading CPU Pooling support: z/VM CPU Pooling support will be enhanced to enforce IFL pool capacities as cores rather than as threads in an environment with multi-threading enabled.

|

1 All statements regarding IBM plans, directions, and intent are subject to change or withdrawal without notice. Any reliance on these statements of general direction is at the relying party’s sole risk and will not create liability or obligation for IBM.

7.2.3 z/VSE

Support is provided by z/VSE V5R1 and later. Note the following considerations:

•z/VSE runs in z/Architecture mode only.

•z/VSE uses 64-bit real memory addressing.

•Support for 64-bit virtual addressing is provided by z/VSE V5R1.

•z/VSE V5R1 requires an architectural level set that is specific to the IBM System z9.

For a list of supported functions and their minimum required support levels, see Table 7-4 on page 237.

7.2.4 z/TPF

For a list of supported functions and their minimum required support levels, see Table 7-4 on page 237.

7.2.5 Linux on z Systems

Linux on z Systems distributions are built separately for the 31-bit and 64-bit addressing modes of the z/Architecture. The newer distribution versions are built for 64-bit only. Using the 31-bit emulation layer on a 64-bit Linux on z Systems distribution provides support for running 31-bit applications. None of the current versions of Linux on z Systems distributions (SUSE Linux Enterprise Server (SLES) 12 and SLES 11, and Red Hat Enterprise Linux (RHEL) 7 and RHEL 6) require z13 toleration support. Table 7-2 shows the service levels of SUSE and Red Hat releases supported at the time of writing.

Table 7-2 Current Linux on z Systems distributions

| Linux on z Systems distribution | z/Architecture (64-bit mode) |
|---|---|
| SUSE SLES 12 | Yes |
| SUSE SLES 11 | Yes |
| Red Hat RHEL 7 | Yes |
| Red Hat RHEL 6 | Yes |

For the latest information about supported Linux distributions on z Systems, see this website:

IBM is working with its Linux distribution partners to provide further use of selected z13 functions in future Linux on z Systems distribution releases.

Consider the following guidelines:

•Use SUSE SLES 12 or Red Hat RHEL 6 in any new projects for the z13.

•Update any Linux distributions to their latest service level before the migration to z13.

•Adjust the capacity of any z/VM and Linux on z Systems LPARs, and of any z/VM guests, in terms of the number of IFLs and CPs (real or virtual), according to the PU capacity of the z13.

7.2.6 z13 function support summary

The following tables summarize the z13 functions and their minimum required operating system support levels:

•Table 7-3 on page 232 is for z/OS and z/VM.

•Table 7-4 on page 237 is for z/VSE, z/TPF, and Linux on z Systems.

Information about Linux on z Systems refers exclusively to the appropriate distributions of SUSE and Red Hat.

Both tables use the following conventions:

Y The function is supported.

N The function is not supported.

- The function is not applicable to that specific operating system.

Although the following tables list all functions that require support, the PTF numbers are not given. Therefore, for the current information, see the PSP bucket for 2964DEVICE.
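The Y / N / - conventions lend themselves to a simple lookup structure. The following Python sketch is only an illustration: it encodes a handful of rows transcribed from Table 7-3 and returns the releases of one operating system family that support a function. The dictionary layout and the name `supporting_releases` are assumptions made for this example, not part of any IBM tool, and the PSP bucket remains the authoritative source.

```python
# Release columns in the same order as Table 7-3.
RELEASES = ["z/OS V2R1", "z/OS V1R13", "z/OS V1R12", "z/VM V6R3", "z/VM V6R2"]

# A few rows transcribed from Table 7-3.
# Y = supported, N = not supported, - = not applicable.
# The dict layout is a hypothetical encoding chosen for this sketch.
SUPPORT = {
    "Simultaneous MultiThreading (SMT)": ["Y", "N", "N", "Y", "N"],
    "Flash Express": ["Y", "Y", "N", "N", "N"],
    "HiperSockets Virtual Switch Bridge": ["-", "-", "-", "Y", "Y"],
    "HiperDispatch": ["Y", "Y", "Y", "Y", "N"],
}


def supporting_releases(function: str, os_family: str) -> list[str]:
    """Return the releases of os_family (for example "z/VM") marked Y."""
    row = SUPPORT[function]
    return [
        release
        for release, flag in zip(RELEASES, row)
        if release.startswith(os_family) and flag == "Y"
    ]


if __name__ == "__main__":
    for name in SUPPORT:
        print(name, "->", supporting_releases(name, "z/VM"))
```

A "-" entry is deliberately treated the same as N here, because in both cases the function cannot be used from that operating system.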

Table 7-3 z13 function minimum support requirements summary (part 1 of 2)

| Function | z/OS V2R1 | z/OS V1R13 | z/OS V1R12 | z/VM V6R3 | z/VM V6R2 |
|---|---|---|---|---|---|
| z13 | Y | Y | Y | Y | Y |
| Maximum Processor Unit (PUs) per system image | 141 (1) | 100 | 100 | 64 (2) | 32 |
| Support of IBM zAware | Y | Y | N | - | - |
| z Systems Integrated Information Processors (zIIPs) | Y | Y | Y | Y | Y |
| Java Exploitation of Transactional Execution | Y | Y | N | N | N |
| Large memory support (TB) (3) | 4 TB | 1 TB | 1 TB | 1 TB | 256 GB |
| Large page support of 1 MB pageable large pages | Y | Y | Y | N | N |
| 2 GB large page support | Y | Y (4) | N | N | N |
| Out-of-order execution | Y | Y | Y | Y | Y |
| Hardware decimal floating point (5) | Y | Y | Y | Y | Y |
| 85 LPARs | Y (6) | Y (6) | N | Y | Y |
| CPU measurement facility | Y | Y | Y | Y | Y |
| Enhanced flexibility for Capacity on Demand (CoD) | Y | Y | Y | Y | Y |
| HiperDispatch | Y | Y | Y | Y | N |
| Six logical channel subsystems (LCSSs) | Y | Y | N | N | N |
| Four subchannel sets per LCSS | Y | Y | N | Y | Y |
| Simultaneous MultiThreading (SMT) | Y | N | N | Y | N |
| Single-Instruction Multiple-Data (SIMD) | Y | N | N | N | N |
| Multi-vSwitch Link Aggregation | N | N | N | Y | N |
| Cryptography | | | | | |
| CP Assist for Cryptographic Function (CPACF) greater than 16 Domain Support | Y | Y | Y | Y | Y |
| CPACF AES-128, AES-192, and AES-256 | Y | Y | Y | Y | Y |
| CPACF SHA-1, SHA-224, SHA-256, SHA-384, and SHA-512 | Y | Y | Y | Y | Y |
| CPACF protected key | Y | Y | Y | Y | Y |
| Secure IBM Enterprise PKCS #11 (EP11) coprocessor mode | Y | Y | Y | Y | Y |
| Crypto Express5S | Y | Y | Y | Y | Y |
| Elliptic Curve Cryptography (ECC) | Y | Y | Y | Y | Y |
| HiperSockets | | | | | |
| 32 HiperSockets | Y | Y | Y | Y | Y |
| HiperSockets Completion Queue | Y | Y | N | Y | Y |
| HiperSockets integration with IEDN | Y | Y | N | N | N |
| HiperSockets Virtual Switch Bridge | - | - | - | Y | Y |
| HiperSockets Network Traffic Analyzer | N | N | N | Y | Y |
| HiperSockets Multiple Write Facility | Y | Y | Y | N | N |
| HiperSockets support of IPV6 | Y | Y | Y | Y | Y |
| HiperSockets Layer 2 support | Y | Y | Y | Y | Y |
| HiperSockets | Y | Y | Y | Y | Y |
| Flash Express Storage | | | | | |
| Flash Express | Y | Y | N | N | N |
| zEnterprise Data Compression (zEDC) | | | | | |
| zEDC Express | Y | N | N | Y | N |
| Remote Direct Memory Access (RDMA) over Converged Ethernet (RoCE) | | | | | |
| 10GbE RoCE Express | Y | Y | Y | Y | N |
| Shared RoCE environment | Y | N | N | Y | N |
| FICON (Fibre Connection) and FCP (Fibre Channel Protocol) | | | | | |
| FICON Express 8S (CHPID type FC) when using z13 FICON or Channel-To-Channel (CTC) | Y | Y | Y | Y | Y |
| FICON Express 8S (CHPID type FC) for support of zHPF single-track operations | Y | Y | Y | Y | Y |
| FICON Express 8S (CHPID type FC) for support of zHPF multitrack operations | Y | Y | Y | Y | Y |
| FICON Express 8S (CHPID type FCP) for support of SCSI devices | N | N | N | Y | Y |
| FICON Express 8S (CHPID type FCP) support of hardware data router | N | N | N | Y | N |
| T10-DIF support by the FICON Express8S and FICON Express8 features when defined as CHPID type FCP | N | N | N | Y | Y |
| GRS FICON CTC toleration | Y | Y | Y | N | N |
| FICON Express8 CHPID 10KM LX and SX type FC | Y | Y | Y | Y | Y |
| FICON Express 16S (CHPID type FC) when using FICON or CTC | Y | Y | Y | Y | Y |
| FICON Express 16S (CHPID type FC) for support of zHPF single-track operations | Y | Y | Y | Y | Y |
| FICON Express 16S (CHPID type FC) for support of zHPF multitrack operations | Y | Y | Y | Y | Y |
| FICON Express 16S (CHPID type FCP) for support of SCSI devices | N | N | N | Y | Y |
| FICON Express 16S (CHPID type FCP) support of hardware data router | N | N | N | Y | N |
| T10-DIF support by the FICON Express16S features when defined as CHPID type FCP | N | N | N | Y | Y |
| Health Check for FICON Dynamic routing | Y | Y | Y | N | N |
| OSA (Open Systems Adapter) | | | | | |
| OSA-Express5S 10 Gigabit Ethernet Long Reach (LR) and Short Reach (SR), CHPID type OSD | Y | Y | Y | Y | Y |
| OSA-Express5S 10 Gigabit Ethernet LR and SR, CHPID type OSX | Y | Y | Y | N (7) | N (7) |
| OSA-Express5S Gigabit Ethernet Long Wave (LX) and Short Wave (SX), CHPID type OSD (two ports per CHPID) | Y | Y | Y | Y | Y |
| OSA-Express5S Gigabit Ethernet LX and SX, CHPID type OSD (one port per CHPID) | Y | Y | Y | Y | Y |
| OSA-Express5S 1000BASE-T Ethernet, CHPID type OSC | Y | Y | Y | Y | Y |
| OSA-Express5S 1000BASE-T Ethernet, CHPID type OSD (two ports per CHPID) | Y | Y | Y | Y | Y |
| OSA-Express5S 1000BASE-T Ethernet, CHPID type OSD (one port per CHPID) | Y | Y | Y | Y | Y |
| OSA-Express5S 1000BASE-T Ethernet, CHPID type OSE | Y | Y | Y | Y | Y |
| OSA-Express5S 1000BASE-T Ethernet, CHPID type OSM | Y | Y | Y | N (7) | N (7) |
| OSA-Express5S 1000BASE-T Ethernet, CHPID type OSN | Y | Y | Y | Y | Y |
| OSA-Express4S 10-Gigabit Ethernet LR and SR, CHPID type OSD | Y | Y | Y | Y | Y |
| OSA-Express4S 10-Gigabit Ethernet LR and SR, CHPID type OSX | Y | Y | Y | N (7) | N (7) |
| OSA-Express4S Gigabit Ethernet LX and SX, CHPID type OSD (two ports per CHPID) | Y | Y | Y | Y | Y |
| OSA-Express4S Gigabit Ethernet LX and SX, CHPID type OSD (one port per CHPID) | Y | Y | Y | Y | Y |
| OSA-Express4S 1000BASE-T, CHPID type OSC (one or two ports per CHPID) | Y | Y | Y | Y | Y |
| OSA-Express4S 1000BASE-T, CHPID type OSD (two ports per CHPID) | Y | Y | Y | Y | Y |
| OSA-Express4S 1000BASE-T, CHPID type OSD (one port per CHPID) | Y | Y | Y | Y | Y |
| OSA-Express4S 1000BASE-T, CHPID type OSE (one or two ports per CHPID) | Y | Y | Y | Y | Y |
| OSA-Express4S 1000BASE-T, CHPID type OSM (one port per CHPID) | Y | Y | Y | N (7) | N (7) |
| OSA-Express4S 1000BASE-T, CHPID type OSN | Y | Y | Y | Y | Y |
| Inbound workload queuing Enterprise extender | Y | Y | Y | Y | Y |
| Checksum offload IPV6 packets | Y | Y | Y | Y | Y |
| Checksum offload for LPAR-to-LPAR traffic | Y | Y | Y | Y | Y |
| Large send for IPV6 packets | Y | Y | Y | Y | Y |
| Parallel Sysplex and other | | | | | |
| STP | Y | Y | Y | - | - |
| Coupling over InfiniBand CHPID type CIB | Y | Y | Y | N | N |
| InfiniBand coupling links 12x at a distance of 150 m (492 ft.) | Y | Y | Y | N | N |
| InfiniBand coupling links 1x at an unrepeated distance of 10 km (6.2 miles) | Y | Y | Y | N | N |
| CFCC Level 20 | Y | Y | Y | Y | Y |
| CFCC Level 20 Flash Express exploitation | Y | Y | N | N | N |
| CFCC Level 20 Coupling Thin Interrupts | Y | Y | Y | N | N |
| CFCC Level 20 Coupling Large Memory support | Y | Y | Y | N | N |
| CFCC 20 Support for 256 Coupling CHPIDs | Y | Y | Y | N | N |
| IBM Integrated Coupling Adapter (ICA) | Y | Y | Y | N | N |
| Dynamic I/O support for InfiniBand and ICA CHPIDs | - | - | - | Y | Y |
| RMF coupling channel reporting | Y | Y | Y | N | N |

1 141-way without multithreading. 128-way with multithreading.

2 64-way without multithreading and 32-way with multithreading enabled.

3 10 TB of real storage available per server.

4 PTF support required and with RSM enabled web delivery.

5 Packed decimal conversion support.

6 Only 60 LPARs can be defined if z/OS V1R12 is running.

7 Dynamic I/O support only.

Table 7-4 z13 functions minimum support requirements summary (part 2 of 2)

| Function | z/VSE V5R2 | z/VSE V5R1 | z/TPF V1R1 | Linux on z Systems |
|---|---|---|---|---|
| z13 | Y (6) | Y (6) | Y | Y |
| Maximum PUs per system image | 10 | 10 | 86 | 141 (1) |
| Support of IBM zAware | - | - | - | Y |
| System z Integrated Information Processors (zIIPs) | - | - | - | - |
| Java Exploitation of Transactional Execution | N | N | N | Y |
| Large memory support (2) | 32 GB | 32 GB | 4 TB | 4 TB (3) |
| Large page support pageable 1 MB page support | Y | Y | N | Y |
| 2 GB Large Page Support | - | - | - | - |
| Out-of-order execution | Y | Y | Y | Y |
| 85 logical partitions | Y | Y | Y | Y |
| HiperDispatch | N | N | N | N |
| Six logical channel subsystems (LCSSs) | Y | Y | N | Y |
| Four subchannel sets per LCSS | Y | Y | N | Y |
| Simultaneous multithreading (SMT) | N | N | N | Y |
| Single Instruction Multiple Data (SIMD) | N | N | N | N |
| Multi-vSwitch Link Aggregation | N | N | N | N |
| Cryptography | | | | |
| CP Assist for Cryptographic Function (CPACF) | Y | Y | Y | Y |
| CPACF AES-128, AES-192, and AES-256 | Y | Y | Y (4) | Y |
| CPACF SHA-1/SHA-2, SHA-224, SHA-256, SHA-384, and SHA-512 | Y | Y | Y (5) | Y |
| CPACF protected key | N | N | N | N |
| Secure IBM Enterprise PKCS #11 (EP11) coprocessor mode | N | N | N | N |
| Crypto Express5S | Y | Y | Y | |
| Elliptic Curve Cryptography (ECC) | N | N | N | N (9) |
| HiperSockets | | | | |
| 32 HiperSockets | Y | Y | Y | Y |
| HiperSockets Completion Queue | Y (6) | N | N | Y |
| HiperSockets integration with IEDN | N | N | N | N |
| HiperSockets Virtual Switch Bridge | - | - | - | Y (8) |
| HiperSockets Network Traffic Analyzer | N | N | N | Y (9) |
| HiperSockets Multiple Write Facility | N | N | N | N |
| HiperSockets support of IPV6 | Y | Y | N | Y |
| HiperSockets Layer 2 support | N | N | N | Y |
| HiperSockets | Y | Y | N | Y |
| Flash Express Storage | | | | |
| Flash Express | N | N | N | Y |
| zEnterprise Data Compression (zEDC) | | | | |
| zEDC Express | N | N | N | N |
| Remote Direct Memory Access (RDMA) over Converged Ethernet (RoCE) | | | | |
| 10GbE RoCE Express | N | N | N | N (9) |
| FICON and FCP | | | | |
| FICON Express8S support of zHPF single track, CHPID type FC | N | N | N | Y |
| FICON Express8 support of zHPF multitrack, CHPID type FC | N | N | N | Y |
| High Performance FICON (zHPF) | N | N | N | Y (10) |
| GRS FICON CTC toleration | - | - | - | - |
| N-Port ID Virtualization for FICON (NPIV), CHPID type FCP | Y | Y | N | Y |
| FICON Express8S support of hardware data router, CHPID type FCP | N | N | N | Y (11) |
| FICON Express8S and FICON Express8 support of T10-DIF, CHPID type FCP | N | N | N | Y (10) |
| FICON Express8S, FICON Express8, FICON Express16S 10KM LX, and FICON Express4 SX support of SCSI disks, CHPID type FCP | Y | Y | N | Y |
| FICON Express8S CHPID type FC | Y | Y | Y | Y |
| FICON Express8 CHPID type FC | Y | Y (12) | Y (12) | Y (12) |
| FICON Express 16S (CHPID type FC) when using FICON or CTC | Y | Y | Y | Y |
| FICON Express 16S (CHPID type FC) for support of zHPF single-track operations | N | N | N | Y |
| FICON Express 16S (CHPID type FC) for support of zHPF multitrack operations | N | N | N | Y |
| FICON Express 16S (CHPID type FCP) for support of SCSI devices | Y | Y | N | Y |
| FICON Express 16S (CHPID type FCP) support of hardware data router | N | N | N | Y |
| T10-DIF support by the FICON Express16S features when defined as CHPID type FCP | N | N | N | Y |
| OSA | | | | |
| Large send for IPv6 packets | - | - | - | - |
| Inbound workload queuing for Enterprise Extender | N | N | N | N |
| Checksum offload for IPV6 packets | N | N | N | N |
| Checksum offload for LPAR-to-LPAR traffic | N | N | N | N |
| OSA-Express5S 10 Gigabit Ethernet LR and SR, CHPID type OSD | Y | Y | Y (13) | Y |
| OSA-Express5S 10 Gigabit Ethernet LR and SR, CHPID type OSX | Y | Y | Y (14) | Y (15) |
| OSA-Express5S Gigabit Ethernet LX and SX, CHPID type OSD (two ports per CHPID) | Y | Y | Y (13) | Y (16) |
| OSA-Express5S Gigabit Ethernet LX and SX, CHPID type OSD (one port per CHPID) | Y | Y | Y (13) | Y |
| OSA-Express5S 1000BASE-T Ethernet, CHPID type OSC | Y | Y | N | - |
| OSA-Express5S 1000BASE-T Ethernet, CHPID type OSD (two ports per CHPID) | Y | Y | Y (13) | Y (16) |
| OSA-Express5S 1000BASE-T Ethernet, CHPID type OSD (one port per CHPID) | Y | Y | Y (13) | Y |
| OSA-Express5S 1000BASE-T Ethernet, CHPID type OSE | Y | Y | N | N |
| OSA-Express5S 1000BASE-T Ethernet, CHPID type OSM | N | N | N | Y (17) |
| OSA-Express5S 1000BASE-T Ethernet, CHPID type OSN | Y | Y | Y | Y |
| OSA-Express4S 10-Gigabit Ethernet LR and SR, CHPID type OSD | Y | Y | Y | Y |
| OSA-Express4S 10-Gigabit Ethernet LR and SR, CHPID type OSX | Y | N | Y (18) | Y |
| OSA-Express4S Gigabit Ethernet LX and SX, CHPID type OSD (two ports per CHPID) | Y | Y | Y (18) | Y |
| OSA-Express4S Gigabit Ethernet LX and SX, CHPID type OSD (one port per CHPID) | Y | Y | Y | Y |
| OSA-Express4S 1000BASE-T, CHPID type OSC (one or two ports per CHPID) | Y | Y | N | - |
| OSA-Express4S 1000BASE-T, CHPID type OSD (two ports per CHPID) | Y | Y | Y (6) | Y |
| OSA-Express4S 1000BASE-T, CHPID type OSD (one port per CHPID) | Y | Y | Y | Y |
| OSA-Express4S 1000BASE-T, CHPID type OSE (one or two ports per CHPID) | Y | Y | N | N |
| OSA-Express4S 1000BASE-T, CHPID type OSM | N | N | N | Y |
| OSA-Express4S 1000BASE-T, CHPID type OSN (one or two ports per CHPID) | Y | Y | Y (6) | Y |
| Parallel Sysplex and other | | | | |
| Server Time Protocol (STP) enhancements | - | - | - | Y |
| STP - Server Time Protocol | - | - | Y (19) | Y |
| Coupling over InfiniBand CHPID type CIB | - | - | Y | - |
| InfiniBand coupling links 12x at a distance of 150 m (492 ft.) | - | - | - | - |
| InfiniBand coupling links 1x at unrepeated distance of 10 km (6.2 miles) | - | - | - | - |
| Dynamic I/O support for InfiniBand CHPIDs | - | - | - | - |
| CFCC Level 20 | - | - | Y | - |

1 SLES 12 and RHEL can support up to 256 PUs (IFLs or CPs).

2 10 TB of real storage is supported per server.

3 Red Hat (RHEL) supports a maximum of 3 TB.

4 z/TPF supports only AES-128 and AES-256.

5 z/TPF supports only SHA-1 and SHA-256.

6 Service is required.

7 Supported only when running in accelerator mode.

8 Applicable to guest operating systems.

9 IBM is working with its Linux distribution partners to include support in future Linux on z Systems distribution releases.

10 Supported by SLES 11.

11 Supported by SLES 11 SP3 and RHEL 6.4.

12 For more information, see 7.3.39, “FCP provides increased performance” on page 264.

13 Requires PUT 5 with PTFs.

14 Requires PUT 8 with PTFs.

15 Supported by SLES 11 SP1, SLES 10 SP4, and RHEL 6, RHEL 5.6.

16 Supported by SLES 11, SLES 10 SP2, and RHEL 6, RHEL 5.2.

17 Supported by SLES 11 SP2, SLES 10 SP4, and RHEL 6, RHEL 5.2.

18 Requires PUT 4 with PTFs.

19 Server Time Protocol (STP) is supported in z/TPF with APAR PJ36831 in PUT 07.

7.3 Support by function

This section addresses operating system support by function. Only the currently in-support releases are covered.

Tables in this section use the following convention:

N/A Not applicable

NA Not available

7.3.1 Single system image

A single system image can control several processor units, such as CPs, zIIPs, or IFLs.

Maximum number of PUs per system image

Table 7-5 lists the maximum number of PUs supported by each operating system image and by special-purpose LPARs.

Table 7-5 Single system image size software support

| Operating system | Maximum number of PUs per system image |
|---|---|
| z/OS V2R1 | 141 (1) |
| z/OS V1R13 | 100 (2) |
| z/OS V1R12 | 100 (2) |
| z/VM V6R3 | 64 (3) |
| z/VM V6R2 | 32 |
| z/VSE V5R1 and later | z/VSE Turbo Dispatcher can use up to 4 CPs, and tolerates up to 10-way LPARs. |
| z/TPF V1R1 | 86 CPs |
| CFCC Level 20 | 16 CPs or ICFs: CPs and ICFs cannot be mixed. |
| IBM zAware | 80 |
| Linux on z Systems (4) | SUSE SLES 12: 256 CPs or IFLs. SUSE SLES 11: 64 CPs or IFLs. Red Hat RHEL 7: 256 CPs or IFLs. Red Hat RHEL 6: 64 CPs or IFLs. |

1 128 PUs in multithreading mode and 141 PUs supported without multithreading.

2 Total characterizable PUs including zIIPs and CPs.

3 64 PUs without SMT mode and 32 PUs with SMT.

4 IBM is working with its Linux distribution partners to provide the use of this function in future Linux on z Systems distribution releases.
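As a planning aid, the limits in Table 7-5 can be checked mechanically. The following Python sketch is an illustration only: the figures are transcribed from Table 7-5 and its footnotes, while the data layout and the function name `fits_in_image` are assumptions made for this example. Releases for which the table gives no SMT figure are encoded with `None`.

```python
from typing import Optional

# (limit without SMT, limit with SMT) per system image, from Table 7-5
# and its footnotes. None means the table lists no SMT figure.
MAX_PUS: dict[str, tuple[int, Optional[int]]] = {
    "z/OS V2R1": (141, 128),
    "z/OS V1R13": (100, None),
    "z/OS V1R12": (100, None),
    "z/VM V6R3": (64, 32),
    "z/VM V6R2": (32, None),
    "z/TPF V1R1": (86, None),
}


def fits_in_image(os_level: str, pus: int, smt: bool = False) -> bool:
    """Check a planned PU count against the single system image limit."""
    without_smt, with_smt = MAX_PUS[os_level]
    if smt:
        if with_smt is None:
            raise ValueError(f"{os_level}: no SMT limit is listed in Table 7-5")
        return pus <= with_smt
    return pus <= without_smt


if __name__ == "__main__":
    print(fits_in_image("z/OS V2R1", 141))            # within the non-SMT limit
    print(fits_in_image("z/OS V2R1", 141, smt=True))  # exceeds the SMT limit
```

Raising an error for a missing SMT figure, rather than guessing a value, keeps the sketch from asserting limits that the table does not state.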

The zAware-mode logical partition

zEC12 introduced an LPAR mode, called zAware-mode, that is exclusively for running the IBM zAware virtual appliance. The IBM zAware virtual appliance can pinpoint deviations in z/OS normal system behavior. It also improves real-time event diagnostic tests by monitoring the z/OS operations log (OPERLOG). It looks for unusual messages, unusual message patterns that typical monitoring systems miss, and unique messages that might indicate system health issues. The IBM zAware virtual appliance requires the monitored clients to run z/OS V1R13 with PTFs or later. The newer version of IBM zAware is enhanced to work with messages without message IDs. This includes support for Linux running natively or as a guest under z/VM on z Systems.

The z/VM-mode LPAR

z13 supports an LPAR mode, called z/VM-mode, that is exclusively for running z/VM as the first-level operating system. The z/VM-mode requires z/VM V6R2 or later, and allows z/VM to use a wider variety of specialty processors in a single LPAR. For example, in a z/VM-mode LPAR, z/VM can manage Linux on z Systems guests running on IFL processors while also managing z/VSE and z/OS guests on central processors (CPs). It also allows z/OS to fully use zIIPs.

7.3.2 zIIP support

zIIPs do not change the model capacity identifier of the z13. IBM software product license charges based on the model capacity identifier are not affected by the addition of zIIPs. On a z13, z/OS Version 1 Release 12 is the minimum level for supporting zIIPs.

No changes to applications are required to use zIIPs. zIIPs can be used by these applications:

•DB2 V8 and later for z/OS data serving, for applications that use Distributed Relational Database Architecture (DRDA) over TCP/IP, such as data serving, data warehousing, and selected utilities.

•z/OS XML services.

•z/OS CIM Server.

•z/OS Communications Server for network encryption (Internet Protocol Security (IPSec)) and for large messages that are sent by HiperSockets.

•IBM GBS Scalable Architecture for Financial Reporting.

•IBM z/OS Global Mirror (formerly XRC) and System Data Mover.

•IBM OMEGAMON® XE on z/OS, OMEGAMON XE on DB2 Performance Expert, and DB2 Performance Monitor.

•Any Java application that is using the current IBM SDK.

•WebSphere Application Server V5R1 and later, and products that are based on it, such as WebSphere Portal, WebSphere Enterprise Service Bus (WebSphere ESB), and WebSphere Business Integration (WBI) for z/OS.

•CICS/TS V2R3 and later.

•DB2 UDB for z/OS Version 8 and later.

•IMS Version 8 and later.

•zIIP Assisted HiperSockets for large messages.

The functioning of a zIIP is transparent to application programs.

In z13, the zIIP processor is designed to run in SMT mode, with up to two threads per processor. This new function is designed to help improve throughput for zIIP workloads and provide appropriate performance measurement, capacity planning, and SMF accounting data. This support is planned to be available for z/OS V2.1 with PTFs at z13 general availability.

Use the PROJECTCPU option of the IEAOPTxx parmlib member to help determine whether zIIPs can be beneficial to the installation. Setting PROJECTCPU=YES directs z/OS to record the amount of eligible work for zIIPs in SMF record type 72 subtype 3. The field APPL% IIPCP of the Workload Activity Report listing by WLM service class indicates the percentage of a processor that is zIIP eligible. Because a zIIP is priced lower than a CP, a utilization as low as 10% might provide benefits.
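The 10% guideline can be applied to the reported APPL% IIPCP values with a small calculation. The following Python sketch is hypothetical: the service class names and percentages are invented sample data, and adding the per-class values to obtain a system-wide figure is an assumption of this example rather than a documented procedure. Only the 10% threshold comes from the text.

```python
def ziip_eligible_percent(appl_iipcp_by_class: dict[str, float]) -> float:
    """Sum the APPL% IIPCP values (percent of one processor) over all classes."""
    return sum(appl_iipcp_by_class.values())


def ziip_worth_evaluating(
    appl_iipcp_by_class: dict[str, float],
    threshold_percent: float = 10.0,  # guideline quoted in the text
) -> bool:
    """Return True when the projected zIIP-eligible work reaches the threshold."""
    return ziip_eligible_percent(appl_iipcp_by_class) >= threshold_percent


if __name__ == "__main__":
    # Invented sample values, as they might be read from a
    # Workload Activity Report taken with PROJECTCPU=YES.
    sample = {"DDF": 6.5, "JAVABATCH": 4.0, "ONLINE": 0.5}
    print(ziip_eligible_percent(sample), ziip_worth_evaluating(sample))
```

Measurements from several representative intervals, including peak periods, would give a sounder basis than a single report.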

7.3.3 Transactional Execution

The IBM zEnterprise EC12 introduced an architectural feature called Transactional Execution (TX). This capability is known in academia and industry as “hardware transactional memory”. Transactional execution has also been implemented in z13.

This feature enables software to indicate to the hardware the beginning and end of a group of instructions that need to be treated in an atomic way. Either all of their results happen or none happens, in true transactional style. The execution is optimistic. The hardware provides a memory area to record the original contents of affected registers and memory as the instruction’s execution takes place. If the transactional execution group is canceled or must be rolled back, the hardware transactional memory is used to reset the values. Software can implement a fallback capability.

This capability enables more efficient software by providing a way to avoid locks (lock elision). This advantage is of special importance for speculative code generation and highly parallelized applications.
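The optimistic execution, rollback, and software fallback that are described in this section can be pictured with a small software simulation. The following Python sketch is not hardware transactional memory and does not use any z/Architecture instruction; the names run_transactionally and TransactionAborted are hypothetical, and the snapshot dictionary stands in for the memory area that the hardware uses to record original contents.

```python
import threading


class TransactionAborted(Exception):
    """Raised by a transaction body to simulate an abort."""


def run_transactionally(state, body, fallback_lock, max_retries=3):
    """Apply body to state so that all of its updates happen, or none do."""
    for _ in range(max_retries):
        snapshot = dict(state)          # record the original contents
        try:
            body(state, True)           # optimistic attempt, no lock taken
            return "committed"
        except TransactionAborted:
            state.clear()
            state.update(snapshot)      # roll back to the recorded values
    # Software fallback: serialize with a conventional lock.
    with fallback_lock:
        body(state, False)
    return "fallback"
```

The body receives a flag that tells it whether it runs optimistically or on the locked fallback path, which mirrors the way software is expected to provide its own fallback when a transaction keeps aborting.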

TX is used by IBM JVM, but potentially can be used by other software. z/OS V1R13 with program temporary fixes (PTFs) or later is required. The feature also is enabled for specific Linux distributions.3

7.3.4 Maximum main storage size

Table 7-6 on page 244 lists the maximum amount of main storage that is supported by current operating systems. A maximum of 10 TB of main storage can be defined for an LPAR4 on a z13.

Expanded storage, although part of the z/Architecture, is used only by z/VM.

|

Statement of direction1: z/VM V6.3 is the last z/VM release that supports Expanded Storage (XSTORE) for either host or guest use. The z13 will be the last high-end server to support Expanded Storage (XSTORE).

|

1 All statements regarding IBM plans, directions, and intent are subject to change or withdrawal without notice. Any reliance on these statements of general direction is at the relying party’s sole risk and will not create liability or obligation for IBM.

Table 7-6 Maximum memory that is supported by the operating system

| Operating system | Maximum supported main storage (1) |
|---|---|
| z/OS | z/OS V2R1 and later support 4 TB (1). |
| z/VM | z/VM V6R3 and later support 1 TB. z/VM V6R2 supports 256 GB. |
| z/VSE | z/VSE V5R1 and later support 32 GB. |
| z/TPF | z/TPF supports 4 TB (1). |
| CFCC | Level 20 supports up to 3 TB per server (1). |
| IBM zAware | Supports up to 3 TB per server (1). |
| Linux on z Systems (64-bit) | SUSE SLES 12, SLES 11, and SLES 10 support 4 TB (1). Red Hat RHEL 7, RHEL 6, and RHEL 5 support 3 TB (1). |

1 z13 supports 10 TB user configurable memory per server.
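The limits in Table 7-6 combine with the 10 TB LPAR maximum as a simple minimum. The following Python sketch shows that arithmetic for a few of the table entries; the function name and the dictionary keys are hypothetical, and sizes are expressed in GB with 1 TB taken as 1024 GB.

```python
TB = 1024  # sizes are in GB; 1 TB = 1024 GB

# Values transcribed from Table 7-6, in GB.
OS_MAX_GB = {
    "z/OS V2R1": 4 * TB,
    "z/VM V6R3": 1 * TB,
    "z/VM V6R2": 256,
    "z/VSE V5R1": 32,
    "z/TPF": 4 * TB,
}

LPAR_MAX_GB = 10 * TB  # maximum main storage that can be defined for a z13 LPAR


def usable_main_storage_gb(os_level, lpar_defined_gb):
    """Return how much of the LPAR's defined storage the operating system can use."""
    if lpar_defined_gb > LPAR_MAX_GB:
        raise ValueError("an LPAR on a z13 cannot be defined with more than 10 TB")
    return min(lpar_defined_gb, OS_MAX_GB[os_level])
```

For example, an LPAR that is defined with 512 GB and runs z/VM V6R2 can use only 256 GB of it.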

7.3.5 Flash Express

IBM z13 continues support for Flash Express, which can help improve the resilience and performance of the z/OS system. Flash Express is designed to assist with the handling of workload spikes or increased workload demand that might occur at the opening of the business day, or in a workload shift from one system to another.

z/OS is the first OS to use Flash Express storage as storage-class memory (SCM) for paging store and supervisor call (SVC) memory dumps. Flash memory is a faster paging device as compared to a hard disk drive (HDD). SVC memory dump data capture time is expected to be substantially reduced. As a paging store, Flash Express storage is suitable for workloads that can tolerate paging. It does not benefit workloads that cannot afford to page. The z/OS design for Flash Express storage does not completely remove the virtual storage constraints that are created by a paging spike in the system. However, some scalability relief is expected because of faster paging I/O with Flash Express storage.

Flash Express storage is allocated to an LPAR similarly to main memory. The initial and maximum amount of Flash Express Storage that is available to a particular LPAR is specified at the Support Element (SE) or Hardware Management Console (HMC) by using a new Flash Storage Allocation panel. The Flash Express storage granularity is 16 GB. The amount of Flash Express storage in the partition can be changed dynamically between the initial and the maximum amount at the SE or HMC. For z/OS, this change also can be made by using an operator command. Each partition’s Flash Express storage is isolated like the main storage, and sees only its own space in the flash storage space.

Flash Express provides 1.4 TB of storage per feature pair. Up to four pairs can be installed, for a total of 5.6 TB. This capacity can easily hold all paging data, but not all types of page data can be placed on it. For example, virtual I/O (VIO) data always is placed on an external disk. Local page data sets are still required to support peak paging demands that require more capacity than provided by the amount of configured SCM.
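The capacity and granularity figures above lend themselves to a short worked example. The following Python sketch assumes that an LPAR's initial and maximum Flash Express amounts are whole multiples of the 16 GB granularity; the function names are hypothetical and do not correspond to any SE or HMC interface.

```python
INCREMENT_GB = 16   # Flash Express storage granularity
MAX_PAIRS = 4       # up to four feature pairs can be installed


def installed_capacity_tb(pairs):
    """Total Flash Express capacity in TB at 1.4 TB per feature pair."""
    if not 1 <= pairs <= MAX_PAIRS:
        raise ValueError("between one and four feature pairs can be installed")
    return pairs * 14 / 10


def round_up_to_increment(requested_gb):
    """Round a requested amount up to the next 16 GB increment."""
    return -(-requested_gb // INCREMENT_GB) * INCREMENT_GB


def allocation_is_valid(initial_gb, maximum_gb):
    """Check that both amounts are 16 GB multiples and initial <= maximum."""
    return (
        initial_gb % INCREMENT_GB == 0
        and maximum_gb % INCREMENT_GB == 0
        and 0 <= initial_gb <= maximum_gb
    )
```

Under these assumptions, a request for 100 GB rounds up to 112 GB, and four feature pairs provide 5.6 TB.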

The z/OS paging subsystem works with a mix of internal Flash Express storage and external disk. The placement of data on Flash Express storage and external disk is self-tuning, based on measured performance. At IPL time, z/OS detects whether Flash Express storage is assigned to the partition. z/OS automatically uses Flash Express storage for paging unless specified otherwise by using PAGESCM=NONE in IEASYSxx. No definition is required for placement of data on Flash Express storage.

The support is delivered in the z/OS V1R13 real storage manager (RSM) Enablement Offering Web Deliverable (FMID JBB778H) for z/OS V1R13.5 The installation of this web deliverable requires careful planning because the size of the Nucleus, extended system queue area (ESQA) per CPU, and RSM stack is increased. Also, there is a new memory pool for pageable large pages. For web-deliverable code on z/OS, see the z/OS downloads website:

The support also is delivered in z/OS V2R1 (included with the base product) or later.

Linux on z Systems also supports Flash Express as an SCM device. This is useful for workloads with large write operations with a block size of 256 KB or more of data. The SCM increments are accessed through extended asynchronous data mover (EADM) subchannels.

Table 7-7 lists the minimum support requirements for Flash Express.

Table 7-7 Minimum support requirements for Flash Express

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R13 (1) |
| Linux on z Systems | SLES12, SLES11 SP3, RHEL7, RHEL6 |

1 Web deliverable and PTFs are required.

Flash Express usage by CFCC

Coupling facility control code (CFCC) Level 20 supports Flash Express. Initial CF Flash usage is targeted for WebSphere MQ shared queues application structures. Structures can now be allocated with a combination of real memory and SCM that is provided by the Flash Express feature. For more information, see “Flash Express exploitation by CFCC” on page 289.

Flash Express usage by Java

z/OS Java SDK 7 SR3, CICS TS V5.1, WebSphere Liberty Profile 8.5, DB2 V11, and IMS V12 are targeted for Flash Express usage. There is a statement of direction to support traditional WebSphere V8. The support is for just-in-time (JIT) Code Cache and Java Heap to improve performance for pageable large pages.

7.3.6 zEnterprise Data Compression Express (zEDC)

The growth of data that must be captured, transferred, and stored for a long time is unrelenting. Software-implemented compression algorithms are costly in terms of processor resources, and storage costs are not negligible either.

zEDC, an optional feature that is available on z13, zEC12, and zBC12, addresses those requirements by providing hardware-based acceleration for data compression and decompression. zEDC provides data compression with lower CPU consumption than the compression technology that previously was available on z Systems.

Exploitation support of zEDC Express functions is provided exclusively by z/OS V2R1 zEnterprise Data Compression for both data compression and decompression.

Support for data recovery (decompression) when the zEDC is not installed, or installed but not available, on the system, is provided through software on z/OS V2R1, V1R13, and V1R12 with the correct PTFs. Software decompression is slow and uses considerable processor resources; therefore, it is not suggested for production environments.

zEDC is enhanced to support QSAM/BSAM (non-VSAM) data set compression. This can be achieved in either of the following ways:

•Data class level: Two new values, zEDC Required (ZR) and zEDC Preferred (ZP), can be set with the COMPACTION option in the data class.

•System level: Two new values, zEDC Required (ZEDC_R) and zEDC Preferred (ZEDC_P), can be specified with the COMPRESS parameter found in the IGDSMSxx member of the SYS1.PARMLIB data set.

Data class takes precedence over system level.
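The precedence rule can be sketched as a small lookup. The following Python function models only the four values that are named above and uses None to mean that no zEDC value is set at that level; the function name and the returned strings are hypothetical, and other COMPACTION or COMPRESS values are deliberately not modeled.

```python
DATA_CLASS_VALUES = {"ZR": "required", "ZP": "preferred"}
SYSTEM_VALUES = {"ZEDC_R": "required", "ZEDC_P": "preferred"}


def effective_zedc_setting(data_class_compaction=None, system_compress=None):
    """Resolve the zEDC setting; the data class wins over the system level."""
    if data_class_compaction is not None:
        if data_class_compaction not in DATA_CLASS_VALUES:
            raise ValueError("only ZR and ZP are modeled in this sketch")
        return DATA_CLASS_VALUES[data_class_compaction]
    if system_compress is not None:
        if system_compress not in SYSTEM_VALUES:
            raise ValueError("only ZEDC_R and ZEDC_P are modeled in this sketch")
        return SYSTEM_VALUES[system_compress]
    return None  # neither level requests zEDC compression
```

For example, a data class with ZP on a system that specifies ZEDC_R resolves to "preferred" because the data class takes precedence.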

Table 7-8 shows the minimum support requirements for zEDC Express.

Table 7-8 Minimum support requirements for zEDC Express

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V2R1 (1); z/OS V1R13 (1) (software decompression support only); z/OS V1R12 (1) (software decompression support only) |

1 PTFs are required.

7.3.7 10GbE RoCE Express

The IBM z13 supports the RoCE Express feature. It extends this support by providing support to the second port on the adapter and by sharing the ports to up to 31 partitions, per adapter, using both ports.

The 10 Gigabit Ethernet (10GbE) RoCE Express feature is designed to help reduce consumption of CPU resources for applications that use the TCP/IP stack (such as WebSphere accessing a DB2 database). Use of the 10GbE RoCE Express feature also can help reduce network latency with memory-to-memory transfers using Shared Memory Communications over Remote Direct Memory Access (SMC-R) in z/OS V2R1. It is transparent to applications and can be used for LPAR-to-LPAR communication on a single z13 or for server-to-server communication in a multiple CPC environment.

z/OS V2R1 with PTFs is the only supporting OS for the SMC-R protocol. It does not roll back to previous z/OS releases. z/OS V1R12 and z/OS V1R13 with PTFs provide only compatibility support.

IBM is working with its Linux distribution partners to include support in future Linux on z Systems distribution releases.

Table 7-9 lists the minimum support requirements for 10GbE RoCE Express.

Table 7-9 Minimum support requirements for RoCE Express

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V2R1 with supporting PTFs (SPE for IBM VTAM®, TCP/IP, and IOS1). The IOS PTF is a minor change to allow greater than 256 (xFF) PFIDs for RoCE. |
| z/VM | z/VM V6.3 with supporting PTFs. The z/VM V6.3 SPE for base PCIe support is required. There currently are no known additional z/VM software changes that are required for SR-IOV. When running z/OS as a guest, z/OS APAR OA43256 is required for RoCE, and APARs OA43256 and OA44482 are required for zEDC. A z/VM website that details the prerequisites for using RoCE and zEDC as a guest can be found at the following location: |
| Linux on z Systems | Currently limited to experimental support in SUSE SLES 12, SUSE SLES 11 SP3 with latest maintenance, and RHEL 7.0. |

7.3.8 Large page support

In addition to the existing 1-MB large pages, 4-KB pages, and page frames, z13 supports pageable 1-MB large pages, large pages that are 2 GB, and large page frames. For more information, see “Large page support” on page 113.

Table 7-10 lists the support requirements for 1-MB large pages.

Table 7-10 Minimum support requirements for 1-MB large page

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R11; z/OS V1R13 (1) for pageable 1-MB large pages |
| z/VM | Not supported, and not available to guests |
| z/VSE | z/VSE V4R3: Supported for data spaces |
| Linux on z Systems | SUSE SLES 12, SUSE SLES 11, Red Hat RHEL 7, Red Hat RHEL 6 |

1 Web deliverable and PTFs are required, plus the Flash Express hardware feature.

Table 7-11 lists the support requirements for 2-GB large pages.

Table 7-11 Minimum support requirements for 2-GB large pages

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R13 |

7.3.9 Hardware decimal floating point

Industry support for decimal floating point is growing, with IBM leading the open standard definition. Examples of support for the draft standard IEEE 754r include Java BigDecimal, C#, XML, C/C++, GCC, COBOL, and other key software vendors, such as Microsoft and SAP.

Decimal floating point support was introduced with z9 EC. z13 inherited the decimal floating point accelerator feature that was introduced with z10 EC. For more information, see 3.4.6, “Decimal floating point (DFP) accelerator” on page 96.

Table 7-12 lists the operating system support for decimal floating point. For more information, see 7.6.6, “Decimal floating point and z/OS XL C/C++ considerations” on page 286.

Table 7-12 Minimum support requirements for decimal floating point

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R12 |
| z/VM | z/VM V6R2: Support is for guest use. |
| Linux on z Systems | SUSE SLES 12, SUSE SLES 11, Red Hat RHEL 7, Red Hat RHEL 6 |

7.3.10 Up to 85 LPARs

This feature, first made available in z13, allows the system to be configured with up to 85 LPARs. Because channel subsystems can be shared by up to 15 LPARs, it is necessary to configure six channel subsystems to reach the 85 LPARs limit. Table 7-13 lists the minimum operating system levels for supporting 85 LPARs.

Table 7-13 Minimum support requirements for 85 LPARs

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R12 |
| z/VM | z/VM V6R2 |
| z/VSE | z/VSE V5R1 |
| z/TPF | z/TPF V1R1 |
| Linux on z Systems | SUSE SLES 11, SUSE SLES 12, Red Hat RHEL 7, Red Hat RHEL 6 |

|

Remember: The IBM zAware virtual appliance runs in a dedicated LPAR, so when it is activated, it reduces by one the maximum number of available LPARs.

|
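The channel subsystem count for this feature follows from a ceiling division of the LPAR count by the 15 LPARs that one channel subsystem can serve. The following Python sketch shows that arithmetic, together with the effect of an active IBM zAware partition; the function names are hypothetical.

```python
import math

MAX_LPARS = 85        # maximum number of LPARs on a z13
LPARS_PER_CSS = 15    # LPARs that can share one channel subsystem


def channel_subsystems_needed(lpars, per_css=LPARS_PER_CSS):
    """Smallest number of channel subsystems that can serve the LPARs."""
    return math.ceil(lpars / per_css)


def lpars_left_for_other_use(zaware_active):
    """An active IBM zAware partition occupies one of the 85 LPARs."""
    return MAX_LPARS - 1 if zaware_active else MAX_LPARS
```

Five channel subsystems cover at most 75 LPARs, which is why a sixth is needed to reach 85.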

7.3.11 Separate LPAR management of PUs

The z13 uses separate PU pools for each optional PU type. The separate management of PU types enhances and simplifies capacity planning and management of the configured LPARs and their associated processor resources. Table 7-14 lists the support requirements for the separate LPAR management of PU pools.

Table 7-14 Minimum support requirements for separate LPAR management of PUs

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R12 |
| z/VM | z/VM V6R2 |
| z/VSE | z/VSE V5R1 |
| z/TPF | z/TPF V1R1 |
| Linux on z Systems | SUSE SLES 12, SUSE SLES 11, Red Hat RHEL 7, Red Hat RHEL 6 |

7.3.12 Dynamic LPAR memory upgrade

An LPAR can be defined with both an initial and a reserved amount of memory. At activation time, the initial amount is made available to the partition and the reserved amount can be added later, partially or totally. Those two memory zones do not have to be contiguous in real memory, but appear as logically contiguous to the operating system that runs in the LPAR.

z/OS can take advantage of this support and nondisruptively acquire and release memory from the reserved area. z/VM V6R2 and higher can acquire memory nondisruptively, and immediately make it available to guests. z/VM virtualizes this support to its guests, which now also can increase their memory nondisruptively if supported by the guest operating system. Releasing memory from z/VM is not supported. Releasing memory from the z/VM guest depends on the guest’s operating system support.

Dynamic LPAR memory upgrade is not supported for zAware-mode LPARs.

7.3.13 LPAR physical capacity limit enforcement

On the IBM z13, PR/SM is enhanced to support an option to limit the amount of physical processor capacity that is consumed by an individual LPAR when a PU that is defined as a central processor (CP) or an IFL is shared across a set of LPARs. This enhancement is designed to provide a physical capacity limit that is enforced as an absolute (versus a relative) limit; it is not affected by changes to the logical or physical configuration of the system. This physical capacity limit can be specified in units of CPs or IFLs.

Table 7-15 lists the minimum operating system level that is required on z13.

Table 7-15 Minimum support requirements for LPAR physical capacity limit enforcement

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R12 (1) |
| z/VM | z/VM V6R3 |
| z/VSE | z/VSE V5R1 (1) |

1 PTFs are required.

7.3.14 Capacity Provisioning Manager

The provisioning architecture enables clients to better control the configuration and activation of the On/Off CoD. For more information, see 8.8, “Nondisruptive upgrades” on page 346. The new process is inherently more flexible, and can be automated. This capability can result in easier, faster, and more reliable management of the processing capacity.

The Capacity Provisioning Manager, a function that is first available with z/OS V1R9, interfaces with z/OS Workload Manager (WLM) and implements capacity provisioning policies. Several implementation options are available, from an analysis mode that issues only guidelines, to an autonomic mode that provides fully automated operations.

Replacing manual monitoring with autonomic management or supporting manual operation with guidelines can help ensure that sufficient processing power is available with the least possible delay. Support requirements are listed in Table 7-16.

Table 7-16 Minimum support requirements for capacity provisioning

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R12 |
| z/VM | Not supported, and not available to guests |

7.3.15 Dynamic PU add

Planning of an LPAR configuration allows defining reserved PUs that can be brought online when extra capacity is needed. Operating system support is required to use this capability without an IPL, that is, nondisruptively. This support has been in z/OS for a long time.

The dynamic PU add function enhances this support by allowing you to define and change dynamically the number and type of reserved PUs in an LPAR profile, removing any planning requirements. Table 7-17 lists the minimum required operating system levels to support this function.

The new resources are immediately made available to the operating system and, in the case of z/VM, to its guests. The dynamic PU add function is not supported for zAware-mode LPARs.

Table 7-17 Minimum support requirements for dynamic PU add

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R12 |
| z/VM | z/VM V6R2 |
| z/VSE | z/VSE V5R1 |
| Linux on z Systems | SUSE SLES 12, SUSE SLES 11, Red Hat RHEL 7, Red Hat RHEL 6 |

7.3.16 HiperDispatch

The HIPERDISPATCH=YES/NO parameter in the IEAOPTxx member of SYS1.PARMLIB and on the SET OPT=xx command controls whether HiperDispatch is enabled or disabled for a z/OS image. It can be changed dynamically, without an IPL or any outage.

The default is that HiperDispatch is disabled on all releases, from z/OS V1R10 (requires PTFs for zIIP support) through z/OS V1R12.

Beginning with z/OS V1R13, when running on a z13, zEC12, zBC12, z196, or z114 server, the IEAOPTxx keyword HIPERDISPATCH defaults to YES. If HIPERDISPATCH=NO is specified, the specification is accepted as it was on previous z/OS releases.

The usage of SMT in the z13 requires that HiperDispatch is enabled on the operating system (z/OS V2R1 or z/VM V6R3).

Additionally, with z/OS V1R12 or later, any LPAR running with more than 64 logical processors is required to operate in HiperDispatch Management Mode.

The following rules control this environment:

•If an LPAR is defined at IPL with more than 64 logical processors, the LPAR automatically operates in HiperDispatch Management Mode, regardless of the HIPERDISPATCH= specification.

•If more logical processors are added to an LPAR that has 64 or fewer logical processors and the additional logical processors raise the number of logical processors to more than 64, the LPAR automatically operates in HiperDispatch Management Mode regardless of the HIPERDISPATCH=YES/NO specification. That is, even if the LPAR has the HIPERDISPATCH=NO specification, that LPAR is converted to operate in HiperDispatch Management Mode.

•An LPAR with more than 64 logical processors running in HiperDispatch Management Mode cannot be reverted to run in non-HiperDispatch Management Mode.
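The defaults and rules above can be condensed into a small decision function. The following Python sketch assumes a z/OS image that runs on a z13, represents the release as a (version, release) tuple, and uses None when HIPERDISPATCH is not specified in IEAOPTxx; the function name is hypothetical.

```python
def hiperdispatch_active(zos_release, logical_processors, specified=None):
    """Return True when the LPAR operates in HiperDispatch mode.

    zos_release: tuple such as (1, 13) for z/OS V1R13.
    specified: True for HIPERDISPATCH=YES, False for NO, None when omitted.
    """
    # With z/OS V1R12 or later, more than 64 logical processors forces
    # HiperDispatch Management Mode regardless of the specification.
    if zos_release >= (1, 12) and logical_processors > 64:
        return True
    # Without an explicit specification, the default is YES from V1R13 on.
    if specified is None:
        return zos_release >= (1, 13)
    return specified
```

For example, an LPAR on z/OS V1R13 with 80 logical processors runs in HiperDispatch mode even when HIPERDISPATCH=NO is specified, whereas the same specification is honored with 64 or fewer logical processors.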

HiperDispatch in the z13 uses a new chip and CPC drawer configuration to improve cache access performance. Beginning with z/OS V1R13, HiperDispatch changed to use the new node cache structure of z13. The base support is provided by PTFs identified by IBM.device.server.z13-2964.requiredservice.

The PR/SM in the System z9 EC to zEC12 servers stripes the memory across all books in the system to take advantage of the fast book interconnection and spread memory controller work. The PR/SM in the z13 seeks to assign all memory in one drawer striped across the two nodes to take advantage of the lower latency memory access in a drawer and smooth performance variability across nodes in the drawer.

The PR/SM in the System z9 EC to zEC12 servers attempts to assign all logical processors to one book, packed into PU chips of that book, in cooperation with operating system HiperDispatch, to optimize shared cache usage. The PR/SM in the z13 seeks to assign all logical processors of a partition to one CPC drawer, packed into PU chips of that CPC drawer, in cooperation with operating system HiperDispatch, to optimize shared cache usage.

The PR/SM automatically keeps a partition’s memory and logical processors on the same CPC drawer. This arrangement looks simple for a single partition, but it is a complex optimization for multiple logical partitions because some must be split among processor drawers.

To use HiperDispatch effectively, WLM goal adjustment might be required. Review the WLM policies and goals, and update them as necessary. You might want to run with the new policies and HiperDispatch on for a period, turn it off, and then run with the older WLM policies. Compare the results of using HiperDispatch, readjust the new policies, and repeat the cycle, as needed. WLM policies can be changed without turning off HiperDispatch.

A health check is provided to verify whether HiperDispatch is enabled on a system image that is running on z13.

z/VM V6R3

z/VM V6R3 also uses the HiperDispatch facility to improve processor efficiency by making better use of the processor cache, taking advantage of the cache-rich processor, node, and drawer design of the z13 system. The supported processor limit has been increased to 64. With SMT, the limit remains at 32 processors, which allows up to 64 threads to run simultaneously.

The operating system support requirements for HiperDispatch are listed in Table 7-18.

Table 7-18 Minimum support requirements for HiperDispatch

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R11 with PTFs |
| z/VM | z/VM V6R3 |
| Linux on z Systems | SUSE SLES 12 (1), SUSE SLES 11 (1), Red Hat RHEL 7 (1), Red Hat RHEL 6 (1) |

1 For more information about CPU polarization support, see http://www-01.ibm.com/support/knowledgecenter/linuxonibm/com.ibm.linux.z.lgdd/lgdd_t_cpu_pol.html

7.3.17 The 63.75-K subchannels

Servers before z9 EC reserved 1024 subchannels for internal system use, out of a maximum of 64 K subchannels. Starting with z9 EC, the number of reserved subchannels was reduced to 256, increasing the number of subchannels that are available. Reserved subchannels exist only in subchannel set 0. One subchannel is reserved in each of subchannel sets 1, 2, and 3.

The informal name, 63.75-K subchannels, represents 65280 subchannels, as shown in the following equation:

63 x 1024 + 0.75 x 1024 = 65280

The above equation is applicable for subchannel set 0. For subchannel sets 1, 2 and 3, the available subchannels are derived by using the following equation:

(64 x 1024) - 1 = 65535
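Both equations are instances of the same calculation: 64 K subchannels minus the subchannels that are reserved in the set. The following Python sketch checks the two results and adds them up across all four subchannel sets; the function name is hypothetical.

```python
K = 1024


def available_subchannels(subchannel_set):
    """Subchannels available in subchannel set 0, 1, 2, or 3."""
    if subchannel_set not in (0, 1, 2, 3):
        raise ValueError("the z13 has subchannel sets 0 through 3")
    reserved = 256 if subchannel_set == 0 else 1
    return 64 * K - reserved


# Total across all four subchannel sets.
total_subchannels = sum(available_subchannels(ss) for ss in range(4))
```

Subchannel set 0 yields 65280, which is the 63.75-K figure, and each of the other sets yields 65535.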

Table 7-19 lists the minimum operating system level that is required on the z13.

Table 7-19 Minimum support requirements for 63.75-K subchannels

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R12 |
| z/VM | z/VM V6R2 |
| Linux on z Systems | SUSE SLES 12, SUSE SLES 11, Red Hat RHEL 7, Red Hat RHEL 6 |

7.3.18 Multiple subchannel sets (MSS)

MSS, first introduced in z9 EC, provide a mechanism for addressing more than 63.75-K I/O devices and aliases for ESCON6 (CHPID type CNC) and FICON (CHPID types FC) on the z13, zEC12, zBC12, z196, z114, z10 EC, and z9 EC. z196 introduced the third subchannel set (SS2). With z13, one more subchannel set (SS3) has been introduced, which expands the alias addressing by 64-K more I/O devices.

Table 7-20 lists the minimum operating system levels that are required on the z13.

Table 7-20 Minimum software requirement for MSS

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R12 |
| z/VM | z/VM V6R3 (1) |
| Linux on z Systems | SUSE SLES 12, SUSE SLES 11, Red Hat RHEL 7, Red Hat RHEL 6 |

1 For specific Geographically Dispersed Parallel Sysplex (GDPS) usage only

z/VM V6R3 MSS support for mirrored direct access storage device (DASD) provides a subset of host support for the MSS facility to allow using an alternative subchannel set for Peer-to-Peer Remote Copy (PPRC) secondary volumes.

7.3.19 Fourth subchannel set

With z13, a fourth subchannel set (SS3) was introduced. It applies to FICON (CHPID type FC for both FICON and zHPF paths) channels.

Together with the second subchannel set (SS1) and third subchannel set (SS2), SS3 can be used for disk alias devices of both primary and secondary devices, and as Metro Mirror secondary devices. This set helps facilitate storage growth and complements other functions, such as extended address volume (EAV) and Hyper Parallel Access Volumes (HyperPAV).

Table 7-21 lists the minimum operating system levels that are required on the z13.

Table 7-21 Minimum software requirement for SS3

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R13 with PTFs |
| z/VM | z/VM V6R2 with PTF |
| Linux on z Systems | SUSE SLES 12, SUSE SLES 11, Red Hat RHEL 7, Red Hat RHEL 6 |

7.3.20 IPL from an alternative subchannel set

z13 supports IPL from subchannel set 1 (SS1), subchannel set 2 (SS2), or subchannel set 3 (SS3), in addition to subchannel set 0. For more information, see “IPL from an alternative subchannel set” on page 187.

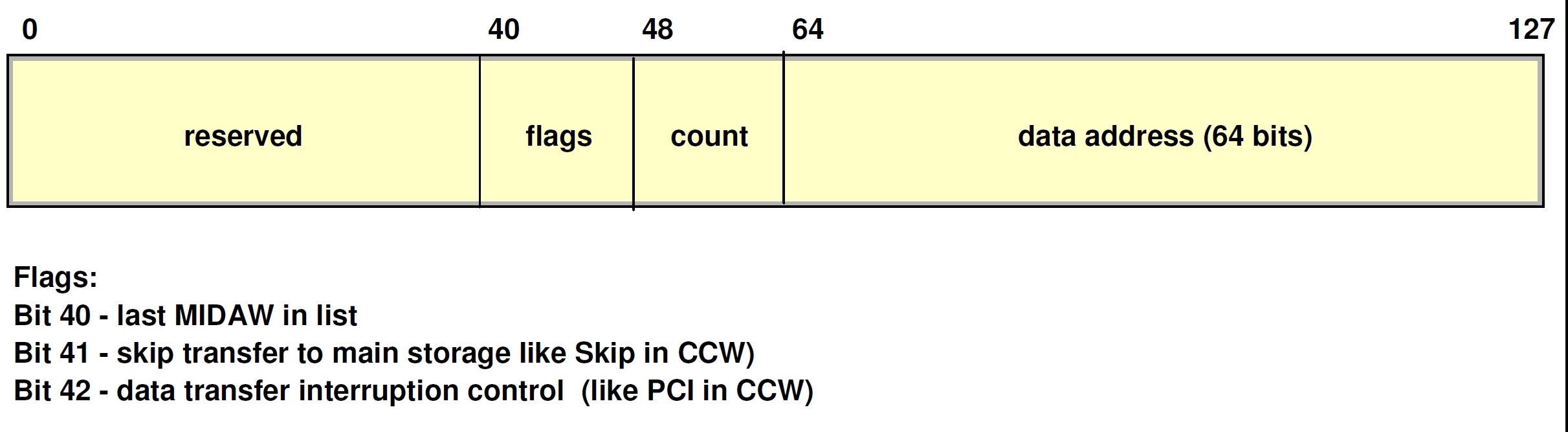

7.3.21 Modified Indirect Data Address Word (MIDAW) facility

The MIDAW facility improves FICON performance. It provides a more efficient channel command word (CCW)/indirect data address word (IDAW) structure for certain categories of data-chaining I/O operations.

Support for the MIDAW facility when running z/OS as a guest of z/VM requires z/VM V6R2 or higher. For more information, see 7.9, “Simultaneous multithreading (SMT)” on page 290.

Table 7-22 lists the minimum support requirements for MIDAW.

Table 7-22 Minimum support requirements for MIDAW

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R12 |
| z/VM | z/VM V6R2 for guest use |

7.3.22 HiperSockets Completion Queue

The HiperSockets Completion Queue function is exclusive to z13, zEC12, zBC12, z196, and z114. The HiperSockets Completion Queue function is designed to allow HiperSockets to transfer data synchronously if possible, and asynchronously if necessary. Therefore, it combines ultra-low latency with more tolerance for traffic peaks. This benefit can be especially helpful in burst situations.
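The "synchronously if possible, asynchronously if necessary" behavior can be pictured with a toy queue model. The following Python sketch is a simplification under stated assumptions, not the actual HiperSockets mechanism: the class name, the notion of a fixed pool of receive buffers, and the returned strings are all hypothetical.

```python
from collections import deque


class CompletionQueueLink:
    """Toy model: deliver at once when a buffer is free, otherwise queue."""

    def __init__(self, receive_buffers):
        self.free_buffers = receive_buffers
        self.delivered = []
        self.pending = deque()

    def send(self, message):
        # Synchronous path: a buffer is free and nothing is queued ahead.
        if self.free_buffers > 0 and not self.pending:
            self.free_buffers -= 1
            self.delivered.append(message)
            return "sync"
        # Asynchronous path: hold the data until the receiver catches up.
        self.pending.append(message)
        return "async"

    def receiver_frees_buffer(self):
        # A queued message reuses the freed buffer immediately; in this model
        # the sender would then learn of the delivery through a completion event.
        if self.pending:
            self.delivered.append(self.pending.popleft())
            return "completion"
        self.free_buffers += 1
        return None
```

In this model, a burst that exceeds the available receive buffers is queued rather than rejected, and message order is preserved when the queued data is delivered later.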

Table 7-23 lists the minimum support requirements for HiperSockets Completion Queue.

Table 7-23 Minimum support requirements for HiperSockets Completion Queue

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R13 (1) |
| z/VSE | z/VSE V5R1 (1) |
| z/VM | z/VM V6R2 (1) |
| Linux on z Systems | SUSE SLES 12, SUSE SLES 11 SP2, Red Hat RHEL 7, Red Hat RHEL 6.2 |

1 PTFs are required.

7.3.23 HiperSockets integration with the intraensemble data network

The HiperSockets integration with the intraensemble data network (IEDN) is exclusive to z13, zEC12, zBC12, z196, and z114. HiperSockets integration with the IEDN combines the HiperSockets network and the physical IEDN so that they are displayed as a single Layer 2 network. This configuration extends the reach of the HiperSockets network outside the CPC to the entire ensemble.

Table 7-24 lists the minimum support requirements for HiperSockets integration with the IEDN.

Table 7-24 Minimum support requirements for HiperSockets integration with IEDN

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R13 (1) |

1 PTFs are required.

7.3.24 HiperSockets Virtual Switch Bridge

The HiperSockets Virtual Switch Bridge is exclusive to z13, zEC12, zBC12, z196, and z114. HiperSockets Virtual Switch Bridge can integrate with the IEDN through OSA-Express for zBX (OSX) adapters. It can then bridge to another central processor complex (CPC) through OSD adapters. This configuration extends the reach of the HiperSockets network outside of the CPC to the entire ensemble and hosts that are external to the CPC. The system is displayed as a single Layer 2 network.

Table 7-25 lists the minimum support requirements for HiperSockets Virtual Switch Bridge.

Table 7-25 Minimum support requirements for HiperSockets Virtual Switch Bridge

| Operating system | Support requirements |
|---|---|
| z/VM | z/VM V6R2 (1), z/VM V6R3 |
| Linux on z Systems (2) | SUSE SLES 12, SUSE SLES 11, SUSE SLES 10 SP4 update (kernel 2.6.16.60-0.95.1), Red Hat RHEL 7, Red Hat RHEL 6, Red Hat RHEL 5.8 (GA-level) |

1 PTFs are required.

2 Applicable to guest operating systems.

7.3.25 HiperSockets Multiple Write Facility

The HiperSockets Multiple Write Facility allows the streaming of bulk data over a HiperSockets link between two LPARs. Multiple output buffers are supported on a single Signal Adapter (SIGA) write instruction. The key advantage of this enhancement is that it allows the receiving LPAR to process a much larger amount of data per I/O interrupt. This process is transparent to the operating system in the receiving partition. HiperSockets Multiple Write Facility with fewer I/O interrupts is designed to reduce processor utilization of the sending and receiving partitions.
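The reduction in I/O interrupts can be estimated with a ceiling division. The following Python sketch assumes one interrupt at the receiver per SIGA write, and it treats the number of output buffers per write as a free parameter because the text does not state a limit; the function name is hypothetical.

```python
import math


def siga_writes_needed(output_buffers, buffers_per_write):
    """Number of SIGA writes (and, by assumption, receiver interrupts)."""
    if output_buffers < 0 or buffers_per_write < 1:
        raise ValueError("buffer counts must be non-negative and at least one per write")
    return math.ceil(output_buffers / buffers_per_write)
```

Under this assumption, sending 64 buffers one per write costs 64 interrupts, whereas grouping 16 buffers per write costs 4.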

Support for this function is required by the sending operating system. For more information, see 4.8.6, “HiperSockets” on page 167. Table 7-26 lists the minimum support requirements for HiperSockets Multiple Write Facility.

Table 7-26 Minimum support requirements for HiperSockets multiple write

| Operating system | Support requirements |
|---|---|
| z/OS | z/OS V1R12 |

7.3.26 HiperSockets IPv6

IPv6 is expected to be a key element in future networking. The IPv6 support for HiperSockets allows compatible implementations between external networks and internal HiperSockets networks.

Table 7-27 lists the minimum support requirements for HiperSockets IPv6 (CHPID type IQD).

Table 7-27 Minimum support requirements for HiperSockets IPv6 (CHPID type IQD)

|

Operating system

|

Support requirements

|

|

z/OS

|

z/OS V1R12

|

|

z/VM