Introducing the IBM z13

Digital business has been driving the transformation of underlying information technology (IT) infrastructure to be more efficient, secure, adaptive, and integrated. IT must be able to handle the explosive growth of mobile clients and employees. It must also be able to use enormous amounts of data to provide deep and real-time insights that help achieve the greatest business impact.

Data has become an increasingly valuable resource that businesses can use to give their existing and previously underserved clients (businesses and citizens) access to new products, services, and societal benefits. To help achieve the required business outcomes, sophisticated real-time analytics can be applied to data in both structured and unstructured formats. Examples include performing fraud detection during the life of a financial transaction, or providing suggestions for cross-selling or up-selling during a sales transaction.

A data-centric infrastructure must always be available for a 24 x 7 business, have flawless data integrity, and be secured from misuse. It must be an integrated infrastructure that can support new applications, and it must provide new mobile capabilities with real-time analytics delivered by a secure cloud infrastructure.

The IBM z13, like its predecessors, is designed from the chip level up for data serving and transaction processing, which form the core of business. IBM enables a common view of data. The z13 provides unmatched support for data, including a strong, fast I/O infrastructure, cache on the chip to bring data close to processing power, the security and compression capabilities of the coprocessors and I/O features, and the 99.999% data availability design of clustering technologies.

The z13 has a new processor chip that offers innovative technology designed to deliver maximum performance, and supports up to 10 TB of available memory for improved efficiency across workloads. Adding memory can help improve response time, minimize constraints, speed up batch processing, and reduce the processor cycles that are consumed for a specific workload. The z13 also offers a 2X improvement in system I/O bandwidth, which can be combined with new key I/O enhancements to help reduce transfer time and support longer distances for the global infrastructure.

A key design aspect of the z13 is building a stronger enterprise-grade platform for running Linux. The z13 delivers more throughput per Integrated Facility for Linux (IFL) core and takes advantage of the memory and I/O enhancements. New solutions and features provide high availability in case of system, application, or network failure.

Terminology: The remainder of the book uses the designation CPC to refer to the central processor complex.

z/OS V2.1 running on the z13 sets the groundwork for digital business by providing the foundation that you need to support demanding workloads, such as operational analytics and cloud, along with your traditional mission-critical applications. z/OS V2.1 continues to support the IBM System z Integrated Information Processor (zIIP), which can take advantage of the simultaneous multithreading (SMT) feature implemented in the IBM z Systems processor unit (PU). Applications running under z/OS V2.1 can take advantage of single-instruction multiple-data (SIMD) processing by using compilers that are designed to support the SIMD instructions. z/OS features many I/O-related enhancements, such as extending the reach of workload management into the SAN fabric. With enhancements to management and operations, z/OS V2.1 and z/OS Management Facility V2.1 can help system administrators and other personnel handle configuration tasks with ease. Recent Mobile Workload Pricing for z/OS can help reduce the cost of growth for mobile transactions that are processed by programs such as IBM CICS®, IBM IMS™, and IBM DB2® for z/OS.

The new 141-core design delivers massive scale-up across all workloads and enables cost-saving consolidation opportunities. IBM z/VM® 6.3 has been enhanced to use the SMT capability of the new processor chip, and also supports twice as many processors (up to 64), or as many as 64 threads, for Linux workloads. With support for sharing Open Systems Adapters (OSAs) across z/VM systems, z/VM 6.3 delivers enhanced availability and reduced cost of ownership in network environments.

Statement of direction¹: z/VM support for SIMD: In a future deliverable, IBM intends to deliver support to enable z/VM guests to use the Vector Facility for z/Architecture® (SIMD).

¹ All statements regarding IBM plans, directions, and intent are subject to change or withdrawal without notice. Any reliance on these statements of general direction is at the relying party’s sole risk and will not create liability or obligation for IBM.

The IBM z13 (z13) brings a new approach for enterprise-grade Linux, with offerings and capabilities for availability, virtualization with z/VM, and a focus on open standards and architecture, including new support for the kernel-based virtual machine (KVM) on the mainframe (see the following Statement of direction).

Statement of direction¹: KVM: In addition to continued investment in z/VM, IBM intends to provide a KVM hypervisor for z Systems that will host Linux guest virtual machines. The KVM offering will be software based and will coexist with z/VM virtualization environments, z/OS, IBM z/VSE®, and z/TPF. This modern, open source-based hypervisor will enable enterprises to capitalize on virtualization capabilities using common Linux administration skills, while enjoying the robustness of the z Systems’ scalability, performance, security, and resilience. The KVM offering will be optimized for z Systems architecture and will provide standard Linux and KVM interfaces for operational control of the environment. In addition, the KVM offering will integrate with standard OpenStack virtualization management tools, enabling enterprises to easily integrate Linux servers into their existing traditional infrastructure and cloud offerings.

IBM z13 continues to provide heterogeneous platform investment protection with the updated IBM z BladeCenter Extension (zBX) Model 004 and IBM z Unified Resource Manager (zManager). Enhancements to the zBX include the uncoupling of the zBX from the server and installing a Support Element (SE) into the zBX. zBX Model 002 and Model 003 can be upgraded to the zBX Model 004.

This chapter includes the following sections:

1.1 z13 highlights

This section reviews some of the most important features and functions of the z13.

1.1.1 Processor and memory

IBM continues its technology leadership with the z13. The z13 is built using the IBM modular multi-drawer design that supports 1 - 4 CPC drawers per CPC. Each CPC drawer contains eight single-chip modules (SCMs), which host the redesigned complementary metal-oxide semiconductor (CMOS) 14S0 processor units, storage control chips, and connectors for I/O. The superscalar processor has enhanced out-of-order (OOO) instruction execution, redesigned caches, and an expanded instruction set that includes a Transactional Execution facility. It also includes innovative SMT capability and provides a subset of 139 SIMD vector instructions for better performance.

Depending on the model, the z13 can support from 256 GB to a maximum of 10 TB of usable memory, with up to 2.5 TB of usable memory per CPC drawer. In addition, a fixed amount of 96 GB is reserved for the hardware system area (HSA) and is not part of customer-purchased memory. Memory is implemented as a redundant array of independent memory (RAIM) and uses extra physical memory as spare memory. The RAIM function uses 20% of the physical installed memory in each CPC drawer.
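The RAIM overhead described above is simple proportional arithmetic. The following Python sketch assumes, as the text states, that RAIM consumes 20% of the physical memory installed in a drawer; it deliberately ignores the fixed 96 GB HSA and any other allocation detail, and the function names are illustrative only.

```python
# Sketch: RAIM overhead arithmetic, assuming RAIM uses 20% of installed
# memory in each CPC drawer (as stated in the text). HSA is ignored here.
RAIM_PERCENT = 20  # share of physical memory consumed by RAIM

def usable_after_raim_gb(physical_gb: float) -> float:
    """Memory remaining after the RAIM share is set aside."""
    return physical_gb * (100 - RAIM_PERCENT) / 100

def physical_needed_gb(target_gb: float) -> float:
    """Installed memory needed so that target_gb remains after RAIM."""
    return target_gb * 100 / (100 - RAIM_PERCENT)

# Under this assumption, 2.5 TB (2500 GB) usable per drawer implies
# about 3.1 TB of physically installed memory in that drawer.
print(physical_needed_gb(2500))  # 3125.0
```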

1.1.2 Capacity and performance

The z13 provides increased processing and I/O capacity over its predecessor, the zEC12 system. This capacity is achieved by increasing the performance of the individual processor units, increasing the number of processor units (PUs) per system, redesigning the system cache, and increasing the amount of memory. The increased performance and total system capacity, with possible energy savings, allow consolidating diverse applications on a single platform, with significant financial savings. The introduction of new technologies and an expanded instruction set helps ensure that the z13 is a high-performance, reliable, and highly secure platform. The z13 is designed to maximize resource exploitation and utilization, and allows you to integrate and consolidate applications and data across the enterprise IT infrastructure.

The z13 has five model offerings of 1 - 141 configurable PUs. The first four models (N30, N63, N96, and NC9) have up to 39 PUs per CPC drawer, and the high-capacity model (the NE1) has four 42-PU CPC drawers. Model NE1 is estimated to provide over 40% more total system capacity than the zEC12 Model HA1, with the same amount of memory and power requirements. With up to 10 TB of main storage and SMT, the z13 processors provide considerable performance improvement. Uniprocessor performance has also increased significantly: a z13 Model 701 offers, on average, performance improvements of more than 10% over the zEC12 Model 701. However, the observed performance increase varies depending on the workload type.

The IFL and zIIP processor units on the z13 can run two simultaneous threads per clock cycle in a single processor, increasing the capacity of the IFL and zIIP processors up to 1.2 times over the zEC12. However, the observed performance increase varies depending on the workload type.

The z13 expands the subcapacity settings, offering three subcapacity levels for up to 30 processors that are characterized as central processors (CPs). This configuration gives a total of 231 distinct capacity settings in the system, and provides a range of over 1:446 in processing power (a 111,556 : 250 Processor Capacity Index (PCI) ratio). The z13 delivers scalability and granularity to meet the needs of medium-sized enterprises, while also satisfying the requirements of large enterprises that have demanding, mission-critical transaction and data processing requirements.

This comparison is based on the Large System Performance Reference (LSPR) mixed workload analysis. For a description of performance and workload variation on the z13, see Chapter 12, “Performance” on page 455.
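The capacity-setting count and PCI range quoted above can be reproduced with a few lines of Python. This sketch assumes one full-capacity setting for each CP count from 1 to 141; that breakdown is an assumption used to reconcile the numbers, not a statement of how IBM enumerates capacity identifiers.

```python
# Sketch: reconcile the 231 capacity settings and the 1:446 PCI range.
FULL_CAPACITY_SETTINGS = 141  # assumption: one setting per CP count, 1-141
SUBCAPACITY_LEVELS = 3        # subcapacity levels (from the text)
MAX_SUBCAPACITY_CPS = 30      # subcapacity offered for up to 30 CPs

def total_capacity_settings() -> int:
    """Full-capacity settings plus every subcapacity level for 1-30 CPs."""
    return FULL_CAPACITY_SETTINGS + SUBCAPACITY_LEVELS * MAX_SUBCAPACITY_CPS

def pci_range(max_pci: int = 111_556, min_pci: int = 250) -> float:
    """Ratio between the largest and smallest PCI values."""
    return max_pci / min_pci

print(total_capacity_settings())  # 231
print(f"1:{pci_range():.1f}")     # 1:446.2
```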

The z13 continues to offer all the specialty engines that are available on previous z Systems except for zAAPs. A zAAP-qualified workload can now run on a zIIP processor, which reduces the complexity of the z/Architecture.

Workload variability

Consult the LSPR when considering performance on the z13. Performance ratings across the individual LSPR workloads are likely to have a large spread. The performance of individual logical partitions (LPARs) varies more as the number of partitions and available PUs increases. For more information, see Chapter 12, “Performance” on page 455.

For detailed performance information, see the LSPR website:

The millions of service units (MSUs) ratings are available from the following website:

Capacity on demand (CoD)

CoD enhancements enable clients to have more flexibility in managing and administering their temporary capacity requirements. The z13 supports the same architectural approach for CoD offerings as the zEC12 (temporary or permanent). Within the z13, one or more flexible configuration definitions can be available to solve multiple temporary situations and multiple capacity configurations can be active simultaneously.

Up to 200 staged records can be created for many scenarios. Up to eight of these records can be installed on the server at any time. After the records are installed, the activation of the records can be done manually, or the z/OS Capacity Provisioning Manager can automatically start the activation when Workload Manager (WLM) policy thresholds are reached. Tokens are available that can be purchased for On/Off CoD either before or after workload execution (pre- or post-paid).
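The staging limits above can be modeled as two bounded sets. The following Python class is a hypothetical illustration of the 200-staged / 8-installed rule only; it is not an IBM interface, and it does not model activation, tokens, or the Capacity Provisioning Manager.

```python
from dataclasses import dataclass, field

MAX_STAGED = 200    # staged CoD records (from the text)
MAX_INSTALLED = 8   # records installed on the server at any time

@dataclass
class CodRecordStore:
    """Hypothetical model of staged vs. installed CoD records (not an IBM API)."""
    staged: set[str] = field(default_factory=set)
    installed: set[str] = field(default_factory=set)

    def stage(self, record_id: str) -> None:
        if record_id in self.staged:
            return  # already staged; nothing to do
        if len(self.staged) >= MAX_STAGED:
            raise ValueError("staged-record limit reached")
        self.staged.add(record_id)

    def install(self, record_id: str) -> None:
        if record_id not in self.staged:
            raise KeyError(f"{record_id} is not staged")
        if record_id in self.installed:
            return  # already installed
        if len(self.installed) >= MAX_INSTALLED:
            raise ValueError("installed-record limit reached")
        self.installed.add(record_id)
```

A ninth install attempt raises `ValueError` while the record stays staged, mirroring the distinction between records that are merely available and records that are installed.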

1.1.3 I/O subsystem and I/O features

The z13 supports both PCIe and InfiniBand I/O infrastructure. PCIe features are installed in PCIe I/O drawers. Up to five PCIe I/O drawers per z13 server are supported, providing space for up to 160 PCIe I/O features. When upgrading a zEC12 or a z196 to a z13, up to two I/O drawers are also supported as carry forward.

For a four CPC drawer system, there are up to 40 PCIe and 16 InfiniBand fanout connections for data communications between the CPC drawers and the I/O infrastructure. The multiple channel subsystem (CSS) architecture allows up to six CSSs, each with 256 channels.

For I/O constraint relief, four subchannel sets are available per CSS, allowing access to a larger number of logical volumes. This fourth subchannel set allows extending the amount of addressable external storage, improving device connectivity for parallel access volumes (PAVs), Peer-to-Peer Remote Copy (PPRC) secondary devices, and IBM FlashCopy® devices. In addition to performing an IPL from subchannel set 0, the z13 allows you to also perform an IPL from subchannel set 1 (SS1), subchannel set 2 (SS2), or subchannel set 3 (SS3).
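The I/O maximums above imply a few derived per-unit figures. This Python sketch simply multiplies or divides the stated limits; the per-drawer slot count assumes the 160 features are spread evenly across five drawers.

```python
# Sketch: derived I/O figures from the stated maximums.
PCIE_IO_DRAWERS = 5
PCIE_IO_FEATURES = 160
CSS_COUNT = 6
CHANNELS_PER_CSS = 256
SUBCHANNEL_SETS_PER_CSS = 4
IPL_CAPABLE_SETS = ("SS0", "SS1", "SS2", "SS3")  # IPL is possible from any set

# Assumes an even spread of features across drawers.
slots_per_drawer = PCIE_IO_FEATURES // PCIE_IO_DRAWERS
total_channels = CSS_COUNT * CHANNELS_PER_CSS
total_subchannel_sets = CSS_COUNT * SUBCHANNEL_SETS_PER_CSS

print(slots_per_drawer, total_channels, total_subchannel_sets)  # 32 1536 24
```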

The system I/O buses take advantage of the PCIe technology and the InfiniBand technology, which are also used in coupling links.

z13 connectivity supports the following I/O or special purpose features:

•Storage connectivity:

– Fibre Channel connection (FICON):

• FICON Express16S 10 KM long wavelength (LX) and short wavelength (SX)

• FICON Express8S 10 KM long wavelength (LX) and short wavelength (SX)

• FICON Express8 10 KM LX and SX (carry forward only)

•Networking connectivity:

– Open Systems Adapter (OSA):

• OSA-Express5S 10 GbE LR and SR

• OSA-Express5S GbE LX and SX

• OSA-Express5S 1000BASE-T Ethernet

• OSA-Express4S 10 GbE LR and SR

• OSA-Express4S GbE LX and SX

• OSA-Express4S 1000BASE-T Ethernet

– IBM HiperSockets™

– 10 GbE Remote Direct Memory Access (RDMA) over Converged Ethernet (RoCE)

•Coupling and Server Time Protocol (STP) connectivity:

– Integrated communication adapter (ICA SR)

– Parallel Sysplex InfiniBand coupling links (IFB)

– Internal Coupling links (IC)

In addition, z13 supports the following special function features, which are installed on the PCIe I/O drawers:

•Crypto Express5S

•Flash Express

•zEnterprise Data Compression (zEDC) Express

Flash Express

Flash Express is an innovative optional feature that was first introduced with the zEC12. It is intended to provide performance improvements and better availability for critical business workloads that cannot afford any impact to service levels. Flash Express is easy to configure, requires no special skills, and provides rapid time to value.

Flash Express implements storage-class memory (SCM) through an internal NAND Flash solid-state drive (SSD), in a PCIe card form factor. The Flash Express feature is designed to allow each LPAR to be configured with its own SCM address space.

Flash Express is used by these products:

•z/OS V1R13 (or later), for handling z/OS paging activity and supervisor call (SVC) memory dumps.

•Coupling Facility Control Code (CFCC) Level 20, to use Flash Express as an overflow device for shared queue data. This provides emergency capacity to handle IBM WebSphere® MQ shared queue buildups during abnormal situations, such as when “putters” are putting to the shared queue, but “getters” are transiently not retrieving data from the shared queue.

•Linux for z Systems (Red Hat Enterprise Linux (RHEL) and SUSE Linux Enterprise Server (SLES)), for use as temporary storage.

For more information, see Appendix C, “Flash Express” on page 489.

10GbE RoCE Express

The 10 Gigabit Ethernet (10GbE) RoCE Express is an optional feature that uses Remote Direct Memory Access over Converged Ethernet, and is designed to provide fast memory-to-memory communications between two z Systems CPCs. It is transparent to applications.

Use of the 10GbE RoCE Express feature helps reduce CPU consumption for applications using the TCP/IP stack (for example, IBM WebSphere Application Server accessing a DB2 database), and might also help to reduce network latency with memory-to-memory transfers using Shared Memory Communications over Remote Direct Memory Access (SMC-R) in z/OS V2R1.

The 10GbE RoCE Express feature on the z13 can now be shared among up to 31 LPARs running z/OS, and both ports on the feature can be used. z/OS V2.1 with PTFs supports the new sharing capability of the RDMA over Converged Ethernet (RoCE Express) features on z13 processors. Also, the z/OS Communications Server has been enhanced to support automatic selection between TCP/IP and RoCE transport layer protocols based on traffic characteristics. The 10GbE RoCE Express feature is supported on z13, zEC12, and zBC12, and is installed in the PCIe I/O drawer. A maximum of 16 features can be installed. On zEC12 and zBC12, only one port can be used and the feature must be dedicated to a logical partition.
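The platform differences above can be captured as a small rule check. The following Python function is a hypothetical planning aid that encodes only the limits stated in the text (sharing, port usage, and feature count); it is not an IBM tool and does not model software prerequisites such as the z/OS PTFs.

```python
# Hypothetical rule check (not an IBM tool) for the RoCE Express limits above.
MAX_ROCE_FEATURES = 16

def roce_config_ok(machine: str, lpars_per_feature: int,
                   ports_used: int, features: int) -> bool:
    """Return True if the configuration respects the stated limits."""
    if features > MAX_ROCE_FEATURES:
        return False
    if machine == "z13":
        # Shared by up to 31 z/OS LPARs; both ports usable.
        return 1 <= lpars_per_feature <= 31 and 1 <= ports_used <= 2
    if machine in ("zEC12", "zBC12"):
        # Dedicated to one LPAR; only one port usable.
        return lpars_per_feature == 1 and ports_used == 1
    return False  # other machines are outside the scope of the text
```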

zEDC Express

The growth of data that needs to be captured, transferred, and stored for long periods of time is unrelenting. Handling these large amounts of data drives ever-increasing requirements for bandwidth and storage space. Software-implemented compression algorithms are costly in terms of processor resources, and storage costs are not negligible.

zEDC Express, an optional feature that is available to z13, zEC12, and zBC12, addresses bandwidth and storage space requirements by providing hardware-based acceleration for data compression and decompression. zEDC provides data compression with lower CPU consumption than previously existing compression technology on z Systems.

For more information, see Appendix F, “IBM zEnterprise Data Compression Express” on page 523.
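zEDC Express is designed around the industry-standard DEFLATE format (RFC 1951). As an illustration of the kind of work it offloads, the following Python sketch performs DEFLATE compression in software with the standard `zlib` module, which runs entirely on the general-purpose processor; it does not use or represent the zEDC hardware interface.

```python
import zlib

# Repetitive, record-like data (illustrative) compresses well with DEFLATE.
payload = b"ACCT=000123;AMT=100.00;CUR=USD\n" * 1000

# Software DEFLATE with a zlib wrapper: every byte is processed by the CPU.
compressed = zlib.compress(payload, level=6)
restored = zlib.decompress(compressed)

assert restored == payload  # lossless round trip
print(len(payload), len(compressed))
```

Because the same format is produced either way, compression that is done in software on one system can, in principle, be read back wherever a DEFLATE decoder is available.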

1.1.4 Virtualization

The IBM Processor Resource/Systems Manager™ (PR/SM™) is Licensed Internal Code (LIC) that manages and virtualizes all the installed and enabled system resources as a single large SMP system. This virtualization enables full sharing of the installed resources with high security and efficiency. It does so by configuring up to 85 LPARs, each of which has logical processors, memory, and I/O resources that are assigned from the installed CPC drawers and features. For more information about PR/SM functions, see 3.7, “Logical partitioning” on page 116.

LPAR configurations can be dynamically adjusted to optimize the virtual servers’ workloads. On z13, PR/SM supports an option to limit the amount of physical processor capacity that is consumed by an individual LPAR when a PU defined as a CP or an IFL is shared across a set of LPARs. This feature is designed to provide and enforce a physical capacity limit as an absolute (versus a relative) limit. Physical capacity limit enforcement is not affected by changes to the logical or physical configuration of the system. This physical capacity limit can be specified in units of CPs or IFLs.
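The difference between a relative (weight-based) share and an absolute capacity limit can be illustrated with a simplified model. In this Python sketch, which is an assumption-laden toy rather than the PR/SM algorithm, the weight-based entitlement moves whenever the shared pool changes, while the absolute cap, expressed in CP or IFL units, stays fixed.

```python
from typing import Optional

def weight_share(pool_cps: float, weight: int, total_weight: int) -> float:
    """Relative entitlement: changes when the pool or other weights change."""
    return pool_cps * weight / total_weight

def consumed(demand_cps: float, pool_cps: float,
             absolute_cap_cps: Optional[float]) -> float:
    """Toy model of physical capacity consumed, bounded by the shared pool
    and, if set, by an absolute cap in CP/IFL units."""
    limit = pool_cps if absolute_cap_cps is None else min(pool_cps, absolute_cap_cps)
    return min(demand_cps, limit)

# Growing the pool from 10 to 12 CPs changes the weight-based share
# (2.0 -> 2.4 CPs) but leaves the absolute cap of 2.5 CPs unchanged.
print(weight_share(10, 200, 1000), weight_share(12, 200, 1000))
print(consumed(5, 10, 2.5), consumed(5, 12, 2.5))
```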

The z13 provides improvements to the PR/SM HiperDispatch function. HiperDispatch provides work alignment to logical processors, and alignment of logical processors to physical processors. This alignment optimizes cache utilization, minimizes inter-CPC drawer communication, and optimizes z/OS work dispatching, with the result of increasing throughput. For more information, see “HiperDispatch” on page 117.

The z13 supports the definition of up to 32 IBM HiperSockets. HiperSockets provide for memory-to-memory communication across LPARs without the need for any I/O adapters, and have virtual LAN (VLAN) capability. HiperSockets have been extended to bridge to the ensemble internode data network (Intraensemble Data Network, or IEDN).

Increased flexibility with z/VM mode logical partition

The z13 provides for the definition of a z/VM mode LPAR containing a mix of processor types. These types include CPs and specialty processors, such as IFLs, zIIPs, and ICFs.

z/VM V6R2 and later support this capability, which increases flexibility and simplifies system management. In a single LPAR, z/VM can perform the following tasks:

•Manage guests that use Linux on z Systems on IFLs or CPs, and manage IBM z/VSE, z/TPF, and z/OS guests on CPs.

•Run designated z/OS workloads, such as parts of IBM DB2 Distributed Relational Database Architecture™ (DRDA®) processing and XML, on zIIPs.

IBM zAware mode logical partition

The IBM zAware mode logical partition was introduced with the zEC12. Either CPs or IFLs can be configured to the partition. This special partition is defined for the exclusive use of the IBM z Systems Advanced Workload Analysis Reporter (IBM zAware) offering. IBM zAware requires a special license.

IBM z Advanced Workload Analysis Reporter (zAware)

IBM zAware is a feature that was introduced with the zEC12 that embodies the next generation of system monitoring. IBM zAware is designed to offer a near real-time, continuous-learning diagnostic and monitoring capability. This function helps pinpoint and resolve potential problems quickly enough to minimize their effects on your business.

The ability to tolerate service disruptions is diminishing. In a continuously available environment, any disruption can have grave consequences. This negative effect is especially true when the disruption lasts days or even hours. But increased system complexity makes it more probable that errors occur, and those errors are also increasingly complex. The early symptoms of some incidents go undetected for long periods of time and can become large problems. Systems often experience “soft failures” (sick but not dead), which are much more difficult to detect.

IBM zAware is designed to help in those circumstances. For more information, see Appendix A, “IBM z Advanced Workload Analysis Reporter (IBM zAware)” on page 467.

Coupling Facility mode logical partition

Parallel Sysplex continues to be the clustering technology that is used with z13. To use this technology, a special LIC is used. This code is called coupling facility control code (CFCC). To activate the CFCC, a special logical partition must be defined. Only PUs characterized as CPs or Internal Coupling Facilities (ICFs) can be used for CF partitions. For a production CF workload, use dedicated ICFs.

1.1.5 Reliability, availability, and serviceability design

System reliability, availability, and serviceability (RAS) is an area of continuous IBM focus. The RAS objective is to reduce, or eliminate if possible, all sources of planned and unplanned outages, while providing adequate service information in case something happens. Adequate service information is required for determining the cause of an issue without the need to reproduce the context of an event. With a properly configured z13, further reduction of outages can be attained through improved nondisruptive replace, repair, and upgrade functions for memory, drawers, and I/O adapters. In addition, z13 has extended nondisruptive capability to download and install LIC updates.

Enhancements include removing pre-planning requirements with the fixed 96 GB HSA. Client-purchased memory is not used for traditional I/O configurations, and it is no longer required to reserve capacity to avoid disruption when adding new features. With a fixed amount of 96 GB for the HSA, maximums are configured and an IPL is performed so that later insertion can be dynamic, which eliminates the need for a power-on reset of the server.

IBM z13 RAS features provide many high-availability and nondisruptive operational capabilities that differentiate the z Systems in the marketplace.

The ability to cluster multiple systems in a Parallel Sysplex takes the commercial strengths of the z/OS platform to higher levels of system management, scalable growth, and continuous availability.

1.2 z13 technical overview

This section briefly reviews the major elements of the z13.

1.2.1 Models

The z13 has a machine type of 2964. Five models are offered: N30, N63, N96, NC9, and NE1. The model name indicates the maximum number of processor units (PUs) available for purchase (“C9” stands for 129 and “E1” for 141). A PU is the generic term for the IBM z/Architecture processor unit (processor core) on the SCM.
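The model-name convention above can be decoded mechanically. This Python sketch assumes that the character after "N" is a tens digit in which letters continue past 9 (A=10, B=11, C=12, and so on), which matches both examples in the text ("C9" = 129, "E1" = 141); the function name is illustrative.

```python
def max_pus(model: str) -> int:
    """Decode a z13 model name (for example 'NC9') into its maximum
    purchasable PUs, assuming letters extend the tens digit past 9."""
    if len(model) != 3 or model[0] != "N":
        raise ValueError(f"unexpected model name: {model}")
    tens = int(model[1], 36)  # '0'-'9' -> 0-9, 'A'-'Z' -> 10-35
    ones = int(model[2])
    return tens * 10 + ones

print([max_pus(m) for m in ("N30", "N63", "N96", "NC9", "NE1")])
# [30, 63, 96, 129, 141]
```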

On the z13, some PUs are part of the system base, that is, they are not part of the PUs that can be purchased by clients. They are characterized by default as follows:

•System assist processors (SAPs) that are used by the channel subsystem. The number of predefined SAPs depends on the z13 model.

•One integrated firmware processor (IFP). The IFP is used in support of select features, such as zEDC and 10GbE RoCE.

•Two spare PUs that can transparently assume any characterization in a permanent failure of another PU.

The PUs that clients can purchase can assume any of the following characterizations:

•Central processor (CP) for general-purpose use.

•Integrated Facility for Linux (IFL) for the use of Linux on z Systems.

•z Systems Integrated Information Processor (zIIP). One CP must be installed with or before the installation of any zIIPs.

zIIPs: At least one CP must be purchased with, or before, a zIIP can be purchased. Clients can purchase up to two zIIPs for each purchased CP (assigned or unassigned) on the system (2:1). However, for migrations from a zEC12 with zAAPs, the ratio (zIIP:CP) can go to 4:1.

•Internal Coupling Facility (ICF) is used by the Coupling Facility Control Code (CFCC).

•Additional system assist processor (SAP) is used by the channel subsystem.

A PU that is not characterized cannot be used, but is available as a spare. The following rules apply:

•In the five-model structure, at least one CP, ICF, or IFL must be purchased and activated for any model.

•PUs can be purchased in single PU increments and are orderable by feature code.

•The total number of PUs purchased cannot exceed the total number that are available for that model.

•The number of installed zIIPs cannot exceed twice the number of installed CPs.
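The purchase rules above can be expressed as a validation function. This Python sketch is hypothetical: it checks only the rules listed in this section, uses a default zIIP:CP ratio of 2 (the text notes the ratio can reach 4:1 for migrations from a zEC12 with zAAPs), and ignores the distinction between assigned and unassigned CPs.

```python
def validate_pu_order(model_max: int, cps: int, ifls: int, icfs: int,
                      ziips: int, extra_saps: int = 0,
                      ziip_ratio: int = 2) -> list[str]:
    """Return rule violations for a hypothetical PU order (empty list = OK)."""
    errors = []
    if cps + ifls + icfs < 1:
        errors.append("at least one CP, ICF, or IFL is required")
    if cps + ifls + icfs + ziips + extra_saps > model_max:
        errors.append("order exceeds the PUs available for this model")
    if ziips > ziip_ratio * cps:
        errors.append(f"zIIPs exceed {ziip_ratio}x the number of CPs")
    return errors

print(validate_pu_order(model_max=30, cps=4, ifls=0, icfs=0, ziips=9))
```

The zIIP ratio check also covers the "at least one CP before any zIIP" rule, because any zIIP count above zero fails when no CPs are ordered.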

The multi-CPC drawer system design provides the capability to concurrently increase the capacity of the system in these ways:

•Add capacity by concurrently activating more CPs, IFLs, ICFs, or zIIPs on an existing CPC drawer.

•Add a CPC drawer concurrently and activate more CPs, IFLs, ICFs, or zIIPs.

•Add a CPC drawer to provide more memory, or one or more adapters to support a greater number of I/O features.

1.2.2 Model upgrade paths

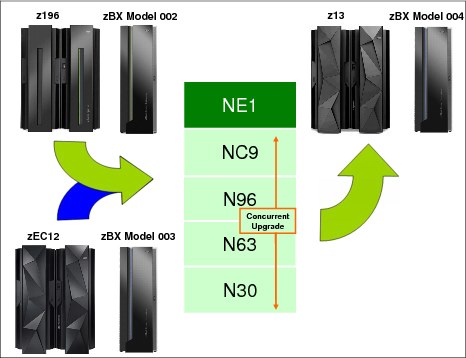

Any z13 can be upgraded to another z13 hardware model. All upgrades from Models N30, N63, and N96 to N63, N96, and NC9 are concurrent. Upgrades to NE1 are disruptive (that is, the system is unavailable during the upgrade). Any zEC12 or z196 model can be upgraded to any z13 model, which is also disruptive. Figure 1-1 shows the upgrade paths.

Consideration: An air-cooled z13 cannot be converted to a water-cooled z13, and vice versa.

Figure 1-1 z13 upgrades
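The upgrade rules in this section can be summarized as a classification function. This Python sketch encodes only what the text states (concurrent upgrades among N30 through NC9, disruptive upgrades to NE1 and from zEC12 or z196, no downgrades or other source systems); the return strings are illustrative labels.

```python
Z13_MODELS = ["N30", "N63", "N96", "NC9", "NE1"]  # ascending capacity

def upgrade_type(source: str, target: str) -> str:
    """Classify an upgrade into a z13 model using only the stated rules."""
    if target not in Z13_MODELS:
        return "unsupported"
    if source in ("zEC12", "z196"):
        return "disruptive"
    if source not in Z13_MODELS:
        return "unsupported"  # for example zBC12, z114, z10 or earlier
    if source == target:
        return "no model change"
    if Z13_MODELS.index(target) < Z13_MODELS.index(source):
        return "unsupported"  # downgrades are not supported
    return "disruptive" if target == "NE1" else "concurrent"

print(upgrade_type("N30", "NC9"), upgrade_type("NC9", "NE1"))
# concurrent disruptive
```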

z196 upgrade to z13

When a z196 is upgraded to a z13, the z196 must be at least at Driver level 93. If a zBX is involved, Driver 93 must be at Bundle 27 or higher. When upgrading a z196 that controls a zBX Model 002 to a z13, the zBX is upgraded to a Model 004 and becomes a stand-alone ensemble node. The concept of the zBX being “owned” by a CPC is removed when the zBX is upgraded to a Model 004. Upgrading from z196 to z13 is disruptive.

zEC12 upgrade to z13

When a zEC12 is upgraded to a z13, the zEC12 must be at least at Driver level 15. If a zBX is involved, Driver 15 must be at Bundle 27 or higher. When upgrading a zEC12 that controls a zBX Model 003 to a z13, the zBX is upgraded to a Model 004 and becomes a stand-alone ensemble node. The concept of the zBX being “owned” by a CPC is removed when the zBX is upgraded to a Model 004. Upgrading from zEC12 to z13 is disruptive.
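The driver and bundle prerequisites for both predecessor systems can be checked in one place. This Python sketch treats driver and bundle levels as plain integers, which is a simplifying assumption; it checks only the minimum levels quoted above.

```python
MIN_DRIVER = {"z196": 93, "zEC12": 15}  # minimum driver levels (from the text)
MIN_BUNDLE_WITH_ZBX = 27                # required when a zBX is involved

def meets_upgrade_prereqs(family: str, driver: int, bundle: int,
                          has_zbx: bool) -> bool:
    """Check the minimum driver/bundle levels quoted for a z13 upgrade."""
    if family not in MIN_DRIVER:
        return False  # only z196 and zEC12 upgrades are described
    if driver < MIN_DRIVER[family]:
        return False
    if has_zbx and bundle < MIN_BUNDLE_WITH_ZBX:
        return False
    return True
```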

The following processes are not supported:

•Downgrades within the z13 models

•Upgrade from a zBC12 or z114 to z13

•Upgrades from IBM System z10® or earlier systems

•Attachment of a zBX Model 002 or Model 003 to a z13

1.2.3 Frames

The z13 has two frames that are bolted together and are known as the A frame and the Z frame. The frames contain the following CPC components:

•Up to four CPC drawers in Frame A

•PCIe I/O drawers and I/O drawers, which hold I/O features and special purpose features

•Power supplies

•An optional Internal Battery Feature (IBF)

•Cooling units for either air or water cooling

•Two new System Control Hubs (SCHs) to interconnect the CPC components through Ethernet

•Two new 1U rack-mounted Support Elements (mounted in A frame) with their keyboards, pointing devices, and displays mounted on a tray in the Z frame.

1.2.4 CPC drawer

Up to four CPC drawers are installed in frame A of the z13. Each CPC drawer houses the SCMs, memory, and I/O interconnects.

SCM technology

The z13 is built on the superscalar microprocessor architecture of its predecessor, and provides several enhancements over the zEC12. Each CPC drawer is physically divided into two nodes. Each node has four SCMs: three PU SCMs and one storage control (SC) SCM. Therefore, the CPC drawer has six PU SCMs and two SC SCMs. The PU SCM has eight cores, with six, seven, or eight active cores, which can be characterized as CPs, IFLs, ICFs, zIIPs, SAPs, or IFPs. Two CPC drawer sizes are offered: 39 and 42 cores.

The SCM provides a significant increase in system scalability and an additional opportunity for server consolidation. All CPC drawers are interconnected through the L4 cache by high-speed, cable-based communication links in a full star topology. This configuration allows the z13 to be operated and controlled by the PR/SM facility as a memory-coherent and cache-coherent SMP system.

The PU configuration includes two designated spare PUs per CPC and a variable number of SAPs. The SAPs scale with the number of CPC drawers that are installed in the server. For example, there are six standard SAPs with one CPC drawer installed, and up to 24 when four CPC drawers are installed. In addition, one PU is used as an IFP and is not available for client use. The remaining PUs can be characterized as CPs, IFL processors, zIIPs, ICF processors, or extra SAPs.
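The base PU allocation explains the model names. This Python sketch assumes six standard SAPs per installed CPC drawer (consistent with 6 for one drawer and 24 for four), two spares, and one IFP, and it assumes the drawer layout per model shown in `MODEL_LAYOUT`. Under those assumptions, the remaining client-configurable PUs match each model's maximum.

```python
SPARES = 2            # designated spare PUs per CPC
IFP = 1               # integrated firmware processor
SAPS_PER_DRAWER = 6   # assumption: 6 with one drawer, 24 with four

# Assumed layout per model: (CPC drawers, PUs per drawer)
MODEL_LAYOUT = {"N30": (1, 39), "N63": (2, 39), "N96": (3, 39),
                "NC9": (4, 39), "NE1": (4, 42)}

def client_pus(model: str) -> int:
    """PUs left for client characterization after SAPs, spares, and the IFP."""
    drawers, pus_per_drawer = MODEL_LAYOUT[model]
    physical = drawers * pus_per_drawer
    return physical - drawers * SAPS_PER_DRAWER - SPARES - IFP

print({m: client_pus(m) for m in MODEL_LAYOUT})
# {'N30': 30, 'N63': 63, 'N96': 96, 'NC9': 129, 'NE1': 141}
```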

The z13 offers a water-cooling option for increased system and data center energy efficiency. The PU SCMs are cooled by a cold plate that is connected to the internal water cooling loop, and the SC SCMs are air-cooled. In an air-cooled system, the radiator units (RUs) exchange the heat from the internal water loop with air. The water cooling units (WCUs) are fully redundant in an N+1 arrangement. The RU has improved availability with N+2 pumps and blowers.

Processor features

The processor chip has an eight-core design, with either six, seven, or eight active cores, and operates at 5.0 GHz. Depending on the CPC drawer version (39 PU or 42 PU), 39 - 168 PUs are available on 1 - 4 CPC drawers.

Each core on the PU chip includes an enhanced dedicated coprocessor for data compression and cryptographic functions, which are known as the Central Processor Assist for Cryptographic Function (CPACF). The cryptographic performance of CPACF has improved up to 2x (100%) over the zEC12.

Having standard clear key cryptographic coprocessors that are integrated with the processor provides high-speed cryptography for protecting data.

Hardware data compression can play a significant role in improving performance and saving costs compared with performing compression in software. The zEDC Express feature offers greater performance and savings than the coprocessor. Their functions are not interchangeable.

The micro-architecture of the core has been altered radically to increase parallelism and improve pipeline efficiency. The core has a new branch prediction and instruction fetch front end to support simultaneous multithreading in a single core and to improve branch prediction throughput, a wider instruction decode (six instructions per cycle), and 10 arithmetic logical execution units that offer double the instruction bandwidth of the zEC12.

Each core has two hardware decimal floating point units that are designed according to a standardized, open algorithm. Much of today's commercial computing uses decimal floating point arithmetic, so the two on-core hardware decimal floating point units meet the requirements of business and user applications, providing greater floating point execution throughput with improved performance and precision.
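To show why decimal arithmetic matters for commercial work, the following sketch uses Python's standard `decimal` module, a software implementation of decimal floating point, to compare a binary and a decimal sum and to round a currency amount. It only illustrates the kind of arithmetic that the on-core decimal floating point units accelerate; it makes no claim about whether a given Python build uses those hardware instructions. The function name `apply_rate` is hypothetical.

```python
from decimal import Decimal, ROUND_HALF_EVEN

# Binary floating point cannot represent 0.10 or 0.20 exactly, so the sum drifts.
binary_total = 0.10 + 0.20
# Decimal floating point represents both values exactly.
decimal_total = Decimal("0.10") + Decimal("0.20")


def apply_rate(amount: str, rate: str) -> Decimal:
    """Multiply an amount by a rate and round to cents with round-half-even."""
    product = Decimal(amount) * Decimal(rate)
    return product.quantize(Decimal("0.01"), rounding=ROUND_HALF_EVEN)


print(binary_total == 0.30)               # False
print(decimal_total == Decimal("0.30"))   # True
print(apply_rate("19.99", "0.075"))       # 1.50
```

Amounts are passed as strings so that they enter the decimal domain exactly, without first being approximated in binary.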

In the unlikely case of a permanent core failure, each core can be individually replaced by one of the available spares. Core sparing is transparent to the operating system and applications.

Simultaneous multithreading

The micro-architecture of the core of the z13 allows simultaneous execution of two threads (SMT) in the same zIIP or IFL core, dynamically sharing processor resources such as execution units and caches. This facility allows a more efficient utilization of the core and increased capacity because while one of the threads is waiting for a storage access (cache miss), the other thread that is running simultaneously in the core can use the shared resources rather than remain idle.
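The capacity argument can be made concrete with an idealized model, which is an assumption for illustration and not an IBM performance figure: if each thread independently stalls in a fraction p of cycles, a single thread leaves the core idle for a fraction p of the time, whereas with two threads the core is idle only when both stall, a fraction p². Real SMT gains are smaller because the two threads compete for the same execution units and caches.

```python
def core_busy_fraction(stall_prob: float, threads: int) -> float:
    """Idealized fraction of cycles in which at least one thread can use the core.

    Assumes each thread stalls (for example, on a cache miss) independently with
    probability stall_prob in any cycle, and ignores contention for shared resources.
    """
    if not 0.0 <= stall_prob <= 1.0:
        raise ValueError("stall_prob must be between 0 and 1")
    if threads < 1:
        raise ValueError("threads must be at least 1")
    # The core is idle only when every thread is stalled at the same time.
    return 1.0 - stall_prob ** threads


for p in (0.2, 0.5):
    single = core_busy_fraction(p, 1)
    smt2 = core_busy_fraction(p, 2)
    print(f"stall={p}: 1 thread busy {single:.2f}, 2 threads busy {smt2:.2f}")
```

With a 50% stall rate, the model's busy fraction rises from 0.50 to 0.75, which should be read as an upper bound on the benefit rather than an expected result.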

Single instruction multiple data instruction set

The z13 instruction set architecture includes a subset of 139 new instructions for single instruction multiple data (SIMD) execution, which were added to improve the efficiency of complex mathematical models and vector processing. These new instructions allow a larger number of operands to be processed with a single instruction. The SIMD instructions use the superscalar core to process operands in parallel, which enables more processor throughput.
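The following sketch models the SIMD idea in plain Python by treating one 128-bit integer, the width of a z13 vector register, as 16 byte-sized lanes and adding all 16 lanes with a few whole-word operations (a technique often called SIMD within a register). It is a conceptual model only; it does not use or name any z13 vector instruction, and the helper names are hypothetical.

```python
LANES = 16  # a 128-bit register viewed as 16 one-byte lanes
HIGH = int.from_bytes(b"\x80" * LANES, "big")  # top bit of every lane
LOW = int.from_bytes(b"\x7f" * LANES, "big")   # low 7 bits of every lane


def pack(values):
    """Pack 16 byte values (0-255) into one 128-bit integer."""
    if len(values) != LANES:
        raise ValueError("expected 16 lane values")
    return int.from_bytes(bytes(values), "big")


def unpack(word):
    """Split a 128-bit integer back into 16 byte values."""
    return list(word.to_bytes(LANES, "big"))


def add_lanes(a, b):
    """Add 16 byte lanes modulo 256 at once, with no carry between lanes."""
    # Adding only the low 7 bits of each lane can carry into bit 7 of that
    # lane but never into the neighbouring lane.
    partial = (a & LOW) + (b & LOW)
    # XOR in the top bits: bit 7 gets its sum bit and the lane's carry-out is dropped.
    return partial ^ ((a ^ b) & HIGH)


x = [250, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 255]
y = [10] * LANES
print(unpack(add_lanes(pack(x), pack(y))))
```

The first lane wraps from 250 + 10 to 4 without disturbing the second lane, which is the behavior of a lane-wise vector add.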

Transactional Execution facility

The z13, like its predecessor zEC12, has a set of instructions that allows defining groups of instructions that are run atomically, that is, either all the results are committed or none are. The facility provides for faster and more scalable multi-threaded execution, and is known as hardware transactional memory.
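On z Systems, a transaction is bracketed by the TBEGIN and TEND instructions. The following Python sketch models only the all-or-nothing part of the semantics: writes are buffered and either all become visible at commit or all are discarded on abort. It is a hypothetical software model; it does not detect conflicting accesses from other processors, which the hardware facility does and which is what makes it useful for multi-threaded code.

```python
class Transaction:
    """Toy all-or-nothing model: writes are buffered until commit or abort."""

    def __init__(self, memory):
        self.memory = memory   # shared state, visible to everyone
        self.pending = {}      # this transaction's uncommitted writes

    def read(self, key):
        # A transaction sees its own buffered writes first.
        if key in self.pending:
            return self.pending[key]
        return self.memory[key]

    def write(self, key, value):
        self.pending[key] = value

    def commit(self):
        # All buffered writes become visible together.
        self.memory.update(self.pending)
        self.pending.clear()

    def abort(self):
        # None of the buffered writes become visible.
        self.pending.clear()


def transfer(memory, src, dst, amount):
    """Hypothetical example: move amount from src to dst, or change nothing."""
    tx = Transaction(memory)
    tx.write(src, tx.read(src) - amount)
    tx.write(dst, tx.read(dst) + amount)
    if tx.read(src) < 0:
        tx.abort()
        return False
    tx.commit()
    return True


accounts = {"a": 100, "b": 0}
print(transfer(accounts, "a", "b", 30), accounts)
print(transfer(accounts, "a", "b", 500), accounts)
```

The second transfer would leave account "a" negative, so it aborts and neither account changes.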

Out-of-order execution

As with its predecessor zEC12, the z13 has an enhanced superscalar microprocessor with out-of-order (OOO) execution to achieve faster throughput. With OOO, instructions might not run in the original program order, although results are presented in the original order. For example, OOO allows a few instructions to complete while another instruction is waiting. Up to six instructions can be decoded per system cycle, and up to 10 instructions can be in execution.
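The benefit of OOO execution can be illustrated with a toy timing model, which is a simplification and not a model of the z13 pipeline. In the in-order case, each instruction starts no earlier than one cycle after its predecessor and must also wait for its operands. In the out-of-order case, each instruction starts as soon as its operands are ready, assuming unlimited issue width and register renaming. In-order presentation of results is not modeled. The `Instr` type and the sample program are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Instr:
    dest: str        # register written
    srcs: tuple      # registers read
    latency: int     # cycles until the result is available


def finish_cycle(program, out_of_order):
    """Cycle in which the last result becomes available in the toy model."""
    ready = {}       # register -> cycle its newest value is available
    last_start = -1  # start cycle of the previous instruction (in-order case)
    end = 0
    for ins in program:
        operands_ready = max((ready.get(r, 0) for r in ins.srcs), default=0)
        if out_of_order:
            start = operands_ready                       # wait only for data
        else:
            start = max(operands_ready, last_start + 1)  # also wait for predecessor
        last_start = start
        ready[ins.dest] = start + ins.latency
        end = max(end, ready[ins.dest])
    return end


program = [
    Instr("r1", (), 4),        # load that takes 4 cycles
    Instr("r2", ("r1",), 1),   # add that needs the load result
    Instr("r3", (), 1),        # four independent instructions
    Instr("r4", (), 1),
    Instr("r5", (), 1),
    Instr("r6", (), 1),
]
print(finish_cycle(program, out_of_order=False))  # 9
print(finish_cycle(program, out_of_order=True))   # 5
```

In the in-order model, the four independent instructions queue behind the add that is waiting for the load; in the out-of-order model they run while the load is outstanding, so the sequence finishes four cycles earlier.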

Concurrent processor unit conversions

The z13 supports concurrent conversion between various PU types, which provides the flexibility to meet the requirements of changing business environments. CPs, IFLs, zIIPs, ICFs, and optional SAPs can be converted to CPs, IFLs, zIIPs, ICFs, and optional SAPs.

Memory subsystem and topology

z13 uses a new buffered dual inline memory module (DIMM) technology. For this purpose, IBM has developed a chip that controls communication with the PU, and drives address and control from DIMM to DIMM. The DIMM capacities are 16 GB, 32 GB, 64 GB, and 128 GB.

Memory topology provides the following benefits:

•A redundant array of independent memory (RAIM) for protection at the dynamic random access memory (DRAM), DIMM, and memory channel levels

•A maximum of 10 TB of user configurable memory with a maximum of 12.5 TB of physical memory (with a maximum of 10 TB configurable to a single LPAR)

•One memory port for each PU chip, and up to five independent memory ports per CPC drawer

•Increased bandwidth between memory and I/O

•Asymmetrical memory size and DRAM technology across CPC drawers

•Large memory pages (1 MB and 2 GB)

•Key storage

•Storage protection key array that is kept in physical memory

•Storage protection (memory) key that is also kept in every L2 and L3 cache directory entry

•A larger (96 GB) fixed-size hardware system area (HSA) that eliminates having to plan for HSA

PCIe fanout hot-plug

The PCIe fanout provides the path for data between memory and the PCIe features through the PCIe 16 GBps bus and cables. The PCIe fanout is hot-pluggable. In case of an outage, a redundant I/O interconnect allows a PCIe fanout to be concurrently repaired without loss of access to its associated I/O domains. Up to 10 PCIe fanouts are available per CPC drawer. The PCIe fanout can also be used for the Integrated Coupling Adapter (ICA SR). If redundancy in coupling link connectivity is ensured, the PCIe fanout can be concurrently repaired.

Host channel adapter (HCA) fanout hot-plug

The HCA fanout provides the path for data between memory and the I/O cards in an I/O drawer through 6 GBps InfiniBand (IFB) cables. The HCA fanout is hot-pluggable. In case of an outage, an HCA fanout can be concurrently repaired without the loss of access to its associated I/O features by using redundant I/O interconnect to the I/O drawer. InfiniBand optical HCA3-O and HCA3-O LR, which are used to provide connectivity between members of a sysplex, are orderable features in z13, and also can be carried forward on an MES from a zEC12 or z196. Up to four HCA fanouts are available per CPC drawer. The HCA fanout also can be used for the InfiniBand coupling links (HCA3-O and HCA3-O LR). If redundancy in coupling link connectivity is ensured, the HCA fanout can be concurrently repaired.

1.2.5 I/O connectivity: PCIe and InfiniBand

The z13 offers various improved I/O features and uses technologies, such as PCIe, InfiniBand, and Ethernet. This section briefly reviews the most relevant I/O capabilities.

The z13 takes advantage of PCIe Generation 3 to implement the following features:

•PCIe Generation 3 (Gen3) fanouts that provide 16 GBps connections to the PCIe I/O features in the PCIe I/O drawers.

•PCIe Gen3 fanouts that provide 8 GBps coupling link connections through the new IBM ICA SR.

The z13 takes advantage of InfiniBand to implement the following features:

•A 6 GBps I/O bus that includes the InfiniBand infrastructure (HCA2-C) for the I/O drawer for non-PCIe I/O features.

•Parallel Sysplex coupling links using IFB: 12x InfiniBand coupling links (HCA3-O) for local connections and 1x InfiniBand coupling links (HCA3-O LR) for extended distance connections between any two zEnterprise CPCs. The 12x IFB link (HCA3-O) has a bandwidth of 6 GBps, and the HCA3-O LR 1x InfiniBand links have a bandwidth of 5 Gbps.
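The HCA3-O LR rate is quoted in gigabits per second (Gbps), whereas the other rates in this section are in gigabytes per second (GBps). The following sketch puts the nominal figures quoted in this section on a common scale; it ignores encoding and protocol overhead, so the numbers are not measured throughput.

```python
def gbps_to_gbytes_per_s(gigabits_per_second):
    """Convert gigabits per second (Gbps) to gigabytes per second (GBps)."""
    return gigabits_per_second / 8


# Nominal rates quoted in this section, all expressed in GBps.
nominal_gbytes_per_s = {
    "PCIe Gen3 fanout to PCIe I/O drawer": 16.0,
    "ICA SR coupling link": 8.0,
    "HCA3-O 12x IFB coupling link": 6.0,
    "HCA3-O LR 1x IFB coupling link": gbps_to_gbytes_per_s(5.0),  # quoted as 5 Gbps
    "HCA2-C I/O bus to I/O drawer": 6.0,
}

for name, rate in sorted(nominal_gbytes_per_s.items(), key=lambda item: -item[1]):
    print(f"{name}: {rate:g} GBps")
```

On that scale, an HCA3-O LR link (0.625 GBps) carries roughly one tenth of the nominal bandwidth of a 12x HCA3-O link (6 GBps), in exchange for the longer supported distance.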

1.2.6 I/O subsystems

The z13 I/O subsystem is similar to the one on zEC12 and includes a new PCIe Gen3 infrastructure. The I/O subsystem is supported by both a PCIe bus and an I/O bus similar to that of zEC12. It includes the InfiniBand Double Data Rate (IB-DDR) infrastructure, which replaced the self-timed interconnect that was used in previous z Systems. This infrastructure is designed to reduce processor usage and latency, and provide increased throughput.

z13 offers two I/O infrastructure elements for holding the I/O features: PCIe I/O drawers, for PCIe features; and up to two I/O drawers for non-PCIe features.

PCIe I/O drawer

The PCIe I/O drawer, together with the PCIe features, offers finer granularity and capacity over previous I/O infrastructures. It can be concurrently added and removed in the field, easing planning. Only PCIe cards (features) are supported, in any combination. Up to five PCIe I/O drawers can be installed on a z13.

I/O drawer

On the z13, I/O drawers are supported only when carried forward on upgrades from zEC12 or z196 to z13. For a new z13 order, it is not possible to order an I/O drawer.

The z13 can have up to two I/O drawers. Each I/O drawer can accommodate up to eight FICON Express8 features. Based on the number of I/O features that are carried forward, the configurator determines the number of required I/O drawers.

Native PCIe and Integrated Firmware Processor

Native PCIe was introduced with the zEDC and RoCE Express features, which are managed differently from the traditional PCIe features. The device drivers for these adapters are available in the operating system. The diagnostic tests for the adapter layer functions of the native PCIe features are managed by LIC that is designated as a resource group partition, which runs on the IFP. For availability, two resource groups are present and share the IFP.

During the ordering process of the native PCIe features, features of the same type are evenly spread across the two resource groups (RG1 and RG2) for availability and serviceability reasons. Resource groups are automatically activated when these features are present in the CPC.
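The even spreading that is described above can be sketched as alternating the features of each type between the two resource groups. This is an illustrative assumption about the policy, not the configurator's actual algorithm; the function name and input format are hypothetical.

```python
from collections import defaultdict


def assign_resource_groups(feature_types):
    """Alternate the features of each type between RG1 and RG2.

    feature_types: feature-type names in ordering sequence (hypothetical input).
    Returns a list of (feature_type, resource_group) pairs.
    """
    placed = defaultdict(int)  # number of features of each type placed so far
    placement = []
    for ftype in feature_types:
        group = "RG1" if placed[ftype] % 2 == 0 else "RG2"
        placed[ftype] += 1
        placement.append((ftype, group))
    return placement


order = ["zEDC Express", "zEDC Express", "10GbE RoCE Express", "zEDC Express", "10GbE RoCE Express"]
for ftype, group in assign_resource_groups(order):
    print(ftype, "->", group)
```

Because each type is counted separately, features of the same type end up split as evenly as possible between RG1 and RG2, regardless of how the types are interleaved in the order.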

I/O and special purpose features

The z13 supports the following PCIe features on a new build, which can be installed only in the PCIe I/O drawers:

•FICON Express16S Short Wave (SX) and 10 km (6.2 miles) Long Wave (LX)

•FICON Express8S Short Wave (SX) and 10 km (6.2 miles) Long Wave (LX)

•OSA-Express5S 10 GbE Long Reach (LR) and Short Reach (SR)

•OSA-Express5S GbE LX and SX, and 1000BASE-T

•10GbE RoCE Express

•Crypto Express5S

•Flash Express

•zEDC Express

When carried forward on an upgrade, the z13 also supports the following features in the PCIe I/O drawers:

•FICON Express8S SX and LX (10 km)

•OSA-Express5S (all)

•OSA-Express4S (all)

•10GbE RoCE Express

•Flash Express

•zEDC Express

When carried forward on an upgrade, the z13 also supports up to two I/O drawers on which the FICON Express8 SX and LX (10 km) feature can be installed.

In addition, InfiniBand coupling links HCA3-O and HCA3-O LR, which attach directly to the CPC drawers, are supported.

FICON channels

Up to 160 features with up to 320 FICON Express16S channels, or up to 160 features with up to 320 FICON Express8S channels, are supported. The FICON Express8S features support link data rates of 2, 4, or 8 Gbps, and the FICON Express16S features support 4, 8, or 16 Gbps.

Up to 16 features with up to 64 FICON Express8 channels are supported in two (max) I/O drawers. The FICON Express8 features support link data rates of 2, 4, or 8 Gbps.

The z13 FICON features support the following protocols:

•FICON (FC) and High Performance FICON for z Systems (zHPF). zHPF offers improved performance for data access, which is of special importance to OLTP applications.

•FICON channel-to-channel (CTC).

•Fibre Channel Protocol (FCP).

FICON also offers the following capabilities:

•Modified Indirect Data Address Word (MIDAW) facility: Provides more capacity over native FICON channels for programs that process data sets that use striping and compression, such as DB2, VSAM, partitioned data set extended (PDSE), hierarchical file system (HFS), and z/OS file system (zFS). It does so by reducing channel, director, and control unit processor usage.

•Enhanced problem determination, analysis, and manageability of the storage area network (SAN) by providing registration information to the fabric name server for both FICON and FCP.

Open Systems Adapter (OSA)

The z13 allows any mix of the supported OSA Ethernet features. Up to 48 OSA-Express5S or OSA-Express4S features, with a maximum of 96 ports, are supported. OSA-Express5S and OSA-Express4S features are plugged into the PCIe I/O drawer.

The maximum number of combined OSA-Express5S and OSA-Express4S features cannot exceed 48.

OSM and OSX channel path identifier types

The z13 supports OSA-Express5S and OSA-Express4S features with the following channel-path identifier (CHPID) types for Unified Resource Manager and zBX connections:

•OSA-Express for Unified Resource Manager (OSM)

Connectivity to the intranode management network (INMN) Top of Rack (ToR) switch in the zBX is not supported on z13. When the zBX model 002 or 003 is upgraded to a model 004, it becomes an independent node that can be configured to work with the ensemble. The zBX model 004 is equipped with two 1U rack-mounted Support Elements to manage and control itself, and is independent of the CPC SEs.

•OSA-Express for zBX (OSX)

Connectivity to the IEDN. Provides a data connection from the z13 to the zBX. Uses OSA-Express5S 10 GbE (preferred), and can also use the OSA-Express4S 10 GbE feature.

OSA-Express5S, OSA-Express4S features highlights

The z13 supports five different types each of OSA-Express5S and OSA-Express4S features. OSA-Express5S features are a technology refresh of the OSA-Express4S features:

•OSA-Express5S 10 GbE Long Reach (LR)

•OSA-Express5S 10 GbE Short Reach (SR)

•OSA-Express5S GbE Long Wave (LX)

•OSA-Express5S GbE Short Wave (SX)

•OSA-Express5S 1000BASE-T Ethernet

•OSA-Express4S 10 GbE Long Reach

•OSA-Express4S 10 GbE Short Reach

•OSA-Express4S GbE Long Wave

•OSA-Express4S GbE Short Wave

•OSA-Express4S 1000BASE-T Ethernet

OSA-Express features provide important benefits for TCP/IP traffic: reduced latency and improved throughput for standard and jumbo frames. These performance enhancements result from the data router function that is present in all OSA-Express features. Functions that were previously performed in firmware are now performed in hardware by the OSA-Express5S and OSA-Express4S. Additional logic in the IBM application-specific integrated circuit (ASIC) that is included with the feature handles packet construction, inspection, and routing, allowing packets to flow between host memory and the LAN at line speed without firmware intervention.

With the data router, the store and forward technique in direct memory access (DMA) is no longer used. The data router enables a direct host memory-to-LAN flow. This configuration avoids a hop, and is designed to reduce latency and to increase throughput for standard frames (1492 bytes) and jumbo frames (8992 bytes).
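The effect of jumbo frames on per-frame work can be estimated with simple arithmetic. The following sketch counts how many frames are needed to move 1 MiB at the two frame sizes quoted above. It ignores protocol headers, so the absolute counts are approximate; the point is that jumbo frames need roughly one sixth as many frames, and therefore roughly one sixth as many per-frame operations.

```python
STANDARD_FRAME = 1492  # bytes, standard frame size quoted above
JUMBO_FRAME = 8992     # bytes, jumbo frame size quoted above


def frames_needed(payload_bytes, frame_bytes):
    """Frames needed to carry payload_bytes, ignoring protocol headers (ceiling division)."""
    return -(-payload_bytes // frame_bytes)


one_mib = 1024 * 1024
standard = frames_needed(one_mib, STANDARD_FRAME)
jumbo = frames_needed(one_mib, JUMBO_FRAME)
print(standard, jumbo, round(standard / jumbo, 1))  # 703 117 6.0
```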

For more information about the OSA features, see 4.8, “Connectivity” on page 155.

HiperSockets

The HiperSockets function is also known as internal queued direct input/output (internal QDIO or iQDIO). It is an integrated function of the z13 that provides users with attachments to up to 32 high-speed virtual LANs with minimal system and network processor usage.

HiperSockets can be customized to accommodate varying traffic sizes. Because the HiperSockets function does not use an external network, it can free system and network resources, eliminating attachment costs while improving availability and performance.

For communications between LPARs in the same z13 server, HiperSockets eliminates the need to use I/O subsystem features and to traverse an external network. Connection to HiperSockets offers significant value in server consolidation by connecting many virtual servers. It can be used instead of certain coupling link configurations in a Parallel Sysplex.

HiperSockets is extended to allow integration with IEDN, which extends the reach of the HiperSockets network outside the CPC to the entire ensemble, where it appears as a single Layer 2 network.

10GbE RoCE Express

The 10 Gigabit Ethernet (10GbE) RoCE Express feature is a remote direct memory access (RDMA)-capable network interface card. The 10GbE RoCE Express feature is supported on z13, zEC12, and zBC12, and is installed in the PCIe I/O drawer. Each feature has one PCIe adapter. A maximum of 16 features can be installed.

The 10GbE RoCE Express feature uses a short reach (SR) laser as the optical transceiver, and supports the use of a multimode fiber optic cable that terminates with an LC Duplex connector. Both a point-to-point connection and a switched connection with an enterprise-class 10 GbE switch are supported.

Support is provided by z/OS. On zEC12 and zBC12, z/OS supports one port per feature, dedicated to one partition. On z13, both ports are supported, and a feature can be shared by up to 31 partitions.

For more information, see Appendix E, “RDMA over Converged Ethernet (RoCE) improvements” on page 511.

1.2.7 Coupling and Server Time Protocol connectivity

Support for Parallel Sysplex includes the Coupling Facility Control Code and coupling links.

Coupling links support

Coupling connectivity in support of Parallel Sysplex environments is provided on the z13 by the following features:

•IBM ICA SR, a new PCIe Gen3 coupling feature with two ports, each operating at 8 GBps, for distances of up to 150 m (492 feet).

•HCA3-O, 12x InfiniBand coupling links offering up to 6 GBps of bandwidth between z13, zEC12, zBC12, z196, and z114 systems, for a distance of up to 150 m (492 feet), with improved service times over previous HCA2-O links that were used on prior z Systems families.

•HCA3-O LR, 1x InfiniBand (up to 5 Gbps connection bandwidth) between z13, zEC12, zBC12, z196, and z114 for a distance of up to 10 km (6.2 miles). The HCA3-O LR (1xIFB) type has twice the number of links per fanout card as compared to type HCA2-O LR (1xIFB) that was used in the previous z Systems generations.

•Internal Coupling Channels (ICs), operating at memory speed.

All coupling link types can be used to carry Server Time Protocol (STP) messages. The z13 does not support ISC-3 connectivity.

CFCC Level 20

CFCC Level 20 is delivered on the z13 with driver level 22. CFCC Level 20 introduces the following enhancements:

•Support for up to 141 ICF processors per z Systems CPC:

– The maximum number of logical processors in a Coupling Facility Partition remains 16

•Large memory support:

– Improves availability/scalability for larger CF cache structures and data sharing performance with larger DB2 group buffer pools (GBPs).

– This support removes inhibitors to using large CF structures, enabling the use of Large Memory to scale to larger DB2 local buffer pools (LBPs) and GBPs in data sharing environments.

– The CF structure size remains at a maximum of 1 TB.

•Support for new IBM ICA

Statement of Direction¹: At the time of writing, IBM plans to support up to 256 coupling CHPIDs on z13, that is, twice the number of coupling CHPIDs supported on zEC12 (128).

Each CF image will continue to support a maximum of 128 coupling CHPIDs.

¹ All statements regarding IBM plans, directions, and intent are subject to change or withdrawal without notice. Any reliance on these statements of general direction is at the relying party’s sole risk and will not create liability or obligation for IBM.

z13 systems with CFCC Level 20 require z/OS V1R12 or later, and z/VM V6R2 or later for virtual guest coupling.

To support an upgrade from one CFCC level to the next, different levels of CFCC can coexist in the same sysplex while the coupling facility LPARs are running on different servers. CF LPARs that run on the same server share the CFCC level.

A CF running on a z13 (CFCC level 20) can coexist in a sysplex with CFCC levels 17 and 19.

Server Time Protocol (STP) facility

STP is a server-wide facility that is implemented in the LIC of z Systems CPCs (including CPCs running as stand-alone coupling facilities). STP presents a single view of time to PR/SM and provides the capability for multiple servers to maintain time synchronization with each other.

Any z Systems CPC can be enabled for STP by installing the STP feature. Each server that must be configured in a Coordinated Timing Network (CTN) must be STP-enabled.

The STP feature is the supported method for maintaining time synchronization between z Systems images and coupling facilities. The STP design uses the CTN concept, which is a collection of servers and coupling facilities that are time-synchronized to a time value called Coordinated Server Time (CST).

Network Time Protocol (NTP) client support is available to the STP code on the z13, zEC12, zBC12, z196, and z114. With this function, the z13, zEC12, zBC12, z196, and z114 can be configured to use an NTP server as an external time source (ETS).

This implementation answers the need for a single time source across the heterogeneous platforms in the enterprise. An NTP server becomes the single time source for the z13, zEC12, zBC12, and IBM zEnterprise 196 (z196) and z114, and other servers that have NTP clients, such as UNIX and Microsoft Windows systems.
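NTP clients estimate their clock offset from a server with the standard on-wire calculation that is defined in RFC 5905, shown in the following sketch. This describes NTP in general, not the internal STP code. The estimate assumes that the network delay is the same in both directions; any asymmetry appears as an offset error of up to half the round-trip delay.

```python
def ntp_offset_and_delay(t1, t2, t3, t4):
    """Clock offset and round-trip delay from one NTP exchange (RFC 5905).

    t1: request sent (client clock)    t2: request received (server clock)
    t3: reply sent (server clock)      t4: reply received (client clock)
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2  # how far the server clock is ahead
    delay = (t4 - t1) - (t3 - t2)         # round trip minus server hold time
    return offset, delay


# Server clock 100 units ahead, 10 units each way, 1 unit spent in the server.
print(ntp_offset_and_delay(0, 110, 111, 21))  # (100.0, 20)
```

In the sample exchange, the symmetric 10-unit delays cancel out of the offset, and the 1 unit that the server holds the request is excluded from the round-trip delay.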

The time accuracy of an STP-only CTN is improved by using, as the ETS device, an NTP server with the pulse per second (PPS) output signal. This type of ETS is available from various vendors that offer network timing solutions.

Improved security can be obtained by providing NTP server support on the HMC for the Support Element (SE). The HMC is normally attached to the private dedicated LAN for z Systems maintenance and support. For z13 and zEC12, authentication support is added to the HMC NTP communication with NTP time servers.

Attention: A z13 cannot be connected to a Sysplex Timer and cannot join a Mixed CTN.

If a current configuration consists of a Mixed CTN or a Sysplex Timer 9037, the configuration must be changed to an STP-only CTN before z13 integration. The z13 can coexist only with z Systems CPCs that do not have the ETR port capability.

1.2.8 Special-purpose features

This section overviews several features that, although installed in the PCIe I/O drawer or in the I/O drawer, provide specialized functions without performing I/O operations. That is, no data is moved between the CPC and externally attached devices.

Cryptography

Integrated cryptographic features provide leading cryptographic performance and functions. The cryptographic solution that is implemented in z Systems has received the highest standardized security certification (FIPS 140-2 Level 4). In addition to the integrated cryptographic features, the Crypto Express5S cryptographic feature allows adding or moving crypto coprocessors to LPARs without pre-planning.

The z13 implements PKCS #11, one of the industry-accepted standards that are called Public Key Cryptographic Standards (PKCS), which are provided by RSA Laboratories of RSA, the security division of EMC Corporation. It also implements the IBM Common Cryptographic Architecture (CCA) in its cryptographic features.

CP Assist for Cryptographic Function

The CP Assist for Cryptographic Function (CPACF) offers the full complement of the Advanced Encryption Standard (AES) algorithm and Secure Hash Algorithm (SHA), along with the Data Encryption Standard (DES) algorithm. Support for CPACF is available through a group of instructions that are known as the Message-Security Assist (MSA). z/OS Integrated Cryptographic Service Facility (ICSF) callable services and the z90crypt device driver running on Linux on z Systems also invoke CPACF functions. ICSF is a base element of z/OS. It uses the available cryptographic functions, CPACF, or PCIe cryptographic features to balance the workload and help address the bandwidth requirements of your applications.

CPACF must be explicitly enabled by using a no-charge enablement feature (FC 3863), except for the SHAs, which are enabled by default on each server.

The enhancements to CPACF are exclusive to the z Systems servers, and are supported by z/OS, z/VM, z/VSE, z/TPF, and Linux on z Systems.
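Applications usually do not issue CPACF instructions directly; they call a cryptographic service, such as ICSF on z/OS or a cryptographic library on Linux on z Systems. As a minimal sketch, the following Python code computes a SHA-256 digest through the standard `hashlib` module. Whether the work is actually performed by CPACF depends on the platform and on how the underlying library (typically OpenSSL on Linux) was built; that is an assumption about your environment, not something this code checks.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hexadecimal string."""
    return hashlib.sha256(data).hexdigest()


print(sha256_hex(b"abc"))
```

The digest of b"abc" matches the published SHA-256 test vector, so the result is the same whichever implementation, hardware or software, performs the computation.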

Configurable Crypto Express5S feature

The Crypto Express5S represents the newest generation of cryptographic features. It is designed to complement the cryptographic capabilities of the CPACF. It is an optional feature of the z13 server generation. The Crypto Express5S feature is designed to provide granularity for increased flexibility with one PCIe adapter per feature. For availability reasons, a minimum of two features are required.

The Crypto Express5S is a state-of-the-art, tamper-sensing, and tamper-responding programmable cryptographic feature that provides a secure cryptographic environment. Each adapter contains a tamper-resistant hardware security module (HSM). The HSM can be configured as a Secure IBM CCA coprocessor, as a Secure IBM Enterprise PKCS #11 (EP11) coprocessor, or as an accelerator:

•A Secure IBM CCA coprocessor is for secure key encrypted transactions that use CCA callable services (default).

•A Secure IBM Enterprise PKCS #11 (EP11) coprocessor implements an industry standardized set of services that adhere to the PKCS #11 specification v2.20 and more recent amendments.

This new cryptographic coprocessor mode introduced the PKCS #11 secure key function.

•An accelerator for public key and private key cryptographic operations is used with Secure Sockets Layer/Transport Layer Security (SSL/TLS) acceleration.

Federal Information Processing Standard (FIPS) 140-2 certification is supported only when Crypto Express5S is configured as a CCA or an EP11 coprocessor.

TKE workstation and support for smart card readers

The Trusted Key Entry (TKE) workstation and the TKE 8.0 LIC are optional features on the z13. The TKE workstation offers a security-rich solution for basic local and remote key management. It provides to authorized personnel a method for key identification, exchange, separation, update, and backup, and a secure hardware-based key loading mechanism for operational and master keys. TKE also provides secure management of host cryptographic module and host capabilities.

Support for an optional smart card reader that is attached to the TKE workstation allows the use of smart cards that contain an embedded microprocessor and associated memory for data storage. Access to and the use of confidential data on the smart cards are protected by a user-defined personal identification number (PIN).

When Crypto Express5S is configured as a Secure IBM Enterprise PKCS #11 (EP11) coprocessor, the TKE workstation is required to manage the Crypto Express5S feature. If the smart card reader feature is installed in the TKE workstation, the new smart card part 74Y0551 is required for EP11 mode.

For more information about the Cryptographic features, see Chapter 6, “Cryptography” on page 197.

Flash Express

The Flash Express optional feature is intended to provide performance improvements and better availability for critical business workloads that cannot afford any impact to service levels. Flash Express is easy to configure, requires no special skills, and provides rapid time to value.

Flash Express implements storage-class memory (SCM) in a PCIe card form factor. Each Flash Express card implements an internal NAND Flash SSD and has a capacity of 1.4 TB of usable storage. Cards are installed in pairs, which provide mirrored data to ensure a high level of availability and redundancy, so each pair provides 1.4 TB. A maximum of four pairs of cards (eight features) can be installed on a z13, for a maximum capacity of 5.6 TB of storage.

The Flash Express feature is designed to allow each LPAR to be configured with its own SCM address space. It is used for paging. Flash Express can be used, for example, to hold pageable 1 MB pages.

Encryption is included to improve data security. Data security is ensured through a unique key that is stored on the SE hard disk drive (HDD). It is mirrored for redundancy. Data on the Flash Express feature is protected with this key, and is usable only on the system with the key that encrypted it. The Secure Keystore is implemented by using a smart card that is installed in the SE. The smart card (one pair, so you have one for each SE) contains the following items:

•A unique key that is personalized for each system

•A small cryptographic engine that can run a limited set of security functions within the smart card

Flash Express is supported by z/OS V1R13 (at minimum) for handling z/OS paging activity, and has support for 1 MB pageable pages and SVC dumps. Support was added to the CFCC to use Flash Express as an overflow device for shared queue data to provide emergency capacity to handle WebSphere MQ shared queue buildups during abnormal situations. Abnormal situations include when “putters” are putting to the shared queue, but “getters” are transiently not getting from the shared queue.

Flash memory is assigned to a CF image through HMC windows. The coupling facility resource management (CFRM) policy definition allows the required amount of SCM to be used by a particular structure, on a structure-by-structure basis. Additionally, Linux (RHEL) can now use Flash Express for temporary storage. More functions of Flash Express are expected to be introduced later, including 2 GB page support and dynamic reconfiguration for Flash Express.

For more information, see Appendix C, “Flash Express” on page 489.

zEDC Express

zEDC Express, an optional feature that is available to z13, zEC12, and zBC12, provides hardware-based acceleration for data compression and decompression with lower CPU consumption than the previous compression technology on z Systems.

Use of the zEDC Express feature by the z/OS V2R1 zEnterprise Data Compression acceleration capability is designed to deliver an integrated solution to help reduce CPU consumption, optimize performance of compression-related tasks, and enable more efficient use of storage resources. It also provides a lower cost of computing and helps to optimize the cross-platform exchange of data.

One to eight features can be installed on the system. There is one PCIe adapter/compression coprocessor per feature, which implements compression as defined by RFC 1951 (DEFLATE).

A zEDC Express feature can be shared by up to 15 LPARs.
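Because zEDC Express implements the standard DEFLATE format, a stream that is compressed on z Systems can be decompressed by any conforming DEFLATE implementation on another platform, and vice versa. The following sketch produces and reads raw RFC 1951 streams by using Python's `zlib` module in software; it does not use zEDC, and the helper names are hypothetical.

```python
import zlib


def deflate_raw(data: bytes, level: int = 6) -> bytes:
    """Compress data into a raw RFC 1951 DEFLATE stream (no zlib or gzip wrapper)."""
    compressor = zlib.compressobj(level, zlib.DEFLATED, -15)  # negative wbits selects raw DEFLATE
    return compressor.compress(data) + compressor.flush()


def inflate_raw(stream: bytes) -> bytes:
    """Decompress a raw RFC 1951 DEFLATE stream."""
    return zlib.decompress(stream, -15)


record = b"CUSTOMER-RECORD;" * 1000
packed = deflate_raw(record)
print(len(record), len(packed))
```

Containers such as gzip (RFC 1952) and zlib (RFC 1950) wrap the same DEFLATE data with a header and checksum, which is why the format travels well between platforms.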

For more information, see Appendix F, “IBM zEnterprise Data Compression Express” on page 523.

1.2.9 Reliability, availability, and serviceability (RAS)

The z13 RAS strategy employs a building-block approach, which is developed to meet the client's stringent requirements for achieving continuous reliable operation. Those building blocks are error prevention, error detection, recovery, problem determination, service structure, change management, measurement, and analysis.

The initial focus is on preventing failures from occurring. This is accomplished by using Hi-Rel (highest reliability) components that use screening, sorting, burn-in, and run-in, and by taking advantage of technology integration. For LIC and hardware design, failures are reduced through rigorous design rules; design walk-through; peer reviews; element, subsystem, and system simulation; and extensive engineering and manufacturing testing.

The RAS strategy is focused on a recovery design to mask errors and make them transparent to client operations. An extensive hardware recovery design is implemented to detect and correct memory array faults. In cases where transparency cannot be achieved, one can restart the server with the maximum capacity possible.

The z13 has the following RAS improvements, among others:

•Cables for SMP fabric

•CP and SC are SCM FRUs

•Point of load (POL) replaces the Voltage Transformation Module

•The water manifold is a FRU

•The redundant oscillators are isolated on their own backplane

•The CPC drawer is a FRU (empty)

•There is a built-in Time Domain Reflectometry (TDR) to isolate failures

•CPC drawer level degrade

•FICON (better recovery on fiber)

•N+2 radiator pumps (air cooled system)

•N+1 System Control Hub (SCH) and power supplies

•N+1 Support Elements (SE)

For more information, see Chapter 9, “Reliability, availability, and serviceability” on page 353.

1.3 Hardware Management Consoles (HMCs) and Support Elements (SEs)

The HMCs and SEs are appliances that together provide platform management for z Systems.

HMCs and SEs also provide platform management for zBX Model 004 and for the ensemble nodes when the z Systems CPCs and the zBX Model 004 nodes are members of an ensemble. In an ensemble, the HMC is used to manage, monitor, and operate one or more z Systems CPCs and their associated LPARs, as well as the zBX Model 004 machines. When the z Systems and a zBX Model 004 are members of an ensemble, the HMC has a global (ensemble) management scope, in contrast to the SEs on the zBX Model 004 and on the CPCs, which have local (node) management responsibility. When tasks are performed on the HMC, the commands are sent to one or more CPC SEs or zBX Model 004 SEs, which then issue commands to their CPCs and zBXs. To provide high availability, an ensemble configuration requires a pair of HMCs, a primary and an alternate.

For more information, see Chapter 11, “Hardware Management Console and Support Elements” on page 411.

1.4 IBM z BladeCenter Extension (zBX) Model 004

The IBM z BladeCenter Extension (zBX) Model 004 improves infrastructure reliability by extending the mainframe systems management and service across a set of heterogeneous compute elements in an ensemble.

The zBX Model 004 is available only as an optional upgrade, through a miscellaneous equipment specification (MES), from a zBX Model 003 or a zBX Model 002 in an ensemble that contains at least one z13 CPC. The zBX Model 004 consists of these components:

•Two internal 1U rack-mounted Support Elements providing zBX monitoring and control functions.

•Up to four IBM 42U Enterprise racks.

•Up to eight BladeCenter chassis with up to 14 blades each, with up to two chassis per rack.

•Up to 112¹¹ blades.

•Intranode management network (INMN) Top of Rack (ToR) switches. On the zBX Model 004, the new local zBX Support Elements directly connect to the INMN within the zBX for management purposes. Because zBX Model 004 is an independent node, there is no INMN connection to any z Systems CPC.

•Intraensemble data network (IEDN) ToR switches. The IEDN is used for data paths between the zEnterprise ensemble members and the zBX Model 004, and also for customer data access. The IEDN point-to-point connections use MAC addresses, not IP addresses (Layer 2 connectivity).

•8 Gbps Fibre Channel switch modules for connectivity to customer-supplied storage (through a SAN).

•Advanced management modules (AMMs) for monitoring and management functions for all the components in the BladeCenter.

•Power Distribution Units (PDUs) and cooling fans.

•Optional acoustic rear door or optional rear door heat exchanger.
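The rack, chassis, and blade limits in the list combine into the maximum blade count: four racks with up to two chassis each give eight chassis, and eight chassis with up to 14 blades each give 112 blades. The following minimal sketch reproduces that arithmetic. The result is an upper bound on blade slots only; as footnote 11 notes, the usable number of blades varies with blade type and function, which this sketch does not model.

```python
MAX_RACKS = 4
MAX_CHASSIS_PER_RACK = 2
MAX_CHASSIS = 8
MAX_BLADES_PER_CHASSIS = 14


def max_blade_slots(racks: int) -> int:
    """Upper bound on blade slots for a zBX Model 004 with the given number of racks."""
    if not 1 <= racks <= MAX_RACKS:
        raise ValueError(f"racks must be between 1 and {MAX_RACKS}")
    chassis = min(racks * MAX_CHASSIS_PER_RACK, MAX_CHASSIS)
    return chassis * MAX_BLADES_PER_CHASSIS


for racks in range(1, MAX_RACKS + 1):
    print(racks, max_blade_slots(racks))
# 1 28
# 2 56
# 3 84
# 4 112
```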

The zBX is configured with redundant hardware infrastructure to provide qualities of service similar to those of z Systems, such as the capability for concurrent upgrades and repairs.

Geographically Dispersed Parallel Sysplex (GDPS)/PPRC and GDPS/Global Mirror (GDPS/GM) support zBX hardware components, providing workload failover for automated multi-site recovery. These capabilities facilitate the management of planned and unplanned outages.

1.4.1 Blades

Two types of blades can be installed and operated in the IBM zEnterprise BladeCenter Extension (zBX):

•Optimizer Blades: IBM WebSphere DataPower Integration Appliance XI50 for zEnterprise blades

•IBM Blades:

– A selected subset of IBM POWER7® blades

– A selected subset of IBM BladeCenter HX5 blades

•IBM POWER7 blades are virtualized by PowerVM® Enterprise Edition, and the virtual servers run the IBM AIX® operating system.

•IBM BladeCenter HX5 blades are virtualized by using an integrated hypervisor for System x, and the virtual servers run Linux on System x (RHEL and SLES operating systems) and select Microsoft Windows Server operating systems.

Enablement for the blades is specified with an entitlement feature code that is configured on the ensemble HMC.

1.4.2 IBM WebSphere DataPower Integration Appliance XI50 for zEnterprise

The IBM WebSphere DataPower Integration Appliance XI50 for zEnterprise (DataPower XI50z) is a multifunctional appliance that can help provide multiple levels of XML optimization.

This configuration streamlines and secures valuable service-oriented architecture (SOA) applications. It also provides drop-in integration for heterogeneous environments by enabling core enterprise service bus (ESB) functions, including routing, bridging, transformation, and event handling. It can help to simplify, govern, and enhance the network security for XML and web services.

When the DataPower XI50z is installed in the zBX, the Unified Resource Manager provides integrated management for the appliance. This configuration simplifies control and operations, including change management, energy monitoring, problem detection, problem reporting, and dispatching of an IBM service support representative (IBM SSR), as needed.


Important: The zBX Model 004 uses the blades that are carried forward in an upgrade from a previous model. Clients can install additional entitlements, up to the full installed blade capacity of the zBX, if the existing BladeCenter chassis have empty slots available. After the entitlements are acquired from IBM, clients must procure the additional zBX-supported blades, up to the full installed entitlement LIC record, from another source or vendor.


1.5 IBM z Unified Resource Manager

The IBM z Unified Resource Manager is the integrated management software that runs on the ensemble HMC and on the zBX Model 004 SEs. The Unified Resource Manager consists of six management areas (for more details, see 11.6.1, “Unified Resource Manager” on page 446):

•Operational controls (Operations)

Includes extensive operational controls for various management functions.

•Virtual server lifecycle management (Virtual servers)

Enables directed and dynamic virtual server provisioning across hypervisors from a single point of control.

•Hypervisor management (Hypervisors)

Enables the management of hypervisors and support for application deployment.

•Energy management (Energy)

Provides energy monitoring and management capabilities that can be used to better understand the power and cooling demands of the zEnterprise System.

•Network management (Networks)

Creates and manages virtual networks, including access control, so that virtual servers can be connected.

•Workload Awareness and platform performance management (Performance)

Manages CPU resources across virtual servers that are hosted in the same hypervisor instance to achieve workload performance policy objectives.
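To make the idea of policy-driven CPU management within one hypervisor instance concrete, the following sketch uses a generic, swapped-in technique: weighted max-min fair sharing. It is not the Unified Resource Manager's algorithm, which this section does not describe. The sketch assumes that a workload policy can be expressed as a positive weight per virtual server. Each server receives capacity in proportion to its weight, never more than it demands, and capacity that a satisfied server cannot use is redistributed. The function name, server names, and numbers are hypothetical.

```python
def allocate_cpu(capacity: float, demand: dict, weight: dict) -> dict:
    """Weighted max-min fair share of one hypervisor's CPU capacity.

    Each virtual server gets capacity in proportion to its weight, but never
    more than it demands; capacity a server cannot use is redistributed.
    """
    if any(weight[s] <= 0 for s in demand):
        raise ValueError("weights must be positive")
    alloc = {s: 0.0 for s in demand}
    active = {s for s in demand if demand[s] > 0}
    remaining = capacity
    while active and remaining > 0:
        total_weight = sum(weight[s] for s in active)
        fair = {s: remaining * weight[s] / total_weight for s in active}
        # Servers whose whole demand fits inside their fair share are satisfied.
        satisfied = {s for s in active if demand[s] <= fair[s]}
        if not satisfied:
            # Everyone wants more than its share: hand out the shares and stop.
            for s in active:
                alloc[s] = fair[s]
            break
        for s in satisfied:
            alloc[s] = demand[s]
            remaining -= demand[s]
        active -= satisfied
    return alloc


shares = allocate_cpu(
    capacity=10.0,
    demand={"web": 2.0, "db": 8.0, "batch": 8.0},
    weight={"web": 1.0, "db": 3.0, "batch": 1.0},
)
print(shares)  # {'web': 2.0, 'db': 6.0, 'batch': 2.0}
```

In the example, the lightly loaded "web" server is fully satisfied, and the remaining capacity is divided 3:1 between the two servers that still want more.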

The Unified Resource Manager provides energy monitoring and management, goal-oriented policy management, increased security, virtual networking, and storage configuration management for the physical and logical resources of an ensemble.

1.6 Operating systems and software

The IBM z13 is supported by a large set of software, including independent software vendor (ISV) applications. This section lists only the supported operating systems. Use of various features might require the latest releases. For more information, see Chapter 7, “Software support” on page 227.

1.6.1 Supported operating systems

The following operating systems are supported for z13:

•z/OS Version 2 Release 1

•z/OS Version 1 Release 13 with program temporary fixes (PTFs)

•z/OS V1R12 with required maintenance (compatibility support only) and extended support agreement

•z/VM Version 6 Release 3 with PTFs

•z/VM Version 6 Release 2 with PTFs

•z/VSE Version 5 Release 1 with PTFs

•z/VSE Version 5 Release 2 with PTFs

•z/TPF Version 1 Release 1 with PTFs

•Linux on z Systems distributions:

– SUSE Linux Enterprise Server (SLES): SLES 12 and SLES 11.

– Red Hat Enterprise Linux (RHEL): RHEL 7 and RHEL 6.

For recommended Linux on z Systems distribution levels, see the following website:

The following operating systems will be supported on zBX Model 004:

•AIX (on POWER7 blades installed in zBX Model 004): AIX 5.3, AIX 6.1, and AIX 7.1 and subsequent releases, with PowerVM Enterprise Edition

•Linux on System x (on IBM BladeCenter HX5 blades installed in zBX Model 004):

– Red Hat RHEL 5.5 and up, 6.0 and up, 7.0 and up

– SLES 10 SP4 and later, SLES 11 SP1 and later, and SLES 12 and later (64-bit only)

•Microsoft Windows (on IBM BladeCenter HX5 blades installed in zBX Model 004):

– Microsoft Windows Server 2012, Microsoft Windows Server 2012 R2

– Microsoft Windows Server 2008 R2 and Microsoft Windows Server 2008 SP2 (Datacenter Edition recommended), 64-bit only

Together with support for IBM WebSphere software, full support for SOA, web services, Java Platform, Enterprise Edition, Linux, and Open Standards, the IBM z13 is intended to be a platform of choice for the integration of the newest generations of applications with existing applications and data.

1.6.2 IBM compilers

The following IBM compilers for z Systems can take advantage of the z13:

•Enterprise COBOL for z/OS

•Enterprise PL/I for z/OS

•XL C/C++ for Linux on z Systems

•z/OS XL C/C++

The compilers increase the return on your investment in z Systems hardware by maximizing application performance using the compilers’ advanced optimization technology for z/Architecture. Through their support of web services, XML, and Java, they allow for the modernization of existing assets in web-based applications. They support the latest IBM middleware products (CICS, DB2, and IMS), allowing applications to use their latest capabilities.

To fully use the capabilities of the z13, you must compile with at least the compiler level that is listed for each compiler in Table 1-1.

Table 1-1 Supported compiler levels

| Compiler | Level |
|---|---|
| Enterprise COBOL for z/OS | V5.2 |
| Enterprise PL/I for z/OS | V4.5 |
| XL C/C++ for Linux on z Systems | V1.1 |
| z/OS XL C/C++ | V2.1 (web update required) |

To obtain the best performance, specify architecture level 11 by using the -qarch=arch11 option for the XL C/C++ for Linux on z Systems compiler or the ARCH(11) option for the other compilers. This option permits the compiler to use machine instructions that are available only on the z13. Because code that is generated for architecture level 11 uses those z13-only instructions, the resulting application does not run on earlier hardware. If the application must run on both the z13 and older hardware, specify the architecture level that corresponds to the oldest hardware on which the application needs to run. For more information, see the documentation for the ARCH or -qarch option in the guide for the corresponding compiler product.
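The rule "compile for the oldest machine the application must run on" amounts to taking the minimum architecture level over all target machines. The following is a minimal sketch of that selection. ARCH(11) for the z13 comes from the text. The levels for earlier machines follow IBM compiler documentation and should be checked against the guide for your compiler release. The sketch also assumes that the -qarch=archN spelling, which the text gives only for level 11, applies to other levels. The function names are hypothetical helpers, not part of any IBM tool.

```python
# ARCH level per machine. ARCH(11) for the z13 comes from the text above; the
# earlier levels follow IBM compiler documentation and should be verified there.
ARCH_LEVEL = {
    "z10": 8,
    "z196": 9,
    "z114": 9,
    "zEC12": 10,
    "zBC12": 10,
    "z13": 11,
}


def arch_for(machines: list) -> int:
    """Highest ARCH level that runs on every listed machine: the oldest one's level."""
    if not machines:
        raise ValueError("list at least one target machine")
    unknown = [m for m in machines if m not in ARCH_LEVEL]
    if unknown:
        raise ValueError(f"no ARCH level recorded for: {unknown}")
    return min(ARCH_LEVEL[m] for m in machines)


def arch_option(level: int, linux_xlc: bool = False) -> str:
    """Spell the option: -qarch=archN for XL C/C++ for Linux on z Systems, ARCH(N) otherwise."""
    return f"-qarch=arch{level}" if linux_xlc else f"ARCH({level})"


print(arch_option(arch_for(["z13"])))  # ARCH(11)
print(arch_option(arch_for(["z13", "zEC12"])))  # ARCH(10)
print(arch_option(arch_for(["z13"]), linux_xlc=True))  # -qarch=arch11
```

An application that must run on both a z13 and a zEC12 is therefore compiled with ARCH(10) and gives up the z13-only instructions.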

1 zAAP workloads are now run on zIIP.

2 z/VM 6.3 SMT support is provided on IFL processors only.

3 CMOS 14S0 is a 22-nanometer CMOS logic fabrication process.

4 PCI - Processor Capacity Index

5 zAAP is the System z Application Assist Processor.

6 I/O drawers were introduced with the IBM z10™ BC.

7 zAAPs are not available on z13. The zAAP workload is done on zIIPs.

8 FICON is Fibre Channel connection.

9 Federal Information Processing Standard (FIPS) 140-2 Security Requirements for Cryptographic Modules

10 From Version 2.11. For more information, see 11.6.6, “HMC ensemble topology” on page 452.

11 The maximum number of blades varies according to the blade type and blade function.
