The Need for Reduced Variation in Processes

This chapter discusses the need for predictable process outcomes and how the predictability of outcomes can be controlled by reducing the variation in how a process is executed. The importance of reducing process variation in Lean is twofold. First, reducing variation in how a process is executed reduces the variation in the characteristics of the product or service being created, which reduces defects and errors and, in turn, improves quality. Besides improving customer satisfaction, improving quality reduces waste due to defects. Second, reducing variation in process execution reduces the variation in how long process steps take to perform. Although the explanation is beyond the scope of this book, reducing the variation in process step lead times reduces overall average process lead time, even if the average lead times of the steps are left unchanged. Thus reducing process step lead time variation reduces overall process lead time and, by Little’s law, in-process inventory levels. Hence, this chapter provides the motivation for reducing variation in how processes are executed and, in turn, the arguments for standardizing processes at a detailed level. We return to the topic of how processes can be standardized in chapter 7, where we discuss Standard Work.
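The two quantitative claims in this paragraph can be illustrated with a short sketch. Little’s law states that average work in process equals throughput multiplied by average lead time. For the effect of step-time variation, the sketch assumes a single-server process step and uses Kingman’s approximation, a standard queueing result that this chapter does not itself present: expected waiting time grows with the squared coefficients of variation of arrivals and processing times, so lowering processing-time variation shortens lead time even though the mean processing time is unchanged. The function names and the numbers in the usage example are hypothetical.

```python
def littles_law_wip(throughput: float, lead_time: float) -> float:
    """Little's law: average work in process = throughput * average lead time."""
    return throughput * lead_time


def kingman_wait(mean_process_time: float, utilization: float,
                 cv_arrivals: float, cv_process: float) -> float:
    """Kingman's approximation of the average queue wait at a single-server step.

    cv_* are coefficients of variation (standard deviation / mean).
    """
    if not 0.0 < utilization < 1.0:
        raise ValueError("utilization must be strictly between 0 and 1")
    variability = (cv_arrivals ** 2 + cv_process ** 2) / 2.0
    return utilization / (1.0 - utilization) * variability * mean_process_time


def step_lead_time(mean_process_time: float, utilization: float,
                   cv_arrivals: float, cv_process: float) -> float:
    """Average lead time at the step: queue wait plus the processing itself."""
    wait = kingman_wait(mean_process_time, utilization, cv_arrivals, cv_process)
    return wait + mean_process_time


if __name__ == "__main__":
    # Hypothetical step: 1-hour average processing time, 80% utilized,
    # so throughput is 0.8 jobs per hour. Only cv_process differs below.
    throughput = 0.8
    for cv_process in (1.0, 0.5):
        lt = step_lead_time(1.0, 0.8, cv_arrivals=1.0, cv_process=cv_process)
        wip = littles_law_wip(throughput, lt)
        print(f"cv_process={cv_process}: lead time {lt:.2f} h, WIP {wip:.2f} jobs")
```

With these hypothetical inputs, halving the processing-time coefficient of variation lowers the step’s lead time from about 5.0 to about 3.5 hours and, at the same throughput, lowers work in process from about 4.0 to about 2.8 jobs, while the average processing time stays at 1 hour.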

An Experiment in Variation

In this section, we describe an exercise that can be used in a Lean course to illustrate how variation in process execution causes variation in the attributes of the products or services created by the process and, hence, reduces quality. The exercise also shows how more precise process descriptions and standardized process execution reduce variation in the execution of a process and thus how process standardization improves quality. Furthermore, the exercise provokes discussion of how processes should best be improved, how reacting to output quality on the basis of insufficient data can cause quality to degrade, how simpler processes often result in better quality, and why product and service specifications matter. It also provides an introduction to the topic of measurement system analysis.

The Penny-Dropping Exercise

The exercise described in this section is inspired by a game conceived by Donald G. Sluti, which is contained in a compilation of exercises edited by Heineke and Meile.1 The title of that exercise is “Common Cause or Special Cause?” and the game focuses on identifying special versus common causes of variation in dropping pennies on a target. The exercise here also involves identifying common and special causes of process variation, but it is constructed differently from Sluti’s game to focus on one particular special cause of variation: not adhering to process descriptions. The game here is also more comprehensive, in that it supports discussion of many additional topics that Sluti’s game does not appear to address. Sluti suggests that his game be played independently by students in teams of three, whereas in the author’s opinion the most effective method for this exercise is to have two people perform it in front of the class, which provides a shared experience for the entire class. The author’s version of the exercise uses the instructions shown in Figure 9.

The exercise begins by introducing the general directions to the entire class using the first slide in Figure 9. (These slides can be downloaded from http://mason.wm.edu/faculty/bradley_j/LeanBook.) Next, two volunteers are recruited from the class, one to be a fixture and one to be an operator, and called to the front of the room. Then, the volunteers receive more detailed instructions with the aid of the second slide in Figure 9. The participants are instructed that the goal of the game is to repeatedly drop a penny through a cardboard tube such that it falls as close as possible to the crosshairs on the third slide of Figure 9. The quality of penny-dropping is defined by the distance of the penny’s landing position from the crosshairs: The closer to the crosshairs, the better the quality. The slide with the crosshairs can be printed on a transparency and placed on an overhead projector or, with more recent technology, it can be placed on a document camera platform; either approach allows the entire class to observe the process. The exercise setup involves placing multiple cardboard tubes among the other game materials—which include a ruler, transparency pen, and penny—on a table near the target. The tubes are as follows:

- A paper towel tube (1.75 inches diameter; 11 inches long)

- A toilet paper tube (1.75 inches diameter; 4.5 inches long)

- A plastic wrap tube (1 inch diameter; 12.25 inches long)

- A gift wrap tube (1.625 inches diameter; 18 inches long)

- Another gift wrap tube (1.625 inches diameter; 26 inches long)

- A larger tube (3.25 inches diameter; 24 inches long)

The instructions to the participants on the second slide include directions to the participant who plays the fixture to hold the cardboard tube at 90 degrees to the target with the bottom of the tube 1.5 inches above the target. The second participant, the operator, drops the penny and, subsequently, marks the location of the center of the penny on each drop with an X. A penny is dropped approximately 10 times.

Some key lessons from the exercise are made possible by the instructions being less specific than they should be and the process equipment not being up to the task at hand. For example, although the first slide of instructions specifies a paper towel tube, the participants have not yet been selected when that slide is presented. Perhaps because nobody with specific responsibility for carrying out the instructions has yet been identified, the class members uniformly miss the specification of a paper towel tube. The availability of many tubes on the table forces the participants to make a decision, which is always based on their expectation of which tube will perform better. Also, although a ruler is available to the participants and is listed as a piece of equipment for this exercise on the first slide, the second slide makes no specific mention of using the ruler to maintain the 1.5-inch height; the participants are left to infer that the ruler’s purpose is to ensure the proper height of the tube above the target. Similarly, participants are told to use the transparency pen to mark the X, although the pen is not referenced in the written instructions. Finally, although the tube must be perpendicular to the target, the participants are given no means of meeting that requirement, nor any means of reliably and steadily maintaining the 1.5-inch height.

Figure 9. Penny-dropping exercise instructions.

The most frequently asked question before the operator and fixture begin their task is “Which tube should we use?” The faculty facilitator, posing as the workers’ supervisor, does best to leave this point ambiguous (to supply content for the follow-up discussion) by reiterating that the participants should use the official tube while claiming not to know which tube that is. Without exception, subjects have taken this opportunity to hypothesize which tube will give the most accurate results, and to use it. Almost uniformly, subjects have selected the narrow plastic wrap tube, at least to start the exercise. When asked why, participants invariably explain that they expect the smaller diameter to direct the penny toward the target more accurately. The results using this tube are unsatisfactory for many participants, who sometimes switch to another tube partway through the exercise. Some participants have switched tubes multiple times.

The Results

The results of the penny-dropping game are characterized by a large variation in the locations where the penny lands on the various attempts. Very rarely does the penny land on the crosshairs, or even near them. Not infrequently, pennies bounce completely off the target when the target is placed on a hard surface. The widely varying landing positions of the pennies are analogous to widely varying quality in a product or service. Subjects who have selected the long, narrow plastic wrap tube are universally dissatisfied with their choice and often change midprocess to a shorter tube. Some participants change tubes frequently, as often as after every drop. Other observations routinely made over the course of the exercise include the following:

- Operators often forget the instructions about how high to hold the tube above the landing surface and the orientation of the tube relative to the target.

- Besides switching tubes, subjects also purposefully vary other parameters of the process in an attempt to improve accuracy. Most often these are small variations from the directions that were given, although some participants’ deviations from the stated directions are quite severe. The constant process revisions most often do not improve accuracy significantly and many times make the results worse.

- It is necessary for the operator to move the penny to mark its location, which reduces the accuracy of the marks.
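Because quality in this exercise is defined as the distance of each landing position from the crosshairs, the marks can be summarized numerically once they are measured. The sketch below assumes each X is recorded as (x, y) coordinates in inches with the crosshairs at the origin, and that the tube used for each drop is recorded alongside the mark; the record format and function names are hypothetical. Recording the tube with each mark is what allows the results for different tubes to be separated afterward.

```python
from collections import defaultdict
from math import hypot
from statistics import mean, pstdev


def distances_from_crosshairs(marks):
    """Distance of each (x, y) mark from the crosshairs at (0, 0)."""
    return [hypot(x, y) for x, y in marks]


def summarize(marks):
    """Count, mean, worst, and spread of the distances for (x, y) marks."""
    d = distances_from_crosshairs(marks)
    return {"n": len(d), "mean": mean(d), "worst": max(d), "spread": pstdev(d)}


def summarize_by_tube(records):
    """Group (tube, x, y) records by tube and summarize each group separately."""
    groups = defaultdict(list)
    for tube, x, y in records:
        groups[tube].append((x, y))
    return {tube: summarize(marks) for tube, marks in groups.items()}


if __name__ == "__main__":
    # Hypothetical marks, in inches, for illustration only.
    records = [("plastic wrap", 3.0, 4.0), ("plastic wrap", -1.0, 0.0),
               ("paper towel", 0.0, 2.0)]
    for tube, stats in summarize_by_tube(records).items():
        print(tube, stats)
```

A single pooled summary would hide which tube produced which outcome; grouping by tube keeps that information available for later comparison.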

The Lessons

The follow-up discussion starts with the instructor asking the students which conditions varied, or could have varied, from trial to trial in how the subjects executed the experiment, causing variation in the location of the X that marks the center of the penny’s landing position. Relevant responses include the following:

- Varying height of the tube above the target

- Varying angle of the tube

- Varying aim of the tube

- Steadiness of the tube

- Steadiness of the operator’s arm and hand when dropping the penny

- Varying angle of the penny relative to the landing surface as it is held and dropped

- Varying height of the penny above the tube

- Location of the penny relative to the centerline of the tube as it is released

- Varying dynamics of the penny’s release (whether it stuck to the operator’s fingers, which edge of the penny stuck to the fingers, and what rotation was caused)

- Angle of the penny’s impact on the landing surface

- Using different pennies on different trials

- Not following the defined operation description

- Changing from one tube to another (varying length and diameter of the tubes)

- Varying and changing location of the document camera and interference with the tube

- Variation in where the mark was made relative to the actual location of the penny’s center

- Variation in the direction of ventilation breezes

- Somebody suddenly opening a door and causing a draft

Brainstorming this list allows common causes of variation to be distinguished from special causes. As defined in statistical process control, common causes are the frequent but small variations in how a process is performed that are not tightly controlled. These causes should have a small effect on the output variation of a process relative to the effect of special causes. Likely examples of common causes from the list are ventilation air currents in the room and people entering or leaving the room causing sudden drafts. Special causes, conversely, are infrequent, unexpected variations in the process that cause a more substantial degradation in quality. Although special causes of variation can be difficult to identify and resolve, in general they can be found and mitigated more easily than common causes. One example of a special cause of variation is changing which tube is used: the effect of using different tubes on the penny’s landing position can be significant, and if the directions were sufficiently specific, one would not expect a variety of tubes to be used. This raises the more general point that not following the operation description should be considered a special cause of variation. The most important lessons of this exercise center on this point and on the role that process descriptions play:

- Deviating from the process instructions is a special cause of variation and quality degradation. Although participants are well intentioned in making these deviations (in fact, improving quality is their motive), the deviations increase the distance from the crosshairs, and quality suffers.

- Deviation from the process description is not solely the fault of the participants. The process definition offered in the PowerPoint slides is insufficient. Much more detail is required if the subjects are expected to carry out the process in the same way every time. The definition leaves a lot of room for interpretation and thus in and of itself is a cause of variation.

- The process itself is poor: many of the causes of significant quality degradation are predictable, because the tooling and penny-dropping instructions give the operators no good way to control the aim of the tube, hold the tube at the correct height and angle, and so forth. A better process would make it easier for the participants to execute the process as defined.
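In statistical process control, a common tool for separating the two kinds of variation is the individuals (X) chart. Control limits are computed from a baseline run of the process as the mean plus or minus 2.66 times the average moving range (2.66 is 3 divided by d2 = 1.128, the bias constant for ranges of two consecutive points). Later points outside those limits are flagged as likely special causes, while points inside them are treated as common-cause noise. The sketch below assumes the monitored value is each drop’s distance from the crosshairs; the function names and the choice of chart are illustrative and are not part of the exercise as described.

```python
from statistics import mean


def individuals_limits(baseline):
    """Center line and control limits for an individuals (X) chart.

    Limits are mean +/- 2.66 * average moving range, where 2.66 = 3 / 1.128
    and 1.128 is the d2 constant for moving ranges of two points.
    """
    if len(baseline) < 2:
        raise ValueError("need at least two baseline observations")
    center = mean(baseline)
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    mr_bar = mean(moving_ranges)
    return center, center - 2.66 * mr_bar, center + 2.66 * mr_bar


def flag_special_causes(values, limits):
    """Indices of values outside the control limits (candidate special causes)."""
    _, lower, upper = limits
    return [i for i, v in enumerate(values) if v < lower or v > upper]


if __name__ == "__main__":
    # Hypothetical distances (inches) from a run that followed the written process.
    baseline = [1.0, 1.2, 0.9, 1.1, 1.0, 1.1, 0.9, 1.0]
    limits = individuals_limits(baseline)
    # Hypothetical later drops; suppose the third was made after switching tubes.
    print(flag_special_causes([1.0, 1.3, 4.0, 0.8], limits))  # prints [2]
```

Computing the limits from a baseline run, rather than from all of the data, keeps a large special-cause point from widening the very limits that are meant to detect it.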

The main point of this exercise, in terms of the topic of this chapter, is that if the way a process is performed changes, then the results change. Thus it can be argued that process descriptions must be specific to control variation, and they must be followed consistently for consistent quality and processing times. The last point in the list leads to the observation that management, not the worker, is responsible for ensuring that the process and the process description are sufficient. Even in organizations where the workers contribute to defining process descriptions, management must permit them to participate in that effort. W. Edwards Deming and Joseph Juran maintained that the vast majority of quality problems (80%–85%) are not the fault of the operators but rather of management, because management is responsible for defining the process or supporting the operators in defining it, as well as for providing appropriate equipment and otherwise ensuring a satisfactory system of product manufacturing or service delivery.2

Other important lessons that can emerge from this exercise besides this key point are the following:

- Intuition about process improvement can be incorrect. When operators switch tools in an attempt to improve quality, the choice is based on intuition. Intuition, in other words a hypothesis, is often wrong because it may focus on the single factor that we believe most affects quality. For example, participants use the plastic wrap tube because its small diameter promises better aim. However, intuition may overlook other process factors that are more consequential than the one we focused on. In this case, the plastic wrap tube is longer, so the penny falls farther and lands at a higher velocity, and velocity seems to be a more significant factor in this process than aim.

- Frequent changes in process execution exacerbate process variation. Frequent changes in tube selection cause variation to increase and quality to degrade. Furthermore, the recorded marks do not show which tube produced which outcome, which would hinder problem-solving efforts.

- Process improvements should be based on sufficient data. The two previous points suggest the need to collect data to validate hypothesized process improvements in a controlled fashion. Because hypothesized improvements do not always succeed, hypotheses need to be verified with data. Ideally, enough data are collected to determine whether the difference in process results is statistically significant. Changing tubes after each drop not only fails to provide sufficient data upon which to base a decision about how to execute the process but also often causes quality to degrade by increasing variation. This suggests the need for a controlled environment in which deviations from the defined process are sanctioned as experiments whose data determine whether the deviations are indeed improvements. It also underscores why process improvement methodologies such as Lean and Six Sigma must be data driven.

- Simpler processes often produce better quality. Using a tube, at a certain height, at a certain angle, and so forth is a very complex setup. One can envision much simpler and much more accurate processes, such as simply placing the penny on the crosshairs by hand. Thus the point can be made that complex processes are not inherently better. In fact, the simpler a process is (fewer steps, less equipment, less complex fixtures and procedures), the greater the accuracy and quality tend to be, because fewer factors that can vary and cause quality to decline affect the process.

- Specifications. No specifications were given to the participants to define acceptable quality, so there is no standard against which to judge whether a drop is good enough. This can be taken as an opportunity to define tolerances on product dimensions and to discuss the Taguchi loss function.

- Measurement system analysis. The penny must be moved before an X can be marked at its center, so we would expect some discrepancy between where the penny landed and where the mark was made. This motivates a discussion of whether a measurement system can be trusted to measure the quality of a product or service and how we might ensure that measurements are sufficiently accurate.
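The lessons on sufficient data and on specifications can be combined in one sketch. It scores each drop with a quadratic, Taguchi-style loss L = k·d², where d is the distance from the crosshairs and k is a hypothetical cost constant, so that any deviation from target costs something and large deviations cost disproportionately more. It then uses a two-sample permutation test (one of several suitable tests, chosen here only because it needs nothing beyond the standard library) to estimate how often randomly relabeling the drops between two tubes produces a difference in average loss at least as large as the one observed. The names, parameters, and data are illustrative assumptions.

```python
import random
from statistics import mean


def taguchi_loss(distance, k=1.0):
    """Quadratic (Taguchi-style) loss: zero on target, k * distance**2 otherwise."""
    return k * distance ** 2


def permutation_p_value(sample_a, sample_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    Returns the estimated probability that randomly reassigning the pooled
    observations to the two groups gives a mean difference at least as large
    as the observed one.
    """
    if not sample_a or not sample_b:
        raise ValueError("both samples must be non-empty")
    rng = random.Random(seed)  # fixed seed makes the result reproducible
    observed = abs(mean(sample_a) - mean(sample_b))
    pooled = list(sample_a) + list(sample_b)
    n_a = len(sample_a)
    as_extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        if abs(mean(pooled[:n_a]) - mean(pooled[n_a:])) >= observed:
            as_extreme += 1
    # Counting the observed arrangement itself keeps the estimate above zero.
    return (as_extreme + 1) / (n_resamples + 1)


if __name__ == "__main__":
    # Hypothetical distances (inches) from ten drops with each of two tubes.
    tube_a = [0.4, 0.9, 0.6, 1.1, 0.3, 0.8, 0.7, 0.5, 1.0, 0.6]
    tube_b = [1.5, 0.9, 2.2, 1.8, 1.1, 2.6, 1.4, 0.8, 1.9, 2.0]
    loss_a = [taguchi_loss(d) for d in tube_a]
    loss_b = [taguchi_loss(d) for d in tube_b]
    print(f"mean loss A={mean(loss_a):.2f}, B={mean(loss_b):.2f}")
    print(f"permutation p-value: {permutation_p_value(loss_a, loss_b):.4f}")
```

A small p-value suggests the difference between tubes is unlikely to be chance alone; with only one or two drops per tube, as when participants switch tubes after every drop, no test of this kind can say much.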

Standardization and Its Difficulties

Arguments Against Standardization and Their Rebuttals

The most frequent and instinctive responses as to why managers do not think that Lean will work for their business are these:

- “The customers we serve or the products we manufacture have so much variety that we cannot apply Lean, which depends on a large degree of standardization.”

- “Our industry is more art than science: We need to rely on the experience and expertise of our employees to determine how to resolve issues that arise. You cannot apply Lean’s formulaic management tools to these situations because the resolution is always different depending on the circumstances.”

- “Our business offering relies on creativity, and standardizing the way that we do things would make our product or service less innovative.”

The author, prior to writing this text, had cataloged various arguments against Lean, which he thought to be fundamentally different points. However, thinking through this issue more carefully and distilling the long list of arguments revealed that, at heart, an overwhelming majority of people’s objections to Lean take issue with standardization. Moreover, the bases of their arguments are not significantly different: Standardization either (a) cannot be applied to complex processes, (b) impedes the operators’ need to apply their intelligence, or (c) stifles creativity that is needed to address substantially varying clients or instances. The author has found that although circumstances can be complex and varied and intelligence and creativity are sometimes necessary to cope with these circumstances, there are always aspects of processes that can be standardized such that customers and clients are better off.

Health care is one venue where one might hear all of the previous arguments. Dr. Atul Gawande, in his book titled The Checklist Manifesto,3 describes the vast variety of diseases that a doctor might face on any one day, let alone in any one year. Gawande also describes the complexity in health care in terms of the volume of knowledge that doctors must possess. So this is a likely venue where doctors and other health care professionals might argue for the importance of reliance on experts and their knowledge. Still, Dr. Gawande shows that checklists, which are a standardization tool that might be used in a Lean implementation, effectively reduce accidental deaths in health care and improve patient outcomes.

Another example of using a tool akin to a checklist is provided by a company in the business of delivering customized transportation services, which acquires business by responding to requests for quotation (RFQs) as described in chapter 2. Its managers argued that each RFQ had its own peculiarities and no standardized process could address each RFQ’s idiosyncrasies. At the conclusion of a Lean project, the company found, to the contrary, that approximately 80% to 90% of the data fields required to reply to an RFQ were common among all RFQs. By creating a form (much like Dr. Gawande’s checklist), the company was able to reduce the number of data omissions, errors, and rework steps significantly, resulting in reduced lead time for completing the response. Reduced lead time in this case means that more responses to RFQs are filed on time and, presumably, that a higher percentage of bids succeed.

In an automotive part-stamping operation, the author observed a plant that routinely took 6 hours or longer to change stamping dies, whereas Toyota plants routinely changed dies in minutes. Each time a die was inserted into a press, a lengthy calibration process was required to remove creases or other defects in the metal parts before production could be started. One part of the calibration process to remove creases was to insert shims, which raised the height of the die in certain areas. The expertise of the skilled tradespeople who shimmed the dies was revered: these were experienced and knowledgeable people who could do what few others could. A different number of shims needed to be placed in different locations each time. They might describe their job as being more of an art than a science, one implication being that art cannot be codified. This process, however, could have benefited from some standardization. One fundamental tenet of the single-minute exchange of dies (SMED) technique used at Toyota is to ensure the repeatable placement of a die in a press. Less erratic placement of dies implies less time calibrating to compensate for varying placement. Moreover, the techniques for consistent die placement also help dies to be installed and to become productive more quickly.

The need for the die setters’ expertise and art bears an eerie resemblance to artisans in the American Industrial Revolution before mass production. In that era, “puddlers” mixed small batches of steel according to their own recipes before large mass-production furnaces were invented, and gunsmiths hand fit each part on a musket because the dimensions of manufactured parts varied so much. A key enabler of mass production was, indeed, reducing dimensional variation in parts. It is ironic that a century later erratic die placement is overlooked and die setters are revered for their ability to cope with dimensional uncertainty.

As much difference as a company might think it has among customers and jobs, and as much expertise as one might claim is needed to adapt to continually varying circumstances, many parts of processes can often be codified. One example is the explicit definition of how a die should be placed in a press. This leaves more time to focus on the remaining portion of the job that does require expertise, creativity, or innovation. The author has come to believe that the statement “Our business is more art than science, so we cannot standardize” should most often be interpreted as the following:

- “We have not taken the time to understand our business processes, and so we do not know how the execution of the process is related to the outcome of the process.”

- “We do not understand our processes sufficiently to know which parts of the process can be standardized such that the process could actually be improved.”

If the inputs to a process and the manner in which it is executed are not formally studied in terms of how they affect the quantity and quality of the process output, then executing the process relies on intuitive relationships between process inputs and outputs that have been informed by experience. This is a reasonable definition of art. All too often, however, an intuitive approach is neither efficient nor effective because the intuitive connections between efforts and results may in fact be invalid. In that case, applying expert knowledge is closer to trial and error than we might like to believe and is therefore not an efficient way of getting a job done. Such is the job of a die setter fiddling with shims and a puddler spitting on his pool of molten steel. Taking time to understand the process, and taking time to collect and analyze data, would more definitively establish the connections between what people do in processes and the results. When these connections are clear, then standardizing on the actions that produce the desired results is possible and desirable.

It is interesting and ironic that although the arguments against Lean mentioned previously are usually framed as reasons why a particular industry differs from the ordinary processes to which Lean applies, the same arguments are heard in every industry. There seems to be a tendency to think that one’s own industry is special and immune from standard techniques that are generally applicable elsewhere. Is this a sign that processes are generally regarded as art rather than science? The motivations to resist standardization might be many. Some people rebel against notions associated with Henry Ford and mass production. Perhaps being regarded as an expert, and seeing one’s work as an art rather than a routine, promotes self-esteem. Maybe doing an expert’s work increases the intellectual challenge and thus the satisfaction of work, even if it is inefficient. Possibly, being more familiar with our own industries, being less familiar with other industries, and perhaps being a bit egocentric lead us to believe that our industry is truly different. Or, for those unfamiliar with the value of Lean, having current mind-sets about experts challenged may feel threatening. Nonetheless, even where experts, variety, and complexity exist, managers who seriously consider Lean are likely to find some value in it for their operations.

Arguments for Standardization

In a previous chapter, we outlined the steps of Lean, which begin with mapping the process to identify waste. The tools of Lean are then applied to reduce unnecessary lead time. Then, the future state value stream map is created that shows the process as it will be executed once the planned improvements are made. One might ask two questions:

- Will the process improvements that we have identified today be valid if the process is being executed another way tomorrow?

- Of what value is the future state value stream map if we cannot execute it as planned?

Regarding the first question, the future state value stream map reflects solutions to the waste that was observed in the process initially. If the process is executed differently tomorrow, the waste we observe on that day may be different, and the solutions as originally conceived may not be effective for the new current state of the process. This hypothetical question suggests the importance of executing a process in a consistent way: you cannot fix problems if they are always changing. Also, any long-term metrics for such a situation measure not one consistent mode of executing the process but an amalgam of processes, which makes numerical before-and-after comparisons of a change largely meaningless.

The second question offers an even more powerful argument for the importance of standardizing how processes are executed. Clearly, if we cannot practice the future state value stream map as it is planned, then the solutions to waste cannot be implemented. The process must be reorganized around this new definition and practiced consistently for improvement to be realized. We will talk later in this book about process definitions more detailed than value stream maps, and the lesson applies to that level of process description as well: If the process definition cannot be executed reliably, then the waste intended to be eliminated by the definition will likely persist.

Thus consistent process execution is a requirement of process improvement. Any effort put into improving a process without standardization is wasted, because the improvements will not be implemented consistently. Furthermore, without standardization, the varying execution of a process produces metrics that are likely to wander from higher to lower levels and back again with no apparent improvement trend: even if a good version of the process happened to be practiced long enough to be recognized in the process metrics, it would soon be replaced by another set of process steps.

Spear and Bowen analyzed the Toyota Production System and identified the critical aspects of how Toyota executes and improves processes.4 Among the practices that enabled Toyota to overtake the Big Three American automakers in terms of quality and productivity in the 1980s are these:

- Highly specified work sequence and content

- Standardized connections between process steps

- Simple, specified, unique path

- The scientific method as an underlying principle of process improvement

Standardization is a common element of all of these practices. To be effective, highly specified work sequences need to be executed in the specified way every time. Standardized connections between process steps ensure that a specific person is responsible for receiving items for further processing and that the items are always put in a specified location; otherwise, work dropped off at varying locations at the next step can wait a long time before it is noticed, and there may be confusion over who is responsible for handling it. Simple, specified, and unique paths for each type of item manufactured ensure that every unit is produced in the same (standardized) manner. This minimizes variation in processing and makes the root causes of quality problems easier to identify.

Most importantly, Toyota follows what Spear and Bowen call the scientific method. The scientific method can be described with an illustration that anyone who has taken high school or college physics will readily understand. Physics courses typically begin by introducing Newtonian physics. That set of principles and equations, which describes how physical bodies act with respect to one another and to gravity, was used for more than two centuries before Albert Einstein developed new theories for bodies that move very fast or are very massive. Engineering and scientific endeavors in the intervening years did just fine using Newton’s theories in all but the most extreme situations. Einstein’s theories of relativity refined Newton’s ideas for those extreme environments. The important point is that Einstein’s theories were not accepted in place of Newton’s until experimental physicists carried out experiments that validated them. Thus in science, theory precedes experimentation, but both are required to establish a new belief.

The scientific method, then, requires that we operate under the current, proven hypotheses until better theories are developed and validated experimentally. This is a data-driven approach: we do not change our beliefs when new theories come about but only when experimental data validate those ideas. Similarly, the current process definition is our validated hypothesis for the best known way to operate the system, and as such we standardize on it. We are open, however, to better ways of executing the process, and we will adopt them, but only after we have tried them out and validated with data that they are indeed better. Ideas about improving processes are merely hypotheses until proven. Unlike in the penny-dropping exercise, hypotheses should not be implemented unless the results will be studied and verified with data.

It is appropriate at this point to make a few more comments about why tasks should be defined and executed in fine detail. First, one of the main lessons of the penny-dropping exercise described earlier in this chapter is that unless a process is defined in detail, the latitude left in executing the task permits deviations that could have catastrophic effects. In that vein, the paragraphs that follow offer three more arguments for process specificity drawn from real-world processes.

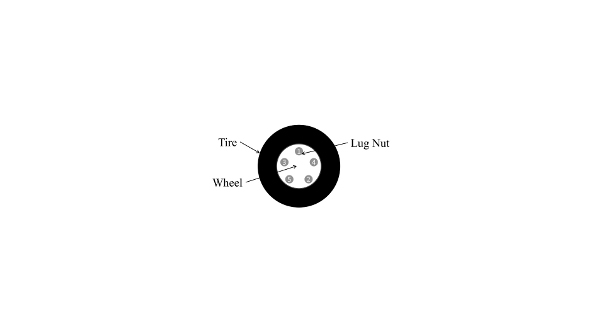

To illustrate, consider an experience that many readers have likely had: changing a wheel and tire on a car and, more specifically, the order in which the lug nuts should be tightened. When mounting a wheel, one should first install all the lug nuts finger tight before fully tightening any of them. The lug nuts should then be tightened in two passes, using the sequence indicated in Figure 10 (for a five-lug wheel). (The starting lug, marked 1 in Figure 10, can be any lug.) If any lug nut is fully tightened before all the others are on finger tight, or if the pattern shown in Figure 10 is not followed, the wheel may not seat flat against the hub. This can result in vibration and even loosening of the wheel. Thus, for safety, it is extremely important that both steps be followed and that the correct pattern be used when tightening the lug nuts. Spear and Bowen discuss a similar example in which Toyota has specified the sequence for tightening seat bolts. Does deviating from this specified sequence cause the seat to be positioned improperly or prevent the bolts from attaining proper torque, as is the case when we mount a wheel onto a car? We do not know, and Toyota may not know either whether such a cause-and-effect link exists. But why take a chance and put quality at risk on such a critical operation? Toyota should know the quality it has attained with a given bolt-tightening sequence, and maintaining that same sequence is the surest way to preserve that performance.
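For readers who think in procedures, the crisscross (star) pattern for an odd number of lugs can be expressed as stepping around the bolt circle two positions at a time, which for five lugs gives the order 1, 3, 5, 2, 4. The sketch below assumes that this is the pattern Figure 10 depicts and that the lugs are numbered consecutively around the wheel; the function names `star_sequence` and `tightening_plan` are hypothetical, and torque values are deliberately left out because the text does not give them.

```python
def star_sequence(num_lugs: int, start: int = 1) -> list[int]:
    """Star-pattern order for lugs numbered 1..num_lugs around the wheel.

    Assumption: an odd lug count, where skipping one lug at each step
    visits every lug exactly once before returning to the start.
    """
    if num_lugs % 2 == 0:
        raise ValueError("this sketch only handles odd lug counts")
    order = []
    position = start - 1  # zero-based index around the bolt circle
    for _ in range(num_lugs):
        order.append(position + 1)
        position = (position + 2) % num_lugs  # skip one lug each step
    return order


def tightening_plan(num_lugs: int = 5, start: int = 1, passes: int = 2) -> list[str]:
    """Hypothetical two-step procedure: every nut finger tight first,
    then full tightening in repeated passes using the star order."""
    steps = [f"finger-tighten lug {n}" for n in range(1, num_lugs + 1)]
    for p in range(1, passes + 1):
        steps += [f"pass {p}: tighten lug {n}" for n in star_sequence(num_lugs, start)]
    return steps


if __name__ == "__main__":
    print(star_sequence(5))  # [1, 3, 5, 2, 4]
    for step in tightening_plan():
        print(step)
```

The skip-one rule visits every lug only when the lug count is odd; on a four-lug wheel it would alternate between lugs 1 and 3, so the sketch refuses even counts rather than guess at a different crisscross order.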

An example of a standardized, consistent process from food service is the process for preparing McDonald’s French fries. It is one of the hallmarks of McDonald’s, and possibly a strategic advantage, that customers can expect French fries to have the same characteristics regardless of which McDonald’s location they visit.5 Leaving details of the process to individual discretion invites not only differences in taste from location to location but also process variation that could cause customers to experience poor quality. Dissatisfying customers on such a critical facet of its business would threaten McDonald’s strategic positioning, so why take a chance on variation?

Figure 10. Lug nut tightening sequence.

The third example, from an administrative process, was observed by the author. A company was responding to requests for quotation (RFQs) in order to secure new business in the form of contracts with other companies, and how to gather the data for each response and write it up was left to individual discretion. Although some of the data required differed from one RFQ to the next, a great many data items were required in every RFQ response. Leaving it to individuals to catalog the requirements of each RFQ afresh meant that some requested data items were missed. Creating a standard form that required the most frequently requested data items to be included in every RFQ response eliminated the possibility that those items would be forgotten, because it was obvious when a field on the form was left blank.
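The error-proofing effect of the standard form amounts to checking every response against a fixed list of required fields. The sketch below assumes a response is stored as a simple key-value record; the field names in `REQUIRED_FIELDS` and the function `missing_fields` are hypothetical placeholders, since the text does not say which data items the form contained.

```python
# Hypothetical field names: the actual RFQ form's fields are not given in the text.
REQUIRED_FIELDS = ("part_number", "annual_volume", "unit_price", "lead_time_weeks")


def missing_fields(response: dict) -> list[str]:
    """Return the required fields that are absent, None, or blank text."""
    missing = []
    for field in REQUIRED_FIELDS:
        value = response.get(field)
        if value is None or (isinstance(value, str) and not value.strip()):
            missing.append(field)
    return missing


if __name__ == "__main__":
    draft = {"part_number": "A-100", "unit_price": "4.25", "lead_time_weeks": ""}
    print(missing_fields(draft))  # ['annual_volume', 'lead_time_weeks']
```

Because the required list is fixed in advance, an omission shows up as a named blank rather than depending on someone remembering to ask for that item.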

Conclusions

This chapter has presented arguments for why performing a process consistently every time is essential to improving it. Standardization is required at two levels. First, at a macro level, each of the specified steps of a process must be executed in the same sequence every time; for example, the steps in a value stream map must be followed in every instance. Second, at a detailed level, the minute steps of a process must be performed as specified and in the sequence specified; for example, failing to tighten the lug nuts in the proper order could damage the vehicle, cause an unstable ride, and lead to injury. In Lean, the mechanism for specifying the macro process is the value stream map. The tool most often used in Lean and the Toyota Production System to specify in detail how tasks are to be executed is Standard Work, which connotes a standardized way to do an operation. We will describe how to use Standard Work in chapter 7.