CHAPTER 20

Information Assurance Monitoring Tools and Methods

Independent of the investments made by an organization to implement security controls, its information is still vulnerable because there is no guarantee that all controls function perfectly at all times. To complement this effort, an organization should employ a continuous vigilant surveillance of its environment. Additionally, a model that addresses critical systems using overlapping countermeasures should be implemented. This provides yet one more form of defense in depth.

Information assurance monitoring surveys a system 24 hours a day 7 days a week to ensure that the information assurance posture (that is, confidentiality, integrity, and availability from the MSR model) is not compromised and that breaches to the system are reported in a timely manner. Effective monitoring of an environment requires being aware of its state by observing all changes occurring over time.

This chapter focuses on the methods and tools employed to monitor an organization’s information assurance risk posture. Monitoring covers surveillance not only over the network and on machines, but also over personnel (human behavior).

Intrusion Detection Systems

An intrusion detection system is an information assurance management system (IAMS) that detects inappropriate, incorrect, or anomalous activities (remember, you cannot be sure a behavior is anomalous unless you have a reliable baseline). An intrusion detection system (IDS) detects malicious activities not ordinarily detected by conventional firewalls. Detectable malicious activities include network-based attacks such as SYN flooding, as well as host-based attacks such as privilege escalation.

There are two broad classes of IDS: host IDS and network IDS. A host IDS operates on a host to detect malicious activity upon that host, while a network IDS operates on network data flows.

Host Intrusion Detection System

A host intrusion detection system (HIDS) is one of the first lines of defense for electronic information systems against attackers. An HIDS detects unauthorized access attempts on computers and generates warning alerts (via pager, e-mail, or SMS) or takes corrective actions. An HIDS examines events in the system logs in real time for evidence of malicious code activity. Other features of an HIDS include checking changes and accesses to critical system files for attempts to tamper with information and for evidence that users’ privileges have been modified.
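The log-inspection part of an HIDS can be illustrated with a minimal sketch. It assumes a log format loosely modeled on sshd authentication messages; the regular expression, the threshold of three failures, and the sample lines are illustrative assumptions rather than the behavior of any particular product.

```python
import re
from collections import Counter

# Assumed log format, loosely modeled on sshd messages in an authentication log.
FAILED_LOGIN = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+)")

def scan_auth_log(lines, threshold=3):
    """Return source addresses with at least `threshold` failed logins."""
    failures = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            failures[match.group(2)] += 1
    return {source: count for source, count in failures.items() if count >= threshold}

# Made-up sample lines using documentation-only IP addresses.
sample = [
    "Jan 10 03:12:01 host sshd[811]: Failed password for root from 203.0.113.9 port 4022 ssh2",
    "Jan 10 03:12:04 host sshd[811]: Failed password for invalid user admin from 203.0.113.9 port 4023 ssh2",
    "Jan 10 03:12:09 host sshd[811]: Failed password for root from 203.0.113.9 port 4024 ssh2",
    "Jan 10 03:15:30 host sshd[902]: Accepted password for alice from 198.51.100.7 port 5101 ssh2",
]
alerts = scan_auth_log(sample)
for source, count in alerts.items():
    print(f"ALERT: {count} failed logins from {source}")
```

A real HIDS would read the log continuously and route the alert to a pager, e-mail, or SMS gateway instead of printing it.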

Network Intrusion Detection System

A network intrusion detection system (NIDS) operates at the OSI network layer (layer 3) and protects a wide range of targeted platforms. An NIDS detects intrusions by monitoring the traffic of multiple hosts, which it receives through a network switch with port mirroring capabilities, a connection to a hub, or a network tap.

In addition, an NIDS may detect the network vulnerabilities and provide recommendations to protect the network. When malicious activities are identified, the response generated from an NIDS system may be a notification to the network engineer’s monitor screen, generation of e-mail alerts, or SMS alerts to the network administrator.
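As a rough illustration of how an NIDS might flag the SYN flooding mentioned earlier, the following sketch counts bare SYN segments per destination inside a sliding time window. It assumes packets have already been captured and parsed into (timestamp, source, destination, flags) tuples in time order; the tuple layout, the one-second window, and the threshold of 100 are assumptions for illustration only.

```python
from collections import defaultdict, deque

def detect_syn_flood(packets, window=1.0, threshold=100):
    """Return (time, destination) the first time a destination receives more
    than `threshold` bare SYN segments within `window` seconds."""
    recent = defaultdict(deque)   # destination -> timestamps of recent SYNs
    flagged = set()
    alerts = []
    for timestamp, source, destination, flags in packets:
        if flags != "S":          # only count SYN segments without ACK
            continue
        queue = recent[destination]
        queue.append(timestamp)
        # Drop SYNs that have aged out of the window.
        while queue and timestamp - queue[0] > window:
            queue.popleft()
        if len(queue) > threshold and destination not in flagged:
            flagged.add(destination)
            alerts.append((timestamp, destination))
    return alerts

# Synthetic traffic: 150 SYNs to one host in under a second, plus normal traffic.
flood = [(i * 0.005, f"198.51.100.{i % 250}", "10.0.0.5", "S") for i in range(150)]
normal = [(0.1, "10.0.0.8", "10.0.0.6", "S"), (0.2, "10.0.0.6", "10.0.0.8", "SA")]
syn_alerts = detect_syn_flood(sorted(flood + normal))
print(syn_alerts)
```

Production systems combine many such rules with protocol analysis; the value of a simple threshold depends entirely on how well it is tuned to the normal traffic baseline.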

Log Management Tools

Logs are the records maintained by systems. Depending on the budget, resources, and level of interest, almost any action can be recorded. The log can contain information ranging from when an individual logged in and which resources were used to failed attempts to access resources. The more details kept, the more resources used. Log only the activities that represent potential threats, and store only those that one might reasonably address.

Log management tools provide access control to log information and enable system administrators to trace attacks or suspicious activities. Gaining valuable information from audit logs in organizations is difficult. One of the problems encountered when trying to obtain information from log files is the volume of data to be analyzed (frequently in the range of multiple terabytes).

Log management tools have filters that reflect sets of rules. They select a subset of data from the data pool. However, using filters may cause important data to be accidentally filtered out. This may interrupt the ability to detect and recognize important attack patterns and valuable information.
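The risk described above can be shown with a small sketch. It assumes events are dictionaries with a hypothetical severity field and applies a single rule that keeps only warnings and errors. Returning the dropped events alongside the kept ones makes it visible when a filter discards a pattern of interest.

```python
# Assumed severity scale; real log sources define their own levels.
SEVERITY = {"debug": 0, "info": 1, "warning": 2, "error": 3}

def split_by_severity(events, minimum="warning"):
    """Return (kept, dropped) so the discarded events remain visible for review."""
    floor = SEVERITY[minimum]
    kept = [e for e in events if SEVERITY[e["severity"]] >= floor]
    dropped = [e for e in events if SEVERITY[e["severity"]] < floor]
    return kept, dropped

# Made-up events: three low-severity failures from one source form a pattern.
events = [
    {"severity": "info", "source": "203.0.113.9", "msg": "login failed for root"},
    {"severity": "info", "source": "203.0.113.9", "msg": "login failed for admin"},
    {"severity": "error", "source": "10.0.0.5", "msg": "disk quota exceeded"},
    {"severity": "info", "source": "203.0.113.9", "msg": "login failed for oracle"},
]
kept, dropped = split_by_severity(events)
print(f"kept {len(kept)} event(s), dropped {len(dropped)}")
```

In this sample the filter keeps the single error and drops all three failed logins from the same source, which is exactly the kind of attack pattern a severity-only rule can hide.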

Security Information and Event Management (SIEM)

Security Information and Event Management (SIEM, also known as security information management [SIM]) is software designed to import information from security systems logs and correlate events among systems (NIST-SP800-94). SIEM analyzes logs collected from log management tools and IDS. Logs are collected over secure channels and then normalized. Normalization converts the log data into standard fields and values common to the SIEM. Without normalization, matching relevant information is extremely difficult. A field titled logonTime may mean the same thing as successFullLogonTime, but if the SIEM isn’t programmed to treat them the same, it may process them as different events.
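Normalization can be sketched as a mapping from source-specific field names to one canonical name. The logonTime and successFullLogonTime fields come from the example above; the canonical names and the other aliases are hypothetical.

```python
# Alias table: source-specific field name -> canonical SIEM field name.
# Only logonTime and successFullLogonTime come from the text; the rest are assumed.
FIELD_ALIASES = {
    "logonTime": "logon_time",
    "successFullLogonTime": "logon_time",
    "userName": "user",
    "account": "user",
}

def normalize(record):
    """Rename known aliases to canonical fields; leave unknown fields untouched."""
    return {FIELD_ALIASES.get(key, key): value for key, value in record.items()}

from_server_a = {"logonTime": "2024-06-01T08:00:00Z", "userName": "alice"}
from_server_b = {"successFullLogonTime": "2024-06-01T08:00:00Z", "account": "alice"}

print(normalize(from_server_a) == normalize(from_server_b))
```

Once both records share the same field names, the SIEM can treat them as the same kind of event. A fuller implementation would also normalize values, such as time zones and user name formats.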

Correlation is a process of identifying relationships among two or more security events. Events are correlated in various ways, such as rule based, statistical, and visualization. If automatic correlation is used, it generates an alert for further investigation. Correlation reduces the number of events to be investigated by eliminating irrelevant events (false positives).
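A rule-based correlation can be sketched as follows. The rule is a hypothetical one: raise an alert when a successful login follows several recent failed logins from the same source. The event fields, the 60-second window, and the failure count are assumptions.

```python
def correlate_bruteforce(events, max_failures=3, window=60):
    """Alert when a login success follows `max_failures` or more failures
    from the same source within `window` seconds."""
    failures = {}   # source -> timestamps of failed logins
    alerts = []
    for event in sorted(events, key=lambda e: e["time"]):
        source = event["source"]
        if event["type"] == "login_failure":
            failures.setdefault(source, []).append(event["time"])
        elif event["type"] == "login_success":
            recent = [t for t in failures.get(source, []) if event["time"] - t <= window]
            if len(recent) >= max_failures:
                alerts.append({"source": source, "time": event["time"], "failures": len(recent)})
            failures.pop(source, None)   # reset after a success
    return alerts

# Made-up normalized events; times are seconds from an arbitrary start.
events = [
    {"time": 0, "source": "203.0.113.9", "type": "login_failure"},
    {"time": 3, "source": "198.51.100.7", "type": "login_failure"},
    {"time": 5, "source": "203.0.113.9", "type": "login_failure"},
    {"time": 10, "source": "203.0.113.9", "type": "login_failure"},
    {"time": 12, "source": "203.0.113.9", "type": "login_success"},
    {"time": 20, "source": "198.51.100.7", "type": "login_success"},
]
correlated = correlate_bruteforce(events)
print(correlated)
```

Six raw events reduce to one alert: the single mistyped password from the second source is treated as irrelevant, while the sequence from the first source is escalated for investigation.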

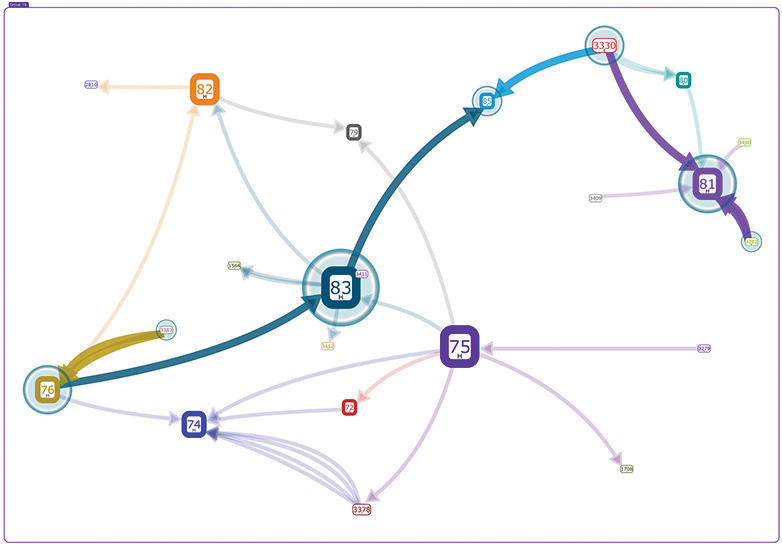

SIEM includes a report generator to produce summaries of activities over a period of time or ad hoc reports based on certain events. Organizations should consider training information assurance personnel in statistics, data science, and data visualization to get the most out of SIEM solutions. An example of a network model is shown in Figure 20-1.

Figure 20-1 Network visualization

Honeypot/Honeynet

A honeypot is a computer system set up with intentional/known vulnerabilities. The main purpose is to study hackers’ behavior, motivation, strategy, and tools used. The honeypot has no production value; any attempt or connection to a honeypot is potentially suspicious or malicious.

There are two types of honeypots: low interaction and high interaction. A low-interaction honeypot simulates only limited services of an operating system or application. It is useful for gathering information at a higher level, such as learning about the behavior of worms, botnets, or spammers. A high-interaction honeypot simulates the behavior of an entire operating system. It can be used to study hacker behavior and to identify new methods of attack.

When used, a honeypot gives an organization another layer of detection, but extra precautions are still needed so that the honeypot will not be used as a platform to launch attacks on other organizations. The Honeynet Project, a nonprofit research organization dedicated to computer security and information sharing, actively promotes the deployment of honeypots.
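A low-interaction honeypot can be sketched in a few lines: it presents a fake service banner, records whatever the client sends first, and logs the contact. The banner text, port number, and log structure below are hypothetical, and a sketch like this belongs only on an isolated lab network.

```python
import socket
from datetime import datetime, timezone

FAKE_BANNER = b"220 mail.example.test ESMTP ready\r\n"  # hypothetical banner

def handle_connection(conn, peer, log):
    """Send a fake banner, capture the client's first bytes, and log the contact."""
    conn.settimeout(2.0)
    conn.sendall(FAKE_BANNER)
    try:
        data = conn.recv(1024)
    except socket.timeout:
        data = b""
    log.append({"time": datetime.now(timezone.utc).isoformat(), "peer": peer, "data": data})
    conn.close()

def serve(host="127.0.0.1", port=2525, log=None):
    """Accept connections forever; every contact is suspicious by definition."""
    log = [] if log is None else log
    with socket.create_server((host, port)) as server:
        while True:
            conn, addr = server.accept()
            handle_connection(conn, addr, log)

# Demonstration without opening a network port: a connected socket pair
# stands in for an attacker's connection.
client, server_side = socket.socketpair()
client.sendall(b"EHLO probe\r\n")
contacts = []
handle_connection(server_side, "demo-peer", contacts)
banner = client.recv(1024)
client.close()
print(contacts[0]["data"], banner)
```

Because the honeypot has no production value, no filtering is needed: each entry in the log is worth reviewing.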

Malware Detection

Malicious software, often referred to as malware, is software designed to break into or damage a computer system without the owner’s consent. Hostile or intrusive code is malware. Malware includes all software programs (including macros and scripts) intentionally coded to cause an unwanted event or problem on the target system. Table 20-1 gives examples of malware.

Table 20-1 Types of Malware

Removing malware from a computer can be difficult, even for experts. As a precautionary measure, it is essential to detect malware and simultaneously prevent the system from becoming infected. There are three commonly used methods for detecting malware, as discussed next.

Signature Detection

One of the most widely used detection methods is signature detection. It detects the patterns or signatures in a particular program that may be malware. When malware is suspected, it is verified against the database of known bad code fragments. To be effective, this database must be updated constantly with current threats.

There are advantages and disadvantages of the signature detection method. It is most effective for known malware represented in the database. An additional advantage is that users and administrators can take a simple precautionary measure: keeping signature files up to date and periodically scanning for viruses. However, the signature files may be quite large, which makes scanning slow. Even so, the signature files must be updated frequently to remain effective. Because there are no signatures for new malware, these systems are vulnerable to zero-day attacks.
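Signature detection can be sketched as a lookup against known byte patterns and known file hashes. The signature names and patterns below are made up for illustration; real products use far larger databases and more elaborate matching.

```python
import hashlib

# Made-up signature database: name -> byte pattern found inside known malware.
SIGNATURES = {"Demo.TestPattern": b"MALICIOUS-DEMO-PATTERN"}

# Made-up hash database: SHA-256 of a whole known-bad file -> name.
KNOWN_BAD_SHA256 = {hashlib.sha256(b"known bad sample").hexdigest(): "Demo.KnownSample"}

def scan_bytes(data):
    """Return the names of all signatures that match the given content."""
    hits = []
    digest = hashlib.sha256(data).hexdigest()
    if digest in KNOWN_BAD_SHA256:
        hits.append(KNOWN_BAD_SHA256[digest])
    for name, pattern in SIGNATURES.items():
        if pattern in data:
            hits.append(name)
    return hits

print(scan_bytes(b"header MALICIOUS-DEMO-PATTERN trailer"))
print(scan_bytes(b"known bad sample"))
print(scan_bytes(b"new unseen variant"))
```

The last call returns an empty list, which is the zero-day weakness in miniature: content that is not yet in the database passes the scan.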

Change Detection

Finding files that have been changed is called change detection. A file that changes unexpectedly may be due to a virus infection. One of the advantages of change detection is that if a file has been infected, a change can be detected. An unknown malware, one not previously identified (zero-day), can be detected through change detection.

There is a possibility of false positives resulting from frequent changes of the files in the system. This is not convenient for users and administrators. If a virus is inserted into a file that changes often, it may slip through a change detection procedure, masked by the normal high volume of activity. Therefore, careful analysis of the log files is recommended, and an organization may have to fall back on a signature scan method. In this case, the reliability of the change detection system is uncertain. This approach also requires constant maintenance of baseline data so changes can be detected.
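A common way to implement change detection is to record a cryptographic hash of each monitored file as the baseline and compare against it later. The following minimal sketch assumes SHA-256 and uses temporary files as stand-ins for monitored system files.

```python
import hashlib
import os
import tempfile
from pathlib import Path

def file_digest(path):
    """Return the SHA-256 digest of a file's contents."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()

def build_baseline(paths):
    """Record the current digest of every monitored file."""
    return {str(path): file_digest(path) for path in paths}

def detect_changes(baseline):
    """Compare current file state with the baseline and report differences."""
    changed = []
    for path, digest in baseline.items():
        if not os.path.exists(path):
            changed.append((path, "missing"))
        elif file_digest(path) != digest:
            changed.append((path, "modified"))
    return changed

# Demonstration with temporary files standing in for monitored system files.
with tempfile.TemporaryDirectory() as workdir:
    stable = Path(workdir) / "stable.cfg"
    target = Path(workdir) / "target.bin"
    stable.write_text("setting=1\n")
    target.write_bytes(b"original contents")
    baseline = build_baseline([stable, target])
    target.write_bytes(b"original contents + injected code")  # simulated infection
    changes = detect_changes(baseline)
print(changes)
```

The baseline itself must be protected and refreshed after every legitimate change; otherwise each authorized update shows up as a false positive.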

State Detection

State detection aims to detect unusual/anomalous behavior. It relies on an expert system that determines if a state change is anomalous. These state changes include malicious behavior; by extension, anomaly detection is the ability to identify potentially malicious activity. Clearly, the challenge in using this technique is to determine what is normal and what is unusual and to be able to distinguish between the two. Having current system baselines is essential.
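The dependence on a baseline can be illustrated with a simple statistical check. Note that this is a stand-in for the expert system described above, not an implementation of one: it flags an observation that lies more than a chosen number of standard deviations from the baseline mean. The metric (logins per hour), the sample values, and the threshold of 3 are assumptions.

```python
from statistics import mean, stdev

def is_anomalous(baseline, observed, z_threshold=3.0):
    """Flag an observation far from the baseline mean, measured in standard deviations."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return observed != mu
    return abs(observed - mu) / sigma > z_threshold

# Made-up baseline: logins per hour observed during normal operation.
logins_per_hour = [12, 15, 11, 14, 13, 12, 16, 14]

print(is_anomalous(logins_per_hour, 14))   # within the normal range
print(is_anomalous(logins_per_hour, 60))   # far outside the baseline
```

If the baseline is stale, the same check either misses real anomalies or floods analysts with false alarms, which is why current baselines matter.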

Vulnerability Scanners

A vulnerability scanner is software that detects vulnerabilities by analyzing networks or host systems. The results from scanning networks or host systems are presented as reports. Based on these reports, the personnel in charge may respond to mitigate or resolve the vulnerabilities discovered. Several types of scanners address different types of vulnerabilities. They are host-based scanners, network-based scanners, database vulnerability scanners, and distributed network scanners.

Vulnerability Scanner Standards

Vulnerability scanners take technical results from a scan and try to present the vulnerability as human-readable and understandable information. Vendors have varying professional opinions as to the severity and reporting of weaknesses. To help address the discrepancy between scanners, the U.S. National Institute of Standards and Technology, in coordination with MITRE, has developed the Common Vulnerabilities and Exposures program. The following is from the program’s web site (https://cve.mitre.org):

Common Vulnerabilities and Exposures (CVE®) is a dictionary of common names (i.e., CVE Identifiers) for publicly known information security vulnerabilities. CVE’s common identifiers make it easier to share data across separate network security databases and tools, and provide a baseline for evaluating the coverage of an organization’s security tools. If a report from one of your security tools incorporates CVE Identifiers, you may then quickly and accurately access fix information in one or more separate CVE-compatible databases to remediate the problem. CVE is:

• One name for one vulnerability or exposure

• One standardized description for each vulnerability or exposure

• A dictionary rather than a database

• How disparate databases and tools can “speak” the same language

• The way to interoperability and better security coverage

• A basis for evaluation among tools and databases

• Free for public download and use

• Industry-endorsed via the CVE Editorial Board and CVE-Compatible Products

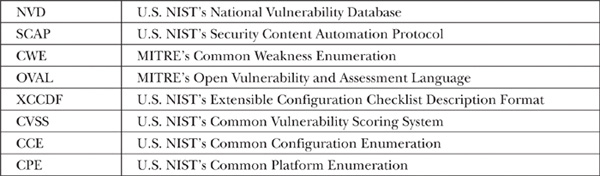

CVE works with several other programs to form a comprehensive and standardized method to convey configuration, vulnerability, and weakness information between scanners and reporting methods. Standards related to CVE include the following:

These standards provide a framework that organizations can use to standardize the reporting and severity rating of vulnerabilities, configurations, and platforms. Organizations should consider adopting only vulnerability scanning technology that supports full integration with the standards noted above.
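The practical benefit of common identifiers can be sketched by merging the findings of two scanners that describe the same weakness in different words. The scanner names and finding texts below are hypothetical; the identifier pattern (the prefix CVE, a four-digit year, and a sequence number of four or more digits) follows the published CVE ID syntax.

```python
import re

CVE_ID = re.compile(r"\bCVE-\d{4}-\d{4,}\b")

def index_by_cve(reports):
    """Map each CVE identifier to the set of tools that reported it."""
    merged = {}
    for tool, findings in reports.items():
        for text in findings:
            for cve in CVE_ID.findall(text):
                merged.setdefault(cve, set()).add(tool)
    return merged

# Hypothetical output from two scanners with different wording.
reports = {
    "scanner_a": [
        "OpenSSL heartbeat information disclosure (CVE-2014-0160)",
        "SMBv1 remote code execution, see CVE-2017-0144",
    ],
    "scanner_b": [
        "TLS heartbeat read overrun, ref CVE-2014-0160",
    ],
}
merged = index_by_cve(reports)
for cve, tools in sorted(merged.items()):
    print(cve, sorted(tools))
```

Without the shared identifier, the two heartbeat findings would have to be matched by reading their descriptions; with it, they collapse into a single entry automatically.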

Host-Based Scanner

Host-based scanners retrieve detailed information from operating systems and other hosts’ services and configurations. A host-based scanner seeks potentially high-risk activities involving users’ passwords, for example. This type of scanner is ideal for verifying the integrity of file systems for signs of unauthorized modifications.

Network-Based Scanner

Network-based scanners scrutinize the network looking for vulnerabilities. Network scanners can provide a map of what devices are alive on the network by using simple probes, such as the echo request command (ping command), performing port mapping, and identifying which network services are running on each host on the network. For example, if a machine is not a web server, it would be unusual for it to have port 80 open. Advanced network scanners also include databases containing information on known vulnerabilities.
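The port 80 example can be sketched as a comparison between the ports found open on a host and the ports expected for its role. The role names and expected-port policy are hypothetical, and the probe is a plain TCP connection attempt. Run probes only against systems you are authorized to scan.

```python
import socket

# Hypothetical policy: ports each kind of host is expected to expose.
EXPECTED_PORTS = {"web_server": {80, 443}, "workstation": set()}

def is_port_open(host, port, timeout=0.5):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

def scan_ports(host, ports):
    """Probe each port in turn and return the ones that accept connections."""
    return [port for port in ports if is_port_open(host, port)]

def unexpected_ports(role, open_ports):
    """Return open ports that the host's role does not account for."""
    return sorted(set(open_ports) - EXPECTED_PORTS.get(role, set()))

# A workstation with port 80 open is unusual; a web server is not.
print(unexpected_ports("workstation", [80]))
print(unexpected_ports("web_server", [80, 443]))
```

In use, the output of scan_ports for a host would be passed to unexpected_ports together with the role recorded for that host in the asset inventory.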

Database Vulnerability Scanner

Database vulnerability scanners perform information security analysis of database systems. Database vulnerability scanners examine authorization, authentication, and integrity. They identify potential vulnerabilities in areas such as password strength, information security configurations, cross-site scripting, and injection vulnerabilities.

Distributed Network Scanner

Distributed network scanners perform information security analysis for distributed networks in large organizations spanning geographic locations. They consist of remote scanning agents, which are updated using a plug-in update mechanism and controlled by a centralized management console.

Penetration Test

A penetration test (pen test) involves conducting reconnaissance scans against an organization’s perimeter defenses such as routers, switches, firewalls, servers, and workstations to allow the organization to determine the overall network topology. Pen tests are performed at two levels, external as well as internal to the organization. This activity is the same sort of approach the adversary might take.

The information gathered identifies attack vectors, which the tester then uses in an attempt to penetrate the targeted systems and see whether they can be compromised using known vulnerabilities and exploits. The overall plan is to map the entire network and perform an assessment to make sure any vulnerable devices are identified and patched.

External Penetration Test

An external penetration test concentrates on external threats (mainly hackers) attempting to break into an organization’s network. There are two methods for an external penetration test: a black-box test and a white-box test.

Black-box testing assumes that a hacker has no prior knowledge of the target organization, network, or systems. The objective of this testing is to discover how information can leak from an organization. White-box testing, on the other hand, assumes that a hacker has complete knowledge of the organization’s network. The focus of white-box testing is to determine the potential for exploitation and not discovery.

Internal Penetration Test

Most successful attacks originate within the organization’s perimeter. Organizations need to conduct testing from different network access segments, both logical and physical. However, today organizations may focus more on the external threat and pay less attention to securing their systems from internal threats.

Internal penetration testing identifies vulnerable resources within an organization’s network and assists the system administrator in addressing these vulnerabilities. Internal information assurance controls not only protect an organization from internal threats but also help provide an additional layer of protection from external attackers who attempt to penetrate the perimeter defenses. Access to internal systems can be obtained through physical access to the organization or by remotely exploiting a vulnerable system. An internal penetration test assumes a successful bypass of perimeter controls such as firewalls and intrusion detection systems (IDSs), making it possible to access resources and services not accessible outside of the perimeter, such as RPC, NetBIOS, FTP, and Telnet services.

Wireless Penetration Test

A wireless penetration test is important because it helps organizations understand the overall information assurance infrastructure through the eyes of an attacker. It evaluates how vulnerable a network is to wireless attackers. A wireless penetration test should be organized and well planned and should simulate the actions of a highly skilled attacker determined to break into the network.

Basic wireless penetration test tasks may be limited to the ability to connect to the access point (a layer 2 device that serves as the central connection point of a wireless LAN) and get free bandwidth to the Internet. An advanced wireless penetration test involves packet analysis to find valuable data, collecting and cracking passwords, attempting to gain root or administrator privileges on vulnerable hosts in a range, connecting to external hosts, and finally hiding the tester’s tracks.

War driving is the act of continuously searching for Wi-Fi wireless networks, typically with a Wi-Fi-enabled laptop, which can lead to (potentially illegal) access to a network. Free, downloadable tools for gaining access to unsecured wireless networks are available on the Internet.

Figure 20-2 shows an example of using an open source tool to crack Wired Equivalent Privacy (WEP) keys of wireless networks.

Figure 20-2 Using an open source tool to get a WEP key

A WEP key can be extracted from wireless communication within seconds or minutes. Leaving wireless networks unattended is asking for trouble. Designing a network with information assurance in mind from the beginning mitigates wireless security problems.

In most cases, the human factor again proves to be the weakest link. Because of improper network design, an attacker associated with a wireless network may find himself connected directly to a wired LAN behind a corporate firewall with unsecured and unpatched services exposed to an unexpected attack.

The security of a wireless network can be improved by adopting the following:

• Network segregation Provide proper network segregation between wireless and LAN with a firewall and appropriate routing.

• Change the default settings Access points are vulnerable when using a default configuration. Changing the access point name and administrator password should be done to ensure that the wireless network is harder to penetrate.

• IEEE 802.1X IEEE 802.1X is an IEEE standard for port-based network access control that, when implemented, provides an authentication mechanism that can be certificate based. The standard can also be implemented in a wireless network to ensure stronger security in terms of authentication.

• IEEE 802.11i This is the security standard for 802.11. It provides a stronger encryption known as Wi-Fi Protected Access 2 (WPA2). By deploying WPA2 with a strong passphrase, the wireless network is harder to penetrate.
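The recommendations above can be turned into a simple configuration audit. The sketch below assumes the access point settings have been exported into a dictionary; the field names, the list of default SSIDs, and the minimum passphrase length are hypothetical, and the check covers only passphrase-based WPA2, not IEEE 802.1X deployments.

```python
DEFAULT_SSIDS = {"default", "linksys", "netgear", "dlink"}  # illustrative, not exhaustive
MIN_PASSPHRASE_LENGTH = 20  # assumed local policy, not taken from the standard

def audit_access_point(config):
    """Return a list of findings for one access point configuration."""
    findings = []
    if config.get("ssid", "").lower() in DEFAULT_SSIDS:
        findings.append("SSID still uses a vendor default name")
    if not config.get("admin_password_changed", False):
        findings.append("administrator password has not been changed")
    encryption = config.get("encryption", "none")
    if encryption != "WPA2":
        findings.append(f"encryption is {encryption}, expected WPA2")
    elif len(config.get("passphrase", "")) < MIN_PASSPHRASE_LENGTH:
        findings.append("WPA2 passphrase is shorter than the policy minimum")
    if not config.get("firewalled_from_lan", False):
        findings.append("wireless segment is not separated from the wired LAN by a firewall")
    return findings

weak = {"ssid": "linksys", "admin_password_changed": False,
        "encryption": "WEP", "firewalled_from_lan": False}
hardened = {"ssid": "corp-guest-7", "admin_password_changed": True,
            "encryption": "WPA2", "passphrase": "correct horse battery staple",
            "firewalled_from_lan": True}

for finding in audit_access_point(weak):
    print("FINDING:", finding)
print(audit_access_point(hardened))
```

Running such a check against every access point on a schedule turns a one-time hardening exercise into a form of monitoring.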

Physical Controls

As noted in Chapter 16, physical security is essential to keeping systems and data secure. Risks associated with physical security, such as theft, data loss, and physical damage, can cause substantial damage to an organization. As part of information assurance, it is important to check an organization’s physical security posture for vulnerabilities.

Motion sensors, smoke and fire detectors, closed-circuit television monitors, and sensor alarms are physical detective controls that can be installed to alert information assurance personnel about physical security violations. The following are some of the more common detective physical controls:

• Closed-circuit television and monitors Closed-circuit television (CCTV) is used to monitor computing areas where security personnel may be absent and is useful for detecting suspicious individuals.

• Motion detectors Use motion detectors in unmanned computing facilities. They provide an alert in the event of intrusions. This equipment should be monitored by guards.

• Sensors and alarms Sensors and alarms are used to inspect the environment to verify that air, water, and temperature parameters stay within certain operating ranges. If out of range, an alarm is triggered to call attention to operations and maintenance personnel to correct the situation.

• Smoke and fire detectors Signs of fire can be detected by smoke and fire detectors placed strategically, and testing should be conducted on a regular basis to ensure the detectors are in good working order.

Refer to Chapter 16 for details on physical security and environmental security controls.

Personnel Monitoring Tools

With an increase in the number of employees having access to the Internet and e-mail, there is a need for personnel monitoring to safeguard organizational records and stakeholders’ interests. Personnel monitoring is required to maintain information assurance and privacy of organizational records. It protects against internal and external threats to the information assurance or records integrity and protects against unauthorized access or use of records or information. Organizations need to understand the local and international laws pertaining to privacy before implementing monitoring. An employee’s consent may be required, and employees should be made aware that such monitoring is in place. Each employee should sign an acknowledgment of monitoring.

Network Surveillance

Network surveillance encourages employees to abide by acceptable use policies and limit the personal use of organization resources. Another network surveillance objective is to block spam and viruses that affect personnel productivity. One method of implementing network surveillance is the use of specialized software. The software allows authorized individuals to access their workers’ computer systems, remotely view a person’s monitor display, and record the keystrokes made on the computer. Network monitoring is also a simple tool to detect exfiltration (surreptitiously sending information assets to unauthorized individuals) of corporate assets.

E-mail Monitoring

E-mail in today’s workplace is the norm. In most cases, the information sent via e-mail across a corporate network is within the boundaries of a private communication network. However, while internal e-mail often feels secure and private, e-mail can be retained, read, and disseminated without the knowledge or consent of the sender. Typically, all e-mails are retained in the system even when both parties, sender and receiver, have deleted the messages.

An organization may be held liable for communications sent from its network. E-mails can be retrieved and printed out, and they can serve as supporting documents for legal actions. If an employee carries out inappropriate or illegal communications, the organization is at risk of facing legal action. E-mail monitoring is useful in detecting such activities and allows an organization to take the necessary actions against those responsible.

Employee Privacy and Rights

Employee privacy and rights are the two issues to be considered when deploying a monitoring mechanism. Necessary precautions and steps must be taken to ensure employee privacy and rights are not violated. Ensure monitoring activities are in accordance with applicable laws.

However, as mentioned in Chapter 13, it is becoming a norm for authorities in some countries to monitor personal information. Newly recruited employees are usually required to sign a clause allowing management to monitor whatever information they are managing, including personal information.

The Concept of Continuous Monitoring and Authorization

Chapter 14 discussed the approach for a mature certification and accreditation program. The concept of accreditation as a formalized method of risk acceptance was mentioned. A problem with any assessment technique is time. As time goes on, the value of an assessment diminishes. This is because of changes in the threat environment but also because of changes in the information technology environment. The goal of risk management is to ensure that stakeholders have an informed view of risk to their organization or mission in near real time.

Organizations cannot spend resources continually performing assessment after assessment in the hopes of providing near-real-time risk visibility. The answer lies in leveraging change management and information assurance monitoring tools and methods. Organizations must determine the criticality of controls and the resulting frequency of assessment. Some controls, such as policies, may need to be assessed only annually or even less often. Other controls, such as network patching and vulnerability assessment, may need to be assessed daily, weekly, or monthly.
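The idea of assessing controls at different frequencies can be sketched as a small scheduling check. The control names, intervals, and dates below are hypothetical; each organization would set its own based on the criticality of the control.

```python
from datetime import date, timedelta

# Hypothetical assessment intervals, in days, chosen by control criticality.
ASSESSMENT_INTERVAL_DAYS = {
    "security_policy_review": 365,
    "vulnerability_scan": 7,
    "patch_status_check": 1,
}

def controls_due(last_assessed, today):
    """Return the controls whose assessment interval has elapsed."""
    due = []
    for control, last in last_assessed.items():
        interval = timedelta(days=ASSESSMENT_INTERVAL_DAYS[control])
        if today - last >= interval:
            due.append(control)
    return sorted(due)

# Made-up record of when each control was last assessed.
last_assessed = {
    "security_policy_review": date(2024, 1, 15),
    "vulnerability_scan": date(2024, 5, 20),
    "patch_status_check": date(2024, 5, 31),
}
due_today = controls_due(last_assessed, today=date(2024, 6, 1))
print(due_today)
```

With these sample dates, the vulnerability scan and the patch status check are due, while the annual policy review is not.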

The results of the assessment need to be combined into a normalized and meaningful dashboard that stakeholders can use to gauge risk to their operations. Stakeholders should be able to access this dashboard at any time to gauge their information assurance–related risk. The dashboard should also provide estimated resources required to eliminate risks and points of contact for specific risks.

Further Reading

• ACM Computing Curricula Information Technology Volume: Model Curriculum. ACM, Dec. 12, 2008. http://campus.acm.org/public/comments/it-curriculum-draft-may-2008.pdf.

• Bejtlich, R. The Tao of Network Security Monitoring: Beyond Intrusion Detection. Addison-Wesley, 2004.

• Bejtlich, R. Extrusion Detection: Security Monitoring for Internal Intrusion. Addison-Wesley, 2005.

• Dittrich, David, and S. Dietrich. “P2P As Botnet Command and Control: A Deeper Insight.” Proceedings of the 2008 3rd International Conference on Malicious and Unwanted Software (Malware), October 2008. http://staff.washington.edu/dittrich/misc/malware08-dd-final.pdf.

• Gurgul, P. “Access Control Principles and Objective.” securitydocs.com, 2004. www.securitydocs.com/library/2770.

• Honeypot Background. honeyd.org, 2002. www.honeyd.org/background.php.

• Kent, K., and M. Souppaya. Guide to Computer Security Log Management (SP800-92). NIST, 2006.

• Nestler, Vincent J. Computer Security Lab Manual (Information Assurance & Security). 2005.

• Nichols, R., D. Ryan, and J. Ryan. Defending Your Digital Assets Against Hackers, Crackers, Spies, and Thieves. McGraw-Hill Education, 2000.

• Ryan, D., J.C.H. Julie, and C.D. Schou. On Security Education, Training, and Certifications. Information Systems Audit and Control Association, 2004.

• Scarfone, K., and P. Mell. Guide to Intrusion Detection and Prevention Systems (SP800-94). NIST, 2007.

• Conklin, Wm. Arthur, Introduction to Principles of Computer Security: Security+ and Beyond. McGraw-Hill Education, 2004.

• Schou, Corey D., and D.P. Shoemaker. Information Assurance for the Enterprise: A Roadmap to Information Security. McGraw-Hill Education, 2007.

• The Honeynet Project. www.honeynet.org/about.

• Tipton, Harold F., and S. Hernandez, ed. Official (ISC)2 Guide to the CISSP CBK, 3rd Edition. (ISC)2 Press, 2012.

• Tipton, Harold F., and M. Krause. Information Security Management Handbook, 4th Edition. Auerbach, 2002.

• What Is a Honeynet? SearchSecurity.com, 2007. http://searchsecurity.techtarget.com/definition/honeynet.

Critical Thinking Exercises

1. A CISO decides to deploy a honeypot with a baseline configuration on it into the wild to determine what vulnerabilities exist. What are the advantages and disadvantages to this approach?

2. The CIO of an organization is trying to prioritize the information assurance workload of the organization. The CIO has asked the CISO to take vulnerability scanner output and add it to the organization’s dashboard. The CIO then tasks the organization’s system owners to correct the most “critical” vulnerabilities first. Is this the most prudent plan to minimize risk in the organization?