The final chapter of this book reviews several source code examples that demonstrate advanced x86 assembly language programming techniques. The first example explains how to use the cpuid instruction to detect specific x86 instruction set extensions. This is followed by two examples that illustrate how to accelerate SIMD processing functions using non-temporal memory stores and data prefetch instructions. The concluding example elucidates the use of an assembly language calculating function in a multithreaded application.

CPUID Instruction

It’s been mentioned several times in this book that an application program should never assume that a specific instruction set extension such as AVX, AVX2, or AVX-512 is available simply because of the processor’s microarchitecture, model number, or brand name. An application program should always test for the presence of an instruction set extension using the cpuid (CPU Identification) instruction. Application programs can use this instruction to verify that a processor supports any of the x86-AVX instruction set extensions mentioned above. The cpuid instruction can also be used to obtain additional processor feature information that’s useful or needed in both application programs and operating system software.

Example Ch16_01

Before examining the source code, it will be helpful to have a basic understanding of the cpuid instruction and how it works. Prior to executing cpuid, a function must load register EAX with a “leaf” value that specifies what information the cpuid instruction should return. Some leaves also require a second or “sub-leaf” value in register ECX. The cpuid instruction returns its results in registers EAX, EBX, ECX, and EDX. The calling function must then decode the values in these registers to ascertain processor support for specific features. As you will soon see, a program often needs to execute cpuid multiple times. Most application programs execute cpuid during initialization and save the results for later use. The reason for this is that cpuid is a serializing instruction, which means that it forces the processor to finish executing all previously fetched instructions and perform any pending memory writes before fetching the next instruction. In other words, the cpuid instruction takes a long time to complete its execution.
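Because cpuid is slow, the initialize-once approach described above pays off. The following C++ sketch is not the book’s CpuidInfo class; it only illustrates the idea under stated assumptions. The hypothetical callback type `CpuidQueryFn` stands in for a wrapper such as Cpuid_, and the hypothetical class `CpuidCacheSketch` executes its queries for leaves 0, 1, and 7 (sub-leaf 0) on the first request and answers every later request from the saved register values.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <stdexcept>
#include <utility>

// Register values saved from one cpuid execution.
struct CachedRegs {
    uint32_t EAX = 0, EBX = 0, ECX = 0, EDX = 0;
};

// Hypothetical callback that executes cpuid for (leaf, sub_leaf).
using CpuidQueryFn = std::function<CachedRegs(uint32_t leaf, uint32_t sub_leaf)>;

// Leaves queried during initialization (sub-leaf 0 for each); an assumption.
constexpr uint32_t kCachedLeaves[3] = {0, 1, 7};

class CpuidCacheSketch {
public:
    explicit CpuidCacheSketch(CpuidQueryFn query) : m_Query(std::move(query)) {}

    // The first call executes all queries; later calls only read saved values.
    // Not thread-safe: assumes the first call happens during initialization.
    const CachedRegs& Get(uint32_t leaf) {
        if (!m_Loaded) {
            for (std::size_t i = 0; i < 3; ++i)
                m_Regs[i] = m_Query(kCachedLeaves[i], 0);
            m_Loaded = true;
        }
        for (std::size_t i = 0; i < 3; ++i)
            if (kCachedLeaves[i] == leaf)
                return m_Regs[i];
        throw std::out_of_range("requested leaf was not cached");
    }

private:
    CpuidQueryFn m_Query;
    bool m_Loaded = false;
    CachedRegs m_Regs[3];
};
```

Passing a counting fake as the callback, as a unit test might, is an easy way to confirm that the expensive queries run only once no matter how many times Get is called.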

Listing 16-1 begins with the header file CpuidInfo.h. Near the top of this file is a structure named CpuidRegs, which is used to save the results returned by cpuid. Following CpuidRegs is a C++ class named CpuidInfo. This class contains the code and data that’s associated with cpuid instruction use. The public portion of CpuidInfo includes a nested class named CacheInfo, which is employed to report information about a processor’s memory caches. Class CpuidInfo also includes an enum named FF. An application program can use this enum as an argument value with the member function CpuidInfo::GetFF to determine if the host processor supports a specific instruction set extension. You’ll see how this works later in this section. Toward the bottom of header file CpuidInfo.h are two declaration statements for the assembly language functions Cpuid_ and Xgetbv_. These functions execute the cpuid and xgetbv (Get Value of Extended Control Register) instructions, respectively.
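The enum-plus-accessor design can be sketched in a few lines of C++. This is a hypothetical, trimmed-down analogue rather than the book’s CpuidInfo: the class name `FeatureFlagsSketch`, the handful of enumerators, the `SetFF` helper, and the `std::bitset` storage are all assumptions made for illustration.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>

// Hypothetical analogue of CpuidInfo's FF enum and GetFF member function.
class FeatureFlagsSketch {
public:
    enum class FF : std::size_t { AVX, AVX2, FMA, AVX512F, NumFlags };

    // Records support for one feature; called while decoding cpuid results.
    void SetFF(FF flag, bool value) {
        m_Flags.set(static_cast<std::size_t>(flag), value);
    }

    // Returns the saved result; no cpuid execution happens here.
    bool GetFF(FF flag) const {
        return m_Flags.test(static_cast<std::size_t>(flag));
    }

private:
    std::bitset<static_cast<std::size_t>(FF::NumFlags)> m_Flags;
};
```

Keeping the decoded flags in one bit set means each GetFF call is a single bit test, which is why repeated queries cost nothing compared with re-executing cpuid.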

Following CpuidInfo.h in Listing 16-1 is the source code file CpuidInfo_.asm. This file contains the assembly language functions Cpuid_ and Xgetbv_, which are simple wrapper functions for the x86 instructions cpuid and xgetbv. The function Cpuid_ begins its execution by saving register RBX on the stack, since cpuid overwrites RBX and RBX is a non-volatile register that a function must preserve. It then loads argument values r_eax and r_ecx into registers EAX and ECX. The actual cpuid instruction follows the loading of registers EAX and ECX. Following the execution of cpuid, the results in registers EAX, EBX, ECX, and EDX are saved to the specified CpuidRegs structure. The assembly language function Xgetbv_ executes the xgetbv instruction. This instruction loads the contents of the extended control register specified by ECX into register pair EDX:EAX. The xgetbv instruction allows an application program to determine if the host operating system supports AVX, AVX2, or AVX-512, as explained later in this section.
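For comparison, GCC and Clang provide the header `<cpuid.h>`, whose `__get_cpuid_count` function executes cpuid with a given leaf and sub-leaf and returns 0 if the leaf exceeds the processor’s maximum. The sketch below wraps it in roughly the same way that Cpuid_ wraps the instruction; the names `CpuidRegsSketch` and `CpuidSketch` are hypothetical. Here the compiler takes care of preserving RBX, which the assembly language version does explicitly. On other compilers or non-x86 targets the sketch simply reports failure.

```cpp
#include <cassert>
#include <cstdint>
#if (defined(__GNUC__) || defined(__clang__)) && (defined(__x86_64__) || defined(__i386__))
#include <cpuid.h>
#define CPUID_SKETCH_X86 1
#endif

// Hypothetical counterpart of the CpuidRegs structure.
struct CpuidRegsSketch {
    uint32_t EAX, EBX, ECX, EDX;
};

// Executes cpuid with EAX = r_eax and ECX = r_ecx and saves the four result
// registers. Returns false if the leaf is unsupported or if the build target
// is not x86 with GCC or Clang.
bool CpuidSketch(uint32_t r_eax, uint32_t r_ecx, CpuidRegsSketch* r_out) {
    r_out->EAX = r_out->EBX = r_out->ECX = r_out->EDX = 0;
#ifdef CPUID_SKETCH_X86
    unsigned int a, b, c, d;
    if (!__get_cpuid_count(r_eax, r_ecx, &a, &b, &c, &d))
        return false;
    r_out->EAX = a;
    r_out->EBX = b;
    r_out->ECX = c;
    r_out->EDX = d;
    return true;
#else
    (void)r_eax;
    (void)r_ecx;
    return false;
#endif
}
```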

The next file in Listing 16-1 is Ch16_01.cpp. The function main contains code that illustrates how to use the C++ class CpuidInfo. The statement ci.LoadInfo() invokes the member function CpuidInfo::LoadInfo, which executes cpuid multiple times to obtain information about the processor. Note that CpuidInfo::LoadInfo is called only once. The function DisplayCacheInfo streams information about the processor’s memory caches to cout. This function invokes CpuidInfo::GetCacheInfo to report cache information that was obtained during execution of CpuidInfo::LoadInfo. The function DisplayFeatureFlags shows information about some of the instruction set extensions that the processor supports. Each cout statement in this function uses CpuidInfo::GetFF with a different CpuidInfo::FF value. The member function CpuidInfo::GetFF returns a bool value that indicates whether the processor supports the instruction set extension specified by its argument value. Like the cache data, the processor instruction set extension information was obtained and saved during the call to CpuidInfo::LoadInfo. Note that CpuidInfo is structured to allow an application program to make multiple CpuidInfo::GetFF calls without triggering additional executions of cpuid.

Following the file Ch16_01.cpp in Listing 16-1 is the source code for CpuidInfo.cpp, which contains the non-trivial member functions for class CpuidInfo. The member function CpuidInfo::LoadInfo that was discussed earlier invokes six private member functions that perform a multitude of cpuid queries. The first of these functions, CpuidInfo::LoadInfo0, begins its execution by calling CpuidInfo::Init to carry out the requisite initializations. It then invokes the assembly language function Cpuid_ to obtain the maximum cpuid leaf value that’s supported by the processor and the processor vendor ID string. Another call to Cpuid_ is then used to obtain the maximum leaf value for extended cpuid information. This is followed by a call to CpuidInfo::InitProcessorBrand, which uses several Cpuid_ calls to query and save the processor brand string. The source code for this function is located toward the end of the file CpuidInfo.cpp.
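The vendor ID string returned by leaf 0 is a good example of the decoding work involved. The twelve characters are stored four per register in the order EBX, EDX, ECX, with the first character in each register’s least significant byte. The function `VendorIdFromLeaf0` below is a hypothetical helper that decodes raw register values; it does not execute cpuid itself.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Builds the 12-character vendor ID from cpuid leaf 0 results.
// Characters come from EBX, EDX, ECX (in that order), four bytes per
// register, least significant byte first.
std::string VendorIdFromLeaf0(uint32_t ebx, uint32_t edx, uint32_t ecx) {
    const uint32_t regs[3] = {ebx, edx, ecx};
    std::string id;
    for (uint32_t r : regs)
        for (int byte = 0; byte < 4; ++byte)
            id.push_back(static_cast<char>((r >> (8 * byte)) & 0xFF));
    return id;
}
```

For example, the register values 0x756E6547, 0x49656E69, and 0x6C65746E decode to "GenuineIntel".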

The member functions CpuidInfo::LoadInfo1, CpuidInfo::LoadInfo2, and CpuidInfo::LoadInfo3 also exploit Cpuid_ to ascertain processor support for a variety of instruction set extensions. The code that’s contained in these member functions is mostly brute-force decoding of the various Cpuid_ results. The AMD and Intel programming reference manuals contain additional information about the cpuid feature flag bits that are used to indicate processor support for a specific instruction set extension. The private member function CpuidInfo::LoadInfo4 contains the code that checks for AVX, AVX2, and AVX-512. This member function warrants closer examination.
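The brute-force decoding amounts to testing individual bits. The sketch below uses bit positions documented in the Intel and AMD manuals for CPUID.(EAX=01H):ECX, CPUID.(EAX=07H,ECX=0):EBX, and CPUID.(EAX=80000001H):ECX; the names `BitSet`, `FeatureBitsSketch`, and `DecodeFeatures` are hypothetical, and the function decodes raw register values rather than calling cpuid. Note that the AVX, AVX2, FMA, and F16C bits only indicate processor support; operating system support must also be confirmed, as described next.

```cpp
#include <cassert>
#include <cstdint>

// Returns true if bit 'bit' of 'reg' is set.
constexpr bool BitSet(uint32_t reg, unsigned bit) {
    return ((reg >> bit) & 1u) != 0;
}

struct FeatureBitsSketch {
    bool FMA, POPCNT, OSXSAVE, AVX, F16C;   // CPUID.(EAX=01H):ECX
    bool BMI1, AVX2, BMI2, ADX;             // CPUID.(EAX=07H,ECX=0):EBX
    bool LZCNT;                             // CPUID.(EAX=80000001H):ECX
};

FeatureBitsSketch DecodeFeatures(uint32_t leaf1_ecx, uint32_t leaf7_ebx,
                                 uint32_t ext1_ecx) {
    FeatureBitsSketch f{};
    f.FMA     = BitSet(leaf1_ecx, 12);
    f.POPCNT  = BitSet(leaf1_ecx, 23);
    f.OSXSAVE = BitSet(leaf1_ecx, 27);
    f.AVX     = BitSet(leaf1_ecx, 28);
    f.F16C    = BitSet(leaf1_ecx, 29);
    f.BMI1    = BitSet(leaf7_ebx, 3);
    f.AVX2    = BitSet(leaf7_ebx, 5);
    f.BMI2    = BitSet(leaf7_ebx, 8);
    f.ADX     = BitSet(leaf7_ebx, 19);
    f.LZCNT   = BitSet(ext1_ecx, 5);
    return f;
}
```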

An application program can use the computational resources of x86-AVX only if it’s supported by both the processor and its host operating system. The Xgetbv_ function can be employed to determine host operating system support. Before using Xgetbv_, the cpuid flag OSXSAVE must be tested to ensure that it’s safe for an application program to use the xgetbv instruction. If OSXSAVE is set to true, the function CpuidInfo::LoadInfo4 invokes Xgetbv_ to obtain information regarding OS support for x86-AVX state information (i.e., whether the OS properly preserves the XMM, YMM, and ZMM registers during a task switch). When using the Xgetbv_ function, the processor will generate an exception if the extended control register number is invalid or if the processor’s OSXSAVE flag is set to false. This explains why the software flag m_OsXsave is checked prior to calling Xgetbv_. If the host operating system supports x86-AVX state information, the function CpuidInfo::LoadInfo4 proceeds to decode the cpuid feature flags related to AVX and AVX2. Note that the feature flags FMA and F16C are also tested here. The remaining code in CpuidInfo::LoadInfo4 decodes the cpuid feature flags that signify support for the various AVX-512 instruction set extensions.
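The operating system check reduces to a few bits of XCR0, the register that xgetbv returns when ECX is 0. AVX state is enabled when bits 1 (SSE) and 2 (AVX) are both set; AVX-512 additionally requires bits 5 (opmask), 6 (ZMM_Hi256), and 7 (Hi16_ZMM). The sketch below takes the OSXSAVE flag and the XCR0 value as inputs (obtaining them would require cpuid and xgetbv, for example through Cpuid_ and Xgetbv_); `OsAvxSupport` and `DecodeOsSupport` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

struct OsAvxSupport {
    bool Avx;
    bool Avx512;
};

// 'osxsave' is CPUID.(EAX=01H):ECX bit 27; 'xcr0' is the value xgetbv
// returns with ECX = 0 (only valid to read when osxsave is true).
OsAvxSupport DecodeOsSupport(bool osxsave, uint64_t xcr0) {
    OsAvxSupport s{false, false};
    if (!osxsave)   // xgetbv would raise an exception; treat as unsupported
        return s;
    const uint64_t kXmmYmm = (1u << 1) | (1u << 2);                   // SSE + AVX state
    const uint64_t kZmm = kXmmYmm | (1u << 5) | (1u << 6) | (1u << 7); // + AVX-512 state
    s.Avx = (xcr0 & kXmmYmm) == kXmmYmm;
    s.Avx512 = (xcr0 & kZmm) == kZmm;
    return s;
}
```

A program would then treat AVX as usable only when both the cpuid AVX bit and `Avx` are true, and likewise for the AVX-512 flags.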

Summary of Information from cpuid Instruction for Select Intel Processors

| CPUID Feature | i3-2310m | i7-4790s | i9-7900x | i7-8700k |
|---|---|---|---|---|
| L1 Data (KB, per core) | 32 | 32 | 32 | 32 |
| L1 Instruction (KB, per core) | 32 | 32 | 32 | 32 |
| L2 Unified (KB, per core) | 256 | 256 | 1024 | 256 |
| L3 Unified (MB) | 3 | 8 | 13 | 12 |
| ADX | 0 | 0 | 1 | 1 |
| AVX | 1 | 1 | 1 | 1 |
| AVX2 | 0 | 1 | 1 | 1 |
| AVX512F | 0 | 0 | 1 | 0 |
| AVX512BW | 0 | 0 | 1 | 0 |
| AVX512CD | 0 | 0 | 1 | 0 |
| AVX512DQ | 0 | 0 | 1 | 0 |
| AVX512ER | 0 | 0 | 0 | 0 |
| AVX512PF | 0 | 0 | 0 | 0 |
| AVX512VL | 0 | 0 | 1 | 0 |
| AVX512_IFMA | 0 | 0 | 0 | 0 |
| AVX512_VBMI | 0 | 0 | 0 | 0 |
| BMI1 | 0 | 1 | 1 | 1 |
| BMI2 | 0 | 1 | 1 | 1 |
| F16C | 0 | 1 | 1 | 1 |
| FMA | 0 | 1 | 1 | 1 |
| LZCNT | 0 | 1 | 1 | 1 |
| POPCNT | 1 | 1 | 1 | 1 |

Non-Temporal Memory Stores

From the perspective of a memory cache, data can be classified as either temporal or non-temporal. Temporal data is any value that is accessed more than once within a short period of time. Examples of temporal data include the elements of an array or data structure that are referenced multiple times during execution of a program loop. It also includes the instruction bytes of a program. Non-temporal data is any value that is accessed once and not immediately reused. The destination arrays of many SIMD processing algorithms often contain non-temporal data. The distinction between temporal and non-temporal data is important since processor performance often degrades if its memory caches contain excessive amounts of non-temporal data. This condition is commonly called cache pollution. Ideally, a processor’s memory caches contain only temporal data since it makes little sense to cache items that are only used once.

Example Ch16_02

Near the top of Listing 16-2 is the C++ function CalcResultCpp. This function performs a simple arithmetic calculation using the elements of two single-precision floating-point source arrays and saves the results to a destination array. The next C++ function in Listing 16-2, CompareResults, verifies that the C++ and assembly language output arrays are equivalent. The function NonTemporalStore allocates and initializes the test arrays. It then invokes the three calculating functions: CalcResultCpp and the assembly language functions CalcResultA_, which uses vmovaps, and CalcResultB_, which uses the non-temporal store instruction vmovntps. The output arrays of the three calculating functions are then compared for any discrepancies.
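The same non-temporal store idea can be expressed in C++ with compiler intrinsics. The sketch below uses the SSE intrinsic `_mm_stream_ps`, which emits movntps (or vmovntps when compiled for AVX); `_mm256_stream_ps` is the 256-bit counterpart. The calculation c[i] = sqrt(a[i]² + b[i]²) is an assumption chosen for illustration and is not necessarily the one in Listing 16-2, and `CalcResultScalar` and `CalcResultNonTemporal` are hypothetical names. Like vmovntps, the streaming store requires aligned destination addresses.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#if defined(__SSE__) || defined(_M_X64)
#include <xmmintrin.h>
#define NT_SKETCH_SSE 1
#endif

// Hypothetical calculation: c[i] = sqrt(a[i]^2 + b[i]^2), ordinary stores.
void CalcResultScalar(float* c, const float* a, const float* b, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i)
        c[i] = std::sqrt(a[i] * a[i] + b[i] * b[i]);
}

// Same calculation with non-temporal stores that bypass the caches.
// Requires 16-byte aligned arrays and n to be a multiple of 4.
bool CalcResultNonTemporal(float* c, const float* a, const float* b, std::size_t n) {
    auto aligned16 = [](const void* p) {
        return reinterpret_cast<std::uintptr_t>(p) % 16 == 0;
    };
    if (n % 4 != 0 || !aligned16(c) || !aligned16(a) || !aligned16(b))
        return false;
#ifdef NT_SKETCH_SSE
    for (std::size_t i = 0; i < n; i += 4) {
        __m128 va = _mm_load_ps(a + i);
        __m128 vb = _mm_load_ps(b + i);
        __m128 sum = _mm_add_ps(_mm_mul_ps(va, va), _mm_mul_ps(vb, vb));
        _mm_stream_ps(c + i, _mm_sqrt_ps(sum));   // non-temporal store
    }
    _mm_sfence();   // order the weakly-ordered streaming stores
#else
    CalcResultScalar(c, a, b, n);   // fallback for non-x86 targets
#endif
    return true;
}
```

The sfence at the end matters because streaming stores are weakly ordered; it ensures they are globally visible before later stores, for example before another thread is told the results are ready.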

Mean Execution Times (Microseconds) for Functions CalcResultCpp, CalcResultA_, and CalcResultB_ (n = 2,000,000)

| CPU | CalcResultCpp | CalcResultA_ (uses vmovaps) | CalcResultB_ (uses vmovntps) |
|---|---|---|---|
| i7-4790s | 1553 | 1554 | 1242 |
| i9-7900x | 1173 | 1139 | 934 |
| i7-8700k | 847 | 801 | 590 |

Data Prefetch

An application program can also use the prefetch (Prefetch Data Into Caches) instruction to improve the performance of certain algorithms. This instruction facilitates pre-loading of expected-use data into the processor’s cache hierarchy. There are two basic forms of the prefetch instruction. The first form, prefetcht[0|1|2], pre-loads temporal data into a specific cache level. The second form, prefetchnta, pre-loads non-temporal data while minimizing cache pollution. Both forms of the prefetch instruction provide hints to the processor about the data that a program expects to use; a processor may choose to perform the prefetch operation or ignore the hint.
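In C++, the prefetch instructions are available through the `_mm_prefetch` intrinsic, where the hint `_MM_HINT_T0` corresponds to prefetcht0 and `_MM_HINT_NTA` to prefetchnta. The sketch below sums an array while hinting data a fixed distance ahead. The helper names `PrefetchHint` and `SumWithPrefetch`, the 64-element prefetch distance, and the 64-byte cache line size are assumptions; a useful distance depends on the loop body and the processor, and the hint may simply be ignored.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>
#if defined(__SSE__) || defined(_M_X64)
#include <xmmintrin.h>
#define PF_SKETCH_SSE 1
#endif

// Issues a prefetch hint for address p (no-op on non-x86 targets).
inline void PrefetchHint(const void* p, bool non_temporal) {
#ifdef PF_SKETCH_SSE
    if (non_temporal)
        _mm_prefetch(static_cast<const char*>(p), _MM_HINT_NTA);   // prefetchnta
    else
        _mm_prefetch(static_cast<const char*>(p), _MM_HINT_T0);    // prefetcht0
#else
    (void)p;
    (void)non_temporal;
#endif
}

// Sums v while prefetching kDistance elements ahead, once per cache line
// (assumes 64-byte lines, i.e., 8 doubles per line).
double SumWithPrefetch(const std::vector<double>& v, bool non_temporal) {
    const std::size_t kDistance = 64;
    double sum = 0.0;
    for (std::size_t i = 0; i < v.size(); ++i) {
        if (i % 8 == 0 && i + kDistance < v.size())
            PrefetchHint(&v[i + kDistance], non_temporal);
        sum += v[i];
    }
    return sum;
}
```

Because a prefetch is only a hint, the results are identical with or without it; only the timing can differ.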

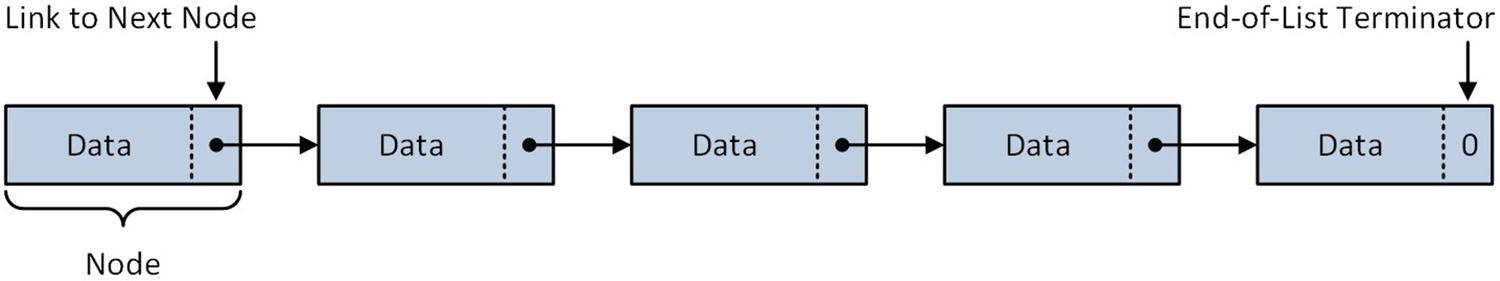

Simple linked list

Example Ch16_03

Listing 16-3 begins with the header file Ch16_03.h. The declaration of structure LlNode is located near the top of this file. The C++ code uses this structure to construct linked lists of test data. Structure members ValA through ValD hold the data values that are manipulated by the linked list traversal functions. The member FreeSpace is included to increase the size of LlNode for demonstration purposes since prefetching works best with larger data structures. A real-world implementation of LlNode could use this space for additional data items. The final member of LlNode is a pointer named Link, which points to the next LlNode structure. The assembly language counterpart of LlNode is declared in the file Ch16_03_.asmh.

The base function for source code example Ch16_03 is named LinkedListPrefetch and can be found in source code file Ch16_03.cpp. This function builds several test linked lists, invokes the C++ and assembly language traversal functions, and validates the results. The source code file Ch16_03_misc.cpp contains a set of miscellaneous functions that implement basic linked list processing operations. The function LlCompare compares the data nodes of its argument linked lists for equivalence. The functions LlCreate and LlDelete perform linked list allocation and deletion. LlPrint dumps the data contents of a linked list to a file. Finally, LlTraverse traverses a linked list and performs a simulated calculation using LlNode data elements ValA, ValB, ValC, and ValD.
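A traversal that prefetches the next node can be sketched in C++ as follows. The node layout only loosely follows the description of LlNode: the name `LlNodeSketch`, the 376-byte padding, and the per-node calculation are hypothetical, and `LlTraversePrefetch` is not the book’s LlTraverseB_. Because Link sits at the end of a large node, the sketch hints both the start of the next node (its data values) and the cache line holding its Link member.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>
#if defined(__SSE__) || defined(_M_X64)
#include <xmmintrin.h>
#define LL_SKETCH_SSE 1
#endif

// Hypothetical node layout modeled on the description of LlNode.
struct LlNodeSketch {
    double ValA, ValB, ValC, ValD;
    char FreeSpace[376];    // padding that enlarges each node (arbitrary size)
    LlNodeSketch* Link;     // next node, or nullptr at the end of the list
};

// Traverses the list and performs a made-up calculation on each node,
// hinting the next node with prefetchnta before working on the current one.
void LlTraversePrefetch(LlNodeSketch* p) {
    while (p != nullptr) {
#ifdef LL_SKETCH_SSE
        if (p->Link != nullptr) {
            _mm_prefetch(reinterpret_cast<const char*>(p->Link), _MM_HINT_NTA);
            _mm_prefetch(reinterpret_cast<const char*>(&p->Link->Link), _MM_HINT_NTA);
        }
#endif
        p->ValC = std::sqrt(p->ValA * p->ValA + p->ValB * p->ValB);
        p->ValD = p->ValA + p->ValB;
        p = p->Link;
    }
}
```

The benefit, if any, comes from overlapping the memory latency of the next node with the calculation on the current one, which is why larger nodes and more work per node tend to favor prefetching.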

Mean Execution Times (Microseconds) for Linked List Traversal Functions (num_nodes = 50,000)

| CPU | LlTraverse (C++) | LlTraverseA_ (without prefetchnta) | LlTraverseB_ (with prefetchnta) |
|---|---|---|---|
| i7-4790s | 5685 | 3093 | 2680 |
| i9-7900x | 5885 | 3064 | 2842 |
| i7-8700k | 5031 | 2384 | 2319 |

Multiple Threads

All the source code examples presented in this book thus far have shared one common characteristic: they all contain single-threaded code. If you are reading this book, you probably already know that most modern software applications use at least a few threads to better exploit the multiple cores of modern processors. For example, many high-performance computing applications perform arithmetic calculations using large data arrays that contain millions of floating-point elements. One strategy that’s often employed to accelerate these types of calculations is to distribute the array elements across multiple threads and have each thread carry out a subset of the required calculations. The next source code example demonstrates how to perform an arithmetic calculation using large floating-point arrays and multiple threads. Listing 16-4 shows the source code for example Ch16_04.

Caution

A processor can become extremely hot while executing multithreaded code that makes extensive use of x86-AVX instructions. Before running the code of example Ch16_04, you should verify that the processor in your computer has an adequate cooling system.

Example Ch16_04

Source code example Ch16_04 performs a simulated calculation using large arrays of double-precision floating-point values across multiple threads. It uses the C++ STL class thread to run multiple instances of an AVX2 assembly language calculating function. Each thread performs its calculations using only a portion of the array data. The C++ driver routine exercises the calculating algorithm using various combinations of array sizes and simultaneously executing threads. It also implements benchmark timing measurements to quantify the performance benefits of the multithreaded technique.

Listing 16-4 begins with the header file Ch16_04.h. In this file, the structure CalcInfo contains the data that each thread needs to carry out its calculations. The structure members m_X1, m_X2, m_Y1, m_Y2, m_Z1, and m_Z2 point to the source arrays, while m_Result points to the destination array. Members m_Index0 and m_Index1 are array indices that define a range of unique elements for each calculating thread. Header file Ch16_04.h also includes a structure named CoutInfo, which contains status information that’s optionally displayed during program execution.

The next file in Listing 16-4 is Ch16_04_Misc.cpp. This file contains the source code for the program’s ancillary functions. The functions Init and CompareResults perform array initialization and verification, respectively. The next function is DisplayThreadMsg. This function displays status information for each executing thread. Note that the cout statements in DisplayThreadMsg are synchronized with a C++ STL mutex. A mutex is a synchronization object that lets multiple threads share a single resource in a controlled manner. When mutex_cout is locked, only one thread can stream its status results to cout. The other executing threads are blocked from streaming their results to cout until mutex_cout becomes unlocked. Without this mutex, status information text from the executing threads would be intermingled on the display (if you’re interested in seeing what happens, try commenting out the mutex_cout.lock and mutex_cout.unlock statements).
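The same serialization can be written with `std::lock_guard`, which locks a mutex in its constructor and unlocks it in its destructor, so the mutex is released even if the output statement throws. In the sketch below, `mutex_out`, `DisplayThreadMsgSketch`, and `RunStatusDemo` are hypothetical names, and the output goes to any `std::ostream` rather than directly to cout so that it can be checked.

```cpp
#include <cassert>
#include <functional>
#include <mutex>
#include <ostream>
#include <sstream>
#include <string>
#include <thread>
#include <vector>

std::mutex mutex_out;   // guards the shared output stream

// Writes one complete status line per call; the lock keeps other threads
// from interleaving their text with this line.
void DisplayThreadMsgSketch(std::ostream& os, int thread_id, long long num_elements) {
    std::lock_guard<std::mutex> lock(mutex_out);   // unlocked automatically
    os << "thread " << thread_id << ": " << num_elements << " elements\n";
}

// Launches num_threads threads that each print one status line.
void RunStatusDemo(std::ostream& os, int num_threads) {
    std::vector<std::thread> threads;
    for (int k = 0; k < num_threads; ++k)
        threads.emplace_back(DisplayThreadMsgSketch, std::ref(os), k, 1000LL * k);
    for (auto& t : threads)
        t.join();
}
```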

The function GetNumElementsVec returns a vector that contains the sizes of the test arrays. Note that the amount of memory required by the largest test array plus a small fudge factor should be less than the amount of available memory. The fudge factor prevents the program from allocating all available memory. Also note that GetNumElementsVec throws an exception if insufficient memory is available, since running the program under this condition would be very slow due to excessive page swapping. The function GetNumThreadsVec returns a vector of test thread counts. You can change the values in num_threads_vec to experiment with different thread count values.
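The memory check can be sketched as a small function. This is a hypothetical illustration, not the book’s GetNumElementsVec: the name `CheckedNumElements` is made up, the available-memory figure is passed in because querying it is operating-system specific, and the bytes-per-element value (the total across all arrays that each element index touches) is left to the caller.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Throws if the largest requested test size, plus a fudge factor, would not
// fit in available memory; otherwise returns the sizes unchanged.
// bytes_per_element: total bytes per element index across all arrays
// (an assumption supplied by the caller).
std::vector<uint64_t> CheckedNumElements(const std::vector<uint64_t>& num_elements,
                                         uint64_t bytes_per_element,
                                         uint64_t avail_bytes,
                                         uint64_t fudge_bytes) {
    uint64_t max_n = 0;
    for (uint64_t n : num_elements)
        if (n > max_n)
            max_n = n;
    const uint64_t required = max_n * bytes_per_element;
    // Largest test plus fudge factor must fit in available memory.
    if (avail_bytes < fudge_bytes || required > avail_bytes - fudge_bytes)
        throw std::runtime_error("insufficient memory for largest test array");
    return num_elements;
}
```

Writing the test as `required > avail_bytes - fudge_bytes` (after checking that the subtraction cannot underflow) avoids an overflow that `required + fudge_bytes` could cause with unsigned arithmetic.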

The source code file Ch16_04.cpp contains the driver routines for source code example Ch16_04. The function CalcResultCpp is a C++ implementation of the simulated calculating algorithm and is used for result verification purposes. The next function, CalcResultThread, is the main thread function. This function invokes the assembly language calculating function CalcResult_. It also displays thread status messages if they’re enabled.

Following CalcResultThread is the function RunMultipleThreads. This function exercises the calculating algorithm using the specified combinations of array sizes and number of simultaneously executing threads. The first code section of RunMultipleThreads performs array allocation and element initialization. It also calls the function CalcResultCpp to calculate result values for algorithm verification purposes. Note that prior to calling this function, an instance of CalcInfo is initialized with the data that’s necessary to carry out the required calculations.

The second code section of RunMultipleThreads, which starts immediately after the comment line Code section #2, runs the calculating algorithm by distributing the test array elements across multiple threads. The test array elements are split into groups based on the number of threads that will execute. For example, if the number of test array elements equals 64 million, launching four threads will result in each thread processing 16 million elements. The innermost for loop that follows the comment line Thread start code begins each iteration by initializing an instance of CalcInfo for the next thread. The statement threads[k] = new thread(CalcResultThread, &ci2[k], &cout_info[k]) constructs a new thread object and starts execution of the thread function CalcResultThread using argument values &ci2[k] and &cout_info[k]. This inner for loop repeats until the required number of threads has been launched. While the threads are executing, the function RunMultipleThreads executes a small for loop that calls thread::join for each thread object. This forces RunMultipleThreads to wait until all executing threads have finished. The remaining code in RunMultipleThreads performs data verification and object cleanup.
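The partitioning scheme can be sketched with `std::thread` and `join`. Several details here are assumptions: `CalcInfoSketch` only mirrors the members listed for CalcInfo, the index range is treated as half-open [m_Index0, m_Index1), the scalar `CalcResultRange` stands in for the assembly language function CalcResult_ and assumes a 3-D distance calculation that may differ from the book’s, and threads are held in a `std::vector<std::thread>` rather than allocated with new.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical analogue of CalcInfo: array pointers plus one thread's range.
struct CalcInfoSketch {
    const double* m_X1; const double* m_X2;
    const double* m_Y1; const double* m_Y2;
    const double* m_Z1; const double* m_Z2;
    double* m_Result;
    std::size_t m_Index0, m_Index1;   // half-open range [m_Index0, m_Index1)
};

// Scalar stand-in for the calculating function (assumed distance formula).
void CalcResultRange(const CalcInfoSketch* ci) {
    for (std::size_t i = ci->m_Index0; i < ci->m_Index1; ++i) {
        double dx = ci->m_X1[i] - ci->m_X2[i];
        double dy = ci->m_Y1[i] - ci->m_Y2[i];
        double dz = ci->m_Z1[i] - ci->m_Z2[i];
        ci->m_Result[i] = std::sqrt(dx * dx + dy * dy + dz * dz);
    }
}

// Splits n elements into num_threads contiguous groups, starts one thread
// per group, and waits for all of them with join().
void RunThreadsSketch(const CalcInfoSketch& ci, std::size_t n, unsigned num_threads) {
    if (num_threads == 0)
        num_threads = 1;
    std::vector<CalcInfoSketch> ci2(num_threads, ci);   // one copy per thread
    std::vector<std::thread> threads;
    const std::size_t chunk = n / num_threads;
    for (unsigned k = 0; k < num_threads; ++k) {
        ci2[k].m_Index0 = k * chunk;
        // the last thread also takes any leftover elements
        ci2[k].m_Index1 = (k + 1 == num_threads) ? n : (k + 1) * chunk;
        threads.emplace_back(CalcResultRange, &ci2[k]);
    }
    for (auto& t : threads)
        t.join();
}
```

Each thread writes only its own range of m_Result, so no locking is needed for the calculation itself; giving the remainder to the last thread handles sizes that are not a multiple of the thread count.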

Before reviewing the assembly language code, the C++ code in RunMultipleThreads merits a few additional comments. The first thing to note is that the benchmarking code measures both the time it takes to carry out the required calculations and the overhead that’s associated with thread management. If the algorithm that’s employed by RunMultipleThreads were to be used in a real-world application, any benchmark timing measurements would be meaningless without factoring in this overhead. It should also be noted that RunMultipleThreads implements an extremely rudimentary form of multithreading that omits many important real-world operations to simplify the code for this example. If you’re interested in learning more about the C++ STL thread class and the other STL classes that facilitate multithreaded processing, you are strongly encouraged to consult the references listed in Appendix A.

Benchmark Timing Measurements (Milliseconds) for RunMultipleThreads Using an Intel i7-4790s Processor

| Number of Elements (Millions) | 1 Thread | 2 Threads | 4 Threads | 6 Threads | 8 Threads |
|---|---|---|---|---|---|
| 64 | 686 | 226 | 178 | 180 | 172 |
| 128 | 1146 | 491 | 345 | 355 | 347 |
| 192 | 1592 | 702 | 513 | 530 | 516 |
| 256 | 2054 | 942 | 679 | 714 | 688 |

Benchmark Timing Measurements (Milliseconds) for RunMultipleThreads Using an Intel i9-7900x Processor

| Number of Elements (Millions) | 1 Thread | 2 Threads | 4 Threads | 6 Threads | 8 Threads |
|---|---|---|---|---|---|
| 64 | 492 | 137 | 84 | 69 | 61 |
| 128 | 765 | 300 | 163 | 131 | 121 |
| 192 | 1110 | 454 | 233 | 193 | 178 |
| 256 | 1330 | 582 | 313 | 260 | 238 |

Benchmark Timing Measurements (Milliseconds) for RunMultipleThreads Using an Intel i7-8700k Processor

| Number of Elements (Millions) | 1 Thread | 2 Threads | 4 Threads | 6 Threads | 8 Threads |
|---|---|---|---|---|---|
| 64 | 332 | 125 | 120 | 123 | 123 |
| 128 | 522 | 265 | 240 | 245 | 246 |
| 192 | 839 | 387 | 363 | 366 | 369 |
| 256 | 919 | 499 | 478 | 484 | 492 |

Summary of Hardware Features for Test Processors Used in Example Ch16_04

| Hardware Feature | i7-4790s | i9-7900x | i7-8700k |
|---|---|---|---|
| Number of cores | 4 | 10 | 6 |
| Number of threads | 8 | 20 | 12 |
| Base frequency (GHz) | 3.2 | 3.3 | 3.7 |
| Maximum frequency (GHz) | 4.0 | 4.5 | 4.7 |
| Memory type | DDR3-1600 | DDR4-2666 | DDR4-2666 |
| Number of memory channels | 2 | 4 | 2 |

Summary

An application program should always use the cpuid instruction to verify processor support for specific instruction set extensions. This is extremely important for software compatibility with future processors from both AMD and Intel.

An assembly language function can use the non-temporal store instructions vmovntp[d|s] instead of the vmovap[d|s] instructions to improve the performance of algorithms that carry out calculations using large arrays of non-temporal floating-point data.

An assembly language function can use the prefetcht[0|1|2] instructions to pre-load temporal data into the processor’s cache hierarchy. A function can also use the prefetchnta instruction to pre-load non-temporal data and minimize cache pollution. The performance benefits of the prefetch instructions vary depending on data access patterns and the processor’s underlying microarchitecture.

A multithreaded algorithm that’s implemented in a high-level language such as C++ can exploit AVX, AVX2, or AVX-512 assembly language calculating functions to accelerate an algorithm’s overall performance.