CONTENTS

4.3 Fourier-Based Image Registration

4.3.1 Rigid Transform Relationship Between Image and Fourier Domain

4.3.2 Moment Matching Approach to Estimating Rigid Transform Matrix

4.3.3 A Novel Fourier-Moment-Based Image Registration Algorithm

4.4 Iterative Refinement Process by a Distance Weighting

4.4.3 Fourier-Based Image Registration Algorithm with Iterative Refinement

4.5.1 Watermark Removal for Pictures

4.5.2 Watermark Removal for Text Images

4.6 Experiments and Discussions

In recent years, the widespread use of the Internet has greatly increased the exchange of information and knowledge. However, the accompanying copyright and ownership problems have become critical to the success of digital content distribution. To address these problems, information-hiding techniques have become increasingly important in many application areas. Electronic watermarking, also called digital watermarking, is a branch of information hiding and is a common function in several well-known photo-processing programs. Digital watermarking can be roughly divided into two types: visible marking and invisible marking. The former is commonly used in academic theses and stock photos, and the latter is popular in commercial document sharing, copyright protection, and bill anti-counterfeiting. Each type has its own requirements, such as ease of embedding, copy prevention, resistance to removal, robustness against tampering, and preservation of the original information.

Invisible watermarking technology has been developed for almost two decades, and there are two main approaches: the spatial-domain approach and the frequency-domain approach. Van Schyndel et al. (1994) proposed a least-significant-bit (LSB) algorithm, which embeds the hidden information by modifying the least-significant bits of the pixel values. Later, Pitas and Kaskalis (1995) and Pitas (1996) proposed modified LSB methods for information hiding; however, such methods are sensitive to image compression, additive noise, and other image attacks. The frequency-domain approach includes discrete cosine transform (DCT)-based methods and discrete wavelet transform (DWT)-based methods. For example, Barni et al. (1998) proposed embedding a pseudo-random sequence of real numbers in a selected set of DCT coefficients, and Xia et al. (1998) proposed adding pseudo-random codes to the large coefficients in the high- and middle-frequency bands of the DWT of an image.
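As a concrete illustration of the spatial-domain idea, the sketch below shows the basic LSB principle on an 8-bit grayscale array: each message bit overwrites the least-significant bit of one pixel, so no pixel changes by more than one gray level. It is a minimal sketch assuming NumPy and a raw 0/1 bit array as the message; the names `lsb_embed` and `lsb_extract` are hypothetical, and it is not the specific scheme of Van Schyndel et al. (1994) or Pitas (1996).

```python
import numpy as np

def lsb_embed(cover: np.ndarray, bits: np.ndarray) -> np.ndarray:
    """Hide `bits` (0/1 values) in the least-significant bits of the first pixels."""
    flat = cover.astype(np.uint8).ravel().copy()
    if bits.size > flat.size:
        raise ValueError("message is longer than the cover image")
    n = bits.size
    # Clear bit 0 of each carrier pixel (AND 0xFE), then write the message bit into it.
    flat[:n] = (flat[:n] & 0xFE) | (bits.astype(np.uint8) & 1)
    return flat.reshape(cover.shape)

def lsb_extract(stego: np.ndarray, n_bits: int) -> np.ndarray:
    """Recover the hidden bits by reading bit 0 of the first n_bits pixels."""
    return stego.ravel()[:n_bits] & 1

cover = np.array([[10, 11], [200, 255]], dtype=np.uint8)
message = np.array([1, 0, 1, 0])
stego = lsb_embed(cover, message)
print(stego.tolist())                  # [[11, 10], [201, 254]]
print(lsb_extract(stego, 4).tolist())  # [1, 0, 1, 0]
```

The change is imperceptible, but, as noted above, it is easily destroyed by compression or added noise, because any perturbation of the low-order bits erases the message.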

Watermarks do not always need to be hidden in digital content; some companies use visible watermarks. However, most of the literature has focused on invisible (or transparent) watermarking. A visible watermark overlaid on an image or document should remain easily recognizable as the original watermark without obscuring the underlying information. The critical point of visible watermarking is balancing two goals: the watermark should be clearly visible yet difficult to remove. Braudaway et al. (1996) used an analytic model of human perception and varied pixel brightness to embed reasonably unobtrusive visible logos in color images, and Rao et al. (1998) presented an automatic way of adjusting the watermark intensity for a given image using a texture-based human visual system (HVS) metric. Kankanhalli et al. (1999) worked in the DCT domain, classifying DCT image blocks by their image characteristics and setting the watermark strength in each block according to its class. Hu and Kwong (2001), working in the wavelet domain, proposed a method that overcomes the limitations of the DCT-domain methods by varying the marking according to the features of the host image; the scaling factors are adaptively determined from the luminance masking and local spatial characteristics of the host image.

Visible image watermarks are commonly used to protect the copyright of documents and images. However, a visible watermark may interfere with the automatic understanding or analysis of document or image content, which is required for information or image retrieval. Thus, the visible watermark may need to be removed or modified, and the concept of reversible visible watermarks was proposed by IBM in 1997. Related work has rarely been reported. Pei and Zeng (2006) used independent component analysis to separate the source images from the watermarked and host images. Hu et al. (2006) presented a user-key-dependent removable visible watermarking system. Huang et al. (2007) proposed a copyright annotation scheme that combines visible watermarking with reversible data hiding, and Liu and Tsai (2010) developed a lossless approach that uses deterministic one-to-one compound mappings of image pixel values to overlay a variety of visible watermarks of arbitrary sizes on cover images.

In this chapter, we focus on detecting visible watermarks in images and removing them to generate watermark-free images. We present our Fourier-based image alignment method with iterative refinement for watermark detection. We then describe how to remove the watermark from the image once it has been precisely detected. Experimental results on real watermarked documents and images are given to show the performance of the proposed watermark detection and removal algorithm.

Following Hu et al. (2006), most visible image watermark embedding can be modeled as follows:

$$I_t(x) = w_o(x)\,I_o(x) + w_w(x)\,I_w(x), \qquad x \in W \tag{4.1}$$

where W is the region of the watermark embedding, Io(x) is the original image, Iw(x) is the watermark image, wo(x) and ww(x) are the associated weighting functions, and It(x) is the watermark-embedded image. Note that wo(x) + ww(x) = 1. The relationship between an original watermark Iw(y) and its transformed version Iw(x) can be modeled by a geometric affine transform x = Ay, where A is the affine transform matrix.
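Equation 4.1 with constant weights (wo = c and ww = 1 − c, the common case discussed below) can be sketched in a few lines. The sketch assumes NumPy arrays of equal shape, a Boolean mask standing in for the region W, and a watermark that has already been warped by the transform A onto the image grid; the function name `embed_visible` is hypothetical.

```python
import numpy as np

def embed_visible(original, watermark, mask, w_o):
    """Eq. 4.1 with constant weights: I_t = w_o*I_o + (1 - w_o)*I_w inside W."""
    i_o = np.asarray(original, dtype=float)
    i_w = np.asarray(watermark, dtype=float)  # assumed already aligned to the image grid
    blended = w_o * i_o + (1.0 - w_o) * i_w   # w_o + w_w = 1
    # Pixels outside the watermark region W keep their original values.
    return np.where(mask, blended, i_o)

original = np.full((2, 2), 100.0)
watermark = np.full((2, 2), 200.0)
mask = np.array([[True, False], [True, False]])
print(embed_visible(original, watermark, mask, w_o=0.5).tolist())
# [[150.0, 100.0], [150.0, 100.0]]
```

Smaller values of w_o make the watermark more prominent, which is the effect illustrated by the three panels of Figure 4.1.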

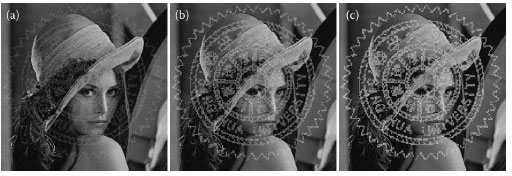

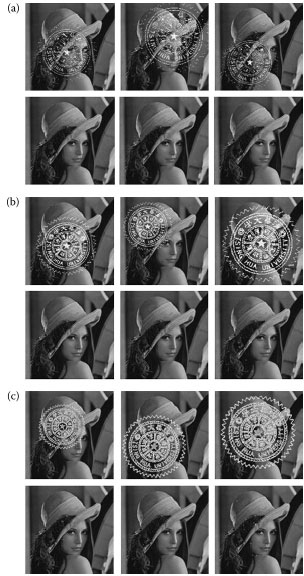

In practice, wo(x) and ww(x) are usually set to constants inside the watermark area, and the transformation A is usually a rigid transform consisting of scaling, rotation, and translation. Figure 4.1 shows typical visible watermark images with different weights wo. When wo(x) and ww(x) are not constant, the most common setting is as follows:

FIGURE 4.1 The watermark embedded images with (a) wo = 0.8, (b) wo = 0.5, and (c) wo = 0.3.

$$w_o(x) = \frac{I_w(x)}{I_o(x) + I_w(x)}, \qquad w_w(x) = \frac{I_o(x)}{I_o(x) + I_w(x)} \tag{4.2}$$

Combining Equations 4.1 and 4.2 leads to the following model:

$$I_t(x) = \frac{2\,I_o(x)\,I_w(x)}{I_o(x) + I_w(x)} \tag{4.3}$$

This equation shows that the embedded image It(x) is proportional to the product of Io(x) and Iw(x) divided by their sum; that is, It(x) is the harmonic mean of Io(x) and Iw(x). The main advantage of this embedding scheme is that no parameter needs to be adjusted.
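The parameter-free scheme of Equations 4.2 and 4.3 can be checked numerically: computing the two weights explicitly and blending with Equation 4.1 reproduces the closed form 2IoIw/(Io + Iw). This is a minimal NumPy sketch; the small `eps` guarding against division by zero where both intensities are 0 is our own addition, and `embed_adaptive` is a hypothetical name.

```python
import numpy as np

def embed_adaptive(original, watermark, eps=1e-12):
    """Blend with the intensity-dependent weights of Eq. 4.2."""
    i_o = np.asarray(original, dtype=float)
    i_w = np.asarray(watermark, dtype=float)
    denom = i_o + i_w + eps          # eps avoids 0/0 where both pixels are black
    w_o = i_w / denom                # Eq. 4.2, weight of the original image
    w_w = i_o / denom                # Eq. 4.2, weight of the watermark
    return w_o * i_o + w_w * i_w     # Eq. 4.1, equals Eq. 4.3 up to eps

i_o = np.array([100.0, 50.0, 0.0])
i_w = np.array([100.0, 150.0, 0.0])
closed_form = 2 * i_o * i_w / np.maximum(i_o + i_w, 1e-12)  # Eq. 4.3
print(np.allclose(embed_adaptive(i_o, i_w), closed_form))    # True
```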

For documents, the content can be segmented into two classes: foreground and background. The foreground includes text or photos, and the background is usually the blank white area, as in PDF or Word files. To preserve the information needed for understanding, the foreground is not allowed to be modified. Thus, visible document watermarking can be defined as follows:

$$I_t(x) = \begin{cases} I_o(x), & x \in \text{foreground} \\ k\,I_w(x), & x \in \text{background} \cap \text{watermark region} \end{cases} \tag{4.4}$$

where k is a scale factor that decreases the intensity values of the watermark in the document. Figure 4.2 depicts two examples of typical watermarked documents.
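Equation 4.4 translates directly into array operations once the foreground mask and the watermark region are known. In this minimal NumPy sketch both are assumed to be given as Boolean arrays (how the foreground is segmented is not specified here), the watermark is assumed to be already aligned, and pixels outside both sets are assumed to stay unchanged, since Equation 4.4 does not cover them; `embed_document` is a hypothetical name.

```python
import numpy as np

def embed_document(original, watermark, foreground, region, k):
    """Eq. 4.4: keep foreground pixels, write k*I_w on background pixels in the watermark region."""
    i_t = np.asarray(original, dtype=float).copy()
    i_w = np.asarray(watermark, dtype=float)
    target = ~foreground & region    # background ∩ watermark region
    i_t[target] = k * i_w[target]
    return i_t                       # all other pixels are left as in the original (assumption)

page = np.array([[0.0, 255.0], [255.0, 255.0]])   # one black text pixel on a white page
logo = np.full((2, 2), 200.0)
text = page < 128                                 # toy foreground mask
region = np.array([[True, True], [True, False]])
print(embed_document(page, logo, text, region, k=0.5).tolist())
# [[0.0, 100.0], [100.0, 255.0]]
```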

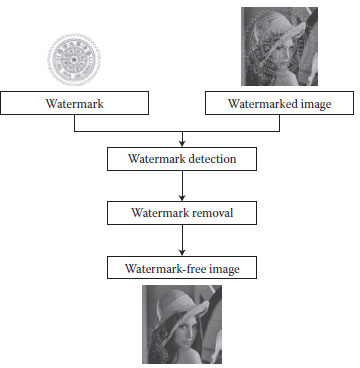

Given a watermark image and a watermarked document image, the watermark removal procedure consists of two steps. The first step detects the visible watermark in the watermarked image or document, and the second step removes the watermark to generate the watermark-free image. Figure 4.3 shows the system flow of watermark removal. In the remainder of this chapter, we describe the watermark detection and watermark removal components in detail. In the next section, we first review previous Fourier-based image alignment methods and then present a novel Fourier-moment-based image alignment algorithm that precisely estimates the position, orientation, and scaling of the watermark in a watermarked image. Subsequently, we show how to improve the alignment accuracy under occlusion or outliers by introducing a distance weighting scheme into the proposed algorithm. In Section 4.5, we present how to remove the watermark from a watermarked image after the watermark has been precisely aligned. Finally, we show some experimental results, followed by conclusions.

FIGURE 4.2 Two examples of watermarked documents: (a) watermarked document, (b) watermarked document containing images.

FIGURE 4.3 The system flow of watermark removal.

4.3 FOURIER-BASED IMAGE REGISTRATION

Several Fourier-based methods for image registration have been proposed in the past, for example, by De Castro and Morandi (1987), Bracewell et al. (1993), and Reddy and Chatterji (1996). Fourier-based methods share the advantages of robustness to noise, low computational complexity, and the ability to handle large motions. Unfortunately, their accuracy is limited by the log-polar mapping: estimating rotation and scaling requires interpolating the spectrum from the Cartesian plane onto the log-polar plane, and this interpolation on the 2D Cartesian Fourier grid introduces errors into the registration. Thus, the log-polar transform is the primary obstacle to improving registration precision and alignment range. Several modified Fourier-based registration methods, such as the log-polar method of Reddy and Chatterji (1996), the unequally spaced Fourier transform of Averbuch et al. (2006), and the multilayer fractional Fourier transform (MLFFT) of Pan et al. (2009), have been proposed to evaluate the log-polar transform more efficiently and reliably. MLFFT uses the fractional Fourier transform to create several spectrums with different resolutions from an image and sums them into one for the log-polar transform. This strategy makes MLFFT more accurate than the other Fourier-based methods. However, MLFFT does not go beyond the framework of the log-polar transform; it merely combines multiple spectrums from the fractional FFT to achieve a better approximation.

In the next section, we introduce a novel Fourier-based image registration algorithm that does not use the log-polar transform. This algorithm can be used for both rigid and affine registration, whereas the previous Fourier-based methods can only handle rigid alignment, which consists of scaling, rotation, and translation. The proposed algorithm is based on the fact that a rigid transform between two images corresponds to a related rigid transform between their Fourier spectrums, whose energy is normally concentrated around the origin of the frequency domain. Thus, moments of the Fourier spectrums can be computed by treating the spectrum magnitudes as probability density functions. The registration is then obtained by minimizing the error in the relationship between the moments of the Fourier spectrums of the two images.

4.3.1 RIGID TRANSFORM RELATIONSHIP BETWEEN IMAGE AND FOURIER DOMAIN

Consider two images g(x, y) and h(x, y) related by a rigid transformation, that is, $h(x,y) = g(r_0\cos\theta_0\,x + r_0\sin\theta_0\,y + c,\; -r_0\sin\theta_0\,x + r_0\cos\theta_0\,y + f)$. Their Fourier transforms G(u, v) and H(u, v) then satisfy

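$$G(u,v) = \frac{1}{r_0^2}\, e^{\,i\left[(c\cos\theta_0 - f\sin\theta_0)\,u + (f\cos\theta_0 + c\sin\theta_0)\,v\right]/r_0}\; H\!\left(\frac{\cos\theta_0\,u + \sin\theta_0\,v}{r_0},\; \frac{-\sin\theta_0\,u + \cos\theta_0\,v}{r_0}\right) \tag{4.5}$$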

Letting $u' = (\cos\theta_0\,u + \sin\theta_0\,v)/r_0$ and $v' = (-\sin\theta_0\,u + \cos\theta_0\,v)/r_0$, we have $u = r_0(\cos\theta_0\,u' - \sin\theta_0\,v')$ and $v = r_0(\sin\theta_0\,u' + \cos\theta_0\,v')$. Taking the absolute values of both sides of Equation 4.5, we obtain the rigid transformation relationship between the spectrums |G(u, v)| and |H(u, v)|:

$$|G(u,v)| = \frac{1}{r_0^2}\,|H(u',v')| \tag{4.6}$$

where

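$$\begin{bmatrix} u \\ v \end{bmatrix} = \begin{bmatrix} r_0\cos\theta_0 & -r_0\sin\theta_0 \\ r_0\sin\theta_0 & r_0\cos\theta_0 \end{bmatrix} \begin{bmatrix} u' \\ v' \end{bmatrix} \tag{4.7}$$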
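Equation 4.5 shows that the translation (c, f) enters only through the complex exponential factor, so it disappears from the amplitude relation in Equation 4.6. This is easy to check numerically for a pure translation. The sketch assumes a circular (wrap-around) shift, for which the discrete Fourier transform satisfies the shift theorem exactly; for real images with non-periodic borders the equality holds only approximately. The helper names are hypothetical.

```python
import numpy as np

def amplitude_unchanged_by_shift(img, shift):
    """Compare |FFT| of an image before and after a circular translation."""
    shifted = np.roll(img, shift, axis=(0, 1))
    return np.allclose(np.abs(np.fft.fft2(img)), np.abs(np.fft.fft2(shifted)))

def phase_unchanged_by_shift(img, shift):
    """Same comparison for the phase, which does carry the translation."""
    shifted = np.roll(img, shift, axis=(0, 1))
    return np.allclose(np.angle(np.fft.fft2(img)), np.angle(np.fft.fft2(shifted)))

rng = np.random.default_rng(0)
g = rng.random((64, 64))
print(amplitude_unchanged_by_shift(g, (5, -7)))  # True
print(phase_unchanged_by_shift(g, (5, -7)))      # False
```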

Equations 4.6 and 4.7 show that the rigid transformation relationship between the amplitudes of the Fourier spectrums involves only two parameters, the rotation θ0 and the scaling r0; the translation (c, f) affects only the phase. To estimate them easily, Reddy and Chatterji (1996) proposed mapping |G(u, v)| and |H(u, v)| from the Cartesian plane onto the log-polar plane, giving Mg(log r, θ) and Mh(log r, θ), by letting u = r cos θ and v = r sin θ in Equation 4.6. Equation 4.6 can then be rewritten as follows:

$$M_g(\log r, \theta) = \frac{1}{r_0^2}\, M_h(\log r - \log r_0,\; \theta - \theta_0) \tag{4.8}$$

Equation 4.8 shows that the problem for estimating rotation and scaling is converted into a translation-like problem by taking the log-polar transform. Thus, the rotation and scaling can be determined as follows:

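$$(\log r_0, \theta_0) = \arg\max_{(\log r,\,\theta)} \operatorname{real}\left( \mathrm{IFT}\left\{ \frac{\mathrm{FT}(M_g)\,\mathrm{conj}(\mathrm{FT}(M_h))}{\left|\mathrm{FT}(M_g)\,\mathrm{conj}(\mathrm{FT}(M_h))\right|} \right\} \right) \tag{4.9}$$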

where FT and IFT denote the forward and inverse Fourier transform operators, respectively, and conj denotes the complex conjugate operator.
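The interpolation step that limits the accuracy of this approach can be seen in a short sketch that resamples the centred amplitude spectrum onto a (log r, θ) grid, producing maps like Mg and Mh in Equation 4.8. It assumes NumPy and SciPy (`scipy.ndimage.map_coordinates` with bilinear interpolation); the grid sizes and the name `log_polar_amplitude` are illustrative choices, not values from this chapter. Only half a turn of θ is sampled because the amplitude spectrum of a real image is point-symmetric.

```python
import numpy as np
from scipy.ndimage import map_coordinates

def log_polar_amplitude(img, n_rho=64, n_theta=90):
    """Resample |FFT(img)| (centred) onto a log-polar grid of shape (n_rho, n_theta)."""
    amp = np.abs(np.fft.fftshift(np.fft.fft2(img)))
    cy, cx = amp.shape[0] // 2, amp.shape[1] // 2          # zero frequency after fftshift
    max_r = min(cy, cx)
    rho = np.exp(np.linspace(0.0, np.log(max_r), n_rho))   # radii 1 .. max_r, log-spaced
    theta = np.linspace(0.0, np.pi, n_theta, endpoint=False)
    rr, tt = np.meshgrid(rho, theta, indexing="ij")
    rows = cy + rr * np.sin(tt)
    cols = cx + rr * np.cos(tt)
    # Bilinear interpolation between Cartesian samples: the source of the
    # interpolation error that motivates avoiding the log-polar transform.
    return map_coordinates(amp, [rows, cols], order=1, mode="nearest")

rng = np.random.default_rng(2)
m_g = log_polar_amplitude(rng.random((128, 128)))
print(m_g.shape)  # (64, 90)
```

On such a grid a rotation of the image circularly shifts the θ axis and a scaling shifts the log r axis, which is why the phase correlation of Equation 4.9 can recover (log r0, θ0) in sample units.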

The rotation θ0 and scaling r0 are first estimated from Equation 4.9 using the log-polar spectrums Mg and Mh. The remaining translation vector (c, f) is then computed by transforming image g(x, y) into $g'(x,y) = g(r_0\cos\theta_0\,x + r_0\sin\theta_0\,y,\; -r_0\sin\theta_0\,x + r_0\cos\theta_0\,y)$ and determining the translation between g′(x, y) and h(x, y) from their cross-power spectrum. Specifically, the translation vector is determined as follows:

$$(c, f) = \arg\max_{(x,y)} \operatorname{real}\left( \mathrm{IFT}\left\{ \frac{G'(u,v)\,H^*(u,v)}{\left|G'(u,v)\,H^*(u,v)\right|} \right\} \right) \tag{4.10}$$

where G′(u, v) is the Fourier transform of g′(x, y), and H*(u, v) is the complex conjugate of H(u, v).
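Equations 4.9 and 4.10 are both instances of phase correlation: the cross-power spectrum is normalized to unit magnitude, and the peak of its inverse transform gives the shift. A minimal NumPy sketch for integer, circular shifts is shown below; `phase_correlation` is a hypothetical helper, the `eps` term is our own guard against division by zero, and sub-pixel peak interpolation is omitted. Applied to g′ and h it yields the translation between them as in Equation 4.10; applied to the log-polar maps Mg and Mh it yields the offsets of Equation 4.9 in sample units.

```python
import numpy as np

def phase_correlation(g, h, eps=1e-12):
    """Integer shift (dy, dx) such that g ≈ np.roll(h, (dy, dx), axis=(0, 1))."""
    cross = np.fft.fft2(g) * np.conj(np.fft.fft2(h))   # cross-power spectrum G H*
    # Keep only the phase, invert, and take the real part (as in Eq. 4.10).
    corr = np.real(np.fft.ifft2(cross / (np.abs(cross) + eps)))
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks past the midpoint correspond to negative (wrapped-around) shifts.
    if dy > g.shape[0] // 2:
        dy -= g.shape[0]
    if dx > g.shape[1] // 2:
        dx -= g.shape[1]
    return int(dy), int(dx)

rng = np.random.default_rng(1)
h = rng.random((64, 64))
g = np.roll(h, (5, -7), axis=(0, 1))
print(phase_correlation(g, h))  # (5, -7)
```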

4.3.2 MOMENT MATCHING APPROACH TO ESTIMATING RIGID TRANSFORM MATRIX

Because of the interpolation error in the log-polar transform, we introduce a moment matching approach, instead of the log-polar transform, to estimate the rotation and scaling of the rigid transform in Equation 4.6. Let the Fourier spectrums of the 2D image functions f1(x, y) and f2(x, y) be denoted by F1(u, v) and F2(u, v), respectively. If f1(x, y) and f2(x, y) are related by a rigid transformation, then their Fourier spectrums are related by the corresponding rigid transform, that is, $|F_1(u,v)| = |F_2(u',v')|/r_0^2$, with the relation between (u, v) and (u′, v′) given in Equation 4.7. Letting $a = r_0\cos\theta_0$ and $b = r_0\sin\theta_0$, we rewrite Equation 4.7 as follows:

$$\begin{bmatrix} u \\ v \end{bmatrix} = \begin{bmatrix} a & -b \\ b & a \end{bmatrix} \begin{bmatrix} u' \\ v' \end{bmatrix} \tag{4.11}$$

To determine the rigid transform parameters a and b, we apply the moment matching technique to the Fourier spectrums F1(u, v) and F2(u, v). The (i + j)th-order moment of the Fourier spectrum |Fk(u, v)|, k = 1 or 2, is defined as

$$m^{(k)}_{i,j} = \iint u^i\, v^j\, |F_k(u,v)|\, du\, dv \tag{4.12}$$

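Substituting Equation 4.11 into Equation 4.12 for k = 1, and noting that the Jacobian of the coordinate change gives $du\,dv = (a^2 + b^2)\,du'\,dv' = r_0^2\,du'\,dv'$, we derive the following equation:

$$m^{(1)}_{i,j} = \iint (au' - bv')^i\,(bu' + av')^j\,\frac{|F_2(u',v')|}{r_0^2}\,r_0^2\, du'\,dv' \tag{4.13}$$

which simplifies to

$$m^{(1)}_{i,j} = \iint (au' - bv')^i\,(bu' + av')^j\,|F_2(u',v')|\, du'\,dv' \tag{4.14}$$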

Setting (i, j) = (1, 0) and (0, 1) in Equations 4.13 and 4.14, we obtain the following relationship between the first-order moments of the two Fourier spectrums:

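$$\begin{bmatrix} m^{(1)}_{1,0} \\ m^{(1)}_{0,1} \end{bmatrix} = \begin{bmatrix} a & -b \\ b & a \end{bmatrix} \begin{bmatrix} m^{(2)}_{1,0} \\ m^{(2)}_{0,1} \end{bmatrix} \tag{4.15}$$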

The 2D rigid transform parameters (a, b) can be estimated by minimizing the errors associated with the constraints in Equation 4.15 in a least-squares estimation framework as follows:

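$$a = \frac{m^{(1)}_{1,0}\,m^{(2)}_{1,0} + m^{(1)}_{0,1}\,m^{(2)}_{0,1}}{\left(m^{(2)}_{1,0}\right)^2 + \left(m^{(2)}_{0,1}\right)^2} \tag{4.16}$$

$$b = \frac{m^{(1)}_{0,1}\,m^{(2)}_{1,0} - m^{(1)}_{1,0}\,m^{(2)}_{0,1}}{\left(m^{(2)}_{1,0}\right)^2 + \left(m^{(2)}_{0,1}\right)^2} \tag{4.17}$$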
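Equations 4.15 to 4.17 amount to solving a 2 × 2 linear system for (a, b), from which r0 = √(a² + b²) and θ0 = atan2(b, a) follow. The sketch below checks this algebra on a synthetic weighted point set standing in for a sampled spectrum magnitude: mapping the points by [[a, −b], [b, a]] while keeping their weights mirrors Equation 4.14, where the 1/r0² factor cancels the Jacobian. Note that for a real-valued image |F(u, v)| is point-symmetric, so first-order moments taken over the entire frequency plane vanish; which frequency region the moments are computed over is not spelled out here, and the sketch deliberately avoids that question. The helper names are hypothetical.

```python
import numpy as np

def first_order_moments(weights, u, v):
    """Discrete form of Eq. 4.12 for (i, j) = (1, 0) and (0, 1)."""
    return float(np.sum(u * weights)), float(np.sum(v * weights))

def solve_rigid_ab(m1, m2):
    """Eqs. 4.16-4.17: (a, b) with m1 = [[a, -b], [b, a]] @ m2."""
    m1_10, m1_01 = m1
    m2_10, m2_01 = m2
    denom = m2_10 ** 2 + m2_01 ** 2
    a = (m1_10 * m2_10 + m1_01 * m2_01) / denom
    b = (m1_01 * m2_10 - m1_10 * m2_01) / denom
    return a, b

# Synthetic check: points (u', v') with weights, mapped by a known (r0, theta0).
rng = np.random.default_rng(3)
u2, v2 = rng.normal(1.0, 0.5, 200), rng.normal(-0.5, 0.5, 200)
w = rng.random(200)
r0, theta0 = 1.3, np.deg2rad(25.0)
a_true, b_true = r0 * np.cos(theta0), r0 * np.sin(theta0)
u1, v1 = a_true * u2 - b_true * v2, b_true * u2 + a_true * v2   # Eq. 4.11
a, b = solve_rigid_ab(first_order_moments(w, u1, v1), first_order_moments(w, u2, v2))
print(round(float(np.hypot(a, b)), 6), round(float(np.rad2deg(np.arctan2(b, a))), 6))
# 1.3 25.0
```

With only two constraints and two unknowns the least-squares solution coincides with the exact solution of Equation 4.15, provided the moments of the second spectrum are not both zero.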

4.3.3 A NOVEL FOURIER-MOMENT-BASED IMAGE REGISTRATION ALGORITHM

In this section, we summarize the novel Fourier-moment-based image registration algorithm. The detailed procedure is given as follows:

1. Compute the discrete Fourier transforms H(u, v) and G(u, v) of the two images h(x, y) and g(x, y) via the FFT.

2. Compute the first-order moments for the Fourier spectrums |H(u, v)| and |G(u, v)|.

3. Determine the rigid transform parameters (a, b) in Equations 4.16 and 4.17 by minimizing the least-squares errors associated with the moment matching constraints given in Equation 4.15.

4. Transform the image g(x, y) with the rigid transform associated with the estimated parameters (a, b), and denote the transformed image by g′(x, y).

5. Determine the translation from the cross-power spectrum of g′(x, y) and h(x, y) based on Equation 4.10.

Compared with previous Fourier-based image registration methods, the proposed Fourier-moment-based approach has lower computational complexity and can provide higher accuracy, because it does not require the log-polar transform, which involves a computationally costly interpolation procedure on the log-polar plane and introduces interpolation errors.

4.4 ITERATIVE REFINEMENT PROCESS BY A DISTANCE WEIGHTING

In the previous section, we presented a novel Fourier-moment-based image registration algorithm based on matching the moments of the Fourier spectrums of the image functions. To further improve its accuracy, especially under occlusion, we propose an iterative refinement process that introduces a distance weighting scheme into the images, as detailed in the following sections.

Let E be the set of Canny edge points p = (x, y) extracted from an image h(x, y). The image h(x, y) is then transformed into a binary image B(x, y) whose pixels are set to 1 at edge points and to 0 otherwise:

$$B(x,y) = \begin{cases} 1, & (x,y) \in E \\ 0, & (x,y) \notin E \end{cases} \tag{4.18}$$

The idea for improving the accuracy of the proposed Fourier-moment-based registration under occlusion or outliers is to assign an appropriate weight to each point p in the binary image, such that points without correspondences receive very small weights and points with proper correspondences receive high weights. In Equation 4.18, B takes only binary values that indicate the presence of data points. Since the point sets may contain noise, we introduce a distance weighting to reduce the influence of points without proper correspondences.

The distance from a data point p ∈ E1 in the binary image B1(x, y) to the point set E2 of the binary image B2(x, y) is defined as

$$d(p, E_2) = \min_{q \in E_2} \lVert p - q \rVert \tag{4.19}$$

Note that the distances from all data points in E1 to E2 can be computed efficiently with the distance transform. We then define the weight of each data point in E1 with respect to E2 as follows:

$$w(p, E_2) = \frac{\sigma^2}{\sigma^2 + d^2(p, E_2)} \tag{4.20}$$

To improve the correctness of the point correspondences, we employ both Euclidean and gradient distances to compute the corresponding weighting functions of the form given in Equation 4.20. The total weight wt(p, E2) is the product of the Euclidean distance weight we(p, E2) and the gradient distance weight wg(p, E2), defined as follows:

$$w_t(p, E_2) = w_e(p, E_2)\,w_g(p, E_2) = \frac{\sigma_e^2}{\sigma_e^2 + d_e^2(p, E_2)} \cdot \frac{\sigma_g^2}{\sigma_g^2 + d_g^2(p, E_2)} \tag{4.21}$$

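where $d_e$ and $d_g$ are the Euclidean and gradient distances, and $\sigma_e^2$ and $\sigma_g^2$ are parameters that control how quickly the corresponding weights decay as these distances grow.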

Thus, the binary image B1 can be transformed into the weighted image function Bw1 as follows:

$$B^w_1(x,y) = \begin{cases} w_t((x,y), E_2), & (x,y) \in E_1 \\ 0, & (x,y) \notin E_1 \end{cases} \tag{4.22}$$

Similarly, the weighted image function Bw2 is defined as follows:

$$B^w_2(x,y) = \begin{cases} w_t((x,y), E_1), & (x,y) \in E_2 \\ 0, & (x,y) \notin E_2 \end{cases} \tag{4.23}$$
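Equations 4.19 to 4.22 can be computed for every pixel at once with a Euclidean distance transform, as noted above. The sketch below uses `scipy.ndimage.distance_transform_edt`, which measures the distance to the nearest zero-valued pixel, so the edge map of B2 is inverted first. The gradient distance dg is not defined in this section, so the sketch takes it as an optional precomputed array; that parameter, the default σ values, and the name `weighted_edge_image` are assumptions. Swapping the two edge maps gives Bw2 (Equation 4.23).

```python
import numpy as np
from scipy.ndimage import distance_transform_edt

def weighted_edge_image(edges_1, edges_2, sigma_e=2.0, d_g=None, sigma_g=1.0):
    """Bw1 of Eq. 4.22: weights of the E1 pixels with respect to the edge set E2."""
    e1 = np.asarray(edges_1, dtype=bool)
    e2 = np.asarray(edges_2, dtype=bool)
    # Distance from every pixel to the nearest E2 point (Eq. 4.19): E2 pixels
    # become the zeros that distance_transform_edt measures distances to.
    d_e = distance_transform_edt(~e2)
    w = sigma_e**2 / (sigma_e**2 + d_e**2)            # Euclidean weight w_e (Eqs. 4.20-4.21)
    if d_g is not None:                               # optional gradient weight w_g (assumed input)
        w = w * sigma_g**2 / (sigma_g**2 + np.asarray(d_g, dtype=float)**2)
    return np.where(e1, w, 0.0)                       # zero off the edge set E1

b1 = np.zeros((5, 5), dtype=bool)
b2 = np.zeros((5, 5), dtype=bool)
b2[2, 2] = True                  # E2: a single edge point
b1[2, 2] = b1[2, 4] = True       # E1: one matching point, one point 2 pixels away
bw1 = weighted_edge_image(b1, b2, sigma_e=2.0)
print(bw1[2, 2], bw1[2, 4], bw1.sum())   # 1.0 0.5 1.5
```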

In our algorithm, we first apply the proposed Fourier-moment-based image registration to the binary images B1 and B2 without distance weighting. The estimated rigid transform is applied to all points in E1 to update the point set E1. Then, the distance weighting is computed to produce the weighted image functions Bw1 and Bw2 given in Equations 4.22 and 4.23. The proposed Fourier-moment-based registration algorithm is applied to find the rigid transformation between Bw1 and Bw2, and the estimate is used to refine the rigid registration. This refinement process is repeated until convergence.

The initial registration is crucial to the robustness of the iterative scheme: if the first step cannot provide a robust registration, the iterative refinement will not converge to the correct result. The proposed Fourier-moment-based registration algorithm is robust even without iterative refinement, because the Fourier transform of an image concentrates most of its energy in the low-frequency region. The registration determined from the moments of the Fourier coefficients is therefore robust against noise, which is normally assumed to be distributed mostly in the high-frequency region.

4.4.3 FOURIER-BASED IMAGE REGISTRATION ALGORITHM WITH ITERATIVE REFINEMENT

In this section, we summarize the Fourier-moment-based image registration algorithm with iterative refinement. The detailed procedure is given as follows:

1. Generate the binary images B1 and B2 from the two images h(x) and g(x) using Equation 4.18.

2. Compute the discrete Fourier transforms of B1 and B2 via FFT.

3. Compute the first-order moments for the Fourier spectrums of B1 and B2 from Equations 4.13 and 4.14.

4. Determine the rigid transform parameters (a, b) by minimizing the least-squares errors associated with the moment matching constraints given in Equation 4.15.

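5. Transform B1 with the rigid transform associated with the estimated parameters (a, b) only, and denote the transformed data by B′1.

6. Determine the translation vector t between B′1 and B2 from their cross-power spectrum, as in Equation 4.10.

7. Shift the map B′1 by t, and compute the weighted image functions Bw1 and Bw2 from Equations 4.22 and 4.23.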

8. Repeat step 2 through step 7 with the binary image replaced by the weighting functions computed in the previous step until the changes in the rigid transformation parameters are within a small threshold.

Under the general models of visible watermark embedding (Equations 4.1 and 4.4), watermarked text images and watermarked pictures are produced differently; therefore, the methods for removing the watermarks to generate watermark-free text and picture images also differ. We describe the watermark removal methods for these two types of images in this section.

4.5.1 WATERMARK REMOVAL FOR PICTURES

Equation 4.1 gives the general model of watermark embedding. We consider the most common situation, in which wo(x) and ww(x) are constant at all positions x in the watermark area. If wo(x) = c, then ww(x) = 1 − c, and the model becomes

$$I_t(x) = c\,I_o(x) + (1 - c)\,I_w(x), \qquad x \in W \tag{4.24}$$

Equation 4.24 can be rewritten as follows:

$$I_t(x) - I_w(x) = c\,\bigl(I_o(x) - I_w(x)\bigr) \tag{4.25}$$

In a natural picture, the intensity values of adjacent pixels are usually very close to each other. Thus, in the watermark-free picture, a small patch in the watermarked area can be assumed to have intensity values similar to those of its neighbors. Let It denote the intensities in the watermarked area and Io those of its neighboring region. Figure 4.4 shows the intensity relationship between the watermarked area and its neighboring region. After detecting the location and geometric transformation of the watermark Iw in the watermarked image, we select all small patches containing watermarked pixels together with their neighbors, which yields a collection of patch sets S = {S1, S2, S3, …, Sn} with Si = {Io, It, Iw}. Then c can be solved by minimizing, over all patches in S, the squared error between It − Iw and c(Io − Iw) in Equation 4.25.
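The least-squares fit of Equation 4.25 has a closed form: with x = Io − Iw and y = It − Iw stacked over all patches, c = Σxy / Σx². Inverting Equation 4.24 then gives Io = (It − (1 − c)Iw)/c inside the watermark area; that inversion step is our reading of the model rather than a procedure spelled out in this section. In the sketch below, the pairing of watermarked pixels with neighbor intensities is assumed to have been done already, and the function names are hypothetical.

```python
import numpy as np

def estimate_blend_weight(i_o, i_t, i_w):
    """Least-squares c in (I_t - I_w) = c * (I_o - I_w), Eq. 4.25."""
    x = (np.asarray(i_o, dtype=float) - np.asarray(i_w, dtype=float)).ravel()
    y = (np.asarray(i_t, dtype=float) - np.asarray(i_w, dtype=float)).ravel()
    return float(x @ y / (x @ x))

def remove_blended_watermark(i_t, i_w, c):
    """Invert Eq. 4.24: I_o = (I_t - (1 - c) * I_w) / c."""
    return (np.asarray(i_t, dtype=float) - (1.0 - c) * np.asarray(i_w, dtype=float)) / c

# Toy data: neighbor intensities stand in for I_o; the watermark is blended with c = 0.6.
i_o = np.array([10.0, 50.0, 90.0, 130.0])
i_w = np.array([200.0, 180.0, 160.0, 140.0])
i_t = 0.6 * i_o + 0.4 * i_w
c_hat = estimate_blend_weight(i_o, i_t, i_w)
print(round(c_hat, 6))                                              # 0.6
print(np.allclose(remove_blended_watermark(i_t, i_w, c_hat), i_o))  # True
```

In real pictures the neighbor intensities only approximate the hidden Io, so the estimate of c improves as more patches are pooled.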

FIGURE 4.4 Intensity relationship between watermarked area and the neighboring region.

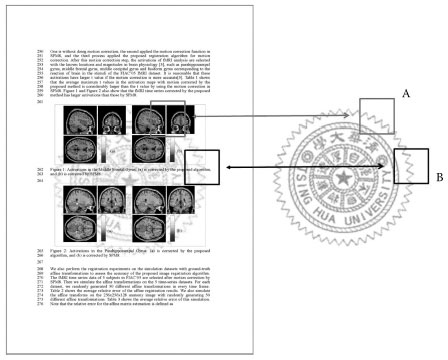

FIGURE 4.5 Detection of the watermark in the watermarked document image.

4.5.2 WATERMARK REMOVAL FOR TEXT IMAGES

In the text regions of a watermarked document, the image is not changed by the watermark; the watermark is visible only in the background of the document image and is fainter than the document content. After detecting the location and estimating the geometric transformation of the watermark Iw in the watermarked document image, we compare corresponding sliding windows in the watermarked document and in the aligned watermark. If the contents of corresponding windows are similar, as in area B of Figure 4.5, intensity thresholding is applied to separate text from watermark based on their intensity distributions. If the contents of corresponding windows are very different, as in area A of Figure 4.5, the window is left unchanged. A small sliding window gives a better recovery of the watermark-free document image.
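A minimal sketch of the sliding-window procedure on grayscale arrays is given below. This section does not specify how window similarity or the text threshold is measured, so both are assumptions here: similarity is taken as the mean absolute gray-level difference between the document window and the aligned watermark window, and within a similar window every pixel brighter than a fixed threshold is treated as watermark or background and set to white. The window size, thresholds, and the function name are illustrative only.

```python
import numpy as np

def remove_text_watermark(doc, wm_aligned, win=8, max_diff=40.0,
                          text_thresh=100.0, white=255.0):
    """Sliding-window watermark removal for a grayscale document (sketch)."""
    out = np.asarray(doc, dtype=float).copy()
    wm = np.asarray(wm_aligned, dtype=float)
    rows, cols = out.shape
    for y in range(0, rows, win):
        for x in range(0, cols, win):
            d = out[y:y + win, x:x + win]      # a view, so edits land in `out`
            w = wm[y:y + win, x:x + win]
            if np.mean(np.abs(d - w)) > max_diff:
                continue                       # very different (like area A): do nothing
            d[d > text_thresh] = white         # similar (like area B): keep dark text only
    return out

wm = np.full((16, 16), 255.0)
wm[:8, :] = 200.0                 # aligned watermark covers the top half
doc = wm.copy()
doc[2, 3] = 0.0                   # a text pixel printed over the watermark
doc[8:, 8:] = 50.0                # a dark picture that is not part of the watermark
clean = remove_text_watermark(doc, wm)
print(clean[2, 3], clean[0, 0], clean[12, 12])   # 0.0 255.0 50.0
```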

4.6 EXPERIMENTS AND DISCUSSIONS

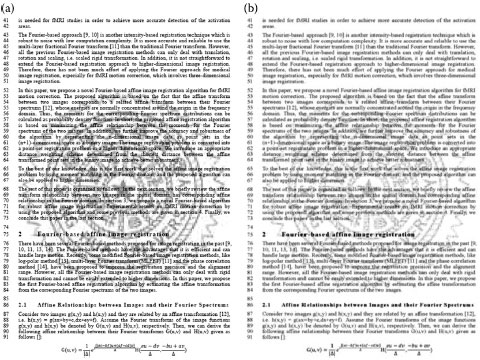

This section presents two types of watermark removal experiments to demonstrate the effectiveness of the proposed algorithm: picture watermark removal and document image watermark removal. In the picture watermark removal experiments, the color Lena image (512 × 512) is used as the original picture, and the Tsing Hua logo (146 × 139) is imposed as the watermark with random rigid geometric transforms and different blending weights c, as shown in Figure 4.6. The documents were produced by ourselves, and the same Tsing Hua logo watermark was added with random rigid geometric transforms. The watermarked images and documents were printed on an HP Color LaserJet CP2020 Series PCL6 printer and then scanned with an HP Scanjet scanner, so that watermark removal is tested on paper copies in a practical setting.

FIGURE 4.6 First row: watermarked images with random watermark positions and scales. The blending weight c value is set to (a) 0.8, (b) 0.5, and (c) 0.3. Second row: the corresponding watermark-removed images by using the proposed algorithm.

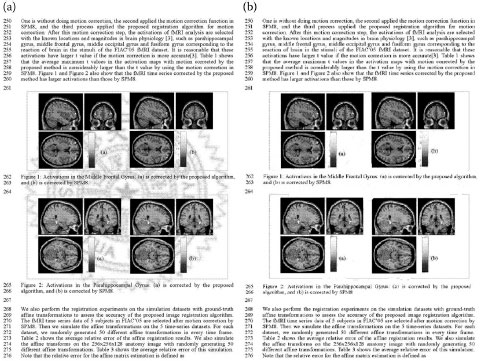

When there is no noise or picture in the watermarked document image, watermark removal can be accomplished simply by intensity thresholding, provided the watermark can be precisely detected and aligned. In practice, however, scanning can introduce considerable noise and image degradation. Figure 4.7 depicts an example of a document image before and after paper scanning; the image quality is clearly degraded after scanning. Figure 4.8 shows an example of the watermark removal result on a scanned document image containing pictures and text by using the proposed watermark removal algorithm. The proposed algorithm provides a satisfactory watermark removal result in this experiment.

FIGURE 4.7 (a) The ideal watermarked document and (b) the watermarked document image after paper scanning.

FIGURE 4.8 (a) A scanned watermarked document image and (b) the watermark-removed document image by using the proposed algorithm.

In this chapter, we presented a watermark removal algorithm to remove visible watermarks from picture or text images. Our algorithm is based on first applying a robust Fourier-moment-based image registration algorithm for watermark detection, followed by a watermark removal process for picture and text images. Experimental results demonstrate satisfactory watermark removal results for both the picture and document images. In the future, we aim to extend this robust Fourier-moment-based image registration algorithm to overcome more challenging watermark-embedding problems.

Averbuch, A., Coifman, R. R., Donoho, D. L., Elad, M., and Israeli, M., Fast and accurate polar Fourier transform, Appl. Comput. Harmon. Anal., 21, 145–167, 2006.

Barni, M., Bartolini, F., Cappellini, V., and Piva, A., A DCT-domain system for robust image watermarking, Signal Process., 66(3), 357–372, 1998.

Bracewell, R. N., Chang, K. Y., Jha, A. K., and Wang, Y. H., Affine theorem for two-dimensional Fourier transform, Electron. Lett., 29(3), 304–309, 1993.

Braudaway, G. W., Magerlein, K. A., and Mintzer, F., Protecting publicly-available images with a visible image watermark, in Optical Security and Counterfeit Deterrence Techniques, vol. 2659, R. L. van Renesse (ed.), San Jose, CA: IS&T and SPIE, pp. 126–133, 1996.

De Castro, E. and Morandi, C., Registration of translated and rotated images using finite Fourier transforms, IEEE Trans. Pattern Anal. Mach. Intell., 3, 700–703, 1987.

Hu, Y. and Kwong, S., Wavelet domain adaptive visible watermarking, Electron. Lett., 37(20), 1219–1220, September 2001.

Hu, Y. J., Kwong, S., and Huang, J., An algorithm for removable visible watermarking, IEEE Trans. Circuits Syst. Video Technol., 16(1), 129–133, 2006.

Huang, H. C., Chen, T. W., Pan, J. S., and Ho, J. H., Copyright protection and annotation with reversible data hiding and adaptive visible watermarking, Second International Conference on Innovative Computing, Information and Control, pp. 292–292, September 2007.

Kankanhalli, M. S., Rajmohan, R., and Ramakrishnan, K. R., Adaptive visible watermarking of images, in Proceedings of the IEEE Conference Multimedia Computing and Systems, vol. 1, pp. 568–573, 1999.

Liu, T. Y. and Tsai, W. H., Generic lossless visible watermarking—A new approach, IEEE Trans. Image Process., 19(5), 1224–1235, 2010.

Pan, W., Qin, K., and Chen, Y., An adaptable-multilayer fractional Fourier transform approach for image registration, IEEE Trans. Pattern Anal. Mach. Intell., 31(3), 400–413, 2009.

Pei, S. C. and Zeng, Y. C., A novel image recovery algorithm for visible watermarked images, IEEE Trans. Inf. Forensics Sec., 1(4), 543–550, 2006.

Pitas, I., A method for watermark casting on digital images, in Proceedings of the IEEE International Conference on Image Processing, vol. 3, pp. 215–218, 1996.

Pitas, I. and Kaskalis, P. H., Applying signatures on digital images, in Proceedings of the IEEE Workshop Nonlinear Image and Signal Processing, Neos Marmaros, Greece, pp. 460–463, June 1995.

Rao, A. R., Braudaway, G. W., and Mintzer, F. C., Automatic visible watermarking of images, Proceedings of the SPIE, Optical Security and Counterfeit Deterrence Techniques II, vol. 3314, pp. 110–121, 1998.

Reddy, B. S. and Chatterji, B. N., An FFT-based technique for translation, rotation, and scale-invariant image registration, IEEE Trans. Image Process., 5(8), 1266–1270, 1996.

Van Schyndel, R. G., Tirkel, A. Z., and Osborne, C. F., A digital watermark, in Proceedings of the IEEE International Conference on Image Processing, vol. 2, pp. 86–90, November 1994.

Xia, X. G., Boncelet, C., and Arce, G., Wavelet transform based watermark for digital images, Opt. Express, 3(12), 497–511, 1998.