It’s difficult to overstate the importance of testing in the context of game design. Because games are highly dynamic systems, any number of outcomes can emerge when real people with different skills, experiences, and expectations sit down and play. Just as UX designers benefit by seeing what happens when people actually use their interfaces, game designers benefit by seeing what happens when people actually play their games. Testing early and often sets up a cycle in which testing informs game design, which generates improvements to the experience, which in turn need to be tested. In his influential book The Art of Game Design, Jesse Schell goes so far as to say that “good games are created through playtesting.”[31]

Playtesting encompasses all of the same objectives as usability testing, as well as many more. In fact, it serves so many objectives that it constitutes a distinct practice. In playtesting, you’re weighing design elements that seldom are concerns in conventional usability, such as:

How much fun players have.

Which parts of the game are too difficult or too easy.

How long people feel motivated to play before they become bored.

Whether the amount of experience required to level up is appropriate.

Whether the strengths of different skills are balanced against one another.

Whether people find the story line interesting, amusing, or heart-wrenching.

Whether players identify with the characters in the game.

Still, playtesting proceeds in much the same way as the good old usability testing we all know and love. That is, it is best handled as frequently scheduled one-on-one sessions with participants who are asked to think aloud as they complete a set of objectives while a facilitator records observations about their actions. UX designers should feel very much at home in such playtests and find that their existing skills translate quite well. For this reason, I won’t dwell on the basic procedures and logistics of playtesting, and will focus instead on the considerations that are specific to games.

In playtesting, designers need to look for a very broad set of potential problems. These include:

Usability. As we all know, usability is the extent to which players understand the interface and are able to successfully operate it to achieve intended tasks.

Ergonomics. Often a concern of conventional testing, ergonomics is especially important in games, because each has its own custom mapping of controls. You may find that the placement of the buttons assigned to aiming and firing makes it too difficult for players to do both at the same time, for example.

Aesthetics. In addition to the visual and aural design of the game, aesthetics includes the storytelling elements of narrative and character development, the tactile experience of vibrating and force feedback controllers, and the game’s sense of humor. Testing is a great opportunity to see whether the jokes fall flat or people think the story is pretentious.

Agility. This is how much physical skill the game demands of the player to succeed. Testers need to consider questions like whether a jump is too difficult for players to time correctly, or whether too many enemies are firing on the player at once.

Balance. The attributes assigned to the different elements in the game must work in combination to create an experience that’s seen as fair and equitable.

Puzzles. Players must be able to solve the cognitive challenges that the game presents. For each puzzle encountered, are most people able to “get it” with the right amount of applied thought, or do they tend to become irrevocably stumped?

Motivation. Players should perceive the rewards of playing as sufficient to continue trying. Do players take an active interest in the game? If so, how long is it sustained? At what point do people lose interest, and why? Would they be better motivated by different rewards or game structures?

Affect. Most important, playtesting needs to examine whether players have positive feelings about the experience. When they’re playing, are they enjoying themselves? Are they bored, frustrated, or amused? After playing, do they regard the experience as time well spent? Do they want to continue playing the game after the playtest is over?

Many of the guidelines discussed in this section apply to usability testing as well, but they take on a pronounced importance in the context of playtesting.

Because games are meant to be enjoyable experiences, a large number of people are wonderfully enthusiastic about participating in playtesting. Although these players are a great resource, keep in mind that they might not play the same way other important segments of your target audience would play. Recruiting exclusively from eager volunteers may result in oversampling players who are disproportionately skilled, knowledgeable, or positively biased toward gaming. Such an unrepresentative sample can distort the picture of the game that emerges from testing.

In particular, many of the applications of game design discussed in this book are targeted at traditional gamers and nongamers alike. Make a concerted effort to recruit test participants who represent the diversity of your actual target audience. Be sure to sample among genders, ages, ethnicities, income levels, education levels, and game aptitudes in proportion to the communities in which you’ll be promoting the game. If you’re targeting a very specific gamer demographic (for example, unmarried 24- to 30-year-old female college graduates living in urban areas and working in finance), focus on just those people. Write up a set of screener questions to probe for these criteria, and conduct phone interviews before accepting participants into the test. If you can’t get the participants you need from volunteers alone, consider enlisting the help of an agency.
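One way to turn "in proportion" into a concrete recruiting plan is to convert audience shares into per-segment quotas. The following is a minimal sketch, not a prescribed method: the segment names and percentages are hypothetical and do not come from any real audience data, and the function name `recruiting_quotas` is an assumption made for illustration. It uses largest-remainder rounding so that the quotas always add up to the number of test slots available.

```python
from math import floor

def recruiting_quotas(audience_shares, total_slots):
    """Split total_slots across segments in proportion to audience_shares.

    audience_shares maps a segment name to its relative weight in the
    target audience (weights are normalized, so they need not sum to 100).
    Largest-remainder rounding keeps the quotas summing to total_slots.
    """
    total_weight = sum(audience_shares.values())
    exact = {seg: total_slots * weight / total_weight
             for seg, weight in audience_shares.items()}
    quotas = {seg: floor(value) for seg, value in exact.items()}
    leftover = total_slots - sum(quotas.values())
    # Hand the remaining slots to the segments with the largest fractions.
    by_remainder = sorted(exact, key=lambda seg: exact[seg] - quotas[seg],
                          reverse=True)
    for seg in by_remainder[:leftover]:
        quotas[seg] += 1
    return quotas

# Hypothetical audience mix (percentages), for illustration only.
shares = {"frequent gamers": 30, "occasional gamers": 45, "nongamers": 25}
quotas = recruiting_quotas(shares, 12)
print(quotas)
```

With twelve slots, this mix reserves a quarter of the sessions for nongamers, who are the group least likely to show up among eager volunteers.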

Lab-based testing always has the disadvantage of creating an artificial environment, one in which people may not behave as they would in the real world. The testing environment is a particular concern for playtesting, because players would normally feel very much at ease while gaming. Seating them in a testing lab in front of one-way glass from which muffled comments and chuckles occasionally emanate can completely kill the experience.

Whenever possible, use remote testing and screen-sharing software to observe players using the game on their home or work computers. This method has a lot of appeal, because players are in the environment where they would normally experience the game anyway. Be sure to ask players about the location from which they’re remoting so that you can better understand the way they would typically play your game. See Nate Bolt and Tony Tulathimutte’s book Remote Research for an in-depth guide to running tests long-distance.[32]

Remote testing is not always feasible, though, especially if you’re developing for a platform other than a desktop computer. When you must do lab-based testing, do it in a location that resembles the conditions in which players actually play. Let them sprawl out on a couch, a recliner, or a beanbag chair. Banish fluorescent lighting. Paint the walls in colors other than white or gray. Offer them a beer (laws permitting). Show them where the restroom is, and invite them to take a break whenever they feel the urge. Make it feel cozy (Figure 8-1).

The question-and-answer style of facilitation that’s the norm in usability testing is too intrusive for playtesting. Players need time to focus and enter a state of flow, and there’s a significant risk that your questions will influence their behavior in the game. It may take some practice, but get used to sitting back and observing quietly. Provide minimal direction, and avoid asking questions unless you really need to. You’ll get less benefit from the think-aloud protocol as players turn their attention to the gameplay, but that’s okay, because players need time to concentrate on what they’re doing. If you prompt them when they fall silent, you might interrupt at the worst possible moment and lose a chance to gain valuable insight. Let it go, wait until the next break, and then remind the players to try to think out loud. Take careful notes while the test is in progress, and discuss your observations with the players after they’re done.

Instead of the traditional test script, prepare a script of key events that you especially want to observe people handling. Get ready for the test by running through the game and making note of the points around which the play turns—for example, when the player needs to learn a new skill, when a hint to the solution of a puzzle is dropped, or when a tougher enemy is first introduced. Draw up a list of these events and spend some time studying it (they might happen in any order). As each event arises in the game, note the players’ level of success in the situation, their apparent understanding of what’s happening, and their subsequent action in the game.
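If you take notes on a laptop, the script of key events can be kept as a simple structure that accepts observations in whatever order the events occur. This is only a sketch under stated assumptions: the class names `Observation` and `EventScript`, their fields, and the sample events are all hypothetical, chosen to mirror the three things worth noting for each event (level of success, apparent understanding, and subsequent action).

```python
from dataclasses import dataclass, field

@dataclass
class Observation:
    success: str        # how well the player handled the event
    understanding: str  # facilitator's read on what the player grasped
    next_action: str    # what the player did immediately afterward

@dataclass
class EventScript:
    events: list                               # key events to watch for
    notes: dict = field(default_factory=dict)  # event -> list of observations

    def record(self, event, observation):
        """Attach an observation to a scripted event, in any order."""
        if event not in self.events:
            raise KeyError(f"{event!r} is not on the script")
        self.notes.setdefault(event, []).append(observation)

    def unobserved(self):
        """Scripted events that have not come up yet in this session."""
        return [event for event in self.events if event not in self.notes]

# Hypothetical key events, for illustration only.
script = EventScript(events=["learns a new skill",
                             "puzzle hint is dropped",
                             "first tougher enemy"])
script.record("first tougher enemy",
              Observation("cleared on third try",
                          "recognized the attack pattern",
                          "went looking for a health pickup"))
remaining = script.unobserved()
print(remaining)
```

Checking `unobserved()` at each break shows which events still need attention before the session ends.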

Usability tests of conventional user interfaces seldom last longer than two hours, which is more than sufficient when a user’s typical interaction with a website lasts only a few minutes. But depending on the design, video games can run quite long. Many of the most important observations that testing can provide might not become apparent until the player is hours into the experience. On the other hand, the quality of feedback will start to diminish after a few hours, so all-day sessions aren’t the way to go either.

If you’re testing a long game, break the evaluation into multiple sessions of two to three hours each. That’ll provide enough time per sitting for people to get comfortable with the game without becoming overly fatigued. If confidentiality concerns permit, even consider allowing players to continue playing the game on their own after the observation session is done, so that they can e-mail you with additional thoughts. Extended testing outside of the formal testing environment can be an invaluable way to evaluate engagement with the game over the long term.

Early in development, you may find that the prototypes you’re testing are plagued with problems—bugs, glitches, and challenges that you never imagined a player would have. In the test, be ready to skip to a completely different track as needed to get past something that just isn’t working. If you have more tests lined up, make sure the development team is on call to start working on any problems that are exposed in the course of testing, so that you can get the most out of the next session.

In traditional UX design, the ideal is for every task to be completed with as little difficulty as possible. This is not equally true of game design, because an appropriate level of challenge is an important part of the experience. If a game demands too little, it can fail to hold the player’s interest. At the same time, it’s important to identify real problems that unnecessarily diminish the quality of the player experience.

Evaluating a game’s difficulty correctly can be a real dilemma in playtesting, where it can be difficult to distinguish real problems from valuable challenges. People may express frustration with a portion of the game, but simply removing all sources of frustration would inevitably make the game less engaging. When you observe players having difficulty in a testing session, there are a few key questions you should ask yourself before deciding on a course of action.

First consider whether the source of the difficulty is the intended challenge of the design. Suppose a game contains a puzzle that requires players to slide tiles around a board to put them in order. If players are having difficulty getting the tiles to slide in the direction they intend, then they’re spending time on the puzzle’s interface rather than the cognitive challenge of the puzzle itself. UX designers will find that their background makes them adept at identifying such usability problems and synthesizing appropriate fixes.

In a more complex case, suppose that players aren’t tripped up by the puzzle’s interface but instead are having difficulty understanding what the puzzle requires them to do, or formulating the right strategy to complete it. These are arguably parts of the challenge of the puzzle itself and are not necessarily problems demanding resolution. Again, think about whether these challenges are intended parts of the design and whether the puzzle is more enjoyable because of them. If not, then more contextual help might be in order to help people focus on the real challenge. But if they are an intended part of the design, you’ll need to consider some additional questions.

Observe whether the problem is damaging to the player’s willingness to keep at it. This question is tricky to evaluate, because a person’s feelings of frustration may be only temporary. It’s even common for players who feel stuck to get up and walk away for a while, only to come back and decide to give it another go. It’s a good idea to allow players the latitude to do this in testing, rather than making them feel strapped to the chair.

When players make comments like “This is really tough,” it’s okay to ask them to clarify whether they mean it’s good tough or bad tough. Be careful to stay neutral, though, and not to ask too many questions that might change the way your players would behave. You don’t want to lead them toward a conclusion they wouldn’t have drawn on their own.

As long as players show that they’re motivated to keep trying of their own accord, it’s a good sign that the challenge is engaging their interest and would be considered worthwhile. But if they truly throw in the towel, it’s time to assess whether the design should be changed. Even if they don’t completely give up, consider the cumulative effect of such experiences on players, and whether people are inclined to characterize the game as a whole as frustrating.

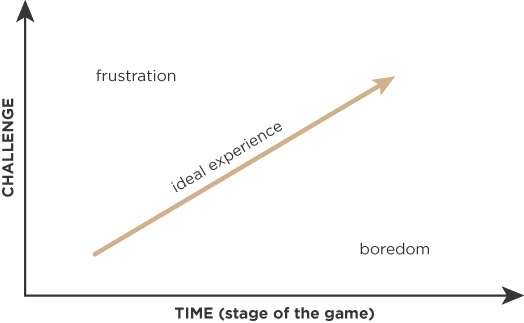

Ideally, games start out by accommodating players who have very little skill. As the game progresses, players are prepared for the incrementally higher challenge of the next stage by merit of having successfully mastered the previous one, and the game gradually becomes more demanding of their skill. Demand too much and the experience becomes frustrating; demand too little and the experience feels boring (Figure 8-2).

Figure 8-2. An ideal experience should offer a steadily increasing level of challenge over the course of the game, or else it risks becoming either too frustrating or too boring.

In this light, consider where the challenge presented to the player falls on what should be a smoothly ascending slope. If previous experience didn’t provide sufficient practice for the current challenge, then players will be more likely to feel discouraged before they’ve given the game a real chance.

Super Mario Bros., for example, starts out by requiring players to master simple jumps and face off against enemies who don’t have especially potent offensive capabilities. But by the end of the game, players are timing jumps to moving platforms while avoiding spurts of erupting lava and facing enemies that shoot fire and daggers. These feats can be difficult for any player, but they’re a reasonable challenge for players who have had time to practice with each of the elements earlier in the game. The same challenges would be exclusionary if they came too early in the game, prompting more players to drop out in frustration. In testing, measure the appropriateness of a challenge by the number of players who are able to successfully clear it, the number of attempts at it, and the total time to completion.
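The three measures named above (how many players clear a challenge, how many attempts they make, and how long it takes) are easy to tabulate per challenge. Here is a minimal sketch, assuming each player's result is logged as a small record; the field names, the function name `summarize_challenge`, and the sample numbers are hypothetical, and the choice of the median as the summary statistic is my own assumption rather than anything the measures require.

```python
from statistics import median

def summarize_challenge(logs):
    """Summarize one challenge from per-player logs.

    Each log is a dict with "cleared" (bool), "attempts" (int), and
    "seconds" (time spent on the challenge). Returns the clear rate, the
    median number of attempts across all players, and the median time to
    completion among players who cleared it.
    """
    players = len(logs)
    cleared = [log for log in logs if log["cleared"]]
    return {
        "players": players,
        "clear_rate": len(cleared) / players if players else 0.0,
        "median_attempts": (median(log["attempts"] for log in logs)
                            if players else None),
        "median_seconds_to_clear": (median(log["seconds"] for log in cleared)
                                    if cleared else None),
    }

# Hypothetical results from four players on one jump sequence.
jump_logs = [
    {"cleared": True, "attempts": 3, "seconds": 95},
    {"cleared": True, "attempts": 5, "seconds": 140},
    {"cleared": False, "attempts": 8, "seconds": 300},
    {"cleared": True, "attempts": 2, "seconds": 60},
]
summary = summarize_challenge(jump_logs)
print(summary)
```

Comparing these summaries from one challenge to the next gives a rough picture of whether difficulty is rising smoothly or spiking at a particular point.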

When trying to solve a problem, are people generally on the right track or do they attribute their difficulty to the wrong causes? Say, for example, that players need to find a key to open a door. Do they start looking for the key, or do they ignore the door and focus their attention on the useless window down the hall? People need to understand the nature of the problems they face and the actions that are available to them, and they must have a reasonable basis for figuring out what they need to do. They must be able to construct a mental model that contains the problem in order to create theories about how to solve it.

By paying attention to how players react when they’re faced with a problem, you can get a sense of whether the design is what it should be. In the case of the door and the key, you might need to provide a stronger cue to direct players’ attention to the door and to suggest that a key is available.

One of the best measures of a problem is how players feel about it once some time has passed since they overcame it. In retrospect, they may remember an experience that felt frustrating at the time as ultimately rewarding or as inconsequential in the broader context of the game.

Don’t ask people to reflect on problems right away, when negative emotions are still fresh. Instead, keep a list of the problems people encounter as they play the game, and when the testing is over, ask them to recall their most positive and negative experiences of the game. Then prompt them to comment on specific problems they faced and how they feel about them now. Persistent negative feelings about those problems are a good sign that the design should be revisited.

How can you assess whether most players will feel engaged by a game experience? You could set out to test for fun, but fun is a funny thing. It’s very subjective and can be defined in any number of ways. It’s hard to test for something so amorphous, because there are no good standards for saying what level of fun is sufficient or what type of fun players should be having.

A model that has gained a great deal of traction in game design circles suggests that you can more successfully assess potential engagement by focusing on the things that motivate engagement. The Player Experience of Need Satisfaction (PENS) model, developed by Scott Rigby and Richard Ryan of the consulting firm Immersyve and based on self-determination theory, proposes that three primary motivators drive players’ subjective experience of a game:[33]

Competence—the feeling that you are effective at what you’re doing

Autonomy—the feeling of freedom to make your own decisions and act on them

Relatedness—the feeling of authentic connections to other people

You can measure for these motivators in a playtest session by having players complete short questionnaires immediately following important events in the game, such as a difficult puzzle or a pitched battle. For example, you might ask players to rate the extent to which they agree or disagree with these statements:

“The game kept me on my toes but did not overwhelm me.” (to measure competence)

“I felt controlled and pressured to act a certain way.” (to measure autonomy)

“I formed meaningful connections with other people.” (to measure relatedness)

Asking similar questions periodically over the course of gameplay, you can develop a map of the experiences in the game that most strongly support each of these drivers. Using these measures, Rigby and Ryan report that you can project outcomes such as the likelihood that players will purchase other games from the same developer or that they will recommend the game to others.[34]
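To build that kind of map, the questionnaire responses can be averaged per event and per motivator. The sketch below is not the actual PENS instrument or its scoring procedure: the 7-point agreement scale, the item identifiers, and the function names are all assumptions made for illustration. It does reflect one detail visible in the sample statements, which is that the autonomy item is negatively worded (agreeing with "I felt controlled" signals low autonomy), so its ratings are reversed before averaging.

```python
from collections import defaultdict
from statistics import mean

SCALE_MAX = 7  # assumed scale: 1 = strongly disagree, 7 = strongly agree

# Hypothetical item ids mapped to (motivator, reverse_scored).
ITEMS = {
    "on_my_toes": ("competence", False),
    "felt_controlled": ("autonomy", True),   # negatively worded item
    "meaningful_connections": ("relatedness", False),
}

def score(item, rating):
    """Return (motivator, score), flipping negatively worded items."""
    if not 1 <= rating <= SCALE_MAX:
        raise ValueError(f"rating {rating} is outside 1..{SCALE_MAX}")
    motivator, reverse = ITEMS[item]
    return motivator, (SCALE_MAX + 1 - rating) if reverse else rating

def need_map(responses):
    """Average scores per game event and motivator.

    responses is an iterable of (event, item, rating) tuples.
    """
    buckets = defaultdict(lambda: defaultdict(list))
    for event, item, rating in responses:
        motivator, value = score(item, rating)
        buckets[event][motivator].append(value)
    return {event: {m: mean(values) for m, values in by_motivator.items()}
            for event, by_motivator in buckets.items()}

# Hypothetical responses from two players, for illustration only.
responses = [
    ("tile puzzle", "on_my_toes", 6),
    ("tile puzzle", "on_my_toes", 4),
    ("tile puzzle", "felt_controlled", 2),
    ("boss battle", "felt_controlled", 6),
]
result = need_map(responses)
print(result)
```

In this made-up data, the tile puzzle scores well on autonomy while the boss battle scores poorly, which is the kind of contrast the map is meant to surface.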

Rigby and Ryan have demonstrated correlations between the three measures of motivation and player outcomes for specific genres of games. For example, the PENS measures were found to be very accurate at predicting the likelihood that players of an adventure or role-playing game would purchase more games from the same developer. However, PENS does not predict that same outcome as reliably for first-person shooter games. Figure 8-3 shows the strengths of the relationships that Rigby and Ryan have found in each of four genres.

Figure 8-3. PENS measures were found to accurately predict specific player outcomes. The strength of the relationship varied by the genre of the game being played.

PENS is still maturing as an assessment method. More research is needed to discover how the importance of the three motivators changes for different types of players. There is not yet a robust accounting of outcomes that PENS can be used to project in other genres. And the findings across genres don’t account for design variability within those genres (not all role-playing games play the same way). Still, the demonstrated success of PENS in predicting player outcomes speaks well of motivational models of engagement. With continued development, PENS or a similar method holds the promise of reducing the risk of design by predicting long-range outcomes from short-range measures.

Playtesting may be the part of game design where UX design generalists feel most at home. Although games require significant changes in the way we usually test, we’re also accustomed to adapting our methods to accommodate a broad range of different interfaces, platforms, and design questions. It’s not an enormous leap to adapt them to playtesting. Our skills in recruitment, test design, facilitation, debriefing, and synthesizing observations into actionable recommendations all translate directly.

Playtesting is in fact currently underpracticed by game design companies. As a UX designer, you may find that providing testing consulting services can serve as a good introduction to the game design discipline. It’s a great opportunity to bring a valuable skill set to games while developing a critical eye for design.

[31] Schell, J. (2008). The art of game design. Burlington, MA: Morgan Kaufmann, p. 389.

[32] Bolt, N., & Tulathimutte, T. (2010). Remote research: Real users, real time, real research. New York, NY: Rosenfeld Media (rosenfeldmedia.com/books/remote-research).

[33] Rigby, S., & Ryan, R. (2011). Glued to games: How video games draw us in and hold us spellbound. Santa Barbara, CA: Praeger, p. 10.

[34] Ryan, R. M., Rigby, C. S., & Przybylski, A. (2006). The motivational pull of video games: A self-determination theory approach. Motivation and Emotion, 30, 344–360.