![]()

Data Protection with Check Constraints and Triggers

You can’t, in sound morals, condemn a man for taking care of his own integrity. It is his clear duty.

—Joseph Conrad

One of the weirdest things I see in database implementations is that people spend tremendous amounts of time designing the correct database storage (or, at least, what seems like tremendous amounts of time to them) and then just leave the data unprotected with tables being more or less treated like buckets that will accept anything, opting to let code outside of the database layer to do all of the data protection. Honestly, I do understand the allure, in that the more constraints you apply, the harder development is in the early stages of the project, and the programmers honestly do believe that they will catch everything. The problem is, there is rarely a way to be 100% sure that all code written will always enforce every rule.

The second argument against using automatically enforced data protection is that programmers want complete control over the errors they will get back and over what events may occur that can change data. Later in this chapter, I will suggest methods that will let data formatting or even cascading insert operations occur to make sure that certain conditions in the data are met, making coding a lot easier. While the data being manipulated “magically” can be confusing initially, you have to think of the data layer as part of the application, not as a bucket with no limitations. Keeping the data from becoming an untrustworthy calamity of random bits is in everyone’s best interest.

Perhaps, in an ideal world, you could control all data input carefully, but in reality, the database is designed and then turned over to the programmers and users to “do their thing.” Those pesky users immediately exploit any weakness in your design to meet the requirements that they “thought they gave you in the first place.” No matter how many times I’ve forgotten to apply a UNIQUE constraint in a place where one was natural to be (yeah, I am preaching to myself along with the choir in this book sometimes), it’s amazing to me how quickly the data duplications start to occur. Ultimately, user perception is governed by the reliability and integrity of the data users retrieve from your database. If they detect data anomalies in their data sets (usually in skewed report values), their faith in the whole application plummets faster than a skydiving elephant who packed lunch instead of a parachute. After all, your future reputation is based somewhat on the perceptions of those who use the data on a daily basis.

One of the things I hope you will feel as you read this chapter (and keep the earlier ones in mind) is that, if at all possible, the data storage layer should own protection of the fundamental data integrity. Not that the other code shouldn’t play a part: I don’t want to have to wait for the database layer to tell me that a required value is missing, but at the same time, I don’t want a back-end loading process to have to use application code to validate that the data is fundamentally correct either. If the column allows NULLs, then I should be able to assume that a NULL value is at least in some context allowable. If the column is a nvarchar(20) column with no other data checking, I should be able to put every Unicode character in the column, and up to 20 concatenated values at that. The primary point of data protection is that the application layers ought do a good job of making it easy for the user, but the data layer can realistically be made nearly 100 percent trustworthy, whereas the application layers cannot. At a basic level, you expect keys to be validated, data to be reasonably formatted and fall within acceptable ranges, and required values to always exist, just to name a few. When those criteria can be assured, the rest won’t be so difficult, since the application layers can trust that the data they fetch from the database meets them, rather than having to revalidate.

The reason I like to have the data validation and protection logic as close as possible to the data it guards is that it has the advantage that you have to write this logic only once. It’s all stored in the same place, and it takes forethought to bypass. At the same time, I believe you should implement all data protection rules in the client, including the ones you have put at the database-engine level. This is mostly for software usability sake, as no user wants to have to wait for the round-trip to the server to find out that a column value is required when the UI could have simply indicated this to them, either with a simple message or even with visual cues. You build these simple validations into the client, so users get immediate feedback. Putting code in multiple locations like this bothers a lot of people because they think it’s

- Bad for performance

- More work

As C.S. Lewis had one of his evil characters note, “By mixing a little truth with it they had made their lie far stronger.” The fact of the matter is that these are, in fact, true statements, but in the end, it is a matter of degrees. Putting code in several places is a bit worse on performance, usually in a minor way, but done right, it will help, rather than hinder, the overall performance of the system. Is it more work? Well, initially it is for sure. I certainly can’t try to make it seem like it’s less work to do something in multiple places, but I can say that it is completely worth doing. In a good user interface, you will likely code even simple rules in multiple places, such as having the color of a column indicate that a value is required and having a check in the submit button that looks for a reasonable value instantly before trying to save the value where it is again checked by the business rule or object layer.

The real problem we must solve is that data can come from multiple locations:

- Users using custom front-end tools

- Users using generic data manipulation tools, such as Microsoft Access

- Routines that import data from external sources

- Raw queries executed by data administrators to fix problems caused by user error

Each of these poses different issues for your integrity scheme. What’s most important is that each of these scenarios (with the possible exception of the second) forms part of nearly every database system developed. To best handle each scenario, the data must be safeguarded, using mechanisms that work without the responsibility of the user.

If you decide to implement your data logic in a different tier other than directly in the database, you have to make sure that you implement it—and far more importantly, implement it correctly—in every single one of those clients. If you update the logic, you have to update it in multiple locations anyhow. If a client is “retired” and a new one introduced, the logic must be replicated in that new client. You’re much more susceptible to coding errors if you have to write the code in more than one place. Having your data protected in a single location helps prevent programmers from forgetting to enforce a rule in one situation, even if they remember everywhere else.

Because of concurrency, every statement is apt to fail due to a deadlock, or a timeout, or the data validated in the UI no longer being in the same state as it was even milliseconds ago. In Chapter 11, we will cover concurrency, but suffice it to say that errors arising from issues in concurrency are often exceedingly random in appearance and must be treated as occurring at any time. And concurrency is the final nail in the coffin of using a client tier only for integrity checking. Unless you elaborately lock all users out of the database objects you are using, the state could change and a database error could occur. Are the errors annoying? Yes, they are, but they are the last line of defense between having excellent data integrity and something quite the opposite.

In this chapter, I will look at the two basic building blocks of enforcing data integrity in SQL Server, first using declarative objects: check constraints, which allow you to define predicates on new rows in a table, and triggers, which are stored procedure style objects that can fire after a table’s contents have changed.

Check constraints are part of a class of the declarative constraints that are a part of the base implementation of a table. Basically, constraints are SQL Server devices that are used to enforce data integrity automatically on a single column or row. You should use constraints as extensively as possible to protect your data, because they’re simple and, for the most part, have minimal overhead.

One of the greatest aspects of all of SQL Server’s constraints (other than defaults) is that the query optimizer can use them to optimize queries, because the constraints tell the optimizer about some additional quality aspect of the data. For example, say you place a constraint on a column that requires that all values for that column must fall between 5 and 10. If a query is executed that asks for all rows with a value greater than 100 for that column, the optimizer will know without even looking at the data that no rows meet the criteria.

SQL Server has five kinds of declarative constraints:

- NULL: Determines if a column will accept NULL for its value. Though NULL constraints aren’t technically constraints, they behave like them.

- PRIMARY KEY and UNIQUE constraints: Used to make sure your rows contain only unique combinations of values over a given set of key columns.

- FOREIGN KEY: Used to make sure that any migrated keys have only valid values that match the key columns they reference.

- DEFAULT: Used to set an acceptable default value for a column when the user doesn’t provide one. (Some people don’t count defaults as constraints, because they don’t constrain updates.)

- CHECK: Used to limit the values that can be entered into a single column or an entire row.

We have introduced NULL, PRIMARY KEY, UNIQUE, and DEFAULT constraints in enough detail in Chapter 6; they are pretty straightforward without a lot of variation in the ways you will use them. In this section, I will focus the examples on the various ways to use check constraints to implement data protection patterns for your columns/rows. You use CHECK constraints to disallow improper data from being entered into columns of a table. CHECK constraints are executed after DEFAULT constraints (so you cannot specify a default value that would contradict a CHECK constraint) and INSTEAD OF triggers (covered later in this chapter) but before AFTER triggers. CHECK constraints cannot affect the values being inserted or deleted but are used to verify the validity of the supplied values.

The biggest complaint that is often lodged against constraints is about the horrible error messages you will get back. It is one of my biggest complaints as well, and there is very little you can do about it, although I will posit a solution to the problem later in this chapter. It will behoove you to understand one important thing: all statements should have error handling as if the database might give you back an error—because it might.

There are two flavors of CHECK constraint: column and table. Column constraints reference a single column and are used when the individual column is referenced in a modification. CHECK constraints are considered table constraints when more than one column is referenced in the criteria. Fortunately, you don’t have to worry about declaring a constraint as either a column constraint or a table constraint. When SQL Server compiles the constraint, it verifies whether it needs to check more than one column and sets the proper internal values.

We’ll be looking at building CHECK constraints using two methods:

The two methods are similar, but you can build more complex constraints using functions, though the code in a function can be more complex and difficult to manage. In this section, we’ll take a look at some examples of constraints built using each of these methods; then we’ll take a look at a scheme for dealing with errors from constraints. First, though, let’s set up a simple schema that will form the basis of the examples in this section.

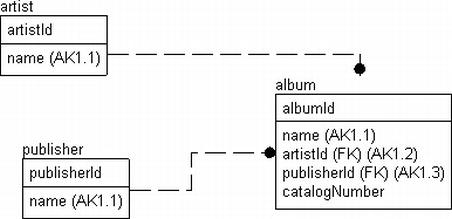

The examples in this section on creating CHECK constraints use the sample tables shown in Figure 7-1.

Figure 7-1. The example schema

To create and populate the tables, execute the following code (in the downloads, I include a simple create database for a database named Chapter7 and will put all objects in that database):

CREATE SCHEMA Music;

GO

CREATE TABLE Music.Artist

(

ArtistId int NOT NULL,

Name varchar(60) NOT NULL,

CONSTRAINT PKMusic_Artist PRIMARY KEY CLUSTERED (ArtistId),

CONSTRAINT PKMusic_Artist_Name UNIQUE NONCLUSTERED (Name)

);

CREATE TABLE Music.Publisher

(

PublisherIdint NOT NULL, primary key

Namevarchar(20) NOT NULL,

CatalogNumberMask varchar(100) NOT NULL

CONSTRAINT DfltMusic_Publisher_CatalogNumberMask default ('%'),

CONSTRAINT AKMusic_Publisher_Name UNIQUE NONCLUSTERED (Name),

);

CREATE TABLE Music.Album

(

AlbumId int NOT NULL,

Name varchar(60) NOT NULL,

ArtistId int NOT NULL,

CatalogNumber varchar(20) NOT NULL,

PublisherId int NOT NULL --not requiring this information

CONSTRAINT PKMusic_Album PRIMARY KEY CLUSTERED(AlbumId),

CONSTRAINT AKMusic_Album_Name UNIQUE NONCLUSTERED (Name),

CONSTRAINT FKMusic_Artist$records$Music_Album

FOREIGN KEY (ArtistId) REFERENCES Music.Artist(ArtistId),

CONSTRAINT FKMusic_Publisher$published$Music_Album

FOREIGN KEY (PublisherId) REFERENCES Music.Publisher(PublisherId)

);

Then seed the tables with the following data:

INSERT INTO Music.Publisher (PublisherId, Name, CatalogNumberMask)

VALUES (1,'Capitol',

'[0-9][0-9][0-9]-[0-9][0-9][0-9a-z][0-9a-z][0-9a-z]-[0-9][0-9]'),

(2,'MCA', '[a-z][a-z][0-9][0-9][0-9][0-9][0-9]'),

INSERT INTO Music.Artist(ArtistId, Name)

VALUES (1, 'The Beatles'),(2, 'The Who'),

INSERT INTO Music.Album (AlbumId, Name, ArtistId, PublisherId, CatalogNumber)

VALUES (1, 'The White Album',1,1,'433-43ASD-33'),

(2, 'Revolver',1,1,'111-11111-11'),

(3, 'Quadrophenia',2,2,'CD12345'),

A likely problem with this design is that it isn’t normalized well enough for a realistic solution. Publishers usually have a mask that’s valid at a given point in time, but everything changes. If the publishers lengthen the size of their catalog numbers or change to a new format, what happens to the older data? For a functioning system, it would be valuable to have a release-date column and catalog number mask that was valid for a given range of dates. Of course, if you implemented the table as presented, the enterprising user, to get around the improper design, would create publisher rows such as 'MCA 1989-1990', 'MCA 1991-1994', and so on and mess up the data for future reporting needs, because then, you’d have work to do to correlate values from the MCA company (and your table would be not even technically in First Normal Form!).

As a first example of a check constraint, consider if you had a business rule that no artist with a name that contains the word 'Pet' followed by the word 'Shop' is allowed, you could code the following as follows (note, all examples assume a case-insensitive collation, which is almost certainly the norm):

ALTER TABLE Music.Artist WITH CHECK

ADD CONSTRAINT chkMusic_Artist$Name$NoPetShopNames

CHECK (Name not like '%Pet%Shop%'),

Then, test by trying to insert a new row with an offending value:

INSERT INTO Music.Artist(ArtistId, Name)

VALUES (3, 'Pet Shop Boys'),

This returns the following result

Msg 547, Level 16, State 0, Line 1

The INSERT statement conflicted with the CHECK constraint "chkMusic_Artist$Name$NoPetShopNames". The conflict occurred in database "Chapter7", table "Music.Artist", column 'Name'.

thereby keeping my music collection database safe from at least one band from the ’80s.

When you create a CHECK constraint, the WITH NOCHECK setting (the default is WITH CHECK) gives you the opportunity to add the constraint without checking the existing data in the table.

Let’s add a row for another musician who I don’t necessarily want in my table:

INSERT INTO Music.Artist(ArtistId, Name)

VALUES (3, 'Madonna'),

Later in the process, it is desired that no artists with the word “Madonna” will be added to the database, but if you attempt to add a check constraint

ALTER TABLE Music.Artist WITH CHECK

ADD CONSTRAINT chkMusic_Artist$Name$noMadonnaNames

CHECK (Name not like '%Madonna%'),

rather than the happy “Command(s) completed successfully.” message you so desire to see, you see the following:

Msg 547, Level 16, State 0, Line 1

The ALTER TABLE statement conflicted with the CHECK constraint "chkMusic_Artist$Name$noMadonnaNames". The conflict occurred in database "Chapter7", table "Music.Artist", column 'Name'.

In order to allow the constraint to be added, you might specify the constraint using WITH NOCHECK rather than WITH CHECK because you now want to allow this new constraint, but there’s data in the table that conflicts with the constraint, and it is deemed too costly to fix or clean up the existing data.

ALTER TABLE Music.Artist WITH NOCHECK

ADD CONSTRAINT chkMusic_Artist$Name$noMadonnaNames

CHECK (Name not like '%Madonna%'),

The statement is executed to add the check constraint to the table definition, though using NOCHECK means that the bad value does not affect the creation of the constraint. This is OK in some cases but can be very confusing because anytime a modification statement references the column, the CHECK constraint is fired. The next time you try to set the value of the table to the same bad value, an error occurs. In the following statement, I simply set every row of the table to the same name it has stored in it:

UPDATE Music.Artist

SET Name = Name;

This gives you the following error message:

Msg 547, Level 16, State 0, Line 1

The UPDATE statement conflicted with the CHECK constraint "chkMusic_Artist$Name$noMadonnaNames". The conflict occurred in database "Chapter7", table "Music.Artist", column 'Name'.

“What?” most users will exclaim. If the value was in the table, shouldn’t it already be good? The user is correct. This kind of thing will confuse the heck out of everyone and cost you greatly in support, unless the data in question is never used. But if it’s never used, just delete it, or include a time range for the values. CHECK Name not like %Madonna% OR rowCreateDate < '20111131' could be a reasonable compromise. Using NOCHECK and leaving the values unchecked is almost worse than leaving the constraint off in many ways.

![]() Tip If a data value could be right or wrong, based on external criteria, it is best not to be overzealous in your enforcement. The fact is, unless you can be 100 percent sure, when you use the data later, you will still need to make sure that the data is correct before usage.

Tip If a data value could be right or wrong, based on external criteria, it is best not to be overzealous in your enforcement. The fact is, unless you can be 100 percent sure, when you use the data later, you will still need to make sure that the data is correct before usage.

One of the things that makes constraints excellent beyond the obvious data integrity reasons is that if the constraint is built using WITH CHECK , the optimizer can make use of this fact when building plans if the constraint didn’t use any functions and just used simple comparisons such as less than, greater than, and so on. For example, imagine you have a constraint that says that a value must be less than or equal to 10. If, in a query, you look for all values of 11 and greater, the optimizer can use this fact and immediately return zero rows, rather than having to scan the table to see whether any value matches.

If a constraint is built with WITH CHECK, it’s considered trusted, because the optimizer can trust that all values conform to the CHECK constraint. You can determine whether a constraint is trusted by using the sys.check_constraints catalog object:

SELECT definition, is_not_trusted

FROMsys.check_constraints

WHEREobject_schema_name(object_id) = 'Music'

AND name = 'chkMusic_Artist$Name$noMadonnaNames';

This returns the following results (with some minor formatting, of course):

definition is_not_trusted

------------------------------ ------------

(NOT [Name] like '%Madonna%') 1

Make sure, if at all possible, that is_not_Trusted = 0 for all rows so that the system trusts all your CHECK constraints and the optimizer can use the information when building plans.

![]() Caution Creating check constraints using the CHECK option (instead of NOCHECK) on a tremendously large table can take a very long time to apply, so often, you’ll feel like you need to cut corners to get it done fast. The problem is that the shortcut on design or implementation often costs far more in later maintenance costs or, even worse, in the user experience. If at all possible, it’s best to try to get everything set up properly, so there is no confusion.

Caution Creating check constraints using the CHECK option (instead of NOCHECK) on a tremendously large table can take a very long time to apply, so often, you’ll feel like you need to cut corners to get it done fast. The problem is that the shortcut on design or implementation often costs far more in later maintenance costs or, even worse, in the user experience. If at all possible, it’s best to try to get everything set up properly, so there is no confusion.

To make the constraint trusted, you will need to clean up the data and use ALTER TABLE <tableName> WITH CHECK CHECK CONSTRAINT constraintName to have SQL Server check the constraint and set it to trusted. Of course, this method suffers from the same issues as creating the constraint with NOCHECK in the first place (mostly, it can take forever!). But without checking the data, the constraint will not be trusted, not to mention that forgetting to reenable the constraint is too easy. For our constraint, we can try to check the values:

ALTER TABLE Music.Artist WITH CHECK CHECK CONSTRAINT chkMusic_Artist$Name$noMadonnaNames;

And it will return the following error (as it did when we tried to create it the first time):

Msg 547, Level 16, State 0, Line 1

The ALTER TABLE statement conflicted with the CHECK constraint "chkMusic_Artist$Name$noMadonnaNames". The conflict occurred in database "Chapter7", table "Music.Artist", column 'Name'.

But, if we delete the row with the name Madonna

DELETE FROM Music.Artist

WHERE Name = 'Madonna';

and try again, the ALTER TABLE statement will be execute without error, and the constraint will be trusted (and all will be well with the world!). One last thing you can do is to disable a constraint, using NOCHECK:

ALTER TABLE Music.Artist NOCHECK CONSTRAINT chkMusic_Artist$Name$noMadonnaNames;

Now, you can see that the constraint is disabled by adding an additional object property:

SELECT definition, is_not_trusted, is_disabled

FROMsys.check_constraints

WHEREobject_schema_name(object_id) = 'Music'

AND name = 'chkMusic_Artist$Name$noMadonnaNames';

which will return

definition is_not_trusted is_disabled

------------------------------ ------------ ------------

(NOT [Name] like '%Madonna%') 1 1

Then, rerun the statement to enable the statement before we continue:

ALTER TABLE Music.Artist WITH CHECK CHECK CONSTRAINT chkMusic_Artist$Name$noMadonnaNames

After that, checking the output of the sys.check_constraints query, you will see that it has been enabled.

CHECK Constraints Based on Simple Expressions

By far, most CHECK constraints are simple expressions that just test some characteristic of a value in a column or columns. These constraints often don’t reference any data other than the single column but can reference any of the columns in a single row.

As a few examples, consider the following:

- Empty strings: Prevent users from inserting one or more space characters to avoid any real input into a column—CHECK (LEN(ColumnName) > 0). This constraint is on 90 percent of the varchar and char columns in databases I design.

- Date range checks: Make sure a reasonable date is entered, for example:

- The date a rental is required to be returned should be greater than one day after the RentalDate (assume the two columns are implemented with the date datatype): CHECK (ReturnDate > dateadd(day,1,RentalDate)).

- Date of some event that’s supposed to have occurred already in the past: CHECK (EventDate <= GETDATE()).

- Value reasonableness: Make sure some value, typically a number of some sort, is reasonable for the situation. Reasonable, of course, does not imply that the value is necessarily correct for the given situation, which is usually the domain of the middle tier of objects—just that it is within a reasonable domain of values. For example:

- Values must be a nonnegative integer. This is common, because there are often columns where negative values don’t make sense (hours worked, miles driven, and so on): CHECK (MilesDriven >= 0).

- Royalty rate for an author that’s less than or equal to 30 percent. If this rate ever could be greater, it isn’t a CHECK constraint. So if 15 percent is the typical rate, the UI might warn that it isn’t normal, but if 30 percent is the absolute ceiling, it would be a good CHECK constraint: CHECK (RoyaltyRate <= .3).

CHECK constraints of this variety are always a good idea when you have situations where there are data conditions that must always be true. Another way to put this is that the very definition of the data is being constrained, not just a convention that could change fairly often or even be situationally different. These CHECK constraints are generally extremely fast and won’t negatively affect performance except in extreme situations. As an example, I’ll just show the code for the first, empty string check, because simple CHECK constraints are easy to code once you have the syntax. A common CHECK constraint that I add to string type columns (varchar, char) prevents blank data from being entered. This is because, most of the time, if a value is required, it isn’t desired that the value for a column be blank, unless having no value for the column makes sense(as opposed to having a NULL value, meaning that the value is not currently known).

For example, in the Album table, the Name column doesn’t allow NULLs. The user has to enter something, but what about when the enterprising user realizes that '' is not the same as NULL? What will be the response to an empty string? Ideally, of course, the UI wouldn’t allow such nonsense for a column that had been specified as being required, but the user just hits the space bar, but to make sure, we will want to code a constraint to avoid it.

To avoid letting a user get away with a blank row, you can add the following constraint to prevent this from ever happening again (after deleting the two blank rows). It works by using the LEN function that does a trim by default, eliminating any space characters, and checking the length:

ALTER TABLE Music.Album WITH CHECK

ADD CONSTRAINT chkMusicAlbum$Name$noEmptyString

CHECK (LEN(Name) > 0); --note,len does a trim by default, so any string

--of all space characters will return 0

Testing this with data that will clash with the new constraint

INSERT INTO Music.Album ( AlbumId, Name, ArtistId, PublisherId, CatalogNumber )

VALUES ( 4, '', 1, 1,'dummy value' );

you get the following error message

Msg 547, Level 16, State 0, Line 1

The INSERT statement conflicted with the CHECK constraint "chkMusicAlbum$Name$noEmptyString". The conflict occurred in database "Chapter7", table "Music.Album", column 'Name'.

All too often, nonsensical data is entered just to get around your warning, but that is more of a UI or managerial oversight problem than a database design concern, because the check to see whether 'ASDFASDF' is a reasonable name value is definitely not of the definite true/false variety. (Have you seen what some people name their kids?) What’s generally the case is that the user interface will then prevent such data from being created via the UI, but the CHECK constraint is there to prevent other processes from putting in completely invalid data as well.

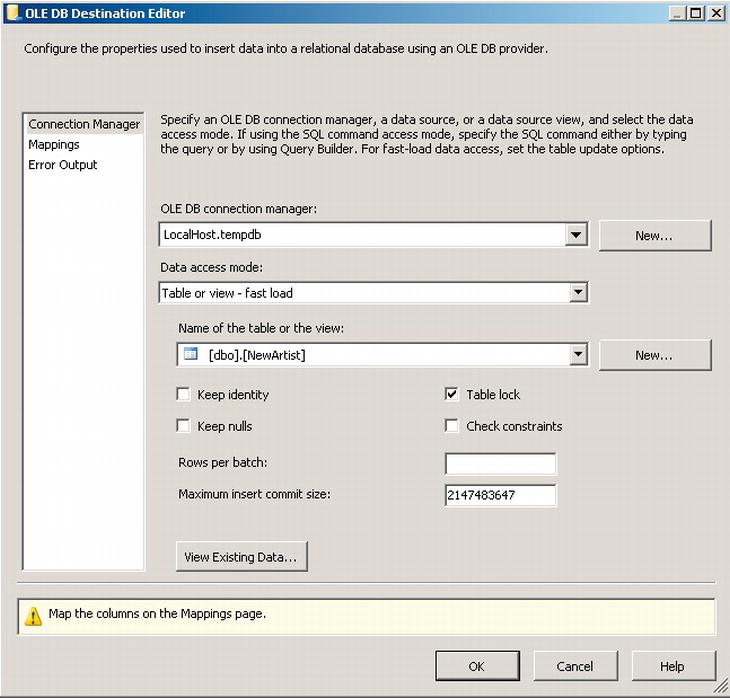

These check constraints are very useful when you are loading data into the table from an outside source. Often, when data is imported from a file, like from the Import Wizard, blank data will be propagated as blank values, and the programmers involved might not think to deal with this condition. The check constraints make sure that the data is put in correctly. And as long as you are certain to go back and recheck the trusted status and values, their existence helps to remind you even if they are ignored, like using SSIS’s bulk loading features. In Figure 7-2, you will see that you can choose to (or choose not to) check constraints on the OLEDB destination output. In this case, it may either disable the constraint, or set to not trusted to speed loading, but it will limit the data integrity and optimizer utilization of the constraint until you reset it to trusted as was demonstrated in the previous section.

Figure 7-2. Example SSIS OLEDB Output with Check constraints deselected

CHECK Constraints Using Functions

Sometimes, you really need to implement a complex data check where a simple Boolean expression using the columns in the table and base T-SQL scalar functions just won’t do. In standard SQL, you can use a subquery in your constraints. However, in SQL Server, subqueries are not allowed, but you can use a scalar T-SQL function, even if it accesses another table.

In general, using functions is a fairly atypical solution to ensuring data integrity, but it can be far more powerful and, in many ways, quite useful when you need to build slightly complex data integrity protection. For the most part, CHECK constraints usually consist of the simple task of checking a stable format or value of a single column, and for these tasks, a standard CHECK constraint using the simple <BooleanExpression> is perfectly adequate.

However, a CHECK constraint need not be so simple. A UDF can be complex and might touch several tables in the instance. Here are some examples:

- Complex scalar validations (often using CLR functions): For example, in a situation where a regular expression would be easier to use than a LIKE comparison.

- Validations that access other tables : For example, to check a domain that is based on values in several tables, rather than a simple foreign key. In the example, I will implement an entry mask that is table based, so it changes based on a related table’s value.

I should warn you that calling a UDF has a great deal of overhead, and while you might get the urge to encapsulate a simple scalar for use in a CHECK constraint, it almost always isn’t worth the overhead. As we have mentioned, CHECK constraints are executed once per row affected by the DML modification statement, and this extra cost will be compounded for every row affected by the modification query. I realize that this can be counterintuitive to a good programmer thinking that encapsulation is one of the most important goals of programming, but SQL is quite different from other types of programming in many ways because of the fact that you are pushing so much of the work to the engine, and the engine has its own way of doing things that you must respect.

Hence, it’s best to try to express your Boolean expression without a UDF unless it’s entirely necessary to access additional tables or do something more complex than a simple expression can. In the following examples, I’ll employ UDFs to provide powerful rule checking, which can implement complex rules that would prove difficult to code using a simple Boolean expression.

You can implement the UDFs in either T-SQL or a .NET language (VB .NET, C#, or any .NET language that lets you exploit the capabilities of SQL Server 2005+ to write CLR-based objects in the database). In many cases, especially if you aren’t doing any kind of table access in the code of the function, the CLR will perform much better than the T-SQL version.

As an example, I need to access values in a different table, so I’m going to build an example that implements an entry mask that varies based on the parent of a row. Consider that it’s desirable to validate that catalog numbers for albums are of the proper format. However, different publishers have different catalog number masks for their clients’ albums. (A more natural, yet distinctly more complex example would be phone numbers and addresses from around the world.)

For this example, I will continue to use the tables from the previous section. Note that the mask column, Publisher.CatalogNumberMask , needs to be considerably larger (five times larger in my example code) than the actual CatalogNumber column, because some of the possible masks use multiple characters to indicate a single character. You should also note that it’s a varchar, even though the column is stored as a char value, because using char variables as LIKE masks can be problematic because of the space padding at the end of such columns (the comparison thinks that the extra space characters that are padded on the end of the fixed-length string need to match in the target string, which is rarely what’s desired).

To do this, I build a T-SQL function that accesses this column to check that the value matches the mask, as shown (note that we’d likely build this constraint using T-SQL rather than by using the CLR, because it accesses a table in the body of the function):

CREATE FUNCTION Music.Publisher$CatalogNumberValidate

(

@CatalogNumber char(12),

@PublisherId int --not based on the Artist ID

)

RETURNS bit

AS

BEGIN

DECLARE @LogicalValue bit, @CatalogNumberMask varchar(100);

SELECT @LogicalValue = CASE WHEN @CatalogNumber LIKE CatalogNumberMask

THEN 1

ELSE 0 END

FROM Music.Publisher

WHERE PublisherId = @PublisherId;

RETURN @LogicalValue;

END;

When I loaded the data in the start of this section, I preloaded the data with valid values for the CatalogNumber and CatalogNumberMask columns:

SELECT Album.CatalogNumber, Publisher.CatalogNumberMask

FROMMusic.Album as Album

JOIN Music.Publisher as Publisher

ON Album.PublisherId = Publisher.PublisherId;

This returns the following results:

CatalogNumber CatalogNumberMask

-------------- ------------------------------------------------------------

433-43ASD-33 [0-9][0-9][0-9]-[0-9][0-9][0-9a-z][0-9a-z][0-9a-z]-[0-9][0-9]

111-11111-11 [0-9][0-9][0-9]-[0-9][0-9][0-9a-z][0-9a-z][0-9a-z]-[0-9][0-9]

CD12345 [a-z][a-z][0-9][0-9][0-9][0-9][0-9]

Now, let’s add the constraint to the table, as shown here:

ALTER TABLE Music.Album

WITH CHECK ADD CONSTRAINT

chkMusicAlbum$CatalogNumber$CatalogNumberValidate

CHECK (Music.Publisher$CatalogNumbervalidate

(CatalogNumber,PublisherId) = 1);

If the constraint gives you errors because of invalid data existing in the table (because you were adding data, trying out the table, or in real development, this often occurs with test data from trying out the UI that they are building), you can use a query like the following to find them:

SELECT Album.Name, Album.CatalogNumber, Publisher.CatalogNumberMask

FROMMusic.Album AS Album

JOIN Music.Publisher AS Publisher

on Publisher.PublisherId = Album.PublisherId

WHERE Music.Publisher$CatalogNumbervalidate(Album.CatalogNumber,Album.PublisherId) = 1;

Now, let’s attempt to add a new row with an invalid value:

INSERT Music.Album(AlbumId, Name, ArtistId, PublisherId, CatalogNumber)

VALUES (4,'Who''s Next',2,2,'1'),

This causes the error, because the catalog number of '1' doesn’t match the mask set up for PublisherId number 2:

Msg 547, Level 16, State 0, Line 1

The INSERT statement conflicted with the CHECK constraint "chkMusicAlbum$CatalogNumber$CatalogNumberValidate". The conflict occurred in database "Chapter7", table "Music.Album".

Now, changing change the catalog number to something that matches the entry mask the constraint is checking:

INSERT Music.Album(AlbumId, Name, ArtistId, CatalogNumber, PublisherId)

VALUES (4,'Who''s Next',2,'AC12345',2);

SELECT * FROM Music.Album;

This returns the following results, which you can see matches the ‘[a-z][a-z][0-9][0-9][0-9][0-9][0-9]’ mask set up for the publisher with PublisherId = 2:

| AlbumId | Name | ArtistId | CatalogNumber | PublisherId |

| ------- | --------------- | -------- | ------------- | ----------- |

| 1 | The White Album | 1 | 433-43ASD-33 | 1 |

| 2 | Revolver | 1 | 111-11111-11 | 1 |

| 3 | Quadrophenia | 2 | CD12345 | 2 |

| 4 | Who's Next | 2 | AC12345 | 2 |

Using this kind of approach, you can build any single-row validation code for your tables. As described previously, each UDF will fire once for each row and each column that was modified in the update. If you are making large numbers of inserts, performance might suffer, but having data that you can trust is worth it.

We will talk about triggers later in this chapter, but alternatively, you could create a trigger that checks for the existence of any rows returned by a query, based on the query used earlier to find improper data in the table:

SELECT *

FROM Music.Album AS Album

JOIN Music.Publisher AS Publisher

on Publisher.PublisherId = Album.PublisherId

WHERE Music.Publisher$CatalogNumberValidate

(Album.CatalogNumber, Album.PublisherId) <> 1;

There’s one drawback to this type of constraint, whether implemented in a constraint or trigger. As it stands right now, the Album table is protected from invalid values being entered into the CatalogNumber column, but it doesn’t say anything about what happens if a user changes the CatalogEntryMask on the Publisher table. If this is a concern, you’d need to add a CHECK constraint to the Publisher table that validates changes to the mask against any existing data.

![]() Caution Using user-defined functions

that access other rows in the same table is dangerous, because while the data for each row appears in the table as the function is executed, if multiple rows are updated simultaneously, those rows do not appear to be in the table, so if an error condition exists only in the rows that are being modified, your final results could end up in error.

Caution Using user-defined functions

that access other rows in the same table is dangerous, because while the data for each row appears in the table as the function is executed, if multiple rows are updated simultaneously, those rows do not appear to be in the table, so if an error condition exists only in the rows that are being modified, your final results could end up in error.

Enhancing Errors Caused by Constraints

The real downside to check constraints is the error messages they produce upon failure. The error messages are certainly things you don’t want to show to a user, if for no other reason other than they will generate help desk calls every time typical users see them. Dealing with these errors is one of the more annoying parts of using constraints in SQL Server.

Whenever a statement fails a constraint requirement, SQL Server provides you with an ugly message and offers no real method for displaying a clean message automatically. Luckily, SQL Server 2005 implemented vastly improved error-handling capabilities in T-SQL over previous versions. In this section, I’ll briefly detail a way to refine the ugly messages you get from a constraint error message, much like the error from the previous statement:

Msg 547, Level 16, State 0, Line 1

The INSERT statement conflicted with the CHECK constraint "chkMusicAlbum$CatalogNumber$CatalogNumberValidate". The conflict occurred in database "Chapter7", table "Music.Album".

I’ll show you how to map this to an error message that at least makes some sense. First, the parts of the error message are as follows:

- Error number —Msg 547: The error number that’s passed back to the calling program. In some cases, this error number is significant; however, in most cases it’s enough to say that the error number is nonzero.

- Level —Level 16: A severity level for the message. 0 through 18 are generally considered to be user messages, with 16 being the default. Levels 19–25 are severe errors that cause the connection to be severed (with a message written to the log) and typically involve data corruption issues.

- State —State 0: A value from 0–127 that represents the state of the process when the error was raised. This value is rarely used by any process.

- Line —Line 1: The line in the batch or object where the error is occurring. This value can be extremely useful for debugging purposes.

- Error description : A text explanation of the error that has occurred.

In its raw form, this is the exact error that will be sent to the client. Using the new TRY-CATCH error handling, we can build a simple error handler and a scheme for mapping constraints to error messages (or you can do much the same thing in client code as well for errors that you just cannot prevent from your user interface). Part of the reason we name constraints is to determine what the intent was in creating the constraint in the first place. In the following code, we’ll implement a very rudimentary error-mapping scheme by parsing the text of the name of the constraint from the message, and then we’ll look this value up in a mapping table. It isn’t a “perfect” scheme, but it does the trick when using constraints as the only data protection for a situation (it is also helps you to document the errors that your system may raise as well).

First, let’s create a mapping table where we put the name of the constraint that we’ve defined and a message that explains what the constraint means:

CREATE SCHEMA utility; --used to hold objects for utility purposes

GO

CREATE TABLE utility.ErrorMap

(

ConstraintName sysname NOT NULL primary key,

Message varchar(2000) NOT NULL

);

GO

INSERT utility.ErrorMap(constraintName, message)

VALUES ('chkMusicAlbum$CatalogNumber$CatalogNumberValidate',

'The catalog number does not match the format set up by the Publisher'),

Then, we create a procedure to do the actual mapping by taking the values that can be retrieved from the ERROR_%() procedures that are accessible in a CATCH block and using them to look up the value in the ErrorMap table:

CREATE PROCEDURE utility.ErrorMap$MapError

(

@ErrorNumber int = NULL,

@ErrorMessage nvarchar(2000) = NULL,

@ErrorSeverity INT= NULL

) AS

BEGIN

SET NOCOUNT ON

--use values in ERROR_ functions unless the user passes in values

SET @ErrorNumber = Coalesce(@ErrorNumber, ERROR_NUMBER());

SET @ErrorMessage = Coalesce(@ErrorMessage, ERROR_MESSAGE());

SET @ErrorSeverity = Coalesce(@ErrorSeverity, ERROR_SEVERITY());

--strip the constraint name out of the error message

DECLARE @constraintName sysname;

SET @constraintName = substring( @ErrorMessage,

CHARINDEX('constraint "',@ErrorMessage) + 12,

CHARINDEX('"',substring(@ErrorMessage,

CHARINDEX('constraint "',@ErrorMessage) +

12,2000))-1)

--store off original message in case no custom message found

DECLARE @originalMessage nvarchar(2000);

SET @originalMessage = ERROR_MESSAGE();

IF @ErrorNumber = 547 --constraint error

BEGIN

SET @ErrorMessage =

(SELECT message

FROM utility.ErrorMap

WHERE constraintName = @constraintName

);

END

--if the error was not found, get the original message with generic 50000 error number

SET @ErrorMessage = isNull(@ErrorMessage, @originalMessage);

THROW 50000, @ErrorMessage, @ErrorSeverity;

END

GO

Now, see what happens when we enter an invalid value for an album catalog number:

BEGIN TRY

INSERT Music.Album(AlbumId, Name, ArtistId, CatalogNumber, PublisherId)

VALUES (5,'who are you',2,'badnumber',2);

END TRY

BEGIN CATCH

EXEC utility.ErrorMap$MapError;

END CATCH

The error message is as follows:

Msg 50000, Level 16, State 1, Procedure ErrorMap$mapError, Line 24

The catalog number does not match the format set up by the Publisher

rather than:

Msg 547, Level 16, State 0, Line 1

The INSERT statement conflicted with the CHECK constraint "chkMusicAlbum$CatalogNumber$CatalogNumberValidate". The conflict occurred in database "Chapter7", table "Music.Album".

This is far more pleasing, even if it was a bit of a workout getting to this new message.

Triggers are a type of stored procedure attached to a table or view that is executed automatically when the contents of a table are changed. While they share the ability to enforce data protection, they differ from constraints in being far more flexible because you can code them like stored procedures and you can introduce side effects like formatting input data or cascading an operation to another table. You can use them to enforce almost any business rule, and they’re especially important for dealing with situations that are too complex for a CHECK constraint to handle. We used triggers in the previous chapter to automatically manage row update date values.

Triggers often get a bad name because they can be pretty quirky, especially because they can kill performance when you are dealing with large updates. For example, if you have a trigger on a table and try to update a million rows, you are likely to have issues. However, for most OLTP operations in a relational database, operations shouldn’t be touching more than a handful of rows at a time. Trigger usage does need careful consideration, but where they are needed, they are terribly useful. My recommendation is to use triggers when you need to do the following:

- Perform cross-database referential integrity.

- Check inter-row rules, where just looking at the current row isn’t enough for the constraints.

- Check inter-table constraints, when rules require access to data in a different table.

- Introduce desired side effects to your data-modification queries, such as maintaining required denormalizations.

- Guarantee that no insert, update, or delete operations can be executed on a table, even if the user does have rights to perform the operation.

Some of these operations could also be done in an application layer, but for the most part, these operations are far easier and safer (particularly for data integrity) when done automatically using triggers. When it comes to data protection, the primary advantages that triggers have over constraints is the ability to access other tables seamlessly and to operate on multiple rows at once. In Appendix B, I will discuss a bit more of the mechanics of writing triggers and their limitations. In this chapter, I am going to create DML triggers to handle typical business needs.

There are two different types of DML triggers that we will make use of in this section. Each type can be useful in its own way, but they are quite different in why they are used.

- AFTER : These triggers fire after the DML statement (INSERT/UPDATE/DELETE) has affected the table. AFTER triggers are usually used for handling rules that won’t fit into the mold of a constraint, for example, rules that require data to be stored, such as a logging mechanism. You may have a virtually unlimited number of AFTER triggers that fire on INSERT, UPDATE, and DELETE, or any combination of them.

- INSTEAD OF : These triggers operate “instead of” the built-in command (INSERT, UPDATE, or DELETE) affecting the table or view. In this way, you can do whatever you want with the data, either doing exactly what was requested by the user or doing something completely different (you can even just ignore the operation altogether). You can have a maximum of one INSTEAD OF INSERT, UPDATE, and DELETE trigger of each type per table. It is allowed (but not a generally good idea) to combine all three into one and have a single trigger that fires for all three operations.

This section will be split between these two types of triggers because they have two very different sets of use cases. Since coding triggers is not one of the more well trod topics in SQL Server, in Appendix B, I will introduce trigger coding techniques and provide a template that we will use throughout this chapter (it’s the template we used in Chapter 6, too).

AFTER Triggers

AFTER triggers fire after the DML statement has completed. They are the most common trigger that people use, because they have the widest array of uses. Though triggers may not seem very useful, back in SQL Server 6.0 and earlier, there were no CHECK constraints, and even FOREIGN KEYS where just being introduced, so all data protection was managed using triggers. Other than being quite cumbersome to maintain, some fairly complex systems were created using hardware that is comparable to one of my Logitech Harmony remote controls.

In this section on AFTER triggers, I will present examples that demonstrate several forms of triggers that I use to solve problems that are reasonably common. I’ll give examples of the following usages of triggers:

- Range checks on multiple rows

- Maintaining summary values (only as necessary)

- Cascading inserts

- Child-to-parent cascades

- Maintaining an audit trail

- Relationships that span databases and servers

From these examples, you should be able to extrapolate almost any use of AFTER triggers. Just keep in mind that, although triggers are not the worst thing for performance, they should be used no more than necessary.

![]() Note For one additional example, check the section on uniquness in Chapter 8, where I will implement a type of uniqueness based on ranges of data using a trigger-based solution.

Note For one additional example, check the section on uniquness in Chapter 8, where I will implement a type of uniqueness based on ranges of data using a trigger-based solution.

The first type of check we’ll look at is the range check, in which we want to make sure that a column is within some specific range of values. You can do range checks using a CHECK constraint to validate the data in a single row (for example, column > 10) quite easily. However, you wouldn’t want to use them to validate conditions based on aggregates of multiple rows (sum(column) > 10), because if you updated 100 rows, you would have to do 100 validations where one statement could do the same work.

If you need to check that a row or set of rows doesn’t violate a given condition, usually based on an aggregate like a maximum sum, you should use a trigger. As an example, I’ll look at a simple accounting system. As users deposit and withdraw money from accounts, you want to make sure that the balances never dip below zero. All transactions for a given account have to be considered.

First, we create a schema for the accounting groups :

CREATE SCHEMA Accounting;

Then, we create a table for an account and then one to contain the activity for the account:

CREATE TABLE Accounting.Account

(

AccountNumber char(10)

constraint PKAccounting_Account primary key

--would have other columns

);

CREATE TABLE Accounting.AccountActivity

(

AccountNumber char(10) NOT NULL

constraint Accounting_Account$has$Accounting_AccountActivity

foreign key references Accounting.Account(AccountNumber),

--this might be a value that each ATM/Teller generates

TransactionNumberchar(20) NOT NULL,

Datedatetime2(3) NOT NULL,

TransactionAmountnumeric(12,2) NOT NULL,

constraint PKAccounting_AccountActivity

PRIMARY KEY (AccountNumber, TransactionNumber)

);

Now, we add a trigger to the Accounting.AccountActivity table that checks to make sure that when you sum together the transaction amounts for an Account, that the sum is greater than zero:

CREATE TRIGGER Accounting.AccountActivity$insertUpdateTrigger

ON Accounting.AccountActivity

AFTER INSERT,UPDATE AS

BEGIN

SET NOCOUNT ON;

SET ROWCOUNT 0; --in case the client has modified the rowcount

--use inserted for insert or update trigger, deleted for update or delete trigger

--count instead of @@rowcount due to merge behavior that sets @@rowcount to a number

--that is equal to number of merged rows, not rows being checked in trigger

DECLARE @msg varchar(2000), --used to hold the error message

--use inserted for insert or update trigger, deleted for update or delete trigger

--count instead of @@rowcount due to merge behavior that sets @@rowcount to a number

--that is equal to number of merged rows, not rows being checked in trigger

@rowsAffected int = (SELECT COUNT(*) FROM inserted);

-- @rowsAffected int = (SELECT COUNT(*) FROM deleted);

--no need to continue on if no rows affected

IF @rowsAffected = 0 RETURN;

BEGIN TRY

--[validation section]

--disallow Transactions that would put balance into negatives

IF EXISTS ( SELECT AccountNumber

FROM Accounting.AccountActivity as AccountActivity

WHERE EXISTS (SELECT *

FROM inserted

WHERE inserted.AccountNumber =

AccountActivity.AccountNumber)

GROUP BY AccountNumber

HAVING SUM(TransactionAmount) < 0)

BEGIN

IF @rowsAffected = 1

SELECT @msg = 'Account: ' + AccountNumber +

' TransactionNumber:' +

cast(TransactionNumber as varchar(36)) +

' for amount: ' + cast(TransactionAmount as varchar(10))+

' cannot be processed as it will cause a negative balance'

FROM inserted;

ELSE

SELECT @msg = 'One of the rows caused a negative balance';

THROW 50000, @msg, 16;

END

--[modification section]

END TRY

BEGIN CATCH

IF @@trancount > 0

ROLLBACK TRANSACTION;

THROW; --will halt the batch or be caught by the caller's catch block

END CATCH

END;

The key to using this type of trigger is to look for the existence of rows in the base table, not the rows in the inserted table, because the concern is how the inserted rows affect the overall status for an Account. Take this query, which we’ll use to determine whether there are rows that fail the criteria:

SELECT AccountNumber

FROM Accounting.AccountActivity as AccountActivity

WHERE EXISTS (SELECT *

FROM inserted

WHERE inserted.AccountNumber = AccountActivity.AccountNumber)

GROUP BY AccountNumber

HAVING SUM(TransactionAmount) < 0;

The key here is that we could remove the bold part of the query, and it would check all rows in the table. The WHERE clause simply makes sure that the only rows we consider are for accounts that have new data inserted. This way, we don’t end up checking all rows that we know our query hasn’t touched. Note, too, that I don’t use a JOIN operation. By using an EXISTS criteria in the WHERE clause, we don’t affect the cardinality of the set being returned in the FROM clause , no matter how many rows in the inserted table have the same AccountNumber .

To see it in action, use this code:

--create some set up test data

INSERT INTO Accounting.Account(AccountNumber)

VALUES ('1111111111'),

INSERT INTO Accounting.AccountActivity(AccountNumber, TransactionNumber,

Date, TransactionAmount)

VALUES ('1111111111','A0000000000000000001','20050712',100),

('1111111111','A0000000000000000002','20050713',100);

Now, let’s see what happens when we violate this rule:

INSERT INTO Accounting.AccountActivity(AccountNumber, TransactionNumber,

Date, TransactionAmount)

VALUES ('1111111111','A0000000000000000003','20050713',-300);

Here’s the result:

Msg 50000, Level 16, State 16, Procedure AccountActivity$insertUpdateTrigger, Line 36

Account: 1111111111 TransactionNumber:A0000000000000000003 for amount: -300.00 cannot be processed as it will cause a negative balance

The error message is the custom error message that we coded in the case where a single row was modified. Now, let’s make sure that the trigger works when we have greater than one row in the INSERT statement:

--create new Account

INSERT INTO Accounting.Account(AccountNumber)

VALUES ('2222222222'),

GO

--Now, this data will violate the constraint for the new Account:

INSERT INTO Accounting.AccountActivity(AccountNumber, TransactionNumber,

Date, TransactionAmount)

VALUES ('1111111111','A0000000000000000004','20050714',100),

('2222222222','A0000000000000000005','20050715',100),

('2222222222','A0000000000000000006','20050715',100),

('2222222222','A0000000000000000007','20050715',-201);

This causes the following error:

Msg 50000, Level 16, State 16, Procedure AccountActivity$insertUpdateTrigger, Line 36

One of the rows caused a negative balance

The multirow error message is much less informative, though you could expand it to include information about a row (or all the rows) that caused the violation with some more text, even showing the multiple failed values. Usually a simple message is sufficient to deal with, because generally if multiple rows are being modified in a single statement, it’s a batch process, and the complexity of building error messages is way more than it’s worth. Processes would likely be established on how to deal with certain errors being returned.

![]() Tip In the error message, note that the first error states it’s from line 36. This is line 36 of the trigger where the error message was raised. This can be valuable information when debugging triggers (and any SQL code, really). Note also that because the ROLLBACK command was used in the trigger, the batch will be terminated. This will be covered in more detail in the "Dealing with Triggers and Constraints Errors" section later in this chapter.

Tip In the error message, note that the first error states it’s from line 36. This is line 36 of the trigger where the error message was raised. This can be valuable information when debugging triggers (and any SQL code, really). Note also that because the ROLLBACK command was used in the trigger, the batch will be terminated. This will be covered in more detail in the "Dealing with Triggers and Constraints Errors" section later in this chapter.

To see the events for which a trigger fires, you can use the following query:

SELECT trigger_events.type_desc

FROM sys.trigger_events

JOIN sys.triggers

ON sys.triggers.object_id = sys.trigger_events.object_id

WHERE triggers.name = 'AccountActivity$insertUpdateTrigger';

This returns INSERT and UPDATE in two rows, because we declared the Accounting.AccountActivity$insertUpdateTrigger trigger to fire on INSERT and UPDATE operations.

Maintaining summary values is generally not necessary, and doing so typically is just a matter of poor normalization or perhaps a misguided attempt to optimize where a better database design would have sufficed. However, there are cases where some form of active summarization may be necessary:

- There is no other reasonable method available.

- The amount of data to be summarized is large.

- The amount of reads of the summary values is far greater than the activity on the lower values.

As an example, let’s extend the previous example of the Account and AccountActivity tables from the “Range Checks on Multiple Rows” section. To the Account table, I will add a BalanceAmount column:

ALTER TABLE Accounting.Account

ADD BalanceAmount numeric(12,2) NOT NULL

CONSTRAINT DfltAccounting_Account_BalanceAmount DEFAULT (0.00);

Then, we will update the Balance column to have the current value of the data in the -AccountActivity rows. First, running this query to view the expected values:

SELECT Account.AccountNumber,

SUM(coalesce(AccountActivity.TransactionAmount,0.00)) AS NewBalance

FROM Accounting.Account

LEFT OUTER JOIN Accounting.AccountActivity

ON Account.AccountNumber = AccountActivity.AccountNumber

GROUP BY Account.AccountNumber;

This returns the following:

AccountNumber NewBalance

------------- ----------

1111111111 200.00

2222222222 0.00

Now, update the BalanceAmount column values to the existing rows using the following statement:

WITH Updater as (

SELECT Account.AccountNumber,

SUM(coalesce(TransactionAmount,0.00)) as NewBalance

FROM Accounting.Account

LEFT OUTER JOIN Accounting.AccountActivity

On Account.AccountNumber = AccountActivity.AccountNumber

GROUP BY Account.AccountNumber)

UPDATE Account

SET BalanceAmount = Updater.NewBalance

FROM Accounting.Account

JOIN Updater

ON Account.AccountNumber = Updater.AccountNumber;

That statement will make the basis of our changes to the trigger that we added in the previous section (the changes appear in bold). The only change that needs to be made is to filter the Account set down to the accounts that were affected by the DML that cause the trigger to fire. Using an EXISTS filter lets you not have to worry about whether one new row was created for the account or 100.

ALTER TRIGGER Accounting.AccountActivity$insertUpdateTrigger

ON Accounting.AccountActivity

AFTER INSERT,UPDATE AS

BEGIN

SET NOCOUNT ON;

SET ROWCOUNT 0; --in case the client has modified the rowcount

--use inserted for insert or update trigger, deleted for update or delete trigger

--count instead of @@rowcount due to merge behavior that sets @@rowcount to a number

--that is equal to number of merged rows, not rows being checked in trigger

DECLARE @msg varchar(2000), --used to hold the error message

--use inserted for insert or update trigger, deleted for update or delete trigger

--count instead of @@rowcount due to merge behavior that sets @@rowcount to a number

--that is equal to number of merged rows, not rows being checked in trigger

@rowsAffected int = (SELECT COUNT(*) FROM inserted);

-- @rowsAffected int = (SELECT COUNT(*) FROM deleted);

BEGIN TRY

--[validation section]

--disallow Transactions that would put balance into negatives

IF EXISTS ( SELECT AccountNumber

FROM Accounting.AccountActivity as AccountActivity

WHERE EXISTS (SELECT *

FROM inserted

WHERE inserted.AccountNumber =

AccountActivity.AccountNumber)

GROUP BY AccountNumber

HAVING sum(TransactionAmount) < 0)

BEGIN

IF @rowsAffected = 1

SELECT @msg = 'Account: ' + AccountNumber +

' TransactionNumber:' +

cast(TransactionNumber as varchar(36)) +

' for amount: ' + cast(TransactionAmount as varchar(10))+

' cannot be processed as it will cause a negative balance'

FROM inserted;

ELSE

SELECT @msg = 'One of the rows caused a negative balance';

THROW 50000, @msg, 16;

END

--[modification section]

IF UPDATE (TransactionAmount)

BEGIN

;WITH Updater as (

SELECT Account.AccountNumber,

SUM(coalesce(TransactionAmount,0.00)) as NewBalance

FROM Accounting.Account

LEFT OUTER JOIN Accounting.AccountActivity

On Account.AccountNumber = AccountActivity.AccountNumber

--This where clause limits the summarizations to those rows

--that were modified by the DML statement that caused

--this trigger to fire.

WHERE EXISTS (SELECT *

FROM Inserted

WHERE Account.AccountNumber = Inserted.AccountNumber)

GROUP BY Account.AccountNumber)

UPDATE Account

SET BalanceAmount = Updater.NewBalance

FROM Accounting.Account

JOIN Updater

on Account.AccountNumber = Updater.AccountNumber;

END

END TRY

BEGIN CATCH

IF @@trancount > 0

ROLLBACK TRANSACTION;

THROW; --will halt the batch or be caught by the caller's catch block

END CATCH

END;

Now, insert a new row into AccountActivity :

INSERT INTO Accounting.AccountActivity(AccountNumber, TransactionNumber,

Date, TransactionAmount)

VALUES ('1111111111','A0000000000000000004','20050714',100);

Next, examine the state of the Account table, comparing it to the query used previously to check what the balances should be:

SELECT Account.AccountNumber,Account.BalanceAmount,

SUM(coalesce(AccountActivity.TransactionAmount,0.00)) AS SummedBalance

FROM Accounting.Account

LEFT OUTER JOIN Accounting.AccountActivity

ON Account.AccountNumber = AccountActivity.AccountNumber

GROUP BY Account.AccountNumber,Account.BalanceAmount;

which returns the following, showing that the sum is the same as the stored balance:

| AccountNumber | BalanceAmount | SummedBalance |

| ------------- | ------------- | ------------- |

| 1111111111 | 300.00 | 300.00 |

| 2222222222 | 0.00 | 0.00 |

The next step—the multirow test—is very important when building a trigger such as this. You need to be sure that if a user inserts more than one row at a time, it will work. In our example, we will insert rows for both accounts in the same DML statement and two rows for one of the accounts. This is not a sufficient test necessarily, but it’s enough for demonstration purposes at least:

INSERT into Accounting.AccountActivity(AccountNumber, TransactionNumber,

Date, TransactionAmount)

VALUES ('1111111111','A0000000000000000005','20050714',100),

('2222222222','A0000000000000000006','20050715',100),

('2222222222','A0000000000000000007','20050715',100);

Again, the query on the AccountActivity and Account should show the same balances:

| AccountNumber | BalanceAmount | SummedBalance |

| ------------- | ------------- | ------------- |

| 1111111111 | 400.00 | 400.00 |

| 2222222222 | 200.00 | 200.00 |

If you wanted a DELETE trigger (and in the case of a ledger like this, you generally do not want to actually delete rows but rather insert offsetting values, so to delete a $100 insert, you would insert a –100), the only difference is that instead of the EXISTS condition referring to the inserted table, it needs to refer to the deleted table:

CREATE TRIGGER Accounting.AccountActivity$deleteTrigger

ON Accounting.AccountActivity

AFTER DELETE AS

BEGIN

SET NOCOUNT ON;

SET ROWCOUNT 0; --in case the client has modified the rowcount

--use inserted for insert or update trigger, deleted for update or delete trigger

--count instead of @@rowcount due to merge behavior that sets @@rowcount to a number

--that is equal to number of merged rows, not rows being checked in trigger

DECLARE @msg varchar(2000), --used to hold the error message

--use inserted for insert or update trigger, deleted for update or delete trigger

--count instead of @@rowcount due to merge behavior that sets @@rowcount to a number

--that is equal to number of merged rows, not rows being checked in trigger

-- @rowsAffected int = (SELECT COUNT(*) FROM inserted);

@rowsAffected int = (SELECT COUNT(*) FROM deleted);

BEGIN TRY

--[validation section]

--[modification section]

;WITH Updater as (

SELECT Account.AccountNumber,

SUM(coalesce(TransactionAmount,0.00)) as NewBalance

FROM Accounting.Account

LEFT OUTER JOIN Accounting.AccountActivity

On Account.AccountNumber = AccountActivity.AccountNumber

WHERE EXISTS (SELECT *

FROM deleted

WHERE Account.AccountNumber =

deleted.AccountNumber)

GROUP BY Account.AccountNumber, Account.BalanceAmount)

UPDATE Account

SET BalanceAmount = Updater.NewBalance

FROM Accounting.Account

JOIN Updater

ON Account.AccountNumber = Updater.AccountNumber;

END TRY

BEGIN CATCH

IF @@trancount > 0

ROLLBACK TRANSACTION;

THROW; --will halt the batch or be caught by the caller's catch block

END CATCH

END;

GO

So now, delete a couple of transactions:

DELETE Accounting.AccountActivity

WHERE TransactionNumber in ('A0000000000000000004',

'A0000000000000000005'),

Checking the balance now, you will see that the balance for Account 1111111111 has been decremented to 200:

| AccountNumber | BalanceAmount | SummedBalance |

| ------------- | ------------- | ------------- |

| 1111111111 | 200.00 | 200.00 |

| 2222222222 | 200.00 | 200.00 |

I can’t stress enough that this type of strategy should be the exception, not the rule. But when you have to implement summary data, using a trigger is the way to go in most cases. One of the more frustrating things to have to deal with is summary data that is out of whack, because it takes time away from making progress with creating new software.

![]() Caution I want to reiterate to be extremely careful to test your code extra thoroughly when you include denormalizations like this. If you have other DML in triggers that insert or update into the same table, there is a chance that the trigger will not fire again, based on how you have the nested triggers and recursive triggers options set that I discussed previously. Good testing strategies are important in all cases really, but the point here is to be extra careful when using triggers to modify data.

Caution I want to reiterate to be extremely careful to test your code extra thoroughly when you include denormalizations like this. If you have other DML in triggers that insert or update into the same table, there is a chance that the trigger will not fire again, based on how you have the nested triggers and recursive triggers options set that I discussed previously. Good testing strategies are important in all cases really, but the point here is to be extra careful when using triggers to modify data.

A cascading insert refers to the situation whereby after a row is inserted into a table, one or more other new rows are automatically inserted into other tables. This is frequently done when you need to initialize a row in another table, quite often a status of some sort.

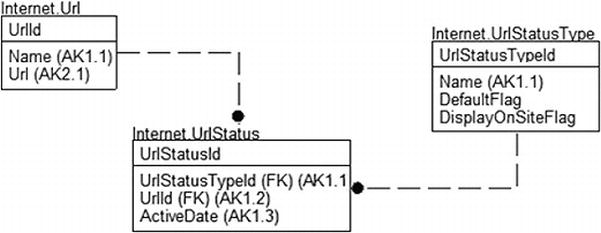

For this example, we’re going to build a small system to store URLs for a website-linking system. During low-usage periods, an automated browser connects to the URLs so that they can be verified (hopefully, limiting broken links on web pages).

To implement this, I’ll use the set of tables in Figure 7-3.

Figure 7-3. Storing URLs for a website-linking system

CREATE SCHEMA Internet;

GO

CREATE TABLE Internet.Url

(

UrlId int not null identity(1,1) constraint PKUrl primary key,

Name varchar(60) not null constraint AKInternet_Url_Name UNIQUE,

Url varchar(200) not null constraint AKInternet_Url_Url UNIQUE

);

--Not a user manageable table, so not using identity key (as discussed in

--Chapter 5 when I discussed choosing keys) in this one table. Others are

--using identity-based keys in this example.

CREATE TABLE Internet.UrlStatusType

(

UrlStatusTypeId int not null

CONSTRAINT PKInternet_UrlStatusType PRIMARY KEY,

Name varchar(20) NOT NULL

CONSTRAINT AKInternet_UrlStatusType UNIQUE,

DefaultFlag bit NOT NULL,

DisplayOnSiteFlag bit NOT NULL

);

CREATE TABLE Internet.UrlStatus

(

UrlStatusId int not null identity(1,1)

CONSTRAINT PKInternet_UrlStatus PRIMARY KEY,

UrlStatusTypeId int NOT NULL

CONSTRAINT

Internet_UrlStatusType$defines_status_type_of$Internet_UrlStatus

REFERENCES Internet.UrlStatusType(UrlStatusTypeId),

UrlId int NOT NULL

CONSTRAINT Internet_Url$has_status_history_in$Internet_UrlStatus

REFERENCES Internet.Url(UrlId),

ActiveTime datetime2(3) NOT NULL,

CONSTRAINT AKInternet_UrlStatus_statusUrlDate

UNIQUE (UrlStatusTypeId, UrlId, ActiveTime)

);

--set up status types

INSERT Internet.UrlStatusType (UrlStatusTypeId, Name,

DefaultFlag, DisplayOnSiteFlag)

VALUES (1, 'Unverified',1,0),

(2, 'Verified',0,1),

(3, 'Unable to locate',0,0);

The Url table holds URLs to different sites on the Web. When someone enters a URL, we initialize the status to 'Unverified'. A process should be in place in which the site is checked often to make sure nothing has changed (particularly the unverified ones!).

You begin by building a trigger that inserts a row into the UrlStatus table on an insert that creates a new row with the UrlId and the default UrlStatusType based on DefaultFlag having the value of 1.

CREATE TRIGGER Internet.Url$afterInsertTrigger

ON Internet.Url

AFTER INSERT AS

BEGIN

SET NOCOUNT ON;

SET ROWCOUNT 0; --in case the client has modified the rowcount

--use inserted for insert or update trigger, deleted for update or delete trigger

--count instead of @@rowcount due to merge behavior that sets @@rowcount to a number

--that is equal to number of merged rows, not rows being checked in trigger

DECLARE @msg varchar(2000), --used to hold the error message

--use inserted for insert or update trigger, deleted for update or delete trigger

--count instead of @@rowcount due to merge behavior that sets @@rowcount to a number

--that is equal to number of merged rows, not rows being checked in trigger

@rowsAffected int = (SELECT COUNT(*) FROM inserted);

-- @rowsAffected int = (SELECT COUNT(*) FROM deleted);

BEGIN TRY

--[validation section]

--[modification section]

--add a row to the UrlStatus table to tell it that the new row

--should start out as the default status

INSERT INTO Internet.UrlStatus (UrlId, UrlStatusTypeId, ActiveTime)

SELECT inserted.UrlId, UrlStatusType.UrlStatusTypeId,

SYSDATETIME()

FROM inserted

CROSS JOIN (SELECT UrlStatusTypeId

FROM UrlStatusType

WHERE DefaultFlag = 1) as UrlStatusType;

--use cross join with a WHERE clause

--as this is not technically a join

--between inserted and UrlType

END TRY

BEGIN CATCH

IF @@trancount > 0

ROLLBACK TRANSACTION;

THROW; --will halt the batch or be caught by the caller's catch block

END CATCH

END;

The idea here is that for every row in the inserted table, we’ll get the single row from the UrlStatusType table that has DefaultFlag equal to 1. So, let’s try it:

INSERT Internet.Url(Name, Url)

VALUES ('More info can be found here',

'http://sqlblog.com/blogs/louis_davidson/default.aspx'),

SELECT * FROM Internet.Url;

SELECT * FROM Internet.UrlStatus;

This returns the following results:

| UrlId | Name | Url |

| ----- | --------------------------- | ---------------------------------------------------- |

| 1 | More info can be found here | http://sqlblog.com/blogs/louis_davidson/default.aspx |

| UrlStatusId | UrlStatusTypeId | UrlId | ActiveTime |

| ----------- | --------------- | ----- | ----------------------- |

| 1 | 1 | 1 | 2011-06-10 00:11:40.480 |

![]() Tip It’s easier if users can’t modify tables such as the UrlStatusType table, so there cannot be a case where there’s no status set as the default (or too many rows). If there were no default status, the URL would never get used, because the processes wouldn’t see it. You could also create a trigger to check to see whether more than one row is set to the default, but the trigger still doesn’t protect you against there being zero rows that are set to the default.

Tip It’s easier if users can’t modify tables such as the UrlStatusType table, so there cannot be a case where there’s no status set as the default (or too many rows). If there were no default status, the URL would never get used, because the processes wouldn’t see it. You could also create a trigger to check to see whether more than one row is set to the default, but the trigger still doesn’t protect you against there being zero rows that are set to the default.

Cascading from Child to Parent

All the cascade operations that you can do with constraints (CASCADE or SET NULL) are strictly from parent to child. Sometimes, you want to go the other way around and delete the parents of a row when you delete the child. Typically, you do this when the child is what you’re interested in and the parent is simply maintained as an attribute of the child. Also typical of this type of situation is that you want to delete the parent only if all children are deleted.

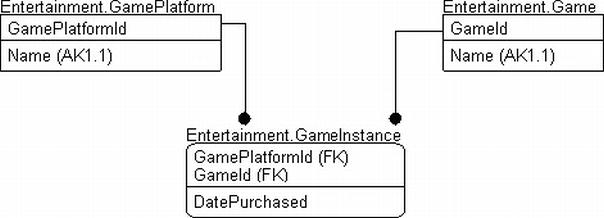

In our example, we have a small model of my game collection. I have several game systems and quite a few games. Often, I have the same game on multiple platforms, so I want to track this fact, especially if I want to trade a game that I have on multiple platforms for something else. So, we have a table for the GamePlatform (the system) and another for the actual game itself. This is a many-to-many relationship, so we have an associative entity called GameInstance to record ownership, as well as when the game was purchased for the given platform. Each of these tables has a delete-cascade relationship, so all instances are removed. What about the games, though? If all GameInstance rows are removed for a given game, we want to delete the game from the database. The tables are shown in Figure 7-4.

Figure 7-4. The game tables

--start a schema for entertainment-related tables

CREATE SCHEMA Entertainment;

go

CREATE TABLE Entertainment.GamePlatform

(

GamePlatformId int NOT NULL CONSTRAINT PKEntertainmentGamePlatform PRIMARY KEY

Name varchar(50) NOT NULL CONSTRAINT AKEntertainmentGamePlatform_Name UNIQUE

);

CREATE TABLE Entertainment.Game

(

GameId int NOT NULL CONSTRAINT PKEntertainmentGame PRIMARY KEY,

Namevarchar(50) NOT NULL CONSTRAINT AKEntertainmentGame_Name UNIQUE

--more details that are common to all platforms

);

--associative entity with cascade relationships back to Game and GamePlatform