6

Search

WHAT'S IN THIS CHAPTER?

- Using the Enterprise Search product line

- Understanding architecture and user experience features

- Customizing search, from user experience and social search to federation, connectors, and content processing

- Recognizing common patterns for developing search extensions and search-based applications

- Exploring examples you can use to get started on custom search projects

Search is now everywhere, and most people use it numerous times a day to keep from drowning in information. Enterprise search provides a powerful way to access information of all types, and it lets you bridge across the information silos that proliferate in many organizations. Perhaps because of the simplicity and ubiquity of the search user experience, the complexity of search under the hood is rarely appreciated until you get into it. Developing with search and SharePoint 2010 is rewarding and not difficult, although it requires an understanding of search and sometimes a different mindset.

Microsoft has been in the Enterprise Search business for a long time. Over the last three years focus in this area has increased, including the introduction of Search Server 2008 and the acquisition of FAST Search and Transfer. Search is becoming a strategic advantage in many businesses, and Microsoft's investments reflect this.

Enterprise Search delivers content for the benefit of the employees, customers, partners, or affiliates of a single company or organization. Companies, government agencies, and other organizations maintain huge amounts of information in electronic form, including spreadsheets, policy manuals, and web pages, just to name a few. Contemporary private datasets can now exceed the size of the entire Internet in the 1990s — running into petabytes or even exabytes of information. This content may be stored in file shares, websites, content management systems, or databases, but without the ability to find this corporate knowledge, managing even a small company would be difficult.

Enterprise Search applications are found throughout most enterprises — both in obvious places (like intranet search) and in less visible ways (search-driven applications often don't look like “search”). Search supports all these applications, and complements all the other workloads within SharePoint 2010 — Insights, Social, Composites, and the like — in powerful ways.

Learning to develop great applications, including search, will serve you and your organization very well. You can build more flexible, more powerful applications that bridge different information silos while providing a natural, simple user experience.

This chapter provides an introduction to developing with search in SharePoint 2010. First, it covers the options, capabilities, and architecture of search. A section on the most common search customizations gives you a sense of what kind of development you are likely to run into. Next, it runs through different areas of search: social search, indexing connectors, federation, content processing, ranking and relevance, the UI, and administration. In each of these areas, you get a look at the capabilities, learn how a developer can work with them, and review an example. Combining these techniques leads to the realm of search-driven applications, which we cover as a separate topic with tips for successful projects. A section on search and the cloud reviews ways to work with Office 365 and Azure. Finally, the summary gives an overview of the power of search and offers some ways to combine it with other solutions in SharePoint 2010.

SEARCH OPTIONS WITH SHAREPOINT 2010

With the 2010 wave, Microsoft added new Enterprise Search products and updated existing ones — bringing in a lot of new capabilities. Some of these are brand new, some are evolutions of the SharePoint 2007 search capabilities, and some are capabilities brought from FAST. The result is a set of options that lets you solve any search problem, but because of the number of options, it can also be confusing.

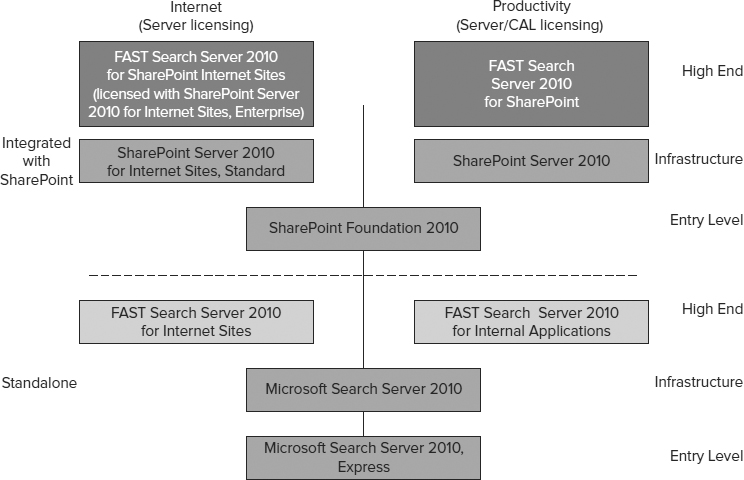

Figure 6-1 shows the Enterprise Search products in the 2010 wave. There are many options; in fact, there are 9 offerings for Enterprise Search. This is evidence of the emphasis Microsoft is putting on search, and also a byproduct of the ongoing integration of the FAST acquisition.

This lineup might seem confusing at first, and the sheer number of options is a bit daunting. As you will see, there is method to this madness. For most purposes, you will be considering only one or two of these options.

Looking at the lineup from different perspectives helps to understand it. There are three dimensions to consider:

FIGURE 6-1

- Tier (labeled along the right side of Figure 6-1): Microsoft adopted a three-tier approach in 2008 when it introduced Search Server 2008 Express and acquired FAST. These tiers are entry level, infrastructure, and high end. Search Server 2010 Express, Search Server 2010, and the search in SharePoint Foundation 2010 are all entry level; SharePoint Server flavors cover the infrastructure tier, and any option labeled “FAST” is high end.

- Integration (labeled along the left side of Figure 6-1): Search options integrated with SharePoint have features, such as social search, that build on and therefore require other parts of SharePoint. Standalone search products don't require SharePoint, but they lack these features.

- Application (labeled across the top of Figure 6-1): Applications are classified as Internet applications or Productivity applications. For the most part, the distinction between search applications inside the firewall (Productivity) and outside the firewall (Internet) is purely a licensing distinction. Inside the firewall, products are licensed per server and per client access license (CAL). Outside the firewall, it isn't possible to license clients, so products are licensed only per server. The media, documentation, support, and architecture are the same across these application areas (shown horizontally across Figure 6-1). There are a few minor feature differences, which are called out in this chapter where relevant.

Another perspective useful in understanding this lineup is that of the codebase. The acquisition of FAST brought a large codebase of high-end search code, different from the SharePoint search codebase. As the integration of FAST proceeds, ultimately all Enterprise Search options will be derived from a single common codebase.

At the moment there are three separate codebases from which Enterprise Search products are derived. The first is the SharePoint 2010 search codebase, an evolution from the MOSS 2007 search code. Search options derived from this codebase are in medium gray boxes as shown in Figure 6-1. The second is the FAST standalone codebase, a continuation of the code from FAST ESP, the flagship product provided by FAST up to this time. Search options derived from this codebase are shown in light gray boxes in Figure 6-1. The third is the FAST integrated codebase, a new one resulting from reworking the ESP code, integrating it with the SharePoint search architecture, and adding in new elements. Search options derived from this codebase are shown in dark gray boxes in Figure 6-1.

The codebase perspective is useful for developers, as it provides a sense of what to expect with APIs and system behavior. The FAST integrated codebase uses the same APIs as the SharePoint search codebase, but extends those APIs to expose additional capabilities. The FAST standalone codebase uses different APIs. Note that search products from the FAST standalone codebase are in a special status — licensed through FAST as a subsidiary and under different support programs. This book doesn't cover products from the FAST standalone codebase or the APIs specific to them.

If you consider the search options across application areas as the same, and disregard the FAST standalone codebase, you are left with five options in the Enterprise Search lineup, rather than nine. Look at each of these options and see where you might use each one. This chapter also introduces some shorter names and acronyms for each option to make the discussion simpler.

SharePoint Foundation

Microsoft SharePoint Foundation (also called SharePoint Foundation, or SPF) is a free, downloadable platform that includes basic search capabilities. SPF search is limited to content within SharePoint — no search scopes, and no refinement. SPF is in the entry-level tier and is integrated with SharePoint.

If you are using SharePoint Foundation and care about search (which is likely, because you are reading this book!), you should forget about the built-in search capability and use one of the other options. Most likely this will be Search Server Express, as it is also free.

Search Server 2010 Express

Microsoft Search Server 2010 Express (also called Search Server Express or MSSX) is a free, downloadable standalone search offering. It is intended for tactical, small-scale search applications (such as departmental sites), requiring little or no cost and IT effort. Microsoft Search Server 2008 Express was a very popular product — Microsoft reports over 100,000 downloads. There is a lot added with the 2010 wave: better connectivity, search refiners, improved relevance, and much more.

Search Server Express is an entry-level standalone product. It is limited to one server with up to 300,000 documents. It lacks many of the capabilities of SharePoint Server's search, such as taxonomy, or people and expertise search, not to mention the capabilities of FAST. It can, however, be a good enough option for many departments that require a straightforward site search.

If you have little or no budget and an immediate, simple, tactical search need, use Search Server Express. It is quick to deploy, easy to manage, and free. You can always move to one of the other options later.

Search Server 2010

Microsoft Search Server 2010 (also called Search Server or MSS) has the same functional capabilities as MSS Express, with full scale — up to about 10 million items per server and 100 million items in a system using multiple servers. It isn't free, but the per-server license cost is low. MSS is a great way to scale up for applications that start with MSS Express and grow (as they often do).

MSS is an infrastructure-tier standalone product. Both MSS and MSS Express lack some search capabilities that are available in SharePoint Server 2010, such as taxonomy support, people and expertise search, social tagging, and social search (where search results improve because of social behavior), to name a few. And, of course, MSS does not have any of the other SharePoint Server capabilities (BI, workflow, etc.) that are often mixed together with search in applications.

If you have no other applications for SharePoint Server, and need general intranet search or site search, MSS is a good choice. But in most cases, it makes more sense to use SharePoint Server 2010.

SharePoint Server 2010

Microsoft SharePoint Server 2010 (also called SharePoint Server or SP) includes a complete intranet search solution that provides a robust search capability out of the box. It has many significant improvements over its predecessor, Microsoft Office SharePoint Server 2007 (also called MOSS 2007) search. New capabilities include refinement, people and expertise search with phonetic matching, social tagging, social search, query suggestions, editing directly in a browser, and many more. Connectivity is much broader and simpler, both for indexing and federation. SharePoint Server 2010 also has a markedly improved architecture with respect to scalability, performance, and capacity. It supports a wide range of configurations, growth, and changing needs.

SharePoint Server has three license variants in the 2010 wave — all with precisely the same search functionality. With all of them, Enterprise Search is a component or “workload,” not a separate license. SharePoint Server 2010 is licensed in a typical Microsoft server/CAL model. Each server needs a server license, and each user needs a client access license (CAL). For applications where CALs don't apply (typically outside the firewall in customer-facing sites), there is SharePoint Server 2010 for Internet Sites, Standard (FIS-S) and SharePoint Server 2010 for Internet Sites, and Enterprise (FIS-E).

For the rest of this chapter, these licensing variants will be ignored, and we will refer to all of them as SharePoint Server 2010 or SP. All of them are infrastructure-tier, integrated offerings.

SharePoint Server 2010 is a good choice for general intranet search, people search, and site search applications. It is a fully functional search solution and should cover the scale and connectivity needs of most organizations. However, it is no longer the best search offered with SharePoint, given the integration of FAST in this wave.

FAST Search Server 2010 for SharePoint

Microsoft FAST Search Server 2010 for SharePoint (also called FAST Search for SharePoint or FS4SP) is a new product introduced along with SharePoint 2010. It is a high-end Enterprise Search product, providing an excellent search experience out of the box and the flexibility to customize search for very diverse needs at essentially unlimited scale. FS4SP is notably simpler to deploy and operate than other high-end search offerings. It provides high-end search, integrated with SharePoint.

The frameworks and tools used by IT professionals and developers are common across the SharePoint search codebase and the FAST integrated codebase. FAST Search for SharePoint builds on SharePoint Server, and integrates into the SharePoint 2010 architecture using some of the new elements, such as the enhanced connector framework and the federation framework. This means that FAST Search for SharePoint shares the same object models and APIs for connectors, queries, and system management. In addition, administrative and frontend frameworks are common — basically the same management console and the same Search Center web parts.

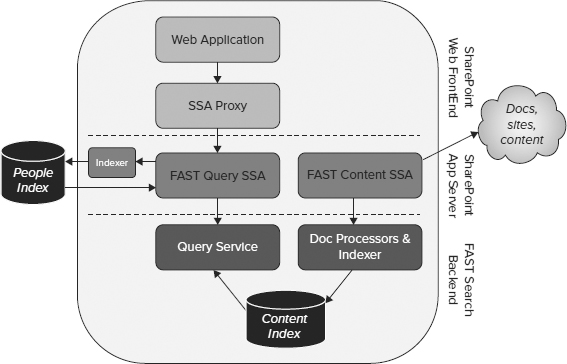

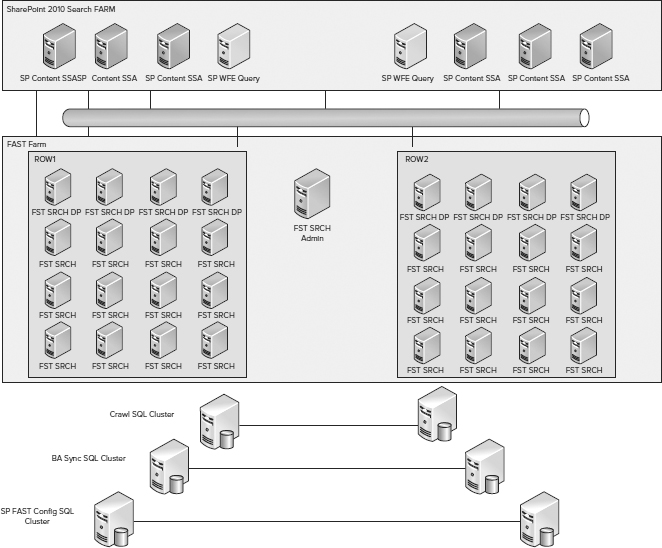

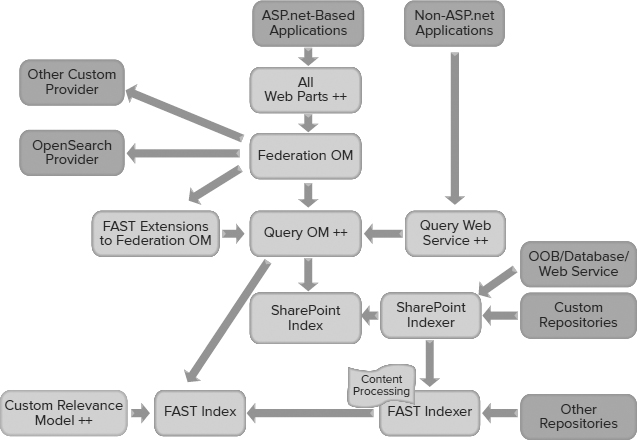

Figure 6-2 shows how FAST adds on to SharePoint Server. In operation, both SharePoint servers and FAST Search for SharePoint servers are used. SharePoint servers handle crawling, accept and federate queries, and serve up people search. FAST Search for SharePoint servers handle all content processing and core search. The result is a combination of SharePoint search and FAST search technology in a hybrid form, plus several new elements and capabilities.

FIGURE 6-2

FAST Search for SharePoint provides significant enhancements to the Enterprise Search capabilities. This means that there are capabilities and extensions to APIs that are specific to FAST Search for SharePoint. For example, there are extensions to the Query Object Model (OM), to accommodate the additional capabilities of FAST such as FAST Query Language (FQL). The biggest differences are in the functionality available: a visual and “contextual” search experience; advanced content processing, including metadata extraction; multiple relevance profiles and sorting options available to users; more control of the user experience; and extreme scale capabilities.

FAST LICENSING VARIANTS

Just like SharePoint Server, FS4SP has licensing variants for internal and external use. FS4SP is licensed per server, requires Enterprise CALs (e-CALs) for each user, and needs SharePoint Server 2010 as a prerequisite. FAST Search Server 2010 for SharePoint Internet Sites (FS4SP-IS) is for situations where CALs don't apply, typically Internet-facing sites with various search applications. In these situations, SP-FIS-E (enterprise) is a prerequisite, and SP-FIS-E server licenses can be used for either SP-FIS-E servers or FS4SP-IS servers. FS4SP and FS4SP-IS have essentially the same search functionality with a few exceptions. The most notable difference is that the thumbnails and previews that come with FS4SP aren't available for use with SP-FIS-E. We largely ignore these variants for the remainder of this chapter and refer to them both as FAST Search for SharePoint or FS4SP.

FAST Search for SharePoint handles general intranet search, people search, and site search applications, providing more capability than SharePoint Server does, including the ability to give different groups using the same site different experiences based on user context. FS4SP is particularly well suited for high-value search applications such as those described next.

Choosing the Right Search Product

Most often, organizations implementing a Microsoft Enterprise Search product choose between SharePoint Server 2010's search capabilities and FAST Search for SharePoint. SharePoint Server's search has improved significantly since 2007, so it is worth a close look, especially if you are already running SharePoint 2007's search. FAST Search for SharePoint has many capabilities beyond SharePoint Server 2010's search, but it also carries additional licensing costs. By understanding the differences in features and the requirements that can be addressed by each feature, you can determine whether you need the additional capabilities offered by FAST.

With Enterprise Search inside the firewall, there are two distinct types of search applications:

- General-purpose search applications increase employee efficiency by connecting “everyone to everything.” General-purpose search solutions increase employee efficiency by connecting a broad set of people to a broad set of information. Intranet search is the most common example of this type of search application.

- Special-purpose search applications help a specific set of people make the most of a specific set of information. Common examples include product support applications, research portals ranging from market research to competitive analysis, knowledge centers, and customer-oriented sales and service applications. This kind of application is found in many places, with variants for essentially every role in an enterprise. These applications typically are the highest-value search applications, as they are tailored to a specific task that is usually essential to the users they serve. They are also typically the most rewarding for developers.

SharePoint Server 2010's built-in search is targeted at general-purpose search applications, and can be tailored to provide specific intranet search experiences for different organizations and situations. FAST Search for SharePoint can be used for general-purpose search applications, and can be an “upgrade” from SharePoint search to provide superior search in those applications. However, it is designed with special-purpose search applications in mind. So applications you identify as fitting the “special-purpose” category should be addressed with FAST Search for SharePoint.

Because SP and FS4SP share the connector framework (with a few exceptions covered later), you won't find big differences in connectors or security, which traditionally are areas where search engines have differentiated themselves. Instead, you see big differences in content processing, user experience, and advanced query capabilities. Examples of capabilities specific to FAST Search for SharePoint are:

- Content-processing pipeline

- Metadata extraction

- Structured data search

- Deep refinement

- Visual search

- Advanced linguistics

- Visual best bets

- Development platform flexibility

- Ease of creating custom search experiences

- Extreme scale and performance

Common Platform and APIs

There are more aspects in common between SharePoint Server 2010 Search and FAST Search for SharePoint than there are differences. The frameworks and tools for use by IT pros and developers are kept as common as possible across the product line, given the additional capabilities in FAST Search Server 2010 for SharePoint. In particular, the object models for content, queries, and federation are all the same, and the web parts are largely common. All of the products described previously provide a unified Query Object Model. The result is that if you develop a custom solution that uses the Query Object Model for SharePoint Foundation 2010, for example, it continues to work if you upgrade to SharePoint Server 2010, or if you migrate your code to FAST Search Server 2010 for SharePoint.

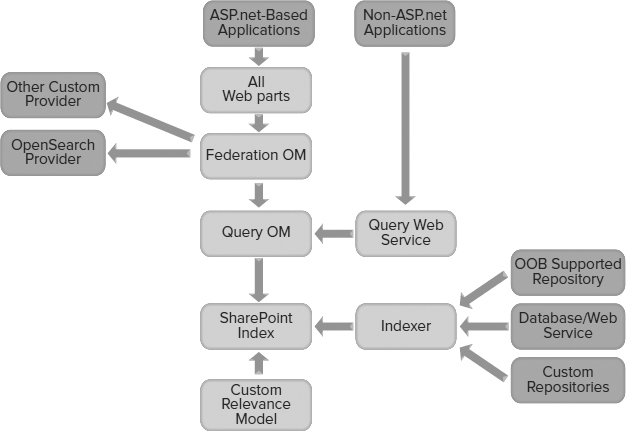

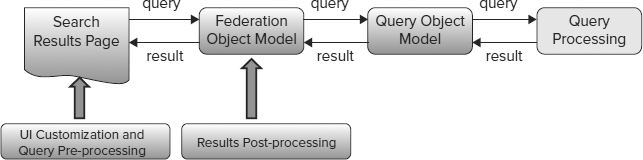

Figure 6-3 shows the “stack” involved with Enterprise Search, from the user down to the search cores.

FIGURE 6-3

For the rest of this chapter, we describe one set of capabilities and OMs and call out specific differences within the product line where relevant.

SEARCH USER EXPERIENCE

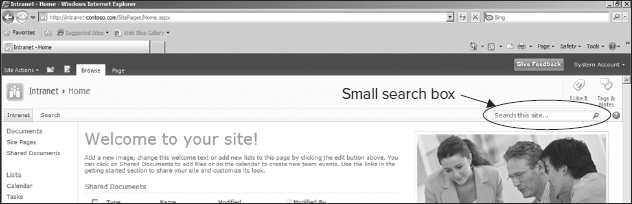

Information workers typically start searches either from the Simple Search box or by browsing to a site based on a Search Center site template. Figure 6-4 shows the Simple Search box that is available by default on all site pages. By default, this search box issues queries that are scoped to the current site, because users often navigate to sites that they know contain the information they want before they perform a search.

FIGURE 6-4

Search Center

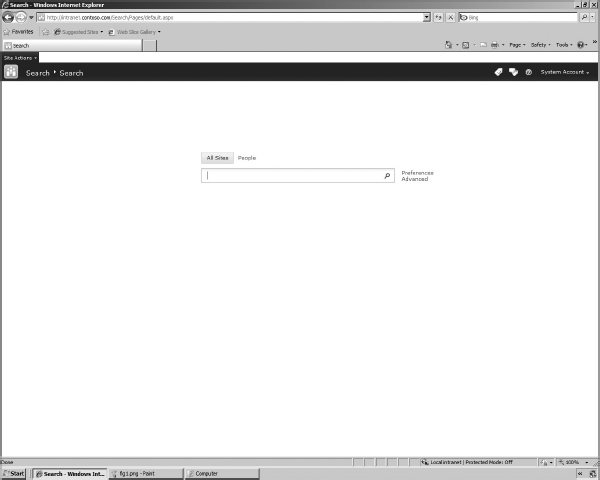

Figure 6-5 shows a search site based on the Enterprise Search Center template. Information workers use Search Center sites to search across all crawled and federated content.

FIGURE 6-5

Note how the Search Center includes an Advanced Search Box that provides links to the current user's search preferences and advanced search options. By default, the Search Center includes search tabs to toggle between All Sites and People.

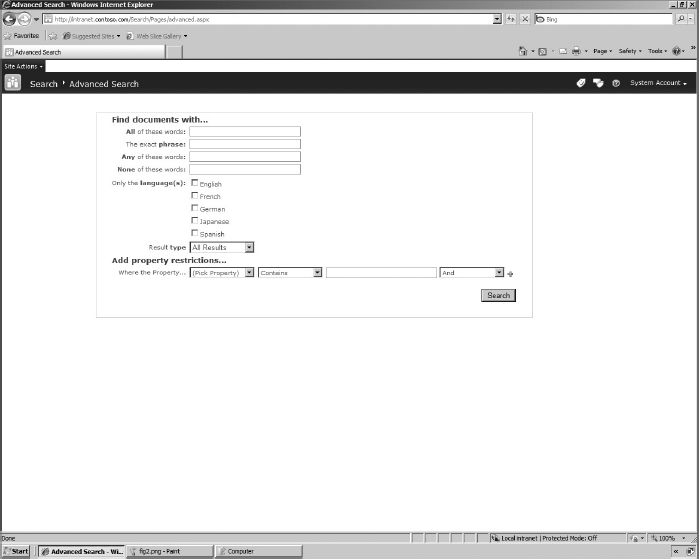

Figure 6-6 shows the default view for performing an advanced search, with access to phrase management features, language filters, result type filters, and property filters.

FIGURE 6-6

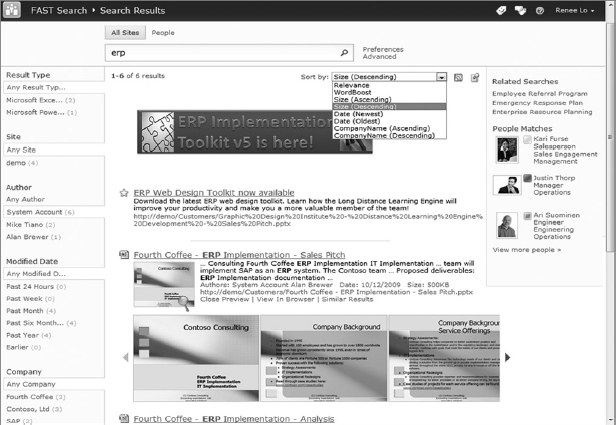

All of the search user interfaces are intuitive and easy to use, so information workers can start searches in a very straightforward way. When someone performs a search, the results are displayed on a results page, as shown in Figure 6-7. The SharePoint Sever 2010 Search core results page offers a very user-friendly and intuitive user interface. People can use simple and familiar keyword queries, and get results in a rich and easy-to-navigate layout. A Search Center site template is provided, as well as a simple search box that can be made available on every page in a SharePoint Server 2010 site.

FIGURE 6-7

Visual Cues in Search Results

FAST Search for SharePoint adds visual cues into the search experience. These provide an engaging, useful, and efficient way for information workers to interact with search results. People find information faster when they recognize documents visually. A search result from FAST Search for SharePoint is shown in Figure 6-8.

FIGURE 6-8

Thumbnails and Previews

Word documents and PowerPoint presentations can be recognized directly in search results. A thumbnail image is displayed along with the search results to provide rapid recognition of information and, thereby, faster information finding. This feature is part of the Search Core Results web part for FAST Search Server 2010 for SharePoint, and can be configured in that web part.

In addition to the thumbnail, a scrolling preview is available for PowerPoint documents, enabling an information worker to browse the actual slides in a presentation. People are often looking for a particular slide, or remember a presentation on the basis of a couple of slides. This preview helps them recognize what they're looking for quickly, without having to open the document.

Visual Best Bets

SharePoint Server 2010 Search keywords can have definitions, synonyms, and Best Bets associated with them. A Best Bet is a particular document set up to appear whenever someone searches for a keyword. It appears along with a star icon and the definition of that keyword. FAST Search Server 2010 for SharePoint enables you to define Visual Best Bets for keywords. This Visual Best Bet may be anything you can identify with a URI — an image, video, or application. It provides a simple, powerful, and very effective way to guide people's search experience.

These visual search elements are unique to FAST Search Server 2010 for SharePoint and are not provided in SharePoint Server 2010 Search.

Exploration and Refinement

SharePoint Server 2010 also provides a new way to explore information — via search refinements, as shown in Figure 6-9. These refinements are displayed down the left side of the page in the core search results. They provide self-service drill-down capabilities for filtering the search results returned. The refinements are automatically determined by SharePoint Server 2010 using tags and metadata in the search results. Such refinements include searching by the type of content (web page, document, spreadsheet, presentation, and so on), location, author, last modified date, and metadata tags. Administrators can extend the refinement panel easily to include refinements based on any managed property.

FIGURE 6-9

Refinement with FAST Search Server 2010 for SharePoint is considerably more powerful than refinement in SharePoint Server 2010. SharePoint Server 2010 automatically generates shallow refinement for search results that enable a user to apply additional filters to search results based on the values returned by the query. Shallow refinement is based on the managed properties returned from the first 50 results by the original query.

In contrast, FAST Search Server 2010 for SharePoint offers the option of deep refinement, which is based on statistical aggregation of managed property values within the entire result set.

Using deep refinement, you can find the needle in the haystack, such as a person who has written a document about a specific subject, even if this document would otherwise appear far down the result list. Deep refinement can also display counts and lets the user see the number of results in each refinement category. You can also use the statistical data returned for numeric refinements in other types of analysis.

“Conversational” Search

Search is more than “find” it is also “explore.” In many situations, the quickest and most effective way to find or explore is through a dialogue with the machine — a “conversation” allowing the user to respond to results and steer towards the answer or insight. The conversational search capabilities in FAST Search for SharePoint provide ways for information workers to interact with and refine their search results, so that they can quickly find the information they require.

Sorting Results

With FAST Search Server 2010 for SharePoint, users can sort results in different ways, such as sorting by Author, Document Size, or Title. Relevance ranking profiles can also be surfaced as sorting criteria, allowing end users to pick different relevance rankings as desired.

This sorting is considerably more powerful than sorting in SharePoint Server 2010 Search. By default, SharePoint Server 2010 sorts results on each document's relevance rank. Information workers can re-sort the results by date modified, but these are the only two sort options available in SharePoint Server 2010 without writing custom code.

Similar Results

With FAST Search Server 2010 for SharePoint, results returned by a query include links to “Similar Results.” When a user clicks on the link, the search and result set are expanded to include documents that are similar to the previous results.

Result Collapsing

FAST Search Server 2010 for SharePoint documents that have the same numeric value stored in the index are collapsed as one document in the search result. This means that documents stored in multiple locations in a source system are displayed only once during a search using the collapse search parameter. The collapsed results include links to Duplicates. When a user clicks on the link, the search result displays all versions of that document. Similar results and result collapsing are unique to FAST Search Server 2010 for SharePoint and are not provided in SharePoint Server 2010 Search.

Contextual Search Capabilities

FAST Search Server 2010 for SharePoint allows you to associate the properties of a user's context with Best Bets, Visual Best Bets, document promotions, document demotions, site promotions, and site demotions to tailor the search experience. You can use the FAST Search User Context link in the Site Collection Settings pages to define user contexts for these associations.

For example, people in different roles might receive a different visual best bets — so that a salesperson sees content about sales promotions while a consultant sees content about delivery. Similarly, people in different geographies might get different content. You can create tailored experiences easily using this feature.

Relevancy Tuning by Document or Site Promotions

SharePoint Server 2010 enables you to identify varying levels of authoritative pages that help you tune relevancy ranking by site. FAST Search Server 2010 for SharePoint adds the ability for you to specify individual documents within a site for promotion and, furthermore, enables you to associate each promotion with user contexts.

Synonyms

SharePoint Server 2010 keywords can have one-way synonyms associated with them. FAST Search Server 2010 for SharePoint extends this concept by enabling you to implement both two-way and oneway synonyms. With a two-way synonym set of, for example, {auto car}, a search for “auto” would be translated into a search for “auto OR car,” and a search for “car” would be translated into a search for “car OR auto.” With a one-way synonym set of, for example, {car coupe}, a search for “car” would translate into a search for “car OR coupe,” but a search for “coupe” would remain just “coupe.”

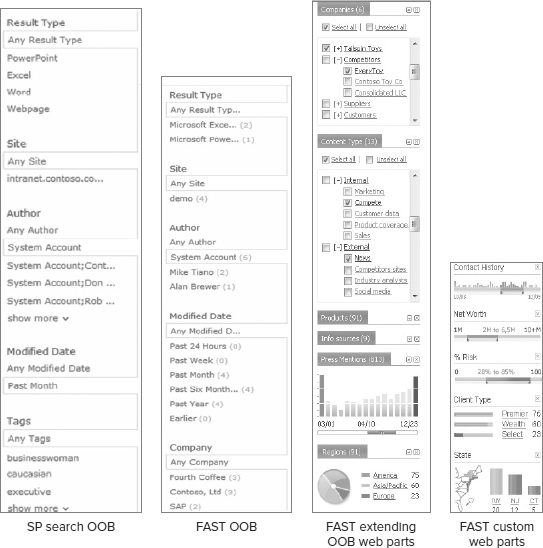

People Search

SharePoint Server 2010 provides an address-book-style name lookup experience with name and expertise matching, making it easy to find people by name, title, expertise, and organizational structure. This includes phonetic name matching that returns names that sound similar to what the user has typed in a query. It also returns all variations of common names, including nicknames.

The refiners provided for the core search results are also provided with people search results — exploring results via name, title, and various fields in a user's profile enable quick browsing and selection of people. People search results also include real-time presence through Office Communication Server, making it easy to immediately connect with people once they are found through search. Figure 6-10 shows a people search results page.

FIGURE 6-10

The people and expertise finding capabilities with SharePoint Server 2010 are a dramatic enhancement over MOSS 2007. They are remarkably innovative and effective, and tie in nicely to the social computing capabilities covered in Chapter 5. The exact same capabilities are available with FAST Search for SharePoint.

SEARCH ARCHITECTURE AND TOPOLOGIES

The search architecture has been significantly enhanced with SharePoint Server 2010. The new architecture provides fault-tolerance options and scaling to 100 million documents, well beyond the limits of MOSS 2007 search. Adding FAST provides even more flexibility and scale. Of course, these capabilities and flexibility add complexity. Understanding how search fits together architecturally will help you build applications that scale well and perform quickly.

SharePoint Search Key Components

Figure 6-11 provides an overview of the logical architecture for the Enterprise Search components in SharePoint Server 2010.

FIGURE 6-11

As shown in Figure 6-11, four main components deliver the Enterprise Search features of SharePoint Server 2010:

- Crawler: This component invokes connectors that are capable of communicating with content sources. Because SharePoint Server 2010 can crawl different types of content sources (such as SharePoint sites, other websites, file shares, Lotus Notes databases, and data exposed by Business Connectivity Services), a specific connector is used to communicate with each type of source. The crawler then uses the connectors to connect to and traverse the content sources, according to crawl rules that an administrator can define. For example, the crawler uses the file connector to connect to file shares by using the FILE:// protocol, and then traverses the folder structure in that content source to retrieve file content and metadata. Similarly, the crawler uses the web connector to connect to external websites by using the HTTP:// or HTTPS:// protocols and then traverses the web pages in that content source by following hyperlinks to retrieve web page content and metadata. Connectors load specific IFilters to read the actual data contained in files. Refer to the “New Connector Framework Features” section later in this document for more information about connectors.

- Indexer: This component receives streams of data from the crawler and determines how to store that information in a physical, file-based index. For example, the indexer optimizes the storage space requirements for words that have already been indexed, manages word breaking and stemming in certain circumstances, removes noise words, and determines how to store data in specific index partitions if you have multiple query servers and partitioned indexes. Together with the crawler and its connectors, the indexing engine meets the business requirement of ensuring that enterprise data from multiple systems can be indexed. This includes collaborative data stored in SharePoint sites, files in file shares, and data in custom business solutions, such as customer relationship management (CRM) databases, enterprises resource planning (ERP) solutions, and so on.

- Query Server: Indexed data that is generated by the indexing engine is propagated to query servers in the SharePoint farm, where it is stored in one or more index files. This process is known as “continuous propagation” that is, while indexed data is being generated or updated during the crawl process, the changes are propagated to query servers, where they are applied to the index file (or files). In this way, the data in the indexes on query servers experience a very short latency. In essence, when new data has been indexed (or existing data in the index has been updated), those changes are applied to the index files on query servers in just a few seconds. A server that is performing the query server role responds to searches from users by searching its own index files, so it is important that latency be kept to a minimum. SharePoint Server 2010 ensures this automatically. The query server is responsible for retrieving results from the index in response to a query received via the Query Object Model. The query sever is also responsible for the word breaking, noise-word removal, and stemming (if stemming is enabled) for the search terms provided by the Query Object Model.

- Query Object Model: As mentioned earlier, searches are formed and issued to query servers by the Query Object Model. This is typically done in response to a user performing a search in a SharePoint site, but it may also be in response to a search service call from either within or outside the SharePoint farm. Furthermore, the search might have been issued by custom code, for example, from a workflow or from a custom navigation component. In any case, the Query Object Model parses the search terms and issues the query to a query server in the SharePoint farm. The results of the query are returned from the query server to the Query Object Model, and the object model provides the results back to the caller.

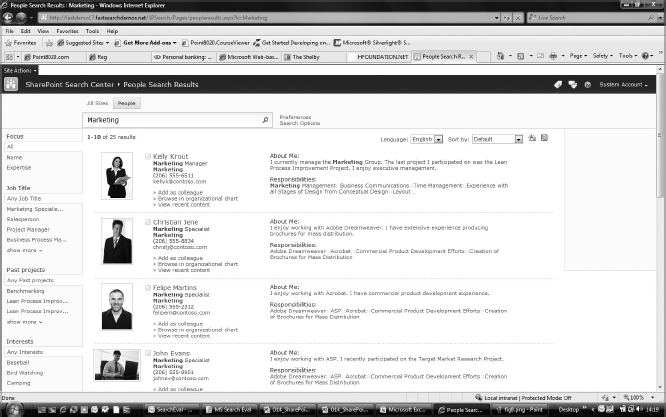

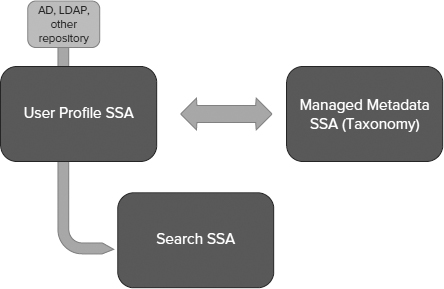

Figure 6-12 shows a process view of SharePoint Server Search. The Shared Service Architecture (SSA), new to SharePoint 2010, is used to provide a shareable and scalable service. A search SSA can work across multiple SharePoint farms, and is administered on a service level.

FIGURE 6-12

Search Topologies, Scaling, and High Availability

SharePoint Server 2010 enables you to add multiple instances of each of the crawler, indexing, and query components. This level of flexibility means that you can scale your SharePoint farms. Previous versions of SharePoint Server did not allow you to scale or provide redundancy for the indexing component.

The Enterprise Search features in SharePoint Server 2010 aim to provide subsecond query latencies for all queries, regardless of the size of your farm, and to remove bottlenecks that were present in previous versions of SharePoint Server. You can achieve these aims by implementing a scaled-out architecture. SharePoint Server 2010 enables you to scale out every logical component in your search architecture, unlike previous versions.

As shown in Figure 6-13, the architecture provides scaling at multiple levels. You can add multiple crawlers/ indexers to your farm to increase availability and to achieve higher performance for the indexing process. You can also add multiple query servers to increase availability and to achieve high query performance. All components, including administration, can be fault tolerant and can take advantage of the mirroring capabilities of the underlying databases.

FIGURE 6-13

The crawlers handle indexing as well. Each crawler can be assigned to a discrete set of content sources, so not all indexers need to index the entire corpus. This is a new capability for SharePoint Server 2010. Crawlers are now stateless, so that one can take over the activity of another, and they use the crawl database to coordinate the activity of multiple crawlers. Indexers no longer store full copies of the index; they simply propagate the indexes to query servers. Crawling and indexing are I/O and CPU intensive; adding more machines increases the crawl/index throughput linearly. Because content freshness is determined by crawl frequency, adding resources to crawling can provide fresher content, too.

When you add multiple query servers, you are really implementing index partitioning; each query server maintains a subset of the entire logical index and, therefore, does not need to query the entire index (which could be a very large file) for every query. The partitions are maintained automatically by SharePoint Server 2010, which uses a hash of each crawled document's ID to determine in which partition a document belongs. The indexed data is then propagated to the appropriate query server.

Another new feature is that property databases are also propagated to query servers so that retrieving managed properties and security descriptors is much more efficient than in Microsoft Office SharePoint Server 2007.

High Availability and Resiliency

Each search component also fulfills high-availability requirements by supporting mirroring. Figure 6-14 shows a scaled-out and mirrored architecture, sized for 100 million documents. SQL Server mirroring is used to keep multiple instances synchronized across geographic boundaries. In this example, each of the six query processing servers serves results from a partition of the index and also acts as a failover for another partition. The two crawler servers provide throughput (multiple crawlers) as well as high availability — if either crawler server fails the crawls continue.

FIGURE 6-14

As with any multi-tier system, understanding the level of performance resiliency you need is the starting point. You can then engineer for as much capacity and safety as you need.

FAST Architecture and Topology

FAST Search for SharePoint shares many architectural features of SharePoint Server 2010 search. It uses the same basic layers (crawl, index, query) architecturally. It uses the same crawler and query handlers, and the same people and expertise search. It uses the same OMs and the same administrative framework.

However, there are some major differences. FAST Search for SharePoint adds on to SharePoint server in a hybrid architecture (see Figure 6-2). This means that processing from multiple farms is used to form a single system. Understanding what processing happens in what farm can be confusing; remembering the hybrid approach with common crawlers and query OM, but separate people and content search is key to understanding the system configuration.

Figure 6-15 shows a high-level mapping of processing to farms. Light gray represents the SharePoint farm, medium gray represents the FAST backend farm, and dark gray represents other systems such as the System Center Operations Manager (SCOM).

FIGURE 6-15

SharePoint 2010 provides shared service applications (SSAs) to serve common functions across multiple site collections and farms. SharePoint Server 2010 search uses one SSA (see Figure 6-12). FAST Search for SharePoint uses two SSAs: the FAST Query SSA and the FAST Content SSA. This is a result of the hybrid architecture (shown in Figure 6-2) with SharePoint servers providing people search and FAST servers providing content search. Both SSAs run on SharePoint farms and are administered from the SharePoint 2010 central administration console.

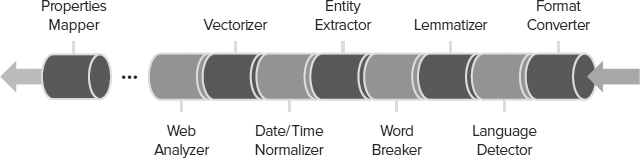

The FAST farm (also called the FAST backend) includes a Query Service, document processors that provide advanced content processing, and FAST-specific indexing connectors used for advanced content retrieval. Configuration of the additional indexing connectors is performed via XML files and through Windows PowerShell cmdlets or command-line operations, and are not visible via SharePoint Central Administration. Figure 6-16 gives an overview of where the SSAs fit in the search architecture.

FIGURE 6-16

The use of multiple SSAs to provide one FAST Search for SharePoint system is probably the most awkward aspect of FAST Search for SharePoint and the area of the most confusion. In practice, this is pretty straightforward, but you need to get your mind around the hybrid architecture and keep this in mind when you are architecting or administering a system. As a developer, you have to remember this when you are using the Administrative OM as well. The FAST backend is essentially a black box as far as SharePoint is concerned; all the administrative UI functions run in SharePoint and administer via the SSAs. One result of this is that the FAST Search for SharePoint distribution is only available in English, whereas the SharePoint distribution has many different language packs.

The FAST Query SSA handles all queries and also serves people search. If the queries are for content search, it routes them to a FAST Query Service (which resides on a FAST farm). Routing uses the default service provider property — or overrides this if you explicitly set a provider on the query request.

The FAST Query SSA also handles crawling for people search content. This is confusing to many because the people search is served from the same process that serves SharePoint 2010 search; it is in fact exactly the same search. If you look at the crawl administration screens for the FAST Query SSA, you should see it crawling the profile store, and nothing else. All other crawls appear in the FAST Content SSA.

The FAST Content SSA handles all the content crawling that goes through the SharePoint connectors or connector framework. It feeds all content as crawled properties through to the FAST farm (specifically a FAST content distributor), using extended connector properties. The FAST Content SSA includes indexing connectors that can retrieve content from any source, including SharePoint farms, internal/external web servers, Exchange public folders, line-of-business data and file shares.

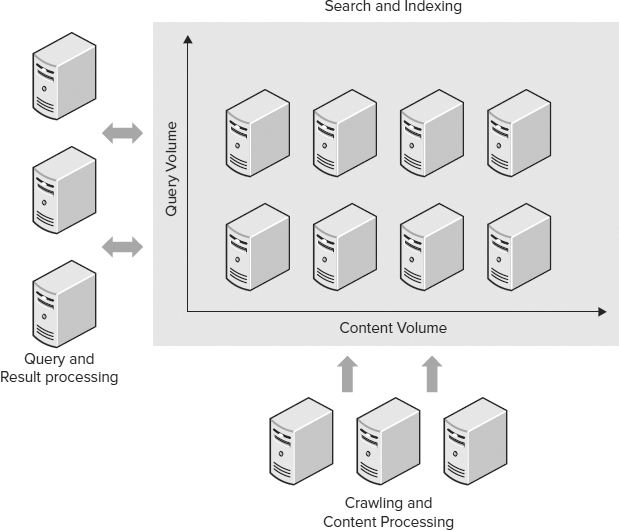

Scale Out with FAST

FAST Search for SharePoint is built on a highly modular architecture where the services can be scaled individually to achieve the desired performance. The architecture of FAST Search for SharePoint uses a row and column approach for core system scaling, as shown in Figure 6-17.

FIGURE 6-17

This architecture provides extreme scale and fault tolerance with respect to:

- Amount of indexed content: Each column handles a partition of the index, which is kept as a file on the file system (unlike SharePoint Server search index partitions, which are held in a database). By adding columns, the system can scale linearly to extreme scale — billions of documents.

- Query load: Each row handles a set of queries; multiple rows provide both fault tolerance and capacity. An extra row provides full fault tolerance, so if an application required four rows for query handling, a fifth row would provide fault tolerance. (For most inside-the-firewall implementations, a single row provides plenty of query capacity).

- Freshness (indexing latency): FAST Search for SharePoint enables you to optimize for low latency from the moment a document is changed in the source repository to the moment it is searchable. This can be done by proper dimensioning of the crawling, item processing, and indexing to fulfill your requirements. These three parts of the system can be scaled independently through the modular architecture.

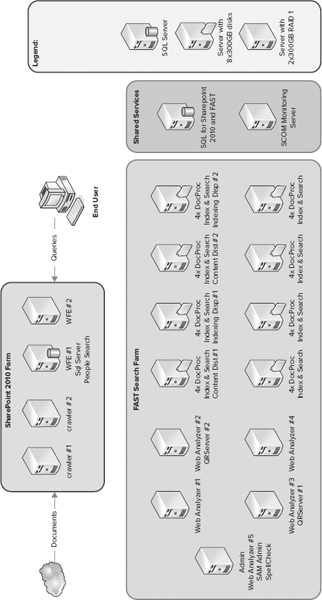

Figure 6-18 shows an example of a FAST Search for SharePoint topology, with full fault tolerance, sized for several hundred million documents.

FIGURE 6-18

This example includes both the SharePoint Server farm and the FAST Search backend farm. Because the connector framework is the same, crawling scale out and redundancy are the same as with SharePoint Server 2010 Search — unless FAST-specific connectors are in use. The query-mirroring approach is the same as with SharePoint Server Search, except that content queries are processed very lightly before handing off to FAST — so query capacity per machine or virtual machine (VM) is much higher for the SharePoint servers. The center layer is a farm of FAST Search servers, in a row-column architecture — which provides both scaling and fault tolerance.

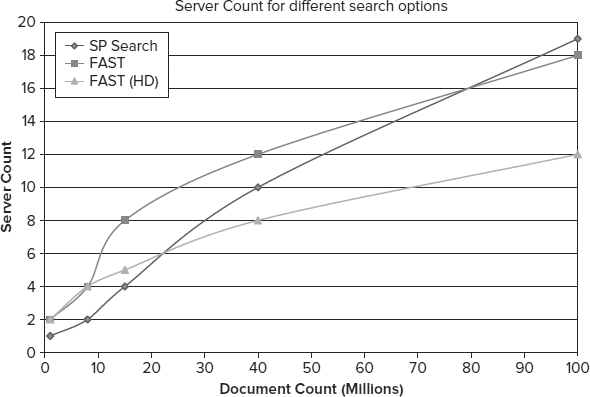

FAST Search for SharePoint provides a high-density mode that supports up to 45 million items per node (versus 15 million in standard mode). When you are working in this mode, your query response times are slightly longer. Using high-density mode is nearly always the best approach, because the hardware savings are significant and the added delay is quite small. Figure 6-19 compares the server counts for SharePoint search, FAST, and FAST with high-density mode.

FIGURE 6-19

SharePoint runs well with virtualization and with centralized storage such as Storage Area Networks (SANs), but planning and operating a large search topology with a SAN is a complex task. Search performance depends on I/O latency for random I/O, and each query may generate a dozen or more I/Os. Using SANs can be a great way to provide the needed storage, but shared SANs can be problematic, especially those engineered for low cost rather than high performance. Search performance using virtual machines is lower than on bare metal, and all VMs need adequate resources. Both SharePoint Search and FAST Search for SharePoint work well in virtual environments when set up properly. Figure 6-20 shows an example large-scale configuration planned for a virtual environment.

FIGURE 6-20

How Architecture Meets Applications

Capacity planning, scaling, and sizing are usually the domain of the IT pro; as a developer, you need only be aware that the architecture supports a much broader range of performance and availability than MOSS 2007. You can tackle the largest, most demanding applications without worrying that your application won't be available at extreme scale.

Architecture is also important for applications that control configuration and performance. You may want to set up a specific recommended configuration — or implement self-adjusting performance based on the current topology, load, and performance. The architecture supports adding new processing on the fly — in fact, the central administration console makes it easy to do so. This means that your applications can scale broadly, ensure good performance, and meet a broad range of market needs.

DEVELOPING WITH ENTERPRISE SEARCH

Developing search-powered applications has been a difficult task. Even though search is simple on the outside, it is complicated on the inside. With SharePoint 2010, developers have a development platform that is much more powerful and simpler to work with than MOSS 2007. That fact extends to search-based applications as well. Through a combination of improvements to the ways in which developers can collect data from repositories, query that data from the search index, and display the results of those queries, SharePoint Server 2010 offers a variety of possibilities for more complex and flexible search applications that access data from a wide array of locations and repositories.

There are many areas where development has become simpler — where you can cover with configuration what you used to do with code, or where you can do more with search. The new connector framework provides a flexible standard for connecting to data repositories through managed code. This reduces the amount of time and work required to build and maintain code that connects to various content sources. Enhanced keyword query syntax makes it easier to build complex queries by using standard logical operators, and the newly public Federated Search runtime object model provides a standard way of invoking those queries across all relevant search locations and repositories. The changes enable a large number of more complex interactions among Search web parts and applications, and ultimately a richer set of tools for building search result pages and search-driven features.

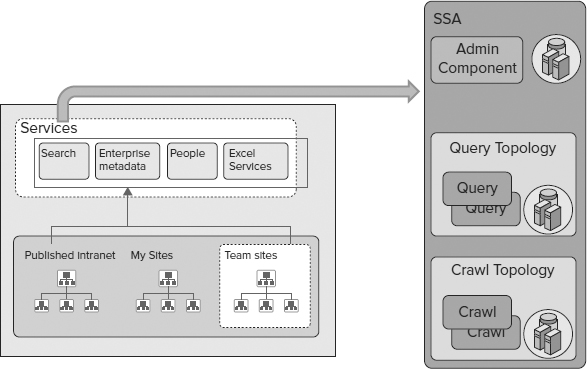

Range of Customization

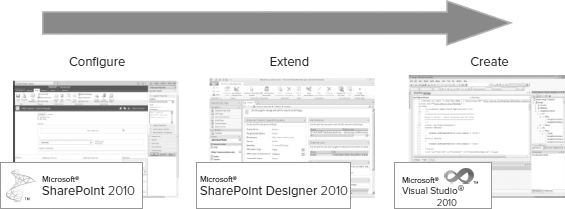

Customization of search falls into three main categories:

- Configure: Using configuration parameters alone, you can set up a tailored search system. Usually, you are working with web part configuration, XML, and PowerShell. Most of the operations are similar to what IT pros use in administering search — but packaged ahead of time by you as a developer.

- Extend: Using the SharePoint Designer, XSLT, and other “light” development, you can create vertical and role-specific search applications. Tooling built into SPD lets you build new UIs and new connectors without code.

- Create: Search can do amazing things in countless scenarios when controlled and integrated using custom code. Visual Studio 2010 has tooling built in, which makes developing applications with SharePoint much easier. In many of these scenarios, search is one of many components in the overall application.

Figure 6-21 shows the range of customization and the tooling typically used in these three categories. There are no hard rules here — general-purpose search applications, such as intranet search, can benefit from custom code and might be highly customized in some situations, even though intranet search works with no customization at all. However, most customization tends to be done on special-purpose applications with a well-identified set of users and a specific set of tasks they are trying to accomplish. Usually, these are the most valuable applications as well — ones that make customization well worth it.

FIGURE 6-21

Top Customization Scenarios

Although there are no hard rules, there are common patterns found when customizing Enterprise Search. The most common customization scenarios are:

- Modify the end user experience to create a specific experience and/or surface specific information. Examples: add a new refinement category, show results from federated locations, modify the look and feel of the OOB end user experience, enable sorting by custom metadata, add a Visual Best Bet for an upcoming sales event, configure different rankings for the human resources and engineering departments.

- Create a new vertical search application for a specific industry or role. Examples: connecting to and indexing specific new content, designing a custom search experience, adding Audio/ Video/Image search.

- Create new visual elements that add to the standard search. Examples: show location refinement on charts/maps, show tags in a tag cloud, enable “export results to a spreadsheet,” summarize financial information from customers in graphs.

- Develop content pipeline plug-ins: Used to process content in more sophisticated ways. Example: create a new “single view of the customer” application that includes customer contact details, customer project details, customer correspondence, internal experts, and customer-related documents.

- Query and indexing shims: Add terms and custom information to the search experience. Examples: expand query terms based on synonyms defined in the term store, augment customer results with project information, show popular people inline with search results, or show people results from other sources. Both the query OM and the connector framework provide a way to write “shims” — simple extensions of the .NET assembly where a developer can easily add custom data sources and/or do data mash-ups.

- Create new search-driven sites and applications: Create customized content exploration experiences. Examples: show email results from personal mailbox on Exchange Server through Exchange Web Services (EWS), index content from custom repositories like Siebel, create content-processing plug-ins to generate new metadata.

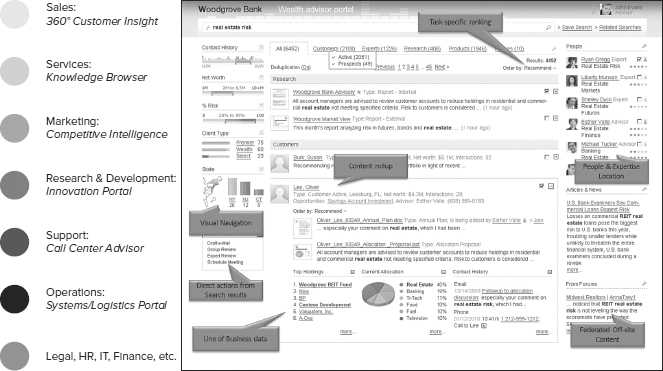

Search-Driven Applications

Search is generally not well understood or fully used by developers. SharePoint 2010 can change all that. By making it easier to own and use high-end search capabilities, and by including tooling and hooks specifically for application developer, Microsoft has taken a big step forward in helping developers do more with search.

Figure 6-22 shows some examples of search-driven applications. These are applications like any other, except that they take advantage of search technology in addition to other elements of SharePoint to create flexible and powerful user experiences.

FIGURE 6-22

The rest of this chapter covers different aspects of search with SharePoint 2010, highlighting how you can customize them and how you can include them in search-driven applications.

CUSTOMIZING THE SEARCH USER EXPERIENCE

While the out-of-the-box user interface is very intuitive and useful for information workers, power users can create their own search experiences. SharePoint Server 2010 includes many search-related web parts for power users to create customized search experiences, including Best Bets, refinement panel extensions, featured content, and predefined queries. Figure 6-23 shows the Search web parts.

FIGURE 6-23

IT pros or developers can configure the built-in search web parts to tailor the search experience. As a developer, you can also extend the web parts, which makes it unnecessary to create web parts to change the behavior of built-in web parts on search results pages. Instead of building new web parts, developers can build onto the functionality of existing web parts.

The most important search web parts provided are as follows:

- Advanced Search box: Allows users to create detailed searches against Managed Properties

- Federated Results: Displays results from a federated search location

- People Refinement Panel: Presents facets that can be used to refine a people search

- People Search box: Allows users to search for people using a keyword

- People Search Core Results: Displays the primary result set from a people search

- Refinement panel: Presents facets that can be used to refine a search

- Related Queries: Presents queries related to the user's query

- Search Action links: Displays links for RSS, alerts, and Windows Explorer

- Search Best Bets: Presents best-bets results

- Search box: Allows users to enter keyword query searches

- Search Core Results: Displays the primary result set from a query

- Search Paging: Allows a user to page through search results

- Search Statistics: Presents statistics such as the time taken to execute the query

- Search Summary: Provides a summary display of the executed query

- Top Federated Results: Displays top results from a federated location

You can use these web parts in combination with each other, and create new search web parts by extending the OOB ones.

Example: New Core Results Web Part

This section walks you through the creation of a new search web part in Visual Studio 2010. (The full code is included with Code Project 6-P-1, which contains SortedSearch.zip and is courtesy of Steve Peschka.) This web part inherits from the CoreResultsWebPart class and displays data from a custom source. The standard CoreResultsWebPart part includes a constructor and then two methods that you learn how to modify in this example.

The first step is to create a new WebPart class. Create a new web part project that inherits from the CoreResultsWebPart class. Override CreateChildControls to add any controls necessary for your interface, and then override CreateDataSource. This is where you get access to the “guts” of the query. In the override, you create an instance of a custom datasource class you build.

class MSDNSample : CoreResultsWebPart { public MSDNSample() { //default constructor; } protected override void CreateChildControls() { base.CreateChildControls(); //add any additional controls needed for your UI here } protected override void CreateDataSource() { //base.CreateDataSource(); this.DataSource = new MyCoreResultsDataSource(this); }

![]()

![]() All code in this section is from the SortedSearch.zip project file. To follow along, download the code and unzip it. The file has a complete ready-to-go project.

All code in this section is from the SortedSearch.zip project file. To follow along, download the code and unzip it. The file has a complete ready-to-go project.

The second step is to create a new CoreResultsDatasource class. In the override for CreateDataSource, set the DataSource property to a new class that inherits from CoreResultsDataSource. In the CoreResultsDataSource constructor, create an instance of a custom datasource view class you will build. No other overrides are necessary.

public class MyCoreResultsDataSource : CoreResultsDatasource

{

public MyCoreResultsDataSource(CoreResultsWebPart ParentWebpart)

: base(ParentWebpart)

{

//to reference the properties or methods of the web part

//use the ParentWebPart parameter

//create the View that will be used with this datasource

this.View = new MyCoreResultsDataSourceView(this,“MyCoreResults”);

}

}

The third step is to create a new CoreResultsDatasourceView class. Set the View property for your CoreResultsDatasource to a new class that inherits from CoreResultsDatasourceView. In the CoreResultsDatasourceView constructor, get a reference to the CoreResultsDatasource so that you can refer back to the web part. Then, set the QueryManager property to the shared query manager used in the page.

public class MyCoreResultsDataSourceView : CoreResultsDatasourceView

{

public MyCoreResultsDataSourceView(SearchResultsBaseDatasource

DataSourceOwner, string ViewName):

base(DataSourceOwner, ViewName)

{

//make sure we have a value for the datasource

if (DataSourceOwner == null)

{

throw new ArgumentNullException(“DataSourceOwner”);

}

//get a typed reference to our datasource

MyCoreResultsDataSource ds = this.DataSourceOwner as

MyCoreResultsDataSource;

//configure the query manager for this View

this.QueryManager =

SharedQueryManager.GetInstance(ds.ParentWebpart.Page

.QueryManager);

}

You now have a functional custom web part displaying data from your custom source. In the next example, you take things one step further to provide some custom query processing.

Example: Adding Sorting to Your New Web Part

The CoreResultsDataSourceView class lets you modify virtually any aspect of the query. The primary way to do that is in an override of AddSortOrder. This class provides access to SharePointSearchRuntime class, which includes: KeywordQueryObject, Location, and RefinementManager.

The following code example adds sorting by overriding AddSortOrder. (The full code is included with Code Project 6-P-1, courtesy of Steve Peschka.)

public override void AddSortOrder(SharePointSearchRuntime runtime)

{

#region Ensure Runtime

//make sure our runtime has been properly instantiated

if (runtime.KeywordQueryObject == null)

{

return;

}

#endregion

//remove any other sorted fields we might have had

runtime.KeywordQueryObject.SortList.Clear();

//get the datasource so we can get to the web part

//and retrieve the sort fields the user selected

SearchResultsPart wp = this.DataSourceOwner.ParentWebpart

as SearchResultsPart;

string sortField = wp.SortFields;

//check to see if any sort fields have been provided

if (Istring.IsNullOrEmpty(sortField))

{

//if posting back, then use the value from the sort drop-down

if (wp.Page.IsPostBack)

{

//get the sort direction that was selected

SortDirection dir =

(wp.Page.Request.Form[SearchResultsPart.mFormSortDirection]

== “ASC” ?

SortDirection.Ascending : SortDirection.Descending);

//configure the sort list with sort field anddirection

runtime.KeywordQueryObject.SortList.Add

(wp.Page.Request.Form[SearchResultsPart.mFormSortField],

dir);

}

else

{

//split the value out from its delimiter and

//take the first item in descending order

string[] values = sortField.Split(“;”.ToCharArray(),

StringSplitOptions.RemoveEmptyEntries);

runtime.KeywordQueryObject.SortList.Add(values[0],

SortDirection.Descending);

}

}

else

//no sort fields provided so use the default sortorder

base.AddSortOrder(runtime);

}

![]()

The KeywordQueryObject class is what's used in this scenario. It provides access to key query properties like:

- EnableFQL

- EnableNicknames

- EnablePhonetic

- EnableStemming

- Filter

- QueryInfo

- QueryText

- Refiners

- RowLimit

- SearchTerms

- SelectProperties

- SortList

- StartRow

- SummaryLength

- TrimDuplicates

- … and many more

To change the sort order in your web part, first remove the default sort order. Get a reference to the web part, as it has a property that has the sort fields. If the page request is a post-back, then get the sort field the user selected. Otherwise, use the first sort field the user selected. Finally, add the sort field to the SortList property.

To allow sorting, you also need to provide fields on which to sort. Ordering can be done with DateTime fields, Numeric fields, or Text fields where: HasMultipleValues = false, IsInDocProps = true, and MaxCharactersInPropertyStoreIndex > 0.

You can limit the user to selecting only fields by creating a custom web part property editor. This would follow the same process as in SharePoint 2007: inherit from EditorPart and implement IWebEditable. The custom version of EditorPart in this example web part uses a standard LINQ query against the search schema to find properties.

Web Parts with FAST

SharePoint search and FAST Search for SharePoint share the same UI framework. When you install FAST Search for SharePoint, the same Search Centers and Small Search Box web parts apply; the main Result web part and Refiner web part are replaced with FAST-specific versions, and a Search Visual Best Bets web part is added. Otherwise, the web parts (like the Related Queries web part or Federated Results web part) remain the same.

Because of the added capabilities of FAST, there are some additional configuration options. For example, the Core Results web part allows for configuration of thumbnails and scrolling previews — whether to show them or not, how many to render, and so forth. The search Action Links web part provides configuration of the sorting pulldown (which can also be used to expose multiple ranking profiles to the user). The Refinement web part has additional options, and counts are returned with refiners (since they are deep refiners — over the whole result set).

The different web parts provided with FAST Search for SharePoint and the additional configuration options are fairly self-evident when you look at the web parts and their documentation. Because web parts are public with SharePoint 2010, you can look at them directly and see the available configuration options within Visual Studio.

There are many features you can build with search and web parts. By modifying the Content Query web part to use data from search, for example, you can show dynamic content on your pages in nearly any format you like. Something as simple as adding a Survey web part on your search page can make a big difference in the search experience and is a great way to gather feedback that you can use to improve search for everyone.

SEARCH CONNECTORS AND SEARCHING LOB SYSTEMS

Acquiring content is essential for search: if it's not crawled, you can't find it! Typical enterprises have hundreds of repositories of dozens of different types. Bridging content silos in an intuitive UI is one of the primary benefits of search applications. SharePoint 2010 supports this through a set of pre-created connectors, plus a framework and set of tools that make it much easier to create and administer connectivity to whatever source you like. There is already a rich set of partner-built connectors to choose from, and as a developer, you can easily leverage these or add to them.

Using Out-of-Box Connectors

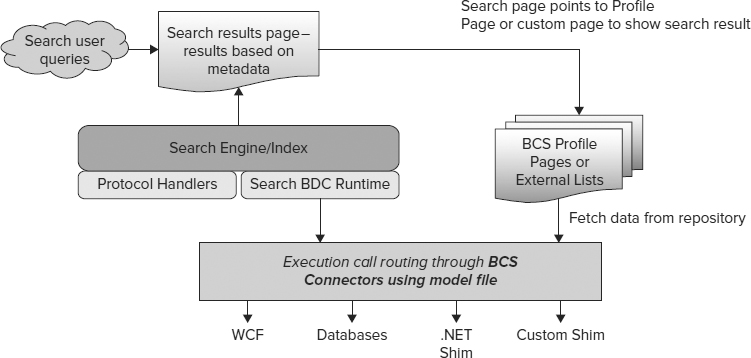

A number of connectors provide built-in access to some of the most popular types of data repositories (including SharePoint sites, websites, file shares, Exchange public folders, Documentum instances, and Lotus Notes databases). The same connectors can be configured to work with a wide range of custom databases and web services via Business Connectivity Services (BCS). For complex repositories, custom code lets you access line-of-business data and make it searchable.

SharePoint Server 2010 supports existing protocol handlers (custom interfaces written in unmanaged C++ code) used with MOSS 2003 and MOSS 2007. However, the new BCS connector framework is now the primary way to create interfaces to data repositories. The connector framework uses .NET assemblies, and supports the BCS declarative methodology for creating and expressing connections. It also enables connector authoring by means of managed code. This increased flexibility, with enhanced APIs and a seamless end-to-end experience for creating, deploying, and managing connectors, makes the job of collecting and indexing data considerably easier.

Search leverages BCS heavily in this wave (see Chapter 11 for more information about BCS). BCS is a set of services and features that provides a way to connect SharePoint solutions to sources of external data and to define External Content Types that are based on that external data. External Content Types allow the presentation of and interaction with external data in SharePoint lists (known as external lists), web parts, Microsoft Outlook 2010, Microsoft SharePoint Workspace 2010, and Microsoft Word 2010 clients. External systems that BCS can connect to include SQL Server databases, SAP applications, web services (including Windows Communication Foundation web services), custom applications, and websites based on SharePoint. With BCS you can design and build solutions that extend SharePoint collaboration capabilities and the Office user experience to include external business data and the processes that are associated with that data.

Microsoft BCS solutions use a set of standardized interfaces to provide access to business data. As a result, developers of solutions don't have to learn programming practices that apply to a specific system or need to be adapted to work with each external data source. Microsoft BCS also provides a runtime environment in which solutions that include external data are loaded, integrated, and executed in supported Office client applications and on the web server. Enterprise Search uses these same practices and framework — and connectors can reveal information in SharePoint that is synchronized with the external line-of-business system, including writing back any changes.

New Connector Framework Features

The connector framework, shown in Figure 6-24, provides improvements over the protocol handlers in previous versions of SharePoint Server. For example, connectors can now crawl attachments, as well as the content, in email messages. Also, item-level security descriptors can now be retrieved for external data exposed by Business Connectivity Services. Furthermore, when crawling a BCS entity, additional entities can be crawled via its entity relationships. Connectors also perform better than previous versions by implementing concepts such as inline caching and batching.

FIGURE 6-24

Connectors support richer crawl options than the protocol handlers in previous versions of SharePoint Server. For example, they support the full crawl mode that was implemented in previous versions, and they support timestamp-based incremental crawls. However, they also support change log crawls that can remove items that have been deleted since the last crawl.

FAST-Specific Indexing Connectors

The connector framework and all of the productized connectors work with FAST Search for SharePoint as well as SharePoint Server search. FAST also has two additional connectors.

The Enterprise crawler provides web crawling at high performance with more sophisticated capabilities than the default web crawler. It is good for large-scale crawling across multiple nodes and supports dynamic data, including JavaScript.

The Java Database Connectivity (JDBC) connector brings in content from any JDBC-compliant source. This connector supports simple configuration using SQL commands (joins, selects, and so on) inline. It supports push-based crawling, so that a source can force an item to be indexed immediately. The JDBC connector also supports change detection through checksums, and high-throughput performance.

These two connectors don't use the connector framework and cannot be used with SharePoint Server 2010 Search. They are FAST-specific and provide high-end capabilities. You don't have to use them if you are creating applications for FAST Search for SharePoint, but it is worth understanding if and when they apply to your situation.

Creating Indexing Connectors

In previous versions of SharePoint Server, it was very difficult to create protocol handlers for new types of external systems. Protocol handlers had to be coded in unmanaged C++ code and typically took a long time to test and stabilize.

With SharePoint Server 2010, you have many more options for crawling external systems:

- Use SharePoint Designer 2010 to create external content types and entities for databases or web services and then simply crawl those entities

- Use Visual Studio 2010 to create external content types and entities for databases or web services, and then simply crawl those entities

- Use Visual Studio 2010 to create .NET types for Business Connectivity Services (typically for backend systems that implement dynamic data models, such as document management systems), and then use either SharePoint Designer 2010 or Visual Studio 2010 to create external content types and entities for the .NET type

![]() You can still create protocol handlers (as in previous versions of SharePoint Server) if you need to.

You can still create protocol handlers (as in previous versions of SharePoint Server) if you need to.

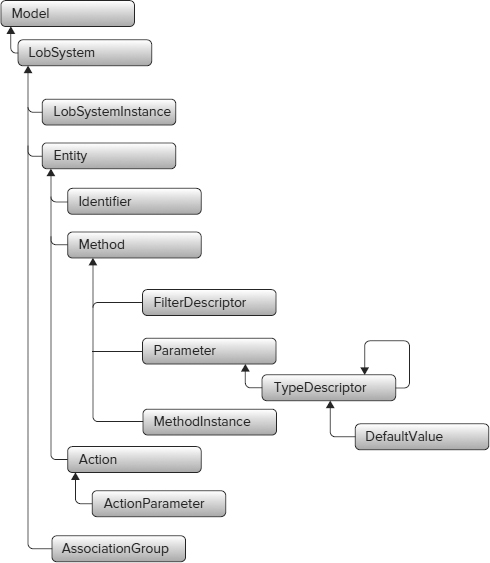

Model Files

Every indexing connector needs a model file (also called an application definition file) to express connection information and the structure of the backend, and a BCS connector for code to execute when accessing the backend (also called a shim). The model file tells the search indexer what information from the repository to index and any custom-managed code that developers determine they must write (after consulting with their IT and database architects). The connector might require, for example, special methods for authenticating to a given repository and other methods for periodically picking up changes to the repository.

You can use OOB shims with the model file or write a custom shim. Either way, the deployment and connector management framework makes it easy — crawling content is no longer an obscure art. SharePoint 2010 also has great tooling support for connectors.

Using OOB shims (Database/WCF/.NET) is very straightforward with SharePoint 2010. OOB shims are most appropriate when connecting to “flat” data structures for read-write or flat views for read-only look-ups, and custom shims often become necessary when connecting to complex data types (for example, multi-level hierarchies or tables with multiple relationships), or when fault tolerance logic needs to be applied (for example, to provide resiliency against source schema changes).

Tooling in SPD and VS2010

Both SharePoint Designer 2010 and Visual Studio 2010 have tooling that helps in authoring connectors. You can use SharePoint Designer to create model files for out-of-box BCS connectors (such as a database), to import and export model files between BCS services applications, and to enable other SharePoint workloads using external lists. Use Visual Studio 2010 to implement methods for the .NET shim or to write custom shim for your repository.

When you create a model file through SharePoint Designer, it is automatically configured for full-fidelity performant crawling. This takes advantage of features of the new connector framework, including inline caching for better citizenship, and timestamp-based incremental crawling. You can specify the search click-through URL to go to the profile page, so that content includes writeback, integrated security, and other benefits of BCS. Crawl management is automatically enabled through the Search Management console.

Figure 6-25 shows the relationships between the elements that are most commonly changed when creating a new connector using SharePoint Designer and OOB shims.

FIGURE 6-25

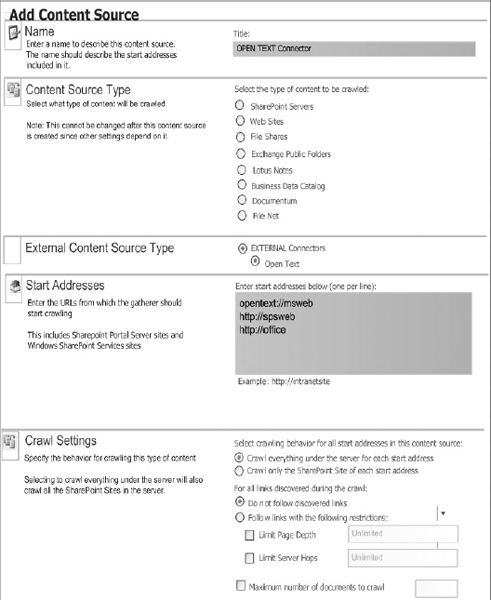

Figure 6-26 shows the Configuration panel within SharePoint Designer.

FIGURE 6-26

Writing Custom Connectors

This section walks through how to create a connector with a custom shim. Assume that you have a product catalog in an external system and want to make it searchable. Code project 6-P-2 shows the catalog schema and walks through this example step by step.

There are two types of custom connectors: a managed .NET Assembly BCS connector and a custom BCS connector. In this case, you use the .NET BCS connector approach. You need to create only two things: the URL parsing classes, and a model file.

The code is written with .NET classes and compiled into a Dynamic Link Library (DLL). Each entity maps to a class in the DLL, and each BDC operation in that entity maps to a method inside that class. After the code is done and the model file is uploaded, you can register the new connector either by adding DLLs to the global assembly cache (GAC) or by using PowerShell cmdlets to register the BCS connector model file. Configuration of the connector is then available through the standard UI; the content sources, crawl rules, managed properties, crawl schedule, and crawl logs work as they do in any other repository.

If you chose to build a custom BCS connector, you implement the ISystemUtility interface for connectivity. For URL mapping, you implement the ILobUri and INamingContainer interfaces. Compile the code into a DLL and add the DLL to the GAC, author a model file for the custom backend, register the connector using PowerShell, and you are done! The SharePoint Crawler invokes the Execute() method in the ISystemUtility class (as implemented by the custom shim), so you can put your special magic into this method.

A Few More Tips

The new connector framework takes care of a lot of things for you. There are a couple more new capabilities you might want to take advantage of:

- To create item-level security: Implement the GetSecurityDescriptor() method. For each entity, add a method instance property:

<Property Name = “WindowsSecurityDescriptorField” Type =“System.Byte[]”> Field name </Property>

- To crawl through entity associations: For association navigators (foreign key relationships), add the following property:

<Property Name=“DirectoryLink” Type=“System.String”> NotUsed </Property>

Deploying Connectors

Deploying connectors is quite straightforward for simple search systems. Out-of-the-box connectors are already installed with SharePoint and the configuration is easy. Some Microsoft-supplied connectors (notably the Documentum connector) are not provided with the SharePoint distribution, but are downloadable from TechNet. Third-party connectors have their own installation and configuration instructions. Custom connectors you write now have a straightforward packaging and deployment process as well.

Because SharePoint 2010 provides a highly scalable architecture, with the ability to provision multiple crawlers and multiple crawl databases, deployment of connectors for more complex search systems is possible. In these situations some planning and performance benchmarking is important. It is no longer unusual to crawl systems with 10 million items, which requires high throughput to keep full crawls to a reasonable timeframe. Deployments with 100 million items or more are now happening fairly regularly. At this scale, the throughput and reliability of connectors becomes the critical factor, especially in managing full crawls.

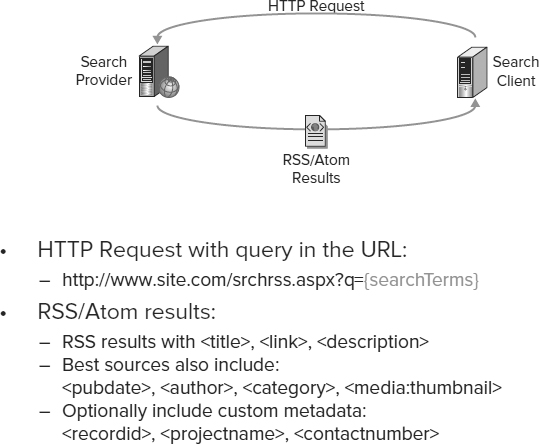

Packaging and Deploying Custom Connectors