CHAPTER 2

“Gut Feel” vs. Decision Analysis

Introduction to the Psychology of Project Decision-Making

The purpose of psychology is to give us a completely different idea of the things we know best.

—PAUL VALERY, FRENCH POET (1871–1945)

The root cause of almost all project failures is human error or misjudgment. These errors are hard to prevent, for they stem from human psychology. But decision-making is a skill that can be improved by training. By understanding how psychological heuristics and biases can affect our judgment, it is possible to mitigate their negative effects and make better decisions.

Human Judgment Is Almost Always to Blame

In his paper “Lessons Discovered but Seldom Learned or Why Am I Doing This if No One Listens” (Hall 2005), David C. Hall reviewed a number of projects that had failed or had major problems. Among them were:

• Malfunctions in bank-accounting software systems, which cost millions of dollars

• Space programs, including the Mars Polar Lander, Mars Climate Orbiter, and Ariane 5 European Space Launcher, that were lost

• Defense systems, including the Patriot Missile Radar System and the Tomahawk/LASM/Naval Fires Control System, which had serious problems

Hall listed the various reasons why projects are unsuccessful:

• Sloppy requirements and scope creep

• Poor planning and estimation

• Poor documentation

• Issues with implementation of new technology

• Lack of disciplined project execution

• Poor communication

• Poor or inexperienced project management

• Poor quality control

Hall’s list includes only the results of human factors; he did not find any natural causes—earthquakes, say, or falling meteorites or locust attacks—for project failures in these cases. In his paper he also described a recent study by the Swiss Federal Institute of Technology. The study analyzed 800 cases of structural failures where engineers were at fault. In those incidents 504 people were killed, 592 injured, and millions of dollars of damage incurred. The main reasons for failures were:

• Insufficient knowledge (36%)

• Underestimation of influence (16%)

• Ignorance, carelessness, and neglect (14%)

• Forgetfulness (13%)

• Relying upon others without sufficient control (9%)

• Objectively unknown situation (7%)

• Other factors related to human error (5%)

Extensive research on why projects fail in different industries leads to the same conclusion: human factors are almost always the cause (Johnson 2016; Rombout and Wise 2007). Furthermore, all of these problems can be traced to one fundamental cause: poor judgment. Hall asks, “Why don’t more people and organizations actually use history, experience, and knowledge to increase their program success?” The answer lies in human psychology.

All project stakeholders make mental mistakes or have biases of different types. Although the processes described in the PMBOK® Guide and many project management books help us to avoid and correct these mental mistakes, we should try to understand why these mistakes occur in the first place. In this chapter we will review a few fundamental principles of psychology that are important in project management. In subsequent chapters we will examine how each psychological pitfall can affect the decision analysis process.

Blink or Think?

Malcolm Gladwell, a staff writer for The New Yorker, published a book titled Blink: The Power of Thinking without Thinking (Gladwell 2005), which instantly became a best seller. Gladwell focused on the idea that most successful decisions are made intuitively, or in the “blink of an eye,” without comprehensive analysis. Shortly afterward, Michael LeGault wrote Think! Why Critical Decisions Can’t Be Made in the Blink of an Eye (LeGault 2006) as a response to Gladwell. LeGault argued that in our increasingly complex world people simply do not have the mental capabilities to make major decisions without doing a comprehensive analysis. So, who is right: Gladwell or LeGault? Do we blink or do we think?

Both authors raised a fundamental question: What is the balance between intuitive (“gut feel”) and controlled (analytical) thinking? The answer is not straightforward. As the human brain evolved, it developed certain thinking mechanisms—ones that are similar for all people regardless of their nationality, language, culture, or profession. Our mental machinery has enabled us to achieve many wondrous things: architecture, art, space travel, and cotton candy. Among these mechanisms is our capacity for intuitive thinking. When you drive a car, you don’t consciously think about every action you must make as you roll down the street. At a traffic light, you don’t think through how to stop or how to accelerate. You can maintain a conversation and listen to the radio as you drive. You still think about driving, but most of it is automatic.

Alternatively, controlled thinking involves logical analysis of many alternatives, such as you might perform when you are looking at a map and deciding which of several alternative routes you are going to take (after you’ve pulled over to the side of the road, we hope). When you think automatically, and even sometimes when you are analyzing a situation, you apply certain simplification techniques. In many cases, these simplification techniques can lead to wrong judgments.

People like to watch sci-fi movies in part because by comparing ourselves with aliens we can learn how we actually think. The Vulcans from the Star Trek TV series and movies are quite different from humans. They are limited emotionally and arrive at rational decisions only after a comprehensive analysis of all possible alternatives with multiple objectives. In many cases, Vulcan members of Star Trek crews like Spock from the original Star Trek (figure 2-1), T’Pol from Enterprise, or Tuvok from Voyager help save the lives of everybody on board. However, in a few instances, especially those involving uncertainties and multiple objectives, human crew members were able to find a solution when Vulcan logic proved fallible. In the “Fallen Hero” episode of Enterprise, the Vulcan ambassador V’Lar noted that the human commander Archer’s choice was not a logical course of action when he decided to fly away from an enemy ship. Archer replied that humans don’t necessarily take the logical course of action. Ultimately, in this episode, Archer’s choice proved to be the best one.

Project managers should resist the temptation to make an intuitive choice when they feel there is a realistic opportunity for further analysis.

Figure 2-1. Spock from Star Trek had the ability to think rationally (Credit: NBC Television, 1967)

The balance between intuitive and analytical thinking for a particular problem is not clear until the decision-making process is fully examined. Significant intellectual achievements usually combine both automatic and controlled thinking. For example, business executives often believe that their decisions were intuitive; but when they are questioned, it can be demonstrated that they did in fact perform some analysis (Hastie and Dawes 2009).

When people think consciously, they are able to focus on only a few things at once (Dijksterhuis et al. 2006). The more factors involved in the analysis, the more difficult it is to make a logical choice. In such cases, decision-makers may switch to intuitive thinking in an attempt to overcome the complexity. However, they always have the option to use different analytical tools, including decision analysis software, to come up with better decisions.

So, coming back to our original question—do we blink or think?—it is important not to dismiss the value of intuitive thinking in project management. Ever since there have been projects to manage, managers have been making intuitive decisions, and they will continue to do so. Intuition can work well for most short-term decisions of limited scope.

Because project managers rarely have enough time and resources to perform a proper analysis, and since decision analysis expertise is not always available, project managers are always tempted to make intuitive decisions. Even if you have experience with and knowledge of a particular area, some natural limitations to your thinking mechanisms can lead to potentially harmful choices. In complex situations, intuition may not be sufficient for the problems you face. This is especially true for strategic decisions that can significantly affect the project. In addition, intuitive decisions are difficult to evaluate: when you review a project, it is difficult to understand why a particular intuitive decision was made.

Cognitive and Motivational Biases

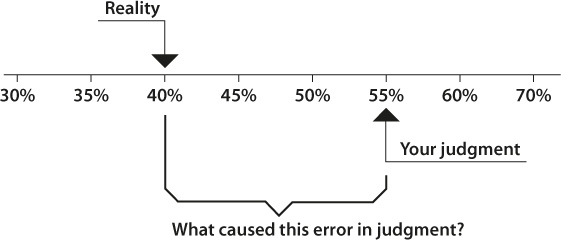

Let’s imagine that you are a campaign manager for a U.S. senator. You organized a few very successful meetings with voters in local day care centers, distributed one million “My Opponent Is a Degenerate” flyers, and released $3 million worth of negative ads exposing your opponent’s scandalous behavior when he was five years old. After all your hard work, you estimate that your senator has the support of at least 55% of the decided voters. Unfortunately, your estimate happens to be wrong: in reality you have only 40% support. So, what is the cause of this discrepancy (figure 2-2)? This is not only a mistake in your estimate of the poll numbers; there is also the question of whether you ran your campaign (project) correctly.

Bias is a discrepancy between someone’s judgment and reality.
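This definition can be illustrated with the numbers from the campaign example. The short Python sketch below treats bias as the signed difference between an estimate and the actual value. The function name `estimation_bias` is our own label for this illustration, not a standard term.

```python
def estimation_bias(estimate: float, actual: float) -> float:
    """Return the signed gap between a judgment and reality.

    A positive result means the estimate was higher than the actual value.
    """
    return estimate - actual


# Figures from the campaign example, in percent of decided voters.
estimated_support = 55.0
actual_support = 40.0

bias = estimation_bias(estimated_support, actual_support)
print(f"Bias: {bias:+.0f} percentage points")
```

In this case the estimate overshoots reality by 15 percentage points. The number alone says nothing about the cause, which is the question the rest of this section takes up.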

Why did you make this mistake? There might be a number of explanations:

• You were overconfident, and your expectations were greater than what was actually possible.

• You did not accurately analyze your own data.

• You were motivated to produce such positive estimates because you didn’t want to be fired if the poll numbers were not good enough.

• Your boss, the senator, told you what your estimates should be.

Figure 2-2. Bias in Estimation of Poll’s Results

We can explain the discrepancy in your poll numbers, and perhaps other problems in the campaign, by looking at some of the biases in your thinking. Don’t worry—we’re not picking on you. These are biases that can occur in anyone’s thinking.

There are two types of biases: cognitive and motivational.

COGNITIVE BIASES

Cognitive biases show up in the way we process information. In other words, they are distortions in the way we perceive reality. Many forms of cognitive bias exist, but we can separate them into a few groups:

Behavioral biases influence how we form our beliefs. One example is the illusion of control: the belief that we can control something that we cannot actually influence. In the past, for instance, some cultures performed sacrifices in the belief that doing so would protect them from the vagaries of the natural world. Another example is our tendency to seek information even when it cannot affect the project.

Perceptual biases can skew the ways we see reality and analyze information.

Probability and belief biases are related to how we judge the likelihood that something will happen. This set of biases can especially affect cost and time estimates in project management.

Social biases are related to how our socialization affects our judgment. It is rare to find anyone who manages a project in complete isolation. Daniel Defoe’s classic novel Robinson Crusoe may be the only literary example of a project carried out in complete isolation (aside from the occasional requirement raised by the threats from the local island population where he was living and writing). The rest of us are subject to varying biases about how people communicate with each other.

Memory biases influence how we remember and recall certain information. An example is hindsight bias (“I knew it all along!”), which can affect project reviews.

An example of one of the more common perceptual biases in project management is overconfidence. Many project failures originate in our tendency to be more certain than we should be that a certain outcome will be achieved. Before the disaster of the space shuttle Challenger, NASA scientists estimated that the chance of a catastrophic event was 1 per 100,000 launches (Feynman 1988). Given that the disaster occurred on the Challenger’s tenth launch (NASA 2018), the 1 in 100,000 estimate now appears to be wildly optimistic. Overconfidence is often related to judgment about probabilities, and it can affect our ability to make accurate estimates. Sometimes we can be overconfident in our very ability to resolve a problem successfully (McCray et al. 2002).
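A rough calculation shows how far that estimate sits from the outcome. The Python sketch below takes the 1 in 100,000 per-launch figure at face value and computes the chance of at least one catastrophic event in ten launches. It assumes that launches are independent and that the per-launch probability is constant. Both are our simplifying assumptions, not claims from the cited sources.

```python
def prob_at_least_one_failure(p_per_launch: float, launches: int) -> float:
    """Chance of one or more failures in a series of launches.

    Assumes independent launches with the same failure probability,
    which is a simplification made for this illustration.
    """
    return 1.0 - (1.0 - p_per_launch) ** launches


p = 1 / 100_000   # per-launch estimate quoted in the text
n = 10            # number of launches considered in the text

chance = prob_at_least_one_failure(p, n)
print(f"Chance of at least one failure in {n} launches: {chance:.4%}")
```

Under these assumptions the chance comes to roughly 1 in 10,000. An event that an estimate rates this unlikely, yet that happens anyway, is a strong signal that the estimate itself deserves scrutiny.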

Another bias that is extremely common in project management is optimism bias, also known as the planning fallacy. Optimism bias, among other things, affects how project managers perform their estimations. We will discuss this bias in detail in chapter 11.

Appendix B lists cognitive biases that are particularly related to project management. The list is not a comprehensive set of all possible mental traps that pertain to project management. Instead, we offer it as a tool that can help you understand how such traps can affect you and your projects.

MOTIVATIONAL BIASES

The personal interests of a person expressing an opinion cause what we call motivational biases. These are often easy to identify but difficult to correct, as you must remove the motivational factors causing the bias. If an opinion comes from an independent expert, removing the bias will not be too difficult because, by definition, such an expert has no vested interest in the project outcomes. If, however, you suspect that a member of the project team is biased, corrective actions can be difficult to accomplish, as it is hard to eliminate the personal interests of team members or managers from the project without removing the individuals themselves. Motivational biases are like an illness: you know that you have the flu, but there is very little you can do about it.

Perception

Consider a situation in which you and your manager are in the midst of a heated disagreement. You believe that your project is progressing well; your manager thinks that it is on the road to failure. Both of you are looking at the same project data, so you and your manager obviously have different perceptions of the project. Who is right?

Most people believe themselves to be objective observers. However, perception is an active process. We don’t just stand back passively and let the real “facts” of the world come to us in some kind of pure form. If that were so, we’d all agree on what we see. Instead, we reconstruct reality, using our own assumptions and preconceptions: what we see is what we want to see. This psychological phenomenon is called selective perception. As a project manager, you will have a number of expectations about the project. These expectations have different sources: past experience, knowledge of the project, and certain motivational factors, including political considerations. These factors predispose you to process information in a certain way.

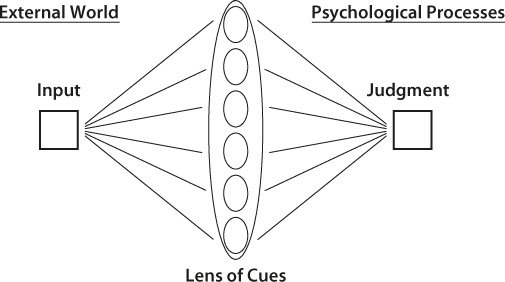

Psychologists try to understand how the process of making judgments actually works. One of the tools that can be used to model mental activities associated with project management is the lens model. Invented by Egon Brunswik in 1952 (Hammond and Stewart 2001), the lens model is not a comprehensive theory about how judgments are made, but rather a conceptual framework that models how judgments are made under uncertain conditions.

For example, let’s assume that you are working for a national intelligence agency and are involved in a project to capture some terrorists. Occasionally they issue a new tape that could provide some information about their whereabouts. Your task is to analyze the tape to discover the location of their hideout. We can assess your task by applying the lens model.

The lens model is divided in two: the left side represents the “real world”; the right side represents events as you see them in your mind (figure 2-3). You try to see a true state of the world (the terrorists’ location) through the lens of cues or items of information. On the right side of the diagram, information is conveyed to you by cues in the form of estimates, predictions, or judgments of the value of the input parameter. If, for example, you have an audiotape supposedly from the terrorists, you could try to listen for some external sounds specific to a geographical location, certain features of the speaker’s voice, the content of the speech, or anything else that might give an indication regarding the location. A videotape might give you more information or cues. However, the way that you interpret these cues is predicated on the lens through which you view them.

For example, if a video came in showing a group of “Islamic-appearing” men drinking tea, the intelligence officer might immediately infer that they are meeting to discuss political matters, perhaps planning a future attack somewhere in the Pakistan–Afghanistan border area, and will start to look for clues to confirm this perception. The reality may be that they are merely discussing family matters in an entirely different location.

This “lens of cues” is a certain mind-set that predisposes you to see information in a certain way. These mind-sets are unavoidable: it is impossible to remove our own expectations from our prior judgments. Moreover, these mind-sets are easily formed but extremely hard to change. You can come to an assumption based on very little information (such as your certainty of a terrorist network in Pakistan) but, once formed, it is hard to change the perception unless solid evidence to the contrary is provided. Therefore, if your intelligence manager has come to an opinion about the project (for instance, she already believes that terrorists are plotting something in Pakistan) based on inaccurate or incomplete information, it is hard to change this opinion. You probably know about this phenomenon with regard to first impressions; when you judge people or somebody judges you, original impressions are quite difficult to change.

Figure 2-3. Lens Model of Judgment

A project manager will manage a project based on how he or she perceives the project. When a manager believes that everything is going well in spite of evidence to the contrary, he or she will not see the need to take corrective actions. In these cases, selective perception can lead to biases—and eventually to wrong decisions. A common upshot of this bias is a premature termination of the search for evidence: we tend to accept the first alternative that looks like it might work. We also tend to ignore evidence that doesn’t support our original conclusion.

Before making a decision, therefore, it is important to pause and consider these questions:

• Are you motivated to see the project in a particular way?

• What do you expect from this particular decision?

• Would you be able to see the project differently without these expectations and motivational factors?

Bounded Rationality

Why, you may wonder, do our cognitive abilities have limitations? Herbert Simon suggested the concept of bounded rationality (Simon 1956)—that is, we humans have a limited mental capacity and cannot directly capture and process all the world’s complexity. Instead, people construct a simplified model of reality and then use this model to come up with judgments. We behave rationally within the model; however, our model does not necessarily represent reality. For example, when you plan a project, you have to deal with a web of political, financial, technical, and other considerations. Moreover, reality has a lot of uncertainties that you cannot easily comprehend. In response, you create a simplified model that enables you to deal with these complex situations. Unfortunately, the model is probably inadequate, and judgments based on this model can be incorrect.

Heuristics and Biases

According to a theory that Daniel Kahneman developed with Amos Tversky (Tversky and Kahneman 1971), people rely on heuristics, or general rules of thumb, when they make judgments. In other words, they use mental “shortcuts.” In many cases, heuristics lead to rational solutions and good estimates. In certain situations, however, heuristics can cause inconsistencies and promote cognitive biases. Kahneman and Tversky outlined three main heuristics.

AVAILABILITY HEURISTIC

Assume that you are evaluating project-management software for your company. You did a lot of research, read a number of detailed reviews, used a number of different evaluation tools, and concluded that Product X is a good fit for your organization. Then, just after you finished your report, you went to a conference and met a well-known expert in the industry who had a different opinion: “Product X is a poor choice. It is slow and difficult to use.” You feel relieved that you had this conversation before you handed in your recommendations, but your real mistake may be in throwing out your original recommendations. On the basis of the opinion of one individual, you are ready to scrap the findings in your well-researched and comprehensive report. You are giving too much weight to this opinion because of the manner and the timing in which it was presented to you. This is an example of a bias that is related to the availability heuristic.

According to the availability heuristic, people judge the probability of the occurrence of events by how easily these events are brought to mind. When we try to assess the probability of a certain event or recall all instances of an event, we initially recall events that are unusual, rare, vivid, or associated with other events, such as major issues, successes, or failures. As a result, our own assessment of probabilities is skewed because the ease with which an event can be recalled or imagined has nothing to do with the actual probability of the event’s occurring.

When you see a slot machine winner holding up a poster-sized multimillion-dollar check, you might assume that you yourself have a reasonable chance of winning at the casinos. This belief can be formed because you have received a vivid image or information related to a rare (and desirable) event: winning a jackpot. Add to this what you read and see in the media, and you have everything you need to misjudge your probability (or hope) of winning. If the government really wanted to fight gambling, based on what we know about the availability heuristic, it should erect huge billboards in front of casinos listing personal bankruptcies and showing broken families. How would you feel about your chances of winning if each time you went to the casino you saw this sign:

Welcome to Our Friendly Casino

This year 168,368 people lost $560 million here!

5% of our guests divorced, 1% became alcoholics,

and 0.4% committed suicide

You might have second thoughts about your chances of winning the jackpot. Advertisers, politicians, salespeople, and trial lawyers use the power of vivid information all the time. Biases associated with the availability heuristic are extremely common in project management, primarily when we perform project estimations. We will review the psychology of estimating in chapter 11.

(Here is a suggestion: if you want your project idea to be accepted, use many colorful images and details in your presentation! When the time comes for management to decide which projects should go forward, they will have an easier time remembering your presentation.)

So, how do you mitigate the negative impact of the availability heuristic? One suggestion is to collect as many samples of reliable information as you can and include them in the analysis. For example, if you are estimating the probability of a risk such as “delay with receiving components,” ask your procurement department for records related to all components. How many of them were delayed, and how many arrived on time?
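The procurement suggestion can be sketched in a few lines of Python. The idea is to replace the one delay that everyone remembers with a simple count over all recorded deliveries. The `Delivery` record, the `delay_frequency` function, and the sample data are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Delivery:
    component: str
    days_late: int  # 0 or less means the component arrived on time


def delay_frequency(records: list[Delivery]) -> float:
    """Share of recorded deliveries that arrived late."""
    if not records:
        raise ValueError("need at least one delivery record")
    late = sum(1 for r in records if r.days_late > 0)
    return late / len(records)


# Made-up procurement records, for illustration only.
records = [
    Delivery("pump", 0),
    Delivery("valve", 12),
    Delivery("sensor", 0),
    Delivery("controller", 3),
    Delivery("cable", 0),
    Delivery("housing", 0),
    Delivery("motor", 0),
    Delivery("display", 0),
]

print(f"Observed delay frequency: {delay_frequency(records):.0%}")
```

With these sample records, two of eight deliveries were late. A memorable twelve-day delay might suggest that components are "always late," but the full record gives a more sober starting point for the risk estimate.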

REPRESENTATIVENESS HEURISTIC

Take a look at figure 2-4. What brand is this car and where is it produced? Is it a Mazda, Toyota, or Kia? In fact, it is a Malaysian-designed Proton, assembled in Bangladesh. You probably don’t associate very nice-looking and well-built cars with Malaysian or Bangladeshi design and production.

Here is another example: let’s assume that you want to estimate the chance of success for a project with the following description:

The project is managed by a project manager with ten years of industry experience. He has a PMP designation and actively uses processes defined in the PMBOK Guide in his management practices.

Based on this description, you categorize this as a well-managed project. You will judge the probability of success of the project based on the category this project represents (Tversky and Kahneman 1982). In many cases this representativeness heuristic will help you to come up with a correct judgment. However, sometimes it causes major errors. For example, we may believe the software of a new startup is unproven and potentially unreliable and, therefore, we choose to avoid dealing with their products even if they are of very high quality.

Figure 2-4. What brand is this car, and where was it produced? (Photo by Areo7)

One type of bias related to this heuristic is the conjunction fallacy. Here is an example of a conjunction fallacy: a company is evaluating whether to upgrade its existing network infrastructure and is considering two scenarios:

A. New networking infrastructure will improve efficiency and security by providing increased bandwidth and offering more advanced monitoring tools.

B. New networking infrastructure will be more efficient and secure.

Statement A seems to be more plausible, and therefore more probable, than the more general statement B. In reality, however, statement B has the higher probability of occurring: statement A can be true only if statement B is also true, so the added detail can never raise the probability. The conjunction fallacy is the tendency to believe that scenarios with greater detail are also more probable. This fallacy can greatly affect your ability to manage projects, because if you must select one project from a number of proposals, you may tend to pick those proposals with the most details, even though they may not have the best chance of success.
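The arithmetic behind the two network scenarios is simple enough to sketch in Python. Scenario A is scenario B plus an extra condition (the improvement comes specifically from increased bandwidth and better monitoring tools), so its probability is the probability of B multiplied by a factor no greater than one. The probability values below are invented purely for illustration.

```python
def conjunction_probability(p_general: float, p_detail_given_general: float) -> float:
    """Probability that the general outcome AND the extra detail both hold."""
    for p in (p_general, p_detail_given_general):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie between 0 and 1")
    return p_general * p_detail_given_general


# Hypothetical values, chosen only to illustrate the relationship.
p_b = 0.7       # B: the new infrastructure is more efficient and secure
p_detail = 0.6  # given B, it happens via bandwidth and monitoring tools

p_a = conjunction_probability(p_b, p_detail)

print(f"P(B) = {p_b:.2f}, P(A) = {p_a:.2f}")
```

Whatever values are chosen, the detailed scenario can at best tie with the general one. It can never be more probable.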

Another bias related to representativeness is ignoring regression to the mean. People expect some extreme events to be followed by similar extreme events. This is extremely common in investing and sales. If you have a huge return on investment or a significant sales deal, you expect that it will be repeated and become part of a trend. However, it may be just a single event, after which everything will return to its normal state.

So, how can you mitigate the negative effect of representativeness? You may try to think about different methods to categorize processes or objects. Suppose, for example, that you have to estimate how long excavation might take during construction. That particular task could belong to different categories: excavation of a foundation for a residential building, excavation for utilities, and so on. This way, you can make your estimates based on similar tasks in each category.

ANCHORING HEURISTIC

How often have you gone to a store and found that an item you want is on sale? For example, the suit you want is priced down from $399 to $299 with a “sale” label attached to it. “What a great bargain!” you think, so you buy the suit. However, from the store’s point of view, the original price of $399 served as a reference point or anchor, a price at which they would probably never actually attempt to sell the suit. But by posting $399 and a “sale” sign on it, the store is able to sell a lot of suits at $299. By fixating on only a single piece of information, the price of $399, you probably did not stop to consider whether the asking price of $299 was a good value for your money. Further research might show, for example, that other stores sell the same suit for $199, or that other suits in the first store priced at $299, but not “on sale,” are better values.

Anchoring is the human tendency to rely on one trait or piece of information when making a decision.

We always use a reference point when we try to quantify something. This is called the anchoring heuristic, which can be very helpful in many cases. Unfortunately, as with the other heuristics we have mentioned, it often causes biases that are difficult to overcome. One of them is related to an insufficient adjustment after defining an initial value. Once we have determined a certain number or learned about a certain reference point, we don’t significantly deviate from this value when we research a problem.

Another bias related to anchoring is called the “focusing effect” (Schkade and Kahneman 1998). The focusing effect occurs when decision-makers place too much importance on one aspect of an event or process. For example, people often associate job satisfaction with salary and position, while in fact job satisfaction is determined by many factors, including working environment, location, and so on.

The problem with the anchoring heuristic is that it is very difficult to overcome. Our suggestion is to use more than one reference point during your analysis of an issue. When you buy a suit, for instance, try to assess prices using not just the suit from the department store as a reference, but also suits that you can find at Walmart or other less expensive outlets. When you think about project cost, use more than one example as a reference.
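The effect of adding reference points can be shown with the suit example. The Python sketch below compares the $299 asking price first against the single $399 anchor and then against the median of several reference prices. The $199 price comes from the example above. The other two reference prices and the function name are hypothetical.

```python
from statistics import median


def gap_to_reference(asking_price: float, reference_prices: list[float]) -> float:
    """Asking price minus the median reference price.

    Negative means the asking price looks cheap relative to the references.
    """
    if not reference_prices:
        raise ValueError("need at least one reference price")
    return asking_price - median(reference_prices)


asking = 299.0

# One anchor only: the crossed-out "original" price on the tag.
single_anchor = [399.0]

# Several reference points: 199 is from the text, 249 and 279 are invented.
several_references = [399.0, 199.0, 249.0, 279.0]

print(f"Versus the anchor alone:   {gap_to_reference(asking, single_anchor):+.0f}")
print(f"Versus several references: {gap_to_reference(asking, several_references):+.0f}")
```

Against the anchor alone, the suit looks like a $100 saving. Against the wider set of references, the same price sits above the typical one. The same comparison can be made with project cost estimates by collecting several comparable projects instead of one.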

Behavioral Traps

Let’s say that you are managing a software project that includes the development of a component for creating 3-D diagrams. Four team members are slated to work on this particular component for at least a year. The development of the component could easily cost more than $1 million when you add up the salaries and expenses such as travel, computers, the holiday party, and other sundries. (If you are lucky, the team members are sitting in cubicles somewhere in rural Montana. If they develop this component in Manhattan, development costs will probably triple.) As luck has it, the project progresses on time and within budget, and everything appears to be going extremely well. Inadvertently, though, while browsing an industry website you discover that you could have purchased a similar component off the shelf, one that not only has better performance but also costs only $10,000. At this point, your project is 90% complete, and you have spent $900,000. Should you halt your project and switch to the off-the-shelf solution—or continue your project with the added $100,000 investment?

When psychologists asked people a similar question, 85% chose to continue with the original project (Arkes and Blumer 1985). But when the original investment was not mentioned, only 17% of people chose to continue the original project. This is a classic case in which you are asked to either “cut and run” or “stay the course.”

This phenomenon is called the “sunk-cost effect,” and it is one of many behavioral traps (Plous 1993). These traps occur when you become involved in a rational activity that later becomes undesirable and difficult to extricate yourself from. A number of different categories of behavioral traps can be found in project management. The sunk-cost effect belongs to the category of investment traps. Walter Fielding, played by Tom Hanks in the 1986 film The Money Pit, experienced an investment trap when he purchased his dream house. His original investment was quite small, but the incremental cost of the required renovations proved to be his undoing. A rational person would have walked away once the real cost of the house became apparent.
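Returning to the 3-D component example, the trap becomes easier to see when only future costs are compared. The Python sketch below uses the figures from that example and deliberately leaves the $900,000 already spent out of the comparison. It assumes that both options deliver an acceptable component and that switching carries no extra integration cost. These are simplifications made for this illustration, and the function name is hypothetical.

```python
def cheapest_option(future_costs: dict[str, float]) -> str:
    """Pick the option with the lowest remaining (future) cost."""
    if not future_costs:
        raise ValueError("need at least one option")
    return min(future_costs, key=future_costs.get)


# Already spent; it is the same whichever option is chosen, so it is ignored.
sunk_cost = 900_000

# Remaining cost of each option, taken from the example in the text.
future_costs = {
    "finish in-house component": 100_000,
    "buy off-the-shelf component": 10_000,
}

choice = cheapest_option(future_costs)
savings = max(future_costs.values()) - min(future_costs.values())

print(f"Lowest future cost: {choice} (saves ${savings:,.0f})")
```

The money already spent does not appear in the calculation because no decision made today can recover it. In practice a manager would add integration effort, licensing terms, and performance to the comparison, but the sunk cost still would not belong there.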

The following are a few other types of traps that you will want to avoid.

TIME DELAY TRAPS

One type of trap, the time delay trap, occurs when a project manager cannot balance long-term and short-term goals. If you want to expedite the delivery of a software product at the expense of the software’s architecture, unit testing, and technical documentation, you will jeopardize the long-term viability of the software, even though you get the first (and possibly flawed) generation of it out to your customers on time. All project managers are aware of this trade-off but often ignore long-term objectives to meet short-term goals. In these cases, project managers usually blame organizational pressure, customer relationships, and so on, when the problem is really a fundamental psychological trap. It’s like when you postpone your dental cleaning appointment to save a few bucks or because it’s inconvenient, and a few years later you end up needing to have major dental work done. Or when you use your credit cards for your holiday shopping and end up with an even larger debt.

DETERIORATION TRAPS

Similar to investment traps, deterioration traps occur when the expected costs and benefits associated with the project change over time. During the course of new product development, costs may grow substantially. At the same time, because of a number of unrelated marketing issues, fewer clients may be willing to buy the product. In this case, the results of the original analysis are no longer valid.

Deterioration traps are common in processes involving the maintenance of “legacy” products. Should the software company continue releasing new versions of its old software or develop completely new software? Releasing new versions of the old product would be cheaper. Over time, however, it can be more expensive to delay the new product. Should automakers continue with an old platform or invest hundreds of millions of dollars to develop a new one?

Frames and Accounts

As a project manager, you are probably a frequent flyer. Oil prices go up and down, so airlines often impose fuel surcharges. They can do it in two ways:

1. Announce a fuel surcharge of, say, $20 per flight when fuel prices go up.

2. Advertise prices that already include a fuel surcharge, and announce a sale (“$20 off!”) when fuel prices go down.

For the consumer there is no financial difference between these two methods of advertising, but people tend to perceive them differently. Tversky and Kahneman (1981) call this effect framing. They proposed that decision frames are the ways in which we perceive a problem. These frames are shaped by personal habits, preferences, and characteristics, as well as by the formulation or language of the problem itself.

We apply different frames not only to our choices but also to the outcomes of our choices. In the example that follows, consider three scenarios:

Scenario 1: You are involved in a construction project worth $300 million and have discovered a new approach that would save $1 million. It will take you a lot of time and effort to do the drawings, perform structural analysis, and prepare a presentation that will persuade management to take this course. Would you do it?

Scenario 2: You are involved in an IT project worth $500,000 and have discovered a way to save $80,000. You need to spend at least a couple of days researching and putting together a presentation. Would you do it?

Scenario 3: You are involved in the same construction project as in scenario 1 and have found a way to save $80,000 (by replacing just one beam). You need to spend several days on research and the presentation. Would you do it?

Most likely you would not bother with an $80,000 improvement for a $300 million project (scenario 3) but would pursue your ideas in scenarios 1 and 2. This is because people have different frames and accounting systems for different problems. When we purchase a home, we don’t worry if we overspend by $20 because it comes from our “home buying” account and $20 is a tiny part. However, when we purchase a shovel, we are really concerned about an extra $20 because it comes from our “home tools” account and $20 is a significant amount. Both accounts operate according to different mental rules, even though everything technically comes from the same bank account.
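The arithmetic behind these mental accounts is easy to check. The short Python sketch below is an illustration only; the helper name `savings_share` is an assumption introduced here, while the dollar figures come from the three scenarios above. It expresses each saving as a share of the project's value, which is roughly how the mental account weighs it.

```python
# (project value, potential savings) in dollars, taken from the three scenarios
scenarios = {
    "Scenario 1": (300_000_000, 1_000_000),
    "Scenario 2": (500_000, 80_000),
    "Scenario 3": (300_000_000, 80_000),
}


def savings_share(project_value, savings):
    """Savings expressed as a fraction of the total project value."""
    return savings / project_value


for name, (value, savings) in scenarios.items():
    share = savings_share(value, savings)
    print(f"{name}: ${savings:,} saved is {share:.3%} of project value")
```

The same $80,000 is 16% of the IT project but less than 0.03% of the construction project, even though it is worth exactly the same amount in the bank. The $1 million saving in scenario 1 is only about 0.33% of its project, yet its absolute size makes it feel worth pursuing.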

Training for Project Decision-Making Skills

The CN Tower in Toronto (figure 2-5) was the world’s tallest freestanding structure at 1,815 feet (553 meters) until 2007. A glass-floored observation deck is located at a height of 1,122 feet (342 meters). There, you can walk on a glass floor and see what is directly below your feet: the ground, more than a thousand feet below (figure 2-6). At first, you would probably be afraid to step out onto the floor. But as you realize that the glass is extraordinarily strong (you might bounce a little to see how rigid it is), you walk a few steps away from the edge. Finally, as you overcome your anxiety, you start walking more or less freely. Still, you can see that more people stay on the edge of the glass than actually walk out on it.

All of us have inherited a fear of heights. We are afraid to fall, and this is a natural fear. This property of our mental machinery saves us from a lot of trouble. At Toronto’s CN Tower, you have started to teach yourself to overcome this particular bias, as your instinctive fear of heights is gradually replaced with the logic that there is no danger in this particular case.

This example illustrates a very important point. Decision-making is a skill that can be improved with experience and training (Hastie and Dawes 2009). Remember the Vulcans from the Star Trek series we discussed earlier? Their extreme rationality is set against the human emotions that cause the other main characters to act irrationally. But according to Star Trek lore, this was not always the case. In the past, Vulcans were much more like Star Trek’s human characters, but in response to severe internal conflicts they taught themselves to be more rational and less emotional. Could you follow in the Vulcans’ footsteps?

Figure 2-5. CN Tower (Photo by Wladyslaw)

Figure 2-6. View from CN Tower glass floor (Photo by Franklin.vp)

Project managers can teach themselves to make better choices by overcoming common mental traps. Many biases are hard to overcome, and it requires concerted effort and some experience to do so. As a first step, you need to learn that these biases exist.