CHAPTER 4

What Is Rational Choice?

A Brief Introduction to Decision Theory

Different organizations and individuals use different preferences and principles to select policy alternatives. We call those preferences and principles their “decision policies.” An important part of an organization’s decision policy is its attitude toward risk.

In this chapter we look at what it means to make rational decisions. We examine the concept of expected value and the normative expected utility theory, which provides a set of axioms, or rules, for how rational decisions should be made. Finally, we look at prospect theory, which is a descriptive approach to understanding decision-making.

Decision Policy

A decision policy is the set of principles or preferences that an organization uses when selecting its policy alternatives. The organization’s attitude toward risk is a key component of its decision policy.

Every decision policy reflects the attitude of an organization toward its objectives. The policy is a function of many parameters, such as client requirements, corporate culture, organizational structure, and investor relationships. In most cases, decision policies are not written; instead, they are an unwritten understanding that evolves over time with organizational changes, and they are understood by the managers. However, even though decision policies are usually an informal understanding, they are very difficult to change, even if directed by executive management.

The art and science of decision-making is applying decision policy to make rational choices.

In addition to an organization’s attitude toward risk, a decision policy includes attitudes toward cost, customer satisfaction, safety, quality, and the environment, among other parameters. When an organization makes its choices, it puts a premium on some parameters and downplays others. The problem is that different objectives often conflict. For example, maximizing shareholder value in the short term may conflict with a long-term research and innovation agenda.

Figure 4-1. Pfizer Project Pipeline as of January 30, 2018

How much risk should an organization take? How should it protect itself against that risk? How should it balance risk-taking with its other goals? Consider the example of Pfizer, one of the world’s largest pharmaceutical companies.

Figure 4-1 shows the actual Pfizer project and product pipeline as of January 2018 and tells how many drugs are at various phases of the trial and registration process (Pfizer 2018). The development process for new drugs is rife with risks and uncertainties. Only a small percentage of medications that are pursued by researchers actually reach consumers. They all must undergo numerous tests, clinical trials, and finally a complex Food and Drug Administration (FDA) approval process. The FDA is under intensive public scrutiny and therefore is very risk-averse. Normally, if there is any indication of significant side effects, new medications do not pass the FDA approval process, an outcome that can dramatically affect the pharmaceutical company’s bottom line.

A decision policy is a set of principles or preferences used for selecting alternatives.

In the Pfizer example, different companies were willing to take on varying levels of risk depending upon their decision policy. For the most part, the decision policy regarding tolerable risk levels is motivated by a desire to create wealth; however, a business may want to achieve other objectives.

The drug-approval process, of course, does not apply to the production of other goods, such as axes or shovels. Risks and uncertainties are present in every business, but some involve much more risk than others. Designing and manufacturing garden tools has some risks, yet they are relatively much smaller—there is no Axe and Shovel Administration to approve your tool. To guide a medicine from a research proposal through the final approval requires a pharmaceutical company to invest significant time and money. Alternatively, if few potential drugs are in the pipeline, a pharmaceutical company like Pfizer can purchase a business with promising projects by paying a very hefty price.

Different companies have contrasting attitudes toward risk. Junior pharmaceutical companies are often less risk-averse than more established ones. But the established companies may have the means to pay the companies that assumed this risk.

For another example, here are the basic components of the decision policy of Don Corleone’s enterprise in Mario Puzo’s novel The Godfather. This decision policy would include a strong emphasis on:

• Profitability

• Security of its own employees, with a special concern for management

• Organizational structure, including clear definitions of roles, responsibilities, and reporting

• Fostering good relationships with the local community

And a low regard for:

• The safety of adversaries

• Legal rules and regulations

What was Don Corleone’s decision policy? That is, what was his attitude toward risk? Was he a risk-taker or a risk-averse leader?

Which Choice Is Rational?

All decision-makers try to make rational decisions. Before we can describe how you can make rational decisions, though, let’s first try to understand what the term “rationality” means.

Reid Hastie and Robyn Dawes (2009) define rationality as “compatibility between choice and value” and state that “rational behavior is behavior that maximizes the value of consequences.” Essentially, rational choice is an alternative that leads to the maximum value for the decision-maker. It is only through the prism of an organization’s decision policy that we can judge whether or not a decision is rational. Because decision policies can vary greatly, your rational decision can appear completely irrational to your friend who works in another company. This holds true not only for organizations but also for individuals, project teams, and even countries. It is very important to remember that when you work with a project team, decisions should be made based on a consistent decision policy.

Returning to our Godfather example, were Vito Corleone’s decisions rational or irrational? Our answer is that he was a rational decision-maker because, according to our definition of “rationality,” he followed the decision policy of his enterprise. In other words, he always followed the fundamental rules on which his organization was based. What this analysis does not tell us is whether, from the rest of society’s point of view, this was a good decision policy.

Expected Value

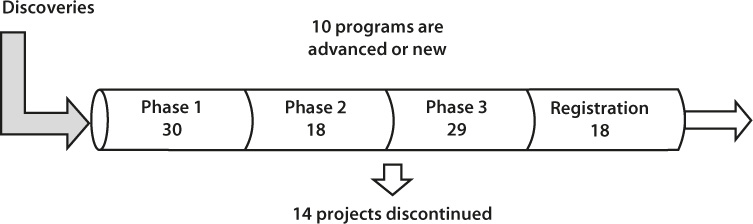

One method of evaluating multiple alternatives is to apply the concept of expected value. Let’s begin our discussion of this concept with a simple example of a decision driven by a single objective. Consider that a big pharmaceutical company has two choices:

1. It can continue to develop a drug in which it has invested $200 million in research and development. The chance that it will get FDA approval is 80%. If the drug is approved, the company will earn $800 million in profit (80% chance). But if it fails, the company will have lost the $200 million in development costs (20% chance).

2. It can buy another company that has already developed an FDA-approved drug. The estimated profit of this transaction is $500 million.

This problem can be represented by using a decision tree (figure 4-2). The procedure for calculating expected value is simple: multiply each outcome by its probability and add the results. In the first case, the company has an 80% chance (the probability that the FDA will approve the drug) of earning $800M; the contribution of this outcome is $800M × 80% = $640M. If the FDA rejects the drug (20% chance), the contribution is −$200M × 20% = −$40M. From a probabilistic viewpoint, the company can expect to receive $600M from developing its own drug: $640M − $40M = $600M.

Expected value is a probability-weighted average of all outcomes. It is calculated by multiplying each possible outcome by its probability of occurrence and then summing the results.

This number ($600M) is the expected value of the drug development project. Now we can compare this value to the alternatives. The expected value if the company develops its own drug ($600M) is higher than the profit forecasted if it buys the other company ($500M). If the objective is to maximize profit, the choice is clear: the company should develop its own drug. The arrow on figure 4-2 represents that choice. Outcomes can also be expressed as duration, cost, revenue, and so on. If the outcome is defined in monetary units, expected value is called expected monetary value, or EMV.
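The calculation above can be sketched in a few lines of Python. This is only an illustration: the function name `expected_value` and the list-of-pairs representation are assumptions made for this sketch, while the probabilities and dollar amounts (in millions) come from the example.

```python
def expected_value(outcomes):
    """Probability-weighted average of (probability, value) pairs."""
    total_probability = sum(p for p, _ in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * value for p, value in outcomes)


# Values in millions of dollars, taken from the example in the text.
develop_own_drug = [(0.80, 800.0), (0.20, -200.0)]
buy_company = [(1.00, 500.0)]

ev_develop = expected_value(develop_own_drug)  # 0.8 * 800 + 0.2 * (-200)
ev_buy = expected_value(buy_company)

# A decision-maker who only maximizes expected value picks the larger number.
best = "develop own drug" if ev_develop > ev_buy else "buy company"
print(f"develop: {ev_develop:.0f}M, buy: {ev_buy:.0f}M, choose: {best}")
```

The same function works for any number of outcomes per alternative, which is how larger decision trees are evaluated branch by branch.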

Basically, we combined two outcomes, using the probability of their occurrence. Because we cannot have a drug that at the same time is and is not approved by the FDA, expected value does not represent actual events. It is a calculation method that allows us to integrate risks and uncertainties into our decision analysis of what might happen.

In the real world, drug companies perform a quite similar process. Of course, the underlying valuation models they use are much more complex with more alternatives, risks, and uncertainties, so their decision trees can become quite large. We will cover quantitative analysis using decision trees in chapter 15.

Figure 4-2. Decision Tree

The St. Petersburg Paradox

Almost 300 years ago, the Swiss scholar Nicolas Bernoulli came up with an interesting paradox. He proposed a game, which looked something like this:

1. You toss a coin and if tails come up, you are paid $2.

2. You toss the coin a second time. If you get tails again, you are paid $4; otherwise, you get nothing and the game is over.

3. If you get tails a third time, you are paid $8; otherwise, you get nothing and the game is over.

4. The fourth time you may get $16; the fifth time, $32; and so on.

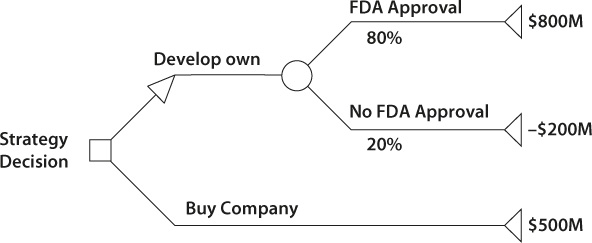

In theory, you can continue indefinitely and might win a lot of money. In fact, according to the expected-value approach, the expected winnings are infinite, as shown in table 4-1.

Table 4-1. Bernoulli’s Coin-Tossing Game

Would you play this game? In reality, most people are not willing to risk a lot of money when they play any game. If they win for a while and the payoff gets larger, it becomes less likely that they are willing to stay in the game. The paradox lies in the question of why people don’t want to try to win an infinite amount of money if it costs them nothing to try. The only thing they risk is ending up back where they were before they started the game.
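To see where the infinite expected value comes from, it may help to cap the game at a fixed number of tosses. The sketch below assumes that round k pays 2^k dollars and that the chance of winning round k (k tails in a row) is (1/2)^k, so every round adds exactly $1 to the expected value. The function name `truncated_expected_value` is made up for this illustration.

```python
from fractions import Fraction


def truncated_expected_value(max_rounds):
    """Expected payoff if the game is capped at max_rounds tosses.

    Assumption: round k pays 2**k dollars and is won with probability
    (1/2)**k, so each round contributes exactly one dollar.
    """
    return sum(
        Fraction(2**k) * Fraction(1, 2**k) for k in range(1, max_rounds + 1)
    )


for n in (1, 10, 100):
    print(n, truncated_expected_value(n))
```

Because the capped expected value equals the number of rounds allowed, it grows without bound as the cap is removed, even though a large win is extremely unlikely.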

This kind of game probably sounds familiar, for it is the basis for the popular TV show Who Wants to Be a Millionaire? You can argue that the game is not the same because the answers are not random. But unless you are a genius or an extremely lucky guesser, as the payoff grows and the questions grow more difficult, you will probably need a wild guess to win. Sometimes people want to take a chance and give a random answer, but often they don’t, so they take the money instead of continuing to play the game. People like to watch this game not only because of its interesting questions but also because of the way it highlights this particular aspect of human psychology.

In 1738 Nicolas’s cousin Daniel Bernoulli proposed a solution to this problem in a paper published in the Commentaries of the Imperial Academy of Science of Saint Petersburg, which is why the game was named the “St. Petersburg Paradox.” Bernoulli suggested that we need to take into account the value (or utility) of money, to estimate how useful this money will be to a particular person. Utility reflects the preferences of decision-makers toward various factors, including profit, loss, and risk.

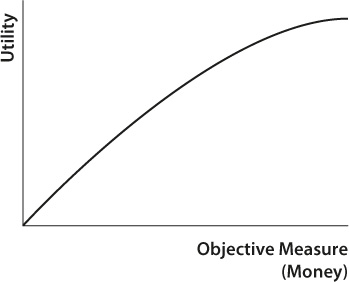

Utility is a highly subjective measure. What is the relationship between utility and objective measures, such as wealth or time? Bernoulli’s idea was that “twice as much money does not need to be twice as good.” The usefulness, or utility, of money can be represented on a graph as a curved line (figure 4-3). As you can see, the value of additional money declines as wealth increases. If you earn $1 million per year, an extra $10,000 will not be as valuable as it would be to somebody who earns $100,000 per year.

We need to be able to measure utility. The scale of the utility axis can be measured by arbitrary units called utils. It is possible to come up with a mathematical equation that defines this chart. The chart of the mathematical equation is called the utility function. The function represents a person’s or organization’s risk preferences, or their risk policy. Risk policy is the component of decision policy that reflects an attitude toward risk.
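As a rough illustration of such an equation, the sketch below uses a natural-logarithm utility function. The chapter does not specify a formula, so the logarithmic form is an assumption chosen only because it has the declining shape described above; the function names are likewise hypothetical.

```python
import math


def log_utility(wealth):
    """Utility in arbitrary utils; the natural log is an illustrative choice."""
    if wealth <= 0:
        raise ValueError("wealth must be positive")
    return math.log(wealth)


def utility_gain(wealth, extra):
    """How many utils an extra amount adds at a given level of wealth."""
    return log_utility(wealth + extra) - log_utility(wealth)


gain_at_100k = utility_gain(100_000, 10_000)   # ln(1.10)
gain_at_1m = utility_gain(1_000_000, 10_000)   # ln(1.01)

print(f"extra $10,000 at $100,000:   {gain_at_100k:.4f} utils")
print(f"extra $10,000 at $1,000,000: {gain_at_1m:.4f} utils")
```

With this assumed function, the same $10,000 adds roughly ten times fewer utils at the higher level of wealth, which matches the shape of the curve in figure 4-3.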

Figure 4-3. Utility Function

Risk-Taker versus Risk-Avoider

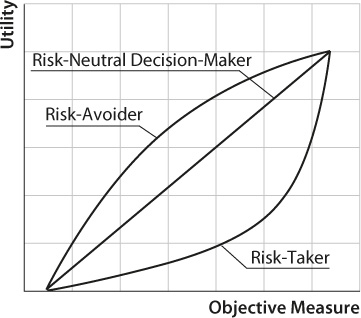

We have discussed how some companies and individuals can be risk-avoiders or risk-takers, depending on their risk policy, which is part of their general decision policy. We can now explain it using the utility function (figure 4-4).

1. A risk-avoider will have a concave utility function. Individuals or businesses purchasing insurance exhibit risk-avoidance behavior. The expected value of the project can be greater than the risk-avoider is willing to pay.

2. A risk-taker, such as a gambler, pays a premium to obtain risk. His or her utility function is convex.

3. A risk-neutral decision-maker has a linear utility function. His or her behavior can be defined by the expected value approach.

Most individuals and organizations are risk-avoiders when a certain amount of money is on the line and are risk-neutral or risk-takers for other amounts of money. This explains why the same individual will purchase both an insurance policy and a lottery ticket.
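One way to make the three attitudes concrete is to compute a certainty equivalent: the sure amount that a decision-maker values exactly as much as a gamble. The sketch below is an illustration under stated assumptions: the square-root, linear, and squared utility functions are stand-ins for concave, linear, and convex curves, and the 50/50 gamble between $0 and $100 is invented for the example.

```python
import math

# (utility function, its inverse) for three illustrative risk attitudes.
ATTITUDES = {
    "risk-avoider (concave)": (math.sqrt, lambda u: u ** 2),
    "risk-neutral (linear)": (lambda x: x, lambda u: u),
    "risk-taker (convex)": (lambda x: x ** 2, math.sqrt),
}


def certainty_equivalent(gamble, utility, inverse_utility):
    """Sure amount whose utility equals the gamble's expected utility."""
    expected_utility = sum(p * utility(x) for p, x in gamble)
    return inverse_utility(expected_utility)


gamble = [(0.5, 0.0), (0.5, 100.0)]  # expected value is 50

results = {
    name: certainty_equivalent(gamble, u, inv)
    for name, (u, inv) in ATTITUDES.items()
}
for name, amount in results.items():
    print(f"{name}: {amount:.2f}")
```

Under these assumptions the risk-avoider values the gamble below its expected value of 50, the risk-neutral decision-maker values it at exactly 50, and the risk-taker values it above 50.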

An example of an ultimate risk-taker is the fictional James Bond. When the world is about to be taken over by a villain, who are we most likely to see jumping into a shark-infested pond with only swim trunks and a pocketknife to save the day? Bond, James Bond, that’s who. According to Bond’s risk policy, the more dangerous the situation, the more likely he is to take the risk.

Figure 4-4. Using the Utility Function to Depict Risk Behavior

Expected Utility

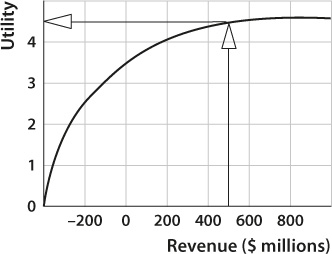

A utility function can be used in conjunction with quantitative analysis to reflect a risk policy when selecting particular alternatives. Let’s try to apply the utility function to the strategic decision faced by the pharmaceutical company we discussed above (see the decision tree shown in figure 4-2).

The risk policy of the pharmaceutical company is represented by the utility function in figure 4-5. Using this chart, we can get a utility associated with each outcome. Arrows on the chart show how to get utility for the “buy company” outcome. The utility of the “buy” outcome of $500M ($500 million) equals 4.5. However, in most cases it is better to use a mathematical formula for the utility function rather than the chart.

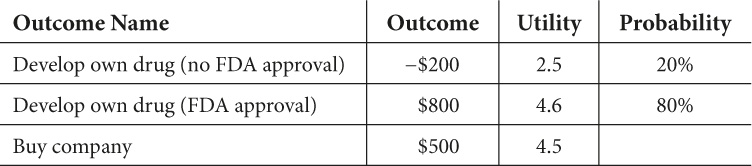

Table 4-2 shows the results of each outcome.

Expected utility is similar to expected value, except instead of using the outcomes, it uses utilities associated with these outcomes. Expected utility is calculated by multiplying the utility associated with each possible outcome by the probability of occurrence and then adding the results. In our case the expected utility of the “develop own drug” alternative equals 0.50 (no approval outcome) + 3.68 (FDA approval outcome) = 4.18. The utility for the “buy company” alternative is 4.5. So, based on utility theory, we should buy the company.

In this example we see an interesting phenomenon: using the expected value approach, the company should select the “develop own drug” alternative. Using the expected utility approach, the company should select the “buy company” alternative. Why? This company is risk-averse, and the expected utility approach incorporated the company’s risk profile. The company does not want to accept the risk associated with the potential loss that could occur if it attempted to develop its own drug.
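The comparison can be reproduced with a short sketch. The utility of 4.5 for the $500M outcome is taken from the text. The utilities 4.6 and 2.5 are not stated directly; they are back-calculated here from the weighted values 3.68 and 0.50 (3.68 / 0.8 and 0.50 / 0.2), so treat them as an assumption about what table 4-2 contains.

```python
# Each alternative is a list of (probability, utility) pairs.
# 4.5 is the utility given in the text for the $500M outcome;
# 4.6 and 2.5 are back-calculated from the weighted values 3.68 and 0.50.
develop_own_drug = [(0.80, 4.6), (0.20, 2.5)]
buy_company = [(1.00, 4.5)]


def expected_utility(alternative):
    """Sum of each outcome's utility weighted by its probability."""
    return sum(p * u for p, u in alternative)


eu_develop = expected_utility(develop_own_drug)  # 3.68 + 0.50
eu_buy = expected_utility(buy_company)

best = "develop own drug" if eu_develop > eu_buy else "buy company"
print(f"develop: {eu_develop:.2f}, buy: {eu_buy:.2f}, choose: {best}")
```

The structure is identical to an expected value calculation; only the numbers attached to the outcomes change, and that is enough to reverse the recommendation.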

Expected Utility Theory

Euclid, the Greek philosopher and mathematician, developed the postulates for plane geometry. They include all the necessary assumptions that apply in plane geometry, from which the entire theory was derived. John von Neumann and Oskar Morgenstern attempted to do a similar thing in decision science.

Table 4-2. Calculation of Expected Utility

Figure 4-5. Using the Utility Function to Select an Alternative

In their book Theory of Games and Economic Behavior, von Neumann and Morgenstern proposed the expected utility theory, which describes how people should behave when they make rational choices. They provided a set of assumptions, or axioms, which would be the rules of rational decision-making. To a certain extent, they were trying to build a logical foundation beneath decision analysis theory. Their book is full of mathematical equations. To avoid mathematical discussions, we will cover just a few of their basic ideas:

Axioms of the expected utility theory are conditions of desirable choices, not descriptions of actual behavior.

1. Decision-makers should be able to compare any two alternatives based on their outcomes.

2. Rational decision-makers should select alternatives that lead to maximized value in at least one aspect. For example, project A and project B have the same cost and duration, but the success rate of A is greater than the success rate of B. Therefore, project A should be selected.

3. If you prefer a 10-week project as opposed to a 5-week project, and a 5-week project to a 3-week project, you therefore should prefer a 10-week project to a 3-week project.

4. Alternatives that have the same outcomes will cancel each other out. Choices must be based on outcomes that are different. If project A and project B have the same cost and duration but different success rates, the selection between the two projects should be based on the success rate—the only differing factor.

5. Decision-makers should always prefer a gamble between the best and worst outcomes to a sure intermediate outcome, provided the odds of the best outcome are good enough. If project A has guaranteed revenue of $100 and project B has two possible outcomes (a complete disaster or revenue of $1,000), you should select project B if the chance of a complete disaster is 1/100000000000. . . .

Some of these axioms are just common sense, yet they are the necessary building blocks of decision theory. Von Neumann and Morgenstern mathematically proved that violation of these principles would lead to irrational decisions.
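The third axiom in the list (if A is preferred to B and B to C, then A should be preferred to C) is easy to check mechanically. The sketch below is a hypothetical helper, not something taken from von Neumann and Morgenstern; it represents stated preferences as a set of (better, worse) pairs and reports every chain that breaks the rule.

```python
from itertools import permutations


def transitivity_violations(prefers):
    """Return triples (a, b, c) where a > b and b > c but a > c is missing.

    prefers is a set of (better, worse) pairs.
    """
    items = {x for pair in prefers for x in pair}
    return [
        (a, b, c)
        for a, b, c in permutations(items, 3)
        if (a, b) in prefers and (b, c) in prefers and (a, c) not in prefers
    ]


# The preferences from the example in axiom 3.
consistent = {
    ("10-week", "5-week"),
    ("5-week", "3-week"),
    ("10-week", "3-week"),
}

# A circular set of preferences that no ranking can satisfy.
cyclic = {
    ("10-week", "5-week"),
    ("5-week", "3-week"),
    ("3-week", "10-week"),
}

print(transitivity_violations(consistent))
print(len(transitivity_violations(cyclic)))
```

The first set produces no violations, while the circular set fails at every link in the cycle.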

Extensions of Expected Utility Theory

A number of scientists have suggested that the axioms of von Neumann and Morgenstern unreasonably constrain the manner in which we make choices. In reality, people don’t always follow the axioms, for a number of reasons:

• Often, people make rational decisions, but not as von Neumann and Morgenstern conceived them. They may not have complete or perfect information about the alternatives. In addition, people often cannot estimate the advantages and disadvantages of each alternative.

• Even if people make rational decisions, there are still a number of exceptions to the axioms.

In spite of these and other observations, since the publication of their book scientists have continued to come up with explanations for the expected utility theory and have developed a number of extensions to it. As a result, currently there is no single, accepted expected utility theory. Expected utility theory is now a family of theories explaining rational behavior.

Leonard Savage (1954) focused his extension of expected utility theory on subjective probabilities of outcomes. For example, he concluded that if a type of project has never been done, it is therefore hard to determine the probability of the project’s success. However, according to Savage’s theory, it makes sense to consider subjectively defined probability.

How to Use Expected Utility Theory

The axioms of expected utility theory were intended to serve as the definition of a rational choice. In reality, project managers will likely never use the set of axioms directly. If you need to select a subcontractor for a project, the last thing you are going to do is open the von Neumann and Morgenstern book, peruse a dozen pages of mathematical equations, and after a couple of weeks of analysis come up with your conclusion (which still has a chance of being wrong!). Doing such a thing would in itself be absolutely irrational.

When you take a measurement of a building’s wall, you don’t think about Euclid’s axioms, but you do use methods and techniques derived from his axioms. It is the same situation with expected utility theory when you are attempting to understand how to best maximize the value of your projects:

• You may read about best practices in one of the many project management books available.

• Your consultant may recommend some useful project management techniques.

• You may use the quantitative methods described in this book.

• You may try to avoid psychological traps when you make decisions.

Keep in mind that there is a foundation for all these activities. It is called decision science, of which the expected utility theory is one of the fundamental components.

Target-Oriented Interpretation of Utility

What is target-oriented utility, and how could that approach help project managers? The goal of project management is to meet certain targets or benchmarks that clients set. For example, the goal is to develop software within a certain deadline and budget. At the same time, the client has not provided detailed requirements for the software. In other words, the target is uncertain, and so is the probability of achieving it. It is the job of project managers to increase the probability that this goal is met. Under the target-oriented interpretation, maximizing expected utility is equivalent to maximizing the probability of meeting certain targets, so the axioms of expected utility theory also imply that project managers should select actions that maximize the probability of meeting an uncertain target (Castagnoli and LiCalzi 1996; Bordley and LiCalzi 2000; Bier and Kosanoglu 2015; Bordley et al. 2015). In the target-oriented approach, utility assessment is focused on identification of benchmarks or targets, which are easier to identify and track. It can be done using methods similar to what we will describe in chapter 13 (Bordley 2017).
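A small numeric sketch may make the equivalence easier to see. Everything in it is hypothetical: the deadline distribution, the two plans, and the assumption that the project duration and the client's deadline are independent. The utility of a duration is defined as the probability that the uncertain deadline is at least that long, and the expected utility of a plan then equals its probability of meeting the deadline.

```python
from fractions import Fraction as F

# Hypothetical distribution of the client's uncertain deadline, in weeks.
deadline = {10: F(1, 4), 12: F(1, 2), 14: F(1, 4)}

# Hypothetical duration distributions for two candidate plans, in weeks.
plans = {
    "plan A": {9: F(1, 2), 13: F(1, 2)},
    "plan B": {11: F(3, 4), 15: F(1, 4)},
}


def target_utility(duration):
    """Utility of a duration: probability the deadline is at least that long."""
    return sum(p for t, p in deadline.items() if t >= duration)


def expected_utility(plan):
    return sum(p * target_utility(d) for d, p in plan.items())


def probability_of_meeting_target(plan):
    """Direct enumeration, assuming duration and deadline are independent."""
    return sum(
        pd * pt
        for d, pd in plan.items()
        for t, pt in deadline.items()
        if d <= t
    )


for name, plan in plans.items():
    print(name, expected_utility(plan), probability_of_meeting_target(plan))
```

Both calculations give the same number for each plan, so ranking the plans by expected utility and ranking them by the chance of hitting the target lead to the same choice.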

Descriptive Models of Decision-Making

Von Neumann and Morgenstern considered expected utility theory to be a normative theory of behavior. As we noted in chapter 1, normative theories do not explain the actual behavior of people; instead, they describe how people should behave when they make rational choices.

Psychologists have attempted to come up with descriptive models that explain the actual behavior of people. Herbert Simon proposed that people search for alternatives to satisfy certain needs rather than to maximize utility functions (Simon 1956). As a project manager, you probably will not review all your previous projects to select the subcontractor that seems to have the highest overall utility for your project. Instead, you will choose one that best satisfies your immediate requirements: quality of work done, reliability, price, and so on.
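The difference between this kind of search and utility maximization can be sketched as follows. This is one simple reading of Simon's idea, in which the decision-maker accepts the first alternative that meets every requirement; the subcontractor data, thresholds, and function name are all invented for illustration.

```python
def satisfice(candidates, requirements):
    """Return the first candidate that meets every requirement, or None."""
    for candidate in candidates:
        if all(check(candidate) for check in requirements):
            return candidate
    return None


# Hypothetical subcontractors, in the order they happen to be considered.
subcontractors = [
    {"name": "A", "quality": 6, "reliability": 9, "price": 80},
    {"name": "B", "quality": 8, "reliability": 8, "price": 95},
    {"name": "C", "quality": 9, "reliability": 9, "price": 90},
]

# Hypothetical minimum requirements for the project.
requirements = [
    lambda s: s["quality"] >= 7,
    lambda s: s["reliability"] >= 7,
    lambda s: s["price"] <= 100,
]

chosen = satisfice(subcontractors, requirements)
print(chosen["name"])
```

In this made-up data the search stops at subcontractor B, even though C scores better on every attribute, because B is already good enough and no further comparison is made.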

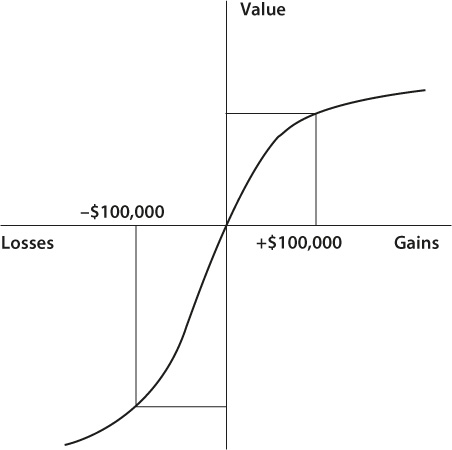

Daniel Kahneman and Amos Tversky developed a theory of behavior, which they called prospect theory, that helps to explain a number of psychological phenomena (Kahneman and Tversky 1979). They argued that a person’s attitude toward risk when facing gains is different from his or her attitude toward risk when facing losses. Here is a question for you as a project manager. Would you prefer:

A. A project with an absolutely certain NPV of $100,000?

B. A project with a 50% chance of an NPV of $200,000?

Most likely you would select A. But let’s reframe the question. Which would you prefer between these two:

A. A sure loss of $100,000?

B. A 50% chance of a loss of $200,000?

Most likely, you would prefer option B. At least, this was the most frequent answer from the people who participated in a survey.

This “loss aversion” effect can be demonstrated by using a chart (figure 4-6). Kahneman and Tversky replaced the notion of utility with value, which they defined in terms of gains and losses. The value function on the gain side is similar to the utility function (figure 4-3). But the value function also includes a steeper curve for losses on the left side compared with the curve for gains. Using the chart in figure 4-6, you can see that a loss of $100,000 is felt much more strongly than a gain of $100,000.

Unlike expected utility theory, prospect theory predicts that people make risk-averse choices if the expected outcome is positive, but make risk-seeking choices to avoid negative outcomes. Everything depends on how choices are framed or presented to the decision-maker.
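The two survey questions can be worked through with a simple value function. The sketch below is an illustration, not the authors' calculation: the power-function form and the parameters 0.88 and 2.25 are assumptions borrowed from Tversky and Kahneman's later (1992) estimates, probability weighting is ignored, and the unstated alternative in each option B is assumed to be an outcome of zero.

```python
ALPHA = 0.88   # curvature of the value function (assumed parameter)
LAMBDA = 2.25  # loss-aversion multiplier (assumed parameter)


def value(x):
    """Prospect-theory style value of a gain (x >= 0) or a loss (x < 0)."""
    if x >= 0:
        return x ** ALPHA
    return -LAMBDA * (-x) ** ALPHA


def prospect_value(prospect):
    """Probability-weighted value; probability weighting is ignored here."""
    return sum(p * value(x) for p, x in prospect)


sure_gain = [(1.0, 100_000)]
risky_gain = [(0.5, 200_000), (0.5, 0)]   # assumes the other 50% yields zero
sure_loss = [(1.0, -100_000)]
risky_loss = [(0.5, -200_000), (0.5, 0)]  # assumes the other 50% yields zero

print("gains: prefer sure thing?", prospect_value(sure_gain) > prospect_value(risky_gain))
print("losses: prefer gamble?", prospect_value(risky_loss) > prospect_value(sure_loss))
print("loss vs gain of 100,000:", abs(value(-100_000)) / value(100_000))
```

With these assumed parameters the sure gain is valued above the gamble, the gamble is valued above the sure loss, and a loss weighs 2.25 times as much as a gain of the same size.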

Another interesting phenomenon explained by prospect theory is called the endowment effect, which asserts that “once something is given to me, it is mine.” People place a higher value on objects they own than on objects they do not. In project management, these can be resources. It explains why most project managers continue to use materials they have purchased, even though a better material may be available.

Figure 4-6. Value Function in the Prospect Theory

Prospect theory also explains zero-risk bias, which is the preference for reducing a small risk to zero, rather than achieving a greater reduction in a larger risk. Knowing about this bias is important in project risk analysis, because project managers usually prefer to eliminate smaller risks rather than expend effort to mitigate more critical risks.
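A quick expected-loss comparison shows why this bias can be costly. The two risks below are hypothetical, and expected loss (probability times impact) is used as a simple yardstick; it is an assumption of this sketch rather than a method prescribed in the chapter.

```python
def expected_loss(probability, impact):
    """Probability-weighted cost of a risk."""
    return probability * impact


def reduction(risk):
    """Drop in expected loss achieved by a mitigation."""
    before, after, impact = risk
    return expected_loss(before, impact) - expected_loss(after, impact)


# Hypothetical risks: (probability before, probability after, impact in dollars).
small_risk = (0.01, 0.00, 100_000)  # eliminated completely
large_risk = (0.30, 0.20, 100_000)  # reduced, but not eliminated

print(f"eliminating the small risk saves {reduction(small_risk):,.0f}")
print(f"reducing the large risk saves    {reduction(large_risk):,.0f}")
```

In this example, eliminating the small risk feels more satisfying because it drops to zero, yet the partial reduction of the larger risk removes far more expected loss.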