Another way of preventing overfitting is regularization. Recall that unnecessary model complexity is a source of overfitting. Just as cross-validation is a general technique to fight overfitting, regularization fights it by adding an extra penalty term to the error function we are trying to minimize, so that complex models are penalized.

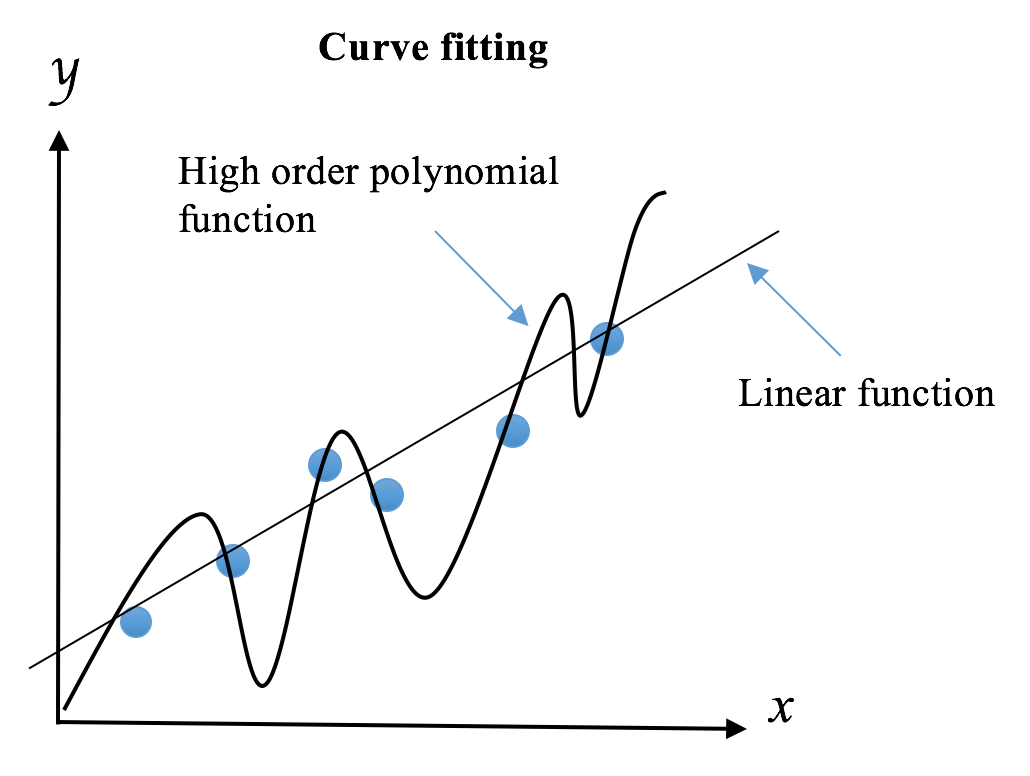

According to the principle of Occam’s Razor, simpler methods are to be favored. William of Ockham (often spelled Occam) was a monk and philosopher who, around 1320, came up with the idea that the simplest hypothesis that fits the data should be preferred. One justification is that we can invent fewer simple models than complex models. For instance, intuitively, we know that there are more high-order polynomial models than linear ones. The reason is that a line (y = ax + b) is governed by only two parameters: the intercept b and the slope a. The possible coefficients for a line span a two-dimensional space. A quadratic polynomial adds a coefficient for the quadratic term, so its coefficients span a three-dimensional space. Therefore, it is much easier to find a model that perfectly captures all the training data points with a high-order polynomial function, as its search space is much larger than that of a linear model. However, these easily obtained models are more prone to overfitting and generalize worse than linear models. And of course, simpler models require less computation time. The figure in this section shows how a linear function and a high-order polynomial function are each fitted to the same data.

The linear model is preferable as it may generalize better to more data points drawn from the underlying distribution. We can use regularization to reduce the influence of the high-order terms of the polynomial by imposing penalties on them. This discourages complexity, even though a less accurate and less strict rule is learned from the training data.
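To make the penalty idea concrete, here is a minimal sketch rather than a definitive implementation. It assumes an L2 (ridge) penalty on every weight except the intercept, synthetic data drawn around the line y = 2x + 1, and a hypothetical helper named `fit_polynomial`; none of these choices come from the text above.

```python
import numpy as np

def fit_polynomial(x, y, degree, penalty=0.0):
    """Fit a polynomial by least squares with an optional L2 penalty.

    Minimizes ||X w - y||^2 + penalty * ||w[1:]||^2, leaving the
    intercept w[0] unpenalized. (Hypothetical helper for illustration.)
    """
    X = np.vander(x, degree + 1, increasing=True)  # columns: 1, x, x^2, ...
    P = np.sqrt(penalty) * np.eye(degree + 1)      # penalty rows
    P[0, 0] = 0.0                                  # do not penalize the intercept
    # Solving the stacked system is equivalent to the penalized problem.
    A = np.vstack([X, P])
    b = np.concatenate([y, np.zeros(degree + 1)])
    w, *_ = np.linalg.lstsq(A, b, rcond=None)
    return w

def train_mse(x, y, w):
    X = np.vander(x, len(w), increasing=True)
    return np.mean((X @ w - y) ** 2)

# Assumed synthetic data: a noisy line.
rng = np.random.default_rng(0)
x = np.linspace(-1, 1, 10)
y = 2 * x + 1 + rng.normal(scale=0.3, size=x.size)

w_plain = fit_polynomial(x, y, degree=9)               # no penalty
w_ridge = fit_polynomial(x, y, degree=9, penalty=1.0)  # with penalty

print("weight norm without penalty:", np.linalg.norm(w_plain[1:]))
print("weight norm with penalty:   ", np.linalg.norm(w_ridge[1:]))
print("training MSE without penalty:", train_mse(x, y, w_plain))
print("training MSE with penalty:   ", train_mse(x, y, w_ridge))
```

The penalized fit should end up with smaller weights and a somewhat higher training error, which is the trade-off described above: a less strict rule on the training data in exchange for lower complexity.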

We will employ regularization quite often starting from Chapter 6, Click-Through Prediction with Logistic Regression. For now, let’s look at the following analogy, which will help us understand it better:

A data scientist wants to equip his robotic guard dog with the ability to tell strangers from his friends. He feeds it the following learning samples:

| Gender | Age | Height | Glasses | Clothing | Label |
| --- | --- | --- | --- | --- | --- |
| Male | Young | Tall | With glasses | In grey | Friend |
| Female | Middle | Average | Without glasses | In black | Stranger |
| Male | Young | Short | With glasses | In white | Friend |
| Male | Senior | Short | Without glasses | In black | Stranger |
| Female | Young | Average | With glasses | In white | Friend |
| Male | Young | Short | Without glasses | In red | Friend |

The robot may quickly learn the following rules: any middle-aged female of average height without glasses and dressed in black is a stranger; any senior short male without glasses and dressed in black is a stranger; anyone else is his friend. Although these rules perfectly fit the training data, they seem too complicated and are unlikely to generalize well to new visitors. Suppose instead that the data scientist limits how many aspects the robot may learn from. A looser rule that can work well for hundreds of other visitors could then be: anyone without glasses and dressed in black is a stranger.
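The two sets of rules can be checked against the six learning samples with a short sketch. The function names `complex_rule`, `simple_rule`, and `accuracy`, the boolean encoding of the glasses column, and the extra visitor at the end are all hypothetical choices made for illustration.

```python
# Learning samples from the table:
# (gender, age, height, wears_glasses, clothing, label)
samples = [
    ("male", "young", "tall", True, "grey", "friend"),
    ("female", "middle", "average", False, "black", "stranger"),
    ("male", "young", "short", True, "white", "friend"),
    ("male", "senior", "short", False, "black", "stranger"),
    ("female", "young", "average", True, "white", "friend"),
    ("male", "young", "short", False, "red", "friend"),
]

def complex_rule(gender, age, height, wears_glasses, clothing):
    """Memorizes the two exact stranger profiles seen in training."""
    profile = (gender, age, height, wears_glasses, clothing)
    if profile == ("female", "middle", "average", False, "black"):
        return "stranger"
    if profile == ("male", "senior", "short", False, "black"):
        return "stranger"
    return "friend"

def simple_rule(gender, age, height, wears_glasses, clothing):
    """Looser rule: no glasses and dressed in black means stranger."""
    return "stranger" if (not wears_glasses and clothing == "black") else "friend"

def accuracy(rule, data):
    """Fraction of samples whose label the rule reproduces."""
    hits = sum(rule(*features) == label for *features, label in data)
    return hits / len(data)

print("complex rule accuracy:", accuracy(complex_rule, samples))
print("simple rule accuracy: ", accuracy(simple_rule, samples))

# A made-up visitor that matches neither memorized profile.
new_visitor = ("female", "young", "tall", False, "black")
print("complex rule says:", complex_rule(*new_visitor))
print("simple rule says: ", simple_rule(*new_visitor))
```

Both rules reproduce every training label, so the training data alone cannot tell them apart. They disagree only on visitors outside the training set, which is where the simpler rule is expected to hold up better.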

Besides penalizing complexity, we can also stop a training procedure early as a form of regularization. If we limit the time a model spends learning, or set some internal stopping criteria, it is more likely to produce a simpler model. The model complexity is controlled in this way, and hence overfitting becomes less probable. This approach is called early stopping in machine learning.
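One common way to set such a stopping criterion is to watch the error on a held-out validation set and stop once it has not improved for a while. The sketch below assumes plain gradient descent on a degree-9 polynomial model, synthetic data, and a hypothetical `patience` parameter; it illustrates the idea and is not taken from a specific library.

```python
import numpy as np

def train_with_early_stopping(X_train, y_train, X_val, y_val,
                              lr=0.05, max_epochs=5000, patience=20):
    """Gradient descent on squared error that stops when the validation
    loss has not improved for `patience` consecutive epochs."""
    w = np.zeros(X_train.shape[1])
    best_w, best_val_loss = w.copy(), np.inf
    epochs_without_improvement = 0
    epochs_run = 0
    for _ in range(max_epochs):
        epochs_run += 1
        residual = X_train @ w - y_train
        gradient = 2 * X_train.T @ residual / len(y_train)
        w -= lr * gradient
        val_loss = np.mean((X_val @ w - y_val) ** 2)
        if val_loss < best_val_loss:
            # Remember the best weights seen so far.
            best_w, best_val_loss = w.copy(), val_loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # internal stopping criterion reached
    return best_w, best_val_loss, epochs_run

# Assumed synthetic data: a noisy line, modeled with degree-9 features.
rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, size=30)
y = 2 * x + 1 + rng.normal(scale=0.3, size=30)
X = np.vander(x, 10, increasing=True)
X_train, y_train = X[:20], y[:20]
X_val, y_val = X[20:], y[20:]

best_w, best_val_loss, epochs_run = train_with_early_stopping(
    X_train, y_train, X_val, y_val)
print("epochs run:", epochs_run)
print("best validation MSE:", best_val_loss)
```

The function returns the weights from the best validation epoch rather than the last one, so the extra `patience` epochs spent waiting do not affect the final model.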

Last but not least, it is worth noting that regularization should be kept at a moderate level or, to be more precise, fine-tuned to an optimal level. Regularization that is too small does not have any impact; regularization that is too large will result in underfitting, as it moves the model away from the ground truth. We will explore how to achieve the optimal regularization mainly in Chapter 6, Click-Through Prediction with Logistic Regression, and Chapter 7, Stock Price Prediction with Regression Algorithms.
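The effect of the regularization level can be seen in a deliberately tiny sketch. It assumes a one-weight model y = w * x with an L2 penalty, for which the minimizer has a simple closed form, and made-up data lying close to y = 3x. The helper names `ridge_slope` and `mse` are hypothetical.

```python
def ridge_slope(xs, ys, penalty):
    """Closed-form minimizer of sum((w*x - y)^2) + penalty * w^2."""
    numerator = sum(x * y for x, y in zip(xs, ys))
    denominator = sum(x * x for x in xs) + penalty
    return numerator / denominator

def mse(xs, ys, w):
    """Mean squared error of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Made-up illustrative data, roughly y = 3x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.1, 5.9, 9.2, 11.8]

for penalty in (0.0, 1e-6, 1.0, 1e4):
    w = ridge_slope(xs, ys, penalty)
    print(f"penalty={penalty:g}  slope={w:.4f}  training MSE={mse(xs, ys, w):.4f}")
```

A tiny penalty leaves the slope essentially unchanged, while a very large one pushes it toward zero and the model underfits even the training data. In practice the level in between is usually chosen by comparing errors on held-out data rather than on the training set.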