10.4 Count Data and Overdispersion

The examples in the previous sections of this chapter involved categorical data. Generalized linear models are useful for other types of non-normal data as well. Many studies, for example, use counts as the primary response variable. This section presents a typical example.

Historically, statistical theory has placed counting processes in a framework that implies a Poisson distribution. However, recent work, particularly in biological settings such as agriculture, ecology, and environmental science, suggests that the Poisson distribution is often inappropriate for counts. The Poisson assumes that the mean and variance are equal. In biological settings, the birds, insects, plants, and so forth being counted tend to be more aggregated—that is, clustered together—than you would expect under the Poisson model. Thus, the variance tends to be larger than the mean, in many cases much larger. Overdispersion is said to occur when the variance is larger than expected under a given model.

There are two approaches to overdispersion in GzLMs. First, you can use the GzLM assuming a Poisson distribution, but adjust standard errors and test statistics, as suggested by McCullagh and Nelder (1989). Alternatively, you can use a different distribution. For example, agricultural and ecological count data are often accurately characterized by the negative binomial distribution (Young and Young 1998).

This section uses an example of insect count to illustrate the main features of count models. Section 10.4.1 shows the standard Poisson model with no adjustments. Section 10.4.2 shows some basic model-checking procedures you can do with PROC GENMOD. Section 10.4.3 shows you how to do the overdispersion correction suggested by McCullagh and Nelder (1989). Section 10.4.4 presents an alternative model assuming the negative binomial and shows you how to fit it using PROC GENMOD.

10.4.1 An Insect Count Example

Output 10.24 contains data from an insect control experiment. The treatment design consisted of an untreated control and a 3×3 factorial for a total of ten treatments. The experiment was conducted as a randomized-complete-blocks design with four blocks. The response variable was insect count. TRT 0 is the control. The variable CTL_TRT is coded 0 for control and 1 otherwise. The two treatment factors, A and B, have three levels each (1, 2, and 3) and are both coded 0 for the control.

Output 10.24 Insect Count Data

| Obs | BLOCK | TRT | CTL_TRT | A | B | COUNT |

| 1 | 1 | 0 | 0 | 0 | 0 | 16 |

| 2 | 1 | 1 | 1 | 1 | 1 | 6 |

| 3 | 1 | 2 | 1 | 1 | 2 | 2 |

| 4 | 1 | 3 | 1 | 1 | 3 | 4 |

| 5 | 1 | 4 | 1 | 2 | 1 | 5 |

| 6 | 1 | 5 | 1 | 2 | 2 | 3 |

| 7 | 1 | 6 | 1 | 2 | 3 | 1 |

| 8 | 1 | 7 | 1 | 3 | 1 | 1 |

| 9 | 1 | 8 | 1 | 3 | 2 | 3 |

| 10 | 1 | 9 | 1 | 3 | 3 | 1 |

| 11 | 2 | 0 | 0 | 0 | 0 | 25 |

| 12 | 2 | 1 | 1 | 1 | 1 | 9 |

| 13 | 2 | 2 | 1 | 1 | 2 | 6 |

| 14 | 2 | 3 | 1 | 1 | 3 | 3 |

| 15 | 2 | 4 | 1 | 2 | 1 | 3 |

| 16 | 2 | 5 | 1 | 2 | 2 | 4 |

| 17 | 2 | 6 | 1 | 2 | 3 | 5 |

| 18 | 2 | 7 | 1 | 3 | 1 | 2 |

| 19 | 2 | 8 | 1 | 3 | 2 | 2 |

| 20 | 2 | 9 | 1 | 3 | 3 | 0 |

| 21 | 3 | 0 | 0 | 0 | 0 | 5 |

| 22 | 3 | 1 | 1 | 1 | 1 | 2 |

| 23 | 3 | 2 | 1 | 1 | 2 | 14 |

| 24 | 3 | 3 | 1 | 1 | 3 | 5 |

| 25 | 3 | 4 | 1 | 2 | 1 | 3 |

| 26 | 3 | 5 | 1 | 2 | 2 | 6 |

| 27 | 3 | 6 | 1 | 2 | 3 | 17 |

| 28 | 3 | 7 | 1 | 3 | 1 | 2 |

| 29 | 3 | 8 | 1 | 3 | 2 | 3 |

| 30 | 3 | 9 | 1 | 3 | 3 | 2 |

| 31 | 4 | 0 | 0 | 0 | 0 | 9 |

| 32 | 4 | 1 | 1 | 1 | 1 | 22 |

| 33 | 4 | 2 | 1 | 1 | 2 | 4 |

| 34 | 4 | 3 | 1 | 1 | 3 | 5 |

| 35 | 4 | 4 | 1 | 2 | 1 | 2 |

| 36 | 4 | 5 | 1 | 2 | 2 | 3 |

| 37 | 4 | 6 | 1 | 2 | 3 | 1 |

| 38 | 4 | 7 | 1 | 3 | 1 | 3 |

| 39 | 4 | 8 | 1 | 3 | 2 | 4 |

| 40 | 4 | 9 | 1 | 3 | 3 | 9 |

As mentioned above, historically, the Poisson distribution has been assumed for count data. Using the Poisson canonical link, a GLM for these data is

log(λij) = μ + γi + τj

where

| λij | is the mean count for the ith block (i = 1, 2, 3, 4) and jth treatment (j = 0, 1,...,9). |

| μ | is the intercept. |

| γi | is the ith block effect. |

| τj | is the jth treatment effect. |

The treatment effect can be expanded to account for control versus treated and the factorial treatment design. You partition it as

τjkl = δj + τk + β?l + (τβ)kl

where

| δj | is the contrast between control and treated (j = 0, 1). |

| τk | is the main effect of the kth level of factor A (k = 1, 2, 3). |

| βl | is the main effect of the lth level of factor B (k = 1, 2, 3). |

| (τβ)kl | is the klth A×B interaction effect. |

Use the following SAS statements to compute the analysis:

proc genmod data=a;

class block ctl_trt a b;

model count=block ctl_trt a b a*b/dist=poisson type1

type3;

The MODEL statement allows you to partition the treatment effects according to the model for τjkl given above. The LINK= option is omitted, but the DIST=POISSON statement causes the canonical link for the Poisson—the log link—to be fitted by default. Output 10.25 shows the results of the Type 1 and Type 3 likelihood ratio tests. These results are discussed below. The goodness-of-fit statistics are shown in Section 10.4.2.

Output 10.25 The First Version of the GENMOD Analysis of the Poisson Model for Insect Count Data

LR Statistics For Type 1 Analysis

| Source | Deviance | DF | Chi- Square |

Pr < ChiSq |

| Intercept | 175.0974 | |||

| CTL_TRT | 135.1948 | 1 | 39.90 | <.0001 |

| BLOCK | 130.4411 | 3 | 4.75 | 0.1907 |

| A | 107.5545 | 2 | 22.89 | <.0001 |

| B | 107.0454 | 2 | 0.51 | 0.7753 |

| A*B | 93.9652 | 4 | 13.08 | 0.0109 |

LR Statistics For Type 3 Analysis

| Source | DF | Chi- Square |

Pr > ChiSq |

| CTL_TRT | 0 | 0.00 | . |

| BLOCK | 3 | 4.75 | 0.1907 |

| A | 2 | 19.40 | <.0001 |

| B | 2 | 0.13 | 0.9394 |

| A*B | 4 | 13.08 | 0.0109 |

You could put only BLOCK and TRT in the CLASS and MODEL statements and use the CONTRAST statements to compute tests for the (CTL) versus (TRT), the main effects of A and B, and the A×B interaction. The SAS program is then

proc genmod data=a;

class block trt;

model count=block trt/dist=poisson type1 type3;

contrast ‘ctl vs trt’ trt 9 -1 -1 -1 -1 -1 -1 -1 -1 -1;

contrast ‘a’ trt 0 1 1 1 0 0 0 -1 -1 -1,

trt 0 0 0 0 1 1 1 -1 -1 -1;

contrast ‘b’ trt 0 1 0 -1 1 0 -1 1 0 -1,

trt 0 0 1 -1 0 1 -1 0 1 -1;

contrast ‘a x b’ trt 0 1 0 -1 0 0 0 -1 0 1,

trt 0 0 0 0 1 0 -1 -1 0 1,

trt 0 0 1 -1 0 0 0 0 -1 1,

trt 0 0 0 0 0 1 -1 0 -1 1;

Using A, B, and A*B in the MODEL statement as shown in the first program above is clearly more convenient than writing the equivalent contrasts. The results of the likelihood ratio tests for the contrasts in the second program appear in Output 10.26.

Output 10.26 Texts of Contrasts, the Second Version of the GENMOD Analysis of the Poisson Model for Insect Data

Contrast Results

| Contrast | DF | Chi- Square |

Pr > ChiSq | Type |

| ctl vs trt | 1 | 45.86 | <.0001 | LR |

| a | 2 | 19.40 | <.0001 | LR |

| b | 2 | 0.13 | 0.9394 | LR |

| a x b | 4 | 13.08 | 0.0109 | LR |

You can see that the TYPE3 likelihood ratio χ2 statistics for A, B, and A*B in Output 10.25 are the same as you get when you use TRT in the model and write contrasts, shown in Output 10.26. The χ2 statistic for A*B is 13.08 with a p-value of 0.0109, strong evidence of an A×B interaction.

The situation is not quite so simple for CTL_TRT. In Output 10.25, the Type I likelihood ratio χ2 statistic is 39.90 with a p-value <0.0001. This statistic tests H0:δ0 =δ1, using the partitioned form of the model for τjkl given above. The TYPE3 χ2 statistic for CTL_TRT is 0 with 0 DF. This is because there are 0 levels of A and B, and the CTL_TRT effect is confounded with the 0 versus other levels of A and B comparisons. Hence, there are 0 degrees of freedom left for CTL_TRT once adjusted for A and B. Therefore, if you use the approach shown in Output 10.25, you must use the TYPE1 test for CTL_TRT, rather than the Type 3, and CTL_TRT must appear before A, B, and A*B in the MODEL statement. In Output 10.26, the control versus treated CONTRAST tests H0:τ0=199∑j=1τj

10.4.2 Model Checking

Both GENMOD programs in Section 10.4.1 produce the goodness-of-fit statistics shown in Output 10.27.

Output 10.27 Goodness-of-Fit Statistics for Poisson GLM of Insect Count Data

Criteria For Assessing Goodness Of Fit

| Criterion | DF | Value | Value/DF |

| Deviance | 27 | 93.9652 | 3.4802 |

| Pearson Chi-Square | 27 | 94.6398 | 3.5052 |

Both the deviance and the Pearson χ2 are greater than you would expect under the Poisson generalized linear model given in Section 10.4.1. This casts doubt on whether the Poisson model is appropriate and therefore whether the conclusions reached in the last section are valid.

There are a number of possible reasons for poor fit. Some of these reasons are familiar from conventional normal errors linear models. For example, the right-hand side of the model equation (κ + γi + τj) might be inadequate, in this case indicating a possible treatment×block interaction. Some of the reasons are unique to the generalized linear model, because of its added flexibility. The choice of probability distribution, or link function, or variance function, may be inappropriate. This section surveys model-checking procedures for GLMs you can do using PROC GENMOD. For more details, see generalized linear model texts such as McCullagh and Nelder (1989). Certain SAS procedures, for example, PROC LOGISTIC, have far more extensive model-checking facilities for certain specialized GLMs. These are not covered in this book.

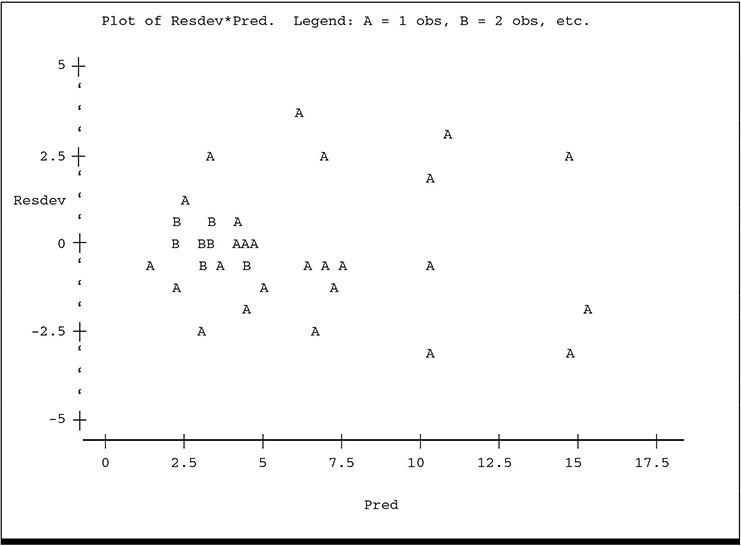

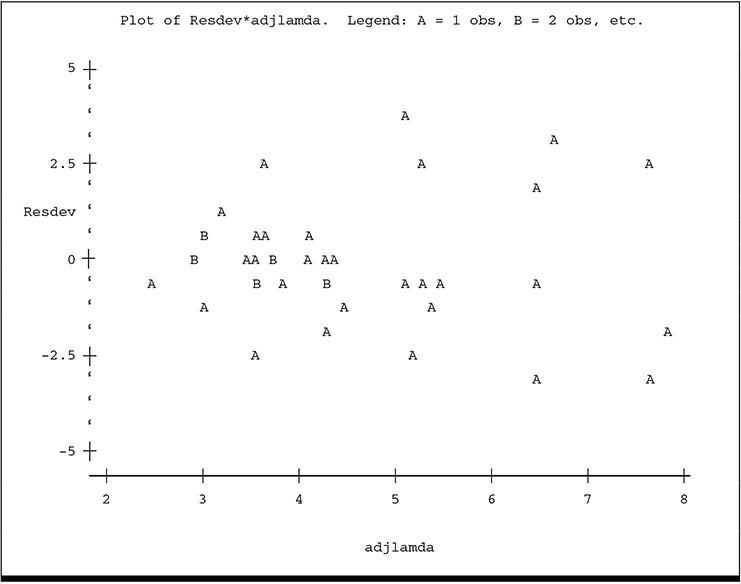

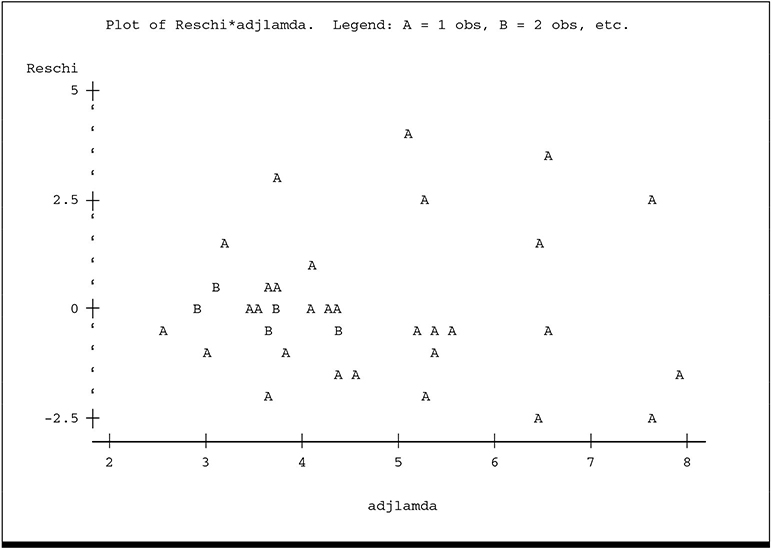

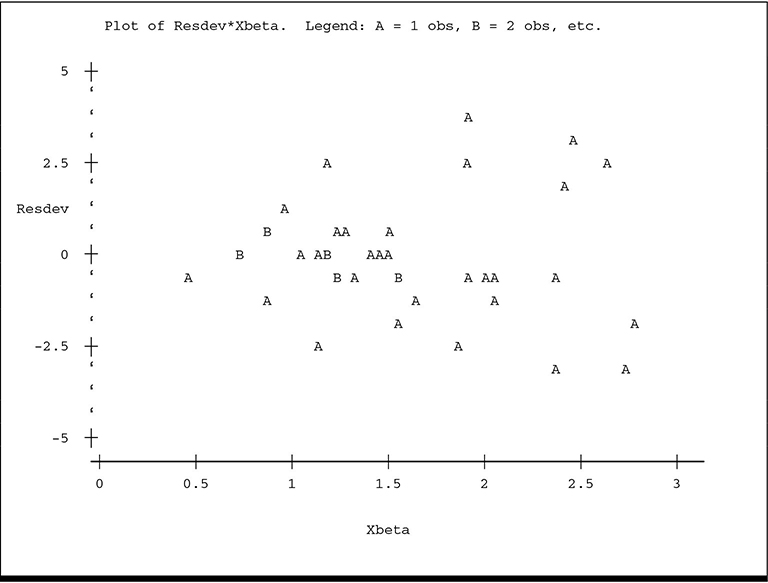

McCullagh and Nelder suggest several plots to assist in identifying obvious problems with the model, including these:

• Plot standardized residuals against the predicted mean. This serves the same purpose as similar plots in conventional linear models. Unequal scatter indicates violation of the homogeneity of variance assumption. More generally, unequal scatter may suggest a poor choice of variance function. Systematic pattern may indicate a poor choice of Xβ, for example, fitting a linear regression to a quadratic pattern, or it may indicate a poor choice of link function or probability distribution.

• Plot y*, for example, ˆη+y−ˆλˆλ

You can get these plots by using the GENMOD and PLOT procedures in SAS. For the insect count data and the Poisson model from Section 10.4.1, the SAS statements are

proc genmod data=a;

class BLOCK trt;

model count=BLOCK trt/dist=poisson type1 ObStats;

ODS OUTPUT ObStats=check;

title ‘compute model checking statistics’;

data plot;

set check;

adjlamda=2*sqrt(pred);

ystar=xbeta+(count-pred)/pred;

absres=abs(resdev);

options ps=28;

proc plot;

plot resdev*(pred xbeta);

plot (resdev reschi)*adjlamda;

plot ystar*xbeta;

plot absres*adjlamda;

The GENMOD statement is the same as you used to fit the Poisson model in Section 10.4.1, with the addition of an OBSTATS option to the MODEL statement and an ODS statement to output the OBSTATS to a new data set. The OBSTATS option computes several statistics analogous to predicted values and residuals for model checking in conventional linear models. The pertinent statistics are

| STATISTIC | Definition |

|---|---|

XBETA |

estimate of link function, that is, ˆη=Xˆβ |

| PRED | predicted value on COUNT scale, that is,ˆλij=h(ˆηij)=exp(ˆηij) |

| RESDEV | residual deviance = sign(residual)*deviance of yij= sign(resid)×[ℓ(λij;yij)−ℓ(λij;ˆλij)] |

| RESCHI | similar to residual deviance but uses component of Pearson χ2from ijth obs |

The DATA PLOT step computes three additional functions of statistics in the OBSTATS output useful for model checking. McCullagh and Nelder (1989) suggest adjusting predicted values, in this case ˆλ,

The example plots are shown below.

The plot of ADJLAMDA spreads the plot more evenly over the horizontal axis and reveals more detail for the lower predicted counts. While there is no overt visual evidence of unequal scatter or systematic pattern, there is a hint of lower variance among the residuals for the lowest predicted counts, especially on the ADJLAMDA plot. The plots of residual deviance against XBETA and residual π2 against ADJLAMDA reveal similar information:

The plot of y* versus ˆη

Finally, the plot of absolute value of residual deviance versus ADJLAMDA reinforces the information contained in the previous residual plots.

There are two main results of this model-checking exercise:

1. The goodness-of-fit statistics, deviance, and Pearson χ2 are larger than expected.

2. There is slight visual evidence that scatter among the residuals is not constant, but is lower for lower predicted counts.

Taken together, these symptoms indicate that the Poisson model may be inappropriate and, in particular, the assumed variance structure may not be correct. The next section discusses the likely reason as well as a commonly used adjustment.

10.4.3 Correction for Overdispersion

The Poisson model assumes that the mean and variance are equal. As mentioned previously, in biological counting processes the variance is typically greater than the mean. When the variance is larger than expected under a given model, the condition is called overdispersion. Unaccounted for overdispersion tends to cause standard errors to be underestimated and test statistics to be overestimated, resulting in excessive Type I error rates.

With generalized linear models, you have two ways to account for overdispersion—fit the Poisson model but adjust the standard errors and test statistics—or choose a different distribution for the model. This section shows you how to adjust the Poisson model. Section 10.4.4 gives an example of using PROC GENMOD to fit an alternative distribution.

You can model overdispersion by letting the actual variance equal the assumed variance multiplied by an additional scale parameter that adjusts for the discrepancy between assumed and actual. For the Poisson distribution, the assumed variance is the mean, ϕ, so the adjusted variance is ϕλ. Overdispersion occurs when ϕ>1. In GzLM literature, ϕ is typically referred to as the overdispersion parameter.

As a theoretical note, when you assume E(y)=λ and var(y)=ϕλ, you no longer have a true probability distribution for the random variable, y, but you do have a valid quasi-likelihood (see Section 10.6.4). You can show that for estimable Ḱβ from the resulting GLM, standard errors of K′ˆβ

Because the overdispersion parameter is unknown, you must estimate it. McCullagh (1983) gives ˆϕ

You can compute the Poisson model corrected for overdispersion by using PROC GENMOD. For the insect count data, use the following SAS statements:

proc genmod data=a;

class BLOCK CTL_TRT a b;

model count=BLOCK CTL_TRT a b a*b/dist=poisson dscale

type1 type3;

This is the same set of statements used in Section 10.4.1 except for the addition of the DSCALE option in the MODEL statement. DSCALE causes all standard errors and test statistics to be corrected for a scale parameter estimated using the deviance, that is, ˆϕ=DevianceN−p.

Output 10.28 The Poisson Analysis of Insect Count Data Corrected for Overdispersion

Criteria For Assessing Goodness Of Fit

| Criterion | DF | Value | Value/DF |

| Deviance | 27 | 93.9652 | 3.4802 |

| Scaled Deviance | 27 | 27.0000 | 1.0000 |

| Pearson Chi-Square | 27 | 94.6398 | 3.5052 |

| Scaled Pearson X2 | 27 | 27.1938 | 1.0072 |

LR Statistics For Type 1 Analysis

| Source | Deviance | Num DF | Den DF | F Value | Pr > F | Chi- Square |

Pr > ChiSq |

| Intercept | 175.0974 | ||||||

| BLOCK | 170.3437 | 3 | 27 | 0.46 | 0.7157 | 1.37 | 0.7135 |

| CTL_TRT | 130.4411 | 1 | 27 | 11.47 | 0.0022 | 11.47 | 0.0007 |

| A | 107.5545 | 2 | 27 | 3.29 | 0.0527 | 6.58 | 0.0373 |

| B | 107.0454 | 2 | 27 | 0.07 | 0.9296 | 0.15 | 0.9295 |

| A*B | 93.9652 | 4 | 27 | 0.94 | 0.4561 | 3.76 | 0.4397 |

LR Statistics For Type 3 Analysis

| Source | Num DF | Den DF | F Value | Pr > F | Chi- Square |

Pr > ChiSq |

| BLOCK | 3 | 27 | 0.46 | 0.7157 | 1.37 | 0.7135 |

| CTL_TRT | 0 | 27 | . | . | 0.00 | . |

| A | 2 | 27 | 2.79 | 0.0794 | 5.57 | 0.0616 |

| B | 2 | 27 | 0.02 | 0.9822 | 0.04 | 0.9822 |

| A*B | 4 | 27 | 0.94 | 0.4561 | 3.76 | 0.4397 |

The scaled deviance is the deviance divided by the estimated overdispersion parameter. Here, the overdispersion parameter is estimated by using the deviance, that is, ˆϕ=93.965227

In the table of likelihood ratio statistics, there are two sets of test statistics. The right-hand two columns give an adjusted χ2, equal to the original χ2 computed in Section 10.4.1, divided by ˆϕ,

In the analysis of the Poisson model in Section 10.4.1, there was very strong evidence of a CTL_TRT effect—that is, a difference between the untreated control and the mean of the AB treatment combinations. There was also strong evidence of an A×B interaction. When the analysis is corrected for overdispersion, evidence of the CTL_TRT effect remains (F=11.47, p=0.0022), but the A×B interaction no longer is statistically significant. Instead, there is some evidence of an A main effect (F=2.79, p= 0.0794), but no other differences among the treated groups.

To complete the analysis, you need the estimated mean counts for control and treated, and for the levels of factor A. While the model using CTL_TRT A B A*B is convenient for obtaining test statistics, it results in non-estimable LS means. The only alternative is to define the LS means using ESTIMATE statements defined on the model that substitutes TRT for CTL_TRT A B A*B. The SAS statements follow. For completeness, the program includes the CONTRAST from Section 10.4.1.

proc genmod data=a;

class block trt;

model count=block trt/dist=poisson pscale type1 type3;

contrast ‘ctl vs trt’ trt 9 -1 -1 -1 -1 -1 -1 -1 -1 -1;

contrast ‘a’ trt 0 1 1 1 0 0 0 -1 -1 -1,

trt 0 0 0 0 1 1 1 -1 -1 -1;

contrast ‘b’ trt 0 1 0 -1 1 0 -1 1 0 -1,

trt 0 0 1 -1 0 1 -1 0 1 -1;

contrast ‘a x b’ trt 0 1 0 -1 0 0 0 -1 0 1,

trt 0 0 0 0 1 0 -1 -1 0 1,

trt 0 0 1 -1 0 0 0 0 -1 1,

trt 0 0 0 0 0 1 -1 0 -1 1;

estimate ‘ctl lsmean’ intercept 1 trt 1 0/exp;

estimate ‘treated lsm’intercept 9 trt 0 1 1 1 1 1 1 1 1 1/

divisor=9 exp;

estimate ‘A=1 lsmean’ intercept 3 trt 0 1 1 1 0/divisor=3 exp;

estimate ‘A=2 lsmean’ intercept 3 trt 0 0 0 0 1 1 1

0/divisor=3 exp;

estimate ‘A=3 lsmean’ intercept 3 trt 0 0 0 0 0 0 0 1 1 1 0/

divisor=3 exp;

The results appear in Output 10.29. The PSCALE option appears in the above MODEL statement, so the Pearson χ2 is used to estimate the overdispersion parameter. You could just as well use DSCALE. The EXP option in the ESTIMATE statements computes exp(estimate), as well as its standard error and upper and lower confidence limits. For the log link, the inverse link is λ=exp(η), so the EXP option computes the inverse link to obtain the estimate of the actual counts, applies the Delta Rule to get the standard error, and so forth. The log is the only link function for which there is an option in the ESTIMATE statement to compute the inverse link. Otherwise, you must output the LS means using an ODS statement in the WRITE statement to compute the inverse link as shown for the binomial data in Sections 10.2 and 10.3.

Output 10.29 CONTRAST and ESTIMATE Results for Insect Count Analysis Using the Poisson Model Corrected for Overdispersion

Criteria For Assessing Goodness Of Fit

| Criterion | DF | Value | Value/DF |

| Deviance | 27 | 93.9652 | 3.4802 |

| Scaled Deviance | 27 | 26.8076 | 0.9929 |

| Pearson Chi-Square | 27 | 94.6398 | 3.5052 |

| Scaled Pearson X2 | 27 | 27.0000 | 1.0000 |

Contrast Results

| Contrast | Num DF | Den DF | F Value | Pr > F | Chi- Square |

Pr > ChiSq | Type |

| ctl vs trt | 1 | 27 | 13.08 | 0.0012 | 13.08 | 0.0003 | LR |

| a | 2 | 27 | 2.77 | 0.0807 | 5.53 | 0.0629 | LR |

| b | 2 | 27 | 0.02 | 0.9823 | 0.04 | 0.9823 | LR |

| a x b | 4 | 27 | 0.93 | 0.4597 | 3.73 | 0.4435 | LR |

Contrast Estimate Results

| Label | Estimate | Standard Error |

Alpha | Confidence Limits | Chi- Square |

|

| ctl lsmean | 2.6096 | 0.2532 | 0.05 | 2.1133 | 3.1059 | 106.21 |

| Exp(ctl lsmean) | 13.5940 | 3.4422 | 0.05 | 8.2758 | 22.3296 | |

| treated lsm | 1.4156 | 0.1618 | 0.05 | 1.0986 | 1.7326 | 76.59 |

| Exp(treated lsm) | 4.1189 | 0.6663 | 0.05 | 2.9999 | 5.6555 | |

| A=1 lsmean | 1.8539 | 0.2197 | 0.05 | 1.4233 | 2.2845 | 71.22 |

| Exp(A=1 lsmean) | 6.3848 | 1.4026 | 0.05 | 4.1510 | 9.8207 | |

| A=2 lsmean | 1.4408 | 0.2663 | 0.05 | 0.9189 | 1.9628 | 29.27 |

| Exp(A=2 lsmean) | 4.2242 | 1.1249 | 0.05 | 2.5065 | 7.1189 | |

| A=3 lsmean | 0.9520 | 0.3376 | 0.05 | 0.2903 | 1.6137 | 7.95 |

| Exp(A=3 lsmean) | 2.5910 | 0.8747 | 0.05 | 1.3369 | 5.0216 | |

The adjusted statistics for A B and A*B are somewhat different from Output 10.28 because overdispersion was estimated using the Pearson χ2 instead of the deviance. Here, ˆϕ

The table of “Contrast Estimate Results” gives the estimates in pairs of lines. The first line is the literal K′ˆβ

There were two main points of this section. First, the DSCALE or PSCALE options in GENMOD allow you to adjust for overdispersion. Second, adjusting for overdispersion can substantially affect the conclusions you draw from the analysis. Without correction for overdispersion, you would focus on a highly significant A×B interaction within the treated group. With correction for overdispersion, your emphasis shifts to interpreting the main effect of factor A.

While the overdispersion correction presented in this section is simple, many statisticians criticize it for being simplistic as well. They contend that the real problem is that the assumed distribution is inappropriate, and advocate using a more realistic model. Section 10.4.4 presents an example of using GENMOD to fit a user-supplied distribution, when none of the distributions supported by GENMOD are considered appropriate.

10.4.4 Fitting a Negative Binomial Model

For biological count data, recent work indicates that overdispersion is the rule, not the exception, and that distributions where the variance exceeds the mean are usually more appropriate than the Poisson as the basis for generalized linear models. One such distribution is the negative binomial.

The negative binomial appears in statistical theory texts as the distribution of the probability that it takes exactly N Bernoulli trials for exactly Y successes to occur. If π denotes the probability of a success, the P(N=n)= (N–1)!(y)!(N–y–1)!πy(1−π)N−y.

The natural parameter for the negative binomial is log(λλ+k)

A generalized linear model for the insect count data using the negative binomial with a canonical link is

ηij= = log(λijλij+k)

The alternative model using the log link is

ηij= log(λij)

where the terms of both models share the same definitions given in previous sections. As shown earlier, you can partition τi into its components. You can fit the latter model using the DIST=NEGBIN option in PROC GENMOD. However, the canonical link shown in the former model is not one of the links supported by PROC GENMOD—that is, it is not available in the LINK option of the MODEL statement. You can, however, use program statements in GENMOD to supply your own distribution or quasi-likelihood. This section shows you how to work with both link functions.

10.4.5 Using PROC GENMOD to Fit the Negative Binomial with a Log Link

Use the following SAS statements to estimate the model:

proc genmod data=a;

class BLOCK CTL_TRT a b;

model count=BLOCK CTL_TRT a b a*b/dist=negbin type1 type3;

The DIST=NEGBIN option estimates the parameters for the negative binomial distribution. Output 10.30 shows the results.

Output 10.30 An Analysis of Count Data Using Negative Binomial Distribution and a Log Link

Model Information

| Data Set | WORK.A |

| Distribution | Negative Binomial |

| Link Function | Log |

| Dependent Variable | COUNT |

| Observations Used | 40 |

Criteria For Assessing Goodness Of Fit

| Criterion | DF | Value | Value/DF |

| Deviance | 27 | 36.7953 | 1.3628 |

| Pearson Chi-Square | 27 | 33.8797 | 1.2548 |

Analysis Of Parameter Estimates

| Parameter | DF | Estimate | Standard Error |

Wald 95% Confidence Limits |

|

| Dispersion | 1 | 0.2383 | 0.0899 | 0.1137 | 0.4992 |

NOTE: The negative binomial dispersion parameter was estimated by maximum likelihood.

LR Statistics For Type 1 Analysis

| Source | 2*Log Likelihood |

DF | Chi- Square |

Pr > ChiSq |

| Intercept | 399.7286 | |||

| BLOCK | 400.9381 | 3 | 1.21 | 0.7507 |

| CTL_TRT | 411.5677 | 1 | 10.63 | 0.0011 |

| A | 420.5913 | 2 | 9.02 | 0.0110 |

| B | 420.6331 | 2 | 0.04 | 0.9794 |

| A*B | 425.4540 | 4 | 4.82 | 0.3062 |

LR Statistics For Type 3 Analysis

| Source | DF | Chi- Square |

Pr > ChiSq |

| BLOCK | 3 | 3.12 | 0.3733 |

| CTL_TRT | 0 | 0.00 | . |

| A | 2 | 8.96 | 0.0113 |

| B | 2 | 0.03 | 0.9849 |

| A*B | 4 | 4.82 | 0.3062 |

Several items in Output 10.30 are worth noting. First, under “Model Information,” you can see that the log link function was used. The VALUE/DF ratios for the deviance and Pearson χ2 are both greater than 1, but not enough to suggest lack of fit. The dispersion parameter is estimated obtained via maximum likelihood. The estimate given, 0.2383, is actually an estimate of 1/k, as the negative binomial was set up earlier in this section. Thus, ˆk=10.2383=4.196

You can use the alternative analysis with TRT identifying treatments and the CTL_TRT, A, B, and A*B effects broken out by the CONTRAST statements. The SAS statements are

proc genmod data=a;

class block trt;

model count=block trt/dist=negbin type1 type3 wald;

contrast 'ctl vs trt' trt 9 -1 -1 -1 -1 -1 -1 -1 -1 -1/wald;

contrast 'a' trt 0 1 1 1 0 0 0 -1 -1 -1,

trt 0 0 0 0 1 1 1 -1 -1 -1/wald;

contrast 'b' trt 0 1 0 -1 1 0 -1 1 0 -1,

trt 0 0 1 -1 0 1 -1 0 1 -1/wald;

contrast 'a x b' trt 0 1 0 -1 0 0 0 -1 0 1,

trt 0 0 0 0 1 0 -1 -1 0 1,

trt 0 0 1 -1 0 0 0 0 -1 1,

trt 0 0 0 0 0 1 -1 0 -1 1/wald;

estimate 'ctl lsmean' intercept 1 trt 1 0/exp;

estimate 'treated lsm'intercept 9 trt 0 1 1 1 1 1 1 1 1 1

/divisor=9 exp;

estimate 'A=1 lsmean' intercept 3 trt 0 1 1 1 0/divisor=3 exp;

estimate 'A=2 lsmean' intercept 3 trt 0 0 0 0 1 1 1

0/divisor=3 exp;

estimate 'A=3 lsmean' intercept 3 trt 0 0 0 0 0 0 0 1 1 1

/divisor=3 exp;

ods output estimates=lsm;

Output 10.31 shows the results.

Output 10.31 CONTRAST Results for a Negative Binomial Model of Count Data with a Log Link

Contrast Results

| Contrast | DF | Chi- Square |

Pr > ChiSq | Type |

| ctl vs trt | 1 | 15.99 | <.0001 | LR |

| a | 2 | 8.96 | 0.0113 | LR |

| b | 2 | 0.03 | 0.9849 | LR |

| a x b | 4 | 4.82 | 0.3062 | LR |

You can see that the χ2 statistics for A, B, and A×B are identical to those in Output 10.30. CTL_TRT on the other hand, differs: the χ2 statistic obtained using the CTL_TRT class variable was 10.63; here it is 15.99. This discrepancy is similar to what was observed in Sections 10.4.1 and 10.4.3.

10.4.6 Fitting the Negative Binomial with a Canonical Link

You need to write program statements to define the canonical link. Specifically, you need to provide the link and inverse link functions. The following SAS statements fit the desired model:

proc genmod data=a;

k=1/0.2383;

mu=_mean_;

eta=_xbeta_;

fwdlink link=log(mu/(mu+k));

invlink ilink=k*exp(eta)/(1-exp(eta));

class block trt;

model count=block trt/dist=negbin type1 type3 wald;

contrast 'ctl vs trt' trt 9 -1 -1 -1 -1 -1 -1 -1 -1 -1;

contrast 'a' trt 0 1 1 1 0 0 0 -1 -1 -1,

trt 0 0 0 0 1 1 1 -1 -1 -1;

contrast 'b' trt 0 1 0 -1 1 0 -1 1 0 -1,

trt 0 0 1 -1 0 1 -1 0 1 -1;

contrast 'a x b' trt 0 1 0 -1 0 0 0 -1 0 1,

trt 0 0 0 0 1 0 -1 -1 0 1,

trt 0 0 1 -1 0 0 0 0 -1 1,

trt 0 0 0 0 0 1 -1 0 -1 1;

The statements FWDLINK and INVLINK define the link and inverse link, respectively. You need to provide the estimate of k in order to define these two functions. MU and ETA are not required; they are conveniences. _MEAN_ and _XBETA_ are internal SAS names for the estimates of λ and η, respectively. They are awkward. MU and ETA are simply more manageable names for subsequent program statements.

Alternatively, you could use the CLASS statements for CTL_TRT, A, and B, that is, use the statements

proc genmod data=a;

k=1/0.2383;

mu=_mean_;

eta=_xbeta_;

fwdlink link=log(mu/(mu+k));

invlink ilink=k*exp(eta)/(1-exp(eta));

class block ctl_trt a b;

model count=BLOCK CTL_TRT a b a*b/dist=negbin type1 type3;

The “Criteria for Assessing Goodness of Fit” and parameter estimates for both models are identical. Output 10.32 shows these results. The tests of treatment effects are different. Output 10.33 shows the treatment effect results.

Output 10.32 Goodness of Fit and Dispersion Estimate for a Negative Binomial with a Canonical Link

Criteria For Assessing Goodness Of Fit

| Criterion | DF | Value | Value/DF |

| Deviance | 27 | 37.5857 | 1.3921 |

| Pearson Chi-Square | 27 | 36.0722 | 1.3360 |

Algorithm converged.

Analysis Of Parameter Estimates

| Parameter | DF | Estimate | Standard Error |

Wald 95% Confidence Limits |

|

| Dispersion | 1 | 0.2456 | 0.0933 | 0.1167 | 0.5171 |

The deviance and Pearson χ2 values are very close to those obtained using the log link (Output 10.30). The dispersion estimate is also slightly changed by using the canonical link. Here, ˆk=10.2456=4.072.

Output 10.33 Treatment Effect Tests Using a Negative Binomial with a Canonical Link

Run 1: TRT in model, effects defined by CONTRAST

Contrast Results

| Contrast | DF | Chi- Square |

Pr > ChiSq | Type |

| ctl vs trt | 1 | 15.22 | <.0001 | LR |

| a | 2 | 8.05 | 0.0179 | LR |

| b | 2 | 0.29 | 0.8656 | LR |

| a x b | 4 | 5.13 | 0.2739 | LR |

Run 2: CTL_TRT, A, and B in CLASS statement

LR Statistics For Type 1 Analysis

| Source | 2*Log Likelihood |

DF | Chi- Square |

Pr > ChiSq |

| Intercept | 399.7286 | |||

| BLOCK | 400.9381 | 3 | 1.21 | 0.7507 |

| CTL_TRT | 410.0197 | 1 | 9.08 | 0.0026 |

| A | 418.7535 | 2 | 8.73 | 0.0127 |

| B | 418.9195 | 2 | 0.17 | 0.9203 |

| A*B | 424.0528 | 4 | 5.13 | 0.2739 |

LR Statistics For Type 3 Analysis

| Source | DF | Chi- Square |

Pr > ChiSq |

| BLOCK | 3 | 1.72 | 0.6325 |

| CTL_TRT | 0 | 0.00 | . |

| A | 2 | 8.05 | 0.0179 |

| B | 2 | 0.29 | 0.8656 |

| A*B | 4 | 5.13 | 0.2739 |

These results follow the same pattern: The Run 1 CONTRAST results and Run 2 Type 3 likelihood ratio statistics are the same for A, B, and A×B, whereas the CTL_TRT results vary somewhat. All results are similar to those obtained using the log link.

10.4.7 Advanced Application: A User-Supplied Program to Fit the Negative Binomial with a Canonical Link

To try other distributions, including values of the aggregation parameter k, you need to write program statements to provide the variance function and deviance as well as the link and inverse link. The following examples illustrate how to do this. Suppose, for example, you want to fit the geometric distribution, that is, the negative binomial with k=1. For the insect count data and the negative binomial, the SAS GENMOD statements are

proc genmod;

count=_resp_;

y=count;

if y=0 then y=0.1;

mu=_mean_;

eta=_xbeta_;

K=1;

FWDLINK LINK=LOG(MU/(MU+K));

INVLINK ILINK=K*EXP(ETA)/(1-EXP(ETA));

lly=y*log(y/(y+k))-k*log((k+y)/k);

llm=y*log(mu/(mu+k))-k*log((k+mu)/k);

d=2*(lly-llm);

VARIANCE VAR=MU+(MU*MU/K);

DEVIANCE DEV=D;

class block ctl_trt a b;

model y=block ctl_trt/type3 wald;

model y=block ctl_trt a b a*b/ type3 wald;

The required program statements appear in uppercase. The statement K=1 sets the aggregation parameter. FWDLINK LINK= gives the link function for your model; you supply the expression after the equal sign. Similarly, INVLINK ILINK= supplies the inverse link function. VARIANCE VAR= and DEVIANCE DEV= give the forms of the variance function and deviance, respectively. The statements immediately preceding VARIANCE VAR= are for programming convenience. LLY defines the log likelihood (or quasi-likelihood) evaluated at the observations, and LLM defines the log likelihood or quasi-likelihood evaluated using the estimated natural parameter from the model. Then D=2*(LLY–LLM) is the formula for the deviance. You could write the full formula immediately following DEV=, but separating the task into steps may help you reduce the chances of a hard-to-recognize mistake. GENMOD uses the internal SAS names _RESp_ for the response variable, _MEAN_ for the expected value of the observation based on the model, and _XBETA_ for the current value of ˆη=Xˆβ.

Note: The response variable for these data is COUNT. For one observation (block 2, treatment 9), COUNT=0. The deviance is 2*(LLY–LLM), but for this observation, LLY cannot be determined since log(yy+k)

Output 10.34 Goodness-oF-Fit Statistics for a Negative Binomial Model with a User-Supplied Program and Uncorrected Zero Counts

Criteria For Assessing Goodness Of Fit

| Criterion | DF | Value | Value/DF |

| Deviance | 27 | 0.0000 | 0.0000 |

| Scaled Deviance | 27 | 0.0000 | 0.0000 |

| Pearson Chi-Square | 27 | 8.1026 | 0.3001 |

| Scaled Pearson X2 | 27 | 8.1026 | 0.3001 |

| Log Likelihood | -1.79769E308 | ||

ERROR: Error in computing deviance function.

Therefore, for observations where COUNT=0, you must add a small positive constant in order to avoid this problem, hence the statements Y=COUNT followed by IF Y=0 THEN Y=0.1. This prevents the zero counts from causing an error when computing LLY.

Finally, user-supplied distributions may introduce difficulty computing certain likelihood ratio statistics. The best alternative is to use Wald statistics. You can compute Wald statistics for Type III hypotheses with GENMOD. Therefore, to fully test the model, you must run it twice, once with CTL_TRT only in the model, adjusted for BLOCK, then with the full model. Run 1 tests control versus treated. Run 2 tests the factorial effects within the treated group. Output 10.35 shows the results.

Output 10.35 Deviance and Wald Statistics for a Geometric Model Fit to Insect Count Data

Criteria For Assessing Goodness Of Fit

| Criterion | DF | Value | Value/DF |

| Deviance | 27 | 14.4938 | 0.5368 |

| Pearson Chi-Square | 27 | 13.2924 | 0.4923 |

Wald Statistics For Type 3 Analysis

Run 1

| Source | DF | Chi- Square |

Pr > ChiSq |

| BLOCK | 3 | 0.56 | 0.9052 |

| CTL_TRT | 1 | 6.62 | 0.0101 |

Run 2

| Source | DF | Chi- Square |

Pr > ChiSq |

| BLOCK | 3 | 0.51 | 0.9177 |

| CTL_TRT | 0 | 0.00 | . |

| A | 2 | 2.89 | 0.2361 |

| B | 2 | 0.17 | 0.9173 |

| A*B | 4 | 1.80 | 0.7733 |

These results suggest there is a statistically significant difference between the control and treated groups (Wald χ2=6.62, p=0.0101), but no evidence of any statistically significant A or B effects within the treated group. These results, however, may be excessively conservative. The deviance/DF ratio is only 0.5368, considerably less than 1. This may suggest underdispersion, that is, the variance may be less than you would expect with the geometric model. Possibly the geometric model assumes too low a value for the aggregation parameter.

You can try different values of k using the program statements given above. Setting k=2.5 yields the results in Output 10.36.

Output 10.36 Deviance and Wald Statistics for a Negative Binomial Model, k=2.5, Fit to Insect Count Data

Criteria For Assessing Goodness Of Fit

| Criterion | DF | Value | Value/DF |

| Deviance | 27 | 27.6607 | 1.0245 |

| Pearson Chi-Square | 27 | 26.5857 | 0.9847 |

Wald Statistics For Type 3 Analysis

Run 1

| Source | DF | Chi- Square |

Pr > ChiSq |

| BLOCK | 3 | 1.18 | 0.7580 |

| CTL_TRT | 1 | 13.94 | 0.0002 |

Run 2

| Source | DF | Square | Pr > ChiSq |

| BLOCK | 3 | 1.08 | 0.7818 |

| CTL_TRT | 0 | 0.00 | . |

| A | 2 | 5.81 | 0.0547 |

| B | 2 | 0.26 | 0.8781 |

| A*B | 4 | 3.66 | 0.4546 |

The results are more consistent with the Poisson model corrected for overdispersion discussed in Section 10.4.3.

You can also fit the model with block and treatment and use ESTIMATE statements to compute LS means, similar to what was done in previous sections. For example, the following statements allow you to do this for the model with k=2.5:

PROC GENMOD;

k=2.5;

count=_resp_;

y=count;

if y=0 then y=0.1;

mu=_mean_;

eta=_xbeta_;

fwdlink link=log(mu/(mu+k));

invlink ilink=k*exp(eta)/(1-exp(eta));

lly=y*log(y/(y+k))-k*log((k+y)/k);

llm=y*log(mu/(mu+k))-k*log((k+mu)/k);

d=2*(lly-llm);

variance var=mu+(mu*mu/k);

deviance dev=d;

CLASS BLOCK TRT;

MODEL y=trt block;

contrast 'ctl vs trt' trt 9 -1 -1 -1 -1 -1 -1 -1 -1 -1/wald;

contrast 'a' trt 0 1 1 1 0 0 0 -1 -1 -1,

trt 0 0 0 0 1 1 1 -1 -1 -1/wald;

contrast 'b' trt 0 1 0 -1 1 0 -1 1 0 -1,

trt 0 0 1 -1 0 1 -1 0 1 -1/wald;

contrast 'a x b' trt 0 1 0 -1 0 0 0 -1 0 1,

trt 0 0 0 0 1 0 -1 -1 0 1,

trt 0 0 1 -1 0 0 0 0 -1 1,

trt 0 0 0 0 0 1 -1 0 -1 1/wald;

contrast 'ctl vs trt' trt 9 -1 -1 -1 -1 -1 -1 -1 -1 -1;

contrast 'a' trt 0 1 1 1 0 0 0 -1 -1 -1,

trt 0 0 0 0 1 1 1 -1 -1 -1;

contrast 'b' trt 0 1 0 -1 1 0 -1 1 0 -1,

trt 0 0 1 -1 0 1 -1 0 1 -1;

contrast 'a x b' trt 0 1 0 -1 0 0 0 -1 0 1,

trt 0 0 0 0 1 0 -1 -1 0 1,

trt 0 0 1 -1 0 0 0 0 -1 1,

trt 0 0 0 0 0 1 -1 0 -1 1;

estimate 'ctl lsmean' intercept 1 trt 1 0;

estimate 'treated lsm'intercept 9 trt 0 1 1 1 1 1 1 1 1 1

/divisor=9;

estimate 'A=1 lsmean' intercept 3 trt 0 1 1 1 0/divisor=3;

estimate 'A=2 lsmean' intercept 3 trt 0 0 0 0 1 1 1

0/divisor=3;

estimate 'A=3 lsmean' intercept 3 trt 0 0 0 0 0 0 0 1 1 1 0

/divisor=3;

ods output estimates=lsm;

These statements are identical to the program shown above except MODEL COUNT=BLOCK CTL_TRT A B A*B is replaced by MODEL COUNT=BLOCK TRT and the CONTRAST and ESTIMATE statements shown. Notice that the program includes the ODS OUTPUT statement for the estimates of the LS means. Output 10.37 shows the results for the goodness-of-fit statistics and the contrasts. The LS means are discussed below.

Output 10.37 An Alternative Analysis of a Negative Binomial Model Using Contrasts

Criteria For Assessing Goodness Of Fit

| Criterion | DF | Value | Value/DF |

| Deviance | 27 | 27.6607 | 1.0245 |

| Pearson Chi-Square | 27 | 26.5857 | 0.9847 |

Contrast Results

| Contrast | DF | Chi- Square |

Pr > ChiSq | Type |

| ctl vs trt | 1 | 17.76 | <.0001 | Wald |

| a | 2 | 5.81 | 0.0547 | Wald |

| b | 2 | 0.26 | 0.8781 | Wald |

| a x b | 4 | 3.66 | 0.4546 | Wald |

| ctl vs trt | 1 | 13.46 | 0.0002 | LR |

| a | 2 | 6.41 | 0.0406 | LR |

| b | 2 | 0.28 | 0.8703 | LR |

| a x b | 4 | 4.05 | 0.3993 | LR |

The deviance and Pearson χ2 are similar but not identical to the previous model. The difference results from the fact that with the non-linearity of the link function, the change in the parameterization of the model induces slight differences in many of the statistics. This model did allow the likelihood ratio statistics to be computed; they are given along with the Wald statistics. The numbers are somewhat different, but the overall conclusions are similar: There is very strong evidence of a difference between the control and treated groups, fairly strong evidence of an A main effect, and no evidence of either an A×B interaction or a B main effect.

The ESTIMATE statements given above compute LS means on the link function scale. As with previous examples, you can use an ODS statement to output the results. Then you can use the following program statements to compute the inverse link and the Delta Rule to get LS means and their standard errors on the COUNT scale.

data c_lsm;

set lsm;

k=2.5;

counthat=k*exp(estimate)/(1-exp(estimate));

deriv=k*exp(estimate)/((1-exp(estimate))**2);

se_count=deriv*stderr;

proc print data=c_lsm;

run;

Output 10.38 shows the results. You can adapt these statements to obtain predicted counts from the negative binomial models fit with the maximum likelihood estimate of k earlier in this section. For example, you could substitute k=4.072 above and use the LS means for the negative binomial model, canonical link, and maximum likelihood estimate of k.

Output 10.38 LS Means and Standard Errors for Negative Binomial Model of Insect Count Data

| Obs | Label | Estimate | StdErr | Alpha | LowerCL | UpperCL |

| 1 | ctl lsmean | -0.1752 | 0.0537 | 0.05 | -0.2804 | -0.0700 |

| 2 | treated lsm | -0.4975 | 0.0561 | 0.05 | -0.6075 | -0.3875 |

| 3 | A=1 lsmean | -0.3437 | 0.0650 | 0.05 | -0.4710 | -0.2163 |

| 4 | A=2 lsmean | -0.4718 | 0.0880 | 0.05 | -0.6442 | -0.2993 |

| 5 | A=3 lsmean | -0.6770 | 0.1269 | 0.05 | -0.9257 | -0.4283 |

| Obs | ChiSq | Prob ChiSq |

k | counthat | deriv | se_count |

| 1 | 10.65 | 0.0011 | 2.5 | 13.0587 | 81.2700 | 4.36218 |

| 2 | 78.61 | <.0001 | 2.5 | 3.8785 | 9.8956 | 0.55525 |

| 3 | 27.98 | <.0001 | 2.5 | 6.0955 | 20.9576 | 1.36169 |

| 4 | 28.74 | <.0001 | 2.5 | 4.1473 | 11.0272 | 0.97043 |

| 5 | 28.46 | <.0001 | 2.5 | 2.5827 | 5.2508 | 0.66637 |

ESTIMATE and STDERR give the estimates on the link function scale. COUNTHAT and SE_COUNT are the LS means on the COUNT scale. These results are similar to those obtained using the Poisson model corrected for overdispersion. Recall, for example, that the control LS mean was 13.59 with a standard error of 3.44, whereas for the negative binomial model the control LS mean is 13.06 with a standard error of 4.36. Although the overall conclusions about which treatment effects are significant are reasonably consistent, the estimates of the means and hence the magnitude of the treatment effects are different.

This is an ongoing area of research. At this time, no compelling evidence favors either approach over the other. However, Young et al. (1999) showed that Type I error control in tests using GLMs are severely affected by model misspecification. They did not address the question of how best to estimate treatment means and treatment effects when the treatments are different. Further research will certainly shed more light on how best to use GLMs. The main point of this section is that your choice of model can greatly affect your conclusions. This section presented a number of approaches that are possible and how to use tools available with PROC GENMOD to check these approaches for problems.

10.5 Generalized Linear Models with Repeated Measures—Generalized Estimating Equations

Chapter 8 discussed repeated measures with standard linear models. The main distinguishing feature of repeated-measures data is the possible correlation among observations observed at different times on the same subject. PROC MIXED allows you to fit a variety of correlation models when the data have a normal distribution. When there are no random-model effects, the MIXED procedure uses generalized least squares (GLS) to estimate model parameters. PROC GENMOD uses generalized estimating equations (GEEs), a generalized linear model analog of GL developed by Liang and Zeger (1986) and Zeger et al. (1988). Section 10.6 presents background theory for the GEE method. The section discusses a repeated-measures example and shows you how to use PROC GENMOD’S GEE option to do repeated-measured analysis of generalized linear models.

10.5.1 A Poisson Repeated-Measures Example

Output 10.39 shows data from a study evaluating a new treatment for epilepsy. These data appeared in Leppik et al. (1985) and were subsequently discussed by Thall and Vail (1990), and Breslow and Clayton (1993). The variable ID identifies each patient in the study. The treatments are TRT=0, a placebo, and TRT=1, an anti-epileptic drug. The response variable is the number of seizures over a two-week interval. For the eight weeks prior to placing the participants on treatment, the number of seizures was counted for each patient in order to form a baseline (BASE) measurement. Also, the patients’ AGE, in years, was thought to be a potentially important covariate. The number of seizures was recorded for each of four time intervals and appears in the data set as Y1 through Y4 for the first through fourth observation interval, respectively.

Output 10.39 Epilepsy Seizure Repeated-Measures Data

| Obs | id | trt | base | age | y1 | y2 | y3 | y4 |

| 1 | 104 | 0 | 11 | 31 | 5 | 3 | 3 | 3 |

| 2 | 106 | 0 | 11 | 30 | 3 | 5 | 3 | 3 |

| 3 | 107 | 0 | 6 | 25 | 2 | 4 | 0 | 5 |

| 4 | 114 | 0 | 8 | 36 | 4 | 4 | 1 | 4 |

| 5 | 116 | 0 | 66 | 22 | 7 | 18 | 9 | 21 |

| 6 | 118 | 0 | 27 | 29 | 5 | 2 | 8 | 7 |

| 7 | 123 | 0 | 12 | 31 | 6 | 4 | 0 | 2 |

| 8 | 126 | 0 | 52 | 42 | 40 | 20 | 23 | 12 |

| 9 | 130 | 0 | 23 | 37 | 5 | 6 | 6 | 5 |

| 10 | 135 | 0 | 10 | 28 | 14 | 13 | 6 | 0 |

| 11 | 141 | 0 | 52 | 36 | 26 | 12 | 6 | 22 |

| 12 | 145 | 0 | 33 | 24 | 12 | 6 | 8 | 4 |

| 13 | 201 | 0 | 18 | 23 | 4 | 4 | 6 | 2 |

| 14 | 202 | 0 | 42 | 36 | 7 | 9 | 12 | 14 |

| 15 | 205 | 0 | 87 | 26 | 16 | 24 | 10 | 9 |

| 16 | 206 | 0 | 50 | 26 | 11 | 0 | 0 | 5 |

| 17 | 210 | 0 | 18 | 28 | 0 | 0 | 3 | 3 |

| 18 | 213 | 0 | 111 | 31 | 37 | 29 | 28 | 29 |

| 19 | 215 | 0 | 18 | 32 | 3 | 5 | 2 | 5 |

| 20 | 217 | 0 | 20 | 21 | 3 | 0 | 6 | 7 |

| 21 | 219 | 0 | 12 | 29 | 3 | 4 | 3 | 4 |

| 22 | 220 | 0 | 9 | 21 | 3 | 4 | 3 | 4 |

| 23 | 222 | 0 | 17 | 32 | 2 | 3 | 3 | 5 |

| 24 | 226 | 0 | 28 | 25 | 8 | 12 | 2 | 8 |

| 25 | 227 | 0 | 55 | 30 | 18 | 24 | 76 | 25 |

| 26 | 230 | 0 | 9 | 40 | 2 | 1 | 2 | 1 |

| 27 | 234 | 0 | 10 | 19 | 3 | 1 | 4 | 2 |

| 28 | 238 | 0 | 47 | 22 | 13 | 15 | 13 | 12 |

| 29 | 101 | 1 | 76 | 18 | 11 | 14 | 9 | 8 |

| 30 | 102 | 1 | 38 | 32 | 8 | 7 | 9 | 4 |

| 31 | 103 | 1 | 19 | 20 | 0 | 4 | 3 | 0 |

| 32 | 108 | 1 | 10 | 30 | 3 | 6 | 1 | 3 |

| 33 | 110 | 1 | 19 | 18 | 2 | 6 | 7 | 4 |

| 34 | 111 | 1 | 24 | 24 | 4 | 3 | 1 | 3 |

| 35 | 112 | 1 | 31 | 30 | 22 | 17 | 19 | 16 |

| 36 | 113 | 1 | 14 | 35 | 5 | 4 | 7 | 4 |

| 37 | 117 | 1 | 11 | 27 | 2 | 4 | 0 | 4 |

| 38 | 121 | 1 | 67 | 20 | 3 | 7 | 7 | 7 |

| 39 | 122 | 1 | 41 | 22 | 4 | 18 | 2 | 5 |

| 40 | 124 | 1 | 7 | 28 | 2 | 1 | 1 | 0 |

| 41 | 128 | 1 | 22 | 23 | 0 | 2 | 4 | 0 |

| 42 | 129 | 1 | 13 | 40 | 5 | 4 | 0 | 3 |

| 43 | 137 | 1 | 46 | 33 | 11 | 14 | 25 | 15 |

| 44 | 139 | 1 | 36 | 21 | 10 | 5 | 3 | 8 |

| 45 | 143 | 1 | 38 | 35 | 19 | 7 | 6 | 7 |

| 46 | 147 | 1 | 7 | 25 | 1 | 1 | 2 | 3 |

| 47 | 203 | 1 | 36 | 26 | 6 | 10 | 8 | 8 |

| 48 | 204 | 1 | 11 | 25 | 2 | 1 | 0 | 0 |

| 49 | 207 | 1 | 151 | 22 | 102 | 65 | 72 | 63 |

| 50 | 208 | 1 | 22 | 32 | 4 | 3 | 2 | 4 |

| 51 | 209 | 1 | 41 | 25 | 8 | 6 | 5 | 7 |

| 52 | 211 | 1 | 32 | 35 | 1 | 3 | 1 | 5 |

| 53 | 214 | 1 | 56 | 21 | 18 | 11 | 28 | 13 |

| 54 | 218 | 1 | 24 | 41 | 6 | 3 | 4 | 0 |

| 55 | 221 | 1 | 16 | 32 | 3 | 5 | 4 | 3 |

| 56 | 225 | 1 | 22 | 26 | 1 | 23 | 19 | 8 |

| 57 | 228 | 1 | 25 | 21 | 2 | 3 | 0 | 1 |

| 58 | 232 | 1 | 13 | 36 | 0 | 0 | 0 | 0 |

| 59 | 236 | 1 | 12 | 37 | 1 | 4 | 3 | 2 |

The data have both repeated measures and generalized linear model features. Repeated measures result from the fact that each subject was observed four times. Because the observations are counts, it is reasonable to fit a model with a link function and an assumed probability distribution appropriate for a random count variable.

The analyses reported by Thall and Vail (1990) used transformed covariates for baseline and age. The baseline covariate was log (BASE/4), the 4 accounting for the baseline period being four times as long as the two-week observation periods during the study. The age covariate was log(AGE). These variables appear at LOG_BASE and LOG_AGE, respectively, in the SAS programs and output shown below.

Following Thall and Vail, a generalized linear model for these data is

log(λij) = μ + αi +τj+(ατ)ij+ β1i*(log_base)+β2*(log_age)

where

| λij | is the mean count for treatment i (i = 0, 1) and time j (j = 1, 2, 3, 4). |

| μ | is the intercept. |

| αi | is the effect of the ith treatment. |

| τj | is the effect of the jth time period. |

| (ατ)ij | is the ijth TIME×TREATMENT interaction effect. |

| β1i | is the regression coefficient for LOG_BASE for the ith treatment. |

| β2 | is the regression coefficient for LOG_AGE. |

Alternatively, you can write β1i=β1+δi, equivalent to specifying a common slope plus a TREATMENT-BY-LOG_base interaction term. Note that Thall and Vail fit a separate regression of LOG_BASE on count for each treatment, but a common regression for LOG_AGE. If you did not have the advantage of their work, you would fit a model with separate regressions over LOG_AGE for each treatment as well and test the hypothesis of equal regressions.

10.5.2 Using PROC GENMOD to Compute a GEE Analysis of Repeated Measures

You can fit the model using PROC GENMOD. First, you must modify the data set so that there is one observation per time, rather than all four observations over time on a single data line as shown in Output 10.39. The one-line-per-time requirement is identical to the format PROC MIXED uses for repeated-measures data. You can use the following SAS statements to convert the data set. Set LEPPIK is the original data set shown in Output 10.39; SEIZURE is the modified form suitable for PROC GENMOD.

data seizure;

set Leppik;

time = 1; y = y1; output;

time = 2; y = y2; output;

time = 3; y = y3; output;

time = 4; y = y4; output;

Then use the following GENMOD statements:

proc genmod;

class id trt time;

model y=trt time trt*time log_base trt*log_base log_age/

dist=poisson linK=log type1 type3;

repeated subject=id / type=exch corrw;

The REPEATED statement implements the generalized estimating equations (GEEs) for the repeated measures over the four times. GEE uses a “working correlation matrix” to account for the correlation among the repeated measurements within subjects. Refer to Section 10.6 for more about working correlation matrices. The SUBJECT= and TYPE= commands are required in the REPEATED statement. SUBJECT= identifies the unit on which the repeated measurements were taken. In this case, they are on each patient, identified by the variable ID. The SUBJECT=ID command creates a block-diagonal working correlation matrix, one 4×4 block per ID. TYPE= defines the type of correlation model, similar to the TYPE command in the REPEATED statement in PROC MIXED. Available types include IND (independent), EXCH (exchangeable, or, equivalently, CS for compound symmetry), AR (first-order autoregressive, similar to AR(1) in PROC MIXED) and UN (unstructured). Following Thall and Vail, this example uses TYPE=EXCH.

The CORRW option in the REPEATED statement causes the estimated working correlation to be included in the output. In the MODEL statement, LOG_BASE and TRT*LOG_BASE account for the separate regressions over LOG_BASE for each treatment. Alternatively, you could use LOG_BASE(TRT). Relating these to the MODEL statement given above, the latter models β1i, whereas the former models β1+δi.

Output 10.40 shows selected results.

Output 10.40 GEE Results for Epilepsy Seizure Data: Test or Treatment×Time Interaction

GEE Model Information

| Correlation Structure | Exchangeable |

| Subject Effect | id (59 levels) |

| Number of Clusters | 59 |

| Correlation Matrix Dimension | 4 |

| Maximum Cluster Size | 4 |

| Minimum Cluster Size | 4 |

Working Correlation Matrix

| Col1 | Col2 | Col3 | Col4 | |

| Row1 | 1.0000 | 0.3569 | 0.3569 | 0.3569 |

| Row2 | 0.3569 | 1.0000 | 0.3569 | 0.3569 |

| Row3 | 0.3569 | 0.3569 | 1.0000 | 0.3569 |

| Row4 | 0.3569 | 0.3569 | 0.3569 | 1.0000 |

Score Statistics For Type 3 GEE Analysis

| Source | DF | Chi- Square |

Pr > ChiSq |

| trt | 1 | 5.82 | 0.0159 |

| time | 3 | 5.03 | 0.1695 |

| trt*time | 3 | 1.53 | 0.6751 |

| log_base | 1 | 6.34 | 0.0118 |

| log_base*trt | 1 | 3.61 | 0.0576 |

| log_age | 1 | 6.48 | 0.0109 |

The “GEE Model Information” tells you how many subjects (clusters) and the dimension of each block of the working correlation matrix (four, that is, the number of time periods). The estimated working correlation matrix appears next. In this case, the observations at any two time periods for the same subject have a correlation of 0.3569. The test for TRT*TIME has a χ2 statistic of 1.53, with a p-value of 0.6751. Thall and Vail reported their analyses without a treatment×time interaction term, which this result clearly justifies.

The SAS statements for the revised model without a TRT*TIME interaction are

proc genmod;

class id trt time;

model y=trt time log_base(trt) log_age/

dist=poisson linK=log type1 type3;

repeated subject=id / type=exch corrw;

lsmeans trt / e;

estimate 'lsm trt 0' intercept 1 trt 1 0 time 0.25 0.25

0.25 0.25

log_base(trt) 1.7679547 0 log_age 3.3197835/exp;

estimate 'lsm at t=4' intercept 1 trt 1 0 time 0 0 0 1

log_base(trt) 1.7679547 0 log_age 3.3197835/exp;

estimate 'lsm at t<4' intercept 3 trt 3 0 time 1 1 1 0

log_base(trt) 5.3038641 0

log_age 9.9593505/ divisor=3 exp;

contrast 'log_b slopes =' log_base(trt) 1 -1;

contrast 'visit 4 vs others' time 1 1 1 -3;

contrast 'among visit 1-3' time 1 -1 0 0, time 1 1 -2 0;

Several additional statements appear. This program uses the alternative form of the regressions over LOG_BASE for each treatment, LOG_BASE(TRT), based on the parameterization β1i. The associated contrast, LOG_B SLOPES = tests H0:β10=β11,

Output 10.41 GEE Analysis of Epilepsy Seizure Data: A Model without a Treatment×Time Interaction, Working Correlation Matrix, Parameter Estimates, and Type 3 Analysis

Working Correlation Matrix

| Col1 | Col2 | Col3 | Col4 | |

| Row1 | 1.0000 | 0.3552 | 0.3552 | 0.3552 |

| Row2 | 0.3552 | 1.0000 | 0.3552 | 0.3552 |

| Row3 | 0.3552 | 0.3552 | 1.0000 | 0.3552 |

| Row4 | 0.3552 | 0.3552 | 0.3552 | 1.0000 |

Analysis Of GEE Parameter Estimates

Empirical Standard Error Estimates

| Parameter | Estimate | Standard Error |

95% Confidence Limits |

Z Pr > |Z| | ||

| Intercept | -4.3522 | 1.0788 | -6.4667 | -2.2377 | -4.03 | <.0001 |

| trt 0 | 1.3551 | 0.4289 | 0.5145 | 2.1956 | 3.16 | 0.0016 |

| trt 1 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | . | . |

| time 1 | 0.2030 | 0.0987 | 0.0096 | 0.3964 | 2.06 | 0.0397 |

| time 2 | 0.1344 | 0.0762 | -0.0149 | 0.2837 | 1.76 | 0.0776 |

| time 3 | 0.1445 | 0.1228 | -0.0963 | 0.3852 | 1.18 | 0.2395 |

| time 4 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | . | . |

| log_base(trt) 0 | 0.9500 | 0.0986 | 0.7567 | 1.1432 | 9.64 | <.0001 |

| log_base(trt) 1 | 1.5202 | 0.1423 | 1.2413 | 1.7992 | 10.68 | <.0001 |

| log_age | 0.9194 | 0.2773 | 0.3759 | 1.4630 | 3.32 | 0.0009 |

Score Statistics For Type 3 GEE Analysis

| Source | DF | Chi- Square |

Pr > ChiSq |

| trt | 1 | 5.81 | 0.0159 |

| time | 3 | 4.71 | 0.1941 |

| log_base(trt) | 2 | 9.94 | 0.0070 |

| log_age | 1 | 6.47 | 0.0110 |

Dropping TRT*TIME has little effect on the estimated working correlation: Here, ˆρ

Output 10.42 GEE Analysis of Epilepsy Seizure Data: A Model without a Treatment×Time Interaction LS Means, Estimate, and CONTRAST Results

Coefficients for trt Least Squares Means

| Label | Row | Prm1 Prm8 |

Prm2 Prm9 |

Prm3 Prm10 |

Prm4 | Prm5 | Prm6 | Prm7 |

| trt | 1 | 1 | 1 | 0 | 0.25 | 0.25 | 0.25 | 0.25 |

| 1.768 | 0 | 3.3198 | ||||||

| trt | 2 | 1 | 0 | 1 | 0.25 | 0.25 | 0.25 | 0.25 |

| 0 | 1.768 | 3.3198 | ||||||

Least Squares Means

| Effect | trt | Estimate | Standard Error |

DF | Chi- Square |

Pr > ChiSq |

| trt | 0 | 1.8552 | 0.1047 | 1 | 313.92 | <.0001 |

| trt | 1 | 1.5084 | 0.1480 | 1 | 103.94 | <.0001 |

Contrast Estimate Results

| Label | Estimate | Standard Error |

Alpha | Confidence Limits | Chi- Square |

|

| lsm trt 0 | 1.8552 | 0.1047 | 0.05 | 1.6500 | 2.0605 | 313.92 |

| Exp(lsm trt 0) | 6.3932 | 0.6694 | 0.05 | 5.2070 | 7.8496 | |

| lsm at t=4 | 1.7348 | 0.0944 | 0.05 | 1.5498 | 1.9197 | 337.91 |

| Exp(lsm at t=4) | 5.6676 | 0.5349 | 0.05 | 4.7105 | 6.8191 | |

| lsm at t<4 | 1.8954 | 0.1128 | 0.05 | 1.6743 | 2.1165 | 282.28 |

| Exp(lsm at t<4) | 6.6552 | 0.7508 | 0.05 | 5.3350 | 8.3020 | |

Contrast Results for GEE Analysis

| Contrast | DF | Chi- Square |

Pr > ChiSq | Type |

| log_b slopes = | 1 | 3.64 | 0.0565 | Score |

| visit 4 vs others | 1 | 4.16 | 0.0414 | Score |

| among visit 1-3 | 2 | 0.35 | 0.8408 | Score |

The coefficients for the LS means show the coefficients SAS uses by default. The order of the coefficients, from PRM1 through, in this case, PRM10, follows from the order in which the parameter estimates were given earlier. That is, PRM1 is the coefficient for INTERCEPT, PRM2 is for TRT 0, PRM3 is for TRT 1, and so forth. You can see that the TRT least-squares means are computed averaged over the four time periods and at the mean LOG_BASE and LOG_AGE. If you subtract the coefficients of the TRT 1 LS Mean from TRT 0, you get the difference tested by the Type III χ2 statistic discussed above: α0–α1+(β10–β11)*LOG_BASE.

The CONTRAST results show moderate evidence of unequal slopes for the regression over LOG_BASE for each treatment (χ2=3.64, p=0.0565). They confirm the Thall and Vail (1990) result that time 4 has a different expected number of seizures from the other time periods (χ2=4.16, p=0.0414), but no statistically significant evidence of differences among the first three periods (χ2=0.35, p=0.8408). The expected number of counts, for example, for the placebo (TRT 0) is 6.66 with a standard error of 0.75, using the EXP(LSM AT T< 4) line of the “Contrast Estimate Results.” Recall that the LS mean is computed on the log link scale; the EXP line applies the inverse link, giving you the estimate on the original count (number of seizures) scale. The expected number of seizures for the placebo at time 4 is 5.67 with a standard error of 0.35. Thus, the VISIT 4 VS OTHERS effect results from an overall reduction in the number of seizures in the fourth period.

This example showed the GEE method applied to Poisson data. You can apply GEE to other GLMs, for example, with binomial or gamma distributions as well. As with normal data using the REPEATED option in PROC MIXED, accounting for correlation among repeated measures can substantially affect your conclusions. Failing to account for repeated measures risks seriously misrepresenting the data. One caution: The GEE option in PROC GENMOD does not account for random-model effects. For more sophisticated analyses where your model needs to have both random-model effects and account for within-subjects correlation, you should not use PROC GENMOD. Generalized linear mixed-model programs such as the GLIMMIX macro or, in some cases, PROC NLMIXED, are better suited for such models. See Littell et al. (1996) for an introduction to GLIMMIX.

10.6 Background Theory

Nelder and Wedderburn (1972) presented the basic theory for generalized linear models, hereafter referred to in this section as GzLMs. As a point of information, people working in the area refer to generalized linear models as “GLMs.” To avoid confusion with PROC GLM—which does not compute generalized linear models—we use the acronym GzLM. Nelder and Wedderburn’s work applied linear model methods to response variables whose distributions belong to the exponential family. Two major extensions followed. Quasi-likelihood theory, developed by Wedderburn (1974) and discussed in detail by McCullagh (1983), allowed GzLMs to be used with a much broader class of response variables than the exponential family. Zeger et al. (1988) developed generalized estimating equations (GEEs), to permit GzLMs to be used with non-normal repeated-measures data. This section provides a brief overview of the main ideas.

To understand the basic idea of GzLMs, it is helpful to review the normal errors linear model from a slightly different perspective. In previous chapters, linear models were presented in the form y= β0Σpi=1βiXi

For the binomial distribution, suppose the possible outcomes of each Bernoulli trial are coded 0 or 1. For example, if you flip a coin, 0 and 1 could represent tails and heads, respectively. The probability that you obtain y 1’s out of N independent Bernoulli trials (for example, y heads out of N coin flips) is given by the formula P(Y=y)=(Ny)πy(1−π)N−y,

For the Poisson distribution, the probability of exactly Y occurrences of a discrete count is P(Y=y)= yλe−λy!

The log likelihood, mean, and variance of these distributions, as well as the normal and other members of the exponential family, share a common form. The general form of the log likelihood is ℓ(θ,ϕ;y) = yθ−b(θ)ϕ+c(y,ϕ)

10.6.1 The Generalized Linear Model Defined

The GzLM models g(μ)=β0+∑pt=1βiXi

The general form of the log likelihood provides one common rationale (but certainly not the only one!) for selecting a suitable link function for a given distribution. Notice that the observations, denoted by the random variable y, are linear in the natural parameter, ө. Therefore, it would make sense to fit a linear model to ө rather than directly to the expected value, μ. Link functions having the form η=ө are called canonical link functions. Two common types of GzLMs are log-linear models, where the observations are assumed to have a Poisson distribution and the canonical link function log(λ) is used, and logistic models, where the observations are assumed to have a binomial distribution and the canonical link log(π1−π)

10.6.2 How the GzLM’s Parameters Are Estimated

The model parameters, β0, β0, ....... βp can be estimated using maximum likelihood, that is, finding the values of the βi’s that maximize the likelihood, or equivalently, the log likelihood. For GzLMs, maximum likelihood estimation results in a generalized form of the normal equations. The normal equations for standard linear models were described in Chapter 6. Recalling the matrix description from Chapter 6, y is an n×1 vector of observations. Let μ denote the n×1 vector of expected values of the elements of y, that is, E(y) = μ, and be an n×n diagonal matrix whose elements are the variances of the elements of y, that is, V = diag[var(y)]. Since var(y) = ϕV (μ), then V = diag[ϕV(μ)].

Under the matrix setup, the GzLM is g(μ) = η = Xβ, where X is the n×p matrix of constants for the linear model as defined in Chapter 5, and β is the p×1 vector of model parameters. To estimate β, you solve the GzLM estimating equations X′WXβ = X′Wy*, where W = DV−1 D, D is an n×n diagonal matrix whose elements are the derivatives of the elements of Γ with respect to μthat is, D=diag[ ∂η∂μ ],

10.6.3 Standard Errors and Test Statistics

Several results are useful when working with GzLMs. Readers can refer to texts such as McCullagh and Nelder (1989), Dobson (1990), and Lindsey (1997) for additional detail. Important results include the following:

❏ For estimable functions Kˊβ, the variance of the estimator, is Kˊˆβ,

❏ It follows that for vector k, the approximate standard error of k′β̂ is √k'(X'WX)−k

❏ For hypothesis testing, you can use either the Wald statistic or the likelihood ratio statistic.

❏ The Wald statistic for testing H0: K′β = K′β0 is computed by using the formula (K′β̂ – K′β0)′ [K′(X′WX)K −K]−1(K′β̂ − K′β0). The Wald statistic has an approximate χ2 distribution with degrees of freedom equal to rank(K). In the normal errors case, the Wald statistic reduces to the sum of squares for testing K′β = K′β0.

❏ When W depends on an unknown scale parameter, the Wald statistic computed using the estimate of ϕ divided by rank(K) has an approximate F-distribution. The ANOVA F-test is a well-known example of Wald/rank(K), but this chapter illustrates other examples using GzLMs for non-normal data.

❏ The deviance is defined as 2[ℓ(θ(y);y) − ℓ(θ(Xβ̂);y], where ℓ(θy) is the log likelihood, with θ(y) the value of θ determined from the data and θ(Xβ̂) determined from the estimate of β under the model. The deviance has an approximate χ2 distribution with N–p degrees of freedom, where N=the number of observations and p is the rank of X.

❏ For distributions that do not depend on an unknown scale parameter, such as the binomial and Poisson, the deviance tests lack of fit of the GzLM. This chapter presents several examples using the deviance for this purpose.

❏ Likelihood ratio statistics can be computed from the difference between deviances as follows. Partition the parameter vector β so that β=[β1β2]

10.6.4 Quasi-Likelihood

The previous sections presented GzLMs as a tool for fitting linear models to non-normal response variables whose probability distributions belong to the exponential family. However, there are occasions when you want to fit a linear model to a response variable whose distribution does not belong to the exponential family and may not even be known. You can do this using quasi-likelihood theory.

Recall the basic form of the log likelihood for the exponential family, ℓ(λ,ϕ;y)=yθ−b(θ)ϕ+c(ϕ,y).

❏ You can specify a function relating the linear model Xβ to E(y). The inverse link μ = h(Xβ) or the link η = g(μ) = Xβ set up the GzLM.

❏ You can specify a variance in the form var(y)=ϕV(μ).

McCullagh and Nelder (1989) review several commonly used quasi-likelihood forms. Section 10.4.4 discussed an example of a quasi-likelihood analysis of count data using PROC GENMOD.

10.6.5 Repeated Measures and Generalized Estimating Equations

The previous sections discussed fitting generalized linear models to non-normally but independently distributed response variables. However, non-normal response variables are often observed in repeated-measures experiments. The resulting longitudinal data raise the same issues of correlation between observations within subjects discussed in Chapter 8. Zeger et al. (1988) presented the method of generalized estimating equations (GEEs), essentially an extension of the repeated-measures methods used by PROC MIXED from normally distributed data to non-normal data and generalized linear models.

The basic idea of GEEs is to build a correlation structure into V, the variance-covariance matrix of the observation vector y described in Section 10.6.2. Recall that V was defined as V(y) = diag[ϕV(μ)]. This variance-covariance structure assumes that the observations are independent. GEEs account for possible serial correlation by modifying V as V(y)=V1/2μRV1/2μ,

Diggle et al. (1994) provide additional background on GEEs. Section 10.5.1 presented an example with correlated Poisson data and their analysis using the GEE option available in PROC GENMOD