Chapter 3 Analysis of Variance for Balanced Data

3.2 One- and Two-Sample Tests and Statistics

3.3 The Comparison of Several Means: Analysis of Variance

3.3.1 Terminology and Notation

3.3.1.1 Crossed Classification and Interaction Sum of Squares

3.3.1.2 Nested Effects and Nested Sum of Squares

3.3.2 Using the ANOVA and GLM Procedures

3.3.3 Multiple Comparisons and Preplanned Comparisons

3.4 The Analysis of One-Way Classification of Data

3.4.1 Computing the ANOVA Table

3.4.2 Computing Means, Multiple Comparisons of Means, and Confidence Intervals

3.4.3 Planned Comparisons for One-Way Classification: The CONTRAST Statement

3.4.4 Linear Combinations of Model Parameters

3.4.5 Testing Several Contrasts Simultaneously

3.4.7 Estimating Linear Combinations of Parameters: The ESTIMATE Statement

3.5.1 Analysis of Variance for Randomized-Blocks Design

3.5.2 Additional Multiple Comparison Methods

3.5.3 Dunnett's Test to Compare Each Treatment to a Control

3.6 A Latin Square Design with Two Response Variables

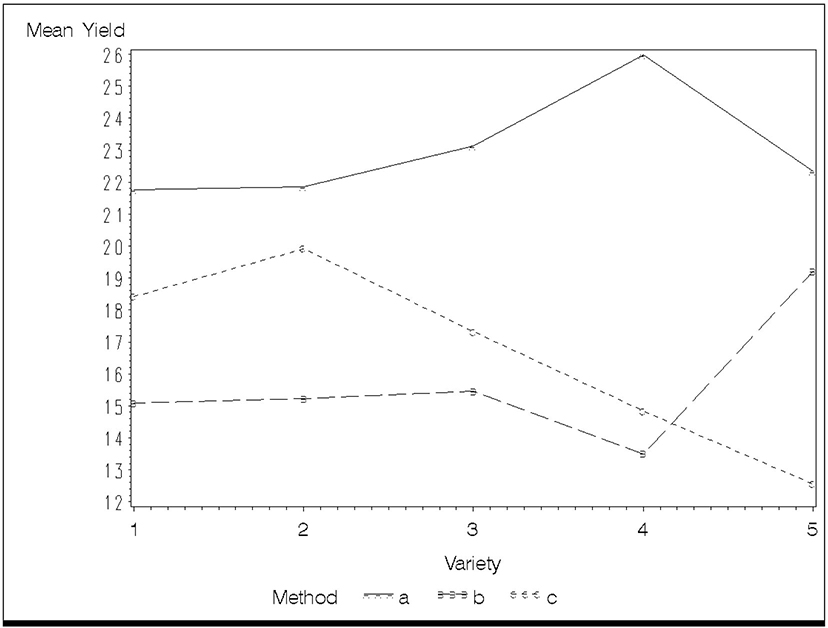

3.7 A Two-Way Factorial Experiment

3.7.1 ANOVA for a Two-Way Factorial Experiment

3.7.2 Multiple Comparisons for a Factorial Experiment

3.7.3 Multiple Comparisons of METHOD Means by VARIETY

3.7.4 Planned Comparisons in a Two-Way Factorial Experiment

3.7.5 Simple Effect Comparisons

3.7.7 Simultaneous Contrasts in Two-Way Classifications

3.7.8 Comparing Levels of One Factor within Subgroups of Levels of Another Factor

3.7.9 An Easier Way to Set Up CONTRAST and ESTIMATE Statements

3.1 Introduction

The arithmetic mean is the basic descriptive statistic associated with the linear model. In some studies, you only want to estimate a single mean. More commonly, you want to compare the means of two or more treatments. For one- or two-sample (that is, one- or two-treatment) analyses, t-tests, or confidence intervals based on the t-distribution, are often used. The MEANS procedure and TTEST procedures can perform one- and two-sample t-tests. In most cases, either you want to compare more than two treatments, or you must use a more complex design in order to adequately control extraneous variation. For these situations, you need to use analysis of variance. In fact the two-sample tests are merely special cases of analysis of variance, so the analysis of variance is actually a general tool applicable to a wide variety of applications, for two or more treatments.

This chapter begins by presenting one-and two-sample analyses of means using the MEANS and TTEST procedures. Then, more complex analyses using the ANOVA and GLM procedures are discussed. Most of the focus is on analysis of variance and related methods using PROC GLM. 1

3.2 One- and Two-Sample Tests and Statistics

In addition to a wide selection of descriptive statistics, SAS can provide t-tests for a single sample, for paired samples, and for two independent samples.

3.2.1 One-Sample Statistics

The following single-sample statistics are available with SAS:

mean: |

|

standard deviation: |

|

standard error of the mean: |

|

student's t: |

The statistics , s, and estimate the population parameters μ, σ, and respectively. Student's t is used to test the null hypothesis H0: μ=0.

PROC MEANS can compute most common descriptive statistics and calculate t-tests and the associated significance probability (p-value) for a single sample. The basic syntax of the MEANS procedure is as follows:

PROC MEANS options;

VAR variables;

BY variables;

CLASS variables;

WHERE variables;

FREQ variables;

WEIGHT variable;

ID variables;

OUTPUT options;

The VAR statement is optional. If this statement is not included, PROC MEANS computes statistics for all numeric variables in the data set. The BY, CLASS, and WHERE statements enable you to obtain separate computations for subgroups of observations in the data set. The FREQ, WEIGHT, ID, and OUTPUT statements can be used with PROC MEANS to perform functions such as weighting or creating an output data set. For more information about PROC MEANS, consult the SAS/STAT User’s Guide in SAS OnlineDoc, Version 8.

The following example shows a single-sample analysis. In order to design a mechanical harvester for bell peppers, an engineer determined the angle (from a vertical reference) at which 28 peppers hang on the plant (ANGLE). The following statistics are needed:

❏ the sample mean x̅, an estimate of the population mean, μ

❏ the sample standard deviation s, an estimate of the population standard deviation, σ

❏ the standard error of the mean, sx̅, a measure of the precision of the sample mean.

Using these computations, the engineer can construct a 95% confidence interval for the mean, the endpoints of which are x̅ − t.05sx̅ and x̅ + t.05 sx̅ where t.05 is obtained from a table of t-values. The engineer can also use the statistic t = x̅ / sx̅ to test the hypothesis that the population mean is equal to 0.

The following SAS statements print the data and perform these computations:

data peppers;

input angle @@;

datalines;

3 11 -7 2 3 8 -3 -2 13 4 7

-1 4 7 -1 4 12 -3 7 5 3 -1

9 -7 2 4 8 -2

;

proc print;

proc means mean std stderr t prt;

run;

This PROC MEANS statement specifically calls for the mean (MEAN), the standard deviation (STD), the standard error of the mean (STDERR), the t-statistic for testing the hypothesis that the population mean is 0 (T), and the p-value (significance probability) of the t-test (PRT). These represent only a few of the descriptive statistics that can be requested in a PROC MEANS statement. The data, listed by PROC PRINT, and output from PROC MEANS, appear in Output 3.1.

Output 3.1 PROC MEANS for Single-Sample Analysis

| Obs | angle |

| 1 | 3 |

| 2 | 11 |

| 3 | –7 |

| 4 | 2 |

| 5 | 3 |

| 6 | 8 |

| 7 | –3 |

| 8 | –2 |

| 9 | 13 |

| 10 | 4 |

| 11 | 7 |

| 12 | –1 |

| 13 | 4 |

| 14 | 7 |

| 15 | –1 |

| 16 | 4 |

| 17 | 12 |

| 18 | –3 |

| 19 | 7 |

| 20 | 5 |

| 21 | 3 |

| 22 | –1 |

| 23 | 9 |

| 24 | –7 |

| 25 | 2 |

| 26 | 4 |

| 27 | 8 |

| 28 | –2 |

| The MEANS Procedure | ||||

| Analysis Variable : angle | ||||

| Mean | Std Dev | Std Error | t Value | Pr > |t| |

| 3.1785714 | 5.2988718 | 1.0013926 | 3.17 | 0.0037 |

A t-table shows t.05=2.052 with 27 degrees of freedom (DF). The confidence interval for the mean ANGLE is, therefore, 3.179 ± 2.052(1.0014), which yields the interval (1.123, 5.333). The value of t=3.17 has a significance probability of p=0.0037, indicating that the engineer can reject the null hypothesis that the mean ANGLE in the population, μ, is 0.

You can compute the confidence interval by adding the option CLM to the PROC MEANS statement. The default is a 95% confidence interval. You can add the ALPHA option to change the level of confidence. For example, ALPHA=0.1 gives you a 90% confidence interval. Alternatively, you can use the OUTPUT statement, along with additional programming statements, to compute the confidence interval. First insert the following statements immediately before the RUN statement in the above program:

output out=stats

mean=xbar stderr=sxbar;

Then use the following program statements:

data stats; set stats;

t=tinv(27,.05);

bound=t*sxbar;

lower=xbar-bound;

upper=xbar+bound;

proc print;

run;

This might seem a little complicated just to get a confidence interval. However, it illustrates the use of the OUTPUT statement to obtain computations from a procedure and the use of a DATA step to make additional computations. Similar methods can be used with other procedures such as the REG procedure, the GLM procedure discussed later in this chapter, the MIXED procedure introduced in Chapter 4, and the GENMOD procedure introduced in Chapter 10.

You should note that a test of H0: μ=C, where C≠0, can be obtained by subtracting C from each observation. You can do this in the DATA step by adding a command after the INPUT statement, and then applying the single-sample analysis to the revised response variable. For example, you could test H0: μ=5 with the following statements:

data peppers;

set peppers;

diff5=angle-5;

proc means t;

run;

3.2.2 Two Related Samples

You can apply a single-sample analysis to the difference between paired measurements to make inferences about means from paired samples. This type of analysis is appropriate for randomized-blocks experiments with two treatments. It is also appropriate in many experiments that use before-treatment and after-treatment responses on the same experimental unit, as shown in the example below.

A combination stimulant-relaxant drug is administered to 15 animals whose pulse rates are measured before (PRE) and after (POST) administration of the drug. The purpose of the experiment is to determine if there is a change in the pulse rate as a result of the drug.

The appropriate t-statistic is t = where di= the difference between the PRE and POST measurement for the ith animal, for example, PRE-POST, and .

The t for the paired differences tests the null hypothesis of no change in pulse rate. You can compute the differences, D=PRE-POST, for each subject and the one-sample t-test based on the differences with the following SAS statements:

data pulse;

input pre post;

d=pre-post;

datalines;

62 61

63 62

58 59

64 61

64 63

61 58

68 61

66 64

65 62

67 68

69 65

61 60

64 65

61 63

63 62

;

proc print;

proc means mean std stderr t prt;

var d;

run;

In this example, the following SAS statement creates the variable D (the difference in rates):

d=pre-post;

Remember that a SAS statement that generates a new variable is part of a DATA step.

The PROC MEANS statements here and in the preceding example are identical. The statement

var d;

following the PROC MEANS statement restricts the PROC MEANS analysis to the variable D. Otherwise, computations would also be performed on PRE and POST. The data listed by PROC PRINT and output from PROC MEANS appear in Output 3.2.

Output 3.2 Paired-Difference Analysis

| Obs | pre | post | d |

| 1 | 62 | 61 | 1 |

| 2 | 63 | 62 | 1 |

| 3 | 58 | 59 | –1 |

| 4 | 64 | 61 | 3 |

| 5 | 64 | 63 | 1 |

| 6 | 61 | 58 | 3 |

| 7 | 68 | 61 | 7 |

| 8 | 66 | 64 | 2 |

| 9 | 65 | 62 | 3 |

| 10 | 67 | 68 | –1 |

| 11 | 69 | 65 | 4 |

| 12 | 61 | 60 | 1 |

| 13 | 64 | 65 | –1 |

| 14 | 61 | 63 | –2 |

| 15 | 63 | 62 | 1 |

| The MEANS Procedure | ||||

| Analysis Variable : d | ||||

| Mean | Std Dev | Std Error | t Value | Pr > |t| |

| 1.4666667 | 2.3258383 | 0.6005289 | 2.44 | 0.0285 |

The t-value of 2.44 with p=0.0285 indicates a statistically significant change in mean pulse rate. Because the mean of D (1.46) is positive, the drug evidently decreases pulse rate.

You can also compute the paired test more simply by using PROC TTEST. The TTEST procedure computes two-sample paired t-tests for both the paired and independent case. The latter is shown in Section 3.2.3., “Two Independent Samples.” For the paired test, use the following SAS statements:

proc ttest;

paired pre*post;

run;

The statement PAIRED PRE*POST causes the test to be computed for the paired difference PRE-POST. The results appear in Output 3.3. The estimated mean difference of PRE-POST, 1.4667, appears in the column labeled MEAN. The lower and upper 95% confidence limits appear in the columns labeled Lower CL Mean and Upper CL Mean, respectively.

Output 3.3 Paired-Difference Analysis Using PROC TTEST with the PAIRED Option

| The TTEST Procedure | |||||||

| Statistics | |||||||

| Lower CL | Upper CL | Lower CL | Upper CL | ||||

| Difference | N | Mean | Mean | Mean | Std Dev | Std Dev | Std Dev |

| pre - post | 15 | 0.1787 | 1.4667 | 2.7547 | 1.7028 | 2.3258 | 3.6681 |

| Statistics | |||

| Difference | Std Err | Minimum | Maximum |

| pre - post | 0.6005 | –2 | 7 |

| T-Tests | |||

| Difference | DF | t Value | Pr > |t| |

| pre - post | 14 | 2.44 | 0.0285 |

You can also use the single mean capability of PROC TTEST with the D variable:

proc ttest;

var d;

run;

As mentioned at the beginning of this section, the paired two-sample test is a special case of the test for treatment effects in a randomized-blocks design, pairs being a special case of blocks. Section 3.5, “Randomized-Blocks Designs,” presents the analysis of blocked designs.

3.2.3 Two Independent Samples

You can test the significance of the difference between means from two independent samples with the t-statistic

where and n1, n2 refer to the means and sample sizes of the two groups, respectively, and s2 refers to the pooled variance estimate,

Note that and are the sample variances for the two groups, respectively. The pooled variance estimate should be used if it is reasonable to assume that the population variances of the two groups, and are equal. If this assumption cannot be justified, then you should use an approximate t-statistic given by

You can use PROC TTEST to compute both of these t’s along with the (folded) F-statistic

to test the assumption . Analysis-of-variance procedures, for example, PROC ANOVA and PROC GLM, give equivalent results but do not test equality of the variances and perform the approximate t-test.

An example of this test is the comparison of muzzle velocities of cartridges made from two types of gunpowder (POWDER). The muzzle velocity (VELOCITY) was measured for eight cartridges made from powder type 1 and ten cartridges from powder type 2. The data appear in Output 3.4.

Output 3.4 PROC PRINT of BULLET Data for Two Independent Samples

| Obs | powder | velocity |

| 1 | 1 | 27.3 |

| 2 | 1 | 28.1 |

| 3 | 1 | 27.4 |

| 4 | 1 | 27.7 |

| 5 | 1 | 28.0 |

| 6 | 1 | 28.1 |

| 7 | 1 | 27.4 |

| 8 | 1 | 27.1 |

| 9 | 2 | 28.3 |

| 10 | 2 | 27.9 |

| 11 | 2 | 28.1 |

| 12 | 2 | 28.3 |

| 13 | 2 | 27.9 |

| 14 | 2 | 27.6 |

| 15 | 2 | 28.5 |

| 16 | 2 | 27.9 |

| 17 | 2 | 28.4 |

| 18 | 2 | 27.7 |

The two-sample t-test is appropriate for testing the null hypothesis that the muzzle velocities are equal. You can obtain such a t-test with these SAS statements:

proc ttest data=bullets;

var velocity;

class powder;

run;

PROC TTEST performs the two-sample analysis. The variable POWDER in the CLASS statement identifies the groups (or treatments) whose means are to be compared. CLASS variables may be numeric or character variables. This CLASS statement serves the same purpose as it does in all other procedures that require identification of groups of treatments. In PROC TTEST, the CLASS variable must have exactly two values. Otherwise, the procedure issues an error message and stops processing. The VAR statement identifies the variable whose means you want to compare. Note that PROC TTEST is limited to comparing two groups. To compare more than two groups, you use analysis-of-variance procedures, discussed in Section 3.3, “The Comparison of Several Means: Analysis of Variance.”

Output 3.5 shows the data from PROC PRINT and the results of PROC TTEST.

Output 3.5 PROC TTEST for Two Independent Samples

| The TTEST Procedure | ||||||||

| Statistics | ||||||||

| Lower CL | Upper CL | Lower CL | ||||||

| Variable | Class | N | Mean | Mean | Mean | Std Dev | Std Dev | |

| velocity | 1 | 8 | 27.309 | 27.638 | 27.966 | 0.2596 | 0.3926 | |

| velocity | 2 | 10 | 27.841 | 28.06 | 28.279 | 0.2106 | 0.3062 | |

| velocity | Diff (1-2) | -0.771 | -0.422 | -0.074 | 0.2582 | 0.3467 | ||

| Statistics | ||||||

| Upper CL | ||||||

| Variable | Class | Std Dev | Std Err | Minimum | Maximum | |

| velocity | 1 | 0.799 | 0.1388 | 27.1 | 28.1 | |

| velocity | 2 | 0.5591 | 0.0968 | 27.6 | 28.5 | |

| velocity | Diff (1-2) | 0.5276 | 0.1644 | |||

| T-Tests | |||||

| Variable | Method | Variances | DF | t Value | Pr > |t| |

| velocity | Pooled | Equal | 16 | -2.57 | 0.0206 |

| velocity | Satterthwaite | Unequal | 13.1 | -2.50 | 0.0267 |

| Equality of Variances | |||||

| Variable | Method | Num DF | Den DF | F Value | Pr > F |

| velocity | Folded F | 7 | 9 | 1.64 | 0.4782 |

The first part of PROC TTEST output gives you the number of observations, mean, standard deviation, standard error of the mean, the minimum and maximum observations of VELOCITY for the two levels of POWDER, and the upper and lower 95% confidence limits. The second part gives you the t-test results, the t-statistic (T), the degrees of freedom (DF), and the p-value (Pr> |t|). You can see that there are two sets of statistics. These correspond to two types of assumptions: the usual two-sample t-test that assumes equal variances (Equal) or an approximate t-test that does not assume equal variances (Unequal). The approximate t-test uses Satterthwaite's approximation for the sum of two mean squares (Satterthwaite 1946) to calculate the significance probability Pr> |t|. Section 4.5.3, “Satterthwaite’s Formula for Approximate Degrees of Freedom,” presents the approximation in some detail.

The F-test at the bottom of Output 3.5 is used to test the hypothesis of equal variances. An F=1.64 with a significance probability of p=0.4782 provides insufficient evidence to conclude that the variances are unequal. Therefore, use the test that assumes equal variances. For this test t=2.5694 with a p-value of 0.0206. This is strong evidence of a difference between the mean velocities for the two powder types, with the mean velocity for powder type 2 greater than that for powder type 1.

The two-sample independent test of the difference between treatment means is a special case of one-way analysis of variance. Thus, using analysis of variance for the BULLET data, shown in Section 3.3 is equivalent to the t-test procedures shown above, assuming equal variances for the two samples. This point is developed in the next section.

3.3 The Comparison of Several Means: Analysis of Variance

Analysis of variance and related mean comparison procedures are the primary tools for making statistical inferences about a set of two or more means. SAS offers several procedures. Two of them, PROC ANOVA and PROC GLM, are specifically intended to compute analysis of variance. Other procedures, such as PROC TTEST, PROC NESTED, and PROC VARCOMP, are available for specialized types of analyses.

PROC ANOVA is limited to balanced or orthogonal data sets. PROC GLM is more general—it can be used for both balanced and unbalanced data sets. While the syntax is very similar, PROC ANOVA is simpler computationally than PROC GLM. At one time, this was an issue, because large models using the GLM procedure often exceeded the computer’s capacity. With contemporary computers, GLM’s capacity demands are rarely an issue, and so PROC GLM has largely superseded PROC ANOVA.

PROC MIXED can compute all of the essential analysis-of-variance statistics. In addition, MIXED can compute statistics specifically appropriate for models with random effects that are not available with any other SAS procedure. For this reason, MIXED is beginning to supplant GLM for data analysis, much as GLM previously replaced ANOVA. However, GLM has many features not available in MIXED that are useful for understanding underlying analysis-of-variance concepts, so it is unlikely that GLM will ever be completely replaced.

The rest of this chapter focuses on basic analysis of variance with the main focus on PROC GLM. Random effects and PROC MIXED are introduced in Chapter 4.

3.3.1 Terminology and Notation

Analysis of variance partitions the variation among observations into portions associated with certain factors that are defined by the classification scheme of the data. These factors are called sources of variation. For example, variation in prices of houses can be partitioned into portions associated with region differences, house-type differences, and other differences. Partitioning is done in terms of sums of squares (SS) with a corresponding partitioning of the associated degrees of freedom (DF). For three sources of variation (A, B, C),

TOTAL SS = SS(A) + SS(B) + SS(C) + RESIDUAL SS

The term TOTAL SS is normally the sum of the squared deviations of the data values from the overall mean, where yi represents the observed response for the ith observation.

The formula for computing SS(A), SS(B), and SS(C) depends on the situation. Typically, these terms are sums of squared differences between means. The term RESIDUAL SS is simply what is left after subtracting SS(A), SS(B), and SS(C) from TOTAL SS.

Degrees of freedom are numbers associated with sums of squares. They represent the number of independent differences used to compute the sum of squares. For example, is a sum of squares based upon the differences between each of the n observations and the mean, that is, ,,...,. There are only n-1 linearly independent differences, because any one of these differences is equal to the negative of the sum of the others. For example, consider the following:

Total degrees of freedom are partitioned into degrees of freedom associated with each source of variation and the residual:

TOTAL DF = DF(A) + DF(B) + DF(C) + RESIDUAL DF

Mean squares (MS) are computed by dividing each SS by its corresponding DF. Ratios of mean squares, called F-ratios, are then used to compare the amount of variability associated with each source of variation. Tests of hypotheses about group means can be based on F-ratios. The computations are usually displayed in the familiar tabular form shown below:

| Source of Variation | DF | SS | MS | F | p-value |

|---|---|---|---|---|---|

| A | DF(A) | SS(A) | MS(A) | F(A) | p for A |

| B | DF(B) | SS(B) | MS(B) | F(B) | p for B |

| C | DF(C) | SS(C) | MS(C) | F(C) | p for C |

| Residual | Residual DF | SS(Residual) | Residual MS | ||

| Total | Total DF | SS(Total) |

Sources of variation in analysis of variance typically measure treatment factor effects. Three kinds of effects are considered in this chapter: main effects, interaction effects, and nested effects. Each is discussed in terms of its SS computation. Effects can be either fixed or random, a distinction that is developed in Chapter 4, “Analyzing Data with Random Effects.” All examples in this chapter assume fixed effects.

A main effect sum of squares for a factor A, often called the sum of squares for treatment A, is given by

(3.1)

or alternatively by

(3.2)

where

| ni | equals the number of observations in level i of factor A. |

| yi | equals the total of observations in level i of factor A. |

| ȳi. | equals the mean of observations in level i of factor A. |

| n. | equals the total number of observations () |

| y. | equals the total of all observations () |

| ȳ | equals the mean of all observations (y./n..). |

As equation (3.1) implies, the SS for a main effect measures variability among the means corresponding to the levels of the factor. If A has a levels, then SS(A) has (a – 1) degrees of freedom.

For data with a single factor, the main effect and treatment SS are one and the same. For data with two or more factors, treatment variation must be partitioned into additional components. The structure of these multiple factors determines what SS besides main effects are appropriate. The two basic structures are crossed and nested classifications. In a crossed classification, every level of each factor occurs with each level of the other factors. In a nested classification, each level of one factor occurs with different levels of the other factor. See also Figures 4.1 and 4.2 in Chapter 4 for an illustration.

3.3.1.1 Crossed Classification and Interaction Sum of Squares

In crossed classifications, you partition the SS for treatments into main effect and interaction components. To understand an interaction, you must first understand simple effects. It is easiest to start with a two-factor crossed classification. Denote ȳij the mean of the observations on the ijth factor combination, that is, the treatment receiving level i of factor A and level j of factor B. The ijth factor combination is also defined as the ijth cell. A simple effect is defined as

A | Bj = y̅ij – y̅i'j, for differences between two levels of i≠i’ of factor A at level j of factor B

or alternatively

B | Ai = y̅ij – y̅ij, for differences between two levels of j≠j’ of factor B at level i of factor A

If the simple effects “A | Bj” are not the same for all levels of factor B, or, equivalently, if the “B | Ai” are not the same for all levels of factor A, then an interaction is said to occur. If all simple effects are equal, there is no interaction. An interaction effect is thus defined by y̅ij − y̅i'j − y̅ij' + y̅i'j'. If it is equal to zero, there is no interaction; otherwise, there is an “A by B” interaction.

It follows that you calculate the sum of squares for the interaction between the factors A and B with the equation

SS(A*B) = (3.3)

or alternatively

SS(A*B) = (3.4)

where

| n | equals the number of observations on the ijth cell. |

| a and b | are the number of levels of A and B, respectively. |

| yij | equals the total of all observations in the ijth cell. |

| yi. | is equal to the total of all observations on the ith level of A. |

| y.j | is equal to the total of all observations on the jth level of B. |

| y.. | is equal to the grand total of all observations. |

The sum of squares for A*B has

(a – 1)(b – 1) = ab – a – b + 1

degrees of freedom.

3.3.1.2 Nested Effects and Nested Sum of Squares

For nested classification, suppose factor B is nested within factor A. That is, a different set of levels of B appears with each level of factor A. For this classification, you partition the treatment sum of squares into the main effect, SS(A) and the SS for nested effect, written B(A). The formula for the sum of squares of B(A) is

SS[B(A)] = (3.5)

or alternatively

SS[B(A)] = (3.6)

where

| nij | equals the number of observations on level j of B and level i of A. |

| yij | equals the total of observations for level j of B and level i of A. |

| y̅ij | equals the mean of observations for level j of B and level i of A. |

| ni. | is equal to |

| ni. | is equal to |

| y̅i. | is equal to yi/ni.. |

Looking at equation (3.5) as

SS(B(A)) = (3.7)

you see that SS(B(A)) measures the variation among the levels of B within each level of A and then pools, or adds, across the levels of A. If there are bi levels of B in level i of A, then there are (bi – 1) DF for B in level i of A, for a total of DF for the B(A) effect.

3.3.2 Using the ANOVA and GLM Procedures

Because of its generality and versatility, PROC GLM is the preferred SAS procedure for analysis of variance, provided all model effects are fixed effects. For one-way and balanced multiway classifications, PROC ANOVA produces the same results as the GLM procedure. The term balanced means that each cell of the multiway classification has the same number of observations.

This chapter begins with a one-way analysis of variance example. Because the computations used by PROC ANOVA are easier to understand without developing matrix algebra concepts used by PROC GLM, the first example begins using PROC ANOVA. Subsequent computations and all remaining examples use PROC GLM, because GLM is the procedure data analysts ordinarily use in practice. These examples are for basic experimental designs (completely random, randomized blocks, Latin square) and factorial treatment designs.

Generally, PROC ANOVA computes the sum of squares for a factor A in the classification according to equation (3.2). Nested effects are computed according to equation (3.6). A two-factor interaction sum of squares computed by PROC ANOVA follows equation (3.4), which can be written more generally as

SS(A*B) = (3.8)

where nij is the number of observations and yij is the observed totalfor the ijth A?B treatment combination. If nij has the same value for all ij, then equation (3.8) is the same as equation (3.4). Equation (3.4) is not correct unless all the nij are equal to the same value, and this formula could even produce a negative value because it would not actually be a sum of squares. If a negative value is obtained, PROC ANOVA prints a value of 0 in its place. Sums of squares for higher-order interactions follow a similar formula.

The ANOVA and GLM procedures share much of the same syntax. The GLM procedure has additional features described later in this section. The shared basic syntax is as follows:

PROC ANOVA (or GLM) options;

CLASS variables;

MODEL dependents=effects / options;

MEANS effects / options;

ABSORB variables;

FREQ variable;

TEST H=effects E=effect;

MANOVA H=effects E=effect M=equations / options;

REPEATED factor-name levels / options;

BY variables;

The CLASS and MODEL statements are required to produce the ANOVA table. The other statements are optional. The ANOVA output includes the F-tests of all effects in the MODEL statement. All of these F-tests use residual mean squares as the error term. PROC GLM produces four types of sums of squares. In the examples considered in this chapter, the different types of sums of squares are all the same, and are identical to those computed by PROC ANOVA. Distinctions among the types of SS occur with unbalanced data, and are discussed in detail in Chapters 5 and 6.

The MEANS statement produces tables of the means corresponding to the list of effects. Several multiple comparison procedures are available as options in the MEANS statement. Section 3.3.3, “Multiple Comparisons and Preplanned Comparisons,” and Section 3.4.2, “Computing Means, Multiple Comparisons of Means, and Confidence Intervals,” illustrate these procedures.

The TEST statement is used for tests where the residual mean square is not the appropriate error term, such as certain effects in mixed models and main-plot effects in split-plot experiments (see Chapter 4). You can use multiple MEANS and TEST statements, but only one MODEL statement. The ABSORB statement implements the technique of absorption, which saves time and reduces storage requirements for certain types of models. This is illustrated in Chapter 11, “Examples of Special Applications.”

The MANOVA statement is used for multivariate analysis of variance (see Chapter 9, “Multivariate Linear Models”). The REPEATED statement can be useful for analyzing repeated-measures designs (see Chapter 8, “Repeated-Measures Analysis”), although the more sophisticated repeated-measures analysis available with PROC MIXED is preferable in most situations. The BY statement specifies that separate analyses are performed on observations in groups defined by the BY variables. Use the FREQ statement when you want each observation in a data set to represent n observations, where n is the value of the FREQ variable.

Most of the analysis-of-variance options in PROC GLM use the same syntax as PROC ANOVA. The same analysis-of-variance program in PROC ANOVA will work for GLM with little modification. GLM has additional statements—CONTRAST, ESTIMATE, and LSMEANS. The CONTRAST and ESTIMATE statements allow you to test or estimate certain functions of means not defined by other multiple comparison procedures. These are introduced in Section 3.4, “Analysis of One-Way Classification of Data.” The LSMEANS statement allows you to compute means that are adjusted for the effects of unbalanced data, an extremely important consideration for unbalanced data, which is discussed in Chapter 5. LSMEANS has additional features useful for factorial experiments (see Section 3.7, “Two-Way Factorial Experiment”) and analysis of covariance (see Chapter 7).

For more information about PROC ANOVA and PROC GLM, see their respective chapters in the SAS/STAT User’s Guide in SAS OnlineDoc, Version 8.

As an introductory example, consider the BULLET data from Section 3.2.3. You can compute the one-way analysis of variance with PROC ANOVA using the following statements:

proc anova;

class powder;

model velocity=powder;

The data appear in Output 3.6.

Output 3.6 Analysis-of-Variance Table for BULLET Two-Sample Data

| The ANOVA Procedure | |||||

| Dependent Variable: velocity | |||||

| Source | DF | Sum of Squares |

Mean Square | F Value | Pr > F |

| Model | 1 | 0.79336111 | 0.79336111 | 6.60 | 0.0206 |

| Error | 16 | 1.92275000 | 0.12017188 | ||

| Corrected Total | 17 | 2.71611111 | |||

| R-Square | Coeff Var | Root MSE | velocity Mean |

| 0.292094 | 1.243741 | 0.346658 | 27.87222 |

| Source | DF | Anova SS | Mean Square | F Value | Pr > F |

| powder | 1 | 0.79336111 | 0.79336111 | 6.60 | 0.0206 |

The output gives the sum of squares and mean square for the treatment factor, POWDER, and for residual, called ERROR in the output. Note that the MODEL and POWDER sum of squares are identical. Treatment and MODEL statistics are always equal for one-way analysis of variance, but not for the more complicated analysis-of-variance models discussed starting with Section 3.6, “Latin Square Design with Two Response Variables.” The F-value, 6.60, is the square of the two-sample t-value assuming equal variances shown previously in Output 3.5. The p-value for the two-sample t-test and the ANOVA F-test shown above are identical. This equivalence of the two-sample test and one-way ANOVA holds whenever there are two treatments and the samples are independent. However, ANOVA allows you to compare more than two treatments.

Alternatively, you can use PROC GLM to compute the analysis of variance. You can also use the ESTIMATE statement in GLM to compute the estimate and standard error of the difference between the means of the two POWDER levels. The statements and results are not shown here, but you can obtain them by following the examples in Section 3.4, “Analysis of One-Way Classification of Data.” The estimate and standard error of the difference for the BULLET data are identical to those given in Output 3.5.

3.3.3 Multiple Comparisons and Preplanned Comparisons

The F-test for a factor in an analysis of variance tests the null hypothesis that all the factor means are equal. However, the conclusion of such a test is seldom a satisfactory end to the analysis. You usually want to know more about the differences among the means (for example, which means are different from which other means or if any groups of means have common values).

Multiple comparisons of the means are commonly used to answer these questions. There are numerous methods for making multiple comparisons, most of which are available in PROC ANOVA and PROC GLM. In this chapter, only a few of the methods are illustrated.

One method of multiple comparisons is to conduct a series of t-tests between pairs of means; this is essentially the method known as least significant difference (LSD). Refer to Steel and Torrie (1980) for examples.

Another method of multiple comparisons is Duncan’s multiple-range test. With this test, the means are first ranked from largest to smallest. Then the equality of two means is tested by referring the difference to tabled critical points, the values of which depend on the range of the ranks of the two means tested. The larger the range of the ranks, the larger the tabled critical point (Duncan 1955).

The LSD method and, to a lesser extent, Duncan’s method, are frequently criticized for inflating the Type I error rate. In other words, the overall probability of falsely declaring some pair of means different, when in fact they are equal, is substantially larger than the stated ?-level. This overall probability of a Type I error is called the experimentwise error rate. The probability of a Type I error for one particular comparison is called the comparisonwise error rate. Other methods are available to control the experimentwise error rate, including Tukey’s method.

You can request the various multiple comparison tests with options in the MEANS statement in the ANOVA and GLM procedures.

Multiple comparison procedures, as described in the previous paragraphs, are useful when there are no particular comparisons of special interest. But in most situations there is something about the factor that suggests specific comparisons. These are called preplanned comparisons because you can decide to make these comparisons prior to collecting data. Specific hypotheses for preplanned comparisons can be tested by using the CONTRAST, ESTIMATE, or LSMEANS statement in PROC GLM, as discussed in Section 3.4.3, “Planned Comparisons for One-Way Classification: The CONTRAST Statement.”

3.4 The Analysis of One-Way Classification of Data

One-way classification refers to data that are grouped according to some criterion, such as the values of a classification variable. The gunpowder data presented in Section 3.2.3, “Two Independent Samples,” and in Section 3.3.2, “Using the ANOVA and GLM Procedures,” are an example of a one-way classification. The values of VELOCITY are classified according to POWDER. In this case, there are two levels of the classification variable—1 and 2. Other examples of one-way classifications might have more than two levels of the classification variable. Populations of U.S. cities could be classified according to the state containing the city, giving a one-way classification with 50 levels (the number of states) of the classification variable. One-way classifications of data can result from sample surveys. For example, wages determined in a survey of migrant farm workers could be classified according to the type of work performed. One-way classifications also result from a completely randomized designed experiment. For example, strengths of monofilament fiber can be classified according to the amount of an experimental chemical used in the manufacturing process, or sales of a new facial soap in a marketing test could be classified according to the color of the soap. The type of statistical analysis that is appropriate for a given one-way classification of data depends on the goals of the investigation that produced the data. However, you can use analysis of variance as a tool for many applications.

The levels of a classification variable are considered to correspond to different populations from which the data were obtained. Let k stand for the number of levels of the classification criterion, so there are data from k populations. Denote the population means as µ1, . . . , µk. Assume that all the populations have the same variance, and that all the populations are normally distributed. Also, consider now those situations for which there are the same number of observations from each population (denoted n). Denote the jth observation in the ith group of data by yij. You can summarize this setup as follows:

y11,…,y1,n is a sample from N(μ1, σ2)

y21,…,y2,n is a sample from N(μ2, σ2)

.

.

.

yk1,…,yk,n is a sample from N(μk, σ2)

N(μi, σ2) refers to a normally distributed population with mean μi and variance σ2. Sometimes it is useful to express the data in terms of linear models. One way of doing this is to write

yij = μi + eij

where μi is the mean of the ith population and eij is the departure of the observed value yij from population mean. This is called a means model. Another model is called an effects model, and is denoted by the equation

y# = μ + τi + eij

The effects model simply expresses the ith population mean as the sum of two components, μi = μ+τi. In both models eij is called the error and is normally distributed with mean 0 and variance σ2. Moreover, both of these models are regression models, as you will see in Chapter 6. Therefore, results from regression analysis can be used for these models, as discussed in subsequent sections.

Notice that the models for one-way analysis of variance assume that the observations within each classification level are normally distributed and that the variances among the observations for each level are equal. The latter assumption was addressed in Section 3.2.3, “Two Independent Samples.” The analysis-of-variance procedure is robust, meaning that only severe failures of these assumptions compromise the results. Nonetheless, these assumptions should be checked. You can obtain simple but useful visual tools by sorting the data by classification level and running PROC UNIVARIATE. For example, for the BULLET data, use the following SAS statements:

proc sort; by powder;

proc univariate normal plot; by powder;

var velocity;

run;

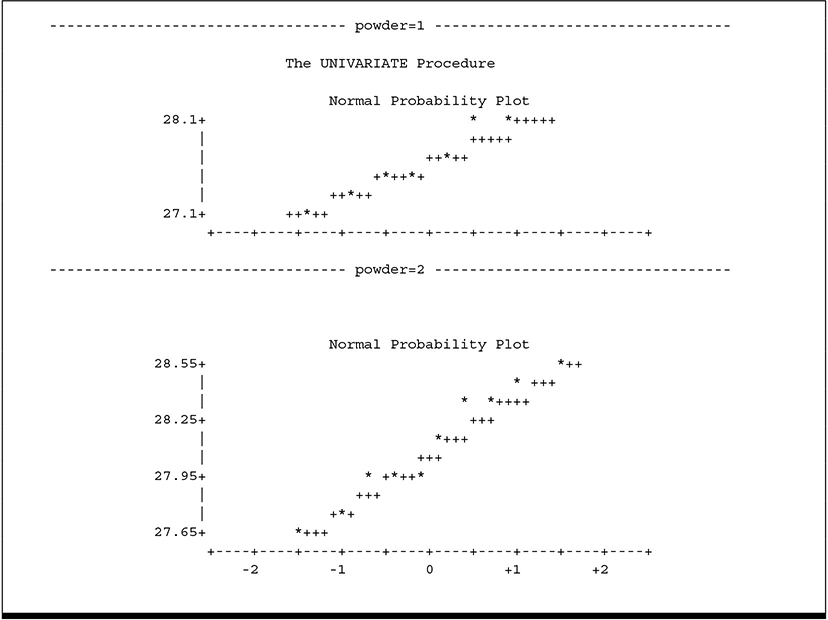

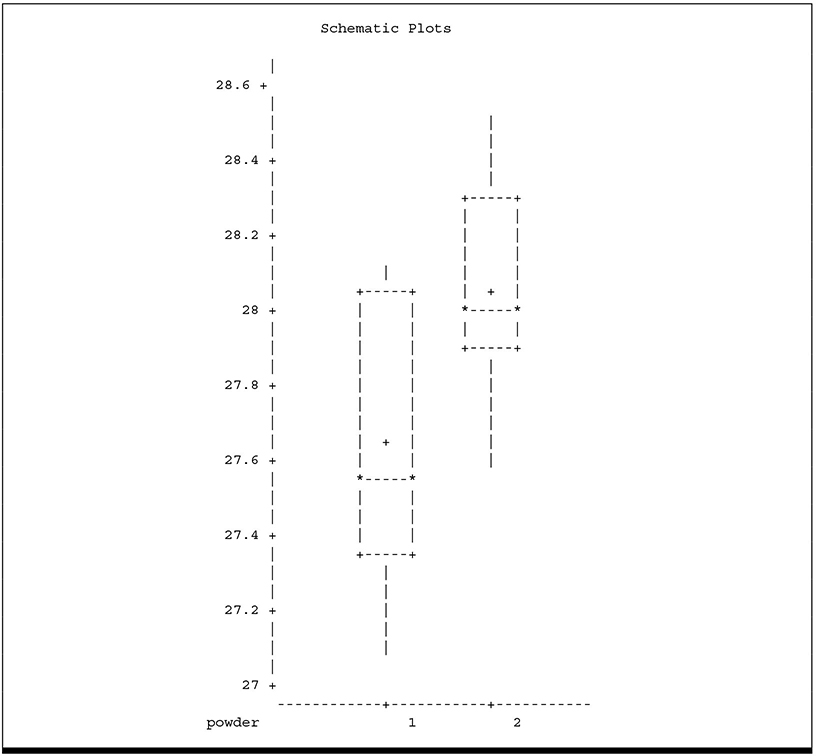

Output 3.7 shows results selected for relevance.

1. Normal Probability Plots

Output 3.7 PROC UNIVARIATE Output for BULLET Data to Check ANOVA Assumptions

2. Side-by-Side Box-and-Whisker Plots

These plots allow you to look for strong visual evidence of failure of assumptions. You can check for non-normality using the normal probability plots. Some departure from normality is common and has no meaningful effect on ANOVA results. In fact, many statisticians argue that true normal distributions are rare in nature, if they exist at all. Highly skewed distributions, however, can seriously affect ANOVA results; strongly asymmetric box-and-whisker plots give you a useful visual cue to detect such situations. The side-by-side box-and-whisker plot also allows you to detect heterogeneous variances. Note that neither plot suggests failure of assumptions. The box-and-whisker plot does suggest that the typical response to POWDER 1 is less than the response to POWDER 2.

The UNIVARIATE output contains many other statistics, such as the variance, skewness, and kurtosis by treatment and formal tests of normality. These can be useful, for example, for testing equal variance. It is beyond the scope of this text to discuss model diagnostics in great detail. You can find such discussions in most introductory statistical methods texts. The MEANS statement of PROC GLM has an option, HOVTEST, that computes statistics to test homogeneity of variance. The GLM procedure and the MEANS statement are discussed in more detail in the remaining sections of this chapter. An example of the HOVTEST output appears in Output 3.9. In any event, you should be aware that many statisticians consider formal tests of assumptions to be of limited usefulness because the number of observations per treatment is often quite small. In most cases, strong visual evidence is the best indicator of trouble.

When analysis-of-variance assumptions fail, a common strategy involves transforming the data and computing the analysis of variance on the transformed data. Section 4.2, “Nested Classifications,” contains an example using this approach. Often, assumptions fail because the distribution of the data is known to be something other than normal. Generalized linear models are essentially regression and ANOVA models for data whose distribution is known but not necessarily normal. In such cases, you can use methods illustrated in Chapter 10, “Generalized Linear Models.”

Section 3.4.1 presents an example of analysis of variance for a one-way classification.

3.4.1 Computing the ANOVA Table

Four specimens of each of five brands (BRAND) of a synthetic wood veneer material are subjected to a friction test. A measure of wear is determined for each specimen. All tests are made on the same machine in completely random order. Data are stored in a SAS data set named VENEER.

data veneer;

input brand $ wear;

cards;

ACME 2.3

ACME 2.1

ACME 2.4

ACME 2.5

CHAMP 2.2

CHAMP 2.3

CHAMP 2.4

CHAMP 2.6

AJAX 2.2

AJAX 2.0

AJAX 1.9

AJAX 2.1

TUFFY 2.4

TUFFY 2.7

TUFFY 2.6

TUFFY 2.7

XTRA 2.3

XTRA 2.5

XTRA 2.3

XTRA 2.4

;

proc print data=veneer;

run;

Output 3.8 shows the data.

Output 3.8 Data for One-Way Classification

| Obs | brand | wear |

| 1 | ACME | 2.3 |

| 2 | ACME | 2.1 |

| 3 | ACME | 2.4 |

| 4 | ACME | 2.5 |

| 5 | CHAMP | 2.2 |

| 6 | CHAMP | 2.3 |

| 7 | CHAMP | 2.4 |

| 8 | CHAMP | 2.6 |

| 9 | AJAX | 2.2 |

| 10 | AJAX | 2.0 |

| 11 | AJAX | 1.9 |

| 12 | AJAX | 2.1 |

| 13 | TUFFY | 2.4 |

| 14 | TUFFY | 2.7 |

| 15 | TUFFY | 2.6 |

| 16 | TUFFY | 2.7 |

| 17 | XTRA | 2.3 |

| 18 | XTRA | 2.5 |

| 19 | XTRA | 2.3 |

| 20 | XTRA | 2.4 |

An appropriate analysis of variance has the basic form:

| Source of Variation | DF | |

|---|---|---|

| BRAND | 4 |

|

| Error | 15 |

|

| Total | 19 |

The following SAS statements produce the analysis of variance:

proc glm data=veneer;

class brand;

model wear=brand;

means brand/hovtest;

run;

Since the data are classified only according to the values of BRAND, this is the only variable in the CLASS statement. The variable WEAR is the response variable to be analyzed, so WEAR appears on the left side of the equal sign in the MODEL statement. The only source of variation (effect in the ANOVA table) other than ERROR (residual) and TOTAL is variation due to brands; therefore, BRAND appears on the right side of the equal sign in the MODEL statement. The MEANS statement causes the treatment means to be computed. The HOVTEST option computes statistics to test the homogeneity of variance assumption. The treatment means are not shown here; they are considered in more detail later. Output from the MODEL and HOVTEST statements appear in Output 3.9.

Output 3.9 Analysis of Variance for One-Way Classification with a Homogeneity-of-Variance Test

| The GLM Procedure | |||||

| Dependent Variable: wear | |||||

| Sum of | |||||

| Source |

DF | Squares | Mean Square | F Value | Pr > F |

| Model |

4 | 0.61700000 | 0.15425000 | 7.40 | 0.0017 |

| Error |

15 | 0.31250000 | 0.02083333 | ||

| Corrected Total |

19 | 0.92950000 | |||

| R-Square | Coeff Var | Root MSE | wear Mean |

| 0.663798 | 6.155120 | 0.144338 | 2.345000 |

| Source | DF | Type I SS | Mean Square | F Value | Pr > F |

| brand | 4 | 0.61700000 | 0.15425000 | 7.40 | 0.0017 |

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |

| brand | 4 | 0.61700000 | 0.15425000 | 7.40 | 0.0017 |

| Levene's Test for Homogeneity of wear Variance ANOVA of Squared Deviations from Group Means | |||||

| Sum of | Mean | ||||

| Source | DF | Squares | Square | F Value | Pr > F |

| brand | 4 | 0.000659 | 0.000165 | 0.53 | 0.7149 |

| Error | 15 | 0.00466 | 0.000310 | ||

The results in Output 3.9 are summarized in the following ANOVA table:

| Source | DF | SS | MS | F | P |

|---|---|---|---|---|---|

| BRAND | 4 | 0.6170 | 0.1542 | 7.40 | 0.0017 |

| ERROR | 15 | 0.3125 | 0.0208 | ||

| TOTAL | 19 | 0.9295 |

Notice that you get the same computations from PROC GLM as from PROC ANOVA for the analysis of variance, although they are labeled somewhat differently. For one thing, in addition to the MODEL sum of squares, PROC GLM computes two sets of sums of squares for BRAND—Type I and Type III sums of squares—rather than the single sum of squares computed by the ANOVA procedure. For the one-way classification, as well as for balanced multiway classifications, the GLM-Type I, GLM-Type III, and PROC ANOVA sums of squares are identical. For unbalanced multiway data and for multiple regression models, the Type I and Type III SS are different. Chapter 5 discusses these differences. For the rest of this chapter, only the Type III SS will be shown in example GLM output.

The HOVTEST output appears as “Levene’s Test for Homogeneity of WEAR Variance.” The F-value, 0.53, tests the null hypothesis that the variances among observations within each treatment are equal. There is clearly no evidence to suggest failure of this assumption for these data.

3.4.2 Computing Means, Multiple Comparisons of Means, and Confidence Intervals

You can easily obtain means and multiple comparisons of means by using a MEANS statement after the MODEL statement. For the VENEER data, you get BRAND means and LSD comparisons of the BRAND means with the statement

means brand/lsd;

Results appear in Output 3.10.

Output 3.10 Least Significant Difference Comparisons of BRAND Means

| t Tests (LSD) for wear | ||

| NOTE: This test controls the Type I comparisonwise error rate, not the experimentwise error rate. | ||

| Alpha | .05 |

| Error Degrees of Freedom | 15 |

| Error Mean Square | .020833 |

| Critical Value of t | .13145 |

| Least Significant Difference | .2175 |

| Means with the same letter are not significantly different. | ||

| t Grouping | Mean | N | brand |

| A | 2.6000 | 4 | TUFFY |

| B | 2.3750 | 4 | XTRA |

| B | |||

| B | 2.3750 | 4 | CHAMP |

| B | |||

| B | 2.3250 | 4 | ACME |

| C | 2.0500 | 4 | AJAX |

Means and the number of observations (N) are produced for each BRAND. Because LSD is specified as an option, the means appear in descending order of magnitude. Under the heading “T Grouping” are sequences of A’s, B’s, and C’s. Means are joined by the same letter if they are not significantly different, according to the t-test or equivalently if their difference is less than LSD. The BRAND means for XTRA, CHAMP, and ACME are not significantly different and are joined by a sequence of B’s. The means for AJAX and TUFFY are found to be significantly different from all other means so they are labeled with a single C and A, respectively, and no other means are labeled with A’s or C’s.

You can obtain confidence intervals about means instead of comparisons of the means if you specify the CLM option:

means brand/lsd clm;

Results in Output 3.11 are self-explanatory.

Output 3.11 Confidence Intervals for BRAND Means

| t Confidence Intervals for wear | ||

| Alpha | .05 |

| Error Degrees of Freedom | 15 |

| Error Mean Square | 0.020833 |

| Critical Value of t | 2.13145 |

| Half Width of Confidence Interval | 0.153824 |

| brand | N | Mean | 95% Confidence Limits | |

| TUFFY | 4 | 2.60000 | 2.44618 | 2.75382 |

| XTRA | 4 | 2.37500 | 2.22118 | 2.52882 |

| CHAMP | 4 | 2.37500 | 2.22118 | 2.52882 |

| ACME | 4 | 2.32500 | 2.17118 | 2.47882 |

| AJAX | 4 | 2.05000 | 1.89618 | 2.20382 |

You can also obtain confidence limits for differences between means, as discussed in Section 3.5.2., “Additional Multiple Comparison Methods.”

3.4.3 Planned Comparisons for One-Way Classification: The CONTRAST Statement

Multiple comparison procedures, as demonstrated in the previous section, are useful when there are no particular comparisons of special interest and you want to make all comparisons among the means. But in most situations there is something about the classification criterion that suggests specific comparisons. For example, suppose you know something about the companies that manufacture the five brands of synthetic wood veneer material. You know that ACME and AJAX are produced by a U.S. company named A-Line, that CHAMP is produced by a U.S. company named C-Line, and that TUFFY and XTRA are produced by a non-U.S. companies.

Then you would probably be interested in comparing certain groups of means with other groups of means. For example, you would want to compare the means for the U.S. companies with the means for the non-U.S. companies; you would want to compare the means for the two U.S. companies with each other; you would want to compare the two A-Line means; and you would want to compare the means for the two non-U.S. brands. These would be called planned comparisons, because they are suggested by the structure of the classification criterion (BRAND) rather than the data. You know what comparisons you want to make before you look at the data. When this is the case, you ordinarily obtain a more relevant analysis of the data by making the planned comparisons rather than using a multiple comparison technique, because the planned comparisons are focused on the objectives of the study.

You use contrasts to make planned comparisons. In SAS, PROC ANOVA does not have a CONTRAST statement, but the GLM procedure does, so you must use PROC GLM to compute contrasts. You use CONTRAST as an optional statement the same way you use a MEANS statement.

To define contrasts and get them into a form you can use in the GLM procedure, you should first express the comparisons as null hypotheses concerning linear combinations of means to be tested. For the comparisons indicated above, you would have the following null hypotheses:

❏ U.S. versus non-U.S.

H0: 1/3(μACME + μAJAX + μCHAMP) = 1/2(μTUFFY + μXTRA)

❏ A-Line versus C-Line

H0: 1/2(μACME + μAJAX) = μCHAMP

❏ ACME versus AJAX

H0: μACME = μAJAX

❏ TUFFY versus XTRA

H0: μTUFFY = μXTRA

The basic form of the CONTRAST statement is

CONTRAST ‘label’ effect-name effect-coefficients;

where label is a character string used for labeling output, effect-name is a term on the right-hand side of the MODEL statement, and effect-coefficients is a list of numbers that specifies the linear combination of parameters in the null hypothesis. The ordering of the numbers follows the alphameric ordering (numbers first, in ascending order, then alphabetical order) of the levels of the classification variable, unless specified otherwise with an ORDER= option in the PROC GLM statement.

Starting with one of the simpler comparisons, ACME versus AJAX, you want to test H0:μACME=μAJAX. This hypothesis must be expressed as a linear combination of the means equal to 0, that is, H0: μACME – μAJAX=0. In terms of all the means, the null hypothesis is

H0: 1 * μACME – 1 * μAJAX + 0 * μCHAMP + 0 * μTUFFY + 0 * μXTRA= 0 .

Notice that the BRAND means are listed in alphabetical order. All you have to do is insert the coefficients on the BRAND means in the list of effect coefficients in the CONTRAST statement. The coefficients for the levels of BRAND follow the alphabetical ordering.

proc glm; class brand;

model wear = brand;

contrast 'ACME vs AJAX' brand 1 -1 0 0 0;

Results appear in Output 3.12.

Output 3.12 Analysis of Variance and Contrast with PROC GLM

| Contrast | DF | Contrast SS | Mean Square | F Value | Pr > F |

| ACME vs AJAX | 1 | 0.15125000 | 0.15125000 | 7.26 | 0.0166 |

Output from the CONTRAST statement, labeled ACME vs AJAX, shows a sum of squares for the contrast, and an F-value for testing H0: μACME=μAJAX. The p-value tells you the means are significantly different at the 0.0166 level.

Actually, you don’t have to include the trailing zeros in the CONTRAST statement. You can simply use

contrast 'ACME vs AJAX' brand 1 -1;

By default, if you omit the trailing coefficients they are assumed to be zeros.

Following the same procedure, to test H0: μTUFFY=μXTRA, use the statement

contrast 'TUFFY vs XTRA' brand 0 0 0 1 -1;

The contrast U.S. versus non-U.S. is a little more complicated because it involves fractions. You can use the statement

contrast 'US vs NON-U.S.' brand .33333 .33333 .33333 -.5 -.5;

Although the continued fraction for 1/3 is easily written, it is tedious. Other fractions, such as 1/7, are even more difficult to write in decimal form. It is usually easier to multiply all coefficients by the least common denominator to get rid of the fractions. This is legitimate because the hypothesis you are testing with a CONTRAST statement is that a linear combination is equal to 0, and multiplication by a constant does not change whether the hypothesis is true or false. (Something is equal to 0 if and only if a constant times the something is equal to 0.) In the case of U.S. versus non-U.S., the assertion is that

H0: 1/3(μACME + μAJAX + μCHAMP) = 1/2(μTUFFY + μXTRA)

is equivalent to

H0: 2(μACME + μAJAX + μCHAMP) – 3(μTUFFY + μXTRA) = 0

This tells you the appropriate CONTRAST statement is

contrast 'US vs NON-U.S.' brand 2 2 2 -3 -3;

The GLM procedure enables you to run as many CONTRAST statements as you want, but good statistical practice ordinarily indicates that this number should not exceed the number of degrees of freedom for the effect (in this case 4). Moreover, you should be aware of the inflation of the overall (experimentwise) Type I error rate when you run several CONTRAST statements.

To see how CONTRAST statements for all four comparisons are used, run the following program:

proc glm; class brand;

model wear = brand;

contrast 'US vs NON-U.S.' brand 2 2 2 -3 -3;

contrast 'A-L vs C-L' brand 1 1 -2 0 0;

contrast 'ACME vs AJAX' brand 1 -1 0 0 0;

contrast 'TUFFY vs XTRA' brand 0 0 0 1 -1;

run;

Output 3.13 Contrasts among BRAND Means

| Contrast | DF | Contrast SS | Mean Square | F Value | Pr > F |

| US vs NON-U.S. | 1 | 0.27075000 | 0.27075000 | 13.00 | 0.0026 |

| A-L vs C-L | 1 | 0.09375000 | 0.09375000 | 4.50 | 0.0510 |

| ACME vs AJAX | 1 | 0.15125000 | 0.15125000 | 7.26 | 0.0166 |

| TUFFY vs XTRA | 1 | 0.10125000 | 0.10125000 | 4.86 | 0.0435 |

Results in Output 3.13 indicate statistical significance for each of the contrasts. Notice that the p-value for ACME vs AJAX is the same in the presence of other CONTRAST statements as it was when run as a single contrast in Output 3.12. Computations for one CONTRAST statement are unaffected by the presence of other CONTRAST statements. The contrasts in Output 3.13 have a special property called orthogonality, which is discussed in Section 3.4.6, “Orthogonal Contrasts.”

3.4.4 Linear Combinations of Model Parameters

Thus far, the coefficients in a CONTRAST statement have been discussed as coefficients in a linear combination of means. In fact, these are coefficients on the effect parameters in the MODEL statement. It is easier to think in terms of means, but PROC GLM works in terms of model parameters. Therefore, you must be able to translate between the two sets of parameters.

Models are discussed in more depth in Chapter 4. For now, all you need to understand is the relationship between coefficients on a linear combination of means and the corresponding coefficients on linear combinations of model effect parameters. For the linear combinations representing comparisons of means (that is, with coefficients summing to 0), this relationship is very simple for the one-way classification. The coefficient of an effect parameter in a linear combination of effect parameters is equal to the coefficient on the corresponding mean in the linear combination of means. This is because of the fundamental relationship between means and effect parameters, that is, μi = μ + τi. For example, take the contrast A-Line versus C-Line. The linear combination in terms of means is

2μCHAMP – μACME– μAJAX

= 2(μ + τCHAMP) – (μ + τACME) – (μ + τAJAX)

= 2τCHAMP – τACME– τAJAX

You see that the coefficient on τCHAMP is the same as the coefficient on μCHAMP; the coefficient on τACME is equal to the coefficient on μACME, and so on. Moreover, the parameter disappears when you convert from means to effect parameters, because the coefficients on the means sum to 0.

It follows that, for comparisons in the one-way classification, you may derive coefficients in terms of means and simply insert them as coefficients on model effect parameters in a CONTRAST statement. For more complicated applications, such as two-way classifications, the task is not so straightforward. You’ll see this in Section 3.7, “A Two-Way Factorial Experiment,” and subsequent sections in this chapter.

3.4.5 Testing Several Contrasts Simultaneously

Sometimes you need to test several contrasts simultaneously. For example, you might want to test for differences among the three means for U.S. BRANDs. The null hypothesis is

H0: μACME = μAJAX = μCHAMP

This hypothesis equation actually embodies two equations that can be expressed in several ways. One way to express the hypothesis in terms of two equations is

H0: μACME = μAJAX and H0: μACME = μCHAMP

Why are the two hypotheses equivalent? Because the three means are all equal if and only if the first is equal to the second and the first is equal to the third.

You can test this hypothesis by writing a CONTRAST statement that expresses sets of coefficients for the two equations, separated by a comma. An appropriate CONTRAST statement is

contrast 'US BRANDS' brand 1 -1 0 0 0, brand 1 0 -1 0 0;

Results appear in Output 3.14.

Output 3.14 Simultaneous Contrasts among U.S. BRAND Means

| Contrast | DF | Contrast SS | Mean Square | F Value | Pr > F |

| US BRANDS | 2 | 0.24500000 | 0.12250000 | 5.88 | 0.0130 |

Notice that the sum of squares for the contrast has 2 degrees of freedom. This is because you are testing two equations simultaneously. The F-statistic of 5.88 and associated p-value tell you the means are different at the 0.0130 level of significance.

Another way to express the hypothesis in terms of two equations is

H0: μACME = μAJAX and H0: 2 μCHAMP = μACME + μAJAX

A contrast for this version of the hypothesis is

contrast 'US BRANDS' brand 1 -1 0 0 0,

brand 1 1 -2 0 0;

Results from this CONTRAST statement, not included here, are identical to Output 3.10.

3.4.6 Orthogonal Contrasts

Notice that the sum of squares, 0.245, in Output 3.14 is equal to the sum of the sums of squares for the two contrasts ACME vs AJAX (0.15125) and A-L vs C-L (0.09375) in Output 3.13. That occurs because the two sets of coefficients in this CONTRAST statement are orthogonal. Arithmetically, this means the sum of products of coefficients for the respective means is 0—that is, (1×1) + [1×(–1)] + (0×2) = 0. Moreover, all four of the contrasts in Output 3.13 form an orthogonal set. You can verify this by multiplying pairs of coefficients and adding the products. Therefore, the sum of the four contrast sums of squares in Output 3.9 is equal to the overall BRAND SS (0.617) in Output 3.9.

Statistically, orthogonal means that the sums of squares for the two contrasts are independent. The outcome of one of them in no way influences the outcome of any other. Sets of orthogonal comparisons are commonly considered desirable, because the result of any one of them tells you (essentially) nothing about what to expect from any other comparison. However, desirable as it is to have independent tests, it is more important to construct sets of contrasts to address the objectives of the investigation. Practically meaningful contrasts are more desirable than simply orthogonal ones.

3.4.7 Estimating Linear Combinations of Parameters: The ESTIMATE Statement

The CONTRAST statement is used to construct an F-test for a hypothesis that a linear combination of parameters is equal to 0. In many applications, you want to obtain an estimate of the linear combination of parameters, along with the standard error of the estimate. You can do this with an ESTIMATE statement. The ESTIMATE statement is used in much the same way as a CONTRAST statement. You could estimate the difference μACME – μAJAX with the following statement:

estimate 'ACME vs AJAX' brand 1 -1 0 0 0;

This statement is exactly like the CONTRAST statement for ACME vs AJAX, with the keyword CONTRAST replaced by the keyword ESTIMATE.

Output 3.15 Estimating the Difference between BRAND Means

| Standard | ||||

| Parameter | Estimate | Error | t Value | Pr > |t| |

| ACME vs AJAX | 0.27500000 | 0.10206207 | 2.69 | 0.0166 |

Results shown in Output 3.15 include the value of the estimate, a standard error, a t-statistic for testing whether the difference is significantly different from 0, and a p-value for the t-statistic. Note the p-value (0.0166) for the t-test is the same as for the F-test for the contrast in Output 3.12. This is because the two tests are equivalent; the F is equal to the square of the t.

For the present application, the estimate of μACME – μAJAX can be computed as

The standard error is

In more complicated examples, such as two-way classification with unbalanced data, more complicated computations for means are required.

Suppose you want to estimate μCHAMP –1/2(μACME+ μAJAX). You can use the following statement:

estimate 'AL vs CL' brand -.5 -.5 1 0 0;

The coefficients in the above ESTIMATE statement are not equivalent to the coefficients (–1 –1 2 0 0) as they would be in a CONTRAST statement. The latter set of coefficients would actually estimate twice the mean difference of interest. You can avoid the fractions by using the DIVISOR option:

estimate 'AL vs CL' brand -1 -1 2 0 0 / divisor=2;

Now suppose you want to estimate a linear combination of means that does not represent a comparison of two groups of means. For example, maybe you want to estimate the average of the three U.S. means, 1/3(μACME + μAJAX + μCHAMP). The coefficients do not sum to 0, so you can’t simply take coefficients of the means and use them in the ESTIMATE statement as coefficients on model effect parameters. The μ parameter does not disappear when you convert from means to effect parameters:

1/3(μACME + μAJAX + μCHAMP)

=1/3(μ + τACME + μ + τAJAX + μ + τCHAMP

= μ + 1/3(τACME + τAJAX + τCHAMP)

You see that the parameter ? remains in the linear combination of model effect parameters. This parameter is called INTERCEPT in CONTRAST and ESTIMATE statements. This is because ? shows up as the intercept in a regression model, as discussed in Chapter 4 where the connection between analysis-of-variance models and regression models is explained. An appropriate ESTIMATE statement is

estimate 'US MEAN' intercept 1 brand .33333 .33333 .33333 0 0;

or equivalently

estimate 'US MEAN' intercept 3 brand 1 1 1 0 0 / divisor=3;

Results from this ESTIMATE statement appear in Output 3.16.

Output 3.16 Estimating the Mean of U.S. BRANDS

| Standard | ||||

| Parameter | Estimate | Error | t Value | Pr > |t| |

| US MEAN | 2.25000000 | 0.04166667 | 54.00 | <.0001 |

In this application the estimate and its standard error are useful. For example, you can construct a 95% confidence interval:

2.25 ± 2.13(0.0417)

Again, the estimate is 2.25 = 1/3(2.325 + 2.050 + 3.375), and the standard error is [(1/4 + 1/4 + 1/4) MS(ERROR)]1/2. Since MS(ERROR) is the basic variance estimate in this formula, the degrees of freedom for the t-statistic are there for MS(ERROR). The t-statistic is computed to test the null hypothesis

H0: μACME + μAJAX + μCHAMP = 0

Of course, this hypothesis is not of practical interest.

3.5 Randomized-Blocks Designs

The randomized-blocks design assumes that a population of experimental units can be divided into a relatively homogeneous subpopulations that are called blocks. The treatments are then randomly assigned to experimental units within the blocks. If all treatments are assigned in each block, the design is called a randomized-complete-blocks design. Blocks usually represent naturally occurring differences not related to the treatments. In analysis of variance, the extraneous variation among blocks can be partitioned out, usually reducing the error mean square. Also, differences between treatment means do not contain block variation. In this sense, the randomized-blocks design controls block variation.

A classic example of blocks is an agricultural field that is divided into smaller, more homogeneous subfields. Other examples of blocks include days of the week, measuring or recording devices, and operators of a machine. The paired two-sample design, such as the PULSE data in Section 3.2.2, “Two Related Samples,” is a special case of the randomized-complete-blocks design with the two samples as treatments and pairs as the blocks.

In the following example, five methods of applying irrigation (IRRIG) are applied to a Valencia orange tree grove. The trees in the grove are arranged in eight blocks (BLOC) to account for local variation. That is, variation among trees within a block is minimized. Assignment of the irrigation method to trees within each block is random, giving a randomized-blocks design. Each of the five irrigation methods appears in all eight blocks and there are no missing data, making this a randomized-complete-blocks design. At harvest, for each plot the fruit is weighed in pounds. The objective is to determine if method of irrigation affects fruit weight (FRUITWT) and to rank the irrigation treatments accordingly.

The data appear in Output 3.17. The following SAS DATA step often provides a convenient shortcut for data entry, because it allows you to put the data for all eight blocks on a single line for each treatment. You can modify these statements to put the data for all treatments on a single line for each block.

data methods;

input irrig $ @@;

do bloc=1 to 8;

input fruitwt @@;

output;

end;

datalines;

trickle 450 469 249 125 280 352 221 251

basin 358 512 281 58 352 293 283 186

spray 331 402 183 70 258 281 219 46

sprnkler 317 423 379 63 289 239 269 357

flood 245 380 263 62 336 282 171 98

;

proc sort;

by bloc irrig;

proc print;

var bloc irrig fruitwt;

run;

Output 3.17 Data for the Randomized-Complete-Blocks Design

| Obs | bloc | irrig | fruitwt |

| 1 | 1 | basin | 358 |

| 2 | 1 | flood | 245 |

| 3 | 1 | spray | 331 |

| 4 | 1 | sprnkler | 317 |

| 5 | 1 | trickle | 450 |

| 6 | 2 | basin | 512 |

| 7 | 2 | flood | 380 |

| 8 | 2 | spray | 402 |

| 9 | 2 | sprnkler | 423 |

| 10 | 2 | trickle | 469 |

| 11 | 3 | basin | 281 |

| 12 | 3 | flood | 263 |

| 13 | 3 | spray | 183 |

| 14 | 3 | sprnkler | 379 |

| 15 | 3 | trickle | 249 |

| 16 | 4 | basin | 58 |

| 17 | 4 | flood | 62 |

| 18 | 4 | spray | 70 |

| 19 | 4 | sprnkler | 63 |

| 20 | 4 | trickle | 125 |

| 21 | 5 | basin | 352 |

| 22 | 5 | flood | 336 |

| 23 | 5 | spray | 258 |

| 24 | 5 | sprnkler | 289 |

| 25 | 5 | trickle | 280 |

| 26 | 6 | basin | 293 |

| 27 | 6 | flood | 282 |

| 28 | 6 | spray | 281 |

| 29 | 6 | sprnkler | 239 |

| 30 | 6 | trickle | 352 |

| 31 | 7 | basin | 283 |

| 32 | 7 | flood | 171 |

| 33 | 7 | spray | 219 |

| 34 | 7 | sprnkler | 269 |

| 35 | 7 | trickle | 221 |

| 36 | 8 | basin | 186 |

| 37 | 8 | flood | 98 |

| 38 | 8 | spray | 46 |

| 39 | 8 | sprnkler | 357 |

| 40 | 8 | trickle | 251 |

3.5.1 Analysis of Variance for Randomized-Blocks Design

The following analysis of variance for the randomized-complete-blocks design provides a test for the differences among irrigation methods:

| Source | DF |

|---|---|

| BLOC | 7 |

| IRRIG | 4 |

| ERROR | 28 |

| TOTAL | 39 |

Use the following SAS statements to compute the basic analysis of variance:

proc glm;

class bloc irrig;

model fruitwt=bloc irrig;

BLOC and IRRIG appear in the CLASS statement because the data are classified according to these variables. The MODEL statement specifies that the response variable to be analyzed is FRUITWT. The two sources of variation in the analysis-of-variance table (other than ERROR and TOTAL) are BLOC and IRRIG, so these variables appear on the right side of the MODEL statement. The analysis appears in Output 3.18.

Output 3.18 Analysis of Variance for the Randomized-Complete-Blocks Design

| Dependent Variable: wear | |||||

| Sum of | |||||

| Source | DF | Squares | Mean Square | F Value | Pr > F |

| Model | 11 | 445334.0250 | 40484.9114 | 12.04 | <.0001 |

| Error | 28 | 94146.7500 | 3362.3839 | ||

| Corrected Total | 39 | 539480.7750 | |||

| R-Square | Coeff Var | Root MSE | wear Mean |

| 0.825486 | 21.71153 | 57.98607 | 267.0750 |

| Source | DF | Type I SS | Mean Square | F Value | Pr > F |

| bloc | 7 | 401308.3750 | 57329.7679 | 17.05 | <.0001 |

| irrig | 4 | 44025.6500 | 11006.4125 | 3.27 | 0.0254 |

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |

| bloc | 7 | 401308.3750 | 57329.7679 | 17.05 | <.0001 |

| irrig | 4 | 44025.6500 | 11006.4125 | 3.27 | 0.0254 |

The top section contains lines labeled MODEL, ERROR, and CORRECTED TOTAL. The total variation, as measured by the total sum of squares, is partitioned into two components: variation due to the effects in the model (MODEL) and variation not due to effects in the model (ERROR). The bottom section of the output contains lines labeled BLOC and IRRIG. These partition the MODEL sum of squares into two components: sum of squares due to the effects of blocks (BLOC) and sum of squares due to the effects of treatment (IRRIG). In most cases when MODEL is partitioned into two or more sources of variation, the F-test for MODEL has no useful interpretation; you want to interpret the BLOC and IRRIG sources of variation separately.

In the GLM output, there are two sets of sums of squares, TYPE I and TYPE III. For balanced data such as the randomized-complete-blocks design with no missing data, these sums of square types are identical. In analysis of variance, the types of sum of squares matter when the data are unbalanced. An example is when you have missing data or incomplete-blocks designs. Chapter 5 discusses the different types of sums of squares.

You can summarize the key features of Output 3.18 in the following ANOVA table:

| Source | DF | SS | MS | F | p-value |

|---|---|---|---|---|---|

| BLOC | 7 | 401308.375 | |||

| IRRIG | 4 | 44025.650 | 11006.4125 | 3.27 | 0.0254 |

| ERROR | 28 | 94146.750 | 3362.3839 | ||

| TOTAL | 39 | 539480.775 |

3.5.2 Additional Multiple Comparison Methods

In Section 3.4, “The Analysis of One-Way Classification of Data,” you saw how to compare treatment means using least significant difference tests, basically two-sample t-tests in the context of analysis of variance, and contrasts. In addition, there are many multiple comparison tests available in PROC ANOVA and PROC GLM. It bears repeating that you should use contrasts whenever the structure of the treatment design permits, and it is usually advisable to structure the treatment design to facilitate using contrasts tailored to the specific objectives of the study. However, there are many situations where no obvious structure exists, and imposing structure would be artificial and inappropriate. These are the cases for which you should use multiple comparison tests.

In this section the randomized-blocks example shown above is used to illustrate some of these tests. The tests illustrated in this example are summarized below, including information pertaining to their error rates and option keywords.

❏ Least Significant Difference (LSD)

comparisonwise error rate (ALPHA=probability of Type I error for any one particular comparison)

❏ Duncan’s New Multiple Range (DUNCAN)

error rate comparable to k–1 orthogonal comparisons tested simultaneously

❏ Waller-Duncan (WALLER)

error rate dependent on value of analysis-of-variance F-test

❏ Tukey’s Honest Significant Difference (TUKEY)

experimentwise error rate (ALPHA=probability of one or more Type I errors altogether).

You may wonder why there are so many different tests. In mean comparisons, two types of error are possible. The test may incorrectly declare treatment means that are actually equal to be different; this is called a Type I error. Or the test may fail to declare a difference between treatment means that are not equal; this is called a Type II error. Multiple comparison tests differ in their Type I error rate, that is, the probability of incorrectly declaring treatment means to be different. The LSD test has the highest Type I error rate and Tukey’s the lowest, with Duncan and Waller-Duncan in the middle. When Type I error rate is reduced, all other things being equal, Type II error rate increases. The reason for so many tests is that different situations call for different priorities. For example, in the early stages of research, when you may be trying to identify new treatments that show any evidence of promise, Type I error may not be serious because follow-up research will reveal spurious differences. However, Type II error is serious because a potentially valuable treatment will go unnoticed. On the other hand, in later stages of research, Type I error may be much more serious, because it may mean allowing an ineffective product to be recommended as if it were effective, possibly with tragic consequences. As you can imagine, there is no one “correct” test for all situations—you must evaluate each case based on the relative consequences of Type I and Type II error.

For the LSD, DUNCAN, and TUKEY options, ALPHA=.05 unless the ALPHA= option is specified. Only ALPHA= values of .01, .05, or .1 are allowed with the Duncan’s test. The Waller test is based on Bayesian principles and utilizes the Type I/Type II error seriousness ratio, called the k-ratio, instead of an ALPHA=value. In practice, ALPHA=.05 for the DUNCAN option and KRATIO=100 for the WALLER option produce similar results.

The following SAS statements illustrate the options:

proc glm;

class bloc irrig;

model fruitwt=bloc irrig;

means irrig/duncan lsd tukey waller;

means irrig/duncan tukey alpha=0.1;