5 Technology for Telecommunications: Integrated Optics and Microelectronics

5.1 Introduction

In Chapter 4 we have presented a survey of fiber technology and of the technology needed to fabricate optical filter and wavelength multiplexer and demultiplexers for telecommunications.

In this chapter we will present a survey of the so-called integrated technology that is the technology leveraging planar processes to manufacture electronics and optical components.

The first part of the chapter is devoted to optical planar components built on the III–V platform.

The most important of those components is the semiconductor laser, both for the huge number of telecom and non-telecom applications and for the key role it plays in telecom equipments.

We will not try to make a survey of the semiconductor laser technology per se, but we will only summarize the characteristics and the main evolution potentialities of the devices used in telecommunications.

These devices are essentially signal sources or pump lasers for fiber amplifiers and we will consider both the applications, which require quite different devices.

We will add here also a brief description of the linear characteristics of semiconductor optical amplifiers (SOAs), which will be considered for the potentialities related to their nonlinear behavior in Chapter 10.

After a brief analysis of the modulators, both from III–V materials and from lithium niobate (LiNbO3), the first part of the chapter devoted to planar optics comes to an end.

In the second part of the chapter we will analyze the impact of electronic evolution on telecommunication equipments.

Electronics is by far the most important technology used in telecommunication equipments and modern telecommunications would be unconceivable without very large-scale integrated circuits.

Naturally it is not possible to make a review of electronics technology from first principles, and we will assume that the reader has already a familiarity with basic electronics principles and potentialities.

Thus only the most recent electronic development that has an impact on telecommunications will be reviewed, excluding wireless technologies, both traditional radio bridges and wireless access in all its different standards.

After a brief review of the evolution of the complementary metal oxide semiconductor (CMOS) transistors, the base element of electronic circuitry, the discussion on electronics is divided into the analysis of error correction and compensators for transmission systems and the analysis of the base elements of electronics switching.

Only the analysis of the switch fabric itself, starting from the elementary cross-point, is delayed to Chapter 7, in the more suitable framework of the switching and routing machine architectures.

5.2 Semiconductor Lasers

5.2.1 Fixed-Wavelength Edge-Emitting Semiconductor Lasers

Semiconductor lasers are the most diffused laser category, whose application ranges from consumer products (like compact disc players) to highly specialized industrial applications like transmitters in optical fiber systems and sensor equipment [1].

Here we will limit our review to two categories of semiconductor lasers that are instrumental in developing optical transmission systems: transmission lasers and pump lasers.

Transmission lasers are used to provide the optical carrier over which the information is coded.

Different applications have different requirements for the optical carrier parameters like power, linewidth, wavelength stability, and so on, as well as different cost targets; thus there are a wide variety of transmission lasers.

Depending on the specific application, the modulation of the carrier can be performed by an external modulator (a component completely different from the source laser), by a modulator integrated into the same chip of the laser or by the laser itself, with the so-called direct laser modulation.

Something similar happens for pump lasers, whose application ranges from the cheap erbium-doped fiber amplifiers (EDFAs) that could be used in the optical access network to the high-performance distributed Raman amplifiers used in ultra-long haul dense wavelength division multiplexing (DWDM) systems.

5.2.1.1 Semiconductor Laser Principle

The principle at the base of the working of a semiconductor laser is to create population inversion between valence and conduction electrons (or holes, depending on the material), exciting electrons from the valence to the conduction band of a semiconductor via current injection.

In order to achieve photon-stimulated emission, the transition between valence and conduction bands corresponding to the desired laser frequency has to be a “direct transition.”

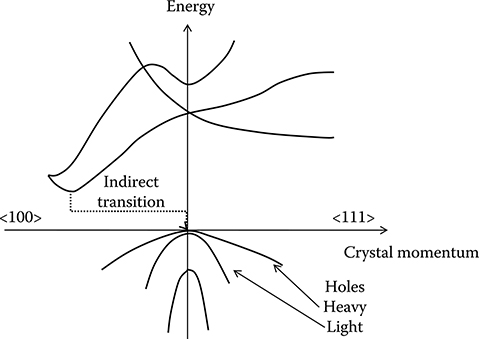

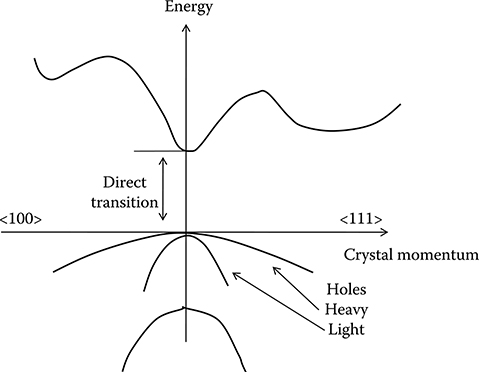

This means that pseudo-electrons in the valence and conduction bands must have the same crystal momentum so that decay can happen with photon emission without the need of higher-order processes to compensate the momentum difference. In Figure 5.1 [2] the band structure of silicon, characterized by an indirect transition between the valence and the conduction band, is shown, while the band structure of an InGaAs alloy [2], characterized by a direct transition in the near-infrared region, is shown in Figure 5.2.

The requirement of direct transitions practically selects the materials that are suited to build semiconductor lasers: they are essentially the alloys of III–V semiconductors, starting from GaAs and GaAsP, used for pump lasers, to InGaAsP, which is generally the alloy in the active region of lasers used for transmission.

Once the material is selected, a structure suitable for electron pumping has to be realized. The best possible structure is an inversely polarized p–n junction. In a p–n junction under inverse polarization, the field in the junction zone is opposite to the electron flux; thus if a current is pumped in the junction, electrons pass through being promoted to higher-energy levels to overcome the junction potential barrier. In this condition the average population of the conduction band can become greater than the population of the valence band, thus causing population inversion.

Figure 5.1 Schematic representation of the band structure of silicon.

Figure 5.2 Schematic representation of the band structure of a II–V alloy having direct optical transition at the band gap.

However, if a simple junction is used, creating the conditions for lasing operation is quite difficult.

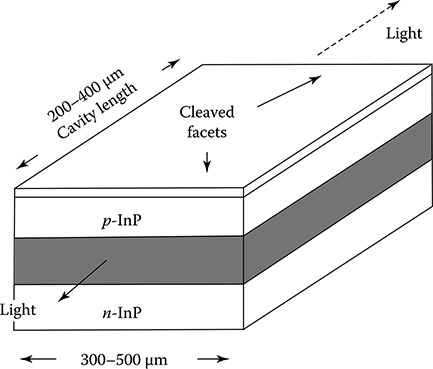

As a matter of fact, in a structure like that of Figure 5.3, there is no lateral confinement either for the injected current or for the optical field. Thus on the one hand, there is a constant electron loss due to the leak current flowing away from the inversion zone on the side of the device and, on the other hand, the optical mode is very large and the superposition of the mode with the inverted area of the junction where optical gain is concentrated is quite scarce.

Figure 5.3 Simpler possible structure of a semiconductor laser.

In order to achieve effective lasing operation, both the current and the optical mode have to be effectively confined in the junction area.

Several architectures have been proposed to achieve vertical and lateral confinement, at present the most used solution is constituted by heterostructure index–guided architecture [1].

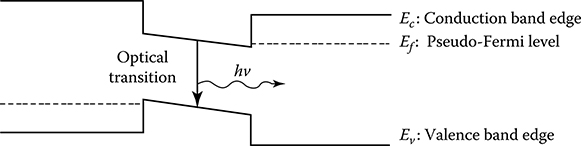

The heterostructure solution consists in realizing a p–i–n junction where the neutral zone is realized in a different alloy with a smaller band gap with respect to the doped zones. The band profile across the heterostructure is shaped approximately as shown in Figure 5.4 when an inverse polarization is applied.

The fact that the intrinsic material has a smaller energy gap with respect to the surrounding doped layers creates a sort of trap for the injected carriers: when the carriers arrive in the intrinsic zone, they fall in the potential energy hole and are confined to the intrinsic zone.

If the heterostructure provides confinement in the direction of the current injection, confinement in the lateral direction has to be achieved via some other system.

The most used structure for lateral confinement is the so-called index-confined architecture, where the optical mode is confined in the laser-active region by realizing an optical waveguide whose core is the active region itself. In this way the different materials provide confinement of the current and the diffraction index difference provides confinement of the field in the same region.

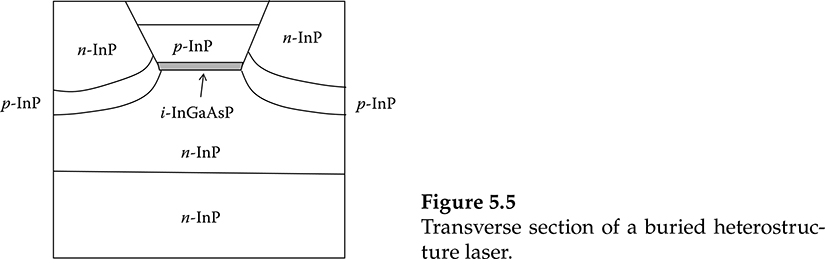

A transversal section of a buried heterostructure laser is represented in Figure 5.5. The gray area represents the active waveguide that is buried below the surface structure and assures contemporary good lateral current confinement and good superposition between the optical mode and the inverted region.

Figure 5.4 Schematic band profile of a heterojunction in inverse polarization.

Figure 5.5 Transverse section of a buried heterostructure laser.

Once inversion is effectively realized, in order to start the lasing operation, a positive counterreaction has to be introduced via an optical cavity to sustain spontaneous oscillations of the structure.

The easier way to do this is to cleave the front and rear facets of the laser to create a reflectivity.

The inner laser waveguide behaves like a Fabry–Perot (FP) cavity assuring the needed feedback to the laser.

The structure of an FP laser is schematized in Figure 5.3. With the typical dimensions of semiconductor lasers, the FP cavity has a free spectral range so small that several modes are enhanced by the optical gain.

Thus in general, FP semiconductor lasers oscillate in multimode regime even if, with some care in designing the laser, few modes can be selected. Even when single-mode operation is achieved by using strongly wavelength-dependent mirrors (that can be realized by multiple layer coating of the FP facets) this is not a stable condition and side modes can start to oscillate due to environmental fluctuations or laser modulation.

In order to achieve real single-mode operation a strongly wavelength-selective feedback has to be introduced.

This can be done in several ways, among which distributed feedback (DFB) is by far the most used.

5.2.1.2 Semiconductor Laser Modeling and Dynamic Behavior

Once confinement is achieved both for the optical field and for the injected charges, the laser can be considered as a waveguide inserted into a resonant cavity with the core subject to population inversion to generate optical gain.

A physical modeling of the semiconductor laser would require a quantum representation of both the optical field and the pseudo-particle population inside the crystal.

In this way the laser equations will appear as a couple of interacting equations, one for the density matrix elements representing the electron population and the other for the quadrature operators of the field [3].

Even though this analysis is possible, it is quite complicated and, from the point of view of the physical comprehension of laser dynamics, is not more effective with respect to a semiclassical model.

In a semiclassical model, the laser behavior is represented with a set of rate equations for the propagating field and for the carrier density.

The only element that cannot rise from a semiclassical analysis is the spontaneous emission noise, which is a pure quantum element (compare Section 4.3.1.1). Thus the noise terms have to be added phenomenologically to the semiclassical rate equations [4].

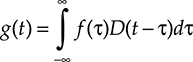

With this in mind, the rate equations can be derived by the coupled field wave equation and electrons balance equation and by applying the slowly varying and rotating wave approximation to the wave equation [5].

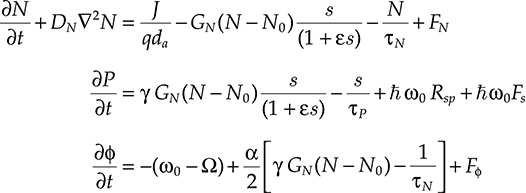

At the end of these procedures, calling N(x,y,z,t) the carrier population in the conduction band, S(x,y,z,t) the optical energy inside the cavity, which is proportional to the photon density, and ϕ(x,y,z,t) the field phase, the coupled equations of a semiconductor laser can be written as

∂N∂t+DN∇2N=1qda−GN(N−N0)s(1+ɛs)−NτN+FN∂P∂t=γGN(N−N0)s(1+ɛs)−sτp+ħω0Rsp+ħω0Fs∂ϕ∂t=−(ω0−Ω)+α2[γGN(N−N0)−1τN]+Fϕ(5.1)

(5.1)

The symbols that appear in the rate equations have the following meanings:

DN is the carrier diffusion coefficient. In many problems the carrier density can be considered approximately constant in the active region and zero elsewhere, thus neglecting the diffusion term DN ∇ 2N and the dependence of N from the spatial coordinates.

J is the pumping current.

da is the active layer thickness.

q is the electron charge.

τN is the carrier’s lifetime.

GN is the population inversion induced gain.

(N − N0) is the population inversion factor.

Ɛ is the gain saturation constant.

τP is the photon’s lifetime.

γ is the confinement factor, that is, the ratio between the active region volume and the modal volume. This parameter is evaluated by solving the mode propagation into the active region.

ω0 is the angular frequency of the optical field.

Ω is the resonance frequency of the laser cavity.

Rsp is the spontaneous emission rate.

α is the so-called linewidth enhancement factor. The fundamental limit for the linewidth of a free-running laser is provided by the Schawlow Townes formula. Semiconductor lasers exhibit significantly higher linewidth values due to a coupling between intensity and phase noise, caused by the dependence of the refractive index on the carrier density. In the semiclassical model, the linewidth enhancement factor α quantifies this amplitude–phase coupling mechanism; essentially, α is a proportionality factor relating phase changes to changes of the amplitude gain [6].

Fj, j = S, N, ϕ are the noise terms that are added phenomenologically to the rate equations. These are independent random processes whose first moments have to be determined from the results of the quantum theory. In particular we have

〈Fj(t,x,y,z)〉=0j=S,N,ϕ(5.2)

![]()

(5.2)

〈Fj(t,x,y,z)Fk(t′,x′,y′,z′)〉=Djkδj,kδ(t,t′)δ(x,x′)δ(y,y′)δ(z,z′)(5.3)

![]()

(5.3)

Djk are the elements of the so-called diffusion matrix which, in the framework of the semiclassical approximation, can be written as

D=(DSSDSNDSϕDSNDNNDNϕDSϕDNϕDϕϕ)=(RspS−RspS0−RspSRspSħω0+NτN000ħω0Rsp4S)(5.4)

(5.4)

Realistic values of the parameters of a standard DFB laser used as a source in DWDM systems are reported in Table 5.1.

To describe the laser behavior on the grounds of Equation 5.1 what we have assumed up to now is not sufficient.

As a matter of fact, these equations are stochastic equations that cannot be solved if the distribution of the noise term is not known.

The most correct distribution of the terms Fj (j = S, N, ϕ) is not Gaussian: it is sufficient to consider that the term representing the shot noise (i.e., the fluctuation of the optical power) should be distributed following a Poisson distribution.

However, in the case of a stable lasing operation, it is possible to demonstrate that, due to the fact that the number of excited carriers and of the photons is very high, the Gaussian approximation implies a small error, in line with the overall accuracy of the semiclassical model.

Starting from Equations 5.1 and from the laser structure the main characteristics of a specific type of semiconductor laser can be derived. Despite the intrinsic complexity of their behavior, gain saturation happens also in semiconductor lasers.

In particular, due to the presence of the saturation constant, increasing the power in the cavity, the laser inner gain decreases so that the dependence of the emitted power from the pump is not exponential as foreseen in a laser without gain saturation.

Moreover, the dependence of the carrier density on a temperature typical of a p–i–n junction induces a sensible dependence of the curve relating the pumping current and the emitted power on temperature.

TABLE 5.1 Values of the Microscopic Parameters of a DFB Used at the Transmitter in DWDM Systems

A typical emitted power versus current characteristic of a DFB laser is shown in Figure 5.6. From the figure, the laser threshold, the dependence of the laser behavior on temperature, and the saturation of the cavity gain are evident, so that at each temperature a maximum emitted power exists at the edge of saturation.

Above the maximum emitted power, the gain saturation drives a decrease of the emitted power while increasing the driving current.

When emitting in continuous wave (CW) mode, a semiconductor laser is affected both by phase and by intensity noise due to the amplified spontaneous emission into the laser cavity and to the fluctuations of the carrier density.

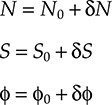

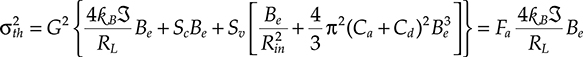

The noise characteristics can be obtained from the rate equations with a perturbation approach, at least as far as the noise is small [7]. In particular, substituting the variables of the rate equations the following expressions

N=N0+δNS=S0+δSϕ=ϕ0+δϕ(5.5)

(5.5)

where N0, S0, and ϕ0 are the stationary solutions of the rate equations without noise, and linearizing with respect to the noise terms, the noise statistical characteristics can be derived.

In particular, the linearized equations assume a simpler form by introducing the vector ˉX=(S,N,ϕ)![]() and the matrix

and the matrix

Γ=(ΓSSΓSNΓSϕΓNSΓNNΓNϕΓϕSΓϕNΓϕϕ)=(∈S0τP(1+∈S0)γGNS0ħω0(1+∈S0)01τP(1+∈S0)1τN+γGNS0ħω0(1+∈S0)00αγGN20)(5.6)

(5.6)

Figure 5.6 Typical emitted power versus current characteristic of a DFB laser.

In order to evidence the macroscopic noise terms in the expression of the emitted field, it can be written as

→E=√P0a(x,y)r(t)eiωt+φ(t)→x=√ℑS0τca(x,y)√(1+δSS0)eiωt+[ϕ(t)+Ψ]→x(5.7)

(5.7)

where

r(t) and φ(t) are the relative intensity noise (RIN) and the phase noise

ℑ is the end mirror transmittance

τc is the cavity round trip time that allows us to pass from internal energy to emitted power

ψ is the phase contribution of the extraction mirror

The power spectral density of the RIN is given by

RIN(ω)=ħωS202DNNΓ2SN+2DSS(Γ2NN+ω2)+4DSNΓSNΓNN[(Ω2R−ω2)2+ω2(ΓNN+ΓSS)2](5.8)

(5.8)

where it is not difficult to recognize the typical behavior of a resonance, whose natural frequency is Ω2R=(ΓSSΓNN+ΓSNΓNS)![]() and whose dumping rate is (ΓNN + ΓSS).

and whose dumping rate is (ΓNN + ΓSS).

The observation that the laser behavior is characterized by a dumped resonance is very general, not limited exclusively to the RIN spectrum.

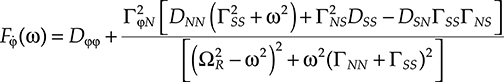

As a matter of fact, the same behavior is exhibited by the frequency noise (i.e., the process ˙φ=δφ/δt![]() ), whose power spectral density is given by [8]

), whose power spectral density is given by [8]

F˙φ(ω)=Dφφ+Γ2φN[DNN(Γ2SS+ω2)+Γ2NSDSS−DSNΓSSΓNS][(Ω2R−ω2)2+ω2(ΓNN+ΓSS)2](5.9)

(5.9)

Besides the presence of the laser characteristic resonance, the expression of the frequency noises spectrum allows also the other typical diode laser mechanism to be evidenced.

As a matter of fact, it is made by two terms: the first is due to the phase of random emitted photons, and the second is proportional through Γ2φN![]() to α2 and represents the additional phase noise due to the coupling between amplitude and phase through the carrier density [9].

to α2 and represents the additional phase noise due to the coupling between amplitude and phase through the carrier density [9].

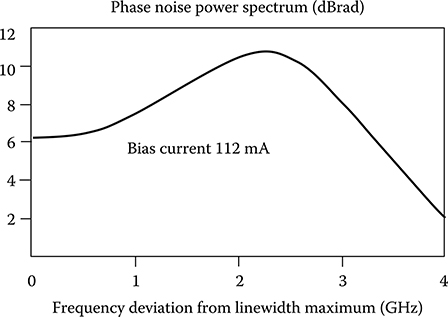

The power spectral densities of the RIN and of the phase noise for a typical DFB laser emitting at 1550 nm are represented in Figures 5.7 [10] and 5.8 respectively.

From the theory we have presented, it results that the same phenomenon causes both the excess RIN and the excess phase noise with respect to the quantum limit. Thus a relationship must exist between the RIN and the phase noise spectra.

This relationship can be obtained by simplifying the denominator in the expressions of the two spectra and deriving an expression for the relation between them.

This expression can be experimentally verified by passing from the phase noise to the linewidth that is one of the most commonly measured quantities in a laser.

Figure 5.7 Power spectral density of the RIN for a typical DFB laser emitting at 1550 nm.

(After Aragon Photonics Application Note, Characterization of the main parameters of DFB using the BOSE. 2002, www.aragonphotonics.com [accessed: May 25, 2010].)

Figure 5.8 Power spectral density of the phase noise for a typical DFB laser emitting at 1550 nm.

(After Travagnin, M., J Opt. B. Quant. Semiclass. Opt., 2, L25, 2000.)

A rigorous derivation of the laser linewidth that is possible starting from (5.9) [8] would conclude that the laser linewidth is not Lorentian, but is composed by a Lorentian lobe plus two side peaks far from the central wavelength, exactly the frequency of relaxation oscillations. However, in high-quality lasers these lobes are so reduced that a Lorentian approximation of the linewidth is more than accurate.

Assuming a Lorentian linewidth, the expression of the full width at half maximum (FWHM) is given by

Δv=Rsp(1+α2)(4πr)(5.10)

(5.10)

where r is the cavity refraction index.

The curve relating the linewidth and the maximum of the RIN spectrum is shown in Figure 5.9 besides a set of experimental points from [11]. In particular the theoretical curve is obtained by best fitting the experimental data.

Another important characteristic of the semiconductor lasers is that they can be directly modulated acting on the bias current. However, when the bias current fluctuates, a complex dynamical behavior is observed, mainly due to the coupling between field amplitude and phase and to the resonance at ΩR.

This phenomenon causes the contemporary presence of intensity and phase modulation, which always creates a frequency chirp in the direct modulated pulse train.

Besides this fundamental effect, a second phenomenon constraining the direct modulation bandwidth of semiconductor lasers is the presence of parasitic capacities in the structure. Reducing them is a key issue to increase the direct modulation bandwidth.

Considering again the rate equations with spatially constant variables and neglecting the noise terms, it is possible to study direct modulation by applying the substitutions in Equation 5.5 where now the fluctuations are due not to the noise but to the modulation.

In this condition the equations can be linearized again around the laser bias point. In this case Equation 5.7 can be used to pass from the field into the cavity to the emitted field so that the modulation index (i.e., the derivative of the emitted optical power with respect to the pump current fluctuations) results:

δPδJ=−ħωqdaℑτNΓNSω2m−Ω2R+iωm(ΓNN+ΓSS)(5.11)

(5.11)

where ωm is the modulation frequency. The presence of the natural laser resonance is always evident and in this case it limits the modulation bandwidth.

If the expression of the coefficients Γkj is substituted in Equation 5.11 it is evident that, besides the presence of a resonance, gain saturation (represented by the factor ε also contributes to shrink the bandwidth with respect to the theoretical limit, and that higher the gain saturation coefficient, the smaller is the modulation bandwidth.

Similarly to the power modulation index, a frequency modulation index can be evaluated whose expression is

Figure 5.9 Linewidth versus RIN spectrum peak relationship for a DFB laser.

(After JDSU Application Note, Relative Intensity Noise, Phase Noise and Linewidth, JDSU Corporate, s.l., 2006, www.jdsu.com [accessed: May 25, 2010].)

δ˙ϕδJ=ΓϕNδNδJ(5.12)

(5.12)

Equation 5.12 represents the theoretical frequency response of a semiconductor laser that is responsible for both the possibility of modulating the output field frequency by modulating the bias current (property exploited for example to implement the Brillouin dither) and the chirp that unavoidably is present when the laser is directly modulated.

Equation 5.12, however, due to all the approximations that are the base to derive the rate equations, does not take into account several phenomena that cause the deviation of the frequency response of real lasers from the simple proportionality to the carrier density variations.

Generally the frequency response is almost flat up to the resonance of the laser, but here it shows the resonance effects through a peak and then decreases rapidly.

5.2.1.3 Quantum Well Lasers

A quantum well laser is a laser diode in which the active region of the device is formed by one or more regions so narrow that quantum confinement occurs, alternated by wider regions of higher bandgap.

The carriers in the active region are generally divided into two populations that occupy a completely different set of energy levels: carriers in the wells and carriers out of the wells.

Carriers out of the wells are ordinary pseudo-particles obeying the rules of carrier motion in crystals, and in particular they cannot have any energy in the forbidden band of the semiconductor forming the active region.

Inside the quantum well, quantum confinement of the carriers creates energy sub-bands inside the forbidden energy band. The central energy of the sub-bands depends on the well thickness.

This is easily understood remembering that in the simple case of a one-dimensional infinite energy well the energy levels of a particle are equal to En = n2(ħ2π2/8mL2 ), where n is the quantum number and L is the width of the well.

Due to these characteristics the wavelength of the light emitted by a quantum well laser is determined by the width of the active region rather than just the bandgap of the material from which it is constructed. This means that much shorter wavelengths can be obtained from quantum well lasers than from conventional laser diodes using a particular semiconductor material. The efficiency of a quantum well laser is also greater than a conventional laser diode due to the stepwise form of its density of states function.

Beside the use of a quantum well, in order to shape the energy levels inside the active region, the so-called strain technique can be incorporated.

This consists in introducing in the quantum well zone a tensile or compressive strain, whose effect is to change the conduction sub-bands due to the quantum well.

One of the main effects of a controlled strain is to reduce the threshold current of the laser due to the increased separation between energy bands that renders recombination more difficult [12].

5.2.1.4 Source Fabry–Perot Lasers

Probably FP semiconductor lasers used as signal sources are the lasers produced in larger volumes on the market for a huge number of applications, from telecommunications to consumer electronics to sensors and so on.

Figure 5.10 Spectrum of an FP InGaAsP laser emitting in a quasi-single-mode regime.

In telecommunications such lasers are adopted at transmitters either to generate the carrier or to generate the information-carrying signal through direct modulation when single-mode laser operation is not required but the cost of the source is a key issue. As a matter of fact, due to the simpler structure and the great volume production, FPs are cheaper with respect to single-mode lasers like DFB.

Thus, FPs are the standard optical sources in several types of access systems, from point-to-point to A and B class G-PON. Moreover, such lasers are used in the low-performance client cards of transmission systems, where it is needed to connect systems in the same central office and the connection speed is not so high.

The typical emission spectrum of an FP InGaAsP laser emitting in a quasi single-mode regime is plotted in Figure 5.10. The side modes suppression ratio (SMSR) is about 19 dB, which is much lower than the typical 35–40 dB of a DFB laser, but is enough for several applications, especially if the laser has not to be modulated.

The presence of a few lasing modes in an FP laser generates the growth of a particular noise phenomenon when it is directly modulated: the mode partition noise [13].

Mode partition noise is due to the random fluctuations of the lateral modes that causes a change in the spectrum and a fluctuation in the power of the main mode due to the fact that the steady-state power emitted from the laser in a first approximation is constant and depends only on the pump and on the laser quantum efficiency.

The effect is an enhancement of the RIN well above the limit given by the spectrum in a strict single mode operation. This effect is shown in Figure 5.11 where the fact that the sensible amount of RIN is mainly due to the mode partition noise is clear from the form of the RIN spectrum.

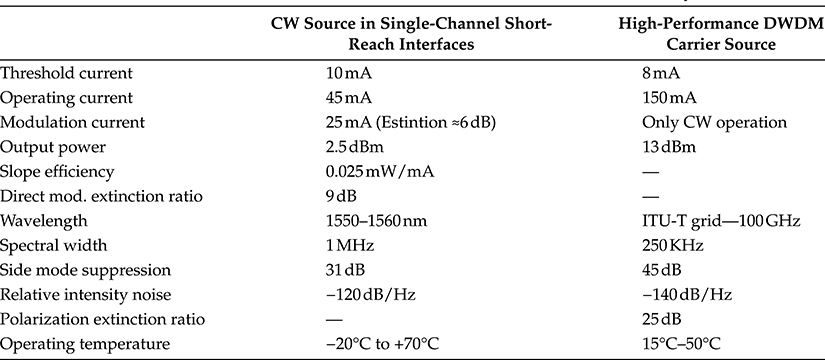

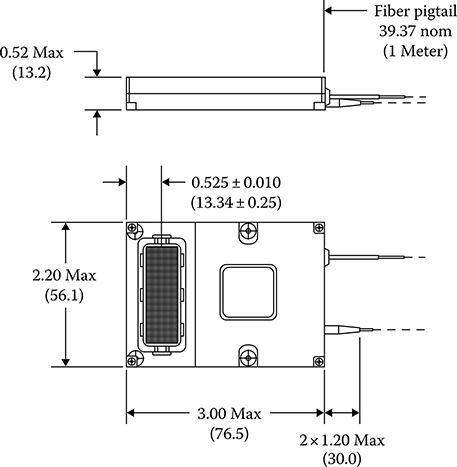

A selection of characteristics of practical FP diode lasers for different applications is reported in Table 5.2. From the table the wide span of applications covered by FP lasers corresponding to the possibility of matching different set of requirements is evident.

5.2.1.5 Source DFB Lasers

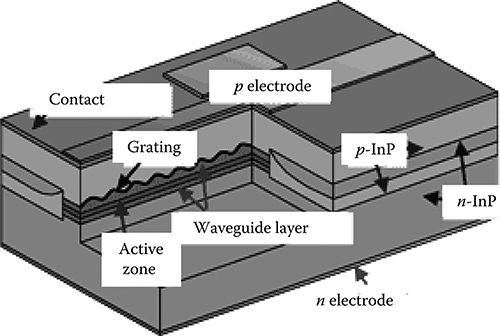

In a DFB laser the feedback is obtained by building a grating immediately on top of the active zone or on the side of it, influencing the propagation of the tail of the guided mode. Via interaction with the mode tail, the grating blocks the amplification of all the wavelengths, save the one resonating with the grating itself, thus guaranteeing single-mode operation.

Figure 5.11 RIN spectrum induced by relevant mode partition noise in a multimode FP laser.

(After Wentworth, R. H. et al., J. Lightwave Technol., 10(1), 84, 1992.)

TABLE 5.2 Characteristics of Practical FP Diode Lasers for Different Applications

The scheme of a DFB semiconductor laser is shown in Figure 5.12 while its single-mode spectrum is shown in Figure 5.13.

Altering the temperature of the device causes the pitch of the grating to change due to the dependence of the refractive index on temperature. This dependence is caused by a change in the semiconductor laser’s bandgap with temperature and thermal expansion.

Figure 5.12 Schematic section of a high-performance DFB laser.

Figure 5.13 Emission spectrum of a typical DWDM DFB.

A change in the refractive index alters the wavelength selection of the grating structure and thus the wavelength of the laser output, producing a narrow band wavelength tunable laser.

The tuning range is usually on the order of 6 nm for an ∼50 K (90°F) change in temperature, while the linewidth of a DFB laser is on the order of a few megahertz. Altering of the current powering the laser will also tune the device, as a current change causes a temperature change inside the device.

If this temperature sensitivity can be exploited to tune the laser on the opportune wavelength, it also implies that, in order to match the stability requirements of high-performance DWDM systems, the laser has to be temperature-stabilized. This is generally done through a Peltier temperature controller and a feedback control loop that assure sufficient temperature stability in the face of environment changes and the device heat production during working.

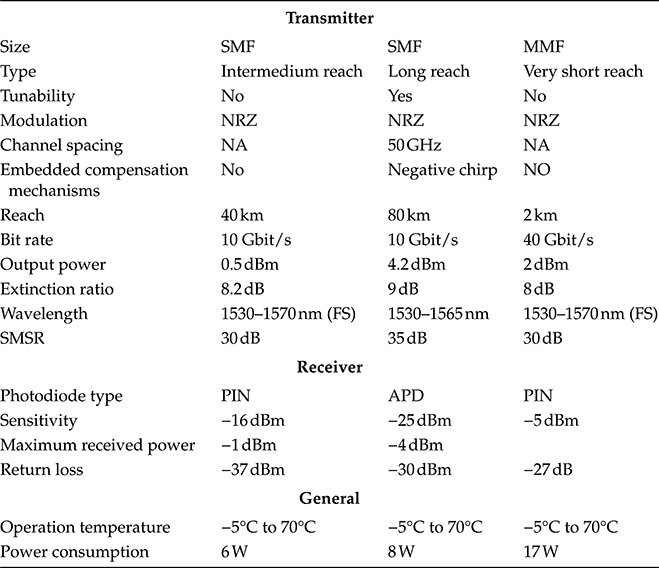

TABLE 5.3 Characteristics of Practical DFB Lasers for Use as Sources in Telecommunication System

There are generally two distinct types of DFB lasers. Traditionally, DFBs are antireflection-coated on one side of the cavity and coated for high reflectivity on the other side (AR/HR). In this case the grating forms the distributed mirror on the antireflection-coated side, while the semiconductor facet on the high reflectivity side forms the other mirror. These lasers generally have higher output power since the light is taken from the AR side, and the HR side prevents power being lost from the back.

Unfortunately, during the manufacturing of the laser and the cleaving of the facets, it is virtually impossible to control at which point in the buried grating the laser cleaves to form the facet. So sometimes the laser HR facet forms at the crest of the buried grating, and sometimes on the slope. Depending on the phase of the grating and the optical mode, the laser output spectrum can vary. Frequently, the phase of the highly reflective side occurs at a point where two longitudinal modes have the same cavity gain, and thus the laser operates at two modes simultaneously. Thus such AR/HR lasers have to be screened at manufacturing and parts that are multimode or have poor SMSR have to be scrapped. Additionally, the phase of the cleaving affects the wavelength, and thus controlling the output wavelength of a batch of lasers in manufacturing can be a challenge.

An alternative approach is a phase-shifted DFB laser. In this case both facets are antireflection-coated and there is a phase shift in the cavity. This could be a single 1/4 wave shift at the center of the cavity, or multiple smaller shifts distributed in the cavity. Such devices have much better reproducibility in wavelength and theoretically they are all single mode, independent of the production process.

A selection of characteristics of practical DFB lasers for use as sources in telecommunication system is reported in Table 5.3.

5.2.2 High-Power Pump Lasers

If the ability to produce laser diodes with a strictly monochromatic output field that are stable in wavelength and power is instrumental to design optical transmitters for DWDM systems, high-power durable pump lasers are equally instrumental to realize optical amplifiers.

The success of fiber amplifiers, both based on erbium-doped fibers and Raman effect, is largely due to the availability of high-power laser diodes at suitable pump wavelengths.

Considering laser diodes designed to pump optical amplifiers, the key performances to care for are the emitted power, the stability of the wavelength, and the ability to emit a mode that can be focused easily on the small spot represented by a fiber-optic core.

Reliability is also a key property of these lasers, due to the fact that the reliability of the whole amplifier is largely determined by the reliability of the pumps.

The lifetime of semiconductor lasers decreases more than linearly, increasing the average emitted power, due to the fact that the more photons are present inside the laser cavity, the faster is the growth of fabrication micro-defects up to a state where they thwart the laser working.

Thus a major challenge for the manufacturers of pump lasers is to assure a long lifetime without scarifying the emitted power.

Another key design point for this type of lasers is the power consumption, which has to be limited as much as possible.

For this reason, it is important to have a high number of degrees of freedom in designing pump lasers, a condition that is assured by the use of not only a carefully selected alloy of III–V semiconductors for each laser section, but also a strained multi-quantum well (MQW) structure in the active zone.

In this way, the emitted wavelength is determined mainly by the MQW and the threshold current can be reduced allowing the emission of the required power at a lower bias current.

Moreover, in order to maintain the structure as simple as possible and avoid local micro-defects due to complex fabrication processes, almost all the pump lasers have an FP structure.

Since the emission wavelength is determined by the MQW structure, the base laser material can be selected to assure stability and reliability to the laser, leveraging on suitable fabrication processes.

Typical wavelengths for pump diode lasers used in telecommunication amplifiers are 800 nm, 980 nm, and a wide band around 1480 nm, where almost all the pump lasers for Raman amplification are located.

A key parameter for a power laser that has to work as an amplifier pump is the brightness. It is defined as the maximum power density that can flow through the output mirror per unit area and per unit solid angle.

Whatever the potential output power, the real laser performance is limited by the brightness limit at which the output mirror undergoes catastrophic damage that ruins the laser working.

In order to improve this threshold, it is important to coat the output facets of the laser with suitable coatings that tune the mirror reflectivity to the optimal value.

Just to give an example, an uncoated InGaAs/GaAs laser emitting at 980 nm can have a brightness limit of 10 MW/cm2, while a similar uncoated laser realized in InGaAsP/InGaAs to pump at 1480 nm does not go over 5 MW/cm2.

A suitable coating realized with a set of dielectric films can raise these figures up to 20 and 15 MW/cm2 respectively, improving greatly the laser performances.

In Table 5.4 a set of characteristics of practical pump lasers for different applications is reported.

TABLE 5.4 Characteristics of Practical Pump Lasers for Different Applications

5.2.3 Vertical Cavity Surface-Emitting Lasers

The vertical cavity surface-emitting laser (VCSEL) is a type of semiconductor laser diode with laser beam emission perpendicular from the top surface, contrary to conventional edge-emitting semiconductor lasers, which emit from surfaces formed by cleaving the individual chip out of a wafer [14].

There are several advantages to producing VCSELs when compared with the production process of edge-emitting lasers.

Edge-emitters cannot be tested until the end of the production process. If the edge-emitter does not work the production time, the processing materials and especially the test cost in term of manpower have been wasted.

On the contrary, VCSELs can be tested at several stages throughout the process to check for material quality and processing issues.

Additionally, because VCSELs emit the beam perpendicular to the active region of the laser as opposed to parallel as with an edge emitter, tens of thousands of VCSELs can be processed simultaneously on a 3-in. wafer.

Furthermore, even though the VCSEL production process is more labor- and material-intensive, the yield can be controlled to a more predictable outcome.

The VCSEL laser resonator consists generally of two distributed Bragg reflectors (DBRs) parallel to the wafer surface obtained alternating deposited layers of different materials.

The active region consists of one or more quantum wells allowing the control of both the threshold current, which in this kind of laser design can get very high if the laser is not designed carefully, and the emitted wavelength through the quantum well’s structure.

The planar DBR mirrors consist of layers with alternating high and low refractive indices. Each layer has a thickness of a quarter of the laser wavelength in the material, yielding intensity reflectivity above 99%. High reflectivity mirrors are required in VCSELs to balance the short axial length of the gain region.

In common VCSELs the upper and lower mirrors are doped as p-type and n-type materials, forming a diode junction. In more complex structures, the p-type and n-type regions may be buried between the mirrors, requiring a more complex semiconductor process to make electrical contact with the active region, but eliminating electrical power loss in the DBR structure.

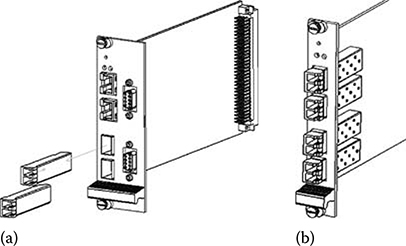

In Figure 5.14 three different VCSEL architectures are shown that essentially differ for the way in which the Bragg reflector is realized and, as a consequence, in the mirror’s structure and position.

Figure 5.14 Scheme of three different VCSEL architecture: A VCSEL relying on ion implantation zones for current confinement is shown in (a), A VCSEL achieving current confinement via a passivation zone is shown in (b) while a structure using two confinement zones on both sides of the wafer is shown in (c).

The architecture shown in Figure 5.14a is the simplest to realize, but also the one with lower performance. One of the fundamental problems of this architecture and, in some way, of all VCSELs, is the fact that the optical mode and the current are parallel, traversing the laser-active zone from top to bottom.

To realize current confinement, generally in this type of structure the active region is surrounded by a region where the current cannot penetrate, for example, a zone where a proton implantation has been done.

The architecture shown in Figure 5.14b presents a passivation level immediately on top of the active zone that has the role of confining the injected current, as the proton-bombed zone in architecture Figure 5.14a.

Finally, architecture Figure 5.14c is more complex, requiring to process both sides of the wafer, but provides also better current confinement and in general better performances.

In their telecommunication application, where long wavelength lasers are needed to match the fiber transmission windows, VCSELs have also some challenge to overcome to be suitable substitutes of edge-emitting lasers in low-performance applications.

The first and perhaps more important point is the emitted power. While long-wavelength VCSELs emit several milliwatts, moving the wavelength toward the telecommunication region the emitted power decreases and common VCSELs at 1550nm emit a power on the order of 0.1mW [15], even if particular architectures have demonstrated larger emitted powers.

An example of high-performance VCSELs [16] emitting at 1551 nm, whose optical power versus current characteristic is plotted in Figure 5.15, is reported in [16,17], demonstrating that progress in this direction is rapid and products are almost ready to hit the market with suitable emitted power characteristics.

A second important challenge is constituted by the operating temperature [18]. Long-wavelength VCSELs are quite temperature-sensitive, while their application target requires uncooled sources.

An example is shown in Figure 5.16 [102], where the temperature sensitivity of the bias current of the VCSEL considered in Figure 5.15 is shown.

Also from this point of view progress seems to be rapid.

Figure 5.15 Long-wavelength VCSEL (emitting at 1550 nm) current optical power characteristic.

(After RayCan, 1550 nm Vertical-cavity surface-emitting laser, 090115 Rev 4.0.)

Figure 5.16 Long-wavelength VCSEL (emitting at 1550 nm) current temperature characteristic.

(After RayCan, 1550 nm Vertical-cavity surface-emitting laser, 090115 Rev 4.0.)

The third point is direct modulation, which is another key characteristic for low-end source lasers for telecommunication equipment. This point, which was a key issue for a certain time, seems to be solved with more recent VCSEL structures, so that direct modulation at 1.25 and 2.5 Gbit/s seems a consolidate feature.

Moreover, the frequency response of long-wavelength direct-modulated VCSELs has an interesting flat characteristic.

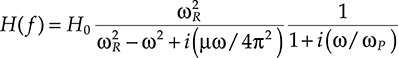

As a matter of fact, from the analysis of a high-confinement VCSEL it is possible to evaluate its response to frequency modulation by obtaining the following approximate equation that is valid for small modulation regimes [19]:

H(f)=H0ω2Rω2R−ω2+i(μω/4π2)11+i(ω/ωP)(5.13)

(5.13)

Figure 5.17 Spectral response to amplitude direct modulation for different values of the bias current of a long-wavelength VCSEL emitting at 1550 nm.

(After Hofmann, W. et al., Uncooled high speed (>11 GHz) 1.55 μm VCSELs for CWDM access networks, Proceedings of European Conference on Optical Communications—ECOC 2006, s.n., Cannes, France, 2006.)

where

ωR is the natural resonance frequency of the VCSEL

μ is the dumping factor of the relaxation oscillations

ωP is a characteristic parameter whose expression can be derived from more fundamental characteristics of the VCSEL and that represents the intrinsic low-pass response of the structure in the absence of laser effect due to parasitic and thermal effects

The frequency response deriving from Equation 5.13 is plotted in Figure 5.17 [19] for an experimental VCSEL emitting at 1550 nm up to 2 mW. The resulting modulation bandwidth is never lower than 10.8 GHz if the bias current is greater than 4.3 mA, qualifying this experimental VCSEL as a very interesting source for high-capacity access networks like G-PON and 10 G-PON and for coarse-wavelength division multiplexing (CWDM) systems.

5.2.4 Tunable Lasers

Almost all semiconductor lasers are tunable by controlling the laser working temperature. Thermal tuning is generally limited to few nanometers, but for devices specifically designed for tuning where it can reach 5–6 nm.

Thermal tuning is generally used to maintain the laser on the correct wavelength through contrasting changes during an operation due to aging and environment.

Another way of tuning a standard semiconductor laser is to change the current injection. As a matter of fact, continuous current injection changes the carrier’s density inside the laser cavity and causes a slight change of the emission wavelength.

Although this second method of tuning is even less effective than thermal tuning it indicates that there are two fundamental ways to tune a semiconductor laser: changing the index through a change in carrier concentration or changing the laser cavity length.

In all the cases, if tuning is achieved by index change, Δλ/λ ∼ r/r; if tuning is achieved by changing the cavity length, Δλ/λ ∼ L/L.

TABLE 5.5 Characteristics of Practical Tunable Lasers for Use as Transmitters in Telecommunication Systems

Network applications involving DWDM systems require a much wider tuning with respect to the potentiality of thermal or current injection tuning in order to use tunable laser effectively.

Generally tuning over an extended C band is required, that is, tuning over 40 nm, while retaining the characteristics of fixed-wavelength lasers.

Today technology offers essentially three types of widely tunable lasers: multisection lasers, external cavity lasers, and laser arrays [1]. In Table 5.5 the main parameters of practical tunable lasers for use as transmitter in telecommunication systems are summarized.

5.2.4.1 Multisection Widely Tunable Lasers

The simpler type of multisection widely tunable laser is a multisection DFB. Since a standard DFB can be tuned thermally only about 5 nm or less, wider tunability is achieved by creating a laser with multiple cavities. Nonuniform excitation along the cavity in DFB laser enables a change in the lasing condition.

Multi-electrode DFB lasers have been realized to achieve nonuniform excitation where the electrode is divided into two or three sections along the cavity [20].

The tuning function is provided by varying the injection current ratio into two sections. A typical geometry for this kind of laser is shown in Figure 5.18.

Tuning in these devices results from the combined effects of shifting the effective Bragg wavelength of the grating in one or several sections and the accompanying change of the optical path length for the change of the refractive index itself.

Combining these effects with thermal discontinuous tuning, a bandwidth on the order of 15 nm can be achieved, which is much more than pure thermal tuning, but it is not enough to cover the entire C band.

Much wider tuning is achieved using multisection DBR lasers.

A principal scheme of a tunable DBR is represented in Figure 5.19 [21]. The central region of the laser is an active region where a heterojunction is realized to allow population inversion and optical gain. The laser cavity is realized by cleaving one of the active region facets and providing, on the other hand, wavelength-selective feedback through a Bragg grating realized in a portion of the laser waveguide external to the active region, called the Bragg grating region.

Figure 5.18 Lateral section of a multisection tunable DFB laser.

Figure 5.19 Lateral section of a multisection tunable DBR laser.

Between the active region and the Bragg grating region there is an intermediate part of the waveguide that is used to adapt the field phase to the cavity length through the change of the refraction index caused by carrier injection. This zone is called phase adaptation zone.

In order to satisfy the resonance requirements, the laser mode has to satisfy the relation

ϕ1−ϕ2=2kπ![]()

where

ϕ1 and ϕ2 are phase change of the Bragg reflector in the active and the phase control regions, respectively

k is an integer

Phase change ϕ2 can be written as

ϕ2=βaLa+βpLp=(2πλ)(raLa+rpLp)(5.14)

![]()

(5.14)

where

subscripts a and p denote the active and phase control regions, respectively

β indicates a propagation constant

λ is the wavelength of the lasing mode

r is the refraction index

The phase ϕ1 accumulated by the lasing mode passing through the Bragg reflector can be derived starting from the properties of Bragg reflectors.

The characteristic parameter γ of the Bragg reflector can be defined, following [22], as

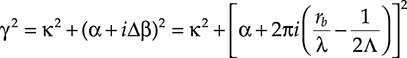

γ2=κ2+(α+iΔβ)2=κ2+[α+2πi(rbλ−12Λ)]2(5.15)

(5.15)

where Λ, κ, α, and rb denote the corrugation period, the corrugation coupling coefficient, the loss of the corrugated region, and the equivalent refractive index for the Bragg reflector, respectively.

The phase change experimented by the field when reflected by the Bragg reflector can be expressed as a function of the characteristic parameter γ and the overall cavity length L as

exp(iϕ1)=−iκrb1γtgh(γL)+(α+iΔβ)=−iκrb1γtgh(γL)+[α+2πi(rb/λ)−(1/2Λ)](5.16)

(5.16)

Substituting Equations 5.14 and 5.15 in Equation 5.16 the relation determining the possible lasing wavelength of the DBR laser can be obtained [1,21].

In particular, the laser will pass from one wavelength to the other when the index of the Bragg reflector section is changed by changing the carrier density with the injection of a current in the area; in general, however, the mode will start free oscillations only if the index of the phase matching section is suitably adjusted to satisfy the phase-matching condition (Equation 5.15).

It is clear that the phase-matching conditions can be attained contemporarily by a set of modes, due to the presence of the addendum 2kπ in the phase-matching condition.

Among the phase-matched modes only one starts laser oscillation: the mode having the highest cavity gain.

The cavity gain of a mode in the DBR structure is given by the gain of the gain section minus the losses in the phase-matching and Bragg sections. But these losses are again controlled by the carrier densities; thus the specific mode to start laser oscillations among those permitted by the phase-matching condition can be selected by correctly polarizing the three laser regions.

The DBR laser is a good tunable laser, but the tuning range is just slightly wider than 5 nm and never exceeds 10 nm, since it is essentially due to the change of the index in the Bragg section (the other indexes are changed to satisfy the phase matching and to select the correct mode, and do not directly contribute to tuning); thus the relationship Δλ/λ ∼ r/r still holds and the limited changing range of the refraction index defines the changing range of the emitted wavelength. It is possible to combine index-driven tuning with thermal tuning and in this way a tuning range as wide as 22 nm has been reached with simple DBR structures.

In order to achieve much wider tuning ranges, the tuning cannot be based only on index changes. Now it is useful to remember that only three causes can generate the tuning of a semiconductor laser structure [23]:

Index changes

Cavity length changes

Mirror wavelength-selective reflectivity tuning

Somehow all these three techniques have to be used if a tuning range twice the DBR maximum of 22 nm has to be reached.

This is possible by using a structure with multiple cavities and a short grating as reflector for each cavity. Sampled-grating DBR (SG-DBR) and super-structure grating DBR (SSG-DBR) lasers implement this idea in two different manners [24].

The sampled-grating design uses two different multielement mirrors to create two reflection combs with different free spectral.

The laser operates at a wavelength where a reflection peak from each mirror coincides. Since the peak spacing is different for various mirrors, only one pair of the peaks can line up at a time, so that, when the lasing wavelength passes from a couple of peaks to the others a big change of emitted wavelength is obtained with a small change of the mirror peak positions (Vernier effect [25]).

The scheme of a discrete tuning SG-DBR and a plot showing the idea of the Vernier effect are shown in Figure 5.20.

Even if this simple explanation of the SG-DBR principle seems to limit this kind of laser to step tuning, a suitable design can achieve also semi-continuous tuning, at the expense of a more complex control algorithm.

Most of the features of the SG-DBR are shared by the SSG-DBR [26] design. In this case, the desired multi-peaked reflection spectrum of each mirror is created by using a phase modulation of grating rather than an amplitude modulation as in the SG-DBR.

Periodic bursts of a grating with chirped periods are typically used. This multielement mirror structure requires a smaller grating depth and can provide an arbitrary mirror peak amplitude distribution if the grating chirping is controlled.

Using SG-DBR or SSG-DBR, discontinuous tuning ranges as wide as 60 nm have been reached, arriving in this way to oversatisfy the requirements.

On the positive side, they are compact, they maintain the properties of fixed-wavelength lasers, and, perhaps more importantly, they are suitable to be integrated with other devices leveraging the InP platform.

Figure 5.20 Lateral section of a multisection sampled grating DBR (SG-DBR) tunable laser. In the lower part of the figure the Vernier effect used to tune the laser by superimposing only one passband of the two gratings that create the laser cavity is also illustrated.

For example, if the output power has to be increased, it is possible to integrate immediately out of the laser, on the same waveguide, a semiconductor optical amplifier to achieve high output power, or if a compact component that can be directly modulated is needed, an InP modulator (either Mach–Zehnder or electro-absorption [EA]) can be integrated at the laser output.

Moreover, the typical reliability of integrated components is higher than that of components composed by different and possibly moving macroscopic parts.

On the negative side, there is the complex electronic control, which is more complex for more performing structures and the difficult processes needed to produce these lasers.

5.2.4.2 External Cavity Lasers

Limitation in tuning range experienced by integrated lasers when tuning is driven by the change of the optical cavity length is due to the fact that in integrated devices this is possible only by injecting carriers and changing the refraction index.

The situation is completely different in external cavity lasers, where the external part of the cavity can change its length with physical means in order to realize sufficient variations for very wide tuning.

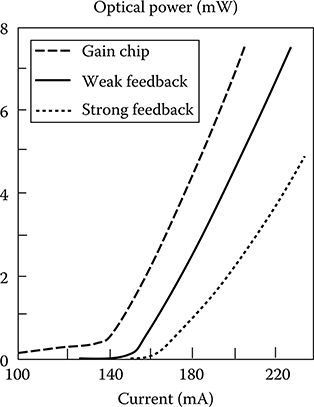

The principal scheme of an external cavity laser is shown in Figure 5.21: after reducing the reflectivity of the cleaved active chip facet, part of the emitted radiation is reintroduced in the cavity of an FP semiconductor laser from an external etalon so as to realize a twocavity system: the FP cavity inside the chip and the external cavity.

The effect of the feedback on the original laser emission can be analyzed by introducing two characteristic factors of the external cavity:

The field phase shift Δϕ = ω0τ experienced during a single external cavity round trip, where ω0 is the field angular frequency and τ = 2Lc/c is the cavity round trip, Lc being the cavity length.

The feedback strength κ, defined as the amount of optical power reinjected into the laser.

The feedback strength can be evaluated starting from the reflectivity RE of the external mirror and Rs of the facet of the chip obtaining

Figure 5.21 Schematic view of an external cavity laser.

κ=1−Rsτs√RERs(5.17)

(5.17)

where τs is the laser cavity round trip time.

Naturally the external cavity will influence the laser behavior only if the feedback is not too weak. In order to state a qualitative condition that will help us to understand the order of magnitude of the parameters, let us state that the feedback is relevant for the laser dynamics only if it is more powerful than the spontaneous emission. This seems a reasonable condition since it requires that the feedback be visible by the laser above the noise plateau.

Since in a real laser the reflectivity of the external mirror will be on the order of 0.5 to allow sufficient feedback and sensible output optical power, it can be put at approximately Rs/(1 − Rs) ≈ 1, so that the aforementioned condition becomes

RE≫(Rspτsr)2(5.18)

(5.18)

Equation 5.18 states an important property of external cavity lasers: the shorter the cavity (i.e., the smaller the τs), the more sensitive is the laser to small amounts of feedback. Thus, if the feedback has to strongly influence the laser behavior it needs to design a short cavity laser.

Moreover, it is to be noted that Rsp/r is practically proportional to the laser linewidth Δν (compare Equation 5.8), but for a factor on the order of 1. This factor, due to the condition ≫ appearing in Equation 5.18 can be neglected and the condition can also be written as RE ≫ (Δντs)2 showing that the purer the laser emission (i.e., the smaller the Δν), the more the laser is sensible to reflections.

In order to study an external cavity semiconductor laser, the rate equations have to be written taking into account the electrical field composing the optical wave and not the optical energy, since the feedback has to be represented in terms of the electrical field.

Neglecting the space dependence of the variables and writing the linearly polarized electrical field as E(t) = A(t) exp[iω0t + ϕ(t)], the external cavity rate equations may be written as follows:

ⅆAⅆt=12{g[N(t)−N0]−1τP}A(t)+κτicos[υ(t)]A(t−τ)ⅆϕⅆt=α2{g[N(t)−N0]−1τP}−κτisin[υ(t)]A(t−τ)A(t)ⅆNⅆt=J−N(t)τNA(t)−g[N(t)−N0]A2(t)(5.19)

(5.19)

where τi is the round trip time of the gain chip inside the laser and A′(t) = (κ/τi ) cos[ϑ(t)]A(t − τ) is the intensity of the delayed light injected from the external cavity to the active cavity. The overall ratio between the emitted and the reinjected intensity is indicated by κ and the phase coupling angle is given by the following formula:

υ(t)=ω0τ+ϕ(t)−ϕ(t−τ)(5.20)

![]()

(5.20)

In Equation 5.19 also the noise terms have been neglected, since we are interested in the analysis of the deterministic behavior of the laser.

From the solution of the rate equations the dynamic of the external cavity laser can be studied.

In particular the following property is derived. The laser operation is strongly dependent on the so-called feedback parameter defined as ξ=√(1+α2)κτ![]() , where α is the linewidth enhancement factor of the gain chip.

, where α is the linewidth enhancement factor of the gain chip.

If ξ < 1, the laser is in a regime of weak feedback, the laser cavity coincides with the chip cavity, and the feedback is a perturbation for the laser working. In this regime the rate equations can be solved using the perturbation theory where the “small” parameter is κ.

If ξ < 1 but κ ≪ 0.1, different regimes alternate with stable and unstable regions where, depending on the cavity length and feedback intensity, there is a strong mode hopping or a single-mode operation. However, when single-mode operation is achieved, the laser behavior in this region is quite unstable and changes in environmental parameters or aging can completely change the laser emission.

Moreover, laser tuning is difficult due to this instability. Thus this region is difficult to exploit practically.

If ξ ≫ 1 so that κ > 0.1, a stable operation regime of high injection is reached. The laser cavity is de facto the extended cavity (i.e., the chip plus the external cavity) and the presence of the chip internal facet can be considered a small perturbation to the extended laser working.

In this regime the laser is quite stable, its linewidth is sensibly reduced with respect to the value of the free running chip laser, and tuning can be operated by changing the cavity length without altering laser stability and without provoking mode hopping.

This is the regime in which practical external cavity tunable lasers work.

Considering from now on only the strong feedback regime, the laser output power can be evaluated once the exact laser structure is known. Considering in particular the structure in Figure 5.21, the output power is given by

Po=ηħωɛ(J−Jth)[ln(1/√Rs)αLi+ln(1+/TRsRi)](5.21)

(5.21)

where

η is the chip quantum efficiency

ε is the dielectric constant

Li is the length of the gain chip

T is the total loss internal to the external cavity (e.g., focusing lenses)

Ri is the reflectivity of the cleaved chip facet

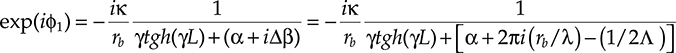

The aforementioned equations for power output indicate that, for a given injection current, the external-cavity laser generally has a somewhat lower power output than that of the solitary diode laser and, moreover, has higher threshold current, as shown in Figure 5.22.

As far as tunability is concerned, the emitted frequency is given, with a very good approximation, by the simple equation

Figure 5.22 Modification of the current optical power characteristic of an FP laser as a consequence of the antireflection coating of a facet and the addition of an external cavity.

ω=2π(Lir+Lcc+k)(5.22)

![]()

(5.22)

where k is an integer. Also in this case, among all the possible oscillating modes, generally only one experiences spontaneous oscillations, that is, the mode having the greater net cavity gain.

Different schemes have been proposed to change the external cavity length, but in practice two have been adopted in commercial products: the use of a wavelength-selective liquid crystal mirror that can be tuned by the applied voltage and a mechanically movable grating mounted on a micromachining electromechanical switch (MEMS) actuator.

In both cases the selectivity of the tunable mirror does not assure stable single-mode operation. On the other hand, a tunable laser for application in DWDM transmission does not need to be continuously tunable; on the contrary it has to be restricted to the International Association for Standardization in Telecommunications (ITU-T) DWDM wavelengths.

Taking into account such a restriction, sufficient wavelength selectivity can be achieved by inserting a solid-state etalon in the laser cavity, whose FSR coincides with the ITU-T grid spacing.

In order to have good control of the optical signal reinjection and to avoid the creation of spurious cavities, special gain chips are produced with a curve-active waveguide, so that the entire cavity appears at the end to be a curve.

The resulting more practical scheme of the external cavity laser is shown in Figure 5.23.

Beside tunability, one of the most interesting features of the high-feedback external-cavity laser is its narrow linewidth. By extending the optical cavity length, the spontaneous recombination phase fluctuation in the laser linewidth can be dramatically reduced. Since resonance oscillations are damped by the small reflectivity of the internal-gain chip facet, the linewidth can be considered Lorentian with an FWHM given by

Δv=ħω0P0gRsp(Δvf)2αt(1+α2)(5.23)

![]()

(5.23)

Figure 5.23 Practical implementation of an external cavity laser based on a liquid crystal mirror and on a curved cavity gain chip.

where

P0 is the power in the mode

Rsp is the number of spontaneous emission photons in the mode

g is the local gain

Δvf is the free running linewidth of the gain chip

The linewidth enhancement factor of the gain chip is indicated as usual with α.

The total cavity loss is indicated with αt=a−ln(T2√RsRi)![]() , where a is the optical propagation loss.

, where a is the optical propagation loss.

It is not difficult to derive from Equation 5.23 substituting realistic values to the parameters that the external cavity reduces the linewidth of the gain chip of a factor that can be something like 5 or 7 so that external-cavity lasers with a linewidth of 250 or 500 kHz are used as sources in DWDM systems.

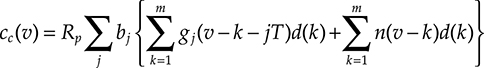

5.2.4.3 Laser Arrays

Laser arrays, where each laser in the array operates at a particular wavelength, are an alternative to tunable lasers. These arrays incorporate a combiner element, which makes it possible to couple the output to a single fiber.

If each laser in the array can be tuned by an amount exceeding the wavelength difference between the array elements, a very wide total tuning range can be achieved.

The DFB laser diode array might be the most promising configuration for wavelength division multiplexing (WDM) optical communication systems due to its stable and highly reliable single-mode operation.

Figure 5.24 Block scheme of a laser array used as a tunable laser.

A possible scheme of a laser array used as tunable laser is sketched in Figure 5.24, where a free space propagation (FSP) region, similar to that used in array waveguides (AWGs), is used to collect the laser output and an SOA to compensate losses.

In principle, this is the easiest way of realizing a tunable laser since it is simply a collection in a single chip of a set of well-known devices: DFB lasers, passive waveguides, an MEMS deflector or an FSP, in case of an output SOA to reinforce the signal.

In practice, the real difficulty consists exactly in creating a chip where all these components are realized together.

In particular, two kinds of implementation problems arise, design and fabrication problems.

From a design point of view, thermal and electrical isolation of all the components is not a trivial problem, since the component footprint has to fit into a standard laser package. Moreover, the design has to be so accurate that all the individual lasers hit the correct wavelength region so that each laser can be tuned on the frequencies for which it is designed by simple thermal tuning.

In case an MEMS deflector is used to focus the light emitted from the laser that is currently switched on onto the output fiber, this element has to be realized with great care for reliability. As a matter of fact, laser interfaces are the elements with a smaller lifetime in a DWDM terminal even when fixed lasers are used. Further shortening of the laser life span is not acceptable from a system point of view.

On the other hand, if an FSP element is used it could be difficult to have a sufficiently high output power without an amplifier at the output of the FSP. In this case, besides the need of a very low-noise and wide-spectrum amplifier, further integration problems could arise.

However, laser arrays have been the first “tunable lasers” to hit the market and they still are a type of tunable lasers widely used in practical systems.

5.3 Semiconductor Amplifiers

Before closing the section on semiconductor lasers a brief presentation of an SOA is needed.

If the reflectivity of the chip facets is eliminated from the structure of an FP laser the chip cannot generate self-oscillation due to lack of feedback, but it can maintain an optical gain due to population inversion in the heterojunction depletion zone.

The result is a traveling-wave semiconductor amplifier.

The dynamics of an SOA is from several points of view similar to that of an ideal amplifier described in Section 4.3.1 with the advantage of having a broad gain curve and a small form factor. Two phenomena limit the use of SOAs in telecommunication systems as signal amplifiers:

As reported in Table 5.1 the typical carrier’s lifetime is on the order of a few nanoseconds, that is, the order of magnitude of the bit time in 10 and 40 Gbit/s transmissions. Thus we have to expect that gain saturation happens during the amplification of signal pulses creating nonlinear pulse distortion as analyzed in Section 4.3.1.3.

The carrier dynamics in a semiconductor creates a link between the phase and the amplitude of the amplifier field so that pure amplitude gain cannot be achieved, but an induced chirp is always present.

Due to these elements the only practical use of SOAs as linear amplifiers is in InP-integrated components where an SOA section is often used to compensate the losses of attenuating components like multiplexers.

The nonlinear dynamics of SOAs is on the contrary an opportunity if they are used not as linear amplifiers but as elements in optical processing circuits.

This is the reason why a more detailed analysis of SOAs, with a particular attention to their nonlinear dynamics, will be delayed up to Chapter 10, where optical signal processing in new-generation networks will be discussed.

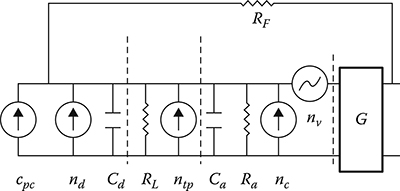

5.4 PIN and APD Photodiodes

A photodiode is in general terms a diode whose structure has been designed so as to allow the incoming light beam to reach a zone very near to the p–n junction [27].

If the junction is inversely polarized, all the carriers created by absorbed photons are immediately removed from the junction area due to the inverse polarization field and constitute the photocurrent.

Since the number of generated carriers is proportional to the incoming photon number, the intensity of the photocurrent is proportional to the optical power, so that a photodiode performs quadratic detection of the incoming field.

Since for telecom applications the interesting wavelength region is the near infrared, telecom photodiodes are built using materials with a high-absorption coefficient in this region of the spectrum.

Almost all the photodiodes used in telecom equipments are built using III–V alloy like InGaAsP or InP in order to optimize the absorption in the desired wavelength region [105], but the research on new materials has recently pointed out new alternatives that could give good results in the future [28,29].

Essentially two types of photodiodes are used: PIN photodiodes, where the junction is a p–i–n junction so as to optimize the absorption efficiency, and Avalanche photodiodes (APD), where internal gain is achieved by the avalanche multiplication of the photo-generated carriers.

Considering PIN photodiodes, the first important characteristic is the quantum efficiency η of the photodiode, defined as the probability that an incoming photon will generate a carrier couple that will contribute to the photocurrent.

The expression of the quantum efficiency is given by

η=(1−f)Γ(1−e−αd)(5.24)

![]()

(5.24)

where

f is the facet reflectivity, so that (1 − f) is the probability that the photon is not reflected

α is the absorption coefficient of the junction zone

d is its thickness, so that (1 − e−αd) is the probability that the photon is absorbed in the junction zone creating free carriers

Γ is the probability that the created carrier exits from the junction depletion zone without recombination

From Equation 5.24 the importance of the absorption coefficient is evident and it is this characteristic that selects the photodiode material once the wavelength region is determined.

The photocurrent created by a PIN photodiode as a consequence of the detection of a light beam is composed by four components:

The first term is constituted by the photon-generated carrier flux that is given by cpc = ηqΦ, where q is the electron charge and Φ is the photon flux, that is, the number of photons per unit time arriving on the photodiode front area. It is important to underline that Φ is a random variable, corresponding to the measure of the quantum operator number (nˆ) relative to the incoming field quantum state.

In almost all the telecommunication applications, the incoming field can be considered a time sequence of quasi-stationary coherent states; thus Φ is a Poisson variable whose parameters slowly change as a function, for example, of the field modulation. Assuming a constant incoming field and separating the average and the fluctuations, the photo-generated carrier flux can be written as

cpc=qη〈Φ〉+qη(Φ−〈Φ〉)=RpP+nsh(t)(5.25)

![]()

(5.25)

where P is the power of the incoming optical beam, Rp = q η/ħω is the so-called photodiode responsivity, and the random term nsh(t), which is in all respects a noise term, is the so-called shot noise. The shot noise has a distribution determined by the fact that the photon flux is a Poisson variable and that an incoming photon has a probability η of generating a photocarrier.

Thus, using the probability composition law, the probability distribution of the photocurrent flux can be written as

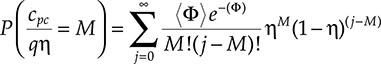

P(cpcqη=M)=∞Σj=0〈Φ〉e−(Φ)M!(j−M)!ηM(1−η)(j−M)(5.26)

(5.26)

However, if the number of photons arriving on the photodiode in the relevant time unit is much higher than 1, the distribution of the number of photocarriers can be assumed Gaussian, with a standard deviation equal to the incoming optical power.

The second current term is the dark current, which is the current that always pass through an inversely polarized p–i–n junction.

The third term is the current generated by thermally excited carriers and, finally, the fourth is the background noise, which is the current generated by the detection of environmentally generated photons.

Summarizing, the photocurrent is composed of four terms, represented in the following equation:

c(t)=RpP(t)+nsh(t)+ndark(t)+nth(t)+ne(t)(5.27)

![]()

(5.27)

The noise term can be considered in general as Gaussian white noise.

Equation 5.27 holds as far as either the incoming signal bandwidth does not exceed the photodiode bandwidth or the incoming power does not go above the photodiode saturation power.

In the first case, due to the finite time response of the photodiode essentially determined by spurious and junction capacities, the photodiode works as a lowpass filter, cutting too high frequencies.

In the second case, the linear relation between average current and optical power does not hold anymore and the photocurrent tends to saturate, increasing the incoming optical power.

The second type of photodetector is the so-called APD [30].

APDs are high-sensitivity, high-speed semiconductor light sensors. Compared to regular PIN photodiodes, APDs have a depletion junction region where electron multiplication occurs, by application of an external reverse voltage.

The resultant gain in the output signal means that low light levels can be measured and that the photodiode bandwidth is very large. Incident photons create electron–hole pairs in the depletion layer of a photodiode structure and these move toward the respective p–n junctions at a speed of up to 105 m/s, depending on the electric field strength.

If the external bias increases this localized electric field to about 105 V/cm, the carriers in the semiconductor collide with atoms in the crystal lattice with sufficient energy to have a nonnegligible ionization probability. Ionization creates more electron–hole pairs, some of which cause further ionization, giving a resultant gain in the number of electron–holes generated for a single incident photon.

Naturally the avalanche process is not a deterministic one and the generated gain also implies a multiplication noise that adds to the other noise terms typical of a PIN photodiode.

The excess noise is generally represented as a sort of shot noise amplification, so that the expression for the power of the shot noise term has to be modified as follows:

σ2shot=2G2meRpPoFA(5.28)

![]()

(5.28)

where

Gm is the avalanche gain

FA is the excess noise factor, which is a function of the ionization ratio ri, and can be written as [31,32]

FA=riGm+(1−ri)(2−1Gm)(5.29)

(5.29)

Besides multiplication noise, when using an APD, it is to take into account that the dark current noise also is amplified by the avalanche mechanism.

In order to give a correct expression of the amplified dark current noise, one has to take into account that dark current can be divided into two components, depending on the physical phenomenon generating it.

There is a bulk dark current and a surface leakage dark current; the first is really amplified by the APD, and the second does not undergo amplification due to the fact that it is a surface phenomenon.

Thus, indicating with Sbd and Ssd the spectral densities of the two components, the final APD dark current power in the detector bandwidth will be [32]

σ2dc=GmSsdBe+G2mSbdFABe(5.30)

![]()

(5.30)

where Be is the electrical bandwidth of the front end immediately following the photodiode.

5.5 Optical Modulation Devices

5.5.1 Mach–Zehnder Modulators

As we have been discussing the modulation performances of semiconductor lasers at 10 Gbit/s and more it is very difficult to transmit at long distance using laser direct modulation due to the limited modulation bandwidth and to intrinsic chirp associated to it.

Among the external modulators those having better performances, if used in telecommunication equipments, are based on a Mach–Zehnder architecture where the interferometer arms are realized with a material exhibiting a strong electro-optic effect (such as LiNbO3 or the III–V semiconductors such as GaAs and InP) [33,34].

For telecommunication applications, the ones most used are those based on LiNbO3 and on InP.

By applying a voltage on the interferometer branches through suitable electrodes, the optical signal in each path is phase-modulated as the optical path length is altered by the variable microwave electric field.

Injecting an optical field in the interferometer and combining at its output the fields coming from the two branches of the phase modulation it is converted into amplitude modulation due to interference.

If the phase modulation is exactly equal in each path but different in sign, the modulator is chirp-free; this means that the output is only intensity-modulated without incidental phase (or frequency) modulation.

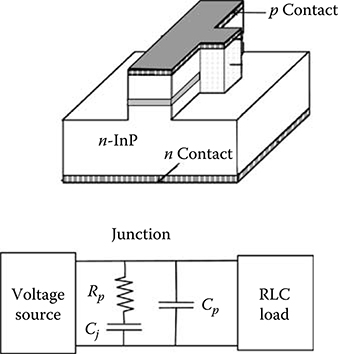

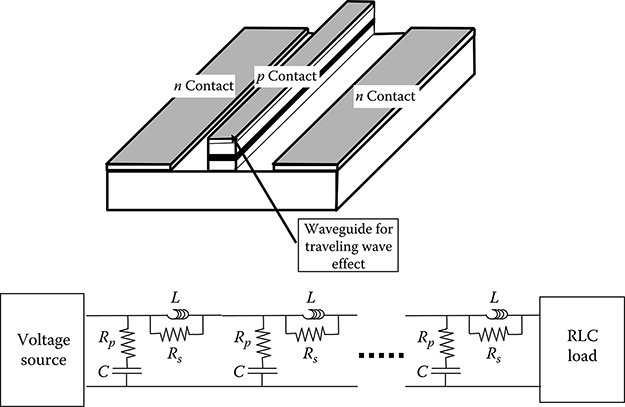

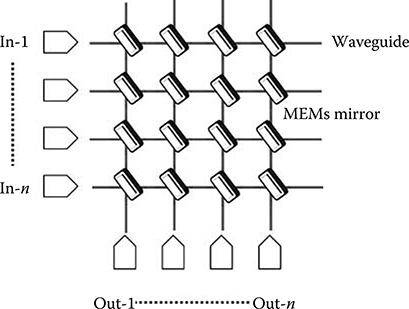

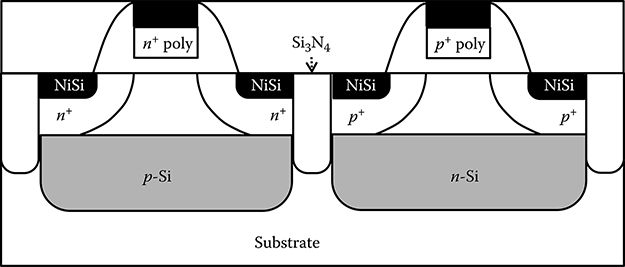

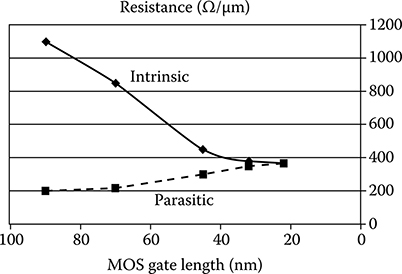

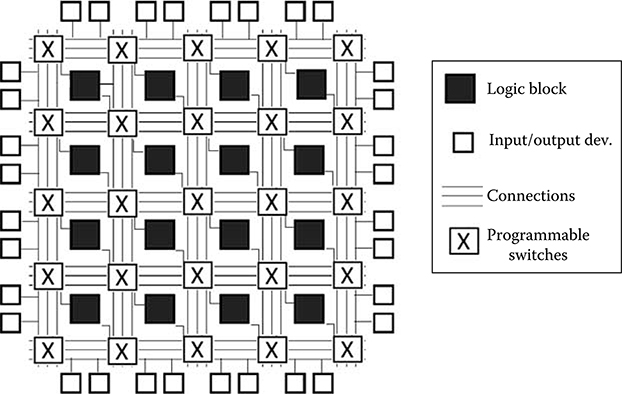

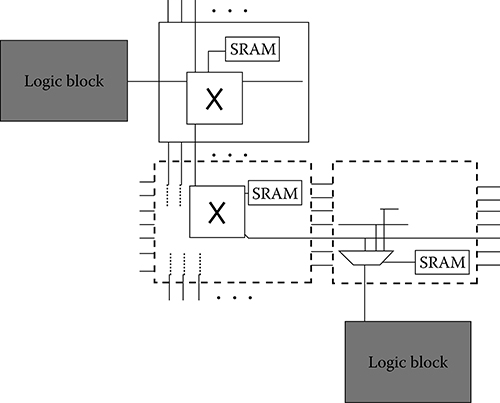

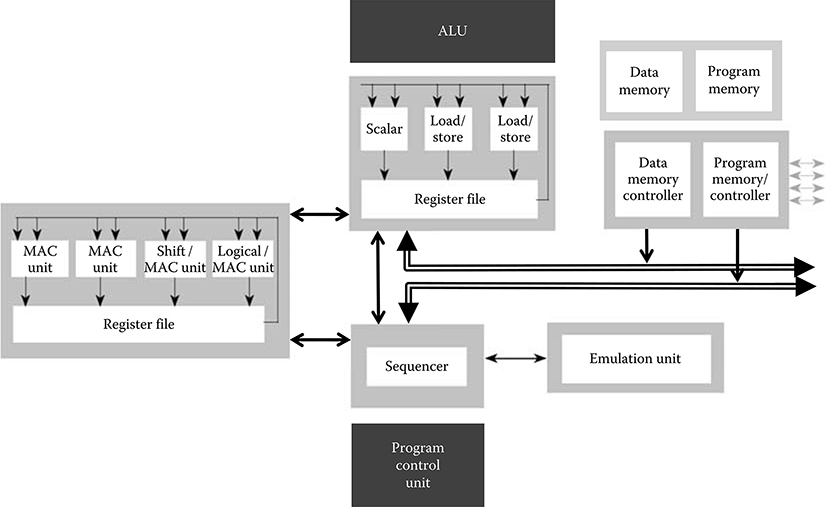

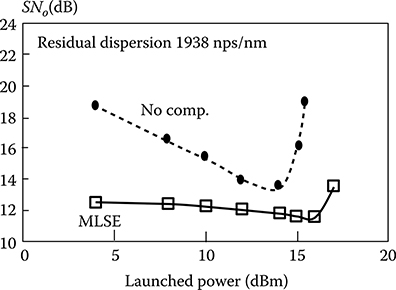

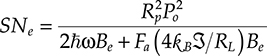

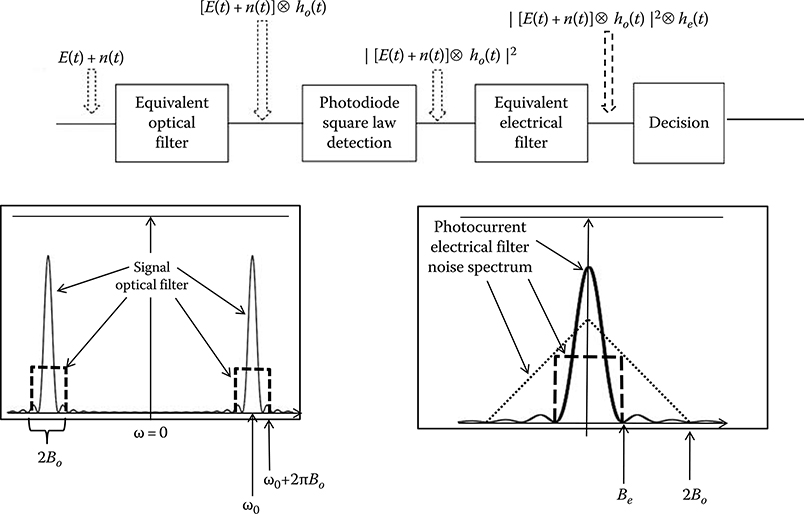

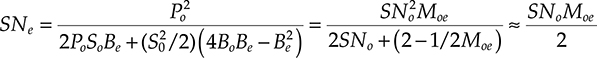

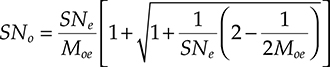

In Figure 5.25 the schematic of a Mach–Zehnder modulator is shown with a particular reference to a LiNbO3 modulator.