8 Convergent Network Management and Control Plane

8.1 Introduction

In Chapter 2 we have seen that the main target of major telecommunication carriers is to decrease the operational expenditure (OPEX) necessary to maintain and operate the network. In Chapter 3 we have seen that there are different implementations of IP over optical architecture, but it is a fact that the old architecture made by two independent networks, one for data and one for telephone, has almost disappeared everywhere.

With the convergence of transport over one architecture, independently on the service bundles offered by the carrier to different categories of customers, also the division of the network management in two completely different architectures becomes inefficient: central management for the physical transport (SDH/SONET and optical transport network [OTN]) and automatic control plane for the packet layer (IP and Ethernet) have to merge.

As a matter of fact, with the network technology evolution and the introduction of a great number of new services, static centralized management is demonstrated to be not suitable for the carriers needs.

It is true that TMN-based management had the merit of introducing a never before seen ability of controlling the network and of assuring performances and reliability, but with a traffic more and more dynamic, the great number of manual operations required by centralized network managers are a source of both costs and errors.

On the other hand, the packet layer is managed through an automatic control plane that, even if is sometimes poor in terms of reporting with respect to the carriers expectations, is very effective in decreasing costs and eliminating errors.

Thus, it is quite natural to envision a new control plane that is capable of automatically managing all the network layers in an integrated manner and is also sufficiently rich in functionalities so as to be at least comparable with traditional TMN.

Different initiatives are ongoing to create new control plane standards. In particular, ITU-T, the world standardization institute that is traditionally carrier-driven, has developed the automatic switched optical network (ASON) standard with the aim of providing a framework for the development of the convergent network control plane [1].

Also IETF, that is the facto the Internet standardization body and is traditionally vendordriven, has created the generalized-multiprotocol label switch (GMPLS) protocol suite, a complete control plane whose standardization is in some parts still ongoing, whose target is to generalize the ideas at the base of MPLS [32, 33, 34] to be able to manage not only packet-oriented networks, but also circuit-oriented networks like the WDM layer or the next generation SONET layer.

At a first glance, it could seem that ASON and GMPLS are two competing standards and that only one of them will prevail with time, but this is completely false. As a matter of fact, ASON is not a protocol suite, even if it gives a lot of recommendations about protocols, but it is more an architecture and a framework in which the protocols of the new control plane have to fit. Thus, it is well possible to fit GMPLS protocols in the ASON architecture, even if this is not completely free of work since the two standards were born independently.

The work of fitting GMPLS into the ASON architecture is ongoing in another industry standardization group: the Optical Internetworking Forum (OIF). The OIF is issuing a series of documents called implementation agreements (AI) [2] whose role is exactly to fit GMPLS into the ASON architecture pointing out where there are difficulties, corrections to be applied to existing GMPLS protocols or different interpretations of the ASON architecture.

Moreover, OIF is promoting a wide experimentation activity whose goal is to set up test beds for the new control plane that verify the functionalities of its parts. This is a very important activity since, if there is a sort of progressive experimental verification of the functions of the new control plane, they can be introduced gradually into the carrier networks, thereby distributing the costs over a long time and allowing the new procedures to be introduced progressively.

This chapter is divided into three parts: in the first we will analyze the ASON architecture, in the second we will review the GMPLS protocol suite, and in the third we will discuss how a multilayer network based on an integrated control plane can be designed and optimized.

8.2 ASON Architecture

8.2.1 ASON Network Model

Historically the control plane accompanies the packet-switched networks, which are not supposed to have a rich management plane as the transport networks. The first point to solve if a control plane is to be developed so to manage a whole network how will the control plane protocols and the network manager interact and how will they divide the functions.

As a matter of fact, several control plane functions are performed in traditional transport networks by the central network manager so that both control plane and network manager have to be redefined in a functional sense.

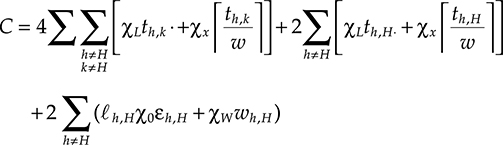

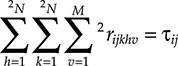

The ASON architecture states that the control plane is responsible for the actual resource and connection management within an automatically switched network (ASN). The control plane resides in a distributed network intelligence constituted by the optical connection controllers (OCCs); the OCC can run in separate workstations as the element managers but generally directly reside into the control unit of the equipments dedicated to switching at each network layer. The OCCs are interconnected via the interface called network to network interfaces (NNI) and run the control plane protocol suite having the following functions:

Network topology discovery (that is, resource discovery)

Address assignment to an equipment port when it is discovered and address advertisement to all the networks

Signaling (connections setup, management, and tear down)

Connections routing

Connection protection/restoration

Traffic engineering

Resource assignment (e.g., wavelength assignment in the WDM layer, time slot assignment in the SONET layer etc.)

The management plane is responsible for managing the control plane. Its responsibilities include the following:

Configuration management of the control plane resources

Definition of the administrative areas

Control plane policies definition

Few of the traditional network manager functionalities should also be maintained on the data plane entities:

Fault management

Performance management

Accounting and security management

The management plane is constituted by the central management unit, which runs the network manager, and the local management units, which run the element managers, and by the management network that connects all the entities of the management plane.

The central network management entity is connected to an OCC in control plane via the network management interface for ASON control plane (NMI-A) and to one of the switches via network management interface for the transport network (NMI-T).

Using these interfaces, the management plane can access to all the control plane entities via the control plane connectivity to perform control plane management and to all the data plane entities to perform reporting of data plane status and those functionalities that are responsibility of the management plane. The management structure of the ASON network is illustrated in Figure 8.1.

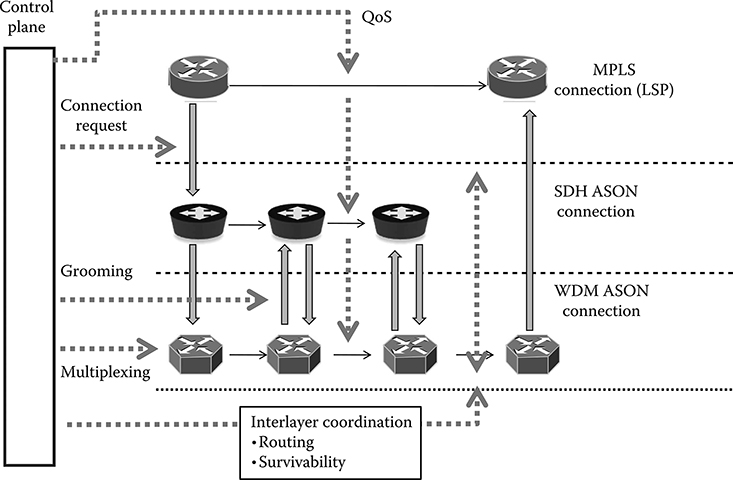

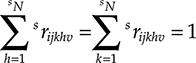

The network model on which the ASON standard is based is a multilayer model in which a single control plane coordinates the activity of all the layers. This vertical structure is reproduced in Figure 8.2, where there is evidence to show that all the layers define a specific physical or virtual connection, so that in its whole we can talk about a virtual connection–oriented network.

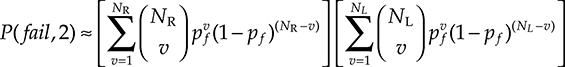

Connectionless layer can be clients of an ASON network, but cannot be managed by an ASON control plane. The ASON network is intrinsically a multilayer network and different network topologies can be defined at each layer. Let us refer just for an example to Figure 8.3 where a three layer network is represented.

At the base physical layer, there is the set of fiber cables represented by gray bold lines and the set of buildings that are the carrier points of presence in the area.

In all the buildings there is an optical cross connect (OXC), but not all the cables are equipped with WDM systems, quite a realistic situation due to the fact that cables are generally deployed with long-term plans that are completely independent of the network that will be realized on the cable infrastructure.

The WDM layer has probably different clients, one of that is an MPLS layer composed by a set of label-switched routers (LSRs). Not in all the nodes there is a LSR, but only in the nodes where such a machine is needed. As a consequence of this complex network architecture, three network topologies can be proposed: a physical network topology composed by cable ducts and buildings, a WDM virtual network topology composed by WDM systems and OXCs, and a MPLS virtual network topology composed by LSRs with the connectivity that is offered to them by the underlying transport layer

Figure 8.1 ASON standard layered model for the Automatic Switched Network management; standard interfaces between management and control plane (NMI-A) and between management and data plane (NMI-T) are shown.

Figure 8.2 ASON Network model: a multilayer network in which a single control plane coordinates the activity of all the layers.

Figure 8.3 Scheme of a three layer network where the deployed equipment is shown in the physical topology plot (left side) and the virtual topologies at each layer are evidenced as graphs (right side).

The different topologies are shown as graphs in Figure 8.3.

The situation of this example can generally be replayed in all multilayer network architecture, identifying a specific topology (physical of virtual) at each layer of the network. Identifying the correct topology is very important since in a multilayer network managed by a single control plane, every routing element refers for its routing protocols to the topology that is specific to that layer.

Under a horizontal point of view, ASON is organized in administrative domains. The administrative domain is a part of the network that has its own autonomy, for example, the network belonging to a carrier in the framework on a national multicarrier network.

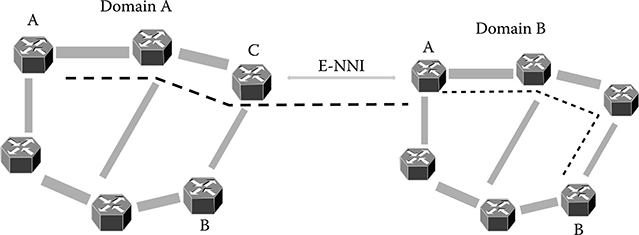

The concept of administrative domain is needed to allow the network to hide its own internal data to the overall network and to encapsulate all the internal addressing into a global network address so that they cannot be seen by other administrative domains. This hiding and encapsulating strategy has a twofold function: it is needed in order to allow carriers to hide data relative to their network to competitors even if roaming among different carriers have to be provided by the control plane, but it is also needed to reduce the dimension of the routing tables in the network routing elements. As a matter of fact, when the network is divided into administrative domains, every switching element stores the complete topology of its own domain and a metatopology relative to the connections with different domains. The partition of the ASON network is shown in Figure 8.4.

Figure 8.4 Domain partition of the ASON network model.

When a connection has to be set-up with a node in a different domain, the precise node address is encapsulated into the global domain address. The switch that has to set up the connection simply send a connection set-up protocol to the point of contact with the destination domain and it will be this node that is inside the domain of the connection end point to complete the connection setup establishing the path into the destination domain. This procedure is shown in Figure 8.5, where the logical working of encapsulation is shown.

Let us image that the address of every node is a couple of letters, so that node A of domain A has an address (A, A). Let us image that a connection has to be established from (A, A) to (B, B). The node (A, A) receives the instruction to open a connection to (*, B). The exact destination node address is hidden since it belongs to a different administrative domain whose topology is not known to node (A, A).

Figure 8.5 Example of inter-domain routing in an ASON network.

Node (A, A) has a routing table knowing that the external network to network (E-NNI) interface toward administrative domain B is between node (C, A) and (A, B) so node (A, A) sets up a link to node (C, A) that forwards the link through the interdomain interface to node (A, B).

Now Node (A, B) knows that a connection between node (*, A) is to set up with node (B, B) starting from the connection that was set up through the interdomain interface with domain A.

Node (A, B) knows exactly from the routing table the position within domain B of the node (B, B) and set up the second part of the connection, represented in the figure by the dotted line.

Besides the separation of information belonging to competitor carriers, in our example the administrative domain separation cause every node to store a topology made of six nodes: five in the same domain and one is a virtual node that is the connection with the other domain.

If all the information was shared among all the network nodes, every node should store a topology of 11 nodes. The concept of administrative domain is also a powerful instrument for the gradual introduction of the ASON architecture in carrier networks. As a matter of fact, an abrupt introduction of a new architecture in a big network like that of an incumbent carrier is not feasible. The new technology is in general introduced gradually in pace with CAPEX expenditure and market request.

Thus during the introduction of the ASON control plane, for a long time there will be parts of the carrier network that will include subsets of ASON features, possibly different subsets depending on the circumstances and on the kind of equipment the carrier has installed in that part of the network, and parts of the network that are not yet migrated.

The network has to work in this hybrid situation without any service disruption: this can be achieved by defining every area of the network where there is a different ASON-compatible technology as a different administrative domain, and interface these administrative domains with those parts of the network where ASON is not yet implemented by suitable interfaces.

Besides the administrative domains, the ASON standard defines another horizontal entity: the routing area. The routing area is generally a subpart of an administrative domain that has the characteristic to be represented by other routing areas like a single virtual node.

Thus, exactly like for the administrative domain, when a node external to the routing area wants to set up a connection with a node inside the routing area it sends the connection setup protocol to the virtual node representing all the routing area that physically corresponds to the point of contact of the destination routing area with the origination one.

Only when the connection setup protocol arrives at the gateway of the routing area it completes the connection setup inside the area. Differently from the administrative domain, routing areas are not insulated under an administrative point of view and the internal information is not completely hidden.

This is due to the different destination of the routing areas with respect to the adminis-trative domains: routing areas are used inside a big administrative domain to reduce the complexity of the address tables of the routing entities by introducing a routing hierarchy while the administrative domain serves mainly to divide parts of the network that have to be administrated independently either because they are owned by different carriers or because they are built on different technologies.

8.2.2 ASON Standard Interfaces

In correspondence to the structure of the network in administrative domains, the ASON standard defines a set of interfaces that are conceived for compatibility between different equipments and different particular implementations of the control plane.

As a matter of fact, it should be remembered that ASON is not a complete protocol suite, but more a reference architecture. Thus it is well possible that different vendors use different specific sets of protocols, all ASON-compatible.

In this case, naturally every different protocol suite will be confined to a different administrative domain and suitable interfaces will guarantee the cross-compatibility.

The first standard interface that is the optical user network interface (O-UNI). This is the interface connecting into the data plane the client equipment with a network equipment through an optical signal.

Somehow the O-UNI is a limited functionality interface, since it has also to shield the network from any improper client activity that could either interfere with the network working or try to detect information about the network that the carrier wants to hide to the customer.

The activity of the O-UNI is thus limited to resource discovery, signaling and, naturally, data exchange. In particular, the following protocols flow through the O-UNI:

Connection request and creation

Connection parameters change

Connection delete

Client identity change

Data transit

Just to do an example, it is through the O-UNI that the Ethernet switch belonging to a corporate network asks to the public network the creation of a virtual connection for a data center back-up operation.

In particular, the ASON-enabled Ethernet switch sends through the O-UNI a suitable signal to the multilayer node to which it is connected for specifying the request of a mission-critical data connection between two points of the network. The desired format is Gbit Ethernet and the network back-up service foresees a specific service level agreement.

The ASN uses all the control planes to provision the connection and, when the connection is correctly provisioned, a return message is returned through the O-UNI to the switch that starts the data transfer.

At the end of the back-up, the private network manager decides not to destroy the connection, but to reduce its capacity to 100 Mbit/s and to use it for another scope. This step is signaled through an OUNI-compatible protocol to the network that makes all the steps that are needed to change not only the capacity of the connection but also the related SLA. All the public network planes are involved in the operation: the data plane has to be suitably reconfigured by the control plane protocols so as to find an efficient way to satisfy the customer request (in case the network could also decide to destroy the old connection and to set up a new one) while the management plane is engaged in guaranteeing, among the other things, that the SLAs with other customers are not violated, that the billing is done in the correct way and that all is reported to the central management place.

After a certain time also, the new connections become useless, at that point the switch of the private network uses the O-UNI-compatible protocol to delete the connection, an operation that forces the network to a connection tear down.

A second interface completely included into the data plane is the E-NNI interface, that is, the interface that is defined to connect different administrative domains.

Due to its definition, the E-NNI can connect areas of the network that are managed by different carriers, but also areas that implement different ASON-compatible protocol suites.

Due to the fact that internal data relative to an administrative domain have to be hidden to entities belonging to a different administrative domains; also the E-NNI interface has the role to allow a limited number of interactions between the different administrative domains and to avoid that one of them makes undue influences on the working of the other.

All the functionalities carried out by the O-UNI are also carried out by the E-NNI; in addition, the E-NNI has to manage cross-domain survivability.

An example is given in Figure 8.6, where end-to-end dedicated optical channel protection is carried out to protect an optical layer connection between two OXC’s belonging to different administrative domains. The working path (the dotted line in the figure) is used in the normal network status to convey low-priority traffic.

Let us image that a failure happens on the working path (the continuous line path) between nodes (D, A) and (E, A). The connection originator node (A, A) performs low-priority traffic switch-off and commutes the traffic to be protected on the working path.

However, this operation has to be advertised also to the nodes of the domain B to drive resources reservation in the transit nodes and correct switching of the connection destination node.

This is a specific role of the E-NNI-compatible protocols that have to do that without allowing one domain to know hidden information from the other domain.

In conclusion, the functions carried out by the E-NNI are as follows:

Connection request and creation

Connection parameters change

Connection delete

Client identity change

Data transit

Protection/restoration management

Figure 8.6 Inter-domain path protection via the E-NNI interface.

The third standard interface is the I-NNI (internal Network to Network Interface) that is designed to connect network equipments belonging to the same administrative domain.

All control plane protocols transiting through the I-NNI interface have the role to exchange information. While the O-UNI and the E-NNI are objects of a great standard work within ITU-T and OIF, since they assure the cross-compatibility of any specific control plane implementation and its ability to interwork with its clients, the I-NNI is specific to any control plane implementation and has not been standardized in detail.

The functionalities of the I-NNI are strictly related to the functionalities of the control plane and it can be said that the best I-NNI definition is that it has to be able to convey all the protocols constituting the control plane.

8.2.3 ASON Control Plane Functionalities

The ASON architecture, besides the general structure of the ASN network and the interfaces between different network entities, define the functionalities the protocol suite composing the control plane has to carry out.

In general, ASON does not assume that vertical interoperability will exist between different implementations of the control plane, since the internetworking is guaranteed by the possibility of assigning to any technology its own administrative domain and to connect them via the O-UNI, but if different implementations of the protocols are ASON-compatible, they have to provide the same basic set of functionalities.

8.2.3.1 Discovery

The discovery function can be divided into three different subfunctions:

Neighbor discovery

Resource discovery

Service discovery

The neighbor discovery is responsible for determining the state of local links connecting to all neighbors. This kind of discovery is used to detect and maintain node adjacencies; without it, it would be necessary to manually configure the interconnection information in management systems or network elements.

The neighbor discovery usually requires some manual initial configuration and automated procedures running between adjacent nodes when the nodes are in operation.

Three instances of neighbor discovery are defined in ASON, that is

Physical media adjacency discovery

Data plane layer entities adjacency discovery

Control entities logical adjacency discovery

Physical media discovery has to be done first to verify the physical connectivity between two ports. Generally this verification is carried out through an exchange of messages between adjacent network entities that verify both the network entities and the connecting link functionality.

The layer adjacency discovery is used for building the layer specific network topology (see Figure 8.3) that has to be stored into the local CC to support routing.

As a matter of fact, logical adjacencies between both data and control entities are created by layer adjacency discovery. Moreover, layer adjacency discovery, fixing the topology of the layer, also identifies link connection endpoints that are needed to describe connections through the cascade of links composing them, to manage such connections in correspondence to client request changes and to assure proper network working when a failure happens.

Discovery processes involve exchange of messages containing identity attributes. Relevant protocols may operate in either an acknowledged or unacknowledged mode. In the first case, the discovery messages can contain the near-end attributes and the acknowledgment can contain the farend identity attributes. The service capability information can be also contained in the acknowledgment message.

In the unacknowledged mode both ends send their identity attributes. Recommendation G.7714 discusses the following two discovery methods:

Trace identifier method

Test signal method

In the trace identifier method, the discovery is carried out by using the trail identifiers that are included in all the overheads of the transport ITU-T standards (both in SONET/SDH and in OTN, see Chapter 2).

In particular, the trail termination points are first identified and then links connections are inferred. Just to give an example, let us imagine to have an ASON-managed next generation SONET network and to consider path layer discovery. A trace identifier discovery protocol will reside into the CC and will detect in all the network entities if the J0 field of the SONET path layer header is terminated and regenerated (the J0 field is the trail identifier in this case).

Once all the points in which the J0 is terminated and recreated are detected, the path layer logical topology is inferred by connecting the point where a value of J0 is created with the point where such a value is terminated with a virtual link (that is, links at the path layer).

It is to be observed that, under a layering point of view, the trail reports an information that is typical of the service layer, thus the trace identifier method performs discovery in layer one by exploiting also information relative to the client layer.

In the test signal method, test signals are used to directly find associations between subnetwork termination points (see network layering, Chapter 3) without discovering any service layer trails. While the use of overhead elements identifying a trail allows discovery to be carried out during normal network working, the use of data field to send test messages implies that the client data transmission is suspended. Thus the first discov-ery method is a type of in-service discovery, while the second is a type of outof-service discovery.

The resource discovery has a wider scope than the neighbor discovery. It allows every node to discover network topology and resources. This kind of discovery determines what resources are available, what are the capabilities of various network elements, and how the resources are protected. It improves inventory management as well as detects configuration mismatches.

It has to be considered that, if an OXC has five DWDM output ports, this means that it switches probably more than 600 optical channels and that more than 1200 fiber patch-cords (every channel comes in and goes out) physically comes out from some OXC card to be plugged on some other card or on an external equipment. Since physical connections of fiber patchcords have to be done manually, the probability that some error occurs when a new equipment is installed or when an upgrade is done is not negligible.

Many errors can be detected via the equipment self-test, but not all, and there is a prob-ability that an error manifests its presence much later with respect to the equipment installation, since without any specific test, it will become evident only when a certain card is really used (let us think, e.g., about redundancy cards). Thus, having an automatic procedure that through the discovery of network links at each network layer points out errors in connecting different equipments is very important to simplify the network operation.

The service discovery is responsible for verifying and exchanging information about service capabilities of the network, for example, services supported over a trail or link. Such capabilities may include, as an example, the quality of service (QoS) a certain network link is capable of providing in terms for example of error probability, connectivity restoration time in case of failure or packets average delay.

8.2.3.2 Routing

Routing is used to select paths for establishment of connections through the network. Although some of the well-known routing protocols developed for the IP networks can be adopted, it has to be noted that optical technology is essentially an analog rather than digital technology, thus routing is strongly influenced by the transparency degree of the network. To be specific, several types of transparency can be defined at different network layers. Examples are as follows:

Service transparency: the layer ability to work ignoring the services managed by the client layer

Protocol transparency: the ability to transport any client layer protocol

Bit Rate transparency: the ability to work at any bit rate within a given maximum

Optical transparency: the absence of network elements where the signal is electronically regenerated

On the ground of the aforementioned definitions, any OTN network is service and protocol transparent, while it is not bit rate transparent. The transparency that is relevant discussing about routing is the optical transparency. The optical layer of a network can be divided into optically transparent isles, which are network areas where the optical signal is never electronically regenerated.

The optical transparency areas can be so small as a single DWDM link connecting two electrical core OXCs or so big as an entire routing zone where optical interconnected rings are deployed. While the optical signal traverse a single optical transparent area, transmission impairments accumulated along the optical paths and this has to be taken into account while calculating the route (see Chapter 10 for details). Under the point of view of the routing strategies, ASON supports hierarchical, source-based, and step-by-step routing.

In the first case, CCs are related to one another in a hierarchical manner as we have seen defining administrative domains and routing areas. Source routing is based on a federation of distributed connection and routing controllers. The path is selected by the first connection controller in the routing area. This component is supported by a routing controller that provides routes within the domain of its responsibility. Step-by-step routing requires less routing information in the nodes than the previous methods. In such a case path selection is invoked at each node to obtain the next link on a path to a destination.

8.2.3.3 Signaling

Signaling protocols are used to create, maintain, restore, and release connections. Such protocols are essential to enable fast provisioning or fast recovery after failures. Signaling network in ASTN should be based on common channel signaling, which involves separa-tion of the signaling network from the transport network. Such a solution supports scalability, a high degree of resilience, efficiency in using signaling links, as well as flexibility in extending message sets.

A variety of different protocols can interoperate within a multidomain network and the interdomain signaling protocols shall be agnostic to their intradomain counterparts. As a matter of fact, interdomain signaling is managed by the domain interface protocols related to E-NNI interface, which are completely independent of the internal protocols of the domain.

8.2.3.4 Call and Connection Control

Call and connection control are separated in the ASON architecture. A call is an association between endpoints that supports an instance of service, while a connection is a concatenation of link connections and subnetwork connections (connections crossing the border between nearby administrative domains or routing areas) that allows transport of user information.

A call may embody any number of underlying connections, including zero. The call and connection control separation makes also sense for restoration after faults. In such a case, the call can be maintained (i.e., it is not released) while restoration procedures are underway. The call control must support coordination of connections in a multiconnection call and the coordination of parties in a multiparty call. It is responsible for negotiation of endtoend sessions, call admission control, and maintenance of the call state. The connection control is responsible for the overall control of individual connections, including setup and release procedures and the maintenance of the state of the connections.

8.2.3.5 Survivability

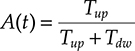

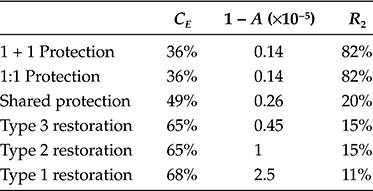

As detailed in Chapter 2, survivability can be attained by either protection or restoration mechanisms, or both in some cases, where protection is based on the replacement of a failed resource with a preassigned standby resource, while restoration, is based on rerouting using available but not preprovisioned spare capacity.

Since the ASON architecture is intrinsically a multilayer architecture, protection or restoration may be applied at different layers allowing very high availability levels to be achieved, but also requiring appropriate layers coordination. In the ASON architecture, protection management is in general terms a responsibility of the management plane.

The management plane performs protection configuration and failure identification and reporting after that the protection mechanism has assured service continuity. However, the control plane is not out from the protection management activity. First, the management plane should inform the control plane about all failures of transport resources as well as their additions or removals. Unsuccessful transport plane protection actions may trigger restoration supported by the control plane.

Moreover, the control plane supports the protection switching by suitable control plane protocols that are used in any case to advertise all the network entities in the data plane of the performed protection and, in some cases, necessary to coordinate protection switching itself.

Figure 8.7 Protection mechanism in an OCh-SPRing.

Just to do an example, let us image to have an optical channel shared protection ring (OCh-SPRing detailed in Chapter 3). The OCH-SPRing mechanism is shown in Figure 8.7; the wavelengths on each fiber is divided into two groups: a working group and a protection group, where the working wavelengths on the outer fiber are devoted to protection in the inner fiber and vice versa. Working channels, as shown in the figure, are routed bidirectionally using both the fibers on working wavelengths.

When a failure occurs, switching happens at the OCh end and the channels affected by the failures are rerouted in the opposite ring direction by using the other fiber and the set of protection wavelengths. As it is also evident from Figure 8.7, if several optical paths are routed in the ring on the same wavelengths, the corresponding protection wavelengths have to be shared among all those paths (from where comes the name shared protection).

Moreover, also where a unidirectional failure occurs, that is a failure affecting only one direction, both the optical channels ends have to switch to change the ring configuration from working to protection. This means that, when a node detects a failure via a signal power off or a signal quality major alarm (e.g., an estimated BER greater than 10−10), it has to inform the other node at the end of the affected channel of the failure. Moreover also all the other nodes in the ring have to be advertised that protection switching occurred, since the protection wavelengths are no more available.

This means that a control plane protocol has to be started from the CC supervisioning the node that detected the failure with the primary role to make handshaking with the note at the other end of the affected link. The messages are first sent on the other direction of the path: if the failure is unidirectional, they will be detected and the other node will answer on the protection path, if the failure is bidirectional also the other node has detected the failure and after some time the handshake will start on the protection path in both directions.

Before engaging the protection path for the first protection handshake, another protocol can be used to advertise all the nodes of the ring that the protection wavelengths has been reserved by the nodes involved in the failure so that no other node will try to use them. After the successful handshake of the nodes at the two ends of the affected path, which also has the scope of verifying that the protection path works correctly, the affected optical channel is switched on the protection wavelengths in both the directions.

It is clear that there is a complex activity to perform if the protection mechanism involves shared resources to coordinate protection switching in a network. Under a functional point of view, this operation of advertising and handshaking could also be forced by the management plane that can naturally reach all the nodes of the data plane. However, protection has to be carried out generally within 50 ms, or a similar short time, thus an intervention of a SW manager running on a centralized workstation is not feasible and real-time protocols are needed.

However, all the protection mechanisms are controlled and managed by the management plane. Just for an example, if protection switching does not work, it is the management plane to detect and to manage the result, invoking if is the case protection on an upper layer or resorting to restoration coordinated by the control plane.

A particular circumstance is created in the case of control plane protection in consequence of a failure either of a CC or of a control plane connection. In this case, only the source and destination connection controllers are involved in managing protection and it is suggested to avoid shared protection techniques not to be obliged to create protocols of a sort of meta-control plane.

Differently from protection, the restoration is completely managed by the control plane. During restoration, the control plane uses specific protocols to build new routes for the connections that have been disrupted from a failure starting from the spare capacity available in the network. In the ASON standard, such a rerouting service is performed on a per-routing domain basis, i.e., the rerouting operation takes place between the edges of the routing domain and is entirely contained within it. This assumption does not exclude requests for an end-to-end rerouting service.

Naturally, if restoration mechanism is foreseen to increase the network availability, the spare capacity has to be suitably distributed in the network so to permit restoration after the desired class of failures. Hard and soft rerouting services can be distinguished. The first one is a failure recovery mechanism and is always triggered by a failure event. Soft rerouting is associated with such operations as path optimization, network maintenance, or planned engineering works, and is usually activated by the management plane.

In soft rerouting the original connection is removed after creation of the rerouting connection, while in hard rerouting, the original connection segment is released prior to creation of a new alternative segment.

8.3 GMPLS Architecture

If ASON is a reference architecture that is intended to assure functionalities standardization and interoperability to different control and management plane implementations, GMPLS is the IETF-driven effort to standardize a specific control plane architecture that extends the concepts of MPLS to a multilayer network including TDM and WDM layers implementing physical circuit switching.

Within the GMPLS architecture, different GMPLS models are defined to comply with different levels of GMPLS implementation and with gradual control plane introduction in the carrier networks. The first model, intended mainly for the first implementation of the control plane, is the so-called overlay model. In this model the packet layer, implementing MPLS as control plane and constituted by LSRs, is considered as a client of the underlying transport network that can be either a TDM network (like SDH or SONET) or a WDM network.

GMPLS is used in the overlay model as a control plane of the transport network and it communicates with the overlay MPLS control plane via the UNI interface. In the peer model, the IP/MPLS layer operates as a full peer of the transport layer. Specifically, the IP routers are able to determine the entire path of the connection, including through the optical devices.

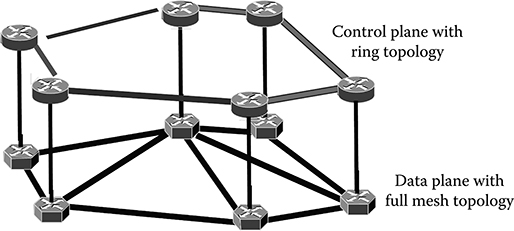

A sort of GMPLS overlay model is obtained using ASON terminology by connecting a standard MPLS layer with an ASON transport network via O-UNI interfaces so that conceptually there is some correspondence between GMPLS and ASON configurations. As in the case of the ASON architecture, the GMPLS control plane is extended to support multiple switching layers. Moreover, also in GMPLS, there is a clear separation between the multilabel control plane (MLCP) and the data plane at each layer of the network so that at a specific layer they could have a different topology or be supported by different transmission media as shown in Figure 8.8.

Unlike control signaling in MPLS that following the IP network paradigm is always in-band, the MLCP can manage the GMPLS network out-of-band; hence control signaling need not follow the forwarded data. This implies that the MLCP can continue to function although there is a disruption in the data plane and vice versa and allows for separate control channels to be used for the MLCP. By deploying the MLCP on separate control channels, the other channels are completely dedicated to forwarding data.

In GMPLS, the MLCP utilizes routing and signaling protocols to manage all the network functionalities. These protocols are extensions to well-known protocols of the TCP/IP protocol suite. As such, the MLCP implements IPv4 or IPv6 addressing. This also applies to the data plane, but in cases where addressing is not feasible, or convenient, unnumbered links (i.e., links without network addresses) are supported. MLCP addresses are not required to be globally unique (however global uniqueness is required to allow for remote management). However, addressing in the MLCP is separated from that in the data plane.

In the GMPLS original version, the network manager has only the role of defining the network when it is initialized and of reporting network events to the central network control room. Moreover, the network manager also implements traffic engineering tools that can be run off line on the network manager workstation to verify if the network is optimized.

If the connection routing and resource assignment is not optimized, as surely will happen after a certain period of network working, the network manager is able to force the network to exit from the present configuration to assume a better one, for example, rerouting some paths. This original role of the network manager in GMPLS networks is completely different from what is prescribed from ASON and probably at the end of the story the ASON version will prevail if not for other reasons since it is pushed from the network owners.

Figure 8.8 Communication structure dedicated to the control plane and its independence from the network on the data plane.

GMPLS uses generalized labels to perform routing of generalized label switched path (GLSP). In contrast to MPLS where labels merely represent network traffic, generalized labels represent network resources. For example, a generalized label on an optical link could identify a wavelength or a fiber, while on a packet-switched link, it would simply identify network traffic, just as in MPLS. In the lower layers, generalized labels are “virtual,” meaning that they are not inserted into the network traffic, but instead implied by the network resource being used (e.g., wavelength or fiber). This is necessary since neither packets nor frames are recognized at the lowest layers that GMPLS supports.

Generalizing the label format, the conventional MPLS label has been extended to 32 bits. The labeling of ports of routing equipment in GMPLS follows a particular convention that allows the control plane to identify the functionality of an equipment from the labeling of its ports.

In particular, the ports of routing equipments are classified in several classes that are as follows:

Fiber switching capable ports (FSC): These are the ports of equipment that can switch the entire fiber signal as a whole.

Wavelength switching capable ports (WSC): These are ports of equipment that can switch the single wavelength signal as a whole; since generally the port is occu-pied by a WDM system, a demultiplexer is expected so to divide at least a certain number of wavelengths (see the OADM structure in Chapter 6) so to be able to deal with each wavelength independently.

Time division multiplexing switching capable ports (TSC): These are the ports of equipment that can switch the single TDM frame; since generally the port is occupied by a complete TDM frame, a TDM demultiplexer is expected after the TSC.

Layer 2 frames switching capable ports (L2SC): These are essentially the ports of Ethernet equipments.

Packet switching capable ports (PSC): These are the ports of LSR and IP routers.

Every type of port has a different kind of generalized label so that the control plane can identify the type of port and so understand the equipment capability. An example of different types of ports is reported in Figure 8.9, where a core node hosting the overlay of an OXC and an LSP router is schematically shown. A few of the wavelength GLSPs transiting through the OXC are also sent to the LSR both for local dropping and for grooming, while other wavelength GLSP directly transit through the OXC.

This is a typical overlay node configuration, where the OXC ports are wavelength capable ports, while the LSR ports are packet capable ports. Finally ports with multiple switching capability can be defined, for example both PSC and WSC. When a GLSP requires the use of the port it also requires the functionality he needs among those available.

8.3.1 GMPLS Data Paths and Generalized Labels Hierarchy

A data path between two GLSRs is a different concept with respect to the GLSP. A GLSP consists of a sequence of consecutive labels, which, when swapped in a specific order, carries data from one point in a label switched network to another. In short, a GLSP is represented by the distributed state needed to send data along a specific route.

Figure 8.9 Different types of ports in the overlay of an OXC and an LSP router. (a) Shows a locally dropped GLSP, and (b) a pass-through GLSP. ΛSC indicates a wavelength switch capable port.

Because GLSPs might differ in link composition, an entity requesting labels for a GLSP needs to specify three major parameters: switching type, encoding type, and generalized payload-ID (G-PID). Switching type defines how an interface switches data. Because this is always expected to be known, a switching type needs only be specified for an interface with multiple switching capabilities.

Encoding type is needed to specify the specific encoding of the data associated with the GLSP. For example, data associated with an L2SC interface might be encoded as Ethernet.

The G-PID finally defines the client layer of the GLSP. In GMPLS, bidirectional GLSPs are considered the default. Bidirectional GLSPs are established through simultaneous label distribution in both directions. Since generalized labels are nonhierarchical, they do not stack. This is because some supported switching media cannot stack. Given an optical link, for example, it is not possible to encapsulate a wavelength in another and then get it back again.

In GMPLS, tunneling data through different layers is therefore based on GLSP nesting (i.e., encapsulating GLSPs within GLSPs). Nesting of GLSPs can be realized either within a single network layer or nesting GLSPs of a layer through a GLSP of another layer. Examples of the nesting system are reported in Figure 8.10.

Figure 8.10 Examples of Generalized Labels nesting: on the left (a) labels relative to different wavelength channels are nested into the fiber label, on the right (b) labels relative to different MPLS LSPs are nested into the wavelength path label.

In particular in Figure 8.10a, a set of wavelengths are nested into a fiber link by nesting the labels associated with wavelength channels resources into the label associated to the fiber channel.

This kind of nesting allows the wavelength channels to be considered as a single entity, to be routed always together, switched together and protected together. This kind of nesting is useful, for example, if a 100 Gbit/s virtual channel is transmitted as a group of four 20 Gbit/s channels. In this case, the association made through GLSPs nesting within the same layer allows the 100 Gbit/s channel to be recognized as a single entity by the network even if it is constituted by parallel wavelength channel.

Another case in which nesting wavelength into a fiber channel is useful when a client layer requires a SLA prescribing the same network traversing time for different wavelength channels. This can be achieved by nesting them into the same GLSP.

In Figure 8.10b the nesting of several MPLS LSPs into a lower layer wavelength channel is shown. Physically the GLSPs are multiplexed into a wavelength channel using OTN frame and GFP adaptation, under a logical point of view, in order to recognize that the GLSPs will travel the network in the same route, a GLSP is built nesting the individual layer two labels into a layer one label attached to the wavelength channel resources.

In general, ordering GLSPs hierarchically within a network layer requires that GLSP are encoded in a hierarchical way. A natural GLSP hierarchy can be established based on interface types and it is also the hierarchy used to build the examples of Figure 8.10. At the top of this hierarchy are FSC interfaces followed, in decreasing order, by LSC, TDM, L2SC, and PSC interfaces. This order is because wavelengths can be encapsulated within a fiber, time slots in wavelengths, data link layer frames in time slots, and finally network layer packets in data link layer frames. As such, an GLSP starting and ending on PSC interfaces can be nested within higher ordered GLSPs.

8.3.2 GMPLS Protocol Suite

Protocols of the GMPLS protocol suite can be divided into three distinct sets based on their functionality:

Routing protocols must be implemented by the MLCP to disseminate the network topology and its traffic engineered (TE) attributes. For this purpose, open shortest path first (OSPF) with TE extensions (OSPF-TE) or intermediate system to intermediate system (IS–IS) are currently defined. To account for multiple layers, however, GMPLS needs to add some minor extensions to these existing protocols. The GMPLS routing process using OSPF-TE is explained in the following sections.

Signaling protocols are concerned with establishing, maintaining, and removing network state (i.e., setting up and tearing down GLSPs). For signaling, GMPLS can use either Resource ReSerVation Protocol (RSVP) with TE extensions (RSVP-TE) or constraint-based routing-label distribution protocol (CR-LDP). Again, supporting multiple layers requires some extensions to existing protocols.

Link management is performed by a new protocol called the link management protocol (LMP), which has been defined within the GMPLS standard. LMP can be used by GMPLS network elements to discover and monitor their network link connectivity. To enable link discovery between an optical switch and an optical line system, LMP has been further extended, creating LMP-WDM.

In the following subsections, the working of the most used among the protocols of the GMPLS protocol suite will be described in some detail so as to give an idea of how GMPLS implements its functionalities.

8.3.2.1 Open Shortest Path First with Traffic Engineering

To enable automated configuration of the controlled network, GMPLS defines an intradomain routing process. Via this routing process, the network topology and its TE attributes are disseminated within the TE domain. This routing process is implemented using routing protocols specified for the MLCP. However, this routing process is not used for routing user traffic, but only for distributing information in the MLCP. To understand this point, we have to remember that, taking as an example the optical domain, user signals are organized in optical channels that are routed like circuits in that layer. Extensions to existing protocols necessary for this routing process were therefore created in the relevant IETF and OIF standards.

Essentially, with OSPF-TE, participating generalized label switched routers (GLSRs) first establish routing adjacencies by exchanging hello messages. After routing adjacencies have been established, the GLSRs then synchronize their link state databases. This is done by exchanging database description packets.

The database description packets contain at least one database structure referred to as a link state advertisement (LSA). Different LSA types exist but all share a common 20-byte header whose structure is shown in Figure 8.11.

The primary OSPF-TE operation is to flood LSAs throughout the MLCP domain by appending them to “link state update” messages periodically sent between adjacent GLSRs. To avoid interference with any ordinary routing processes, a TE LSA is made opaque. Such an opaque LSA is a special type of LSA only processed by specific applications (e.g., the GMPLS routing process). By extending the link state database with TE information, a traffic engineering database (TED) is produced. From this TED, a network graph with traffic engineering content can be computed. Constructing a TE network graph is necessary to provide input for the constraint-based algorithms subsequently used to compute network paths.

When flooding LSAs, each OSPF-TE routing message contains a common 24-byte header, which is used to forward it. This routing message header includes information about message type, addressing, and integrity. Within this header, LSAs are then encapsulated and specific payloads appended to each LSA. In order to enable advertisement of TE attributes in opaque LSAs, the LSA payload consists of type-lengthvalue (TLV) records. These are information records composed by three fields: two 2-byte fields, the “Type” and “Length” fields, and a variable length field, the “Value” field. Using TLVs, router addresses and TE links can be expressed.

In GMPLS, if an advertising router is reachable, a “router address”-TLV can be used to describe a network address at which this router (i.e., GLSR) can always be reached. In turn, the “link”-TLV can be used to abstract advertised TE links. Multiple TE attributes can be represented on each link using fields that are related to the “link”-TLV address, which are called sub-TLV. Thus, in the case of a link address, the TLV appears as the first field and pointer of a bigger record containing all the information that are needed to the MLCP about the link in order to perform routing operations.

Figure 8.11 Common 20-byte header of OSPF-TE LSA messages.

Table 8.1 Record Containing the Information Needed to the MLCP to Perform Routing Operations

Sub-TLV Name |

Type |

Length |

Value |

Link local/remote identifiers |

11 |

8 bytes |

2 × 4 bytes local/remote link identifiers |

Link protection type |

14 |

4 bytes |

1 byte for link protection (3 bytes reserved) |

Interface switching capability descriptor |

15 |

Variable |

Minimum 36 bytes for ISCD information |

Shared risk link group |

16 |

Variable |

N × 4 bytes for link SRLG identification |

The structure of this bigger record is reported in Table 8.1. Two links attributes of Table 8.1 are worth a comment. The first is the last attribute of the record, advertising that the link is part of a shared risk link group (SRLG). An SRLG is a group of links that will fail contemporary when a certain type of failure happens.

As an example, we can look at Figure 8.12, where typical situations are represented. Physical fiber connections are routed through multifiber cables and links having different destinations are built over a cable structure that generally has been deployed without any knowledge of the overlay network.

Thus, also links that have completely different source and destinations can share in a part of their path the same fiber cable. If the cable is cut, for example, due to civil works, all those links goes down, thus they have to be put together in the same SRLG.

The definition of SRLGs is fundamental when defining protection or restoration strategies. As a matter of fact, when the protection capacity is preprovisioned, it is fundamental to avoid that a link is protected by another link in the same SRLG, otherwise a single failure could hit both working and protection link, making the protection useless. In the case of restoration, when routing of the restoration path is performed, also links in the same SRLG has to be avoided. This is why the SRLG is indicated among the information related to the routing protocol.

Figure 8.12 Concept of SRLG.

The second field that is worth a comment is the interface switching capable description. It is clear that a link will terminate on the same type of interfaces and this field let the routing protocol know what kind of interfaces there are at the link extremes. During GLSPs, this information can be very useful to balance sparing of higher layer interface use achieved by transparent pass-through of the traffic not destined to the node with transmission resources sparing achieved by grooming (see Section 8.4: Design and Optimization of ASON/GMPLS Networks).

Once the OSPF-TE has flooded the network and created all the nodes capable of routing GLSPs, a database of the routing area topology, the OSPF-TE routing engine can start every time there is a GLSP to route either if it is a new path or it is needed to carry out restoration of a failed path.

The OSPF-TE routing engine is based on the so called Dijkstra algorithm (from the name of the inventor), which is an algorithm that is able to find in a graph with tagged links the shortest path between two given nodes. In GMPLS, what should be the tagging of the links of the network is not prescribed in a mandatory way; generally every link is associated to its length and the GLSP are routed along the shortest path in term of kilometers. This is a reasonable solution since the cost of a DWDM system is roughly proportional to its length due to the presence of EDFA sites. Thus, since if I occupy preferably the shortest links I will have to upgrade them sooner, this strategy tends to minimize the cost of network upgrades.

However, in specific situations, it is possible to tag the network links with other numbers. For example, a competitive carrier leasing fibers from another company could tag the link with the leasing price so to use always the cheaper links. This direct routing through the topology of the network with the Dijkstra algorithm becomes increasingly complex while the network dimension increases, so that the routing process has to be simplified in some way if OSPF-TE has to scale up to the dimension of an incumbent carrier network. The solution to this problem is the division of the network in routing areas and the execution of the routing in a hierarchical manner.

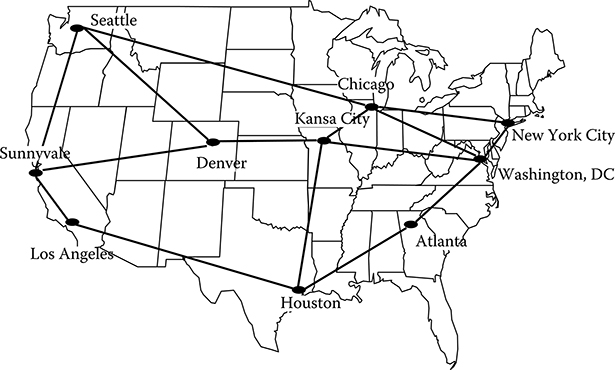

In particular, OSPF-TE individuates in the network a set of routing entities (GLSR) that are suitably distributed to constitute the so-called network backbone. All the other GLSRs are divided in routing areas constituted by nearby nodes. Each distinct routing area has to be in contact with the backbone at least in two points to assure effective network survivability. An example of this hierarchical organization of the network is reported in Figure 8.13.

Staring from this structure, a new higher level topology (the area topology) is constructed substituting all the routing areas with virtual nodes; this new topology has for sure at least interconnection degree equal to 2 due to the presence of the backbone and to the way in which the areas have been constructed.

The routing between nodes in different areas is performed in two steps: first the route is individuated in the area topology, then, knowing for each traversed areas the incoming and the outcoming nodes, the path route is specified also within areas.

8.3.2.2 IS–IS Routing Protocol

IS–IS is designed and optimized to provide routing within a single network domain, while passage through a domain interface for multidomain GLSPs have to be realized with a different protocol (e.g., the BGP, see Chapter 3, Table 3.1 IP protocol suite). IS–IS is based on the concept of routing domains. An IS–IS routing domain is a network in which all the equipments that perform GLSP routing run the integrated IS–IS routing protocol to support intradomain exchange of routing information.

Figure 8.13 GMPLS partition of the network in routing areas and backbone. On the right of the figure the virtual topology where each routing area is represented by a virtual node is represented.

The underlying goal is to achieve consistent routing information within a domain by having identical link-state databases on all routing entities in that area. Hence, all routing entities in an area are required to be configured in the same way.

IS–IS protocol supports a two-level hierarchy for managing and scaling routing in large networks. A network domain can be divided into small segments known as areas. The topology resulting from the substitution of each area with a virtual node and each connection between nodes in different areas with a connection between the corresponding virtual nodes is called area topology. This construction allows hierarchical routing to be carried out within the domain.

This means that the routing between nodes in different areas is performed in two steps: first the route is individuated in the area topology, then, knowing for each traversed areas the incoming and the outcoming nodes, the path route is specified also within areas. Following the same network representation, IS–IS routing entities are hierarchically ordered depending on their ability to route GLSPs only within their area or also toward other routing areas. In particular routing entities that are only able to route paths within their area are called Level 1 routers (not to confound with Layer 1 entities; this is a specific IS–IS hierarchy completely different from the network layering).

Following the same criterion, routing entities that can route GLSPs only between virtual nodes that represents routing areas are called Level 2 routers (or inter area routers) and finally routing entities that are able to route GLSPs both inside the area and between different areas are considered logically as a superposition of communicating Level 1 and Level 2 routers and are called Level 1–2 routers.

Level 2 routers are interarea routers that can only form relationships with other Level 2 routers; in other words, they can route through the area topology constituted by virtual nodes representing the routing areas. Similarly Level 1 routers can exchange information only with other Level 1 routers. Level 1–2 routers exchange information with both levels and are used to connect the interarea routers with the intraarea routers.

Integrated IS–IS uses the legacy CLNP node-based addresses [35–37] to identify routers even in pure IP environments. The CLNP addresses, which are known as network service access points (NSAPs), are made up of three components: an area identifier (area ID) prefix, followed by a system identifier (SysID), and an N-selector. The N-selector refers to the network service user, such as a transport protocol or the routing layer.

A group of routing entities belongs to the same area if they share a common area ID. Note that all routing entities in an IS–IS domain must be associated with a single physical area, which is determined by the area ID in the router address. IS–IS packets can be divided into three categories:

Hello packets are used to establish and maintain adjacencies between IS–IS neighbors.

Link-state packets are used to distribute routing information between IS–IS nodes.

Sequence number packets are used to control distribution of linkstate packets, essentially providing mechanisms for synchronization of the distributed link-state databases on the routers in an IS-IS routing area.

Each type of IS–IS packet is made up of a header and a number of optional variable-length fields organized in records and containing specific routing-related information. The variable length fields are called TLV as in the case of the OSPF-TE from the form of the record that is organized into three fields: type, length, and value.

Each variable-length field has a 1-byte type label (the Type field) that describes the information it contains. The second field is a length information that contains the length of the third field whose content is the specific information that is carried by the TLV (see Table 8.1 for examples). The different types of IS–IS packets have a slightly different composition of the header, but the first 8 bytes are repeated in all packets. Each type of packet then has its own set of additional header fields, which are followed by TLVs.

Table 8.2 lists TLVs specified in ISO 10589 while Table 8.3 lists the TLVs introduced by IETF in RFC 1195.

Enhancements to the original IS–IS protocol are normally achieved through the introduction of new TLV fields. Note that a key strength of the IS–IS protocol design lies in the ease of extension through the introduction of new TLVs rather than new packet types.

The routing layer functions provided by the IS–IS protocol can be grouped into two main categories: subnetwork-dependent functions and subnetwork-independent functions.

Table 8.2 List of TLVs Specified in ISO 10589

Table 8.3 List of the TLVs Introduced by IETF in RFC 1195

The subnetwork-dependent functions involve operations for detecting, forming, and maintaining routing adjacencies with neighboring routing entities over various types of interconnecting network links. The subnetwork-independent functions provide the capabilities for exchange and processing of routing information and related control information between adjacent routers as validated by the subnetwork-dependent functions.

The routing information base is composed of two databases that are central to the operation of IS–IS: the link-state database and the forwarding database. The link-state database is fed with routing information by the update process. The update process generates local link-state information, based on the adjacency database built by the subnetwork- dependent functions, which the router advertises to all its neighbors in link-state packets.

A routing entity also receive similar link-state information from every adjacent neighbor, keeps copies of GLSPs received, and readvertises them to other neighbors. Routing entities in an area maintain identical link-state databases, which are synchronized using SNPs. This means that routing entities in an area will have identical views of the area topology, which is necessary for routing consistency within the area. The decision process creates the forwarding database by running the Dijkstra algorithm on the link-state database so selecting for each GLSP and for the forward of each control packet the shortest path in terms of whatever weight is associated to each network link in the topology database.

The IS–IS forwarding database, which is made up of only best IS–IS routes, is fed into the routing information base.

8.3.2.3 Brief Comparison between OSPF-TE and IS–IS

IS–IS and OSPF-TE are link-state protocols, that is routing protocols that perform routing on the ground of information on the state of the links of the network that is derived from a map of the network topology that each routing network element stores and updates using the protocol flooding functionality. Moreover, OSPF-TE and IS–IS have several other common characteristics:

They use the Dijkstra algorithm for computing the best path through the network.

They allow any link tag as weight for the Dijkstra calculation.

They exchange via protocol packets records composed by sets of TVL fields that describe the characteristics of the link they are advertising.

They use the concept of subnetwork and virtual node to leverage on hierarchical routing to simplify routing tables and routing computation.

They can use multicast to discover neighboring routing equipment using hello packets.

They can support authentication of routing updates.

As a result, OSPF-TE and IS–IS are conceptually similar.

While OSPF-TE is natively built to route IP and is itself a Layer 3 protocol that runs on top of IP, so that OSPF-TE packets are a particular type of IP packets, IS–IS is natively an OSI network layer protocol (it is at the same layer as CLNS [35–37]), a fact that may have allowed OSPF to be more widely used. IS–IS does not use IP to carry routing information messages and its packets are not IP packets.

In line of principle IS–IS does not need IP addressing, even if, since some form of addressing is needed to route GLSPs through the network, in practical applications IP addressing is almost always used in conjunction with IS–IS.

Routing entities of a network implementing IS–IS build a topological representation of the network. This map indicates the subnetworks that each IS–IS enabled equipment can reach, and the lowest cost (shortest) path to any subnetwork is used to forward IS–IS packets and to route GLSPs.

Due to its structure, IS–IS is also more scalable that OSPF-TE: given the same set of resources, IS–IS can support more routing entities in an area with respect to OSPF-TE. IS–IS differs from OSPF-TE also in the way that “routing areas” are defined and interarea routing is performed. No hierarchy similar to that present in IS–IS is present in OSPF-TE, whose routing entities are all on the same ground, capable of interarea or intraarea routing when it is needed.

In OSPF, areas are delineated on the interface such that an area border router (ABR) is actually in two or more areas at once, effectively creating the borders between areas inside the ABR, whereas in IS–IS area borders are in between routers, designated as Level 2 or Level 1–2. The result is that an IS–IS router is only ever a part of a single area. IS–IS also does not require the backbone (or Area Zero as it is sometimes called) through which all interarea traffic must pass, while OSPF-TE needs this definition to manage multiarea routing domains.

The logical view is that OSPF creates something of a spider web or star topology of many areas all attached directly to area zero and IS–IS by contrast creates a logical topology of a backbone of Level 2 routers with branches of Level 1–2 and Level 1 routers forming the individual areas.

8.3.2.4 Resource Reservation Protocol with Traffic Engineering Extensions

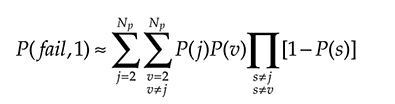

Because RSVP-TE inherits its design from the RSVP protocol, it is based on distributing various signaling objects. These signaling objects have been grouped. These groups contain mandatory and optional signaling objects; encapsulating the groups with a common header, distinct signaling messages are created.

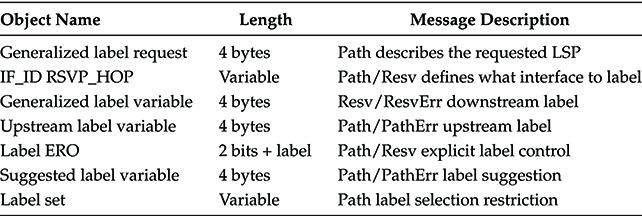

When a GLSR receives a signaling message, the resident objects are examined and interpreted based upon the message type indicated by the common header. Extending the RSVP-TE protocol for GMPLS was thus a matter of generalizing existing signaling objects, including some new objects that are reported in Table 8.4, and adding some minor signaling enhancements.

Considering the RSVP-TE protocol with GMPLS extensions for signaling, each signaling message contains a common 8-byte header. The common header defines the message type followed by the encapsulated objects. Encapsulated objects are of variable length and contain a 4-byte header defining the object length, class, and type within class.

Table 8.4 New Objects Introduced in the Adaptation of RSVP-TE for the GMPLS

The first signaling activity of RSVP-TE is to distribute the labels that are used in the GLSPs. The mechanism used by RSVP-TE is downstream-on-demand label distribution: this means that upstream GLSRs request downstream GLSRs to select labels for the links connecting them. In this way, each GLSR acknowledges a request to install an GLSP, forward the request to the next downstream hop, and awaits the response.

As a response is returned upstream, the GLSR can install a cross-connection (i.e., state describing ingoing and outgoing labeltointerface mappings and associated network resources) for this GLSP. Here, downstream is defined as the direction from GLSP ingress to GLSP egress for a unidirectional GLSP. In the case a bidirectional GLSP is to be set up, this is done by contemporary setup of two unidirectional GLSPs.

Once all the labels are assigned, the GLSP can be set up. As a matter of fact, GLSR can route the signal incoming from an input port toward the output port with the same label and the GLSP is identified in the path topology through the vectors whose elements are the labels of the links traversed by the path in the correct order.

As for all signaling protocols, RSVP-TE operation is composed of three main functions:

GLSP setup that is carried out via labels distribution.

GLSP management, a function composed by various elements among which error management is probably the most important.

GLSP tear down that is carried out by labels removal.

8.3.2.4.1 GLSP Setup

Establishing bidirectional GLSPs employing RSVP-TE for signaling requires full sets of Path and Reservation (Resv) messages to be exchanged between two GLSRs. This procedure is sketched in Figure 8.14.

Initially, a sender GLSR (GLSP ingress) requests a GLSP to be set up by sending a Path message downstream to the next hop. This Path message contains an UPSTREAM_LABEL object defining the label to use in the upstream direction, objects describing the data flow, and a GENERALIZED_LABEL_REQUEST object for requesting the GLSP.

If the Path message is successfully received, the next hop then reserves path state to enable correct signaling of returning Resv messages and saves the upstream label. The next hop then selects its own upstream label, creates state for the upstream direction, replaces the upstream label in the Path message, and passes it on downstream to the next hop. This procedure is repeated until the next hop is the receiver GLSR (GLSP egress). The GLSP has now been established in the upstream direction, but no state has been saved in the downstream direction. Consequently, the receiver GLSR selects a downstream label and returns a Resv message upstream. This Resv message mimics the Path message, but inserts a GENERALIZED_LABEL object defining the selected downstream label. If the Resv message is received successfully, the previous hop (the signaling direction has changed) then sets state for the downstream direction, replaces the downstream label with its own selected label, and passes the Resv message further upstream.

Figure 8.14 Establishment of a bidirectional GLSPs employing RSVP-TE for signaling; full sets of Path and Reservation (Resv) messages are exchanged between two GLSRs.

This procedure is repeated until the sender GLSR successfully receives the Resv message corresponding to a dispatched Path message. Now, the request has been fully established and is ready to tunnel data in both directions. This procedure is shown in Figure 8.14 while the final aspect of the GLSP as a cascade of labels is sketched in Figure 8.15.

It is possible using RSVP-TE to route a certain GLSP on a preassigner route. This is needed in order to traffic engineer the network. As a matter of fact, if a generic network is considered, the OSPF routing criterion used both by OSPF-TE and IS–IS is a suboptimum routing strategy. It is not difficult to find examples where routing a few GLSPs along a longer path allows a more efficient exploitation of the network resources.

Figure 8.15 Generalized Label Assignment for a bidirectional GLSP.

Figure 8.16 An example of suboptimum routing performed with OSPF-TE. Each link between a couple of GLSR is assumed to have enough capacity to support only two GLSPs.

Such an example is provided in Figure 8.16. Here a network of five nodes with an incomplete mesh connection is represented. For simplicity let us image that each link is physi-cally realized with a WDM system with two wavelengths and that all the wavelengths have the same capacity, which we assume equal to 1.

Let us also imagine to tag every link with a weight that is the “cost” of using a wavelength along that link. We could also think that the weight is the link length and that it is assumed as a cost due to the fact that WDM systems have a cost roughly proportional to length.

Such GLSPs, each of which for simplicity is imaging to have unitary capacity, so that it completely uses a single wavelength, correspond to traffic requests arriving at the network control plane one after the other so that they are routed in successive times and when one of them is routed the network control plane does not know what set of traffic requests will arrive in the future.