8. Audio

The iOS device is a media master; its built-in iPod features expertly handle both audio and video. The iOS SDK exposes that functionality to developers. A rich suite of classes simplifies media handling via playback, search, and recording. This chapter introduces recipes that use those classes for audio, presenting media to your users and letting your users interact with that media. You see how to build audio players and audio recorders. You discover how to browse the iPod library and how to choose what items to play. The recipes you’re about to encounter provide step-by-step demonstrations showing how to add these media-rich features to your own apps.

Recipe: Playing Audio with AVAudioPlayer

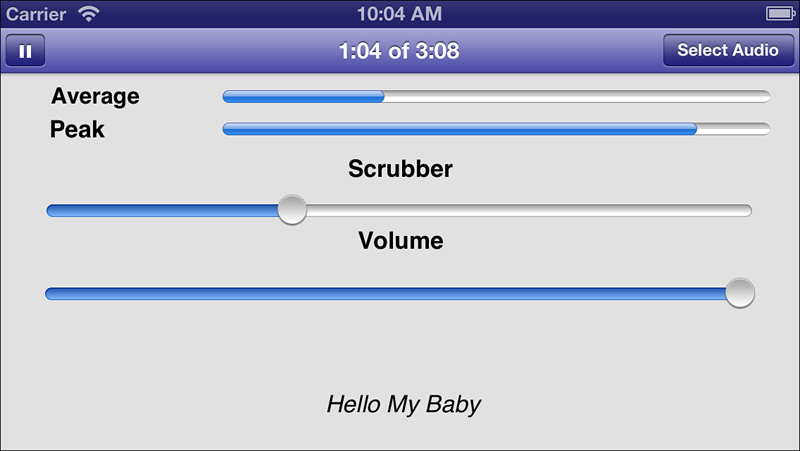

The AVAudioPlayer class plays back audio data. Part of the AVFoundation framework, it is simple to use yet offers numerous features, several of which are highlighted in Figure 8-1. With this class, you can load audio; play, pause, and stop it; monitor average and peak levels; adjust the playback volume; and set and detect the current playback time. All these features come at little development cost. As you are about to see, the AVAudioPlayer class provides a solid API.

Figure 8-1. The features highlighted in this screenshot were built with a single class, AVAudioPlayer. This class provides time monitoring (in the title bar center), sound levels (average and peak), scrubbing and volume sliders, and play/pause control (at the right of the title bar).

Initializing an Audio Player

The audio playback features provided by AVAudioPlayer take little effort to implement in your code. Apple has provided an uncomplicated class that’s streamlined for loading and playing files.

To begin, allocate a player and initialize it, either with data or with the contents of a local URL. This snippet uses a file URL to point to an audio file and reports any error that occurs while creating the player. You can also initialize a player with data that’s already stored in memory by using initWithData:error:. That’s handy when you’ve already read data into memory (such as during an audio chat) rather than reading from a file stored on the device:

player = [[AVAudioPlayer alloc] initWithContentsOfURL:
    [NSURL fileURLWithPath:path] error:&error];
if (!player)
{
    NSLog(@"AVAudioPlayer could not be established: %@",
        error.localizedFailureReason);
    return;
}

When you’ve initialized the player, prepare it for playback. Calling prepareToPlay ensures that playback starts as quickly as possible when you are ready to play the audio. The call preloads the player’s buffers and initializes the audio playback hardware:

[player prepareToPlay];

Pause playback at any time by calling pause. Pausing freezes the player’s currentTime property. You can resume playback from that point by calling play again.

Halt playback entirely with stop. Stopping playback undoes the buffered setup you initially established with prepareToPlay. It does not, however, set the current time back to 0.0. You can pick up from where you left off by calling play again, just as you would with pause, although you may experience a starting delay while the player reloads its buffers. You normally stop playback before switching to new media.

Monitoring Audio Levels

When you intend to monitor audio levels, start by setting the meteringEnabled property. Enabling metering lets you check levels as you play back or record audio:

player.meteringEnabled = YES;

The AVAudioPlayer class provides feedback for average and peak power, which you can retrieve on a per-channel basis. Query the player for the number of available channels (via the numberOfChannels property), and then request each power level by supplying a channel index. A mono signal uses channel 0, as does the left channel for a stereo recording.

In addition to enabling metering as a whole, you must call updateMeters each time you want to read your levels. This method refreshes the current meter values. After you do so, use the peakPowerForChannel: and averagePowerForChannel: methods to read those levels. Recipe 8-6, which appears later in this chapter, shows the details of what’s likely going on under the hood in the player when it requests those power levels. That code requests the meter levels and then extracts either the peak or average power. The AVAudioPlayer class hides those details, simplifying access to these values.

AVAudioPlayer reports power in decibels, as floating-point values. Decibels use a logarithmic scale to measure sound intensity. A reading of 0 dB represents full-scale (maximum) power, and readings fall as low as -160 dB, which represents minimum power. The more negative the number, the weaker the signal:

int channels = player.numberOfChannels;
[player updateMeters];
for (int i = 0; i < channels; i++)
{
    // Log the channel index, peak power, and average power
    NSLog(@"%d %0.2f %0.2f", i,
        [player peakPowerForChannel:i],
        [player averagePowerForChannel:i]);
}

// Show average and peak values on the two meters for primary channel
meter1.progress = pow(10, 0.05f * [player averagePowerForChannel:0]);
meter2.progress = pow(10, 0.05f * [player peakPowerForChannel:0]);

To query the audio player gain (that is, its volume), use the volume property. This property is a floating-point number between 0.0 and 1.0, and it applies to the player’s volume rather than the system audio volume. You can both set and read it. This snippet can be used with a target-action pair to update the volume when the user manipulates an onscreen volume slider:

- (void) setVolume: (id) sender
{
    // Set the audio player gain to the current slider value
    if (player) player.volume = volumeSlider.value;
}

Playback Progress and Scrubbing

Two properties, currentTime and duration, help monitor the playback progress of your audio. To find the current playback percentage, divide the current time by the total audio duration:

progress = player.currentTime / player.duration;
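The progress calculation divides by the duration, which has no meaningful value until a file has loaded. This C sketch adds a guard and clamping. It also shows the inverse mapping, which the scrub: method performs with scrubber.value * player.duration. The function names are hypothetical:

```c
#include <assert.h>

/* Fraction of the track that has played, clamped to 0.0 ... 1.0.
   Returns 0.0 when the duration is zero or unknown, avoiding a
   division by zero before any audio has loaded. */
double playback_progress(double current_time, double duration)
{
    if (duration <= 0.0) return 0.0;
    double progress = current_time / duration;
    if (progress < 0.0) progress = 0.0;
    if (progress > 1.0) progress = 1.0;
    return progress;
}

/* Inverse mapping used when scrubbing: a slider value in 0.0 ... 1.0
   becomes a playback time in seconds. */
double time_for_scrub_value(double slider_value, double duration)
{
    assert(duration >= 0.0);
    if (slider_value < 0.0) slider_value = 0.0;
    if (slider_value > 1.0) slider_value = 1.0;
    return slider_value * duration;
}
```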

To scrub your audio (that is, to let your user select the playback position within the track), you may want to pause playback. AVAudioPlayer in current iOS releases can provide audio-based scrubbing hints, but these hints can sound jerky. You may prefer to wait until scrubbing finishes before resuming playback at the new location. Try live scrubbing with this recipe’s sample code, and you’ll hear how rough the feature sounds.

Make sure to implement at least two target-action pairs if you base your scrubber on a standard UISlider. For the first pair, combine UIControlEventTouchDown and UIControlEventValueChanged with a bitwise OR. These event types let you catch both the start of a user scrub and each subsequent value change. If you choose to pause on scrub, respond to these events by pausing the audio player and providing some visual feedback for the newly selected time:

- (void) scrub: (id) sender
{
    // Pause the player -- optional
    [self.player pause];
    self.navigationItem.leftBarButtonItem =
        SYSBARBUTTON(UIBarButtonSystemItemPlay, @selector(play:));

    // Calculate the new current time
    player.currentTime = scrubber.value * player.duration;

    // Update the title with the current time
    self.title = [NSString stringWithFormat:@"%@ of %@",
        [self formatTime:self.player.currentTime],
        [self formatTime:self.player.duration]];
}

For the second target-action pair, a mask of three values (UIControlEventTouchUpInside | UIControlEventTouchUpOutside | UIControlEventTouchCancel) lets you catch release events and touch interruptions. Upon release, start playing at the new time the user selected by scrubbing:

- (void) scrubbingDone: (id) sender
{
    // Resume playback here
    [self play:nil];
}
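These snippets call a formatTime: method that isn’t shown in this excerpt. Recipe 8-4 sets the title to "0:00" before recording, which suggests a minutes-and-seconds format, but that is an assumption. This C sketch shows one way to produce that format. The name format_time() is hypothetical:

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical stand-in for a formatTime: helper.
   Writes whole seconds as "m:ss" (75.4 -> "1:15"). Fractions are
   truncated and negative times are shown as "0:00". */
void format_time(double seconds, char *buffer, size_t size)
{
    assert(buffer != NULL && size > 0);
    if (seconds < 0.0) seconds = 0.0;
    long total = (long)seconds;
    snprintf(buffer, size, "%ld:%02ld", total / 60, total % 60);
}
```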

Catching the End of Playback

Detect the end of playback by setting the player’s delegate and catching the audioPlayerDidFinishPlaying:successfully: delegate callback. That method is a great place to clean up any details, such as reverting the Pause button back to a Play button. Apple provides several system bar button items specifically for media playback:

• UIBarButtonSystemItemPlay

• UIBarButtonSystemItemPause

• UIBarButtonSystemItemRewind

• UIBarButtonSystemItemFastForward

The Rewind and Fast Forward buttons provide the double-arrowed icons that are normally used to move playback to a previous or next item in a playback queue. You could also use them to revert to the start of a track or progress to its end. Unfortunately, the Stop system item is an X, used for stopping an ongoing load operation and not the standard filled square used on many consumer devices for stopping playback or a recording.

Recipe 8-1 puts all these pieces together to create the unified (albeit horribly designed) interface you saw in Figure 8-1. Here, the user can select audio, start playing it back, pause it, adjust its volume, scrub, and so forth.

Recipe 8-1. Playing Back Audio with AVAudioPlayer

// Set the meters to the current peak and average power
- (void) updateMeters
{
    // Retrieve current values
    [player updateMeters];

    // Show average and peak values on the two meters
    meter1.progress = pow(10, 0.05f * [player averagePowerForChannel:0]);
    meter2.progress = pow(10, 0.05f * [player peakPowerForChannel:0]);

    // And on the scrubber
    scrubber.value = (player.currentTime / player.duration);

    // Display the current playback progress in minutes and seconds
    self.title = [NSString stringWithFormat:@"%@ of %@",
        [self formatTime:player.currentTime],
        [self formatTime:player.duration]];
}

// Pause playback
- (void) pause: (id) sender
{
    if (player)
        [player pause];

    // Update the play/pause button
    self.navigationItem.leftBarButtonItem =
        SYSBARBUTTON(UIBarButtonSystemItemPlay, @selector(play:));

    // Disable interactive elements
    meter1.progress = 0.0f;
    meter2.progress = 0.0f;
    volumeSlider.enabled = NO;
    scrubber.enabled = NO;

    // Stop listening for meter updates
    [timer invalidate];
}

// Start or resume playback
- (void) play: (id) sender
{
    if (player)
        [player play];

    // Enable the interactive elements
    volumeSlider.value = player.volume;
    volumeSlider.enabled = YES;
    scrubber.enabled = YES;

    // Update the play/pause button
    self.navigationItem.leftBarButtonItem =
        SYSBARBUTTON(UIBarButtonSystemItemPause, @selector(pause:));

    // Start listening for meter updates. Invalidate any existing
    // timer first: scrubbingDone: calls play: while it may still run
    [timer invalidate];
    timer = [NSTimer scheduledTimerWithTimeInterval:0.1f
        target:self selector:@selector(updateMeters)
        userInfo:nil repeats:YES];
}

// Update the volume
- (IBAction) setVolume: (id) sender
{
    if (player) player.volume = volumeSlider.value;
}

// Catch the end of the scrubbing
- (IBAction) scrubbingDone: (id) sender
{
    [self play:nil];
}

// Update playback point during scrubs
- (IBAction) scrub: (id) sender
{
    // Calculate the new current time
    player.currentTime = scrubber.value * player.duration;

    // Update the title, nav bar
    self.title = [NSString stringWithFormat:@"%@ of %@",
        [self formatTime:player.currentTime],
        [self formatTime:player.duration]];
}

// Prepare but do not play audio
- (BOOL) prepAudio
{
    NSError *error;
    if (![[NSFileManager defaultManager] fileExistsAtPath:path])
        return NO;

    // Establish player
    player = [[AVAudioPlayer alloc] initWithContentsOfURL:
        [NSURL fileURLWithPath:path] error:&error];
    if (!player)
    {
        NSLog(@"AVAudioPlayer could not be established: %@",
            error.localizedFailureReason);
        return NO;
    }
    [player prepareToPlay];
    player.meteringEnabled = YES;
    player.delegate = self;

    // Initialize GUI
    meter1.progress = 0.0f;
    meter2.progress = 0.0f;
    self.navigationItem.leftBarButtonItem =
        SYSBARBUTTON(UIBarButtonSystemItemPlay, @selector(play:));
    scrubber.enabled = NO;
    return YES;
}

// On finishing, return to quiescent state
- (void)audioPlayerDidFinishPlaying:(AVAudioPlayer *)aPlayer
    successfully:(BOOL)flag
{
    // Stop the meter updates and reset the controls
    [timer invalidate];
    self.navigationItem.leftBarButtonItem = nil;
    scrubber.value = 0.0f;
    scrubber.enabled = NO;
    volumeSlider.enabled = NO;
    [self prepAudio];
}

// Select a media file
- (void) pick: (UIBarButtonItem *) sender
{
    self.navigationItem.rightBarButtonItem.enabled = NO;

    // Each of these media files is in the public domain via archive.org
    // (adjacent string literals are concatenated by the compiler)
    NSArray *choices = [@"Alexander's Ragtime Band*Hello My Baby*"
        @"Ragtime Echoes*Rhapsody In Blue*A Tisket A Tasket*In the Mood"
        componentsSeparatedByString:@"*"];
    NSArray *media = [@"ARB-AJ*HMB1936*ragtime*RhapsodyInBlue*Tisket*"
        @"InTheMood" componentsSeparatedByString:@"*"];

    UIActionSheet *actionSheet = [[UIActionSheet alloc]
        initWithTitle:@"Musical selections" delegate:nil
        cancelButtonTitle:IS_IPAD ? nil : @"Cancel"
        destructiveButtonTitle:nil otherButtonTitles:nil];
    for (NSString *choice in choices)
        [actionSheet addButtonWithTitle:choice];

    // See the Core Cookbook, Alerts Chapter for details on
    // the ModalSheetDelegate class
    ModalSheetDelegate *msd =
        [ModalSheetDelegate delegateWithSheet:actionSheet];
    actionSheet.delegate = msd;
    int answer = [msd showFromBarButtonItem:sender animated:YES];
    self.navigationItem.rightBarButtonItem.enabled = YES;

    if (IS_IPAD)
    {
        if (answer == -1) return; // cancel
        if (answer >= choices.count)
            return;
    }
    else
    {
        if (answer == 0) return; // cancel
        if (answer > choices.count)
            return;
        answer--;
    }

    // no action, if already playing
    if ([nowPlaying.text isEqualToString:[choices objectAtIndex:answer]])
        return;

    // stop any current item
    if (player)
        [player stop];

    // Load in the new audio and play it
    path = [[NSBundle mainBundle]
        pathForResource:[media objectAtIndex:answer] ofType:@"mp3"];
    nowPlaying.text = [choices objectAtIndex:answer];
    [self prepAudio];
    [self play:nil];
}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-6-Advanced-Cookbook and go to the folder for Chapter 8.

Recipe: Looping Audio

Loops help present ambient background audio without having to respond to delegate callbacks each time the loop finishes a single play-through. You can use a loop to play an audio snippet several times or to play it continuously. Recipe 8-2 demonstrates an audio loop that plays only while a particular view controller is presented, providing an aural backdrop for that controller.

The numberOfLoops property sets how many times the audio repeats before playback ends. A value of 0 plays the sound once, and any negative value loops indefinitely until you call stop. A high number (such as 999999) also provides what is essentially an unlimited number of loops. For example, a 4-second loop would take more than 1,000 hours to play back fully with a loop number that high:

// Prepare the player and set the loops
[self.player prepareToPlay];
[self.player setNumberOfLoops:999999];
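The "more than 1,000 hours" figure follows from how numberOfLoops counts. A value of 0 plays the sound once, and each additional loop adds one more play-through. This C sketch does the arithmetic. The helper total_loop_seconds() is hypothetical:

```c
#include <assert.h>

/* numberOfLoops = n means "play once, then return to the start n more
   times", so the clip plays n + 1 times in total. */
double total_loop_seconds(double clip_seconds, long number_of_loops)
{
    assert(clip_seconds >= 0.0 && number_of_loops >= 0);
    return clip_seconds * (double)(number_of_loops + 1);
}
```

For a 4-second clip and 999999 loops, that comes to 4,000,000 seconds, or roughly 1,111 hours.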

Recipe 8-2 uses looped audio for its primary view controller. Whenever its view is onscreen, the loop plays in the background. Ideally, choose a loop that’s unobtrusive, sets the mood for your application, and transitions smoothly from the end of playback back to the beginning.

This recipe uses a fading effect to introduce and hide the audio. It fades the loop in when the view appears and fades it out when the view disappears. The approach is simple. Each fade method changes the volume by one small step, up toward 1.0 on appearing or down toward 0.0 on disappearing. It then uses performSelector:withObject:afterDelay: to call itself again one-tenth of a second later. Because these delays are scheduled on the run loop rather than blocking a thread, they don’t interfere with audio playback or the interface.

Recipe 8-2. Creating Ambient Audio Through Looping

- (BOOL) prepAudio
{
    // Check for the file.
    NSError *error;
    NSString *path = [[NSBundle mainBundle]
        pathForResource:@"loop" ofType:@"mp3"];
    if (![[NSFileManager defaultManager]
        fileExistsAtPath:path]) return NO;

    // Initialize the player
    player = [[AVAudioPlayer alloc] initWithContentsOfURL:
        [NSURL fileURLWithPath:path] error:&error];
    if (!player)
    {
        NSLog(@"Could not establish AV Player: %@",
            error.localizedFailureReason);
        return NO;
    }

    // Prepare the player and set the loops large
    [player prepareToPlay];
    [player setNumberOfLoops:999999];
    return YES;
}

- (void) fadeIn
{
    // Raise the volume one step, then schedule the next step
    player.volume = MIN(player.volume + 0.05f, 1.0f);
    if (player.volume < 1.0f)
        [self performSelector:@selector(fadeIn)
            withObject:nil afterDelay:0.1f];
}

- (void) fadeOut
{
    // Lower the volume one step; pause once it is nearly silent
    player.volume = MAX(player.volume - 0.1f, 0.0f);
    if (player.volume > 0.05f)
        [self performSelector:@selector(fadeOut)
            withObject:nil afterDelay:0.1f];
    else
        [player pause];
}

- (void) viewDidAppear: (BOOL) animated
{
    [super viewDidAppear:animated];

    // Start playing at no-volume
    player.volume = 0.0f;
    [player play];

    // Fade in the audio over about two seconds
    // (steps of 0.05 every 0.1 seconds)
    [self fadeIn];

    // Enable the push button
    self.navigationItem.rightBarButtonItem.enabled = YES;
}

- (void) viewWillDisappear: (BOOL) animated
{
    [super viewWillDisappear:animated];
    [self fadeOut];
}

- (void) push
{
    // Create a simple new view controller
    UIViewController *vc = [[UIViewController alloc] init];
    vc.view.backgroundColor = [UIColor whiteColor];
    vc.title = @"No Sounds";

    // Disable the now-pressed right-button
    self.navigationItem.rightBarButtonItem.enabled = NO;

    // Push the new view controller
    [self.navigationController
        pushViewController:vc animated:YES];
}

- (void) loadView
{
    [super loadView];
    self.navigationItem.rightBarButtonItem =
        BARBUTTON(@"Push", @selector(push));
    self.title = @"Looped Sounds";
    [self prepAudio];
}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-6-Advanced-Cookbook and go to the folder for Chapter 8.

Recipe: Handling Audio Interruptions

When users receive phone calls during audio playback, that audio fades away. The standard answer/decline screen appears. As this happens, AVAudioPlayer delegates receive the audioPlayerBeginInterruption: callback, as shown in Recipe 8-3. The audio session deactivates, and the player pauses. You cannot restart playback until the interruption ends.

Should the user accept the call, the application delegate receives an applicationWillResignActive: callback. When the call ends, the application relaunches (with an applicationDidBecomeActive: callback). If the user declines the call, or if the call ends without an answer, the player’s delegate is instead sent audioPlayerEndInterruption:, and you can resume playback from that method.

If playback must resume after the user accepts a call and the application relaunches, you can save the current playback time, as Recipe 8-3 does. This recipe’s viewDidAppear: method, which is called on most kinds of resumptions, checks the user defaults for a stored interruption value. When it finds one, it uses that value to set the current time before resuming playback.

This approach takes into account that the application relaunches rather than resumes after the call finishes. You do not receive the end interruption callback when the user accepts a call.

Recipe 8-3. Storing the Interruption Time for Later Pickup

- (BOOL) prepAudio
{
    NSError *error;
    NSString *path = [[NSBundle mainBundle]
        pathForResource:@"MeetMeInSt.Louis1904" ofType:@"mp3"];
    if (![[NSFileManager defaultManager]
        fileExistsAtPath:path]) return NO;

    // Initialize the player
    player = [[AVAudioPlayer alloc]
        initWithContentsOfURL:[NSURL fileURLWithPath:path]
        error:&error];
    player.delegate = self;
    if (!player)
    {
        NSLog(@"Could not establish player: %@",
            error.localizedFailureReason);
        return NO;
    }
    [player prepareToPlay];
    return YES;
}

- (void)audioPlayerDidFinishPlaying:(AVAudioPlayer *)aPlayer
    successfully:(BOOL)flag
{
    // just keep playing
    [player play];
}

- (void)audioPlayerBeginInterruption:(AVAudioPlayer *)aPlayer
{
    // perform any interruption handling here
    fprintf(stderr, "Interruption Detected\n");

    // Store the playback position (NSTimeInterval is a double)
    [[NSUserDefaults standardUserDefaults]
        setDouble:[player currentTime] forKey:@"Interruption"];
}

- (void)audioPlayerEndInterruption:(AVAudioPlayer *)aPlayer
{
    // resume playback at the end of the interruption
    fprintf(stderr, "Interruption ended\n");
    [player play];

    // remove the interruption key. it won't be needed
    [[NSUserDefaults standardUserDefaults]
        removeObjectForKey:@"Interruption"];
}

- (void) viewDidAppear:(BOOL)animated
{
    [super viewDidAppear:animated];
    [self prepAudio];

    // Check for previous interruption
    if ([[NSUserDefaults standardUserDefaults]
        objectForKey:@"Interruption"])
    {
        player.currentTime =
            [[NSUserDefaults standardUserDefaults]
            doubleForKey:@"Interruption"];
        [[NSUserDefaults standardUserDefaults]
            removeObjectForKey:@"Interruption"];
    }

    // Start playback
    [player play];
}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-6-Advanced-Cookbook and go to the folder for Chapter 8.

Recipe: Recording Audio

The AVAudioRecorder class simplifies audio recording in your applications. It offers the same friendly API as AVAudioPlayer, along with similar feedback properties. Together, these two classes handle many standard application audio tasks with little development effort.

Start your recordings by establishing an AVAudioSession. Use a play and record session if you intend to switch between recording and playback in the same application. Otherwise, use a simple record session (via AVAudioSessionCategoryRecord).

When you have a session, you can check its inputAvailable property, which indicates whether an audio input, such as a microphone, is available on the current device. This property replaces inputIsAvailable, which was deprecated in iOS 6. If you plan to deploy to versions of iOS earlier than 6.0, make sure to use the older property instead:

- (BOOL) startAudioSession
{
    // Prepare the audio session
    NSError *error;
    AVAudioSession *session = [AVAudioSession sharedInstance];
    if (![session
        setCategory:AVAudioSessionCategoryPlayAndRecord
        error:&error])
    {
        NSLog(@"Error setting session category: %@",
            error.localizedFailureReason);
        return NO;
    }
    if (![session setActive:YES error:&error])
    {
        NSLog(@"Error activating audio session: %@",
            error.localizedFailureReason);
        return NO;
    }

    // inputIsAvailable deprecated in iOS 6
    return session.inputAvailable;
}

Recipe 8-4 demonstrates the next step after creating the session. It sets up the recorder and provides methods for pausing, resuming, and stopping the recording.

To start recording, it creates a settings dictionary and populates it with keys and values that describe how the recording should be sampled. This example uses mono Linear PCM sampled 8,000 times a second, which is a fairly low sample rate. Here are a few examples of customizing formats:

• Set AVNumberOfChannelsKey to 1 for mono audio and 2 for stereo.

• Audio formats (AVFormatIDKey) that work well on iOS include kAudioFormatLinearPCM (large files) and kAudioFormatAppleIMA4 (compact files).

• Standard AVSampleRateKey sampling rates include 8,000, 11,025, 22,050, and 44,100.

• For linear PCM only, set the bit depth (AVLinearPCMBitDepthKey) to either 16 or 32 bits.
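The claim that linear PCM produces large files is easy to quantify. Uncompressed PCM stores every sample for every channel, so its data rate is the sample rate times the channel count times the bytes per sample. This C sketch computes that rate, ignoring file-header overhead. It also computes the Nyquist limit, half the sample rate, which is the highest frequency a recording can represent. The helper names are hypothetical:

```c
#include <assert.h>

/* Uncompressed linear PCM stores every sample of every channel, so the
   data rate is sample rate x channels x bytes per sample. */
long pcm_bytes_per_second(long sample_rate, int channels, int bits_per_channel)
{
    assert(sample_rate > 0 && channels > 0 && bits_per_channel % 8 == 0);
    return sample_rate * channels * (bits_per_channel / 8);
}

/* Highest frequency a given sample rate can represent (Nyquist limit). */
double nyquist_hz(double sample_rate)
{
    return sample_rate / 2.0;
}
```

At the recipe’s settings of 8,000 Hz, mono, and 16 bits, that comes to 16,000 bytes per second (960,000 bytes per minute), with a 4,000 Hz ceiling on recorded frequencies. That ceiling is why 8,000 Hz counts as a low rate. CD-style 44,100 Hz stereo needs 176,400 bytes per second.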

The code allocates a new AVAudioRecorder instance and initializes it with both a file URL and the settings dictionary. Once the recorder is created, the code sets its delegate and enables metering. Metering for AVAudioRecorder instances works like metering for AVAudioPlayer instances: you must update the meters before requesting average and peak power levels:

- (void) updateMeters
{
    // Show the current power levels
    [self.recorder updateMeters];
    meter1.progress =
        pow(10, 0.05f * [recorder averagePowerForChannel:0]);
    meter2.progress =
        pow(10, 0.05f * [recorder peakPowerForChannel:0]);

    // Update the current recording time
    self.title = [NSString stringWithFormat:@"%@",
        [self formatTime:self.recorder.currentTime]];
}

This code also tracks the recording’s currentTime. When you pause a recording, the current time stays still until you resume. Basically, the current time indicates the recording duration to date.

When you’re ready to proceed with the recording, use prepareToRecord and start the recording with record. Issue pause to take a break in recording; resume again with another call to record. The recording picks up where it left off. To finish a recording, use stop. This produces a callback to audioRecorderDidFinishRecording:successfully:. That’s where you can clean up your interface and finalize any recording details.

To retrieve recordings, set the UIFileSharingEnabled key to YES in the application’s Info.plist. This setting lets your users access the contents of the app’s Documents folder, including any recordings saved there, through the device-specific Apps pane in iTunes.

Recipe 8-4. Audio Recording with AVAudioRecorder

- (void)audioPlayerDidFinishPlaying:(AVAudioPlayer *)player
    successfully:(BOOL)flag
{
    // Prepare UI for recording
    self.title = nil;
    meter1.hidden = NO;
    meter2.hidden = NO;

    // Return to play and record session
    NSError *error;
    if (![[AVAudioSession sharedInstance]
        setCategory:AVAudioSessionCategoryPlayAndRecord
        error:&error])
    {
        NSLog(@"Error: %@", error.localizedFailureReason);
        return;
    }
    self.navigationItem.rightBarButtonItem =
        BARBUTTON(@"Record", @selector(record));
}

- (void)audioRecorderDidFinishRecording:(AVAudioRecorder *)aRecorder
    successfully:(BOOL)flag
{
    // Stop monitoring levels, time
    [timer invalidate];
    meter1.progress = 0.0f;
    meter1.hidden = YES;
    meter2.progress = 0.0f;
    meter2.hidden = YES;
    self.navigationItem.leftBarButtonItem = nil;
    self.navigationItem.rightBarButtonItem = nil;

    NSURL *url = recorder.url;
    NSError *error;

    // Start playback
    player = [[AVAudioPlayer alloc] initWithContentsOfURL:url
        error:&error];
    if (!player)
    {
        NSLog(@"Error establishing player for %@: %@",
            url, error.localizedFailureReason);
        return;
    }
    player.delegate = self;

    // Change audio session for playback
    if (![[AVAudioSession sharedInstance]
        setCategory:AVAudioSessionCategoryPlayback
        error:&error])
    {
        NSLog(@"Error updating audio session: %@",
            error.localizedFailureReason);
        return;
    }
    self.title = @"Playing back recording...";
    [player prepareToPlay];
    [player play];
}

- (void) stopRecording
{
    // This causes the didFinishRecording delegate method to fire
    [recorder stop];
}

- (void) continueRecording
{
    // resume from a paused recording
    [recorder record];
    self.navigationItem.rightBarButtonItem =
        BARBUTTON(@"Done", @selector(stopRecording));
    self.navigationItem.leftBarButtonItem =
        SYSBARBUTTON(UIBarButtonSystemItemPause,
        @selector(pauseRecording));
}

- (void) pauseRecording
{
    // pause an ongoing recording
    [recorder pause];
    self.navigationItem.leftBarButtonItem =
        BARBUTTON(@"Continue", @selector(continueRecording));
    self.navigationItem.rightBarButtonItem = nil;
}

- (BOOL) record
{
    NSError *error;

    // Recording settings
    NSMutableDictionary *settings =
        [NSMutableDictionary dictionary];
    settings[AVFormatIDKey] = @(kAudioFormatLinearPCM);
    settings[AVSampleRateKey] = @(8000.0f);
    settings[AVNumberOfChannelsKey] = @(1); // mono
    settings[AVLinearPCMBitDepthKey] = @(16);
    settings[AVLinearPCMIsBigEndianKey] = @NO;
    settings[AVLinearPCMIsFloatKey] = @NO;

    // File URL
    NSURL *url = [NSURL fileURLWithPath:FILEPATH];

    // Create recorder
    recorder = [[AVAudioRecorder alloc] initWithURL:url
        settings:settings error:&error];
    if (!recorder)
    {
        NSLog(@"Error establishing recorder: %@",
            error.localizedFailureReason);
        return NO;
    }

    // Initialize delegate, metering, etc.
    recorder.delegate = self;
    recorder.meteringEnabled = YES;
    meter1.progress = 0.0f;
    meter2.progress = 0.0f;
    self.title = @"0:00";
    if (![recorder prepareToRecord])
    {
        NSLog(@"Error: Prepare to record failed");
        return NO;
    }
    if (![recorder record])
    {
        NSLog(@"Error: Record failed");
        return NO;
    }

    // Set a timer to monitor levels, current time
    timer = [NSTimer scheduledTimerWithTimeInterval:0.1f
        target:self selector:@selector(updateMeters)
        userInfo:nil repeats:YES];

    // Update the navigation bar
    self.navigationItem.rightBarButtonItem =
        BARBUTTON(@"Done", @selector(stopRecording));
    self.navigationItem.leftBarButtonItem =
        SYSBARBUTTON(UIBarButtonSystemItemPause,
        @selector(pauseRecording));
    return YES;
}

- (BOOL) startAudioSession
{
    // Prepare the audio session
    NSError *error;
    AVAudioSession *session = [AVAudioSession sharedInstance];
    if (![session setCategory:AVAudioSessionCategoryPlayAndRecord
        error:&error])
    {
        NSLog(@"Error setting session category: %@",
            error.localizedFailureReason);
        return NO;
    }
    if (![session setActive:YES error:&error])
    {
        NSLog(@"Error activating audio session: %@",
            error.localizedFailureReason);
        return NO;
    }

    // inputIsAvailable deprecated in iOS 6
    return session.inputAvailable;
}

- (void) viewDidLoad
{
    [super viewDidLoad];
    if ([self startAudioSession])
        self.navigationItem.rightBarButtonItem =
            BARBUTTON(@"Record", @selector(record));
    else
        self.title = @"No Audio Input Available";
}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-6-Advanced-Cookbook and go to the folder for Chapter 8.

Recipe: Recording Audio with Audio Queues

In addition to the AVAudioPlayer and AVAudioRecorder classes, Audio Queues can handle recording and playback tasks in your applications. Audio Queues were needed for recording before the AVAudioRecorder class debuted. Using queues directly helps demonstrate what’s going on under the hood of the AVAudioRecorder class. As a bonus, queues give you full access to the raw underlying audio in the HandleInputBuffer callback, so you can do all kinds of interesting things, such as signal processing and analysis.

Recipe 8-5 records audio at the Audio Queue level, providing a taste of the C-style functions and callbacks used. This code is heavily based on Apple sample code and specifically showcases functionality that is hidden behind the AVAudioRecorder wrapper.

The settings used in Recipe 8-5’s setupAudioFormat: method have been tested and work reliably on the iOS family of devices. It’s easy, however, to mess up these parameters when trying to customize your audio quality. If you don’t have the parameters set up just right, the queue may fail with little feedback. A quick Google search provides copious settings examples.
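The format fields in setupAudioFormat: are not independent. For packed, uncompressed linear PCM, bytes per frame must equal channels times bytes per sample, and each packet holds exactly one frame. The recipe also calls DeriveBufferSize(), which isn’t shown in this excerpt. A common approach, similar to the one in Apple’s Audio Queue Services documentation, sizes each buffer as sample rate times packet size times the requested seconds, up to a maximum. This C sketch checks the first relationship and computes the second for constant-bit-rate formats. PCMFormat is a simplified, hypothetical stand-in for Core Audio’s AudioStreamBasicDescription, so the sketch compiles without Apple headers. The maximum buffer size is left to the caller as an assumption:

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical, simplified stand-in for the linear PCM fields of
   Core Audio's AudioStreamBasicDescription. */
typedef struct {
    double   sample_rate;
    uint32_t channels_per_frame;
    uint32_t bits_per_channel;
    uint32_t frames_per_packet;
    uint32_t bytes_per_frame;
    uint32_t bytes_per_packet;
} PCMFormat;

/* For packed, uncompressed PCM, the byte counts follow from the channel
   count and bit depth, and each packet holds one frame. */
int pcm_format_is_consistent(const PCMFormat *f)
{
    uint32_t expected_frame = f->channels_per_frame * (f->bits_per_channel / 8);
    return f->frames_per_packet == 1
        && f->bits_per_channel % 8 == 0
        && f->bytes_per_frame == expected_frame
        && f->bytes_per_packet == expected_frame * f->frames_per_packet;
}

/* Bytes needed to hold `seconds` of audio in a constant-bit-rate format,
   capped at max_bytes (the cap is the caller's choice). */
uint32_t buffer_bytes_for_seconds(const PCMFormat *f, double seconds,
                                  uint32_t max_bytes)
{
    assert(seconds > 0.0);
    double bytes = f->sample_rate * f->bytes_per_packet * seconds;
    return bytes < max_bytes ? (uint32_t)bytes : max_bytes;
}
```

The recipe uses 8,000 Hz, 1 channel, 16 bits, and 2 bytes per frame and per packet, so its format passes the check. A half-second buffer, matching the 0.5 passed to DeriveBufferSize, comes to 8,000 bytes.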

Recipe 8-5. Recording with Audio Queues: The Recorder.m Implementation

// Write out current packets as the input buffer is filled
static void HandleInputBuffer (void *aqData,
    AudioQueueRef inAQ, AudioQueueBufferRef inBuffer,
    const AudioTimeStamp *inStartTime,
    UInt32 inNumPackets,
    const AudioStreamPacketDescription *inPacketDesc)
{
    RecordState *pAqData = (RecordState *) aqData;
    if (inNumPackets == 0 &&
        pAqData->dataFormat.mBytesPerPacket != 0)
        inNumPackets = inBuffer->mAudioDataByteSize /
            pAqData->dataFormat.mBytesPerPacket;
    if (AudioFileWritePackets(pAqData->audioFile, NO,
        inBuffer->mAudioDataByteSize, inPacketDesc,
        pAqData->currentPacket, &inNumPackets,
        inBuffer->mAudioData) == noErr)
    {
        pAqData->currentPacket += inNumPackets;
        if (pAqData->recording == 0) return;
        AudioQueueEnqueueBuffer (pAqData->queue, inBuffer,
            0, NULL);
    }
}

@implementation Recorder

// Set up the recording format as low quality mono AIFF
- (void)setupAudioFormat:(AudioStreamBasicDescription*)format
{
    format->mSampleRate = 8000.0;
    format->mFormatID = kAudioFormatLinearPCM;
    format->mFormatFlags = kLinearPCMFormatFlagIsBigEndian |
        kLinearPCMFormatFlagIsSignedInteger |
        kLinearPCMFormatFlagIsPacked;
    format->mChannelsPerFrame = 1; // mono
    format->mBitsPerChannel = 16;
    format->mFramesPerPacket = 1;
    format->mBytesPerPacket = 2;
    format->mBytesPerFrame = 2;
    format->mReserved = 0;
}

// Begin recording

- (BOOL) startRecording: (NSString *) filePath

{

// Many of these calls mirror the process for AVAudioRecorder

// Set up the audio format and the url to record to

[self setupAudioFormat:&recordState.dataFormat];

CFURLRef fileURL = CFURLCreateFromFileSystemRepresentation(

NULL, (const UInt8 *) [filePath UTF8String],

[filePath length], NO);

recordState.currentPacket = 0;

// Initialize the queue with the format choices

OSStatus status;

status = AudioQueueNewInput(&recordState.dataFormat,

HandleInputBuffer, &recordState,

CFRunLoopGetCurrent(),kCFRunLoopCommonModes, 0,

&recordState.queue);

if (status) {

fprintf(stderr, "Could not establish new queue\n");

return NO;

}

// Create the output file

status = AudioFileCreateWithURL(fileURL,

kAudioFileAIFFType, &recordState.dataFormat,

kAudioFileFlags_EraseFile, &recordState.audioFile);

CFRelease(fileURL); // The URL is no longer needed

if (status)

{

fprintf(stderr, "Could not create file to record audio\n");

return NO;

}

// Set up the buffers

DeriveBufferSize(recordState.queue, recordState.dataFormat,

0.5, &recordState.bufferByteSize);

for(int i = 0; i < NUM_BUFFERS; i++)

{

status = AudioQueueAllocateBuffer(recordState.queue,

recordState.bufferByteSize, &recordState.buffers[i]);

if (status) {

fprintf(stderr, "Error allocating buffer %d\n", i);

return NO;

}

status = AudioQueueEnqueueBuffer(recordState.queue,

recordState.buffers[i], 0, NULL);

if (status) {

fprintf(stderr, "Error enqueuing buffer %d\n", i);

return NO;

}

}

// Enable metering

UInt32 enableMetering = YES;

status = AudioQueueSetProperty(recordState.queue,

kAudioQueueProperty_EnableLevelMetering,

&enableMetering,sizeof(enableMetering));

if (status)

{

fprintf(stderr, "Could not enable metering\n");

return NO;

}

// Start the recording

status = AudioQueueStart(recordState.queue, NULL);

if (status)

{

fprintf(stderr, "Could not start Audio Queue\n");

return NO;

}

recordState.currentPacket = 0;

recordState.recording = YES;

return YES;

}

// Return the average power level (linear scale: 0.0 is silence, 1.0 is full scale)

- (CGFloat) averagePower

{

AudioQueueLevelMeterState state[1];

UInt32 statesize = sizeof(state);

OSStatus status;

status = AudioQueueGetProperty(recordState.queue,

kAudioQueueProperty_CurrentLevelMeter, &state, &statesize);

if (status)

{

fprintf(stderr, "Error retrieving meter data\n");

return 0.0f;

}

return state[0].mAveragePower;

}

// Return the peak power level (linear scale: 0.0 is silence, 1.0 is full scale)

- (CGFloat) peakPower

{

AudioQueueLevelMeterState state[1];

UInt32 statesize = sizeof(state);

OSStatus status;

status = AudioQueueGetProperty(recordState.queue,

kAudioQueueProperty_CurrentLevelMeter, &state, &statesize);

if (status)

{

fprintf(stderr, "Error retrieving meter data\n");

return 0.0f;

}

return state[0].mPeakPower;

}

// There's generally about a one-second delay before the

// buffers fully empty

- (void) reallyStopRecording

{

AudioQueueFlush(recordState.queue);

AudioQueueStop(recordState.queue, NO);

recordState.recording = NO;

for(int i = 0; i < NUM_BUFFERS; i++)

{

AudioQueueFreeBuffer(recordState.queue,

recordState.buffers[i]);

}

AudioQueueDispose(recordState.queue, YES);

AudioFileClose(recordState.audioFile);

}

// Stop the recording after waiting just a second

- (void) stopRecording

{

[self performSelector:@selector(reallyStopRecording)

withObject:nil afterDelay:1.0f];

}

// Pause after allowing buffers to catch up

- (void) reallyPauseRecording

{

if (!recordState.queue) {

fprintf(stderr, "Nothing to pause\n");

return;

}

OSStatus status = AudioQueuePause(recordState.queue);

if (status)

{

fprintf(stderr, "Error pausing audio queue\n");

return;

}

}

// Pause the recording after waiting a half second

- (void) pause

{

[self performSelector:@selector(reallyPauseRecording)

withObject:nil afterDelay:0.5f];

}

// Resume recording from a paused queue

- (BOOL) resume

{

if (!recordState.queue)

{

fprintf(stderr, "Nothing to resume\n");

return NO;

}

OSStatus status = AudioQueueStart(recordState.queue, NULL);

if (status)

{

fprintf(stderr, "Error restarting audio queue\n");

return NO;

}

return YES;

}

// Return the current recording duration

- (NSTimeInterval) currentTime

{

AudioTimeStamp outTimeStamp;

OSStatus status = AudioQueueGetCurrentTime (

recordState.queue, NULL, &outTimeStamp, NULL);

if (status)

{

fprintf(stderr, "Error: Could not retrieve current time\n");

return 0.0f;

}

// Divide by the recording's sample rate (8000 samples per second)

return outTimeStamp.mSampleTime / recordState.dataFormat.mSampleRate;

}

// Return whether the recording is active

- (BOOL) isRecording

{

return recordState.recording;

}

@end

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-6-Advanced-Cookbook and go to the folder for Chapter 8.
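You can check the numbers behind Recipe 8-5’s format and buffer setup by hand. The following plain C sketch mirrors that arithmetic. SimpleFormat and all the helper functions are hypothetical stand-ins, not Core Audio APIs, and they model only the AudioStreamBasicDescription fields that the recipe sets. The recipe’s own DeriveBufferSize helper is not listed here, so buffer_size_for is an assumed approximation that holds only for constant-bit-rate formats such as linear PCM.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical mirror of the AudioStreamBasicDescription fields that
   setupAudioFormat: fills in; the real struct lives in CoreAudioTypes.h. */
typedef struct {
    double   sampleRate;       /* frames per second */
    uint32_t channelsPerFrame;
    uint32_t bitsPerChannel;
    uint32_t framesPerPacket;
    uint32_t bytesPerFrame;
    uint32_t bytesPerPacket;
} SimpleFormat;

/* Describe packed linear PCM: one frame per packet, each sample
   occupying a whole number of bytes. */
static SimpleFormat make_pcm_format(double rate, uint32_t channels,
                                    uint32_t bits) {
    assert(bits % 8 == 0);
    SimpleFormat f;
    f.sampleRate = rate;
    f.channelsPerFrame = channels;
    f.bitsPerChannel = bits;
    f.framesPerPacket = 1;
    f.bytesPerFrame = channels * (bits / 8);
    f.bytesPerPacket = f.bytesPerFrame * f.framesPerPacket;
    return f;
}

/* Bytes needed to hold `seconds` of audio in a constant-bit-rate
   format, clamped to `maxBytes`. */
static uint32_t buffer_size_for(const SimpleFormat *f, double seconds,
                                uint32_t maxBytes) {
    assert(f->framesPerPacket > 0);
    double packetsPerSecond = f->sampleRate / f->framesPerPacket;
    double bytes = packetsPerSecond * f->bytesPerPacket * seconds;
    return bytes < maxBytes ? (uint32_t)bytes : maxBytes;
}

/* Recover a packet count from a byte count, as HandleInputBuffer does
   when the queue reports zero packets for a CBR format. */
static uint32_t packets_in(const SimpleFormat *f, uint32_t byteCount) {
    return f->bytesPerPacket ? byteCount / f->bytesPerPacket : 0;
}

/* Convert a sample time (like AudioTimeStamp's mSampleTime) to seconds. */
static double seconds_for(const SimpleFormat *f, double sampleTime) {
    return sampleTime / f->sampleRate;
}
```

With the recipe’s settings, make_pcm_format(8000.0, 1, 16) gives 2 bytes per frame and per packet. A half-second buffer works out to 8000 × 2 × 0.5 = 8,000 bytes, and a sample time of 16,000 corresponds to 2 seconds. The maxBytes cap (0x50000, for example) is an arbitrary safety limit chosen for illustration.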

Recipe: Picking Audio with the MPMediaPickerController

The MPMediaPickerController class provides an audio equivalent for the image-picking facilities of the UIImagePickerController class. It allows users to choose one or more items from their music library, including music, podcasts, and audiobooks. The iPod-style interface allows users to browse via playlists, artists, songs, albums, and more.

To use this class, allocate a new picker and initialize it with the kinds of media to be used. For audio, you can choose from MPMediaTypeMusic, MPMediaTypePodcast, MPMediaTypeAudioBook, and MPMediaTypeAnyAudio. Video types are also available for movies, TV shows, video podcasts, music videos, and iTunes U. These constants are bit flags, so you can combine them with a bitwise OR to form a mask:

MPMediaPickerController *mpc = [[MPMediaPickerController alloc]

initWithMediaTypes:MPMediaTypeAnyAudio];

mpc.delegate = self;

mpc.prompt = @"Please select an item";

mpc.allowsPickingMultipleItems = NO;

[self presentViewController:mpc animated:YES completion:nil];

Set a delegate that conforms to MPMediaPickerControllerDelegate and, optionally, set a prompt. The prompt is text that appears at the top of the media picker, as shown in Figure 8-2. When you allow multiple item selection, the picker’s standard Cancel button is replaced by a Done button. Normally, the selection session ends as soon as the user taps a track. With multiple selection, users can keep picking items until they tap Done, and items already selected appear in gray text.

Figure 8-2. In this multiple selection media picker, already selected items appear in gray. Users tap Done when finished. An optional prompt field (here, Please Select an Item) appears above the normal picker elements.
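Because the media-type constants are bit flags, a bitwise OR builds a mask and a bitwise AND tests it. The plain C sketch below illustrates the idea with hypothetical kDemoMediaType names. These are not the MediaPlayer constants, and their numeric values are assumptions chosen for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical flag values, for illustration only; the real MPMediaType
   constants are defined in the MediaPlayer headers. */
enum {
    kDemoMediaTypeMusic     = 1 << 0,
    kDemoMediaTypePodcast   = 1 << 1,
    kDemoMediaTypeAudioBook = 1 << 2,
    kDemoMediaTypeAnyAudio  = 0x00ff,
    kDemoMediaTypeMovie     = 1 << 8,
    kDemoMediaTypeAnyVideo  = 0xff00
};

typedef uint32_t DemoMediaTypeMask;

/* True when every flag in `wanted` is present in `mask`. */
static bool mask_includes(DemoMediaTypeMask mask, DemoMediaTypeMask wanted) {
    assert(wanted != 0); /* an empty request would match trivially */
    return (mask & wanted) == wanted;
}

/* True when `mask` shares at least one flag with `group`. */
static bool mask_overlaps(DemoMediaTypeMask mask, DemoMediaTypeMask group) {
    return (mask & group) != 0;
}
```

In the app itself, you would pass a combined value such as MPMediaTypeMusic | MPMediaTypePodcast to initWithMediaTypes:.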

The mediaPicker:didPickMediaItems: delegate callback handles the completion of a user selection. You can enumerate the MPMediaItemCollection instance passed as a parameter by accessing its items. Each item is an instance of the MPMediaItem class and can be queried for its properties. Recipe 8-6 uses a media picker to select multiple music tracks and logs the artist and title of each item the user selected.

Consult Apple’s MPMediaItem class documentation for the available properties for media items, the type each returns, and whether each can be used to construct a media property predicate. Building queries and using predicates are discussed in the next section.

Keep in mind that media item properties are not Objective-C properties. You must retrieve them using the valueForProperty: method, although it’s easy to create a wrapper class that does provide these items as real properties via Objective-C method calls.

Recipe 8-6. Selecting Music Items from the iPod Library

- (void)mediaPicker: (MPMediaPickerController *)mediaPicker

didPickMediaItems:(MPMediaItemCollection *)mediaItemCollection

{

for (MPMediaItem *item in [mediaItemCollection items])

NSLog(@"[%@] %@",

[item valueForProperty:MPMediaItemPropertyArtist],

[item valueForProperty:MPMediaItemPropertyTitle]);

[self performDismiss];

}

- (void)mediaPickerDidCancel:(MPMediaPickerController *)mediaPicker

{

if (IS_IPHONE)

[self dismissViewControllerAnimated:YES completion:nil];

}

// Popover was dismissed

- (void)popoverControllerDidDismissPopover:

(UIPopoverController *)aPopoverController

{

popoverController = nil;

}

// Start the picker session

- (void) action: (UIBarButtonItem *) bbi

{

MPMediaPickerController *mpc =

[[MPMediaPickerController alloc]

initWithMediaTypes:MPMediaTypeMusic];

mpc.delegate = self;

mpc.prompt = @"Please select an item";

mpc.allowsPickingMultipleItems = YES;

[self presentController:mpc];

}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-6-Advanced-Cookbook and go to the folder for Chapter 8.

Creating a Media Query

Media queries enable you to filter your iPod library contents and limit the scope of your search. Table 8-1 lists the nine class methods that MPMediaQuery provides for predefined searches. Each query type controls how the returned data is grouped: the resulting collections organize tracks by album, by artist, by audiobook, and so on.

This approach reflects the way that iTunes works on the desktop. In iTunes, you select a column to organize your results, but you search by entering text into the application’s Search field.

Building a Query

Count the number of albums in your library using an album query. This snippet creates that query and then retrieves an array, each item of which represents a single album. These album items are collections of individual media items. A collection may contain a single track or many:

MPMediaQuery *query = [MPMediaQuery albumsQuery];

NSArray *collections = query.collections;

NSLog(@"You have %lu albums in your library",

(unsigned long) collections.count);

Many iOS users have extensive media collections, often containing hundreds or thousands of albums, let alone individual tracks. A simple query like this one may take several seconds to run and return a data structure that represents the entire library.
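Counting albums and counting tracks give different answers because an albums query groups tracks into one collection per album. The C sketch below models that grouping over an array of a hypothetical DemoTrack record, keyed only on the album name. This is a simplification and does not reproduce MPMediaQuery’s actual grouping rules.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical flat track record; not a MediaPlayer type. */
typedef struct {
    const char *title;
    const char *album;
} DemoTrack;

/* Count distinct album names. Each distinct name stands for one
   collection in an albums-style grouping. Quadratic, fine for a sketch. */
static size_t count_album_collections(const DemoTrack *tracks, size_t n) {
    assert(tracks != NULL || n == 0);
    size_t groups = 0;
    for (size_t i = 0; i < n; i++) {
        bool seenBefore = false;
        for (size_t j = 0; j < i; j++) {
            if (strcmp(tracks[j].album, tracks[i].album) == 0) {
                seenBefore = true;
                break;
            }
        }
        if (!seenBefore)
            groups++;
    }
    return groups;
}

/* Count the tracks that belong to one album collection. */
static size_t tracks_in_album(const DemoTrack *tracks, size_t n,
                              const char *album) {
    size_t count = 0;
    for (size_t i = 0; i < n; i++)
        if (strcmp(tracks[i].album, album) == 0)
            count++;
    return count;
}
```

For four tracks spread across three albums, count_album_collections reports 3 collections, while the total track count is still 4.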

A search using a different query type returns collections organized by that type. You can use a similar approach to recover the number of artists, songs, composers, and so on.

Using Predicates

A media property predicate efficiently filters the items returned by a query. For example, you might want to find only those songs whose titles contain the word “road.” The following snippet creates a new songs query and adds a filter predicate that searches for that word. The predicate is constructed with a value (the search phrase), a property (the song title), and a comparison type (in this case, “contains”). Use MPMediaPredicateComparisonEqualTo for exact matches and MPMediaPredicateComparisonContains for substring matching:

MPMediaQuery *query = [MPMediaQuery songsQuery];

// Construct a title comparison predicate

MPMediaPropertyPredicate *mpp = [MPMediaPropertyPredicate

predicateWithValue:@"road"

forProperty:MPMediaItemPropertyTitle

comparisonType:MPMediaPredicateComparisonContains];

[query addFilterPredicate:mpp];

// Recover the collections

NSArray *collections = query.collections;

NSLog(@"You have %lu matching tracks in your library",

(unsigned long) collections.count);

// Iterate through each item, logging the song and artist

for (MPMediaItemCollection *collection in collections)

{

for (MPMediaItem *item in [collection items])

{

NSString *song = [item valueForProperty:

MPMediaItemPropertyTitle];

NSString *artist = [item valueForProperty:

MPMediaItemPropertyArtist];

NSLog(@"%@, %@", song, artist);

}

}
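The two comparison types differ in how much of the property value must match. This plain C sketch contrasts them, using a hypothetical DemoComparison enum as a stand-in for the MediaPlayer constants. Matching here is case-sensitive, and the sketch makes no claim about how the framework treats letter case or diacritics.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-ins for MPMediaPredicateComparisonEqualTo and
   MPMediaPredicateComparisonContains. */
typedef enum { DemoComparisonEqualTo, DemoComparisonContains } DemoComparison;

/* Case-sensitive match; framework case and diacritic rules are not modeled. */
static bool title_matches(const char *title, const char *value,
                          DemoComparison type) {
    assert(title != NULL && value != NULL);
    if (type == DemoComparisonEqualTo)
        return strcmp(title, value) == 0;   /* whole-string match */
    return strstr(title, value) != NULL;    /* substring match */
}

/* Count the titles that pass the filter, the way a filtered songs
   query narrows the library. */
static size_t count_matches(const char *const titles[], size_t n,
                            const char *value, DemoComparison type) {
    size_t hits = 0;
    for (size_t i = 0; i < n; i++)
        if (title_matches(titles[i], value, type))
            hits++;
    return hits;
}
```

Given the titles “road,” “On the road again,” and “Roadhouse Blues,” an exact match for “road” finds one title and a substring match finds two. The case-sensitive sketch does not treat “Road” as matching “road.”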

Note

If you’d rather use regular predicates with your media collections than media property predicates, I created an MPMediaItem properties category (http://github.com/erica/MPMediaItem-Properties). This category enables you to apply standard NSPredicate queries against collections, such as those returned by a multiple-item selection picker.

Recipe: Using the MPMusicPlayerController

Cocoa Touch includes a simple-to-use music player class that works seamlessly with media collections. Despite what its name implies, the MPMusicPlayerController class is not a view controller. It provides no onscreen elements for playing back music. Instead, it offers an abstract controller that handles playing and pausing music.

It publishes optional notifications when its playback state or now-playing item changes. The class offers two shared instances, iPodMusicPlayer and applicationMusicPlayer. Always use the former; it provides reliable state-change feedback, which you want to catch programmatically.

Initialize the player controller by calling setQueueWithItemCollection: with an MPMediaItemCollection:

[[MPMusicPlayerController iPodMusicPlayer]

setQueueWithItemCollection: songs];

Alternatively, you can load a queue with a media query. For example, you might use a playlistsQuery filtered to match a specific playlist name, or an artistsQuery filtered to find songs by a given artist. Use setQueueWithQuery: to generate a queue from an MPMediaQuery instance.

If you want to shuffle playback, assign a value to the controller’s shuffleMode property. Choose from MPMusicShuffleModeDefault, which respects the user’s current setting, MPMusicShuffleModeOff (no shuffle), MPMusicShuffleModeSongs (song-by-song shuffle), and MPMusicShuffleModeAlbums (album-by-album shuffle). A similar set of options exists for the repeatMode property: MPMusicRepeatModeDefault, MPMusicRepeatModeNone, MPMusicRepeatModeOne, and MPMusicRepeatModeAll.

After you set the item collection, you can play, pause, skip to the next item in the queue, go back to a previous item, and so forth. To rewind without moving back to a previous item, issue skipToBeginning. You can also seek within the currently playing item, moving the playback point forward or backward.

Recipe 8-7 offers a simple media player that shows the currently playing song, along with its artwork if available. When the app runs, the user selects a group of items using an MPMediaPickerController. That item collection becomes the player’s queue, and playback of the group begins when the user taps Play.

A pair of observers uses the default notification center to watch for two key changes: when the current item changes and when the playback state changes. To catch these changes, you must manually request notifications. This allows you to update the interface with new “now playing” information when the playback item changes:

[[MPMusicPlayerController iPodMusicPlayer]

beginGeneratingPlaybackNotifications];

You may undo this request by issuing endGeneratingPlaybackNotifications, or you can simply allow the program to tear down all observers when the application naturally suspends or terminates. Because this recipe uses the iPod music player, playback continues after the user leaves the application; suspending or terminating the app does not stop it. To end playback when the user leaves, stop the player explicitly:

- (void) applicationWillResignActive: (UIApplication *) application

{

// Stop the player when the application is about to become inactive

[[MPMusicPlayerController iPodMusicPlayer] stop];

}

In addition to demonstrating playback control, Recipe 8-7 shows how to display album art during playback. It uses the same kind of MPMediaItem property retrieval as the previous recipes. In this case, it queries for MPMediaItemPropertyArtwork and, if artwork is found, uses the MPMediaItemArtwork class to convert that artwork to an image of a given size.

Recipe 8-7. Simple Media Playback with the iPod Music Player

#define PLAYER [MPMusicPlayerController iPodMusicPlayer]

#pragma mark PLAYBACK

- (void) pause

{

// Pause playback

[PLAYER pause];

toolbar.items = [self playItems];

}

- (void) play

{

// Restart play

[PLAYER play];

toolbar.items = [self pauseItems];

}

- (void) fastforward

{

// Skip to the next item

[PLAYER skipToNextItem];

}

- (void) rewind

{

// Skip to the previous item

[PLAYER skipToPreviousItem];

}

#pragma mark STATE CHANGES

- (void) playbackItemChanged: (NSNotification *) notification

{

// Update title and artwork

self.title = [PLAYER.nowPlayingItem

valueForProperty:MPMediaItemPropertyTitle];

MPMediaItemArtwork *artwork = [PLAYER.nowPlayingItem

valueForProperty: MPMediaItemPropertyArtwork];

imageView.image = [artwork imageWithSize:[imageView frame].size];

}

- (void) playbackStateChanged: (NSNotification *) notification

{

// On stop, clear title, toolbar, artwork

if (PLAYER.playbackState == MPMusicPlaybackStateStopped)

{

self.title = nil;

toolbar.items = nil;

imageView.image = nil;

}

}

#pragma mark MEDIA PICKING

- (void)mediaPicker: (MPMediaPickerController *)mediaPicker

didPickMediaItems:(MPMediaItemCollection *)mediaItemCollection

{

// Set the songs to the collection selected by the user

songs = mediaItemCollection;

// Update the playback queue

[PLAYER setQueueWithItemCollection:songs];

// Display the play items in the toolbar

[toolbar setItems:[self playItems]];

// Clean up the picker

[self performDismiss];


}

- (void)mediaPickerDidCancel:(MPMediaPickerController *)mediaPicker

{

// User has canceled

[self performDismiss];

}

- (void) pick: (UIBarButtonItem *) bbi

{

// Select the songs for the playback queue

MPMediaPickerController *mpc = [[MPMediaPickerController alloc]

initWithMediaTypes:MPMediaTypeMusic];

mpc.delegate = self;

mpc.prompt = @"Please select items to play";

mpc.allowsPickingMultipleItems = YES;

[self presentController:mpc];

}

#pragma mark INIT VIEW

- (void) viewDidLoad

{

[super viewDidLoad];

self.navigationItem.rightBarButtonItem = BARBUTTON(@"Pick",

@selector(pick:));

// Stop any ongoing music

[PLAYER stop];

// Add observers for state and item changes

[[NSNotificationCenter defaultCenter] addObserver:self

selector:@selector(playbackStateChanged:)

name:MPMusicPlayerControllerPlaybackStateDidChangeNotification

object:PLAYER];

[[NSNotificationCenter defaultCenter] addObserver:self

selector:@selector(playbackItemChanged:)

name:MPMusicPlayerControllerNowPlayingItemDidChangeNotification

object:PLAYER];

[PLAYER beginGeneratingPlaybackNotifications];

}

@end

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-6-Advanced-Cookbook and go to the folder for Chapter 8.

Summary

This chapter introduced many ways to handle audio media, including playback and recording. You saw recipes that worked with high-level Objective-C classes and those that worked with lower-level C functions. You read about media pickers, controllers, and more. Here are some final thoughts to take from this chapter:

• Apple continues to build out its AV media playback classes, which grow more powerful with each release. Due to time and space limitations, this chapter could not cover in depth the many technologies that power audio on iOS: AVFoundation, OpenAL, Core Audio, Audio Units, and Core MIDI. These topics are huge, and each is worthy of its own book. For more information, see Chris Adamson and Kevin Avila’s book, Learning Core Audio: A Hands-On Guide to Audio Programming for Mac and iOS (Addison-Wesley Professional).

• This chapter did not discuss ALAssetsLibrary, although examples of using this class are covered elsewhere in this book and in the Core Cookbook. For working with audio library assets, you might want to look into the MPMediaItemPropertyAssetURL property and the AVAssetExportSession class.

• Audio Queue Services provides powerful low-level audio routines, but they’re not for the faint of heart or for anyone who just wants a quick solution. If you need the kind of fine-grained audio control that Audio Queues bring, Apple supplies extensive documentation to help you achieve your goals.

• When creating local assets using recording features, make sure to enable UIFileSharingEnabled in your Info.plist to allow users to access and manage the files they create through iTunes.

• The MPMusicPlayerController class offers a simple way to interact with music from your device’s onboard iTunes library. Be sure to master both AVAudioPlayer, for local data files, and MPMusicPlayerController, which interacts with the user’s iTunes media collection.