CHAPTER 6

Liberated Data

Combining big data with human savvy and know-how is a powerful engine for gaining new insights and advancing human betterment. The capture of a stone-cold killer illustrates the point.

From 1974 to 1986, an unknown assailant dubbed “The Golden State Killer” roamed northern California committing heinous crimes. They included 13 murders, 50 rapes, and at least 100 break-ins. He was careful but sadistic, sometimes calling rape victims to say he was coming back to kill them. It took investigators decades, some luck, and breakthrough DNA forensics to identify and capture the elusive predator. DNA forensics is now a validated, accessible source of data that exponentially advances law enforcement’s capabilities.

More than 30 years after the trail went cold, a retiring Sacramento police detective decided to look into the investigation one last time. Using the Golden State Killer’s DNA, the detective submitted a fake ancestry request to GEDmatch, an open-source genomics website that helps researchers and genealogists identify potential relatives.

GEDmatch operates out of the small bungalow home of octogenarian Curtis Rogers, a retired Quaker Oats executive with a profound interest in genealogy. The database stores over a million DNA profiles. It leverages Google Cloud Platform’s massive computing power to search for genetic matches.

After receiving their GEDmatch report, the Sacramento police hired a forensic genetic genealogist, CeCe Moore, to use that information to identify potential suspects. From partial family matches, Moore began building a family tree. She augmented genetic matches with publicly available information including obituaries, marriage licenses, and even Facebook accounts to refine her search. Before long, Moore had her man.

On April 25, 2018, the Sacramento district attorney announced the arrest of Joseph James DeAngelo for crimes committed as the Golden State Killer. It turned out DeAngelo had been hiding in plain sight all along. A 72-year-old former police officer, he was arrested by authorities at his suburban Sacramento home, where he lived with his daughter and granddaughter.1

Since then, police departments across America have made at least a dozen more cold-case arrests using genealogy data. Hundreds more cases await follow-up.

When combined with forensics, genetic genealogy is a powerful application of big data.

Big data’s big potential emanates from its remarkable ability to collect, curate, and analyze massive quantities of information quickly and efficiently. In doing so, it propels human problem-solving skills to a higher level. Big data’s expansive applications are helping to solve numerous health and healthcare mysteries that once seemed beyond human capabilities.

For instance, Carrot Health, a rising healthcare start-up based in Minneapolis, Minnesota, can assess and predict the health status of existing populations by analyzing publicly available data from multiple providers. Carrot’s analytics engine taps over 70 different sources to gather the consumer data of 250 million identified US adults. It organizes this data with the help of 5,000 different consumer variables such as demographics, purchasing habits, and lifestyles.

Combined with clinical data, Carrot develops very precise health profiles for specific individuals, including the propensity to develop diabetes and to follow prescribed treatment plans. Carrot’s predictions assist integrated health companies that are managing the care of large populations. Better data and better data analytics lead to earlier and more effective interventions, preventing acute episodes, enhancing medication adherence, addressing social determinants, and improving health.

This is an era of unprecedented data capture. The world creates 2.5 quintillion bytes of data daily. I have no idea how much this is, but it’s enormous and growing exponentially. Google’s search engine processes 40,000 searches every second.2 Finding “signals” within the noise is daunting. Information at such scale is not comprehensible without sophisticated technology.
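For readers who want a feel for these magnitudes, the arithmetic can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function and constant names are invented for this example, and it uses decimal (SI) units, in which one exabyte is 10^18 bytes and one terabyte is 10^12 bytes.

```python
# Illustrative arithmetic only. The two input figures come from the text;
# all names and the choice of decimal (SI) units are assumptions.
BYTES_PER_DAY = 2.5e18          # 2.5 quintillion bytes created daily
SEARCHES_PER_SECOND = 40_000    # Google searches processed each second

BYTES_PER_EXABYTE = 10**18      # decimal exabyte
BYTES_PER_TERABYTE = 10**12     # decimal terabyte
SECONDS_PER_DAY = 24 * 60 * 60


def daily_exabytes(bytes_per_day: float) -> float:
    """Express a daily byte count in exabytes."""
    return bytes_per_day / BYTES_PER_EXABYTE


def daily_terabyte_drives(bytes_per_day: float) -> float:
    """Number of one-terabyte drives needed to hold one day of data."""
    return bytes_per_day / BYTES_PER_TERABYTE


def searches_per_day(per_second: int) -> int:
    """Scale a per-second search rate up to a full day."""
    return per_second * SECONDS_PER_DAY


if __name__ == "__main__":
    print(f"{daily_exabytes(BYTES_PER_DAY):.1f} exabytes per day")
    print(f"{daily_terabyte_drives(BYTES_PER_DAY):,.0f} one-terabyte drives per day")
    print(f"{searches_per_day(SEARCHES_PER_SECOND):,} searches per day")
```

Under these assumptions, 2.5 quintillion bytes is 2.5 exabytes, or enough to fill 2.5 million one-terabyte drives every day, and 40,000 searches per second works out to roughly 3.5 billion searches per day.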

Healthcare generates more data than any other industry. Only by leveraging artificial intelligence (AI), machine learning (ML), and natural language processing (NLP) tools can healthcare collect, curate, and analyze massive data sets. That data and its analysis can improve care delivery, streamline administrative tasks, and deliver insights that lead to new and better medicines, diagnostics, treatments, and interventions.

Unfortunately, this vision of data-enhanced delivery and discovery remains aspirational. As an industry, healthcare lags behind other industries in understanding and applying big data’s performance and insight-generating capabilities. This is partly a function of the industry’s scale and complexity. More concerning, however, is healthcare’s historic tendency to silo data within closed systems that prevent effective access and sharing. Such hoarding stifles knowledge flows, blocks care coordination, and impedes innovation. Data must flow freely to generate breakthrough insights.

Not surprisingly, the System’s data architects build data systems and infrastructure that enhance billing and maximize revenue collection. Serving consumers, optimizing care outcomes, and managing expenses are secondary considerations. Most health companies employ “moated” data architecture because it tightens their grip on patients, clinicians, and revenue capture. The System, as always, looks after itself.

James Hereford, CEO of Fairview Health System in Minnesota (a large system with 12 hospitals and medical centers, and nine emergency departments), understands the value of liberated data and the downsides of keeping it fragmented and inaccessible inside data silos. He emphasized this point in a January 2018 speech where he described relatively closed-system EHRs as “one of the biggest impediments to innovation in healthcare.” He then called on health company executives to “March on Madison” (Wisconsin), the home of the leading EHR company in the United States, Epic Systems Corp.3

Health systems operate like medieval data monasteries, their libraries filled with inaccessible tomes of knowledge. Visionaries like Hereford are storming the castle walls armed with the keys to unlock the imprisoned data. Like Gutenberg’s invention of the printing press in 1440, analytic innovation is liberating data and advancing knowledge. This movement is at the leading edge of a healthcare renaissance and essential to Revolutionary Healthcare.

Today, those monastery walls are splintering as liberated data breaks free. The sweet combination of apps, services, tools, and digital mobility that has transformed every other consumer industry is turning its attention to healthcare.

Healthcare is complex. Big data and digital technologies are not magic. They cannot automatically transform care delivery. Big data works best when combined with human judgment and understanding. The best organizations develop IT systems and workflows that combine high-touch attention with analytic power to improve protocols, enhance insights, and boost performance.

Feisty start-ups like Carrot Health and large innovators like Optum Health are demonstrating big data’s potential in healthcare. New industry entrants like Amazon, Apple, and Google are bringing their data muscle and minds to healthcare. They see opportunity in data liberation. Patients, increasingly frustrated by healthcare’s digital density, want efficient, personalized, and accessible apps and services to manage their health and healthcare jobs-to-be-done. The customer revolution in healthcare runs on liberated big data.

DATA WOES: WHY EVERYONE HATES ELECTRONIC HEALTH RECORDS (EHRS)

Waiting for What Exactly?

A few years ago, Kathy began to experience sharp abdominal pains. Kathy is not a “complainer,” so everyone at home took this pain very seriously. Her husband Chris accompanied her to the emergency department (ED) at the local trauma center known for its exceptional healthcare. Later, Chris wrote to tell me about their experience.

The ED was three blocks from their house. The health system was part of the network offered by Kathy’s health plan. In other words, she was a regular or, at least, a known “consumer” in its system of clinics, hospitals, and trauma centers. Upon arrival, Kathy and Chris went through a typical gauntlet of healthcare data collection. ED personnel carefully recorded her personal and health information into their centralized EHR system, capturing all necessary information for subsequent billing and clinical treatment.

This was an exceptionally time-consuming and frustrating experience. Kathy gave her name, address, gender, contact person, insurance coverage, current medications, general practitioner, description of pain, current diet, smoking or drinking habits, weight (and so on and so on) multiple times to different individuals.

Most of Kathy’s information was already in the system. Noting Kathy’s discomfort, Chris tried to speed up the intake process, but to no avail. They continued to follow the ED’s painstaking digital input procedures.

Sitting in the ED waiting room for the next hour, Chris noticed the diversity of the patients and families also waiting. At least half were Somali/Ethiopian or Hmong, two major immigrant groups in their region. Most of the rest appeared to be poor. Even as a theologian, minister, and former college president, Chris found the intake procedure confusing, frustrating, and stressful, as did Kathy, the patient in pain, who has a PhD in marketing. They both have significant experience dealing with the healthcare system while supporting one of their sons through a chronic illness. How could these other patients and families possibly manage, especially if English wasn’t their first language? There were few real “emergencies” among the patients gathered in the ED. Instead, poverty, language barriers, and cultural norms had driven these people to seek expensive, depersonalized emergency care. They had no reasonable alternatives.

After an hour, an RN, a PA, and then a doctor visited Kathy in succession. Each clinician asked many of the same questions and reentered the same information into the EHR. When a doctor finally arrived, he took Kathy’s blood pressure, probed her abdomen, and ordered a CT scan. It took time to arrange the CT scan and even more time for another doctor to interpret the test results. The doctors had no answers. Their examinations and Kathy’s tests showed nothing abnormal. They suggested that she should rest and follow up with her primary care physician to discuss getting a colonoscopy.

Kathy’s abdominal pain persisted, so she followed up with her primary care physician (PCP), who asked if the ED physician had mentioned the 16-millimeter cyst on her liver. No. The PCP referred Kathy to a radiologist, who recommended draining the cyst. Having researched that type of cyst, Kathy asked if draining was the right approach because such cysts have a high probability of recurring after draining. The radiologist said not to worry.

Five months later, the pain reoccurred. Kathy went to her primary care physician, who thought Kathy was having a heart attack. But her ECG and stress test results were normal. The physician suggested panic attack, prescribed anxiety medication, and sent Kathy for a third heart test—a CT angiogram. Kathy asked the cardiologist to check to see if the liver cyst had returned, and it had. Kathy had herself suggested the correct diagnosis—the liver cyst—after thousands of dollars of tests. The physicians trying to diagnose and treat Kathy never had the needed information.

Kathy spent three more months fighting with her “gold” insurance plan to get the recommended surgery from the right specialty surgeon since there wasn’t one in-network. She even had to talk to the chief medical officer at her insurance company. Nine months after the first visit to the emergency department, Kathy had the procedure, with the hepatobiliary surgeon, and her insurance paid.

“Technology is great!” Chris wrote to me. “But does data make a difference? Is it readily available? Do practitioners make good use of the information already in their system? Do they have the time, expertise, and willingness to evaluate data in the light of the real-life situation of the person sitting in front of them?”

Chris went on to ask other questions. He wondered how technology benefited the culturally, economically, and linguistically diverse individuals waiting in the ED. Would it make their experience of care better or worse? What good is digital health data, let alone artificial intelligence or machine learning, if they fail to support patients and clinicians or, even worse, build barriers between them?

Chris’s conclusion was this:

My experience with Kathy . . . . tells me that we have a long way, a very long way to go before data-driven medical technology is actually workable, no less effective for the average poor soul in the average ED waiting room.

Even as digital IT and AI dazzle the medical world with their potential, Chris’s words and Kathy’s experiences are sobering reminders of how repetitive, limiting, and unresponsive healthcare data collection and application are to meeting consumers’ in-the-moment needs.

Clinicians—the World’s Highest-Paid Data Entry Clerks

On the other side of that digital divide, clinicians, especially doctors, suffer as much or even more frustration and stress with EHRs. Dr. Atul Gawande chronicled this experience in a November 2018 New Yorker article titled “Why Doctors Hate Their Computers.”4 Gawande works as a surgeon in the Partners HealthCare system in Boston and is a prolific writer and the new CEO of the Amazon–Berkshire Hathaway–JP Morgan health company. When he speaks, healthcare listens.

In his article, Gawande described a 16-hour mandatory training program to prepare physicians for Partners’ adoption of the Epic Electronic Medical Record system. According to Gawande, installing the Epic EHR systemwide will cost Partners $1.6 billion, most of which is lost productivity while Partners’ 70,000 employees learn how to use the software.

Gawande was among the first people through the training. The training was boring, arduous, frustrating, and often confusing, but Gawande expected it would at least lead to higher productivity, timely access to patient information, and better overall care.

Three years later, he felt the new system had failed to deliver on any of these promises. Worse, it had turned him and his colleagues into data entry clerks. Instead of engaging patients, doctors toiled away on their computers. This diminished patient connection, compromised clinician effectiveness, and intensified stress.

American academic John Culkin observed, “We shape our tools, and thereafter our tools shape us.” For Gawande and his colleagues, data compilation and exchange became a grinding chore, often one with indecipherable logic to its operation. Gawande observed that clinicians became more disconnected from one another because of these individualized and time-consuming tasks. While patients may ultimately benefit from easier access to records and information, clinicians operate and suffer like workers assembling Model T Fords in the early 1900s. No wonder clinicians are burning out in record numbers.

Data Shackles

In many industries, new technologies automate and improve workflows. In healthcare, new technologies make care delivery more expensive. According to The Hastings Center, new medical technologies and intensified use of existing technologies are responsible for 40 to 50 percent of the annual increase in care costs.5

The first rule of performance improvement is to fix systems before automating them. Healthcare organizations ignored this wisdom. Instead, the industry spent tens of billions, most of it government funded, automating a broken, fragmented system. EHR companies profited enormously but failed to transform healthcare delivery. Rather than streamlining and improving information access, EHRs frustrate patients (like Kathy and Chris) and providers (like Gawande), even after full implementation.

In many respects, the nation’s investment in EHRs has backfired. A 2019 investigative report, “Death by a Thousand Clicks: Where Electronic Medical Records Went Wrong,” by Kaiser Health News and Fortune magazine chronicles a litany of unintended consequences related to EHR adoption.6 These include upcoding, a flood of false alarms, physician burnout, medical errors, blocked data access, gag clauses, and patient harm. Some of the report’s findings are truly alarming:

• Based on extensive interviews, KHN/Fortune conclude that EHR implementation has been “a tragic missed opportunity. Rather than an electronic ecosystem of information, the nation’s thousands of EHRs largely remain a sprawling, disconnected patchwork . . . that has handcuffed health providers to technology they mostly can’t stand.”

• Twenty-one percent of people surveyed by the Kaiser Family Foundation found mistakes in their EHR.

• Safety-related incidents related to EHRs and other IT systems are skyrocketing.

• An ED doctor makes roughly 4,000 computer clicks over the course of a single shift. This labor-intensive data-entry process invites error and causes physician burnout.

• Alarms, including voluminous false alarms, account for 85 to 99 percent of EHR and medical device alerts.

Despite the nation’s investment in EHRs, healthcare data has become even more siloed, stifled, retrospective, and confined to checkboxes and dropdowns. These limitations contribute to suboptimal care, burdensome workarounds, and enormous financial waste.

Patients suffer. A Johns Hopkins study in 2016 calculated that from 250,000 to 400,000 deaths annually result from medical errors.7 Data systems play a role in many of those deaths. Patient adverse events (PAEs) result from errors in communications and diagnoses orchestrated within EHRs.

Medication errors are commonplace, caused by computerized physician order entry (CPOE) mistakes and flawed EHR documentation. Almost 70 percent of data-related mistakes influence patient care.

Clinicians suffer. On average, physicians spend half of their workday entering data into EHRs and conducting clerical work and spend just 27 percent of their workday with patients.8 Frontline caregivers operate within a data fog. There’s plenty of good data, but it’s not curated, accessible, or prescriptive. Caregivers suffer from alert fatigue caused by cognitive overload and the high number of false alarms. To clear the fog, clinicians default to time-consuming workarounds that are ad hoc, haphazard, and error prone.

Closed data systems have hijacked clinician workflow, pulled clinicians away from bedsides, and contributed to burnout. A 2017 Medscape survey found that 51 percent of physicians reported experiencing frequent or constant feelings of burnout, up from 40 percent in 2013. Fifty-six percent of physicians blame documentation for that burnout, and 24 percent blame the increased computerization of working with EHRs.9

With limited options, health systems contract with powerful electronic health record providers at enormous cost and with underwhelming results. However, both health systems and EHR companies benefit from reduced interoperability. Controlling patient data creates market power. A 2014 RAND study suggests that EHR companies and large health systems find solace and profits in the lack of interoperability:

The shift [to interoperability] will be less welcome to large legacy vendors because it will blur the competitive edge they currently enjoy. Health care systems may be less-than-enthusiastic adopters because functional health information exchanges will make it easier for patients to see non-affiliated healthcare providers or switch to a competing health care system.

Data oligarchs not only restrict data flow, they stifle rather than cultivate innovative technology companies. They insist on incremental improvement to archaic platforms rather than creation of new, more powerful and user-friendly platform technologies.

For example, many EHR vendors use their licensing agreements to solidify their control of source code and data. They consider their source data proprietary and will not allow third parties to access it without their permission. Carefully worded legal agreements prevent health companies from commercializing innovative applications. Data sharing, to the extent it occurs, is one way (into, not out of, the EHR). Efforts to stimulate app development are equally one-sided.

Accessing source data is an epic challenge for third-party app developers. Pun intended. No EHR vendor facilitates seamless access, but Epic is the most zealous in controlling and blocking its source data. In many interviews, Epic founder and CEO Judy Faulkner described the company’s mission as “Do good, have fun and make money.”10 It’s certainly worked for Faulkner. With a net worth of $3.5 billion, she is America’s third richest self-made woman.11 This is the oligarchs’ prerogative. They control the factors of production and generate enormous profits. They will continue until forced to stop.

KNOWLEDGE STOCKS AND FLOWS

In a high-functioning, integrated healthcare ecosystem, big data will flow into knowledge networks that standardize, monitor, and enhance care protocols. Clinicians will navigate voluminous data flows in an intuitive way, like we all experience as consumers. Clinicians and consumers will have sufficient time together to make better medical decisions. Consumers and clinicians will engage. Outcomes will improve. Costs will drop. Achieving this desired level of knowledge exchange and shared decision making requires new ways of thinking about data and knowledge flows in the healthcare ecosystem.

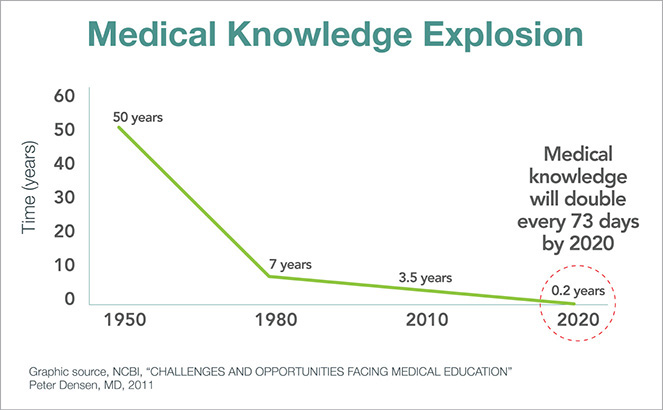

Historically, professionals gained market power by stockpiling knowledge, protecting it, and renting it to others (think doctors, lawyers, accountants, and consultants). That approach to professional services is no longer sustainable. Knowledge creation is occurring at an accelerating rate, so existing knowledge depreciates very quickly. For example, medical knowledge will double every 73 days by 2020 (see Figure 6.1).12 As a consequence, no professional can keep current, and engaging in knowledge exchange is vital to innovation and professional development.13

FIGURE 6.1 In 1950, it took 50 years for the totality of medical knowledge to double. In 2020, it will double every 73 days.
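To see what those doubling times mean on a yearly basis, here is a minimal sketch in Python. The function name is hypothetical, and the calculation assumes smooth exponential growth and a 365-day year; it is meant to illustrate the scale of the change, not to reproduce the method behind the cited estimate.

```python
# A minimal sketch, assuming smooth exponential growth and a 365-day year.
# The function name is invented for illustration.

def annual_growth_factor(doubling_time_days: float,
                         days_per_year: float = 365.0) -> float:
    """How many times larger a quantity becomes in one year,
    given the number of days it takes to double."""
    doublings_per_year = days_per_year / doubling_time_days
    return 2.0 ** doublings_per_year


if __name__ == "__main__":
    # 1950: doubling every 50 years
    slow = annual_growth_factor(50 * 365)
    # 2020: doubling every 73 days
    fast = annual_growth_factor(73)
    print(f"1950 pace: x{slow:.3f} per year (about {100 * (slow - 1):.1f}% growth)")
    print(f"2020 pace: x{fast:.0f} per year")
```

A 73-day doubling time means five doublings a year, so the stock of knowledge would grow 32-fold annually. At the 1950 pace it grew by about 1.4 percent a year.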

Likewise, professional expertise is fast becoming an artifact of another era. It is easy to find and inexpensive to access. Application of expertise within a specific context is valuable in today’s increasingly interconnected, dynamic, nonstop, and inherently collaborative working environments. Product life cycles are short. New demands for value-driven services are frequent, and customers are impatient for results. In this brave new information age, data must be free and available for knowledge workers to inform, discover, and create. Those who excel in this environment let go of data control and embrace knowledge flow.

Today’s healthcare consumers already participate in knowledge flows. They want more “data liquidity” in their lives, not less. At the 2018 annual conference of the Healthcare Information and Management Systems Society (HIMSS), Accenture released its annual consumer survey on digital health trends.14 Consumer acceptance of digital technologies is broad based and accelerating, as these findings illustrate:

• Use of wearable devices nearly quadrupled between 2014 and 2018 (from 9 percent to 33 percent).

• Use of digital health apps tripled during the same time (from 16 percent to 48 percent).

• Consumers increasingly access their digital health records when their providers give them access, particularly to review lab test results and physician notes.

• Consumers show almost universal willingness (88–90 percent) to share personal data with their healthcare professionals.

• Consumers show more willingness to share personal data with insurance companies (72 percent) and online communities (47 percent) than with government agencies (41 percent) and employers (38 percent).

• Consumers have high satisfaction (74 percent) with virtual care.

• Nearly half of consumers (47 percent) prefer immediate virtual care to delayed in-person care, expressing appreciation for virtual care’s convenience and low costs.

• Consumers are accepting of and willing to receive artificial intelligence–related healthcare services.

If the survey swapped the word “consumer” for the word “clinician,” I suspect these poll numbers would be similar or higher. Consumers and doctors are human beings, working with great data capabilities in most of their lives. However, they’re lost within a healthcare data machine. As scientists, doctors know that current technologies are inadequate. It’s time to “think different” and build better ways to collect, curate, and analyze data so Revolutionary Healthcare can achieve its potential.

DATA IS AS DATA DOES

Data does not exist in isolation. It is the middle layer of a three-part integrated hierarchy. Healthcare data flows to and from this middle tier to inform value-based operations (the bottom tier) and fully engaged customers (the top tier). Figure 6.2 captures these flows.

FIGURE 6.2 Free-flowing big data is essential for customer engagement and value-based care delivery.

Revolutionary healthcare organizations use data to generate consistent high-quality outcomes, reduce performance variation, and improve operational efficiency. Data inputs flow to and from operations to improve care design and empower care delivery.

At the individual patient level, all relevant data from all sources flows into algorithms that will enable caregivers to optimize diagnosis and treatment. As data proliferates and analytics advance, individual genetic and environmental characteristics will lead to more personalized and less population-based therapies, more precision and less trial-and-error care. Moreover, pricing and outcomes data will be transparent and available to clinicians and consumers.

At the individual disease level, all relevant information from all sources will flow into data systems for analysis that can advance medical research and protocol development.

Unfortunately, getting the medical treatments “right” will not be sufficient to “win.” Health companies also must engage consumers to gain their trust, confidence, and loyalty. Fully engaging consumers requires a deep understanding of their needs. Revolutionary Healthcare companies listen and respond to customer preferences. They deliver appropriate, user-friendly healthcare services with transparent prices that are unique, personalized, and seamless. They create value, not friction. None of this should surprise. Consumer purchases drive 70 percent of the US economy. Companies succeed and fail based on their ability to read and respond to consumer sentiments.

Consumer-oriented companies spend billions of dollars on polling, focus groups, test marketing, and behavioral and purchase analysis to enhance their product offerings. Companies can’t tell consumers what to do; they must persuade. However, leading through persuasion is antithetical to most healthcare company cultures, where clinicians have unequal relationships with consumers and dominate medical decision making.

Big data analytics are essential for understanding consumer preferences. Collecting, measuring, and evaluating consumer data drives strategic growth and customer acquisition. Companies use big data to design appealing products and services.

Data wants to be free and flow to where it provides the most value. Healthcare has to overcome legacy IT systems to unlock data’s transformative power. This will require the replacement of billing-centric EHRs run by data oligarchs. They impede progress to maintain their market control and generate outsized profits.

TURBOCHARGED TECHNOLOGIES

Kaveh Safavi, MD, manages Accenture’s global healthcare practice. During dinner several years ago, Kaveh remarked that he was going to use LinkedIn for managing his contact information. Great idea. That way, updates would flow to Kaveh automatically as individuals kept their own information current in LinkedIn. Trouble is, not everyone keeps their LinkedIn contact information current and accessible—but imagine if they did. The time-consuming task of managing contacts would disappear. Welcome to the cloud-based world of prepopulated data sets with real-time updates and no duplications or errors!

That world is at our fingertips and already operating at the Joint Commission (JC). Accreditation is necessary for hospitals and other care facilities to receive payment from Medicare and Medicaid. The Joint Commission is an independent, nonprofit organization that accredits and certifies nearly 21,000 US-based healthcare organizations. It also accredits international healthcare organizations. In 2017, the Joint Commission selected Apervita, a Chicago-based healthcare technology company, to automate and streamline its accreditation process for the nation’s hospitals.15

Formerly, hospital accreditation was a costly, manual, labor-intensive, many-months-long process for hospitals. Internally, the Joint Commission needed dozens of consultants to manage its review and approval processes. The Joint Commission now uses Apervita’s cloud platform to manage the accreditation process, with hospitals submitting their quality data with the click of a button, optimizing time savings and removing cost in the process.16 With an automated process and without intermediaries, the Joint Commission is more connected to its hospitals, hospitals can get insights into quality performance while care is happening, and both hospitals and the Joint Commission save money and resources. In the course of one year, the Joint Commission onboarded thousands of hospitals and completely transformed the process.

The Platform-as-a-Service (PaaS) technology makes it easy for hospitals to populate necessary data from multiple sources into accreditation reports for submission. Importantly, hospitals can better triage and diagnose data and quality challenges in real time, allowing them to continuously improve performance prior to submission. After 30 years of a paper-based submission process, the shift to PaaS has been transformational for the Joint Commission and its accredited hospitals.

Apervita’s cloud platform incorporates the scale, efficiencies, and security of Amazon Web Services (AWS), Amazon’s wildly successful cloud-computing service. Healthcare’s near-term data future is in the cloud. Software systems designed for cloud-based applications are faster, more elegant, more secure, more powerful, and more rapidly updated than software solutions that run through data warehouses, which are harder to scale up and down with demand. With enhanced computational power, cloud-based solutions accommodate artificial intelligence (AI), machine learning (ML), and natural language processing (NLP) capabilities, which turbocharge data curation and analytics for rapid insights.

It was cloud-based analytics that generated the genetic matching data that led to the Golden State Killer’s capture and arrest.

For Whom Bell’s Law Now Tolls

Technology solutions should make organizational management easier, cheaper, and better, as Apervita’s technology is doing for the Joint Commission. In this way, cloud-based technologies are a “force multiplier” for Revolutionary Healthcare. They help health companies get the right data to the right people in real time with useful guidance that improves outcomes. Hallelujah. Healthcare desperately needs this boost, so Chris and Kathy no longer linger in ED waiting rooms with other unfortunate souls waiting for the System to get its act together.

Moore’s law, the doubling of computer power every 18 months or roughly 60 percent annually, powers technological innovation. Nielsen’s law finds that bandwidth (i.e., connection speed) doubles every two years. Together, they govern the speed and power of software-based technology solutions.
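The link between a doubling period and an annual growth rate is simple compounding. The short sketch below is illustrative only: it converts the two doubling periods quoted above into yearly rates, and the function and variable names are assumptions introduced for this example.

```python
def annual_growth_rate(doubling_period_months: float) -> float:
    """Return the yearly growth rate implied by a given doubling period.

    If a quantity doubles every `doubling_period_months`, it grows by a
    factor of 2 ** (12 / doubling_period_months) over twelve months.
    """
    return 2 ** (12 / doubling_period_months) - 1


# Doubling periods as quoted in the text above.
moore = annual_growth_rate(18)    # computer power doubling every 18 months
nielsen = annual_growth_rate(24)  # bandwidth doubling every two years

print(f"18-month doubling: {moore:.1%} per year")    # 58.7% per year
print(f"24-month doubling: {nielsen:.1%} per year")  # 41.4% per year
```

An 18-month doubling period works out to just under 59 percent a year, which is where the "60 percent annually" figure comes from.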

Fewer people are familiar with Bell’s law, the most interesting Internet theory of the three. Formulated in 1972, Bell’s law holds that data ecosystems require an entirely new architecture roughly every 10 years to accommodate new programming environments and applications that exploit increasing computational power and speed.

In the 1960s, mainframe computing dominated. In the 1970s, minicomputers emerged. In the 1980s, the personal computer and local-area networks proliferated. In the 1990s, Internet-driven browsers on wide-area networks (WANs) with centralized data warehouses (CDWs) came to the fore. In the 2000s, smartphones emerged along with cloud computing. Businesses shifted from data warehouses to centralized servers (“the cloud”) hosted by technology companies, including Amazon, Microsoft, and Google. Today, the consumer and general business world is on the brink of another shift in the data architecture paradigm with the emergence of blockchain.

Healthcare is playing catch-up. The industry still operates largely via 1990s-era data warehouses that silo information, communicate poorly, and are susceptible to data breaches. Electronic health record (EHR) and enterprise resource planning (ERP) systems and even revenue cycle platforms rely on data warehouses to collect, curate, and analyze data. An entire sector of the healthcare economy devotes itself to physically scanning and digitizing paper health records and images. As recently as 2016, analysts estimated that 85 percent of hospitals still used pagers. Drug dealers don’t even use beepers anymore. Healthcare is keeping the beeper/pager industry alive almost single-handedly.

Riding the Cloud

As the Joint Commission case study illustrated, healthcare is finally migrating into cloud-based technology solutions. Cloud computing places data, software, and applications in secure files within massive “farms” of interconnected computers. All computational activity occurs through the Internet.

Moving into the cloud frees health companies from owning and managing the physical hardware and networking components necessary to run data warehouses. With cloud-based platforms, access to data is faster, easier, more convenient, and more secure. Data is always up to date, and software upgrades automatically. Platform capacity scales up or back depending on need. Data is more secure because host companies, like Amazon Web Services (AWS), employ industry best practices to protect it.

Beyond the Cloud to Blockchain

Still several years away from widespread adoption, blockchain will be the next computing system to emerge according to Bell’s law. Cloud-based architecture is highly centralized, requires enormous energy to process data, and at some point will reach the limits of its capacity growth.

Blockchain technology creates virtual ledgers that record transactions in a transparent, decentralized, and public way. Essentially, blockchain enables parties to execute transactions without intermediaries. Cryptocurrencies, like Bitcoin, were the first applications of blockchain technology, but the technology has almost unlimited application for secure monetary and nonmonetary transactions. Unlike cloud-based technologies that store data centrally, blockchain distributes data throughout any and all connected devices.
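The core ledger idea can be sketched in a few lines. This is a minimal, single-machine illustration, not a real blockchain: it omits distribution across devices, consensus, and digital signatures. It shows only how each record stores a hash of the record before it, so changing an earlier entry is detectable. All function names and the sample transactions are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def block_hash(index, data, prev_hash):
    """Hash a record's contents together with the previous record's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Add a new record that points back to the current last record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append(
        {
            "index": index,
            "data": data,
            "prev_hash": prev_hash,
            "hash": block_hash(index, data, prev_hash),
        }
    )


def is_valid(chain):
    """Recompute every hash and check that each record links to the one before."""
    prev_hash = GENESIS_HASH
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["data"], block["prev_hash"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical sample transactions.
ledger = []
append_block(ledger, "Party A pays Party B 5 units")
append_block(ledger, "Party B pays Party C 2 units")
valid_before = is_valid(ledger)

# Quietly rewrite history in the first record.
ledger[0]["data"] = "Party A pays Party B 500 units"
valid_after = is_valid(ledger)

print(valid_before, valid_after)  # True False
```

In an actual blockchain, many participants hold copies of the chain and agree on new records through a consensus protocol; the hash linking shown here is what makes an altered copy stand out.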

Blockchain’s decentralized and distributed operating technology creates a permanent transaction record that is tamper-proof, verifiable, and accessible. This preserves historical truth, reduces fraud, and eliminates middlemen. Bitcoin was once considered a shady tool of the dark web, suitable only for criminal transactions. Today, Bitcoin and hundreds of other cryptocurrencies have gone mainstream and now trade openly. The market capitalization for the top 100 cryptocurrencies on December 18, 2018, was $114,976,009,856 as tracked by coinmarketcap.com.20

Beyond cryptocurrencies, individuals and companies are finding multiple uses for blockchain technologies. Pharmaceutical companies are using blockchain technologies to secure their manufacture and distribution of drugs, preventing stolen or counterfeit drugs from entering the supply chain.21 Webjet uses blockchain to arrange and coordinate travel plans for customers, eliminating the need for manual intervention.22 Walmart uses blockchain to identify where products break within its supply chain and to guarantee food safety.23 From these examples, it’s easy to see how blockchain technologies will solve healthcare problems related to patient privacy, care documentation, supply chain integrity, and data exchange.

Humana, Optum, Quest Diagnostics, and others are teaming up in a pilot project to use blockchain to share data. The blockchain pilot enables participants to share the same complete data records in real time.24 As Lorraine Frias and Mike Jacobs from Optum explain in a Modern Healthcare commentary:

Blockchain can potentially assemble every detail related to healthcare for a consumer. We’ll know if a customer had a tetanus shot at a retail health clinic and the serial number of his knee replacement prosthesis.25

With permission on the blockchain, clinicians will have access to consumers’ complete medical records from all locations. Those records will be secure, accurate, historical, and up-to-date. Blockchain can and will incorporate data from non–health system sources such as wearables. Every piece of data will be time-stamped and unalterable, preventing fraud. As Jill Frew of Cain Brothers writes,

While we are just beginning to understand blockchain’s impact on the healthcare industry, its potential should not be underestimated. In the next five-to-10 years, patient consent and data exchange backed by blockchain could fundamentally alter the way healthcare services are provided by making patient longitudinal data readily available and opening the door to new treatments, new care delivery models and better coordination of care.26

This is the holy grail for liberating and securing health data. Revolutionary Healthcare companies will break down the silos shackling data and use liberated data to break down the System’s competitive barriers to deliver the right care at the right time in the right place at the right price.

Healthcare operating environments are dynamic and complex. Technologies in isolation cannot solve organizational challenges. Blockchain is largely in the future. Back in the present, healthcare needs organizational systems that enable frontline professionals to make the right decisions and take the right actions in real time. Technology and people together drive innovation and progress. For insights on enhancing human performance, we go back to 2004. The second war in Iraq was raging, and a new general arrived to take command and introduced the “team of teams” operating paradigm to manage war in the digital age.

LESSONS FROM IRAQ TO HEALTHCARE’S FRONTLINES27

The teams were operating independently—like workers in an efficient factory—while trying to keep pace with an interdependent environment. We all knew intuitively that intelligence gathered on AQI’s [Al Qaeda in Iraq] communications and operations would almost certainly impact what our operators saw on the battlefield, and that battlefield details would almost certainly represent valuable context for intel analysis, but those elements of our organization were not communicating with each other.

—General Stanley McChrystal from Team of Teams

By 2004, Al Qaeda in Iraq (AQI) was routinely defeating US forces on the battlefield by employing asymmetric hit-and-run tactics that distributed command and control and placed a premium on speed. Networked and nonhierarchical, AQI was a new, formidable type of enemy. Recently appointed commander General Stanley McChrystal knew doubling down on current strategies would not reverse the tide. It was time to think differently.

McChrystal discovered the hard way that central command leaders consistently made the wrong tactical decisions because they lacked real-time information and situational context. Data about AQI moved through military intelligence more slowly than the enemy moved in the field. Traditional command-centric management models focus on planning and prediction. Using this reductionist management model, the military was unable to respond effectively within an increasingly complex and unpredictable environment.

McChrystal realized that he needed a new command structure to respond effectively to the AQI threat. US forces needed to organize and act with adaptability and resilience to succeed on the field of battle. His success in creating a flat, adaptable, resilient command structure turned the tide in Iraq.

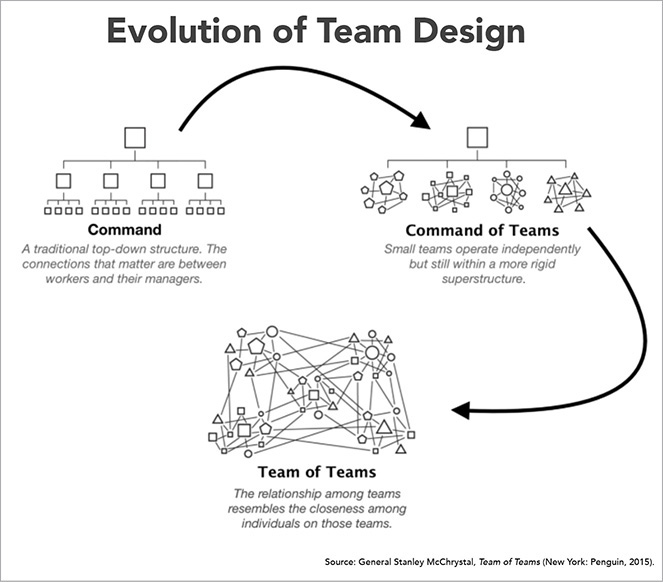

The same combinations of complex and dynamic operating conditions hamper the effectiveness of frontline caregivers in US health systems (Figure 6.3). They lack real-time data and authority to optimize care decision making and outcomes. The military’s lessons learned in Iraq have direct applications to US hospitals.

FIGURE 6.3 As situations become more complex and dynamic, organizations require new architecture to coordinate and act in real time.

US healthcare has the best-trained clinicians, the best equipment, and the best facilities in the world, but it operates inefficiently, makes too many mistakes, frustrates patients, and exhausts frontline personnel. This underperformance occurs despite hospitals having the necessary components of highly efficient and reliable organizations. These include digitized medical records, gigantic data warehouses, elaborate protocols, and centralized performance monitoring.

Like the US military in Iraq circa 2004, the US healthcare system is failing, and it is failing for the same reasons. Outdated management theory is responsible. The industrial age’s primary managerial goal was achieving efficiency at scale. Management systems built to optimize efficiency systematically plan for predictable actions and processes. While hospital operating dynamics have become more complex, healthcare’s managerial models have not adapted to the increased operating complexity.

Health systems and their leaders require new organizational architecture to manage complex hospital operations. Fortunately, the military has developed high-performing management models for complex environments and has pressure-tested them on the front lines.

Building Organizational Adaptability and Resilience Amid Chaos

In Team of Teams, McChrystal identifies three core lessons required for achieving success in dynamic environments.

Lesson #1: Shift from a “Command of Teams” to a “Team of Teams” Model

McChrystal realized AQI’s command structure was not hierarchical. It consisted of a dispersed network of connected groups that shared a common purpose and exchanged extensive information. In contrast, US forces used a central command center to control operations. The high quantity and velocity of information overwhelmed central command and limited its ability to guide frontline personnel.

McChrystal’s solution was to create a “Team of Teams,” an organizing model where the operating dynamics of effective teams replicate themselves throughout the entire organization. Teams that previously operated in silos came together as one with a single “shared consciousness.” In essence, he created a network to defeat a network.

Across health systems today, formal and informal teams must navigate through increasingly complex operating environments to make effective decisions in real time. Current management systems fail to develop the trust and information sharing required for teams to solve dynamic problems. Frontline caregivers undertake heroic actions to overcome legacy systems so patients get the services they require.

Healthcare’s team of teams cannot function until organizational architecture equips them with the information they require and the authority to use it in real time.

Lesson #2: Arm Frontline Teams with Decision-Critical, System-Level Information

McChrystal realized that frontline teams needed faster access to information to make the right operational decisions. Task Force members needed to operate within an interconnected and decentralized system, not through a centralized command structure (Figure 6.4). Information had to be transparent and available to all members. McChrystal’s team assembled the Situational Awareness Room, a vaulted, heavily fortified chamber with large computer screens.

FIGURE 6.4 Changing organizational management paradigm from command and control to team of teams

The Situational Awareness Room supported information-sharing techniques proven to drive collaboration and performance. These included the following: (1) face-to-face interaction; (2) direct peer-to-peer communication between teams, not channeled through a leader; and (3) opportunities for side conversations.

With this new communications system, McChrystal’s teams could share the right amount of data and contextual information with each other to act in real time. McChrystal convened a 90-minute daily video conference at 9 a.m. to share vital information with all task force members, eventually reaching 7,500 people around the globe.

Despite deep investments in technology, training, and improvement initiatives, health systems struggle to get real-time information to the decision makers on the front line when they need it to act. Centralized data systems (such as EHRs) bury decision-critical data. Moreover, the data itself is often raw and not easily understood. Sometimes it’s not even collected. Most data is historical, not real time, which leads to retrospective analysis rather than present action.

Additionally, health systems with traditional command centers centralize decision making. These central command structures often fail to respond to real-time events. To be effective at the point of decision making, health systems need to relay real-time information to frontline staff so they can act with maximum effectiveness.

Lesson #3: Build Trust-Based Relationships Among Traditionally Siloed Teams

While shared information forms a common understanding of objectives between teams, McChrystal needed to create strong relationships between teams to improve system-level problem solving. Teams needed to understand one another’s decision dynamics. With a shared consciousness and trust, teams could act independently with a sense of the whole mission. Their individual decisions advanced the overall mission, not just their component part.

McChrystal took a low-tech approach to building relationships and trust. He cross-fertilized teams and established liaison programs. Over time, the trust between individuals grew into trust between teams that, when paired with the common purpose, fostered unprecedented levels of cooperation.

For health systems desiring adaptive managerial architecture, real-time information and technology are not enough. Relationships matter, and trust binds teams together. For frontline staff to take system-level actions in real time, they need to trust that other teams are acting in concert to optimize systemwide performance, not individual-unit performance.

It Takes Teams of Teams to Deliver Great Healthcare

Just as the team-of-teams management model and infrastructure helped US forces in Iraq outmaneuver AQI, health systems can use this model to help frontline teams assess and solve problems in real time on their own authority.

Advanced, cloud-based technology and the team-of-teams organizational architecture give frontline teams the data, tools, trust, and authority to act constructively for patients. Managers and charge nurses get patients into the right beds with less friction. Discharges occur seamlessly. Emergency department diversions (when an ambulance has to take a patient to another ED because the intended ED is too busy) disappear.

Healthcare teams must do a thousand things right to prevent medical errors and deliver appropriate treatments (right care, right time, right place, right price). Coherent protocols, effective communications, real-time data, and understanding patient preferences are essential for success.

It’s always hard to prove a negative. It took centuries to learn that handwashing prevents more deaths than brilliant surgical procedures. Defensive proficiency becomes evident by measuring relative team performance over time. This is true for avoided infections, prevented surgical procedures, and unnecessary diagnostic procedures.

Defensive success rests on constant, high-performing collective action. Enterprise participants coordinate, collaborate, and communicate to make correct decisions in real time. This is difficult but necessary in dynamic environments like operating rooms, trauma centers, intensive care units, ambulances, clinics, and patient bedsides.

These team-of-teams systems proactively identify bottlenecks where patients and providers wait for direction. They minimize error. They empower frontline staff. They optimize operational flow by balancing people, equipment, and facilities at capacity. They save lives.

KNOWLEDGE EXCHANGE AND VALUE CREATION

On October 8, 2018, Paul Romer, an economist at New York University, received two phone calls at home. He didn’t answer either because he thought they were spam calls. Turns out it was the Royal Swedish Academy of Sciences calling to inform Romer that he had just won the 2018 Nobel Prize in Economics.

During the 1990s, Romer published several pathbreaking papers that established how voluntary knowledge exchange expands human capital and societal productivity. Unlike products and services, knowledge flows do not have diminishing returns. Instead, ideas are “nonrival.” Many people can use them.

As such, free knowledge exchange generates the ideas and innovation that foster growth. Scale and clusters are also important. Knowledge flows are more vigorous where talented people with similar interests concentrate. Think of the great hubs of innovation like Silicon Valley today and Detroit in the early 1900s.

Knowledge exchange is the elixir of the digital age. Ideas, information, and opinion spread like wildfire. Web-enabled education, connection, and communication amplify and compound the impact of knowledge exchange with good and bad effect. Blockchain enables students to transfer money out of repressive countries to fund their education. Terrorists in Iraq used technology to outfox elite US forces until McChrystal found a new way to combat them.

Healthcare’s data silos impede participants from exchanging knowledge and advancing care, but here’s the good news. The silos are cracking, and data is breaking free. Given its historic suppression, liberating healthcare data will have a disproportionate positive impact on service delivery, outcomes, and customer experience. Healthcare can replicate what other industries have already successfully implemented. Healthcare can avoid mistakes other industries have made.

Righteous exchange of liberated data is a force multiplier of awesome proportions. It is the tornado blowing Revolutionary Healthcare into every town, hamlet, and city in America.