Chapter 5. Collection types

It’s better to have 100 functions operate on one data structure than 10 functions on 10 data structures.

Alan Perlis[1]

1 “Epigrams on Programming,” ACM SIGPLAN 17, no. 9 (September 1982).

This chapter covers

- Persistence, sequences, and complexity

- Vectors: creating and using them in all their varieties

- Lists: Clojure’s code form data structure

- How to use persistent queues

- Persistent sets

- Thinking in maps

- Finding the position of items in a sequence

Clojure provides a rich set of composite data types, or collection types, and we’ll cover them all: vectors, lists, queues, sets, and maps. In this chapter, we’ll dig into the strengths and weaknesses of each. We’ll spend more time on vectors and maps than on the other types, because those two are used in a wider variety of circumstances and warrant the extra discussion. Finally, we’ll discuss the design of a simple function to use many of the lessons learned in this chapter, and you’ll gain specific insight into the preceding quote.

Before we look at the primary collection types individually, we’ll discuss the things they have in common. For example, you may have heard of Clojure’s sequence abstraction—all the persistent collections use it, so we’ll examine that as well as some algorithmic complexity concepts we’ll refer to throughout the chapter.

5.1. Persistence, sequences, and complexity

Clojure’s collection data types have some unique properties compared to collections in many mainstream languages. Terms such as persistent and sequence come up, and not always in a way that makes their meaning clear. In this section, we’ll define their meanings carefully. We’ll also briefly examine the topic of algorithmic complexity and Big-O notation as they apply to Clojure collections.

The term persistent is particularly problematic because it means something different in other contexts. In the case of Clojure, we believe that a phrase immortalized by Inigo Montoya from the novel and subsequent film The Princess Bride summarizes your likely initial reaction ...

5.1.1. “You keep using that word. I do not think it means what you think it means.”

Although storage to disk may be the more common meaning of persistent today, Clojure uses an older meaning of the word having to do with immutable in-memory collections with specific properties. In particular, a persistent collection in Clojure allows you to preserve historical versions (Okasaki 1999) of its state and promises that all versions will have the same update and lookup complexity guarantees. The specific guarantees depend on the collection type, and we’ll cover those details along with each kind of collection.

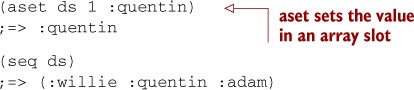

Here you can see the difference between a persistent data structure and one that’s not by using a Java array:

This example creates a three-element array of keywords and uses seq to produce an object that displays nicely in the REPL. Any change to the array ds happens in place, thus obliterating any historical version:

But using one of Clojure’s persistent data structures paints a different picture:

The original vector ds did not change on the replacement of the keyword :barnabas but instead created another vector with the changed value. A natural concern when confronted with this picture of persistence is that a naive implementation would copy the entire collection on each change, leading to slow operations and poor use of memory. Clojure’s implementations (Bagwell 2001) are instead efficient by sharing structural elements from one version of a persistent structure to another. This may seem magical, but we’ll demystify it in the next chapter. For now it’s sufficient to understand that each instance of a collection is immutable and efficient. This fact opens numerous possibilities that wouldn’t work for standard mutable collections. One of these is the sequence abstraction.

5.1.2. Sequence terms and what they mean

The words sequential, sequence, and seq don’t sound very different from each other, but they mean specific things in Clojure. We’ll start with specific definitions of each term to help you tell them apart, and then go into a bit of detail about how they relate to equality partitions and the sequence abstraction.

Seqs, sequentials, and sequences ... oh my

A sequential collection is one that holds a series of values without reordering them. As such, it’s one of three broad categories of collection types along with sets and maps.

A sequence is a sequential collection that represents a series of values that may or may not exist yet. They may be values from a concrete collection or values that are computed as necessary. A sequence may also be empty.

Clojure has a simple API called seq for navigating collections. It consist of two functions: first and rest. If the collection has anything in it, (first coll) returns the first element; otherwise it returns nil. (rest coll) returns a sequence of the items other than the first. If there are no other items, rest returns an empty sequence and never nil. As you may recall from sections 3.1 and 3.2, the way that Clojure treats nil versus empty collections motivates the iteration patterns used in the language. Clojure functions that promise to return sequences, such as map and filter, work the same way as rest. A seq is any object that implements the seq API, thereby supporting the functions first and rest. You might consider it an immutable variant of an enumerator or iterator where the lack of internal state allows near limitless concurrent and parallel iteration over the same seq.

Throughout this book, we’ll use very precise terms for certain aspects and features of Clojure. Table 5.1 provides a summary of some of the collection-specific terms.

Table 5.1. Sequence terms in brief

|

Term |

Brief description |

Example(s) |

|---|---|---|

| Collection | A composite data type | [1 2], {:a 1}, #{1 2}, and lists and arrays |

| Sequential | Ordered series of values | [1 2 3 4], (1 2 3 4) |

| Sequence | A sequential collection that may or may not exist yet | The result of (map a-fun a-collection) |

| Seq | Simple API for navigating collections | first, rest, nil, and () |

| clojure.core/seq | A function that returns an object implementing the seq API | (seq []) ;;=> nil and (seq [1 2]) ;;=> (1 2) |

There’s also a function called seq that accepts a wide variety of collection-like objects. Some collections, such as lists, implement the seq API directly, so calling seq on them returns the collection itself. More often, calling seq on a collection returns a new seq object for navigating that collection. In either case, if the collection is empty, seq returns nil and never an empty sequence. Functions that promise to return seqs (not sequences), such as next, work the same way.

Clojure’s sequence library manipulates collections, strings, arrays, and so on as if they were sequences, using the seq function and seq API.

Clojure includes a few predicates with names like the words just defined. Although they’re not frequently used, it seems worth mentioning that they may not mean exactly what the definitions here might suggest. For example, every object for which sequential? returns true is a sequential collection, but it returns false for some that are also sequential. This is because of implementation details that may be improved in a future version of Clojure.

Equality Partitions

As we mentioned previously, Clojure classifies each collection data type into one of three logical categories or partitions: sequentials, maps, and sets. These divisions draw clear distinctions between the types and help define equality semantics. Specifically, two objects will never be equal if they belong to different partitions. Few collection types are actually sequences, although several such as vectors are sequential.

If two sequentials have the same values in the same order, = returns true for them, even if their concrete types are different, as shown:

(= [1 2 3] '(1 2 3)) ;=> true

Conversely, even if two collections have the same exact values, if one is a sequential collection and the other isn’t, = returns false:

(= [1 2 3] #{1 2 3})

;=> false

Examples of things that are sequential include Clojure lists and vectors, and Java lists such as java.util.ArrayList. In fact, everything that implements java.util.List is included in the sequential partition.

Generally, things that fall into the other partitions include set or map in their name and so are easy to identify.

The Sequence Abstraction

Many Lisps build their data types (McCarthy 1962) on the cons-cell abstraction, an elegant two-element structure illustrated in figure 5.1. The cons-cell is used to build a linked list structure akin to the java.util.LinkedList type in the Java core library. In fact, although the cons-cell is the base structure on which traditional Lisps are built, the name Lisp comes from its focus on list processing.

Figure 5.1. Each cons-cell is a simple pair, a car and a cdr. (A) A list with two cells, each of which has a value—x and y, respectively—as the head (the car in Lisp terminology) and a list as the tail (the cdr). This is very similar to first and rest in Clojure sequences. (B) A cons-cell with a simple value for both the head and tail. This is called a dotted pair but is not supported by any of Clojure’s built-in types.

Clojure also has a couple of cons-cell-like structures that are covered in section 5.4, but they’re not central to Clojure’s design. Instead, the conceptual interface fulfilled by the cons-cell has been lifted off the concrete structure illustrated previously and been named sequence. All an object needs to do to be a sequence is to support the two core functions: first and rest. This isn’t much, but it’s all that’s required for the bulk of Clojure’s powerful library of sequence functions and macros to be able to operate on the collection: filter, map, for, doseq, take, partition ... the list goes on.

At the same time, a wide variety of objects satisfy this interface. Every Clojure collection provides at least one kind of seq object for walking through its contents, exposed via the seq function. Some collections provide more than one; for example, vectors support rseq, and maps support the functions keys and vals. All of these functions return a seq or, if the collection is empty, nil.

You can see examples of this by looking at the types of objects returned by various expressions. Here’s the map class:

(class (hash-map :a 1)) ;=> clojure.lang.PersistentHashMap

Unsurprisingly, the hash-map function returns an object of type PersistentHashMap. Passing that map object to seq returns an entirely new kind of object:

(seq (hash-map :a 1)) ;=> ([:a 1]) (class (seq (hash-map :a 1))) ;=> clojure.lang.PersistentHashMap$NodeSeq

This class name suggests it’s a seq of nodes on a hash map. Similarly, you can get a seq of keys on the same map:

(seq (keys (hash-map :a 1))) ;=> (:a) (class (keys (hash-map :a 1))) ;=> clojure.lang.APersistentMap$KeySeq

Note that these specific class names are an implementation detail that may change in the future, but the concepts they embody are central to Clojure and unlikely to change.

Having laid the foundation for a deeper dive into the sequence abstraction, we now must quickly diverge into a simplified discussion of asymptotic complexity of algorithms and Big-O notation. If you’re already comfortable with these topics, by all means skip to section 5.2. If you need a refresher or an overview, then the next section is a minimalist introduction (Cormen 2009) to the topic.

5.1.3. Big-O

This book isn’t heavily focused on asymptotic complexity, but we do mention it a handful of times throughout, so here we’ll cover the minimum required for understanding these few mentions. You may have gone your entire career without having to understand Big-O notation, and you may go the remainder similarly. But that’s no reason not to learn more; and a bit of understanding about Big-O and its implications will go a long way toward helping you choose between Clojure collections, as well as design and analyze algorithms in general.

Algorithmic complexity is a system for describing the relative space and time costs for algorithms. Typically the complexity of an algorithm is described using what’s known as Big-O notation. For example, you may have heard that finding an element in a linked list is O(n), which is read as order n. This means if you have a list (:a :b :c) of length 3, then verifying that the keyword :c is in that list requires three comparisons. This highlights the worst case of list access because :c is at the end of the list, but you don’t need to worry too much about the worst-case scenario unless that’s the only difference between two algorithms. On the other hand, to verify that :a is in the same list is O(1), which is read as constant time. Finding :a represents the best case for list access because it’s at the front of the list. But you shouldn’t build your hopes that elements will always be at the front—in analyzing algorithms, the best-case scenario is too rare to matter much. What you care about when analyzing algorithms is the expected case, or what you’re likely to see in practice. When looking at a few million runs of verifying that some value is contained in a million different lists, you inevitably see that the average number of comparisons required approaches the length of the list, divided by two. But because doubling the length of the list would also double the number of comparisons done in both the expected and worst cases, they’re grouped into the same Big-O category: O(n), also known as linear time.

Thus two algorithms that are in the same Big-O category may perform very differently, especially on small workloads. This makes the most difference when there’s a large constant term: work that the algorithm has to do up front regardless of the size of the workload.

When the workload is small, an O(1) algorithm with a large constant factor may be more costly than an O(n) algorithm that’s without extra costs. But as the workload increases, an O(1) algorithm always overtakes the O(n) algorithm, as shown in figure 5.2. Big-O doesn’t concern itself with these constant factors or small workloads.

Figure 5.2. In Big-O, regardless of the other ancillary costs, the higher order of magnitude always overtakes the lower eventually.

When learning about Clojure’s persistent data structures, you’re likely to hear the term O(log32 n) for those based on the persistent hash trie and O(log2 n) for the sorted structures. Accessing an element in a Clojure persistent structure by index is O(log n), or logarithmic. Logarithmic complexity describes a class of algorithms that are effectively immune to large changes in the size of their data. In the case of Clojure’s persistent structures, this means there’s little difference in “hops” (such as comparisons) between locating an element in a structure containing 100 elements and one containing 1 million elements. In practice you may notice some difference, because for a billion objects O(log2 n) would require approximately 30 comparisons for a lookup, whereas O(log32 n) would require only about 6. Given the smaller number of operations required for the O(log32 n) data structures, they can be viewed as providing a nearly O(1) lookup and update.[2]

2 Logarithms in Big-O notation are specified without a base because they differ from one another only by a constant factor. Both log32 n and log2 n scale exactly the same way; they’re just multiplied by a smaller constant. In no way is O(log32 n) more like O(1) then O(ln n)—in formal Big-O notation, they’re exactly the same.

We’ve covered the basic ideas behind persistence and the sequence abstraction and even touched on the basics of Big-O notation. Now we’ll discuss Clojure’s primary collection types and how these concepts apply to each, starting with vectors.

5.2. Vectors: creating and using them in all their varieties

Vectors store zero or more values sequentially, indexed by number; they’re a bit like arrays but are immutable and persistent. They’re versatile and make efficient use of memory and processor resources at both small and large sizes.

Vectors are probably the most frequently used collection type in Clojure code. They’re used as literals for argument lists and let bindings, for holding large amounts of application data, as stacks, and as map entries. We’ll also address efficiency considerations, including growing on the right end, subvectors, and reversals, and finally we’ll discuss cases in which vectors aren’t an optimal solution.

5.2.1. Building vectors

The vector’s literal square-bracket syntax is one reason you may choose to use a vector over a list. For example, the let form would work perfectly well, and with a nearly identical implementation, if it took a literal list of bindings instead of a literal vector. But the square brackets are visually different from the round parentheses surrounding the let form itself as well as the likely function calls in the body of the let form, and this is useful for humans (so we hear). Using vectors to indicate bindings for let, with-open, fn, and such is idiomatic Clojure practice and is a pattern you’re encouraged to follow in any similar macros you write.

The most common way to create a vector is with the literal syntax like [1 2 3]. But in many cases, you’ll want to create a vector out of the contents of some other kind of collection. For this, there’s the function vec:

(vec (range 10)) ;=> [0 1 2 3 4 5 6 7 8 9]

If you already have a vector but want to pour several values into it, then into is your friend:

(let [my-vector [:a :b :c]] (into my-vector (range 10))) ;=> [:a :b :c 0 1 2 3 4 5 6 7 8 9]

If you want it to return a vector, the first argument to into must be a vector. The second arg can be any sequence, such as what range returns, or anything else that works with the seq function. You can view the operation of into as similar to an O(n) concatenation based on the size of the second argument.[3] Clojure also provides a vector function to build a vector from its arguments, which is handy for constructs like (map vector a b).

3 Vectors can’t be concatenated any more efficiently than O(n).

Clojure can store primitive types inside of vectors using the vector-of function, which takes any of :int, :long, :float, :double, :byte, :short, :boolean, or :char as its argument and returns an empty vector. This returned vector acts like any other vector, except that it stores its contents as primitives internally. All the normal vector operations still apply, and the new vector attempts to coerce any additions into its internal type when being added:

(into (vector-of :int) [Math/PI 2 1.3])

;=> [3 2 1]

(into (vector-of :char) [100 101 102])

;=> [d e f]

(into (vector-of :int) [1 2 623876371267813267326786327863])

; java.lang.IllegalArgumentException: Value out of range for int:

-8359803716404783817

In addition, all caveats mentioned in section 4.1 regarding overflow, underflow, and so forth also apply to vectors of primitives.

Using vec and into, it’s easy to build vectors much larger than can be conveniently built using vector literals. But once you have a large vector like that, what are you going to do with it?

5.2.2. Large vectors

When collections are small, the performance differences between vectors and lists hardly matter. But as both get larger, each becomes dramatically slower at operations the other can still perform efficiently. Vectors are particularly efficient at three things relative to lists: adding or removing things from the right end of the collection, accessing or changing items in the interior of the collection by numeric index, and walking in reverse order. Adding and removing from the end is done by treating the vector as a stack—we’ll cover that in section 5.2.3.

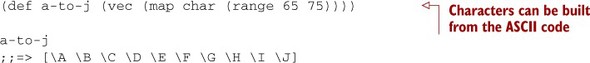

Any item in a vector can be accessed by its index number from 0 up to but not including (count my-vector) in essentially constant time.[4] You can do this using the function nth; using the function get, essentially treating the vector like a map; or by invoking the vector as a function. Look at each of these as applied to this example vector:

4 Several operations on Clojure’s persistent data structures are described in this book as “essentially constant time.” In all cases, these are O(log32 n).

All three of these do the same work, and each returns E:

(nth a-to-j 4) ;;=> E (get a-to-j 4) ;;=> E (a-to-j 4) ;;=> E

Which to use is a judgment call, but table 5.2 highlights some points you might consider when choosing.

Table 5.2. Vector lookup options: the three ways to look up an item in a vector, and how each responds to different exceptional circumstances

|

nth |

get |

Vector as a function |

|

|---|---|---|---|

| If the vector is nil | Returns nil | Returns nil | Throws an exception |

| If the index is out of range | Throws exception by default or returns a “not found” if supplied | Returns nil | Throws an exception |

| Supports a “not found” arg | Yes (nth [] 9 :whoops) | Yes (get [] 9 :whoops) | No |

Because vectors are indexed, they can be efficiently walked in either direction, left to right or right to left. The seq and rseq functions return sequences that do exactly that:

(seq a-to-j) ;=> (A B C D E F G H I J) (rseq a-to-j) ;=> (J I H G F E D C B A)

Any item in a vector can be changed using the assoc function. Clojure does this in essentially constant time using structural sharing between the old and new vectors as described at the beginning of this chapter:

(assoc a-to-j 4 "no longer E") ;=> [A B C D "no longer E" F G H I J]

The assoc function for vectors only works on indices that already exist in the vector or, as a special case, exactly one step past the end. In this case, the returned vector is one item larger than the input vector. More frequently, vectors are grown using the conj function, as you’ll see in the next section.

A few higher-powered functions are provided that use assoc internally. For example, the replace function works on both seqs and vectors, but when given a vector, it uses assoc to fix up and return a new vector:

(replace {2 :a, 4 :b} [1 2 3 2 3 4])

;=> [1 :a 3 :a 3 :b]

The functions assoc-in and update-in are for working with nested structures of vectors and/or maps, like this one:[5]

5 Nested vectors are far from the most efficient way to store or process matrices, but they’re convenient to manipulate in Clojure and so make a good example here. More efficient options include a single vector, arrays, or a library for matrix processing such as Colt or Incanter (http://incanter.org).

(def matrix

[[1 2 3]

[4 5 6]

[7 8 9]])

assoc-in, get-in, and update-in take a series of indices to pick items from each more deeply nested level. For a vector arranged like the earlier matrix example, this amounts to row and column coordinates:

(get-in matrix [1 2]) ;=> 6 (assoc-in matrix [1 2] 'x) ;=> [[1 2 3] [4 5 x] [7 8 9]]

The update-in function works the same way, but instead of taking a value to overwrite an existing value, it takes a function to apply to an existing value. It replaces the value at the given coordinates with the return value of the function given:

(update-in matrix [1 2] * 100) ;=> [[1 2 3] [4 5 600] [7 8 9]]

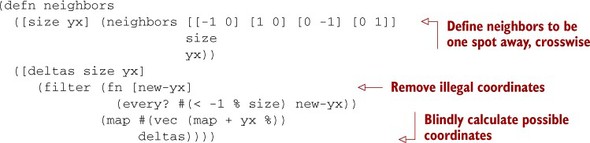

The coordinates refer to the value 6, and the function given here is * taking an argument 100, so the slot becomes the return value of (* 6 100). There’s also a function get-in for retrieving a value in a nested vector. Before exploring its operation, let’s create a function neighbors in the following listing that, given a y-x location in an square 2D matrix, returns a sequence of the locations surrounding it.

Listing 5.1. Function for finding the neighbors of a spot on a 2D matrix

The operation of neighbors is fairly straightforward, but let’s walk through it a little. The deltas local describes that a neighbor can be one spot away, but only along the x or y axis (not diagonally). The function first walks through deltas and builds a vector of each added to the yx point provided. This operation of course generates illegal point coordinates, so those are removed using filter, which checks to ensure that the indices lie between -1 and the provided size.

To show this in action, you can call neighbors, indicating a 3 × 3 matrix and asking for the neighbors of the top-left cell:

(neighbors 3 [0 0]) ;;=> ((1 0) (0 1))

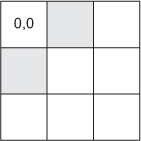

The result indicates that the crosswise neighbors of cell y=0|x=0 are the cells y=1|x=0 and y=0|x=1. You can see how this result is correct graphically in figure 5.3.

Figure 5.3. The crosswise neighbors of cell 0,0

You can also call neighbors with the same-sized matrix but asking for the neighbors of the center cell:

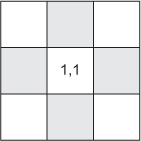

(neighbors 3 [1 1]) ;;=> ((0 1) (2 1) (1 0) (1 2))

The result indicates that the crosswise neighbors of the center cell at y=1|x=1 are the cells forming a plus, as shown in figure 5.4.

Figure 5.4. The crosswise neighbors of cell 1,1

You can test the result of neighbors on cell 0,0 using get-in as follows:

(map #(get-in matrix %) (neighbors 3 [0 0])) ;=> (4 2)

For each neighbor coordinate returned from neighbors, you use get-in to retrieve the value at that point. The position [0 0] corresponding to the value 1 has the neighboring values 4 and 2. You’ll use neighbors again in section 7.4; but next we’ll look at growing and shrinking vectors—treating them like stacks.

5.2.3. Vectors as stacks

Classic stacks have at least two operations, push and pop. With respect to Clojure vectors, these operations are called conj and pop, respectively. The conj function adds elements to, and pop removes elements from, the right side of the stack. Because vectors are immutable, pop returns a new vector with the rightmost item dropped—this is different from many mutable stack APIs, which generally return the dropped item. Consequently, peek becomes more important as the primary way to get an item from the top of the stack:

(def my-stack [1 2 3]) (peek my-stack) ;=> 3 (pop my-stack) ;=> [1 2] (conj my-stack 4) ;=> [1 2 3 4] (+ (peek my-stack) (peek (pop my-stack))) ;=> 5

Each of these operations completes in essentially constant time. Most of the time, a vector that’s used as a stack is used that way throughout its life. It’s helpful to future readers of your code to keep this is mind and use the stack operations consistently, even when other functions might work. For example, last on a vector returns the same thing as peek; but besides being slower,[6] it leads to unnecessary confusion about how the collection is being used. If the algorithm involved calls for a stack, use conj, not assoc, for growing the vector; peek, not last; and pop, not dissoc, for shrinking it.

6 last is slower than peek for a vector because last always walks to the end of the collection given to it, whereas peek is defined in terms of the most efficient operation for the collection type.

Any object that implements clojure.lang.IPersistentStack[7] can use the functions conj, pop, and peek. In addition to vectors, Clojure lists also implement this interface, but the functions operate on the left side of lists instead of the right side as with vectors. When operating on either via the stack discipline, it’s best to ignore the ordering, because it tends to add confusion.

7 The conj function also works with all Clojure’s other persistent collection types, even if they don’t implement clojure.lang.IPersistentStack.

5.2.4. Using vectors instead of reverse

The ability of vectors to grow efficiently on the right side and then be walked right to left produces a noteworthy emergent behavior: you rarely need the reverse function. This is different from most Lisps and schemes. When processing a list, it’s pretty common to want to produce a new list in the same order. But if all you have are classic Lisp lists, often the most natural algorithm[8] leaves you with a backward list that needs to be reversed. Here’s an example of a function similar to Clojure’s map:

8 ... the most natural tail-recursive algorithm, anyway.

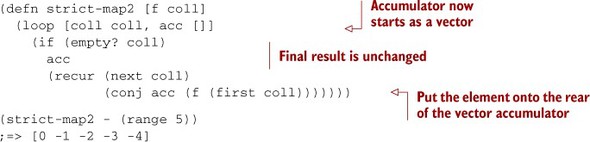

This is adequate code, except for that glaring reverse of the final return value. After the entire list has been walked once to produce the desired values, reverse walks it again to get the values in the right order. This is both inefficient and unnecessary. One way to get rid of the reverse is to use a vector instead of a list as the accumulator:

A small change, but the code is now a touch cleaner and faster. It does return a vector instead of a list, but this is rarely a problem, because any client code that wants to treat this as a seq can usually do so automatically.[9]

9 Another way to get rid of a reverse is to build a lazy sequence instead of accumulating a strict collection. This is how Clojure’s own map function is implemented; type (source map) in your REPL to see it.

The examples we’ve shown so far have all been plain vectors, but we’ll turn now to the special features of some other vector types, starting with subvectors.

5.2.5. Subvectors

Although items can’t be removed efficiently from a vector (except the rightmost item), Clojure provide a fast way to take a slice of an existing vector such as a-to-j (previously defined as [A B C D E F G H I J]) based on start and end indices with the subvec function:

(subvec a-to-j 3 6) ;=> [D E F]

The first index given to subvec is inclusive (starts at index 3), but the second is exclusive (ends before index 6). The new subvector internally hangs on to the entire original a-to-j vector, making each lookup performed on the new vector cause the subvector to do a little offset math and then look it up in the original. This makes creating a subvector fast. You can use subvec on any kind of vector, and it’ll work fine. But there’s special logic for taking a subvec of a subvec, in which case the newest subvector keeps a reference to the original vector, not the intermediate subvector. This prevents subvectors of subvectors from stacking up needlessly, and it keeps both the creation and use of the sub-subvecs fast and efficient.

5.2.6. Vectors as map entries

Clojure’s hash map, just like hash tables or dictionaries in many other languages, has a mechanism to iterate through the entire collection. Clojure’s solution for this iterator is, unsurprisingly, a seq. Each item of this seq needs to include both the key and the value, so they’re wrapped in a map entry. When printed, each entry looks like a vector:[10]

10 In the version of Clojure used while writing the book, we got [:depth 15] as our answer. But because map entry order isn’t guaranteed, you may see something different with a different version of Clojure.

(first {:width 10, :height 20, :depth 15})

;=> [:depth 15]

But not only does a map entry look like a vector, it really is one:

(vector? (first {:width 10, :height 20, :depth 15}))

;=> true

This means you can use all the regular vector functions on it: conj, get, and so on. It even supports destructuring, which can be handy. For example, the following locals dimension and amount take on the value of each key/value pair in turn:

(doseq [[dimension amount] {:width 10, :height 20, :depth 15}]

(println (str (name dimension) ":") amount "inches"))

; width: 10 inches

; height: 20 inches

; depth: 15 inches

;=> nil

A MapEntry is its own type and has two functions for retrieving its contents: key and val, which do exactly the same thing as (nth my-map 0) and (nth my-map 1), respectively. These are sometimes useful for the clarity they can bring to your code, but frequently destructuring is used instead, because it’s so darned handy.

Now you know what vectors are, what specific kinds of vectors are included in Clojure, and some of the things they’re good at doing. To round out your understanding of vectors, we’ll conclude with a brief look at things that vectors are bad at doing.

5.2.7. What vectors aren’t

Vectors are versatile, but there are some commonly desired patterns for which they might seem like a good solution but in fact aren’t. Although we prefer to focus on the positive, we hope a few negative examples will help you avoid using the wrong tool for the job.

Vectors aren’t sparse

If you have a vector of length n, the only position where you can insert a value is at index n—appending to the far-right end. You can’t skip some indices and insert at a higher index number. If you want a collection indexed by nonsequential numbers, consider a hash map or sorted map. Although you can replace values in a vector, you can’t insert or delete items such that indices for the subsequent items would have to be adjusted. Clojure doesn’t currently have a native persistent collection that supports this kind of operation, but a Clojure contrib library aptly named data.finger-tree[11] supporting a structure called finger trees may help for these use cases.

11 The data.finger-tree library is located at https://github.com/clojure/data.finger-tree.

Vectors aren’t queues

Some people have tried to use vectors as queues. One approach would be to push items onto the right end of the vector using conj and then to pop items off the left using rest or next. The problem with this is that rest and next return seqs, not vectors, so subsequent conj operations wouldn’t behave as desired. Using into to convert the seq back into a vector is O(n), which is less than ideal for every pop.

Another approach is to use subvec as a “pop,” leaving off the leftmost item. Because subvec does return a vector, subsequent conj operations will push onto the right end as desired. But as explained earlier, subvec maintains a reference to the entire underlying vector, so none of the items being popped this way will ever be garbage collected. Also less than ideal.

So what would be the ideal way to do queue operations on a persistent collection? Why, use a PersistentQueue, of course. See section 5.5 for details.

Vectors aren’t sets

If you want to find out whether a vector contains a particular value, you might be tempted to use the contains? function, but you’d be disappointed by the results. Clojure’s contains? is for asking whether a particular key, not value, is in a collection. So for a vector, the “keys” are the indices of its elements (the first element is at index 0, and so on). Although it’s sometimes useful to check whether an index is contained in a vector using contains?, more often than not you want to find a value.

In this section, we showed how to create vectors using literal syntax or by building them up programmatically. We looked at how to push them, pop them, and slice them. We also looked at some of the things vectors can’t do well. One of these is adding and removing items from the left side; although vectors can’t do this, lists can, as we’ll discuss next.

5.3. Lists: Clojure’s code-form data structure

Clojure’s PersistentLists are by far the simplest of Clojure’s persistent collection types. A PersistentList is a singly linked list where each node knows its distance from the end. List elements can only be found by starting with the first element and walking each prior node in order, and can only be added or removed from the left end.

Lists are almost exclusively used to represent code forms. They’re used literally in code to call functions, macros, and so forth, as we’ll discuss shortly. Code forms are also built programmatically to then be evaled or used as the return value for a macro. If the final usage of a collection isn’t as Clojure code, lists rarely offer any value over vectors and are thus rarely used. But lists have a rich heritage in Lisps, so we’ll discuss when they should be used in Clojure, and also when they shouldn’t—situations in which there are now better options.

5.3.1. Lists like Lisps like

All flavors of Lisp have lists that they like to use, and Clojure lists, already introduced in chapter 2, are similar enough to be familiar. The functions have different names, but what other Lisps call car is the same as first on a Clojure list. Similarly, cdr is the same as next. But there are substantial differences as well. Perhaps the most surprising is the behavior of cons. Both cons and conj add something to the front of a list, but the order of their arguments is different:

(cons 1 '(2 3)) ;=> (1 2 3) (conj '(2 3) 1) ;=> (1 2 3)

In a departure from classic Lisps, the “right” way to add to the front of a list is with conj. For each concrete type, conj adds elements in the most efficient way, and for lists this means at the left side. Additionally, a list built using conj is homogeneous—all the objects on its next chain are guaranteed to be lists, whereas sequences built with cons only promise that the result will be some kind of seq. So you can use cons to add to the front of a lazy seq, a range, or any other type of seq, but the only way to get a bigger list is to use conj.[12] Either way, the next part has to be some kind of sequence, which points out another difference from other Lisps: Clojure has no dotted pair, a cons-cell whose cdr is a value, not another cons, as we showed earlier in figure 5.1. All you need to know is that if you want a simple pair in a Clojure program, you should use a vector of two items.

12 Or to conj or cons onto nil. This is a special case, because nil isn’t the same as an empty collection of any specific type. Clojure could have left this unsupported, perhaps throwing an exception if you did (cons 1 nil), but instead it provides a reasonable default behavior: building a list one item long.

All seqs print with rounded parentheses, but this does not mean they’re the same type or will behave the same way. For example, many of these seq types don’t know their own size the way lists do, so calling count on them may be O(n) instead of O(1).[13] An unsurprising difference between lists in Clojure and other Lisps is that they’re immutable. At least that had better not be surprising anymore. Changing values in a list is generally discouraged in other Lisps anyway, but in Clojure it’s impossible.

13 You can test for this property of being countable in constant time using the counted? function. For example, (counted? (range 10)) returns true in Clojure 1.0 but false in 1.1, because the implementation of range changed between those versions and no longer provides O(1) counting.

5.3.2. Lists as stacks

Lists in all Lisps can be used as stacks, but Clojure goes further by supporting the IPersistentStack interface. This means you can use the functions peek and pop to do roughly the same thing as first and next. Two details are worth noting. One is that next and rest are legal on an empty list, but pop throws an exception. The other is that next on a one-item list returns nil, whereas rest and pop both return an empty list.

When you want a stack, the choice between using a list and a vector is a somewhat subtle decision. Their memory organization is different, so it may be worth testing your usage to see which performs better. Also, the order of values returned by seq on a list is backward compared to seq on a vector, and in rare cases this can point to one or the other as the best solution. In the end, it may come down primarily to personal taste.

5.3.3. What lists aren’t

Probably the most common misuse of lists is to hold items that will be looked up by index. Although you can use nth to get the 42nd (or any other) item from a list, Clojure has to walk the list from the beginning to find it. Don’t do that. In fact, this is a practical reason why lists can’t be used as functions, as in ((list :a) 0). Vectors are good at looking things up by index, so use one of those instead.

Lists are also not sets. All the reasons we gave in the previous section for why it’s a bad idea to frequently search a vector looking for a particular value apply to lists as well. Even more so because contains? always returns false for a list. See section 5.5.

Finally, lists aren’t queues. You can add items to one end of a list, but you can’t remove things from the other end. So what should you use when you need a queue? Funny you should ask ...

5.4. How to use persistent queues

We mentioned in section 5.2 that new Clojure developers often attempt to implement simple queues using vectors. Although this is possible, such an implementation leaves much to be desired. Instead, Clojure provides a persistent immutable queue that will serve all your queueing needs. In this section, we’ll touch on the usage of the PersistentQueue class, where its first-in-first-out (FIFO) queueing discipline (Knuth 1997) is described by conj adding to the rear, pop removing from the front, and peek returning the front element without removal.

Before going further, it’s important to point out that Clojure’s PersistentQueue is a collection, not a workflow mechanism. Java has classes deriving from the java.util.concurrent.BlockingQueue interface for workflow, which often are useful in Clojure programs, and those aren’t these. If you find yourself wanting to repeatedly check a work queue to see if there’s an item of work to be popped off, or if you want to use a queue to send a task to another thread, you do not want the PersistentQueue, because it’s an immutable structure; thus there’s no way to inherently communicate changes from one worker to another.

5.4.1. A queue about nothing

Search all you like, but the current implementation of Clojure doesn’t provide special syntax like the vector, set, and map literals for creating persistent queues.[14] That being the case, how would you go about creating a queue? The answer is that there’s a readily available empty queue instance to use, clojure.lang.PersistentQueue/EMPTY. The printed representation for Clojure’s queues isn’t incredibly informative, but you can change that by providing a method on the print-method multimethod:

14 The Clojure core language grows carefully, tending to incorporate only features that have proven useful. Queues currently stand at the edge of this growth, meaning there might be more (or less) support for them in the future.

Defining print-method implementations is a convenient mechanism for printing types in logical ways. This fun format, that we call the queue fish, indicates a direction of flow for conj and pop.

You might think that popping an empty queue would raise an exception, but the fact is that this action results in another empty queue. Likewise, peeking an empty queue returns nil. Not breathtaking, for sure, but this behavior helps to ensure that queues work in place of other sequences. The functions first, rest, and next also work on queues and give the results you might expect, although rest and next return seqs, not queues. Therefore, if you’re using a queue as a queue, it’s best to use the functions designed for this purpose: peek, pop, and conj.

5.4.2. Putting things on

The mechanism for adding elements to a queue is conj:

(def schedule

(conj clojure.lang.PersistentQueue/EMPTY

:wake-up :shower :brush-teeth))

schedule

;=> <-(:wake-up :shower :brush-teeth)-<

Clojure’s persistent queue is implemented internally using two separate collections, the front being a seq and the rear being a vector, as shown in figure 5.5. All insertions occur in the rear vector, and all removals occur in the front seq, taking advantage of each collection’s strength. When all the items from the front list have been popped, the back vector is wrapped in a seq to become the new front, and an empty vector is used as the new back.

Figure 5.5. The two collections used internally in a single queue. peek returns the front item of the seq, pop returns a new queue with the front of the seq left off, and conj adds a new item to the back of the vector.

Typically, an immutable queue such as this is implemented with the rear as a list in reverse order, because insertion to the front of a list is an efficient operation. But using a Clojure vector eliminates the need for a reversed list.

5.4.3. Getting things

Clojure provides the peek function to get the front element in a queue:

(peek schedule) ;=> :wake-up

The fact that performing peek doesn’t modify the contents of a persistent queue should be no surprise by now.

5.4.4. Taking things off

To remove elements from the front of a queue, use the pop function and not rest:

(pop schedule) ;=> <-(:shower :brush-teeth)-< (rest schedule) ;=> (:shower :brush-teeth)

Although rest returns something with the same values and even prints the same thing pop returns, the former is a seq, not a queue. This is potentially the source of subtle bugs, because subsequent attempts to use conj on the returned seq won’t preserve the speed guarantees of the queue type, and the queue functions pop, peek, and conj won’t behave as expected.

We’ve talked numerous times in this chapter about the sequence abstraction, and although it’s an important consideration, it shouldn’t always be used. Instead, it’s important to know your data structures, their sweet spots, and their operations. By doing so, you can write code that’s specialized in ways that use the performance characteristics you need for a given problem space. Clojure’s persistent queues illustrate this fact perfectly. To further highlight this point, we’ll now explore Clojure’s set type.

5.5. Persistent sets

Clojure sets work the same as mathematical sets, in that they’re collections of unsorted unique elements. In this section, we’ll cover sets by explaining their strong points, weaknesses, and idioms. We’ll also cover some of the functions from the clojure.set namespace.

5.5.1. Basic properties of Clojure sets

Sets are functions of their elements that return the matched element or nil:

(#{:a :b :c :d} :c)

;=> :c

(#{:a :b :c :d} :e)

;=> nil

Set elements can be accessed via the get function, which returns the queried value if it exists in the given set:

(get #{:a 1 :b 2} :b)

;=> :b

(get #{:a 1 :b 2} :z :nothing-doing)

;=> :nothing-doing

An added advantage of using get is shown here in the second call: get allows a third argument that is used as the “not found” value, should a key lookup fail. As a final point, sets, like all Clojure’s collections, support heterogeneous values.

How Clojure populates sets

The key to understanding how Clojure sets determine which elements are discrete lies in one simple statement. Given two elements evaluating as equal, a set will contain only one, independent of concrete types:

(into #{[]} [()])

;;=> #{[]}

(into #{[1 2]} '[(1 2)])

;;=> #{[1 2]}

(into #{[] #{} {}} [()])

;;=> #{#{} {} []}

From the first two examples, even though [] and () are of differing types, they’re considered equal because their elements are equal or (in this case) empty. But the last example illustrates nicely that collections in an equality partition (as described in section 5.1.2) will always be equal if their elements are equal, but will never be considered equal if the collections are of types in different equality partitions.

As we’ll explain in section 5.5.3, trying to find a value in a vector using the contains? function doesn’t work the way we’d hope. Instead, the some function takes a predicate and a sequence. It applies the predicate to each element in turn, returning the first truthy value returned by the predicate or else nil:

(some #{:b} [:a 1 :b 2])

;=> :b

(some #{1 :b} [:a 1 :b 2])

;=> 1

Using a set as the predicate supplied to some allows you to check whether any of the truthy values in the set are contained within the given sequence. This is a frequently used Clojure idiom for searching for containment within a sequence.

5.5.2. Keeping your sets in order with sorted-set

There’s not much to say about creating sorted sets with the sorted-set function. But there’s a simple rule you should bear in mind:

(sorted-set :b :c :a)

;=> #{:a :b :c}

(sorted-set [3 4] [1 2])

;=> #{[1 2] [3 4]}

(sorted-set :b 2 :c :a 3 1)

; java.lang.ClassCastException: clojure.lang.Keyword cannot be cast to

java.lang.Number

As long as the arguments to the sorted-set function are mutually comparable, you’ll receive a sorted set; otherwise an exception is thrown. This can manifest itself when you’re dealing with sorted sets downstream from their point of creation, leading to potential confusion:

(def my-set (sorted-set :a :b))

;; ... some time later

(conj my-set "a")

;=> java.lang.ClassCastException: clojure.lang.Keyword cannot be cast to

java.lang.String

The difficulty in finding the reason for this exception will increase as the distance between the creation of my-set and the call to conj increases. You can adjust this rule a bit by using sorted-set-by instead and providing your own comparator. This works exactly like the comparator for sorted-map-by, which we’ll cover in section 5.6.2. Sorted maps and sorted sets are also similar in their support of subseq, to allow efficiently jumping to a particular key in the collection and walking through it from there. This is covered in section 5.6.

5.5.3. The contains? function

As we touched on in section 5.2, there’s sometimes confusion regarding the usage of Clojure’s contains? function. Many newcomers to Clojure expect this function to work the same as Java’s java.util.Collection#contains method; this assumption is false, as shown:

(contains? #{1 2 4 3} 4)

;=> true

(contains? [1 2 4 3] 4)

;=> false

If you were to draw a false analogy between Java’s .contains methods and contains?, then both of the function calls noted here should return true. The official documentation for contains? describes it as a function that returns true if a given key exists in a collection. When reading the word key, the notion of a map springs to mind, but the fact that this function also works on sets hints at their implementation details. Sets are implemented as maps with the same element as the key and value,[15] but there’s an additional check for containment before insertion.

15 All implementation caveats apply.

5.5.4. The clojure.set namespace

Mathematical sets form the basis of much of modern mathematical thought, and Clojure’s basic set functions in the clojure.set namespace are a clear reflection of the classical set operations. In this subsection, we’ll briefly cover each function and talk about how, when applicable, they differ from the mathematical model. To start using the functions in the clojure.set namespace, enter the following into your REPL:

(require 'clojure.set)

Or, if you wish to use these functions in your namespace, use the following inclusion:

(ns my.cool.lib (:require clojure.set))

To describe some of the functions in the clojure.set namespace, let’s start with a simple picture. Figure 5.6 describes the nature of Clojure’s set functions, each of which will be shown presently. Note that Clojure’s set functions take an arbitrary number of sets and apply the operation incrementally.

Figure 5.6. The three Venn diagrams show a graphical representation of Clojure’s set functions: intersection, union, and difference.

The intersection function

Clojure’s clojure.set/intersection function works as you might expect. Given two sets, intersection returns a set of the common elements. Given n sets, it incrementally returns the intersection of resulting sets and the next set, as shown in the following code:

(clojure.set/intersection #{:humans :fruit-bats :zombies}

#{:chupacabra :zombies :humans});=>

#{:zombies :humans}

(clojure.set/intersection #{:pez :gum :dots :skor}

#{:pez :skor :pocky}

#{:pocky :gum :skor})

;=> #{:skor}

In the first example, the resulting set is the common elements between the given sets. The second example is the result of the intersection of the first two sets then intersected with the final set.

The union function

There’s also likely no surprise when using the clojure.set/union function:

(clojure.set/union #{:humans :fruit-bats :zombies}

#{:chupacabra :zombies :humans})

;=> #{:chupacabra :fruit-bats :zombies :humans}

(clojure.set/union #{:pez :gum :dots :skor}

#{:pez :skor :pocky}

#{:pocky :gum :skor})

;=> #{:pez :pocky :gum :skor :dots}

Given two sets, the resulting set contains all the distinct elements from both. In the first example, this means :zombies and :humans show up only once each in the return value. Note in the second example that more than two sets may be given to union, but as expected each value given in any of the input sets is included exactly once in the output set.

The difference function

The only set function that could potentially cause confusion on first glance is clojure .set/difference, which by name implies some sort of opposition to a union operation. Working under this false assumption, you might assume that difference would operate thusly:

;; This is not what really happens

(clojure.set/difference #{1 2 3 4} #{3 4 5 6})

;=> #{1 2 5 6}

But if you were to evaluate this expression in your REPL, you’d receive a very different result:

(clojure.set/difference #{1 2 3 4} #{3 4 5 6})

;=> #{1 2}

The reason for this result is that Clojure’s difference function calculates what’s known as a relative complement (Stewart 1995) between two sets. In other words, difference can be viewed as a set-subtraction function, “removing” all elements in a set A that are also in another set B.

5.6. Thinking in maps

It’s difficult to write a program of any significant size without the need for a map of some sort. The use of maps is ubiquitous in writing software because, frankly, it’s hard to imagine a more robust data structure. But we as programmers tend to view maps as a special case structure outside the normal realm of data objects and classes. The object-oriented school of thought has relegated the map to being a supporting player in favor of the class. We’re not going to talk about the merits (or lack thereof) for this relegation here, but in upcoming sections we’ll discuss moving away from thinking in classes and instead thinking in the sequence abstraction, maps, protocols, and types. Having said all that, it need hardly be mentioned that maps should be used to store named values. In this section, we’ll talk about the different types of maps and the trade-offs surrounding each.

5.6.1. Hash maps

Arguably, the most ubiquitous[16] form of map found in Clojure programs is the hash map, which provides an unsorted key/value associative structure. In addition to the literal syntax touched on in chapter 2, hash maps can be created using the hash-map function, which likewise takes alternating key/value pairs, with or without commas:

16 Although with the pervasiveness of the map literal, the ubiquity may instead fall to the array map.

(hash-map :a 1, :b 2, :c 3, :d 4, :e 5)

;=> {:a 1, :c 3, :b 2, :d 4, :e 5}

Clojure hash maps support heterogeneous keys, meaning they can be of any type and each key can be of a differing type, as this code shows:

(let [m {:a 1, 1 :b, [1 2 3] "4 5 6"}]

[(get m :a) (get m [1 2 3])])

;=> [1 "4 5 6"]

As we mentioned at the beginning of this chapter, many of Clojure’s collection types can be used as functions, and in the case of maps they’re functions of their keys. Using maps this way acts the same as the use of the get function in the previous code example, as shown when building a vector of two elements:

(let [m {:a 1, 1 :b, [1 2 3] "4 5 6"}]

[(m :a) (m [1 2 3])])

;=> [1 "4 5 6"]

Providing a map to the seq function returns a sequence of map entries:

(seq {:a 1, :b 2})

;=> ([:a 1] [:b 2])

This sequence appears to be composed of the sets of key/value pairs contained in vectors, and for all practical purposes it should be treated as such. In fact, you can create a new hash map using this precise structure:

(into {} [[:a 1] [:b 2]])

;=> {:a 1, :b 2}

Even if your embedded pairs aren’t vectors, they can be made to be for building a new map:

(into {} (map vec '[(:a 1) (:b 2)]))

;=> {:a 1, :b 2}

Your pairs don’t have to be explicitly grouped, because you can use apply to create a hash map given that the key/value pairs are laid out in a sequence consecutively:

(apply hash-map [:a 1 :b 2])

;=> {:a 1, :b 2}

You can also use apply this way with sorted-map and array-map. Another fun way to build a map is to use zipmap to “zip” together two sequences, the first of which contains the desired keys and the second their corresponding values:

(zipmap [:a :b] [1 2])

;=> {:b 2, :a 1}

The use of zipmap illustrates nicely the final property of map collections. Hash maps in Clojure have no order guarantees. If you do require ordering, then you should use sorted maps, discussed next.

5.6.2. Keeping your keys in order with sorted maps

It’s impossible to rely on a specific ordering of the key/value pairs for a standard Clojure map, because there are no order guarantees. Using the sorted-map and sorted-map-by functions, you can construct maps with order assurances. By default, the function sorted-map builds a map sorted by the comparison of its keys:

(sorted-map :thx 1138 :r2d 2)

;=> {:r2d 2, :thx 1138}

You may require an alternative key ordering, or perhaps an ordering for keys that aren’t easily comparable. In these cases, you must use sorted-map-by, which takes an additional comparison function:[17]

17 Note that simple Boolean functions like > can be used as comparison functions.

(sorted-map "bac" 2 "abc" 9)

;=> {"abc" 9, "bac" 2}

(sorted-map-by #(compare (subs %1 1) (subs %2 1)) "bac" 2 "abc" 9)

;=> {"bac" 2, "abc" 9}

This means sorted maps don’t generally support heterogeneous keys the same as hash maps, although it depends on the comparison function provided. For example, the preceding examples assume all keys are strings. The default sorted-map comparison function compare supports maps whose keys are all mutually comparable with each other. Attempts to use keys that aren’t supported by whichever comparison function you’re using will generally result in a cast exception:

(sorted-map :a 1, "b" 2)

;=> java.lang.ClassCastException: clojure.lang.Keyword cannot be cast to

java.lang.String

One remarkable feature supported by sorted maps (and also sorted sets) is the ability to jump efficiently to a particular key and walk forward or backward from there through the collection. This is done with the subseq and rsubseq functions for forward and backward, respectively. Even if you don’t know the exact key you want, these functions can be used to “round up” the next closest key that exists.

Another way that sorted maps and hash maps differ is in their handling of numeric keys. A number of a given magnitude can be represented by many different types; for example, 42 can be a long, an int, a float, and so on. Hash maps treat each of these different objects as different, whereas a sorted map treats them as the same. You can see the contrast in this example, where the hash map keeps both keys but the sorted map keeps just one:

(assoc {1 :int} 1.0 :float)

;=> {1.0 :float, 1 :int}

(assoc (sorted-map 1 :int) 1.0 :float)

;=> {1 :float}

This is because the comparison function used by the sorted map determines order by equality, and if two keys compare as equal, only one is kept. This applies to comparison functions provided to sorted-map-by as well as the default comparator shown previously.

Sorted maps otherwise work just like hash maps and can be used interchangeably. You should use sorted maps if you need to specify or guarantee a specific key ordering. On the other hand, if you need to maintain insertion ordering, then the use of array maps is required, as you’ll see.

5.6.3. Keeping your insertions in order with array maps

If you hope to perform an action under the assumption that a given map is insertion ordered, then you’re setting yourself up for disappointment. But you might already know that Clojure provides a special map, called an array map, that ensures insertion ordering:

(seq (hash-map :a 1, :b 2, :c 3)) ;=> ([:a 1] [:c 3] [:b 2]) (seq (array-map :a 1, :b 2, :c 3)) ;=> ([:a 1] [:b 2] [:c 3])

When insertion order is important, you should explicitly use an array map. Array maps can be populated quickly by ignoring the form of the key/value pairs and blindly copying them into place. For structures sized below a certain count, the cost associated with map lookup bridges the gap between a linear search through an equally sized array or list. That’s not to say that the map will be slower; instead, it allows the map and linear implementations to be comparable. Sometimes your best choice for a map is not a map at all, and like most things in life, there are trade-offs. Fortunately, Clojure takes care of these considerations for you by adjusting the concrete implementations behind the scenes as the size of the map increases. The precise types in play aren’t important, because Clojure is careful to document its promises and to leave undefined aspects subject to change and/or improvement. It’s usually a bad idea to build your programs around concrete types, and it’s always bad to build around undocumented behaviors. Clojure handles the underlying efficiency considerations so you don’t have to. But be aware that if ordering is important, you should avoid operations that inadvertently change the underlying map implementation from an array map.

We’ve covered the basics of Clojure maps in this section, including common usage and construction techniques. Clojure maps, minus some implementation details, shouldn’t be surprising to anyone. It’ll take a while to grow accustomed to dealing with immutable maps, but in time even this nuance will become second nature.

Now that we’ve looked at Clojure’s primary collection types and their differences in detail, we’ll take some time to work through a simple case study. This case study, creating a function named pos, will illustrate the thought processes you may consider on your way toward designing an API built on the principles of the sequence abstraction.

5.7. Putting it all together: finding the position of items in a sequence

We sometimes underestimate the influence of little things.

Charles W. Chesnutt[18]

18 “Obliterating the Color Line,” Cleveland World, October 23, 1901.

The case study for this chapter is to design and implement a simple function to locate the positional index of an element in a sequence.[19] We’re going to pool together much of the knowledge gained in this chapter in order to illustrate the steps you might take in designing, writing, and ultimately optimizing a Clojure collection function. Of course, we’ll work against the sequence abstraction and therefore design the solution accordingly.

19 Stuart Halloway and Aaron Bedra describe a similar function, index-of-any, in their book Programming Clojure (Pragmatic Bookshelf, 2012) that views the problem largely through the lens of reduced complexity. We like their example and the example in this section because they’re simple yet powerful and nicely illustrative of the way that Clojure functions should be written.

The function, named pos, must

- Work on any collection type, returning indices corresponding to some value

- Return a numerical index for sequential collections or associated key for maps and sets

- Otherwise return nil

Now that we’ve outlined the basic requirements of pos, we’ll run through a few implementations, discussing each along the way toward a Clojure-optimal version.

5.7.1. Implementation

If we were to address each of the requirements for pos literally and directly, we might come up with a function that looks like the following listing.

Listing 5.2. First cut of the position function

Pretty hideous, right? We think so too, but let’s at least check whether it works as desired:

(pos 3 [:a 1 :b 2 :c 3 :d 4])

;;=> 5

(pos :foo [:a 1 :b 2 :c 3 :d 4])

;;=> nil

(pos 3 {:a 1 :b 2 :c 3 :d 4})

;;=> :c

(pos 3 ":a 1 :b 2 :c 3 :d 4")

;;=> 13

Apart from being overly complicated, it’d likely be more useful if it instead returned a sequence of all the indices matching the item, so let’s add that to the requirements. But we’ve built a heavy load with the first cut at pos and should probably step back a moment to reflect. First, it’s probably the wrong approach to handle map types and other sequence types differently. The use of the predicate map? to detect the type of the passed collection is incredibly constraining, in that it forces different collections to be processed differently, and sets are not handled correctly at all. That’s not to say the use of type-based predicates is strictly prohibited, only that you should try to favor more generic algorithms or at least minimize their usage.

As chance has it, the exact nature of the problem demands that we view collections as a set of values paired with a given index, be it explicit in the case of maps or implicit in the case of other sequences’ positional information. Therefore, imagine how easy this problem would be if all collections were laid out as sequences of pairs ([index1 value1] [index2 value2] ... [indexn valuen]). Well, there’s no reason why they can’t be, as shown next:

(defn index [coll]

(cond

(map? coll) (seq coll)

(set? coll) (map vector coll coll)

:else (map vector (iterate inc 0) coll)))

This simple function[20] can generate a uniform representation for indexed collections:

20 Clojure has a core function, keep-indexed, that works similarly but doesn’t implicitly build indices along equality partitions. For a vector, you could build the index as (keep-indexed #(-> [% %2]) [:a :b :c :d]).

(index [:a 1 :b 2 :c 3 :d 4])

;=> ([0 :a] [1 1] [2 :b] [3 2] [4 :c] [5 3] [6 :d] [7 4])

(index {:a 1 :b 2 :c 3 :d 4})

;=> ([:a 1] [:b 2] [:c 3] [:d 4])

(index #{:a 1 :b 2 :c 3 :d 4})

;=> ([1 1] [2 2] [3 3] [4 4] [:a :a] [:c :c] [:b :b] [:d :d])

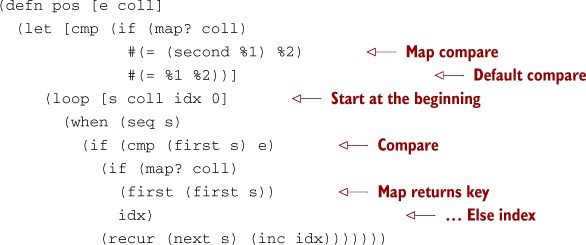

As shown, we’re still using type-based predicates, but we’ve raised the level of abstraction to the equality partitions in order to build contextually relevant indices. Now, the function for finding the positional indices for the desired value is trivial:

(defn pos [e coll]

(for [[i v] (index coll) :when (= e v)] i))

(pos 3 [:a 1 :b 2 :c 3 :d 4])

;=> (5)

(pos 3 {:a 1, :b 2, :c 3, :d 4})

;=> (:c)

(pos 3 [:a 3 :b 3 :c 3 :d 4])

;=> (1 3 5)

(pos 3 {:a 3, :b 3, :c 3, :d 4})

;=> (:a :c :b)

Much better! But there’s one more deficiency with the pos function from a Clojure perspective. Typically in Clojure it’s more useful to pass a predicate function in cases such as these, so that instead of pos determining raw equality, it can build its result along any dimension:

(pos #{3 4} {:a 1 :b 2 :c 3 :d 4})

;=> (:c :d)

(pos even? [2 3 6 7])

;=> (0 2)

We can modify pos only slightly to achieve the ideal level of flexibility.

Listing 5.3. Final version of pos

(defn pos [pred coll] (for [[i v] (index coll) :when (pred v)] i))

We’ve vastly simplified the original solution and generated two potentially useful functions (Martin 2002) in the process. By following some simple Clojure principles, we solved the original problem statement in a concise and elegant manner.

5.8. Summary

Clojure favors simplicity in the face of growing software complexity. If problems are easily solved by collection abstractions, then those abstractions should be used. Most problems can be modeled on such simple types, yet we continue to build monolithic class hierarchies in a fruitless race toward mirroring the “real world”—whatever that means. Perhaps it’s time to realize that we no longer need to layer self-imposed complexities on top of software solutions that are already inherently complex. Not only does Clojure provide the sequential, set, and map types useful for pulling ourselves from the doldrums of software complexity, but it’s also optimized for dealing with them.

Now that we’ve discussed each of these types in detail, let’s take a step back and talk about three important properties of Clojure’s collection types that until now we’ve only touched on lightly: immutability, persistence, and laziness.