Chapter 10. Mutation and concurrency

- Software transactional memory with multiversion concurrency control and snapshot isolation

- When to use refs

- When to use agents

- When to use atoms

- When to use locks

- Vars and dynamic binding

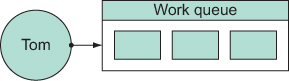

Clojure’s main tenet isn’t the facilitation of concurrency. Instead, Clojure at its core is concerned with the sane management of state, and facilitating concurrent programming naturally falls out of that. Concurrency refers to designing systems using independently executing, logic processes (Pike 2012). A simple concurrent design is a single thread named Tom inserting data into a work queue, as shown in figure 10.1.

Figure 10.1. Tom, alone

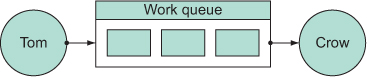

Of course, although this is technically a concurrent design, having Tom work alone doesn’t achieve a high degree of concurrency. But if we add another thread to the mix as in figure 10.2, then the concurrency in the design begins to become more apparent.

Figure 10.2. Tom inserts data into the work queue

Figure 10.2 is the “Hello World” equivalent for a concurrent message queue such as you might create with something like RabbitMQ (www.rabbitmq.com) or HornetQ (www.jboss.org/hornetq). That is, there is a “producer” named Tom that, whenever he can, inserts some data into the work queue. At the same time (concurrently), there is a “consumer” named Crow that takes available data off the work queue and does something with it. You can ignore the matter of what happens if Tom is slower than Crow or vice versa; the point is, the design illustrates that two entities are operating independently. It’s irrelevant whether these two entities actually operate at the same time, because concurrency is more about design than execution details. Sometimes partitioning a system into independent processes is useful regardless of the way that the concurrency is fulfilled at runtime.

In the final illustration of a concurrent design, shown in figure 10.3, you’ll notice that we’ve added another consumer process named Joel. We did this to make one further point: concurrent designs are all about independent processes and not necessarily about operating on related tasks. The details of the tasks are of course domain dependent, but in this design both Crow and Joel could be performing very different tasks on the data fetched from the work queue, or maybe the same task; it doesn’t matter. Concurrency is a design strategy only.

Figure 10.3. Tom inserts data into the work queue while Crow and Joel consume it at their leisure.

Java operates on a shared-state concurrency model built around juggling fine-grained locks that protect access to shared data. Even if you can keep all of your locks in order, rarely does such a strategy scale well, and even less frequently does it foster reusability. But Clojure’s state management is simpler to reason about and promotes reusability.

Clojure Aphorism

A tangled web of mutation means any change to your code potentially occurs in the large.

In this chapter, we’ll take the grand tour of Clojure’s tools for managing state changes, the so-called mutation primitives, and show how Clojure makes concurrent programming not only possible, but fun. Our journey will take us through Clojure’s four major mutable references: refs, agents, atoms, and vars. When possible and appropriate, we’ll also point out the Java facilities for concurrent programming (including locking) and provide information on the trade-offs involved in choosing them.

Concurrency refers to the execution of disparate tasks at roughly the same time, each sharing a common resource, but not necessarily performing related tasks. The results of concurrent tasks often affect the behavior of other concurrent tasks and therefore contain an element of nondeterminism. Parallelism refers to partitioning a task into multiple parts, each run at the same time. Typically, parallel tasks work toward an aggregate goal, and the result of one doesn’t affect the behavior of any other parallel task, thus maintaining determinacy. We’ll stick to a discussion of concurrency in this chapter, deferring parallelism until chapter 11.

Before we dive into the details of Clojure’s reference types, let’s start with a high-level overview of Clojure’s software transactional memory (STM). A notional definition of STM is that it’s a nonblocking way to coordinate concurrent updates between related mutable value cells (Herlihy 1993). We’ll discuss the details of Clojure’s STM implementation via its ref type in the next section.

10.1. When to use refs

A faster program that doesn’t work right is useless.

Simon Peyton-Jones[1]

1 In “Beautiful Concurrency,” Beautiful Code (O’Reilly, 2007).

In chapter 1, we defined three important terms:

- Time —The relative moments when events occur

- State —A snapshot of an entity’s properties at a moment in time

- Identity —The logical entity identified by a common stream of states occurring over time

These terms form the foundation for Clojure’s model of state management and mutation. In Clojure’s model, a program must accommodate the fact that when dealing with identities, it’s receiving a snapshot of its properties at a moment in time, not necessarily the most recent. Therefore, all decisions must be made in a continuum. This model is a natural one, because humans and animals alike make decisions based on their current knowledge of an ever-shifting world. Clojure provides some tools for dealing with identity semantics via, among others, its ref reference type, the change semantics of which are governed by Clojure’s software transactional memory; this ensures state consistency throughout the application timeline, delineated by a transaction. A transaction in Clojure is demarked by the dosync form and is used to build a set of the changeable data cells (Shavit 1997) embedded within that should all change together. Similar to a database transaction, the data set in the dosync block changes all together should the transaction succeed and not at all if it fails.

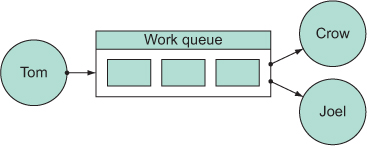

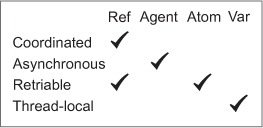

Clojure currently provides four different reference types to aide in concurrent programming: refs, agents, atoms, and vars. All but vars are considered shared references and allow for changes to be seen across threads of execution. The most important point to remember about choosing between reference types is that although their features sometimes overlap, each has an ideal use. The reference types and their primary characteristics are shown in figure 10.4.

Figure 10.4. Clojure’s four reference types are listed across the top, with their features listed down the left. Atoms are for lone synchronous objects. Agents are for asynchronous actions. Vars are for thread-local storage. Refs are for synchronously coordinating multiple objects.

The unique feature of refs is that they’re coordinated. This means reads and writes to multiple refs can be made in a way that guarantees no race conditions. Asynchronous means the request to update is queued to happen in another thread some time later, while the thread that made the request continues immediately. Retriable indicates that the work done to update a reference’s value is speculative and may have to be repeated. Finally, thread-local means thread safety is achieved by isolating changes to state to a single thread.

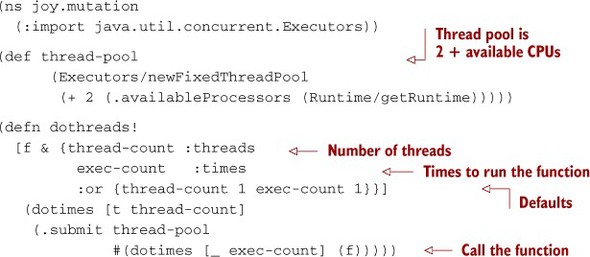

To illustrate some major points, we’ll use a function dothreads! that launches a given number of threads each running a function a number of times:

The dothreads! function is of limited utility—throwing a bunch of threads at a function to see if it breaks:

(dothreads! #(.print System/out "Hi ") :threads 2 :times 2) Hi Hi Hi Hi

Value access via the @ reader feature or the deref function provides a uniform client interface, regardless of the reference type used. On the other hand, the write mechanism associated with each reference type is unique by name and specific behavior, but similar in structure. Each referenced value is changed in accordance with the result[2] of a function. The result of this function becomes the new referenced value. Finally, all reference types provide consistency by allowing the association of a validator function via setvalidator that will be used as the final gatekeeper on any value change.

2 Except for ref-set on refs, reset! on atoms, and set! on vars.

10.1.1. Using refs for a mutable game board

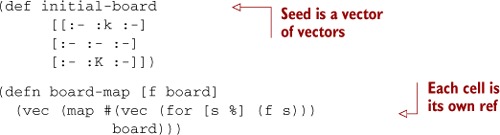

A ref is a reference type allowing synchronous, coordinated change to its contained value. What does this mean? By enforcing that any change to a ref’s value occurs in a transaction, Clojure can guarantee that change happens in a way that maintains a consistent view of the referenced value in all threads. But there’s a question as to what constitutes coordination. Let’s construct a simple vector of refs to represent a 3 × 3 chess board.

Listing 10.1. 3 x 3 chess board representation using Clojure refs

In a change from listing 1.5, the lowercase keyword represents a black king piece and the uppercase a white king piece. We’ve chosen to represent the mutable board as a 2D vector of refs (returned by board-map). There are other ways to represent a board, but we’ve chosen this because it’s nicely illustrative—the act of moving a piece requires a coordinated change in two reference squares, or else a change to one square in one thread could lead to another thread observing that square as occupied. Likewise, this problem requires synchronous change, because it would be no good for pieces of the same color to move consecutively. Refs are the only game in town to ensure that the necessary coordinated change occurs synchronously. Before we show refs in action, let’s define the auxiliary functions.

Listing 10.2. Setting up the refs example

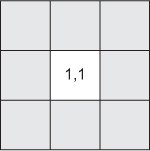

The to-move structure describes the order of moves, so in the base case, it states that the white king :K at y=2,x=1 moves before the black king :k at y=0,x=1. The example reuses the neighbors function from chapter 5 to build a legal-move generator for chess king pieces. You do this by using partial supplied with the kingly position deltas and the board size. An illustration of how the king-moves function calculates the legal moves is shown in figure 10.5.

Figure 10.5. The king neighbors of cell 1,1

The good-move? function states that a move to a square is legal only if the enemy isn’t already located there. The function choose-move destructures the to-move vector and chooses a good move from a shuffled sequence of legal moves. The choose-move function can be tested in isolation:

(reset-board!) (take 5 (repeatedly #(choose-move @to-move))) ;=> ([:K [1 0]] [:K [1 2]] [:K [1 1]] [:K [1 2]] [:K [1 0]])

And now let’s create a function to make a random move for the piece at the front of to-move, shown next.

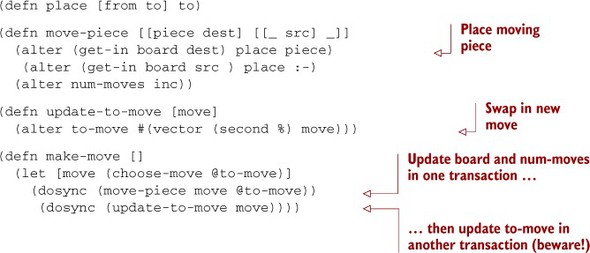

Listing 10.3. Using alter to update refs in a transaction

The make-move function calls alter four different times to update all the refs necessary to represent making a move. You pass the place function to alter in move-piece, which states “given a to piece and a from piece, always return the to piece.” With these functions in place, we reset the board and made a couple of moves:

(reset-board!) (make-move) ;=> [[:k [0 1]] [:K [2 0]]] (board-map deref board) ;=> [[:- :k :-] [:- :- :-] [:K :- :-]] (make-move) ;=> [[:K [2 0]] [:k [1 1]]] (board-map deref board) ;=> [[:- :- :-] [:- :k :-] [:K :- :-]]

This looks pretty good. The specific moves you see should be different, but as long as the white and black kings are taking turns making valid moves, you’re doing well. So why not push it a bit harder? Try running the same make-move function from 100 threads simultaneously:

(dothreads! make-move :threads 100 :times 100) (board-map deref board) ;=> [[:- :- :-] [:- :K :-] [:- :- :K]]

Clearly something has gone awry. Despite using refs for all of the mutable state, we ended up with two white kings on the board and lost the black king entirely. If you run this code on your own machine, you may see something slightly different (for example, two black kings), but in all likelihood what you see will be wrong. The reason lies in splitting the updates of the to and from refs into different transactions described by the two separate uses of dosync in make-move. Judging by its behavior, this is not correct; but to understand why requires an understanding of software transactional memory, starting with what exactly a transaction is.

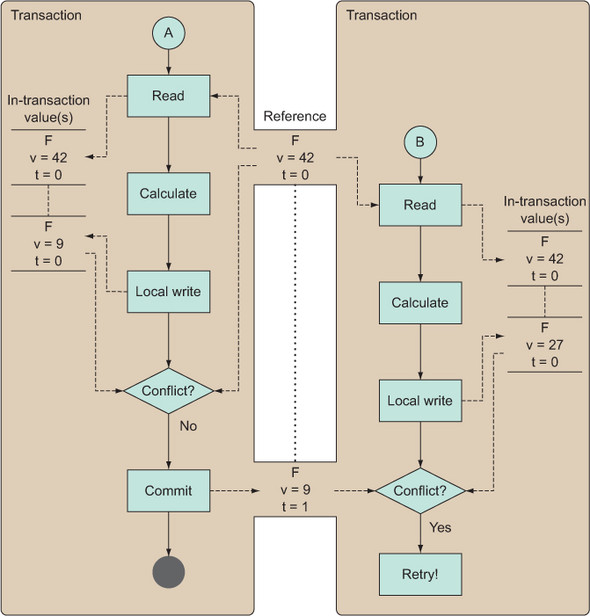

10.1.2. Transactions

Within the first few moments of using Clojure’s STM, you’ll notice something different than you may be accustomed to: no locks. Because there’s no need for ad hoc locking schemes when using STM, there’s no chance of deadlock. Likewise, Clojure’s STM doesn’t require the use of monitors and as a result is free from lost wakeup conditions. Behind the scenes, Clojure’s STM uses multiversion concurrency control (MVCC) to ensure snapshot isolation. In simpler terms, snapshot isolation means each transaction gets its own view of the data it’s interested in. This snapshot is made up of in-transaction reference values, forming the foundation of MVCC (Ullman 1988). As illustrated in figure 10.5, each transaction merrily chugs along making changes to in-transaction values only, oblivious to and ambivalent about other transactions. At the conclusion of the transaction, the local values are examined against the modification target for conflicts. An example of a simple possible conflict is if another transaction B committed a change to a target reference during the time that transaction A was working, thus causing A to retry. If no conflicts are found, then the in-transaction values are committed (set as the final value) and the target references are modified with their updated values. Another advantage that STM provides is that in the case of an exception during a transaction, the in-transaction values are thrown away and the exception is propagated outward. In the case of lock-based schemes, exceptions can complicate matters, because in most cases locks need to be released (and in some cases, in the correct order) before an exception can be safely propagated up the call stack.

Figure 10.5. Illustrating an STM retry: Clojure’s STM works much like a database.

Because each transaction has its own isolated snapshot, there’s no danger in retrying—the data is never modified until a successful commit occurs. STM transactions can easily nest without taking additional measures to facilitate composition. In languages that provide explicit locking for concurrency, matters of composability are often difficult, if not impossible. The reasons for this are far-reaching, and the mitigating forces (Goetz 2006) are complex, but the primary reasons tend to be because lock-based concurrency schemes often hinge on a secret incantation not explicitly understandable through the source itself: for example, the order in which to take and release a set of locks.

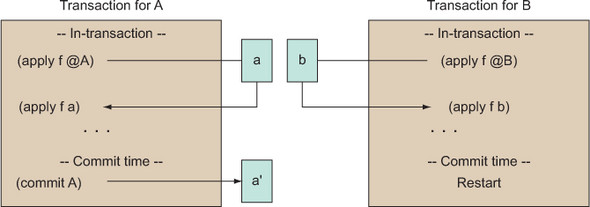

10.1.3. Embedded transactions

In systems that provide embedded transactions, it’s common for transactions to be nested, thus limiting the scope of restarts (Gray 1992). Embedding transactions in Clojure operate differently, as summarized in figure 10.6.

Figure 10.6. A restart in any of Clojure’s embedded transactions A, B, b, or C causes a restart in the entire subsuming transaction. This is unlike a fully embedded transaction system, where the subtransactions can be used to restrain the scope of restarts.

In some database systems, transactions can be used to limit the scope of a restart, as shown when transaction embedded.b restarts only as far back as its own scope. Clojure has only one transaction at a time per thread, thus causing all subtransactions to be subsumed into the larger transaction. Therefore, when a restart occurs in the (conceptual) subtransaction clojure.b, it causes a restart of the larger transaction. Although not shown, some transaction systems provide committal in each subtransaction; in Clojure, commit occurs only at the outermost larger transaction.

10.1.4. The things that STM makes easy

The phrase TANSTAAFL, meaning “There ain’t no such thing as a free lunch,” was popularized in the excellent sci-fi novel The Moon Is a Harsh Mistress (Heinlein 1966) and is an apt response to the view that STM is a panacea for concurrency complexities.

As you proceed through this chapter, we urge you to keep this in the back of your mind, because it’s important to realize that although Clojure facilitates concurrent programming, it doesn’t solve it for you. But there are a few things that Clojure’s STM implementation simplifies in solving difficult concurrent problems.

Consistent information

The STM allows you to perform arbitrary sets of read/write operations on arbitrary sets of data in a consistent (Papadimitriou 1986) way. By providing these assurances, the STM allows your programs to make decisions given overlapping subsets of information. Likewise, Clojure’s STM helps to solve the reporting problem: the problem of getting a consistent view of the world in the face of massive concurrent modification and reading, without manual locking.

No need for locks

In applications of any size, the inclusion of locks for managing concurrent access to shared data adds complexity. Many factors add to this complexity, but chief among them are the following:

- You can’t use locks without supplying extensive error handling. This is critical in avoiding orphaned locks (locks held by a thread that has died).

- Every application requires that you reinvent a whole new locking scheme.

- Locking schemes often require that you impose a total ordering that’s difficult to enforce in client code, frequently leading to a priority-inversion scenario.

Locking schemes are difficult to design correctly and become increasingly so as the number of locks grows. Clojure’s STM eliminates the need for locking and as a result eliminates dreaded deadlock scenarios. Clojure’s STM provides a story for managing state consistently. Adhering to this story will go a long way toward helping you solve software problems effectively. This is true even when concurrent programming isn’t a factor in your design.

ACI

The verbiage of database transactions includes the well-known acronym ACID, which refers to the properties ensuring transactional reliability. All of Clojure’s reference types provide the first three properties: atomicity, consistency, and isolation. The other, durability, is missing due to the fact that Clojure’s STM resides in memory and is therefore subject to data loss in the face of catastrophic system failure. Clojure relegates the problem of maintaining durability to the application developer instead of supplying common strategies by default: database persistence, external application logs, serialization, and so on.

10.1.5. Potential downsides

There are two potential problems inherent in STMs in general, which we’ll only touch on briefly here: write skew and live-lock.

Write skew

For the most part, you can write correct programs by putting all access and changes to references in appropriately scoped transactions. The one exception to this is write skew, which occurs in MVCC systems such as Clojure’s. Write skew can occur when one transaction uses the value of a reference to regulate its behavior but doesn’t write to that reference. At the same time, another transaction updates the value for that same reference. One way to avoid this is to do a “dummy write” in the first transaction, but Clojure provides a less costly solution: the ensure function. This scenario is rare in Clojure applications, but possible.

Live-lock

Live-lock refers to a set of transaction(s) that repeatedly restart one another. Clojure combats live-lock in a couple of ways. First, there are transaction-restart limits that raise an error when breached. Generally this occurs when the units of work within some number of transactions are too large. The second way that Clojure combats live-lock is called barging. Barging refers to some careful logic in the STM implementation that lets an older transaction continue running while younger transactions retry.

10.1.6. The things that make STM unhappy

Certain things can rarely (if ever) be safely performed in a transaction, and in this section we’ll talk briefly about each.

I/O

Any I/O operation in the body of a transaction is highly discouraged. Due to restarts, the embedded I/O could at best be rendered useless and could at worst cause great harm . For example, if you choose to embed log messages inside a transaction, then a new log entry will be created for every restart on said transaction. This circumstance is likely to make your log files hard to read and bloated. It’s advised that you employ the io! macro whenever performing I/O operations:

(io! (.println System/out "Haikeeba!")) ; Haikeeba!

When this same statement is used in a transaction, an exception is thrown:

(dosync (io! (.println System/out "Haikeeba!"))) ; java.lang.IllegalStateException: I/O in transaction

Although it may not be feasible to use io! in every circumstance, it’s a good idea to do so whenever possible.

Class instance mutation

Another thing you should avoid in transactions is object mutation. Unrestrained instance mutation often isn’t idempotent, meaning that running a set of mutating operations multiple times frequently displays different results.

Large transactions

Although the size of transactions is highly subjective, the general rule of thumb when partitioning units of work should always be get in and get out as quickly as possible.

It’s important to understand that transactions help to simplify the management of state, but you should strive to minimize their footprint in your code. The use of I/O and instance mutation is an essential part of many applications; it’s important to work to separate your programs into logical partitions, keeping I/O and its ilk on one side, and transaction processing and mutation on the other.

Fortunately, Clojure provides a powerful toolset for making the management of mutability sane, but none of the tools provide a shortcut to thinking. Multithreaded programming is a difficult problem, independent of specifics, and Clojure’s state-management tools won’t solve this problem magically. It was relying on this sense of magic that got us in trouble with the misbehaving game board example. Let’s see if we can fix the example transactions.

10.2. Refactoring with refs

In this section, we’ll discuss some refactorings that are possible for the chess board example. You’ll first fix some nagging problems with the example. Next, we’ll discuss how you can take advantage of commutativity (action order independence) in the design using Clojure’s commute function. Finally, we’ll talk about how to adjust a ref’s default settings to avoid excessive retries.

10.2.1. Fixing the game board example

Take another look at the make-move function that was misbehaving earlier:

(defn make-move []

(let [move (choose-move @to-move)]

(dosync (move-piece move @to-move))

(dosync (update-to-move move))))

The problem was that the board ended up in a state you wish to be impossible. This implies that the transaction boundaries you provided allow changes to be made independently that you wish to be dependent. Specifically, the two separate dosync forms mean that moving a piece on the board can happen independently of updating whose turn it is.

Being separated into two transactions means they’re (potentially) running on different timelines. Because board and to-move are dependent, their states must be coordinated into a single transaction, but you’ve broken that necessity with make-move. Therefore, somewhere along the line board was updated from two subsequent timelines where it was :K’s turn to move! As shown in figure 10.7, either transaction can commit or be restarted; but because the two refs were never in the same transaction, the occurrences of these conditions become staggered over time, leading to inconsistent values.

Figure 10.7. If refs A and B should be coordinated, then splitting their updates across different transactions is dangerous. Value a? is eventually committed to A, but the update for B never commits due to retry, and coordination is lost. Another error occurs if B’s change depends on A’s value and A and B are split across transactions. There are no guarantees that the dependent values refer to the same timeline.

In the following new version, make-move-v2, the alter function is still called four times, but now in a single dosync. Thus the from and to positions, as well as the to-move refs, are updated in a coordinated fashion:

A single run of make-move still looks okay:

(reset-board!) (make-move) ;=> [[:k [0 1]] [:K [2 0]]] (board-map deref board) ;=> [[:- :k :-] [:- :- :-] [:K :- :-]] @num-moves ;=> 1

You’ve successfully made a change to two board squares, the to-move structure, and num-moves using the uniform state-change model. By itself, this model of state change is compelling. The semantics are simple to understand: give a reference a function that determines how the value changes. This is the model of sane state change that Clojure preaches. But you can now throw a bunch of threads at this solution and still maintain consistency:

(dothreads! make-move-v2 :threads 100 :times 100) (board-map #(dosync (deref %)) board) ;=> [[:k :- :-] [:- :- :-] [:K :- :-]] @to-move ;=> [[:k [0 0]] [:K [2 0]]] @num-moves ;=> 10001

Figure 10.8 shows that at the time of the transaction, the in-transaction value of the to square is set to (apply place @SQUARE-REF PIECE). At the end of the transaction, the STM uses this in-transaction value as the commit value. If any other transaction updated any other coordinated ref before commit time, the entire transaction would be retried.

Figure 10.8. The in-transaction value 9 for the ref num-moves is retrieved in the body of the transaction and manipulated with the alter function inc. The resulting value 10 is eventually used for the committime value, unless a retry is required.

The alter function is not the only way to update the value stored in a ref. Let’s look at a couple others.

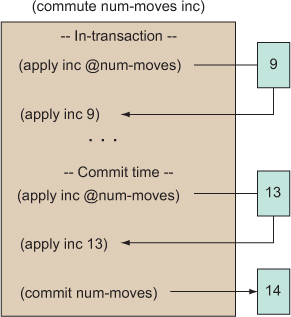

10.2.2. Commutative change with commute

Figure 10.8 showed that using alter can cause a transaction to retry if a ref it depends on is modified and committed while it’s running. But there may be circumstances where the value of a ref in a given transaction isn’t important to its completion semantics. For example, the num-moves ref is a simple counter, and surely its value at any given time is irrelevant for determining how it should be incremented. To handle these loose dependency circumstances, Clojure offers an operation named commute that takes a function to apply to a ref. Of particular note is that the function you give to commute is run at least twice during the course of a transaction to help increase the level of concurrency around the given reference. Increasing the concurrency in a system is good, right? Well, maybe. What if you were to change the move-piece function to use the commute function instead of alter?

(defn move-piece [[piece dest] [[_ src] _]] (commute (get-in board dest) place piece) (commute (get-in board src ) place :-) (commute num-moves inc)) (reset-board!) (dothreads! make-move-v2 :threads 100 :times 100) (board-map deref board) ;=> [[:K :- :-] [:- :- :-] [:- :- :k]] @to-move ;=> [[:K [0 0]] [:k [2 2]]]

Everything looks great! But you can’t assume the same thing will work for update-to-move:

(defn update-to-move [move] (commute to-move #(vector (second %) move))) (dothreads! make-move-v2 :threads 100 :times 100) (board-map #(dosync (deref %)) board) ;=> [[:- :- :-] [:- :K :-] [:- :- :K]] @to-move ;=> [[:K [2 2]] [:K [1 1]]]

Thanks to your rash decision, you’ve once again introduced inconsistency into the system. But why? The reason lies in the fact that the new update-to-move isn’t amenable to the semantics of the commute function. commute allows for more concurrency in the STM by devaluing in-transaction value disparity resulting from another transaction’s commit. In other words, figure 10.9 shows that the in-transaction value of a ref is initially set as when using alter, but the commit time value is reset just before commute commits.

Figure 10.9. The in-transaction value 9 in the nummoves ref is retrieved in the body of the transaction and manipulated with the commute function. But the commute function inc is again run at commit time with the current value 13 contained in the ref. The result of this action serves as the committed value 14.

By retrieving the most current value for a ref at the time of commit, the values committed might not be those corresponding to the in-transaction state. This leads to a condition of update reordering that the application must accommodate. Of course, this new function isn’t commutative, because vector doesn’t give the same answer if its argument order is switched.

Using commute is useful as long as the following conditions aren’t problematic:

- The value you see in-transaction may not be the value that gets committed at commit time.

- The function you give to commute will be run at least twice: once to compute the in-transaction value, and again to compute the commit value. It might be run any number of times.

Although you’ll incur a non-zero cost for running the change function twice, this cost is assumed to be small compared to the cost of tracking a changing reference for the duration of a transaction.

10.2.3. Vulgar change with ref-set

The function ref-set is different from alter and commute in that instead of changing a ref based on a function of its value, it does so given a raw value:

(dosync (ref-set to-move '[[:K [2 1]] [:k [0 1]]])) ;=> [[:K [2 1]] [:k [0 1]]]

In general, this sort of vulgar change should be avoided. But because the refs have become out of sync during your experiments with commute, you can be forgiven in using ref-set to fix it—just this once.

Snapshot isolation means that within a transaction, all enclosed ref states represent the same moment in time. Any ref value that you see in a transaction will never change unless you change it within that transaction. Your algorithms should be devised so that all you care about is that the values of the references haven’t changed before commit (unless your change function is commutative, as mentioned previously). If those values have changed, then the transaction retries, and you try again.

Earlier, we talked about write skew, a condition that occurs when you make decisions based on the in-transaction value of a ref that’s never written to, which is also changed at the same time. Avoiding write skew is accomplished using Clojure’s ensure function, which guarantees that a read-only ref isn’t modified by another thread. The make-move function isn’t subject to write skew because it has no invariants on read data and in fact never reads a ref that it doesn’t eventually write. This design is ideal because it allows other threads to calculate moves without having to stop them, while any given transaction does the same. But in your own applications, you may be confronted with a true read-invariant scenario, and it’s in such a scenario that ensure will help.

10.2.4. Refs under stress

After you’ve created your refs and written your transactions, and simple isolated tests are passing, you may still run into difficulties in larger integration tests because of how refs behave under stress from multiple transactions. As a rule of thumb, it’s best to avoid having both short- and long-running transactions interacting with the same ref. Clojure’s STM implementation usually compensates eventually regardless, but you’ll soon see some less-than-ideal consequences of ignoring this rule.

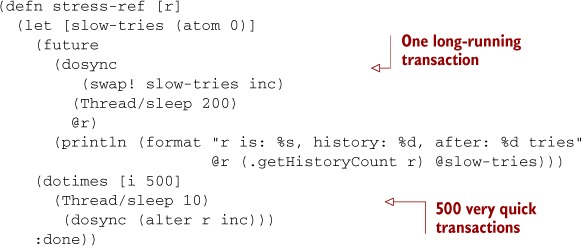

To demonstrate this problem, listing 10.4 shows a function named stress-ref designed specifically to over-stress a ref. It does this by starting a long-running or slow transaction in another thread, where work is simulated by a 200 ms sleep, but all it’s really doing is reading the ref in a transaction. This requires the STM to know of a stable value for the ref for the full 200 ms. Meanwhile, the main thread runs quick transactions 500 times in a row, each one incrementing the value in the ref and thereby frustrating the slow transaction’s attempts to see a stable value. The STM works to overcome this frustration by growing the history of values kept for the ref. But by default, this history is limited to 10 entries, and the perverse function can easily saturate that.

Listing 10.4. How to make a ref squirm

What happens when you run the stress-ref function? r is incremented all the way to 500 without the slow transaction ever succeeding:

(stress-ref (ref 0)) ;=> :done ; r is: 500, history: 10, after: 26 tries

You may see a slightly different number of tries, but the important detail is that the slow transaction is unable to successfully commit and print the value of r until the main thread has finished its frantic looping and returned :done. The ref’s history started at a default of 0 and grew to 10, but this was still insufficient.

Remember that the real problem here is mixing short- and long-running transactions on the same ref. But if this is truly unavoidable, Clojure allows you to create a ref with a more generous cap on the history size:

(stress-ref (ref 0 :max-history 30)) ; r is: 410, history: 20, after: 21 tries ;=> :done

Again, your numbers may be different, but this time the ref’s history grew sufficiently (reaching 20 in this run) to allow the slow transaction to finish first and report about r before all 500 quick transactions completed. In this run, only 410 had finished when the slow transaction committed.

But the slow transaction still had to be retried 20 times, with the history growing one step larger each time, before it was able to complete. If your slow transactions were doing real work instead of sleeping, this could represent a lot of wasted computing effort. If your tests or production environment reveal this type of situation and the underlying transaction size difference can’t be resolved, one final ref option can help. Because you can see that the history will likely need to be 20 anyway, you may as well start it closer to its goal:

(stress-ref (ref 0 :min-history 15 :max-history 30)) ; r is: 97, history: 19, after: 5 tries ;=> :done

This time the slow transaction finished before even 100 of the quick transactions had finished; and even though the history grew to roughly the same size, starting it at 15 meant the slow transaction retried only 4 times before succeeding.

The use of refs to guarantee coordinated change is generally simple for managing state in a synchronous fashion, and tuning with :min-history and :max-history is rarely required. But not all changes in your applications will require coordination, nor will they need to be synchronous. For these circumstances, Clojure also provides another reference type, the agent, that provides independent asynchronous changes. We’ll discuss agents next.

10.3. When to use agents

Like all Clojure reference types, an agent represents an identity, a specific thing whose value can change over time. Each agent has a queue to hold actions that need to be performed on its value, and each action produces a new value for the agent to hold and pass to the subsequent action. Thus the state of the agent advances through time, action after action, and by their nature only one action at a time can be operating on a given agent. Of course, other actions can be operating on other agents at the same time, each in its own thread.

You can queue an action on any agent by using send or send-off, the minor difference between which we’ll discuss later. Agents are integrated with STM transactions, and within a transaction any actions sent are held until the transaction commits or are thrown away if the transaction retries. Thus send and send-off are not considered side effects in the context of a dosync, because they handle retries correctly and gracefully.

Agents are created with the agent function:

(def joy (agent []))

That creates an agent with an initial value of an empty vector. Now you can send an action to it:

(send joy conj "First edition")

Here is its current value:

@joy ;; read as (deref joy) ;=> ["First edition"]

If you slow the action down a little, you can see the old value while the next action is still running. Start by defining a slow version of conj:

(defn slow-conj [coll item] (Thread/sleep 1000) (conj coll item))

As soon as you send an action to the agent, the REPL prints the current value of the agent, but slow-conj is still running so the value printed will not yet have changed:

(send joy slow-conj "Second edition") ;=> #<Agent@5efefc32: ["First edition"]>

But if you wait a second, a final call to deref reveals the consequence of slow-conj:

@joy ;=> ["First edition" "Second edition"]

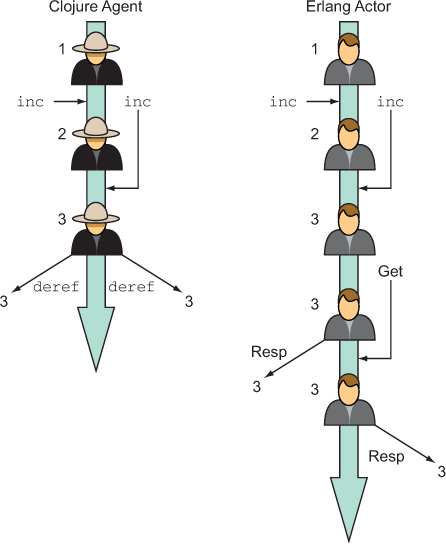

10.3.1. In-process vs. distributed concurrency models

Both Clojure and Erlang are designed (Armstrong 2007) specifically with concurrent programming in mind, and Erlang’s process[3] model is similar in some ways to Clojure agents, so it’s fair to briefly compare how they each approach the problem. Erlang takes a distributed, share-nothing (Armstrong 2007b) approach; Clojure instead promotes shared, immutable data. The key to Clojure’s success is the fact that its composite data structures are immutable, because immutable structures can be freely shared among disparate threads. Erlang’s composite data structures are also immutable, but because the communication model is distributed, the underlying theme is always one of dislocation. The implications of this are that all knowledge of the world under consideration is provided via messages. But with Clojure’s in-process model, data structures are always accessible directly, as illustrated in figure 10.10, whereas Erlang makes copies of the data sent back and forth between processes. This works well for Erlang and allows it to provide its fault-recovery guarantees, but many application domains can benefit from the shared-memory model provided by Clojure.

3 It’s interesting that popular opinion has tagged Erlang processes with the actor tag although the language implementers rarely, if ever, uses that term. Because the Erlang elite choose not to use that term, we’ll avoid doing so ... almost.

Figure 10.10. Clojure agents versus Erlang processes: each agent and process starts with the value 1. Both receive an inc request simultaneously but can process only one at a time, so more are queued. Requests to the process are queued until a response can be delivered, whereas any number of simultaneous derefs can be done on an agent. Despite what this illustration may suggest, an agent is not an actor with a hat on.

The second difference is that Erlang messages block on reception, opening up the possibility for deadlock. On the other hand, when interacting with Clojure agents, both sends and derefs proceed immediately and never block or wait on the agent. Clojure does have an await function that can be used to block a thread until a particular agent has processed a message, but this function is specifically disallowed in agent threads (and also STM transactions) in order to prevent accidentally creating this sort of deadlock.

The final difference lies in the fact that agents allow for arbitrary update functions, whereas Erlang processes are bound to static pattern-matched, message-handling routines. In other words, pattern matching couples the data and update logic, whereas agents decouple them. Erlang is an excellent language for solving the extremely difficult problem of distributed computation, but Clojure’s concurrency mechanisms service the in-process programming model more flexibly than Erlang allows (Clementson 2008).

10.3.2. Controlling I/O with an agent

One handy use for agents is to serialize access to a resource, such as a file or another I/O stream. For example, imagine you want to provide a way for multiple threads to report their progress on various tasks, giving each report a unique incrementing number.

Because the state you want to hold is known, you can go ahead and create the agent:

(def log-agent (agent 0))

Now you supply an action function to send to log-agent. All action functions take as their first argument the current state of the agent and can take any number of other arguments that are sent:

(defn do-log [msg-id message] (println msg-id ":" message) (inc msg-id))

Here msg-id is the state—the first time do-log is sent to the agent, msg-id is 0. The return value of the action function will be the new agent state, incrementing it to 1 after that first action.

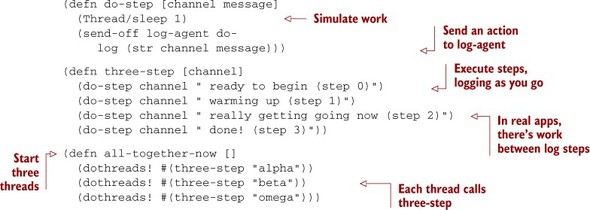

Now you need to do some work worth logging about, but for the example in the next listing, let’s pretend.

Listing 10.5. Controlling I/O with an agent

To see how log-agent correctly queues and serializes the messages, you need to start a few threads, each yammering away at the agent. You do this by calling (all-together-now), which generates output like the following:

0 : alpha ready to begin (step 0) 1 : omega ready to begin (step 0) 2 : beta ready to begin (step 0) 3 : alpha warming up (step 1) 4 : alpha really getting going now (step 2) 5 : omega warming up (step 1) 6 : alpha done! (step 3) 7 : omega really getting going now (step 2) 8 : omega done! (step 3) 9 : beta warming up (step 1) 10 : beta really getting going now (step 2) 11 : beta done! (step 3)

Your output is likely to look different, but what should be exactly the same are the stable, incrementing IDs assigned by the agent, even while the alpha, beta, and omega threads fight for control.

There are several other possible approaches to solving this problem, and it can be constructive to contrast them. The simplest alternative would be to hold a lock while printing and incrementing. In addition to the general risk of deadlocks when a complex program has multiple locks, there are some specific drawbacks even if this would be the only lock in play. Each client thread would block any time there was contention for the lock, and unless some fairness mechanism were used, there’d be at least a slight possibility of one or more threads being starved and never having an opportunity to print or proceed with their work. Because agent actions are queued and don’t block waiting for their action to be processed, neither of these is a concern.

Another option would be to use a queue to hold pending log messages. Client threads would be able to add messages to the queue without blocking and with adequate fairness. But you’d generally need to dedicate a thread to popping messages from the queue and printing them, or write code to handle starting and stopping the printing thread as needed. Why write such code when agents do this for you already? When no actions are queued, the agent in this example has no thread assigned to it.[4]

4 Using agents for logging might not be appropriate in all cases. For example, in probing scenarios, the number of log events could be extremely high. Coupling this volume with serialization could make the agent unable to catch its ever-growing queue.

Agents have other features that may or may not be useful in any given situation. One is that the current state of an agent can be observed cheaply. In the previous example, this would allow you to discover the ID of the next message to be written out, as follows:

@log-agent ;=> 11

Here the agent is idle—no actions are queued or running, but the same expression would work equally well if the agent were running.

Other features include the await and await-for functions, which allow a sending thread to block until all the actions it’s sent to a given set of agents have completed. This could be useful in this logging example if you wanted to be sure a particular message had been written out before proceeding:

(do-step "important: " "this must go out") (await log-agent)

The await-for function is similar but allows you to specify a number of milliseconds after which to time out, even if the queued actions still haven’t completed.

A final feature agents provide is that the set of actions you can send to an agent is open. You can tell an agent to do something that wasn’t even conceived of at the time the agent was designed. For example, you could tell the agent to skip ahead several IDs, and this action would be queued up along with all the log-message actions and executed by the agent when its turn came:

(send log-agent (fn [_] 1000)) (do-step "epsilon " "near miss") ; 1000 : epsilon near miss

This is another area in which Clojure allows you to extend your design on the fly instead of requiring recompiling or even restarting your app. If you’re paying attention, you might wonder why the last example uses send rather than send-off.

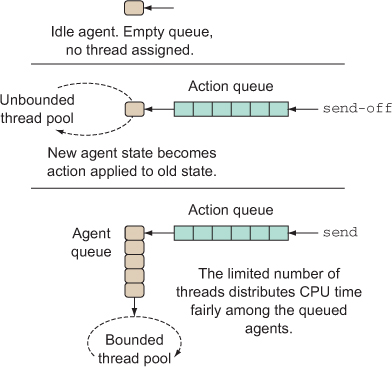

10.3.3. The difference between send and send-off

You can use either send or send-off with any agent. When you use send-off, as in most of the examples so far, only a single action queue is involved: the one managed by the individual agent. Any time the agent has a send-off action queued, it has a thread assigned to it, working through the queue. With send, there’s a second queue: actions still go into the agent’s queue, but then the agent itself queues up waiting for a thread from a fixed-sized pool of threads. The size of this fixed pool is based on the number of processors the JVM is running on, so it’s a bad idea to use send with any actions that might block, tying up one of the limited number of threads. These differences are illustrated in figure 10.11.

Figure 10.11. When an agent is idle, no CPU resources are being consumed. Each action is sent to an agent using either send or send-off, which determines which thread pool is used to dequeue and apply the action. Because actions queued with send are applied by a limited thread pool, the agents queue up for access to these threads—a constraint that doesn’t apply to actions queued with send-off.

You can make this scenario play out if you make a gaggle of agents and send them actions that sleep for a moment. Here’s a little function that does this, using whichever send function you specify, and then waits for all the actions to complete:

(defn exercise-agents [send-fn]

(let [agents (map #(agent %) (range 10))]

(doseq [a agents]

(send-fn a (fn [_] (Thread/sleep 1000))))

(doseq [a agents]

(await a))))

If you use send-off, all the agents begin their one-second wait more or less simultaneously, each in its own thread. The entire sequence of them completes in slightly over one second:

(time (exercise-agents send-off)) ; "Elapsed time: 1008.771296 msecs"

Now we can demonstrate why it’s a bad idea to mix send with actions that block:

(time (exercise-agents send)) ; "Elapsed time: 3001.555086 msecs"

The exact elapsed time you see will depend on the number of processors you have; but if you have fewer than eight, this example will take at least two seconds to complete. The threads in the fixed-size pool are clogged up waiting for sleep to finish, so the other agents queue up waiting for a free thread. Because clearly the computer could complete all 10 actions in about one second using send-off, using send is a bad idea.

So that’s it: send is for actions that stay busy using the processor and not blocking on I/O or other threads, whereas send-off is for actions that might block, sleep, or otherwise tie up the thread. This is why the earlier example used send-off for the threads that printed log lines and send for the one that did no I/O at all.

10.3.4. Error handling

You’ve been fortunate so far—none of these agent actions have thrown an exception. But real life is rarely so kind. Most of the other reference types are synchronous, and so exceptions thrown while updating their state bubble up the call stack in a normal way, to be caught (or not) with a regular try/catch in your application. Because agent actions run in other threads after the sending thread has moved on, you need a different mechanism for handling exceptions that are thrown by agent actions. As of Clojure 1.2, you can choose between two different error-handling modes for each agent: :continue and :fail.

:fail mode

By default, new agents start out using the :fail mode, where an exception thrown by an agent’s action is captured by the agent and held so that you can see it later. Meanwhile, the agent is considered failed or stopped and stops processing its action queue—all the queued actions have to wait patiently until someone clears up the agent’s error.

One common mistake when dealing with agents is to forget that your action function must take at least one argument for the agent’s current state. For example, you might try to reset the log-agent’s current message ID like this:

(send log-agent (fn [] 2000)) ; incorrect @log-agent ;=> 1001

At first glance it looks like the action you sent had no effect or perhaps hasn’t been applied yet. But you’d wait in vain for that agent to do anything ever again without intervention, because it’s stopped. One way to determine this is with the agent-error function:

(agent-error log-agent) ;=> #<IllegalArgumentException java.lang.IllegalArgumentException: ; Wrong number of args passed to: user$eval--509$fn>

This returns the error of a stopped agent, or nil if it’s still running fine. Another way to see whether an agent is stopped is to try to send another action to it:

(send log-agent (fn [_] 3000)) ; java.lang.RuntimeException: Agent is failed, needs restart

Even though this action would have worked fine, the agent has failed, and so no further sends are allowed. The state of log-agent remains unchanged:

@log-agent ;=> 1001

In order to get the agent back into working order, you need to restart it:

(restart-agent log-agent 2500 :clear-actions true) ;=> 2500

This resets the value of log-agent to 2,500 and deletes all those actions patiently waiting in their queue. If you hadn’t included the :clear-actions true option, those actions (not including the one that caused the failure) would have survived, and the agent would have continued processing them. Either way, the agent is now in good working order again, and you can again send and send-off to it:

(send-off log-agent do-log "The agent, it lives!") ; 2500 : The agent, it lives! ;=> #<Agent@72898540: 2500>

Note that restart-agent only makes sense and thus is allowed only when the agent has failed. If it hasn’t failed, any attempt to restart it throws an exception in the thread making the attempt, and the agent is left undisturbed:

(restart-agent log-agent 2500 :clear-actions true) ;=> java.lang.RuntimeException: Agent does not need a restart

This mode is perhaps most appropriate for manual intervention. Agents that normally don’t have errors but end up failing in a running system can use :fail mode to keep from doing anything too bad until a human can take things in hand, check to see what happened, choose an appropriate new state for the agent, and restart it as shown here.

:continue mode

The other error mode that agents currently support is :continue, where any action that throws an exception is skipped and the agent proceeds to the next queued action (if any). This is most useful when combined with an error handler—if you specify an :error-handler when you create an agent, that agent’s error mode defaults to :continue. The agent calls the error handler when an action throws an exception and doesn’t proceed to the next action until the handler returns. This gives the handler a chance to report the error in some appropriate way. For example, you could have log-agent handle faulty actions by logging the attempt:

(defn handle-log-error [the-agent the-err] (println "An action sent to the log-agent threw " the-err)) (set-error-handler! log-agent handle-log-error) (set-error-mode! log-agent :continue)

With the error mode and handler set up, sending faulty actions does cause reports to be printed as you want:

(send log-agent (fn [x] (/ x 0))) ; incorrect

; An action sent to the log-

agent threw java.lang.ArithmeticException: Divide by zero

;=> #<Agent@66200db9: 2501>

(send log-agent (fn [] 0)) ; also incorrect

; An action sent to the log-agent threw

; java.lang.IllegalArgumentException:

; Wrong number of args passed to: user$eval--820$fn

;=> #<Agent@66200db9: 2501>

And the agent stays in good shape, always ready for new actions to be sent:

(send-off log-agent do-log "Stayin' alive, stayin' alive...") ; 2501 : Stayin' alive, stayin' alive...

Note that error handlers can’t change the state of the agent (the one in the example keeps its current message ID of 2501 throughout the preceding tests). Error handlers are also supported in the :fail error mode, but handlers can’t call restart-agent and so are less often useful for :fail than they are for the :continue error mode.

10.3.5. When not to use agents

It can be tempting to repurpose agents for any situation requiring the spawning of new threads. Their succinct syntax and Clojurey feel often make this temptation strong. But although agents perform beautifully when each one is representing a real identity in your application, they start to show weaknesses when used as a sort of “green thread” abstraction. In cases where you need a bunch of worker threads banging away on some work, or you have a specific long-running thread polling or blocking on events, or any other kind of situation where it doesn’t seem useful that the agent maintain a value, you can usually find a better mechanism than agents. In these cases, there’s every reason to consider using a Java Thread directly, or a Java executor (as you did with dothreads!) to manage a pool of threads, or in some cases perhaps a Clojure future (discussed in section 11.1).

Another common temptation is to use agents when you need state held but you don’t want the sending thread to proceed until the agent action you sent is complete. This can be done by using await, but it’s another form of abuse that should be avoided. You’re not allowed to use await in an agent’s action, so as you try to use this technique in more and more contexts, you’re likely to run into a situation where it won’t work. But in general, there’s probably a reference type that will do a better job of behaving the way you want. Because this is essentially an attempt to use agents as if they were synchronous, you may have more success with one of the other shared synchronous types. In particular, atoms are shared and uncoordinated like agents, but they’re synchronous and so may fit better. Another alternative would be a normal lock, as discussed in section 10.5.

10.4. When to use atoms

Atoms are like refs in that they’re synchronous but are like agents in that they’re independent (uncoordinated). An atom may seem at first glance similar to a variable, but as we proceed you’ll see that any similarities are at best superficial. The use cases for atoms are similar to those of compare-and-swap (CAS) spinning operations (keep checking for a value in a loop). Anywhere you might want to atomically compute a value given an existing value and swap in the new value, an atom will suffice. Atom updates occur locally to the calling thread, and execution continues after the atom value has been changed. If another thread B changes the value in an atom before thread A is successful, then A retries. But these retries are spin-loop and don’t occur in the STM, and thus atom changes can’t be coordinated with changes to other reference types. You should take care when embedding changes to atoms in Clojure’s transactions because as you know, transactions can potentially be retried numerous times. Once an atom’s value is set, it’s set, and it doesn’t roll back when a transaction is retried; so in effect, this should be viewed as a side effect. Therefore, use atoms in transactions only when you’re certain that an attempt to update its value, performed numerous times, is idempotent.

Aside from the normal use of @ and deref to query an atom’s value, you can also use the mutating functions swap!, compare-and-set!, and reset!.

10.4.1. Sharing across threads

As we mentioned, atoms are thread-safe and can be used when you require a lightweight mutable reference to be shared across threads. A simple case is one of a globally accessible incrementing timer created using the atom function:[5]

5 In this case, the use of a java.util.concurrent.atomic.AtomicInteger is a valid choice also.

(def ^:dynamic *time* (atom 0)) (defn tick [] (swap! *time* inc)) (dothreads! tick :threads 1000 :times 100) @*time* ;=> 100000

As shown via the use of dothreads!, Atoms are safe to use across threads.

10.4.2. Using atoms in transactions

Just because we said that atoms should be used carefully in transactions, that’s not to say they can never be used that way. In fact, the use of an atom as the reference holding a function’s memoization cache is idempotent on update.

Note

Memoization is a way for a function to store calculated values in a cache so that multiple calls to the function can retrieve previously calculated results from the cache instead of performing potentially expensive calculations every time. Clojure provides a core function memoize that can be used on any referentially transparent function.

Individual requirements from memoization are highly specific to the situation, and a generic approach isn’t always the appropriate solution for every problem. We’ll discuss personalized memoization strategies in section 15.4.1, but for now we’ll use an illustrative example appropriate for atom usage.

Atomic memoization

The core memoize function is great for creating simple function caches, but it has some limitations. First, it doesn’t allow for custom caching and expiration strategies. Additionally, memoize doesn’t allow you to manipulate the cache for the purposes of clearing it in part or wholesale. Therefore, let’s create a function manipulable-memoize that lets you get at the cache and perform operations on it directly. Throughout the book, we’ve mentioned Clojure’s metadata facility, and for this example it will come in handy. As the following listing shows, you can take in the function to be memoized and attach some metadata with an atom containing the cache itself for later manipulation.

Listing 10.6. Resettable memoize function

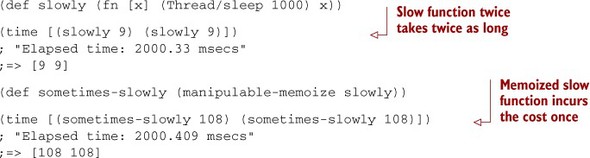

This example slightly modifies the core memoize function to attach the atom to the function being memoized. You can now observe manipulable-memoize in action:

The call to slowly is always ... well ... slow, as you’d expect. But the call to sometimes-slowly is only slow on the first call given a certain argument. This too is as you’d expect. Now you can inspect sometimes-slowly’s cache and perform some operations on it:

(meta sometimes-slowly)

;=> {:cache #<Atom@e4245: {(108) 108}>}

(let [cache (:cache (meta sometimes-slowly))]

(swap! cache dissoc '(108)))

;=> {}

You may wonder why you use swap! to dissoc the cached argument 108 instead of using (reset! cache {}). There are certainly valid use cases for the wholesale reset of an atom’s value, and this case is arguably one. But it’s good practice to set reference values via the application of a function rather than the in-place value setting. This way, you can be more selective about the value manipulations being performed. Having said that, here are the consequences of your actions:

(meta sometimes-slowly)

;=> {:cache #<Atom@e4245: {}>}

(time [(sometimes-slowly 108) (sometimes-slowly 108)])

; "Elapsed time: 1000.3 msecs"

;=> [108 108]

And yes, you were able to remove the cached argument value 108 using the metadata map attached to the function sometimes-slowly. There are better ways than this to allow for pointed cache removal, but for now you can take heart in that by using an atom, you’ve allowed for the local mutation of a reference in a thread-safe way. Additionally, because of the nature of memoization, you can use these memoized functions in a transaction without ill effect. But if you attempt to remove values from a memoization cache, it may not work as you expect. That is, depending on the interleaving of your removal and any restarts, the value(s) you remove might be reinserted the next time through the restart. Even this condition is agreeable, though, if your only concern is reducing total cache size.[6]

6 The discussion of memoization in this section has grown into an official Clojure contrib library, available at https://github.com/clojure/core.memoize.

10.5. When to use locks

Clojure’s reference types and parallel primitives cover a vast array of use cases. Additionally, Java’s rich set of concurrency classes found in the java.util.concurrent package is readily available. But even with this arsenal of tools at your disposal, there still may be circumstances where explicit locking is the only option available, the common case being the modification of arrays concurrently. Let’s start with a simple protocol to describe a concurrent, mutable, safe array that holds an internal array instance, allowing you to access it or mutate it safely. A naive (and incorrect) implementation is shown in the following listing.

Listing 10.7. Simple SafeArray protocol

You use the :refer-clojure namespace directive to :exclude the array and sequence functions that the SafeArray protocol overrides. You do this not only because it’s important to know how to use :refer-clojure, but also because you’re changing the semantics of aset to take a mutating function as its last argument instead of a raw value. You then use the :require directive to alias the Clojure namespace as clj, thus avoiding the need to use the fully qualified function names a la clojure.core/aget.

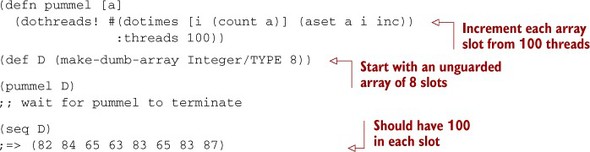

The dumb array created by make-dumb-array is stored in a closure created by reify, and unguarded access is provided without concern for concurrent matters. Using this implementation across threads is disastrous, as you can see:

This is very wrong—100 threads incrementing concurrently should result in 100 for each array slot. To add insult to injury, Clojure doesn’t throw a Concurrent-ModificationException as you might expect, but instead silently goes along doing bad things. Next, we’ll talk a little about locking and provide an alternate implementation for SafeArray using locking primitives.

10.5.1. Safe mutation through locking

Currently, the only way to safely modify and see consistent values for a mutable object (such as an array or a class instance) across threads in Clojure is through locking.

References around evil mutable things

Wrapping a mutable object in a Clojure reference type provides absolutely no guarantees for safe concurrent modification. Doing this will at best explode immediately or, worse, provide inaccurate results.

If at all possible, locking should be avoided; but for those times when it’s unavoidable, the locking macro will help. The locking macro takes a single parameter acting as the locking monitor and a body that executes in the monitor context. Any writes and reads to the monitor object are thread safe, and as a bonus the monitor is always released at the end of the block.[7] One of the major complexities in concurrent programming using locks is that all errors must be handled fully and appropriately; otherwise you risk orphaned locks, and they spell deadlock. But the locking macro always releases the lock, even in the face of exceptions.

7 The locking macro works much like a Java synchronized block.

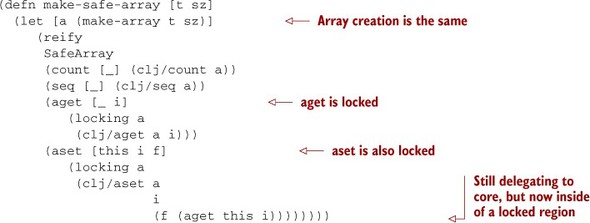

Listing 10.8. Implementating SafeArray using the locking macro

You use the locking macro on both the aget and aset functions so that they can maintain consistency. Because aset calls aget, the locking macro is called twice. This isn’t a problem because locking is reentrant, or able to be called multiple times in the same thread. Typically, you’d have to manage the releasing of a reentrant locking mechanism to match the number of times called, but fortunately locking manages that for you. Here is a use of make-safe-array:

(def A (make-safe-array Integer/TYPE 8)) (pummel A) ;; wait for pummel to terminate (seq A) ;;=> (100 100 100 100 100 100 100 100)

As shown, using locks on the read and write sides of the SafeArray protocol implementation ensures consistency in updates across multiple threads. The locking macro is the simplest way to perform primitive locking in Clojure. But the implementation of make-safe-array is coarse in that the locks used are guarding the entire array. Any readers or writers wishing to access or update any slot in the array must wait their turn, a bottleneck known as contention. If you need finer-grained locking, the locking facilities provided by Java will help you gain more control, as we discuss next.

10.5.2. Using Java’s explicit locks

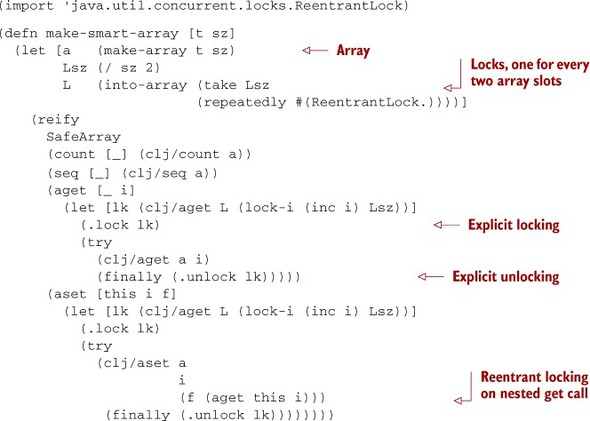

Java provides a set of explicit locks in the java.util.concurrent.locks package. One such lock is provided by the java.util.concurrent.locks.ReentrantLock class. You can create a new type of smart array that provides finer-grained locking on a per-slot basis. Rather than using one lock per array element, you can use a small pool of locks to conserve resources. To accomplish this, first let’s create a useful function called lock-i:

![]()

The lock-i function has one purpose: to calculate a lock number based solely on the array index in question and the total size of the target array. A simple way to stripe an array is to use the classic index modulo array_size formula. The function that uses lock-i is shown next.

Listing 10.9. Implementing SafeArray using ReentrantLock

The first point of note is that you use a technique (simplified for clarity) called lock striping (Herlihy 2008) to reduce the contention of guarding the array as a whole using locking. The target array a’s slots are guarded by a number of locks equal to half its size, each chosen using the simple formula (mod target-index num-locks). This scheme allows readers and writers to (potentially) act independently when accessing different array slots. It’s crucial that you close over the lock instance array L, because for explicit locks to work, each access must lock and unlock the same instance. Additionally, you call the .unlock method in the body of a finally expression, because failing to do so is a recipe for disaster. Unlike the locking macro, the ReentrantLock class doesn’t manage lock release automatically. Observe the following smart array in action:

(def S (make-smart-array Integer/TYPE 8)) (pummel S) ;; wait for pummel to terminate (seq S) ;;=> (100 100 100 100 100 100 100 100)

One flaw of the make-smart-array function is that it uses the same locks for readers and writers. But you can allow for more concurrency if you enable some number of readers to access array slots without blocking at all by using the java.util.concurrent .locks.ReentrantReadWriteLock class. The ReentrantReadWriteLock class holds two lock instances, one for reads and one for writes. You won’t take advantage of that fact here; but if you choose to do so, you can use the implementation of make-smart-array as a guide.

Using the various locking mechanisms, you can guarantee consistency across threads for mutable objects. But as we showed with explicit locks, there’s an expected incantation to unlocking that must be strictly observed. Although not necessarily complex in the SafeArray implementations, the conceptual baggage incurred in the semantics of the explicit-locking scheme doesn’t scale well. The java.util.concurrent package contains a cacophony of concurrency primitives above and beyond simple locks, but it’s not our goal to provide a comprehensive survey.

10.6. Vars and dynamic binding

The last reference type we’ll explore is perhaps the most commonly used: the var. Vars are most often used because of two main features:

- Vars can be named and interned in a namespace.

- Dynamic vars can provide thread-local state.

It’s through the second feature that vars contribute most usefully to the reference type landscape. The thread-local value of a var by definition can only be read from or written to a single thread, and thus it provides the thread-safe semantics you’ve come to expect from a Clojure reference type.

But before you can start experimenting with vars at the REPL, we need to address some consequences of the first feature. The other reference objects we’ve looked at aren’t named and so are generally stored in something with a name. This means when the name is evaluated, you get the reference object, not the value. To get the object’s value, you have to use deref. Named vars flip this around—evaluating their name gives the value, so if you want the var object, you need to pass the name to the special operator var.

With this knowledge in hand, let’s experiment with an existing var. Clojure provides a var named *read-eval*,[8] so you can get its current value by evaluating its name:

8 *read-eval* happens to be a var that has a default configuration useful for this discussion about vars—its actual purpose is unimportant here.

*read-eval* ;=> true

No deref needed, because *read-eval* is a named var. Now for the var object itself:

(var *read-eval*) ;=> #'clojure.core/*read-eval*

When a named var object is printed, it starts with #' and is then followed by the fully qualified name of the var. The #' reader feature expands to the var operator—it means the same thing:

#'*read-eval* ;=> #'clojure.core/*read-eval*

Now that you’ve seen how to refer to var objects, you can look at how they behave. The var *read-eval* is one of those provided by Clojure that’s specifically meant to be given thread-local bindings but by default has only a root binding. You should have seen its root binding when you evaluated it earlier—by default, *read-eval* is bound to true.

10.6.1. The binding macro

The root binding of a var can act as the base of a stack, with each thread’s local bindings pushing onto that stack and popping off of it as requested. The most common mechanism for pushing and popping thread-local bindings is the macro binding. It takes one or more var names and a value for each that initializes the new binding when it’s pushed. These bindings remain in effect until control passes out of the binding macro, at which point they’re popped off the stack.

Here’s a simple example of a function that prints the current value of the var *read-eval*, either the root or thread-local value, whichever is currently in effect:

(defn print-read-eval [] (println "*read-eval* is currently" *read-eval*))

This function calls print-read-eval three times, the first and last of which print the root binding. The middle time, binding is in effect:

(defn binding-play []

(print-read-eval)

(binding [*read-eval* false]

(print-read-eval))

(print-read-eval))

This results in the var temporarily having a thread-local value of false:

(binding-play) ; *read-eval* is currently true ; *read-eval* is currently false ; *read-eval* is currently true

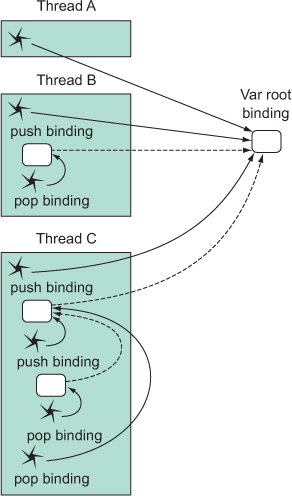

This is a like thread B in figure 10.12, which also shows a more complex scenario than thread A and a simpler one than thread C.

Figure 10.12. Thread-local var bindings. This illustration depicts a single var being used from three different threads. Each rounded box is a var binding, either thread-local or root. Each star is the var being deref’ed, with the solid arrow pointing to the binding used. The dotted lines point from a thread-local binding to the next binding on the stack.

10.6.2. Creating a named var

Vars are most commonly created with the special operator def or one of the many macros that expand to a form that has a def inside:

- defn —Puts a function in a var

- defmacro —Puts a macro in a var

- defonce —Sets the value of an unbound var

- defmulti —Puts a multimethod in a var

There are a few others in clojure.core and many more in contrib. What they have in common is that each of these interns a var in the current namespace. Clojure searches for the named var in the current namespace. If one is found, it’s used; otherwise, a new var is created and added to the namespace, and that one is used.[9] The var (specifically the root binding of the var) is bound to whatever value, function, or macro (and so on) was given. The var itself is returned:

9 Not all macros starting with def necessarily create or intern vars. Some that don’t: defmethod, defrecord, and deftype.

(def favorite-color :green) #'user/favorite-color

As you may have noticed at times in this book, when a var is printed, its fully qualified name is given, along with the namespace where the var is interned (user) and the var’s name (favorite-color). These are preceded by #' because unlike the other reference types, a named var is automatically dereferenced when its name is evaluated—no explicit @ or call to deref is required:

favorite-color ;=> :green

So in order to refer to a var instead of the value it’s bound to, you need to use #' or the special form var, which are equivalent:

(var favorite-color) ;=> #'user/favorite-color

A var can exist (or not exist) in any of four states. The precise state a var is in can be determined using the functions resolve, bound?, and thread-bound? as shown in table 10.1. The first row of the table shows the results of resolve, bound?, and thread-bound? when a var x is unbound. The remaining rows show how to change x to cause those functions to return the values shown.

Table 10.1. Var states

|

Initialization mechanism |

(resolve ‘x) |

(bound? #‘x) |

(thread-bound? #‘x) |

|---|---|---|---|

| (def x) | #'user/x | false | false |

| (def x 5) | #'user/x | true | false |

| (binding [x 7] ...) | #'user/x | true | true |

| (with-local-vars [x 9] ...) | nil | true | true |

10.6.3. Creating anonymous vars

Vars don’t always have names, nor do they need to be interned in a namespace. The with-local-vars macro creates dynamic vars and gives them thread-local bindings all at once, but it doesn’t intern them. Instead, they’re bound to locals, which means the associated var isn’t implicitly looked up by symbolic name. You need to use deref or var-get to get the current value of the var. Here’s an example of a var x created and interned with def, and then a local x that shadows it and is bound to a new var via with-local-vars:

(def x 42)

{:outer-var-value x

:with-locals (with-local-vars [x 9]

{:local-var x

:local-var-value (var-get x)})}

;=> {:outer-var-value 42,

:with-locals {:local-var #<Var: --unnamed-->,

:local-var-value 9}}

Within the body of the with-local-vars macro, you can set the bound value using (var-set <var> <value>), which of course only affects the thread-local value. (Table 10.1 outlines the binding mechanism behind the various ways to create vars in Clojure.) It’s almost stunning how rarely with-local-vars is useful.

10.6.4. Dynamic scope

Vars have dynamic scope, which contrasts with the lexical scope of let locals. The most obvious difference is that with a lexical local, you can easily see where it was initialized by looking at the nested structure of the code. A var, on the other hand, may have been initialized by a binding anywhere earlier in the call stack, not necessarily nearby in the code. This difference can create unexpectedly complex interactions and is one of the few areas where Clojure does little to help address such complexity.

An example of this complexity is shown by using the binding macro or any macro built on top of it, such as with-precision and with-out-str. For example, you can use the with-precision macro to conveniently set up the built-in clojure.core/*math-context* var:

(with-precision 4 (/ 1M 3)) ;=> 0.3333M

You need to use with-precision here because if you don’t tell BigDecimal you’re okay with it rounding off the result, it will refuse to return anything in this case:

(/ 1M 3) ; java.lang.ArithmeticException: Non-terminating decimal expansion; ; no exact representable decimal result.

With that in mind, can you see why with-precision isn’t doing its job in the next snippet? The only thing that makes it different from the example that worked earlier is that it uses map to produce a sequence of three numbers instead of just one:

(with-precision 4 (map (fn [x] (/ x 3)) (range 1M 4M))) ; java.lang.ArithmeticException: Non-terminating decimal expansion; ; no exact representable decimal result.

The problem is that map is lazy and therefore doesn’t call the function given to it immediately. Instead, map returns a partially evaluated data structure. The REPL realizes that structure while trying to print it, so the full evaluation technically happens outside of the with-precision block. Although the map and the function it calls are within the lexical scope of with-precision, and with-precision uses a thread-local binding internally, it doesn’t care about lexical scope. When the division operation is performed, you’ve already left the dynamic scope of with-precision, and it no longer has any effect. The BigDecimal behavior drops back to its default, and it throws an exception.

One way to solve this is to make sure all the division is done before leaving the dynamic scope. Clojure’s doall function is perfect for this:

(with-precision 4 (doall (map (fn [x] (/ x 3)) (range 1M 4M)))) ;=> (0.3333M 0.6667M 1M)

One drawback is that it completely defeats map’s laziness. An alternate solution is to have the function provided to map re-create, when it’s run, the dynamic scope in which the function was created. Clojure provides a handy macro bound-fn to do exactly that:

(with-precision 4 (map (bound-fn [x] (/ x 3)) (range 1M 4M))) ;=> (0.3333M 0.6667M 1M)

Now the sequence being returned is still lazy; but before each item is computed, the dynamic scope of *math-context* is re-created, and the exception is avoided.[10]

10 Of course, if the problem called for it, you could also use (map (fn [x] (with-precision 4 (/ x 3))) (range 1M 4M)), which tightens the dynamic scope to the division itself.