Chapter 4: Running Your Docker Containers

This chapter is probably the most important one in this book. Here, we are going to discuss the concept of Pods, which are the objects Kubernetes uses to launch your Docker containers. Pods are at the heart of Kubernetes and mastering them is essential.

In Chapter 3, Installing Your First Kubernetes Cluster, we said that the Kubernetes API defines a set of resources representing a computing unit. Pods are resources that are defined in the Kubernetes API that represent one or several Docker containers. We never create containers directly with Kubernetes, but we always create Pods, which will be converted into Docker containers on a worker node in our cluster.

At first, it can be a little difficult to understand the connection between Kubernetes Pods and Docker containers, which is why we are going to explain what Pods are and why we use them rather than Docker containers directly. A Kubernetes Pod can contain one or more Docker containers. In this chapter, however, we will focus on Pods that contain only one Docker container. We will discover Pods that contain several containers in the next chapter.

We will create, delete, and update Pods using the BusyBox image, which is a Linux-based image containing many utilities useful for running tests. We will also launch a Pod based on the NGINX Docker image to run an HTTP server, and then access the default NGINX home page through a kubectl feature called port forwarding. Port forwarding is a convenient way to reach and test the Pods running on your Kubernetes cluster from your web browser.

Then, we will discover how to label and annotate our Pods so that they are easy to find and organize. This will help us keep our Kubernetes cluster as clean as possible. Finally, we will discover two additional resources: Jobs and CronJobs. By the end of this chapter, you will be able to launch your first Docker containers managed by Kubernetes, which is the first step in becoming a Kubernetes master!

In this chapter, we're going to cover the following main topics:

- Let's explain the notion of Pods

- Launching your first BusyBox Pod

- Labeling and annotating your Pods

- Launching your first Job

- Launching your first CronJob

Technical requirements

Having a properly configured Kubernetes cluster is recommended to follow this chapter so that you can practice the commands shown as you read. Whether it's a minikube, Kind, GKE, EKS, or AKS cluster is not important. You also need a working kubectl installation on your local machine. Running the kubectl get nodes command should list at least one node:

$ kubectl get nodes

NAME                    STATUS   ROLES    AGE   VERSION
10.0.103.186.internal   Ready    <none>   6m    v1.17.12

You can have more than one node if you want, but at least one Ready node is required to have a working Kubernetes setup.
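You may want to check this requirement from a script rather than by eye. Here is a minimal Bash sketch for that. The count_ready_nodes function is hypothetical and is not part of kubectl. It assumes the default column layout of kubectl get nodes, in which STATUS is the second column.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count the nodes whose STATUS column is exactly "Ready".
# Expects the output of `kubectl get nodes --no-headers` on stdin, where the
# second whitespace-separated column is STATUS. Cordoned nodes report
# "Ready,SchedulingDisabled" and are deliberately not counted, because no new
# Pods can be scheduled on them.
count_ready_nodes() {
  awk '$2 == "Ready" { ready++ } END { print ready + 0 }'
}
```

$ kubectl get nodes --no-headers | count_ready_nodes

A result of 0 means that no node can currently run your Pods.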

Let's explain the notion of Pods

In this section, we will explain the concept of Pods from a theoretical point of view. Pods have certain peculiarities that must be understood if you wish to master them well.

What are Pods?

When you want to create, update, or delete a container through Kubernetes, you do so through a Pod – you never interact with the containers directly. A Pod is a group of one or more containers that you want to launch on the same machine, sharing the same Linux namespaces (such as the network namespace). That's the first rule to understand about Pods: they can be made up of one or more containers, but all the containers that belong to the same Pod will be launched on the same worker node. A Pod cannot and won't ever span multiple worker nodes: that's an absolute rule.

But why do we bother delegating the management of our Docker containers to this intermediary resource? After all, Kubernetes could have a container resource that would just launch a single container. The reason is that containerization invites you to think in terms of Linux processes rather than in terms of virtual machines. You may already know about the biggest and most recurrent Docker anti-pattern, which consists of using Docker containers as virtual machine replacements: in the past, you used to install and deploy all your processes on top of a virtual machine. But Docker containers are not virtual machine replacements, and they are not meant to run multiple processes.

Docker invites you to follow one golden rule: there should be a one-to-one relationship between a Docker container and a Linux process. That being said, modern applications are often made up of multiple processes, not just one, so in most cases, a single Docker container won't suffice to run a full-featured microservice. This implies that the processes, and thus the containers, should be able to communicate with each other by sharing filesystems, networking, and so on. That's what Kubernetes Pods offer you: the ability to group your containers logically. All the containers/processes that make up a microservice should be grouped in the same Pod. That way, they'll be launched together and benefit from the features Pods provide to facilitate inter-process and inter-container communication.

To help you understand this, imagine you have a working WordPress blog on a virtual machine and you want to convert that virtual machine into a WordPress Pod to deploy your blog on your Kubernetes cluster. WordPress is one of the most common pieces of software and is a perfect example to illustrate the need for Pods. This is because WordPress requires multiple processes to work properly.

WordPress is a PHP application that requires both an HTTP server and a PHP interpreter to work. Let's list the Linux processes WordPress needs in order to work:

- An NGINX HTTP server: WordPress is a web application, so it needs an HTTP server running as a process to receive requests and serve blog pages. NGINX is a good HTTP server that will do the job perfectly.

- The PHP-FPM interpreter: WordPress is a blog engine written in PHP, so it needs a PHP interpreter to work.

NGINX and PHP-FPM are two processes: they are two binaries that you need to launch separately, but they need to be able to work together. On a virtual machine, the job is simple: you just install NGINX and PHP-FPM (FastCGI Process Manager) on the virtual machine and have them communicate through a UNIX socket. You do this by telling NGINX, in its configuration (for example, a file under /etc/nginx/), the path of the socket PHP-FPM listens on.

In the Docker world, things become harder because running these two processes in the same container is an anti-pattern: you have to run two containers, one for each process, and you must have them communicate with each other and share a common directory so that they can both access the application code. To solve this problem, you have to use the Docker networking layer to allow the NGINX container to communicate with the PHP-FPM one. Then, you must use a volume mount to share the WordPress code between the two containers. You can do this with some Docker commands, but now imagine doing it in production at scale, on multiple machines, on multiple environments, and so on. As you can imagine, that's the kind of problem the Kubernetes Pod resource solves.

Achieving inter-process communication is possible with bare Docker, but it is difficult to achieve at scale while keeping all the production-related requirements in mind. With tons of microservices to manage and spread across different machines, it would become a nightmare to manage all these Docker networks, volume mounts, and so on. That's why Kubernetes has the Pod resource. Pods are very useful because they wrap multiple containers and enable easy inter-process communication. The following are the core benefits Pods bring you:

- All the containers in the same Pod can reach each other through localhost.

- All the containers in the same Pod share the same network namespace.

- All the containers in the same Pod share the same port space.

- You can attach a volume to a Pod, and then mount the volume to underlying containers, allowing them to share directories and file locations.

With these benefits, it becomes easy to provision your WordPress blog: you create a Pod that runs two containers, NGINX and PHP-FPM. Since they can reach each other on localhost, having them communicate is straightforward. You can then use a volume to expose WordPress's code to both containers.
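Here is a rough sketch of what such a Pod could look like, written as a Bash function that generates the manifest. The Pod, container, and volume names are hypothetical. The sketch assumes the wordpress:fpm tag of the official WordPress image for the PHP-FPM side; PHP-FPM in that image listens on port 9000. It only illustrates the two containers and the shared volume. The NGINX configuration that would forward PHP requests to 127.0.0.1:9000 is left out, so this is not a working blog. We will cover multi-container Pods properly in the next chapter.

```shell
#!/usr/bin/env bash
# Sketch only: write a two-container Pod manifest in which NGINX and PHP-FPM
# share one emptyDir volume mounted at the same path. Names are hypothetical,
# and the NGINX configuration pointing at PHP-FPM (127.0.0.1:9000) is omitted.
write_wordpress_pod_manifest() {
  local out="$1"
  cat > "$out" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: wordpress-pod
spec:
  volumes:
    - name: wordpress-code        # shared directory for the application code
      emptyDir: {}
  containers:
    - name: nginx
      image: nginx:latest
      ports:
        - containerPort: 80
      volumeMounts:
        - name: wordpress-code
          mountPath: /var/www/html
    - name: php-fpm
      image: wordpress:fpm        # PHP-FPM variant of the official image
      volumeMounts:
        - name: wordpress-code
          mountPath: /var/www/html
EOF
}
```

$ write_wordpress_pod_manifest wordpress-pod.yaml

$ kubectl apply -f wordpress-pod.yaml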

Complex applications often require several containers, so it's a good idea to group them in the same Pod to have Kubernetes launch them together. Keep in mind that the Pod exists for only one reason: to ease inter-container (or inter-process) communications at scale.

Important Note

That being said, it is not uncommon at all to have Pods that are only made up of one container. But in any case, you'll always have to use the Pod Kubernetes object to be able to interact with your containers in Kubernetes.

Lastly, please note that a Docker container that was launched manually on a machine managed by a Kubernetes cluster won't be seen by Kubernetes as a container it manages. It becomes a kind of orphan container outside of the scope of the orchestrator. Kubernetes only manages the containers it has launched through its Pod object.

Each Pod gets an IP address

Containers inside a single Pod are capable of communicating with each other through localhost, but Pods are also capable of communicating with each other. At launch time, each Pod gets a single private IP address. Each Pod can communicate with any other Pod in the cluster by calling it through its IP address.

Kubernetes networking models allow Pods to communicate with each other directly without the need for Network Address Translation (NAT). Keep in mind that there are no NAT gateways between the Pods in your cluster.

Kubernetes uses a flat network model that is implemented by network plugins following the Container Network Interface (CNI) specification.
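You can see the IP address a Pod received by reading the status.podIP field of the Pod object. Here is a small sketch that does this. The pod_ip function name is hypothetical, and it relies on kubectl's jsonpath output format.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the cluster-internal IP address assigned to a Pod
# by reading its status.podIP field through kubectl's jsonpath output format.
pod_ip() {
  local pod="$1"
  kubectl get pod "$pod" -o jsonpath='{.status.podIP}'
}
```

$ pod_ip nginx-pod

You could then reach NGINX directly through that address from another Pod (here, a hypothetical Pod called busybox-pod):

$ kubectl exec busybox-pod -- wget -qO- "http://$(pod_ip nginx-pod)"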

How you should design your Pods

Here is the second golden rule about Pods: they are meant to be destroyed and recreated easily. Pods can be destroyed voluntarily or not. For example, if a given worker node running four Pods were to fail, each of the underlying containers would become inaccessible. Because of this, you should be able to destroy and recreate your Pods at will, without it affecting the stability of your application. The best way to achieve this is to respect two simple design rules when building your Pods:

- A Pod should contain everything required to launch a microservice.

- A Pod should be stateless (when possible).

When you start designing Pods on Kubernetes, it's hard to know exactly what a Pod should and shouldn't contain. It's pretty straightforward to explain: a Pod has to contain an application or a microservice. Take the example of our WordPress Pod, which we mentioned earlier: the Pod should contain the NGINX and PHP-FPM containers, which are required to launch WordPress. If such a Pod were to fail, our WordPress would become inaccessible, but recreating the Pod would make WordPress accessible again because the Pod contains everything necessary to run WordPress.

That being said, most modern applications use a data store, such as Redis or MySQL. WordPress does too – it uses MySQL to store and retrieve your posts. So, you'll also have to run a MySQL container somewhere. Two solutions are possible here:

- You run the MySQL container as part of the WordPress Pod.

- You run the MySQL container as part of a dedicated MySQL Pod.

Both solutions can be used, but the second is preferred. It's a good idea to decouple your application (here, this is WordPress, but tomorrow, it could be a microservice) from its database layer by running them in two separate Pods. Remember that Pods are capable of communicating with each other. You can benefit from this by dedicating a Pod to running MySQL and then giving its IP address to your WordPress blog. In practice, a Pod's IP address changes when the Pod is recreated, so you would rather point WordPress at a Service, which we will cover in Chapter 7, Exposing Your Pods with Services.

By separating the database layer from the application, you improve the stability of the setup: the application Pod crashing will not affect the database.

To summarize, grouping the layers in the same Pods would cause three problems:

- Data durability

- Availability

- Stability

That's why you should keep your application Pods stateless by storing their state in an independent Pod. The data layer can be considered an application on its own, with a lifecycle decoupled from the application code itself. To achieve that, you should run them in separate Pods.

Now, let's launch our first Pod. Creating a WordPress Pod would be too complex for now, so let's start easy by launching some NGINX Pods and see how Kubernetes manages the Docker container.

Launching your first Pods

In this section, we will create our first Pods in our Kubernetes cluster, first with the imperative syntax and then with the declarative syntax.

Creating a Pod with imperative syntax

In this section, we are going to create a Pod based on the NGINX image. We need two parameters to create a Pod:

- The Pod's name, which is arbitrarily defined by you

- The Docker image(s) to build its underlying container(s)

As with almost everything on Kubernetes, you can create Pods using either of the two syntaxes available: the imperative syntax and the declarative syntax. As a reminder, the imperative syntax is to run kubectl commands directly from a terminal, while with declarative syntax, you must write a YAML file containing the configuration information for your Pod, and then apply it with the kubectl create -f command.

To create a Pod on your Kubernetes cluster, you have to use the kubectl run command. That's the simplest and fastest way to get a Pod running on your Kubernetes cluster. Here is how the command can be called:

$ kubectl run nginx-pod --image nginx:latest

In this command, the Pod's name is set to nginx-pod. This name is important because it is a pointer to the Pod: when you need to run the update or delete command on this Pod, you'll have to specify that name to tell Kubernetes which Pod the action should run on. The --image flag specifies the Docker image from which the Pod's container is created.

Here, you are telling Kubernetes to build a Pod based on the nginx:latest Docker image hosted on Docker Hub. This nginx-pod Pod contains only one container based on this nginx:latest image: you cannot specify multiple images here; this is a limitation of the imperative syntax.

If you want to build a Pod containing multiple containers built from several different Docker images, then you will have to go through the declarative syntax and write a YAML file.
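A convenient bridge between the two syntaxes is to have kubectl run print the manifest instead of creating the Pod. You can then edit that file, for example to add a second container. Here is a minimal sketch that assumes kubectl 1.18 or later, the version that introduced --dry-run=client. The generate_pod_manifest function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a starting Pod manifest without creating anything.
# `--dry-run=client -o yaml` makes kubectl run print the Pod object it would
# have sent to the API server; the output is saved as <name>.yaml.
generate_pod_manifest() {
  local name="$1" image="$2"
  kubectl run "$name" --image "$image" --dry-run=client -o yaml > "${name}.yaml"
}
```

$ generate_pod_manifest nginx-pod nginx:latest

You can then edit nginx-pod.yaml, for instance to add a second entry under containers, and create the Pod with kubectl create -f nginx-pod.yaml.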

Creating a Pod with declarative syntax

Creating a Pod with declarative syntax is simple too. You have to create a YAML file containing your Pod definition and apply it against your Kubernetes cluster using the kubectl create -f command.

Here is the content of the nginx-Pod.yaml file, which you can create on your local workstation:

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
spec:
  containers:
  - name: nginx-container
    image: nginx:latest

Try to read this file and understand its content. YAML files are made of key/value pairs and lists. The Pod's name is nginx-pod, and then we have an array of containers in the spec section of the file, containing only one container created from the nginx:latest image. The container itself is named nginx-container.

Once the nginx-Pod.yaml file has been saved, run the following command to create the Pod:

$ kubectl create -f nginx-Pod.yaml

pod/nginx-pod created

$ kubectl apply -f nginx-Pod.yaml # this command works too!

If a Pod called nginx-pod already exists in your cluster, kubectl create will fail. Remember that Kubernetes cannot run two Pods with the same name in the same namespace: the Pod's name is the unique identifier and is used to identify the Pods. Try to edit the YAML file to update the Pod's name and then apply it again.
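Pod names must also follow a specific format. Kubernetes requires them to be valid lowercase RFC 1123 subdomain names, so the API server rejects a name such as nginx-Pod because of its uppercase letter. The following Bash sketch checks a name against that rule before you submit a manifest. The is_valid_pod_name function is hypothetical, and the API server's own validation remains the authoritative one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check a name against the lowercase RFC 1123 subdomain
# rule Kubernetes applies to Pod names: 1 to 253 characters, made of
# dot-separated parts that contain only lowercase letters, digits, and '-',
# and that start and end with a letter or digit.
is_valid_pod_name() {
  local name="$1"
  # Characters are listed explicitly so the check does not depend on the locale.
  local alnum='abcdefghijklmnopqrstuvwxyz0123456789'
  local part="[${alnum}]([-${alnum}]*[${alnum}])?"
  (( ${#name} >= 1 && ${#name} <= 253 )) || return 1
  [[ "$name" =~ ^${part}(\.${part})*$ ]]
}
```

$ is_valid_pod_name nginx-pod && echo valid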

Reading the Pod's information and metadata

At this point, you should have a running Pod on your Kubernetes cluster. Here, we are going to try to read its information. At any time, we need to be able to retrieve and read information about the resources that were created on your Kubernetes cluster; this is especially true for Pods. You can read this information using two kubectl commands: kubectl get and kubectl describe. Let's take a look at them:

- kubectl get: The kubectl get command is a list operation; you use this command to list a set of objects. Do you remember when we listed the nodes of your cluster after all the installation procedures described in the previous chapter? We did this using kubectl get nodes. The command requires you to pass the type of object you want to list. In our case, it's going to be kubectl get pods. In the upcoming chapters, we will discover other objects, such as configmaps. To list them, you'll have to type kubectl get configmaps; the same goes for the other object types. kubectl get does not require you to know the name of a specific resource, because it's intended to list them.

- kubectl describe: The kubectl describe command is quite different. It's intended to retrieve a complete set of information for one specific object, identified by both its kind and its name. You can retrieve the information of our previously created Pod by using kubectl describe pods nginx-pod.

Calling this command will return a full set of information available about that specific Pod, such as its IP address and so on.

Now, let's look at some more advanced options about listing and describing objects in Kubernetes.

Listing the objects in JSON or YAML

The -o option is one of the most useful options offered by the kubectl command line, because it lets you customize the output of kubectl commands. By default, the kubectl get pods command returns a human-readable table of the Pods in your Kubernetes cluster. You can also retrieve this information in JSON or YAML format by using the -o option:

$ kubectl get pods -o yaml # In YAML format

$ kubectl get pods -o json # In JSON format

# If you know the Pod name, you can also get a specific Pod

$ kubectl get pods <POD_NAME> -o yaml

# OR

$ kubectl get pods <POD_NAME> -o json

This way, you can retrieve and export data from your Kubernetes cluster in a scripting-friendly format.

Backing up your resource using the list operation

You can also use these flags to back up your Kubernetes cluster. Imagine a situation where you created a Pod using the imperative way, so you don't have the YAML declaration file stored in your computer. If the Pod fails, it's going to be hard to recreate it. The -o option helps us retrieve the YAML declaration file of a resource that's been created in Kubernetes, even if we created it using the imperative way. To do this, run the following command:

$ kubectl get pods/nginx-pod -o yaml > nginx-Pod.yaml

This way, you have a YAML backup of the nginx-pod resource as it is running on your cluster. If something goes wrong, you'll be able to recreate your Pod from this file. Note that the exported file also contains fields populated by the cluster, such as status and metadata.resourceVersion, which you will typically need to remove before recreating the Pod from it. Pay attention to the pods/nginx-pod part of this command: to retrieve the YAML declaration, you need to specify which resource you are targeting. By redirecting the output of this command to a file, you get a nice way to retrieve and back up the configuration of the object inside your Kubernetes cluster.
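To back up all the Pods of a namespace at once, you can combine the name output format with the command above. The name format prints one pod/<name> reference per line. Here is a minimal sketch; the backup_pods function and its directory argument are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: export every Pod of the current namespace to its own
# YAML file in the given directory (default: the current directory).
backup_pods() {
  local dir="${1:-.}"
  mkdir -p "$dir" || return 1
  # `-o name` prints one "pod/<name>" reference per line; the "pod/" prefix
  # is stripped to build the file name.
  kubectl get pods -o name | while read -r ref; do
    kubectl get "$ref" -o yaml > "$dir/${ref#pod/}.yaml"
  done
}
```

$ backup_pods ./pod-backups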

Getting more information from the list operation

It's also worth mentioning the -o wide format, which is going to be very useful for you: using this option allows you to expand the default output to add more data. By using it on the Pods object, for example, you'll get the name of the worker node where the Pod is running:

$ kubectl get pods -o wide

# The worker node name is displayed

Keep in mind that the -o option accepts many other formats, some of them much more advanced, such as jsonpath. This format lets you extract specific fields from the JSON representation of an object, much like the jq tool you may have used if you have already written Bash scripts that parse JSON.
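As an illustration of jsonpath, the following sketch prints the name of each Pod next to the worker node it runs on, similar to the NODE column of -o wide. The pods_and_nodes function name is hypothetical. The template itself uses kubectl's jsonpath syntax, including its range ... end loop.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<pod name><TAB><node name>" for every Pod in the
# current namespace, using a kubectl jsonpath template with a range loop.
pods_and_nodes() {
  kubectl get pods -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.nodeName}{"\n"}{end}'
}
```

$ pods_and_nodes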

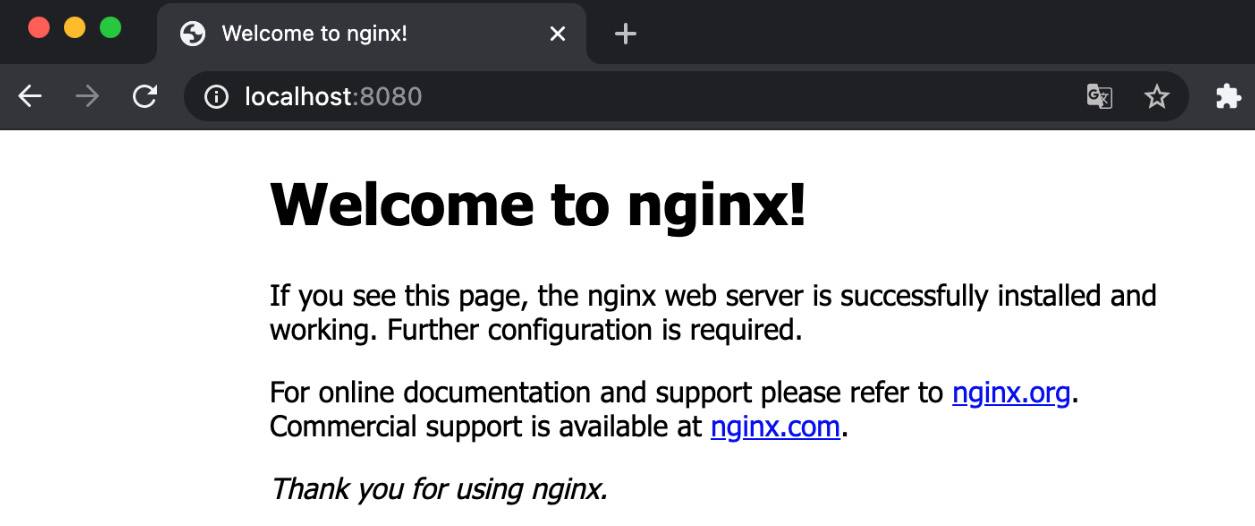

Accessing a Pod from the outside world

At this point, you should have a Pod containing an NGINX HTTP server on your Kubernetes cluster. You will probably want to access it from your web browser, but this requires an extra step.

By default, your Kubernetes cluster does not expose the Pods it runs to the internet. For that, you will need to use another resource called a Service, which we will cover in more detail in Chapter 7, Exposing Your Pods with Services. However, kubectl does offer a command for quickly accessing a running Pod on your cluster, called kubectl port-forward. This is how you can use it:

$ kubectl port-forward pod/nginx-pod 8080:80

Forwarding from 127.0.0.1:8080 -> 80

Forwarding from [::1]:8080 -> 80

This command is quite easy to understand: I'm telling kubectl to forward port 8080 on my local machine (the one running kubectl) to port 80 on the Pod identified by pod/nginx-pod. The command keeps running in the foreground until you stop it with Ctrl + C.

Kubectl then outputs a message telling me that it started forwarding my local port 8080 to port 80 of the Pod. If you get an error message, it's probably because your local port 8080 is already in use. Try a different port, or simply omit the local port from the command to let kubectl choose one randomly:

$ kubectl port-forward pod/nginx-pod :80

Now, you can launch your browser and try to reach the http://localhost:<localport> address, which in my case is http://localhost:8080:

Figure 4.1 – The NGINX default page running in a Pod and accessible on localhost, which indicates the port-forward command worked!
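When you let kubectl pick the local port, you need to read that port from the command's output before you can open the page. Here is a minimal sketch that extracts it. The forwarded_port function is hypothetical, and it assumes the "Forwarding from 127.0.0.1:<port> -> <port>" message format shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read kubectl port-forward output on stdin and print the
# local port from the first IPv4 "Forwarding from 127.0.0.1:<port> -> ..." line.
forwarded_port() {
  sed -n 's/^Forwarding from 127\.0\.0\.1:\([0-9][0-9]*\) -> .*/\1/p' | head -n 1
}
```

$ kubectl port-forward pod/nginx-pod :80 > port-forward.log &

$ sleep 2 # arbitrary delay to let kubectl print its messages

$ curl "http://localhost:$(forwarded_port < port-forward.log)"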

Entering a container inside a Pod

When a Pod is running, you can access the containers it contains. Under Docker, the command to execute a command in a running container is called docker exec. Kubernetes copies this behavior via a command called kubectl exec. Use the following command to access our NGINX container inside nginx-pod, which we launched earlier:

$ kubectl exec -ti nginx-pod -- bash

The -- separator marks the end of kubectl's own options: everything after it is the command run inside the container. After running this command, you will be inside the NGINX container. You can do whatever you want here, just like with any other container. The preceding command assumes that the bash binary is installed in the container you are trying to access. If it is not, the sh binary is installed in many images and can be used instead. Don't be afraid to pass a full binary path, like so:

$ kubectl exec -ti nginx-pod -- /bin/bash
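You may not know in advance whether a container ships with bash. In that case, you can test for it first and fall back to sh. Here is a minimal sketch; the enter_pod function is hypothetical. It assumes that the container provides the test utility, which most Linux-based images do but minimal images may not.

```shell
#!/usr/bin/env bash
# Hypothetical helper: open an interactive shell in a Pod's default container,
# preferring bash and falling back to sh when /bin/bash is not executable.
# kubectl exec returns the exit code of the remote command, which drives the if.
enter_pod() {
  local pod="$1"
  if kubectl exec "$pod" -- test -x /bin/bash; then
    kubectl exec -ti "$pod" -- /bin/bash
  else
    kubectl exec -ti "$pod" -- sh
  fi
}
```

$ enter_pod nginx-pod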

Now, let's discover how to delete a Pod from a Kubernetes cluster.

Deleting a Pod

Deleting a Pod is super easy. You can do so using the kubectl delete command. You need to know the name of the Pod you want to delete. In our case, the Pod's name is nginx-pod. Run the following command:

$ kubectl delete pods nginx-pod

$ # or...

$ kubectl delete pods/nginx-pod

If you do not know the name of the Pod, remember to run the kubectl get pods command to retrieve the list of Pods and find the one you want to delete.

There is also something you must know: if you have built your Pod with declarative syntax and you still have its YAML configuration file, you can delete your Pod without having to know its name, because the name is contained in the YAML file.

Run the following command to delete the Pod using the declarative syntax:

$ kubectl delete -f nginx-Pod.yaml

After you run this command, the Pod will be deleted in the same way.

Important Note

Remember that all containers belonging to the Pod will be deleted. The container's life cycle is bound to the life cycle of the Pod that launched it. If the Pod is deleted, the containers it manages will be deleted. Remember to always interact with the Pods and not with the containers directly.

With that, we have reviewed the most important aspects of Pod management, such as launching a Pod with the imperative or declarative syntax, deleting a Pod, and also listing and describing them. Now, I will introduce one of the most important aspects of Pod management in Kubernetes: labeling and annotating.

Labeling and annotating the Pods

We will now discuss another key concept of Kubernetes: labels and annotations. Labels are key/value pairs that you can attach to your Kubernetes objects. Labels are meant to tag your Kubernetes objects with key/value pairs defined by you. Once your Kubernetes objects have been labeled, you can build a custom query to retrieve specific Kubernetes objects based on the labels they hold. In this section, we are going to discover how to interact with labels through kubectl by assigning some labels to our Pods.

What are labels and why do we need them?

Labels are key/value pairs that you can attach to your created objects, such as Pods. What label you define for your objects is up to you – there is no specific rule regarding this. These labels are attributes that will allow you to organize your objects in your Kubernetes cluster: once your objects have been labeled, you can list and query them using the labels they hold. To give you a very concrete example, you could attach a label environment=prod to some of your Pods, and then list all the Pods that belong to your production environment in one command:

$ kubectl get pods --selector "environment=prod"

As you can see, this is achieved using the --selector parameter, which can be shortened to -l:

$ kubectl get pods -l "environment=prod"

This command will list all the Pods holding a label called environment with a value of prod. Of course, in our case, no Pods will be found since none of the ones we created earlier are holding this label. You'll have to be very disciplined about labels and not forget to set them every time you create a Pod or another object, and that's why we are introducing them quite early in this book: not only Pods but almost every object in Kubernetes can be labeled, and you should take advantage of this feature to keep your cluster organized and clean.
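Conceptually, an equality-based selector such as environment=prod keeps the objects whose labels contain every key=value pair of the selector. The following Bash function only simulates that matching rule on plain strings, so you can reason about it. It is a hypothetical illustration, not how kubectl or the API server implement selectors. It also ignores set-based selectors such as environment in (prod, uat).

```shell
#!/usr/bin/env bash
# Conceptual sketch (not kubectl's implementation): decide whether a set of
# labels, written as "key=value,key=value", satisfies an equality-based
# selector written the same way. Every pair of the selector must be present
# in the labels (AND semantics); an empty selector matches everything.
labels_match() {
  local labels=",$1," pair
  local -a pairs
  IFS=',' read -r -a pairs <<< "$2"
  for pair in "${pairs[@]}"; do
    [[ "$labels" == *",$pair,"* ]] || return 1
  done
  return 0
}
```

$ labels_match "tier=frontend,environment=prod" "environment=prod" && echo match

This prints match, whereas the selector environment=production would not match these labels.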

You use labels not only to organize your cluster but also to build relationships between your different Kubernetes objects: you will notice that some Kubernetes objects will read the labels that are carried by certain Pods and perform certain operations on them based on the labels they carry. If your Pods don't have labels or they are misnamed or contain the wrong values, some of these mechanisms might not work as you expect.

On the other hand, using labels is completely arbitrary: there is no particular naming rule, nor any convention Kubernetes expects you to follow. Thus, it is your responsibility to use the labels as you wish and build your own convention. If you are in charge of the governance of a Kubernetes cluster, you should enforce the usage of mandatory labels and build some monitoring rules to quickly identify non-labeled resources.

Keep in mind that label values, and the name part of label keys, are limited to 63 characters: they are intended to be short. Here are some label ideas you could use:

- environment (prod, dev, uat, and so on)

- stack (blue, green, and so on)

- tier (frontend and backend)

- app_name (wordpress, magento, mysql, and so on)

- team (business and developers)
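The exact rule for label values is stricter than a length limit. A value must be 63 characters or fewer and may be empty. When it is not empty, it must begin and end with a letter or digit and contain only letters, digits, dashes (-), underscores (_), and dots (.) in between. The name part of a key follows the same rule but cannot be empty. Here is a minimal Bash sketch of the value check. The is_valid_label_value function is hypothetical, and the API server remains the authoritative validator.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check a label value against the Kubernetes rules:
# empty, or at most 63 characters that begin and end with an ASCII letter or
# digit and contain only letters, digits, '-', '_', and '.' in between.
is_valid_label_value() {
  local value="$1"
  # Characters are listed explicitly so the check does not depend on the locale.
  local alnum='abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789'
  [[ -z "$value" ]] && return 0
  (( ${#value} <= 63 )) || return 1
  [[ "$value" =~ ^[${alnum}]([-_.${alnum}]*[${alnum}])?$ ]]
}
```

$ is_valid_label_value prod && echo valid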

Labels are not intended to be unique between objects. Several Pods with the same label key and value can exist in the cluster at the same time without posing any problem – it's even required if you want your list queries to be useful. For example, if you want to list all the resources that are part of the prod environment, a label such as environment=prod should be set on multiple resources. Now, let's look at annotations, which are another way we can assign metadata to our Pods.

What are annotations and how do they differ from labels?

Kubernetes also uses another type of metadata called annotations. Annotations are very similar to labels as they are also key/value pairs. However, annotations do not have the same use as labels. Labels are intended to identify resources and build relationships between them, while annotations are used to provide contextual information about the resource that they are defined on.

For example, when you create a Pod, you could add an annotation containing the email of the support team to contact if this app does not work. This information has its place in an annotation but has nothing to do with a label.

While it is highly recommended that you define labels wherever you can, you can omit annotations: they are less important to the operation of your cluster than labels. Be aware, however, that some Kubernetes objects or third-party applications often read annotations and use them as configuration. In this case, their usage of annotations will be explained explicitly in their documentation.
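In a manifest, annotations sit next to labels under metadata. The following sketch prints a Pod definition carrying one label and one annotation. The print_annotated_pod_manifest function and the support-email annotation key are hypothetical examples; Kubernetes itself attaches no meaning to that annotation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Pod manifest carrying one label (tier) and one
# annotation (support-email). The annotation is purely informational here.
print_annotated_pod_manifest() {
  local contact="$1"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    tier: frontend
  annotations:
    support-email: "${contact}"
spec:
  containers:
  - name: nginx-container
    image: nginx:latest
EOF
}
```

$ print_annotated_pod_manifest support@example.com | kubectl apply -f -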

Adding a label

In this section, we will learn how to add and remove labels and annotations from Pods. We will also learn how to modify the labels of a Pod that already exists on a cluster.

You can add a label when creating a Pod. Let's take the Pod based on the NGINX image that we used earlier. We will recreate it here with a label called tier, which will contain the frontend value. Here is the kubectl command to run for that:

$ kubectl run nginx-pod --image nginx --labels "tier=frontend"

As you can see, a label can be assigned using the --labels parameter. To add multiple labels, pass them as a comma-separated list to that same parameter, like this:

$ kubectl run nginx-pod --image nginx --labels "tier=frontend,environment=prod"

Here, the nginx Pod will be created with two labels.

The --labels flag has a short version called -l. You can use this to make your command shorter and easier to read. Here is the same command with the -l parameter used instead of --labels:

$ kubectl run nginx-pod --image nginx -l "tier=frontend,environment=prod"

Another important thing to notice is that labels can be defined with declarative syntax. Labels can be appended to a YAML Pod definition. Here is the same Pod, holding the two labels we created earlier, but this time, it's been created with the declarative syntax:

# ~/labelled_Pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    environment: prod
    tier: frontend
spec:
  containers:
  - name: nginx-container
    image: nginx:latest

Consider the file that was created at ~/labelled_Pod.yaml. If the nginx-pod Pod created in the previous step still exists, delete it first, since two Pods cannot share the same name. The following kubectl command would create the Pod the same way as it was created previously:

$ kubectl create -f ~/labelled_Pod.yaml

This time, running the label query we used earlier should return at least one Pod – the one we just created:

$ kubectl get pods -l "environment=prod"

Now, let's learn how we can list the labels attached to our Pod.

Listing labels attached to a Pod

There is no dedicated command to list the labels attached to a Pod, but you can make the output of kubectl get pods a little more verbose. By using the --show-labels parameter, the output of the command will include the labels attached to the Pods:

$ kubectl get pods --show-labels

This command does not run any kind of query based on the labels; instead, it displays the labels themselves as part of the output. It can be useful for debugging. Of course, this option can be chained with other options, such as -o wide:

$ kubectl get pods --show-labels -o wide

This command would then show you the list of the Pods and their labels, as well as the node that the Pod has been scheduled to run on.

Adding or updating a label on a running Pod

Now that we've learned how to create Pods with labels, we'll learn how to add labels to a running Pod. You can add or modify the labels of a resource at any time using the kubectl label command. Here, we are going to add another label to our nginx Pod. This label will be called stack and will have a value of blue:

$ kubectl label pods nginx-pod stack=blue

This command only works if the Pod does not already have a label called stack: by default, kubectl label can add a new label but cannot update an existing one. Here, it updates the Pod by adding a label called stack with a value of blue. Run the following command to see that the change was applied:

$ kubectl get pods nginx-pod --show-labels

To update an existing label, you must append the --overwrite parameter to the preceding command. Let's update the stack=blue label to make it stack=green; pay attention to the --overwrite parameter:

$ kubectl label pods nginx-pod stack=green --overwrite

Here, the label should be updated. The stack label should now be equal to green. Run the following command to show the Pod and its labels again:

$ kubectl get pods nginx-pod --show-labels

That command will only list nginx-pod and display its labels as part of the output in the terminal.

Important Note

Adding or updating labels using the kubectl label command might be dangerous. As we mentioned earlier, you'll build relationships between different Kubernetes objects based on labels. By updating them, you might break some of these relationships, and your resources might stop behaving as expected. That's why it's better to add labels when a Pod is created and keep your Kubernetes configuration immutable. It's always better to destroy and recreate rather than update an already running configuration.

The last thing we must do is learn how to delete a label attached to a running Pod.

Deleting a label attached to a running Pod

Just like we added and updated labels of a running Pod, we can also delete them. The command is a little bit trickier. Here, we are going to remove the label called stack, which we can do by adding a minus symbol (-) right after the label name:

$ kubectl label pods nginx-pod stack-

Adding that minus symbol at the end of the label name might look strange, but running kubectl get pods --show-labels again should show that the stack label is now gone:

$ kubectl get pods nginx-pod --show-labels

Adding an annotation

Let's learn how to add annotations to a Pod. It won't take long to cover this because it works just like it does with labels:

# ~/annotated_Pod.yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    tier: webserver
  name: nginx-pod
  labels:
    environment: prod
    tier: frontend
spec:
  containers:
  - name: nginx-container
    image: nginx:latest

Here, I simply added the tier=webserver annotation, which can help me identify that this Pod is running an HTTP server. Just keep in mind that it's a way to add additional metadata.

The name of an annotation can be prefixed by a DNS subdomain followed by a slash (/). Kubernetes components such as kube-scheduler use this to indicate to cluster users that an annotation belongs to the Kubernetes core; the kubernetes.io/ and k8s.io/ prefixes are reserved for these core components. The prefix can be omitted completely, as shown in the preceding example.
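To make the prefixed form concrete, here is a minimal sketch of the metadata section of the same Pod with prefixed annotation keys. The example.com/ prefix and the owner and revision keys are hypothetical values chosen for illustration; any DNS subdomain you control would work the same way. Annotation values are always strings, which is why the number is quoted.

```yaml
# Hypothetical fragment: only the metadata section of the Pod manifest
metadata:
  name: nginx-pod
  annotations:
    tier: webserver                 # unprefixed annotation, as in the example above
    example.com/owner: "web-team"   # hypothetical prefixed key: <DNS prefix>/<name>
    example.com/revision: "42"      # annotation values must be strings, so numbers are quoted
  labels:
    environment: prod
    tier: frontend
```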

Launching your first job

Now, let's discover another Kubernetes resource that is derived from Pods: the Job resource. In Kubernetes, the basic computing unit is the Pod, and everything else is just an intermediate resource that manipulates Pods.

This is the case for the Job object, which is an object that will create one or multiple Pods to complete a specific computing task, such as running a Linux command.

What are jobs?

A job is another kind of resource that's exposed by the Kubernetes API. In the end, a job will create one or multiple Pods to execute a command defined by you. That's how jobs work: they launch Pods. You have to understand the relationship between the two: jobs are not independent of Pods, and they would be useless without Pods. In the end, the two things they are capable of are launching Pods and managing them. Jobs are meant to handle a certain task and then exit. Here are some examples of typical use cases for a Kubernetes job:

- Taking a backup of a database

- Sending an email

- Consuming some messages in a queue

These are tasks you do not want to run forever. You expect the Pods to be terminated once they have completed their task. This is where the Job resource will help you.

But why bother using another resource to execute a command? After all, we can create one or multiple Pods directly that will run our command and then exit.

This is true. You can use a Pod based on a Docker image to run the command you want and that would work fine. However, jobs have mechanisms implemented at their level that allow them to manage Pods in a more advanced way. Here are some things that jobs are capable of:

- Running Pods multiple times

- Running Pods multiple times in parallel

- Retrying to launch the Pods if they encountered any errors

- Killing a Pod after a specified number of seconds

Another good point is that a job manages the labels of the Pods it will create so that you won't have to manage the labels on those Pods directly.

All of this can be done without using jobs, but it would be very difficult to manage. Fortunately for us, the Job resource exists, and we are going to learn how to use it now.

Creating a job with restartPolicy

Since creating a job might require some advanced configurations, we are going to focus on declarative syntax here. This is how you can create a Kubernetes job through YAML. We are going to make things simple here; the job will just echo Hello world:

# ~/hello-world-job.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: hello-world-job
spec:
  template:
    metadata:
      name: hello-world-job
    spec:
      restartPolicy: OnFailure
      containers:
      - name: hello-world-container
        image: busybox
        command: ["/bin/sh", "-c"]
        args: ["echo 'Hello world'"]

Pay attention to the kind field, which tells Kubernetes that we need to create a job and not a Pod, as we did previously. Also, notice that apiVersion is batch/v1, which differs from the v1 used to create the Pod. You can create the job with the following command:

$ kubectl create -f ~/hello-world-job.yaml

As you can see, this job will create a Pod based on the Docker busybox image. This Pod will run the echo 'Hello world' command. Lastly, the restartPolicy option is set to OnFailure, which tells Kubernetes to restart the Pod or the container in case it fails. If the entire Pod fails, a new Pod will be launched. If only the container fails (for example, because its memory limit has been reached or it exited with a non-zero code), the individual container is restarted on the same node: the Pod itself remains untouched, which means it's still scheduled on the same machine.

For a job, the restartPolicy parameter can take two values (Always, the default for standalone Pods, is not allowed here):

- Never

- OnFailure

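Setting it to Never means that a failed container is never restarted in place: the failed Pod is kept, and the job controller creates a new Pod to try again instead. When debugging a failing job, it's a good idea to set restartPolicy to Never. With OnFailure, the container is restarted over and over inside the same Pod, and that Pod is terminated once the retry limit is reached, which makes it harder to read the logs of the failed attempts.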
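A debugging-oriented variant of the job could look like the following sketch. The file name, the job name, and the choice of backoffLimit: 0 are assumptions made for this example: with restartPolicy set to Never and no retries allowed, the job stops after the first failed Pod, and that Pod is kept so that you can read its logs with kubectl logs.

```yaml
# ~/hello-world-job-debug.yaml (hypothetical file name)
apiVersion: batch/v1
kind: Job
metadata:
  name: hello-world-job-debug
spec:
  backoffLimit: 0            # no retries: the job is marked as failed after the first failed Pod
  template:
    spec:
      restartPolicy: Never   # the container is never restarted in place; the failed Pod is kept
      containers:
      - name: hello-world-container
        image: busybox
        command: ["/bin/sh", "-c"]
        args: ["echo 'Hello world'"]
```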

In our case, there is little chance that our job failed, since it only runs a simple Hello world. To make sure that our job worked well, we can read its logs. To do that, we need to retrieve the name of the Pod it created using the kubectl get pods command. Then, we can use the kubectl logs command, as we would with any Pod:

$ kubectl logs <pod-name>

Replace <pod-name> with the name returned by kubectl get pods. Alternatively, kubectl logs job/hello-world-job reads the logs of one of the job's Pods without you having to look up its name.

Here, we can see that our job worked well, since the Hello world message is displayed in the log of our Pod. However, what if it had failed? That depends on restartPolicy. If it's set to Never, the failed container is not restarted; instead, the job controller creates a new Pod to try again. If it's set to OnFailure, the failed container is restarted inside the same Pod.

In both cases, Kubernetes waits longer between each attempt, using an exponential back-off delay: 10 seconds, then 20 seconds, then 40 seconds, then 80 seconds, and so on, up to a maximum delay of a few minutes. Kubernetes does not retry forever: once the number of retries reaches the job's backoffLimit, it gives up and marks the job as failed.

Understanding the job's backoffLimit

By default, a job retries a failing Pod up to 6 times before giving up and marking the job as failed. You can change this limit with the backoffLimit option. Here is the updated YAML file:

# ~/hello-world-job.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: hello-world-job
spec:
  backoffLimit: 3
  template:
    metadata:
      name: hello-world-job
    spec:
      restartPolicy: OnFailure
      containers:
      - name: hello-world-container
        image: busybox
        command: ["/bin/sh", "-c"]
        args: ["echo 'Hello world'"]

This way, the job will retry at most three times after a failure before giving up.

Running a task multiple times using completions

You can also instruct Kubernetes to run the same task multiple times using the Job object.

You can do this by using the completions option to specify the number of times you want the command to complete successfully. Setting completions to 10 will create ten different Pods that will be launched one after the other: once one Pod has finished, the next one will be started. Here is the updated YAML file:

apiVersion: batch/v1
kind: Job
metadata:
  name: hello-world-job
spec:
  completions: 10
  template:
    metadata:
      name: hello-world-job
    spec:
      restartPolicy: OnFailure
      containers:
      - name: hello-world-container
        image: busybox
        command: ["/bin/sh", "-c"]
        args: ["echo 'Hello world'; sleep 3"]

The completions option was added here. Also, notice that the args section now also runs sleep 3. This makes each task sleep for 3 seconds before completing, giving us enough time to notice the next Pod being created. Once you've applied this configuration file to your Kubernetes cluster, you can run the following command:

$ kubectl get pods --watch

The watch mechanism updates your kubectl output when something new happens, such as the creation of new Pods managed by your job. If you wait for the job to finish, you'll see 10 Pods being created one after the other, at least 3 seconds apart.

Running a task multiple times in parallel

With only the completions option, the Pods are created one after the other, because a job runs one Pod at a time by default. You can also enforce parallel execution using the parallelism option. If you do that, you can get rid of the completions option. Here is the updated YAML file:

apiVersion: batch/v1
kind: Job
metadata:
  name: hello-world-job
spec:
  parallelism: 5
  template:
    metadata:
      name: hello-world-job
    spec:
      restartPolicy: OnFailure
      containers:
      - name: hello-world-container
        image: busybox
        command: ["/bin/sh", "-c"]
        args: ["echo 'Hello world'; sleep 3"]

Please notice that the completions option is now gone and that we replaced it with parallelism. The job will now launch five Pods at the same time and will have them run in parallel.
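The two options can also be combined. In the following sketch, which reuses the same job, completions: 10 and parallelism: 5 ask Kubernetes for ten successful runs in total, with at most five Pods running at the same time.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: hello-world-job
spec:
  completions: 10   # ten successful Pod runs are required in total
  parallelism: 5    # at most five Pods run at the same time
  template:
    spec:
      restartPolicy: OnFailure
      containers:
      - name: hello-world-container
        image: busybox
        command: ["/bin/sh", "-c"]
        args: ["echo 'Hello world'; sleep 3"]
```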

Terminating a job after a specific amount of time

You can also decide to terminate a job, along with its running Pods, after a specific amount of time. This can be very useful when you are running a job that is meant to consume a queue, for example. You could poll the messages for 1 minute and then automatically terminate the processes. You can do that using the activeDeadlineSeconds parameter. Here is the updated YAML file:

apiVersion: batch/v1
kind: Job
metadata:
  name: hello-world-job
spec:
  backoffLimit: 3
  activeDeadlineSeconds: 60
  template:
    metadata:
      name: hello-world-job
    spec:
      restartPolicy: OnFailure
      containers:
      - name: hello-world-container
        image: busybox
        command: ["/bin/sh", "-c"]
        args: ["echo 'Hello world'"]

Here, if the job is still running 60 seconds after it started, Kubernetes terminates its running Pods and marks the job as failed, even if backoffLimit would still allow more retries. It's a good idea to use this feature when you want to cap how long a process may run before it is terminated.

What happens if a job succeeds?

When your job finishes, it remains in your Kubernetes cluster and is not deleted automatically: that's the default behavior. The reason for this is that you can read its logs a long time after its completion. However, keeping your jobs on your Kubernetes cluster that way might not suit you. You can have finished jobs, and the Pods they created, deleted automatically by using the ttlSecondsAfterFinished option. Keep in mind that this feature was introduced as an alpha feature in Kubernetes 1.12 and only became stable in Kubernetes 1.23, so older clusters may require it to be enabled explicitly. Here is the updated YAML file for implementing this solution:

apiVersion: batch/v1
kind: Job
metadata:
  name: hello-world-job
spec:
  ttlSecondsAfterFinished: 30
  template:
    metadata:
      name: hello-world-job
    spec:
      restartPolicy: OnFailure
      containers:
      - name: hello-world-container
        image: busybox
        command: ["/bin/sh", "-c"]
        args: ["echo 'Hello world'"]

Here, the job and its Pods are going to be deleted 30 seconds after the job finishes. If you do not want to use this option or it's not available in your Kubernetes version (for example, you are running a Kubernetes version before 1.12), then you'll need to delete jobs manually. This is what we are going to discover now.

Deleting a job

Let's learn how to delete a Kubernetes job manually. Keep in mind that the Pods a job creates are bound to the life cycle of their parent: deleting a job will result in deleting the Pods it manages.

Start by getting the name of the job you want to destroy. In our case, it's hello-world-job. Otherwise, use the kubectl get jobs command to retrieve the correct name. Then, run the following command:

$ kubectl delete jobs hello-world-job

If you want to delete the job but not the Pods it created, you need to add the --cascade=orphan parameter to the delete command (older kubectl versions use --cascade=false, which is deprecated in recent versions):

$ kubectl delete jobs hello-world-job --cascade=orphan

Thanks to these commands, you can get rid of the jobs that are kept on your Kubernetes cluster once they've been completed.

Launching your first Cronjob

To close this first chapter on Pods, I suggest that we discover another new Kubernetes resource called Cronjob.

What are Cronjobs?

The name Cronjob can mean two different things and it is important to not get confused:

- The UNIX cron feature

- The Kubernetes Cronjob resource

Historically, Cronjobs are commands scheduled using the UNIX cron feature, which has long been the standard way to schedule the execution of a command on UNIX systems. This idea was later taken up in Kubernetes.

In Kubernetes, you are not going to schedule the execution of a command but the execution of a Pod. You can do that using the Cronjob resource.

Be careful: even though the two ideas are similar, they don't work the same way at all. On UNIX and derived systems such as Linux, you schedule commands by editing a file called crontab, such as the system-wide /etc/crontab. In the world of Kubernetes, things are different: you are not going to schedule the execution of commands but the execution of Job resources, which themselves will create Pod resources. You achieve this by manipulating a new kind of resource called Cronjob. Keep in mind that the Cronjob object you create will create Job objects.

Think of it as a kind of wrapper around the Job resource: in Kubernetes, we call that a controller. A Cronjob can do everything the Job resource is capable of, because all it does is create Job objects according to the cron expression you specify.

The good news is that the Kubernetes Cronjob resource is using the cron format inherited from UNIX. So, if you have already written some Cronjobs on a Linux system, mastering Kubernetes Cronjobs will be super straightforward.

But first, why would you want to run a Pod on a schedule? The answer is simple; here are some concrete use cases:

- Taking database backups regularly every Sunday at 1 A.M.

- Clearing cached data every Monday at 4 P.M.

- Sending a queued email every 5 minutes

- Various maintenance operations to be executed regularly

The use cases of Kubernetes Cronjobs do not differ much from their UNIX counterparts: they answer the same need, but they provide the massive benefit of letting you schedule regular jobs on your existing Kubernetes cluster, using your Docker images. In the end, the whole idea is to schedule the execution of your commands through Jobs and Pods, the Kubernetes way.

Creating your first Cronjob

It's time to create your first Cronjob. Let's do this using declarative syntax. First, let's create a cronjob.yaml file and place the following YAML content into it:

# ~/cronjob.yaml
apiVersion: batch/v1   # use batch/v1beta1 on clusters older than Kubernetes 1.21
kind: CronJob
metadata:
  name: hello-world-cronjob
spec:
  schedule: "* * * * *"   # example schedule: every minute
  jobTemplate:
    spec:
      ttlSecondsAfterFinished: 30
      template:
        metadata:
          name: hello-world-job
        spec:
          restartPolicy: OnFailure
          containers:
          - name: hello-world-container
            image: busybox
            command: ["/bin/sh", "-c"]
            args: ["echo 'Hello world'"]

Before applying this file to the Kubernetes cluster, let's go through it. There are two important things to notice here:

- The schedule key, which lets you input the cron expression

- The jobTemplate section, which is exactly what you would input in a job YAML manifest

Let's explain these two keys quickly before applying the file.

Understanding the schedule

The schedule key lets you insert an expression in the same cron format used on Linux. Let's explain how these expressions work; if you already know them, you can skip these explanations.

A cron expression is made up of five entries separated by white space. From left to right, these entries correspond to the following:

- Minutes

- Hour

- Day of the month

- Month

- Day of the week

Each entry can be filled with an asterisk, which means every. You can also set several values for one entry by separating them with a comma (,), and you can use a hyphen (-) to input a range of values. Let me show you some examples:

- "10 11 * * *" means "At 11:10 every day of every month."

- "10 11 * 12 *" means "At 11:10 every day of December."

- "10 11 * 12 1" means "At 11:10 every Monday of December."

- "10 11 * * 1,2" means "At 11:10 every Monday and Tuesday of every month."

- "10 11 * 2-5 *" means "At 11:10 every day from February to May."

These examples should help you understand how cron works. Of course, you don't have to memorize the syntax: most people rely on documentation or online cron expression generators. Feel free to use this kind of tool; it can help you confirm that your syntax is valid before you deploy the object to Kubernetes.
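When you put one of these expressions into a Cronjob manifest, it's safest to quote it. The following fragment is a sketch using the Monday-and-Tuesday example above. Quoting matters most for expressions that start with an asterisk, such as "* * * * *": in YAML, a leading * introduces an alias, so the unquoted value would fail to parse.

```yaml
# Fragment of a CronJob spec (only the schedule-related part)
spec:
  # fields: minute hour day-of-month month day-of-week
  schedule: "10 11 * * 1,2"   # at 11:10 every Monday and Tuesday
```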

Understanding the role of the jobTemplate section

Let's cover this briefly, because it illustrates an important Kubernetes concept. If you've been paying attention to the structure of the YAML file, you may have noticed that the jobTemplate key contains the definition of a Job object. The reason is simple: when we use the Cronjob object, we are simply delegating the creation of a Job object to the Cronjob object. This is why the YAML file of the Cronjob object requires a jobTemplate.

Therefore, the Cronjob object is a resource that only manipulates another resource.

In Kubernetes, a lot of things work like this, and this is important to remember. Later, we will discover many objects that will allow us to create Pods so that we don't have to do it ourselves. These special objects are called controllers: they manipulate other Kubernetes resources by obeying their own logic. Moreover, when you think about it, the Job object is itself a controller since, in the end, it only manipulates Pods by providing them with its own features, such as the possibility of running Pods in parallel.

In a real-world context, you should always try to create Pods using these intermediate objects, as they provide additional and more advanced management features.

Try to remember this rule: the basic unit in Kubernetes is a Pod, but you can delegate the creation of Pods to many other objects. In the rest of this section, we will continue to discover naked Pods. Later, we will learn how to manage their creation and management via controllers.

Controlling the Cronjob execution deadline

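For some reason, for example because the controller responsible for Cronjobs was unavailable at the scheduled time, a Cronjob may fail to start a Job at the moment it is supposed to start. The startingDeadlineSeconds option of the Cronjob lets you control how late such a Job may still be started: if it cannot be started within that many seconds of its scheduled time, that execution is skipped.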
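Here is a minimal sketch showing where this setting goes, reusing the hello-world-cronjob from earlier. startingDeadlineSeconds is a field of the Cronjob spec; the value of 100 seconds and the every-minute schedule are arbitrary examples.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello-world-cronjob
spec:
  schedule: "* * * * *"          # example schedule: every minute
  startingDeadlineSeconds: 100   # a run that cannot start within 100 s of its scheduled time is skipped
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
          - name: hello-world-container
            image: busybox
            command: ["/bin/sh", "-c"]
            args: ["echo 'Hello world'"]
```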

Managing the history limits of jobs

Each time a Cronjob runs, the Job it creates is kept on your Kubernetes cluster as history once it finishes, whether it succeeded or not. The history settings are defined at the Cronjob level, so you can decide for each Cronjob whether to keep this history and, if so, how many succeeded and failed Jobs should be kept.

Let's learn how to do this.
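Here is a minimal sketch of those two settings on the same Cronjob. successfulJobsHistoryLimit and failedJobsHistoryLimit are fields of the Cronjob spec; when you omit them, Kubernetes keeps 3 successful Jobs and 1 failed Job by default, and setting a limit to 0 keeps no history at all. The values below are arbitrary examples.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello-world-cronjob
spec:
  schedule: "* * * * *"           # example schedule: every minute
  successfulJobsHistoryLimit: 5   # keep the 5 most recent successful Jobs
  failedJobsHistoryLimit: 2       # keep the 2 most recent failed Jobs
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
          - name: hello-world-container
            image: busybox
            command: ["/bin/sh", "-c"]
            args: ["echo 'Hello world'"]
```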

Creating a Cronjob

If you already have the YAML manifest file, creating a Cronjob object is easy. You can do so using the kubectl create command:

$ kubectl create -f ~/cronjob.yaml

cronjob/hello-world-cronjob created

With that, the Cronjob has been created on your Kubernetes cluster. It will create Jobs, and therefore Pods, on the schedule defined in the YAML file.

Deleting a Cronjob

Like any other Kubernetes resource, deleting a Cronjob can be achieved through the kubectl delete command. Like before, if you have the YAML manifest, it's easy:

$ kubectl delete -f ~/cronjob.yaml

cronjob/hello-world-cronjob deleted

With that, the Cronjob has been removed from your Kubernetes cluster. No scheduled jobs will be launched anymore.

Summary

We have come to the end of this chapter on Pods and how to create them; I hope you enjoyed it. You've learned how to use the most important objects in Kubernetes: Pods.

The knowledge you've developed in this chapter is part of the essential basis for mastering Kubernetes: all you will do in Kubernetes is manipulate Pods, label them, and access them. In addition, you saw that Kubernetes behaves like a traditional API, in that it executes CRUD operations to interact with the resources on the cluster. In this chapter, you learned how to launch Docker containers on Kubernetes, how to access these containers using kubectl port forwarding, how to add labels and annotations to Pods, how to delete Pods, and how to launch and schedule jobs using the Cronjob resource.

Just remember this rule about Docker container management: any container that will be launched in Kubernetes will be launched through the Pod object. Mastering this object is like mastering most of Kubernetes: everything else will consist of automating things around the management of Pods, just like we did with the Cronjob object; you have seen that the Cronjob object only launches Job objects that launch Pods. If you've understood that some objects can manage others, but in the end, all containers are managed by Pods, then you've understood the philosophy behind Kubernetes, and it will be very easy for you to move forward with this orchestrator.

Also, I invite you to add labels and annotations to your Pods, even if you don't see the need for them right away. Know that it is essential to label your objects well to keep a clean, structured, and well-organized cluster.

However, you still have a lot to discover when it comes to managing Pods because so far, we have only seen Pods that are made up of only one Docker container. The greatest strength of Pods is that they allow you to manage multiple containers at the same time, and of course, to do things properly, there are several design patterns that we can follow to manage our Pods when they are made of several containers.

In the next chapter, we will learn how to manage Pods that are composed of several containers. While this will be very similar to the Pods we've seen so far, you'll find that some little things are different and that some are worth knowing. First, you will learn how to launch multi-container Pods (hint: the kubectl run command will not work), then how to get the containers to communicate with each other. After that, you will learn how to access a specific container in a multi-container Pod, as well as how to access logs from a specific container. Finally, you will learn how to share volumes between containers in the same Pod.

As you read the next chapter, you will learn about the rest of the fundamentals of Pods in Kubernetes. So, you'll get an overview of Pods while we keep moving forward by discovering additional objects in Kubernetes that will be useful for deploying applications in our clusters.