6.4.5 Environment Sensing

The measurement made in the environment can be used to improve the estimation of the state X (e.g., location) in this environment. Imagine that we are sleepwalking around the house in the middle of the night. When we wake up, we can figure out where we are by using our senses (sight, touch, etc.).

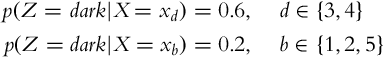

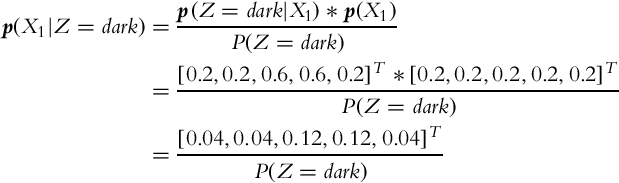

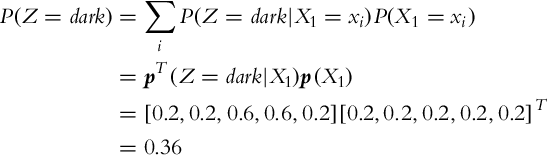

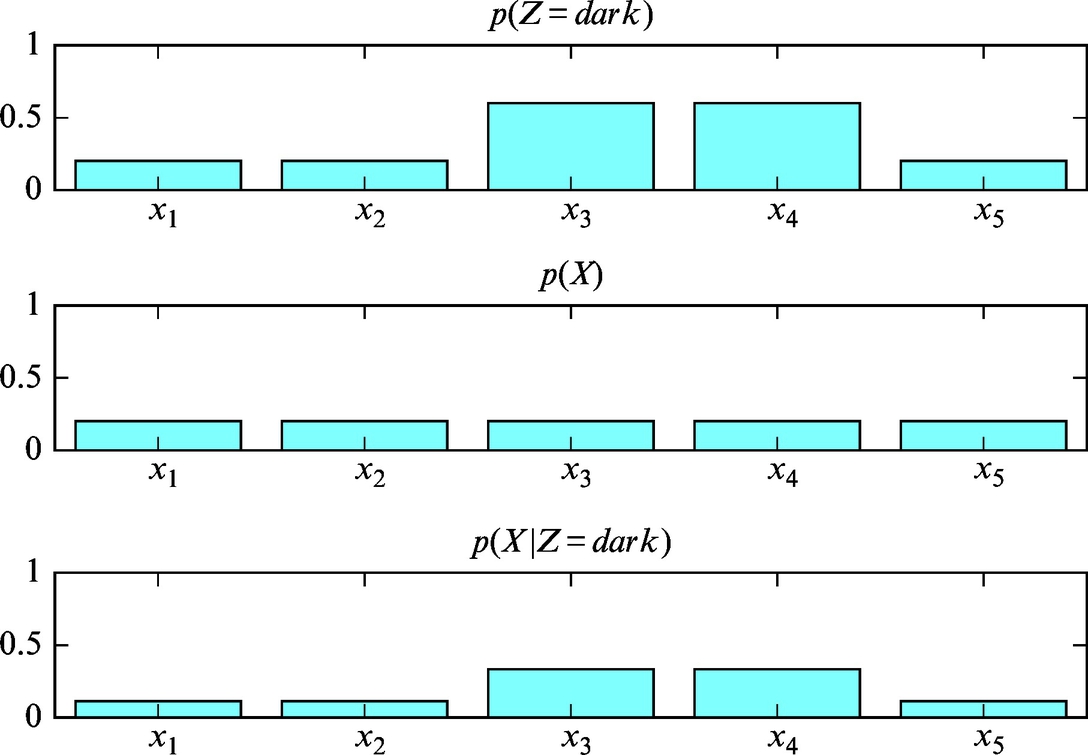

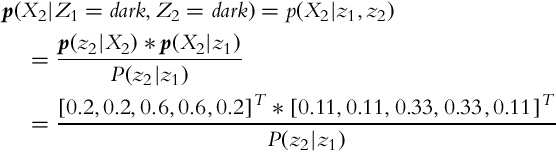

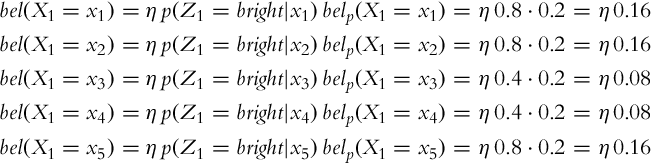

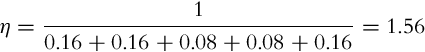

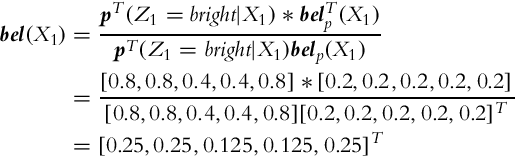

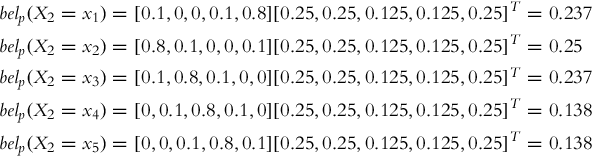

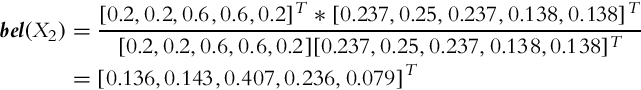

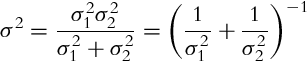

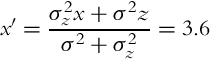

Mathematically, the initial knowledge about the environment can be described with probability distribution p(x) (prior). This distribution can be improved when a new measurement z (with belief p(z|x)) is available if the probability after the measurement is determined p(x|z). This can be achieved using the Bayesian rule ![]() . The probability p(z|x) represents the statistical model of the sensor, and bel(x) is state-estimate belief after the measurement is made. In the process of perception, the correction step of the Bayesian filter is evaluated.

. The probability p(z|x) represents the statistical model of the sensor, and bel(x) is state-estimate belief after the measurement is made. In the process of perception, the correction step of the Bayesian filter is evaluated.

6.4.6 Motion in the Environment

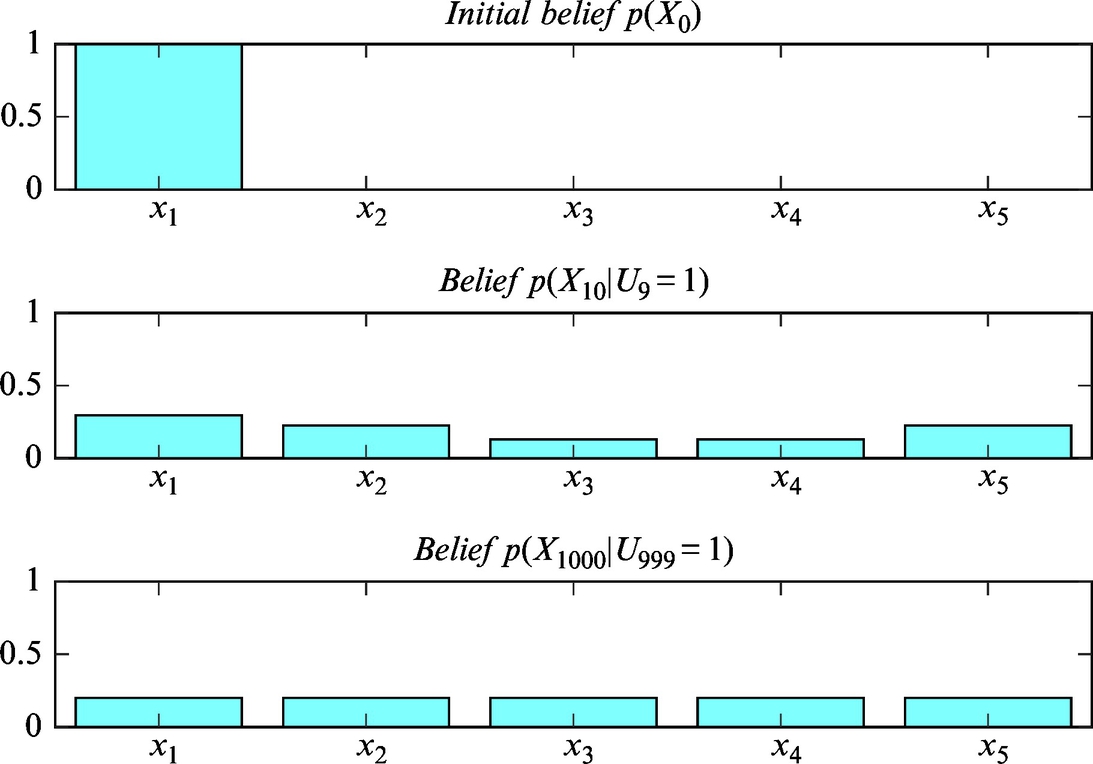

Mobile systems can move in the environment using some actuators (e.g., motorized wheels) and a control system. Every movement has small or large uncertainty; therefore, the movement of the mobile system through the environment increases the uncertainty about the mobile system state (pose) in the environment.

Consider that we are standing in well-known environment. We close our eyes and make several steps. After a few steps we know approximately where we are, since we know how big are our steps and we know in which direction the steps were made. Therefore, we can imagine where we are. However, the lengths of our steps are not known precisely, and also the directions of the steps are hard to estimate; therefore, our knowledge about our pose in space decreases with time as more and more steps are made.

In the case of movement without observing the states through measurement, the relation (6.28) can be reformulated:

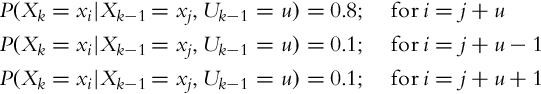

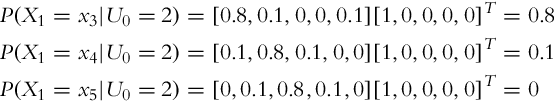

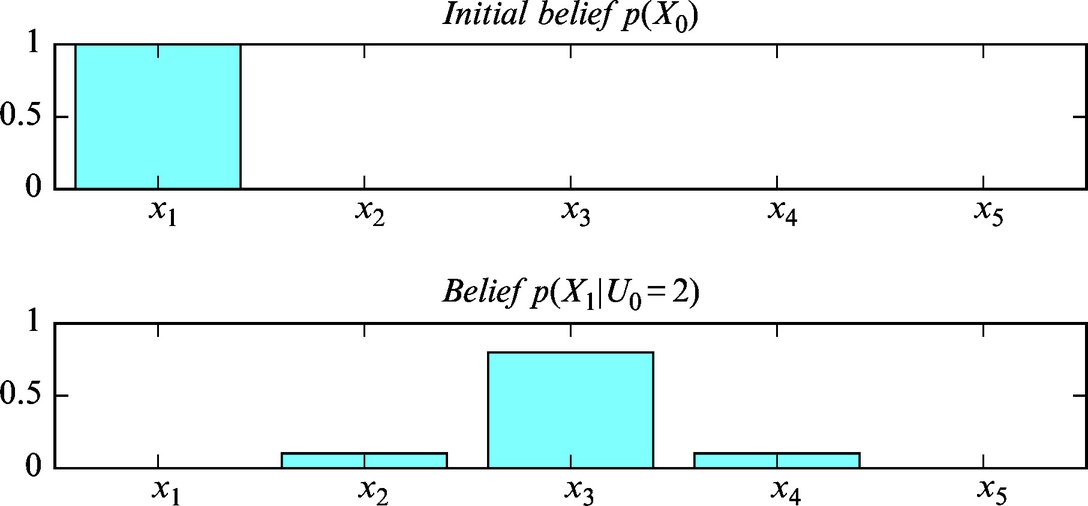

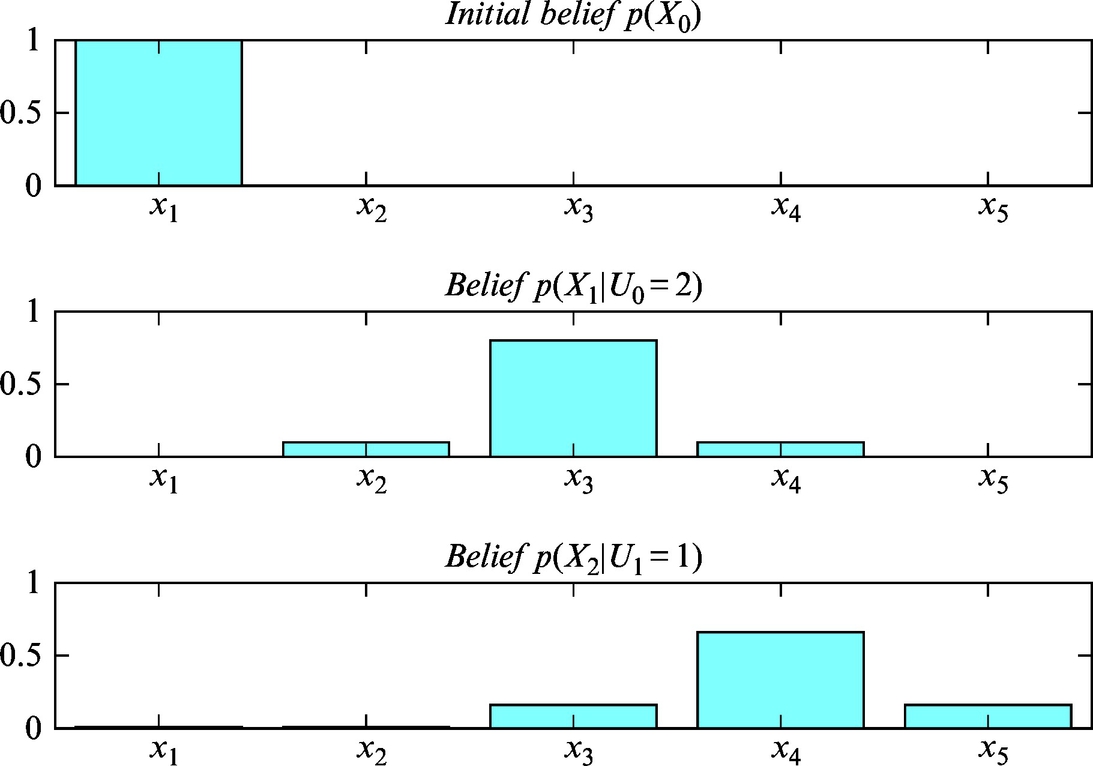

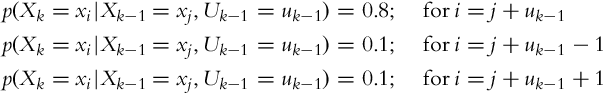

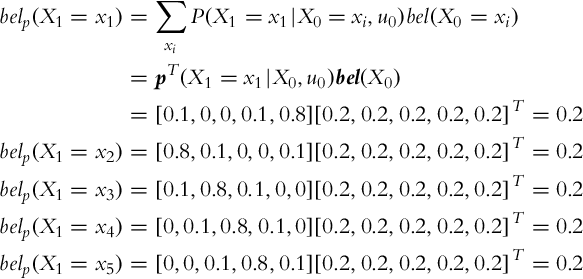

The belief in the new state p(xk|u0:k−1) depends on the belief in the previous time step p(xk−1|u0:k−2) and conditional state transition probability p(xk|xk−1, uk−1). The probability distribution p(xk|, u0:k−1) can be determined with integration (or summation in the discrete case) of all possible state transition probabilities p(xk|xk−1, uk−1) from previous states xk−1 into state xk, given the known action uk−1.

6.4.7 Localization in the Environment

The mobile system can estimate its location in the environment even if it does not know its initial location but has a map of the environment. The location of the mobile system can be determined precisely with probability distribution. The process of determining the location in the environment is known as localization. Localization combines the process of observation (measurement) and action (movement). As already mentioned, measurements made in the environment increase the knowledge about the location, but the movement of the mobile system through the environment decreases this information.

Localization is a process in which the mobile system repeatedly updates the probability distribution that represents the knowledge about the mobile system’s location in the environment. The peak in the probability distribution (if it exists) represents the most probable mobile system location.

The localization process is a realization of the Bayesian filter (Algorithm 3), which combines the processes of movement and perception.

6.5 Kalman Filter

Kalman filter [6] is one of the most important state estimation and prediction algorithms, which has been applied to a diverse range of applications in various engineering fields, and autonomous mobile systems are no exception. The Kalman filter is designed for state estimation of linear systems where the system signals may be corrupted by noise. The algorithm has a typical two-step structure that consists of a prediction and a correction step that are evaluated in every time step. In the prediction step, the latest system state along with state uncertainties are predicted. Once a new measurement is available, the correction step is evaluated where the stochastic measurement is joined with the predicted state estimate as a weighted average, in a way that less uncertain values are given a greater weight. The algorithm is recursive and allows an online estimation of the current system state taking into account system and measurement uncertainties.

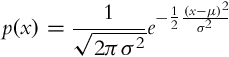

A classical Kalman filter assumes normally distributed noises, that is, the probability distribution of noise is a Gaussian function:

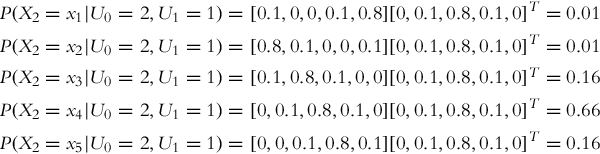

where μ is the mean value (mathematical expectation) and σ2 is the variance. The Gaussian function is a unimodal (left and right from the single peak the function monotonically decreases toward zero)—more general distributions are normally multimodal (there are several local peaks). If the probability distributions of continuous variables are assumed to be unimodal, the Kalman filter can be used for optimum state estimation. In the case that the variables are not all unimodal, the state estimation is suboptimal; furthermore, the convergence of the estimate to the true value is questionable. The Bayesian filter does not have the aforementioned problems, but its applicability is limited to simple continuous problems and to discrete problems with a finite countable number of states.

In Fig. 6.16 an example of continuous probability distribution, which is not unimodal, is shown. The continuous distribution is approximated with a Gaussian function and with a histogram (domain is divided into discrete intervals). The approximation with a Gaussian function is used in the Kalman filter, and the histogram is used in the Bayesian filter.

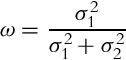

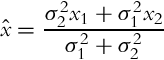

The essence of the correction step (see Bayesian filter (6.29)) is information fusion from two independent sources, that is, sensor measurements and state predictions based on the previous state estimations. Let us use Example 6.15 again to demonstrate how two independent estimates of the same variable x can be jointed optimally if the value and variance (belief) of each source is known.

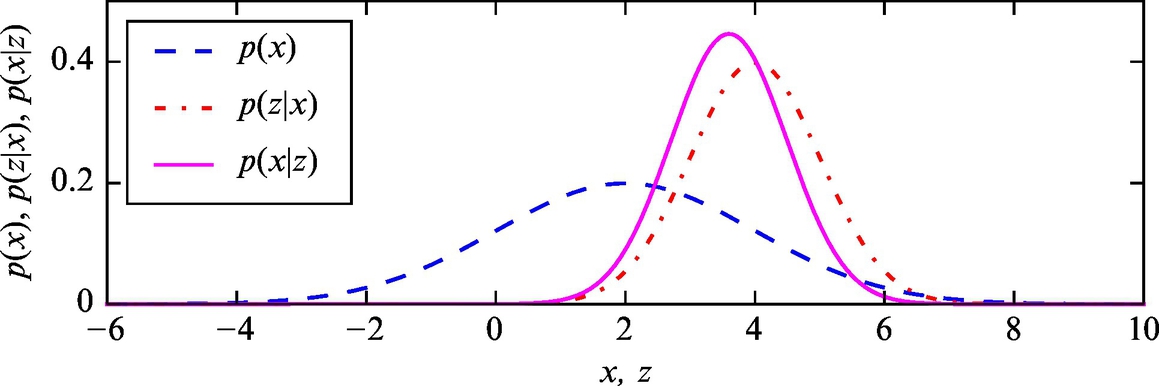

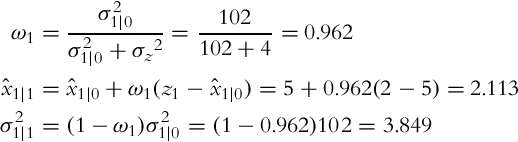

Let us use the findings from Example 6.15 in the derivation of the recursive state estimation algorithm. In every time step a new state measurement z(k) = x(k) + n(k) is obtained by the sensor, where n(k) is the measurement noise. The measurement variance ![]() is assumed to be known. The updated optimum state estimate is a combination of the previous estimate

is assumed to be known. The updated optimum state estimate is a combination of the previous estimate ![]() and the current measurement z(k), as follows:

and the current measurement z(k), as follows:

The updated state variance is

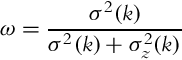

where

Therefore, given known initial state estimate x, ![]() and the corresponding variance σ2(0) the measurements z(1), z(2), … can be integrated optimally, in a way that the current state and state variance are estimated. This is the basic idea behind the correction step of the Kalman filter.

and the corresponding variance σ2(0) the measurements z(1), z(2), … can be integrated optimally, in a way that the current state and state variance are estimated. This is the basic idea behind the correction step of the Kalman filter.

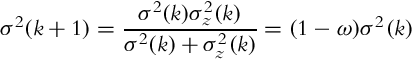

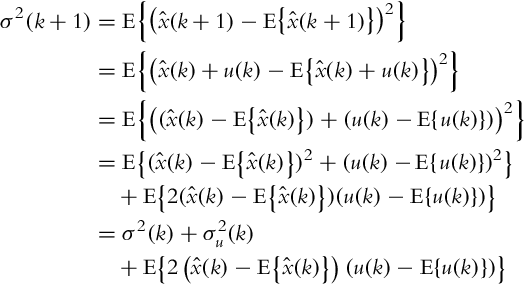

The prediction step of the Kalman filter provides the given state prediction a known input action. The initial state estimate ![]() has a probability distribution with variance σ2(k). In the same way, the action u(k), which is responsible for transition of the state x(k) to x(k + 1), has probability distribution (transition uncertainty)

has a probability distribution with variance σ2(k). In the same way, the action u(k), which is responsible for transition of the state x(k) to x(k + 1), has probability distribution (transition uncertainty) ![]() . Using Example 6.17 let us examine the value of the state and the variance after the action is executed (after state transition).

. Using Example 6.17 let us examine the value of the state and the variance after the action is executed (after state transition).

Simplified Implementation of the Kalman Filter Algorithm

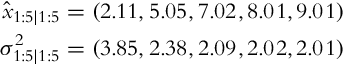

The Kalman filter algorithm for a simple case with only a single state is given in Algorithm 4, where the variables with a subscript (⋅)k|k−1 represent the estimated values in the prediction step, and variables with subscript (⋅)k|k represent the values from the correction step. For improved readability the following notation is used: u(k − 1) = uk−1 and z(k) = zk.

The Kalman filter has two steps (prediction and correction step) that are executed one after another in the loop. In the prediction step only the known action is used in a way that enables prediction of the state in the next time step. From the initial belief a new belief is evaluated, and the uncertainty of the new belief is higher that the initial uncertainty. In the correction step the measurement is used to improve the predicted belief in a way that the new (corrected) state estimate has lower uncertainty than the previous belief. In both steps only two inputs are required: in the prediction step the value of previous belief ![]() and executed action uk−1 need to be known, and in the correction step the previous belief

and executed action uk−1 need to be known, and in the correction step the previous belief ![]() and measurement zk are required. The state transition variance

and measurement zk are required. The state transition variance ![]() , input action variance

, input action variance ![]() , and measurement variance

, and measurement variance ![]() also need to be given.

also need to be given.

6.5.1 Kalman Filter in Matrix Form

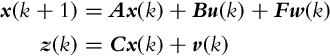

Multiple input, multiple state, and multiple output systems can be represented in a matrix form for improved readability. A general linear system can be expressed in a state-space form as

where x is the state vector, u is the input (action) vector, and z is the output (measurement); A is the state matrix, B is the input matrix, F is the input noise matrix, C is the output matrix; w(k) is the process noise and v is the output (measurement) noise. In the case the noise w is added to the system input u, the following relation holds: F = B. The process noise w(k) and measurement noise v(k) are assumed to be independent (uncorrelated) white noises with zero mean value and covariance matrices ![]() and

and ![]() .

.

The probability distribution of the states x that are disturbed by a white Gaussian noise can be written in a matrix form as follows:

where P is the state-error covariance matrix.

The Kalman filter is an approach for filtering and estimation of linear systems with continuous state space, which are disturbed with normal noise. The noise distribution is represented with a Gaussian function (Gaussian noise). The input and measurement noises influence the internal system states that we would like to estimate. In the case that the model of the system is linear, the Gaussian noise propagated through the model (e.g., from inputs to the states) is also a Gaussian noise. The system must therefore be linear, since this requirement ensures Gaussian distribution of the noise on the states, an assumption used in the derivation of the Kalman filter. The Kalman filter state estimate converges to the true value only in the case of linear systems that are disturbed with Gaussian noise.

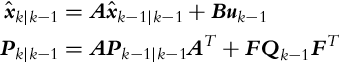

The Kalman filter for a linear system (6.34) has a prediction step:

and a correction step:

In the prediction part of the algorithm the a priori estimate ![]() is determined, which is based on the previous estimate

is determined, which is based on the previous estimate ![]() (obtained from measurements up to the time moment k − 1) and input u(k − 1). In the correction part of the Kalman filter the posteriori estimate

(obtained from measurements up to the time moment k − 1) and input u(k − 1). In the correction part of the Kalman filter the posteriori estimate ![]() is calculated, which is based on the measurements up to time step k. The state correction is made in a way that the difference between the true and estimated measurement is calculated (

is calculated, which is based on the measurements up to time step k. The state correction is made in a way that the difference between the true and estimated measurement is calculated (![]() ); this difference is also known as innovation or measurement residual. The state correction is calculated as a product of Kalman gain Kk and innovation. The prediction part can be evaluated in advance, in the time while we are waiting for the new measurement in the time step k. Notice the similarity of the matrix notation in Eqs. (6.35), (6.36) with the notation used in Algorithm 4.

); this difference is also known as innovation or measurement residual. The state correction is calculated as a product of Kalman gain Kk and innovation. The prediction part can be evaluated in advance, in the time while we are waiting for the new measurement in the time step k. Notice the similarity of the matrix notation in Eqs. (6.35), (6.36) with the notation used in Algorithm 4.

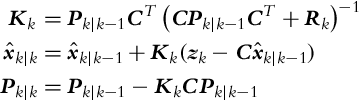

Let us derive the equation for the calculation of the state error covariance matrix in the prediction part of the Kalman filter:

where in line six of the derivation we took into account that the process noise wk in time step k is independent of the state estimate error in the previous time step (![]() ).

).

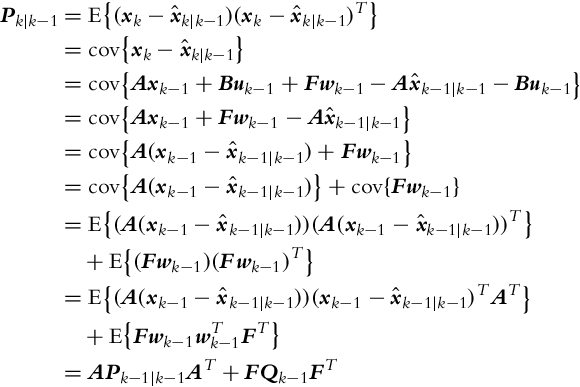

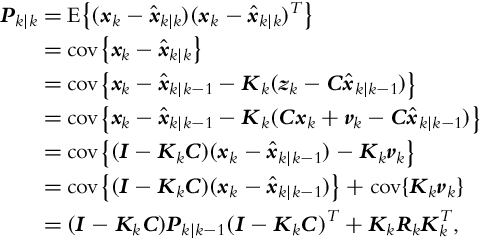

Let us also derive the equation for computation of the state error covariance matrix in the correction part of the Kalman filter:

where in line six of the derivation we took into account that the measurement noise vk is uncorrelated with the other terms. The obtained relation for calculation of the covariance matrix Pk|k is general and can be used for arbitrary gain Kk. However, the expression for Pk|k in Eq. (6.36) is valid only for optimum gain (Kalman gain) that minimizes the mean squared correction error ![]() ; this is equivalent to the minimization of the sum of all the diagonal elements in the correction covariance matrix Pk|k.

; this is equivalent to the minimization of the sum of all the diagonal elements in the correction covariance matrix Pk|k.

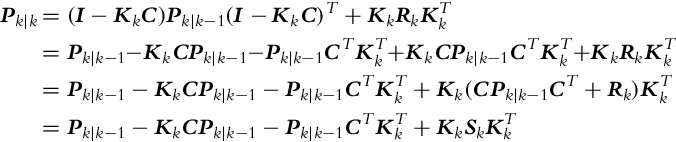

The general equation for Pk|k can be extended and the terms rearranged:

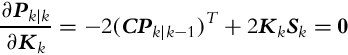

where Sk = CPk|k−1CT +Rk represents the innovation covariance matrix (![]() ). The sum of the diagonal terms of Pk|k is minimal when the derivative of Pk|k with respect to Kk is zero:

). The sum of the diagonal terms of Pk|k is minimal when the derivative of Pk|k with respect to Kk is zero:

which leads to the optimum gain in Eq. (6.36):

The correction covariance matrix at optimum gain can be derived if the optimum gain is postmultiplied with ![]() and inserted into the equation for Pk|k:

and inserted into the equation for Pk|k: