CHAPTER 9

Applying the Personalization and Embodiment Principles

Use Conversational Style, Polite Wording, Human Voice, and Virtual Coaches

CHAPTER SUMMARY

Some e-learning lessons rely on a formal style of writing to present information, direct wording for feedback and advice, and machine-synthesized voices to deliver the words. In this chapter we summarize the empirical evidence that supports the personalization principle: people learn better when e-learning environments use a conversational style of writing or speaking (including first- and second-person language), polite wording for feedback and advice, and a friendly human voice. We also explore preliminary evidence for how to use on-screen pedagogical agents, focusing on the role of human-like embodiment. In particular, the embodiment principle is that people learn better from online agents that use human-like gesture and movement.

Since the previous edition of this book, new evidence has emerged concerning the role of politeness in on-screen agents’ feedback and hints and the role of human-like gestures by the on-screen agent. Another important advance has been the establishment of boundary conditions which specify when the personalization principle is most likely to be effective–such as the finding that in some cases personalization works best for less experienced learners and when the amount of personalization is modest enough to not detract from the lesson.

The personalization and embodiment principles are particularly important for the design of pedagogical agents–on-screen characters who help guide the learning processes during an instructional episode. While research on agents is somewhat new, we present evidence–including new evidence since the previous edition–for the learning gains achieved in the presence of an agent as well as for the most effective ways to design and use agents. The psychological purpose of conversational style and pedagogical agents is to induce the learner to engage with the computer as a social conversational partner.

Personalization Principle: Use Conversational Rather Than Formal Style, Polite Wording Rather Than Direct Wording, and Human Voice Rather Than Machine Voice

Does it help or hurt to change printed or spoken text from formal style to conversational style? Would the addition of a friendly on-screen coach distract from or promote learning? In this chapter, we explore research and theory that directly address these issues.

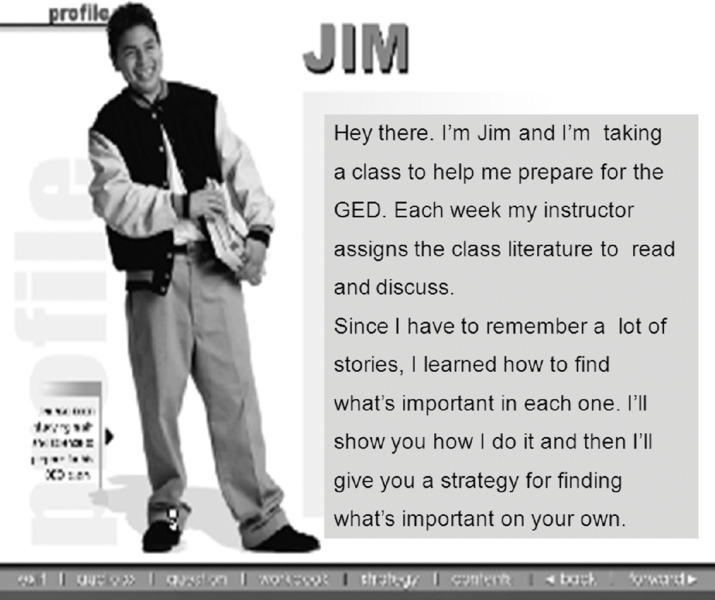

Consider the lesson introduction shown in Figure 9.1. As you can see, an on-screen agent uses an informal conversational style to introduce the lesson. This approach resembles human-to-human conversation. Of course, learners know that the character is not really in a conversation with them, but they may be more likely to act as if the character is a conversational partner. Now, compare this with the introduction shown in Figure 9.2. Here the overall feeling is quite impersonal. The agent is gone and the tone is more formal. Based on cognitive theory and research evidence, we recommend that you create or select e-learning courses that include some spoken or printed text that is conversational rather than formal.

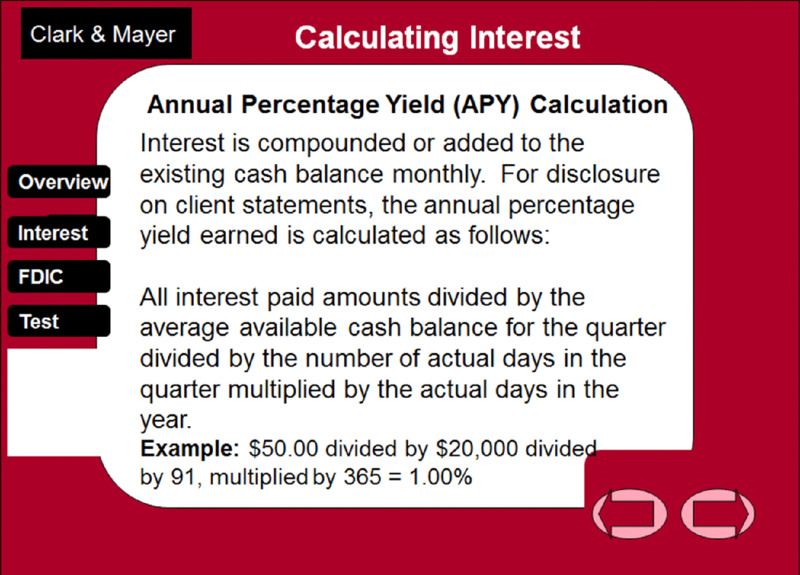

Let’s look at a couple of e-learning examples. The screen in Figure 9.3 summarizes the rules for calculating compound interest. Note that the on-screen text is quite formal. How could this concept be made more conversational? Figure 9.4 shows a revised version. Rather than passive voice, it uses second-person active voice and includes a comment about how this concept relates to the learner’s job. It rephrases and segments the calculation procedure into four directive steps. The overall result is a more user-friendly tone.

Figure 9.3 Passive Voice Leads to a Formal Tone in the Lesson.

Figure 9.4 Use of Second Person and Informal Language Leads to a Conversational Tone in the Lesson.

Psychological Reasons for the Personalization Principle

Let’s begin with a common sense view that we do not agree with, even though it may sound reasonable. The rationale for putting words in formal style is that conversational style can detract from the seriousness of the message. After all, learners know that the computer cannot speak to them. The goal of a training program is not to build a relationship but rather to convey important information. By emphasizing the personal aspects of the training—by using words like “you” and “I”—you convey a message that training is not serious. Accordingly, the guiding principle is to keep things simple by presenting the basic information.

This argument is based on an information delivery view of learning in which the instructor’s job is to present information and the learner’s job is to acquire the information. According to the information delivery view, the training program should deliver information as efficiently as possible. A formal style meets this criterion better than conversational style.

Why do we disagree with the call to keep things formal and the information delivery view of learning on which it is based? Although the information delivery view seems like common sense, it is inconsistent with how the human mind works. According to cognitive theories of learning, humans strive to make sense of presented material by applying appropriate cognitive processes. Thus, instruction should not only present information but also prime the appropriate cognitive processing in the learner. Research on discourse processing shows that people work harder to understand material when they feel they are in a conversation with a partner rather than simply receiving information (Beck, McKeown, Sandora, Kucan, & Worthy, 1996). Therefore, using conversational style in a multimedia presentation conveys to the learners the idea that they should work hard to understand what their conversational partner (in this case, the course narrator) is saying to them. In short, expressing information in conversational style can be a way to prime appropriate cognitive processing in the learner.

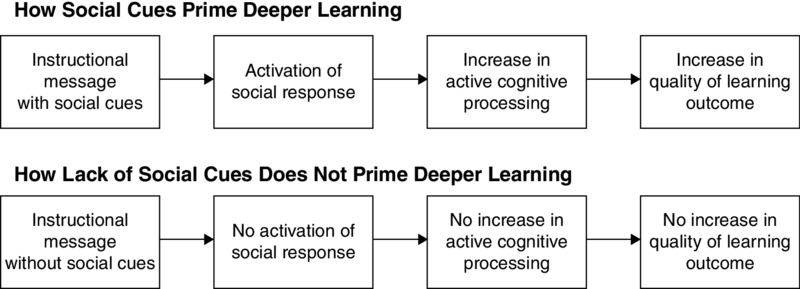

According to cognitive theories of multimedia communication (Mayer, 2009, 2014), Figure 9.5 shows what happens within the learner when a lesson contains conversational style and when it does not contain conversational style. On the top row, you can see that instruction containing social cues (such as conversational style) activates a sense of social presence in the learner (i.e., a feeling of being in a conversation with the author). The feeling of social presence, in turn, causes the learner to engage in deeper cognitive processing during learning (i.e., by working harder to understand what the author is saying), which results in a better learning outcome. In contrast, when an instructional lesson does not contain social cues, the learner does not feel engaged with the author and therefore will not work as hard to make sense of the material. In Chapter 1 we introduced the concept of psychological engagement during learning. Making your materials more personable is another instructional technique that promotes relevant psychological engagement. The challenge for instructional professionals is to avoid over-using conversational style to the point that it becomes distracting to the learner.

Figure 9.5 How the Presence or Absence of Social Cues Affects Learning.

Adapted from Mayer, 2014b

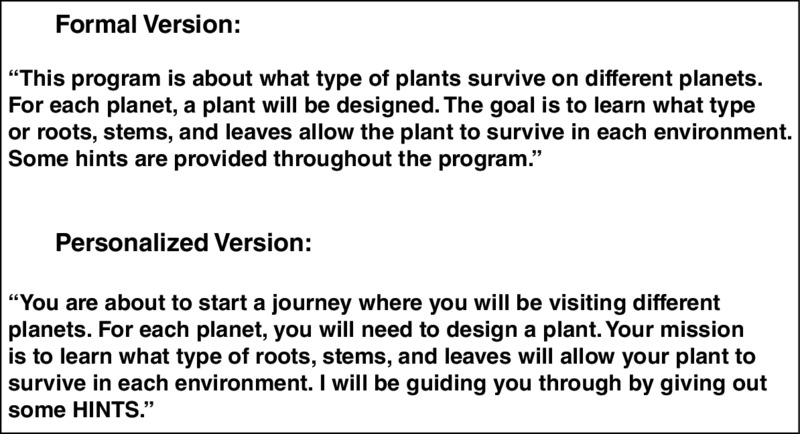

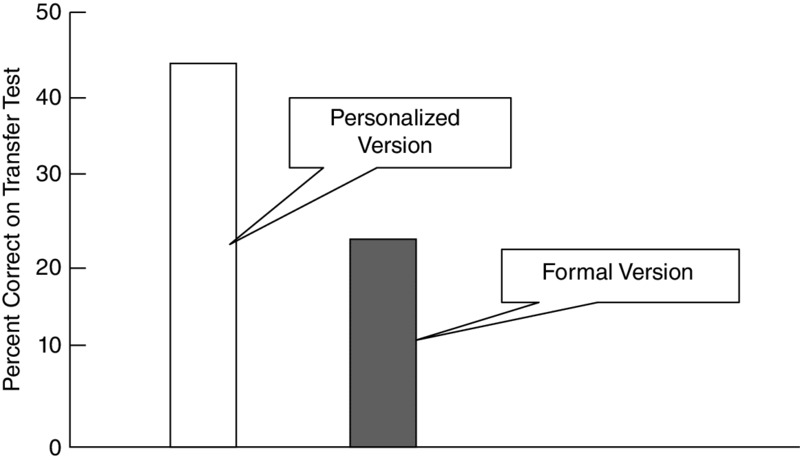

Promote Personalization Through Conversational Style

Although this technique as it applies to e-learning is just beginning to be studied, there is already preliminary evidence concerning the use of conversational style in e-learning lessons. In a set of five experimental studies involving a computer-based educational game on botany, Moreno and Mayer (2000b, 2004) compared versions in which the words were in formal style with versions in which the words were in conversational style. For example, Figure 9.6 gives the introductory script spoken in the computer-based botany game; the top portion shows the formal version and the bottom shows the personalized version. As you can see, both versions present the same basic information, but in the personalized version the computer is talking directly to the learner. In five out of five studies, students who learned with personalized text performed better on subsequent transfer tests than students who learned with formal text. Overall, participants in the personalized group produced between 20 and 46 percent more solutions to transfer problems than the formal group, with effect sizes all above 1. Figure 9.7 shows results from one study where improvement was 46 percent and the effect size was 1.55, which is considered to be large.

Figure 9.6 Formal Versus Informal Lesson Introductions Compared in Research Study.

From Moreno and Mayer, 2000b

Figure 9.7 Better Learning from Personalized Narration.

From Moreno and Mayer, 2000b
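The effect sizes quoted throughout this chapter can be read as standardized mean differences between two groups. The following is a minimal sketch, assuming the reported values are Cohen's d computed with a pooled standard deviation (this section does not spell out the formula). The function name `cohens_d` and the scores are hypothetical and invented only to show the arithmetic; they are not data from Moreno and Mayer.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(treatment, control):
    """Standardized mean difference using a pooled standard deviation."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)  # sample standard deviations
    pooled_sd = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


# Hypothetical transfer-test scores (illustration only, not study data).
personalized_scores = [3, 4, 5]
formal_scores = [1, 2, 3]
print(round(cohens_d(personalized_scores, formal_scores), 2))  # 2.0
```

By Cohen's conventional benchmarks, values near 0.2, 0.5, and 0.8 are usually treated as small, medium, and large, which is consistent with the chapter describing 1.55 as large.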

People can also learn better from a narrated animation on lightning formation when the speech is in conversational style rather than formal style (Moreno & Mayer, 2000b). For example, consider the last sentence in the lightning lesson: “It produces the bright light that people notice as a flash of lightning.” To personalize, we can simply change “people” to “you.” In addition to changes such as this one, Moreno and Mayer (2000b) added direct comments to the learner, such as, “Now that your cloud is charged up, let me tell you the rest of the story.” Students who received the personalized version of the lightning lesson performed substantially better on a transfer test than those who did not, yielding effect sizes greater than 1 across two different experiments.

These results also apply to learning from narrated animations involving how the human lungs work (Mayer, Fennell, Farmer, & Campbell, 2004). For example, consider the final sentence in the lungs lesson: “During exhaling, the diaphragm moves up creating less room for the lungs, air travels through the bronchial tubes and throat to the nose and mouth where it leaves the body.” Mayer, Fennell, Farmer, and Campbell (2004) personalized this sentence by changing “the” to “your” in 5 places, turning it into: “During exhaling, your diaphragm moves up creating less room for your lungs, air travels through your bronchial tubes and throat to your nose and mouth where it leaves your body.” Overall, they created a personalized script for the lungs lesson by changing “the” to “your” in 11 places. Across three experiments, this fairly minor change resulted in improvements on a transfer test yielding a median effect size of .79.
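To make the size of this edit concrete, the sketch below applies the "the" to "your" substitution to the final sentence of the lungs lesson quoted above. It is only an illustration of how small the change is; the helper name `personalize` is hypothetical, and nothing here reflects how the researchers actually prepared their scripts.

```python
import re

# Final sentence of the formal lungs lesson, as quoted in the text above.
FORMAL = (
    "During exhaling, the diaphragm moves up creating less room for the lungs, "
    "air travels through the bronchial tubes and throat to the nose and mouth "
    "where it leaves the body."
)


def personalize(text):
    """Replace each standalone 'the' with 'your'.

    Returns a tuple: (rewritten text, number of replacements made).
    """
    return re.subn(r"\bthe\b", "your", text)


personalized, changes = personalize(FORMAL)
print(changes)  # 5
print(personalized)
```

A blanket substitution happens to work for this sentence because every "the" refers to part of the learner's own body. In a real script, each change still needs an editor's judgment.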

Using different materials, Kartal (2010) gave students multimedia lessons on stellar evolution and death that included illustrations and animation along with printed and spoken words. The words were either in formal style (e.g., “The white dwarf cools down slowly in time”) or enhanced with additional personalized comments (e.g., “The white dwarf cools down slowly in time. Now we know what will happen to our smallest star in the end.”). On a subsequent problem-solving test students performed better if they had received personalized rather than formal wording, with a medium-to-large effect size of .71.

Overall, there is evidence that personalization can result in improvements in student learning. However, there may be some important boundary conditions, so these results should not be taken to mean that personalization is always a useful idea. There are cases in which personalization can be overdone. For example, consider what happens when you add too much personal material, such as, “Wow, hi dude, I’m here to teach you all about _______, so hang on to your hat and here we go!” The result can be that the advantages of personalization are offset by the disadvantages of distracting the learner and setting an inappropriate tone for learning. Thus, in applying the personalization principle it is always useful to consider the audience and the cognitive consequences of your script—you want to write with sufficient informality so that the learners feel they are interacting with a conversational partner but not so informally that the learner is distracted or the material is undermined. In fact, implementing the personalization principle should create only a subtle change in the lesson; a lot can be accomplished by using a few first- and second-person pronouns or a friendly comment.

Promote Personalization Through Polite Speech

A related implication of the personalization principle is that on-screen agents should be polite. For example, consider an instructional game in which an on-screen agent gives you feedback. A direct way to put the feedback is for the agent to say, “Click the ENTER key,” and a more polite wording is, “You may want to click the ENTER key” or “Do you want to click on the ENTER key?” or “Let’s click the ENTER key.” A direct statement is, “Now use the quadratic formula to solve this equation,” and a more polite version is “What about using the quadratic formula to solve this equation?” or “You could use the quadratic formula to solve this equation,” or “We should use the quadratic formula to solve this equation.” According to Brown and Levinson’s (1987) politeness theory, these alternative wordings help to save face–by allowing the learner to have some freedom of action or by allowing the learner to work cooperatively with the agent. Mayer, Johnson, Shaw, and Sandhu (2006) found that students rated the reworded statements as more polite than the direct statements, indicating that people are sensitive to the politeness tone of feedback statements. Students who had less experience in working with computers were most sensitive to the politeness tone of the on-screen agent’s feedback statements, so they were more offended by direct statements (such as “Click the ENTER key”) and more impressed with polite statements (such as “Do you want to click the ENTER key?”).

Do polite on-screen agents foster deeper learning than direct agents? A study by Wang, Johnson, Mayer, Rizzo, Shaw, and Collins (2008) indicates that the answer is yes–especially for less experienced learners. Students interacted with an on-screen agent while learning about industrial engineering by playing an educational game called Virtual Factory. On a subsequent problem-solving transfer test, students who had learned with a polite agent performed better than those who learned with a direct agent, yielding an effect size of .73. Importantly, the effect was strong and significant for students without a background in engineering but not for students with a background in engineering.

In a related set of experiments by McLaren, DeLeeuw, and Mayer (2011), students learned to solve chemistry stoichiometry problems with a web-based intelligent tutor that provided hints and feedback using either polite language (e.g., “Shall we calculate the result now?”) or direct language (e.g., “The tutor wants you to calculate the result now.”). The results showed a pattern in which students with low knowledge of chemistry performed better on a subsequent problem-solving test if they had learned with a polite rather than a direct tutor, whereas high knowledge learners showed the reverse trend.

Overall, there is evidence that student learning is not only influenced by what on-screen agents say but also by how they say it. An important boundary condition is that the positive effects of politeness are strongest for learners who do not have much knowledge of the domain. These results have important implications for virtual classroom facilitators. In many virtual classrooms, only the instructor’s voice is transmitted. The virtual classroom instructor can apply these guidelines by using polite conversational language as one tool to maximize the benefits of social presence on learning.

Promote Personalization Through Voice Quality

Research summarized by Reeves and Nass (1996) shows that, under the right circumstances, people “treat computers like real people.” Part of treating computers like real people is to try harder to understand their communications, especially when computers use voice to present words (Nass & Brave, 2005). Consistent with this view, Mayer, Sobko, and Mautone (2003) found that people learned better from a narrated animation on lightning formation when the speaker’s voice was human rather than machine-simulated, with an effect size of .79. In another study, Atkinson, Mayer, and Merrill (2005) presented online mathematics lessons in which an on-screen agent named Peedy the parakeet explained the steps in solving various problems. Across two experiments, students performed better on a subsequent transfer test when Peedy spoke in a human voice rather than a machine voice, yielding effect sizes of .69 and .78. Mayer and DaPra (2012) found that college students who learned about solar cells from an online narrated slideshow with a human-like on-screen agent performed better on transfer tests when the narration was in a human voice rather than a machine voice, yielding an effect size of .63. We can refer to these findings as the voice principle: People learn better from narration with a human voice than a machine voice. An important boundary condition is that the advantage of a human voice is eliminated when the on-screen agent does not use human-like gestures, thus reminding the learner that the agent is not human (Mayer & DaPra, 2012). Nass and Brave (2005) have provided additional research showing that characteristics of the speaker’s voice can have a strong impact on how people respond to computer-based communications.

Embodiment Principle: Use Effective On-Screen Coaches to Promote Learning

In the previous section, we provided evidence for writing with first- and second-person language, speaking with a friendly human voice, and using polite wording to establish a conversational tone in your training. In some of the research described in the previous section, the instructor was an on-screen character who interacted with the learner. A related new area of research focuses specifically on the role of on-screen coaches, called pedagogical agents, on learning.

In this section, we extend the personalization principle to on-screen agents by considering the degree to which on-screen agents need to be human-like to promote learning. In particular, we examine the idea that people learn better when the on-screen agent behaves in a human-like way by using human-like gesture, body movement, facial expression, and eye-gaze, which has been called the embodiment principle (Fiorella & Mayer, in press; Mayer, 2014).

What Are Pedagogical Agents?

Pedagogical agents are on-screen characters who help guide the learning process during an e-learning episode. Personalized speech and human-like gesture are important components in animated pedagogical agents developed as on-screen tutors in educational programs (Cassell, Sullivan, Prevost, & Churchill, 2000; Moreno, 2005; Moreno, Mayer, Spires, & Lester, 2001; Veletsianos & Russell, 2014). Agents can be represented visually as cartoon-like characters, as talking-head video, or as virtual reality avatars; they can be represented verbally through machine-simulated voice, human recorded voice, or printed text. Agents can be representations of real people using video and human voice or artificial characters using animation and computer-generated voice. Our major interest in agents concerns their ability to employ sound instructional techniques that foster learning.

On-screen agents are appearing frequently in e-learning. For example, Figure 9.8 introduces Jim in a lesson on reading comprehension. Throughout the lesson, Jim demonstrates techniques he uses to understand stories followed by exercises that ask learners to apply Jim’s guidelines to comprehension of stories.

Figure 9.8 On-Screen Coach Used to Give Reading Comprehension Demonstrations.

With permission from Plato Learning Systems

Figure 9.9 shows a screen from a guided discovery e-learning game called Design-A-Plant in which the learner travels to a planet with certain environmental features (such as low rainfall and heavy winds) and must choose the roots, stem, and leaves of a plant that could survive there. An animated pedagogical agent named Herman-the-Bug (in lower left corner of Figure 9.9) poses the problems, offers feedback, and generally guides the learner through the game. As you can see in the figure, Herman is a friendly little guy and research shows that most learners report liking him (Moreno & Mayer, 2000b; Moreno, Mayer, Spires, & Lester, 2001).

Figure 9.9 Herman-the-Bug Used in Design-A-Plant Instructional Game.

From Moreno, Mayer, Spires, and Lester, 2001

In another program an animated pedagogical agent is used to teach students how to solve proportionality word problems (Atkinson, 2002; Atkinson, Mayer, & Merrill, 2005). In this program, an animated pedagogical bird agent named Peedy provides a step-by-step explanation of how to solve each problem. Although Peedy doesn’t move much, he can point to relevant parts of the solution and make some simple gestures as he guides the students. Peedy and Herman are among a small collection of agents who have been examined in controlled research studies.

Computer scientists have done a fine job of producing life-like agents who interact well with humans (Cassell, Sullivan, Prevost, & Churchill, 2000). For example, some classic on-screen agents include Steve, who shows students how to operate and maintain the gas turbine engines aboard naval ships (Rickel & Johnson, 2000); Cosmo, who guides students through the architecture and operation of the Internet (Lester, Towns, Callaway, Voerman, & Fitzgerald, 2000); and Rea, who interacts with potential home buyers, takes them on virtual tours of listed properties, and tries to sell them a house (Cassell, Sullivan, Prevost, & Churchill, 2000).

In spite of the continuing advances in the development of on-screen agents, research on their effectiveness is in early stages (Atkinson, 2002; Moreno, 2005; Moreno & Mayer, 2000b; Moreno, Mayer, Spires, & Lester, 2001; Veletsianos & Russell, 2014; Wouters, Paas, & van Merriënboer, 2008). Let’s look at some important questions about agents in e-learning courses and see how the preliminary research answers them.

Do Agents Improve Student Learning?

An important primary question is whether adding on-screen agents can have any positive effects on learning. Even if computer scientists can develop extremely lifelike agents that are entertaining, is it worth the time and expense to incorporate them into e-learning courses? In order to answer this question, researchers began with an agent-based educational game, called Design-A-Plant, described previously (Moreno, Mayer, Spires, & Lester, 2001). Some students learned by interacting with an on-screen agent named Herman-the-Bug (agent group) shown in Figure 9.9, whereas other students learned by reading the identical words and viewing the identical graphics presented on the computer screen without the Herman agent (no-agent group). Across two separate experiments, the agent group generated 24 to 48 percent more solutions in transfer tests than did the no-agent group.

In a related study (Atkinson, 2002), students learned to solve proportionality word problems by seeing worked-out examples presented via a computer screen. For some students, an on-screen agent spoke to students, giving a step-by-step explanation for the solution (agent group). For other students, the same explanation was printed as on-screen text without any image or voice of an agent (no-agent group). On a subsequent transfer test involving different word problems, the agent group generated 30 percent more correct solutions than the no-agent group. Although these results are preliminary, they suggest that it might be worthwhile to consider the role of animated pedagogical agents as aids to learning.

Do Agents Need to Look Real?

As you may have noticed in the previously described research, there were many differences between the agent and no-agent groups so it is reasonable to ask which of those differences has an effect on student learning. In short, we want to know what makes an effective agent. Let’s begin by asking about the appearance of the agent, such as whether people learn better from human-looking agents or cartoon-like agents. To help answer this question, students learned about botany principles by playing the Design-A-Plant game with one of two agents—a cartoon-like animated character named Herman-the-Bug or a talking-head video of a young male who said exactly the same words as Herman-the-Bug (Moreno, Mayer, Spires, & Lester, 2001). Overall, the groups did not differ much in their test performance, suggesting that a real character did not work any better than a cartoon character. In addition, students learned just as well when the image of the character was present or absent as long as the students could hear the agent’s voice. These preliminary results (including similar findings by Craig, Gholson, & Driscoll, 2002) suggest that a lifelike image is not always an essential component in an effective agent.

Although on-screen agents may not have to look real, there is some evidence that they should behave in a human-like way in terms of their gestures, movements, and eye-gaze. For example, Lusk and Atkinson (2007) found that students learned better from an on-screen agent who demonstrated how to solve mathematics problems when the on-screen agent was fully embodied (i.e., used human-like locomotion, gestures, and eye-gazes) rather than minimally embodied (i.e., was physically present but did not move, gesture, or gaze at the learner). In an eye-tracking study, Louwerse, Graesser, McNamara, and Lu (2009) found that learners looked at gesturing on-screen agents as they spoke, indicating that the learners were treating the on-screen agents as conversational partners.

Overall, the research shows that on-screen pedagogical agents do not need a realistic human-like appearance but do need realistic human-like behavior.

Do Agents Need to Sound Real?

Even if the agent may not look real, there is compelling evidence that the agent has to sound conversational. First, across four comparisons (Moreno & Mayer, 2004; Moreno, Mayer, Spires, & Lester, 2001), students learned better in the Design-A-Plant game if Herman’s words were spoken rather than presented as on-screen text. This finding is an indication that the modality effect (as described in Chapter 6) applies to on-screen agents. Second, across three comparisons (Moreno & Mayer, 2000b), as reported in the previous section, students learned better in the Design-A-Plant game if Herman’s words were spoken in a conversational style rather than a formal style. This finding is an indication that the personalization effect applies to on-screen agents. Finally, as reported in the previous section, Atkinson and colleagues (Atkinson, 2002; Atkinson, Mayer, & Merrill, 2005) found some preliminary evidence that students learn to solve word problems better from an on-screen agent when the words are spoken in a human voice rather than a machine-simulated voice. Overall, these preliminary results show that the agent’s voice is an important determinant of instructional effectiveness.

Should Agents Use Human-Like Gestures?

According to the cognitive theory of multimedia learning, on-screen pedagogical agents promote learning by serving as social cues that prime deeper cognitive processing in learners. In order to make on-screen agents feel more like social partners, they do not need to look exactly like humans but they need to act like humans—using human-like gestures, movements, facial expression, and eye-gaze. We call this the embodiment principle, and you can see that it is an extension of the personalization principle to the characteristics of on-screen agents.

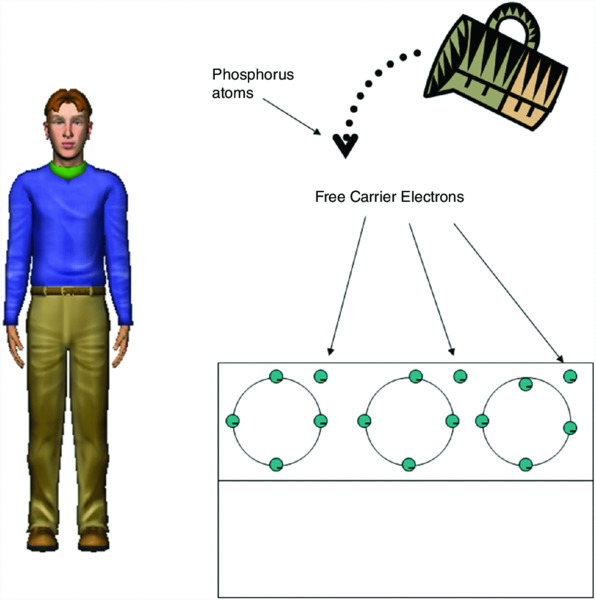

In order to test the embodiment principle, Mayer and DaPra (2012) asked college students to learn about how solar cells work by watching a narrated online slideshow. In one version of the slideshow (low-embodiment), an on-screen pedagogical agent stood motionless to the left of the slide, as shown in Figure 9.10, with only his lips moving in sync with the speech. In another version (high-embodiment), the on-screen agent used human-like gestures, body movement, facial expression, and eye-gaze as he spoke. Across three experiments using human voice, students receiving the high-embodiment lesson performed better on a transfer test than those receiving the low-embodiment lesson, yielding effect sizes of .92, 1.10, and .58, respectively. Thus, even when the on-screen agent is a cartoon-like character, human-like behavior fosters better learning.

Figure 9.10 Agent Stands to Left in Slideshow on Solar Cells.

From Mayer and DaPra, 2012

Interestingly, Mayer and DaPra found that having no image of the agent on the screen was better for learning about solar cells than having a motionless one. Presumably, seeing an on-screen agent that does not act like a human is distracting, and possibly unsettling, to the learner. In a recent meta-analysis based on 14 experiments, Mayer (2014b) reported that adding a static image of an on-screen agent—such as a headshot or a motionless full-body agent—does not have a substantial effect on learning.

Implications for e-Learning

Although it is premature to make firm recommendations concerning on-screen pedagogical agents, we are able to offer some suggestions based on the current state of the field. We suggest that you consider using on-screen agents, and that the agent’s words be presented as speech rather than text, in conversational style rather than formal style, with polite wording rather than direct wording, and with human-like rather than machine-like articulation. Based on preliminary work on embodiment of on-screen agents, we also suggest that you consider using on-screen agents that display human-like gestures and facial expressions.

We further suggest that you use agents to provide instruction rather than for entertainment purposes. For example, an agent can explain a step in a demonstration or provide feedback to a learner’s response to a lesson question. In contrast, the cartoon puppy in Figure 9.11 is not an agent since he is never used for any instructional purpose. Likewise, there is a common but unproductive tendency to insert theme characters from popular games and movies solely for entertainment value, with no instructional role. These embellishments are likely to depress learning, as discussed in Chapter 8.

Figure 9.11 The Puppy Character Plays No Instructional Role So Is Not an Agent.

Based on the cognitive theory and research we have highlighted in this chapter, we can propose the personalization and embodiment principles. First, concerning personalization, present words in conversational style rather than formal style. In creating the script for a narration or the text for an on-screen passage, you should use some first- and second-person constructions (that is, involving “I,” “we,” “me,” “my,” “you,” and/or “your”) to create the feeling of conversation between the course and the learner. However, you should be careful not to overdo the personalization style because it is important not to distract the learner. Use polite wording rather than direct wording, especially for beginners. Use a natural human voice rather than a machine voice. Second, concerning embodiment, use on-screen agents to provide coaching in the form of hints, worked examples, demonstrations, and explanations, but be sure the agents use human-like gesture and movements.

What We Don’t Know About Personalization and Embodiment

Although personalization and embodiment can be effective in some situations, additional research is needed to determine when they become counterproductive by being distracting or condescending. Further work also is needed to determine the conditions under which on-screen agents are most effective, including the role of gesturing, eye fixations, and locomotion.

In addition, we do not know whether specific types of learners benefit more than others from the personalization and embodiment principles. For example, would there be any differences between novice and experienced learners, learners who are committed to the content versus learners who are taking required content, or male versus female learners? When it comes to the gender of the narrator, does the content make a difference? For example, in mathematics, which is considered a male-dominant domain, a female narrator was more effective than a male narrator (Nass & Brave, 2005). Finally, research is needed to determine the long-term effects of personalization and embodiment, e.g., does the effect of conversational style (or politeness) diminish as students spend more time with the course?

Chapter Reflection

- If you are designing an e-lesson for new sales associates or for experienced database technicians, would you use an agent? Why or why not?

- Have you experienced or would you anticipate objections in your organization to applying personalization in wording and/or use of agents? What kinds of objections might be raised and how would you respond?

- Can you think of situations in which attempts to apply the personalization principle would violate the coherence principle?

COMING NEXT

The next chapter on segmenting and pretraining completes the basic set of multimedia principles in e-learning. These principles apply to training produced to inform as well as to increase performance; in other words they apply to all forms of e-learning. After reading the next chapter, you will have topped off your arsenal of basic multimedia instructional design principles described in Chapters 4 through 10.

Suggested Readings

- Mayer, R.E. (2014). Principles based on social cues: Personalization, voice, and image principles. In R.E. Mayer (Ed.), Cambridge handbook of multimedia learning (2nd ed., pp. 345–368). New York: Cambridge University Press. Summarizes how social cues such as conversational style, human voice, polite wording, and human-like gesture can promote deeper learning.

- Mayer, R.E., & DaPra, C.S. (2012). An embodiment effect in computer-based learning with animated pedagogical agents. Journal of Experimental Psychology: Applied, 18, 239–252. Provides a recent example of research on the embodiment principle for on-screen agents.

- Moreno, R., & Mayer, R.E. (2004). Personalized messages that promote science learning in virtual environments. Journal of Educational Psychology, 96, 165–173. Provides a useful example of research on the personalization principle in online learning.

CHAPTER OUTLINE

- Segmenting Principle: Break a Continuous Lesson into Bite-Size Segments

- Psychological Reasons for the Segmenting Principle

- Evidence for Breaking a Continuous Lesson into Bite-Size Segments

- Pretraining Principle: Ensure That Learners Know the Names and Characteristics of Key Concepts

- Psychological Reasons for the Pretraining Principle

- Evidence for Providing Pretraining in Key Concepts

- What We Don’t Know About Segmenting and Pretraining