CHAPTER 13

Does Practice Make Perfect?

CHAPTER SUMMARY

In this chapter we offer five important evidence-based principles to guide how you develop, distribute, and follow up on practice exercises in multimedia learning environments. There is considerable evidence that well-designed practice interactions promote learning, especially in asynchronous e-learning. To maximize the benefits of these practice interactions, we present evidence and examples for the following principles:

- Include sufficient practice to achieve the learning objective.

- Require learners to respond in job-realistic ways.

- Incorporate effective feedback to learner responses.

- Distribute practice among the learning events rather than aggregating it in one location.

- Apply the multimedia principles we reviewed in Chapters 4 through 10.

Since our previous edition, research on feedback has yielded new recommendations. These include providing explanatory rather than corrective feedback, giving explanations that address both the task and the process for accomplishing it, and minimizing ego-focused feedback such as praise or normative scores.

What Is Practice in e-Learning?

Effective e-learning engages learners with the instructional content in ways that foster the selection, organization, integration, and transfer of new knowledge. First, the learner’s attention must be drawn to the important information in the training. Then the learner must integrate the instructional words and visuals with each other and with prior knowledge. Finally, the new knowledge and skills built in the learner’s long-term memory must be transferred to the job after the training event. Effective practice exercises should support all of these psychological processes. For example, recent research that used eye tracking to monitor attention found that learners who had answered practice questions focused more on the questioned information during restudy than did learners who had not answered practice questions (Dirkx, Thoma, Kester, & Kirschner, 2015). In this chapter we review research and guidelines for optimizing learning from online practice.

In Chapter 11 we distinguished among four quadrants of engagement, shown in Figure 13.2. This chapter focuses on Quadrant 4: overt physical activity in the form of online interactions that promote relevant psychological activity. Quadrant 4 activities may include interactions among students, between students and instructors, and between students and content. The visible output from Quadrant 4 interactions allows for feedback, which, when effectively designed, is one of the most powerful instructional methods you can use. Therefore, we recommend that you incorporate healthy amounts of Quadrant 4 activities in your lessons. However, if the practice behavior falls into Quadrant 1 (high physical activity but inappropriate psychological activity), the result is engagement that does not support the processing associated with the learning goal. The aim, therefore, is to design practice that promotes both behavioral activity and appropriate psychological activity. In addition, effective feedback on behavioral responses is essential to gain maximum value from practice exercises.

Figure 13.2 Practice Exercises Should Fall into Quadrant 4 of the Engagement Matrix.

Adapted from Stull and Mayer, 2007.

For example, consider the questions shown in Figures 13.3 and 13.4. Both use a multiple-choice format. However, to respond to the question in Figure 13.3, the learner needs only to recognize the facts provided in the lesson. These types of lower-level questions may have occasional utility but should not predominate lesson engagement. In contrast, to respond to the question in Figure 13.4, learners need to apply their understanding of the drug features to physician profiles. This question requires not only behavioral activity but also job-relevant psychological engagement. This question stimulates a deeper level of processing than the question shown in Figure 13.3 and falls into Quadrant 4 of the engagement matrix.

Figure 13.3 This Multiple-Choice Question Requires the Learner to Recognize Correct Drug Facts.

Figure 13.4 This Multiple-Select Question Requires the Learner to Match Drug Features to the Appropriate Physician Profile.

Formats of e-Learning Practice

e-Learning practice interactions may use formats similar to those used in the classroom, such as selecting the correct answer in a multiple-choice list, checking a box to indicate whether a statement is true or false, or typing in short answers. Other interactions use formats unique to computers, such as drag and drop and touch screen. Interactions may also require an auditory response in both synchronous and asynchronous forms of e-learning. For example, in one asynchronous second-language lesson, learners respond to questions with simple spoken phrases. Interactions may be designed as solo activities, but they can also be collaborative, as with discussion boards or breakout rooms in virtual classes. We discuss collaborative learning in greater detail in Chapter 14.

Some e-learning is termed high engagement because the environment is highly interactive. Problem-based learning, simulations, and games are three examples of high-engagement e-learning. In lower-engagement lessons, tutorials may present information interspersed with questions. As we discussed in Chapter 11, high-engagement environments can impose irrelevant mental load on learners and are not always effective. Likewise, tutorials that include many recall questions are not effective for higher-level learning outcomes. In this chapter we review the evidence behind basic guidelines for practice that helps learners build relevant work-related skills.

Is Practice a Good Investment?

We’ve all heard the expression that “practice makes perfect,” but what evidence do we have that practice leads to skill acquisition? We have two streams of evidence on the benefits of practice: (1) meta-analysis of experiments that compare learning from different types of online practice and (2) studies of top performers in music, sports, and games such as chess and Scrabble.

Meta-Analysis of Multimedia Interactivity

Bernard, Abrami, Borokhovski, Wade, Tamim, Surkes, and Bethel (2009) conducted a meta-analysis of seventy-four experiments that compared different types of practice interactions in synchronous and asynchronous multimedia courses. They found that courses rated high in interaction strength, based on the number and/or quality of their practice interactions, produced better learning than courses rated low, especially when the courses were asynchronous. The research team concludes that “when students are given stronger versus weaker course design features to help them engage in the content, it makes a substantial difference in terms of achievement” (p. 1265).

Practice Among Elite Performers

Sloboda, Davidson, Howe, and Moore (1996) compared the practice schedules of higher and lower performing teenage music students of equal early musical ability and exposure to music lessons. All of the students began to study music around age six. However, the higher performers had devoted much more time to practice. By age twelve higher performers were practicing about two hours a day, compared to fifteen minutes a day for the lower performers. The researchers concluded that “there was a very strong relationship between musical achievement and the amount of formal practice undertaken” (Sloboda, Davidson, Howe, & Moore, 1996, p. 287). In fact, musicians who had reached an elite status at a music conservatory had devoted over 10,000 hours to practice by the age of twenty!

However, time devoted to practice activity does not tell the whole story. Most likely you know individuals of average proficiency in an avocation such as golf or music who spend a considerable amount of time practicing, with little improvement. Based on studies of expert performers in music, sports, typing, and games such as Scrabble, Ericsson (2006) concludes that practice is a necessary but not sufficient condition to reach high levels of competence. What factors differentiate practice that leads to growth of expertise from practice that does not?

Ericsson (2006) refers to practice that builds expertise as deliberate practice. He describes deliberate practice as tasks presented to performers that “are initially outside their current realm of reliable performance, yet can be mastered within hours of practice by concentrating on critical aspects and by gradually refining performance through repetitions after feedback” (p. 692). Deliberate practice involves five basic elements: (1) effortful exertion to improve performance, (2) intrinsic motivation to engage in the task, (3) carefully tailored practice tasks that focus on areas of weakness, (4) feedback that provides knowledge of results, and (5) continued repetition over a number of years (Kellogg & Whiteford, 2009).

In summary, both research on expert performance and experimental comparisons of multimedia courses with different types and levels of interactivity support providing effective practice opportunities. What guidelines can you apply to create “high-strength” interactive multimedia learning environments? In our third edition, we reviewed evidence supporting the five principles that follow. Here we extend and update these guidelines with recent research.

Principle 1: Add Sufficient Practice Interactions to e-Learning to Achieve the Objective

Practice exercises are expensive. First, they take time to design and to program. Even more costly will be the time learners invest in completing the practice. Does practice lead to more learning? How much practice is necessary? In this section we describe evidence that will help you determine the optimal amount of practice to include in your e-learning environments.

The Benefits of Practice

Some e-learning courses in both synchronous and asynchronous formats include few or no opportunities for overt practice. In Chapter 1 we classified these types of courses as receptive, because they are based heavily on an information-acquisition metaphor of learning. Can learning occur without practice? How much practice is needed?

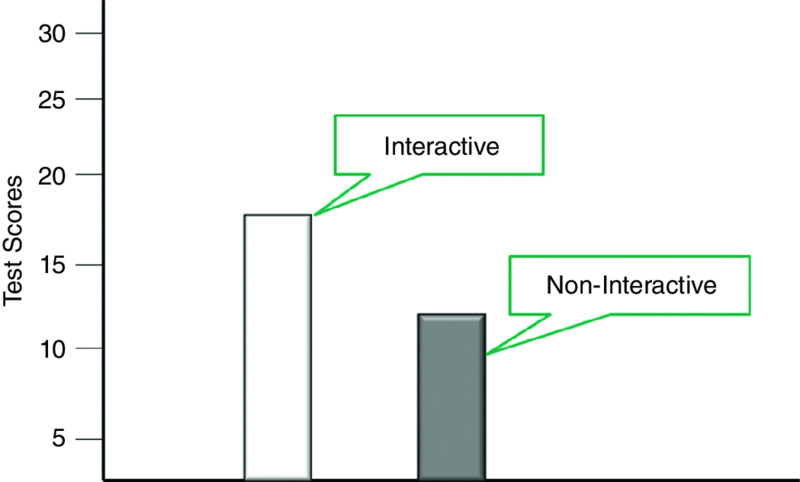

Moreno and Mayer (2005, 2007) examined learning from the Design-A-Plant game described in Chapter 9. In the game, participants choose from a set of roots, stems, and leaves to build the plant best suited to an imaginary environment. The object of the game is to teach the adaptive benefits of plant features for specific environments, such as heavy rainfall or sandy soil. The researchers compared learning from an interactive version, in which the learner selected the best plant parts to survive in a given environment, with the same lesson in which the on-screen agent selected the best parts. As you can see in Figure 13.5, learner interactivity improved learning, with an effect size of .63, which is considered high.

Figure 13.5 Better Learning from e-Learning with Practice Interactions.

Based on data from Experiment 2, Moreno and Mayer, 2005.

Practice Benefits Diminish Rapidly

Practice can improve performance indefinitely, although by diminishing amounts. Timed measurements of workers using a machine to roll cigars found that proficiency continued to improve after thousands of practice trials conducted over a four-year period (Crossman, 1959). Proficiency leveled off only when the operators’ speed reached the physical limits of the equipment. In plotting time against amount of practice for a variety of motor and intellectual tasks, researchers have observed a logarithmic relationship between amount of practice and time to complete tasks (Rosenbaum, Carlson, & Gilmore, 2001): the logarithm of the time to complete a task decreases linearly as the logarithm of the amount of practice increases. This relationship, illustrated in Figure 13.6, is called the power law of practice. As you can see, the greatest proficiency gains occur on early trials, yet even after thousands of practice sessions, small incremental improvements continue to accrue. Practice likely improves performance in early sessions as learners find better ways to complete the tasks, and in later sessions as automaticity increases efficiency.

Figure 13.6 The Power Law of Practice: Speed Increases with Practice But at a Diminishing Rate.
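As a rough illustration, the power law of practice is often written as T = aN^(-b), where T is the time to complete the task on practice trial N, a is the time on the first trial, and b is a learning-rate exponent. Taking logarithms gives log T = log a - b log N, a straight line with slope -b on log-log axes. The minimal Python sketch below assumes hypothetical values a = 100 and b = 0.4, chosen only for illustration and not fitted to Crossman’s or any other data, to show that each tenfold increase in practice still saves time, but less than the tenfold increase before it.

```python
import math

# Hypothetical constants for illustration only (not fitted to any data in this chapter):
# A is the time on the first trial; B is the learning-rate exponent.
A = 100.0
B = 0.4


def time_to_complete(trial: int, a: float = A, b: float = B) -> float:
    """Power law of practice: T(N) = a * N ** (-b)."""
    return a * trial ** (-b)


def log_log_slope(n1: int, n2: int) -> float:
    """Slope of log T against log N between trials n1 and n2 (equals -b under a power law)."""
    rise = math.log(time_to_complete(n2)) - math.log(time_to_complete(n1))
    run = math.log(n2) - math.log(n1)
    return rise / run


if __name__ == "__main__":
    trials = [1, 10, 100, 1000, 10000]
    times = [time_to_complete(n) for n in trials]
    for n, t in zip(trials, times):
        print(f"trial {n:>5}: {t:6.2f} time units")
    # Each tenfold increase in practice saves less absolute time than the one before.
    savings = [earlier - later for earlier, later in zip(times, times[1:])]
    print("time saved per tenfold increase:", [round(s, 2) for s in savings])
    print("log-log slope:", round(log_log_slope(1, 10000), 3))
```

Changing b changes how steep the curve is, but under any positive b the absolute savings shrink with each tenfold increase in practice, which is the diminishing-returns pattern shown in Figure 13.6.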

Elite performers in athletics, music, and games such as chess and Scrabble have devoted 10,000+ hours to deliberate practice. However, proficient performance in most jobs will not require elite levels of performance. You will need to consider the return on investment of your practice interactions. How much practice will you need to provide to ensure your learners reach an acceptable level of job proficiency? We turn to this question next.

Adjust the Amount of Practice Based on Task Criticality

Schnackenberg and colleagues compared learning from two versions of computer-based training, one offering more practice than the other (Schnackenberg, Sullivan, Leader, & Jones, 1998; Schnackenberg & Sullivan, 2000). Both versions included the same 174 information screens; the full-practice version included sixty-six practice exercises, and the lean-practice version included twenty-two. Participants were divided into high- and low-ability groups based on their grade point averages and randomly assigned to complete either the full or the lean practice version. Outcomes included scores on a fifty-two-question test and the average time to complete each version. Table 13.1 shows the results.

Table 13.1. Better Learning with More Practice.

| Ability Level | Test Score, 66 Practices | Test Score, 22 Practices | Time to Complete (minutes), 66 Practices | Time to Complete (minutes), 22 Practices |
|---|---|---|---|---|
| Low | 32.25 | 28.26 | 146 | 83 |
| High | 41.82 | 36.30 | 107 | 85 |

From Schnackenberg, Sullivan, Leader, and Jones, 1998.

As expected, higher-ability learners scored higher, and the full version took longer to complete. The full practice version resulted in higher average scores, with an effect size of .45, which is considered moderate. The full practice version resulted in increased learning for both higher- and lower-ability learners. The authors conclude: “When instructional designers are faced with uncertainty about the amount of practice to include in an instructional program, they should favor a greater amount of practice over a relatively small amount if higher student achievement is an important goal” (Schnackenberg, Sullivan, Leader, & Jones, 1998, p. 14).

Notice, however, that lower-ability learners required about 75 percent longer to complete the full-practice version than the lean-practice version, for a gain of about four points on the test. Does the learning improvement justify the additional time spent in practice? The answer, in this research as in your own training, depends on the consequences of errors in job performance.
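The trade-off can be checked directly against Table 13.1. This short Python sketch computes, for each ability group, the extra minutes the full-practice version took, the percentage increase in time, and the test-score gain. The points-per-extra-minute ratio is an illustrative measure we introduce here; the study did not report it.

```python
# Values from Table 13.1 (Schnackenberg, Sullivan, Leader, & Jones, 1998):
# (test score, minutes to complete) for each version and ability group.
RESULTS = {
    "low": {"full": (32.25, 146), "lean": (28.26, 83)},
    "high": {"full": (41.82, 107), "lean": (36.30, 85)},
}


def practice_tradeoff(ability: str) -> dict:
    """Compare the full (66-exercise) and lean (22-exercise) versions for one ability group."""
    full_score, full_minutes = RESULTS[ability]["full"]
    lean_score, lean_minutes = RESULTS[ability]["lean"]
    extra_minutes = full_minutes - lean_minutes
    point_gain = full_score - lean_score
    return {
        "extra_minutes": extra_minutes,
        "percent_longer": 100 * extra_minutes / lean_minutes,
        "point_gain": point_gain,
        # Illustrative ratio only; the study did not report this measure.
        "points_per_extra_minute": point_gain / extra_minutes,
    }


if __name__ == "__main__":
    for group in ("low", "high"):
        result = practice_tradeoff(group)
        print(
            f"{group}-ability: {result['percent_longer']:.0f}% longer, "
            f"+{result['point_gain']:.2f} points, "
            f"{result['points_per_extra_minute']:.3f} points per extra minute"
        )
```

For lower-ability learners, the full version added 63 minutes (roughly 76 percent) for a gain of 3.99 points; for higher-ability learners, it added 22 minutes (roughly 26 percent) for a gain of 5.52 points. Whether either trade-off is worthwhile still depends on how critical the task is.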

If your goal is to build knowledge and skills, you need to add practice interactions. To decide how much practice your e-learning courses should include, consider the nature of the job task and the criticality of job performance, and ask whether the improvements in learning justify the extra training time. Critical skills, such as tasks with safety consequences, clearly warrant lengthy periods of deliberate practice. In other situations, a much more limited number of practice exercises may suffice. We recommend that you start with a modest amount of practice, scaled to the criticality of the learning goal, and test the lesson with a pilot group representative of the intended audience. If the pilot group does not reach the learning goal, identify gaps in the training, including the possibility of adding practice.

Principle 2: Mirror the Job

Skill building requires practice on the component skills of a specific work domain. Therefore, your interactions must require learners to respond in a job-realistic context. Questions that ask learners merely to recognize or recall information presented in the training will not promote learning that translates into effective job performance. In short, the practice exercises should require the same skills as the job does.

Begin with a job and task analysis to define the specific cognitive and physical processing required in the work environment. Then create transfer-appropriate interactions: activities that require learners to respond in the same ways during training as in the work environment. The more the features of the job environment are integrated into the interactions, the more likely the right cues will be encoded into long-term memory for later transfer. The Jeopardy game shown in Figure 13.1 requires only recall of information. Neither the psychological nor the physical context of the work environment is reflected in the game. In contrast, the question shown in Figure 13.4 requires learners to process new content in a job-realistic context and therefore is more likely to support transfer of learning. If games such as Jeopardy are popular with your audience, you could start with this type of practice to promote learning of lower-level factual information and then progress to higher-level interactions that require learners to apply facts to job scenarios.

Principle 3: Provide Effective Feedback

In a comparison of meta-analyses of 138 different factors that affect learning, Hattie (2009) ranked feedback tenth in influence. In a second analysis, Hattie and Gan (2011) report an average effect size of .79 based on a review of twelve meta-analyses. Johnson and Priest (2014) report a median effect size of .72 based on an analysis of eight lessons that included games and tutorials. Both effect sizes indicate that feedback has high potential to improve learning: with effective feedback, you can expect an improvement in performance of roughly three-quarters of a standard deviation.
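One common way to read an effect size (a standardized difference between group means) is to ask where the average learner who received the treatment would fall in the comparison group’s distribution. Under the simplifying assumption of normally distributed scores with equal spread in both groups, an effect size of about .75 places the average learner who received effective feedback near the 77th percentile of learners who did not. The Python sketch below uses the standard library’s `statistics.NormalDist`; the function name is our own.

```python
from statistics import NormalDist


def percentile_of_average_learner(effect_size: float) -> float:
    """Percentile of the comparison-group distribution at which the average learner
    in the treatment group would fall, assuming normal scores with equal spread."""
    return 100 * NormalDist().cdf(effect_size)


if __name__ == "__main__":
    for label, d in [("Hattie & Gan (2011)", 0.79), ("Johnson & Priest (2014)", 0.72)]:
        print(f"{label}: d = {d:.2f} -> about the {percentile_of_average_learner(d):.0f}th percentile")
```

Real score distributions are rarely perfectly normal, so treat these percentiles as a rough translation of the effect sizes, not as exact predictions.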

Despite the known benefits and extensive use of feedback, hundreds of research experiments on feedback reveal positive effects in some situations and negative effects in others (Kluger & DeNisi, 1996; Shute, 2008). Whether feedback helps or hurts depends on factors such as the learner’s prior knowledge, the type of feedback given, and how learners receive and respond to it. Here we provide some guidelines to help you maximize learning from feedback.

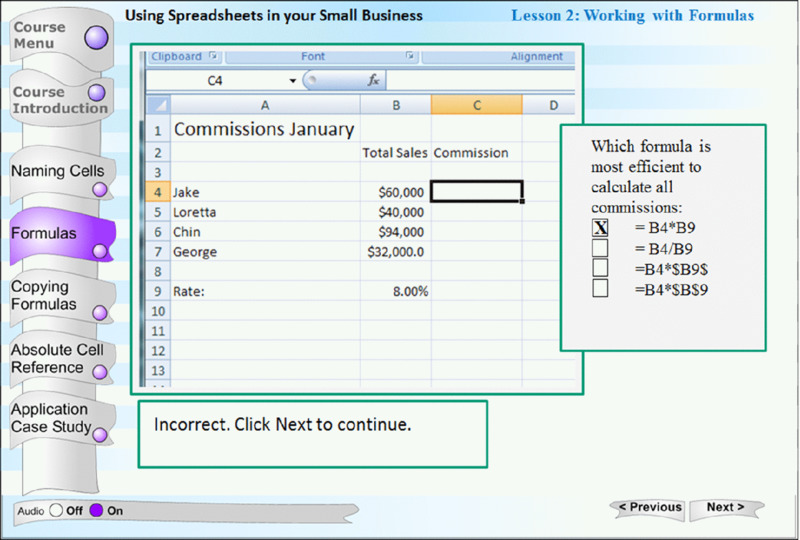

Provide Explanatory Feedback

Take a look at the two feedback messages for the incorrect response shown in Figures 13.7 and 13.8. The feedback in Figure 13.7 tells you that your answer is wrong, but it does not help you understand why. The feedback in Figure 13.8 provides a much better opportunity for learning because it incorporates an explanation. A missed question offers a teachable moment: the learner is open to a brief instructional explanation that will help build a correct mental model. Although the benefits of explanatory feedback seem obvious, crafting explanatory feedback is much more labor-intensive than creating corrective feedback, which many authoring tools can automate with only a few keystrokes. What evidence do we have that explanatory feedback will give a return sufficient to warrant the investment?

Figure 13.7 This Feedback Tells the Learner That the Response Is Incorrect.

Figure 13.8 This Feedback Tells the Learner That the Response Is Incorrect and Provides an Explanation.

Evidence for Benefits of Explanatory Feedback

Moreno (2004) compared learning from two versions of a computer botany game called Design-A-Plant, described previously in this chapter. Either corrective or explanatory feedback was offered by a pedagogical agent in response to the learner’s creation of a plant designed to live on a new planet. For explanatory feedback, the agent made comments such as: “Yes, in a low sunlight environment, a large leaf has more room to make food by photosynthesis” (for a correct answer) or “Hmmm, your deep roots will not help your plant collect the scarce rain that is on the surface of the soil” (for an incorrect answer). Corrective feedback told learners whether they were correct or incorrect but did not offer any explanation. As you can see in Figure 13.9, better learning resulted from explanatory feedback, with a large effect size of 1.16. Students rated the version with explanatory feedback as more helpful than the version with corrective feedback. Moreno and Mayer (2005) reported similar results using the same botany game environment in a follow-up study. They found that explanatory feedback resulted in much better learning than corrective feedback, with a very high effect size of 1.87.

Figure 13.9 Better Learning from Explanatory Feedback.

From data in Experiment 1, Moreno, 2004.
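To make the authoring difference concrete, here is a minimal Python sketch, assuming a hypothetical `PlantChoice` record rather than the data model of any real authoring tool. It reuses the two agent comments quoted from Moreno (2004). The corrective version needs only a right/wrong flag, whereas the explanatory version needs a designer-written explanation for each choice, which is where the extra effort goes.

```python
from dataclasses import dataclass


@dataclass
class PlantChoice:
    """One learner decision in a Design-A-Plant-style activity (hypothetical structure)."""
    part: str
    environment: str
    correct: bool
    explanation: str  # Written by the designer: this is the labor-intensive part.


def corrective_feedback(choice: PlantChoice) -> str:
    """Tells learners only whether they are right; easy to automate."""
    return "Correct." if choice.correct else "Incorrect."


def explanatory_feedback(choice: PlantChoice) -> str:
    """Adds the reason, so a missed item becomes a teachable moment."""
    verdict = "Yes" if choice.correct else "Hmmm"
    return f"{verdict}, {choice.explanation}"


large_leaf = PlantChoice(
    "large leaf", "low sunlight", True,
    "in a low sunlight environment, a large leaf has more room to make food by photosynthesis.",
)
deep_roots = PlantChoice(
    "deep roots", "scarce surface rain", False,
    "your deep roots will not help your plant collect the scarce rain "
    "that is on the surface of the soil.",
)

if __name__ == "__main__":
    for choice in (large_leaf, deep_roots):
        print(corrective_feedback(choice), "|", explanatory_feedback(choice))
```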

More recently Van der Kleij, Feskens, and Eggen (2015) conducted a meta-analysis on feedback based on seventy effect sizes drawn from forty research studies. They reported a substantial effect size for explanatory feedback compared to no feedback (median effect size of .61), as well as compared to corrective feedback (median effect size of .49). In fact, corrective feedback generally had no effect on learning. The benefits of explanatory feedback are especially pronounced for higher order learning outcomes compared to lower-level learning. Taken together, we have strong empirical evidence to support our recommendation to provide explanatory feedback.

Emphasize Three Categories of Feedback

Hattie and Gan (2011) propose four categories of feedback: (1) task-focused feedback such as the Excel feedback shown in Figure 13.8, (2) process feedback that provides suggestions on strategies and cues for successful responses, (3) self-regulation feedback that guides learners to monitor and reflect on their responses, and (4) ego-directed feedback such as praise. In Table 13.2 we illustrate feedback for each category.

Table 13.2. Four Types of Feedback.

| Focus | Feedback That | Example |
|---|---|---|
| Task | Focuses on the correctness or quality of the response | Yes, Dr. Jones and Dr. Chi have practice profiles likely to include a healthy percentage of obesity with and without diabetes. |
| Process | Focuses on the strategies used to arrive at the response | Although Dr. Zuri’s practice is in a rural setting, what questions could you ask to trigger potential future application of Lestratin? |
| Self-regulation | Directs the learners to monitor their response and reflect on their learning | Correct assessment. Now that you have completed this case study, what might you do differently on the job? |
| Ego | Directs learners’ attention to themselves rather than to the instructional criterion | Great Work! You will no doubt be one of the top 100 sales performers for Lestratin. |

Based on Hattie and Gan, 2011.

Of the four categories, Hattie and Gan discourage the use of ego-directed feedback, which is most commonly given as praise. They note that “Praise usually contains little task-related information and is rarely converted into more engagement, commitment to the learning goals, enhanced self-efficacy, or understanding about the task” (p. 261).

In their meta-analysis, Van der Kleij, Feskens, and Eggen (2015) found that most feedback in the studies they analyzed focused on the task and/or process levels. They recommend additional research on feedback that combines the process and self-regulation levels, because the few studies that have evaluated these feedback types show promising outcomes.

Provide Auditory Feedback for Visual Tasks

In Chapter 6 we discussed the modality effect: learning is better from an auditory explanation of a complex visual than from a textual explanation. The modality effect may also apply to feedback in some situations. Fiorella, Vogel-Walcutt, and Schatz (2012) studied a visual military training simulation that required learners to make decisions about selecting and firing on targets. For the more challenging scenarios, auditory feedback provided during the simulation resulted in better performance during simulation training and higher simulation post-test scores than printed feedback. This evidence suggests using audio feedback in graphics-intensive learning environments, such as those commonly used in problem-based learning, simulations, and games. More research is needed to confirm these findings and to clarify when textual feedback is more effective than auditory feedback.

Provide Step-by-Step Feedback When Steps Are Interdependent

In many problem-solving tasks, a wrong step early in problem solving can derail all the remaining steps. Corbalan, Paas, and Cuypers (2010) compared the effects on learning and motivation of feedback on the final solution only with feedback on all solution steps in linear algebra problems. Participants were more motivated and learned more when feedback was provided on all solution steps rather than just the final step. The research suggests that electronic learning environments should incorporate step-wise guidance in highly structured subjects such as linear algebra.
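Here is a minimal Python sketch of the difference, assuming a hypothetical two-step problem (solving 2x + 3 = 11) rather than the linear algebra materials Corbalan, Paas, and Cuypers used. Final-answer feedback reports only that the result is wrong, while step-wise feedback locates the first faulty step and stops there, because every later step inherits the error. Comparing steps as exact strings is a simplification; a real tutoring system would need to check mathematical equivalence.

```python
# Hypothetical worked problem: solve 2x + 3 = 11.
EXPECTED_STEPS = ["2x = 8", "x = 4"]
# A learner who added 3 instead of subtracting it, then carried the error forward.
LEARNER_STEPS = ["2x = 14", "x = 7"]


def final_answer_feedback(expected, given):
    """Feedback on the final solution only."""
    return "Correct." if given and given[-1] == expected[-1] else "Incorrect."


def stepwise_feedback(expected, given):
    """Feedback on every step, stopping at the first error because later steps depend on it."""
    messages = []
    for number, (want, got) in enumerate(zip(expected, given), start=1):
        if got == want:
            messages.append(f"Step {number}: correct.")
        else:
            messages.append(
                f"Step {number}: you wrote {got!r}, but this step should give {want!r}. "
                "Fix this step first; the later steps build on it."
            )
            return messages
    if len(given) < len(expected):
        messages.append(f"Step {len(given) + 1}: missing.")
    return messages


if __name__ == "__main__":
    print(final_answer_feedback(EXPECTED_STEPS, LEARNER_STEPS))
    for line in stepwise_feedback(EXPECTED_STEPS, LEARNER_STEPS):
        print(line)
```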

In contrast to highly structured domains such as mathematics, there is some evidence that delayed feedback may be more effective for conceptual or strategic skills, as well as for simpler tasks (Shute, 2008). However, we will need more research for firm recommendations on the timing of feedback for different tasks and learners.

Assign Guided Peer Feedback as a Practice Exercise

Peer feedback refers to ratings and evaluative comments given by one learner on the product of another learner. Peer feedback is common in educational and training courses that use a portal or discussion board to augment synchronous or asynchronous e-learning. Typically, learners post assignments and other classmates comment on those assignments. Is peer feedback effective? What are the best techniques to optimize effective peer ratings and comments?

Gan and Hattie (2014) and Cho and MacArthur (2011) are among the researchers who have evaluated the benefits of structured peer feedback. Both studies focused on feedback given on science laboratory reports. In the Cho and MacArthur study, undergraduates were assigned to one of three groups: (1) read a lab report and provide a rating and comments (that is, peer review), (2) only read the reports, or (3) receive no assignment (the control group). Then all participants wrote a different laboratory report as a post-test.

Those who reviewed lab reports wrote better post-test reports than those who only read, with an effect size of nearly 1.0. The quality of the post-test report was better among those whose review comments focused on problem detection and solution suggestions. An important element for peer review success was a training session for student reviewers using example reports of varied quality. During the training, participants practiced evaluating reports using a rating scale.

The Gan and Hattie experiment provided students with questions to guide their reviews. For example, they asked learners to post written feedback on what was done well or not done well, along with suggestions for improvement.

Note that these studies measured the learning of the reviewers, not of those who received the feedback. The evidence supports structured feedback-giving as a practice activity that benefits the reviewer. We will need additional evidence regarding the benefits of peer reviews for those who receive them.

Tips for Feedback

- After the learner responds to a question, provide feedback that tells the learner whether the answer is correct or incorrect and provide a succinct explanation.

- Focus the explanation in the feedback on either the task itself, the process involved in completing the task, or on self-monitoring related to the task.

- Avoid feedback such as “Well Done!” that draws attention to the ego and away from the task.

- Avoid normative feedback, such as grades, that encourages learners to compare themselves with others.

- Emphasize progress feedback in which attention is focused on improvement over time.

- Position the feedback on the screen so that learners can see the question, their response, and the feedback in close proximity, to minimize split attention.

- For multi-step problems for which steps are interdependent, provide step-by-step feedback.

- For a question with multiple answers, such as the example in Figure 13.4, show the correct answers next to the learner’s answers and include an explanation for the correct answers.

- Provide training and guidance for reviewers assigned to provide peer feedback.

Principle 4: Distribute and Mix Practice Among Learning Events

We’ve seen that the benefits of practice have a diminishing effect as the number of exercises increases. However, there are some ways to extend the long-term benefits of practice just by where you place and how you sequence even a few interactions.

Distribute Practice Throughout the Learning Environment

The earliest research on human learning conducted by Ebbinghaus in 1913 showed that distributed practice yields better long-term retention. According to Druckman and Bjork: “The so-called spacing effect–that practice sessions spaced in time are superior to massed practices in terms of long-term retention–is one of the most reliable phenomena in human experimental psychology. The effect is robust and appears to hold for verbal materials of all types as well as for motor skills” (1991, p. 30). Based on a more recent review of the spacing effect, Dunlosky, Rawson, Marsh, Nathan, and Willingham (2013) “rate distributed practice as having high utility” (p. 39). As long as eight years after an original training, learners whose practice was spaced showed better retention than those who practiced in a more concentrated time period (Bahrick, 1987).

The spacing effect, however, does not necessarily produce better immediate learning. In some cases, the benefits of spaced practice appear only after a period of time. Because most training programs do not measure delayed learning, the benefits of spaced practice often go unnoticed; they become visible only in long-term evaluation. Naturally, practical constraints will dictate how much spacing is feasible.

At least four recent studies show the benefits of distributed practice: two focused on reading skills, one on mathematics, and one on science. Seabrook, Brown, and Solity (2005) showed that, across various age groups, recall was better for words repeated in a list after several intervening words than for words repeated in immediate sequence. To demonstrate the application of this principle to instructional settings, they showed that phonics skills taught in reading classes scheduled as three two-minute daily sessions improved six times as much as skills taught in one six-minute daily session.

Rawson and Kintsch (2005) compared learning among groups of college students who read a text once, twice in a row, or twice with a week separating the readings. They found that reading the same text twice in a row (massed practice) improved performance on an immediate test, whereas reading the same text twice with a week in between readings (distributed practice) improved performance on a delayed test.

Rohrer and Taylor (2006) used mathematical permutation problems to compare the effects of spaced and massed practice on learning one week and four weeks after practice. After completing a tutorial in session 1, students were assigned ten practice problems. The massed group worked all ten practice problems in the second session, whereas the spaced practice group worked the first five problems in session 1 and the second five problems in session 2. Learning in the two groups was equivalent after one week, but spaced learners had much better four-week retention of skills.
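The two schedules in Rohrer and Taylor’s design can be sketched in Python as follows. The function names are hypothetical, and the session labels are placeholders: the code decides only which problems go into which session, while the interval between sessions is set by your course calendar.

```python
def massed_schedule(problems, session):
    """All practice problems in a single session."""
    return {session: list(problems)}


def spaced_schedule(problems, sessions):
    """Split problems into contiguous, nearly equal blocks, one per session, keeping their order."""
    schedule = {session: [] for session in sessions}
    for index, problem in enumerate(problems):
        schedule[sessions[index * len(sessions) // len(problems)]].append(problem)
    return schedule


PROBLEMS = [f"problem {n}" for n in range(1, 11)]

if __name__ == "__main__":
    # Massed: all ten problems in session 2. Spaced: five in session 1, five in session 2.
    print(massed_schedule(PROBLEMS, "session 2"))
    print(spaced_schedule(PROBLEMS, ["session 1", "session 2"]))
```

The same function can spread review items across more than two sessions, for example across the lessons of a course, which is how the guideline below can be applied.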

Kapler, Weston, and Wiseheart (2015) assigned a lesson on meteorology followed by an online review at either one or eight days after the initial lesson. This experiment was conducted in a classroom rather than a laboratory setting. Both the review and the final test included higher level and factual questions. The final test was given eight weeks later. The research team found better learning of both higher level and factual questions among those who reviewed eight days after the lesson than those who reviewed one day after the lesson.

Taken together, the evidence continues to favor practice that is scheduled throughout a learning event rather than concentrated in one time or place. To apply this guideline, incorporate review practice exercises among the various lessons in your course, and within a lesson distribute practice throughout rather than placing it all in one spot. Also consider how to leverage media to extend learning over time. For example, schedule an asynchronous class a week before an instructor-led synchronous session. Follow these two sessions with an assignment in which learners post products to a discussion board and conduct peer reviews. Using diverse delivery media to spread practice over time will improve long-term learning.
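To make the contrast between massed and distributed placement concrete, the Python sketch below builds two practice plans for a course whose lessons are numbered 1 through n. It assumes, purely for illustration, that each lesson has its own list of practice items. The massed plan works every item in the lesson that teaches it; the distributed plan works about half of each lesson's items in that lesson and returns the rest as review a set number of lessons later. The function names, the half-and-half split (loosely echoing the five-and-five split in the Rohrer and Taylor study), and the `gap` parameter are hypothetical choices, not a published scheduling method.

```python
from typing import Dict, List


def massed_plan(items_by_lesson: Dict[int, List[str]]) -> Dict[int, List[str]]:
    """Massed: every practice item is worked in the lesson that teaches it."""
    return {lesson: list(items) for lesson, items in items_by_lesson.items()}


def distributed_plan(items_by_lesson: Dict[int, List[str]], gap: int = 1) -> Dict[int, List[str]]:
    """Distributed: work about half of each lesson's items in that lesson and
    return the rest as review `gap` lessons later.

    Assumes lessons are numbered 1..n with no gaps. Review that would fall
    past the last lesson is placed in the last lesson instead.
    """
    last = max(items_by_lesson)
    plan: Dict[int, List[str]] = {lesson: [] for lesson in range(1, last + 1)}
    for lesson in sorted(items_by_lesson):
        items = items_by_lesson[lesson]
        half = (len(items) + 1) // 2
        plan[lesson].extend(items[:half])          # initial practice
        review_lesson = min(lesson + gap, last)    # later review slot
        plan[review_lesson].extend(items[half:])   # spaced review
    return plan


if __name__ == "__main__":
    course = {
        1: ["1a", "1b", "1c", "1d"],
        2: ["2a", "2b", "2c", "2d"],
        3: ["3a", "3b"],
    }
    print(massed_plan(course))
    print(distributed_plan(course))
```

In the distributed plan, lesson 2 begins with the review items carried over from lesson 1 before its own new practice. The last lesson's items cannot be spaced within the course itself, which is one reason the text suggests extending practice across multiple learning events.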

Mix Practice Types in Lessons

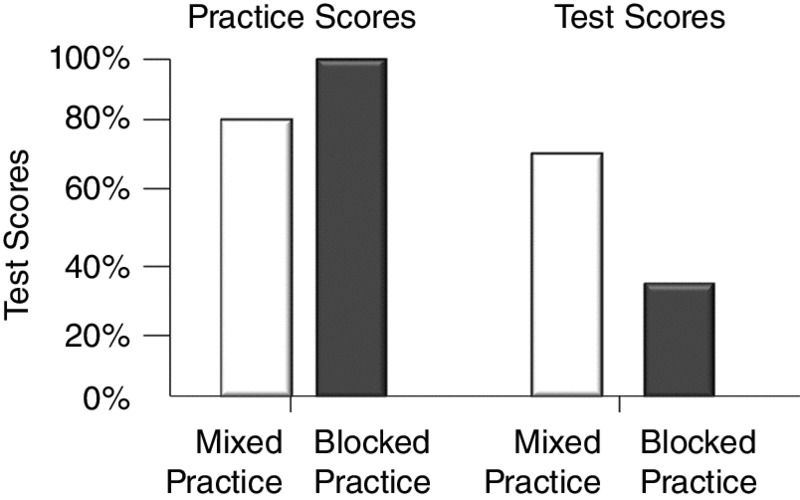

Imagine you have three or more categories of skills or problems to teach, such as how to calculate the area of a rectangle, a circle, and a triangle. A traditional approach is to show an example of each area calculation followed by practice on that calculation alone. For example, first demonstrate how to calculate the area of a rectangle, followed by five or six problems on rectangles. Next show how to calculate the area of a circle, followed by several problems on circles. Instructional psychologists call this traditional approach blocked practice: practice exercises are blocked into learning segments based on their common solutions.

In contrast, research suggests that a mixed (or interwoven) practice format leads to poorer practice scores but, counterintuitively, pays off in better learning on a test given a day later. For example, Taylor and Rohrer (2010) asked learners to calculate the number of faces, edges, corners, or angles in four unique geometric shapes. Following a tutorial that included examples, learners were assigned thirty-two practice problems, eight of each of the four types. The blocked group worked eight faces problems, eight edges problems, eight corners problems, and eight angles problems, for a total of thirty-two problems. The mixed group worked a practice problem from each of the four types eight times, also for a total of thirty-two problems. For example, in the mixed group the learner would work one problem dealing with faces, followed by a problem dealing with edges, then a problem dealing with corners, and finally a problem dealing with angles. This pattern was repeated eight times. One day after practice, each student completed a test. As you can see in Figure 13.10, the practice scores in the blocked group were higher than those in the mixed group. However, the mixed practice group scored much better on the test. In a review of research on interweaving, Dunlosky, Rawson, Marsh, Nathan, and Willingham (2013) rated mixed practice as having “moderate utility” (p. 44).

Figure 13.10 Mixed Practice Leads to Poorer Practice Scores But Better Learning.

Based on data from Taylor and Rohrer, 2010.

Recall from Chapter 12 that varied context examples led to better learning than examples that used a similar cover story. The benefits of mixed practice may be based on a similar mechanism. By mixing together problems that must be discriminated in order to identify the most appropriate solution, learners have more opportunities to match problem solutions to problem types. In situations in which problem types are easy to discriminate, mixed practice may have less benefit.
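The two practice orders in the Taylor and Rohrer (2010) study can be sketched in a few lines of Python. This is an illustration under stated assumptions: the labels such as "faces-1" are hypothetical stand-ins for individual problems, and the `type_switches` count is our own rough proxy for how often learners must decide which solution applies. It is not a measure the researchers used.

```python
from typing import List

PROBLEM_TYPES = ["faces", "edges", "corners", "angles"]
PROBLEMS_PER_TYPE = 8


def blocked_sequence(types: List[str], per_type: int) -> List[str]:
    """Blocked: all problems of one type, then all of the next type."""
    return [f"{kind}-{n}" for kind in types for n in range(1, per_type + 1)]


def mixed_sequence(types: List[str], per_type: int) -> List[str]:
    """Mixed: one problem of each type per cycle, repeated per_type times."""
    return [f"{kind}-{n}" for n in range(1, per_type + 1) for kind in types]


def type_switches(sequence: List[str]) -> int:
    """Count adjacent pairs of problems whose types differ."""
    kinds = [item.split("-")[0] for item in sequence]
    return sum(1 for a, b in zip(kinds, kinds[1:]) if a != b)


if __name__ == "__main__":
    blocked = blocked_sequence(PROBLEM_TYPES, PROBLEMS_PER_TYPE)
    mixed = mixed_sequence(PROBLEM_TYPES, PROBLEMS_PER_TYPE)
    print(len(blocked), len(mixed))                      # 32 32
    print(blocked[:4])  # ['faces-1', 'faces-2', 'faces-3', 'faces-4']
    print(mixed[:4])    # ['faces-1', 'edges-1', 'corners-1', 'angles-1']
    print(type_switches(blocked), type_switches(mixed))  # 3 31
```

Both orders contain the same thirty-two problems; only the sequence differs. The blocked order changes problem type just three times, while the fixed rotation used here changes type at every step, which is consistent with the idea that mixing gives learners more chances to match solutions to problem types.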

Tips for Determining the Number and Placement of Practice Events

We have consistent evidence that practice interactions promote learning. However, the greatest amount of learning accrues from the initial practice events. We also know that greater long-term learning occurs when practice is distributed throughout the learning environment rather than concentrated all at once. In addition, when it’s important to discriminate among different problem types, it’s better to mix types during practice than to group them by type. To summarize our guidelines for practice, we recommend that you:

- Analyze the task performance requirements:

  - Is automatic task performance needed? If so, is automaticity required immediately or can it develop during job performance?

  - Does the task require an understanding of concepts and processes along with concomitant reflection?

- Assign larger numbers of exercises when automaticity is needed.

- For tasks that require automatic responses, use the computer to measure response accuracy and response time. Once automated, responses will be both accurate and fast.

- Distribute practice among lessons in the course, within any given lesson, and among multiple learning events.

- In synchronous e-learning courses, extend learning by designing several short sessions of one to two hours with asynchronous practice assigned between sessions.

- When your goal is to teach discrimination among problem types, mix them up during practice rather than segregating them by type.
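The tip about using the computer to measure response accuracy and response time can be sketched as follows. This is a minimal Python illustration, not a validated mastery rule: the class names, the rolling window of recent attempts, and both thresholds are hypothetical placeholders that you would set from your own task analysis.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Attempt:
    correct: bool
    seconds: float  # response time for this item


@dataclass
class DrillTracker:
    """Records drill responses and flags when they look automatic,
    i.e., both accurate and fast over the most recent attempts."""
    min_accuracy: float = 0.95     # hypothetical threshold
    max_mean_seconds: float = 2.0  # hypothetical threshold
    window: int = 20               # hypothetical: recent attempts to judge
    attempts: List[Attempt] = field(default_factory=list)

    def record(self, correct: bool, seconds: float) -> None:
        self.attempts.append(Attempt(correct, seconds))

    def is_automatic(self) -> bool:
        recent = self.attempts[-self.window:]
        if len(recent) < self.window:
            return False  # not enough evidence yet; keep assigning practice
        accuracy = sum(a.correct for a in recent) / len(recent)
        mean_seconds = sum(a.seconds for a in recent) / len(recent)
        return accuracy >= self.min_accuracy and mean_seconds <= self.max_mean_seconds


if __name__ == "__main__":
    tracker = DrillTracker(window=4)
    for _ in range(4):
        tracker.record(correct=True, seconds=1.2)
    print(tracker.is_automatic())  # True
    tracker.record(correct=True, seconds=6.0)  # one slow response
    print(tracker.is_automatic())  # False
```

Judging only a recent window lets early slow or incorrect attempts age out as the learner improves, so the tracker keeps assigning exercises until responses are both accurate and fast.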

Principle 5: Apply Multimedia Principles

In Chapters 4 through 9, we presented six high-level principles pertaining specifically to the use of graphics, text, and audio in e-learning. Here are some suggestions for ways to apply those principles to the design of practice interactions.

Modality and Redundancy Principles

While engaging in practice interactions, learners often must refer back to the directions and the questions as well as to the feedback. Therefore, we recommend you generally present practice interactions and accompanying feedback in text. To apply the redundancy principle, rely on text alone rather than a combination of text and audio that repeats the on-screen text. An exception would be any situation in which the practice question involves an auditory discrimination, such as in a second language class.

Contiguity Principle

According to the contiguity principle, text should be closely aligned to the graphics it is explaining to minimize extraneous cognitive load. Since you will often use text for your questions and feedback, the contiguity principle is especially applicable to the design of practice questions. Clearly distinguish response areas by placement, color, or font, and place them adjacent to the question. In addition, when laying out practice that will include feedback to a response, leave an open screen area for feedback near the question and as close to the response area as possible so learners can easily align the feedback with their response and with the question. In multiple-choice or multiple-select items, use color or bolding to show the correct options as part of the feedback.

Recent research shows that contiguity also applies to the type of behavioral interaction required. Rey (2011) found greater transfer learning from a simulation in which learners adjusted parameters via on-screen scroll bars or drag and drop than from one in which they typed text input. Having to split attention between the keyboard and the screen when typing depressed learning. More research is needed on the tradeoffs among different forms of physical engagement during e-learning.

Coherence Principle

In Chapter 8 we reviewed evidence suggesting that violation of the coherence principle imposes extraneous cognitive load and may interfere with learning. We recommend that practice opportunities be free of extraneous visual or audio elements, such as gratuitous animations or sounds (applause, bells, or whistles) associated with correct or incorrect responses. Research has shown that, although the amount of study time does not predict grade point average among university students, the amount of deliberate practice does correlate with grades. In particular, the findings favor study in distraction-free environments, for example, alone in a quiet room, rather than with a radio on or in a team (Knez & Hygge, 2002; Plant, Ericsson, Hill, & Asberg, 2005). During virtual classroom synchronous sessions, the instructor should maintain silence during practice events.

Tips for Applying the Multimedia Principles to Your Interactions

- Include relevant visuals as part of your interaction design.

- Align directions, practice questions, and feedback in on-screen text so that learners can easily access all the important elements in one location.

- Use on-screen rather than keyboard input modes to minimize split attention.

- Minimize extraneous text, sounds, or visuals during interactions.

What We Don’t Know About Practice

We conclude that, while practice does not necessarily make perfect, deliberate spaced practice that includes effective feedback goes a long way toward boosting learning.

- Is practice effective for problem-solving skills? There is an ongoing debate about the type of content for which practice offers the greatest benefits. A recent review offers evidence that practice is most effective for lower-level factual content rather than problem-solving skills (van Gog & Sweller, 2015). Other researchers disagree with this conclusion (Karpicke & Aue, 2015; Rawson, 2015). We anticipate more refined guidance in the future that might recommend different types of practice or engagement for learning procedures, facts, conceptual information, and problem-solving skills.

- What are the best types of explanatory feedback for different learning goals? We saw that explanatory feedback that focuses on the task, process, or regulatory skills is more effective than feedback that merely tells learners whether their responses are correct or incorrect. Most feedback research provides explanatory feedback at the task or process level. Additional studies can shed light on the value of regulatory feedback.

- What other features of feedback can affect its value? For example, should feedback be detailed or brief? Under what conditions is feedback more effective when presented via audio versus text?

- How do learners receive feedback? Little is known about how learners attend to and use feedback. Most research on feedback assumes that learners are attending to and processing it, an assumption that may lead to erroneous conclusions. Eye tracking that shows when and how learners attend to feedback may offer new insights into basic questions about feedback. We still have lessons to learn from future research on feedback.

Chapter Reflection

- Review some typical e-lessons from your organization or an online source. What proportion of the exercises is recall or recognition versus application? Is this proportion appropriate to the instructional goal? What revisions are needed?

- Review the number and placement of practice exercises in your learning events. Is the amount of practice appropriate to the audience and instructional goal? Are exercises distributed within and among lessons?

- How effective is the feedback provided in lessons you are reviewing or developing? Is the feedback mostly corrective or mostly explanatory?

- Describe or imagine how peer feedback might be used in your environment. How would you support learners to ensure they give effective feedback?

COMING NEXT

From discussion boards to blogs to breakout rooms and social media, there are numerous computer facilities for synchronous and asynchronous forms of collaboration among learners and instructors during e-learning events. There has been a great deal of research on collaborative learning in the past few years. That research is just beginning to provide some general guidelines about how and when to use collaborative learning activities. In the next chapter we look at what we know about online collaboration and learning.

Suggested Readings

- Bernard, R.M., Abrami, P.C., Borokhovski, E., Wade, C.A., Tamim, R.M., Surkes, M.A., & Bethel, E.C. (2009). A meta-analysis of three types of interaction treatments in distance education. Review of Educational Research, 79, 1243–1289. This meta-analysis is a lengthy technical review comparing learning from student-student, student-instructor, and student-content multimedia interactions in synchronous and asynchronous courses.

- Hattie, J., & Gan, M. (2011). Instruction based on feedback. In R. E. Mayer & P. A. Alexander (Eds.), Handbook of research on learning and instruction (pp. 249–271). New York: Routledge. We recommend review articles such as this one to provide a historical and current perspective on feedback.

- Johnson, C.I., & Priest, H.A. (2014). The feedback principle in multimedia learning. In R. E. Mayer (Ed.), The Cambridge handbook of multimedia learning (2nd ed., pp. 449–463). New York: Cambridge University Press. This chapter provides another excellent review of evidence on corrective versus explanatory feedback.

- Moreno, R., & Mayer, R. E. (2007). Interactive multimodal learning environments. Educational Psychology Review, 19, 309–326. This review describes five design principles and evidence for interactive multimodal learning environments, including guided activity, reflection, feedback, pacing, and pretraining.

- Plant, E.A., Ericsson, K.A., Hill, L., & Asberg, K. (2005). Why study time does not predict grade point average across college students: Implications of deliberate practice for academic performance. Contemporary Educational Psychology, 30, 96–116. This correlational study shows relationships between college student GPA, SAT scores, and the amount and quality of study time.

- Van der Kleij, F.M., Feskens, C.W.R., & Eggen, T.J.H.M. (2015). Effects of feedback in a computer-based learning environment on students’ learning outcomes: A meta-analysis. Review of Educational Research, 85(4), 475–511. A technical review of the features of feedback that improve learning outcomes. The introduction and discussion offer helpful background information on evidence-based feedback.

CHAPTER OUTLINE

- What Is Collaborative Learning?

- What Is Computer-Supported Collaborative Learning (CSCL)?

- Diversity of CSCL Research

- Principle 1: Consider Collaborative Assignments for Challenging Tasks

- Principle 2: Optimize Group Size, Composition, and Interdependence

- Principle 3: Match Synchronous and Asynchronous Assignments to the Collaborative Goal

- Principle 4: Use Collaborative Tool Features That Optimize Team Processes and Products

- Principle 5: Maximize Social Presence in Online Collaborative Environments

- Principle 6: Use Structured Collaboration Processes to Optimize Team Outcomes

- How to Implement Structured Controversy

- Adapting Structured Controversy to Computer-Mediated Collaboration

- What We Don’t Know About Collaborative Learning