CHAPTER 17

Learning with Computer Games

CHAPTER SUMMARY

Many strong claims are made about the value of games for promoting learning, including the use of games for adult training. However, in taking an evidence-based approach, we recommend caution in adopting game-based training because not all educational games are equally effective.

First, value-added research compares the learning outcomes of people who play the base version of a game with those who play an enhanced version of the game that has one additional feature. Value-added research suggests that in some cases the instructional effectiveness of educational games can be improved by adding coaching (explanations after moves and advice before moves), self-explanation (questions requiring the player to explain or select an explanation from a menu), pretraining (pregame activities that highlight key concepts), modality (presenting words in spoken form), and personalization (presenting words in conversational style).

Second, cognitive consequences research compares the improvement in cognitive skills of people who are assigned to play an off-the-shelf game for an extensive period of time to those who are assigned to engage in some other activity. Cognitive consequences research shows that playing action video games, such as games in which you must shoot at fast-moving targets, can result in improvements in perceptual attention skills.

Third, media comparison research compares learning of academic content with games versus learning with conventional media. Although there are legitimate methodological concerns with media comparison studies, the current state of the literature shows that the strongest support for game-based learning is with science content.

Overall, research on learning with games shows that well-designed games can have a place in training programs, but there is not research support for wholesale conversion of traditional training formats to game-based formats.

Do Games Have a Place in the Serious Business of Training?

In The Ultimate History of Video Games, Steven Kent (2001) shows how computer games—ranging from Pac-Man to Tetris to Legend of Zelda—were originally designed for entertainment and were met with huge success. Is it possible to repurpose games to teach academic content, that is, can we create what Abt (1970) calls serious games in his classic little book, Serious Games? Over the course of recent years, evidence has been accumulating to address this question, including a recent report from the National Research Council entitled Learning Science Through Computer Games and Simulations (Honey & Hilton, 2011), a series of meta-analyses reported in Computer Games for Learning: An Evidence-Based Approach (Mayer, 2014a), several edited books summarizing research on educational games (O’Neil & Perez, 2008; Tobias & Fletcher, 2011), and a collection of research reviews (Clark, Tanner-Smith, & Killingsworth, 2015; Connolly, Boyle, MacArthur, Hainey, & Boyle, 2012; Sitzmann, 2011; Vogel, Vogel, Cannon-Bowers, Bowers, Muse, & Wright, 2006; Wouters, Van Nimwegen, Van Oostendorp, & Van Der Spek, 2013; Young et al., 2012).

As summarized in Table 17.1, research on learning with computer games can be broken down into three categories (Mayer, 2014a): Value-added research examines the issue of which features increase the instructional effectiveness of computer games; cognitive consequences research examines whether playing off-the-shelf computer games improves cognitive skills; and media comparison research examines whether people learn academic content better with games than with conventional media. We examine each of these issues in the next sections of this chapter.

Table 17.1. Three Types of Game Research.

| Type | Research Question |
| --- | --- |
| Value added | Which features improve a computer game’s effectiveness? |
| Cognitive consequences | Does game playing improve cognitive skills? |
| Media comparison | Are games more effective than conventional media? |

Which Features Improve a Game’s Effectiveness?

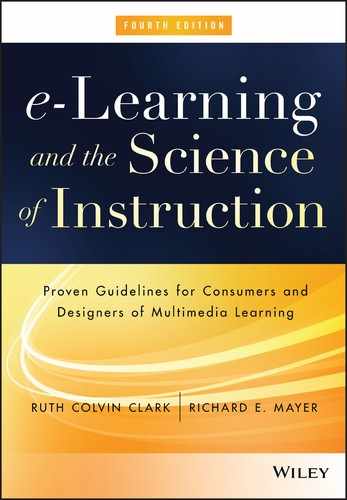

Suppose you have decided to develop a computer game to teach some educational material, such as how an electric circuit works. Which features should you incorporate into the game to maximize learning? This question is informed by what Mayer (2014a) calls value-added research, in which we compare the learning outcome test performance of a group that plays a base version of the game (base group) versus a group that plays the same game with one feature added (enhanced group). The value-added approach is summarized in Figure 17.1. If adding a feature improves performance by an effect size of at least 0.5, we are interested in including it in our list of effective features, because an effect of that size is at least in the medium range: the feature has raised test scores by at least half of a standard deviation. Improvement of that size shows that the intervention has practical importance in education and training.

Figure 17.1 Value-Added Experiment Compares Base Group to Enhanced Group on Learning Outcome.
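To make the 0.5 criterion concrete, here is a minimal sketch of how an effect size could be computed for one value-added comparison. It assumes that effect size means the standardized mean difference (Cohen's d) with a pooled standard deviation, which the "half of a standard deviation" wording suggests but the chapter does not spell out. The function name and the post-test scores are hypothetical and serve only as an illustration.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(enhanced, base):
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(enhanced), len(base)
    s1, s2 = stdev(enhanced), stdev(base)  # sample standard deviations
    pooled_sd = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(enhanced) - mean(base)) / pooled_sd


# Hypothetical post-test scores (not data from any study cited in this chapter).
base_scores = [10, 12, 14, 16, 18]
enhanced_scores = [12, 14, 16, 18, 20]

d = cohens_d(enhanced_scores, base_scores)
meets_threshold = d >= 0.5  # the chapter's criterion for practical importance
print(f"effect size d = {d:.2f}; meets 0.5 criterion: {meets_threshold}")
```

With these made-up scores, the enhanced group averages two points higher and the pooled standard deviation is a little over three points, so the added feature would clear the 0.5 criterion.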

Table 17.2 lists and describes five promising features that have been shown to improve performance on learning outcome tests across multiple experiments: coaching, self-explanation, pretraining, modality, and personalization. Each feature is intended to encourage learners to reflect on what they are learning in the game, that is, to engage in deeper cognitive processing of the material, rather than to simply focus on fast-paced actions and winning the game.

Table 17.2. Five Promising Features for Improving Learning with Computer Games.

| Feature | Description | Level of Evidence |
| --- | --- | --- |
| Coaching | Provide explanations or advice | Moderate |
| Self-explanation | Provide questions asking players to explain or select explanations from a menu | Strong |
| Pretraining | Provide pregame activities | Moderate |
| Modality | Present words in spoken form | Strong |
| Personalization | Present words in conversational style | Strong |

According to the coaching principle, players learn better from educational computer games when they are given explanations after moves they have made or advice before they make a move. As an example of game-based coaching, consider a game on how electrical circuits work, The Circuit Game, in which college students progress through ten levels by amassing points every time they make a correct move. Figure 17.2 gives a screenshot of a game task in which you are shown two identical circuits and asked to drag and drop a component onto either of them in order to make the current flow faster in the circuit on the left. In a value-added study (Mayer & Johnson, 2010), some students (base group) were simply given points and a cheerful ding sound if they were correct on a game task and a buzzer sound if they were wrong, as shown in Figure 17.2. Other students (enhanced group) were in addition given an explanation window after each move, providing the reason for the correct answer in a simple sentence, as shown in Figure 17.3. Providing explanative feedback such as this is a form of coaching. Overall, students in the enhanced group outperformed students in the base group on solving twenty-five circuit problems in Level 10, which served as an in-game transfer test. As summarized in the first line of Table 17.2, in six out of seven experimental comparisons reported in published papers, players performed better on a learning post-test if the game included some form of explanative feedback after key moves or advice before key moves, with a median effect size in the medium-to-large range.

Figure 17.2 The Circuit Game.

From Mayer and Johnson, 2010.

Figure 17.3 The Circuit Game with Coaching Added.

From Mayer and Johnson, 2010.

The self-explanation principle is that players learn better from educational computer games when they are prompted to explain their moves. As an example of game-based self-explanation, we could change the base version of the Circuit Game by asking players to select the reason for their move from a menu after each game task, as shown in Figure 17.4. In this form of self-explanation, students do not need to engage in the tedious task of typing in their explanation so the flow of the game is maintained. Johnson and Mayer (2010) found that students who played the game with self-explanation menus (enhanced group) outperformed students who played the same game without explanation menus (base group) on a post-test, but in another study, students who were asked to type in their explanations did not perform better than the base group. Apparently, self-explanation in games works best when it minimizes disruption to game play. As summarized in the second row of Table 17.2, adding self-explanation prompts to an educational computer game improved learning in five out of six experimental comparisons found in the research literature, yielding a large effect size.

Figure 17.4 The Circuit Game with Self-Explanation Questions Added.

From Johnson and Mayer, 2010.

According to the pretraining principle, players learn better from an educational computer game when they are given pregame activities aimed at building or highlighting game-related concepts. Thus, another way to improve learning in the Circuit Game is to present players with a list of eight principles of electrical circuits before playing the game and ask players to relate the principles to their subsequent game playing. Fiorella and Mayer (2012) found that players who received this type of pretraining performed better on a learning test than players who received the base version. The third row of Table 17.2 summarizes that in seven out of seven experiments, players learned more if they were given some form of pretraining about the concepts in the game, with a median effect size in the medium-to-large range.

In cases when computer games use words, the modality principle calls for presenting words in spoken form rather than printed form. For example, consider the Design-A-Plant game—shown in Figure 17.5—in which players travel to a distant planet and meet Herman-the-Bug, who asks them to design a plant that can survive in the atmospheric conditions of the planet by choosing appropriate roots, stem, and leaves. Then, players see whether their plant survives and Herman explains how plants grow, along with explaining the relevance of the plant’s features. In a series of nine experimental comparisons, players performed better on a transfer post-test when Herman spoke the words, rather than when Herman’s words were printed on the screen (Moreno & Mayer, 2002a; Moreno, Mayer, Spires, & Lester, 2001), yielding a large median effect size, as summarized in the fourth row of Table 17.2. This work shows that the modality principle can be applied to game environments.

Figure 17.5 The Design-A-Plant Game.

From Moreno, Mayer, Spires, and Lester, 2001.

Finally, the personalization principle states that players learn better when words are presented in conversational style rather than formal style. For example, in the Design-A-Plant game, players perform better on a transfer post-test when Herman-the-Bug speaks in conversational style (using “you” and “I”), rather than with formal third-person constructions (Moreno & Mayer, 2000b, 2004). Overall, in eight out of eight comparisons summarized in Table 17.2, players performed better on learning post-tests when words in the game were presented in conversational style or with polite wording, yielding a large effect size.

Can research help us design effective games for learning? The five features summarized in Table 17.2 represent promising additions that game designers should consider when the goal is to improve learning through asking students to reflect on what they are learning in the game. Another important contribution of value-added research is to highlight features that have not yet been shown to be effective. According to a meta-analysis by Mayer (2014a), immersion (that is, using highly realistic graphics or virtual reality) and redundancy (adding on-screen printed text to correspond to spoken words) have been shown to be ineffective. Similarly, there is not yet evidence to support adding other features such as a narrative theme (framing the game within a cover story), competition (offering points that can be redeemed for prizes), and choice (allowing players to choose the format of game characters or backgrounds). Overall, the research evidence provides some useful directions for game designers interested in designing effective games for learning.
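The summaries reported for each feature in this section (for example, "six out of seven experimental comparisons" with a median effect size in a given range) can be tallied along the following lines. This is only a sketch under stated assumptions: the effect sizes are hypothetical, the function names are ours, and the cutoffs of 0.2, 0.5, and 0.8 are Cohen's conventional benchmarks for small, medium, and large effects rather than thresholds taken from the meta-analysis itself.

```python
from statistics import median


def describe_effect(d):
    """Label an effect size with Cohen's conventional benchmarks (an assumption)."""
    size = abs(d)
    if size < 0.2:
        return "negligible"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"


def summarize_comparisons(effect_sizes):
    """Count comparisons favoring the enhanced group and report the median effect."""
    median_d = median(effect_sizes)
    return {
        "favoring_enhanced": sum(1 for d in effect_sizes if d > 0),
        "total": len(effect_sizes),
        "median_effect_size": median_d,
        "label": describe_effect(median_d),
    }


# Hypothetical effect sizes from seven value-added comparisons of one feature.
hypothetical_effect_sizes = [0.9, 0.7, 0.6, 0.8, -0.1, 0.5, 1.1]
summary = summarize_comparisons(hypothetical_effect_sizes)
print(summary)
```

Reporting the median rather than the mean keeps a single unusually large or small comparison from dominating the summary, which matters when only a handful of experiments are available.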

Does Game Playing Improve Cognitive Skills?

We know that millions of people spend countless hours playing computer games (Kapp, 2012), but you may wonder whether they can learn anything useful from playing games. From the very start of the video game revolution more than forty years ago, cognitive scientists have been grappling with this question, as exemplified in an early book, Mind at Play, by Loftus and Loftus (1983, p. 121): “It would be comforting to know that the seemingly endless hours young people spend playing Defender and Pac-Man were really teaching them something useful.”

Today computer games are far more sophisticated and far more pervasive—available on smart phones, tablets, laptops, desktops, and consoles so they can be played just about anywhere and anytime. However, the question remains whether playing off-the-shelf computer games can lead to learning anything useful. For example, success in some scientific and technical fields depends on having appropriate spatial skills—such as being able to mentally rotate objects or to track multiple moving objects or to notice objects in the corner of your field of view. Perhaps people can improve their spatial skills by playing certain computer games, such as action video games in which you have to shoot at fast-approaching enemies. Others may wish to improve their reasoning skills or memory skills through playing appropriate brain training computer games. Such games can be played at home, work, or even en route between them.

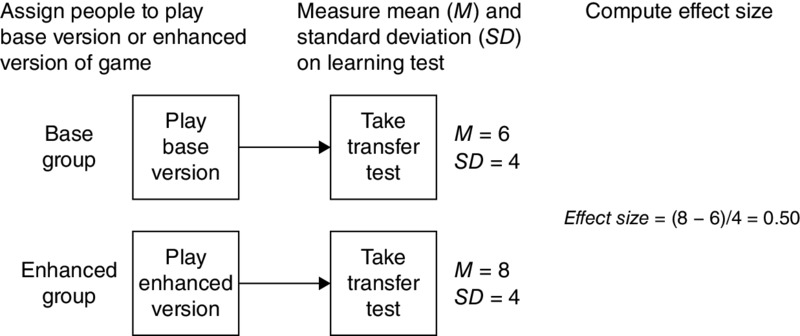

Visionaries foresee a future in which people will learn the skills they need for work and life through playing computer games (McGonigal, 2011; Prensky, 2006). In this section, we explore whether there is evidence to support the use of games for training cognitive skills. Mayer (2014a) refers to this kind of research evidence as cognitive consequences research, that is, experiments that examine changes in basic cognitive skills due to playing a computer game for an extended period of time. As shown in Figure 17.6, in cognitive consequences research, researchers compare the post-test score (or pretest-to-post-test gain) on a target cognitive skill for a game group that is assigned to play a computer game for an extended period of time versus a control group that is assigned to engage in an alternative activity. If the game group shows a significantly greater post-test score (or pretest-to-post-test gain) on a test of cognitive skill than the control group, we can conclude that game playing can improve that cognitive skill. We are particularly interested in effect sizes greater than 0.5, which indicate the effect of game playing is educationally important.

Figure 17.6 Cognitive Consequences Experiments Compare Game Group and Control Group on Cognitive Skill.
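The pretest-to-post-test gain comparison can be sketched as follows. The scores are hypothetical, the function names are ours, and standardizing the difference in mean gains by the pooled standard deviation of the gains is one common convention that we assume here. The studies reviewed in this chapter may have computed their effect sizes differently.

```python
from math import sqrt
from statistics import mean, stdev


def gain_scores(pretest, posttest):
    """Per-person improvement from pretest to post-test."""
    return [post - pre for pre, post in zip(pretest, posttest)]


def standardized_gain_difference(game_gains, control_gains):
    """Difference in mean gains divided by the pooled standard deviation of gains."""
    n1, n2 = len(game_gains), len(control_gains)
    s1, s2 = stdev(game_gains), stdev(control_gains)
    pooled_sd = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(game_gains) - mean(control_gains)) / pooled_sd


# Hypothetical scores on a cognitive skill test (not data from any cited study).
game_pre, game_post = [20, 22, 24, 26, 28], [22, 30, 29, 35, 34]
control_pre, control_post = [21, 23, 24, 25, 27], [21, 27, 25, 30, 27]

game_gains = gain_scores(game_pre, game_post)
control_gains = gain_scores(control_pre, control_post)
effect = standardized_gain_difference(game_gains, control_gains)
print(f"mean gains: game = {mean(game_gains)}, control = {mean(control_gains)}")
print(f"effect size = {effect:.2f}; educationally important: {effect > 0.5}")
```

Comparing gains rather than raw post-test scores helps when the two groups start at somewhat different levels, because each person serves as his or her own baseline.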

First, let’s consider the kind of skills that could be targeted by computer games. The left two columns of Table 17.3 name and describe some key skills that have been examined in cognitive consequences research—perceptual attention, mental rotation, spatial visualization, executive function, reasoning, and memory. To measure perceptual attention, you can watch a screen in which an object appears briefly far from the center of the screen where you are looking, and you must indicate the direction of where the object was. To measure mental rotation, you can be shown two objects on a screen, one of which is rotated 180 degrees (or rotated and flipped so it is a different object); then, the computer measures how long it takes you to decide whether they are the same or different. To measure spatial visualization, you can be shown a set of shapes and must determine how they fit together into a rectangle similar to solving a jigsaw puzzle. To measure executive function, you can be shown the word RED printed in green color, and you must say the color the word is printed in as fast as you can. To measure reasoning, we can give you a series of shapes based on a rule and ask you to tell what comes next. To measure memory, you can listen to a list of words and recite them back. These are just some examples of tests that have been used to measure the basic cognitive skills listed in Table 17.3.

Table 17.3. Which Cognitive Skills Can Be Improved Through Game Play?

| Skill | Description | Level of Evidence |
| --- | --- | --- |
| Perceptual attention | You can quickly attend to and track visual objects on a screen. | Strong |
| Mental rotation | You can mentally rotate a visual image of an object. | Moderate |
| Spatial visualization | You can manipulate a visual image of an object and visualize changes. | Weak |
| Executive function | You can control your cognitive processing. | Weak |
| Reasoning | You can make inferences. | Weak |
| Memory | You can recall what was presented. | Weak |

For example, if you are in a job that requires monitoring visual displays, such as an air traffic controller, a ship pilot, or a power plant manager, you may want to play games intended to improve your perceptual attention skills. Suppose you spend twenty hours on your trusty tablet playing action video games in which you must shoot at fast-moving enemies that appear at various places on the screen. Will that improve your perceptual attention skills as measured by tests that involve different objects than in the game? In a recent review, Adams and Mayer (2014) reported that people who were assigned to play an action game for an extended period of time (game group) showed much greater gains than people who were assigned to engage in an alternative activity such as playing a puzzle game (control group). The median effect size was greater than 1 based on eighteen experimental comparisons, yielding strong evidence for the positive consequences of playing computer games. Many of the studies were conducted by Green and Bavelier (2003, 2006a, 2006b, 2007) and involved ten or thirty hours of game playing. Other kinds of games—such as brain training games, spatial puzzle games, and strategy games—did not produce large effects on perceptual attention skills, perhaps because those games did not require players to repeatedly engage in perceptual attention tasks (Anderson & Bavelier, 2011). Overall, the strongest evidence to date for the cognitive consequences of game playing involves the positive effects of playing action video games on perceptual attention skills, summarized in the third column of Table 17.3.

Let’s consider jobs in science and engineering in which you have to mentally rotate various objects. Uttal and Cohen (2012) have proposed that spatial skills such as mental rotation serve as a gateway for entry into such fields, and the National Research Council’s (2006, p. 5) report, Learning to Think Spatially, concludes “spatial thinking is integral to the everyday work of scientists and engineers.” Can playing certain computer games help build these kinds of skills? To answer this question, researchers have asked people to spend six to twelve hours playing the classic puzzle game, Tetris, in which you must rotate and align falling shapes so they form complete rows at the bottom of the screen. A screen shot from a Tetris game is shown in Figure 17.7. Adams and Mayer’s (2014) review found that playing Tetris resulted in greater improvements on mental rotation of Tetris shapes than not playing Tetris, yielding a large effect size of 0.82 based on six comparisons, but playing Tetris resulted in small-to-medium effect sizes on mental rotation of other 2-D shapes and small effect sizes for mental rotation of 3-D shapes. As expected, playing strategy games or action games did not improve mental rotation skills. This pattern of findings is consistent with the idea that playing a game does not improve skills that are not directly involved in playing the game.

Figure 17.7 Tetris Game.

Image courtesy of Blue Planet Software, Inc. Tetris ® & © 1985~2015 Tetris Holding.

There is not yet strong evidence that game playing can improve the other kinds of skills listed in Table 17.3. For example, spatial visualization skills were not greatly improved by playing puzzle games such as Tetris or brain-training games. Executive function skills were not substantially improved by playing strategy games. Averaging over a variety of strategy and brain-training games, there was not strong improvement in reasoning skills or memory skills.

Do people learn anything useful from playing commercially available computer games? Based on the current research base, there is not strong evidence that playing off-the-shelf games is an effective way to improve job-related cognitive skills. The main exceptions are that playing action video games can improve perceptual attention skills and that playing spatial puzzle games can improve mental rotation of shapes similar to the ones in the game. If you want to learn or teach other kinds of cognitive skills, there is not yet suitable evidence to support a role for game playing.

Are Games More Effective Than Conventional Media?

If you have a training goal in mind, you might wonder whether creating a computer game for learning—which can be a costly task—is worth the effort. Can you get equivalent (or even better) learning from less expensive instructional media, such as booklets or PowerPoint presentations? For example, suppose you want to learn some basic electronics about how wet-cell batteries work. Would it be better to learn by playing an adventure computer game or by simply viewing a series of PowerPoint slides that directly provide the needed information? In short, we want to know whether people learn academic content better from a computer game or from conventional media (such as a printed book or PowerPoint presentation).

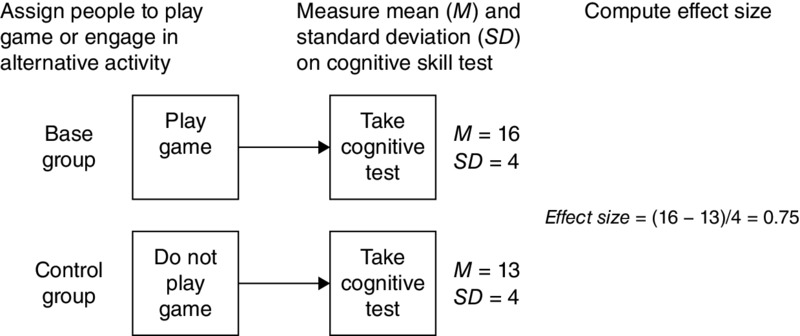

Your decision is best informed by what Mayer (2014a) calls media comparison research, in which we compare the learning outcome test performance of a group that learns with a game (game group) versus a group that learns the same material with conventional media (conventional group). Figure 17.8 summarizes the basic structure of a media comparison experiment in which one group learns about a topic by playing a game (game group), whereas another group learns identical material from conventional media such as watching a PowerPoint presentation (conventional group). If the conventional group performs as well as (or better than) the game group, then it might not make sense to go to the time and expense of creating computer games. We are particularly interested in situations in which the game group outperforms the conventional group on a learning outcome test, with an effect size greater than 0.5.

Figure 17.8 Media Comparison Experiment Compares Game Group and Conventional Group on Learning Outcome.

Media comparison research has been criticized rightfully on the grounds that it is very difficult or even impossible to equate the game and conventional groups on exposure to the same material and the same instructional method (Clark, R.E., 2001). In particular, Clark (2001) argues that instructional methods cause learning, not instructional media. According to this view, the main rationale for using games is that they afford instructional methods that are not available with conventional media or they foster motivation to play that results in greater persistence than conventional media. Thus, in some of the media comparison studies recently reviewed by Mayer (2014a), the game and conventional groups may also involve different content and/or instructional methods so it is not possible to attribute differences in learning solely to differences in media.

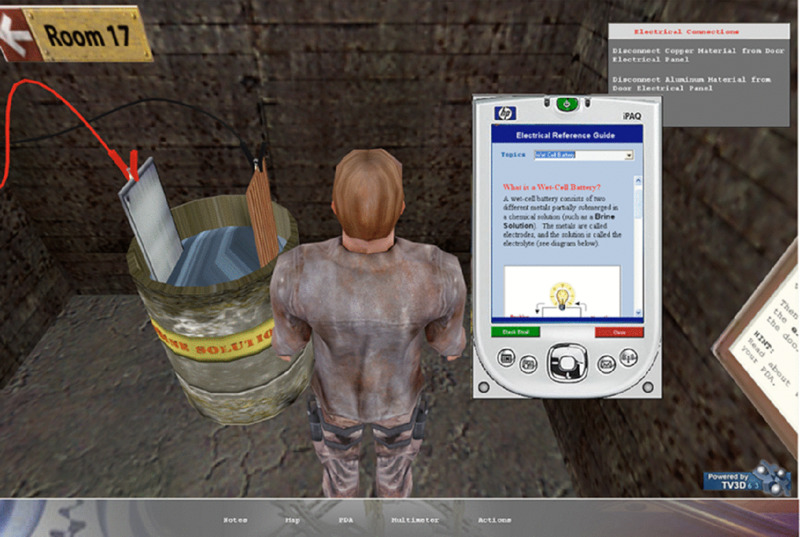

As an example of a media comparison experiment, Adams, Mayer, MacNamara, Koenig, and Wainess (2012) sought to teach college students how a wet-cell battery works either by playing an educational adventure game, Cache 17, on a desktop computer or by viewing a series of PowerPoint slides on a desktop computer. A screen shot from the game is shown in Figure 17.9. In the game, players must make their way through a WWII bunker system in search of lost artwork, and along the way must construct a wet-cell battery to provide power to open a stuck door, using information provided on a PDA. On a subsequent test on how a wet-cell battery works, the slide group outperformed the game group, suggesting that the game might not be the most effective way to teach scientific content. It appears that the theme of the game (finding lost artwork) and the actions required in the game (navigating along corridors) may have distracted learners from the core academic content.

Figure 17.9 Cache 17 Game.

From Adams, Mayer, MacNamara, Koenig, and Wainess, 2012.

In contrast, Moreno, Mayer, Spires, and Lester (2001) found that students learned more about how plants grow from playing a computer game, Design-A-Plant, than from reading the same information in an online tutorial. In this case, the theme of the game was to select the roots, stem, and leaves for a plant to grow in a particular climate on a newly discovered planet. Thus, the game directly draws attention to how plant features relate to its survival, which serves the instructional objective.

Media comparison research such as this work is just getting started, with the majority of research studies published within the last ten years. For this reason, a National Research Council report (Honey & Hilton, 2011), Learning Science Through Computer Games and Simulations, concluded that, although there have been numerous development projects aimed at building exciting games for science learning, “there is relatively little research evidence on the effectiveness of simulations and games for learning” (p. 21).

Table 17.4 summarizes the instructional effectiveness of using games rather than conventional media for several subject areas based on a meta-analysis by Mayer (2014a). The meta-analysis found the strongest evidence favoring games comes when the content is scientific material, such as in Design-A-Plant. In twelve out of sixteen experiments involving science topics, the game group outperformed the conventional group, with an effect size in the medium-to-large range. Thus, games may be more effective for teaching science than traditional lessons when the games are well-designed and engaging. There was not enough evidence to draw strong conclusions about other content areas, so we will need to revisit this issue as evidence accumulates.

Table 17.4. Is Game Playing More Effective Than Conventional Instruction?

| Content Area | Level of Evidence |
| --- | --- |
| Science | Strong |
| Mathematics | Weak |
| Language arts | Insufficient |
| Social studies | Insufficient |

Overall, the media comparison approach does not yet provide convincing evidence encouraging us to convert all forms of traditional instruction into games, but games may serve a useful role in some specific situations. The best advice we can provide based on the current state of the research is that there is not strong evidence to support converting conventional training into games, although there may be useful ways to incorporate short, focused games into an instructional program, particularly when the game focuses the players’ attention on the key instructional material.

What We Don’t Know About Learning with Computer Games

Game research is in its initial stages, so there is still much to be learned. Some important questions to be resolved include:

- What features make games effective? We do not yet have enough evidence about the effectiveness of many commonly used features such as narrative theme (basing the game on an elaborate cover story), competition (providing prizes for points earned in the game), and choice (allowing players to choose the characteristics of the game characters and background). On one hand, there is some reason to suppose that such features could distract players away from the core educational content; on the other hand, these kinds of features could increase players’ motivation to persist in playing the game.

- Can games improve players’ cognitive skills? We do not have enough evidence to determine whether brain training games can be effective in improving a range of cognitive skills that transfer to new situations. To date, the strongest effects of game playing have been found when the tests of cognitive skill are very similar to the cognitive activities in the game. The issue of transfer has a long and disappointing history in education, so until there is convincing evidence to the contrary, we cannot assume that cognitive training in games will transfer to new skills and contexts.

- How can games be effectively integrated into traditional instructional contexts? Research is needed on when and how extensively to use games in training programs. Rather than asking whether games are more effective than conventional media in general, it makes more sense to determine specifically when and where games are more effective than conventional media.

- Are games more effective for certain types of learners, certain types of instructional objectives, and certain types of content? Clearly, we have a good start on the science of learning with games, but many questions are still open.

Chapter Reflection

- What role do you see for educational games in your training or educational programs?

- Which instructional objectives are best suited for game-like media?

- Do you see greater utility to adding games to existing courses or to converting existing courses into games?

- Under what conditions might the benefits of developing games outweigh the costs in terms of development time and expense?

COMING NEXT

This chapter concludes our review of the most recent evidence on how to most effectively design, develop, or select e-learning programs that lead to effective and efficient learning. In our next and final chapter, we integrate all of the guidelines presented throughout the book as well as look to the future of research and application of evidence in e-learning.

Suggested Readings

- Honey, M.A., & Hilton, M.L. (Eds.). (2011). Learning science through computer games and simulations. Washington, DC: National Academies Press. This is a consensus report commissioned by the National Research Council examining what the research has to say about the educational effectiveness of computer games and simulations in scientific and technical disciplines, particularly aimed at teaching complex skills.

- Mayer, R.E. (2014a). Computer games for learning: An evidence-based approach. Cambridge, MA: MIT Press. This book gives you an up-to-date review of what the research has to say about which instructional features improve game effectiveness (value added research), which kinds of games improve which kinds of cognitive skills (cognitive consequences research), and whether people learn better with games or conventional media (media comparison research).

- O’Neil, H.F., & Perez, R.S. (Eds.). (2008). Computer games and team and individual learning. Amsterdam: Elsevier. The editors asked chapter authors to summarize research on whether game playing improves learning outcomes and to offer guidance for designing effective games, particularly for adult learners.

- Tobias, S., & Fletcher, J.D. (Eds.). (2011). Computer games and instruction. Charlotte, NC: Information Age Publishing. Here you can pick from twenty-one chapters written by experts on the instructional effectiveness of educational games.

CHAPTER OUTLINE

- Applying the Evidence-Based Guidelines to e-Courses

- Evidence-Based e-Learning in a Nutshell

- Effect Sizes for Principles

- e-Lesson Guidelines Checklist

- Review of Sample 1: Excel for Small Business

- Review of Sample 2: Synchronous Excel Lesson

- Review of Sample 3: Automotive Troubleshooting Simulation

- Reflections on Past Predictions

- Fifteen Years Later

- Beyond 2016 in Multimedia Research

- More Productive Research Questions

- Longer Experimental Treatments with Measures of Delayed Learning

- More Research Conducted in Authentic Environments

- Increased Emphasis on Motivational Aspects of e-Learning

- Increased Emphasis on Metacognitive Aspects of e-Learning

- Increased Focus on the Efficiency of e-Learning

- Increased Emphasis on Assessment in e-Learning

- Increased Transfer of Research-Based Guidelines into Practice