CHAPTER 18

Applying the Guidelines

CHAPTER SUMMARY

This chapter consolidates all the guidelines we have discussed throughout the book by: (1) reviewing the effect sizes for most of the major instructional methods in the book, (2) presenting a checklist of guidelines in a single exhibit you can use as a working aid, and (3) discussing how the guidelines apply or are violated in three short e-learning examples. In this chapter you have the opportunity to consider how all of the guidelines may best apply to your own context.

As a result of a growing research repository on many of these principles, we have been able to expand and update the effect sizes we listed in earlier editions and add new guidelines to our checklist. To conclude, we review our predictions made in previous editions and look ahead to the future directions of multimedia research.

Applying the Evidence-Based Guidelines to e-Courses

The goal of our book is to help consumers and designers of e-learning make decisions based on empirical research and on the psychological processes of learning. In an ideal world, the effectiveness of e-courseware would be judged by measuring how well and how efficiently learners achieve the learning objectives. Such an evaluation requires a validation process in which learners are formally tested (using a validated test) after completing the training. In our experience, formal course validation is rare. More often, consumers and designers judge a course's effectiveness by its lesson features or student ratings rather than by its learning outcomes. We recommend that, among the features assessed, you include the research-based guidelines we have presented. We recognize that decisions about e-learning alternatives will not be based on evidence alone. A variety of factors shape e-learning decisions, including the desired outcome of the training, the culture of the organization sponsoring the training, the technological constraints of the platforms and networks available to the learners, and pragmatic issues related to politics, time, and budget. That is why you will need to adapt our guidelines to your unique context.

Your technological constraints and development resources will determine whether you develop and deliver courseware with media elements that demand few resources, such as text and simple graphics, or whether you can include media elements that require greater resources, such as video, audio, animation, and simulations. If you are planning an Internet or intranet course, you can engage learners through collaborative facilities such as discussion boards, chats, and breakout rooms.

Evidence-Based e-Learning in a Nutshell

In consolidating the principles summarized throughout the book, we can make a couple of general statements about the best use of media elements and instructional methods for multimedia instruction intended for novice learners, who are most susceptible to mental overload. In situations that support audio, best learning will result from concise informal narration of relevant graphics. (Please refer to Chapter 6 for exceptions.) In situations that preclude audio, best learning will result from concise informal written explanations of relevant graphics in which the corresponding text and graphic are placed near each other on the screen. In all cases, learning of novices is best promoted by dividing content into short segments and allowing learners to control the rate at which they access each segment. In addition, in lessons of any complexity, the pretraining principle recommends sequencing supporting concepts prior to the process or procedure that is the focus of the lesson.

To support engagement, novice learners benefit from instructional methods that promote generative learning, including worked examples accompanied by self-explanation questions, supported drawing assignments, job-relevant questions, peer teaching assignments, and individual or collaborative problem solving on challenging authentic problems. Learning also benefits from task-focused feedback that includes an explanation of why a particular response is correct or incorrect. Although we have much to learn about their design and best application, scenario-based learning and educational games are emerging as effective high-engagement multimedia approaches.

Effect Sizes for Principles

Table 18.1 lists the average effect sizes on tests of learning for most of the major themes in this book. Recall from Chapter 3 that an effect size tells us how much test scores improve, expressed as a proportion of a standard deviation, when you apply a principle. For example, if you apply the multimedia principle, you can expect test scores, on average, to be more than one standard deviation higher than those from the same lesson without visuals. For our purposes, we suggest that any effect size greater than 0.5 indicates a practical improvement worth applying. Table 18.1 also lists the number of comparisons on which each effect size is based, as well as the source of the data. In general, a higher effect size based on a larger number of comparisons suggests a more robust principle. All things being equal, feedback is one of the more powerful methods you can use, with an effect size of nearly 0.8 based on nearly seven thousand comparisons. In contrast, learner control has a negligible effect size, supporting our recommendation for program control in most situations (as described in Chapter 15).
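To make the definition concrete, the short Python sketch that follows computes one common effect-size measure, Cohen's d: the difference between two group means divided by their pooled standard deviation. Using the pooled standard deviation is an assumption for illustration; individual studies and meta-analyses may standardize differently (for example, by the control group's standard deviation, or with a small-sample correction). The test scores are invented and do not come from any study.

```python
from math import sqrt
from statistics import mean, stdev

PRACTICAL_THRESHOLD = 0.5  # the chapter's rule of thumb for a practical improvement


def cohens_d(treatment, control):
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)
    pooled_sd = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


# Hypothetical post-test scores (invented for illustration only)
with_visuals = [78, 85, 82, 90, 75, 88]
text_only = [70, 74, 68, 80, 72, 76]

d = cohens_d(with_visuals, text_only)
print(f"d = {d:.2f}, worth applying: {d > PRACTICAL_THRESHOLD}")
# prints: d = 1.89, worth applying: True
```

With these invented scores, the lesson with visuals outscores the text-only version by roughly 1.9 pooled standard deviations, well above the 0.5 rule of thumb.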

Table 18.1. Summary of Median Learning Effect Sizes for e-Learning Design Principles.

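| Principle | Median Effect Size | Number of Comparisons | Source |
| --- | --- | --- | --- |
| Multimedia | 1.39 | 11 | Mayer, 2009 |
| Contiguity | 1.10 | 22 | Mayer & Fiorella, 2014 |
| Coherence | 0.86 | 23 | Mayer & Fiorella, 2014 |
| Modality | 0.76 | 60+ | Mayer & Pilegard, 2014 |
| Redundancy | 0.86 | 16 | Mayer & Fiorella, 2014 |
| Personalization: Informal Language | 0.79 | 17 | Mayer, 2014d |
| Personalization: Voice | 0.74 | 6 | Mayer, 2014d |
| Personalization: Image | 0.20 | 14 | Mayer, 2014d |
| Embodiment | 0.36 | 11 | Mayer, 2014d |
| Segmenting | 0.79 | 10 | Mayer & Pilegard, 2014 |
| Pretraining | 0.75 | 16 | Mayer & Pilegard, 2014 |
| Worked Examples | 0.57 | 151 | Hattie, 2009 |
| Worked Example with Engagement | 1.00 | NR* | Renkl, 2014 |
| Generative Methods: Self-Testing (Practice Questions) | 0.62 | 47 | Fiorella & Mayer, 2015 |
| Generative Methods: Peer Teaching | 0.77 | 19 | Fiorella & Mayer, 2015 |
| Generative Methods: Self-Explaining | 0.61 | 54 | Fiorella & Mayer, 2015 |
| Generative Methods: Drawing | 0.40 | 28 | Fiorella & Mayer, 2015 |
| Feedback | 0.73 | 196 | Hattie, 2009 |
| Feedback | 0.72 | 8 | Johnson & Priest, 2014 |
| Learner Control | 0.05 | 29 | Karich, Burns, & Maki, 2014 |
| Cooperative Learning | 0.59 | 284 | Hattie, 2009 |
| Critical Thinking Interventions: Overall | 0.30 | 341 | Abrami et al., 2015 |
| Critical Thinking Interventions: Generic Overall | 0.30 | 341 | Abrami et al., 2015 |
| Critical Thinking Interventions: Domain Specific Overall | 0.57 | 97 | Abrami et al., 2015 |
| Critical Thinking Interventions: Uses Authentic Problems | 0.25 | 22 | Abrami et al., 2015 |
| Critical Thinking Interventions: Includes Dialog | 0.23 | 43 | Abrami et al., 2015 |
| Critical Thinking Interventions: Authentic + Dialog + Mentor | 0.57 | 19 | Abrami et al., 2015 |
| Games vs. Traditional: Science | 0.69 | 16 | Mayer, 2014a |
| Games vs. Traditional: Second Language | 0.96 | 5 | Mayer, 2014a |
| Methods That Improve Learning from Games: Modality | 1.41 | 9 | Mayer, 2014a |
| Methods That Improve Learning from Games: Personalization | 1.54 | 8 | Mayer, 2014a |
| Methods That Improve Learning from Games: Pretraining | 0.77 | 7 | Mayer, 2014a |
| Methods That Improve Learning from Games: Coaching | 0.68 | 7 | Mayer, 2014a |
| Methods That Improve Learning from Games: Self-Explanation | 0.81 | 6 | Mayer, 2014a |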

*NR = Not Reported

Keep in mind, however, that you cannot directly compare these effect sizes, because they represent average values drawn from many studies using diverse learners, materials, and conditions. Furthermore, as we have seen, most of the principles are most effective under specific conditions, called boundary conditions. For example, feedback is most effective when it explains the task or the process for completing the task.

With these caveats in mind, you can see that most of the principles we have summarized have effect sizes that exceed our 0.5 guideline. Effect sizes lower than 0.5 indicate that a particular method may not be worth implementing. As you review the table, also consider the costs and benefits of the different methods. For example, if two methods each have an effect size of about 0.56 and one is easy and inexpensive to implement, you may emphasize that method over the other. Other factors to consider are learning efficiency and learner motivation. A method with a lower but positive effect size that leads to more efficient learning or to greater learner motivation may deserve higher priority than a method with a higher learning effect size. We anticipate that future research will provide more data on the motivational and efficiency benefits of these methods, giving you a broader perspective from which to make application decisions.
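As a rough first pass only, the following Python sketch screens the single-value rows of Table 18.1 against the 0.5 rule of thumb. The dictionary simply transcribes those table values (multi-part rows such as Personalization are omitted), and the function name `screen` is hypothetical. A mechanical screen like this ignores the caveats just discussed (boundary conditions, cost, efficiency, and motivation), so treat its output as a starting point for discussion rather than a decision.

```python
# Median effect sizes transcribed from the single-value rows of Table 18.1.
TABLE_18_1 = {
    "Multimedia": 1.39,
    "Contiguity": 1.10,
    "Coherence": 0.86,
    "Modality": 0.76,
    "Redundancy": 0.86,
    "Embodiment": 0.36,
    "Segmenting": 0.79,
    "Pretraining": 0.75,
    "Worked Examples": 0.57,
    "Learner Control": 0.05,
    "Cooperative Learning": 0.59,
}

THRESHOLD = 0.5  # the chapter's practical-improvement rule of thumb


def screen(effect_sizes, threshold=THRESHOLD):
    """Hypothetical helper: split principles into those at/above and below a threshold."""
    above = sorted(
        (name for name, d in effect_sizes.items() if d >= threshold),
        key=lambda name: effect_sizes[name],
        reverse=True,  # strongest effects first
    )
    below = sorted(name for name, d in effect_sizes.items() if d < threshold)
    return above, below


above, below = screen(TABLE_18_1)
print("At or above threshold:", above)
print("Below threshold:", below)
```

Only Embodiment and Learner Control fall below 0.5 in this subset, which matches the chapter's reading of the table; cost and motivation would still need to be weighed separately.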

e-Lesson Guidelines Checklist

In this section we offer three brief examples of how the most important guidelines might be applied (or violated) in e-learning courses. Two of the samples reflect a directive architecture for teaching Excel skills—one asynchronous and the other synchronous. The third sample is a simulation based on a guided discovery architecture designed to give automotive technicians practice in troubleshooting.

We do not offer these guidelines as a rating system, and we don’t claim to have included all the important variables you should consider when evaluating e-learning alternatives. Furthermore, which guidelines you will apply will depend on the goal of your training and the environmental considerations mentioned previously. Instead of a rating system, we offer these guidelines as a checklist of research-based features you should consider in your e-learning design and selection decisions.

We have organized the guidelines in Exhibit 18.1 by chapters and according to the technological constraints and training goals for e-learning as summarized in Table 18.2.

Table 18.2. Organization of Guidelines in Exhibit 18.1.

| Guidelines | Apply to |
| --- | --- |
| 1 to 28 | All forms of e-learning |
| 29 to 46 | e-Learning designed to teach job tasks |
| 47 to 53 | e-Learning with collaborative facilities |
| 54 to 57 | Design of asynchronous e-learning navigation |
| 58 to 67 | e-Learning to build thinking skills and for games |

Feel free to make a copy of Exhibit 18.1 for easy reference as you review the samples to follow.

Review of Sample 1: Excel for Small Business

Figures 18.1 through 18.6 are screen captures from an asynchronous directive Excel lesson. The course is designed to help small business owners use spreadsheets. The course design assumes that learners are new to spreadsheets and Excel. Some of the learning objectives include:

- To identify and name cells

- To construct formulas for common calculations

- To use Excel functions

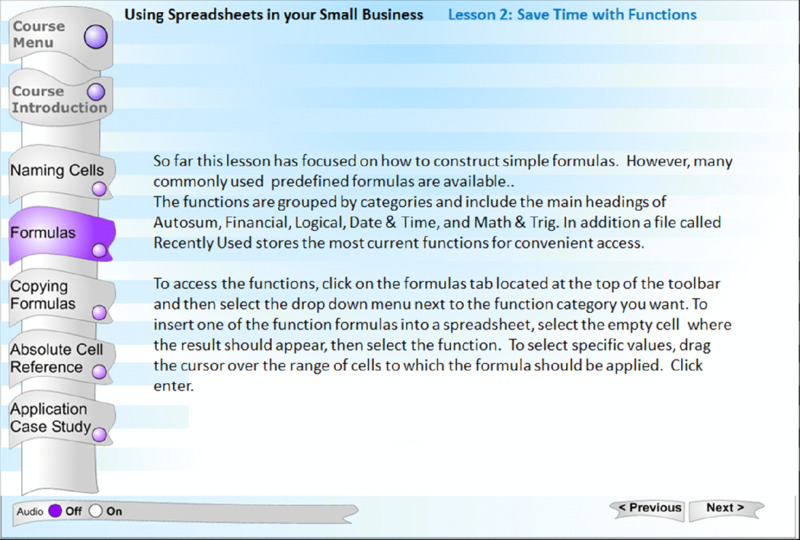

Figure 18.1 What’s Wrong Here?

Take a look at Figure 18.1 on the topic of functions in Excel, review guidelines 1 through 28, and make a list of the guidelines you feel are violated. Then look at Figures 18.2 and 18.3, and put a check by each violation on your list that is remedied in those revisions. When you are finished, compare your analysis to ours.

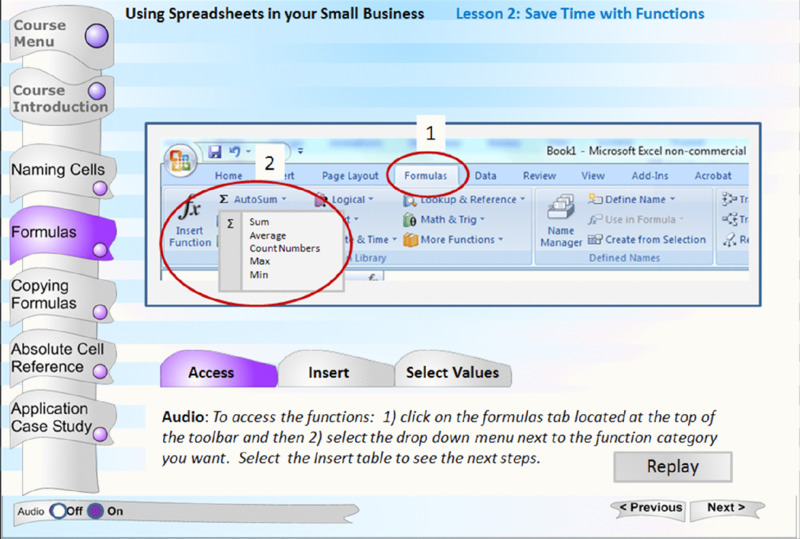

Figure 18.2 What Guidelines Are Applied in This Revision of Figure 18.1?

Figure 18.3 What Guidelines Are Applied in This Revision of Figure 18.1?

As you can see, the screen in Figure 18.1 includes a lot of text presenting an introduction to and procedure for using functions in Excel. Clearly, it violates the multimedia and coherence principles. The revised screen in Figure 18.2 applies the multimedia principle by incorporating a visual of the relevant toolbar, and it applies the coherence principle by presenting only a small amount of text on the screen. Applying the segmenting principle, the procedure is broken into a few steps organized under the tabs Access, Insert, and Select Values. Steps are displayed with callouts to maximize contiguity between text and graphics. The revised screen in Figure 18.3 applies the modality principle by using brief audio rather than text to present a few steps at a time. It also directs attention to the relevant portion of the visual through cueing circles and numbers corresponding to the steps. As should be the case with any audio, controls allow the learner to replay it as desired. Because the audio does not repeat on-screen text, the redundancy principle is not violated.

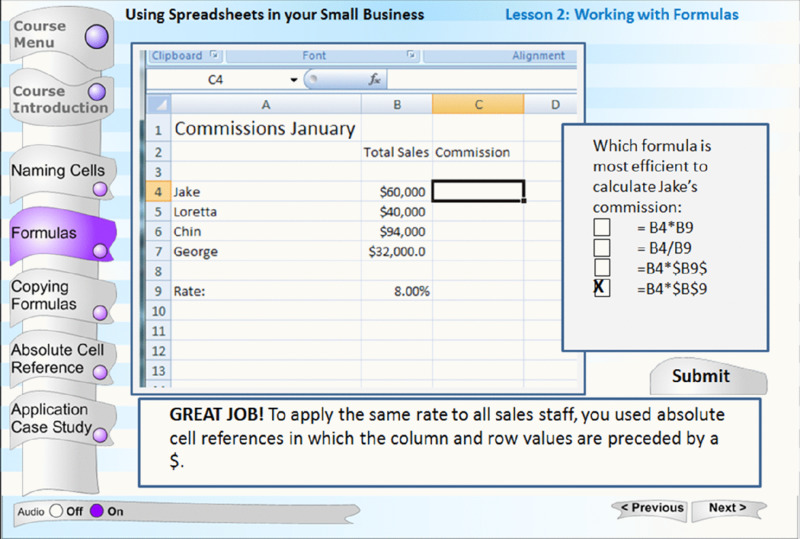

Next take a look at Figure 18.4 and refer to guidelines 29 through 46. Make a list of ways you think this practice exercise could be improved. Then look at a revision in Figure 18.5 and note which violations in your list have been remedied. Are there any further improvements you would make to the revision in Figure 18.5?

Figure 18.4 What’s Wrong Here?

Figure 18.5 What Guidelines Are Applied in This Revision of Figure 18.4?

Figure 18.4 shows a practice exercise with feedback. We note three major problems. First, the practice question is a recall (regurgitate) question. Although recall is needed on occasion, we recommend that for most workforce learning applications you rely on higher-level application questions. Second, the practice directions and input boxes are separated from the spreadsheet, requiring the learner to expend mental effort integrating the two; we recommend a more contiguous layout. Third, the feedback tells the learner that the answer is incorrect but does not explain why. Because both correct and incorrect answers may result from guessing, providing an explanation for every response option improves learning. Some of these shortcomings are remedied in Figure 18.5: the question is at the application level, it is more contiguous with the spreadsheet, and explanatory feedback is provided. However, the feedback statement “Great Job” may draw attention to the ego rather than to the task. Research on feedback recommends avoiding praise in favor of explanations that focus on the task or process.

Our final screen sample from the asynchronous Excel course in Figure 18.6 shows a worked example with a self-explanation question. The lesson has demonstrated inputting an incorrectly formatted formula in Cell E6 to calculate February profit. The self-explanation question requires the learner to evaluate the demonstration by identifying the error in the formula.

Figure 18.6 Use of Self-Explanation Question to Promote Engagement with an Example.

Review of Sample 2: Synchronous Excel Lesson

Figures 18.7 through 18.9 are taken from a virtual classroom lesson on How to Use Excel Formulas. Synchronous e-learning has continued to gain market share since our third edition, and you can apply most of the principles in this book to virtual classroom lessons. The goal of the sample lesson is to teach end-user spreadsheet procedures. The lesson objectives are:

- To construct formulas with valid formatting conventions

- To perform basic calculations using formulas in Excel

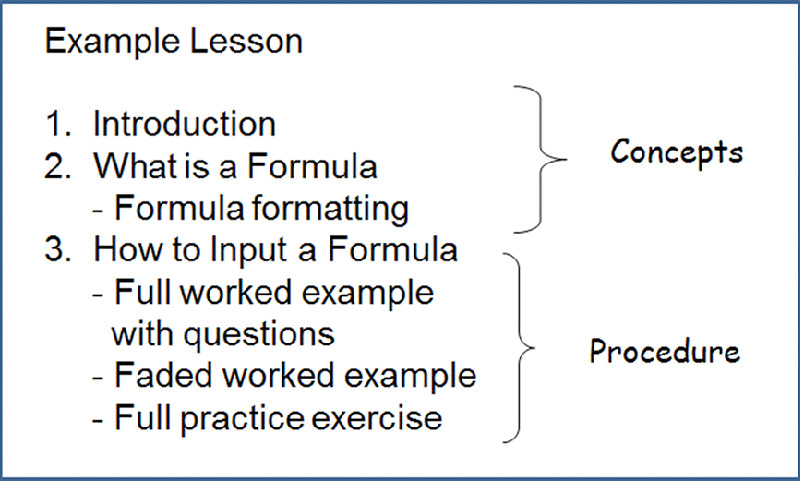

Figure 18.7 Content Outline of Synchronous Excel Lesson.

Figure 18.7 shows a content outline. In applying guideline 17 based on the pretraining principle, the procedural part of the lesson is preceded by important concepts. Before learning the steps to input a formula in Excel, the lesson teaches the concept of a formula, including its formatting conventions. When teaching the procedures, the lesson applies guidelines for worked examples by starting with a full worked example accompanied by self-explanation questions and fades to a full practice exercise.

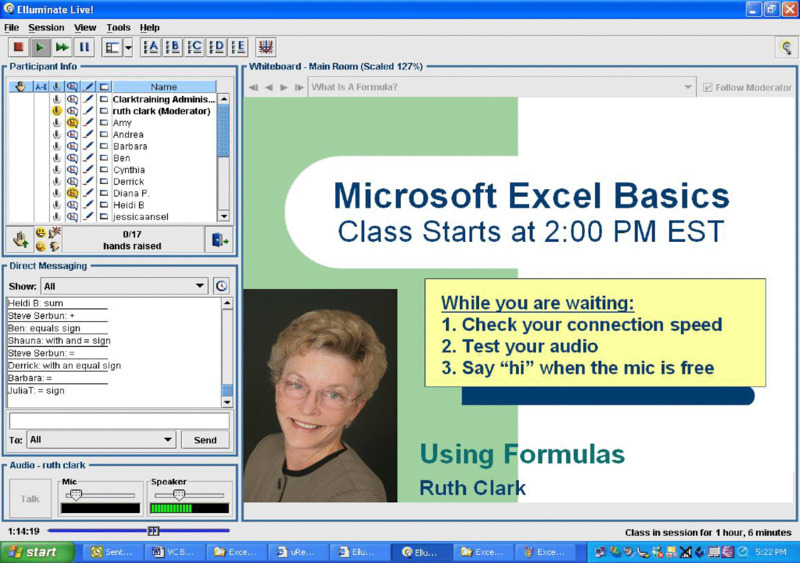

Although virtual classroom tools can project a video image of the instructor, in this lesson the instructor uses audio alone. Research we reviewed in Chapter 9 shows that it is the voice of a learning agent, not the image, that is most instrumental in promoting learning. Because the main instructional message is displayed on the whiteboard slides, the instructor decided to minimize the potential for split attention caused by a video image. The introductory slide is shown in Figure 18.8. The instructor places her photo on this slide, applying guideline 53 to promote social presence. She further builds social presence by inviting participants to use their audio as they join the session and by calling them by name during the session. One of the advantages of the virtual classroom is the opportunity to leverage social presence during learning through the learners' chat and audio participation, as well as through collaboration in breakout rooms.

Figure 18.8 Introduction to Synchronous Excel Lesson.

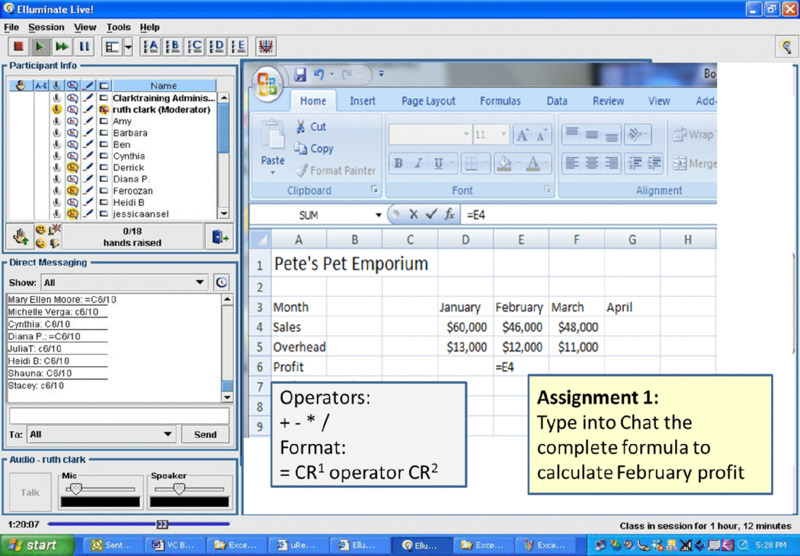

Figure 18.9 illustrates guidance in the form of example fading and memory support in the virtual classroom. The spreadsheet window in the center of the virtual classroom interface is projected to the learners through application sharing. The instructor has completed the first step in the procedure by typing the equal sign and the first cell reference into the correct spreadsheet cell. The instructor asks participants to finish the example by typing the rest of the formula in the chat window. Note that in applying guideline 20, the directions are displayed on the screen in text, since participants need to refer to them as they work the exercise. Additional memory support is provided in the left-hand box on the spreadsheet, which displays the valid operator syntax. The amount of guidance in this example should be faded as the lesson progresses.

Figure 18.9 Guidance from Faded Worked Example and Memory Support.

From Clark and Kwinn, 2007.

From this brief look at some virtual classroom samples, you can see that just about all of the principles we describe in the book apply. Because the class proceeds under instructor rather than learner control, it is especially critical to apply all guidelines that reduce extraneous mental load. Lesson designers should create effective visuals to project on the whiteboard that will be described verbally by the instructor, applying the multimedia and modality principles. The instructor should use a conversational tone and language and incorporate participant audio to apply personalization. Skill-building classes can apply all of our guidelines for faded worked examples and effective generative methods to promote relevant engagement. The presence of multiple participants in virtual sessions lends itself to collaborative projects. Most virtual classroom tools offer breakout rooms in which small teams can carry out assignments. Apply guidelines 47 through 53 as you plan collaborative activities. As with asynchronous e-learning, instructors should minimize irrelevant visual effects, stories, themes, or audio in accordance with the coherence principle.

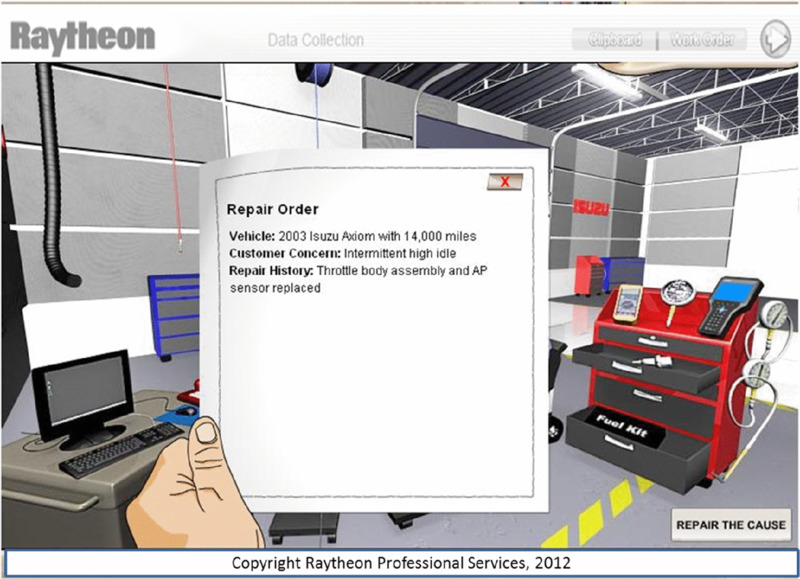

Review of Sample 3: Automotive Troubleshooting Simulation

In Chapter 1 we identified the opportunity to accelerate expertise as one of the unique promises of digital learning environments. Figures 18.10 through 18.13 are from a simulation designed to give experienced automotive technicians compressed opportunities to practice unusual troubleshooting situations. The learner starts with a point-of-view perspective in an auto shop that includes all common troubleshooting tools. In Figure 18.10 you see the trigger event for the case in the form of a work order. As is typical of guided discovery learning environments, the learner is free to use various tools in the shop to diagnose and repair the failure. There are several sources of guidance. First, a telephone offers technical advice. Second, the computer opens to the actual reference system the technician uses on the job. Third, if the learner clicks on a tool that is irrelevant to the current problem, the system responds that the test is not relevant to this problem. This response constrains the environment in order to guide learners to the specific tests relevant to the case.

Figure 18.10 Work Order Triggers Automotive Troubleshooting Case.

With Permission from Raytheon Professional Services.

Figure 18.11 Computer Offers Technical Guidance During Troubleshooting Case.

With Permission from Raytheon Professional Services.

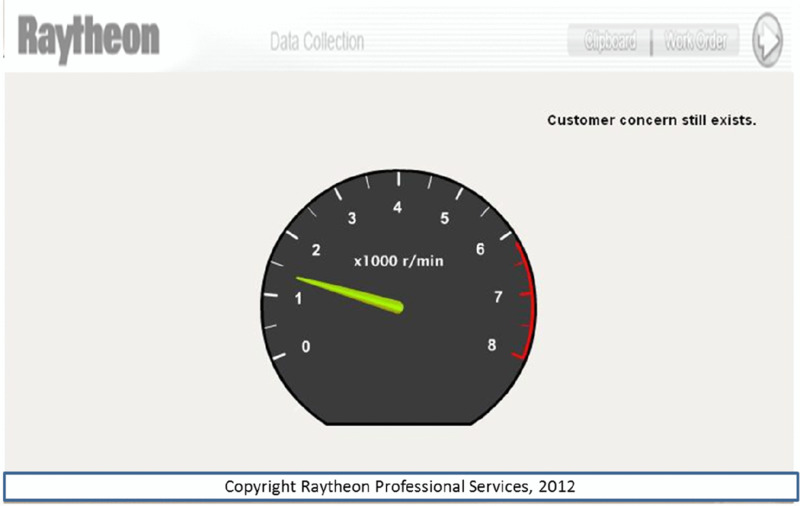

Figure 18.12 Continuing High Idle Shows That the Correct Diagnosis Was Not Selected.

With Permission from Raytheon Professional Services.

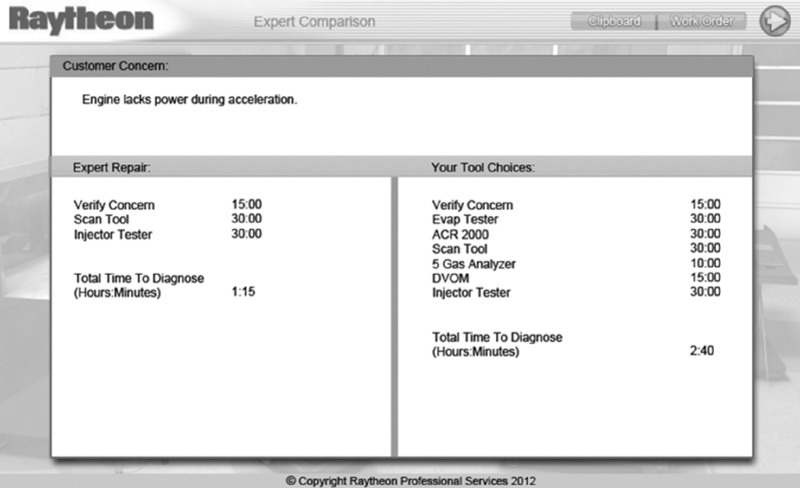

Figure 18.13 End of Troubleshooting Simulation Allows Student-Expert Solution Comparisons.

With Permission from Raytheon Professional Services.

If the learner selects an incorrect failure and repair action, the high idle indicated in Figure 18.12 shows the learner that the failure has not been resolved. Once the case is correctly resolved, the learners get feedback and an opportunity for reflection by comparing their activities in the right window with those of an expert shown in the left window in Figure 18.13.

This lesson applies most of guidelines 58 through 64, which address e-learning to build thinking skills. By situating the learner in a typical automotive shop, the simulation gives her virtual access to the tools and resources she would have on the job. The goal, rules, activities, and feedback of the simulation are all aligned to the desired learning outcome, that is, to promote an efficient troubleshooting process to identify and correct the failure. Learners can see a map of their steps and compare it with an expert approach. Thus, the lesson focuses not only on finding the correct answer but also on how the answer is derived. Several sources of structure and guidance are available, consistent with guideline 62.

Since the structure of the case study is guided discovery, it emphasizes learning during problem solving. Regarding navigation, there is a high level of learner control. Overall, our assessment is that this course offers a good model for simulation environments designed for workers with relevant background knowledge and experience.

Reflections on Past Predictions

In the following section, we review our predictions from our first edition, followed by our observations fifteen years later. Because developing e-learning material for workforce learning is an expensive commitment, we had predicted more examples of online training that apply guidelines proven to lead to return on investment. Specifically, we made the following predictions:

- Fewer Las Vegas–style courses that depress learning by overuse of glitz and games. Instead, the power of technology will be leveraged more effectively to support acquisition and transfer of job-related skills.

- More problem-centered designs that use job-realistic problems at the start of a lesson or course to establish relevance, in the body of the lesson to help learners build related knowledge and skills, and at the end of the lesson to provide practice and assessment opportunities.

- More creative ways to blend computer technology with other delivery media so that the features of each medium are best used to support ongoing job-relevant skill requirements.

Fifteen Years Later

Over the past fifteen years we have seen a gradual increase in the proportion of courses delivered digitally. Fifteen years ago only a little over one-tenth of all learning was delivered via digital devices. By 2013 digital learning accounted for nearly 40 percent of all workforce learning media. Refer to Figure 1.4 (page 15) for a chart showing the growth of technology-delivered training. The growing proportion of online learning reflects (1) cost savings during a time of economic contraction, (2) more pervasive and efficient technology in terms of bandwidth, digital devices, and authoring systems, and (3) growing familiarity with and reliance on technology, including mobile devices. Although we predict that the proportion of electronic delivery will continue to grow with the expansion of training and performance support on mobile devices, we also believe that face-to-face training will continue to account for a substantial proportion of instructional delivery. Blends of face-to-face and digital learning will support better learning through distributed practice.

e-Learning implementations will continue to expand beyond training to include integrated knowledge management resources, including traditional online references, resources, and collaborative tools workers can access during job task completion. For example, if a salesperson is writing her first proposal, the company website will offer industry-specific information, sample proposal templates, social network links to mentors, and recorded mini-lessons on proposal success.

Scenario-based learning and games are two high-engagement environments that have gained significant attention since our first edition. Just in the past few years, sufficient research on games and scenario-based e-learning has accumulated to support two evidence-based books: Computer Games for Learning (Mayer, 2014a) summarized in Chapter 17 and Scenario-Based e-Learning (Clark, 2013). The research repository has helped us ask and answer more effective questions about these high-engagement environments, such as What specific features improve learning from games and scenario-based e-learning? and For what kinds of skills are games and scenario-based e-learning most effective? As the evidence on games and scenario-based learning grows and is internalized by training professionals, we anticipate expanded use of these environments.

Beyond 2016 in Multimedia Research

In this fourth edition, we have been able to add principles and to qualify previous principles based on the expanding research base that is our primary source of guidance. For some principles, so many experiments have been published that meta-analytic studies allow us not only to recommend guidelines with greater confidence but also to qualify the situations in which those guidelines will be most applicable. For example, a recent review of the modality principle (use audio to describe complex visuals) included more than sixty research studies comparing learning from lessons that presented words in audio with lessons that presented the same words in written text (Mayer & Pilegard, 2014). A median effect size of 0.76 was reported based on diverse lessons that focused on lightning, fish locomotion, graph reading, science games, geometry, and electric circuits, to name just a few. This substantial effect size, based on a large number of experiments, gives us greater confidence in the modality principle. In addition, meta-analysis gives insight into the boundary conditions under which any given principle is most effective. For example, the modality principle applies most when learners are new to the content and when the audio segments are brief.

What changes do we look for in multimedia research over the next ten years? Our predictions follow.

More Productive Research Questions

Research questions have evolved over the past fifteen years. Early research often focused on whether a given instructional approach was better than traditional face-to-face training. Evidence accumulated over a period of time showed that a better question is: Which instructional techniques are more effective for specific learner populations and learning goals? For example, rather than asking whether games are more effective than traditional lessons, a more productive line of questioning asks: Which features of games make them more effective for learning? We project that reframed and focused research questions such as these will lead to more robust guidelines for practitioners.

We anticipate that, in addition to more research on “What works?” (including replications that probe the robustness of design principles), we will see more research investigating “When does it work?” and “How does it work?” Concerning when a principle works, we expect more research pinpointing the boundary conditions of design principles, particularly whether they work better for certain kinds of learners or learning objectives. Concerning how a principle works, we expect advances in how research contributes to learning theory, so we can have a better idea of cognitive processes during e-learning, such as selecting relevant information, mentally organizing information into a coherent structure, and integrating incoming information with relevant prior knowledge.

Longer Experimental Treatments with Measures of Delayed Learning

Many of the experimental treatments we have to date are quite brief, ranging from just a few minutes up to an hour in length. In addition, learning is generally measured within a few minutes after completion of the lesson. We look forward to more studies that assess the effects of lessons of longer duration—both immediately after completion as well as at a later time period. For example, the spacing effect—the finding that learning is better from spaced practice items than from massed practice items—is generally only seen on a delayed test. Had researchers not measured delayed learning, we would not know of that principle. We anticipate more studies that will report both immediate and delayed learning.

More Research Conducted in Authentic Environments

Many early studies on e-learning principles were conducted as controlled laboratory experiments, with college students as the participants. This work has provided the basis for many design principles. In the future, we expect lab studies to be complemented with more experiments that examine learning by actual trainees in realistic training environments. Expanding the scope of research to include more realistic learning environments can provide more rigorous tests of design principles, yielding insight into when they work best, and can strengthen research-based learning theories that apply beyond the lab.

Increased Emphasis on Motivational Aspects of e-Learning

Most research to date focuses on the learning outcomes of instructional treatments, but a less-studied issue concerns the role of motivation—reflected in the learner’s effort during instruction. We know that asynchronous e-learning is associated with low completion rates, which suggests low motivation by the learners. An instructional method or approach that generates greater motivation than another may lead to increased persistence and effort by learners. It would be useful to have more research studies that measure not only learning outcomes but also motivation. The call for including measures of motivation in addition to learning outcome measures is particularly relevant to research on games for learning, because games are intended to prime motivation in players, reflected in persistence in the game. We predict greater emphasis on motivation measures in future research studies.

Increased Emphasis on Metacognitive Aspects of e-Learning

e-Learning in authentic situations requires learners to actively manage their cognitive processing, including monitoring how well they are learning and adjusting their learning processes accordingly. Preliminary research we discussed in Chapter 15 shows that learners often lack appropriate metacognitive skills, such as knowing how to make choices about what to do next in an e-learning course that offers a lot of choices. Conducting this kind of research requires more in-process measures of what the learner is doing during the learning process, including cognitive neuroscience measures of brain activity, eye-movement measures, physiological measures, as well as data mining of learning activity logs. We foresee future research that examines learners’ cognitive processing during learning in addition to learning outcomes and that examines how to prime or even teach effective metacognitive strategies in learners.

Increased Focus on the Efficiency of e-Learning

To date, most research has sought to determine which instructional design features and instructional methods foster better learning. However, instructional effectiveness must be balanced against costs, including the time learners need to achieve an instructional objective, the time developers need to build a learning environment, and the expense involved. Efficiency measures, regarding both the time to achieve learning objectives and the time to design and develop learning environments, are other important data that can inform practitioner decisions. We expect future research to focus increasingly on the efficiency of e-learning.

Increased Emphasis on Assessment in e-Learning

The focus of research over the past fifteen years continues to be on improving learning outcomes as measured after lesson completion, but a related issue concerns how to assess how well people are learning during e-learning. Data mining based on analysis of learner activity during learning, such as recording the timing of each keystroke during learning, and on analysis of error patterns in problem solving during learning can lead to adaptive learning systems that individualize learning. Embedded testing—in which assessing learning outcomes is a part of the instructional program—can help us develop ways to use assessment to guide instruction. We expect future research to increasingly examine the place of assessment in e-learning.

Increased Transfer of Research-Based Guidelines into Practice

Research results on applied questions, such as “Should I explain a visual with on-screen text or audio narration?” should translate into improved learning products by practitioners. Research has not always translated into practice in the past because (1) materials and tasks used in experiments were not representative of real-world training settings, (2) practitioners were not aware of research results applicable to their work, and (3) many practitioners and their clients consider themselves learning experts as a result of their years in educational settings. We are encouraged by the evidence-based practice emphasis in the allied health professions, which we believe will spread into educational and training domains. We project a closer alliance between researchers and practitioners mediated by books such as this one, professional societies, joint projects, and educational institutions.

The future is likely to bring continuing advances in educational technology, including improvements in mobile technology, virtual reality, social media, and gaming. However, in spite of the impressive changes in technology, there are some things we hope will not change in the future: (1) using rigorous scientific methodology (including experiments with random assignment of subjects, control groups, and appropriate measures of learning outcomes and processes); (2) focusing on educationally relevant learning tasks to help address practical questions; and (3) grounding research in cognitive learning theory to help address theoretical questions. We expect research on the design of e-learning to maintain high standards of scientific methodology with a balanced focus on both practical and theoretical implications. In short, what should not change is a commitment to evidence-based practice.

In summary, we have seen a healthy accumulation of evidence-based guidelines for the design of e-learning over the past fifteen years. We eagerly look to advances in the quantity, quality, and dissemination of future research that will help guide practitioner decisions in the selection, design, and development of e-learning.

In Conclusion

We began this book in Chapter 1 with a summary of the unique promises and pitfalls inherent in digital technology for instruction. We hope that the guidelines and evidence that we have described in this fourth edition will be a resource that minimizes the pitfalls and optimizes the promise of multimedia learning in your instructional environments.