So far, we have primarily discussed vertex and fragment shaders that take meshes, transform their vertices onto the screen, and color the pixels. Most shaders take this form. We’ve already seen the power of these types of shaders and the broad range of capabilities they have, but they are not the only types of shaders. In the shader pipeline, there are two optional stages that we have not yet encountered: the tessellation shader and the geometry shader. On top of that, there are compute shaders, which operate outside the usual mesh shading pipeline and can be used for arbitrary calculations on the GPU. In this chapter, we will explore some of these strange and exotic new types of shaders and add powerful new tools to our box of tricks.

Tessellation Shaders

Figure: The shader pipeline stages. In this example, a quad mesh is turned into a grassy mesh with blades – new geometry – growing out of new vertices created during the pipeline.

Figure: The tessellation shader can create new vertices on the edges or within the face of each triangle of a mesh.

Calling it a “tessellation shader” is slightly misleading because there are actually two programmable stages and one fixed-function stage involved in the process:

The tessellation control shader (TCS), also called the hull shader, defines how much tessellation should be applied to each face. This shader is not required for tessellation, although we will be including it in each example.

It receives a patch made up of a small handful of vertices, and it can use information about those vertices to control the amount of tessellation. Unlike vertex shaders, hull shaders can access data about multiple vertices at once. We can configure the number of vertices in each patch.

The tessellation primitive generation fixed-function stage, also called the “tessellator,” is situated between the two programmable stages. It creates new primitives (i.e., triangles or quads) based on the hull shader output.

The tessellation evaluation shader (TES), also called the domain shader, is responsible for positioning the vertices output by the tessellator.

Although the domain shader is typically used to interpolate the position of new vertices based on the positions of existing vertices, you may change the position however you want. It is commonly used to offset the positions of vertices.

If some of this went over your head on the first read-through, don’t worry – it only really clicked for me after I worked through an example for the first time. There are many cases where tessellation can be used to achieve more aesthetically pleasing results, so let’s start with a water wave effect.

Tessellation is often seen as a more advanced shader feature – after all, it’s completely optional, and there are many moving parts to it. I’ve put it in the “Advanced Shaders” chapter for that reason. But keep in mind that, like any other shader, it’s just made up of relatively small functions! Take the code I’ve written and try adding, removing, and hacking bits of it around to see what changes on-screen – hands-on experience will likely help you deepen your understanding of tessellation.

Water Wave Effect with Tessellation

Figure: Two instances of Unity’s built-in plane mesh with a wave effect applied and different tessellation settings used. On the left, no tessellation is used. On the right, each quad is subdivided eight times, resulting in smoother waves.

Figure: Changing the Shading Mode to Shaded Wireframe.

We’ll start by creating the effect in shader code and then see how it works in Shader Graph.

In Unity 2021.3 LTS, tessellation is only compatible with HDRP Shader Graph, so unfortunately, we won’t be able to use URP Shader Graph for this effect.

Wave Tessellation in Shader Code

The wave tessellation shader skeleton

URP RenderPipeline tag

URP forward pass tag

Required files for the built-in pipeline

Required files for URP

Wave shader properties

Adding wave shader properties in the built-in pipeline

Adding wave shader properties in URP

#pragma statements for tessellation

Now, you may have noticed something strange in Listing 12-1. Not only do we have the vert function, but I’ve also added a function named tessVert, which looks suspiciously like an extra vertex shader function. Here’s why. Ordinarily, the vertex shader is used to transform data about meshes from object space to clip space, but this shader will be different; I want to offset the vertices of the mesh upward in world space after the tessellation shader has run (indeed, the entire point of the shader is to smooth out the shape of those waves). However, the vertex shader always runs first. Therefore, I’m supplying two vertex functions: one called vert, which is “officially” the vertex shader for this file, and another called tessVert, which I will run manually after all tessellation has been applied.

Wave Vertex Shader

The appdata struct for the wave effect

The tessControlPoint struct for the wave effect

The vert vertex function

That’s the vertex stage complete, and we can move on to the hull shader.

Wave Tessellation Control (Hull) Shader

The tessHull function takes in a patch of control points.

Think of a patch as a single polygon. It can contain between 1 and 32 control points, but in our case, we’ll just use 3, which makes a triangle.

The hull shader can access all control points in that patch.

The first parameter to the hull shader is the patch itself. We specify what data each vertex holds (in our case, each one is a tessControlPoint) and how many vertices are in the patch (3).

The second parameter is the ID of a vertex in the patch. The hull shader runs once per vertex and outputs one vertex per invocation.

The output of tessHull will just be one vertex. We’ll use the ID parameter to grab a vertex from the patch and then return that vertex.

To tell Unity we are using triangles, we’ll need a few attributes:

The domain attribute (not to be confused with the domain shader) takes the value tri. Other possible values are quad or isoline – these values depend on what type of mesh you have.

The outputcontrolpoints attribute is used to define how many control points are created per patch. We’ll use the value 3.

The outputtopology attribute is used to define what primitive type the tessellator outputs. This is also based on the mesh used. In our case, we’ll use triangle_cw, which means triangles with clockwise winding order. Other possible values are triangle_ccw (i.e., counterclockwise winding order), point, and line.

The tessHull function

integer – Snap tessellation factors to the next highest integer value. All subdivisions are equally spaced.

fractional_even – When using non-integer factors, an extra subdivision will appear when going between one even-numbered factor and the next. This subdivision is not equally spaced with nearby subdivisions – it grows as the tessellation factor increases until you hit an even number.

fractional_odd – Same as fractional_even, but the changes apply to odd-numbered factors instead.

pow2 – This seems to be the same as integer in the cases I tried out.
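To get a feel for how the first three partitioning modes differ, here is a small sketch of how many segments an edge ends up with for a given factor. It is written in Python rather than HLSL purely so that it can be run outside Unity. The function name segment_count is my own, and the rounding rules are an assumption based on how graphics API specifications commonly describe these modes; the sketch ignores pow2 and the uneven spacing of the extra fractional segments.

```python
import math


def segment_count(mode, factor, max_factor=64):
    """Approximate number of segments an edge is split into.

    Hypothetical helper for illustration only. It does not model
    factors of zero or below, which would cull the patch.
    """
    if mode == "integer":
        # Clamp to [1, max], then snap up to the next whole number.
        clamped = min(max(factor, 1.0), max_factor)
        return math.ceil(clamped)
    if mode == "fractional_even":
        # Clamp to [2, max], then snap up to the next even number.
        clamped = min(max(factor, 2.0), max_factor)
        n = math.ceil(clamped)
        return n if n % 2 == 0 else n + 1
    if mode == "fractional_odd":
        # Clamp to [1, max - 1], then snap up to the next odd number.
        clamped = min(max(factor, 1.0), max_factor - 1)
        n = math.ceil(clamped)
        return n if n % 2 == 1 else n + 1
    raise ValueError("unsupported partitioning mode: " + mode)


for mode in ("integer", "fractional_even", "fractional_odd"):
    print(mode, segment_count(mode, 2.3))
```

Under these assumptions, a factor of 2.3 gives three segments with integer and fractional_odd, but four segments with fractional_even.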

Partitioning and patch constant function attributes

Figure: Three versions of the default Unity quad with different tessellation factors. From left to right, the edges and the inside of the mesh use 1, 2, and 4 as their tessellation factors, respectively.

The tessFactors struct

The patch constant function

Now that we have handled both sides of the hull shader, we can move on to the domain shader.

Wave Tessellation Evaluation (Domain) Shader

The tessellator takes the control points output by the hull shader and the tessellation factors output by the patch constant function and calculates new control points, which it passes to the domain shader. The domain shader is invoked once per new control point; the parameters to the domain shader are the tessellation factors from the patch constant function, the patch output by the hull shader, and a set of coordinates. These are the barycentric coordinates of the new point, which describe how the new vertex is weighted between the original three control points of the triangle. For example, a vertex with barycentric coordinates (0.5, 0.5, 0) lies exactly on the halfway point of one of the triangle’s edges. These coordinates use the SV_DomainLocation semantic.
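The barycentric weighting is easier to see with numbers. The following is a CPU-side sketch in Python, not the domain shader itself, and the function name interpolate_barycentric is my own. It blends the three control point positions using the barycentric coordinates as weights, which is the same arithmetic a domain shader typically applies to each vertex attribute.

```python
def interpolate_barycentric(p0, p1, p2, bary):
    """Blend three points using barycentric weights (bary sums to 1).

    Hypothetical helper for illustration only.
    """
    return tuple(
        bary[0] * a + bary[1] * b + bary[2] * c
        for a, b, c in zip(p0, p1, p2)
    )


# A triangle in object space.
p0 = (0.0, 0.0, 0.0)
p1 = (2.0, 0.0, 0.0)
p2 = (0.0, 2.0, 0.0)

# (0.5, 0.5, 0) ignores p2 entirely and lands halfway between p0 and p1.
print(interpolate_barycentric(p0, p1, p2, (0.5, 0.5, 0.0)))
```

Coordinates of (1, 0, 0) return the first control point unchanged, and equal weights of one third each return the center of the triangle.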

The tessDomain function

Remember that at this point, all the calculations have been operating in object space – it is still necessary to transform from object to clip space. That’s what the tessVert function will do.

Wave Tessellation tessVert Function and Fragment Shader

We will transform the vertices to world space with unity_ObjectToWorld, a matrix that is provided by Unity.

Then, we’ll apply a height offset based on the time since level start, the _WaveSpeed property value, and the x- and z-positions of the vertex in world space.

By applying a sine function to those variables, the waves will bob up and down over time.

We’ll then multiply the offset by _WaveStrength so that we have control over the physical size of the waves and add it to the y-position in world space.

We can then transform the position from world to clip space with UNITY_MATRIX_VP. We need the positions to be in clip space before rasterization, so we’re finished with positions now.

Finally, we’ll use TRANSFORM_TEX to deal with tiling and offsetting the UVs.
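The height offset in the middle of those steps can be sketched outside Unity. This is a Python illustration rather than shader code, the function name wave_offset is my own, and the way the x- and z-positions are combined (a simple sum) is an assumption; the only parts taken from the steps above are the sine function, the time and speed terms, and the multiplication by the wave strength.

```python
import math


def wave_offset(world_x, world_z, time, wave_speed, wave_strength):
    """Height offset for one vertex, in world-space units.

    Hypothetical helper: summing x and z is an assumed way of
    combining the two positions, chosen for illustration.
    """
    phase = world_x + world_z + time * wave_speed
    # sin returns -1..1, so the result stays within +/- wave_strength.
    return math.sin(phase) * wave_strength


# Offset the y-position of a world-space vertex at time = 2 seconds.
x, y, z = 1.0, 0.0, 3.0
y += wave_offset(x, z, time=2.0, wave_speed=0.5, wave_strength=0.25)
print((x, y, z))
```

Because the sine output is bounded, the wave strength directly sets the maximum distance a vertex can move up or down.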

The tessVert function

The fragment shader

With that, you should be able to see tessellation on your objects using this shader, as in Figure 12-2. Obviously it’s difficult to showcase the quality of an animation in a book, so play around with the tessellation factors in the code to see how they impact the smoothness of the waves on your own computer. You will probably be able to find a sweet spot where the waves start looking smooth, and increasing the tessellation factors past that point has diminishing returns. Now that we have created tessellated waves in shader code, let’s see how the same can be achieved with Shader Graph.

Wave Tessellation in Shader Graph

Tessellation became available in Shader Graph with HDRP version 12.0, corresponding to Unity 2021.2. Unfortunately, URP Shader Graph does not yet support tessellation, so this effect will only work in HDRP. On the flip side, tessellation is a lot easier to achieve with Shader Graph. Let’s see how.

A Color named Base Color that will provide a way to tint the albedo of the water.

A Texture2D called Base Texture that will also affect the albedo.

A Float called Wave Speed that is used to control how fast the waves spread across the surface of the water.

A Float called Wave Strength that represents how high and low the waves travel in world space. A value of 1 means the waves travel one Unity unit up and down.

A Float called Tess Factor (short for “tessellation factor”) that we’ll use to configure how many times the mesh gets subdivided. This property should use a slider between 1 and 64 (1 means no subdivisions, and 64 is the hardware limit).

Max Displacement – The maximum distance, in Unity units, that the tessellated triangles can be displaced from their original position. This isn’t a hard limit, but it prevents triangles being improperly culled.

Triangle Culling Epsilon – Higher values mean that more triangles are culled.

Start Fade Distance – At this distance (in Unity units) from the camera, tessellation will start to fade by reducing the tessellation factor.

End Fade Distance – At this distance (in Unity units) from the camera, tessellation stops (i.e., the tessellation factor is 1).

Triangle Size – When a triangle is above this size, in pixels, HDRP will subdivide it. Lower values mean smaller triangles get subdivided, and therefore the resulting mesh will be smoother.

Tessellation Mode – Choose between None and Phong. With Phong tessellation, Unity will interpolate the newly generated geometry to smooth the mesh.

Tessellation Factor – This is the same as the tessellation factor we saw in the code-based tessellation shader. This is the number of times a triangle is subdivided. However, there is no way to provide different edge factors for each edge or inside factors for the inside of the triangle – they all use the same value.

Tessellation Displacement – This is the offset, in world space, of the vertices of the mesh. The offset is applied after tessellation, so it just happens to be perfect for the wave effect we’re building.

With these blocks accessible on the master stack, we can get to work creating the wave effect. First, connect the Tess Factor property to the Tessellation Factor block. This will let us dynamically change the amount of tessellation on each material that uses this shader.

Figure: Tessellation Factor and Tessellation Displacement in Shader Graph.

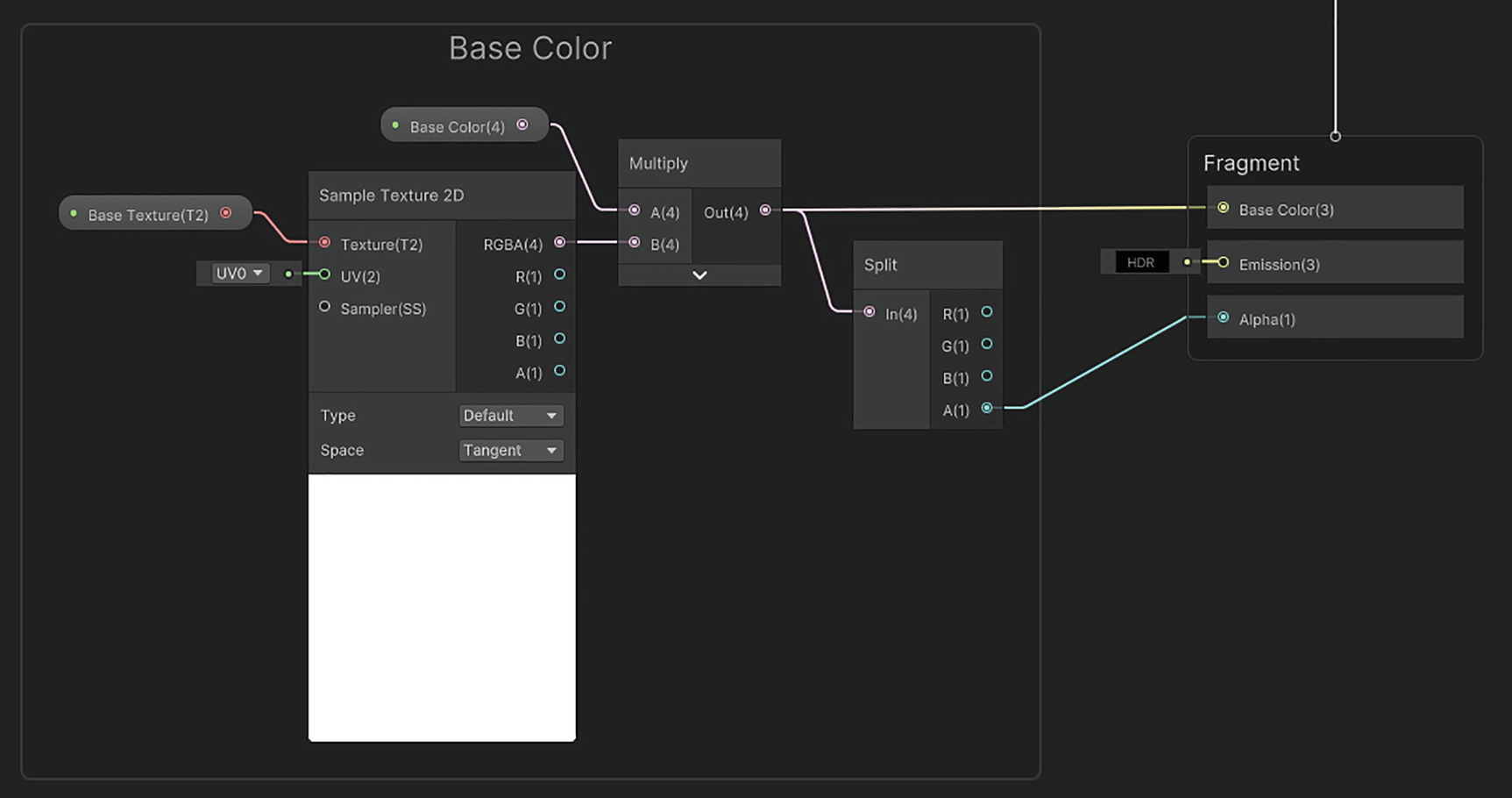

Figure: Outputting the Base Color.

The graph is now complete, and you should see results just like those we saw with the code-based version of the shader (see Figure 12-2). Note that as you change the tessellation factor property, Unity will use fractional_odd subdivision behavior when using non-integer values, rather than the “integer” behavior we used with the code-based shader.

As you can see, tessellation is a powerful technique that can achieve things that are impossible with the vertex and fragment shaders we have used throughout the book so far. In the next example of tessellation, we will build a simplified LOD system that uses a high tessellation factor for objects close to the camera and a low tessellation factor when objects are far from the camera.

Level of Detail Using Tessellation

For the wave shader, we used a uniform amount of tessellation for each object – that is, every triangle of each object using a material with this shader used the same tessellation factor. That doesn’t have to be the case. When a mesh is close to the camera, we want to use a high tessellation factor so that we get the most benefits out of the slightly increased processing time. But when a mesh is far away, we can get away with using a far lower tessellation factor. Even for large objects that exist both close to and far away from the camera, it is in our best interest to use lots of tessellation for the closest triangles and not as much for the furthest ones. In this shader example, we’ll forget about waves and see how we can build a tessellation-based LOD system for a basic stationary mesh. Let’s see how to do this in shader code and then in HDRP Shader Graph.

Level of Detail Tessellation in Shader Code

The TessLOD shader skeleton

When the distance of an edge (in Unity units) from the camera is less than _TessMinDistance, that edge uses the full tessellation factor, defined in the _TessAmount property.

When the distance is above _TessMaxDistance, the mesh uses a tessellation factor of 1, which means there is no tessellation at all.

When the distance of an edge is between the two properties, the tessellation factor gets smaller the further from the camera you get.

The Properties block

Adding tessellation LOD properties in the built-in pipeline

Adding tessellation LOD properties in URP

The tessControlPoint struct for the tessellation LOD effect

The vert function for the tessellation LOD effect

Store the positions of the three vertices of the triangle in the patch in variables named triPos0, triPos1, and triPos2. I’ll refer to variables with this naming system as triPosX from now on.

Calculate the midpoint of each edge and store the result in variables called edgePosX.

Get the world-space position of the camera from the built-in _WorldSpaceCameraPos variable.

Calculate the distance of the three edges from the camera and store the result in distX.

Use a bit of math to figure out an edge factor value for each edge, stored in edgeFactorX. These values are normalized between 0 and 1, where 0 corresponds to edges past the _TessMaxDistance and 1 corresponds to edges closer than _TessMinDistance.

Calculate the actual edge tessellation factors, f.edge[X], by squaring edgeFactorX and multiplying by the original _TessAmount (squaring is optional, but I found it looked better than not squaring). This could result in zero factors, which stop the triangle from being rendered, so take the max of this value and 1 so that the factor is always at least 1.

Calculate the inside tessellation factor by taking the mean of the three edge factors.
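The steps above can be sketched on the CPU to check the numbers. This is Python rather than HLSL, the function names are my own, and two details are assumptions on my part: the “bit of math” is taken to be a linear remap of distance between the min and max distances, and each edge is paired with the midpoint of the two vertices opposite the matching corner.

```python
import math


def edge_factor(dist, min_dist, max_dist):
    """Normalized factor: 1 at or below min_dist, 0 at or beyond max_dist.

    Assumes a linear falloff between the two distances.
    """
    t = (dist - min_dist) / (max_dist - min_dist)
    return min(max(1.0 - t, 0.0), 1.0)


def tess_factors(tri, camera_pos, tess_amount, min_dist, max_dist):
    """Return (edge factors, inside factor) for one triangle patch."""
    p0, p1, p2 = tri

    def midpoint(a, b):
        return tuple((x + y) / 2.0 for x, y in zip(a, b))

    # Assumed pairing: edge X sits opposite vertex X.
    edge_positions = [midpoint(p1, p2), midpoint(p2, p0), midpoint(p0, p1)]

    edges = []
    for edge_pos in edge_positions:
        dist = math.dist(edge_pos, camera_pos)
        factor = edge_factor(dist, min_dist, max_dist)
        # Square the factor, scale it, and never drop below 1.
        edges.append(max(factor * factor * tess_amount, 1.0))

    inside = sum(edges) / 3.0
    return edges, inside


triangle = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(tess_factors(triangle, (0.0, 0.0, 0.0), 8.0, 5.0, 15.0))
print(tess_factors(triangle, (0.0, 0.0, 100.0), 8.0, 5.0, 15.0))
```

With the camera right next to the triangle, every factor equals the full tessellation amount; with the camera 100 units away, every factor falls back to 1.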

The patchConstantFunc function for variable tessellation based on distance

The tessDomain function interpolating properties and outputting v2f

The shader is now complete, and you will see a different number of subdivisions on some triangles as you move the camera closer to or further away from certain meshes, as shown previously in Figure 12-2. Try tweaking the min and max distances to see how the fade-out behavior of the tessellation works. Now let’s see how this works in Shader Graph.

Level of Detail Tessellation in Shader Graph

Believe it or not, you already saw how this works in Shader Graph if you followed along with the Waves example for Shader Graph, as this functionality is built into Shader Graph directly! Remember that tessellation only works in HDRP Shader Graph. When tessellation is enabled for a Shader Graph, then a material that uses that shader will have a handful of tessellation-related options exposed in the Inspector. The relevant ones for us are Start Fade Distance and End Fade Distance, which I briefly explained previously.

Figure: The Start Fade Distance for this mesh is 5 units, and the End Fade Distance is 15 units, with a base tessellation factor of 64. This produces an extreme transition between high and low tessellation factors on the mesh.

We have now thoroughly explored tessellation factors and have seen how they can be used to increase the resolution of vertex-based effects for higher-quality results. Next, let’s explore another optional type of shader: the geometry shader.

Geometry Shaders

Geometry shaders are another optional stage in the rendering pipeline – see stage 3b in Figure 12-1. A geometry shader function receives an input primitive (such as a point or triangle) and an output stream of primitives, and it can create brand-new primitives (based on the one it received as input) and append those to the stream. The original primitive is not automatically added to the stream, so you may end up with completely different geometry from what you started with.

Although they are powerful, geometry shaders have some drawbacks. Often, they are quite slow, and many use cases for geometry shaders could be solved more efficiently with tessellation or compute shaders, albeit with potentially more complexity on the programmer’s side. Also, hardware and API support for geometry shaders can be spotty, so be sure to check that your project’s target devices will be able to support geometry shaders before diving into using them. Finally, geometry shaders are not supported by Shader Graph at all as of the writing of this book, so regrettably, we will not be able to create node-based geometry shaders.

There are many things you can do with geometry shaders, and in the following example, I will show you how to add small bits of geometry to any mesh to display the direction of the normals on the surface for that mesh.

Visualizing Normals with Geometry Shaders

Figure: Each “spike” emanating from the surface is used to visualize the direction of the normal vector at each vertex of the mesh.

The NormalDebug skeleton shader code

Already, you can see some of the structure of the file coming together. The appdata struct is used to supply input data to the vertex shader, but instead of a v2f struct, we have two new structs called v2g and g2f, meaning “vertex to geometry” and “geometry to fragment,” respectively. That should make the flow of data through this shader clear! Alongside the familiar vert and frag functions, the geom function is the geometry shader function, and I’ve added a helper function called geomToClip that we’ll explore later.

A Color called _DebugColor, which is the color used to visualize the normal vectors at each point. We’ll just use a block color for all normals.

A Float called _WireThickness, which represents the width, in Unity units, of each visualized normal. This should be quite small, so I’ll bound the value between 0 and 0.1.

Another Float called _WireLength, which is unsurprisingly the height of each visualized normal. This can be slightly longer, so I’ll bound the value between 0 and 1.

Properties for the NormalDebug shader

Properties in HLSL in the built-in pipeline

Properties in HLSL in URP

The UnityCG.cginc include file for the normal debug effect in the built-in pipeline

The Core.hlsl include file and relevant tags for the normal debug effect in URP

Using the Cull Off keyword

The appdata struct for the normal debug effect

The v2g struct

The g2f struct

The vert function in the built-in pipeline

The vert function in URP

The geom function signature

Calculating the normal, tangent, bitangent, and eight offset vectors

Building two quads using triangle strips

The geomToClip helper function

The frag function for the normal debug effect

Now we have seen a handful of use cases for both geometry and tessellation shaders in Unity. Next, we’ll explore another type of shader that exists outside the typical graphics pipeline, as it can be used for non-graphics purposes.

Compute Shaders

Compute shaders are a special type of shader, distinct from the rest, that exists outside the graphics pipeline in Figure 12-1. Compute shaders can be used for arbitrary code execution on the GPU, meaning that we don’t have to use them for graphics purposes. With compute shaders, we can run a massively parallel application on the GPU, which is much better suited to certain tasks than the CPU. The best use cases are when you have thousands of small tasks that can run independently of each other – does that sound like vertex or fragment processing to you?

Figure: A base terrain mesh with grass blades being generated on each triangle.

Grass Mesh Instancing

There are a couple of typical workflows related to compute shaders. The first involves sending data from the CPU (a C# script) to the GPU (the compute shader), doing some processing on the GPU, then reading the results on the CPU side, and doing something with those results. The second involves sending data from CPU to GPU, running the compute shader, and then reading the results inside a separate shader without needing to copy any data back to the CPU – both shaders can share the same GPU memory. This second approach is useful because copying data between the CPU and GPU is time-consuming, so it’s best to minimize copying data back and forth as much as possible.

A terrain mesh and a grass blade mesh.

A C# script to read data from both meshes and set up the data that needs to be sent to the compute shader.

A compute shader that will receive a list of vertices and triangles of the terrain mesh and then generate a transformation matrix for each triangle.

A “regular” shader for rendering each grass blade. The vertex shader reads one of the transformation matrices generated by the compute shader; applies it to the grass mesh to position, scale, and rotate it in object space; and then applies the MVP matrix to transform to clip space. The fragment shader blends two colors between the base and tip of the grass blade.
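The color blend in the last item is a plain linear interpolation. As a rough Python illustration (not the fragment shader itself), and assuming the vertical UV coordinate runs from 0 at the base of the blade to 1 at the tip, it could look like the following; the function name blend_grass_color and the example colors are my own.

```python
def blend_grass_color(base_color, tip_color, uv_y):
    """Linearly blend from base_color (uv_y = 0) to tip_color (uv_y = 1).

    Hypothetical helper for illustration only.
    """
    t = min(max(uv_y, 0.0), 1.0)  # keep the blend weight in 0..1
    return tuple(b + (tip - b) * t for b, tip in zip(base_color, tip_color))


dark_green = (0.0, 0.5, 0.0)
yellow = (1.0, 1.0, 0.0)

# Halfway up the blade, the color is an even mix of the two.
print(blend_grass_color(dark_green, yellow, 0.5))
```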

Figure: From left to right: the high-poly terrain mesh, the low-poly grass blade mesh, and the UVs for the grass blade mesh. The base and tip of the grass blade mesh are at the bottom and top of the UV space, respectively.

Figure 12-10 shows the terrain and grass blade meshes that I am using for this effect, both of which were created in Blender. You can use a lower-poly terrain mesh if you want, but since we’re going to generate a grass blade on every triangle of the terrain, I wanted mine to be high enough poly so that the grass would appear thick.

Figure: Tick the Read/Write box in the mesh import settings; otherwise, the script will fail.

Now that we can read data from both meshes, let’s start creating the effect by writing the C# script.

The ProceduralGrass C# Script

The ProceduralGrass C# script

Next, let’s deal with the member variables of this class.

ProceduralGrass Properties

The ComputeShader type is, unsurprisingly, the base type for all compute shaders. It’s like the Shader type that we’ve seen previously. We will use a variable of this type to set parameters with which to run the compute shader.

The GraphicsBuffer type is like another type, ComputeBuffer, which is typically used in compute applications. Both types of buffer store data in a format that can be sent to a compute shader, and they can contain most primitive types and even structs. The GraphicsBuffer type is specifically for graphics-related data, whereas ComputeBuffer is for arbitrary data.

The Bounds type is used for bounding boxes that are used when culling objects. Unity won’t be able to calculate this automatically with the technique we’re using, so we will manually calculate the bounds.

A ComputeShader object to store the compute shader used for the effect.

Two Mesh objects to store the terrain mesh and the grass blade mesh. These are both meshes I created in Blender. The grass blade mesh must be assigned from the Editor, but the terrain mesh should be assigned to a MeshFilter component attached to the same object the script is on.

A float to control the scale and a Vector2 to control the minimum and maximum height of the grass blades.

Six different GraphicsBuffer objects – I’ll explain these as I go through the code.

A Bounds object for the combined bounding box of all the grass blade meshes, which will be generated on the terrain.

Three integers related to the compute shader. I’ll also explain these as I go.

Member variables for the ProceduralGrass script

Let’s now move on to the Start method.

ProceduralGrass Start Method

Accessing the kernel function

Figure: Indexing into the vertex array. With meshes that share many vertices between multiple faces, this technique saves space over storing all shared vertices as duplicated three-component vectors in the vertex array.
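Here is a minimal sketch of that indexing scheme, in Python rather than C# so it can be run anywhere. The data and the function name triangle_vertices are made up for illustration: a quad is stored as four vertices plus six indices, and each group of three indices picks out the corners of one triangle.

```python
# Four unique vertex positions for a flat quad.
vertices = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (0.0, 0.0, 1.0),
    (1.0, 0.0, 1.0),
]

# Two triangles; vertices 1 and 2 are shared rather than duplicated.
triangles = [0, 1, 2, 2, 1, 3]


def triangle_vertices(vertices, triangles, tri_index):
    """Look up the three corner positions of triangle number tri_index."""
    start = tri_index * 3
    return [vertices[i] for i in triangles[start:start + 3]]


triangle_count = len(triangles) // 3
for tri in range(triangle_count):
    print(tri, triangle_vertices(vertices, triangles, tri))
```

Without indexing, the same quad would need six full positions instead of four positions and six small integers.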

A target. This is just a type that tells Unity what we’re using the buffer for. We’ll use Target.Structured, because we will be using StructuredBuffer in the compute shader (more on that later).

The number of entries in the buffer. For us, this is the same size as the vertex array.

A stride value. The “stride” refers to the number of bytes each entry in the array takes up, which we use to ensure Unity can pack all the data into the buffer without gaps. We can use the sizeof operator to get the size of a float and then multiply by 3 because it’s a Vector3.

We then use the SetData method on the buffer to bind the vertex array to the buffer, followed by the SetBuffer method on the compute shader to bind the buffer to a specific variable name in the compute shader. I’ll use the name _TerrainPositions.
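The stride arithmetic is worth checking by hand. The following Python sketch is not the C# script; it uses the standard struct module to measure tightly packed 32-bit floats, and the constant and function names are my own. A Vector3 is three floats, and I am assuming a transformation matrix is stored as sixteen floats.

```python
import struct

# "<3f" means three tightly packed 32-bit floats (no padding).
VECTOR3_STRIDE = struct.calcsize("<3f")
# Assumed layout for a 4x4 matrix: sixteen 32-bit floats.
MATRIX4X4_STRIDE = struct.calcsize("<16f")


def buffer_size_bytes(entry_count, stride):
    """Total size of a buffer holding entry_count entries of stride bytes."""
    return entry_count * stride


print(VECTOR3_STRIDE, MATRIX4X4_STRIDE)
print(buffer_size_bytes(4, VECTOR3_STRIDE))
```

A four-vertex mesh therefore needs a 48-byte position buffer when each entry has a 12-byte stride.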

The terrain vertex and triangle buffers

The grass vertex, triangle, and UV buffers

Creating the transformation matrix buffer

Creating the bounding box for the grass blade meshes

Calling the RunComputeShader method

Let’s now look at the RunComputeShader method.

ProceduralGrass RunComputeShader Method

The object-to-world matrix for the transform, which helps us put the grass blades in the correct preliminary position.

The number of triangles in the mesh (i.e., the number of times the compute shader should run).

The minimum and maximum height of each grass blade.

The scale of the grass meshes. If we just used a scale of 1, the grass blades I made in Blender would be about 1 meter in height.

Setting parameters on the compute shader

In addition to sending these parameters, let’s think about how many triangles the compute shader runs at a time and how many sets it will run in total. When we get to writing the compute shader itself, we will specify how many threads are contained in a work group. A single thread runs through the compute shader once, so if we specify, say, 64 threads to a work group, then that group runs through the compute shader 64 times in parallel, with slightly different inputs for each thread. We can divide a work group across one, two, or three dimensions, but we’ll be sticking to one for this shader. We’ll get on to setting the size of a work group later when we write the compute shader, but it’s important to know this information for now.
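The number of work groups follows from the triangle count and the group size. Here is a small Python sketch of that calculation, using the 64-thread example from the paragraph above; the function name thread_group_count is my own, and rounding up is an assumption that guarantees every triangle gets a thread.

```python
def thread_group_count(num_triangles, threads_per_group=64):
    """Number of work groups needed so every triangle gets one thread.

    Integer ceiling division: a partly filled final group still counts.
    """
    return (num_triangles + threads_per_group - 1) // threads_per_group


for count in (64, 65, 1000):
    print(count, thread_group_count(count))
```

When the triangle count is not a multiple of the group size, the final group contains spare threads, so a compute shader written this way would need to skip any thread whose index is past the last triangle.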

Work groups and dispatching the compute shader

After the compute shader has finished running, the _TransformMatrices buffer will be full of usable data. This data can be shared between the compute shader and the conventional grass mesh shader. In the Update method, we will create those grass blades using GPU instancing.

ProceduralGrass Script Update Method

Unlike the compute shader, which we can run just once at the start of the game (if you don’t want to move the terrain mesh or animate the grass in any way), we must tell Unity to render the grass blades every frame, so we’ll do it in Update. Although you are familiar with setting properties on materials by this point, we will be doing things in a slightly different way, because we’ll be using GPU instancing. Conventional rendering issues a draw call for every mesh in the scene, whereas GPU instancing can be used to draw multiple instances of the same mesh in a single draw call, removing a lot of overhead, and those instances can even use different properties as we will see. The only additional consideration in URP is that GPU instancing is incompatible with the SRP Batcher, so we won’t need to include shader variables in a constant buffer – we’ll deal with that later. Let’s see how to run an instanced shader.

The RenderParams object we just created.

The topology of the mesh. This can be one of Triangles, Quads, Lines, LineStrip, or Points – we’ll choose Triangles.

The index buffer. That’s another name for the triangle buffer that is commonly used in computer graphics.

The number of indices to get from the index buffer. We’ll be using the entire buffer.

The number of instances to render. This is the number of grass blades we’ll have, equal to the number of transformation matrices inside _TransformMatrices.

The Update method

With this method, Unity will render one grass blade for each transformation matrix in the buffer. This could mean hundreds, thousands, or even millions of vertices being rendered with surprising efficiency. The final method to write in this script is OnDestroy.

ProceduralGrass Script OnDestroy Method

The OnDestroy method

The script is now complete, but if you attach it to an object right now, then nothing will happen (except maybe a flurry of errors and warnings) since we haven’t written either of the shaders required for the effect. Let’s start by writing the compute shader.

The ProceduralGrass Compute Shader

Compute shaders, which we write with HLSL syntax, are used for arbitrary computation on the GPU. Although this compute shader will serve a graphics purpose in the end, it won’t be displaying any graphics on-screen in and of itself. Create a new compute shader via Create ➤ Shader ➤ Compute Shader, and name it “ProceduralGrass.compute”. I’ll remove all the contents for now, and we’ll write the file from scratch.

Starting off the compute shader

The best number of threads in each work group and in each dimension depends heavily on the nature of the problem you are trying to solve. If the problem is 2D in nature, such as a 2D fluid simulation, then splitting your work groups across the x- and y-axes makes sense. You might be tempted to see our problem as 2D or even 3D given the shape of the terrain mesh, but in reality, all we’re receiving is 1D lists of vertices and triangles, so that’s why I’m only using threads across one dimension. That said, you can try changing the value to see if performance increases, since the optimal values are often hardware-dependent.
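As a minimal sketch, a one-dimensional work group might be declared as follows. The kernel name TerrainOffsets is the one used later in this section; the group size of 64 is only the example figure mentioned earlier, not a tuned value.

```hlsl
// Tell Unity which function to compile as a compute kernel.
#pragma kernel TerrainOffsets

// 64 x 1 x 1 threads per work group: every thread lies along the x-axis.
// A problem that is 2D in nature might instead use something like
// [numthreads(8, 8, 1)].
[numthreads(64, 1, 1)]
void TerrainOffsets(uint3 id : SV_DispatchThreadID)
{
    // id.x is this thread's index across all dispatched work groups.
    // id.y and id.z remain 0 when the dispatch is also one-dimensional.
}
```

Changing the first argument of numthreads is all it takes to experiment with different group sizes, as long as the number of groups dispatched from C# is adjusted to match.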

Compute shader properties

The randomRange and rotationMatrixY functions

The HLSL sincos function, which you may not have seen before, takes three parameters. The first is the angle in radians. The latter two are output variables; this function simultaneously returns the sine and cosine of the input angle through those latter two parameters, respectively.
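To illustrate sincos in use, here is one possible way to write a y-axis rotation helper. The function name rotationMatrixY comes from the text, but the body is a sketch that assumes positions are column vectors multiplied as mul(matrix, position).

```hlsl
// Builds a 4 x 4 matrix that rotates around the y-axis by 'angle' radians.
float4x4 rotationMatrixY(float angle)
{
    float s, c;
    // sincos writes sin(angle) into s and cos(angle) into c in one call.
    sincos(angle, s, c);

    // Each line below is one row of the matrix.
    return float4x4(
         c, 0, s, 0,
         0, 1, 0, 0,
        -s, 0, c, 0,
         0, 0, 0, 1);
}
```

Calling sincos once avoids evaluating the same angle in separate sin and cos calls.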

Any invocations with an ID equal to or higher than _TerrainTriangleCount should end immediately, because IDs start at zero (recall that I talked about the possibility of overshooting if the number of triangles does not divide nicely by the size of each work group).

Find the positions of the three vertices for the current triangle and calculate its center point (triangleCenterPos). This is the “base” position for placing the grass mesh.

Generate two random seeds based on the ID. They are float2 seeds, so we’ll shift the ID components around to get different seeds.

Generate a scaleY value, which represents the height of the grass blade on the current triangle. We’ll use the _MinMaxBladeHeight values to randomize the height.

Generate a random offset value in the x- and z-directions using the two random seeds. This helps ensure the grass does not look too uniform.

Create an initial transformation matrix, grassTransformMatrix, using the scale and offset values described previously. Recall from Chapter 2 how translation and scale can be represented in a 4 × 4 matrix.

Create a random rotation matrix using the rotationMatrixY function. This will rotate each grass blade around the y-axis such that the direction they face is random.

Multiply the randomRotationMatrix, grassTransformMatrix, and _TerrainObjectToWorld matrices together to obtain a single transformation matrix, which transforms one grass blade from object space to world space, adds an offset, scales it, and rotates it. This is stored in the _TransformMatrices buffer.
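The steps above could be sketched as a single kernel along the following lines. This is an illustration under stated assumptions, not a definitive implementation: the _TerrainTriangles, _TerrainPositions, and _MaxOffset names, the hash inside randomRange, and the group size of 64 do not come from the text.

```hlsl
#pragma kernel TerrainOffsets

StructuredBuffer<int> _TerrainTriangles;         // (assumed name) three indices per triangle
StructuredBuffer<float3> _TerrainPositions;      // (assumed name) terrain vertex positions
RWStructuredBuffer<float4x4> _TransformMatrices; // one output matrix per triangle

int _TerrainTriangleCount;
float2 _MinMaxBladeHeight;      // x = minimum height, y = maximum height
float4x4 _TerrainObjectToWorld;
float _MaxOffset;               // (assumed) largest jitter along x and z

// (assumed body) a common sine-based hash, remapped to [minValue, maxValue].
float randomRange(float2 seed, float minValue, float maxValue)
{
    float randomValue = frac(sin(dot(seed, float2(12.9898f, 78.233f))) * 43758.5453f);
    return lerp(minValue, maxValue, randomValue);
}

float4x4 rotationMatrixY(float angle)
{
    float s, c;
    sincos(angle, s, c);
    return float4x4(c, 0, s, 0,   0, 1, 0, 0,   -s, 0, c, 0,   0, 0, 0, 1);
}

[numthreads(64, 1, 1)]
void TerrainOffsets(uint3 id : SV_DispatchThreadID)
{
    // Surplus threads in the final work group have no triangle to process.
    if (id.x >= (uint)_TerrainTriangleCount) return;

    // Look up the three vertices of this triangle and average them.
    uint triStart = id.x * 3;
    float3 posA = _TerrainPositions[_TerrainTriangles[triStart]];
    float3 posB = _TerrainPositions[_TerrainTriangles[triStart + 1]];
    float3 posC = _TerrainPositions[_TerrainTriangles[triStart + 2]];
    float3 triangleCenterPos = (posA + posB + posC) / 3.0f;

    // Two different float2 seeds made by swapping the ID components.
    float2 randomSeed1 = float2(id.x, id.y);
    float2 randomSeed2 = float2(id.y, id.x);

    float scaleY = randomRange(randomSeed1, _MinMaxBladeHeight.x, _MinMaxBladeHeight.y);
    float offsetX = randomRange(randomSeed1, -_MaxOffset, _MaxOffset);
    float offsetZ = randomRange(randomSeed2, -_MaxOffset, _MaxOffset);

    // Scale on the diagonal, translation in the last column.
    float4x4 grassTransformMatrix = float4x4(
        1, 0,      0, triangleCenterPos.x + offsetX,
        0, scaleY, 0, triangleCenterPos.y,
        0, 0,      1, triangleCenterPos.z + offsetZ,
        0, 0,      0, 1);

    float4x4 randomRotationMatrix = rotationMatrixY(randomRange(randomSeed2, 0.0f, 6.2831853f));

    // Applied right to left: rotate, then scale and translate, then terrain object-to-world.
    _TransformMatrices[id.x] = mul(_TerrainObjectToWorld,
        mul(grassTransformMatrix, randomRotationMatrix));
}
```

Because scaleY and offsetX share a seed in this sketch, the two values are correlated; deriving a third seed would decouple them if that matters for your scene.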

The TerrainOffsets kernel

The compute shader gets run once per terrain triangle, so the _TransformMatrices buffer will contain one transformation matrix per terrain triangle. As we saw, our C# code then spawns one grass blade mesh instance for each of those transformation matrices. With that in mind, let’s see the shader that is used to draw those grass blades.

The Grass Blade Shader

Grass shader skeleton code

As you can see, we still use the same v2f struct as usual, as we are still passing data from the vertex shader to the fragment shader. It contains clip-space positions and UV coordinates, but you could add other variables such as normals if you wanted to incorporate lighting into this shader.

Declaring properties inside the Properties block

Declaring properties in HLSL in the built-in pipeline

Declaring properties in HLSL in URP

The UnityCG.cginc include file for the grass blade effect in the built-in pipeline

The Core.hlsl include file and relevant tags for the grass blade effect in URP

Let’s move on to the vert function. Unlike the other vertex shader functions we’ve seen so far, this one won’t take an appdata as a parameter. Instead, it will have two parameters: the vertexID, which is unique for each vertex within a mesh and uses the SV_VertexID semantic, and the instanceID, which is different for each instance of the mesh being rendered and uses the SV_InstanceID semantic. These values will be used as indices to access the StructuredBuffer objects.

Access a transformation matrix from the compute shader via _TransformMatrices[instanceID]. There is one transformation matrix per instance.

Create a v2f object.

Access the vertex position from the _Positions buffer using vertexID as the index. Convert from float3 to float4 by adding a w component with a value of 1.

Multiply the position by the transformation matrix. The position is now in world space.

Convert from world space to clip space by multiplying the position by UNITY_MATRIX_VP.

Get the correct UV coordinates from the _UVs buffer using vertexID as the index.

Return the v2f.
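Put together, the steps above might look something like the following sketch. The buffer names and the two semantics come from the text; the v2f field names and the local variable names are assumptions, and the snippet relies on the pipeline's include file (UnityCG.cginc or Core.hlsl) having already defined UNITY_MATRIX_VP.

```hlsl
StructuredBuffer<float3> _Positions;           // grass blade vertex positions
StructuredBuffer<float2> _UVs;                 // grass blade UV coordinates
StructuredBuffer<float4x4> _TransformMatrices; // written by the compute shader

struct v2f
{
    float4 positionCS : SV_POSITION; // (assumed field name) clip-space position
    float2 uv : TEXCOORD0;
};

v2f vert(uint vertexID : SV_VertexID, uint instanceID : SV_InstanceID)
{
    // One transformation matrix per grass blade instance.
    float4x4 transformMatrix = _TransformMatrices[instanceID];

    v2f o;

    // Promote the float3 position to a float4 with w = 1 so translation applies.
    float4 positionOS = float4(_Positions[vertexID], 1.0f);

    // Blade object space to world space.
    float4 positionWS = mul(transformMatrix, positionOS);

    // World space to clip space.
    o.positionCS = mul(UNITY_MATRIX_VP, positionWS);

    o.uv = _UVs[vertexID];
    return o;
}
```

Since the matrix already includes the terrain's object-to-world transformation, the vertex shader only needs the view-projection matrix rather than the full model-view-projection matrix.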

The vert function for the grass blade effect

The frag function for the grass blade effect

Although it is difficult to tell apart the grass blades at this distance, this screenshot contains millions of them

There are many directions you could take this effect in, such as making the grass sway in the wind, which would require recalculating the transformation matrices every frame and using a different matrix for any vertices touching the ground so that the base of each blade stays in place. You could also try mixing and matching different grass blade meshes, which would require multiple calls to RenderPrimitivesIndexed. This effect should give you a starting point, but the possibilities are endless!

Summary

The tessellation and geometry shader stages are optional stages that lie between the vertex and fragment shader stages.

- Tessellation involves creating new vertices between the existing ones, thereby subdividing the mesh into a higher-polygon version of itself. There are three major components:

The hull shader sets up the control points for the tessellator.

The patch constant function calculates the tessellation factors for the edges and the inside of each primitive.

The domain shader takes the new control points from the tessellator and interpolates vertex attributes from the original control points.

The geometry shader takes an input primitive and a stream of primitives, generates new primitives based on the properties of the input primitive, and adds them to the stream.

- Compute shaders can be used for arbitrary processing of large volumes of data on the GPU. They are best suited to problems that break down into thousands or millions of small, similar tasks that are independent of one another. Graphics is an excellent example of such a problem.

Compute shaders can still be used for graphics-related problems, such as reading large amounts of mesh data and generating new data related to the mesh.

The Graphics.RenderPrimitivesIndexed method can be used to render thousands or millions of instances of a mesh in a single call, provided each instance uses the same shader.