Chapter 2: Integrating Software Applications into the Enterprise

In this chapter, we will look at the options available for building a robust, repeatable framework when developing or commissioning new services or software. We must understand how these systems and services can be built securely and validated. As a security professional, it is important to understand how we can provide assurance that products meet the appropriate levels of trust. We need to provide potential customers with the assurance that our services are trustworthy and meet recognized standards.

In this chapter, we will go through the following topics:

- Integrating security into the development life cycle

- Software assurance

- Baselines and templates

- Security implications of integrating enterprise applications

- Supporting enterprise integration enablers

Integrating security into the development life cycle

When considering introducing new systems into an enterprise environment, it is important to adopt a robust, repeatable approach. Organizations must apply the most stringent standards, consider legal and regulatory requirements, and ensure the system is financially viable.

When developing software, it is important to consider security at every stage of the development process. Some vendors will use a variation called the Secure Development Lifecycle (SDL), which incorporates security requirements at each step of the process.

Systems development life cycle

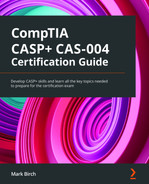

One of the most well-understood and widely implemented approaches to systems development is the Systems Development Life Cycle (SDLC). There are normally 5 stages, although some models have as many as 10. Whichever model you adopt, it should provide a clearly defined pathway from the initial ideas to a functional working product. Figure 2.1 shows the SDLC model:

Figure 2.1 – SDLC process

Let's break down each phase of this process.

Requirements analysis/initiation phase

At the beginning of the life cycle, the requirements for the new tool or system are identified, and we can then create the plan. This will include the following:

- Understanding the goals for the system, as well as understanding user expectations and requirements (this involves capturing the user story – what exactly does the customer expect the system to deliver?).

- Identifying project resources, such as available personnel and funding.

- Discovering whether alternative solutions are already available. Is there a more cost-effective solution? (Note that government departments may be required to look toward third parties such as cloud service providers.)

- Performing system and feasibility studies.

Here are some security considerations:

- Will the system host information with particular security constraints?

- Will the system be accessible externally from the internet?

- Ensuring personnel involved in the project have a common understanding of the security considerations. Regulatory compliance would be an important consideration at this stage (such as GDPR or PCI DSS).

- Identifying where security must be implemented in the system.

- Nominating an individual or team responsible for overseeing all security considerations.

The analysis/initiation phase is critical. Proper planning saves time, money, and resources, and it ensures that the rest of the SDLC will be performed correctly.

Development/acquisition phase

Once the development team understands the customer requirements, development can begin. This phase covers design and modeling and includes the following:

- Outlining all features required for the new system

- Considering alternative designs

- Creating System Design Documents (SDDs)

Here are some security considerations:

- Risk assessments must be performed, along with SAST, DAST, and penetration testing.

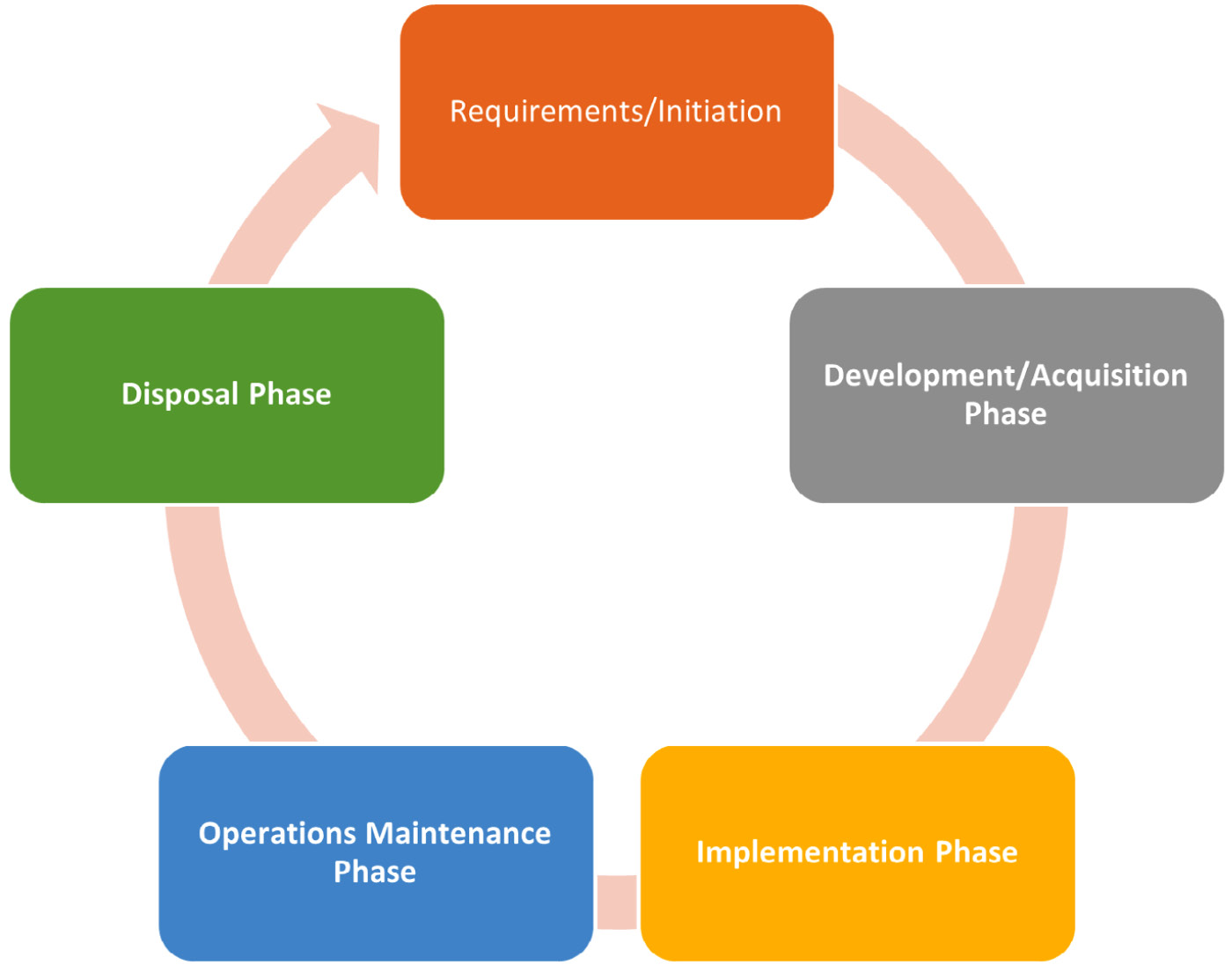

- Plan system security testing using a Security Requirements Traceability Matrix (SRTM).

In Figure 2.2 we can see an example of an SRTM document:

Figure 2.2 – SRTM

This is a simplified example – in reality, there would be much more testing to be done.
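To make the idea of traceability concrete, the following Python sketch models a simplified SRTM as a list of records and reports two things: requirements with no planned test, and requirements that have not yet passed. The requirement IDs, descriptions, and field names are hypothetical; a real SRTM is usually a controlled spreadsheet or document, and this only illustrates the traceability check.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Requirement:
    """One row of a simplified SRTM: a security requirement and how it will be verified."""
    req_id: str
    description: str
    source: str  # where the requirement comes from, e.g. a regulation or internal policy
    test_methods: List[str] = field(default_factory=list)
    status: str = "Not tested"


def untraced(matrix: List[Requirement]) -> List[str]:
    """IDs of requirements with no planned test method (gaps in traceability)."""
    return [r.req_id for r in matrix if not r.test_methods]


def outstanding(matrix: List[Requirement]) -> List[str]:
    """IDs of requirements that have not yet passed testing."""
    return [r.req_id for r in matrix if r.status != "Pass"]


# Hypothetical example rows.
srtm = [
    Requirement("SEC-01", "Passwords stored as salted hashes", "Internal policy",
                ["Code review", "SAST"], "Pass"),
    Requirement("SEC-02", "Cardholder data encrypted in transit", "PCI DSS",
                ["Vulnerability scan", "Penetration test"]),
    Requirement("SEC-03", "Failed logins are logged", "Internal policy"),
]

print(untraced(srtm))     # ['SEC-03']
print(outstanding(srtm))  # ['SEC-02', 'SEC-03']
```

A requirement that appears in `untraced` has no way of being verified, which is exactly the gap an SRTM is meant to expose before implementation starts.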

Implementation phase

During this phase, the system is created from the designs in the previous stages:

- Program code is written.

- Infrastructure is configured and provisioned.

- System testing takes place.

- End user/customer training is done.

- Bug tracking and fixing is carried out.

Here are some security considerations:

- Vulnerability scanning against infrastructure, web servers, and database servers is performed.

- Security testing is carried out as planned in the SRTM.

Operations/maintenance phase

When the system is live, it will need continuous monitoring and updating to meet operational needs. This may include the following:

- Refreshing hardware

- Performance benchmarking

- Patching/updating certain components to ensure they meet the required standards

- Improving systems when necessary

It is important to continually review the economic viability of legacy systems to determine whether they still meet the operational needs of the business. For example, an IBM mainframe computer may have cost over 1 million dollars in 1980, but the cost of keeping it running today may be exorbitant.

Here are some security considerations:

- Hardware and software patching

- Ensuring the system is incorporated into a change management process

- Continuous monitoring

Disposal phase

There must be a plan for the eventual decommissioning of the system:

- Plan for hardware, software, and data disposal.

- Data may need to be migrated to a new system – can we output to a common file format?

- Data will either be purged or archived.

Here are some security considerations:

The business must consider the possibility of any remaining data on storage media. It is also important to consider how we might access archived data generated by the system – is it technically feasible? (Could you recover archived family movies that you found in the attic, stored in Betamax format?)

Development approaches

It is important to focus on a development methodology that fits with the project's needs. There are approaches that focus more on customer engagement throughout the project life cycle. Some approaches allow the customer to have a clear vision of the finished system at the beginning of the process and allow the development to be completed within strict budgets, while other approaches lend themselves to prototyping. Whatever your approach, it must align with the customers' requirements.

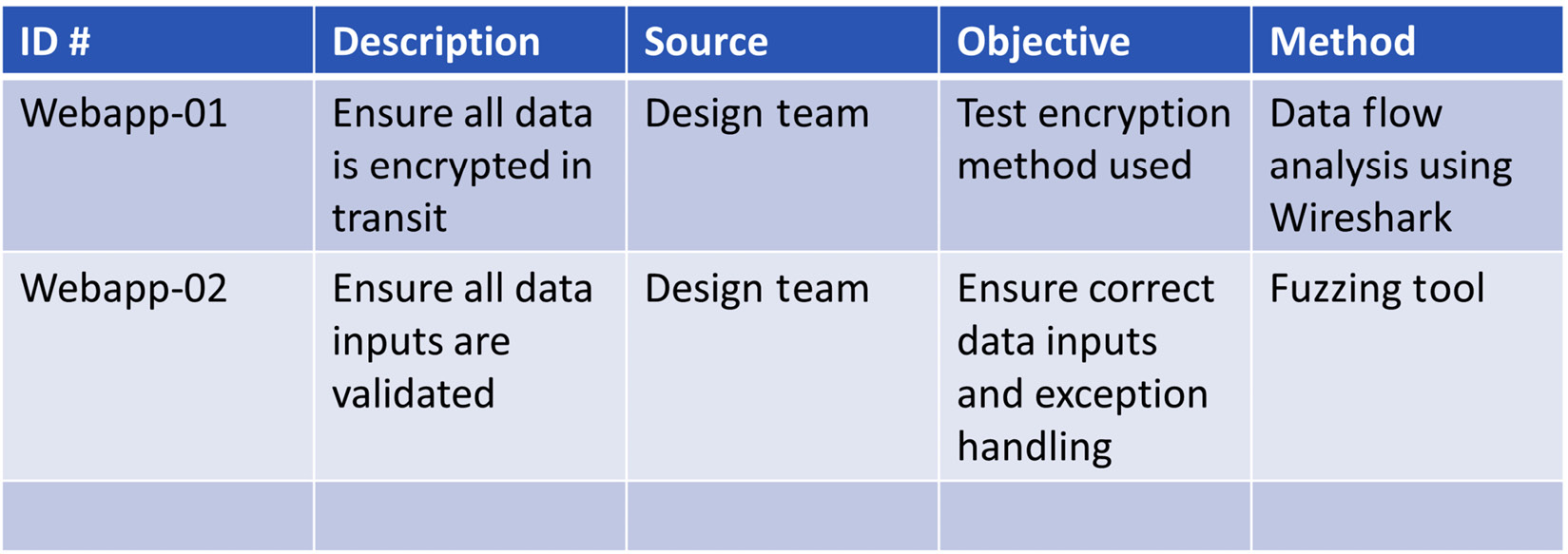

Waterfall

The waterfall model has been a mainstay of systems development for many years. It is a very rigid approach with little customer involvement after the requirements phase, and it depends upon comprehensive documentation in the early stages.

At the beginning of the project, the customer is involved and defines their requirements. The development team captures these requirements, and the customer is then not involved again until the release for customer acceptance testing.

The design will be done by the software engineers based upon the documentation captured from the customer.

The next stage is implementation (coding); there is no opportunity for customer feedback at this stage.

During verification, we will install, test, and debug, and then perform customer acceptance testing. If the customer is not satisfied at this point, we must go right back to the very start.

Figure 2.3 shows the waterfall methodology:

Figure 2.3 – Waterfall Methodology

Like water flowing down a waterfall, there is no going back.

Advantages of the waterfall method

The following points are advantages when using the waterfall method:

- Comprehensive documentation means onboarding new team members is easier.

- Detailed planning and documentation enable budgets and timelines to be met.

- This model scales well.

Disadvantages of the waterfall method

The following points are disadvantages when using the waterfall method:

- We need all requirements and documentation before or at the start of the project.

- No flexibility, change, or modification is possible until the end of the cycle.

- A lack of customer involvement/collaboration after completing the requirements phase.

- Testing is done only at the end of development.

Agile

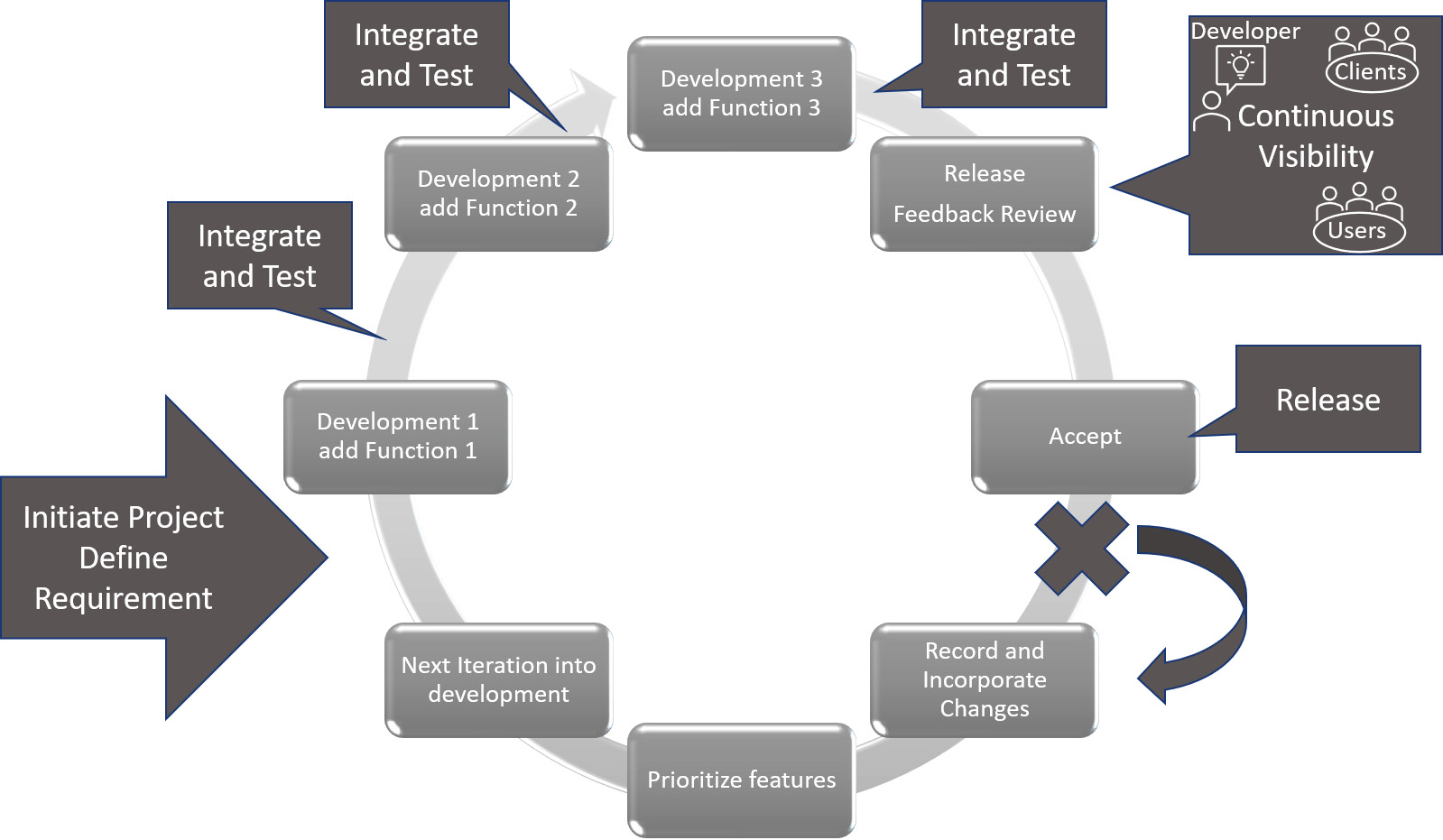

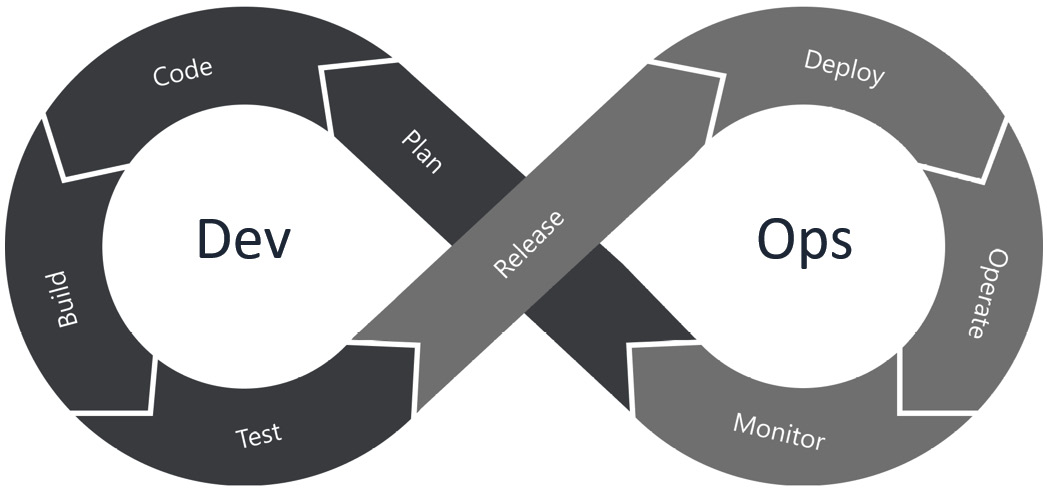

The Agile methodology is based on extensive customer engagement: the customer is involved throughout the project, not just in the requirements phase. It is estimated that around 80% of all current development programs use this methodology. When using the Agile method, the entire project is divided into small incremental builds. In Figure 2.4 we can see the Agile development cycle:

Figure 2.4 – Agile development cycle

The Agile methodology means the customer will have many meetings with the development team. The customer is involved from the start of the project and throughout its lifetime. They can review software changes and request modifications, which helps ensure that the result is what the customer wants.

As functionality is added to the build, the customer can review the progress. When the software is released, the customer is more likely to be satisfied, as they have been involved throughout the project.

If the customer is not satisfied, we record the required changes and incorporate them into a fresh development cycle.

Advantages of the Agile method

The following points are advantages when using the Agile method:

- The use of teams means more collaboration.

- Development is more adaptive.

- The testing of code is done within the development phases.

- Satisfied customers, as continuous engagement during development ensures the system meets customer expectations.

- Best for continuous integration.

Disadvantages of the Agile method

The following points are disadvantages when using the Agile method:

- Lack of full documentation

- Not easy to bring in new developers once the project has started

- Project cost overruns

- Not good for large-scale projects

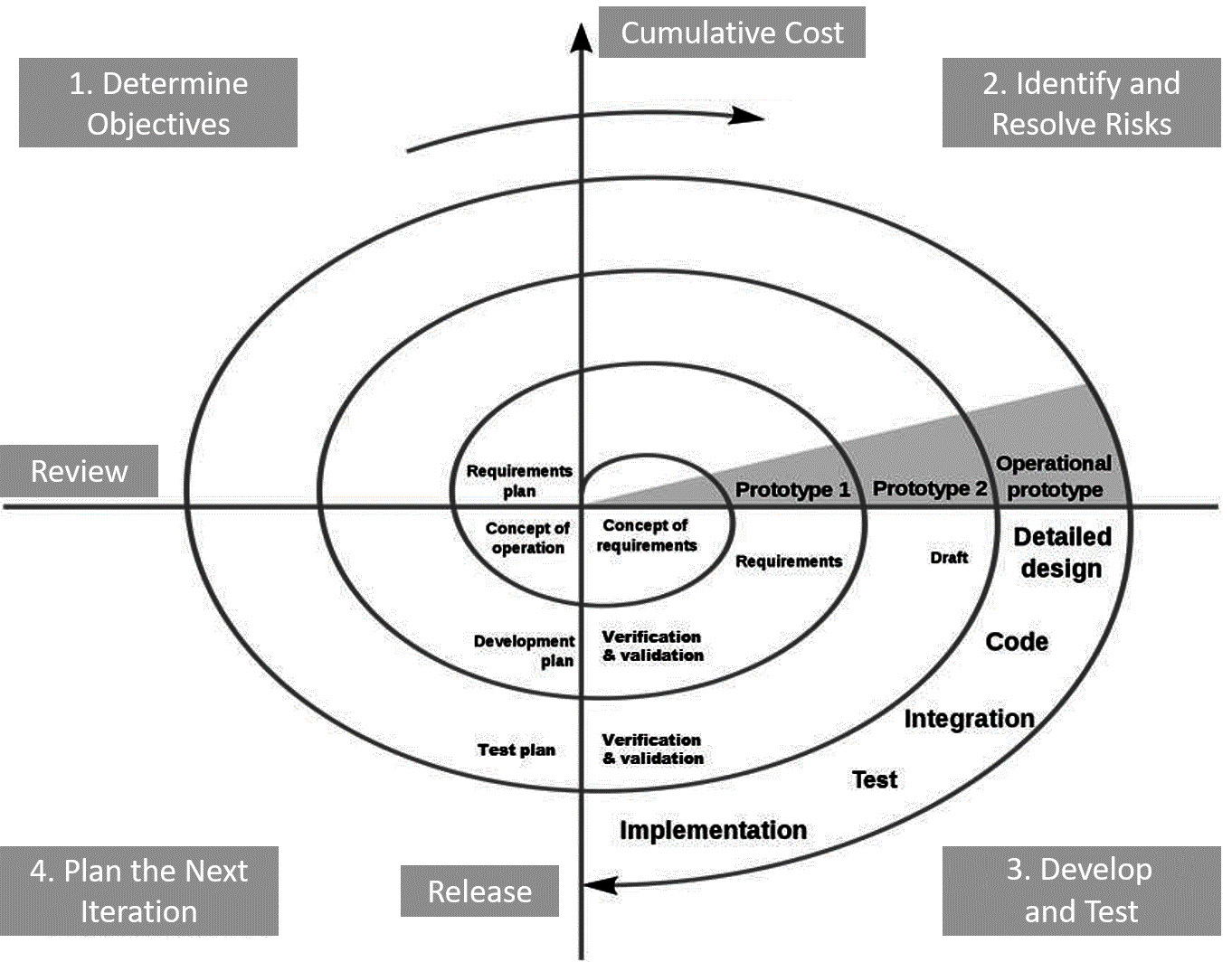

Spiral

The spiral model can be used when there is a prototype. When neither the Agile nor the waterfall method is appropriate, the spiral model combines elements of both approaches.

For this model to be useful, it needs to start with a prototype, which is refined over several iterations. At each iteration, we carry out a risk analysis and fine-tune the prototype. Once the prototype has been fully refined, that final iteration is used to build through to the final release.

This allows the customer to be involved at regular intervals as each prototype is refined. In Figure 2.5 we can see the spiral model:

Figure 2.5 – Spiral model

Advantages of the spiral model

The following points are advantages when using the spiral method:

- The use of teams means more collaboration.

- The testing of code is done within the development phases.

- Satisfied customers, as continuous engagement during development ensures the system meets customer expectations.

- Flexibility – the spiral model is more flexible in terms of changing requirements during the development process.

- Reduces risk, as risk identification is carried out during each iteration.

- Good for large-scale projects.

Disadvantages of the spiral model

The following points are disadvantages when using the spiral method:

- Time management – timelines can slip.

- Complexity – this model is very complex compared to Agile or waterfall.

- Cost – this model can be expensive as it has multiple phases and iterations.

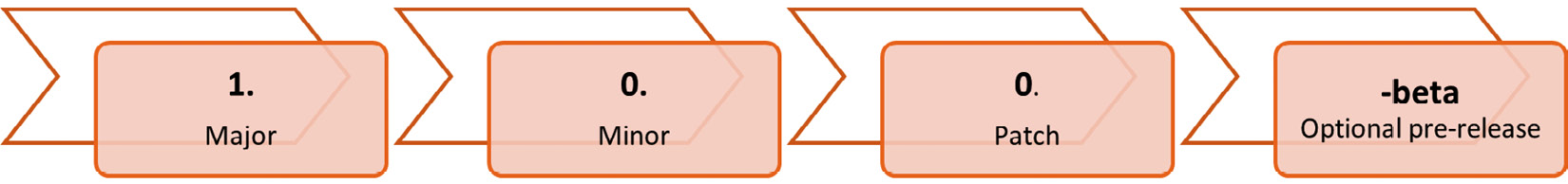

Versioning

Version control is of paramount importance. When using Continuous Integration/Continuous Delivery (CI/CD), it is important to document build revisions and incorporate them into the change management plan, so that backout plans can identify a known-good build to return to. For example, Microsoft has released major feature updates of the Windows 10 operating system roughly twice a year. The original release was in July 2015, with build number 10240 and version ID 1507. The May 2021 update carries version 21H1 and build 19043. You can check the current build by running winver from the command prompt. Figure 2.6 shows version control:

Figure 2.6 – Versioning
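A change management plan can use a recorded history of build revisions to identify the backout target for a release. The following Python sketch assumes releases are recorded oldest first as (version ID, build number) pairs; it uses three Windows 10 feature releases (2004, 20H2, and 21H1) purely as sample data, and the `backout_target` helper is hypothetical.

```python
from typing import List, Optional, Tuple

# Recorded releases, oldest first, as (version ID, build number).
# Sample data: three Windows 10 feature releases.
RELEASES: List[Tuple[str, int]] = [
    ("2004", 19041),
    ("20H2", 19042),
    ("21H1", 19043),
]


def backout_target(current: str,
                   releases: List[Tuple[str, int]] = RELEASES) -> Optional[Tuple[str, int]]:
    """Return the release immediately before `current`, or None if it is the oldest recorded.

    Raises ValueError if `current` is not in the recorded history.
    """
    versions = [version for version, _ in releases]
    index = versions.index(current)  # list.index raises ValueError if absent
    return releases[index - 1] if index > 0 else None


print(backout_target("21H1"))  # ('20H2', 19042)
print(backout_target("2004"))  # None
```

In practice, the revision history would come from the version control system or release records rather than a hard-coded list.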

It is important to adopt a methodology when approaching systems or software design. A repeatable process means security will not be overlooked, and we are able to adopt a systematic approach.

Choosing the right methodology is also important so the development team can work efficiently, and the customer will be satisfied during and at the end of the process.

Software assurance

It is important that the systems and services that are developed and used by millions of enterprises, businesses, and users are robust and trustworthy. This process of software assurance is achieved using standard design concepts/methodologies, standard coding techniques and tools, and accepted methods of validation. We will take a look at approaches to ensure reliable bug-free code is deployed.

Sandboxing/development environment

It is important to have clearly defined segmentation when developing new systems. Code will be initially written within an Integrated Development Environment (IDE); testing can be done in an isolated area separate from production systems, often using a development system or network.

Validating third-party libraries

To speed up the development process, it is common practice to use third-party libraries, so that developers do not have to write tools or routines that already exist. Examples of popular third-party libraries used by Android application developers include Retrofit, Picasso, Glide, and ZXing. It is critical that any third-party code that is used can be trusted. The use of third-party libraries can raise security concerns, as vulnerabilities in open source libraries are increasingly targeted by hackers. It is important to stay up to date with Common Vulnerabilities and Exposures (CVEs) relating to the third-party libraries you use.

It is important to consider the remediation that may be required if a vulnerability is discovered in a library that is part of your code base. Over 90% of software development includes the use of some third-party code. A vulnerability in the Apache Struts framework was reported and classified as CVE-2017-5638. Whilst the vulnerability was fixed in a new release of the framework, many customers who had not updated were impacted. Equifax had around 200,000 customer credit card numbers stolen as a result of the vulnerability. The outcome can be very costly in terms of regulatory fines (under PCI DSS and GDPR, for example), and remediation may also require applications to be recompiled with the updated libraries and redistributed where necessary.
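At its core, tracking CVEs against third-party libraries means comparing the versions you depend on with known affected version ranges. The following Python sketch assumes a small, hand-maintained table and purely numeric, dot-separated version strings; the Apache Struts ranges (2.3.5 to 2.3.31 and 2.5 to 2.5.10) are those published for CVE-2017-5638, and everything else, including the `check` helper, is illustrative. Dedicated software composition analysis tools, such as OWASP Dependency-Check, automate this against full vulnerability feeds.

```python
from typing import Dict, List, Tuple


def parse(version: str) -> Tuple[int, ...]:
    """Convert '2.3.31' to (2, 3, 31) so versions compare numerically, not as text.

    Assumes purely numeric components (no suffixes such as '-beta').
    """
    return tuple(int(part) for part in version.split("."))


# package -> list of (first affected version, last affected version, CVE ID)
KNOWN_VULNERABLE: Dict[str, List[Tuple[str, str, str]]] = {
    "struts2-core": [
        ("2.3.5", "2.3.31", "CVE-2017-5638"),
        ("2.5", "2.5.10", "CVE-2017-5638"),
    ],
}


def check(dependencies: Dict[str, str]) -> List[str]:
    """Report each dependency whose version falls inside a known-vulnerable range."""
    findings = []
    for name, version in dependencies.items():
        for first, last, cve in KNOWN_VULNERABLE.get(name, []):
            if parse(first) <= parse(version) <= parse(last):
                findings.append(f"{name} {version}: {cve}")
    return findings


print(check({"struts2-core": "2.3.31", "gson": "2.8.9"}))  # ['struts2-core 2.3.31: CVE-2017-5638']
print(check({"struts2-core": "2.5.10.1"}))                  # []
```

Version 2.5.10.1 is not flagged because it is newer than the last affected release; comparing as text rather than as integer tuples would get cases like 2.3.5 versus 2.3.31 wrong.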

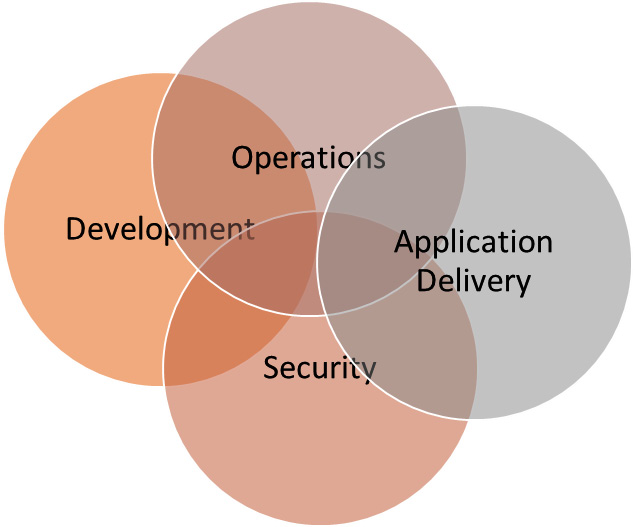

SecDevOps

The term DevOps combines software development (Dev) and IT operations (Ops). When implemented, it means continuous integration, automated testing, continuous delivery, and continuous deployment. It is more of a cultural methodology, meaning that development and operations work together as a team.

Over the past few years, it has become commonplace to see Agile development methodology and related DevOps practices being implemented. Adopting these ideas means that the developers improve software incrementally and continuously, rather than offering major updates on annual or bi-annual cycles.

DevOps itself does not deliver cybersecurity. What is needed is SecDevOps. The term stresses that an organization treats security with as much importance as development and operations. Figure 2.7 depicts the close alignment of Development, Operations, Application Delivery, and Security:

Figure 2.7 – SecDevOps

SecDevOps requires the people involved to take a more comprehensive view of a project. All teams need to focus on security as well as on development and maintaining operations. It involves many different elements to automate the deployment of new code and new systems. For SecDevOps to be successful, we must adopt practices that ensure code is secure from the inside out, which means integrating testing tools into the build process and continuously checking code right through to deployment. Automated regression testing is a key process, as we are constantly developing and improving our code modules; it confirms that changed code has not broken existing functionality. As this is a cultural approach to deploying software, it is important to champion security efforts within the team. Building a team with a strong focus on security is a very important element.

Defining the DevOps pipeline

A DevOps pipeline ensures that the development and operations teams adopt a set of best practices. A DevOps pipeline will ensure that the building, testing, and deployment of software into the operations environment is streamlined. There are several components of a DevOps pipeline as you can see in the following sub-sections.

Continuous integration

CI is the process of combining the code from individual development teams into a shared build/repository. Depending on the size and complexity of the project, this may be done multiple times a day.

Continuous delivery

CD is often used with CI. It will enable the development team to release incremental updates into the operational environment.

Continuous testing

Continuous testing is the process of testing during all the stages of development, the goal being to identify errors before they can be introduced into a production environment.

Testing will identify functional and non-functional errors in the code.

When developing complex systems with large development teams, it is important to run integration testing daily, and sometimes several times a day. It is imperative to test all the units of code to ensure they continue to work together correctly.

When a small code change must be evaluated within the existing environment, we should use regression testing to confirm that existing functionality still works.
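As a minimal sketch of regression testing, the following Python `unittest` suite pins the accepted behavior of a hypothetical `shipping_cost` function. If a later change alters any of these results, the suite fails before the change reaches production. The function and its pricing rules are invented for illustration.

```python
import unittest


def shipping_cost(weight_kg: float) -> float:
    """Hypothetical function under test: 5.00 up to 2 kg, then 1.50 per additional kg."""
    if weight_kg <= 2:
        return 5.00
    return 5.00 + (weight_kg - 2) * 1.50


class ShippingCostRegressionTests(unittest.TestCase):
    """Pins existing, accepted behavior so later changes cannot silently alter it."""

    def test_flat_rate_below_limit(self):
        self.assertEqual(shipping_cost(1), 5.00)

    def test_boundary_is_still_flat_rate(self):
        self.assertEqual(shipping_cost(2), 5.00)

    def test_per_kg_charge_above_limit(self):
        self.assertAlmostEqual(shipping_cost(4), 8.00)
```

Saved as a module, the suite can be run with `python -m unittest`; in a CI pipeline it would run automatically on every commit.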

Continuous operations

The concept of continuous operations ensures the availability of systems and services in an enterprise. The result is that users will be unaware of new code releases and patches, but the systems will be maintained. The goal is to minimize any disruption during the introduction of new code.

Continuous operations will require a high degree of automation across a complex heterogeneous mix of servers, operating systems, applications, virtualization, containers, and so on. This will be best served by an orchestration architecture. In Figure 2.8 we can see the DevOps cycle.

Figure 2.8 – DevOps pipeline

DevOps pipelining is a continuous process.
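The fail-fast behavior of a pipeline can be sketched in a few lines of Python. This is a hypothetical model, not a real CI/CD tool: each stage is a function that returns True on success, stages run in order, and a failure (here, a security scan) stops everything after it, so nothing is delivered from a failed build.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure (fail fast)."""
    log = []
    for name, step in stages:
        passed = step()
        log.append(f"{name}: {'passed' if passed else 'FAILED'}")
        if not passed:
            break  # later stages, such as delivery, never run for a failed build
    return log


# Hypothetical stages; real ones would invoke a build tool, test runner, or scanner.
stages: List[Stage] = [
    ("integrate", lambda: True),
    ("unit and regression tests", lambda: True),
    ("security scan", lambda: False),  # simulate a scan that finds a vulnerability
    ("deliver to staging", lambda: True),
]

for entry in run_pipeline(stages):
    print(entry)
```

Placing the security scan before delivery is the SecDevOps point: security is a gate in the pipeline, not a separate activity afterward.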

Code signing

When code has been tested and validated, it should be digitally signed to ensure we have trusted builds of code modules.

It is estimated that over 80% of software breaches are due to vulnerabilities present at the application layer. It is important to eliminate these bugs in the code before the software is released. We will now take a look at three different approaches.

Interactive application security testing

Interactive Application Security Testing (IAST) is a modern approach to the early detection of bugs and security issues. Application code is tested in real time, while the application runs, using sensors and deployed agents to detect potential vulnerabilities. The testing can be automated, which is important when we are looking to incorporate testing into CI/CD environments.

The benefits of IAST are as follows:

- Accurate

- Fast testing

- Easy to deploy

- On-demand feedback

With demand for the rapid development of applications and new functionality, it is important to deploy the correct tools to reduce risk.

Dynamic application security testing

Dynamic Application Security Testing (DAST) tools are used against running, compiled applications, typically toward the end of the development cycle. They have no integration with the source code and test the application from the outside in. A fuzzer (designed to test how input is handled) is a good example of a DAST tool and would be part of a black-box test, where no information is given about the actual source code. These types of tests require experienced security auditors. DAST tools can be used without credentials, meaning they can also be used by an attacker.
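The following Python sketch shows the principle behind a simple random fuzzer. It treats a hypothetical `parse_quantity` function as a black box, feeds it random printable strings, and records any exception other than the `ValueError` the function is expected to raise for bad input. A real DAST fuzzer would send its inputs to a running application, for example over HTTP, rather than calling a function directly.

```python
import random
import string
from typing import Callable, List, Tuple


def parse_quantity(text: str) -> int:
    """Hypothetical target. Expects input such as '3 items'; bad input should raise ValueError."""
    first_word = text.split()[0]  # bug: IndexError on empty or whitespace-only input
    return int(first_word)        # non-numeric words raise ValueError, as intended


def fuzz(target: Callable[[str], object], runs: int = 1000, seed: int = 1) -> List[Tuple[str, str]]:
    """Feed random printable strings to `target`; record inputs causing unexpected exceptions."""
    rng = random.Random(seed)  # seeded so that findings are reproducible
    findings = []
    for _ in range(runs):
        data = "".join(rng.choice(string.printable) for _ in range(rng.randint(0, 8)))
        try:
            target(data)
        except ValueError:
            pass  # documented rejection of bad input, not a finding
        except Exception as exc:  # anything else is a potential bug
            findings.append((repr(data), type(exc).__name__))
    return findings


crashes = fuzz(parse_quantity)
print(len(crashes), "unexpected failures; first:", crashes[:1])
```

Here the fuzzer finds that empty or whitespace-only input raises an `IndexError`, a case the developer did not handle.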

Static application security testing

Static Application Security Testing (SAST) tools are used to assess source code, often from within an IDE. Manually reviewing thousands of lines of code is a huge undertaking, so automated tools such as IBM Rational AppScan are used instead.

SAST tools are typically run inside the IDE or as part of the build as code is compiled. SAST tools require direct access to the source code.

SAST tools are known to throw false positives – as they assess code line by line, they are not aware of additional security measures provided by other code modules.
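To illustrate how a SAST tool inspects source code without running it, the following sketch uses Python's standard `ast` module to flag calls to `eval()` and `exec()`. It is a toy rule, not a substitute for a commercial tool, and it also shows the false-positive problem described above: the call is flagged even if the input is validated elsewhere.

```python
import ast
from typing import List

RISKY_CALLS = {"eval", "exec"}


def scan(source: str, filename: str = "<input>") -> List[str]:
    """Parse source code (without running it) and report calls to risky built-ins."""
    findings = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Name)
                and node.func.id in RISKY_CALLS):
            findings.append(f"{filename}:{node.lineno}: call to {node.func.id}()")
    return findings


sample = '''
def total(expr):
    # The caller may validate expr first, but the rule cannot see that.
    return eval(expr)
'''

print(scan(sample, "calc.py"))  # ['calc.py:4: call to eval()']
```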

For additional reading on this subject, there is a very useful blog from the Carnegie Mellon University Software Engineering Institute at https://tinyurl.com/seiblog.

Baselines and templates

It is important for an enterprise to adopt standards and methodologies in order to follow a standard repeatable process when developing new systems or software. It is important to consider interoperability when designing or considering systems supplied by third parties.

The National Cyber Security Centre (NCSC), a United Kingdom government agency, offers guidance to UK-based enterprises. It divides its cyber security design principles into five categories, loosely aligned with the stages at which an attack can be mitigated. Here are the five NCSC categories (also available at https://www.ncsc.gov.uk/collection/cyber-security-design-principles):

- Establish the context

Determine all the elements that compose your system, so your defensive measures will have no blind spots.

- Make compromise difficult

An attacker can only target the parts of a system they can reach. Make your system as difficult to penetrate as possible.

- Make disruption difficult

Design a system that is resilient to denial-of-service attacks and usage spikes.

- Make compromise detection easier

Design your system so you can spot suspicious activity as it happens and take necessary action.

- Reduce the impact of compromise

If an attacker succeeds in gaining a foothold, they will then move to exploit your system. Make this as difficult as possible.

The Open Web Application Security Project (OWASP) is a non-profit foundation created to improve the security of software. It runs many community-led open source software projects and has tens of thousands of members. The OWASP Foundation is a high-value organization for developers and technology implementers when securing web applications. OWASP offers tools and resources, community and networking groups, as well as education and training events.

When considering security in your application development, there is a very useful source of information in the OWASP Top 10, a list of common threats targeting web applications.

OWASP Top 10 threats (web applications, 2017 edition):

- Injection

- Broken authentication

- Sensitive data exposure

- XML External Entities (XXE)

- Broken access control

- Security misconfiguration

- Cross-Site Scripting (XSS)

- Insecure deserialization

- Using components with known vulnerabilities

- Insufficient logging and monitoring

A PDF document with more detail can be downloaded from the following link: https://tinyurl.com/owasptoptenpdf.

Important Note

Specific attack types, including example logs and code, along with mitigation techniques, are covered in Chapter 7, Risk Mitigation Controls.

Secure coding standards

Whilst it is important to work toward industry best practices and apply a repeatable security methodology, it is also critically important to adopt practices that allow for validation and interoperability with other vendor solutions. This may mean developing applications to work on a particular vendor's operating system, or to use an available Software-as-a-Service (SaaS) application hosted on Amazon Web Services (AWS).

Application vetting processes

Whether your application is for internal use or will be sold commercially, it should go through a Quality Assurance (QA) process. Microsoft has strict requirements for Universal Windows Platform applications that will be sold through the Microsoft Store. The testing process is very stringent, as follows:

- Security tests: Your application will be tested to ensure it is malware free.

- Technical compliance tests: These are functional tests, to ensure it follows technical compliance requirements. There is a Microsoft-supplied App Certification Kit (which is free to use), ensuring developers can test the code themselves first.

- Content compliance: All the features are tested to make sure the application's content meets the store's content policies.

- Release/publishing: Once an application package has been given the QA green light, it will be digitally signed to protect the application against tampering after it has been released.

We will now look at securing our hosting platforms, by adopting a strong security posture on our application web servers.

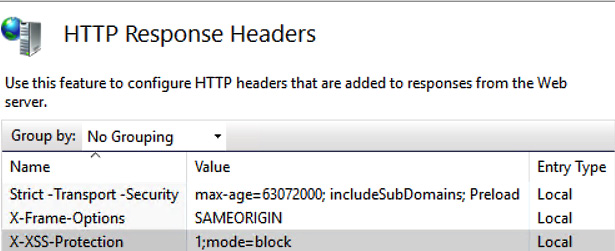

Hypertext Transfer Protocol (HTTP) headers

When hosting web applications, it is important to adopt a secure baseline on all your web platforms. Proper HTTP headers will help to protect hosted applications.

Whilst secure coding, including input validation, is very important, there are a number of common security configuration settings that should be adopted on the platform hosting the application. Common attacks include Cross-Site Scripting (XSS), Cross-Site Request Forgery (XSRF), clickjacking, and Man in the Middle (MITM). The following security header settings will not prevent all attacks, but they add an extra layer of security to your website by ensuring web browsers connect in a secure manner.

XSS-Protection

This HTTP security header instructs the connecting client to use the web browser's built-in XSS filter. Note that current versions of Chrome and Edge have removed this filter, so the header only benefits older browsers. It should be configured as follows:

X-XSS-Protection "1; mode=block"

X-Frame-Options

The X-Frame-Options (XFO) security header helps to protect your customers against clickjacking exploits. The following is a recommended configuration for the header:

X-Frame-Options "SAMEORIGIN"

Strict-Transport-Security

The Strict-Transport-Security (HSTS) header instructs client browsers to always connect via HTTPS. Without this setting, there may be the opportunity for the transmission of unencrypted traffic, allowing for sniffing or MITM exploits. The following is the suggested configuration for the header:

Strict-Transport-Security "max-age=31536000"

In the preceding example, the time is in seconds: 31,536,000 seconds is 1 year. (Having received the header, the client browser will connect to the site only over HTTPS for the following year.)

When the preceding settings are added to your site's .htaccess file or your server configuration file, they provide an additional layer of security for web applications.
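Where headers cannot be set in the server configuration, the application itself can add them. The following sketch is a minimal Python WSGI middleware, assuming a WSGI-based application; the `security_headers` wrapper and the demo `hello_app` are hypothetical names. It uses the header values shown above, with a one-year HSTS `max-age` of 31,536,000 seconds, and leaves alone any header the application has already set.

```python
from typing import Callable, List, Tuple

# Baseline values from the configuration above; max-age is one year in seconds.
SECURITY_HEADERS: List[Tuple[str, str]] = [
    ("X-XSS-Protection", "1; mode=block"),
    ("X-Frame-Options", "SAMEORIGIN"),
    ("Strict-Transport-Security", "max-age=31536000"),
]


def security_headers(app: Callable) -> Callable:
    """WSGI middleware: add any baseline security header the wrapped app did not set itself."""
    def wrapped(environ, start_response):
        def start_with_headers(status, headers, exc_info=None):
            already_set = {name.lower() for name, _ in headers}
            extra = [(n, v) for n, v in SECURITY_HEADERS if n.lower() not in already_set]
            return start_response(status, list(headers) + extra, exc_info)
        return app(environ, start_with_headers)
    return wrapped


def hello_app(environ, start_response):
    """Hypothetical demo application."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Hello"]


app = security_headers(hello_app)
```

Browsers honor `Strict-Transport-Security` only when it is received over HTTPS. To try the wrapper locally, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` serves the wrapped application.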

If you are configuring Microsoft Internet Information Services (IIS), you can also add these security options through the IIS administration console. In Figure 2.9 we can see HTTP headers being secured on a Microsoft IIS web application server.

Figure 2.9 – Securing HTTP response headers

It is important to harden web application servers before deployment.

The CERT Secure Coding Initiative is run by the Software Engineering Institute (SEI) of Carnegie Mellon University and aims to promote secure coding standards.

In the same way that OWASP publishes a top 10 list of vulnerabilities, SEI CERT promotes its top 10 secure coding practices:

- Validate input.

- Heed compiler warnings.

- Architect and design for security policies.

- Keep it simple.

- Default deny.

- Adhere to the principle of least privilege.

- Sanitize data sent to other systems.

- Practice defense in depth.

- Use effective quality assurance techniques.

- Adopt a secure coding standard.

More information about their work and contribution toward secure coding standards can be found at the following link:

https://wiki.sei.cmu.edu/confluence/display/seccode/SEI+CERT+Coding+Standards
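Two of these practices, validate input and default deny, can be combined in a few lines. In the following Python sketch, a request is accepted only if every field matches an explicit allowlist; anything not explicitly permitted is rejected. The field names, username pattern, and permitted formats are hypothetical.

```python
import re

# Explicit allowlists: anything not listed here is refused (default deny).
ALLOWED_FORMATS = {"pdf", "csv"}
USERNAME_PATTERN = re.compile(r"[a-z0-9_]{3,20}")  # 3 to 20 lowercase letters, digits, underscores


def validate_request(username: str, report_format: str) -> bool:
    """Accept the request only if every field matches its allowlist."""
    if not USERNAME_PATTERN.fullmatch(username):
        return False
    if report_format not in ALLOWED_FORMATS:
        return False
    return True  # reached only when all checks have explicitly passed


print(validate_request("alice_01", "pdf"))            # True
print(validate_request("alice'; DROP TABLE", "pdf"))  # False
print(validate_request("bob", "exe"))                 # False
```

Checking against what is allowed, rather than trying to list every bad input, means that unexpected input fails safely.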

The Microsoft Security Development Lifecycle (SDL) is a methodology that introduces security and privacy considerations throughout all phases of the development process. It is increasingly important to consider new scenarios, such as the cloud, the Internet of Things (IoT), and Artificial Intelligence (AI). Microsoft provides many free tools for developers, including plugins for Microsoft Visual Studio:

https://www.microsoft.com/en-us/securityengineering/sdl/resources

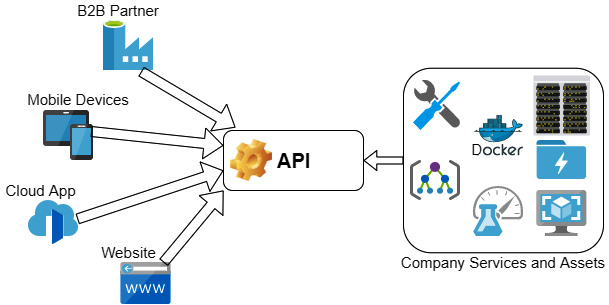

Application Programming Interface (API) management

APIs allow an organization to extend enterprise assets to B2B partners, customers, and other parts of the enterprise. These assets can then be made available in the form of services, data, and applications.

Managing and securing these assets is of critical importance. Data exposure or the theft of intellectual property could be one of many threats. Automation will often be used to provision access to these resources. There are many vendor solutions offered to manage these critical assets. Figure 2.10 shows customers and partners connecting to services through a management API.

Figure 2.10 – Management API

Examples of popular API management platforms include the following:

- MuleSoft Anypoint Platform

- API Umbrella

- Google Apigee API Management Platform

- CA Technologies

- WSO2

- Apigee Edge

- Software AG

- Tyk Technologies
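Rejecting unknown callers and rate limiting known ones are typical policies that API management platforms enforce. The following Python sketch shows one common rate-limiting technique, a token bucket per API key, as an illustration only; it is not the API of any platform listed above, and the `Gateway` class, key names, and limits are hypothetical. The clock is injectable so the behavior can be tested deterministically.

```python
import time
from typing import Callable, Dict, Iterable


class TokenBucket:
    """Allows `rate` requests per second on average, with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gateway:
    """Hypothetical gateway check: reject unknown API keys, then rate-limit each known key."""

    def __init__(self, keys: Iterable[str], rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.buckets: Dict[str, TokenBucket] = {k: TokenBucket(rate, capacity, clock) for k in keys}

    def handle(self, api_key: str) -> int:
        bucket = self.buckets.get(api_key)
        if bucket is None:
            return 401  # unknown caller
        return 200 if bucket.allow() else 429  # 429 Too Many Requests


gateway = Gateway({"partner-a"}, rate=5.0, capacity=10)
print(gateway.handle("partner-a"))    # 200
print(gateway.handle("unknown-key"))  # 401
```

Giving each partner its own bucket means one misbehaving client cannot exhaust the capacity available to others.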

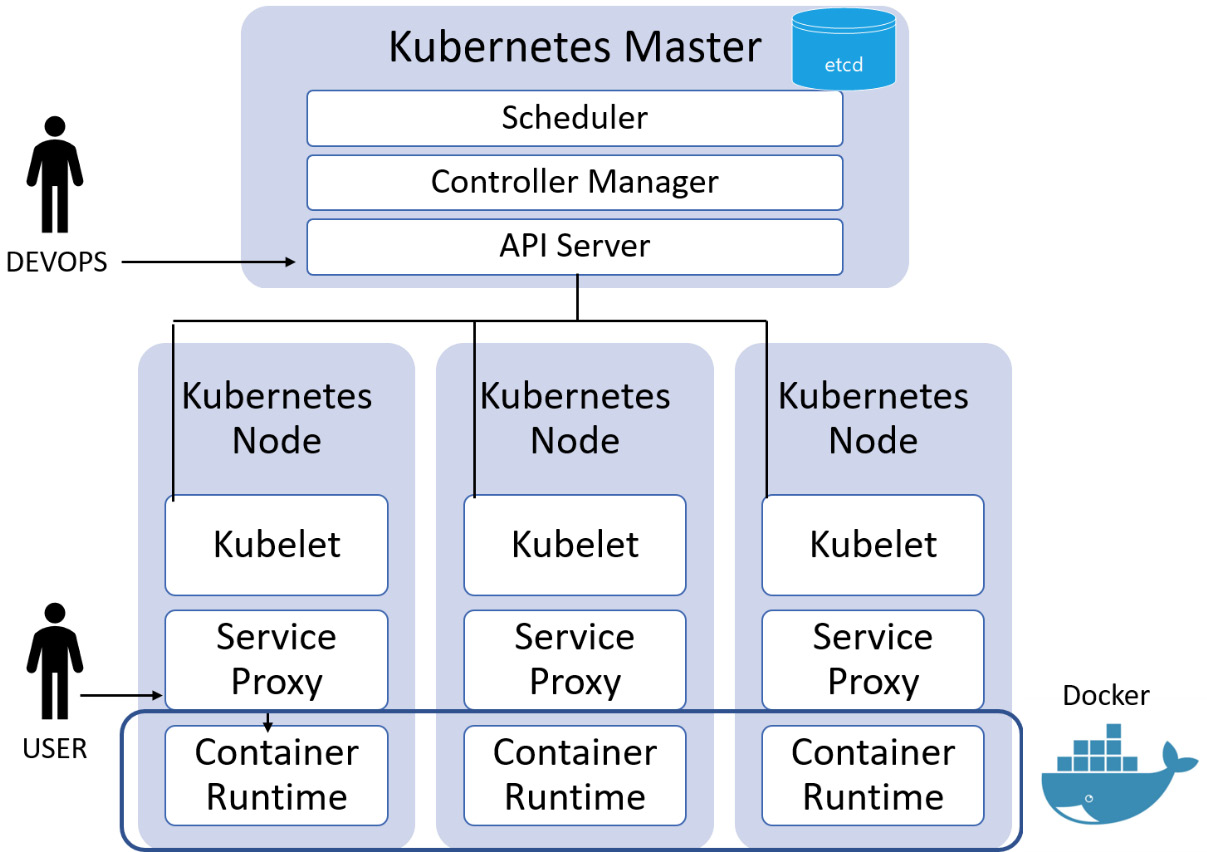

Container APIs

Many applications will be virtualized and deployed in containers (for Docker containers, see Chapter 3, Enterprise Data Security, Including Secure Cloud and Virtualization Solutions). Containers are an efficient way to scale up the delivery of applications, but when they are deployed across many hardware compute platforms, management can become overly complex. Workloads need to be provisioned and de-provisioned on a large scale. An industry approach to address this need is Kubernetes, an open source container orchestration platform that is driven through its API.

Kubernetes was developed to orchestrate containers across many (physical or virtual) servers. Containers are grouped into Pods, and Pods can be scaled based upon demand.

An example might be a customer who has purchased an Enterprise Resource Planning (ERP) system comprising multiple application modules of code. The SaaS provider has many customers who require these services, and each customer must be isolated from the others. Whilst this could be done manually, automation can be used to deploy, monitor, and scale these workloads, and to make the system self-healing: when there is an outage, it detects the problem and can restart or replace failed containers. Figure 2.11 shows an overview of container API management:

Figure 2.11 – Kubernetes orchestration
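Kubernetes workloads are normally described declaratively. The following Python sketch builds a Deployment manifest (Kubernetes accepts JSON as well as YAML) for one tenant of the ERP example above, placing each tenant in its own namespace. The image name, labels, and namespace scheme are assumptions; namespaces give logical separation, and stronger tenant isolation would need additional controls such as network policies.

```python
import json


def tenant_deployment(tenant: str, image: str, replicas: int = 2) -> dict:
    """Build a Deployment manifest that runs `replicas` Pods for one tenant in its own namespace."""
    labels = {"app": "erp", "tenant": tenant}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"erp-{tenant}", "namespace": f"tenant-{tenant}"},
        "spec": {
            "replicas": replicas,  # Kubernetes replaces failed Pods to keep this count
            "selector": {"matchLabels": labels},  # must match the Pod template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": "erp", "image": image}]},
            },
        },
    }


# Hypothetical tenant and image name.
manifest = tenant_deployment("acme", "registry.example.com/erp:1.4.2", replicas=3)
print(json.dumps(manifest, indent=2))
```

Once the tenant's namespace exists, the saved JSON can be applied with `kubectl apply -f`; scaling the tenant is then a matter of changing `replicas` and reapplying.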

When considering developing new systems and services it is important to recognize standard approaches and adopt a baseline for ongoing development. You may be working with B2B partners, selling services to external customers, or hosting web-based applications. You need to make sure the enterprise is using standards and approaches that ensure compatibility and security.

Considerations when integrating enterprise applications

Enterprise application integration is critically important. Major business tools and processes rely on the integration and communication services provided within an enterprise.

There are many examples of business dependencies within an enterprise. Our sales and marketing efforts would be difficult without the CRM business tools now considered commonplace. Human resources teams would plan employee recruitment using guesswork if not for ERP systems. Project planning could not ensure timely delivery of supply-chain raw materials without ERP systems. There are many more examples of these dependencies. Consider the following business enterprise applications.

Customer relationship management (CRM)

CRM is a category of integrated, data-focused software solutions that improve how an enterprise engages with its customers. CRM systems help to manage and maintain customer relationships, track sales leads and marketing activities, and automate required actions.

Without the support of an integrated CRM solution, an enterprise risks losing opportunities to competitors.

Just imagine if sales staff lost customer contact information, only to learn that the customer then awarded a multimillion-dollar contract to a competitor. Or perhaps several sales teams are chasing the same prospect, creating unfriendly in-house competition. It is important for these teams to have access to a centralized and automated CRM system. With a properly deployed CRM solution, customers can be allocated to the correct teams (data can be properly segmented) and staff will not lose track of customer interactions and miss business opportunities. In Figure 2.12 we can see the components of a CRM system:

Figure 2.12 – CRM components

Without this valuable business tool, we will lack efficiency compared to our competitors.
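The single-owner rule that stops two sales teams chasing the same prospect, and the segmentation of customer data by team, can be sketched as a small registry. This is a hypothetical Python model, not the data model of any real CRM product; the `LeadRegistry` class and its methods are illustrative names only.

```python
class LeadRegistry:
    """Hypothetical CRM-style registry: each prospect has exactly one owning team."""

    def __init__(self) -> None:
        self._owners: dict[str, str] = {}          # prospect ID -> owning team
        self._history: dict[str, list[str]] = {}   # prospect ID -> logged interactions

    def claim(self, prospect_id: str, team: str) -> bool:
        """Assign the prospect to a team; refuse if another team already owns it."""
        owner = self._owners.setdefault(prospect_id, team)
        return owner == team

    def log_interaction(self, prospect_id: str, team: str, note: str) -> None:
        # Segmentation: only the owning team may record activity for this prospect.
        if self._owners.get(prospect_id) != team:
            raise PermissionError(f"{team} does not own {prospect_id}")
        self._history.setdefault(prospect_id, []).append(note)

    def history(self, prospect_id: str) -> list[str]:
        # Return a copy so callers cannot alter the stored record.
        return list(self._history.get(prospect_id, []))
```

Because every interaction is recorded centrally against the prospect, the history survives even if an individual salesperson's notes are lost.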

Enterprise resource planning (ERP)

ERP allows an organization to manage a complex enterprise, typically combining many business units. A motor manufacturer such as Ford Motor Company would use this type of system.

ERP allows all the business units to be integrated and all the disparate processes to be managed. The software integrates a company's accounts, procurement, production operations, reporting, manufacturing, sales, and human resources activities.

If Ford gained a government contract to supply 10,000 trucks, it would use an ERP system. It would need to work with human resources to plan labor requirements; sales staff would have worked to win the contract using a CRM; the company would use production-planning software to ensure the smooth running of the assembly lines; accounting software would be used to make sure costs are controlled and invoices are paid; and distribution software would be used to ensure the customer receives the trucks.

ERP provides integration of core business processes using common databases maintained by a database management system. Some of the well-known vendors in this market include IBM, SAP, Oracle, and Microsoft.

Because this system is so important to the business, security must be strictly enforced: the applications that make up the system share data across all the enterprise departments that supply it. ERP allows information to flow between all business functions and manages connections to outside stakeholders. The global ERP software market was estimated to be worth around $40 billion in 2020. Figure 2.13 shows components of an ERP system:

Figure 2.13 – ERP components

Without having access to this enterprise asset, overall control of end-to-end production processes will be difficult.

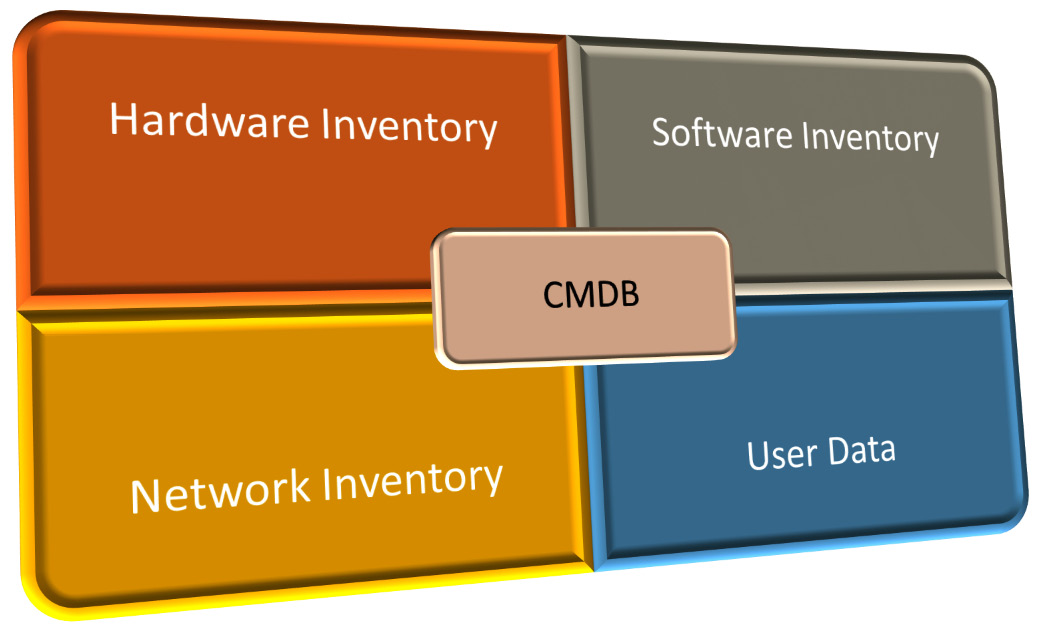
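Because ERP modules share one database, an order entered by sales is immediately visible to production and accounts, which is also why access must be controlled per department. The Python sketch below uses the standard-library `sqlite3` module as a stand-in for the enterprise DBMS; the table layout, the `ROLE_PERMISSIONS` mapping, and the module functions are hypothetical, chosen to mirror the truck-contract example above.

```python
import sqlite3

# Hypothetical least-privilege map: which departments may read or write each table.
ROLE_PERMISSIONS = {
    "sales": {"orders": {"read", "write"}},
    "production": {"orders": {"read"}, "work_orders": {"read", "write"}},
    "accounts": {"orders": {"read"}, "invoices": {"read", "write"}},
}


def check(role: str, table: str, action: str) -> None:
    if action not in ROLE_PERMISSIONS.get(role, {}).get(table, set()):
        raise PermissionError(f"{role} may not {action} {table}")


def create_schema(db: sqlite3.Connection) -> None:
    db.executescript("""
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, trucks INTEGER);
        CREATE TABLE work_orders (order_id INTEGER REFERENCES orders(id), line TEXT);
        CREATE TABLE invoices (order_id INTEGER REFERENCES orders(id), amount REAL);
    """)


def record_order(db: sqlite3.Connection, role: str, customer: str, trucks: int) -> int:
    check(role, "orders", "write")
    # Parameterized queries keep user input out of the SQL text.
    cur = db.execute("INSERT INTO orders (customer, trucks) VALUES (?, ?)", (customer, trucks))
    return cur.lastrowid


def schedule_production(db: sqlite3.Connection, role: str, order_id: int, line: str) -> int:
    check(role, "orders", "read")
    check(role, "work_orders", "write")
    # Production reads the same order row that sales entered: no re-keying of data.
    (trucks,) = db.execute("SELECT trucks FROM orders WHERE id = ?", (order_id,)).fetchone()
    db.execute("INSERT INTO work_orders VALUES (?, ?)", (order_id, line))
    return trucks


def raise_invoice(db: sqlite3.Connection, role: str, order_id: int, unit_price: float) -> float:
    check(role, "orders", "read")
    check(role, "invoices", "write")
    (trucks,) = db.execute("SELECT trucks FROM orders WHERE id = ?", (order_id,)).fetchone()
    amount = trucks * unit_price
    db.execute("INSERT INTO invoices VALUES (?, ?)", (order_id, amount))
    return amount
```

In a real ERP product these permissions are enforced by the platform's own role model and the DBMS, but the principle is the same: shared data, with each department granted only the access it needs.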

Configuration Management Database (CMDB)

A CMDB is a centralized repository that stores information on all the significant entities in your IT environment. These entities, termed Configuration Items (CIs), include hardware, installed software applications, documents, business services, and the people that are part of your IT system. A CMDB is used to support a large IT infrastructure and can be incorporated into endpoint management solutions (such as Microsoft Configuration Manager or Intune), where the collection of values and attributes can be automated. This is a very important database for successful service desk delivery in an enterprise. Figure 2.14 shows the components within a CMDB:

Figure 2.14 – CMDB components

If an enterprise does not have visibility of its information systems, including both hardware and software, those systems will be difficult to manage.

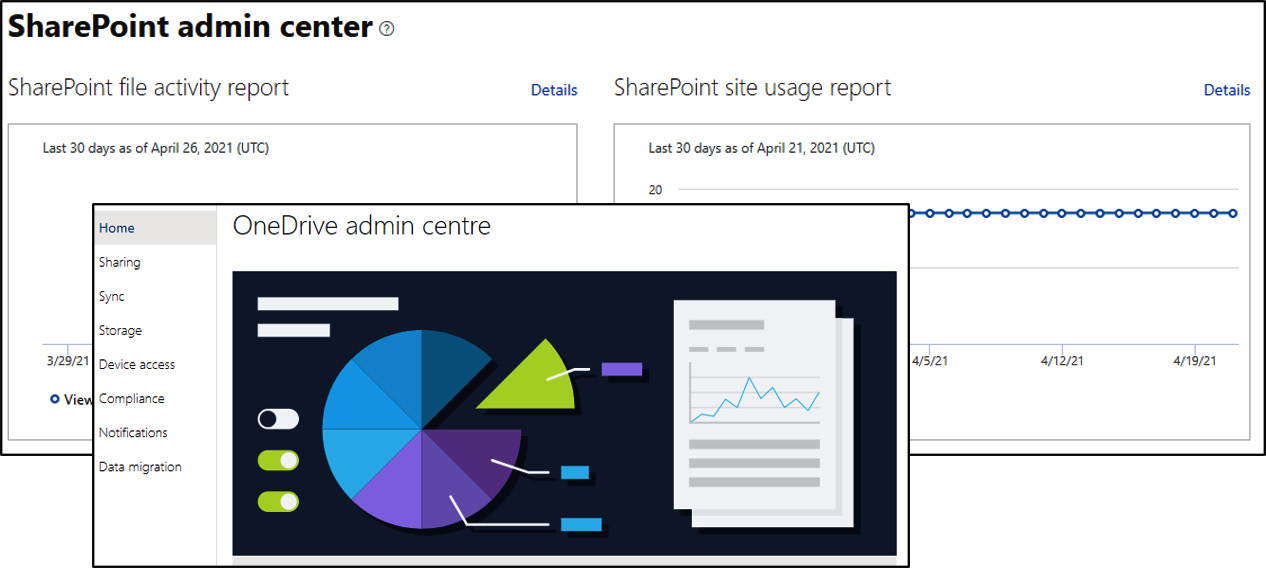
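Much of a CMDB's value comes from recording the relationships between CIs, so that the service desk can trace the impact of a failure to the business services that depend on it. A minimal Python sketch, assuming hypothetical CI names and a single "depends on" relationship type, might look like this:

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ConfigurationItem:
    ci_id: str
    ci_type: str                                   # e.g. "server", "application", "business service"
    attributes: dict[str, str] = field(default_factory=dict)
    depends_on: set[str] = field(default_factory=set)


class CMDB:
    def __init__(self) -> None:
        self.items: dict[str, ConfigurationItem] = {}

    def add(self, ci: ConfigurationItem) -> None:
        self.items[ci.ci_id] = ci

    def impacted_by(self, ci_id: str) -> set[str]:
        """Return every CI that directly or indirectly depends on ci_id (breadth-first)."""
        impacted: set[str] = set()
        queue = deque([ci_id])
        while queue:
            current = queue.popleft()
            for item in self.items.values():
                if current in item.depends_on and item.ci_id not in impacted:
                    impacted.add(item.ci_id)
                    queue.append(item.ci_id)
        return impacted
```

For example, if a database server fails, `impacted_by()` lists the ERP application hosted on it and the payroll business service that relies on that application.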

Content management systems

A Content Management System (CMS) allows for the management and day-to-day administration of enterprise data using a web portal.

An administrator can delegate responsibility to privileged users who can assist in the management of resources.

Typical requirements are to grant users access to content and, where necessary, allow them to create, edit, and delete it. Version control is also important: a document can be checked out for editing while remaining available for read access to authorized users of the system.
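The check-out behavior described above can be sketched as follows: one editor holds the check-out, while authorized readers continue to see the last published version until the editor checks the document back in. The `Document` class and its reader and editor sets are hypothetical; products such as SharePoint implement this with their own permission and versioning model.

```python
from typing import Optional


class Document:
    """Hypothetical CMS document with check-out/check-in version control."""

    def __init__(self, title: str, content: str, readers: set[str], editors: set[str]) -> None:
        self.title = title
        self.versions = [content]            # published versions, oldest first
        self.readers = readers | editors     # editors can always read
        self.editors = editors
        self.checked_out_by: Optional[str] = None
        self._draft: Optional[str] = None

    def read(self, user: str) -> str:
        if user not in self.readers:
            raise PermissionError(f"{user} cannot read {self.title}")
        return self.versions[-1]             # readers see the last published version

    def check_out(self, user: str) -> None:
        if user not in self.editors:
            raise PermissionError(f"{user} cannot edit {self.title}")
        if self.checked_out_by is not None:
            raise RuntimeError(f"already checked out by {self.checked_out_by}")
        self.checked_out_by = user
        self._draft = self.versions[-1]

    def edit(self, user: str, new_content: str) -> None:
        if self.checked_out_by != user:
            raise RuntimeError("check the document out before editing")
        self._draft = new_content

    def check_in(self, user: str) -> int:
        if self.checked_out_by != user:
            raise RuntimeError("only the user who checked the document out can check it in")
        self.versions.append(self._draft)
        self.checked_out_by, self._draft = None, None
        return len(self.versions)            # the new version number
```

Keeping every published version also allows an administrator to roll back to an earlier copy if an edit turns out to be wrong.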

Microsoft 365 uses SharePoint to allow participants to interact with a web-based portal in an easy-to-use and intuitive way (normally presented to the end user as OneDrive or Microsoft Teams). Figure 2.15 shows this CMS system:

Figure 2.15 – Microsoft SharePoint

Without access to these enterprise applications, an organization might become uncompetitive in its area of operations. Competitors using these enterprise toolsets will gain a business advantage, while the organization will be unable to track its resources and assets. Day-to-day management of the enterprise will be difficult, and long-term planning goals will become harder to achieve.

Integration enablers

It is important to consider the less glamorous services that work unseen in the background, much like the key workers who drive buses, provide healthcare services, deliver freight, and so on. Without these services, staff would not be able to travel to work, remain in good health, or have any inventory to manufacture products. The following sub-sections list the common integration enablers.

Directory services

The Lightweight Directory Access Protocol (LDAP) is an open, vendor-neutral protocol for accessing and managing directory services. Version 3 (LDAPv3) is an internet standard documented in RFC 4511.

There are many vendors who provide directory services, including Microsoft (Active Directory), IBM, and Oracle. Directory services are used to share information about applications, users, groups, services, and networks across the enterprise network.

Directory services provide organized sets of records, often with a hierarchical structure (based upon the X.500 standards), such as a corporate email directory. You can think of a directory service like a telephone directory, which is a list of subscribers with their addresses and phone numbers. LDAP uses TCP port 389, and LDAP over TLS (LDAPS) uses TCP port 636.

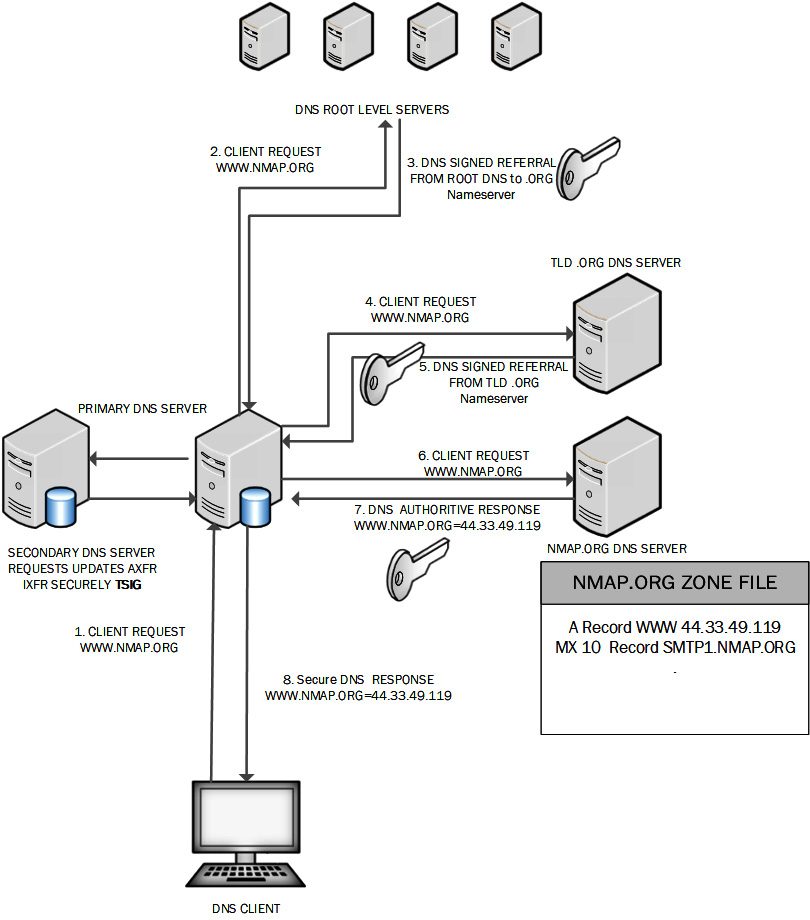
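Each directory entry is identified by a distinguished name (DN) that reads from the most specific component up to the root, for example `cn=Jane Doe,ou=Sales,dc=example,dc=com`; this is what gives the directory its X.500-style hierarchy. The Python sketch below models that tree in memory and performs a search over a subtree, similar in spirit to an LDAP search with subtree scope. It is not an LDAP client, the entries are hypothetical, and the naive comma split ignores the escaping rules for DNs defined in RFC 4514.

```python
def parse_dn(dn: str) -> tuple[tuple[str, str], ...]:
    """Split a DN into (attribute, value) pairs, most specific first.

    Simplification: assumes no escaped commas and no multi-valued RDNs.
    """
    pairs = []
    for rdn in dn.split(","):
        attr, _, value = rdn.strip().partition("=")
        pairs.append((attr.strip().lower(), value.strip()))
    return tuple(pairs)


class Directory:
    """Hypothetical in-memory directory keyed by parsed DN."""

    def __init__(self) -> None:
        self.entries: dict[tuple, dict[str, str]] = {}

    def add(self, dn: str, attributes: dict[str, str]) -> None:
        self.entries[parse_dn(dn)] = attributes

    def search(self, base_dn: str, **filters: str) -> list[dict[str, str]]:
        """Subtree search: entries at or below base_dn whose attributes match all filters."""
        base = parse_dn(base_dn)
        results = []
        for dn, attrs in self.entries.items():
            # An entry is in the subtree if its DN ends with the base DN.
            in_subtree = len(dn) >= len(base) and dn[len(dn) - len(base):] == base
            if in_subtree and all(attrs.get(k) == v for k, v in filters.items()):
                results.append(attrs)
        return results
```

Searching from `ou=Sales,dc=example,dc=com` returns only the Sales entries, while searching from `dc=example,dc=com` covers the whole organization.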

Domain name system

The Domain Name System (DNS) is an important part of the TCP/IP protocol suite. It was implemented in 1984, and on UNIX servers the standard implementation was the Berkeley Internet Name Domain (BIND) software. Common user applications such as web browsers allow the user to type user-friendly domain names, such as www.bbc.com or www.sky.com, but these requests need to be routed across networks using Internet Protocol (IP) addresses. DNS translates domain names into IP addresses so applications can connect to services.
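To show what a DNS lookup actually sends, the following Python sketch builds a standard query message for an A (IPv4 address) record in the wire format defined by RFC 1035. It only constructs the bytes; sending them to a resolver on UDP port 53 and parsing the answer are left out, and the transaction ID used here is an arbitrary example value.

```python
import struct


def encode_name(domain: str) -> bytes:
    """Encode a domain name as length-prefixed labels ending with a zero byte."""
    out = b""
    for label in domain.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) <= 63:
            raise ValueError(f"invalid label: {label!r}")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"


def build_query(domain: str, txid: int, qtype: int = 1) -> bytes:
    """Build a recursive DNS query (QTYPE 1 = A record, QCLASS 1 = IN)."""
    flags = 0x0100  # standard query with the RD (recursion desired) bit set
    # Header: ID, flags, then counts of questions, answers, authority and additional records.
    header = struct.pack("!HHHHHH", txid, flags, 1, 0, 0, 0)
    question = encode_name(domain) + struct.pack("!HH", qtype, 1)
    return header + question
```

For `www.bbc.com`, the question section is encoded as `\x03www\x03bbc\x03com\x00` followed by the type and class. The resolver uses the 16-bit transaction ID to match the reply to its query.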

DNS is a critical service for organizations of any size. Without DNS, our enterprise users will not be able to access intranet services, customers will not be able to access e-commerce sites, and partners will not be able to connect to our APIs. To ensure high availability, there should be at least one secondary DNS name server, and a large organization will have many secondary DNS servers.

It is important for name resolution to be a trustworthy process. When an attacker succeeds in adding forged records to a DNS server's cache or a client's cache, this is known as DNS cache poisoning.

When a computer is redirected to a malicious website as a result of cache poisoning, it is called pharming. To ensure we have secure name resolution, we need to enable the DNSSEC extensions on all our DNS servers. There is a useful site dedicated to all things related to DNS security at https://www.dnssec.net/.

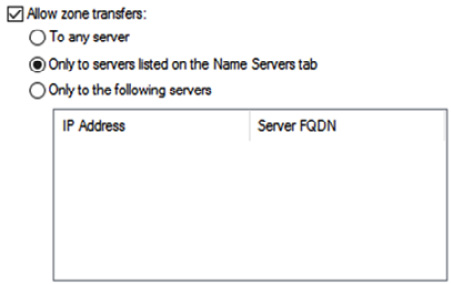

Authoritative name servers (those that host the DNS records for a domain) replicate updates using zone transfers (the zone file is the actual database of records). An incremental zone transfer (IXFR) copies only the records that have changed, while a full zone transfer (AXFR) copies the complete zone file. This process should be restricted to authorized DNS servers using Access Control Lists (ACLs), and any updates to DNS zone files must be trustworthy. RFC 2845 (since superseded by RFC 8945) defines Transaction Signatures (TSIG) for authenticating zone transfers and updates. When TSIG is used, the two systems share a secret key (in an Active Directory domain, Kerberos takes care of this). This key is used to generate a Hash-based Message Authentication Code (HMAC) that authenticates each DNS transaction between them.
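The two controls described above, an ACL on zone transfers and a shared-secret HMAC on each transaction, can be sketched together in Python. This is a simplified illustration: real TSIG (RFC 8945) computes the MAC over the DNS message plus specific TSIG fields and carries it in a TSIG resource record, whereas here the MAC covers the raw message bytes only. The secondary server addresses (taken from documentation ranges) and the key are hypothetical.

```python
import hashlib
import hmac
import ipaddress

# Hypothetical ACL: only these secondary name servers may request a zone transfer.
TRANSFER_ACL = [ipaddress.ip_network("192.0.2.53/32"), ipaddress.ip_network("198.51.100.0/29")]
SHARED_KEY = b"example-shared-secret"  # in practice a random key distributed out of band


def sign(message: bytes, key: bytes = SHARED_KEY) -> bytes:
    """Compute an HMAC-SHA256 over the message with the key both servers share."""
    return hmac.new(key, message, hashlib.sha256).digest()


def allow_transfer(source_ip: str, message: bytes, mac: bytes) -> bool:
    """Permit the transfer only if the source is listed in the ACL AND the MAC verifies."""
    addr = ipaddress.ip_address(source_ip)
    if not any(addr in net for net in TRANSFER_ACL):
        return False
    # compare_digest avoids leaking timing information during the comparison.
    return hmac.compare_digest(mac, sign(message))
```

Requiring both checks means an attacker needs a permitted source address and the shared key before a zone can be copied or altered.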

Figure 2.16 shows the security configuration for a zone file:

Figure 2.16 – DNS ACL

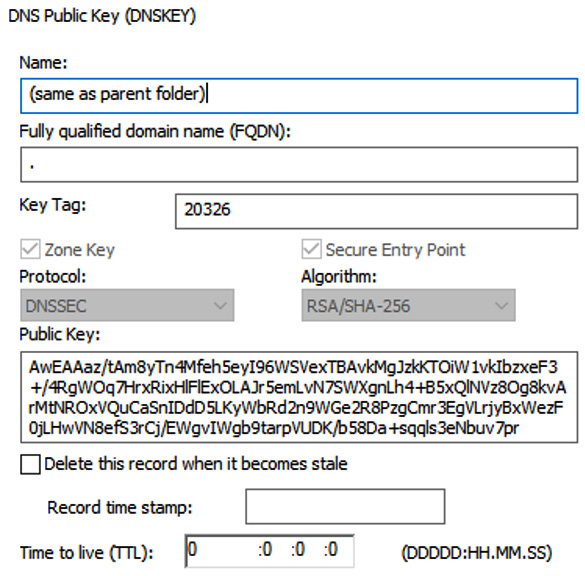

DNSSEC should be implemented to ensure protection from DNS cache poisoning.

To enable DNSSEC validation on your forwarding DNS servers, you must download and install the root zone's public key (the root trust anchor). On Microsoft DNS, this can be done using dnscmd (dnscmd /RetrieveRootTrustAnchors). Figure 2.17 shows a public key record used to validate DNSSEC responses:

Figure 2.17 – DNSSEC root public key

Once we have implemented DNSSEC, our DNS servers can perform lookups on behalf of the organization and validate that the responses are signed by trusted domains. Figure 2.18 shows the secure DNS lookup process:

Figure 2.18 – DNSSEC processing

DNSSEC ensures that responses from signed zones can be validated by resolvers. Zone transfers between primary and secondary DNS servers are protected by TSIG, which ensures that updates to the secondary server are trusted.
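The DNSSEC chain of trust rests on a simple check: the parent zone publishes a DS record containing a hash of the child zone's key-signing DNSKEY, so a validator can confirm the child's key before trusting signatures made with it, and the chain ends at the root trust anchor installed earlier. The Python sketch below computes the key tag (RFC 4034, Appendix B, valid for every algorithm except the obsolete RSA/MD5) and a SHA-256 DS digest (RFC 4509) for a DNSKEY record. The public key bytes used with it are placeholders rather than a real key, and verification of the signatures (RRSIG records) themselves is omitted.

```python
import hashlib
import struct


def wire_name(domain: str) -> bytes:
    """Canonical (lower-case) DNS wire-format name; the root is a single zero byte."""
    labels = [label for label in domain.lower().rstrip(".").split(".") if label]
    return b"".join(bytes([len(label)]) + label.encode("ascii") for label in labels) + b"\x00"


def dnskey_rdata(flags: int, algorithm: int, public_key: bytes) -> bytes:
    # Flags (257 = key-signing key), Protocol (always 3), Algorithm (8 = RSA/SHA-256), key bytes.
    return struct.pack("!HBB", flags, 3, algorithm) + public_key


def key_tag(rdata: bytes) -> int:
    """Key tag checksum from RFC 4034, Appendix B."""
    acc = 0
    for i, byte in enumerate(rdata):
        acc += byte if i & 1 else byte << 8
    acc += (acc >> 16) & 0xFFFF
    return acc & 0xFFFF


def ds_digest(owner: str, rdata: bytes) -> str:
    """SHA-256 DS digest (digest type 2) over the owner name plus the DNSKEY RDATA."""
    return hashlib.sha256(wire_name(owner) + rdata).hexdigest()


def child_key_trusted(owner: str, rdata: bytes, parent_ds: str) -> bool:
    # A validator accepts the child's key only if it hashes to the DS held by the parent.
    return ds_digest(owner, rdata) == parent_ds.lower()
```

The key tag lets a validator quickly pick the right DNSKEY when a zone publishes several; the DS digest comparison is what actually links the child's key to the signed parent zone.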

Service-oriented architecture

Service-Oriented Architecture (SOA) is an architectural approach in which application functionality is delivered as loosely coupled, reusable services, as opposed to legacy or monolithic approaches.

Originally, many systems were developed as single, non-reusable units. For example, a large customer can buy a complete ERP system, but a smaller customer may want only some of the functionality (perhaps just the human resources and financial elements). With legacy or monolithic approaches, developers could not easily provide these two modules independently.

With a more modular approach, developers can package functions as separate modules or services and provide them through standard interfaces. Services communicate using a standard protocol over a network. Each service is a single functional unit that can be accessed remotely; for example, a customer can check the tracking status of a purchased item without needing access to the entire sales order processing application.

Examples of SOA technologies include the following:

- Simple Object Access Protocol (SOAP)

- RESTful HTTP

- gRPC (a Remote Procedure Call framework developed by Google)

- Apache Thrift (developed by Facebook)
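As a minimal illustration of a single service exposed over a network, the Python sketch below runs an order-tracking service that answers RESTful HTTP requests with JSON, using only the standard library. The `/orders/<id>` path, the order data, and the use of a random local port are hypothetical choices; a production service would add authentication, TLS, and input validation.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.request import urlopen

# Hypothetical data owned by the order-tracking service alone.
ORDERS = {"1001": {"status": "shipped", "carrier": "ExampleFreight"}, "1002": {"status": "packing"}}


class OrderTrackingHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        prefix = "/orders/"
        order = ORDERS.get(self.path[len(prefix):]) if self.path.startswith(prefix) else None
        body = json.dumps(order if order is not None else {"error": "not found"}).encode()
        self.send_response(200 if order is not None else 404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep the example quiet


def start_service() -> ThreadingHTTPServer:
    # Port 0 asks the operating system for any free port.
    server = ThreadingHTTPServer(("127.0.0.1", 0), OrderTrackingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def track(server: ThreadingHTTPServer, order_id: str) -> dict:
    """Client side: the consumer needs only the service's URL, not the whole sales system."""
    host, port = server.server_address[:2]
    with urlopen(f"http://{host}:{port}/orders/{order_id}") as resp:
        return json.load(resp)
```

The consumer of `track()` knows nothing about how orders are stored, which is the point of service orientation: the service can be reimplemented or moved as long as its interface stays the same.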

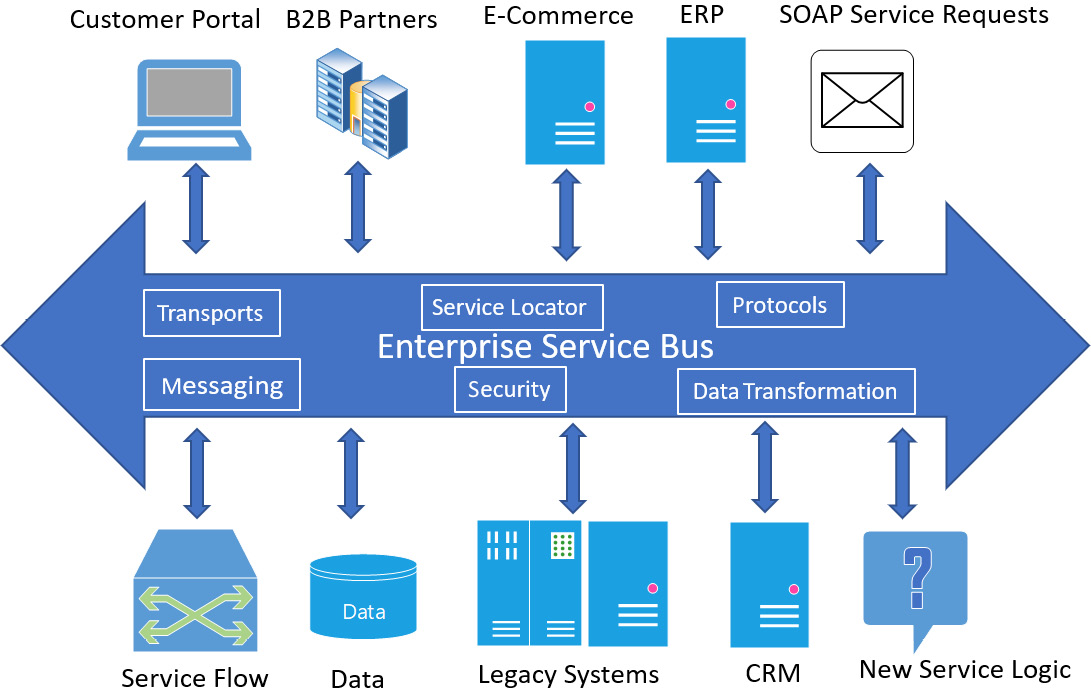

Enterprise service bus

An Enterprise Service Bus (ESB) is used to integrate many different architectures and protocols.

A challenge facing many vendors is integration across competing standards. Legacy information systems built on IBM Systems Network Architecture (SNA), developed for mainframe computers in the 1970s, do not communicate directly on a TCP/IP network and do not typically host applications that use modern standards-based communication. Without an ESB, these systems would effectively be obsolete.

It is possible to implement an SOA without an ESB, but this would be equivalent to building a huge shopping mall with multiple merchants ready for business but without roads, transportation, and utility services. There would be no easy way to access these merchants' services.

The term middleware is sometimes used to describe these types of connectivity services. We can see the components of an ESB in Figure 2.19:

Figure 2.19 – ESB

Many large enterprises require this technology, because their complex, heterogeneous systems and workloads depend on it for integration.
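The core ESB ideas, translating inbound formats into a canonical message and routing that message to subscribed services, can be sketched in a few lines of Python. The fixed-width legacy record layout, the topic names, and the `ServiceBus` class are hypothetical; real ESB products add reliable messaging, security policy enforcement, and many protocol adapters.

```python
import json
from collections import defaultdict
from typing import Callable


class ServiceBus:
    """Hypothetical minimal bus: adapters translate inbound formats, then topics route messages."""

    def __init__(self) -> None:
        self.adapters: dict[str, Callable[[str], dict]] = {}
        self.subscribers: dict[str, list[Callable[[str], None]]] = defaultdict(list)

    def register_adapter(self, source_format: str, adapter: Callable[[str], dict]) -> None:
        self.adapters[source_format] = adapter

    def subscribe(self, topic: str, handler: Callable[[str], None]) -> None:
        self.subscribers[topic].append(handler)

    def publish(self, topic: str, payload: str, source_format: str = "json") -> int:
        """Normalize the payload to canonical JSON, deliver it, and return the delivery count."""
        if source_format == "json":
            message = json.loads(payload)
        else:
            message = self.adapters[source_format](payload)
        canonical = json.dumps(message, sort_keys=True)
        for handler in self.subscribers[topic]:
            handler(canonical)
        return len(self.subscribers[topic])


def legacy_fixed_width(record: str) -> dict:
    """Adapter for a hypothetical mainframe record: 6-char account, 10-digit amount in cents."""
    return {"account": record[:6].strip(), "amount": int(record[6:16]) / 100}
```

With the adapter registered, a fixed-width record such as `"AC0042" + "0000012550"` from a legacy system and a JSON message from a modern web application both reach subscribers in the same canonical form, so neither side needs to understand the other's format.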

Summary

In this chapter, we have taken a look at frameworks used for developing or commissioning new services or software (the SDLC and SDL). We have covered how systems and services can be built securely. As a security professional, it is important to understand how we can provide assurance that products meet the appropriate levels of trust. We have learned how to deploy services that can be considered trustworthy and meet recognized standards.

We have looked at automation through the deployment of DevOps pipelines, and at the cultural aspects of combining development and operations teams (DevOps) with a focus on security (SecDevOps).

We have examined different development methodologies to understand different approaches to meet customer requirements (waterfall, Agile, and spiral).

We have learned about the importance of testing, including integrated, static, and dynamic testing. We have looked at adopting secure testing environments, including staging and sandboxing. You have learned about the importance of baselines and templates to ensure standards compliance and security are built into new systems and software.

We have looked at guidance and best practices for software development, from government-funded guidance (NCSC), to community-based guidance (OWASP) and commercial enterprises (Microsoft SDL).

We have learned about the security implications of integrating enterprise applications, including CRM, ERP, CMDB, and CMS.

Integration enablers are key to any large organization. You should now understand the importance of key services such as DNS, directory services, SOA, and ESB.

In this chapter, you have gained the following skills:

- Learned key concepts of the SDLC, including the methodology and security frameworks

- Gained an understanding of DevOps and SecDevOps

- Learned different development approaches, including Agile, waterfall, and spiral

- Gained an understanding of software QA, including sandboxing, DevOps pipelines, continuous operations, and static and dynamic testing

- Gained an understanding of the importance of baselines and templates, including NCSC-recommended approaches, OWASP industry standards, and Microsoft SDL

- Gained an understanding of the importance of integration enablers, including DNS, directory services, SOA, and ESB

These skills will be useful in the next chapter, when we take a journey through the available cloud and virtualization platforms.

Questions

Here are a few questions to test your understanding of the chapter:

- Which of the following is a container API?

  - A. VMware

  - B. Kubernetes

  - C. Hyper-V

  - D. Docker

- Why would a company adopt secure coding standards? Choose all that apply.

  - A. To ensure most privilege

  - B. To adhere to the principle of least privilege

  - C. To sanitize data sent to other systems

  - D. To practice defense in depth

  - E. To deploy effective QA techniques

- Why does Microsoft have an application-vetting process for Windows Store applications?

  - A. To ensure products are marketable

  - B. To ensure applications are stable and secure

  - C. To make sure patches will be made available

  - D. To ensure HTTP is used instead of HTTPS

- What are the most important considerations for a development team validating third-party libraries? Choose two.

  - A. Third-party libraries may have vulnerabilities.

  - B. Third-party libraries may be incompatible.

  - C. Third-party libraries may not support DNSSEC.

  - D. Third-party libraries may have licensing restrictions.

- What is the advantage of using the DevOps pipeline methodology?

  - A. Long lead times

  - B. Extensive pre-deployment testing

  - C. Continuous delivery

  - D. Siloed operations and development environments

- What is the importance of software code signing?

  - A. Encrypted code modules

  - B. Software QA

  - C. Software integrity

  - D. Software agility

- Which of the following is a common tool used to perform Dynamic Application Security Testing (DAST)?

  - A. Network enumerator

  - B. Sniffer

  - C. Fuzzer

  - D. Wi-Fi analyzer

- What type of code must we have to perform Static Application Security Testing (SAST)?

  - A. Compiled code

  - B. Dynamic code

  - C. Source code

  - D. Binary code

- What will my sales team use to manage sales opportunities?

  - A. CRM

  - B. ERP

  - C. CMDB

  - D. DNS

- What would be a useful tool to integrate all business functions within an enterprise?

  - A. CRM

  - B. ERP

  - C. CMDB

  - D. DNS

- What would be a useful tool to track all configurable assets within an enterprise?

  - A. CRM

  - B. ERP

  - C. CMDB

  - D. DNS

- How can I ensure content is made accessible to the appropriate users through my web-based portal?

  - A. CRM

  - B. CMS

  - C. CMDB

  - D. CCMP

- How can I protect my DNS servers from cache poisoning?

  - A. DMARC

  - B. DNSSEC

  - C. Strict Transport Security

  - D. IPSEC

- What is it called when software developers break up code into modules, each one being an independently functional unit?

  - A. SOA

  - B. ESB

  - C. Monolithic architecture

  - D. Legacy architecture

- What is the most important consideration when planning for system end of life?

  - A. To ensure systems can be re-purposed

  - B. To ensure there are no data remnants

  - C. To comply with environmental standards

  - D. To ensure systems do not become obsolete

- What type of software testing is used when there has been a change within the existing environment?

  - A. Regression testing

  - B. Pen testing

  - C. Requirements validation

  - D. Release testing

- What is it called when the development and operations teams work together to ensure that code released to the production environment is secure?

  - A. DevOps

  - B. Team-building exercises

  - C. Tabletop exercises

  - D. SecDevOps

- What software development approach would involve regular meetings with the customer and developers throughout the development process?

  - A. Agile

  - B. Waterfall

  - C. Spiral

  - D. Build and Fix

- What software development approach would involve meetings with the customer and developers at the end of a development cycle, allowing for changes to be made for the next iteration?

  - A. Agile

  - B. Waterfall

  - C. Spiral

  - D. Build and Fix

- What software development approach would involve meetings with the customer and developers at the definition stage and then at the end of the development process?

  - A. Agile

  - B. Waterfall

  - C. Spiral

  - D. Build and Fix

- Where will we ensure the proper HTTP headers are configured?

  - A. Domain Controller

  - B. DNS server

  - C. Web server

  - D. Mail server

Answers

- B

- B, C, D and E

- B

- A and D

- C

- C

- C

- C

- A

- B

- C

- B

- B

- A

- B

- A

- D

- A

- C

- B

- C