Chapter 8: Implementing Incident Response and Forensics Procedures

When considering all the threats that can impact an organization, it is important to ensure there are policies and procedures in place to deal with unplanned security-related events. To ensure timely responses to security incidents, we should implement detailed planning to provide controls and mitigation. It is important, given the nature of sophisticated, well-funded adversaries, that we use a holistic approach when deploying appropriate threat detection capabilities. Some approaches may involve automation, which can lead to occasional mistakes (false positives and false negatives), so it is important that we also ensure we include humans in the loop. The ever-increasing complexity of attacks and a large security footprint add to these challenges. There is also evidence that Advanced Persistent Threat (APT) actors are likely to target vulnerable organizations. Countering APTs may require that we use advanced forensics to detect Indicators of Compromise (IOCs) and, where necessary, collect evidence to formulate a response.

In this chapter, we will cover the following topics:

- Understanding incident response planning

- Understanding the incident response process

- Understanding forensic concepts

- Using forensic analysis tools

Understanding incident response planning

An organization of any size must have effective cyber-security Incident Response Plans (IRPs). In the case of regulated industries, it may be a regulatory requirement that they have appropriate plans and procedures to mitigate the damage caused by security-related incidents. The Federal Information Security Management Act (FISMA) has very strict requirements for appropriate plans to be in place for federal agencies and contractors. These requirements include providing at least two points of contact with the United States Computer Emergency Readiness Team (US-CERT) for reporting purposes. NIST SP800-61, titled Computer Security Incident Handling Guide, offers guidance on effective incident response planning for federal agencies and commercial enterprises. When developing a plan, it is important to identify team members, team leaders, and an escalation process. Team members must be available 24/7, as we cannot predict when an incident will occur. Stakeholder involvement is important while developing the plan. Legal counsel will be important, as will other parts of the business such as human resources, which can advise on the feasibility of the plan concerning employee discipline. Comprehensive guidance is available within the NIST SP800-61 documentation at https://tinyurl.com/NIST80061R2. For organizations in the United Kingdom, there are very useful resources available from the National Cyber Security Centre (NCSC); see the following URL: https://tinyurl.com/ncscirt.

Event classifications

There are many ways to identify anomalous or malicious events. We can take advantage of automated tools such as Intrusion Detection Systems (IDS) and Security Information and Event Management (SIEM). We can also rely on manual detection and effective security awareness training for our staff, which can help in detecting threats early. Service desk technicians and first responders can also be effective in detecting malicious activity. Common Attack Pattern Enumeration and Classification (CAPEC) was established by the US Department of Homeland Security to provide the public with a documented database of attack patterns. This database contains hundreds of references to different vectors of attack. More information can be found at http://capec.mitre.org/data/index.html.

Triage event

It is important to assess the type and severity of the incident that has occurred. You can think of triage as the work that's done to identify what has happened. The term is borrowed from emergency room procedures, where a patient in the ER is triaged to determine what needs to be treated first. Triage enables an appropriate response to be made based on urgency and identifies the team members that are required to respond. It is important to understand which systems are critical to enterprise operations, as these need to be prioritized. This information is generally available if Business Impact Analysis (BIA) has been undertaken. BIA will identify mission-essential services and their importance to the enterprise. BIA will be covered in detail in Chapter 15, Business Continuity and Disaster Recovery Concepts. As there will be a finite number of resources to deal with the incident, it is important to prioritize by business impact rather than rely on a first-come, first-served approach.
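The prioritization described above can be sketched in a few lines of Python. This is only an illustration: the 1 to 5 severity scale, the BIA criticality scores, and the system names are all hypothetical and do not come from any standard.

```python
from dataclasses import dataclass

# Hypothetical BIA output: a higher score means the system is more
# critical to enterprise operations.
BIA_CRITICALITY = {"payroll-db": 5, "web-shop": 4, "intranet-wiki": 1}


@dataclass
class Incident:
    ident: str
    system: str
    severity: int        # assumed scale: 1 (low) to 5 (critical)
    reported_order: int  # arrival order, used only as a tie-breaker


def triage(incidents):
    """Order incidents by business impact rather than by arrival time."""
    def priority(incident):
        criticality = BIA_CRITICALITY.get(incident.system, 1)
        # Negate the score so that the highest impact sorts first.
        return (-(criticality * incident.severity), incident.reported_order)
    return sorted(incidents, key=priority)


queue = triage([
    Incident("INC-1", "intranet-wiki", 3, 1),
    Incident("INC-2", "payroll-db", 4, 2),
    Incident("INC-3", "web-shop", 2, 3),
])
print([incident.ident for incident in queue])
```

Although INC-1 arrived first, it affects the least critical system and so ends up last in the queue.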

Understanding the incident response process

The incident response process is broken down into six distinct phases. Each of these phases is important and must be completed before moving forward. The following diagram shows these distinct phases:

Figure 8.1 – Incident response process

Now, let's discuss these six phases.

Preparation

While preparing for an IRP, it is a good practice to harden systems and mitigate security vulnerabilities to ensure a strong security posture is in place. In the preparation phase, it is normal to increase the enterprise's resilience by focusing on all the likely attack vectors. Some of the tasks that should be addressed to prepare your organization for attacks include the following:

- Perform risk assessments

- Harden host systems

- Secure networks

- Deploy anti-malware

- Implement user awareness training

It is important to identify common attack vectors. While it is almost impossible to foresee all possible attack vectors, common ones can be documented and incorporated into playbooks.

The following diagram shows some common attack vectors to consider:

Figure 8.2 – Common attack vectors

Although the preparation phase will increase the security posture, we must also have the means to detect security incidents.

Detection

To respond to a security incident, we must detect anomalous activity. Attack vectors vary and can include unauthorized software, external media, email attachments, DoS, theft of equipment, and impersonation, to name a few. We must have the means to identify new and emerging threats. Common methods include IDP, SIEM, antivirus, anti-spam, FIM software, data loss prevention technology, and third-party monitoring services. Logs from key services and network flows (NetFlow and sFlow) can also help detect unusual activity. First responders, such as service desk technicians, may also be able to confirm IOCs when responding to user calls.

Once we have detected such security-related events, we must analyze the activity to prepare a response.

Analysis

Not every reported security event or automatic alert is necessarily going to be malicious; unexpected user behavior or errors may result in false reporting. It is important to discard normal/non-malicious events at this stage. When a security-related event is incorrectly reported, it can result in the following:

- False positives: This is where an event is treated as a malicious or threatening activity but turns out to be benign.

- False negatives: This is when a malicious event is considered non-threatening and no subsequent action is taken when there is a threat.

When the correct tools and investigative practices are followed, then the correct diagnosis should be made, resulting in the following:

- True positives: This means that the event has been identified as malicious and the appropriate action can be taken.

- True negatives: This means that an event has been correctly identified as non-threatening and no remedial action needs to be taken.
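The four outcomes above can be expressed as a simple decision over two facts: whether the event was flagged as malicious, and whether it really was malicious. The following sketch is illustrative only; the sample verdicts are invented.

```python
from collections import Counter


def classify_outcome(flagged_malicious: bool, actually_malicious: bool) -> str:
    """Map a detection verdict and the ground truth to one of four outcomes."""
    if flagged_malicious and actually_malicious:
        return "true positive"
    if flagged_malicious and not actually_malicious:
        return "false positive"
    if not flagged_malicious and actually_malicious:
        return "false negative"
    return "true negative"


# Hypothetical analyst findings: (tool flagged the event, event was malicious)
events = [(True, True), (True, False), (False, True), (False, False), (True, False)]
summary = Counter(classify_outcome(flagged, real) for flagged, real in events)
print(summary)
```

Tracking these counts over time gives the IRT a rough measure of how well detection tools are tuned.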

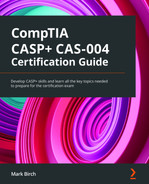

It is important to document the incident response process using issue tracking systems, which should be readily available for IRT members. The following diagram shows information that should be recorded in this application database:

Figure 8.3 – Incident documentation

Security and confidentiality must be maintained for this information.

Containment

It is important to devise containment strategies for different types of incidents. If the incident is a crypto-malware attack or fast-spreading worm, then the response will normally involve quickly isolating the affected systems or network segment. If the attack is a DoS or DDoS that's been launched against internet-facing application servers, then the approach may involve implementing a Remote Triggered Black Hole (RTBH) or working with an ISP offering DDoS mitigation services.

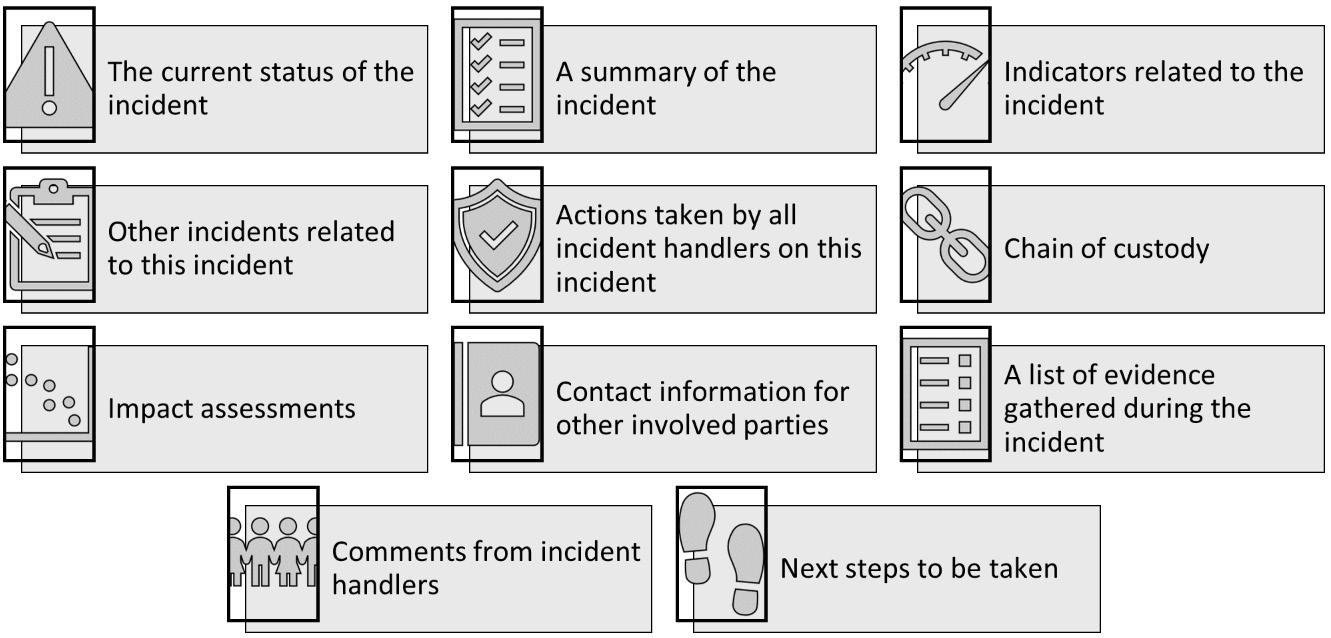

The following diagram shows the criteria that should be considered when planning containment strategies:

Figure 8.4 – Containment strategy

Some containment strategies may involve further analysis of the attack by containing the activity within a sandbox.

Eradication and recovery

Once the incident has been contained, it may be necessary to eradicate malware or delete malicious accounts before the recovery process can begin. Recovery may require replacing damaged hardware, reconfiguring the hardware and software, and restoring from backups. It is important to have access to documentation, including implementation guides, configuration guides, and hardening checklists. This information is typically available within the Disaster Recovery Plan (DRP).

Lessons learned

An important part of the incident response process is the after-action report, which allows improvements to be made to the process. What went well or not well should be addressed at this point. The team should perform this part of the exercise within days of the incident. The following diagram highlights some of the issues that may arise from a lessons learned exercise:

Figure 8.5 – Lessons learned

Lessons learned will allow us to understand what improvements are necessary for the IRP.

Specific response playbooks/processes

It is important to plan for as many scenarios as possible. Typical scenarios could include ransomware, data exfiltration, social engineering, and DDoS, to name a few.

Many resources are available that an organization can use to prepare for common scenarios. For example, NIST includes an appendix in NIST SP800-61 documenting multiple incident handling scenarios. This document can be found at the following URL: https://tinyurl.com/SP80061.

When considering response actions, we will need to create manual and automated responses.

Non-automated response methods

If manual intervention is required, there should be clear documentation, including CMDB, network diagrams, escalation procedures, and contact lists. If the incident type has not been seen before, all the actions must be captured and documented to create a playbook for the future.

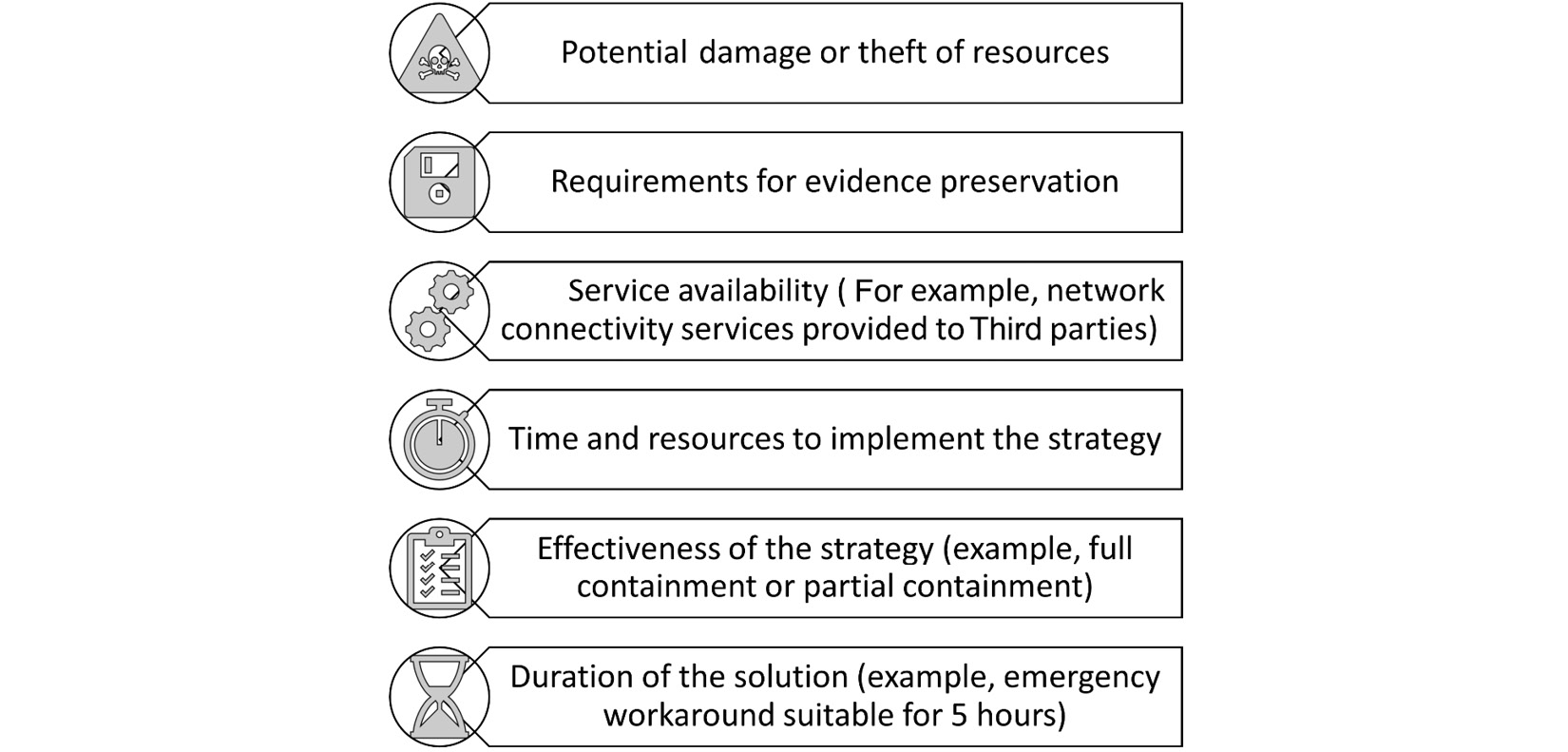

Playbooks

Playbooks can be used to respond to an incident by giving security professionals a set of checks and actions to work through common security scenarios. This can be very beneficial in reducing response times and containing the incident. The following diagram shows a playbook that's been created to help handle a malware incident:

Figure 8.6 – Playbook for a malware incident

Playbooks can be very useful as they provide a step-by-step set of actions to handle typical incident scenarios.

Automated response methods

Automated responses may be triggered by a human in the loop, who activates the response, or they may be fully automated. Let's look at some examples of these approaches.

Runbooks

A runbook allows first responders and other Incident Response Team (IRT) members to recognize common scenarios, and it documents the steps to contain and recover from the incident. It could be a set of discrete instructions to add a rule to a firewall or to restart a web server during the recovery phase within incident response. Runbooks do not include multiple decision points (this is what playbooks are used for), so they are ideal for automated responses and will work well when integrated within Security Orchestration, Automation, and Response (SOAR).
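Because a runbook has no decision points, it can be modeled as an ordered list of discrete steps that are executed one after another. The sketch below simulates this idea; the step functions only update a dictionary and do not call any real firewall or web server API, and the address used is from a documentation-only range.

```python
def add_firewall_rule(state):
    """Simulated step: record that an address has been blocked."""
    state["blocked"].append("203.0.113.10")  # documentation-range address
    return "blocked 203.0.113.10"


def restart_web_server(state):
    """Simulated step: mark the web server as running again."""
    state["web_server"] = "running"
    return "web server restarted"


# A runbook is a fixed sequence: no branching, no decision points.
RUNBOOK = [add_firewall_rule, restart_web_server]


def execute(runbook, state):
    """Run every step in order and return an audit log of what was done."""
    return [(step.__name__, step(state)) for step in runbook]


state = {"blocked": [], "web_server": "stopped"}
audit_log = execute(RUNBOOK, state)
for step_name, result in audit_log:
    print(step_name, "->", result)
```

A playbook, by contrast, would need conditional logic to choose between steps, which is why it is harder to automate fully.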

Security Orchestration, Automation, and Response (SOAR)

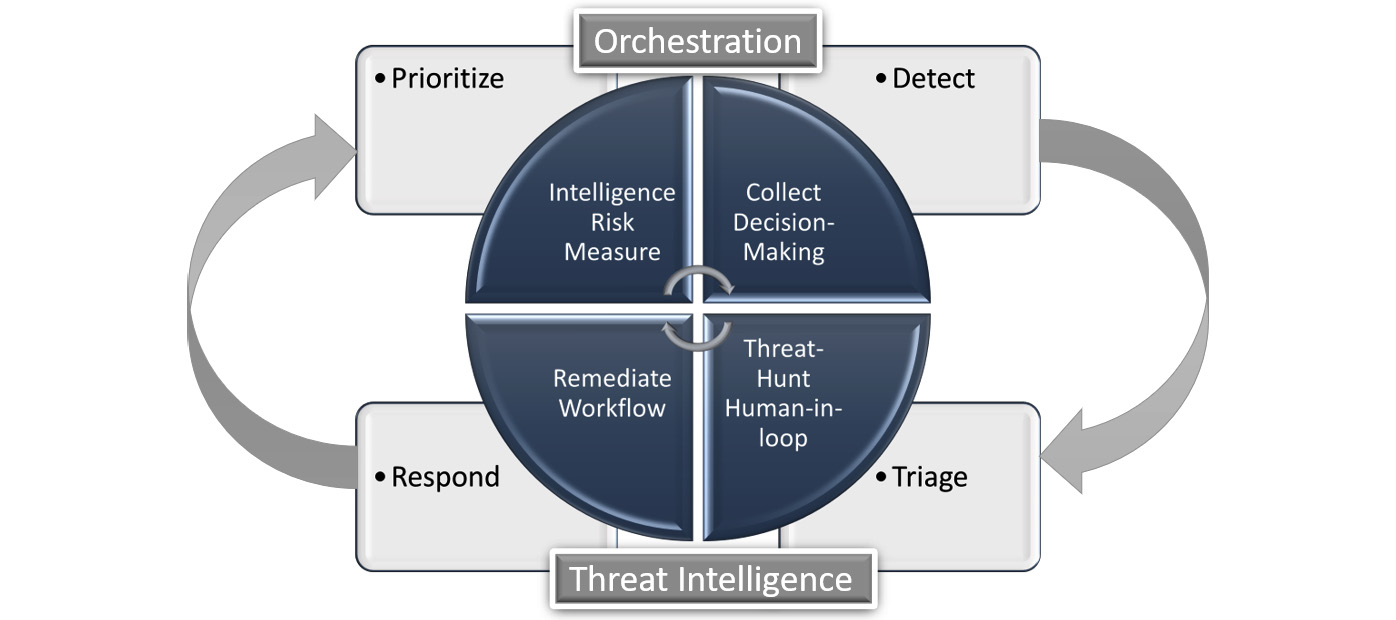

SOAR allows an organization to use advanced detection capabilities to identify threats and then harness orchestration to respond to these threats. Using tools such as SIEM, we can detect IOCs and prepare a response. The response may be semi-automated using runbooks or fully automated. Orchestration allows for complex tasks to be defined using visual tools and scripting. The following diagram shows the workflow for a SOAR:

Figure 8.7 – SOAR workflow

There are many vendor solutions currently being offered to orchestrate security responses.

Communication plan

It is important to have an effective communication plan and effective stakeholder management.

The IRT may need to communicate with many external entities, and it is important to document these entities within the IRP. Some examples of these parties are shown in the following diagram:

Figure 8.8 – Communication plan

It is important to consider how an organization will communicate with media outlets. Rules of engagement should be established before the need arises to deal with reporting to news outlets.

Within incident response, there is a need for investigative tools to understand whether an incident is actually taking place and also to collect evidence for a legal response. The tools and techniques that are used will involve the use of computer forensics.

Understanding forensic concepts

An organization must be prepared to undertake computer forensics to support both legal investigations and internal corporate purposes. When considering different scenarios, it will be important to understand where external agencies or law enforcement need to be involved. If the investigation is to be performed by internal staff, then they should have the appropriate training and tools. Guidance for integrating forensic techniques into incident response is covered in NIST SP800-86. More information can be found here: https://tinyurl.com/nistsp80086.

Forensic process

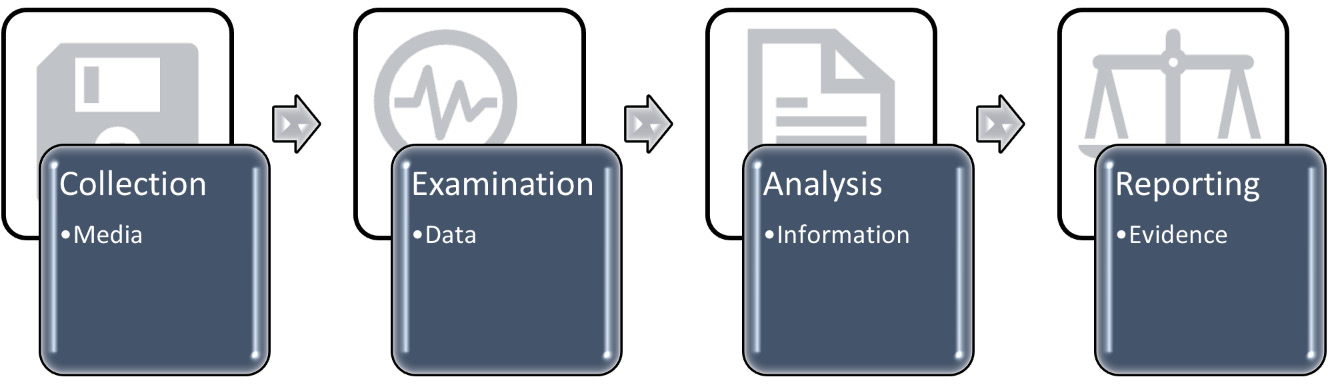

It is important to follow the correct forensic process. This can be broken down into four steps, as shown in the following diagram:

Figure 8.9 – Forensic process

These four steps are covered in the following list. We will discuss the appropriate tools to be used in more detail later in this chapter:

- Data collection involves identifying sources of data and the feasibility of accessing data. For data that's held outside the organization, a court order may be required to gain access to it. It is important to use validated forensic tools and procedures if the outcome is going to be a legal process.

- Once we have collected the data, we can examine the raw data. Such data could include extensive log files or thousands of emails from a messaging server; we can filter out the logs and messages that are not relevant.

- Data analysis can be performed on the data to correlate events and patterns. Automated tools can be used to search through the logged data and look for IOCs.

- During the reporting phase, decisions will be made as to what further action is applicable. Law enforcement will need detailed evidence to construct a legal case. Senior management, however, will require reports in a more business-orientated format to formulate a business response.

It is important to adhere to strict forensics procedures such as the order of volatility, so as not to miss crucial evidence. Also, a chain of custody should be created as the evidence is collected.

Chain of custody

A chain of custody form must begin when the evidence is collected. It is important to document relevant information about where and how the evidence was obtained. When the evidence changes hands, it is important that documentary evidence is recorded, detailing the transaction.

The following diagram shows a typical chain of custody form:

Figure 8.10 – Chain of custody form

Without this important documentation, a legal or disciplinary process may be difficult.
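A chain of custody form can be thought of as a record that starts at collection and gains an entry every time the evidence changes hands. The following is a minimal sketch of that idea; the field names and the sample values are hypothetical and are not taken from Figure 8.10.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


def _now():
    """Return the current UTC time as an ISO 8601 string."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class CustodyRecord:
    evidence_id: str
    description: str
    collected_by: str
    collected_from: str
    collected_at: str = field(default_factory=_now)
    transfers: list = field(default_factory=list)

    @property
    def current_custodian(self):
        """The last person to receive the evidence, or the collector."""
        if self.transfers:
            return self.transfers[-1]["received_by"]
        return self.collected_by

    def transfer(self, released_by, received_by, reason):
        """Record a handover; only the current custodian may release."""
        if released_by != self.current_custodian:
            raise ValueError(f"{released_by} does not hold {self.evidence_id}")
        self.transfers.append({
            "released_by": released_by,
            "received_by": received_by,
            "reason": reason,
            "at": _now(),
        })


record = CustodyRecord("EV-001", "Laptop hard disk", "A. Responder", "Office 2.14")
record.transfer("A. Responder", "B. Analyst", "forensic imaging")
print(record.current_custodian, len(record.transfers))
```

Rejecting a transfer from anyone other than the current custodian means a gap in the chain shows up as an error instead of passing silently.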

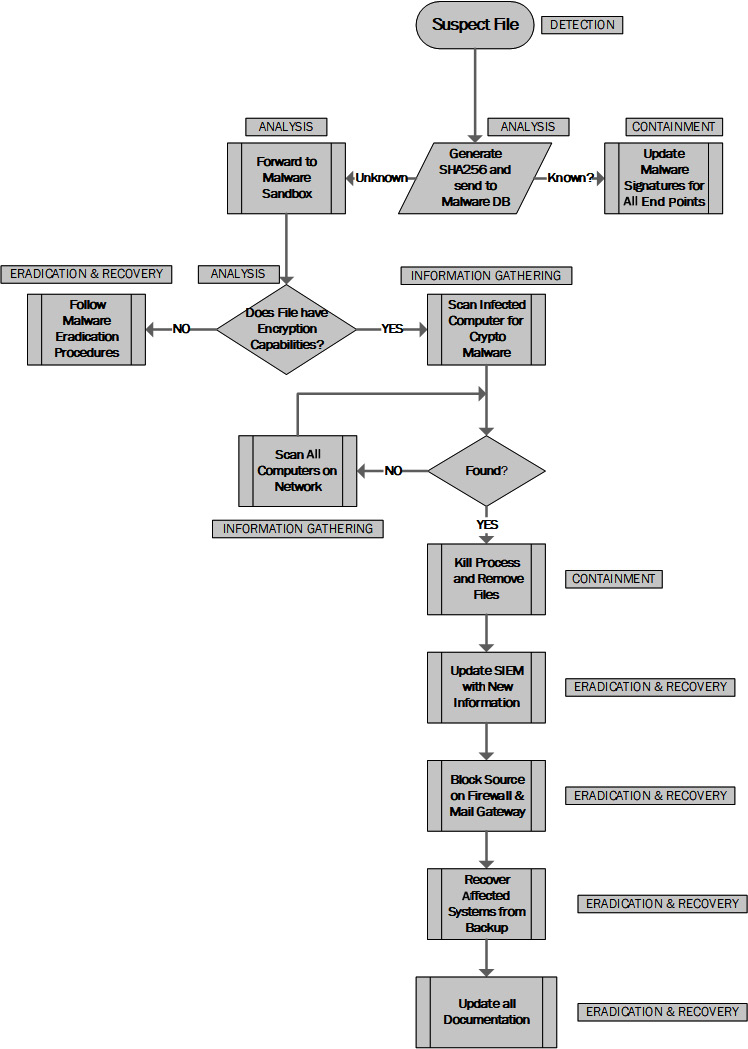

Order of volatility

When you're undertaking computer forensics, it is important to follow standards and accepted practices. Failure to do so may result in a lack of evidence or evidence that is inadmissible. RFC 3227 is the Internet Engineering Task Force (IETF) guideline for collecting and archiving evidence that will be used to investigate data breaches or provide evidence of IOCs. The most volatile data is held in CPU registers and cache, which are constantly being overwritten, while archived media is long term and is stored on paper, a backup tape, or WORM storage. The following screenshot shows some of the storage locations that should be addressed:

Figure 8.11 – Evidence locations

Specialist forensics tools will be required to capture this data.

The order in which efforts are made to safeguard the evidence is important. Allowing the computer to enter hibernation or sleep mode will alter the memory's characteristics and result in missing forensic artifacts. The following diagram shows the correct order of volatility:

Figure 8.12 – Order of volatility

It is important that this order is followed, as failure to do so will likely result in missing evidence.

The list is to be followed in a top-down fashion, with registers and the cache being the most volatile and archived media being the least volatile. Let's look at some common tools that we can use to collect such evidence.
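The top-down rule can be sketched as a sort over the evidence sources that are available on a given system. The ordering below paraphrases the example list in RFC 3227 and may be worded differently from Figure 8.12; the function name is hypothetical.

```python
# Most volatile first, paraphrased from the example order in RFC 3227.
ORDER_OF_VOLATILITY = [
    "registers and cache",
    "routing table, ARP cache, process table, kernel statistics, memory",
    "temporary file systems",
    "disk",
    "remote logging and monitoring data",
    "physical configuration and network topology",
    "archival media",
]


def collection_plan(available_sources):
    """Return the available sources ordered from most to least volatile."""
    rank = {name: index for index, name in enumerate(ORDER_OF_VOLATILITY)}
    unknown = [source for source in available_sources if source not in rank]
    if unknown:
        raise ValueError(f"unrecognized evidence sources: {unknown}")
    return sorted(available_sources, key=rank.__getitem__)


plan = collection_plan(["disk", "archival media", "registers and cache"])
for step, source in enumerate(plan, start=1):
    print(step, source)
```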

Memory snapshots

Memory snapshots allow forensic investigators to search for artifacts that are loaded into volatile memory. It is important to examine volatile memory, as anomalous activity may be found there that never gets written to storage or logs.

Images

For investigators to analyze information systems while looking for forensic artifacts, the original image/disk mustn't be used (it will be stored securely as the control copy). The image must be an identical bit-by-bit copy, including allocated space, unallocated space, and free and slack space.

Evidence preservation

Once the evidence has been obtained, it is important to store it in a secure location and maintain the chain of custody. We must be able to demonstrate that the evidence has not been tampered with. Files or images are hashed to create a verifiable checksum. Logs can be stored on Write Once Read Many (WORM) media to preserve the evidence.
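One way to demonstrate that evidence is unchanged is to record a hash at acquisition time and recompute it whenever the evidence is handled. The following sketch uses Python's standard hashlib module; the file name and log line are invented, and a temporary directory stands in for the evidence store.

```python
import hashlib
import os
import tempfile


def sha256_file(path, chunk_size=65536):
    """Hash a file in chunks so that large images do not fill memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, recorded_digest):
    """Return True if the file still matches the digest recorded earlier."""
    return sha256_file(path) == recorded_digest


with tempfile.TemporaryDirectory() as workdir:
    evidence = os.path.join(workdir, "auth.log")  # hypothetical evidence file
    with open(evidence, "wb") as handle:
        handle.write(b"Jan 01 00:00:01 host sshd[1]: Failed password for root\n")

    recorded = sha256_file(evidence)       # stored with the custody record
    intact = verify(evidence, recorded)    # evidence unchanged

    with open(evidence, "ab") as handle:   # simulate tampering
        handle.write(b"extra line\n")
    still_intact = verify(evidence, recorded)

print("before tampering:", intact, "after tampering:", still_intact)
```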

Cryptanalysis

Cryptography may have been used to hide evidence or to render filesystems unreadable during a ransomware attack. In these cases, cryptanalysis may be deployed to detect the techniques that were used, as well as the likely attacker.

Steganalysis

Steganography is a technology that's used to hide information inside another file, often referred to as the carrier file. Steganalysis is the technology that's used to discover hidden payloads in carrier files. Digital image files, such as JPEGs, are often used to hide text-based data. When text is hidden in a compressed digital image file, it can distort the pixels in the image. These distortions are then identified by the steganalysis tool.

Using forensic analysis tools

Now that we have a good understanding of the types of evidence that can be collected and the analysis that can be performed, we must assemble a forensic tool kit. We must ensure that the tools that we are using are recognized as valid tools and make sure that they are used correctly. For example, to capture a forensic image of a storage device, we need a tool that performs a bit-by-bit copy. We would not use the same tool that allows us to prepare images/templates for distributing an operating system. In this section, we will identify common tools that are used for forensics analysis.

File carving tools

These tools allow a forensics investigator to search a live system or a forensic image for hidden or deleted files, file fragments, and their contents. Two tools suitable for this process are described next.

foremost

foremost allows you to retrieve various file types that have been deleted from the filesystem. It will search for file fragments in many different formats, including documents, image formats, and binary files. It can retrieve deleted files from the live file system or a forensics image.

The following screenshot shows help for the foremost command:

Figure 8.13 – The foremost command

Here is an example of using the foremost command:

foremost -t pdf,png -v -i /dev/sda

In this example, we are searching the fixed drive for the .pdf and .png file types.
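File carving tools typically locate files by scanning raw data for known header and footer byte sequences rather than relying on filesystem metadata. The following sketch illustrates that idea for PNG files only. It is a simplified stand-in and not how foremost itself is implemented; the sample data is synthetic.

```python
PNG_HEADER = b"\x89PNG\r\n\x1a\n"   # 8-byte PNG file signature
PNG_FOOTER = b"IEND\xaeB`\x82"      # IEND chunk type followed by its CRC


def carve_png(raw: bytes):
    """Return (offset, content) pairs for each PNG-like span in raw data."""
    carved = []
    start = raw.find(PNG_HEADER)
    while start != -1:
        end = raw.find(PNG_FOOTER, start)
        if end == -1:
            break  # header without a footer: fragment is incomplete
        end += len(PNG_FOOTER)
        carved.append((start, raw[start:end]))
        start = raw.find(PNG_HEADER, end)
    return carved


# Synthetic "disk" contents: junk, one fake PNG, padding, another fake PNG.
blob = (b"junk" * 10
        + PNG_HEADER + b"fakechunks" + PNG_FOOTER
        + b"\x00" * 20
        + PNG_HEADER + b"x" + PNG_FOOTER)

for offset, content in carve_png(blob):
    print("found candidate at offset", offset, "length", len(content))
```

Real carvers also validate internal structures, because header and footer bytes can occur by chance in unrelated data.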

strings

This is a useful forensics tool for searching through an image file or a memory dump for ASCII or Unicode strings. It is a built-in tool that's included with most Linux/Unix distributions.

The following screenshot shows the options for the strings command:

Figure 8.14 – The strings command

In this example, we are searching within a binary file, where we need to see if there are any embedded copyright strings:

strings python3 | grep copyright

We are using the strings command to extract all the strings within python3 and piping the output to the grep command to display only the strings containing copyright.
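To illustrate what is happening behind this pipeline, the following sketch extracts runs of printable ASCII characters from binary data and then filters them for a keyword. It only approximates the behavior of strings (for example, it ignores Unicode encodings), and the sample bytes are invented.

```python
import re


def extract_strings(data: bytes, min_len: int = 4):
    """Return runs of at least min_len printable ASCII characters."""
    pattern = re.compile(rb"[\x20-\x7e]{" + str(min_len).encode() + rb",}")
    return [match.group().decode("ascii") for match in pattern.finditer(data)]


def grep(lines, keyword):
    """Keep only the lines that contain keyword (case-sensitive)."""
    return [line for line in lines if keyword in line]


# Synthetic binary content with embedded text between non-printable bytes.
sample = b"\x00\x01copyright (c) 2001 Example\x00ab\x00hello world\xff"

found = extract_strings(sample)
print(found)
print(grep(found, "copyright"))
```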

Binary analysis tools

There are many tools in a forensic toolkit that can be used to identify the content and logic that's used within binary files. As these files are compiled, we cannot analyze the source code, so instead, we must use analysis tools to attempt to see links to other code modules and libraries. We can use reverse engineering techniques to break down the logic of binary files. There are many examples of binary analysis tools, some of which we will look at now.

Hex dump

A hex dump allows us to capture and analyze the data that's stored in flash memory. It is commonly used when we need to extract data from a smartphone or other mobile device. It will require a connection between the forensics workstation and the mobile device. A hex dump may also reveal deleted data, such as SMS messages, contacts, and stored photos that have not been overwritten.

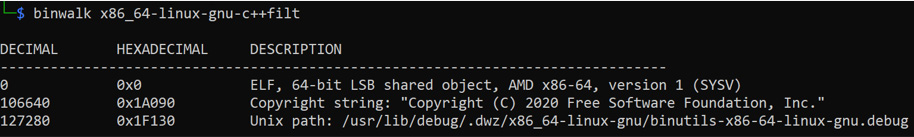

Binwalk

Binwalk is a useful tool in computer forensics as it will search binary images for embedded files and executable code. When running the tool, the results can be placed in a folder for further analysis. It can also be used to compare files for common elements. We may be able to detect signatures from a known malicious file in a newly discovered suspicious file. The output will display the contents and the offsets in decimal and hex of the payloads. The following screenshot shows the output of the binwalk command:

Figure 8.15 – Binwalk

In the preceding output, the file is an Executable and Linkable Format (ELF) system file comprising a text string and a path to an executable file.

Ghidra

Ghidra is a tool developed by the National Security Agency (NSA) for reverse engineering. It includes software analysis tools that are capable of reverse engineering compiled code. It is a powerful tool that can be automated and also supports many common operating system platforms. It is designed to search out malicious processes embedded within binary files.

GNU Project debugger (GDB)

Debugging tools allow developers to find and fix faults when executing compiled code. It can be helpful to capture information about bugs in the code that cause unexpected outcomes or cause code to become unresponsive. Debuggers can also show other running code modules that may affect the stability of your code. GDB runs on many common operating systems and supports common development languages.

OllyDbg

Another popular reverse engineering tool is OllyDbg. It can be useful for developers to troubleshoot their compiled code and can also be used for malware analysis. OllyDbg may also be used by adversaries to steal Intellectual Property (IP) by cracking the software code.

Readelf

Readelf allows you to display the content of ELF files. ELF files are system files that are used in Linux and Unix operating systems. ELF files can contain executable programs and libraries. In the following example, we are reading all the fields contained within the ssh executable program file:

readelf -a ssh
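To give a feel for what such a tool reads, the following sketch decodes a few fields from the start of an ELF header: the magic bytes, the class, the byte order, the object type, and the machine. It covers only a small subset of what readelf reports, the lookup tables are deliberately incomplete, and the sample header is built by hand.

```python
import struct

ELF_TYPES = {1: "REL (relocatable)", 2: "EXEC (executable)",
             3: "DYN (shared object or PIE)", 4: "CORE (core dump)"}
ELF_MACHINES = {0x03: "x86", 0x28: "ARM", 0x3E: "x86-64", 0xB7: "AArch64"}


def parse_elf_header(header: bytes) -> dict:
    """Decode the first 20 bytes of an ELF file into readable fields."""
    if len(header) < 20 or header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    ei_class, ei_data = header[4], header[5]
    endian = "<" if ei_data == 1 else ">"
    # e_type and e_machine are 16-bit fields at offsets 16 and 18.
    e_type, e_machine = struct.unpack_from(endian + "HH", header, 16)
    return {
        "class": {1: "ELF32", 2: "ELF64"}.get(ei_class, "unknown"),
        "data": "little endian" if ei_data == 1 else "big endian",
        "type": ELF_TYPES.get(e_type, f"unknown ({e_type})"),
        "machine": ELF_MACHINES.get(e_machine, f"unknown ({e_machine:#x})"),
    }


# Hand-built header: 64-bit, little endian, shared object, x86-64.
sample = b"\x7fELF" + bytes([2, 1, 1, 0]) + bytes(8) + struct.pack("<HH", 3, 0x3E)
print(parse_elf_header(sample))
```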

Objdump

This is a similar tool to Readelf in that it can display the contents of operating system files on Unix-like operating systems.

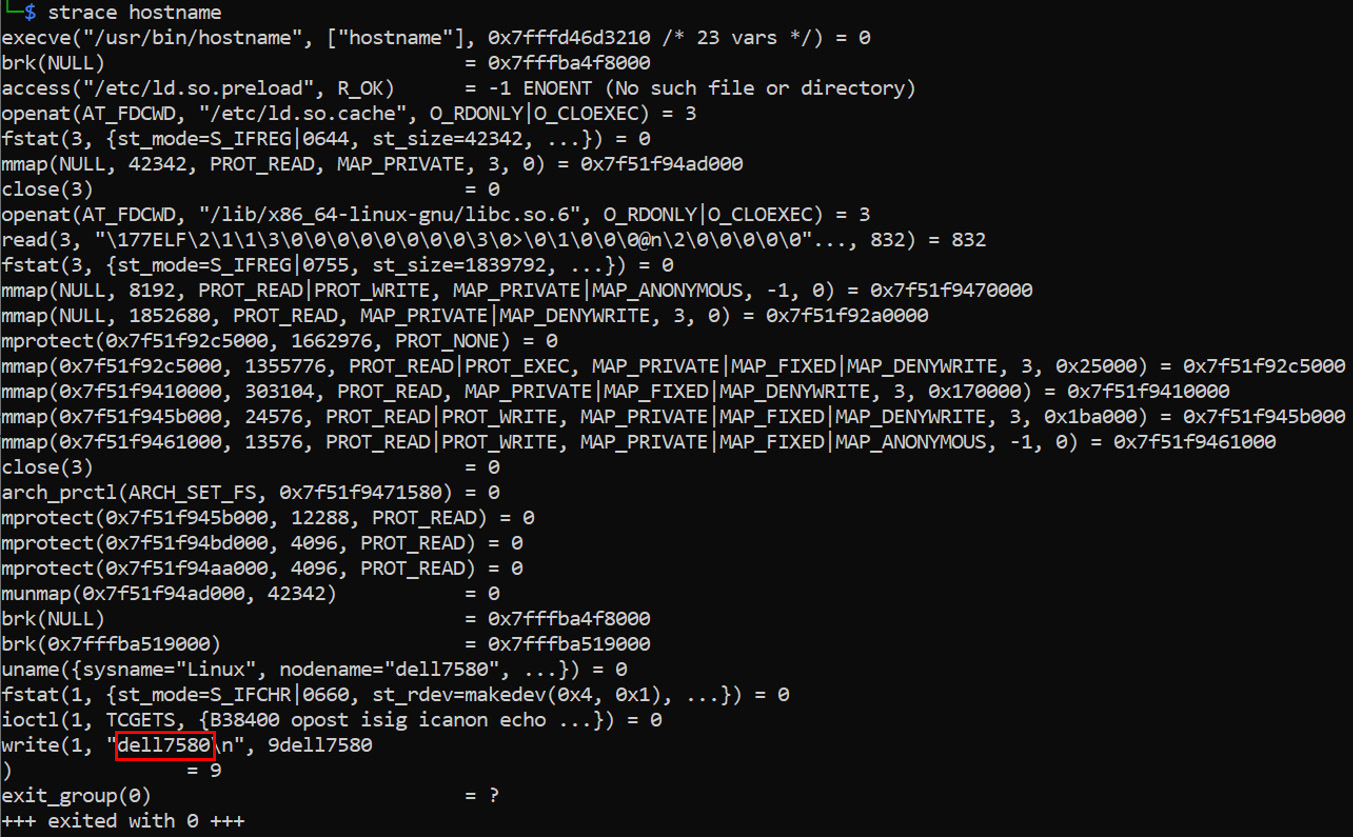

Strace

Strace is a tool for tracing system calls made by a command or binary executable file. The following screenshot shows the Strace output for hostname. The actual output for hostname would be the local system's hostname (dell7580):

Figure 8.16 – The strace command

Here, we can see a very detailed output showing all the system operations that are required to deliver the resultant computer hostname.

ldd

To display dependencies for binary files, we can use the ldd command. This tool is included in most distributions of Linux operating systems and will show any dependent third-party libraries.

The following screenshot shows ldd searching for dependencies in the ssh binary file:

Figure 8.17 – The ldd command

After running the ldd tool for the ssh command, we can see that there are multiple dependencies.

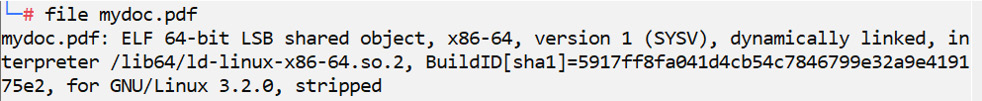

file

The file command is used to determine the file type of a given file. Often, the extension of a file, such as csv, pdf, doc, or exe, will indicate the type. However, many files in Linux do not have extensions. In an attempt to evade detection, attackers may change the extension to make an executable appear to be a harmless document. file will also work on compressed or zipped archives. The following screenshot demonstrates the use of the file command:

Figure 8.18 – The file command

In the preceding example, we can see that a file has been disguised to look like a PDF document but is, in fact, a binary executable.
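The underlying technique is to inspect the leading bytes of a file (its signature) instead of trusting the extension. The sketch below uses a tiny, hand-picked signature table as a stand-in for the much larger database the file command relies on; the filenames and sample bytes are hypothetical.

```python
# A small, incomplete table of leading-byte signatures.
SIGNATURES = [
    (b"%PDF-", "PDF document"),
    (b"\x7fELF", "ELF executable or library"),
    (b"MZ", "Windows PE/DOS executable"),
    (b"\x89PNG\r\n\x1a\n", "PNG image"),
    (b"PK\x03\x04", "ZIP archive"),
]

EXPECTED_BY_EXTENSION = {
    "pdf": "PDF document",
    "png": "PNG image",
    "zip": "ZIP archive",
    "exe": "Windows PE/DOS executable",
}


def identify(data: bytes) -> str:
    """Guess the file type from its leading bytes."""
    for magic, description in SIGNATURES:
        if data.startswith(magic):
            return description
    return "unknown"


def extension_mismatch(filename: str, data: bytes) -> bool:
    """Return True when a known extension disagrees with the content."""
    extension = filename.rsplit(".", 1)[-1].lower() if "." in filename else ""
    expected = EXPECTED_BY_EXTENSION.get(extension)
    return expected is not None and identify(data) != expected


# An executable renamed to look like a PDF document.
disguised = b"\x7fELF\x02\x01\x01" + bytes(9)
print(identify(disguised), extension_mismatch("invoice.pdf", disguised))
```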

Analysis tools

Forensic toolkits comprise tools that can analyze filesystems, metadata, and running operating systems. We need advanced tools to detect the APT activity and IOCs that are hidden within our information systems. Let's look at some examples of analysis tools.

ExifTool

To view or edit a file's metadata, we will need a specific analysis tool. ExifTool supports many popular image and media formats, including JPEG, MPEG, and MP4.

The following command is used to extract metadata from an image that's been downloaded from a website:

exiftool nasa.jpg

The following screenshot shows the output of the preceding command:

Figure 8.19 – ExifTool metadata

This information is very interesting as it displays details of the photographer, their location, and many other details.

Nmap

Nmap can be used during analysis to fingerprint operating systems and services. This will aid security professionals in determining the operating system's build version and the exact versions of the hosted services, such as DNS, SMTP, SQL, and so on.

Aircrack-ng

When we need to assess the security of wireless networks, we can use Aircrack-ng. This tool allows you to monitor wireless traffic, as well as attack (via packet injection) and crack WEP and WPA Pre-Shared Keys (PSKs).

Volatility

The Volatility tool is used during forensic analysis to identify memory-resident artifacts. It supports memory dumps from most major 32-bit and 64-bit operating systems, including Windows, Linux, macOS, and Android. It is extremely useful if the data is in the form of a Windows crash dump, Hibernation file, or VM snapshot.

Sleuth Kit

Sleuth Kit is a collection of command-line tools for analyzing forensic images. It is usually incorporated in graphical forensic toolkits such as Autopsy. The forensic capture will be performed using imaging tools such as dd. Sleuth Kit also supports dynamically linked storage, meaning it can be used with live operating systems as well as with static images. When it is dynamically linked to operating system drives, it can be useful as a real-time tool, such as when responding to incidents.

Imaging tools

When choosing imaging tools for a forensic investigation, one of the primary goals is to select a tool that is accepted when evidence is presented to a court. Not all tools guarantee that the imaging process will leave the original completely intact, so additional tools such as a hardware write blocker are also important. The following tools are commonly used when the evidence will need to be presented to a court of law.

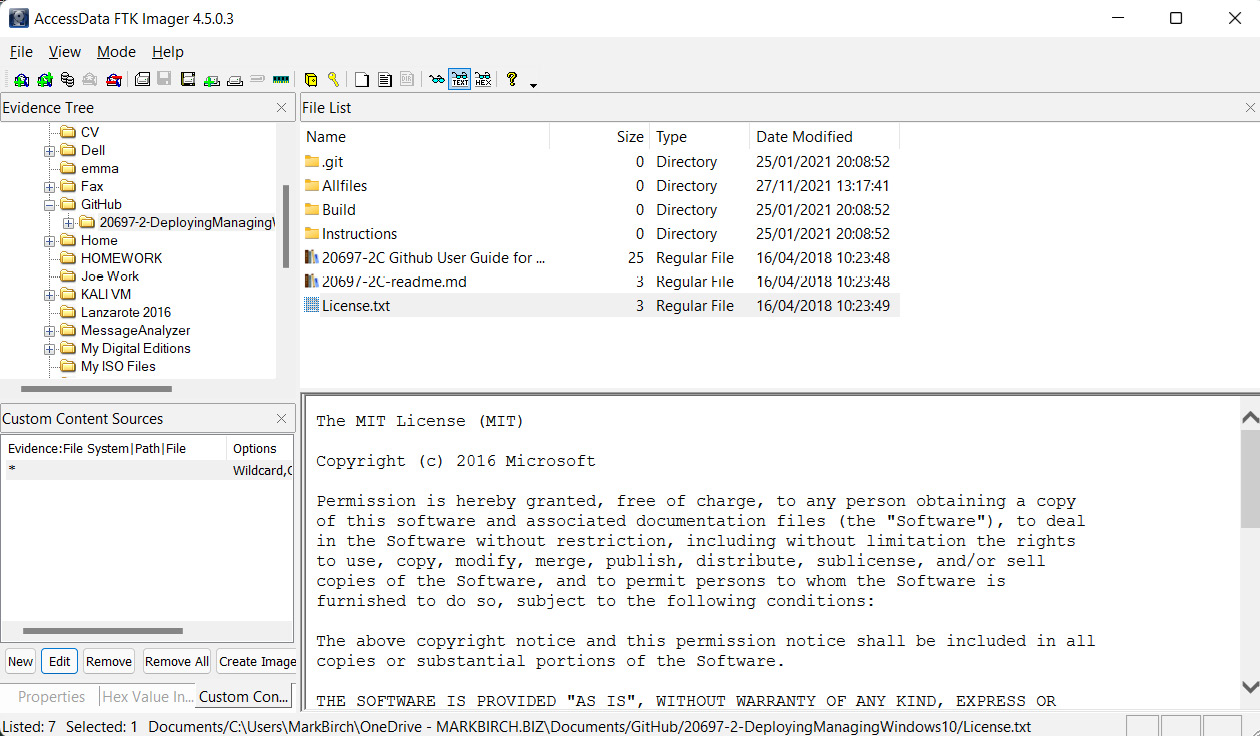

Forensic Toolkit (FTK) Imager

FTK Imager is an easy-to-use graphical tool that runs on Windows operating systems. It can create images in standard formats such as E01, SMART, and dd raw. It lets you hash image files, which is important for integrity, and also logs the complete imaging process. Figure 8.20 shows FTK Imager:

Figure 8.20 – FTK Imager

dd command

The dd command is available on many Linux distributions as a built-in tool. It is known commonly as data duplicator or disk dump. Although on older builds of Linux the tool also allowed for the acquisition of memory dumps, it is not possible to take a complete dump of memory on a modern distribution.

The format of the command is dd if=/dev/sda of=/dev/sdb <options>, where if is the input file and of is the output file. In this case, we are copying from the first physical disk to the second, forensics-attached disk.
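Conceptually, this kind of imaging reads the source block by block and writes each block unchanged to the destination. The following sketch mimics that process on ordinary files and hashes the data as it is read, so that the copy can be verified afterward. It is not a replacement for dd or a validated imaging tool; the temporary files stand in for devices such as /dev/sda and /dev/sdb.

```python
import hashlib
import os
import tempfile


def sha256_of(path, block_size=4096):
    """Compute the SHA-256 digest of a file, reading it block by block."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for block in iter(lambda: handle.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def image_copy(src_path, dst_path, block_size=4096):
    """Copy src to dst block by block; return the digest of the bytes read."""
    digest = hashlib.sha256()
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        for block in iter(lambda: src.read(block_size), b""):
            dst.write(block)
            digest.update(block)
    return digest.hexdigest()


with tempfile.TemporaryDirectory() as workdir:
    source = os.path.join(workdir, "source.bin")  # stand-in for the input device
    image = os.path.join(workdir, "image.dd")     # stand-in for the output
    with open(source, "wb") as handle:
        handle.write(os.urandom(10_000))

    source_digest = image_copy(source, image)
    image_digest = sha256_of(image)
    images_match = source_digest == image_digest

print("image verified:", images_match)
```

Hashing during the read means the source only has to be read once, which matters when the original media is fragile or very large.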

Hashing utilities

When we are working with files and images, it is important to use hashing tools so that we can identify files or images that have been altered from the original. A hash is a checksum for a piece of data. We can use a hash value to record the current state of an image before a cloning process, after which we can hash the cloned image to prove it is the same. During forensic analysis, we can use a database of known hashes for our operating system and application software to spot anomalies. Many hashing utilities are included with operating systems.

sha<digestlength>sum is included with most Linux distributions, allowing the checksum of a file to be calculated using SHA-1 (160-bit, sha1sum) or SHA-2 with digest lengths of 224, 256, 384, and 512 bits. In the following example, a SHA-256 checksum is being calculated for the Linux grep command:

sha256sum grep

605aaf67445e899a9a59c66446fa0bb15fb11f2901ea33386b3325596b3c8423  grep

The resulting checksum (also called a digest) is displayed as a 64-character hexadecimal string.

ssdeep can be used to identify similarities in files; it is referred to as a fuzzy hashing program. It can be used to compare a known malicious file with a suspicious file that may be a zero-day threat. It will highlight blocks of similar code and is used within many antivirus products.

Using live collection and post-mortem tools

During forensic analysis, we must closely follow the guidelines for the order of volatility to capture evidence that will be lost or overwritten. Once we have recorded or logged the data, we can analyze and investigate it using a variety of tools.

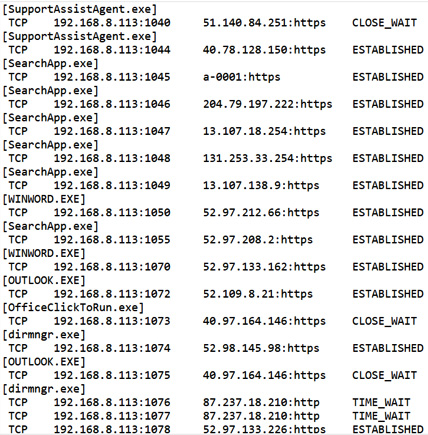

netstat allows a live report to be created on listening ports, connected ports, and the status of connections for local and remote network interfaces. If you need to capture this output for further analysis, it can be redirected to a file using the netstat <options> > filename syntax. The following screenshot shows the output of the netstat command, saved as a text file:

Figure 8.21 – netstat output

Here, we can see all the connected interfaces and ports, as well as information about the application or service that is using the port.

ps can be used to view running processes on Linux and Unix operating systems. Each process is allocated a process ID, which can be used if the process is to be terminated with the kill command.

The following screenshot shows the output of ps -A (shows all processes) when run on Kali Linux:

Figure 8.22 – The ps command

We can redirect the output of Linux or Windows commands into a file using the redirection operator, >.

vmstat can be used to show the amount of available computing resources and currently used resources. The resources are procs (running and blocked processes), memory (used and available), swap (virtual memory on disk), I/O (blocks sent to and received from disk), system (interrupts per second, hardware calls), and CPU (processor activity). The vmstat command with no options will show the average values since the system was booted. The following screenshot shows an example where there is a 5-second update for the display:

Figure 8.23 – The vmstat command

As the system is relatively idle, there are no obvious big changes in the reported values. It is important to understand that updates every few seconds may cause unnecessary processing in the host system.

lsof allows you to list all opened files, the process that was used to open them, and the user account associated with this. The following screenshot shows all the opened files associated with the user mark:

Figure 8.24 – The lsof command

In the preceding example, FD is the file descriptor and TYPE is the file type, such as REG, which means that it is a regular file.

netcat

To execute commands on a remote computer, we can install netcat (nc). netcat can be launched as a listener on a compromised computer to give remote access to attackers. It may also be used in forensics to gather data with a minimal footprint on the system under investigation.

To set up a listening port on the system under investigation, we can type the following command:

nc -l -p 12345

To connect from the forensics workstation, we can use the following command:

nc 10.10.0.3 12345

This will allow us to run commands on the system under investigation and have all the outputs reflected on the forensics workstation.

To transfer a file for investigation, we can use the following commands. On the system under investigation, we will wait for 180 seconds on port 12345 to transfer vreport.htm:

nc -w 180 -p 12345 -l < vreport.htm

To transfer the report file to the forensics workstation, we can use the following command:

nc 10.10.0.51 12345 > vreport.htm

We now have a copy of the report on the forensics workstation (the report is a vulnerability scan that was performed with a different tool).

tcpdump can be used to capture real-time network traffic and also to open captured traffic using common capture formats, such as pcap. tcpdump is included by default on most Linux distributions. It is a command-line alternative to Wireshark.

To capture all the traffic on the eth0 network interface, we can use the following command:

tcpdump -i eth0

The following screenshot shows the traffic that was captured on eth0:

Figure 8.25 – The tcpdump capture

The preceding screenshot shows the command line's tcpdump output, which can also be saved for later analysis.

conntrack is used by security professionals to view the details of the iptables (firewall) state tables. It is a very powerful command-line tool; here, we are interested in the extra detail it provides about the firewall's state table, which allows firewall connections to be tracked closely. To see a list of all the current connections and their state, we can use the following command:

conntrack -L

The output can be seen in the following screenshot:

Figure 8.26 – Conntrack output

The preceding screenshot shows the detailed output from the firewall state table, including all current connections. The fine detail for this tool is beyond the scope for CompTIA CASP+ students, though more details can be found at https://conntrack-tools.netfilter.org/manual.html.

Wireshark

One of the most well-known protocol analyzers is Wireshark. It is available for many operating system platforms and presents the security professional with a Graphical User Interface (GUI). Using Wireshark, we can capture traffic in real time to understand normal traffic patterns and protocols. We can also load up packet capture files for detailed analysis. It also has a command-line version called TShark. The following screenshot shows a packet capture using Wireshark:

Figure 8.27 – Wireshark

In the example, we have filtered the displayed packets to show only DNS activity. Wireshark is a very powerful tool. For further examples and comprehensive documentation, go to https://www.wireshark.org/docs/.

Summary

In this chapter, we have considered many threats that can impact an enterprise and identified policies and procedures to deal with unplanned security-related events. We learned about the importance of timely responses to security incidents and gained knowledge of deploying the appropriate threat detection capabilities. We have studied automation, including orchestration and SOAR, while taking care to include a human in the loop. Ever-increasing evidence of APTs means that we need to rely on forensics to detect IOCs and, where necessary, collect the evidence to formulate a response. You should now be familiar with incident response planning, have a good understanding of forensic concepts, and be familiar with using forensic analysis tools.

Cybersecurity professionals must be able to recognize and use common security tools as these will be important for many day-to-day security activities. Nmap, dd, hashing utilities, netstat, vmstat, Wireshark, and tcpdump are tools that will feature in the CASP+ (CAS-004) certification exam's questions. Specialist binary analysis tools are not as commonly used outside of specialist job roles.

These skills will be useful when we move on to the next chapter, where we will cover securing enterprise mobility and endpoint security.

Questions

Answer the following questions to test your knowledge of this chapter:

- During a security incident, a team member was able to refer to known documentation and databases of attack vectors to aid the response. What is this an example of?

- Event classification

- A false positive

- A false negative

- A true positive

- During a security incident, a team member responded to a SIEM alert and successfully stopped an attempted data exfiltration. What can be said about the SIEM alert?

- It's a false positive.

- It's a false negative.

- It's a true positive.

- It's a true negative.

- During a security incident, a senior team leader coordinated with members already dealing with a breach. They were told to concentrate their efforts on a new threat. What process led to the team leader's actions?

- Preparation

- Analysis

- Triage event

- Pre-escalation tasks

- A CSIRT team needs to be identified, including leadership with a clear reporting and escalation process. At what stage of the incident response process should this be done?

- Preparation

- Detection

- Analysis

- Containment

- During a security incident, a team member responded to a SIEM alert stating that multiple workstations on a network segment have been infected with crypto-malware. What part of the incident response process should be followed?

- Preparation

- Detection

- Analysis

- Containment

- After a security incident, workstations that were previously infected with crypto-malware were placed in quarantine, wiped, and successfully scanned with an updated antivirus. What part of the incident response process should be followed?

- Analysis

- Containment

- Recovery

- Lessons learned

- During a security incident, multiple systems were impacted by a DDoS attack. To mitigate the effect of the attack, a CSIRT team member follows procedures to trigger a BGP route update. This deflects the attack and the systems remain operational. What documentation did the CSIRT team member refer to?

- Communication plan

- Runbooks

- Configuration guides

- Vendor documentation

- Critical infrastructure has been targeted by attackers who demand large payments in bitcoin to reveal the technology and keys needed to access the encrypted data. To avoid paying the ransom, analysts have been tasked to crack the cipher. What technique will they use?

- Ransomware

- Data exfiltration

- Cryptanalysis

- Steganalysis

- During a security incident, multiple systems were impacted by a DDoS attack. A security professional working in the SOC can view the events on a reporting dashboard and call up automated scripts to mitigate the attack. What system was used to respond to the attack?

- Containment

- SOAR

- Communication plan

- Configuration guides

- A technician who is part of the IRT is called to take a forensic copy of a hard drive on the CEO's laptop. He takes notes of the step-by-step process and stores the evidence in a locked cabinet in the CISO's office. What will make this evidence inadmissible?

- Evidence collection

- Lack of chain of custody

- Missing order of volatility

- Missing memory snapshots

- A forensic investigator is called to capture all the possible evidence from a compromised laptop. To save battery life, the system is put into sleep mode. What important forensic process has been overlooked?

- Cloning

- Evidence preservation

- Secure storage

- Backups

- A forensic investigator is called to capture all possible evidence from a compromised computer that has been switched off. They gain access to the hard drive and connect a write blocker, before recording the current hash value of the hard drive image. What important forensic process has been followed?

- Integrity preservation

- Hashing

- Cryptanalysis

- Steganalysis

- Law enforcement needs to retrieve graphics image files that have been deleted or hidden in unallocated space on a hacker's hard drive. What tools should they use when analyzing the captured forensic image?

- File carving tools

- objdump

- strace

- netstat

- FBI forensics experts are investigating a new variant of APT that has replaced Linux operating system files on government computers. What tools should they use to understand the behavior and logic of these files?

- Runbooks

- Binary analysis tools

- Imaging tools

- vmstat

- A forensic investigator suspects stolen data is hidden within JPEG images on a suspect's computer. After capturing a forensic image, what techniques should they use when analyzing the JPEG image files?

- Integrity preservation

- Hashing

- Cryptanalysis

- Steganalysis

- Attackers have managed to install additional services on a company's DMZ network. Security personnel need to identify all the systems in the DMZ and all the services that are currently running. What command-line tool best gathers this information?

- Nmap

- Aircrack-ng

- Volatility

- The Sleuth Kit

- A forensic investigator is called to capture all possible evidence from a compromised computer that has been switched off. They gain access to the hard drive and connect a write blocker. What tool should be used to create a bit-by-bit forensic copy?

- dd

- Hashing utilities

- sha256sum

- ssdeep

- To stop a running process on a Red Hat Linux server, an investigator needs to see all the currently running processes and their current process IDs. What command-line tool will allow the investigator to view this information?

- netstat -a

- ps -A

- tcpdump -i

- sha1sum <filename>

- While analyzing a running Red Hat Linux server, an investigator needs to show the number of available computing resources and currently used resources. The requirements are for the running processor, memory, and swap space on the disk. What tool should be used?

- vmstat

- ldd

- lsof

- tcpdump

- During a live investigation on a Fedora Linux server, a forensic analyst needs to view a listing of all opened files, the process that was used to open them, and the user account associated with the open files. What would be the best command-line tool to use?

- vmstat

- ldd

- lsof

- tcpdump

- While analyzing a running Red Hat Linux server, an investigator needs to run commands on the system under investigation to reflect all the outputs on the forensics workstation. The analyst also needs to transfer a file for investigation using minimum interactions. What command-line tool should be used?

- netcat

- tcpdump

- conntrack

- Wireshark

- Security professionals need to assess the security of wireless networks. A tool needs to be identified that allows wireless traffic to be monitored, and the WEP and WPA security to be attacked (via packet injection) and cracked. What would be the best command-line tool to use here?

- netcat

- tcpdump

- Aircrack-ng

- Wireshark

- A forensic investigator needs to search through a network capture saved as a pcap file. They are looking for evidence of data exfiltration from a suspect host computer. To minimize disruption, they need to identify a command-line tool that will provide this functionality. What should they choose?

- netcat

- tcpdump

- Aircrack-ng

- Wireshark

- A forensic investigator is performing analysis on syslog files. They are looking for evidence of unusual activity based upon reports from User Behavior Analytics (UBA). Several packets show signs of unusual activity. Which of the following requires further investigation?

- nc -w 180 -p 12345 -l < shadow.txt

- tcpdump -i eth0

- conntrack -L

- Exiftool nasa.jpg

- Recent activity has led to an investigation being launched against a recent hire in the research team. Intellectual property has been identified as part of code now being sold by a competitor. UBA has identified a significant amount of JPEG image uploads to a social networking site. The payloads are now being analyzed by forensics. What techniques will allow them to search for evidence in the JPEG files?

- The Steganalysis tool

- The Cryptanalysis tool

- The Binary Analysis tool

- The Memory Analysis tool

Answers

The following are the answers to this chapter's questions:

- A

- C

- C

- A

- D

- C

- B

- C

- B

- B

- B

- A

- A

- B

- D

- A

- A

- B

- A

- C

- A

- C

- B

- A

- A