Chapter 21

Data Center Architecture and Cloud Concepts

If you didn't just skip toward the end of this book, you've trekked through enough material to know that, without a doubt, the task of designing, implementing, and maintaining a state-of-the-art network doesn't happen magically. Ending up with a great network requires some really solid planning before you buy even one device for it. And planning includes thoroughly analyzing your design for potential flaws and optimizing configurations everywhere you can to maximize the network's future throughput and performance. If you blow it in this phase, trust me—you'll pay dearly later in bottom-line costs and countless hours consumed troubleshooting and putting out the fires of faulty design.

Start planning by creating an outline that precisely delimits all goals and business requirements for the network, and refer back to it often to ensure that you don't deliver a network that falls short of your client's present needs or fails to offer the scalability to grow with those needs. Drawing out your design and jotting down all the relevant information really helps in spotting weaknesses and faults. If you have a team, make sure everyone on it gets to examine the design and evaluate it, and keep that network plan active throughout the installation phase. Hang on to this plan even after implementation has been completed because having it is like having the keys to the kingdom; it will enable you to efficiently troubleshoot any issues that could arise after everything is in place and up and running.

High-quality documentation should include a baseline for network performance because you and your client need to know what “normal” looks like in order to detect problems before they develop into disasters. Don't forget to verify that the network conforms to all internal and external regulations and that you've developed and itemized solid management procedures and security policies for future network administrators to refer to and follow.

I'll begin this chapter by going over fundamentals like plans, diagrams, baselines, rules, and regulations and then move on to cover critical hardware and software utilities you should have in your problem resolution arsenal, like packet sniffers, throughput testers, connectivity packages, and even different types of event logs on your servers. And because even the best designs usually need a little boost after they've been up and running for a while, I'll wrap things up by telling you about some cool ways you can tweak things to really jack up a network's performance, optimize its data throughput, and, well, keep it all humming along as efficiently and smoothly as possible.

Data Center Network Architectures

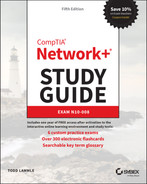

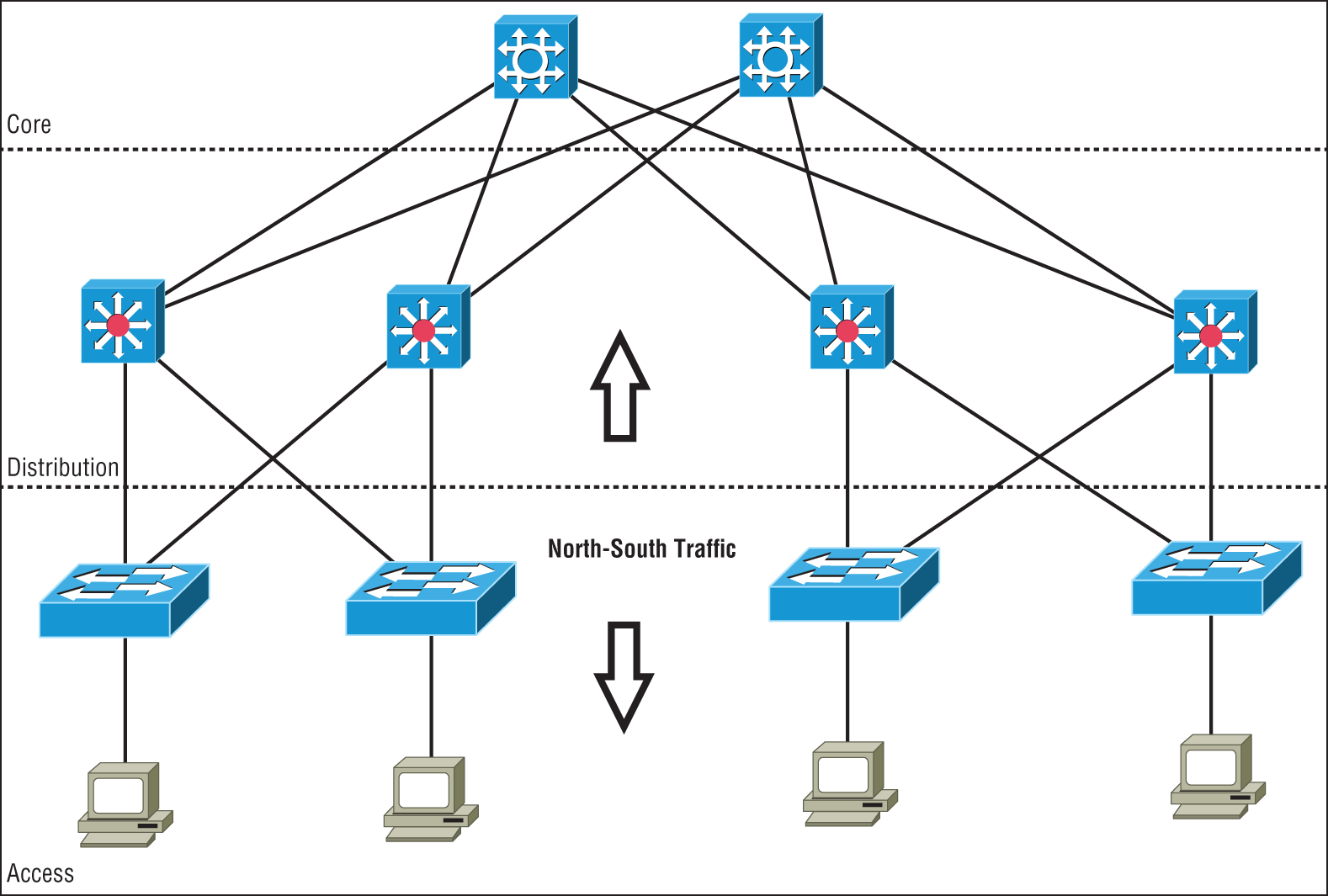

Modern data center networking divides the task up into three sections called tiers or layers, as shown in Figure 21.1. Each layer has a specific function in the data center for various connectivity types. In addition to the traditional data center architectures, I will show you some of the newer designs that are often called the fabric or spine-leaf.

FIGURE 21.1 Data center three-tier architecture

Access/Edge Layer

Starting at the outside of the network and working toward the middle is the access layer, which is also referred to as the edge. This is where all of the devices in the data center attach to the network. This could include servers and Ethernet-based storage devices. The access layer consists of a large number of switches that are often installed at the top or end of each rack to keep cable runs to the servers at a minimum to reduce cable clutter.

Access Ethernet switches are usually fixed port configurations ranging from 12 to 48 ports and are layer two/VLAN based in the most common architecture. The Spanning Tree Protocol (STP) is implemented to prevent network loops from occurring. Access switches will feature high-speed uplink ports from 1G all the way up to 100G to connect to the rest of the network at the distribution layer.

In today's data centers, much of the data flows are between servers, sometimes called East-West traffic. Since the data often stays inside the data center and is server to server, the access switches provide high-speed, low-latency local interconnections between the servers.

Distribution Layer

The middle tier of the three-tier network is called the distribution or aggregation layer. The task of the distribution layer is to provide redundant interconnections for all of the access switches, connect to the core switches, and implement security and access control and layer 3 routing. Distribution switches are chassis-based with modules for different connection types, redundant power, fans, and control logic. Also, the distribution layer provides network redundancy; if one switch should fail, the other can assume the traffic load without incurring any downtime. So, as you would guess, there will always be at least two distribution switches and sometimes more depending on the size of the network. All of the access switches have high-speed uplinks to each of the distribution switches and the distribution switches are all interconnected.

Core Layer

The core layer provides the interconnectivity between all of the network pods in the data center. These are highly redundant and very high-speed interconnection devices. The core switches are usually high-end chassis-based switches with full hardware redundancy as is used in the distribution layer. All of the distribution switches will be connected to redundant core switches to exchange traffic. The core devices can be either routers or switches, depending on the architecture of the data center backbone network, but they do not implement advanced features such as security since the job of the core is to exchange traffic with minimal delays.

Software-Defined Networking

As modern networks grew in complexity and size, it has become increasingly difficult to configure, manage, and control them. There has traditionally been no centralized control plane, which means to make even the simplest of changes often many switches had to be individually accessed and configured.

With the introduction of software-defined networking, a centralized controller is implemented and all of the networking devices are managed as a complete set and not individually. This greatly reduces the amount of configuration tasks required to make changes to the network and allows the network to be monitored as a single entity instead of many different independent switches and routers.

Application Layer

The application layer contains the standard network applications or functions like intrusion detection/prevention appliances, load balancers, proxy servers, and firewalls that either explicitly and programmatically communicate their desired network behavior or provide their network requirements to the SDN controller.

Control Layer

The control layer, or management plane, translates the instructions or requirements received from the application layer devices, proceeds the requests, and configures the SDN-controlled network devices in the infrastructure layer.

The control layer also pushes to the application layer devices information received from the networking devices.

The SDN Controller sits in the control layer and processes configuration, monitoring, and any other application-specific information between the application layer and infrastructure layer.

The northbound interface is the connection between the controller and applications, while the southbound interface is the connection between the controller and the infrastructure layer.

Infrastructure Layer

The infrastructure layer, or forwarding plane, consists of the actual networking hardware devices that control the forwarding and processing for the network. This is where the -/leaf switches sit and are connected to the SDN controller for configuration and operation commands.

The spine and leaf switches handle packet forwarding based on the rules provided by the SDN controller.

The infrastructure layer is also responsible for collecting network health and statistics such as traffic, topology, usage, logging, errors, and analytics and sending this information to the control layer.

Management Plane

SDN network architectures are often broken into three main functions: the management plane, the control plane, and the forwarding plane.

The management plane is the configuration interface to the SDN controllers and is used to configure and manage the network. The protocols commonly used are HTTP/HTTPS for web browser access, Secure Shell (SSH) for command-line programs, and application programming interfaces (APIs) for machine-to-machine communications.

The management plane is responsible for monitoring, configuring, and maintaining the data center switch fabric. It is used to configure the forwarding plane. It is considered to be a subset of the control plane.

The control plane includes the routing and switching functions and protocols used to select the patch used to send the packets or frames as well as a basic configuration of the network.

The data plane refers to all the functions and processes that forward packets/frames from one interface to another; it moves the bits across the fabric.

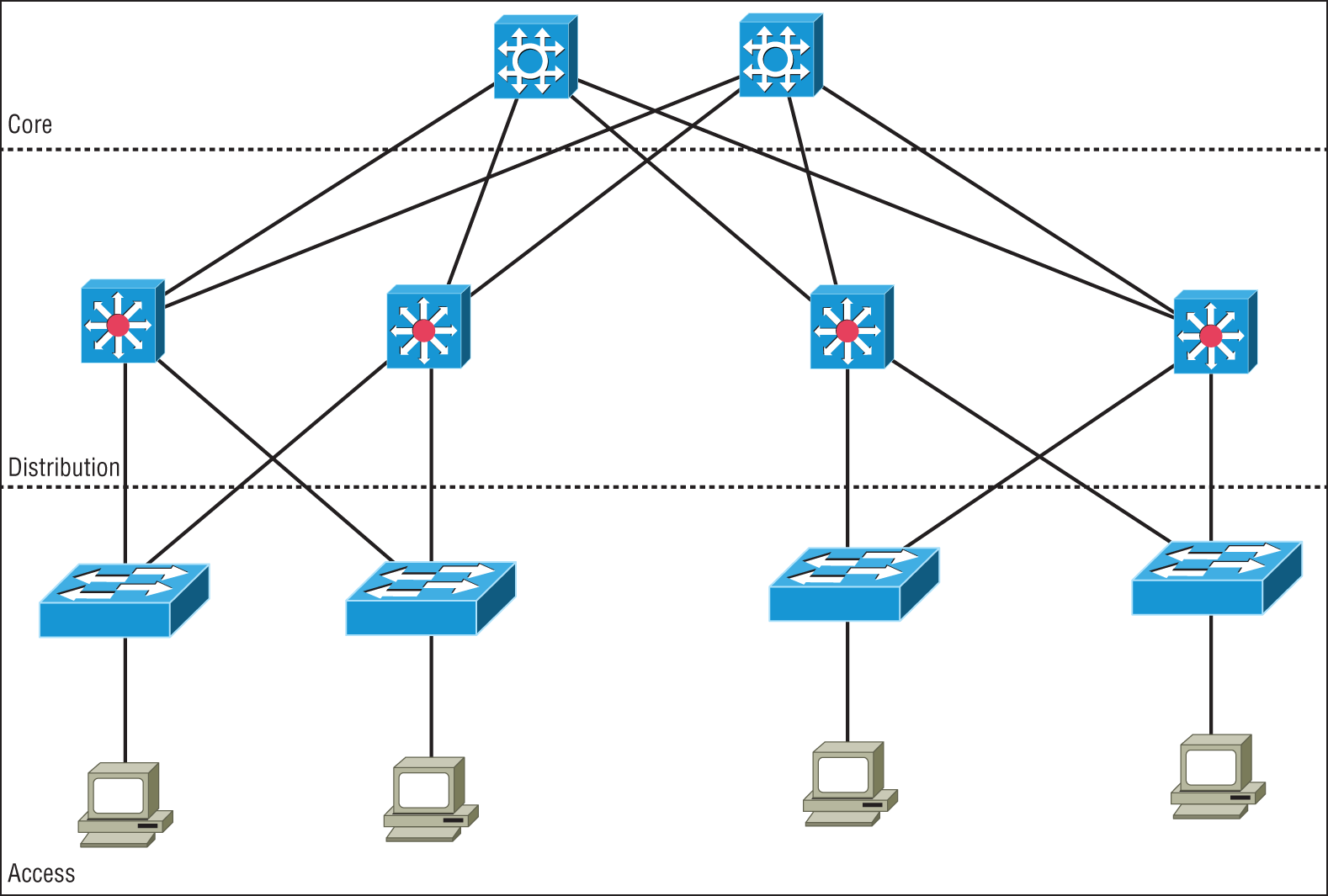

Spine-Leaf–Based Two-Tier Networks

Data center networks are evolving into two-tier fabric-based networks that are also referred to as spine-leaf architecture as is shown in Figure 21.2.

FIGURE 21.2 Spine-leaf fabric architecture

Spine switches have extremely high-throughput, low-latency, and port-dense switches that have direct high-speed (10, 25, 40 to 100 Gbps) connections to each leaf switch.

Leaf switches are very similar to traditional top-of-rack access switches in that they are often 24- or 48-port 1, 10, 25, or 40 Gbps access layer connections but have the increased capability of either 40, 100, or higher uplinks to each spine switch.

The two-tier architecture offers the following advantages over the traditional three-tier designs:

- Resiliency: Each leaf switch connects to every spine switch, the spanning tree is not needed, and due to layer 3 routing protocols being used, now every uplink can be used concurrently.

- Latency: There is a maximum of two hops for any East-West packet flow over very high-speed links, so ultra-low latency is standard.

- Performance: True active-active uplinks enable traffic to flow over the least congested high-speed links available.

- Scalability: You can increase leaf switch quantity to desired port capacity and add spine switches as needed for uplinks.

- Adaptability: Multiple spine-leaf networks across a multitenant or private cloud design are often managed from software-defined networking controllers.

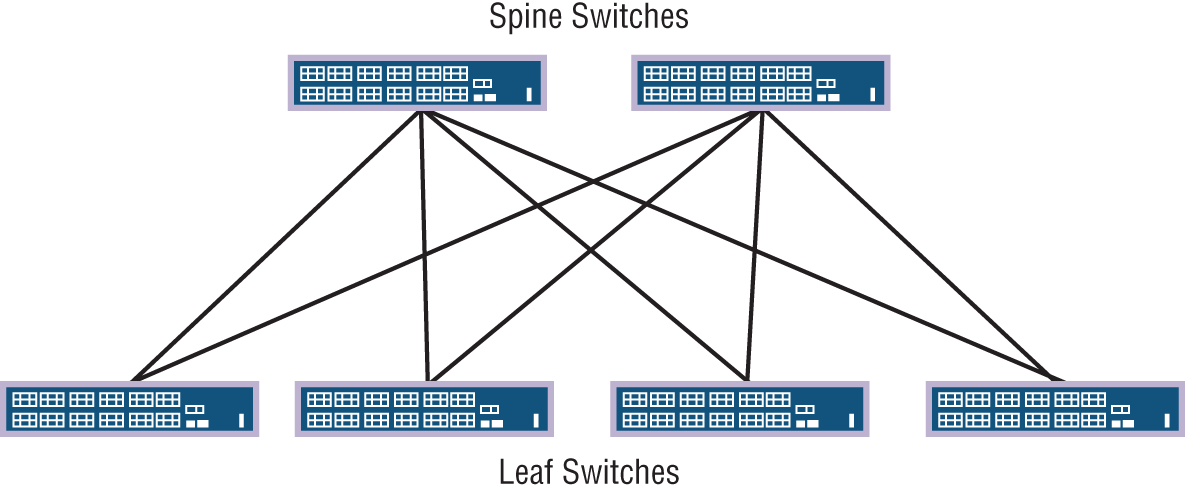

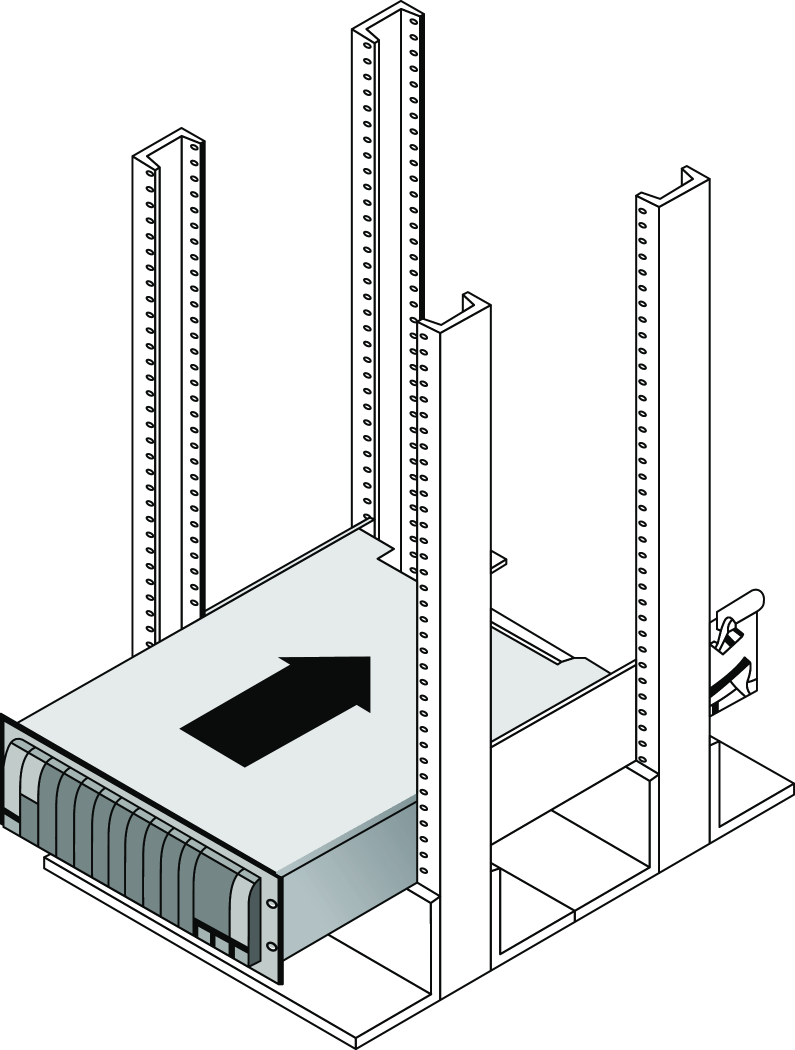

Top-of-Rack Switching

Top-of-rack switching refers to the access switches in the data center network. The objective is to place the switch at the top of each rack and cable the devices in the rack to the local switch as shown in Figure 21.3. The top-of-rack (TOR) switch connects to the distribution or spine switches with high-speed links such as 10G, 25G, 40G, or 100G Ethernet interfaces.

FIGURE 21.3 Top-of-rack switching

The advantage of using a top-of-rack topology is that lower-cost copper or coax cabling is used and the cable density is restricted inside each rack.

If the cable density is low, then an end-of-row approach is used where the switch is placed at the end of a row of data center cabinets and all devises in the row cable to the end-of-row switch.

Backbone

The data center backbone switches or routers are either a spine switch or core switches depending on your topology. Backbone switches have very high-speed interfaces and are used to interconnect the access or leaf switches. The backbone does not have any direct server connections, only connections to other network devices. It is common for backbone switches to have 10G and higher interface speeds and to be highly redundant.

Traffic Flows

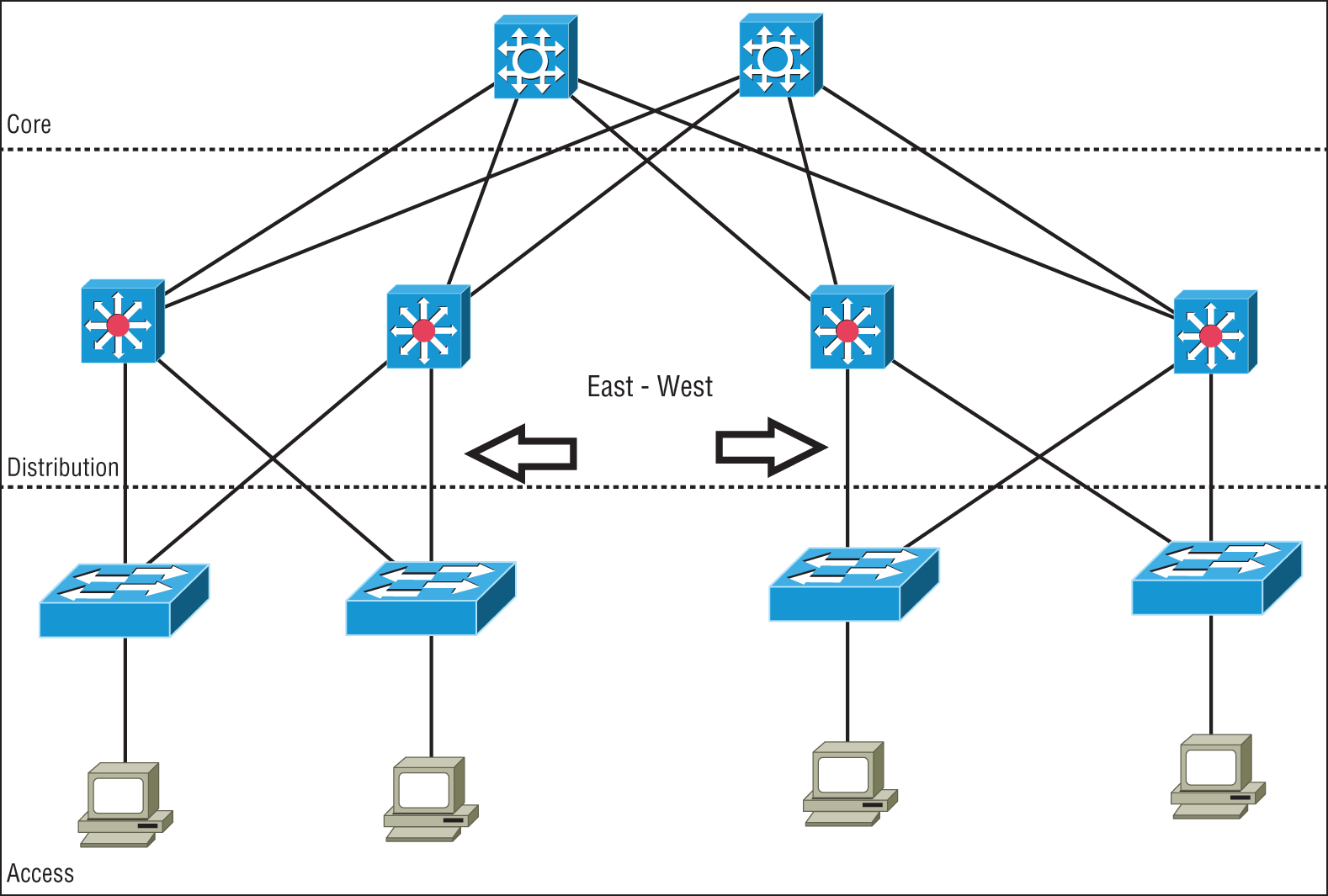

In a data center there are server-to-server communications and also communications to the outside word. These are called North-South and East-West.

Today, there is a substantial amount of traffic between devices in the data center that is often much greater than the flows into and out of the network. It is important to understand your network and make sure it is designed properly so there are no congestion points that could cause slowdowns.

North-South

North-South traffic typically indicates data flow that either enters or leaves the data center from/to a system physically residing outside the data center, such as user to server.

North-South traffic (or data flow) is the traffic into and out of a data center. as shown in Figure 21.4.

FIGURE 21.4 North-South data flow

Southbound traffic is data entering the data center from the outside, such as from the Internet or a private network. Usually the border network consists of a router and firewall to define the border of the data center network with the outside world.

Data leaving the data center is referred to as northbound traffic.

East-West

East-West traffic describes the traffic flow inside a data center and refers to the data sent and received between devices, as shown in Figure 21.5.

FIGURE 21.5 East-West data flow in a data center

With modern decentralized application designs, virtualizations, private clouds, converged and hyper-converged infrastructures, East-West traffic volume is usually greater than the North-South traffic into and out of the data center.

Many applications, containers, servers, and virtualized networking devices exchange data between each other inside the data center. This East-West traffic benefits from high-speed interconnections for low-latency transfers of large amounts of data that the spine-leaf architecture provides.

Branch Office vs. On-premises Data Center vs. Colocation

When deciding where to place a data center, there are many variables that must be taken into account. These factors can often be in conflict with each other when deciding how to deploy your compute and storage resources. Do you place them nearest to the end users? Do you build and manage your own data center? Maybe leasing space would be a better solution?

A branch office can be a large office campus or a remote retail or distribution center. The common factor is that you put the data center nearest the people who access and rely on the services they provide. This can increase uptime because there are no remote links that can go down. It can also add to the fragility unless redundant and backup systems are put in place, which can drive up costs due to the increased number of data centers rather than a larger, centralized data center. Branch office data centers often do not have local technical expertise, and it is generally more difficult to monitor and maintain a large number of small data centers over a more centralized approach. With hyper-converged architectures, it is feasible to place some of your compute and storage resources at the remote locations for backup and local processing while still maintaining a central data center.

The traditional approach has been to maintain one or more private on-premises data centers. This puts everything under your control. A company can place staff and security in the data center and handle all of the operations themselves. It is recommended that a backup data center be deployed that is some distance away in case of an outage at the primary data center due to man-made or natural disasters. With a distance of several hundred miles separating the primary and backup data centers, a hurricane, for example, would not affect the backup if the primary data center fails.

Many companies choose to use the services of co-located (colo) data centers. Specialized co-location data center providers build, manage, and maintain high-end data center facilities and lease space. This allows you to access high-end services such as redundant power, cooling, and telco circuits for less cost than if you were to implement these in an in-house data center.

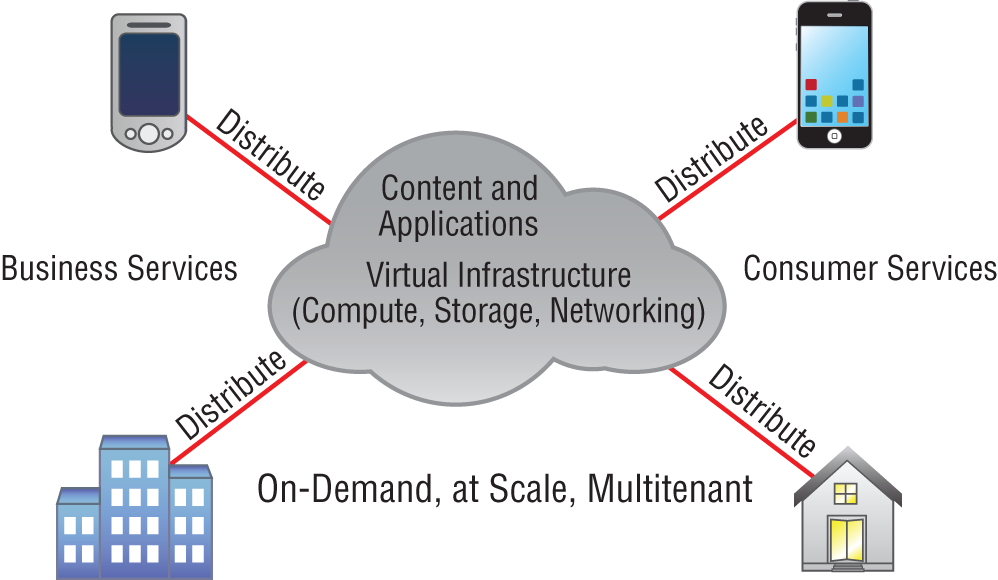

Cloud Computing and Its Effect on the Enterprise Network

Cloud computing is by far one of the hottest topics in today's IT world. Basically, cloud computing can provide virtualized processing, storage, and computing resources to users remotely, making the resources transparently available regardless of the user connection. To put it simply, some people just refer to the cloud as “someone else's hard drive.” This is true, of course, but the cloud is much more than just storage.

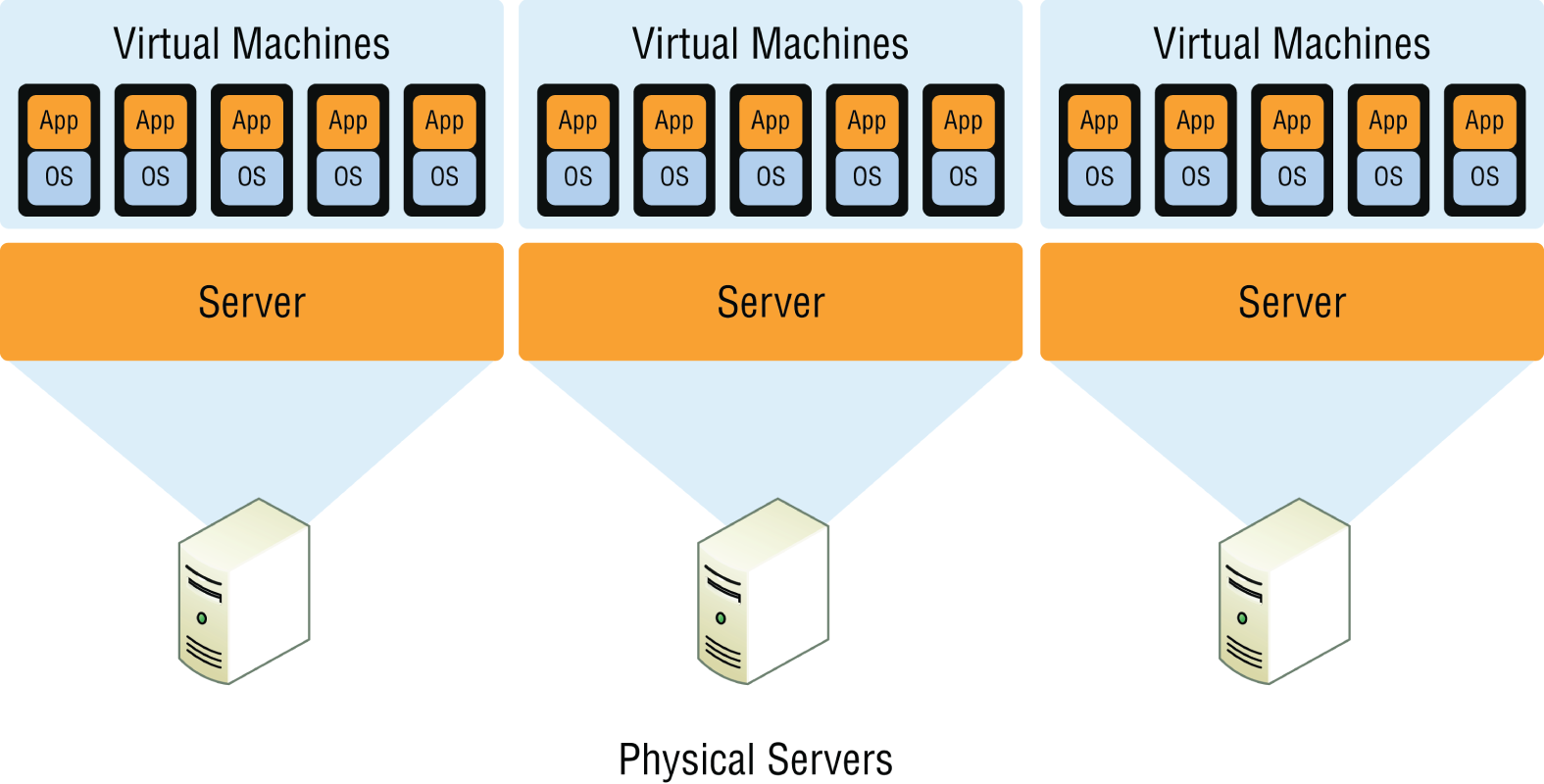

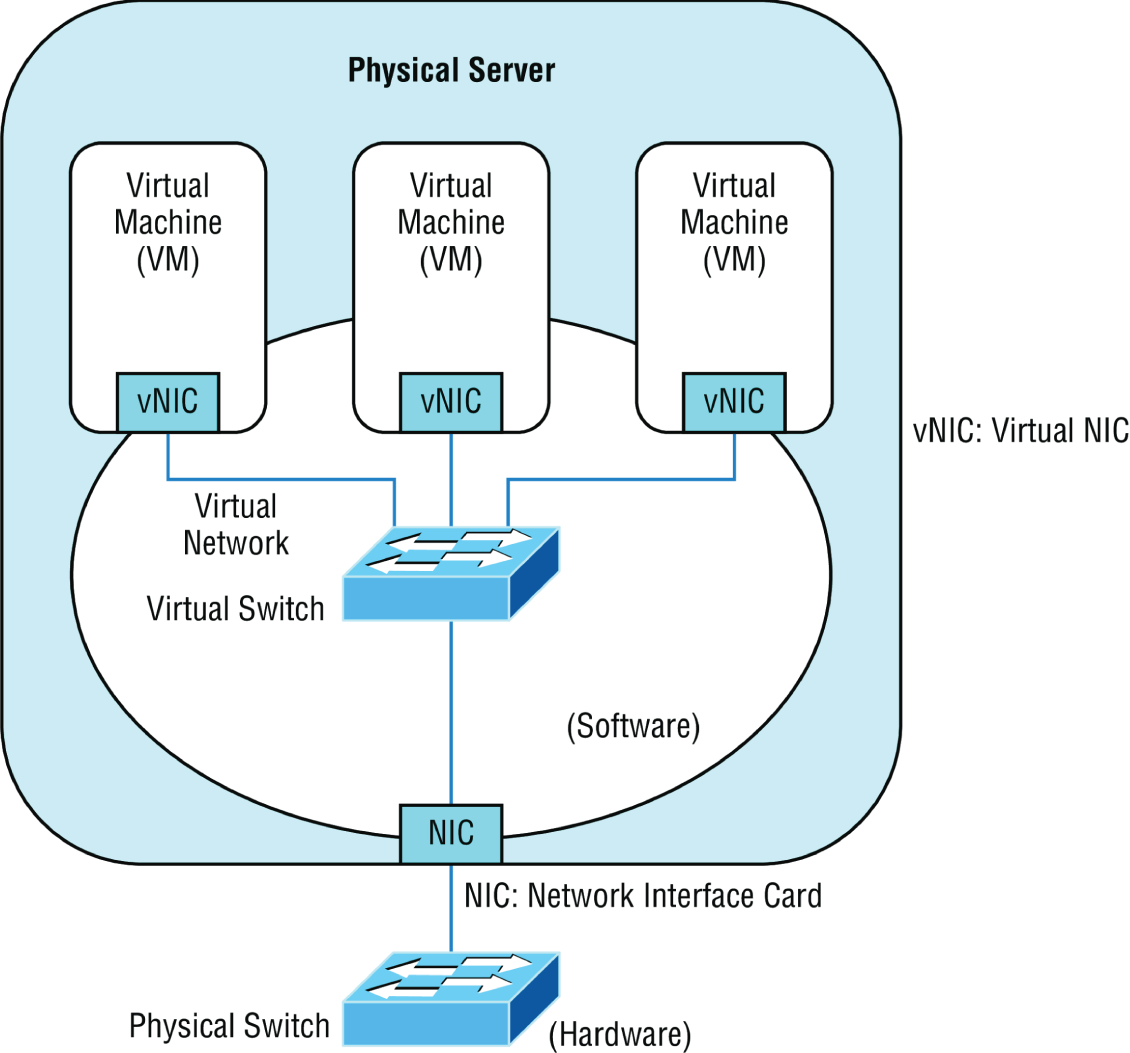

The history of the consolidation and virtualization of our servers tells us that this has become the de facto way of implementing servers because of basic resource efficiency. Two physical servers will use twice the amount of electricity as one server, but through virtualization, one physical server can host two virtual machines, hence the main thrust toward virtualization. With it, network components can simply be shared more efficiently.

Users connecting to a cloud provider's network, whether it be for storage or applications, really don't care about the underlying infrastructure because, as computing becomes a service rather than a product, it's then considered an on-demand resource, as shown in Figure 21.6.

FIGURE 21.6 Cloud computing is on demand.

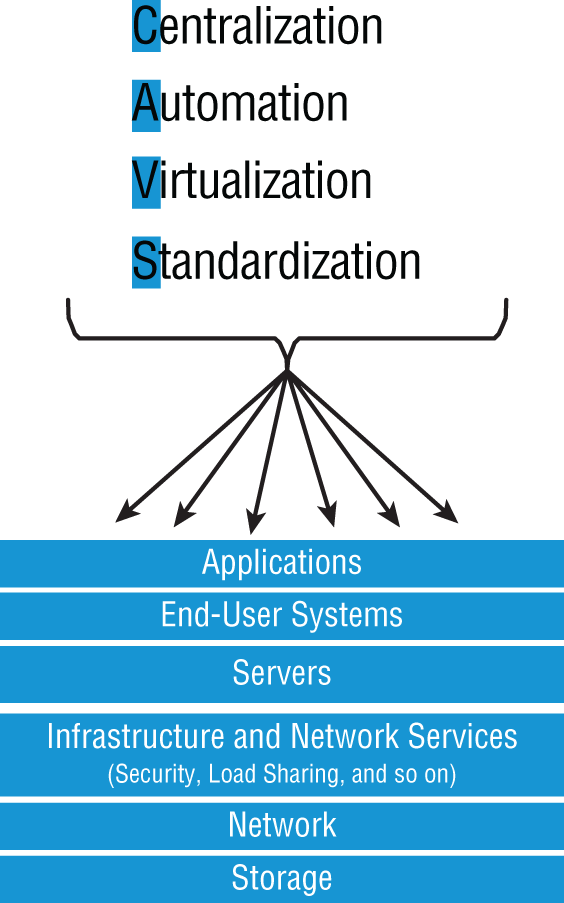

Centralization/consolidation of resources, automation of services, virtualization, and standardization are just a few of the big benefits cloud services offer. Let's take a look at Figure 21.7.

FIGURE 21.7 Advantages of cloud computing

Cloud computing has several advantages over the traditional use of computer resources. The following are the advantages to the provider and to the cloud user.

The advantages to a cloud service builder or provider are:

- Cost reduction, standardization, and automation

- High utilization through virtualized, shared resources

- Easier administration

- Fall-in-place operations model

The advantages to cloud users are:

- On-demand, self-service resource provisioning

- Fast deployment cycles

- Cost effectiveness

- Centralized appearance of resources

- Highly available, horizontally scaled application architectures

- No local backups

Having centralized resources is critical for today's workforce. For example, if you have your documents stored locally on your laptop and your laptop gets stolen, you've pretty much lost everything unless you're doing constant local backups. That is so 2005!

After I lost my laptop and all the files for the book I was writing at the time, I swore (yes, I did that too) to never have my files stored locally again. I started using only Google Drive, OneDrive, and Dropbox for all my files, and they became my best backup friends. If I lose my laptop now, I just need to log in from any computer from anywhere to my service provider's logical drives and presto, I have all my files again. This is clearly a simple example of using cloud computing, specifically SaaS (which is discussed next), and it's wonderful!

So cloud computing provides for the sharing of resources, lower cost operations passed to the cloud consumer, computing scaling, and the ability to dynamically add new servers without going through the procurement and deployment process.

Service Models

Cloud providers can offer you different available resources based on your needs and budget. You can choose just a vitalized network platform or go all in with the network, OS, and application resources.

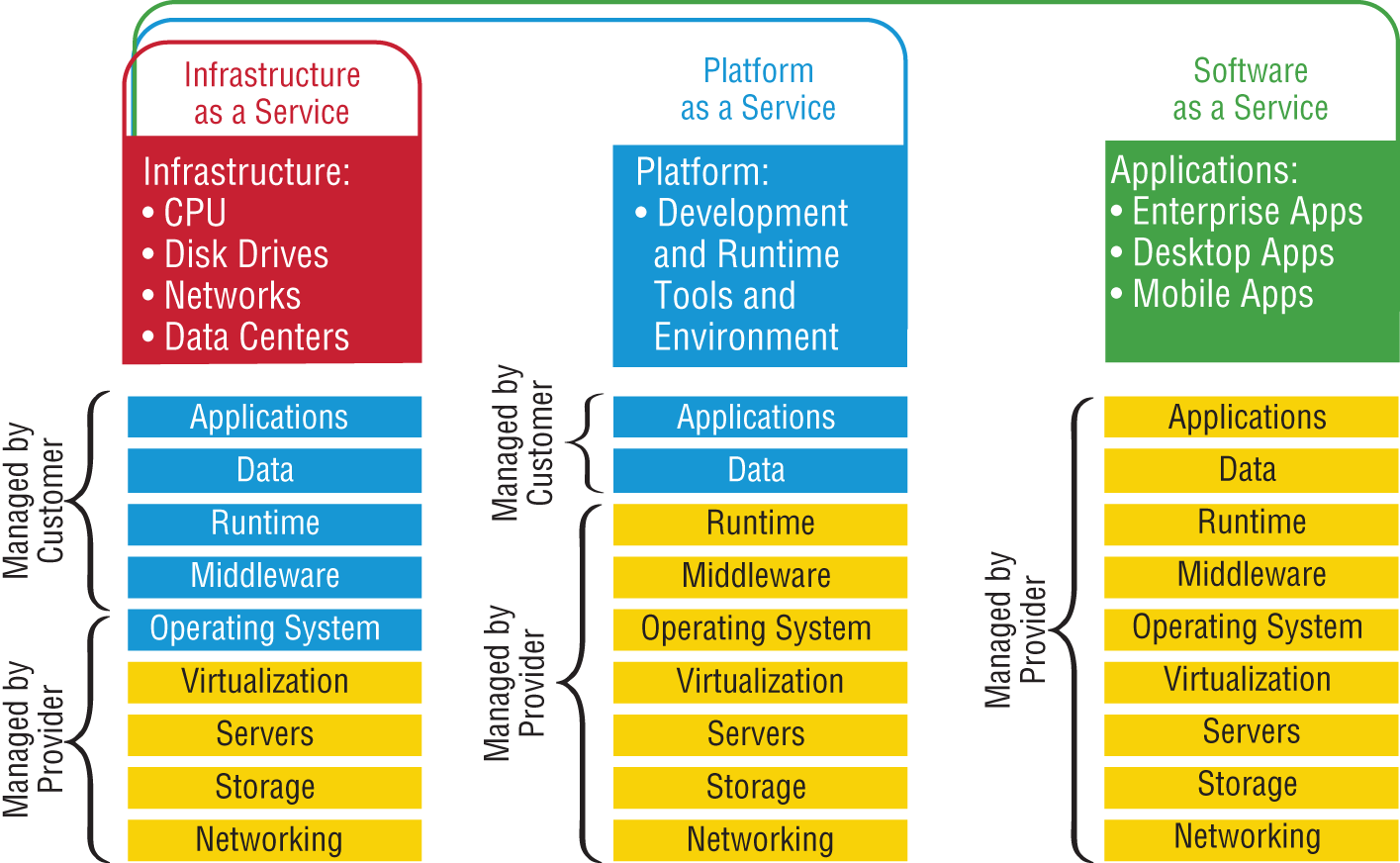

Figure 21.8 shows the three service models available, depending on the type of service you choose to get from a cloud.

You can see that Infrastructure as a Service (IaaS) allows the customer to manage most of the network, whereas Software as a Service (SaaS) doesn't allow any management by the customer, and Platform as a Service (PaaS) is somewhere in the middle of the two. Clearly, choices can be cost-driven, so the most important thing is that the customer pays only for the services or infrastructure they use.

FIGURE 21.8 Cloud computing services

Let's take a look at each service:

- IaaS: Provides only the network. Delivers computer infrastructure—a platform virtualization environment—where the customer has the most control and management capability.

- PaaS: Provides the operating system and the network. Delivers a computing platform and solution stack, allowing customers to develop, run, and manage applications without the complexity of building and maintaining the infrastructure typically associated with developing and launching an application. An example is Windows Azure.

- SaaS: Provides the required software, operating system, and network. SaaS consists of common application software such as databases, web servers, and email software that's hosted by the SaaS vendor. The customer accesses this software over the Internet. Instead of having users install software on their computers or servers, the SaaS vendor owns the software and runs it on computers in its data center. Microsoft Office 365 and many Amazon Web Services (AWS) offerings are perfect examples of SaaS.

- DaaS: Provides the desktop and other resources. DaaS hosts the desktop operating system, such as Windows or Linux, plus the storage, infrastructure, and network resources inside the data center. A data stream of the desktop is accessed from the user's remote device, usually via a web browser or a small application residing on the user's computer, tablet, or phone. This allows all applications, data, and security standards to be hosted inside the data center for centralized management.

So depending on your business requirements and budget, cloud service providers market a very broad offering of cloud computing products, from highly specialized offerings to a large selection of services.

What's nice here is that you're offered a fixed price for each service that you use, which allows you to easily budget wisely for the future. It's true—at first, you'll have to spend a little cash on staff training, but with automation you can do more with less staff because administration will be easier and less complex. All of this works to free up the company resources to work on new business requirements and allows the company to be more agile and innovative in the long run.

Overview of Network Programmability in Enterprise Network

Right now in our current, traditional networks, our router and switch ports are the only devices that are not virtualized. So this is what we're really trying to do here—virtualize our physical ports.

First, understand that our current routers and switches run an operating system, such as Cisco IOS, that provides network functionality. This has worked well for us for 25 years or so, but it is way too cumbersome now to configure, implement, and troubleshoot these autonomous devices in today's large, complicated networks. Before you even get started, you have to understand the business requirements and then push that out to all the devices. This can take weeks or even months since each device is configured, maintained, and monitored separately.

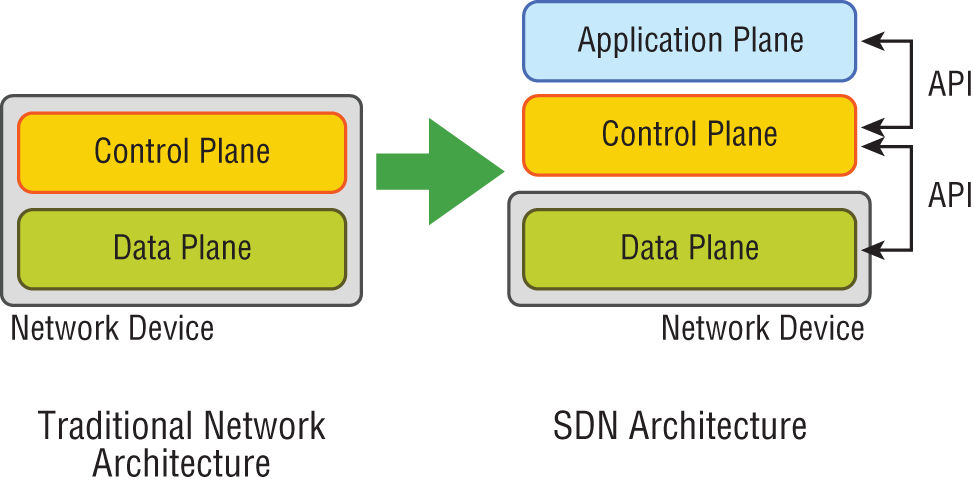

Before we can talk about the new way to network our ports, you need to understand how our current networks forward data, which happens via these two planes:

- Data Plane This plane, also referred to as the forwarding plane, is physically responsible for forwarding frames of packets from its ingress to egress interfaces using protocols managed in the control plane. Here, data is received, the destination interface is looked up, and the forwarding of frames and packets happens, so the data plane relies completely on the control plane to provide solid information.

- Control Plane This plane is responsible for managing and controlling any forwarding table that the data plane uses. For example, routing protocols such as OSPF, EIGRP, RIP, and BGP as well as IPv4 ARP, IPv6 NDP, switch MAC address learning, and STP are all managed by the control plane.

Now that you understand that there are two planes used to forward traffic in our current or legacy network, let's look at the future of networking.

Software-Defined Networking

Traditional networks comprised many discreet devices that were managed and configured individually. Today, SDN controllers are deployed that contain the management plane operations for the complete network.

Now, all of the hardware infrastructure devices are not individually configured by network administrators. All commands and operations are now performed on the SDN controller, which is a computer, or cluster of computers, running specialized applications to monitor and configure the complete network. SDN controllers communicate southbound to the underlying hardware at the infrastructure layer for all control and management operations. There are software portals, or application programming interfaces (APIs), that communicate northbound to other applications that need to monitor and access the data center network fabric. The northbound applications could be any number of devices or applications, such as load balancers, ticketing systems, analytics applications, firewalls, authentication servers, or any application that needs to access the network traffic.

The software-defined network allows for very large-scale deployments to be automated and managed much more efficiently than in the past where each device was a stand-alone system.

SDN controllers also allow the fabric to be partitioned into virtual private clouds that different entities or companies can utilize and still be separated from the other networks running on the same platform.

Application Programming Interfaces (APIs)

If you have worked on any enterprise Wi-Fi installations in the past decade, you would have designed your physical network and then configured a type of network controller that managed all the wireless APs in the network. It's hard to imagine that anyone would install a wireless network today without some type of controller in an enterprise network, where the access points (APs) receive their directions from the controller on how to manage the wireless frames and the APs have no operating system or brains to make many decisions on their own.

The same is now true for our physical router and switch ports, and it's precisely this centralized management of network frames and packets that software-defined networking (SDN) provides to us.

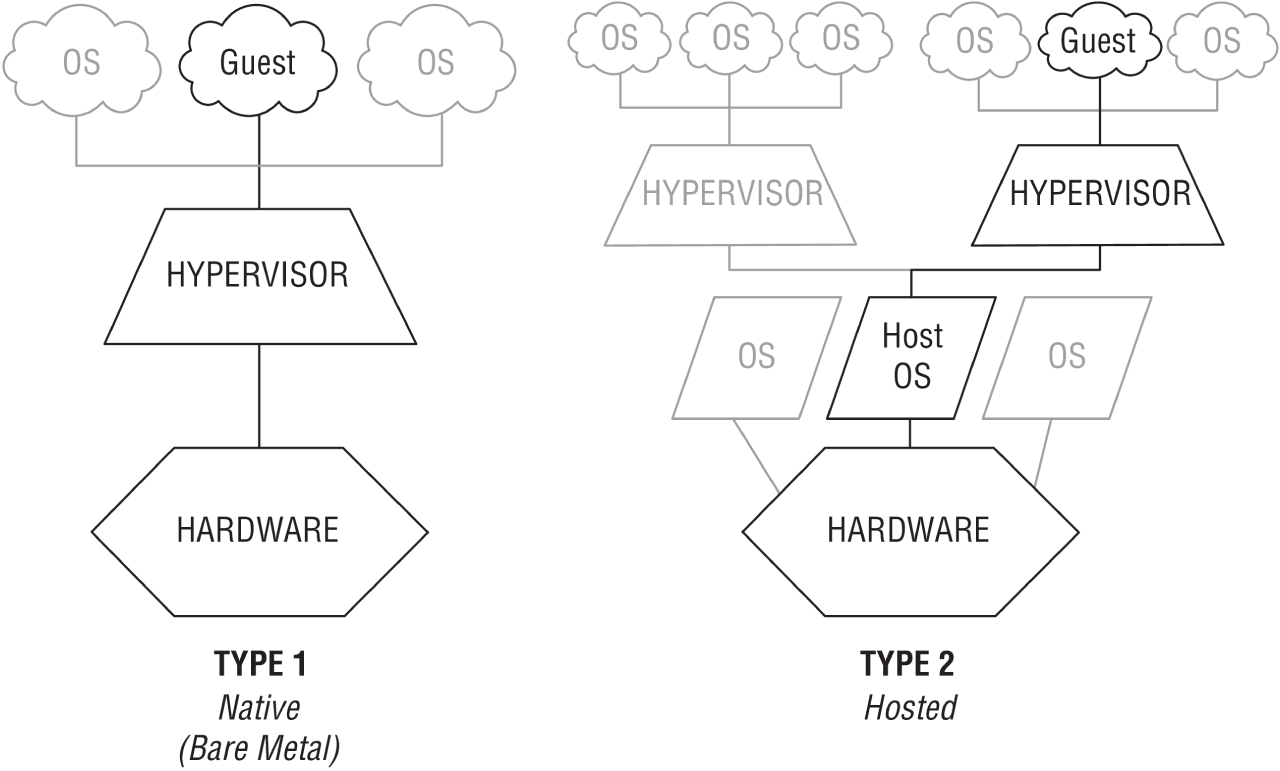

SDN removes the control plane intelligence from the network devices by having a central controller manage the network instead of having a full operating system (Cisco IOS, for example) on the devices. In turn, the controller manages the network by separating the control and data (forwarding) planes, which automates configuration and the remediation of all devices.

So instead of the network devices each having individual control planes, we now have a centralized control plane, which consolidates all network operations in the SDN controller. APIs allow for applications to control and configure the network without human intervention. The APIs are another type of configuration interface just like the CLI, SNMP, or GUI interfaces, which facilitate machine-to-machine operations.

The SDN architecture slightly differs from the architecture of traditional networks by adding a third layer, the application plane, as described here and shown in Figure 21.9:

FIGURE 21.9 The SDN architecture

- Data (or Forwarding) Plane Contains network elements, meaning any physical or virtual device that deals with data traffic.

- Control Plane Usually a software solution, the SDN controllers reside here to provide centralized control of the router and switches that populate the data plane, removing the control plane from individual devices.

- Application Plane This new layer contains the applications that communicate their network requirements toward the controller using APIs.

SDN is pretty cool because your applications tell the network what to do based on business needs instead of you having to do it. Then the controller uses the APIs to pass instructions on to your routers, switches, or other network gear. So instead of taking weeks or months to push out a business requirement, the solution now only takes minutes.

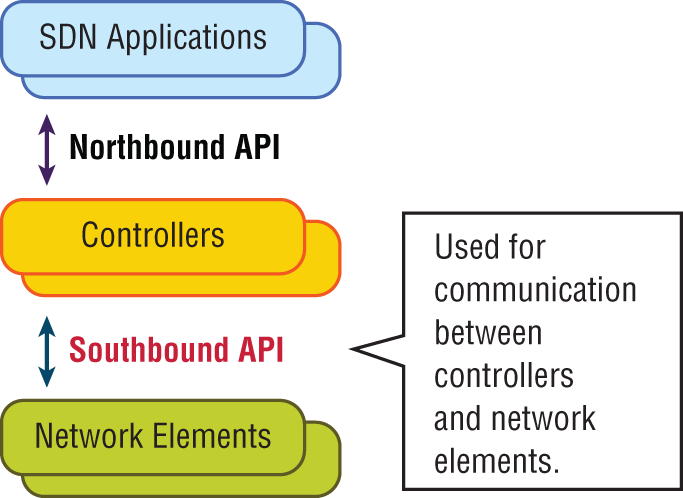

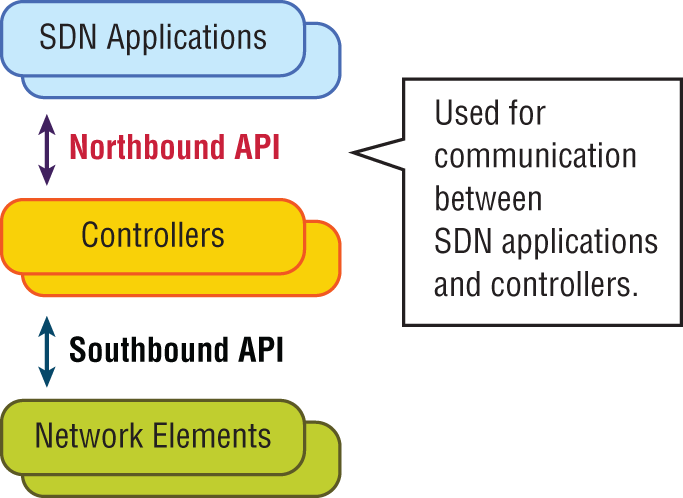

There are two sets of APIs that SDN uses and they are very different. As you already know, the SDN controller uses APIs to communicate with both the application and data plane. Communication with the data plane is defined with southbound interfaces, while services are offered to the application plane using the northbound interface. Let's take a deeper look at this oh-so-vital CCNA objective.

Southbound APIs

Logical southbound interface (SBI) APIs (or device-to-control-plane interfaces) are used for communication between the controllers and network devices. They allow the two devices to communicate so that the controller can program the data plane forwarding tables of your routers and switches. SBIs are shown in Figure 21.10.

FIGURE 21.10 Southbound interfaces

Since all the network drawings had the network gear below the controller, the APIs that talked to the devices became known as southbound, meaning, “out the southbound interface of the controller.” And don't forget that with SDN, the term interface is no longer referring to a physical interface!

Unlike northbound APIs, southbound APIs have many standards, and you absolutely must know them well for the objectives. Let's talk about them now:

- OpenFlow Describes an industry-standard API, which the ONF (

opennetworking.org) defines. It configures white label switches, meaning that they are nonproprietary, and as a result defines the flow path through the network. All the configuration is done through NETCONF. - NETCONF Although not all devices support NETCONF yet, what this provides is a network management protocol standardized by the IETF. Using RPC, you can use XML to install, manipulate, and delete the configurations of network devices.

- onePK A Cisco proprietary SBI that allows you to inspect or modify the network element configuration without hardware upgrades. This makes life easier for developers by providing software development kits for Java, C, and Python.

- OpFlex The name of the southbound API in the Cisco ACI world is OpFlex, an open-standard, distributed control system. Understand that OpenFlow first sends detailed and complex instructions to the control plane of the network elements in order to implement a new application policy—something called an imperative SDN model. On the other hand, OpFlex uses a declarative SDN model because the controller, which Cisco calls the APIC, sends a more abstract, “summary policy” to the network elements. The summary policy makes the controller believe that the network elements will implement the required changes using their own control planes, since the devices will use a partially centralized control plane.

Northbound APIs

To communicate from the SDN controller and the applications running over the network, you'll use northbound interfaces (NBIs), shown in Figure 21.11.

FIGURE 21.11 Northbound interfaces

By setting up a framework that allows the application to demand the network setup with the configuration that it needs, the NBIs allow your applications to manage and control the network. This is priceless for saving time because you no longer need to adjust and tweak your network to get a service or application running correctly.

The NBI applications include a wide variety of automated network services, from network virtualization and dynamic virtual network provisioning to more granular firewall monitoring, user identity management, and access policy control. This allows for cloud orchestration applications that tie together, for server provisioning, storage, and networking that enables a complete rollout of new cloud services in minutes instead of weeks!

Sadly, at the time of this writing, there is no single northbound interface that you can use for communication between the controller and all applications. So instead, you use various and sundry northbound APIs, with each one working only with a specific set of applications.

Most of the time, applications used by NBIs will be on the same system as the APIC controller, so the APIs don't need to send messages over the network since both programs run on the same system. However, if they don't reside on the same system, REST (Representational State Transfer) comes into play; it uses HTTP messages to transfer data over the API for applications that sit on different hosts.

Managing Network Documentation

I'll admit it—creating network documentation is one of my least favorite tasks in network administration. It just isn't as exciting to me as learning about the coolest new technology or tackling and solving a challenging problem. Part of it may be that I think I know my networks well enough—after all, I installed and configured them, so if something comes up, it should be easy to figure it out and fix it, right? And most of the time I can do that, but as networks get bigger and more complex, it gets harder and harder to remember it all. Plus, it's an integral part of the service I provide for my clients to have seriously solid documentation at hand to refer to after I've left the scene and turned their network over to them. So while I'll admit that creating documentation isn't something I get excited about, I know from experience that having it around is critical when problems come up—for myself and for my clients' technicians and administrators, who may not have been part of the installation process and simply aren't familiar with the system.

Using SNMP

In Chapter 6, “Introduction to the Internet Protocol,” I introduced you to Simple Network Management Protocol (SNMP), which is used to gather information from and send settings to devices that are SNMP compatible. Make sure to thoroughly review the differences between versions 1, 2, and 3 that we discussed there! Remember, I told you SNMP gathers data by polling the devices on the network from a management station at fixed or random intervals, requiring them to disclose certain information. This is a big factor that really helps to simplify the process of gathering information about your entire internetwork.

SNMP uses UDP to transfer messages back and forth between the management system and the agents running on the managed devices. Inside the UDP packets (called datagrams) are commands from the management system to the agent. These commands can be used either to get information from the device about its state (SNMP GetRequest) or to make a change in the device's configuration (SetRequest). If a GetRequest command has been sent, the device will respond with an SNMP response. If there's a piece of information that's particularly interesting to an administrator about the device, the administrator can set something called a trap on the device.

So, no whining! Like it or not, we're going to create some solid documentation. But because I'm guessing that you really don't want to redo it, it's a very good idea to keep it safe in at least three forms:

- An electronic copy that you can easily modify after configuration changes

- A hard copy in a binder of some sort, stored in an easily accessible location

- A copy on an external drive to keep in a really safe place (even off site) in case something happens to the other two or the building or part of it burns to the ground

So why the hard copy? Well, what if the computer storing the electronic copy totally crashes and burns at exactly the same time a major crisis develops? Good thing you have that paper documentation on hand for reference! Plus, sometimes you'll be troubleshooting on the run—maybe literally, as in running down the hall to the disaster's origin. Having that binder containing key configuration information on board could save you a lot of time and trouble, and it's also handy for making notes to yourself as you troubleshoot. Also, depending on the size of the intranet and the amount of people staffing the IT department, it might be smart to have several hard copies. Just always make sure they're only checked out by staff who are cleared to have them and that they're all returned to a secure location at the end of each shift. You definitely don't want that information in the wrong hands!

Now that I've hopefully convinced you that you absolutely must have tight documentation, let's take a look at the different types you need on hand so you can learn how to assemble them.

Schematics and Diagrams

Now reading network documentation doesn't exactly compete with racing your friends on jet skis, but it's really not that bad. It's better than eating canned spinach, and sometimes it's actually interesting to check out schematics and diagrams—especially when they describe innovative, elegant designs or when you're hunting down clues needed to solve an intricate problem with an elusive solution. I can't tell you how many times, if something isn't working between point A and point B, a solid diagram of the network that precisely describes what exists between point A and point B has totally saved the day. Another time when these tools come in handy is when you need to extend your network and want a clear picture of how the expanded version will look and work. Will the new addition cause one part of the network to become bogged down while another remains underutilized? You get the idea.

Diagrams can be simple sketches created while brainstorming or troubleshooting on the fly. They can also be highly detailed, refined illustrations created with some of the snappy software packages around today, like Microsoft Visio, SmartDraw, and a host of computer-aided design (CAD) programs. Some of the more complex varieties, especially CAD programs, are super pricey. But whatever tool you use to draw pictures about your networks, they basically fall into these groups:

- Wiring diagrams/schematics

- Physical network diagrams

- Logical network diagrams

- Asset management

- IP address utilization

- Vendor documentation

Wiring Schematics

Wireless is definitely the wave of the future, but for now, even the most extensive wireless networks have a wired backbone they rely on to connect them to the rest of humanity.

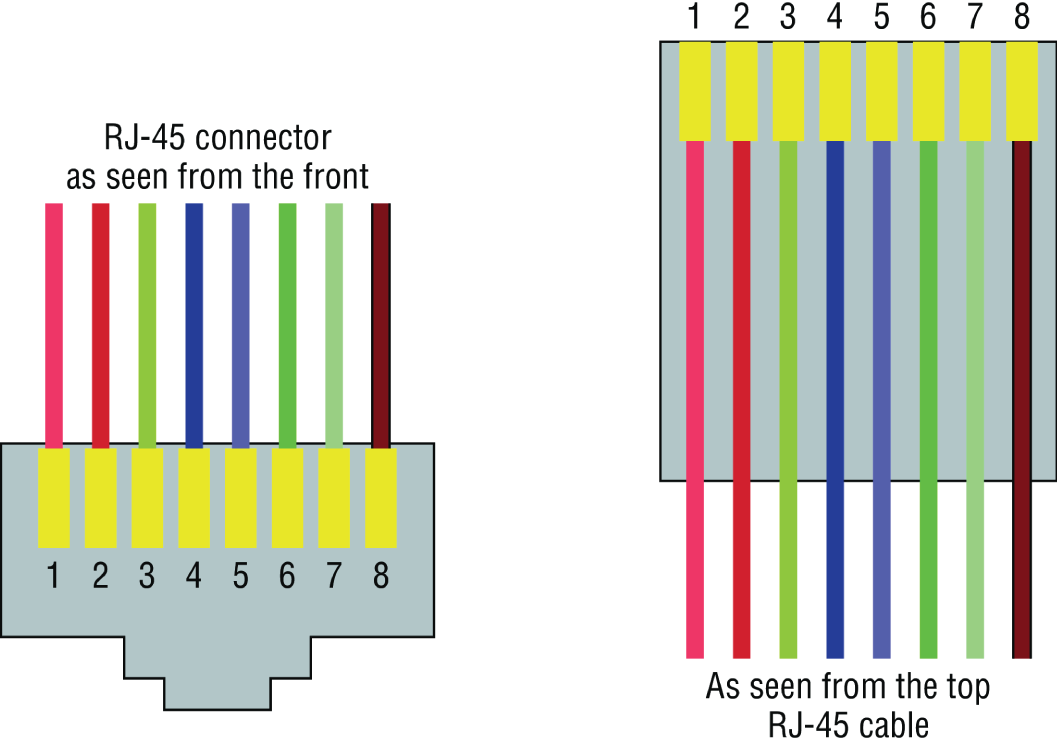

That skeleton is made up of cabled physical media like coax, fiber, and twisted-pair. Surprisingly, it is the latter—specifically, unshielded twisted-pair (UTP)—that screams to be represented in a diagram. You'll see why in a minute. To help you follow me, let's review what we learned in Chapter 3, “Networking Connectors and Wiring Standards.” We'll start by checking out Figure 21.12 (a diagram!) that describes the fact that UTP cables use an RJ-45 connector (RJ stands for registered jack).

FIGURE 21.12 RJ-45 connector

What we see here is that pin 1 is on the left and pin 8 is on the right, so clearly, within your UTP cable, you need to make sure the right wires get to the right pins. No worries if you got your cables premade from the store, but making them yourself not only saves you a bunch of money, it allows you to customize cable lengths, which is really important!

Table 21.1 matches the colors for the wire associated with each pin, based on the Electronic Industries Association and the Telecommunications Industry Alliance (TIA/EIA) 568B wiring standard.

TABLE 21.1 Standard TIA/EIA 568B wiring

| Pin | Color |

|---|---|

| 1 | Orange/White |

| 2 | Orange |

| 3 | Green/White |

| 4 | Blue |

| 5 | Blue/White |

| 6 | Green |

| 7 | Brown/White |

| 8 | Brown |

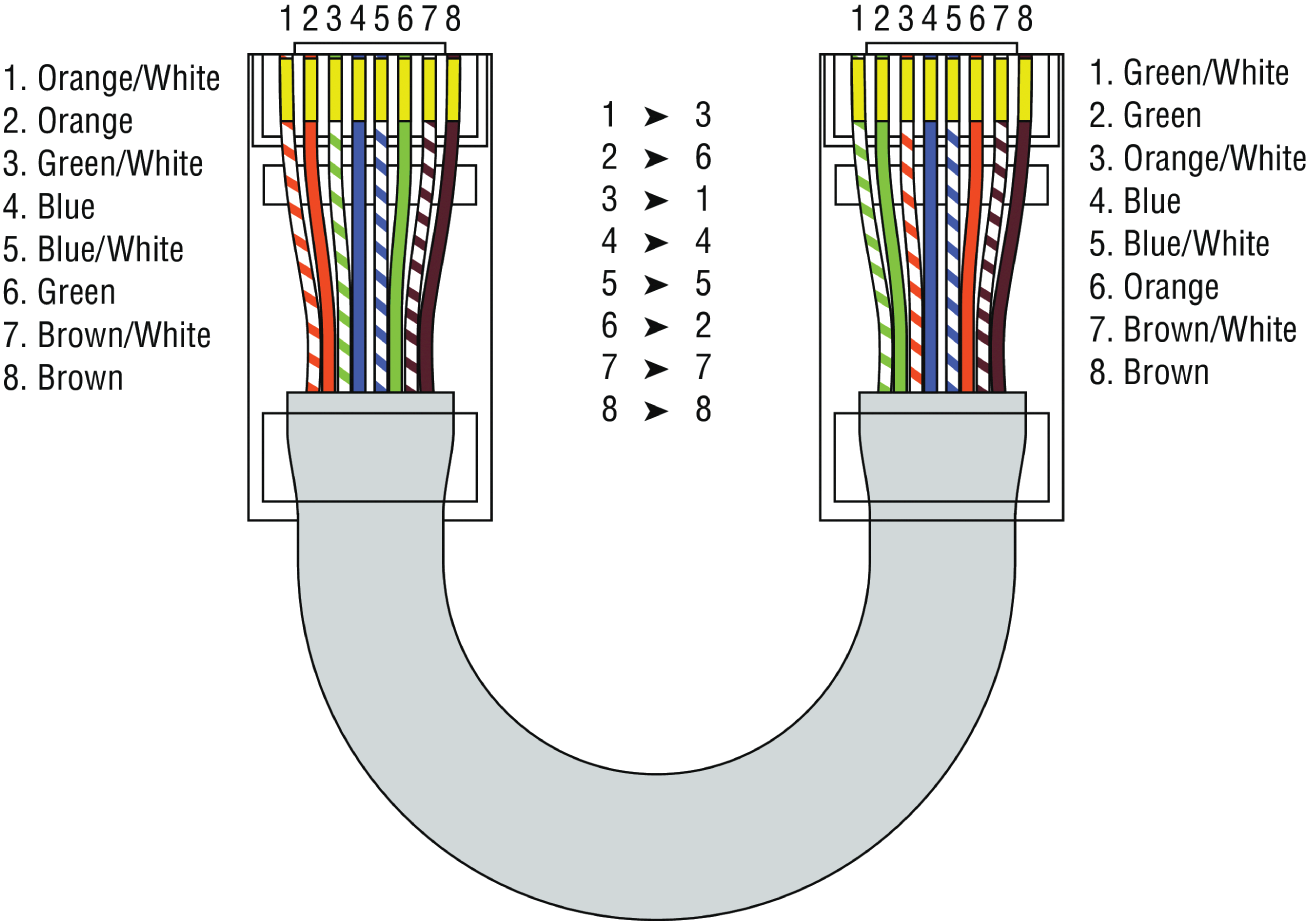

Standard drop cables, or patch cables, have the pins in the same order on both connectors. If you're connecting a computer to another computer directly, you should already know that you need a crossover cable that has one connector with flipped wires. Specifically, pins 1 and 3 and pins 2 and 6 get switched to ensure that the send port from one computer's network interface card (NIC) gets attached to the receive port on the other computer's NIC. Crossover cables were also used to connect older routers, switches, and hubs through their uplink ports. Figure 21.13 shows you what this looks like.

FIGURE 21.13 Two ends of a crossover cable

This is where having a diagram is golden. Let's say you're troubleshooting a network and discover connectivity problems between two hosts. Because you've got the map, you know the cable running between them is brand-new and custom made. This should tell you to go directly to that new cable because it's likely it was poorly made and is therefore causing the snag.

Another reason it's so important to diagram all things wiring is that all wires have to plug into something somewhere, and it's really good to know what and where that is. Whether it's into a hub, a switch, a router, a workstation, or the wall, you positively need to know the who, what, where, when, and how of the way the wiring is attached.

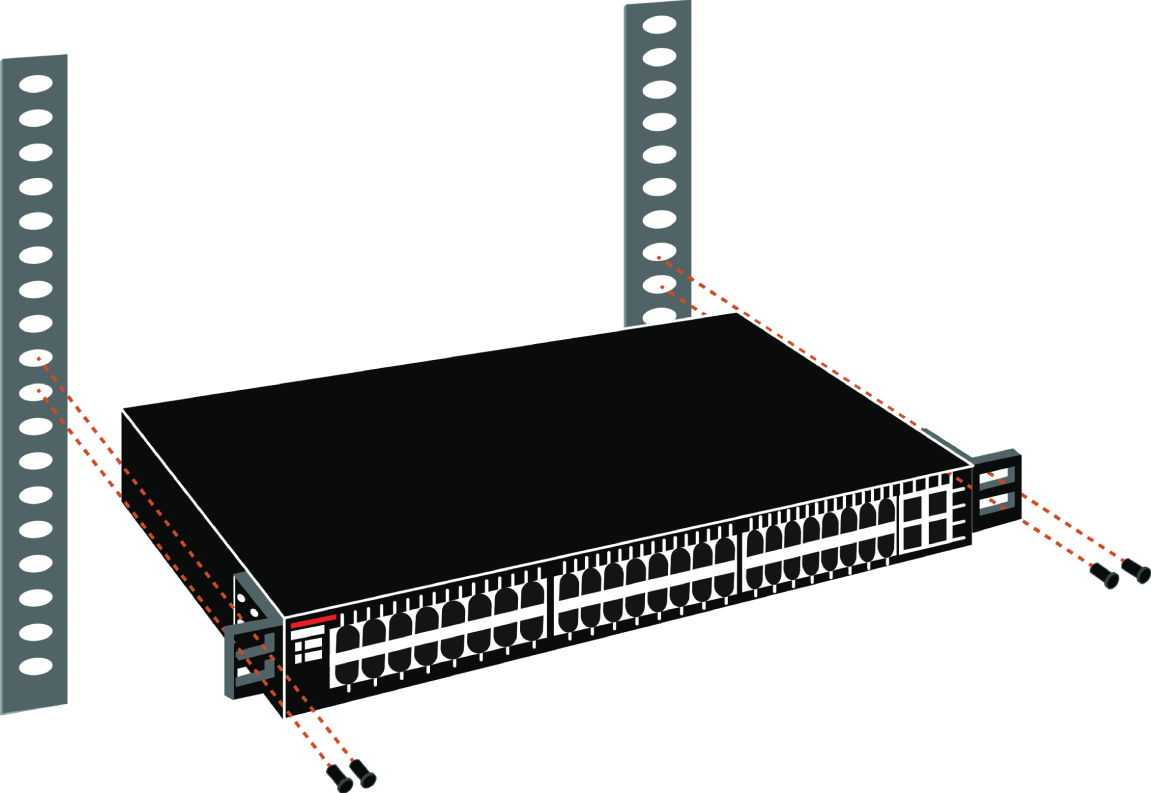

For medium to large networks, devices like switches and routers are rack-mounted and would look something like the switch in Figure 21.14.

FIGURE 21.14 Rack-mounted switches

Knowing someone's or something's name is important because it helps us differentiate between people and things—especially when communicating with each other. If you want to be specific, you can't just say, “You know that router in the rack?” This is why coming up with a good naming system for all the devices living in your racks will be invaluable for ensuring that your wires don't get crossed.

Okay, I know it probably seems like we're edging over into OCD territory, but stay with me here; in addition to labeling, well, everything so far, you should actually label both ends of your cables too. If something happens (earthquake, tsunami, temper tantrum, even repairs) and more than one cable gets unplugged at the same time, it can get really messy scrambling to reconnect them from memory—fast!

Physical Network Diagrams

Physical diagrams were covered in Chapter 14, “Organizational Documents and Policies”; please refer to it for a detailed explanation.

Logical Network Diagrams

Logical diagrams were also covered in Chapter 14; please refer to that chapter for a detailed explanation.

Asset Management

Asset management involves tracking all network assets like computers, routers, switches, and hubs through their entire life cycles. Most organizations find it beneficial to utilize asset identification numbers to facilitate this process. The ISO has established standards regarding asset management. The ISO 19770 family consists of four major parts:

- 19770-1 is a process-related standard that outlines best practices for IT asset management in an organization.

- 19770-2 is a standard for machine encapsulation (in the form of an XML file known as a SWID tag) of inventory data—allowing users to easily identify what software is deployed on a given device.

- 19770-3 is a standard that provides a schema for machine encapsulation of entitlements and rights associated with software licenses. The records (known as ENTs) will describe all entitlements and rights attendant to a piece of software and the method for measurement of license/entitlement consumption.

- 19770-4 allows for standardized reporting of utilization of resources. This is crucial when considering complex data center license types and for the management of cloud-based software and hardware (Software as a Service, or SaaS, and Infrastructure as a Service, or IaaS).

IP Address Utilization

Documenting the current IP addressing scheme can also be highly beneficial, especially when changes are required. Not only is this really helpful to new technicians, it's very useful when identifying IP addressing issues that can lead to future problems. In many cases IP addresses are configured over a long period of time with no real thought or planning on the macro level.

Current and correct documentation can help administrators identify discontiguous networks (where subnets of a major network are separated by another major network) that can cause routing protocol issues. Proper IP address design can also facilitate summarization, which makes routing tables smaller, speeding the routing process. None of these wise design choices can be made without proper IP address documentation.

Vendor Documentation

Vendor agreements often have beneficial clauses that were negotiated during the purchase process. Many also contain critical details concerning SLAs and deadlines for warranties. These documents need to be organized and stored safely for future reference. Creating a spreadsheet or some other form of tracking documentation that alerts you of upcoming dates of interest can be a huge advantage!

Network Monitoring

Identifying performance issues within the network is only one of the reasons to perform structured monitoring. Security issues also require constant monitoring. In the following sections, we'll look into both types of monitoring and cover some of the best practices and guidelines for success.

Baselines

Baselines were covered in Chapter 14; please refer to that chapter for a detailed explanation.

Processes

When monitoring baselines, there are methods that can be used to enhance the process. In this section we'll look at three particularly helpful processes:

- Log Reviewing While regular log review is always recommended anyway, it can have benefits when monitoring baselines. In some cases you may be able to identify a noncompliant device by the entries in its log or in the logs of infrastructure devices.

- Patch Management Issues In some cases, applying patches, especially device driver updates, can be problematic. Issues can include the device no longer working, loss of some key functionality, or generation of odd error messages.

- Rollback While rollback is a general term that applies to reversing any operation about device drivers, it means to remove the newer driver and go back to using the previous driver. This is typically an available option if the last driver you used is the driver to which you want to roll back.

Onboarding and Offboarding of Mobile Devices

Increasingly, users are doing work on their mobile devices that they once performed on laptops and desktop computers. Moreover, they are demanding that they be able to use their personal devices to work on the company network. This presents a huge security issue for the IT department because they have to secure these devices while simultaneously exercising much less control over them.

The security team must have a way to prevent these personal devices from introducing malware and other security issues to the network. Bring your own device (BYOD) initiatives can be successful if implemented correctly. The key is to implement control over these personal devices that leave the safety of your network and return later after potentially being exposed to environments that are out of your control. One of the methods that has been employed successfully to accomplish this goal is network access control (NAC), covered in the next section.

NAC

Today's network access control (NAC) goes beyond simply authenticating users and devices before they are allowed into the network. Today’s mobile workforce presents challenges that require additional services. These services are called Network Access Control in the Cisco world, and Network Access Protection in the Microsoft world, but the goals of these features are the same: to examine all devices requesting network access for malware, missing security updates, and any other security issues any device could potentially introduce to the network.

In some cases NAC goes beyond simply denying access to systems that fail inspection. NAC can even redirect the failed system to a remediation server, which will then apply patches and updates before allowing the device access to the network. These systems can be especially helpful in supporting a BYOD initiative while still maintaining the security of the network.

Policies, Procedures, and Regulations

It's up to us, individually and corporately, to nail down solid guidelines for the necessary policies and procedures for network installation and operation. Some organizations are bound by regulations that also affect how they conduct their business, and that kind of thing clearly needs to be involved in their choices. But let me take a minute to make sure you understand the difference between policies and procedures.

Policies govern how the network is configured and operated as well as how people are expected to behave on it. They're in place to direct things like how users access resources and which employees and groups get various types of network access and/or privileges. Basically, policies give people guidelines as to what they are expected to do. Procedures are precise descriptions of the appropriate steps to follow in a given situation, such as what to do when an employee is terminated or what to do in the event of a natural disaster. They often dictate precisely how to execute policies as well.

One of the most important aspects of any policy or procedure is that it's given high-level management support. This is because neither will be very effective if there aren't any consequences for not following the rules!

Policies

I talked extensively about security policies in Chapter 16, “Common Security Concepts,” so if you're drawing a blank, you can go back there for details. Here's a summary list of factors that most policies cover:

- Security Policies These are policies applied to users to help maintain security in the network:

- Clean-desk policies: These policies are designed to prevent users from leaving sensitive documents on unattended desks.

- Network access (who, what, and how): These policies control which users can access which portions of the network. They should be designed around job responsibilities.

- Acceptable-use policies (AUPs): These policies should be as comprehensive as possible and should outline every action that is allowed in addition to those that are not allowed. They should also specify which devices are allowed, which websites are allowed, and the proper use of company equipment.

- Consent to monitoring: These policies are designed to constantly remind users that their activities are subject to monitoring as they are using company equipment and as such they should have no expectation of privacy.

- Privileged user agreement: Whenever a user is given some right normally possessed by the administrator, they obtain a privileged user account. In this agreement, they agree to use these rights responsibly.

- Password policy: This policy defines the requirements for all passwords, including length, complexity, and age.

- Licensing restrictions: These restrictions define the procedures used to ensure that all software license agreements are not violated.

- International export controls: in accordance with all agreements between countries in which the organization does business, all allowable export destinations and import sources are defined.

- Data loss prevention: This policy defines all procedures for preventing the egress of sensitive data from the network and may include references to the use of data loss prevention (DLP) software.

- Remote access policies: These policies define the requirements for all remote access connections to the enterprise. This may cover VPN, dial-up, and wireless access methods.

- Incident response policies: These policies define a scripted and repeatable process for responding to incidents and responsibilities of various roles in the network in this process.

- Nondisclosure agreement (NDA): All scenarios in which contractors and other third parties must execute a nondisclosure agreement are defined.

- System life cycle: The steps in the asset life cycle are defined, including acquisition, implementation, maintenance, and decommissioning. It specifies certain due diligence activities to be performed in each phase.

- Asset disposal: This is usually a subset of the system life cycle and prescribes methods of ensuring that sensitive data is removed from devices before disposal.

- Change Management These policies ensure a consistent approach to managing changes to network configurations:

- Disposal of network equipment

- Use of recording equipment

- How passwords are managed (length and complexity required, and how often they need to be changed)

- Types of security hardware in place

- How often to do backups and take other fault-tolerance measures

- What to do with user accounts after an employee leaves the company

Procedures

These are the actions to be taken in specific situations:

- Disciplinary action to be taken if a policy is broken

- What to do during an audit

- How issues are reported to management

- What to do when someone has locked themselves out of their account

- How to properly install or remove software on servers

- What to do if files on the servers suddenly appear to be “missing” or altered

- How to respond when a network computer has a virus

- Actions to take if it appears that a hacker has broken into the network

- Actions to take if there is a physical emergency like a fire or flood

So you get the idea, right? For every policy on your network, there should be a credible related procedure that clearly dictates the steps to take in order to fulfill it. And you know that policies and procedures are as unique as the wide array of companies and organizations that create and employ them. But all this doesn't mean you can't borrow good ideas and plans from others and tweak them a bit to meet your requirements.

Standard Business Documents

In the course of supporting mergers and acquisitions, and in providing support to departments within an organization, it's always important to keep the details of agreements in writing to reduce the risk of misunderstanding. In this section, I'll discuss standard documents that are used in these situations. You should be familiar with the purpose of the following documents:

- Statement of Work (SOW) This document spells out all details concerning what work is to be performed, deliverables, and the required timeline for a vendor to perform the specified work.

- Memorandum of Understanding (MOU) This is an agreement between two or more organizations that details a common line of action. It is often used when parties do not have a legal commitment or they cannot create a legally enforceable agreement. In some cases, it is referred to as a letter of intent.

- Master License Agreement (MLA) This is an agreement whereby one party is agreeing to pay another party for the use of a piece of software for a period of time. These agreements, as you would expect, are pretty common in the IT world.

- Service-Level Agreement (SLA) This is an agreement that defines the allowable time in which a party must respond to issues on behalf of the other party. Most service contracts are accompanied by an SLA, which often includes security priorities, responsibilities, guarantees, and warranties.

Regulations

Regulations are rules imposed on your organization by an outside agency, like a certifying board or a government entity, and they're usually totally rigid and immutable. The list of possible regulations that your company could be subjected to is so exhaustively long, there's no way I can include them all in this book. Different regulations exist for different types of organizations, depending on whether they're corporate, nonprofit, scientific, educational, legal, governmental, and so on, and they also vary by where the organization is located.

For instance, US governmental regulations vary by county and state, federal regulations are piled on top of those, and many other countries have multiple regulatory bodies as well. The Sarbanes-Oxley Act of 2002 (SOX) is an example of a regulation system imposed on all publicly traded companies in the United States. Its main goal was to ensure corporate responsibility and sound accounting practices, and although that may not sound like it would have much of an effect on your IT department, it does because a lot of the provisions in this act target the retention and protection of data. Believe me, something as innocent sounding as deleting old emails could get you in trouble—if any of them could've remotely had a material impact on the company's financial disclosures, deleting them could actually be breaking the law. All good to know, so be aware, and be careful!

I'm not going to give you a laundry list of regulations to memorize here, but I will tell you that IT regulations center around something known as the CIA triad:

- Confidentiality: Only authorized users have access to the data.

- Integrity: The data is accurate and complete.

- Availability: Authorized users have access to the data when access is needed.

One of the most commonly applied regulations is the ISO/IEC 27002 standard for information security, previously known as ISO 17799, renamed in 2007 and updated in 2013. It was developed by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC), and it is based on British Standard (BS) 7799-1:1999.

The official title of ISO/IEC 27002 is Information technology - Security techniques - Code of practice for information security controls. Although it's beyond our scope to get into the details of this standard, know that the following items are among the topics it covers:

- Risk assessment

- Security policy

- Organization of information security

- Asset management

- Human-resources security

- Physical and environmental security

- Communications and operations management

- Access control

- Information systems acquisition, development, and maintenance

- Information security incident management

- Business-continuity management

- Compliance

So, what do you take with you from this? Your mission is clear. Know the regulations your company is expected to comply with, and make sure your IT policies and procedures are totally in line with any regulations so it's easy for you to comply with them. No sense getting hauled off to jail because you didn't archive an email, right?

Safety Practices

In the course of doing business, it's the responsibility of the company to protect the safety of its workers, customers, vendors, and business partners. In the following sections, some of the issues that affect safety are considered, along with best practices and guidelines for preventing injuries and damage to equipment.

Electrical Safety

IT personnel spend a great deal of time dealing with electrical devices. Therefore, electrical safety should be stressed in all procedures. In this section, we'll look at key issues involved with electrical safety, including those that are relevant to preventing injuries to people and damage to computer equipment.

- Grounding Grounding is the electrical term for providing a path for an electrical charge to follow to return to earth. To prevent injuring yourself when you are working with equipment, you should ensure that you are grounded. To avoid damaging the equipment with which you are working, it should also be grounded.

You can provide grounding to yourself or the equipment with either a grounding strap or a grounding mat. Either of these should be plugged into the ground of an electrical outlet.

- ESD Electrostatic discharge (ESD) is the technical term for what happens whenever two objects of dissimilar charge come in contact. ESD can be generated easily by walking across a carpeted floor. While the amount of ESD generated doing that may shock you if you touch a doorknob, it's really not enough to harm you. However, even that small amount is enough to seriously damage sensitive parts of computers.

This is exactly why we ground both ourselves and the equipment—to prevent ESD damage. Always use mats and straps to prevent damage when working with computing equipment.

- Static When ESD is created, it's a form of static energy. Extremely dry conditions in the area where computers are utilized make the problem of static electricity worse. This is why the humidity of the area must be controlled; the area must not be too humid, which causes corrosion of electrical connections, and it should not be too dry, which causes static buildup and potential for damage.

Installation Safety

While protecting yourself from electrical injury is very important, it's not the only safety issue you've got to take into consideration. Other types of injuries can also occur, ranging from a simple pulled muscle to a more serious incident requiring a trip to the hospital. The following issues related to installing equipment should also be taken into consideration.

- Lifting Equipment Oftentimes when a piece of equipment is being installed, the time pressures involved and the rush to “get 'er done” can lead to improper lifting. Always keep in mind these safe lifting techniques:

- Be careful to not twist when lifting. Keep the weight at the center of your body.

- Keep objects as close to your body as possible and at waist level.

- Lift with your legs, not your back. When you have to pick up something, bend at the knees, not at the waist. You want to maintain the natural curve of the back and spine when lifting.

- Whenever possible, push instead of pull.

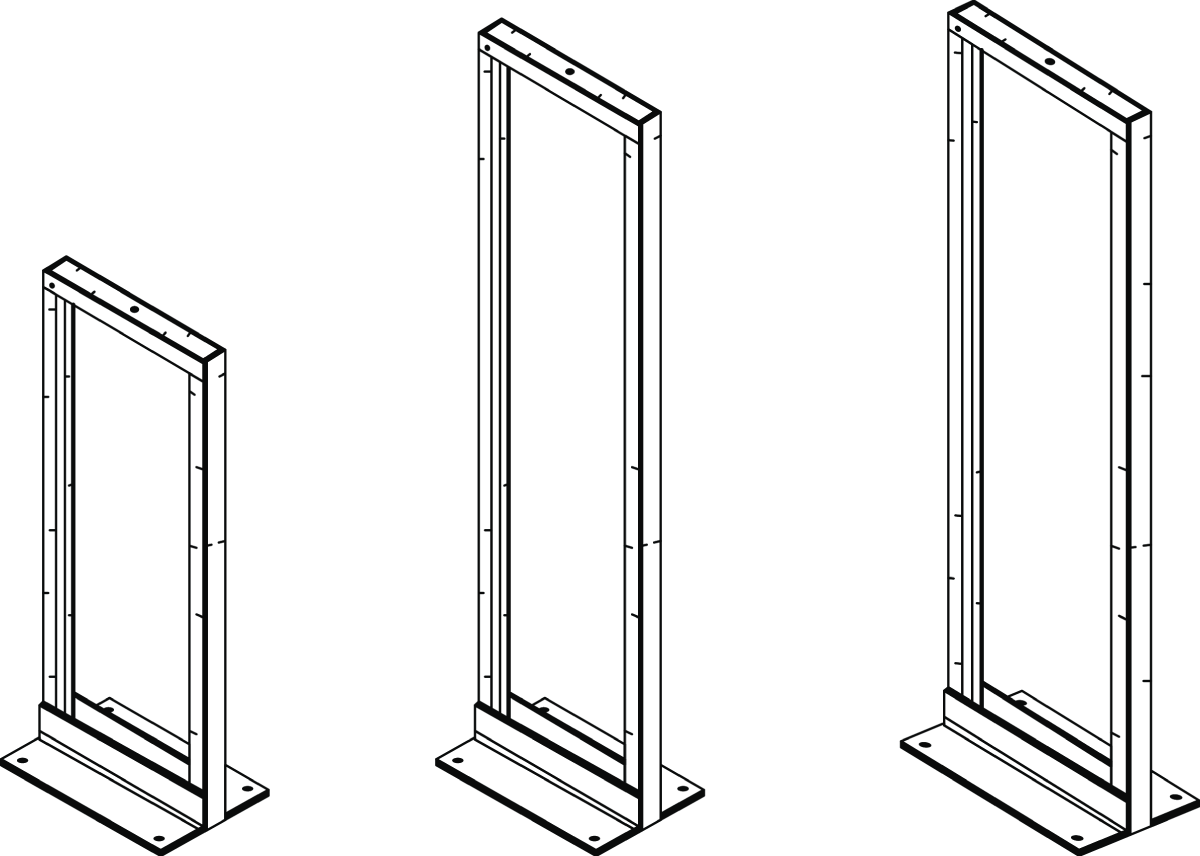

- Rack Installation Even for a small business, it's bad business to operate computing equipment in a poor environment such as on a shelf. There is a reason so many devices come “rack ready.” Racks not only make for a neat and clean server room or closet, but when combined with proper cable management and environmental control, they provide an environment that allows the devices to breathe and stay cool.

When installing racks, always follow the manufacturer's directions and always use the correct tools! Countless screws have been ruined using the wrong tool.

Server racks are measured in terms of rack units, usually written as RU or simply U. One rack unit equals 1.75 inches (44.45 mm) in height, with compliant equipment measured in multiples of U. Network switches are generally 1U to 2U, servers can range from 1U to 4U, and blade servers can be anywhere from 5U to 10U or more.

I'll cover the types of racks you're likely to encounter in more detail later in this chapter.

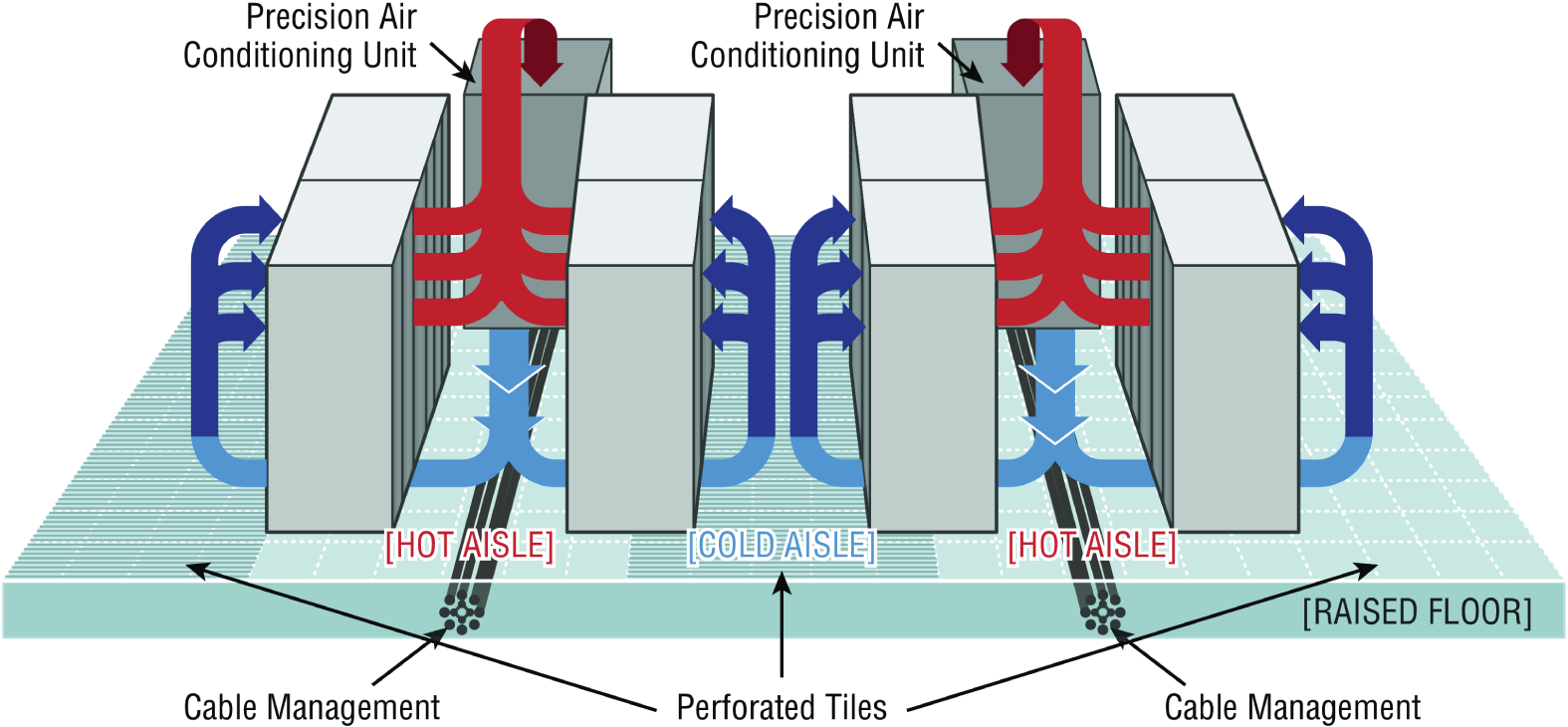

- Placement The most important issue when placing devices is to ensure proper cooling and protection from moisture. It's a good idea to align the racks and install your equipment in hot and cold aisles. The goal of a hot aisle/cold aisle configuration is to conserve energy and lower cooling costs by managing air flow.

Hot aisle/cold aisle design involves lining up racks in alternating rows with cold air intakes facing one way and hot air exhausts facing the other. The rows composed of rack fronts are called cold aisles. Typically, cold aisles face air conditioner output ducts. The rows the heated exhausts pour into are called hot aisles and face air conditioner return ducts. Moreover, all of the racks and the equipment they hold should never be on the floor. There should be a raised floor to provide protection against water.

Figure 21.15 shows a solid arrangement.

FIGURE 21.15 Hot and cold aisles

- Tool Safety It's worth mentioning again that the first step on safely using tools is to make sure you're properly grounded. Besides practicing tool safety for your own welfare, you should do so to protect the equipment. Here are some specific guidelines to follow:

- Avoid using pencils inside a computer. They can become a conductor and cause damage.

- Be sure that the tools you are using have not been magnetized. Magnetic fields can be harmful to data stored on magnetic media.

- When using compressed air to clean inside the computer, blow the air around the components with a minimum distance of 4 inches (10 centimeters) from the nozzle.

- Clean the contacts on components with isopropyl alcohol. Do not use rubbing alcohol.

- Never use a standard vacuum cleaner inside a computer case. The plastic parts of the vacuum cleaner can build up static electricity and discharge to the components. Use only vacuums that are approved for electronic components.

- MSDS In the course of installing, servicing, and repairing equipment, you'll come in contact with many different types of materials. Some are safer than others. You can get all the information you need regarding the safe handling of materials by reviewing the Material Safety Data Sheet (MSDS).

Any type of chemical, equipment, or supply that has the potential to harm the environment or people has to have an MSDS associated with it. These are traditionally created by the manufacturer and describe the boiling point, melting point, flash point, and potential health risks. You can obtain them from the manufacturer or from the Environmental Protection Agency (EPA).

Emergency Procedures

Every organization should be prepared for emergencies of all types. If possible, this planning should start with the design of the facility and its layout. In this section, I'll go over some of the components of a well-planned emergency system along with some guidelines for maintaining safety on a day-to-day basis.

- Building Layout Planning for emergencies can start with the layout of the facility. Here are some key considerations:

- All walls should have a two-hour minimum fire rating.

- Doors must resist forcible entry.

- The location and type of fire suppression systems should be known.

- Flooring in server rooms and wiring closets should be raised to help mitigate flooding damage.

- Separate AC units must be dedicated to the information processing facilities.

- Backup and alternate power sources should exist.

- Fire Escape Plan You should develop a plan that identifies the escape route in the event of a fire. You should create a facility map showing the escape route for each section of the building, keeping in mind that it's better to use multiple exits to move people out quickly. These diagrams should be placed in all areas.

- Safety/Emergency Exits All escape routes on the map should have the following characteristics:

- Clearly marked and well lit

- Wide enough to accommodate the expected number of people

- Clear of obstructions

- Fail Open/Fail Close Door systems that have electronic locks may lose power during a fire. When they do, they may lock automatically (fail close) or unlock automatically (fail open). While a fail close setting may enhance security during an electrical outage, you should consider the effect it will have during an evacuation and take steps to ensure that everyone can get out of the building when the time comes.

- Emergency Alert System All facilities should be equipped with a system to alert all employees when a fire or any other type of emergency occurs. It might be advisable to connect the facility to the Emergency Alert System (EAS), which is a national warning system in the United States. One of the functions of this system is to alert the public of local weather emergencies such as tornadoes and flash floods. EAS messages are transmitted via AM and FM radio, broadcast television, cable television, and the Land Mobile Radio Service as well as VHF, UHF, and FiOS (wireline video providers).

- Fire-Suppression Systems While fire extinguishers are important and should be placed throughout a facility, when large numbers of computing devices are present, it is worth the money to protect them with a fire-suppression system. The following types of systems exist:

- Wet pipe systems use water contained in pipes to extinguish the fire.

- Dry pipe systems hold the water in a holding tank instead of in the pipes.

- Preaction systems operate like a dry pipe system except that the sprinkler head holds a thermal-fusible link that must melt before the water is released.

- Deluge systems allow large amounts of water to be released into the room, which obviously makes this not a good choice where computing equipment will be located.

- Today, most companies use a fire suppressant like halon, which is known as a “Clean Agent, an electrically non-conducting, volatile, or gaseous fire extinguisher that does not leave a residue upon evaporation.” Leaving no residue means not rendering inoperative expensive networking equipment as water can do if released in a data center. It's remarkably safe for human exposure, meaning that it won't poison living things, and it will allow you to leave the area safely, returning only after the fire department gives the all-clear.

HVAC

The heating and air-conditioning systems must support the massive amounts of computing equipment deployed by most enterprises. Computing equipment and infrastructure devices like routers and switches do not like the following conditions:

- Heat. Excessive heat causes reboots and crashes.

- High humidity. It causes corrosion problems with connections.

- Low humidity. Dry conditions encourage static electricity, which can damage equipment.

Here are some important facts to know about temperature:

- At 100 degrees, damage starts occurring to magnetic media.

- At 175 degrees, damage starts occurring to computers and peripherals.

- At 350 degrees, damage starts occurring to paper products.

Implementing Network Segmentation

Maintaining security in the network can be made easier by segmenting the network and controlling access from one segment to another. Segmentation can be done at several layers of the OSI model. The most extreme segmentation would be at layer 1 if the networks are actually physically separated from one another. In other cases, it may be sufficient to segment a network at layer 2 or layer 3. Coming up next, we'll look at some systems that require segmentation from other networks at one layer or another.

SCADA Systems/Industrial Control Systems

Industrial control system (ICS) is a general term that encompasses several types of control systems used in industrial production. The most widespread is supervisory control and data acquisition (SCADA). SCADA is a system operating with coded signals over communication channels to provide control of remote equipment. It includes the following components:

- Sensors, which typically have digital or analog I/O, and these signals are not in a form that can be easily communicated over long distances

- Remote terminal units (RTUs), which connect to the sensors and convert sensor data to digital data (includes telemetry hardware)

- Programmable logic controllers (PLCs), which connect to the sensors and convert sensor data to digital data (does not include telemetry hardware)

- Telemetry systems, which connect RTUs and PLCs to control centers and the enterprise

- Human interface, which presents data to the operator

- ICS server, also called a data acquisition server, which uses coded signals over communication channels to acquire information about the status of the remote equipment for display or for recording functions

The distributed control system (DCS) network should be a closed network, meaning it should be securely segregated from other networks. The Stuxnet virus hit the SCADA used for the control and monitoring of industrial processes.

Medianets

Medianets are networks primarily devoted to VoIP and video data that often require segmentation from the rest of the network at some layer. We implement segmentation for two reasons: first, to ensure the security of the data, and second, to ensure that the network delivers the high performance and low latency required by these applications. One such high-demand application is video teleconferencing (VTC), which I'll cover next.

Video Teleconferencing (VTC)

IP video has ushered in a new age of remote collaboration. This has saved a great deal of money on travel expenses and enabled more efficient use of time. When you're implementing IP video systems, consider and plan for the following issues:

- Expect a large increase in the need for bandwidth.

- QoS will need to be configured to ensure performance.

- Storage will need to be provisioned for the camera recordings.

- Initial cost may be high.

There are two types of VTC systems. Let's look at both:

- ISDN The first VTC systems were ISDN based. These systems were based on a standard called H.320. While the bandwidth in each ISDN line is quite low by today's standard (128 Kbps per line), multiple lines could be combined or bonded.

- IP/SIP VTC systems based on IP use a standard called H.323. Since these work on a packet-switched network, you don't need a direct ISDN link between the sites. Session Initiation Protocol (SIP) can also be used, and it operates over IP but lacks many of the structured call control functions that H.323 provides.

Legacy Systems

Legacy systems are systems that are older and incompatible with more modern systems and equipment. They may also be less secure and no longer supported by the vendor. In some cases, these legacy systems, especially with respect to industrial control systems, use propriety protocols that prevent them from communicating on the IP-based network. It's a good idea to segment these systems to protect them from security issues they aren't equipped to handle or even just to allow them to function correctly.

Separate Private/Public Networks

Public IP addressing isn't typically used in a modern network. Instead, private IP addresses are used and network address translation (NAT) services are employed to convert traffic to a public IP address when the traffic enters the Internet. While this is one of the strategies used to conserve the public IP address space, it also serves to segment the private network from the public network (Internet). Hiding the actual IP address (private) of the hosts inside the network makes it very difficult to make an unsolicited connection to a system on the inside of the network from the outside.

Honeypot/Honeynet

Another segmentation tactic is to create honeypots and honeynets. Honeypots are systems strategically configured to be attractive to hackers and to lure them into spending enough time attacking them to allow information to be gathered about the attack. In some cases, entire networks called honeynets are attractively configured for this purpose.

You need to make sure that either of these types of systems do not provide direct connections to any important systems. Their ultimate purpose is to divert attention from valuable resources and to gather as much information about an attack as possible. A tarpit is a type of honeypot designed to provide a very slow connection to the hacker so that the attack takes enough time to be properly analyzed.

Testing Lab

Testing labs are used for many purposes. Sometimes they're created as an environment for developers to test applications. They may also be used to test operating system patches and antivirus updates. These environments may even be virtual environments. Virtualization works well for testing labs because it makes it easier to ensure that the virtual networks have no physical connection to the rest of the network, providing necessary segmentation.

Security

One of the biggest reasons for implementing segmentation is for security purposes. At layer 1, this means complete physical separation. However, if you don't want to go with complete segmentation, you can also segment at layer 2 on switches by implementing VLANs and port security. This can prevent connections between systems that are connected to the same switch. They can also be used to organize users into common networks regardless of their physical location.

If segmentation at layer 3 is required, this is achieved using access control lists on routers to control access from one subnet to another or from one VLAN to another. Firewalls can implement these types of access lists as well.

Compliance

Finally, network segmentation may be required to comply with an industry regulation. For example, while it's not strictly required, the Payment Card Industry Data Security Standard (PCI DSS) strongly recommends that a credit card network should be segmented from the regular network. If you choose not to do this, your entire network must be compliant with all sections of the standard.

Network Optimization

Regardless of how well a network is functioning, you should never stop trying to optimize its performance. This is especially true when latency-sensitive applications such as VoIP, streaming video, and web conferencing are implemented. In the next several sections, I'll discuss some techniques you can use to ensure that these applications and services deliver on their promise of increased functionality.

Reasons to Optimize Your Network's Performance

So why do we have networks, anyway? I don't mean this in a historical sense; I mean pragmatically. The reason they've become such precious resources is that as our world becomes increasingly smaller and more connected we need to be able to keep in touch now more than ever. Networks make accessing resources easy for people who can't be in the same location as the resources they need—including other people.

In essence, networks of all types are really complex tools we use to facilitate communication from afar and to allow lots of us to access the resources we need to keep up with the demands imposed on us in today's lightning-paced world. And use them we do—a lot! And when we have many, many people trying to access one resource like a valuable file server or a shared database, our systems can get as bogged down and clogged as a freeway at rush hour. Just as road rage can result from driving on one of those not-so-expressways, frustrated people can direct some serious hostility at you if the same thing happens when they're trying to get somewhere using a network that's crawling along at snail speed.