Empirical Validation of Agent-Based Models✶

Thomas Lux⁎,1; Remco C.J. Zwinkels† ⁎Christian Albrechts Universität zu Kiel, Germany

†Vrije Universiteit Amsterdam and Tinbergen Institute, The Netherlands

1Corresponding author. email address: [email protected]

Abstract

The literature on agent-based models has been highly successful in replicating many stylized facts of financial and macroeconomic time series. Over the past decade, however, also advances in the estimation of such models have been made. Due to the inherent heterogeneity of agents and nonlinearity of agent-based models, fundamental choices have to be made to take the models to the data. In this chapter we provide an overview of the current literature on the empirical validation of agent-based models. We discuss potential lessons from other fields of applications of agent-based models, avenues for estimation of reduced form and ‘full-fledged’ agent-based models, estimation methods, as well as applications and results.

Keywords

Agent-based models; Validation; Method of moments; State space models; Sequential Monte Carlo; Switching mechanisms; Reduced form models

1 Introduction

The primary field of development of agent-based models in economics has been the theory of price formation in financial markets. It is also in this area that we find the vast majority of attempts in recent literature to develop methods for estimation of such models. This is not an accidental development. It is rather motivated by the particular set of ‘stylized facts’ observed in financial markets. These are overall statistical regularities characterizing asset returns and volatility, and they seem to be best understood as emergent properties of a system composed of dispersed activity with conflicting centrifugal and centripetal tendencies. Indeed, ‘mainstream’ finance has never even attempted an explanation of these stylized facts, but often has labeled them ‘anomalies’. In stark contrast, agent-based models seem to be generically able to relatively easily replicate and explain these stylized facts as the outcome of market interactions of heterogeneous agents.

The salient characteristics of the dynamics of asset prices are different from those of dynamic processes observed outside economics and finance, but are surprisingly uniform across markets. There are highly powerful tools available to quantify these dynamics, such as GARCH models to describe time-varying volatility (see Engle and Bollerslev, 1986) and Extreme Value Theory to quantify the heaviness of the tails of the distribution of asset returns (see e.g. Embrechts et al., 1997). For a long time very little has been known about the economic mechanisms causing these dynamics. The traditional paradigm building on agent rationality and consequently also agent homogeneity has not been able to provide a satisfying explanation for these complex dynamics. This lack of empirical support coupled with the unrealistic assumptions of the neoclassical approach has contributed to the introduction and rise of agent-based models (ABMs) in economics and finance; see e.g. Arthur (2006) in the previous edition of this handbook.

Whereas the strength of ABMs is certainly their ability to generate all sorts of complex dynamics, their relatively (computationally) demanding nature is a drawback. This was, among others, a reason why Heterogeneous Agent Models (HAMs) were developed as a specific type of agent based models. Most HAMs only consider two very simple types of agents. Specifically, most models contain a group of fundamentalists expecting mean reversion and chartists expecting trend continuation. The main source of dynamics, however, is a switching function allowing agents to switch between the two groups conditional on past performance. Interestingly, even such simplified and stylized versions of ABMs are capable of replicating the complex price dynamics of financial markets to a certain degree; see e.g. Hommes (2006) for an overview. Recent research has also collected catalogs of stylized facts of macroeconomic data, and agent-based approaches have been developed to explain those (e.g. Dosi et al., 2013, 2015).

Due to the aforementioned background of ABMs, the early literature has typically been relying on simulations to study the properties of models with interacting agents. By doing so, authors were able to illustrate the ability of ABMs to generate complex dynamic processes resembling those observed in financial markets. There are, however, several good reasons why especially ABMs should be confronted with empirical data. First of all, ABMs are built on the notion of bounded rationality. This generates a large number of degrees of freedom for the theorist as deviations from rationality can take many forms. Empirical verification of the choices made in building the models can therefore enforce discipline in model design. Second, by confronting ABMs with empirical data, one should get a better understanding of the actual law of motion generating market prices. Whereas simulation exercises with various configurations might generate similar dynamics, a confrontation with empirical data might allow inference on relative goodness-of-fit in comparison to alternative explanations. This is especially appealing because the introduction of ABMs was empirically motivated in the first place. Finally, empirical studies might allow agent based models to become more closely connected to the ‘mainstream’ economics and finance literature. Interestingly, certain elements underlying ABMs have been used in more conventional settings; see for example Cutler et al. (1991) or Barberis and Shleifer (2003), who also introduce models with boundedly rational and interacting agents. Connections between these streams of literature, however, are virtually non-existent. By moving on to empirical validation, which could also serve as a stepping stone towards more concrete applications and (policy) recommendations, the ABM literature should become of interest and relevance to a broader readership.

While ABMs are based on the behavior of and interaction between individual agents, they typically aspire to explain macroscopic outcomes and therefore most empirical studies are also focusing on the market level. The agent based approach, however, by definition has at its root the behavior of individual agents and, by doing so, any ABM necessarily makes a number of assumptions about individual behavior. Stepping away from the rational representative agent approach implies that alternative behavioral assumptions have to be formulated. Whereas rational behavior is uniquely defined, boundedly rational behavior can take many forms. Think, for example, of the infinite number of subsets that can be extracted from the full information set relevant for investing, let alone the sentiment that agents might incorporate in their expectations. To address these two issues, Hong and Stein (1999) define three criteria the new paradigm should adhere to, which serve as a devise to restrict the modeler's imagination. The candidate theory should (i) rest on assumptions about investor behavior that are either a-priori plausible or consistent with casual observation; (ii) explain the existing evidence in a parsimonious and unified way; and (iii) make a number of further predictions that can be subject to out-of-sample testing. Whereas empirical evidence at the macro-level mainly focuses on criteria (ii) and (iii), micro-level evidence is necessary to fulfill criterion (i) and thereby find support for the assumptions made in building the agent based models. This is especially pressing for the reduced form models discussed in Section 3, as a number of assumptions are made for example regarding the exact functional form of the heterogeneous groups.

Taking ABMs to the data is not straightforward due to an often large number of unknown parameters, nonlinearity of the models leading to a possibly non-monotonic likelihood surface, and sometimes limited data availability. As such, one needs to make choices in order to be able to draw empirical inferences. In this review, we distinguish between two approaches. The first approach covers (further) simplifications of ABMs and HAMs to reduced form models making them suitable for estimation using relatively standard econometric techniques. These reduced form models are often sufficiently close to existing econometric models, with the additional benefit of a behavioral economic underpinning. The second approach is less stringent in the additional assumptions made on agent behavior, but requires more advanced estimation methods. Typically, these methods belong to the class of simulation-based estimators providing the additional benefit that the model is not fitted on the mean of the data, as typically is the case when using standard estimation techniques, but on the (higher) moments. This creates a tighter link between the original purpose of ABMs of explaining market dynamics and the empirical approach.

All in all, the empirical literature on agent based models has been mounting over the past decade. There have been interesting advances in terms of methods, models, aggregation approaches, as well as markets, which we will review in this chapter. The empirical results generally appear to be supportive of the agent-based approach, with an emphasis on the importance of dynamics in the composition of market participants. The estimation methods and exact functional forms of groups of agents vary considerably across studies, making it hard to draw general conclusions and to compare results across studies. The common denominator, however, is that virtually all studies find evidence in support of the relevance of the heterogeneity of agents. Allowing agents to switch between groups generally has a positive effect on model fit. These results typically hold both in-sample and out-of-sample. In view of the dominance of financial market applications of agent-based models, most of this survey will be dealing with attempts at estimating ABMs designed to explain asset price dynamics. We note, however, that the boundaries between agent-based models and more traditional approaches are becoming more and more fuzzy. For example, recent dynamic game-theoretic and microeconomic models (Blevins, 2016; Gallant et al., 2016) also entail a framework of a possibly heterogeneous pool of agents interacting in a dynamic setting. Similarly, heterogeneity has been allowed for in standard macroeconomic models in various ways (e.g., Achdou et al., 2015). However, all these approaches are based on inductive solutions of the agents' optimization problem while models that come along with the acronym ABM would typically assume some form of bounded rationality. We stick to this convention and mainly confine attention to ABMs with some kind of boundedly rational behavior. Notwithstanding this confinement, models with a multiplicity of rational agents might give rise to similar problems and solutions when it comes to their empirical validation.

Being boundedly rational agents ourselves, this chapter no doubt suffers from the heuristics we have applied in building a structure and selecting papers. As such, this review should not be seen as an exhaustive overview of the existing literature, but rather as our idiosyncratic view of it. The remainder of the chapter is organized as follows. In Section 2 we discuss which insights economists can gain from other fields when it comes to estimation of ABMs. Whereas Section 3 discusses reduced form models, Section 4 reviews the empirical methods employed in estimation of more general variants of agent-based models. It also proposes a new avenue for estimation by means of state-space methods, which have not been applied in agent-based models in economics and finance so far. Section 5 discusses the empirical evidence for ABMs along different types of data at both the individual and the aggregate level that can be used to validate agent-based models. Section 6, finally, concludes and offers our view on the future of the field.

2 Estimation of Agent-Based Models in Other Fields

The social sciences seem to be the field predestined for the analysis of individual actors and the collective behavior of groups of them. However, agent-based modeling is not strictly confined to subjects dealing with humans, as one could, for example, also conceive of the animals or plants of one species as agents, or of different species within an ecological system. Indeed, biology is one field in which a number of potentially relevant contributions for the subject of this review can be found. Before we move on to such material, we first provide an overview over agent-based models and attempts at their validation in social sciences other than economics.

2.1 Sociology

Sociology by its very nature concerns itself with the effects of interactions of humans. In contrast to economics, there has never been a tradition like that of the ‘representative agent’ in this field. Hence, interaction among agents is key to most theories of social processes. The adaptation of agent-based models on a relatively large scale coincided with a more computational approach that has appeared over the last decades. Many of the contributions published in the Journal of Mathematical Sociology (founded in 1971) can be characterized as agent-based models of social interactions, and the same applies to the contributions to Social Networks (founded in 1979). The legacy of seminal contributions partly overlaps with those considered milestones of agent-based research in economic circles as well, e.g. Schelling's model of the involuntary dynamics of segregation processes among ethnic groups (Schelling, 1971), and Axelrod's analysis of the evolution of cooperation in repeated plays of prisoners” dilemmas (Axelrod, 1984). Macy and Willer (2002) provide a comprehensive overview over the use of agent-based models and their insights in sociological research. More recently, Bruch and Atwell (2015) and Thiele et al. (2014) discuss strategies for validation of agent-based models.

These reviews not only cover contributions in sociology alone, but also provide details on estimation algorithms applied in ecological models as well as systematic designs for confrontation of complex simulation models with data (of which agent-based models are a subset). A systematic approach to estimation of an interesting class of agent-based models has been developed in network theory. The pertinent class of models has been labeled ‘Stochastic Actor-Oriented Models’ (SAOM). It formalizes individuals' decisions to form and dissolve links to other agents within a network setting. This framework bears close similarity to models of discrete choice with social interactions in economics (Brock and Durlauf, 2001a, 2001b). The decision to form, keep or give up a link is necessarily of discrete nature. Similar to discrete choice models, the probabilities for agents to change from one state to another are formalized by multinomial logit expressions. This also allows the interpretation that the agents' objective functions contain a random idiosyncratic term following an extreme value distribution. The objective function naturally is a function evaluating the actor's satisfaction with her current position in the network. This ‘evaluation function’ is, in principle, completely flexible and allows for a variety of factors of influence on individuals' evaluation of network ties: actor-specific properties whose relevance can be evaluated by including actor covariates in the empirical analysis (e.g., male/female), dyadic characteristics of pairs of potentially connected agents (e.g., similarity with respect to some covariate), overall network characteristics (e.g., local clustering), as well as time-dependent effects like hysteresis or persistence of existing links or ‘habit formation’ (e.g., it might be harder to cut a link, the longer it has existed).

Snijders (1996) provides an overview over the SAOM framework. For estimation, various approaches have been developed: Most empirical applications use the method of moments estimator (Snijders, 2001), but recently also a Generalized Method of Moments (GMM) approach has been developed (Amati et al., 2015). Maximum likelihood estimation (Snijders et al., 2010) and Bayesian estimation (Koskinen and Snijders, 2007) are feasible as well. The set-up of the SAOM approach differs from that of discrete choice models in economics in that agents operate in a non-equilibrium setting, while the discrete choice literature usually estimates its models under rational expectations, i.e. assuming agents are operating within an equilibrium configuration correctly taking into account the influence of each agent on all other agents' utility functions. While the SAOM framework does not assume consistency of expectations, one can estimate its parameters under the assumption that the data are obtained from the stationary distribution of the underlying stochastic process. If the model explicitly includes expectations (which is typically not the case in applications in sociology) these should then have become consistent. Recent generalizations include an extension of the decision process by allowing for additional behavioral variables besides the network formation activities of agents (Snijders et al., 2007) and modeling of bipartite networks, i.e. structures consisting of two different types of agents (Koskinen and Edling, 2012). The tailor-made R package SIENA (Snijders, 2017) covers all these possibilities, and has become the work tool for a good part of sociological network research. Economic applications include the analysis of managers' job mobility on the creation of interfirm ties (Checkley and Steglich, 2007), and the analyses of link formation in the interbank money market (Zappa and Zagaglia, 2012; Finger and Lux, 2017). A very similar approach to the estimation of network models of human interactions within the context of development policy can be found in Banerjee et al. (2013).

2.2 Biology

Agent-based simulation models have found pervasive use in biology, in particular for modeling of population dynamics and ecological processes. The range of methods to be found in these areas tends to be wider than in the social sciences. In particular, various simulation-based methods of inference are widely used that have apparently hardly ever been adopted for validation of ABMs in the social sciences or in economics. Relatively recent reviews can be found in Hartig et al. (2011) and Thiele et al. (2014), who both cover ecological applications along with sociological ones. Methods used for estimation of the parameters of ecological models include Markov Chain Monte Carlo (MCMC), Sequential Monte Carlo (SMC), and particle filters, which are all closely related to each other. In most applications, the underlying model is viewed as a state-space model with one or more unobservable state(s) governed by the agent-based model and a noisy observation that allows indirect inference on the underlying states together with the estimation of the parameters of the pertinent model. In a linear, Gaussian framework for both the dynamics of the hidden state and the observation, such an inference problem can be solved most efficiently with the Kalman filter. In the presence of nonlinearities and non-Gaussianity, alternative, mostly simulation-based methods need to be used. An agent-based model governing the hidden states by its very nature typically is a highly nonlinear and non-Gaussian process, and often can only be implemented by simulating its defining microscopic laws of motion. The simulation-based methods mentioned above would then allow to numerically approximate the likelihood function (or if not available, any other objective function) via some population-based evolutionary process for the parameters and states, in which the simulation of the ABM itself is embedded.

Markov Chain Monte Carlo samples the distribution of the model's parameters within an iterative algorithm in which the next step depends on the likelihood of the previous one. In each iteration, a proposal for the parameters is computed via a Markov Chain, and the proposal is accepted with a probability that depends on its relative likelihood vis-à-vis the previous draws and their relative probabilities to be drawn in the Markov chain. After a certain transient this chain should converge to the stationary distribution of the parameters allowing to infer their expectations and standard errors. In Sequential Monte Carlo, it is not one realization of parameters, but a set of sampled realizations that are propagated through a number of intermediate steps to the final approximation of the stationary distribution of the parameters (cf. Hartig et al., 2011). In an agent-based (or population-based) framework, the simulation of the unobservable part (the agent-based part) is often embedded via a so-called particle filter in the MCMC or SMC framework. Proposed first by Gorden et al. (1993) and Kitagawa (1996), the particle filter approximates the likelihood of a state-space model by a swarm of ‘particles’ (possible realizations of the state) that are propagated through the state and observation parts of the system. Approximating the likelihood by the discrete probability function summarizing the likelihood of the particles, one can perform either classical inference or use the approximations of the likelihood as input in a Bayesian MCMC or SMC approach.

Advanced particle methods use particles simultaneously for the state and the parameters (Kitagawa, 1998). With the augmented state vector, filtering and estimation would be executed at the same time, and the surviving particles of the parameters at the end of one run of this so-called ‘auxiliary’ particle filter would be interpreted as parameter estimates. Instructive examples from a relatively large pertinent literature in ecology include Golightly and Wilkinson (2011), who estimate the parameters of partly observed predator-prey systems via Markov Chain Monte Carlo together with a particle filter of the state dynamics, or Ionides et al. (2006), who apply frequentist maximum likelihood based on a particle filter to epidemiological data. MCMC methods have also been applied for rigorous estimation of the parameters of traffic network models, cf. Molina et al. (2005). An interesting recent development is Approximate Bayesian Computation (ABC) that allows inference based on MCMC and SMC algorithms using objective functions other than the likelihood (Sisson et al., 2005; Toni et al., 2008). Since it is likely that for ABMs of a certain complexity, it will not be straightforward to evaluate the likelihood function, these methods should provide a welcome addition to the available toolbox.

2.3 Other Fields

Agent-based models can be viewed as a subset of ‘computer models’, i.e., models with an ensemble of mathematical regularities that can only be implemented numerically. Such models might not have units that can be represented as agents, but might take the form of large systems of complex (partial) differential equations. Examples are various models of industrial processes (cf. Bayarri et al., 2007), or the fluid dynamical systems used in climate change models (Stephenson et al., 2012). In biology, one might, in fact, sometimes have the choice to use an agent-based representation of a certain model, or rather a macroscopic approximation using, for example, a low-dimensional system of differential equations (cf. Golightly et al., 2015, for such an approach in an ecological model). Similar approximation of agent-based models in economics can be found in Lux (2009a, 2009b, 2012). The same choice might be available for other agent-based models in economics or finance (see below Section 4.3). In macroeconomics, dynamic stochastic general equilibrium (DSGE) models are medium-sized systems of non-linear difference equations that have also been estimated in recent literature via Markov Chain Monte Carlo and related methods (e.g., Amisano and Tristani, 2010).

In climate modeling, epidemics (Epstein, 2009), and industrial applications (Bayarri et al., 2007), models have become so complex that an estimation of the complete model often becomes unfeasible. The same applies to computational models in anthropology such as the well-known model of Anasazi settlement dynamics in northeastern Arizona (Axtell et al., 2002). The complexity of such models also implies that only a limited number of scenarios can be simulated and that different models can at best be compared indirectly. The epistemological consequences of this scenario are intensively discussed in climate change research as well as in the social sciences (cf. Carley and Louie, 2008). In the context of very complex models and/or sparse data, empirical validation is often interpreted in a broader sense than estimation proper. Aiming to replicate key regularities of certain data is known in ecology as pattern-oriented modeling (cf. Grimm et al., 2005). This is equivalent to what one would call ‘matching the stylized facts’ in economics. As far as patterns can be summarized as functions of the data and a simulated agent-based model could be replicated without too much computational effort, a more rigorous version of pattern-oriented modeling would consist in a method-of-moments approach based upon the relevant patterns. However, even if only a small number of simulations of a complex simulation model can be run, estimation of parameters through rigorous exploitation of the (scarce) available information is possible. Within the framework of industrial applications and epidemiological dynamics, Bayarri et al. (2007), Higdon et al. (2008), and Wang et al. (2009) provide a systematic framework for a Bayesian estimation approach that corrects the biases and assesses the uncertainties inherent in large simulation models that can neither be replicated often nor selectively modified. In the analysis of complex models of which only a few replications are available often so-called emulation methods are adopted to construct a complete response of model output on parameters. Typically, emulator functions make use of Gaussian processes and principal component analysis (e.g., Hooten and Wikle, 2010; Heard, 2014; Rasouli and Timmermans, 2013). One might envisage that such a framework could also be useful for macroeconomics once agent-based models of various economic spheres are combined into larger models.

3 Reduced Form Models

The literature on agent-based models was initially purely theoretical in nature. As such, the benchmark models did not take the restrictions into account that empirical applications require. A number of issues in the theoretical models need to be addressed before the models can be confronted with empirical data, especially when focusing on market level studies. One direction is to use advanced econometric techniques, which we will discuss in Section 4. Another direction is to rewrite the model in a reduced form. Bringing HAMs to the data introduces a trade-off between the degree of micro-foundation of the (empirical) model and the appropriateness of the model for estimation. A first choice is the form of the dependent (endogenous) variable. Several models, such as, for example, Day and Huang (1990) and Brock and Hommes (1997), are written in terms of price levels. This poses no problems in an analytical or simulation setting, but could become problematic when turning to calibration or estimation. Most standard econometric techniques assume that the input data is stationary. This assumption, however, is typically violated when using financial prices or macroeconomic time series.

The second issue is the identification of coefficients. The theoretical models contain coefficients that might not all be identified econometrically, which means that one could not obtain an estimate of all the behavioral parameters of a model but that, for instance, only composite expressions of the primitive parameters can be estimated. A third issue relates to the switching mechanism that is typically applied in HAMs. Allowing agents to switch between strategies is, perhaps, the identifying characteristic of HAMs and an important source of dynamics in simulation settings. At the same time it poses a challenge for empirical work, as the switching function is by definition non-linear which could create a non-monotonic likelihood surface.

A final issue we want to discuss here, is the choice of the fundamental value in asset-pricing applications. The notion of a fundamental value is intuitively appealing and central to the behavior of ‘fundamentalists’ in HAMs. Empirically, though, the ‘true’ fundamental value is principally unobservable. As there is no objective choice for the fundamental value, any estimation of a model including a fundamental value will therefore inevitably suffer from the ‘joint hypothesis problem’, cf. Fama (1991). We will discuss these four issues in more detail in the following subsections.

3.1 Choice of Dependent Variable

Whereas several HAMs are principally written in terms of price levels, empirical studies using market data are hardly ever based on price levels due to the non-stationarity issue. Possible solutions to this issue include working with alternative econometric methods, such as, e.g., cointegration techniques (Amilon, 2008; Frijns and Zwinkels, 2016a, 2016b) or simulation techniques (Franke, 2009; Franke and Westerhoff, 2011), but the more typical solution is to reformulate the original model such that the left hand side variable is stationary.

Two main approaches consist of empirical models in terms of returns, ΔPt=Pt−Pt−1![]() with Pt

with Pt![]() the asset price at time t, and empirical models in terms of price deviation from the fundamental, Pt−P⁎t

the asset price at time t, and empirical models in terms of price deviation from the fundamental, Pt−P⁎t![]() . The choice between the two is driven by the underlying HAM, or more specifically its micro-foundation and market clearing mechanism.1 Micro-founded equilibrium models based on a Walrasian auctioneer, such as Brock and Hommes (1997, 1998), assume the existence of an all-knowing auctioneer who collects all supply and demand schedules and calculates the market clearing price. These models are typically written in terms of price levels. It is not possible to convert these into price changes because prices are modeled as a non-linear function of lagged prices. Disequilibrium models based on the notion of a market maker, see Beja and Goldman (1980), Day and Huang (1990), or Chiarella (1992) for early examples, assume that net demand (supply) causes prices to increase (decrease) proportionally, without assuming market clearing. These models are typically written in terms of price changes or are easily reformulated as such.2

. The choice between the two is driven by the underlying HAM, or more specifically its micro-foundation and market clearing mechanism.1 Micro-founded equilibrium models based on a Walrasian auctioneer, such as Brock and Hommes (1997, 1998), assume the existence of an all-knowing auctioneer who collects all supply and demand schedules and calculates the market clearing price. These models are typically written in terms of price levels. It is not possible to convert these into price changes because prices are modeled as a non-linear function of lagged prices. Disequilibrium models based on the notion of a market maker, see Beja and Goldman (1980), Day and Huang (1990), or Chiarella (1992) for early examples, assume that net demand (supply) causes prices to increase (decrease) proportionally, without assuming market clearing. These models are typically written in terms of price changes or are easily reformulated as such.2

The most widely applied configuration is the model with a market maker in terms of price changes or returns (see e.g. Frankel and Froot, 1990; Reitz and Westerhoff, 2003; Reitz et al., 2006; Manzan and Westerhoff, 2007; De Jong et al., 2009, 2010; Kouwenberg and Zwinkels, 2014, 2015). The empirical models are typically of the form:

(1) ΔPt=c+wftα(Pt−1−P⁎t−1)+wctβΔPt−1+εt

in which wft![]() and wct

and wct![]() are the fundamentalist and chartist weights, respectively, εt

are the fundamentalist and chartist weights, respectively, εt![]() is the noise factor and c, α, and β are the coefficients to be estimated. Given that both the left hand and right hand side variables are denoted in differences, the stationarity issue is largely extenuated. At the same time, there is no (explicit) micro-foundation in the sense of a utility or profit maximizing framework that motivates the behavior of the two groups of agents in this model.

is the noise factor and c, α, and β are the coefficients to be estimated. Given that both the left hand and right hand side variables are denoted in differences, the stationarity issue is largely extenuated. At the same time, there is no (explicit) micro-foundation in the sense of a utility or profit maximizing framework that motivates the behavior of the two groups of agents in this model.

In a series of papers, Alfarano et al. (2005, 2006, 2007) set up a HAM with trading among speculators and a market maker that results in a dynamic process for log returns. They derive a closed-form solution for the distribution of returns that is conditional on the structural parameters of the model and estimate these parameters via an approximate maximum likelihood approach. In a follow-up study, Alfarano et al. (2008) derive closed form solutions to the higher moments of the distribution.

The second approach is to write the model in terms of price deviations from the fundamental value, xt=Pt−P⁎t![]() , or a variant thereof. The implicit assumption that is made, is that the price and fundamental price are cointegrated with cointegrating vector (1,−1)

, or a variant thereof. The implicit assumption that is made, is that the price and fundamental price are cointegrated with cointegrating vector (1,−1)![]() , such that the simple difference between the two is stationary.3 One example of this approach is Boswijk et al. (2007), who initially base their study on the Brock and Hommes (1998) model in terms of price levels:

, such that the simple difference between the two is stationary.3 One example of this approach is Boswijk et al. (2007), who initially base their study on the Brock and Hommes (1998) model in terms of price levels:

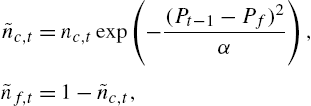

(2) Pt=11+rH∑h=1nh,tEh,t(Pt+1+yt+1),

with Eh,t(⋅)![]() denoting the expectation of agents of group h, nh,t

denoting the expectation of agents of group h, nh,t![]() their number at time t, yt

their number at time t, yt![]() the dividend and r the risk-free interest rate. Dividing the left and right-hand side of Eq. (1) by dividend yt

the dividend and r the risk-free interest rate. Dividing the left and right-hand side of Eq. (1) by dividend yt![]() and assuming that yt+1=(1+g)yt

and assuming that yt+1=(1+g)yt![]() , Eq. (2) can be written in terms of price-to-cash flow

, Eq. (2) can be written in terms of price-to-cash flow

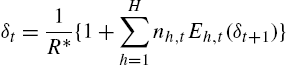

(3) δt=1R⁎{1+H∑h=1nh,tEh,t(δt+1)}

in which δ=Pt/yt![]() and R⁎=(1+g)/(1+r)

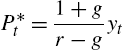

and R⁎=(1+g)/(1+r)![]() . Assuming a fundamental value based on the Gordon growth model, the fundamental is given by P⁎t=1+gr−gyt

. Assuming a fundamental value based on the Gordon growth model, the fundamental is given by P⁎t=1+gr−gyt![]() , such that the fundamental price-to-cash-flow ratio is given by δ⁎t=1+gr−g

, such that the fundamental price-to-cash-flow ratio is given by δ⁎t=1+gr−g![]() . Finally, Boswijk et al. (2007) use xt=δt−δ⁎t

. Finally, Boswijk et al. (2007) use xt=δt−δ⁎t![]() as the input to their empirical model. This approach has been applied, among others, by Chiarella et al. (2014).

as the input to their empirical model. This approach has been applied, among others, by Chiarella et al. (2014).

Clearly, the choice of model has consequences on the results but also on the interpretation of the results. The deviation type models assume that xt![]() is the variable that investors form expectations about, whereas the return type models assume that ΔPt

is the variable that investors form expectations about, whereas the return type models assume that ΔPt![]() is the variable that investors form expectations about. Theoretically these should be equivalent, but we know from social psychology that people respond differently to such different representations of the same information. For example, Glaser et al. (2007) find in an experimental study that price forecasts tend to have a stronger mean reversion pattern than return forecasts. Furthermore, in the deviation type models the two groups of agents rely on the same type of information, namely xt−1

is the variable that investors form expectations about. Theoretically these should be equivalent, but we know from social psychology that people respond differently to such different representations of the same information. For example, Glaser et al. (2007) find in an experimental study that price forecasts tend to have a stronger mean reversion pattern than return forecasts. Furthermore, in the deviation type models the two groups of agents rely on the same type of information, namely xt−1![]() or last period's price deviation. Fundamentalism and chartism are subsequently distinguished by the coefficients in the expectations function, in which a coefficient >1 (<1) implies chartism (fundamentalism). This interpretation, however, is not exactly the same as with the original models of fundamentalists and chartists because chartists do not expect a price trend to continue but rather expect the price deviation from fundamental to increase. Furthermore, this setup is rather restrictive in that it does not allow for the inclusion of additional trader types. In the return based models, on the other hand, agents use different information sets as indicated in Eq. (1). This allows for more flexibility as any trader type can be added to the system. De Jong et al. (2009), for example, include a third group of agents to their model, internationalists, next to fundamentalists and chartists.

or last period's price deviation. Fundamentalism and chartism are subsequently distinguished by the coefficients in the expectations function, in which a coefficient >1 (<1) implies chartism (fundamentalism). This interpretation, however, is not exactly the same as with the original models of fundamentalists and chartists because chartists do not expect a price trend to continue but rather expect the price deviation from fundamental to increase. Furthermore, this setup is rather restrictive in that it does not allow for the inclusion of additional trader types. In the return based models, on the other hand, agents use different information sets as indicated in Eq. (1). This allows for more flexibility as any trader type can be added to the system. De Jong et al. (2009), for example, include a third group of agents to their model, internationalists, next to fundamentalists and chartists.

There is also an important econometric difference between the deviation and return type models. In the deviation type models, the two groups are not identified under the null of no switching because both rely exclusively on xt−1![]() as information; the switching parameter is a nuisance parameter; see Teräsvirta (1994). As a result, the statistical added value of switching is to be determined using a bootstrap procedure. In the return-based models, however, this issue does not hold as both groups remain identified under the null of no switching, and the added value of switching is therefore determined by means of standard goodness-of-fit comparisons.4

as information; the switching parameter is a nuisance parameter; see Teräsvirta (1994). As a result, the statistical added value of switching is to be determined using a bootstrap procedure. In the return-based models, however, this issue does not hold as both groups remain identified under the null of no switching, and the added value of switching is therefore determined by means of standard goodness-of-fit comparisons.4

3.2 Identification

The original HAMs have a relatively large number of parameters, which might not all be identified in an estimation setting. As a result, the econometrician will have to make one or more simplifying assumptions such that all parameters are identified. As in the previous subsection, here we can also make the distinction between models based on a Walrasian auctioneer and models based on a market maker.

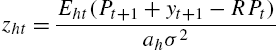

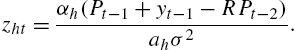

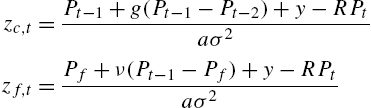

Brock and Hommes (1997) build their model using mean-variance utility functions, resulting in a demand function of group h of the form

(4) zht=Eht(Pt+1+yt+1−RPt)ahσ2

in which R=1+r![]() , a is the coefficient of risk aversion, and σ market volatility. Now assume a very simple structure of the expectation formation rule:

, a is the coefficient of risk aversion, and σ market volatility. Now assume a very simple structure of the expectation formation rule:

(5) Eht(Pt+1+yt+1−RPt)=αh(Pt−1+yt−1−RPt−2)

such that

(6) zht=αh(Pt−1+yt−1−RPt−2)ahσ2.

The empirical issue with such a demand function, is that the coefficients ah![]() and αh

and αh![]() cannot be distinguished from each other. One solution is to take α′h=αh/ahσ2

cannot be distinguished from each other. One solution is to take α′h=αh/ahσ2![]() , assuming volatility is constant such that α′h

, assuming volatility is constant such that α′h![]() is also a constant that can be estimated. This assumption, however, is at odds with the initial motivation of HAMs to provide an economic explanation for time-varying volatility. Therefore, the following steps are typically taken. Summing up the demand functions over groups and equating to supply yields the market clearing condition:

is also a constant that can be estimated. This assumption, however, is at odds with the initial motivation of HAMs to provide an economic explanation for time-varying volatility. Therefore, the following steps are typically taken. Summing up the demand functions over groups and equating to supply yields the market clearing condition:

(7) ΣhnhtEht(Pt+1+yt+1−RPt)ahσ2=zst

in which nht![]() is the proportion of agents in group h in period t, and zst

is the proportion of agents in group h in period t, and zst![]() is the supply of the asset. Brock and Hommes (1997) subsequently assume a zero outside supply of stocks, zst=0

is the supply of the asset. Brock and Hommes (1997) subsequently assume a zero outside supply of stocks, zst=0![]() , such that

, such that

(8) RPt=ΣhnhtEht(Pt+1+yt+1).

In other words, by assuming zero outside supply, the risk aversion coefficients an![]() drop out of the equation and agents effectively become risk neutral provided all groups h are characterized by the same degree of risk aversion (so that groups only differ in their prediction of future price movements). This step eliminates the identification issue, but also reduces the impact of agent's preferences on their behavior. As an alternative avenue, Hommes et al. (2005a, 2005b) introduce a market maker who adjusts the price in the presence of excess demand or excess supply.

drop out of the equation and agents effectively become risk neutral provided all groups h are characterized by the same degree of risk aversion (so that groups only differ in their prediction of future price movements). This step eliminates the identification issue, but also reduces the impact of agent's preferences on their behavior. As an alternative avenue, Hommes et al. (2005a, 2005b) introduce a market maker who adjusts the price in the presence of excess demand or excess supply.

In a setting with a market maker, authors typically start by specifying demand functions of the form

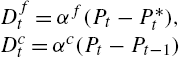

(9) Dft=αf(Pt−P⁎t),Dct=αc(Pt−Pt−1)

with superscripts f and c denoting the pertinent reaction coefficients of fundamentalists and chartists, respectively. Note that these are already simplified in the sense that risk preference is not taken into account. This either implies that agents are risk neutral, or that αf![]() and αc

and αc![]() can be interpreted broader as coefficients capturing both the expectation part and a risk adjustment part, αf=αf′/aσ

can be interpreted broader as coefficients capturing both the expectation part and a risk adjustment part, αf=αf′/aσ![]() . This works again under the assumption that volatility σ is constant.

. This works again under the assumption that volatility σ is constant.

Aggregating demand of the two groups yields market demand:

(10) Dmt=nftDft+nctDct

such that the price equation is given by

(11) Pt=Pt−1+λDmt+εt

in which λ is the market maker reaction coefficient, and εt![]() is a stochastic disturbance.

is a stochastic disturbance.

In this setting, the market maker reaction coefficient λ is empirically not identifiable independently of αf![]() and αc

and αc![]() . Two solutions to this issue have been proposed. Either one assumes that λ=1

. Two solutions to this issue have been proposed. Either one assumes that λ=1![]() such that the estimated coefficient equals αh

such that the estimated coefficient equals αh![]() , or one interprets the estimated coefficient as a market impact factor, equal to αhλ

, or one interprets the estimated coefficient as a market impact factor, equal to αhλ![]() . Both solutions entail that both groups have the same price elasticity of demand.

. Both solutions entail that both groups have the same price elasticity of demand.

Both solutions described here result in extremely simple models of price formation. They do, however, capture the main behavioral elements of HAMs: boundedly rational expectation formation by heterogeneous agents, consistent with empirical evidence, combined with the ability to switch between groups. In addition, simulation exercises also illustrate that certain variants of these models are still able to generate some of the main stylized facts of financial markets, such as their excess volatility and the emergence and breakdown of speculative bubbles (Day and Huang, 1990; Chiarella, 1992). The ABM character underlying these empirical models essentially represents an economic underpinning of time-varying coefficients in an otherwise quite standard econometric model capturing conditional trends and mean-reversion.

3.3 Switching Mechanism

One of the main issues in estimating ABMs follows from the non-linear nature of the model that (mainly) arises from the existence of the mechanism that governs the switching between beliefs. As a result, the likelihood surface tends to be rugged making it challenging to find a global optimum. This issue has been explored either directly or indirectly by a number of papers. Several approaches have been used.

As an early example, Shiller (1984) introduces a model with rational smart money traders and ordinary investors and shows that the proportion of smart money traders varies considerably during the 1900–1983 period by assuming the aggregate effect of ordinary investors to be zero. Frankel and Froot (1986, 1990) have a very similar approach. Specifically, Frankel and Froot (1986) assume that market-wide expected returns are equal to the weighted average of fundamentalist and chartist expectations:

(12) Δsmt+1=ωtΔsft+1+(1−ωt)Δsct+1

with Δsmt+1![]() , Δsft+1

, Δsft+1![]() and Δsct+1

and Δsct+1![]() denoting the expected exchange rate changes of the overall ‘market’, of the fundamentalist group and of the chartist group, respectively, and wt

denoting the expected exchange rate changes of the overall ‘market’, of the fundamentalist group and of the chartist group, respectively, and wt![]() being the weight assigned by ‘the market’ to the fundamentalist forecast.5

being the weight assigned by ‘the market’ to the fundamentalist forecast.5

By assuming that chartists expect a zero return, we get

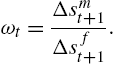

(13) ωt=Δsmt+1Δsft+1.

Frankel and Froot (1990) subsequently proxy Δsmt+1![]() by the forward discount, and Δsft+1

by the forward discount, and Δsft+1![]() by survey expectations. In this way, they implicitly back out the time-varying fundamentalist weight ωt

by survey expectations. In this way, they implicitly back out the time-varying fundamentalist weight ωt![]() from the data. Apart from making some strong assumptions about agent behavior, this method identifies the time-varying impact of agent groups, but does not identify the drivers of this time-variation.

from the data. Apart from making some strong assumptions about agent behavior, this method identifies the time-varying impact of agent groups, but does not identify the drivers of this time-variation.

Reitz and Westerhoff (2003) and Reitz et al. (2006) estimate a model of chartists and fundamentalists for exchange rates by assuming the weight of technical traders to be constant, and the weight of fundamental traders to depend on the normalized misalignment between the market and fundamental price. As such, there is no formal switching between forecasting rules, but the impact of fundamentalists is time-varying. Manzan and Westerhoff (2007) introduce time-variation in the chartist extrapolation coefficient by making it conditional on the current mispricing. Hence, the authors are capturing a driver of dynamic behavior, but do not estimate a full-fledged switching mechanism with switching between groups.

Another approach uses stochastic switching functions to capture dynamic behavior, such as regime-switching models (Vigfusson, 1997; Ahrens and Reitz, 2005; Chiarella et al., 2012) and state-space models (Baak, 1999; Chavas, 2000). The advantage of this approach relative to the deterministic switching mechanism that is typically applied in HAMs is that it puts less structure on the switching mechanism and thereby on the data. Furthermore, there is ample econometric literature studying the characteristics of such models. The drawback is that the estimated model weights have no economic interpretation as is the case for the deterministic switching functions. In other words, the stochastic switching models are able to infer from the data that agents switch between groups, but do not allow to draw inference about the motivation behind switching.6

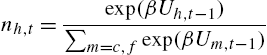

Boswijk et al. (2007) is the first study that estimates a HAM with a deterministic switching mechanism that captures switching between groups as well as the motivation behind switching (in this case, the profit difference between groups). While Boswijk et al. (2007) use U.S. stock market data, De Jong et al. (2009) apply a similar methodology to the British Pound during the EMS crisis and the Asian crisis, respectively. Alfarano et al. (2005) set up an empirical model based on Kirman (1993). In this model, switching is based on social interaction and herding rather than profitability considerations. Alfarano et al. (2005) show that in this model the tail behavior of the distributions of returns is a function of the herding tendency of agents.

Boswijk et al. (2007) rewrite the model of Brock and Hommes (1997) such that it simplifies to a standard smooth transition auto-regressive (STAR) model, in which the endogenous variable is the deviation of the price-earnings ratio from its long-run average and the switching function is a logit function of the form

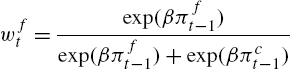

(14) wft=exp(βπft−1)exp(βπft−1)+exp(βπct−1)

in which πf![]() and πc

and πc![]() are measures of fundamentalists' and chartists' performance, respectively.

are measures of fundamentalists' and chartists' performance, respectively.

In this setup, the coefficient β captures the switching behavior of agents, or their sensitivity to performance differences, and is typically denoted the intensity of choice parameter. With β=0![]() , agents are not sensitive to differences in performance between groups and remain within their group with probability 1. With β>0

, agents are not sensitive to differences in performance between groups and remain within their group with probability 1. With β>0![]() , agents are sensitive to performance differences. In the limit, as β tends to infinity, agents switch to the most profitable group with probability 1 such that wft∈{0,1}

, agents are sensitive to performance differences. In the limit, as β tends to infinity, agents switch to the most profitable group with probability 1 such that wft∈{0,1}![]() .

.

The significance of β in this configuration cannot be judged based on standard t-tests as it enters the expression non-linearly. Specifically, for β sufficiently large or sufficiently small, additional changes in β will not result in changes in wft![]() . As such, the standard errors of the estimated β will be inflated. To judge the significance of switching, one therefore needs to examine the model fit.

. As such, the standard errors of the estimated β will be inflated. To judge the significance of switching, one therefore needs to examine the model fit.

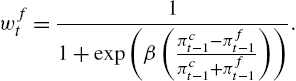

A second issue with this functional form is that the magnitude of β cannot be compared across markets or time periods. This is caused by the fact that its order of magnitude depends on the exact definition and the distributional characteristics of πft![]() . One way to address this issue, is to introduce normalized (unit-free) performance measures in a logit switching function, as first done in Ter Ellen and Zwinkels (2010):

. One way to address this issue, is to introduce normalized (unit-free) performance measures in a logit switching function, as first done in Ter Ellen and Zwinkels (2010):

(15) wft=11+exp(β(πct−1−πft−1πct−1+πft−1)).

The additional benefit of this form is that the distribution of profit differences is less heavy-tailed, causing the estimation to be more precise and less sensitive to periods of high volatility.

Baur and Glover (2014) estimate a model for the gold market with chartists and fundamentalist who switch strategies according to their past performance. They find a significant improvement of fit against a benchmark model without such switching of strategies, but very different estimated parameters in different subsamples of the data. They also compare this analysis with switching depending on selected market statistics and find similar results for the parameters characterizing chartists' and fundamentalists' expectation formation under both scenarios.

3.4 Fundamental Value Estimate

Whereas the fundamentalist–chartist distinction in HAMs is intuitively appealing and consistent with empirical observation,7 the exact functional form of the two groups is less straightforward. Chartism is typically modeled using some form of expectation of auto-correlation in returns, which is consistent with the empirical results of Cutler et al. (1991), who find autocorrelation in the returns of a broad set of assets, and it is also consistent with the tendency of people to erroneously identify trends in random data.8 Fundamentalism is typically modeled as expected mean reversion towards the fundamental value. The main question is, though, what this fundamental value should be.

There are several theoretical properties any fundamental value should have. Therefore, in analytical or simulation settings it is possible to formulate a reasonable process for the fundamental value. Empirically though, one has to choose a specific model. Note, however, that the fundamental value used in an empirical HAM is not necessarily the actual fundamental value of the asset. HAMs are based on the notion of bounded rationality, and it is therefore internally consistent to also assume this for the ability of fundamentalists to calculate a fundamental value. As such, the choice of fundamental value should be based on the question whether a boundedly rational market participant could reasonably make the same choice. In other words, the fundamental value should also be a heuristic.

A number of studies using equity data, starting with Boswijk et al. (2007), use a simple fundamental value estimate based on the Gordon-growth model or dividend-discount model; see Gordon and Shapiro (1956). The model is given by

(16) P⁎t=1+gr−gyt

in which yt![]() is dividend, g is the constant growth rate of dividends, and r is the required return or discount factor.

is dividend, g is the constant growth rate of dividends, and r is the required return or discount factor.

The advantage of this approach is certainly its simplicity. The drawback is that it does not take time-variation of the discount factor into account, as is common in mainstream asset pricing studies, cf. Cochrane (2001). This causes the fundamental value estimate P⁎t![]() to be rather smooth because the discount factor is assumed constant. As such, models using this fundamental value estimate might attribute an excessive amount of price volatility to non-fundamental factors. Hommes and in 't Veld (2017) are the first to address this issue and introduce a stochastic discount factor in HAMs. Specifically, next to the typical Gordon growth model they create a fundamental value estimate based on the Campbell and Cochrane (1999) consumption-habit model. The latter constitutes a typical consumption-based asset pricing model and therefore belongs to a different class of asset pricing models than the endowment based HAMs. The authors find evidence of behavioral heterogeneity, regardless of the underlying fundamental value estimate. Whereas Hommes and in 't Veld (2017) do not fully integrate the two approaches, their paper constitutes an interesting first step towards integrating the two lines of research, which might also help in getting the heterogeneity approach more widely accepted in the mainstream finance and economics literature.9

to be rather smooth because the discount factor is assumed constant. As such, models using this fundamental value estimate might attribute an excessive amount of price volatility to non-fundamental factors. Hommes and in 't Veld (2017) are the first to address this issue and introduce a stochastic discount factor in HAMs. Specifically, next to the typical Gordon growth model they create a fundamental value estimate based on the Campbell and Cochrane (1999) consumption-habit model. The latter constitutes a typical consumption-based asset pricing model and therefore belongs to a different class of asset pricing models than the endowment based HAMs. The authors find evidence of behavioral heterogeneity, regardless of the underlying fundamental value estimate. Whereas Hommes and in 't Veld (2017) do not fully integrate the two approaches, their paper constitutes an interesting first step towards integrating the two lines of research, which might also help in getting the heterogeneity approach more widely accepted in the mainstream finance and economics literature.9

Studies focusing on foreign exchange markets typically use the Purchasing Power Parity (PPP) model as fundamental value estimate; see e.g. Manzan and Westerhoff (2007); Reitz et al. (2006); Goldbaum and Zwinkels (2014). Kouwenberg et al. (2017) illustrate the added value of switching using different fundamental value estimates in a forecasting exercise for foreign exchange rates.

Alternatively, a number of studies circumvent the issue of choosing a particular model to proxy for the fundamental value. In each case, this approach yields a parsimonious proxy for a fundamental value, but also alters the exact interpretation of fundamentalist behavior. Furthermore, the approach is typically quite specific to a certain (institutional) framework and thereby less general. For example, De Jong et al. (2010) make use of the institutional framework of the European Monetary System (EMS), and use the central parity in the target zone regime as the fundamental. Whereas this provides a very clear and visible target, the group of fundamentalists no longer expect mean reversion towards the economic fundamental but expect the current institutional framework to be maintained without adjustments to the central parity.

Ter Ellen and Zwinkels (2010) use a moving average of the price level as fundamental value estimate. Whereas this is again a very parsimonious approach, the nature of fundamentalists in such a setup moves towards chartism as all expectations are based on market information. More recently, Frijns and Zwinkels (2016a, 2016b) have taken advantage of the fact that assets trade on multiple markets in formulating a fundamental value. Specifically, they use cross-listed stocks and the spot and derivatives markets, respectively. This changes the exact interpretation of fundamentalists towards arbitrageurs, but retains the stabilizing character of this particular group of market participants relative to the destabilizing chartists.

As we will see in the next section, the necessity of specifying the time development of the underlying fundamental value only applies to reduced-form models. When using a more general approach, it often suffices to assume a general law of motion of the fundamental value (e.g., Brownian motion). Estimation would, then, allow to identify, for instance, the variance of the innovations of the fundamental value along with the parameters of the agent-based part of the overall model. If the pertinent methodology allows filtering to retrieve unobserved variables, this would also provide an estimated trajectory of the fundamental value as the residual obtained by filtering the empirical data (raw prices or returns) by the behavioral component implied by the ABM. Note that such an approach is very different from the a priori specification of a plausible fundamental dynamic process in the models reviewed above.

4 Estimation Methods

4.1 Maximum Likelihood

4.1.1 General Considerations

By the very nature of agent-based models, maximum likelihood (ML) estimation without any numerical approximation will rarely be possible. Such a completely standard approach will indeed only be possible if the ABM can be represented by a reduced-form equation or a system of equations (e.g., a VAR structure) for which a standard ML estimation approach is available. Examples of such models have been covered in the previous section. Any such statistically convenient framework will be based upon relatively strong assumptions on the behavior of the underlying pool of agents. For instance, in order to end up with a reduced form that is equivalent to a (linear) regime-switching model (e.g. Reitz and Westerhoff, 2003), one has to assume that (i) two different groups of agents with two different linear demand functions exist, (ii) all the agents of one group are characterized by the same elasticities, (iii) markets are always dominated by one of the groups, and (iv) there is a unique (Gaussian) noise factor in each of these regimes. Condition (i) might be relaxed by having a less stringent microstructure based on a market-maker; condition (iii) might be relaxed by allowing for smooth transition models in the statistical implementation of the switching of strategies of agents along some discrete choice formalization.

Still, to be able to derive some simple macroscopic structural form of the agents' aggregate behavior, the stochastic factors have to be conceived a-priori as an additive noise superimposed on the agents' interaction. If, in principle, the agents' behavior is conceived to be of a stochastic nature (reflecting the inability of any model to completely cover all their motivations and idiosyncratic determinants of their behavior), this amounts to evoking the law of large numbers and resorting to the deterministic limiting process for an infinite number of agents in the population.

Maintaining the randomness of individual decisions as via a discrete choice formalization with a finite population would render the noise component of the model much more complicated: The noise would now consist of the set of all the stochastic factors entering the decision of all the agents in the model, i.e. with N agents the model would contain N stochastic processes rather than a single one as in typical structural equations. It is worthwhile to note that for typical candidates of the stochastic utility term in discrete choice models, like the Gumbel distribution, theoretical aggregation results are not available. Aggregation of individual decisions might also be hampered by correlation of their choices if social interactions are an important factor in the agents' decision process.

4.1.2 Maximum Likelihood Based on Numerical Integration

Full maximum likelihood for models with dispersed activity of an ensemble of agents would, in principle, require availability of closed-form solutions for the transient density of the process. Due to the complexity of most ABMs, such information will hardly ever be available. However, certain systems allow at least numerical approximations of the transient density that can be used for evaluation of the likelihood function. Lux (2009a) applies such a numerical approach to estimate a simple model of opinion formation for survey data of a business climate index. The underlying model assumes that agents switch between a pessimistic and optimistic expectation for the prospects of their economy under the influence of the opinion of their peers as well as exogenous factors (information about macroeconomic variables). For this model of social interaction, the transient density of the average opinion can be approximated via the so-called Fokker–Planck or forward Kolmogorov equation. The latter cannot be solved in closed form. However, as it is a partial differential equation, many well-known methods exist to integrate it numerically. It thus becomes possible to use a numerical ML estimator. Application to a business climate index for the German economy shows strong evidence of social interaction (herding), a significant momentum effect besides the baseline interaction and very limited explanatory power of exogenous economic variables.

This framework can, in principle, be generalized to more complex models with more than one dynamic process. Lux (2012) applies this approach to bivariate and trivariate processes. Here the underlying data consists of two sentiment surveys for the German stock market, short-run and medium-run sentiment, and the price of the DAX. The model allows for two interlinked opinion formation processes plus the dynamics of the stock index that might be driven by sentiment along with fundamental factors. Combining pairs of these three processes or all three simultaneously, the transient dynamics can again be approximately described by a (bivariate or trivariate) Fokker–Planck equation. These partial differential equations can again be solved numerically, albeit with much higher computational demands than in the univariate case. As it turns out, social interaction is much more pronounced in short-run than medium-run sentiment. It also turns out that both sentiment measures have little interaction (although they are obtained from the same ensemble of participants). The price dynamics show a significant influence of short-run sentiment which, however, could not be exploited profitably for prediction of stock prices in an out-of-sample forecasting exercise.

4.1.3 Approximate Maximum Likelihood10

When full maximum likelihood is not possible, various approximate likelihood approaches might still be feasible. For example, Alfarano et al. (2005) apply maximum likelihood based upon the stationary distribution of a financial market model with social interaction. Results would be close to the exact likelihood case only if the process converges quickly to its stationary distribution. In a similar framework, Kukacka and Barunik (2017) use the non-parametric simulated maximum likelihood estimator of Kristensen and Shin (2012) which uses simulated conditional densities rather than the analytical expressions and is, in principle, universally applicable. They show via Monte Carlo simulations that this approach can reliably estimate the parameters of a strategy-switching model à la Brock and Hommes (1997). They find significant parameters of the expected sign for the fundamentalist and chartist trading strategies for various stock markets, but the ‘intensity of choice’ parameter turns out to be insignificant which is also found by a number of related studies on similar models.

4.2 Moment-Based Estimators

4.2.1 General Considerations

A most straightforward way to estimate complex models is the Generalized Method of Moments (GMM) and the Simulated Method of Moments (SMM) approach. The former estimates parameters by matching a weighted average of analytical moments, the later uses simulated moments in cases in which analytical moments are not available. Both GMM and SMM have a long legacy of applications in economics and finance (cf. Mátyás, 1999) and should be flexible enough to also be applicable to agent-based models. However, even this very general approach might have to cope with specific problems when applied to typical agent-based models. One of these is the lack of continuity of many moments when varying certain parameters. To see this, consider an ensemble of agents subject to a discrete choice problem of deciding about the most promising trading strategy at any time, where, for the sake of concreteness, we denote the alternatives again as ‘chartism’ and ‘fundamentalism’. There will be two probabilities pcf(⋅)![]() and pfc(⋅)

and pfc(⋅)![]() for switching from one alternative to the other, both depending on statistics of the current and past market development. A simple way to simulate such a framework consists of drawing uniform numbers εi

for switching from one alternative to the other, both depending on statistics of the current and past market development. A simple way to simulate such a framework consists of drawing uniform numbers εi![]() for each agent i and making this agent switch if εi<pcf(⋅)

for each agent i and making this agent switch if εi<pcf(⋅)![]() or pfc(⋅)

or pfc(⋅)![]() depending on which is applicable.

depending on which is applicable.

The important point here is that this type of stochasticity at the level of the individual agent is distinctly different from a standard additive noise at the system level. Even when fixing the sequence of random numbers, any statistics derived from this process will not be smooth under variation of the parameters of the model. Namely, if we vary any parameter that enters as a determinant of pcf(⋅)![]() or pfc(⋅)

or pfc(⋅)![]() and keep the set of random draws constant, there will be a discontinuous move at some point making the agent switch her behavior. The same, of course, applies to all other agents, so that in contrast to a deterministic process with linear noise, a stochastic process with noise at the level of the agent will exhibit in general non-smooth statistics even with “frozen” random draws.

and keep the set of random draws constant, there will be a discontinuous move at some point making the agent switch her behavior. The same, of course, applies to all other agents, so that in contrast to a deterministic process with linear noise, a stochastic process with noise at the level of the agent will exhibit in general non-smooth statistics even with “frozen” random draws.

Luckily, this does not necessarily make all standard estimation methods unfeasible. While standard regularity conditions will typically require smoothness of the objective functions, more general sets of conditions can be established that allow for non-smooth and non-differentiable objective functions, cf. Andrews (1993). The more practical problem is that the rugged surface resulting from such a microfounded process would render standard derivative-based optimization routines useless.

Many recent papers on estimation of ABMs in economics have used various methods to match a selection of empirical moments. This should not be too surprising as, particularly in financial economics, the most prominent aim of the development of ABMs has been the explanation of the so-called stylized facts of asset returns. A list of such stylized facts includes (i) absence of autocorrelations in the raw returns at high frequencies or martingale-like behavior, (ii) leptokurtosis of the unconditional distribution of returns, or fat tails, (iii) volatility clustering or long-term temporal dependence in squared or absolute returns (or other measures of volatility), (iv) positive correlation between trading volume and volatility, and (v) long-term temporal dependence in volume and related measures of trading activity.

All of these features can be readily characterized by statistical moments of the underlying data, and quantitative measures of ‘stylized facts’ (i) to (iii) are typically used as the moments one attempts to match in order to estimate the models' parameter. Both in GMM and SMM, parameter estimates are obtained as the arguments of an objective function that consists of weighted deviations between the empirical and model-generated moments. According to our knowledge, stylized facts (iv) and (v) have been used to compare the output of agent-based models to empirical data (e.g., LeBaron, 2001) but have not been exploited so far in full-fetched estimation as all available studies concentrate on univariate series of returns and neglect other market statistics such as volume. Indeed, it even appears unclear whether well-known models that are able to match (i) to (iii) are also capable to explain the long-lasting autocorrelation of volume and its cross-correlation with volatility.

Almost all of the available literature also uses a simulated method of moments approach as the underlying models appear too complex to derive analytical moment conditions. An exception is Ghonghadze and Lux (2016).

4.2.2 Moment-Based Estimation of Structural Models

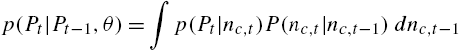

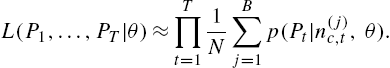

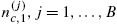

Within structural equation models Franke (2009) and Franke and Westerhoff (2011, 2012, 2016) have applied SMM estimation to a variety of models and have also conducted goodness-of-fit comparisons across different specifications. All the models considered are formulated in discrete time.

Franke (2009) estimates a model proposed by Manzan and Westerhoff (2007), which combines a market maker dynamics for price adjustments with a standard demand function of fundamentalists and a second group of traders, denoted speculators, who react to stochastic news. The author uses a sample of moments of raw and absolute returns, i.e. their means, autocovariances over various lags, and log absolute returns exceeding a certain threshold as a measure related to the tail index. Since it was found that the correlations between the moment conditions were too noisy, only the diagonal entries of the inverse of the variance–covariance matrix of the moment conditions has been used as weight matrix. Although the usual goodness-of-fit test, the so-called J-test for equality of empirical and model-generated moments, could always reject the model as the true data generating process for a sample of stock indices and exchange rates, the fit of the selected moments was nevertheless considered satisfactory.

Shi and Zheng (2016) consider an interesting variation of the discrete choice framework for switching between a chartist and fundamentalist strategy in which fundamentalists receive heterogeneous news about the change of the fundamental value. A certain fraction of agents then chooses one or the other strategy comparing their pertinent expected profits. In the infinite population limit, analytical expressions can be obtained for the two fractions. The resulting price process is estimated via analytical moments (GMM) from which the usual parameters of the demand functions of both groups and the dispersion of fundamental news relative to the agents' prior can be obtained.

Franke and Westerhoff (2011) estimate what they call a ‘structural stochastic volatility model’. This is a model of chartist/fundamentalist dynamics in which both demand functions consist of a systematic deterministic part and a noise factor with different variances for both groups. With an additional switching mechanism between groups this leads to volatility clustering in returns because of the different levels of demand fluctuations brought about by dominance of one or the other group. In their SMM estimation the authors use a weighting matrix obtained from bootstrapping the variability of the empirical moments. Results were again somewhat mixed: While the model could well reproduce the selected moments, the authors found that for two out of six parameters it could not be rejected that they were equal to zero in the application of the model to the US dollar–Deutsche Mark exchange rate series. Note that this implies that certain parts of the model seem to be superfluous (in this case the entire chartist component) and that a more parsimonious specification would probably have to be preferred. In the application to the S&P 500 returns, all parameters were significant. The same applies under a slightly different estimation procedure (Franke and Westerhoff, 2016). In the later paper, the authors also assess the goodness-of-fit of the model via a Monte Carlo analysis (rather than the standard J-test based on asymptotic theory) and found that under this approach, the model could not be rejected.

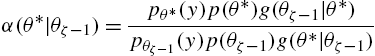

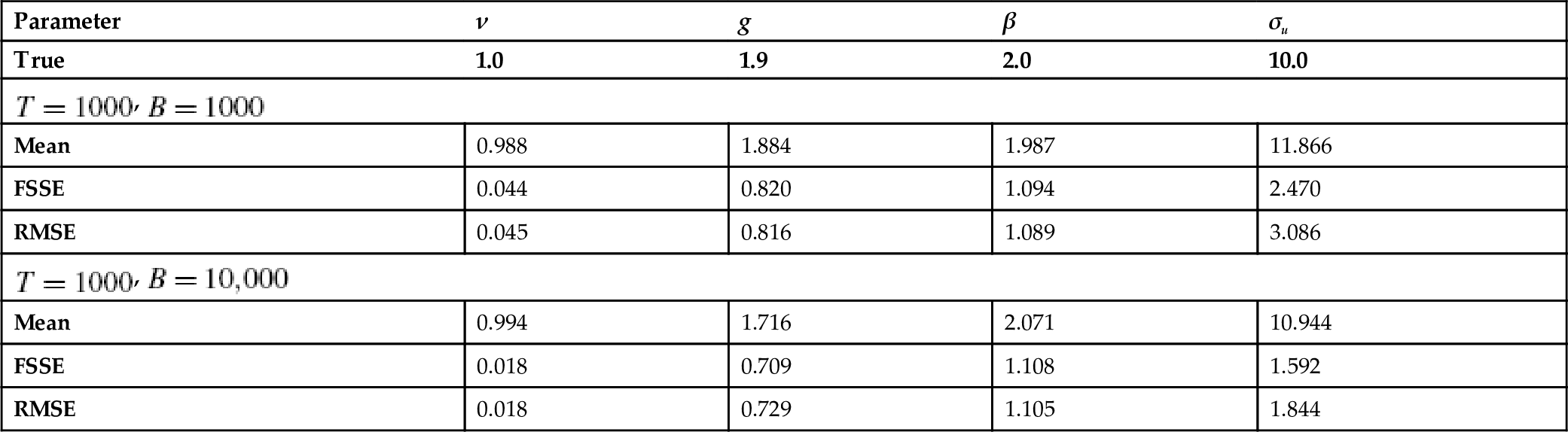

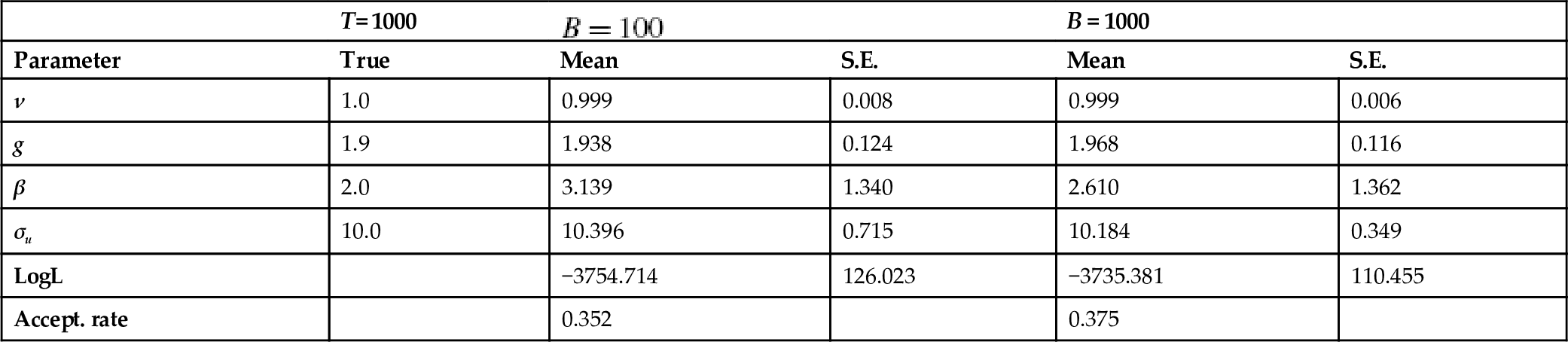

Franke and Westerhoff (2012), finally, use the SMM approach to conduct a model contest between two alternative formalizations of the chartist/fundamentalist approach: one with switching between strategies based on transition probabilities (the approach of their related papers of 2011 and 2016), and one using a discrete choice framework for the choice of strategy in any period. Further variations are obtained by considering different determinants in these switching or choice probabilities: the development of agents' wealth, herding and the effect of misalignment of asset prices. As it turns out, the discrete choice model with herding component in the fitness function performs best in matching the selected moments of S&P 500 returns.