"Tomorrow will give us something to think about."

Predicting the future is a sucker's game. But there is far more to interactive gestures than check-in kiosks, towel dispensers, and the nearly ubiquitous demonstration of scaling and sorting photos! As prices decrease and the availability of these devices (and the tools to create them) increases, we will see more novel implementations of touchscreens everywhere.

Of course, this may not be an entirely good thing. As Chapter 1 pointed out, gestural interfaces shouldn't be used for everything: they are a poor choice for heavy data input, for instance. But we will assuredly see them used for activities they weren't designed for, as well as in applications where we never expected to find them. Despite the long history of this technology, we're entering an interesting and exciting time, much like the early days of the Web in the 1990s or the beginning of personal computing in the 1970s and 1980s, when experimentation and exploration are the norm and we're still figuring out standards, best practices, and what this technology can really do.

It took about six years for the gestural system in Minority Report to move from science fiction to reality; what will the next six years bring? Here are my predictions.

We've seen gestural interfaces in public spaces such as restrooms, retail environments, and airports. But touchscreens haven't yet fully penetrated the home and office, and they won't truly have done so until, like any mature technology, they become invisible and "natural." That is changing quickly, though. Consumer electronics manufacturers are rapidly producing new lines of products that employ touchscreens. As BusinessWeek reported,[43] companies around the world are designing and producing new products with gestural interfaces:

"The touch-screen tech ecosystem now includes more than 100 companies specializing in everything from smudge-proof screens to sensors capable of detecting fingers before they even contact the screen. Sales of leading touch-screen technologies, such as those used in mobile phones and navigation devices, are expected to rise to $4.4 billion in 2012, up from $2.4 billion in 2006, according to iSuppli estimates."

This technology ecosystem, plus companies' intense interest in getting in on what is seen as the next wave of product innovation, practically guarantees that touchscreens and gestural interfaces will enter the home and traditional office over the next several years.

Often when one mentions interactive gestures, the conversation turns to the impact that gestural interfaces will have on the desktop. Will the traditional keyboard-mouse-monitor setup be replaced by a touchscreen? Or a headset and special gloves?

The simple answer is...maybe, but probably not in the near future. It takes a long time for a technology, especially one as deeply ingrained as "traditional" computing, to be supplanted. Besides, a keyboard is still necessary for heavy data input (e.g., email, instant messaging, and word processing), although a haptic system (discussed later in this chapter) could mitigate this. A monitor (or some other visual display) is necessary as well, although it could easily be a touchscreen, as it is on some newer systems and on tablet PCs. The most vulnerable part of our existing PC setup is the mouse, which could be replaced (and on many laptops already has been) by a touchpad or some other gestural means of controlling the cursor and other on-screen objects.

It certainly is possible that some jobs and activities that are currently accomplished using a traditional system will be replaced by a gestural interface. If you aren't doing heavy data entry, for instance, why do you need a keyboard and mouse? Gestural interfaces can and should be used for specialized applications and workstations.

The influence of interactive gestures will likely be felt, however, in the further warping, and even enhancement, of the desktop metaphor. If interactive gestures become one input method alongside traditional keyboards and voice recognition, they could start to affect basic interactions with the PC. Waving a hand could move windows to the side of the screen, for example. Flicking a finger could quickly flip through files. Even a double head nod could open an application.

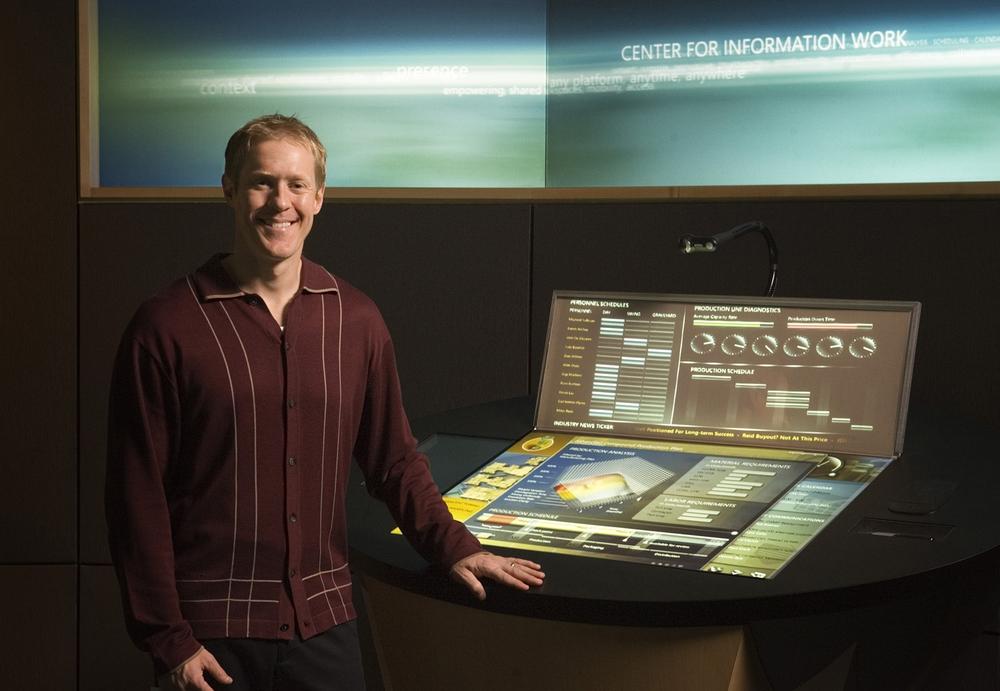

Figure 8-1. DigiDesk, a touchscreen work space that utilizes advanced visualization and modeling of information, smarter integration of metadata, and more sophisticated pattern recognition. Courtesy Microsoft's Center for Information Work.

The home, being semiprivate, may allow for a wider range of gestural products, since in the home you can do certain gestures and activities which you would seldom do in a workplace—for instance, clapping your hands to turn on a washing machine. Although gestural interfaces will naturally be used for "traditional applications" (already millions of people are using them with trackpads), they may find their greatest use in specialized applications and products.

Interestingly, unlike many currently available technologies, gestural interfaces may be best suited to settings other than the traditional office, or at least other than the offices many of us are used to, with walls, desks, and cubicles. It is this author's opinion that, although offices will certainly be a rich place to find gestural interfaces, their best use may be in other locations: homes, public spaces, and other environments (retail, service environments such as restaurants, and so-called "third places"[44]). These environments host a wider variety of activities than the traditional workplace, and many of those activities already involve physical movement (everything from cooking to working out to gaming to simply walking about) that could be further enhanced by making more gestures interactive.[45]

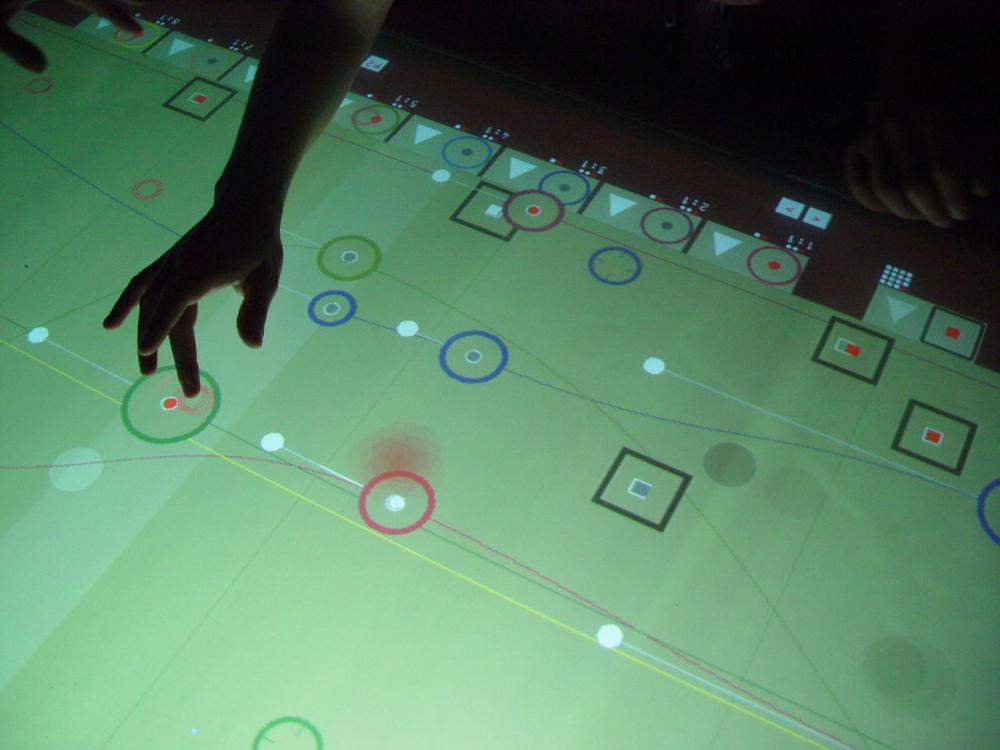

Figure 8-4. loopArenaMTC is a multitouch table used for generating live performances of loop-based computer music. Users are able to control eight MIDI instruments, such as synthesizers or samplers (software and hardware) and a drum machine, in a playful way. Courtesy Jens Wunderling.

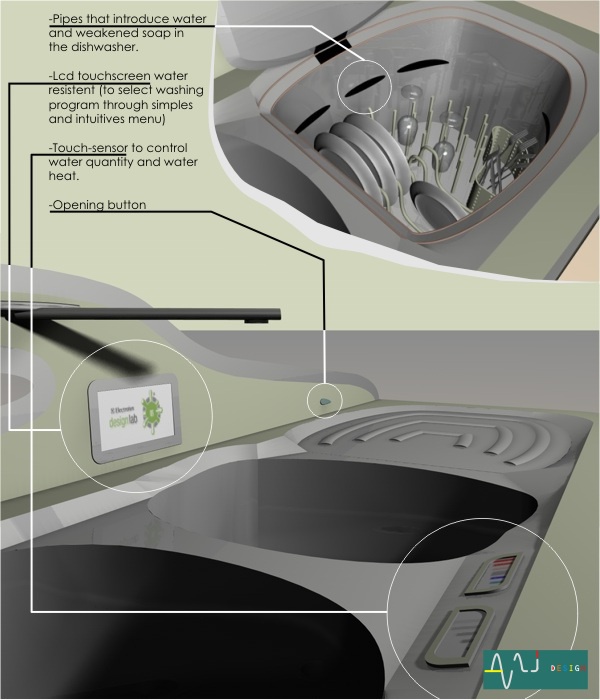

Specialized products will be paired with specialized activities in specialized environments, and that pairing will be a rich source of gestural interfaces. Public restrooms are currently a great example, but other spaces could easily become this sort of "hothouse" environment. The next likely place for such experimentation and adoption is the kitchen: it features lots of activities, plus a contained environment with tons of specialized equipment.

These specialized applications and products will probably require more than off-the-shelf touchscreens to make them work, and they may come with specialized gear of their own. As in many areas of technology, games are leading the way, with many forms of alternative input devices, from the Wiimote to objects shaped (and sort of used) like guitars.

Figure 8-5. Playing Rock Band requires gestures made with objects shaped, and played, like guitars. We don't typically think of this as a gestural interface because the design dissolves into the behavior so well. Courtesy Kim Lenox.

Figure 8-6. Osmose is a full-body immersive experience in a 360-degree spherical space, viewed through a head-mounted display. Breathing and balance are the primary means of navigating within the virtual world: by breathing in, users float upward; by breathing out, they fall; and by subtly shifting the body's center of balance, they change direction, a method inspired by the scuba-diving practice of buoyancy control. Courtesy Char Davies and Immersence Inc.

Few technologies live in isolation, and interactive gestures are no exception. In the future, the line between the following technologies and the world of interactive gestures will only grow blurrier as the technologies and systems grow closer together.

Once we move past just using a keyboard and mouse to interact with our digital devices, our voices seem like the next logical input device. Voice recognition combined with gestures could be a powerful combination, allowing for very natural interactions. Imagine pointing to an object and simply saying, "Tell me about this chair."

Combining voice with gestures could also help overcome a limitation of free-form gestural interfaces: switching between modes. Users could issue a voice command to change modes, then work in the new mode with gestures.
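A minimal sketch of voice-driven mode switching, assuming a system that already delivers recognized speech as text and recognized gestures as named events (both hypothetical here). A spoken phrase changes the current mode, and the same gesture is then interpreted differently depending on that mode. The mode names, gesture names, and action names are illustrative only, not taken from any real product.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mode table: what each recognized gesture means in each mode.
GESTURE_ACTIONS = {
    "draw": {"swipe": "draw_stroke", "pinch": "change_brush_size"},
    "select": {"swipe": "lasso_select", "pinch": "zoom_view"},
}


@dataclass
class VoiceGestureController:
    mode: str = "select"

    def on_voice(self, utterance: str) -> None:
        """Switch modes when the user says '<mode> mode', e.g. 'Draw mode'."""
        words = utterance.lower().split()
        if len(words) == 2 and words[1] == "mode" and words[0] in GESTURE_ACTIONS:
            self.mode = words[0]
        # Any other utterance leaves the current mode unchanged.

    def on_gesture(self, gesture: str) -> Optional[str]:
        """Interpret a gesture in the current mode; None if it means nothing here."""
        return GESTURE_ACTIONS[self.mode].get(gesture)


controller = VoiceGestureController()
print(controller.on_gesture("swipe"))  # lasso_select
controller.on_voice("Draw mode")
print(controller.on_gesture("swipe"))  # draw_stroke
```

Keeping mode changes on the voice channel means no gesture has to be reserved for switching modes, so the gesture vocabulary itself can stay small.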

Virtual reality (VR) is the technology that always seems to be on the edge of tomorrow, and once you are in an immersive space, gestures are a likely way of navigating and controlling the virtual environment. After all, the cameras and projectors that run the environment may already be in place, and the user is likely wearing or holding some sort of gear onto which other sensors can be placed.

Especially with patterns such as Proximity Activate/Deactivate and Move Body to Activate, interactive gestures encroach on the field known as ubiquitous computing (ubicomp). Ubicomp envisions a world in which computing power has been thoroughly integrated into everyday objects and activities through sensors, networks, and tiny microprocessors. Ubicomp engages many computational devices and systems simultaneously as people go about their ordinary activities—people who may or may not be aware that they are users of a ubicomp system.

Because ubicomp emphasizes integration with everyday activities, interactive gestures are a natural way to detect and use ubicomp systems. Ubicomp systems, in turn, with their ability to sense and distribute information, could be an enormous boon to gestural interfaces, for example by applying your personal gestural preferences to objects in whatever room you are in. The touchscreen remote control in your hotel room could work exactly like the one at home. As you walk into another room of your house, ubicomp systems could dim the lights in other rooms, IM your spouse to let her know where you are, and adjust the sofa cushions to your preferred firmness. But maybe you don't want any of that; with a gestural system in place, a wave of the hand could make you invisible to the system.

Since we are rarely in a physical space without our bodies, using our bodies to control the ubicomp systems around us seems like a natural pairing.

More and more devices are being built that incorporate Near Field Communication (NFC) technology (a new standard based on RFID), and some manufacturers, such as Nokia, are using interactive gestures as a way of triggering NFC on their devices. NFC is a short-range, high-frequency wireless communication technology that enables the exchange of data among devices that are very close together: within about 4 inches (10 cm). Since NFC requires devices to be physically close in order to communicate, a gesture such as tapping or swiping devices together is a natural way to trigger communication.[46] With NFC, users can do everything from sharing business cards and images with another device, to paying for a bus ticket, to using their mobile device as a sort of universal controller for consumer devices that need a rich interface but lack the physical form or price point to justify one.[47]

Although NFC gestures are largely limited to touches, taps, presses, and swipes, how NFC-enabled devices are used and where they are positioned in an environment will have to be carefully designed.

NFC and RFID, once installed in objects and connected to the Internet (neither a trivial task), will create what has been called the Internet of Things. Objects throughout the environment will be equipped with sensors and RFID and/or NFC tags, allowing them to communicate information about their location, creation, and history of use. How people will interact with this Internet of Things has yet to be determined, but gestures, particularly gestures made with smart objects, will likely play a part in reading, interpreting, and controlling the data these objects provide.

Up until now, we've mostly discussed gestures in air or touches on screens, assuming that what we're gesturing with (if anything) is simply a "dumb," mostly mechanical device, perhaps filled with sensors, such as the Wiimote. But what if the devices we use are smart themselves—devices that change their functionality depending on the gestures made with them, or that use gestures in clever ways to make interactions subtler and more natural?

Figure 8-8. The "Bar of Soap" is a prototype device that senses how it is being held and adjusts its functionality accordingly. It can "transform" into a phone, camera, game console, or PDA. Courtesy Brandon Thomas Taylor and MIT Media Lab.

A Nokia marketing video[48] announcing the company's new (and, as of this writing, still unlaunched) S60 series touchscreen phones contains one small moment, noted and commented on by designers,[49] that demonstrates this well. In the (admittedly cheesy) video, a woman is talking to her boyfriend. On the table between them is her mobile phone (an S60 Touch, of course!), face up. The phone rings, and rather than interrupt her face-to-face conversation to fumble with the phone, or ignore it until the call goes to voicemail (as we would have to do now), she simply flips it over, presumably sending the call to voicemail. It's a great example of a gesture taking on meaning via a smart device.

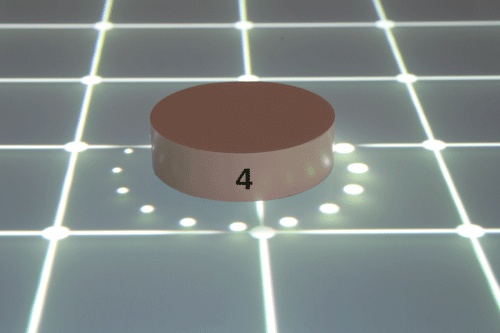

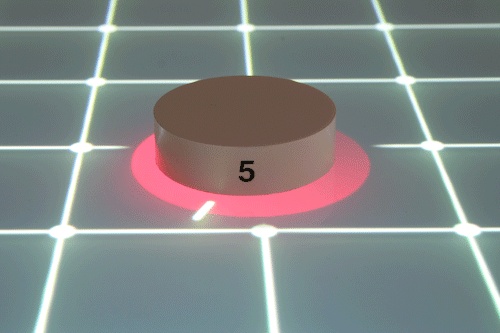

Figure 8-9. In this demo, Microsoft's Surface recognizes the object sitting on it as a mobile phone and can pass information back and forth. Courtesy Microsoft.

This brings us close to the related fields of haptic UIs and tangible UIs. Haptic UIs deliver feedback (in the form of vibrations, shakes, dynamic texture, temperature changes, etc.) that can be felt by touch alone. In fact, many feel that haptic UIs will eventually replace pure touchscreens because of their ability to create a more mechanical feeling (e.g., by creating buttons on the fly), which conveys more feedback to users than visuals or sound alone. And although this author doesn't feel that pure touchscreens are going away anytime soon, haptics advocates do have a point: there is something more innately satisfying, more natural, about pushing a mechanical button than tapping a smooth surface. We will likely begin to see haptics integrated with touchscreens, with haptic buttons appearing only when needed (for instance, when a keyboard is displayed).

Figure 8-10. A prototype device using Nokia's "haptikos" technology. It looks like a standard touchscreen device with a smooth surface, but its on-screen keyboard has a tactile feel when the keys are pressed and released, simulating the feeling of typing on an actual keyboard. Courtesy Nokia.

Tangible UIs take a piece of data or functionality and embody it in a physical object that can then be manipulated manually. A classic example is Durrell Bishop's Marble Answering Machine (1992). In the Marble Answering Machine, a marble represented a single message left on an answering machine. Users dropped a marble into a dish to play back the associated message or return the call.

It's not much of a leap to imagine gestural systems in which users gesture with pieces of data. Rather than dropping a marble into a dish, for instance, users could tap the marble with a finger to hear the message or spin it to return the call.

The smarter our objects become, the more nuanced they can be about interpreting gestures. It's not hard to imagine your touchscreen laptop knowing when you are annoyed (because your taps are more abrupt and forceful) and adjusting accordingly.
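As a toy illustration of how such a guess might work, assume a screen that reports a normalized pressure value for each tap (the `Tap` record below is hypothetical). The device compares recent taps against the user's usual pressure and rhythm. The thresholds are arbitrary assumptions; a real system would need to learn them per user and would still guess wrong sometimes.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence


@dataclass
class Tap:
    time_s: float    # when the tap happened, in seconds
    pressure: float  # normalized 0.0-1.0, from a (hypothetical) pressure-sensing screen


def seems_annoyed(
    taps: Sequence[Tap],
    baseline_pressure: float,
    pressure_ratio: float = 1.5,   # assumed: "forceful" = 50% above the user's norm
    max_mean_gap_s: float = 0.25,  # assumed: "abrupt" = taps under a quarter-second apart
) -> bool:
    """Guess whether recent taps are both harder and quicker than usual."""
    if len(taps) < 3:
        return False  # too little evidence to judge
    avg_pressure = mean(t.pressure for t in taps)
    gaps = [later.time_s - earlier.time_s for earlier, later in zip(taps, taps[1:])]
    return avg_pressure >= baseline_pressure * pressure_ratio and mean(gaps) <= max_mean_gap_s


calm = [Tap(0.0, 0.30), Tap(0.8, 0.30), Tap(1.6, 0.35)]
jabs = [Tap(0.0, 0.80), Tap(0.15, 0.90), Tap(0.30, 0.85)]
print(seems_annoyed(calm, baseline_pressure=0.3))  # False
print(seems_annoyed(jabs, baseline_pressure=0.3))  # True
```

Requiring both signals (harder and faster) is a deliberate choice: either one alone is common in ordinary use, such as a firm but unhurried tap or a quick double-tap.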

Figure 8-11. TangibleTable allows users to manipulate the display embedded into the table via different physical controls that are placed on the table's surface. Courtesy Daniel Guse and Manuel Hollert.

Figure 8-12. Jive is a concept device that combines a touchscreen with a tangible UI. Designed for elders, Jive makes use of "friend pass" cards that are tangible representations of people. Pressing a friend pass to the touchscreen allows the user to see information about that person and communicate with her. Courtesy Ben Arent.

Developers may shudder at this, but there may come a time when applications and websites have versions optimized for gestures. As gestures and touchscreens become part of the personal computing repertoire, it seems probable that more and more work will be done using touchscreens on mobile devices and PCs. Thus, applications delivered via both the Web and the desktop may have to adjust their size and layout for fingers and touch targets, not just cursors.

One can also imagine developers enabling gestural patterns (see Chapter 3 and Chapter 4) in more traditional applications by having the application detect when it is running on a gesture-capable device.
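Detecting the device is only half of it; the application then has to act on what it finds. A minimal sketch, assuming the platform can report whether touch input is available and the screen's pixel density (on the web, for instance, the CSS `pointer` media feature distinguishes coarse from fine pointers). If touch is present, the layout converts a physical, fingertip-sized minimum into pixels. The 9 mm default and the 16-pixel cursor minimum are illustrative assumptions, not values from this chapter.

```python
from dataclasses import dataclass

MM_PER_INCH = 25.4


@dataclass
class InputCapabilities:
    has_touch: bool  # reported by the platform (assumed to be available)
    dpi: float       # screen pixels per inch


def min_target_px(
    caps: InputCapabilities,
    touch_target_mm: float = 9.0,  # assumed fingertip-sized minimum
    cursor_target_px: int = 16,    # assumed minimum when only a mouse cursor is used
) -> int:
    """Smallest clickable/tappable size, in pixels, the layout should allow."""
    if not caps.has_touch:
        return cursor_target_px
    # Convert the physical size to pixels: millimeters -> inches -> pixels.
    touch_px = round(touch_target_mm / MM_PER_INCH * caps.dpi)
    return max(cursor_target_px, touch_px)


print(min_target_px(InputCapabilities(has_touch=False, dpi=96)))  # 16
print(min_target_px(InputCapabilities(has_touch=True, dpi=160)))  # 57
```

Stating the touch minimum in millimeters rather than pixels keeps targets finger-sized across screens of different densities.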

As Chapter 6 clearly demonstrates, creating prototypes of gestural interfaces is no easy task, requiring broad knowledge that only a small (albeit growing) number of people possess. Designers and developers need better tools to aid in the creation of custom prototypes: ready-made kits, open source code that can be easily reused, and Plug-and-Play hardware.

This is starting to happen. Communities such as the Natural User Interface Group and the Arduino Community, companies such as Tinker.It, and university research labs at Stanford[50] and Carnegie Mellon[51] are moving toward these goals. But it is still far too difficult for beginners to get started prototyping gestural interfaces, especially free-form ones.

There are two big challenges: overcoming the barrier to entry that electronics and code pose for many people, and turning gestures into code. More Plug-and-Play starter kits with a simpler interface than raw code would help with the first challenge, whereas code pattern libraries and new developer tools may help with the second. Although, as of this writing, developer tools are available for the Wii that turn movements of the Wiimote into code, nothing yet does the same for full-body movements or gestures made without a device. One can imagine future software development kits (SDKs) with sets of cameras that can be calibrated to record gestures and turn them into coded patterns, or even sound-studio-like spaces set up for just such recording and translation.[52]
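To give a sense of what turning a gesture into code means at its very simplest, here is a sketch that classifies a tracked stroke as a directional swipe. It assumes some sensor (a touchscreen, or a camera tracking a fingertip) already supplies the stroke as a list of (x, y) positions in pixels, with y increasing downward; the 50-pixel minimum is an arbitrary assumption. Recognizers for free-form or full-body gestures are far more involved, which is exactly the gap described above.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def classify_swipe(points: Sequence[Point], min_distance: float = 50.0) -> Optional[str]:
    """Return 'left', 'right', 'up', or 'down' for a stroke, or None if it is too short.

    Only the start and end of the stroke are used; the path in between is ignored.
    """
    if len(points) < 2:
        return None
    (x0, y0), (x1, y1) = points[0], points[-1]
    dx, dy = x1 - x0, y1 - y0
    if math.hypot(dx, dy) < min_distance:
        return None  # probably a tap or sensor jitter, not a swipe
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"  # screen y grows downward


print(classify_swipe([(0, 0), (40, 5), (120, 10)]))  # right
print(classify_swipe([(100, 200), (98, 120)]))       # up
print(classify_swipe([(0, 0), (10, 10)]))            # None
```

Ignoring the path between the endpoints keeps the sketch short, but it also means a curved or zigzag stroke is treated like a straight one; distinguishing those is where real gesture recognizers earn their complexity.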

In the mostly awful movie Lost in Space (1998), there is a great scene where Will Robinson (as in "Danger, Will Robinson!") takes control of the family robot by "putting on" a holographic suit to control the robot manually with gestures that the robot then mimics. We're closer to this future than we think.

Although our machines are getting more powerful, unfortunately we humans are not. We remain trapped in bodies that, though capable of many things, are physically limited in many ways. Enter cobots. Invented by professors Edward Colgate and Michael Peshkin, cobots (the name is a mashup of "collaborative robot") are robots for direct physical interaction with humans in a shared workspace. They are part of a broader class of robot-like devices known as Intelligent Assist Devices (IADs).

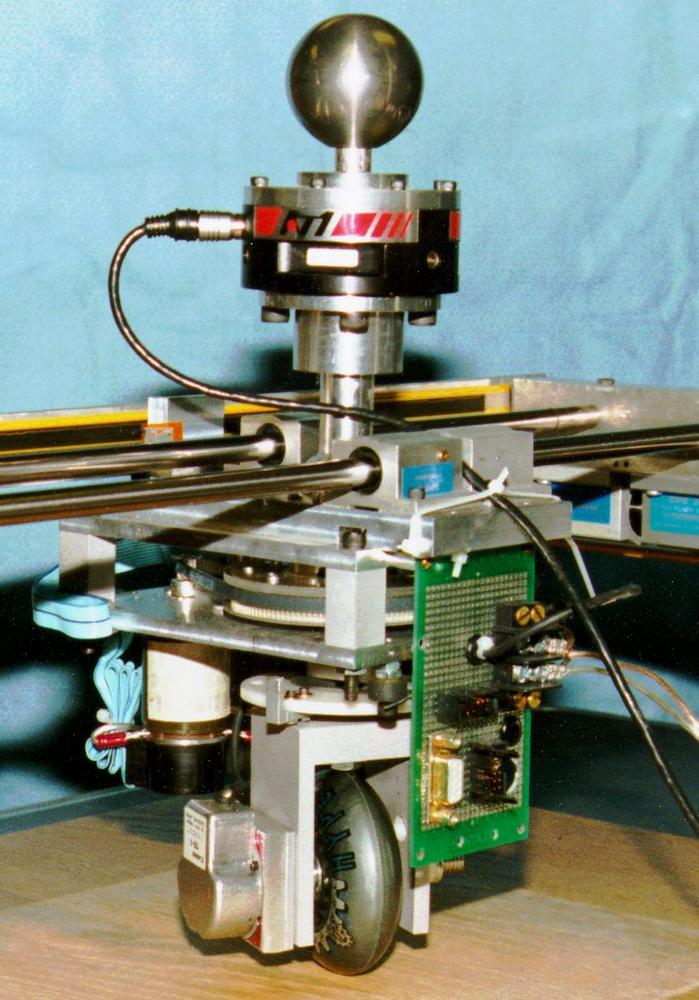

Figure 8-13. This "unicycle" cobot, consisting of a single wheel steered by a motor, demonstrates two essential control modes: "free" mode in which users can steer the wheel wherever they want, and "virtual surface" mode in which the cobot confines the user's motion to a software-defined guiding surface. Courtesy Northwestern McCormick School of Mechanical Engineering.

Their use in particular environments (which could be equipped with sensors), together with their suitability for physical tasks that involve movement, makes them perfect candidates for extension by interactive gestures. Cobots augment human physicality, making us stronger, more accurate, and faster. Humans can do what they do best (thinking, problem solving, and skilled movement), while machines do what they do best: supplying physical power and speed, and going into environments that would otherwise be unsafe for people.

Imagine, for instance, a mechanic being able to lift and tilt a car with a gesture. Or a surgeon being able to make incredibly precise incisions and control tiny surgical cobots by touch alone.[53] Or musicians being able to play multiple instruments at once.

Even regular old robots are getting into interactive gestures. Aaron Powers, an interaction designer at iRobot, hinted that even the humble Roomba floor cleaner might soon respond to gestures from its owner.[54] Other robots have been built that respond to clapping.

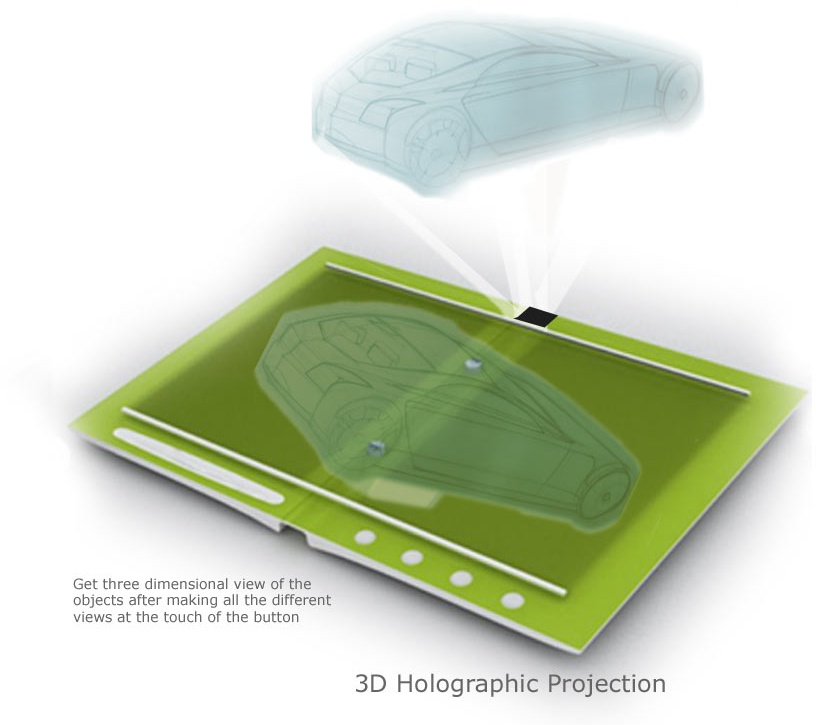

In the movie Iron Man (2008), Tony Stark (played by Robert Downey, Jr.) manipulates, in midair, a holographic piece of the Iron Man armor he's designing. This sort of real-time manipulation of physical products (CAD coupled with 3D hologram imaging) would be a killer app for engineers, architects, and industrial designers alike, not to mention artists. It is difficult (and some would argue impossible) to design 3D objects well on a 2D medium such as a computer screen, or even paper, for that matter. The ability to prototype and manipulate objects in 3D space using interactive gestures would create an entirely new tool set for the creation of objects.

Figure 8-14. This concept of a future touchscreen PC by Harsha Kutare and Somnath Chakravorti shows a possibility for using 3D projections as a design tool. Courtesy Microsoft and IDSA.

Additionally, the ability to collaborate on tables and walls digitally but with the ease of analog methods such as whiteboards and paper—unencumbered by keyboards, mice, and the like—will be a tremendous boon to designers, and really to anyone. One only has to sit in a meeting filled with laptops whose screens become de facto barriers to see that other technology solutions would make the situation more collaborative and productive.

Imagine this scenario: you walk into a darkened room. You know there is a light fixture there because you can see it, but there is no wall switch. An icon on the doorframe indicates that the room uses a gestural interface, but it gives no clue what the required gesture is. You wave your arms frantically to trigger the sensor you know must be there: nothing. Hop up and down: nothing. Clap your hands: nothing. Annoyed, you wonder who designed this room. Is it a Microsoft Rume, an Apple iRoom, or a Sony eVironment? For the first, you have to wave a hand; for the second, point to the light; for the third, pretend to flip a light switch. Which one is it?

As this scenario, and countless others we can imagine, demonstrate, we will probably need some standards for interactive gestures.

We have an opportunity now, as the Doug Engelbarts, Larry Teslers, and Bill Verplanks of the 1960s and 1970s did, to define the interaction paradigms for the next several decades (at least) in the form of gestural and touch interactions. We need to figure out which common gestures could work across a variety of devices and environments. It's time to step up and start defining and documenting a common set of movements and motions that could be used to initiate actions across a variety of platforms. We need to help create this shift in input devices, not just follow along behind the technology. If we wait, well, we'll simply find individual companies (Apple, Microsoft, Perceptive Pixel, etc.) creating their own standards, as they are doing now. Although this isn't necessarily a bad thing, one can easily imagine, as in the scenario above, having to remember a crazy number of movements and gestures for common actions. We'll get a lot of ad hoc solutions, some of which will be great and some not so much. And although there will always be custom solutions for particular problems and environments, standards would help.

But we're not there yet. We are still building the foundations of our gestural language for products. Eventually we'll need some sort of standards board, similar to the W3C for the Web, or an advocacy group such as the Web Standards Project. Ideally, such a body would create a set of standards to be used across platforms and environments; at a minimum, we will need a set of guidelines for designers, developers, and manufacturers to use and abide by. Hopefully this book, along with growing awareness of interactive gestures, will prompt organizations such as the Interaction Design Association (IxDA) and the Industrial Designers Society of America (IDSA) to help launch and host this initiative.

Of course, one thing standing in the way of standardization is patents. To get a patent in the United States, an invention must be deemed statutory subject matter (not a natural phenomenon, an abstract idea, or mere data), useful, novel, and nonobvious.[55] (Nonobviousness is the hardest to prove.) Gestures, being naturally occurring events, fail the first requirement and probably the nonobviousness requirement as well. You most likely cannot patent a gesture.

Note

Many companies are extremely wary of having anyone on the design team who has anything to do with patents, because knowing about a patent and then copying it, intentionally or not, can lead to additional damages should the company be sued for patent infringement. To be entirely safe, and to have plausible deniability in case patent infringement is claimed, designers should refrain from knowing the contents of any patent or patent application. In fact, if you are concerned, it would be best to stop reading this section right now.

What remains to be seen, however, is whether a gesture tied to a specific action (that is, an interactive gesture) can be patented. So, although companies cannot patent a pinch, they may be able, as Apple is trying to do, to patent "Pinch to Shrink on a Touchscreen Mobile Device." (As of this writing, Apple has filed more than 200 patent claims for gestures on the iPhone alone.) Should Apple's patent be granted, anyone using the Pinch to Shrink pattern (see Chapter 3) on a mobile device would be infringing on it and would either have to license (i.e., pay for) its use on his device or stop using the gesture altogether.

This would be an unfortunate scenario for...well, anyone other than Apple. As the Wired article "Can Apple Patent the Pinch? Experts Say It's Possible" puts it:[56]

"If Apple's patent applications are successful, other manufacturers may have no choice but to implement multitouch gestures of their own. The upshot: You might pinch to zoom on your phone, swirl your finger around to zoom on your notebook, and triple-tap to zoom on the web-browsing remote control in your home theater.

"That's an outcome many in the industry would like to avoid. Synaptics, a company that by most estimates supplies 65 to 70 percent of the notebook industry with its touchpad technology, is working on its own set of universal touch gestures that it hopes will become a standard. These gestures include scrolling by making a circular motion, moving pictures or documents with a flip of the finger, and zooming in or out by making, yes, a pinching gesture.

"'My guess would be that 80 to 90 percent of consumer notebooks will have these new multigestures by the end of the year [2008],' says Mark Vena, vice president of Synaptics' PC business unit."

It would be as though each of our desktop software applications had its own key commands, and even its own basic actions such as double-clicking. Imagine learning new commands for actions such as cut-and-paste for every program. It would be nightmarish.

Of course, patent holders do not have to enforce their patents. For instance, since 2004, Microsoft has owned the patent for double-clicking on "limited resource computing devices," but we haven't seen a wave of patent infringement lawsuits that would pretty much shut down the PDA and mobile phone industries. Companies also swap and license patents from each other all the time, so a patent doesn't necessarily mean stopping implementation of an interactive gesture.

And these patent applications may simply fail. As noted in Chapter 1, gestural interfaces and many of their interaction paradigms have been around for decades (yes, including Pinch to Shrink, done on Pierre Wellner's Digital Desk in the early 1990s), and thus may fail the novelty requirement for patents. It remains to be seen.

However, by restricting use, patents also restrict standardization. If even basic commands are patented and unavailable, having a common set of gestures that work the same way across a multitude of products becomes much more difficult. If a standards body is established, it will likely have to negotiate with holders and seekers of gestural patents.

Because gestures are often visible to others, many gestural systems are, by their nature, social systems. People operating gestural systems can be observed in ways that people using a standard computer or mobile device typically are not. This raises some ethical principles regarding privacy and shame that designers should abide by.

Gestures are public in a way that most traditional input is not, so care needs to be taken when it comes to privacy. Touchscreen buttons, which must be large to make decent touch targets, can reveal information that users may not want revealed in a public space: PINs, names, credit card information, addresses, and so forth. If users have to enter this sort of information (at a kiosk, say, or an ATM), designers need to shape the hardware or the physical environment, or at least carefully consider where such products are placed, to ensure users' privacy.

Figure 8-17. This photo was taken covertly during a visit to a large industrial factory. The left sticker says "Touch Screen: NO!!! BEWARE: Don't touch the screen... except me! I am the operator," and the right one says "Don't touch my screen." Stickers rarely declare ownership of keyboards; privacy is different on touchscreens. Courtesy Nicholas Nova.

Also because of the social nature of gestures, designers need to create products that do not shame their users. Gestures, even simple ones, can be difficult to perform, especially for the young, the elderly, and the mobility-impaired. Wherever possible, products should not unnecessarily embarrass, humiliate, or shame their users.[57] The limits and frailty of the human body should never be discounted; we should always respect our users' dignity and not make them perform gestures that are overly difficult, embarrassing, or inappropriate.

"The best way to predict the future is to invent it," Alan Kay, whose Dynabook concept anticipated the laptop, famously said, and the future of interactive gestures is waiting to be invented. We're only at the beginning of a revolution that will ultimately change how we interact with our digital products, which is to say, how we live, work, and play. As more of our world becomes a hybrid of the digital and the physical, we will use our bodies more and more to collaborate with it. No longer do we need to be confined to small screens, keyboards, mice, and the like. Instead, we'll be better able to match the task at hand to the motions required to perform it, and to do so somewhere other than sitting at a desk.

By doing so, we can hope not only that our products will "dissolve into behavior," but also that they will be filled with delight and meaning, and that we'll be able to use our digital tools with the nuance, sophistication, and emotional palette of, say, playing the cello. That is our challenge. Let us rise to it.

When Things Start to Think, Neil Gershenfeld (Holt Paperbacks)

Everyware: The Dawning Age of Ubiquitous Computing, Adam Greenfield (New Riders Publishing)

The Design of Future Things, Don Norman (Basic Books)

"How Bodies Matter: Five Themes for Interaction Design," by Scott Klemmer, Björn Hartmann, and Leila Takayama; proceedings from Designing Interactive Systems 2006

Shaping Things, Bruce Sterling (MIT Press)

[43] "A Touching Story at CES," by Catherine Holahan, BusinessWeek, January 10, 2008. Read it online at http://www.businessweek.com/technology/content/jan2008/tc2008019_637162.htm.

[44] See http://en.wikipedia.org/wiki/The_Third_Place for a thorough definition of third places.

[45] Of course, this isn't always true. Some gestures and physical activities are best left alone. How can holding the hand of a loved one be improved by making hand-holding have some additional digital enhancement, for instance? Sure, it may be possible to add other actions to that activity, but would they be an annoyance or an enhancement?

[46] The Touch Project at the Oslo School of Architecture and Design explores the intersection of NFC and gestures: http://www.nearfield.org/.

[47] See Christof Roduner's paper, "The Mobile Phone as a Universal Interaction Device—Are There Limits?" at http://www.vs.inf.ethz.ch/publ/papers/rodunerc-MIRW06.pdf.

[48] Viewable online at http://www.youtube.com/watch?v=nM_q8oAPAKE.

[49] See, for instance, Matt Jones's "Lost futures: Unconscious gestures?" at http://www.blackbeltjones.com/work/2007/11/15/lost-futures-unconscious-gestures/.

[50] See Stanford HCI Group's d.tools at http://hci.stanford.edu/dtools/.

[51] See, for instance, The Context Project at http://www.cs.cmu.edu/~anind/context.html.

[52] See the Smart Laser Scanner for Human-Computer Interface at http://www.k2.t.u-tokyo.ac.jp/fusion/LaserActiveTracking/index-e.html.

[53] See, for instance, "Restoring the Human Touch to Remote-Controlled Surgery," by Anne Eisenberg, in the New York Times, May 30, 2002. Found online at http://biorobotics.harvard.edu/news/timesarticle.htm.

[54] In "What Robotics Can Learn from HCI," ACM interactions magazine, XV.2, March/April 2008.

[55] See "Patent Requirements" on Bitlaw.com for more details at http://www.bitlaw.com/patent/requirements.html.

[56] "Can Apple Patent the Pinch? Experts Say It's Possible," by Bryan Gardiner, Wired, February 2, 2008. Read it online at http://www.wired.com/gadgets/miscellaneous/news/2008/02/multitouch_patents.

[57] See "All watched over by machines of loving grace: Some ethical guidelines for user experience in ubiquitous-computing settings," by Adam Greenfield, at Boxes and Arrows (http://www.boxesandarrows.com/view/all_watched_over_by_machines_of_loving_grace_some_ethical_guidelines_for_user_experience_in_ubiquitous_computing_settings_1_).