"One of the things our grandchildren will find quaintest about us is that we distinguish the digital from the real."

A man wearing special gloves stands in front of a large, translucent screen. He waves his hand in front of it, and objects on the screen move. It's as though he's conducting an orchestra or is some sort of high-tech sorcerer's apprentice, making objects fly about with just a sweep of his arm. He makes another gesture, and a video begins to play. With both hands, he stretches the video to a larger size, filling more of the screen. It's like magic.

Another place, another time: a different man stands in front of an audience. He's running his fingers over a table-size touchscreen before him as though he is a keyboard player in a rock band, his fingers rapidly manipulating images on the screen by dragging them around. He's making lines appear on-screen with his fingers and turning them into silky, ink-like paintings. He's playing, really—showing off. He drags his fingers across the surface and leaves a trail of bubbles. It's also like magic.

The first man doesn't really exist, although you'd probably recognize the actor playing him: Tom Cruise. The scene is from the movie Minority Report (2002), and it gave the general public its first look at a computer that responds to gestures instead of to speech, a keyboard, or a mouse. It was an impressive feat of visual effects, and it made a huge impression on people everywhere, especially interaction designers, some of whom had been working on or thinking about similar systems for years.

The second man does exist, and his name is Jeff Han. Not only did his jumbo touchscreen devices influence Minority Report, but his live demonstrations—first privately and then publicly at the 2006 TED conference[1]—will likely go down in computer history near the "Mother of All Demos" presentation that Doug Engelbart made in 1968, in which he showed now-familiar idioms such as email, hypertext, and the mouse. Han's demos sparked thousands of conversations, blog posts, emails, and commentary.

Figure 1-1. Jeff Han demos a multitouch touchscreen at the 2006 TED conference. Since then, Han has created Perceptive Pixel, a company that produces these devices for high-end clients. Courtesy TED Conferences, LLC.

Since then, consumer electronics manufacturers such as Nintendo, Apple, Nokia, Sony Ericsson, LG, and Microsoft have all released products that are controlled using interactive gestures. Within the next several years, it's not an exaggeration to say that hundreds of millions of devices will have gestural interfaces. A gesture, for the purposes of this book, is any physical movement that a digital system can sense and respond to without the aid of a traditional pointing device such as a mouse or stylus. A wave, a head nod, a touch, a toe tap, and even a raised eyebrow can be a gesture.

In addition to touchscreen kiosks that populate our airports and execute our banking as ATMs, the most famous of the recent products that use gestures are Nintendo's Wii and Apple's iPhone and iPod Touch. The Wii has a set of wireless controllers that users hold to play its games. Players make movements in space that are then reflected in some way on-screen. The iPhone and iPod Touch are devices that users control via touching the screen, manipulating digital objects with a tap of a fingertip.

Figure 1-2. Rather than focusing on the technical specs of the gaming console like their competitors, Nintendo designers and engineers focused on the controllers and the gaming experience, creating the Wii, a compelling system that uses gestures to control on-screen avatars. Courtesy Nintendo.

We've entered a new era of interaction design. For the past 40 years, we have been using the same human-computer interaction paradigms that were designed by the likes of Doug Engelbart, Alan Kay, Tim Mott, Larry Tesler, and others at Xerox PARC in the 1960s and 1970s. Cut and paste. Save. Windows. The desktop metaphor. And so many others that we now don't even think about when working on our digital devices. These interaction conventions will continue, of course, but they will also be supplemented by many others that take advantage of the whole human body, of sensors, of new input devices, and of increased processing power.

We've entered the era of interactive gestures.

The next several years will be seminal years for interaction designers and engineers who will create the next generation of interaction design inputs, possibly defining them for decades to come. We will design new ways of interacting with our devices, environment, and even each other. We have an opportunity that comes along only once in a generation, and we should seize it. How we can create this new era of interactive gestures is what this book is about.

Currently, most gestural interfaces can be categorized as either touchscreen or free-form. Touchscreen gestural interfaces—or, as some call them, touch user interfaces (TUIs)—require the user to be touching the device directly. This puts a constraint on the types of gestures that can be used to control it. Free-form gestural interfaces don't require the user to touch or handle them directly. Sometimes a controller or glove is used as an input device, but even more often (and increasingly so) the body is the only input device for free-form gestural interfaces.

Our relationship to our digital technology is only going to get more complicated as time goes on. Users, especially sophisticated users, are slowly being trained to expect that devices and appliances will have touchscreens and/or will be manipulated by gestures. But it's not just early adopters: even the general public is being exposed to more and more touchscreens via airport and retail kiosks and voting machines, and these users are discovering how easy and enjoyable they are to use.

The ease of use one experiences with a well-designed touchscreen comes from what University of Maryland professor Ben Shneiderman termed direct manipulation in a seminal 1983 paper.[2] Direct manipulation is the ability to manipulate digital objects on a screen without the use of command-line commands: for example, dragging a file to a trash can on your desktop instead of typing del into a command line. As it was 1983, Shneiderman was mostly talking about mice, joysticks, and other input devices, as well as then-new innovations such as the desktop metaphor.

Touchscreens and gestural interfaces take direct manipulation to another level. Now, users can simply touch the item they want to manipulate right on the screen itself, moving it, making it bigger, scrolling it, and so on. This is the ultimate in direct manipulation: using the body to control the digital (and sometimes even the physical) space around us. Of course, as we'll discuss in Chapter 4, there are indirect manipulations with gestural interfaces as well. One simple example is The Clapper.

Figure 1-3. The Clapper turns ordinary rooms into interactive environments. Occupants use indirect manipulation in the form of a clap to control analog objects in the room. Courtesy Joseph Enterprises.

The Clapper was one of the first consumer devices sold with an auditory sensor.[3] It plugs into an electrical socket, and then other electronics are plugged into it. You clap your hands to turn the electrical flow off (or on), effectively turning off (or on) whatever is plugged into The Clapper. It allows users indirect control over their physical environment via an interactive gesture: a clap.

This use of the whole body, however, can be seen as the more natural state of user interfaces. (Indeed, some call interactive gestures natural user interfaces [NUIs].) One could argue, in fact, that the current "traditional" computing arrangement of keyboard, mouse, and monitor goes against thousands of years of biology. As a 1993 Wired article on Stanford professor David Liddle notes:[4]

"We're using bodies evolved for hunting, gathering, and gratuitous violence for information-age tasks like word processing and spreadsheet tweaking. And gratuitous violence.

"Humans are born with a tool kit at least 15,000 years old. So, Liddle asks, if the tool kit was designed for foraging and mammoth trapping, why not try to make the tasks we do with our machines today look like the tasks the body was designed for? 'The most nearly muscular mentality that we use (in computation) is pointing with a mouse,' Liddle says. 'We use such a tiny part of our repertoire of sound and motion and vision in any interaction with an electronic system. In retrospect, that seems strange and not very obvious why it should be that way.'

"Human beings possess a wide variety of physical skills—we can catch baseballs, dodge projectiles, climb trees—which all have a sort of 'underlying computational power' about them. But we rarely take advantage of these abilities because they have little evolutionary value now that we're firmly ensconced as the food chain's top seed."

Fifteen years after Liddle noted this, it's finally changing.

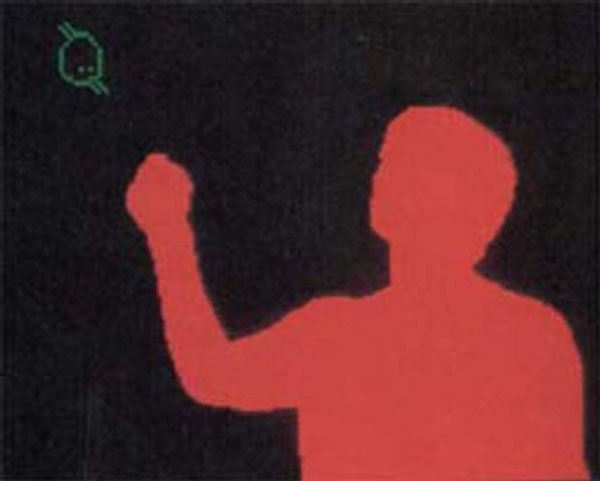

Figure 1-4. How the computer sees us. With traditional interfaces, humans are reduced to an eye and a finger. Gestural interfaces allow for fuller use of the human body to trigger system responses. Courtesy Dan O'Sullivan and Tom Igoe.

As The Clapper illustrates, gestural interfaces are really nothing new. In one sense, everything we do with digital devices requires some sort of physical action to create a digital response. You press a key, and a letter or number appears on-screen. You move a mouse, and a pointer scurries across the screen.

What is different, though, between gestural interfaces and traditional interfaces is simply this: gestural interfaces have a much wider range of actions with which to manipulate a system. In addition to being able to type, scroll, point and click, and perform all the other standard interactions available to desktop systems,[5] gestural interfaces can take advantage of the whole body for triggering system behaviors. The flick of a finger can start a scroll. The twist of a hand can transform an image. The sweep of an arm can clear a screen. A person entering a room can change the temperature.

Figure 1-5. If you don't need a keyboard, mouse, or screen, you don't need much of an interface either. You activate this faucet by putting your hands beneath it. Of course, this can lead to confusion. If there are no visible controls, how do you know how to even turn the faucet on? Courtesy Sloan Valve Company.

Removing the constraints of the keyboard-controller-screen setup of most mobile devices and desktop/laptop computers allows devices employing interactive gestures to take many forms. Indeed, the form of a "device" can be a physical object that is usually analog/mechanical. Most touchscreens are like this, appearing as normal screens or even, in the case of the iPhone and iPod Touch, as slabs of black glass. And the "interface"? Sometimes all but invisible. Take, for instance, the motion-activated sinks now found in many public restrooms. The interface for them is typically a small sensor hidden below the faucet that, when detecting movement in the sink (e.g., someone putting her hands into the sink), triggers the system to turn the water on (or off).

Computer scientists and human-computer interaction advocates have been talking about this kind of "embodied interaction" for at least the past two decades. Paul Dourish in his book Where the Action Is captured the vision well:

"By embodiment, I don't mean simply physical reality, but rather, the way that physical and social phenomena unfold in real time and real space as a part of the world in which we are situated, right alongside and around us...Interacting in the world, participating in it and acting through it, in the absorbed and unreflective manner of normal experience."

As sensors and microprocessors have become faster, smaller, and cheaper, reality has started to catch up with the vision, although we still have quite a way to go.

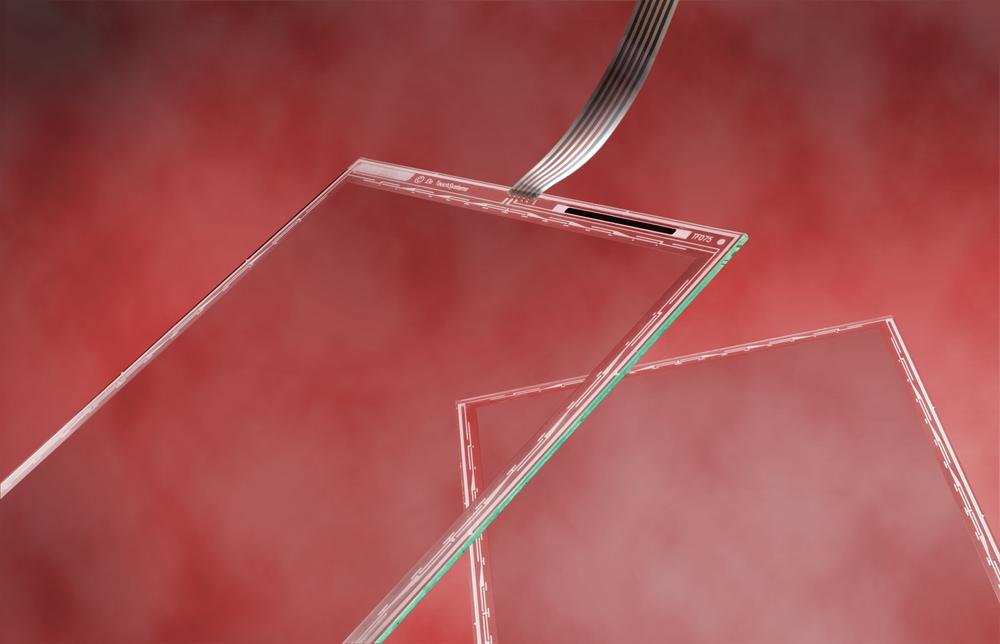

Of course, it hasn't happened all at once. Samuel C. Hurst created the first touch device in 1971, dubbed the Elograph.[6] By 1974, Hurst and his new company, Elographics, had developed five-wire resistive technology, which is still one of the most popular touchscreen technologies used today. In 1977, Elographics, backed by Siemens, created Accutouch, the first true touchscreen device. Accutouch was basically a curved glass sensor that became increasingly refined over the next decade.

Myron Krueger created in the late 1970s what could rightly be called the first indirect manipulation interactive gesture system, dubbed VIDEOPLACE. VIDEOPLACE (which could be a wall or a desk) was a system of projectors, video cameras, and other hardware that enabled users to interact using a rich set of gestures without the use of special gloves, mice, or styli.

Figure 1-7. In VIDEOPLACE, users in separate rooms were able to interact with one another and with digital objects. Video cameras recorded users' movements, then analyzed and transferred them to silhouette representations projected on a wall or screen. The sense of presence was such that users actually jumped back when their silhouette touched that of other users. Courtesy Matthias Weiss.

In 1982, Nimish Mehta at the University of Toronto developed what could be the first multitouch system, the Flexible Machine Interface, for his master's thesis.[7] Multitouch systems allow users more than one contact point at a time, so you can use two hands to manipulate objects on-screen or touch two or more places on-screen simultaneously. The Flexible Machine Interface combined finger pressure with simple image processing to create some very basic picture drawing and other graphical manipulation.
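To make the idea of multiple contact points concrete, here is a minimal Python sketch of how a system might turn two tracked touch points into a scale factor, the arithmetic behind stretching or shrinking an on-screen object with two fingers. The function name `pinch_scale` and the pixel coordinates are illustrative assumptions; this is not how the Flexible Machine Interface or any other system named in this chapter was implemented.

```python
import math


def pinch_scale(start_a, start_b, end_a, end_b):
    """Return the scale factor implied by two contact points moving.

    Each argument is an (x, y) position in screen pixels. A result above
    1.0 means the fingers spread apart (enlarge); below 1.0 means they
    moved together (shrink).
    """
    start_distance = math.dist(start_a, start_b)
    end_distance = math.dist(end_a, end_b)
    if start_distance == 0:
        raise ValueError("the two contact points must start apart")
    return end_distance / start_distance


# Fingers start 100 px apart and end 200 px apart.
print(pinch_scale((100, 100), (200, 100), (50, 100), (250, 100)))  # 2.0

# Fingers start 100 px apart and end 50 px apart.
print(pinch_scale((0, 0), (0, 100), (0, 25), (0, 75)))  # 0.5
```

A single-touch system has only one position to work with, so it cannot compute this kind of ratio; that is the practical difference a second contact point makes.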

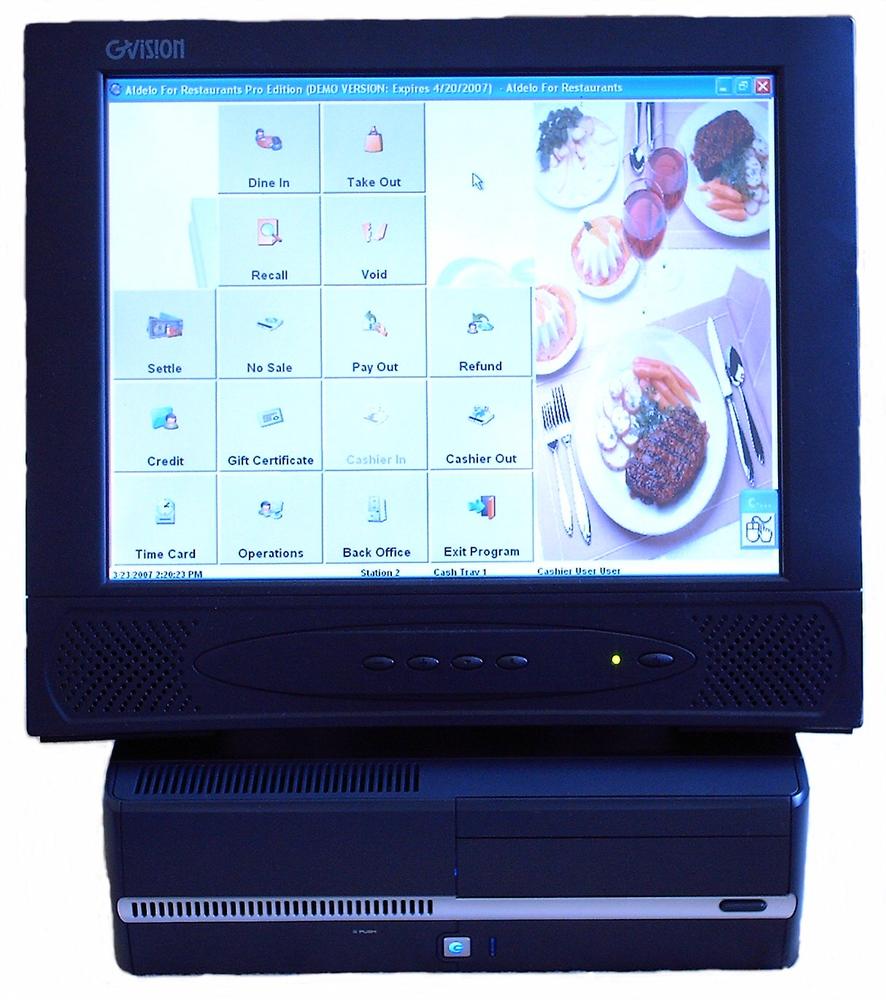

Outside academia, the 1980s found touchscreens making their way to the public first (as most new technology does) in commercial and industrial use, particularly in point-of-sale (POS) devices in restaurants, bars, and retail environments. Currently, touchscreen POS devices have penetrated more than 90% of food and beverage establishments in the United States.[8]

Figure 1-8. A POS touchscreen. According to the National Restaurant Association, touchscreen POS systems pay for themselves in savings to the establishment. Courtesy GVISION USA, Inc.

The Hewlett-Packard 150 was probably the first computer sold for personal use that incorporated touch. Users could touch the screen to position the cursor or select on-screen buttons, but the touch targets (see later in this chapter) were fairly primitive, allowing for only approximate positioning.

Figure 1-9. Released in 1983, the HP 150 didn't have a traditional touchscreen, but a monitor surrounded by a series of vertical and horizontal infrared light beams that crossed just in front of the screen, creating a grid. If a user's finger touched the screen and broke one of the lines, the system would position the cursor at (or more likely near) the desired location, or else activate a soft function key. Courtesy Hewlett-Packard.

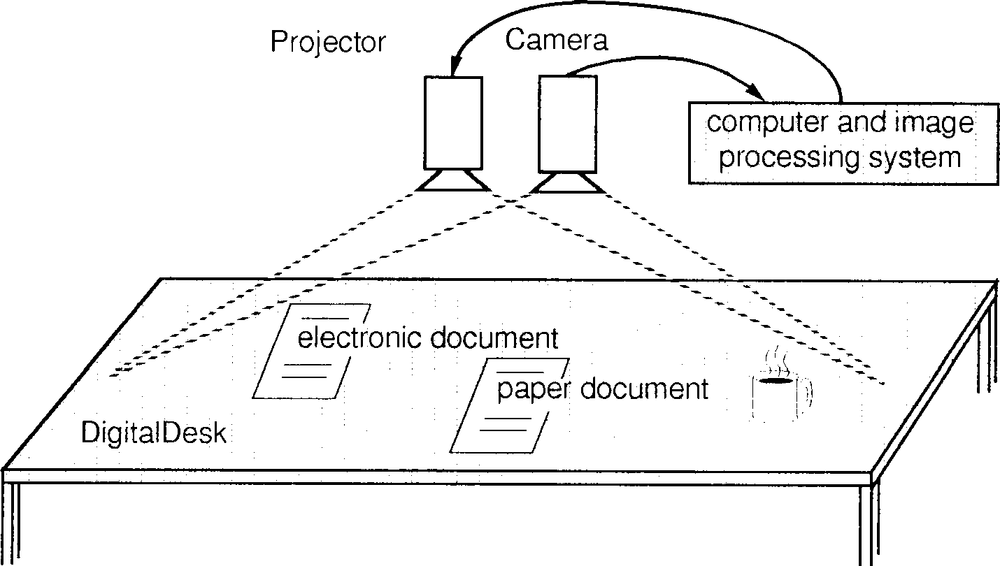

At Rank EuroPARC, Pierre Wellner designed the Digital Desk in the early 1990s.[9] The Digital Desk used video cameras and a projector to project a digital surface onto a physical desk, which users could then manipulate with their hands.[10] Notably, the Digital Desk was the first to use some of the emerging patterns of interactive gestures such as Pinch to Shrink (see Chapter 3).

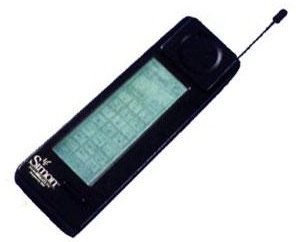

More than a decade before Apple released the iPhone (and other handset manufacturers such as LG, Sony Ericsson, and Nokia released similar touchscreen phones as well), IBM and BellSouth launched Simon, a touchscreen mobile phone. It was ahead of its time and never caught on, but it demonstrated that a mobile touchscreen could be manufactured and sold.

Figure 1-11. Simon, released in 1994, was the first mobile touchscreen device. It suffered from some serious design flaws, such as not being able to show more than a few keyboard keys simultaneously, but it was a decade ahead of its time. Courtesy IBM.

In the late 1990s and the early 2000s, touchscreens began to make their way into wide public use via retail kiosks, public information displays, airport check-in services, transportation ticketing systems, and new ATMs.

Figure 1-12. Antenna Design's award-winning self-service check-in kiosk for JetBlue Airlines. Courtesy JetBlue and Antenna Design.

Lionhead Studios released what is likely the first home gaming gestural interface system in 2001 with its game, Black & White. A player controlled the game via a special glove that, as the player gestured physically, would be mimicked by a digital hand on-screen. In arcades in 2001, Konami's MoCap Boxing game had players put on boxing gloves and stand in a special area monitored with infrared motion detectors, then "box" opponents by making movements that actual boxers would make.

Figure 1-13. The Essential Reality P5 Glove is likely the first commercial controller for gestural interfaces, for use with the game Black & White. Courtesy Lionhead Studios.

The mid-2000s have simply seen the arrival of gestural interfaces for the mass market. In 2006, Nintendo released its Wii gaming system. In 2007, to much acclaim, Apple launched its iPhone and iPod Touch, which were the first touchscreen devices to receive widespread media attention and television advertising demonstrating their touchscreen capabilities. In 2008, handset manufacturers such as LG, Sony Ericsson, and Nokia released their own touchscreen mobile devices. Also in 2008, Microsoft launched MS Surface, a large, table-like touchscreen that is used in commercial spaces for gaming and retail display. And Jeff Han now manufactures his giant touchscreens for government agencies and large media companies such as CNN.

The future (see Chapter 8) should be interesting.

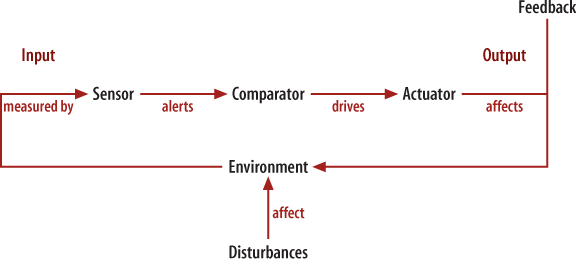

Even though forms of gestural devices can vary wildly—from massive touchscreens to invisible overlays onto environments—every device or environment that employs gestures to control it has at least three general parts: a sensor, a comparator, and an actuator. These three parts can be a single physical component, or, more typically, multiple components of any gestural system, such as a motion detector (a sensor), a computer (the comparator), and a motor (the actuator).

A sensor is typically an electrical or electronic component whose job is to detect changes in the environment. These changes can be any number of things, depending on the type of sensor, of which there are many.[11] The most common types currently used for interactive gestures are:

- Pressure

To detect whether something is being pressed or stood on. This is often mechanical in nature.

- Light

To detect the presence of light sources (also called a photodetector). This is used mostly in environments, especially in lighting systems.

- Proximity

To detect the presence of an object in space. This can be done in any number of ways, from infrared sensors to motion and acoustic sensors.

- Acoustic

To detect the presence of sound. Typically, this is done with small microphones.

- Tilt

To detect angle, slope, and elevation. Tilt sensors generate an artificial horizon and then measure the incline with respect to that horizon.

- Motion

To detect movement and speed. Some common sensors use microwave or ultrasonic pulses that measure when a pulse bounces off a moving object (which is how radar guns catch you speeding).

- Orientation

To detect position and direction. These are often used in navigation systems currently, but position within environments could become increasingly important and would need to be captured by cameras, triangulating proximity sensors, or even GPSes in the case of large-scale use.

It's no exaggeration to state that the type of sensor you employ entirely determines the types of gestural interactions that are possible. If the system can't detect what a user is doing, those gestures might as well not be happening. I can wave at my laptop as much as I want, but if it doesn't have any way to detect my motion, I simply look like an idiot.

It is crucially important to calibrate the sensitivity of the sensor (or the moderation of the comparator). A sensor that is too sensitive will trigger too often and, perhaps, too rapidly for humans to react to. A sensor that is too dull will not respond quickly enough, and the system will seem sluggish or nonresponsive.
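One way to picture this calibration is as two tunable numbers: how strong a reading must be before it counts, and how long the system waits before it will react again. The Python sketch below models a clap-operated switch in that spirit. The class name `ClapSwitch`, the 0-to-1 loudness scale, and the default values are assumptions chosen for illustration, not the design of The Clapper or any real product.

```python
class ClapSwitch:
    """Toggle a switch on loud sounds, ignoring quiet ones and rapid repeats."""

    def __init__(self, threshold=0.6, quiet_period=0.5):
        self.threshold = threshold        # minimum loudness (0 to 1) that counts
        self.quiet_period = quiet_period  # seconds to ignore input after a toggle
        self.is_on = False
        self._last_toggle = None

    def hear(self, timestamp, loudness):
        """Process one reading; return True if the switch toggled."""
        if loudness < self.threshold:
            return False  # too quiet: a dull setting filters this out
        recently_toggled = (
            self._last_toggle is not None
            and timestamp - self._last_toggle < self.quiet_period
        )
        if recently_toggled:
            return False  # an echo or double trigger, not a new clap
        self.is_on = not self.is_on
        self._last_toggle = timestamp
        return True


switch = ClapSwitch()
readings = [(0.0, 0.2), (1.0, 0.9), (1.1, 0.8), (3.0, 0.95)]
for timestamp, loudness in readings:
    switch.hear(timestamp, loudness)
    print(timestamp, switch.is_on)
```

Lowering `threshold` or `quiet_period` makes the switch more sensitive, which risks flickering on every slight sound. Raising them makes it duller, which risks ignoring a genuine clap.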

The size (for touchscreens) or coverage area of the sensor is also very important, as it determines what kinds of gestures (broad or small, one or two hands, etc.) are appropriate or even possible.

Note

The larger the sensor coverage area is, the broader the gesture possible.

Often in more complex systems, multiple sensors will work together to allow for more nuanced movement and complicated gesture combinations. (To have 3D gestures, multiple sensors are a necessity to get the correct depth.) Many Apple products (including Apple laptops) have accelerometers to detect speed and motion built into them, as do Wii controllers. But accelerometers are tuned to themselves, not to the environment, so they alone can't determine the user's position in the room or the direction the user is facing, only whether the device is moving and the direction and speed at which it is moving. For orientation within an environment or sophisticated detection of angles, other sensors need to be deployed. The Wii, for instance, deploys both accelerometers and gyroscopes within its controllers for tilt and motion detection, and an infrared sensor that communicates to the "sensor bar" to indicate orientation[12] for a much wider range of possible gestures.

Once a sensor detects its target, it passes the information on to what is known in systems theory as a comparator. The comparator compares the current state to the previous state or the goal of the system and then makes a judgment. For many gestural interfaces, the comparator is a microprocessor running software, which decides what to do about the data coming into the system via the sensor. Certainly, there are all-mechanical systems that work with gestures, but they tend toward cruder on/off scenarios, such as lights that come on when someone walks past them. Only with a microprocessor can you design a system with much nuance, one that can make more sophisticated decisions.

Those decisions get passed on to an actuator in the form of a command. Actuators can be analog or mechanical, similar to the way the machinery of The Clapper turns lights on; or they can be digital, similar to the way tilting the iPhone changes its screen orientation from portrait to landscape. With mechanical systems, the actuator is frequently a small electric motor that powers a physical object, such as a motor to open an automatic door. As with the comparator's decision making, for digital systems, it is software that drives the actuator. It is software that determines what happens when a user touches the screen or extends an arm.
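The three parts can be sketched in a few lines of Python, using the portrait-to-landscape example above. Everything here is a simplified assumption made for illustration: the axis convention (gravity along `ay` when the device is upright), the 45-degree switching point, the 15-degree margin, and the names `read_tilt_degrees`, `choose_orientation`, and `Screen`. It is not how the iPhone or any other real device does it.

```python
import math


def read_tilt_degrees(ax, ay):
    """Sensor: turn accelerometer gravity components into a tilt angle.

    Assumed convention: (ax, ay) = (0, 1) is upright, (1, 0) is on its side.
    """
    return math.degrees(math.atan2(ax, ay))


def choose_orientation(angle, current, margin=15.0):
    """Comparator: compare the new reading to the current state and decide."""
    tilt = abs(angle)
    if tilt > 90:
        tilt = 180 - tilt  # treat upside-down the same as upright
    # The margin keeps the decision from flapping near 45 degrees.
    if current == "portrait" and tilt > 45 + margin:
        return "landscape"
    if current == "landscape" and tilt < 45 - margin:
        return "portrait"
    return current


class Screen:
    """Actuator: the thing that actually changes when a decision arrives."""

    def __init__(self):
        self.orientation = "portrait"

    def rotate_to(self, orientation):
        self.orientation = orientation


def step(screen, ax, ay):
    """Run one pass through sensor, comparator, and actuator."""
    angle = read_tilt_degrees(ax, ay)
    decision = choose_orientation(angle, screen.orientation)
    if decision != screen.orientation:
        screen.rotate_to(decision)
    return screen.orientation


screen = Screen()
for ax, ay in [(0.0, 1.0), (0.77, 0.64), (1.0, 0.0), (0.64, 0.77), (0.1, 1.0)]:
    print(step(screen, ax, ay))
```

The comparator is where the nuance lives. Swapping in a different `choose_orientation` changes how the system behaves without touching the sensor or the actuator.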

Of course, software doesn't design and code itself, and sensors and motors and the like aren't attached randomly to systems. They need to be designed.

The design of any product or service should start with the needs of those who will use it, tempered by the constraints of the environment, technology, resources, and organizational goals, such as business objectives. The needs of users can range from simple (I want to turn on a light) to very complex (I want to fall in love). (Most human experience lies between those two poles, I think.) However natural, interesting, amusing, novel, or innovative an interactive gesture is, if the users' needs aren't met, the design is a failure.

The first question that anyone designing a gestural interface should ask is: should this even be a gestural interface? Simply because we can now do interactive gestures doesn't mean they are appropriate for every situation. As Bill Buxton notes,[14] when it comes to technology, everything is best for something and worse for something else, and interactive gestures are no exception.

There are several reasons to not have a gestural interface:

- Heavy data input

Although some users adapt to touchscreen keyboards easily, a keyboard is decidedly faster for most people to use when they are entering text or numbers.

- Reliance on the visual

Many gestural interfaces use visual feedback alone to indicate that an action has taken place (such as a button being pressed). In addition, most touchscreens and many gestural systems in general rely entirely on visual displays with little to no haptic affordances or feedback. There is often no physical feeling that a button has been pressed, for instance. If your users are visually impaired (as most adults over a certain age are) a gestural interface may not be appropriate.

- Reliance on the physical

Likewise, gestural interfaces can be more physically demanding than a keyboard/screen. The broader and more physical the gesture is (such as a kick, for instance), the more likely that some people won't be able to perform the gesture due to age, infirmity, or simply environmental conditions; pressing touchscreen buttons in winter gloves is difficult, for instance. The inverse is also true: the subtler and smaller the movement, the less likely everyone will be able to perform it. The keyboard on the iPhone, for instance, is entirely too small and delicate to be used by anyone whose fingers are large or otherwise not nimble.

- Inappropriate for context

The environment can be nonconducive to a gestural interface in any number of situations, either due to privacy reasons or simply to avoid embarrassing the system's users. Designers need to take into account the probable environment of use and determine what, if any, kind of gesture will work in that environment.

There are, of course, many reasons to use a gestural interface. Everything that a noninteractive gesture can be used for—communication, manipulating objects, using a tool, making music, and so on—can also be done using an interactive gesture. Gestural interfaces are particularly good for:

- More natural interactions

Human beings are physical creatures; we like to interact directly with objects. We're simply wired this way. Interactive gestures allow users to interact naturally with digital objects in a physical way, like we do with physical objects.

- Less cumbersome or visible hardware

With many gestural systems, the usual hardware of a keyboard and a mouse isn't necessary: a touchscreen or other sensors allow users to perform actions without this hardware. This benefit allows for gestural interfaces to be put in places where a traditional computer configuration would be impractical or out of place, such as in retail stores, museums, airports, and other public spaces.

Figure 1-15. New York City in late 2006 installed touchscreens in the back seats of taxicabs. Although clunky, they allow for the display of interactive maps and contextual information that passengers might find useful, such as a Zagat restaurant guide. Courtesy New York City Taxi and Limousine Commission.

- More flexibility

As opposed to fixed, physical buttons, a touchscreen, like all digital displays, can change at will, allowing for many different configurations depending on functionality requirements. Thus, a very small screen (such as those on most consumer electronics devices or appliances) can change buttons as needed. This can have usability issues (see later in this chapter), but the ability to have many controls in a small space can be a huge asset for designers. And with nontouchscreen gestures, the sky is the limit, space-wise. One small sensor, which can be nearly invisible, can detect enough input to control the system. No physical controls or even a screen are required.

- More nuance

Keyboards, mice, trackballs, styli, and other input devices, although excellent for many situations, simply cannot convey as much subtlety as the human body. A raised eyebrow, a wagging finger, or crossed arms can deliver a wealth of meaning in addition to controlling a tool. Gestural systems have not begun to completely tap the wide emotional palette of humans that they can, and likely will, eventually exploit.

- More fun

You can design a game in which users press a button and an on-screen avatar swings a tennis racket. But it is simply more entertaining—for both players and observers—to mimic swinging a tennis racket physically and see the action mirrored on-screen. Gestural systems encourage play and exploration of a system by providing a more hands-on (sometimes literally hands-on) experience.

Once the decision has been made to have a gestural interface, the next question to answer is what kind of gestural interface it will be: direct, indirect, or hybrid. As I write this, particularly with devices and appliances, the answer will be fairly easy: direct-manipulation touchscreen is the most frequently employed gestural interface currently. In the future, as an increasing variety of sensors are built into devices and environments, this may change, but for now touchscreens are the new standard for gestural interfaces.

Although particular aspects of gestural systems require more and different kinds of consideration, the characteristics of a good gestural interface don't differ much from the characteristics of any other well-designed interactive system.[15] Designers often use Liz Sanders' phrase "useful, usable, and desirable"[16] to describe well-designed products, or they say that products should be "intuitive" or "innovative." All of that really means gestural interfaces should be:

- Discoverable

Being discoverable can be a major issue for gestural interfaces. How can you tell whether a screen is touchable? How can you tell whether an environment is interactive? Before we can interact with a gestural system, we have to know one is there and how to begin to interact with it, which is where affordances come into play. An affordance is one or multiple properties of an object that give some indication of how to interact with that object or a feature on that object. A button, because of how it moves, has an affordance of pushing. Appearance and texture are the major sources of what psychologist James Gibson called affordances,[17] popularized in the design community by Don Norman in his seminal 1988 book The Psychology of Everyday Things (later renamed The Design of Everyday Things).

- Trustworthy

Unless they are desperate, before users will engage with a device, the interface needs to look as though it isn't going to steal their money, misuse their personal data, or break down. Gestural interfaces have to appear competent and safe, and they must respect users' privacy (see THE ETHICS OF GESTURES in Chapter 8). Users are also now suspicious of gestural interfaces and often an attraction affordance needs to be employed (see Chapter 7).

- Responsive

We're used to instant reaction to physical manipulation of objects. After all, we're usually touching things that don't have a microprocessor and sensor that need to figure out what's going on. Thus, responsiveness is incredibly important. When engaged with a gestural interface, users want to know that the system has heard and understood any commands given to it. This is where feedback comes in. Every action by a human directed toward a gestural interface, no matter how slight, should be accompanied by some acknowledgment of the action whenever possible and as rapidly as possible (100 ms or less is ideal as it will feel instantaneous). This can be tricky, as the responsiveness of the system is tied directly to the responsiveness of the system's sensors, and sensors that are too responsive can be even more irksome than those that are dull. Imagine if The Clapper picked up every slight sound and turned the lights on and off, on and off, over and over again! But not having near-immediate feedback can cause errors, some of them potentially serious. Without any response, users will often repeat an action they just performed, such as pushing a button again. Obviously, this can cause problems, such as accidentally buying an item twice or, if the button was connected to dangerous machinery, injury or death. If a response to an action is going to take significant time (more than one second), feedback is required that lets the user know the system has heard the request and is doing something about it. Progress bars are an excellent example of responsive feedback: they don't decrease waiting time, but they make it seem as though they do. They're responsive.

- Appropriate

Gestural systems need to be appropriate to the culture, situation, and context they are in. Certain gestures are offensive in certain cultures. An "okay" gesture, commonplace in North America and Western Europe, is insulting in Greece, Turkey, the Middle East, and Russia, for instance.[18] An overly complicated gestural system that involves waving arms and dancing around in a public place is not likely to be an appropriate system unless it is in a nightclub or other performance space.

- Meaningful

The coolest interactive gesture in the world is empty unless it has meaning for the person performing it. That is to say, unless the gestural system meets the needs of those who use it, it is not a good system.

- Smart

The devices we use have to do for us the things that we as humans have trouble doing—rapid computation, having infallible memories, detecting complicated patterns, and so forth. They need to remember the things we don't remember and do the work we can't easily do alone. They have to be smart.

- Clever

Likewise, the best products predict the needs of their users and then fulfill those needs in unexpectedly pleasing ways. Adaptive targets are one way to do this with gestural interfaces. Another way to be clever is through interactive gestures that match well the action the user is trying to perform.

- Playful

One area in which interactive gestures excel is being playful. Through play, users will not only start to engage with your interface—by trying it out to see how it works—but they will also explore new features and variations on their gestures. Users need to feel relaxed to engage in play. Errors need to be difficult to make so that there is no need to put warning messages all over the interface. The ability to undo mistakes is also crucial for fostering the environment for play. Play stops if users feel trapped, powerless, or lost.

- Pleasurable

"Have nothing in your house," said William Morris, "that you do not know to be useful, or believe to be beautiful." Gestural interfaces should be both aesthetically and functionally pleasing. Humans are more forgiving of mistakes in beautiful things.[19] The parts of the gestural system—the visual interface; the input devices; the visual, aural, and haptic feedback—should be agreeable to the senses. They should be pleasurable to use. This engenders good feelings in their users.

- Good

Gestural interfaces should have respect and compassion for those who will use them. It is very easy to remove human dignity with interactive gestures: for instance, by making people perform a gesture that makes them appear foolish in public, or by making a gesture so difficult to perform that only the young and healthy can ever perform it. Designers and developers need to be responsible for the choices they make in their designs and ask themselves whether those choices are good for users, good for those indirectly affected, good for the culture, and good for the environment. The choices that are made with gestural interfaces need to be deliberate and forward-thinking. Every time users perform an interactive gesture, in an indirect way they are placing their trust in those who created it to have done their job ethically.
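The Responsive characteristic above lends itself to a small illustration. What follows is a minimal sketch, not a definitive implementation: it assumes the 100 ms and one-second figures from the text, and the `RepeatGuard` class, the 0.5-second repeat window, and the function names are hypothetical choices made for this example. It shows one way to ignore an accidental repeat of the same command (such as a second "buy") and to decide when progress feedback is called for.

```python
from dataclasses import dataclass
from typing import Optional

ACK_BUDGET_S = 0.1      # from the text: about 100 ms or less feels instantaneous
PROGRESS_AFTER_S = 1.0  # from the text: longer waits need ongoing feedback
REPEAT_GUARD_S = 0.5    # hypothetical window for ignoring accidental repeats


@dataclass
class RepeatGuard:
    """Ignores a command repeated too soon after the same command was accepted."""
    window_s: float = REPEAT_GUARD_S
    _last_command: Optional[str] = None
    _last_time: Optional[float] = None

    def accept(self, command: str, now: float) -> bool:
        is_repeat = (
            command == self._last_command
            and self._last_time is not None
            and now - self._last_time < self.window_s
        )
        if is_repeat:
            return False  # likely the user pressing again while waiting
        self._last_command = command
        self._last_time = now
        return True


def feels_instant(latency_s: float) -> bool:
    """True if an acknowledgment arrives within the 100 ms budget."""
    return latency_s <= ACK_BUDGET_S


def feedback_for(expected_duration_s: float) -> str:
    """Pick the kind of feedback to give for an accepted command."""
    if expected_duration_s > PROGRESS_AFTER_S:
        return "acknowledge, then show progress"
    return "acknowledge"


guard = RepeatGuard()
print(guard.accept("buy", now=0.00))  # True
print(guard.accept("buy", now=0.20))  # False (repeat inside the window)
print(guard.accept("buy", now=0.90))  # True
```

The guard only suppresses the duplicate command; the system should still acknowledge the second press in some way so that the user does not conclude the interface is unresponsive.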

Although touchscreen gestural interfaces differ slightly from free-form gestural interfaces, most gestures have similar characteristics that can be detected and thus designed for. The more sophisticated the interface (and the more sensors it employs), the more of these attributes can be engaged:

- Presence

This is the most basic of all attributes. Something must be present to make a gesture in order to trigger an interaction. For some systems, especially in environments, a human being simply being present is enough to cause a reaction. For the simplest of touchscreens, the presence of a fingertip creates a touch event.

- Duration

All gestures take place over time and can be done quickly or slowly. Is the user tapping a button or holding it down for a long period? Flicking the screen or sliding along it? For some interfaces, especially those that are simple, duration is less important. Interfaces using proximity sensors, for instance, care little for duration and only whether a human being is in the area. But for games and other types of interfaces, the ability to determine duration is crucial. Duration is measured by calculating the time of first impact or sensed movement compared to the end of the gesture.

- Position

Where is the gesture being made? From a development standpoint, position is often determined by establishing an x/y location on an axis (such as the entire screen) and then calculating any changes. Some gestures also employ the z-axis of depth. Note that because of human beings' varying heights, position can be relational (related to the relative size of the person) or exact (adjusted to the parameters of the room). For instance, a designer may want to put some gestures high in an environment so that children cannot engage in them.

- Motion

Is the user moving from position to position or striking a pose in one place? Is the motion fast or slow? Up and down, or side to side? For some systems, any motion is enough to trigger a response; position is unnecessary to determine.

- Pressure

Is the user pressing hard or gently on a touchscreen or pressure-sensitive device? This too has a wide range of sensitivity. You may want every slight touch to register, or only the firmest, or only an adult weight (or only that of a child or pet). Note that some pressure can be "faked" by duration; the longer the press/movement, the more "pressure" it has. Pressure can also be faked by trying to detect an increasing spread of a finger pad: as we press down, the pad of our finger widens slightly as it presses against a surface.

- Size

Width and height can also be combined to measure size. For example, touchscreens can determine whether a user is employing a stylus or a finger based on size (the tip of a stylus will be finer) and adjust themselves accordingly.

- Orientation

What direction is the user (or the device) facing while the gesture is being made? For games and environments, this attribute is extremely important. Orientation has to be determined using fixed points (such as the angle of the user to the object itself).

- Including objects

Some gestural interfaces allow users to employ physical objects alongside their bodies to enhance or engage the system. Simple systems will treat these other objects as an extension of the human body, but more sophisticated ones will recognize objects and allow users to employ them in context.

For instance, a system could see a piece of paper a user is holding as being simply part of the user's hand, whereas another system, such as the Digital Desk system (see Figure 1-12, earlier in this chapter), might see it as a piece of paper that can have text or images projected onto it.

- Number of touch points/combination

More and more gestural interfaces have multitouch capability, allowing users to use more than one finger or hand simultaneously to control them. They may also allow combinations of gestures to occur at the same time. One common example is using two hands to enlarge an image by dragging on two opposite corners, seemingly stretching the image.

- Sequence

Interactive gestures don't necessarily have to be singular. A wave followed by a fist can trigger a different action than both of those gestures done separately. Of course, this means a very sophisticated system that remembers states. This is also more difficult for users (see STATES AND MODES, later in this chapter).

- Number of participants

It can be worthwhile with some devices—such as Microsoft's Surface, which is meant to be used socially for activities such as gaming or collaborative work—to detect multiple users. Two people operating a system using one hand each is very different from one person operating a system using both hands.

When designing a particular interactive gesture, these attributes, plus the range of physical movement (see Chapter 2), should be considered. Of course, simple gestural interfaces, such as most touchscreens, will use only one or two of these characteristics (presence and duration being the most common), and designers and developers may not need to dwell overly long on the attributes of the gesture but instead on the ergonomics and usability of interactive gestures (see Chapter 2).
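Several of the attributes above (duration, position, motion, pressure, and size) can be derived from a stream of touch samples. The following is a rough sketch under stated assumptions: the `TouchSample` structure, the function names, and every threshold (0.3 seconds, 10 pixels, 25 square pixels) are hypothetical values picked for illustration, not figures from any particular touchscreen.

```python
from dataclasses import dataclass
from math import hypot
from typing import List


@dataclass
class TouchSample:
    t: float       # time in seconds
    x: float       # position in pixels
    y: float
    width: float   # contact patch width in pixels
    height: float  # contact patch height in pixels


def duration(samples: List[TouchSample]) -> float:
    """Time from first contact to the end of the gesture."""
    return samples[-1].t - samples[0].t


def distance(samples: List[TouchSample]) -> float:
    """Straight-line change in position between first and last sample."""
    return hypot(samples[-1].x - samples[0].x, samples[-1].y - samples[0].y)


def contact_area(sample: TouchSample) -> float:
    """Size: width and height combined."""
    return sample.width * sample.height


def classify_tool(sample: TouchSample, stylus_max_area: float = 25.0) -> str:
    """Guess stylus versus finger from contact size (a stylus tip is finer)."""
    return "stylus" if contact_area(sample) <= stylus_max_area else "finger"


def pseudo_pressure(samples: List[TouchSample]) -> float:
    """'Faked' pressure: how much the finger pad spread after first contact."""
    first = contact_area(samples[0])
    if first <= 0:
        return 1.0
    return max(contact_area(s) for s in samples) / first


def classify_gesture(samples: List[TouchSample],
                     tap_max_s: float = 0.3,
                     move_min_px: float = 10.0) -> str:
    """Combine duration and motion: tap, hold, flick, or slide."""
    moved = distance(samples) >= move_min_px
    quick = duration(samples) <= tap_max_s
    if moved:
        return "flick" if quick else "slide"
    return "tap" if quick else "hold"


touch = [TouchSample(0.0, 100, 100, 8, 8), TouchSample(0.1, 100, 101, 10, 10)]
print(classify_gesture(touch))  # tap
print(pseudo_pressure(touch))   # 1.5625
```

A simple touchscreen that reports only presence and duration could use `classify_gesture` alone; the size and pseudo-pressure functions matter only when the hardware reports a contact patch.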

Many of the traditional interface conventions work well in gestural interfaces: selecting, drag-and-drop, scrolling, and so on. There are several notable exceptions to this:

- Cursors

With gestural interfaces, a cursor is often unnecessary since a user isn't consistently pointing to something; likewise, a user's fingers rarely trail over the touchscreen where a cursor would be useful to indicate position. Users don't often lose track of their fingers! Of course, for gaming, a cursor is often absolutely essential to play, but this is usually on free-form gestural interfaces, not touchscreens.

- Hovers and mouse-over events

For the same reason that cursors aren't often employed, hovers and mouse-over events are also seldom used, except in some free-form games and in certain capacitive systems. Nintendo's Wii, for instance, often includes a slight haptic buzz as the user rolls over selectable items. Some sensitive capacitive touchscreens can detect a hand hovering over the screen, but hovers need to be aware of screen coverage (see Chapter 2).

- Double-click

Although a double click can be done with a gestural interface, it should be used with caution. A threshold has to be set (e.g., 200 ms) during which two touch events in the same location are counted as a double click. The touchscreen has to be sensitive and responsive enough to register touch-rest-touch. Single taps to click are safer to use (see TAP TO OPEN/ACTIVATE in Chapter 3).

- Right-click

Most gestural interfaces don't have the ability to bring up an alternative menu for objects. The direct-manipulation nature of most gestural interfaces tends to go against this philosophically. This is not to say that digital objects could not display a menu when selected, just that they frequently avoid this traditional paradigm.

- Drop-down menus

These generally don't work very well for the same reasons as right-click menus, combined with the limitations of hover.

- Cut-and-paste

As of this writing (summer 2008), cut-and-paste is only partially implemented, or merely theorized, on most common gestural interfaces, although it will likely be implemented shortly.

- Multiselect

As humans, we're limited by the number of limbs and fingers we have to select multiple items on a screen or a list. There are ways around this, such as a select mode that could be turned on so that everything on-screen, once selected, remains selected; alternately, an area could be "drawn" that selects multiple items.

- Selected default buttons

Since pressing a return key (and thus pushing a selected button) isn't typically part of a gestural system, all a selected default button can do is highlight probable behavior. Users will have to make an interactive gesture (e.g., pushing a button) no matter what to trigger an action.

- Undo

It's hard to undo a gesture; once a gesture is done, typically the system has executed the command, and there is no obvious way, especially in environments, to undo that action. It is better to design an easy way to cancel or otherwise directly undo an action (e.g., dragging a moved item back) than it is to rely on undo.

Assuredly, there are exceptions to all of these interface constraints, and clever designers and developers will find ways to work around them. For the new conventions that have been established with gestural interfaces, see Chapter 3 and Chapter 4.
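The double-click threshold described above can be sketched in a few lines. This is an illustrative example only: the 200 ms gap comes from the text, while the `DoubleTapDetector` class and the 20-pixel tolerance used to decide whether two touches are in "the same location" are assumptions made for this sketch.

```python
from math import hypot
from typing import Optional, Tuple


class DoubleTapDetector:
    """Counts two taps close together in time and space as a double tap."""

    def __init__(self, max_gap_s: float = 0.2, max_distance_px: float = 20.0):
        self.max_gap_s = max_gap_s              # threshold from the text (200 ms)
        self.max_distance_px = max_distance_px  # hypothetical location tolerance
        self._last: Optional[Tuple[float, float, float]] = None  # (t, x, y)

    def on_tap(self, t: float, x: float, y: float) -> str:
        if self._last is not None:
            last_t, last_x, last_y = self._last
            close_in_time = t - last_t <= self.max_gap_s
            close_in_space = hypot(x - last_x, y - last_y) <= self.max_distance_px
            if close_in_time and close_in_space:
                self._last = None  # consume both taps so a third starts fresh
                return "double"
        self._last = (t, x, y)
        return "single"


detector = DoubleTapDetector()
print(detector.on_tap(0.00, 50, 50))  # single
print(detector.on_tap(0.15, 52, 49))  # double
print(detector.on_tap(0.25, 52, 49))  # single
```

Note that this sketch reports the first tap as "single" immediately. A system that must not act on the first tap of a double tap has to wait out the threshold before responding, which works against the goal of near-instant feedback and is one more reason single taps are the safer choice.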

Most gestural interfaces are stateless or modeless, which is to say that there is only one major function or task path for the system to accomplish at any given time. An airport kiosk assists users in checking in, for instance. The Clapper turns lights on or off. Users don't switch between "modes" (as, say, between writing and editing modes), or if they do, they do so only in complex devices such as mobile phones.

The reason for this is both contextual and related to the nature of interactive gestures. Gestural interfaces are often found in public spaces, where attention is limited and simplicity and straightforwardness are appreciated; this is combined with the fact that—especially with free-form interfaces—there might be no visual indicator (i.e., no screen or display) to convey what mode the user is in. Thus, doing a gesture to change to another mode may accomplish the task, but how does the user know it was accomplished? And how does the user return to the previous mode? By performing the same gesture? Switching between states is a difficult interaction design problem for gestural interfaces.

Thus, it is considerably easier for users (although not for the designers) to have either clear paths through the gestural system or a single set of choices to execute. For example, a retail kiosk might be designed to help with the following tasks: searching for an item, finding an item in the store, and purchasing an item. It is better to have these activities in a clear path (search to find to buy) than to require users to switch to different modes to execute an action, such as buying.

As users become more sophisticated and gestural interfaces more ubiquitous, this may change, but for now, a stateless design is usually the better design.

Once you've decided that a gestural interface is appropriate for your users and your environment, you need to pair the appropriate gestures to the tasks and goals the users need to accomplish. This requires a combination of three things: the available sensors and related input devices, the steps in the task, and the physiology of the human body. Sensors determine what the system can detect and how. The steps in the task show what actions have to be performed and what decisions have to be made. The human body provides physical constraints for the gestures that can be done (see Chapter 2).

For most touchscreens, this can be a very straightforward equation. There is one sensor/input device (the touchscreen); the tasks (check in, buy an item, find a location, get information) are usually simple. The touchscreen needs to be accessible and used by a wide variety of people of all ages. Thus, simple gestures such as pushing buttons are appropriate.

Note

The complexity of the gesture should match the complexity of the task at hand.

This is to say that simple, basic tasks should have equally simple, basic gestures to trigger or complete them, for instance, taps, swipes, and waves. More complicated tasks may have more complicated gestures.

Take, for example, turning on a light. If you just want to turn on a light in a room, a wave or swipe on a wall should be sufficient for this simple behavior. Dimming the light (a slightly more complex action), however, may require a bit more nuance, such as holding your hand up and slowly lowering it. Dimming all the lights in the house at once (a sophisticated action) may require a combination gesture or a series of gestures, such as clapping your hands three times, then lowering your arm. Because it is likely seldom done and is conceptually complicated, it can have an equally complex associated movement.
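The lighting example can be sketched as a lookup from gesture sequences to commands, with the longer sequences reserved for the rarer, more sophisticated actions. This is a minimal sketch: the gesture labels and the `match_command` function are hypothetical, and it assumes some recognizer has already turned raw sensor data into a list of named gestures.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical gesture labels; a real recognizer would emit its own names.
COMMANDS: Dict[Tuple[str, ...], str] = {
    ("wave",): "turn on room light",
    ("raise_hand", "lower_hand"): "dim room light",
    ("clap", "clap", "clap", "lower_arm"): "dim all lights",
}


def match_command(gestures: List[str]) -> Optional[str]:
    """Return the command for the longest known sequence ending the list."""
    for length in range(len(gestures), 0, -1):
        command = COMMANDS.get(tuple(gestures[-length:]))
        if command is not None:
            return command
    return None


print(match_command(["wave"]))                               # turn on room light
print(match_command(["clap", "clap", "clap", "lower_arm"]))  # dim all lights
print(match_command(["clap", "clap"]))                       # None
```

Matching the longest sequence first keeps a simple gesture from firing when it is really the tail end of a more complex one.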

This is not to say that all complex behaviors need to or should have accompanying complex gestures (quite the opposite, in fact), only that simple actions should not require complex gestures to initiate. The best interactive gestures are those that take the complex and make it simple and elegant.

One way to do this, especially with touchscreen devices, is to make all the features accessible with simple gestures such as taps (via a menu system, say), and then to provide alternative gestures that are more sophisticated (but faster) for more advanced users. In this way, an interactive gesture can act as a shortcut to features in much the same way as a key command works on desktop systems. Of course, communicating this advanced gesture then becomes an issue to address (see Chapter 7).

Rather than have the designer determine the gestures of the system, another method for determining the appropriate action for a gesture is to employ the knowledge and intuition of those who will use it. You can ask the users to match a feature to the gesture they would like to use to employ it. Asking several users will hopefully begin to show patterns matching gestures to features. The reverse of this would be to demonstrate a gesture and see what feature users would expect that gesture to trigger.
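Once several users have been asked, their answers can be tallied to see where patterns emerge. The following is a small sketch of that bookkeeping, assuming the responses have been recorded as (feature, gesture) pairs; the `agreement` function and the sample data are invented for illustration and are not results from any study.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def agreement(responses: Iterable[Tuple[str, str]]) -> Dict[str, Tuple[str, float]]:
    """Map each feature to (most proposed gesture, share of answers proposing it)."""
    by_feature: Dict[str, Counter] = defaultdict(Counter)
    for feature, gesture in responses:
        by_feature[feature][gesture] += 1
    result = {}
    for feature, counts in by_feature.items():
        gesture, votes = counts.most_common(1)[0]
        result[feature] = (gesture, votes / sum(counts.values()))
    return result


# Invented sample answers, for illustration only.
answers = [
    ("enlarge image", "spread two fingers"),
    ("enlarge image", "spread two fingers"),
    ("enlarge image", "double tap"),
    ("delete item", "flick off screen"),
]
for feature, (gesture, share) in agreement(answers).items():
    print(f"{feature}: {gesture} ({share:.0%})")
```

A high share suggests a gesture that users already expect; a low share suggests that no natural mapping exists and the gesture will need to be taught.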

Japanese product designer Naoto Fukasawa has observed that the best designs are those that "dissolve in behavior,"[20] meaning that the products themselves disappear into whatever the user is doing. It's seemingly effortless (although certainly not for those creating this sort of frictionless system—intuitive, natural designs require significant effort) and a nearly subconscious act to use the product to accomplish what you want to do. This is the promise of interactive gestures in general: that we'll be able to empower the gestures that we already do and give them further influence and meaning.

Adam Greenfield, author of Everyware, talked about this type of natural interaction in an interview:[21]

"We see this, for example, in Hong Kong where women leave their RFID-based Octopus cards in their handbags and simply swing their bags across the readers as they move through the turnstiles. There's a very sophisticated transaction between card and reader there, but it takes 0.2 seconds, and it's been subsumed entirely into this very casual, natural, even jaunty gesture.

"But that wasn't designed. It just emerged; people figured out how to do that by themselves, without some designer having to instruct them in the nuances...The more we can accommodate and not impose, the more successful our designs will be."

The best, most natural designs, then, are those that match the behavior of the system to the gesture humans might already do to enable that behavior. Simple examples include pushing a button to turn something on or off, turning to the left to make your on-screen avatar turn to the left, putting your hands under a sink to turn the water on, and passing through a dark hallway to illuminate it.

The design dissolves into the behavior.

In the next chapter, we'll look at an important piece of the equation when designing gestural interfaces: the human body.

Where the Action Is, Paul Dourish (MIT Press)

Everyware, Adam Greenfield (New Riders Publishing)

The Ecological Approach to Visual Perception, James Gibson (Lawrence Erlbaum)

Designing Interactions, Bill Moggridge (MIT Press)

The Design of Everyday Things, Don Norman (Basic Books)

Emotional Design, Don Norman (Basic Books)

Designing for Interaction, Dan Saffer (Peachpit Press)

Designing Interfaces, Jenifer Tidwell (O'Reilly)

[1] Watch the demo yourself at http://www.ted.com/index.php/talks/view/id/65.

[2] Shneiderman, Ben. "Direct Manipulation: A Step Beyond Programming Languages." IEEE Computer 16(8): 57–69, August 1983.

[3] The Clapper also had an iconic commercial with an extremely catchy jingle: "Clap on! Clap off!" See the commercial and watch The Clapper in action at http://www.youtube.com/watch?v=WsxcdVbE3mI.

[4] "Dogs Don't Do Math," by Tom Bestor, November 1993. Found online at http://www.wired.com/wired/archive/1.05/dogs.html.

[5] This has some notable exceptions. See later in this chapter.

[6] See http://www.elotouch.com/AboutElo/History/ for a detailed history of the Elograph.

[7] Mehta, Nimish. "A Flexible Machine Interface." M.A.Sc. thesis, Department of Electrical Engineering, University of Toronto, 1982. Supervised by Professor K.C. Smith.

[8] According to The Professional Bar and Beverage Manager's Handbook, by Amanda Miron and Douglas Robert Brown (Atlantic Publishing Company).

[9] Wellner, Pierre. "The DigitalDesk Calculator: Tactile Manipulation on a Desktop Display." Proceedings of the Fourth Annual Symposium on User Interface Software and Technology (UIST): 27–33, 1991.

[10] Watch the video demonstration at http://video.google.com/videoplay?docid=5772530828816089246.

[11] http://en.wikipedia.org/wiki/Sensor has a more complete list, including many sensors that are currently used only for scientific or industrial applications.

[12] See "At the Heart of the Wii Micron-Size Machines," by Michel Marriott, in The New York Times, December 21, 2006; http://www.nytimes.com/2006/12/21/technology/21howw.html?partner=permalink&exprod=permalink.

[13] For a more detailed view of FTIR and DI systems, read "Getting Started with MultiTouch" at http://nuigroup.com/forums/viewthread/1982/.

[14] See Bill Buxton's multitouch overview at http://www.billbuxton.com/multitouchOverview.html.

[15] For a longer discussion, see Designing for Interaction by Dan Saffer (Peachpit Press): 60–68.

[16] See "Converging Perspectives: Product Development Research for the 1990s," by Liz Sanders, in Design Management Journal, 1992.

[17] Gibson, J.J. "The theory of affordances," in Perceiving, Acting, and Knowing: Toward an Ecological Psychology, R. Shaw and J. Bransford (Eds.) (Lawrence Erlbaum): 67–82.

[18] See Field Guide to Gestures, by Nancy Armstrong and Melissa Wagner (Quirk Books): 45–48.

[19] See Don Norman's book, Emotional Design (Basic Books), for a detailed discussion of this topic.

[20] See, for instance, Dwell magazine's interview with Fukasawa, "Without a Trace," by Jane Szita, September 2006, which you can find at http://www.dwell.com/peopleplaces/profiles/3920931.html.

[21] Designing for Interaction by Dan Saffer, p. 217.