"Hands are underrated. Eyes are in charge, mind gets all the study, and heads do all the talking. Hands type letters, push mice around, and grip steering wheels, so they are not idle, just underemployed."

The best interactive gestures are those that pair the correct sensors (for detecting motion) with a human movement that deeply engages the user in an activity. But what is the range of human movement, and how do we move? Few designers or developers, unless they are trained as industrial designers or create medical devices or exercise equipment, have done much study of the human body. Typography, yes; anatomy, no. Until now.

Before delving into this chapter, go to a public space such as a park or restaurant and watch how people move, gesture, and interact. You'll notice that the range of human movement is fairly broad, thanks to the variety of limbs we have and especially to the dexterity of our hands. But you'll also see patterns emerge, along with a fairly standard lexicon of gestures and movements, and that is what this chapter examines and the book's appendix begins to catalog.

To apply the correct gesture to the correct action, it's helpful to understand a little about how the human body moves. The body is, after all, the primary input device for any gestural interface, and designers and developers should know the basics of its system, just as they understand (at least) the basics of computer systems. This study of the anatomy, physiology, and mechanics of human body movement is called kinesiology.

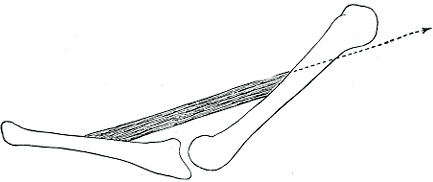

Simply put, contracting muscles cause your body to move. These skeletal muscles are connected to your bones by nonelastic, fibrous tissue called tendons. Your muscles move parts of your skeleton by pulling on the bones via the tendons. Muscles can only pull, not push, so a contracted muscle cannot return itself to its original position. Only another muscle pulling in the opposite direction can do that.

Thus, there are two kinds of muscles: agonists and antagonists. Agonists (sometimes called prime movers) are the muscles that generate most movement. The biceps of the upper arm is an example of an agonist: your arm curls because the biceps contracts. An antagonist returns the limb to its original, initial position. As its name suggests, it acts in opposition to the agonist muscle. The triceps is an example of an antagonist. It returns the arm from the curled position into which the biceps moves it.

This type of pairing of biceps and triceps is called an antagonistic pair. The pair is made up of a flexor muscle, which closes the joint by decreasing the angle between the two bones, and an extensor muscle, which does the opposite, opening the joint by increasing the angle between the two bones.

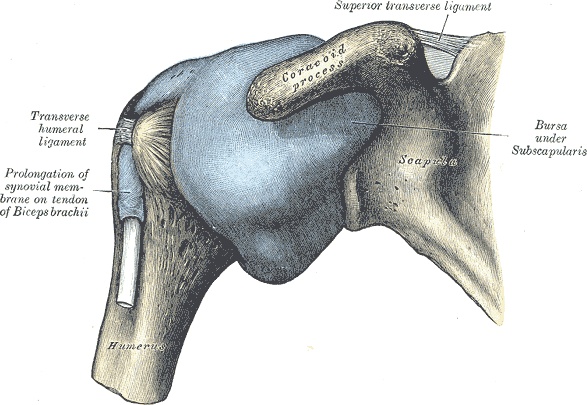

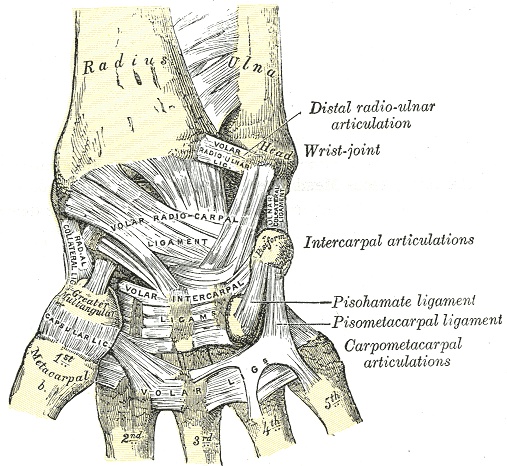

Joints are what allow humans to move at all. A joint is the location at which two or more bones make contact, held together by ligaments, which are short bands of flexible, fibrous tissue. Ligaments can actually prevent or limit certain movements of the joint.

Joints can be categorized functionally by the amount and type of movement they engender. For our purposes, we're mostly concerned with what are called diarthroses. Diarthrodial joints are those that permit a variety of movements and are flexible thanks to a pocket of lubricating synovial fluid in the space between the bones. There are six types of diarthroses:

- Ball and socket

A ball and socket joint, such as that of the hip and shoulder, consists of one rounded bone fitting into the cup-like depression of another. Ball and socket joints allow for a wide range of movement in a circular motion around the center of the "ball."

- Condyloid

A condyloid joint, such as the wrist, is similar to a ball and socket joint, except that the condyloid has no socket; the ball rests against the end of a bone instead.

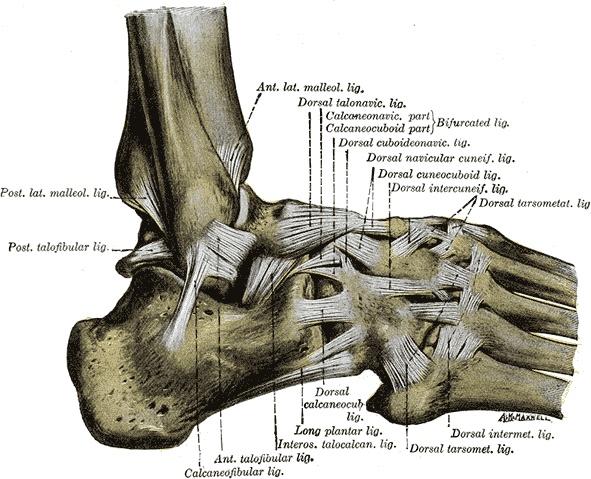

- Gliding

Some of the bones in the wrists and ankles move (in a very limited way) by sliding against each other. These gliding joints occur where the surfaces of two flat bones are held together by ligaments.

- Hinge

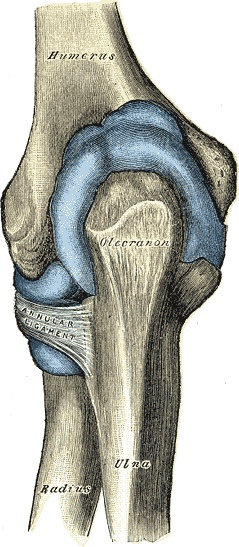

A hinge joint, such as the elbow or those in the fingers, moves in only one plane, back and forth, acting like the hinge of a door (hence the name).

- Pivot

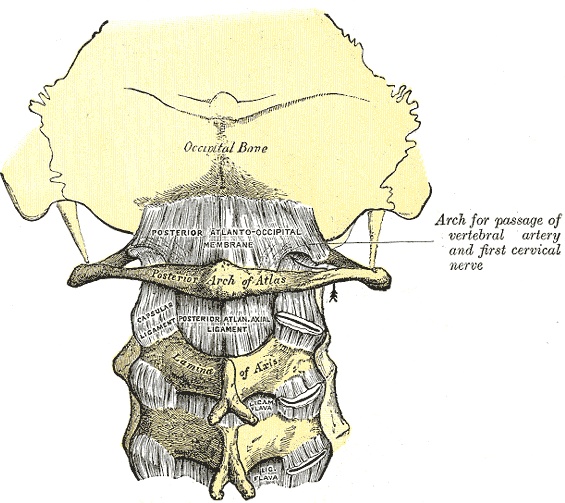

The pivot joint in your neck allows you to turn your head from side to side. Pivot joints are limited in rotation and are made up of one bone fitting into a ring of bone and ligaments.

- Saddle

The best-known saddle joints in the human body are those at the base of the thumbs. Saddle joints occur where one bone fits like a saddle on top of another. Saddle joints can rock back and forth and can rotate, much like the condyloid joints.

These joints are the fulcrums on which all the major movements of the body occur. The only other joints we should be concerned with are those between the vertebrae in the spine, connected by pads of cartilage, which can move slightly, allowing for bends and twists of the whole torso.

The medical and kinesiology communities have technical terms for the types of movement the human body is capable of. Frequently, these movements come in pairs: first, there is the execution of the movement, and then the opposite movement that returns the body or limb to a neutral state. The human body is capable of the following broad movements:

- Flexion and extension

A movement that decreases the angle between two parts of the body, such as making a fist or bending an elbow, is called a flexion. Applied to a ball and socket joint such as the hip or shoulder, flexion means the limb has moved forward. The opposite motion is an extension. Extensions increase the angle between two body parts and, in effect, straighten limbs. When you stand up, you cause your knees to extend. Extending the hip or shoulder moves the full limb (leg or arm) backward.

- Rotation

Rotation is any movement in which a body part (aided by a ball and socket, condyloid, pivot, or saddle joint) turns on its axis. Twiddling your thumbs is an example of a rotation.

- Abduction and adduction

Abduction is a motion that moves one part of the body away from its midline. The midline is an imaginary line that divides the body (or a limb) into left and right halves. When referring to fingers and toes, abduction means spreading the digits apart, away from the centerline of the hand or foot. Raising an arm up and to the side is an example of abduction. Adduction is a motion that moves a part of the body toward the body's midline or toward the midline of a limb. Putting splayed fingers back together is an example of adduction.

- Internal and external rotation

Internal rotation turns a limb toward the body's midline. An internal rotation of the hip, for example, would point the toes inward. An external rotation would do the opposite: point the toes outward, away from the midline of the body.

- Elevation and depression

Any movement upward, such as lifting a leg, is an elevation. Depression is the opposite.

- Protraction and retraction

Protraction is the forward movement of an arm, and retraction is the backward stretching movement of the arm. Similarly, protrusion and retrusion are the forward (protrusion) and backward (retrusion) movements of a body part, frequently the jaw.

In addition to these major, broad movements, there are specialized movements that only the hands and feet can perform. These, too, often come in pairs:

- Supination and pronation

Supination occurs when the forearm rotates and the palm of the hand turns upward. The opposite motion, which turns the palm down, is pronation.

- Plantarflexion and dorsiflexion

Two types of flexion of the foot are plantarflexion and dorsiflexion. Plantarflexion is a flexion that pushes the foot down, as though pushing a gas pedal. Dorsiflexion is its opposite: a movement that moves the foot upward.

- Eversion and inversion

Eversion moves the sole of the foot outward, in effect turning the foot sideways. Inversion, which can occur accidentally when the ankle is twisted, turns the sole inward.

- Opposition and reposition

Opposition is one of the things that identify us as humans: the ability to grasp things between the thumb and finger(s) (thus the term opposable thumb). Reposition is the letting go of this grip by spreading the finger(s) and thumb apart.

The last major movement is one that combines several movements—flexion, extension, adduction, and abduction—into one. Circumduction is the circular movement of a body part such as the wrist, arm, or even eye. Rotating the foot at the ankle is an example of circumduction.

We can use these technical terms to more precisely describe nonstandard movements in order to document those movements (see Chapter 5).

With gestural interfaces, key issues regarding the body must be considered when designing the interface itself and the accompanying interactive gestures that control it.

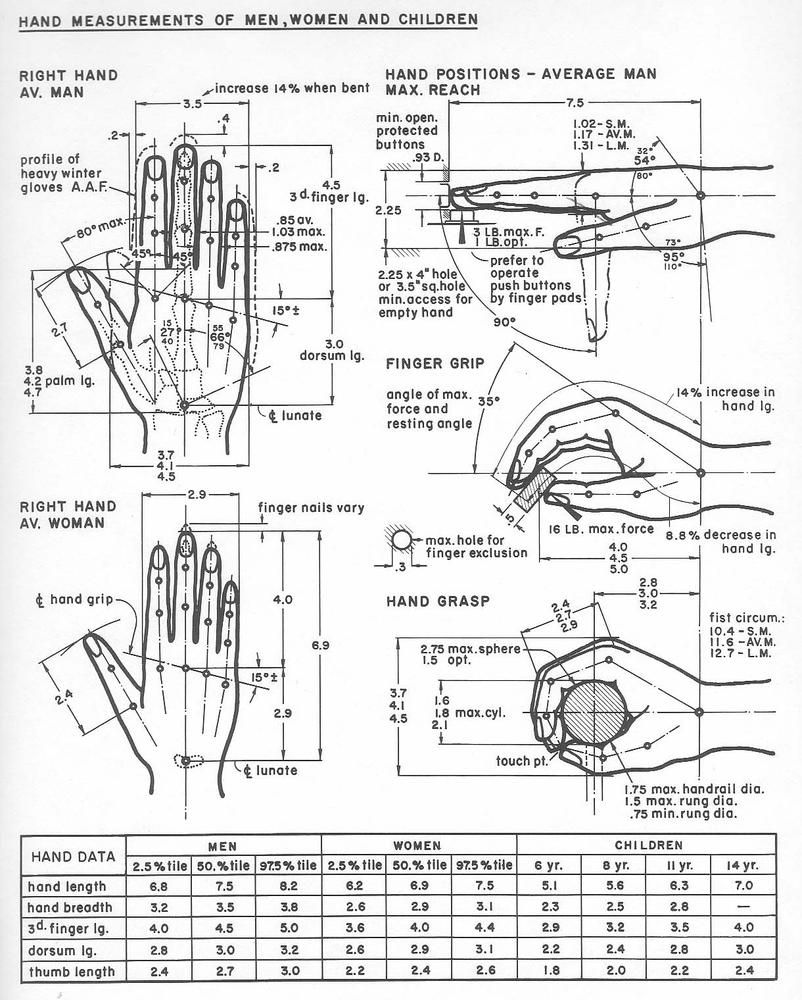

Figure 2-7. Hand data from Henry Dreyfuss's book, Designing for People. Dreyfuss created these composite figures from his research and experience with human physiology. Knowing such information helped Dreyfuss (and countless other industrial designers) to design everything from tanks to telephones. Courtesy Allworth Press.

In 1955, Henry Dreyfuss wrote a seminal book on industrial design called Designing for People (Allworth Press). In it, as well as in a book he coauthored with Alvin R. Tilley, The Measure of Man and Woman: Human Factors in Design (Wiley), Dreyfuss demonstrated how the human body should be considered when designing products. To do so, he created two composite "people," Joe and Josephine, who illustrated the common dimensions of human physiology.

Designers need to be aware of the limits of the human body when creating interfaces that are controlled by it. The simple rule of thumb (pun intended) is this:

Note

The more complicated the gesture, the fewer the people who will be able to perform it.

Almost anyone (see DESIGNING TOUCH TARGETS later in this chapter) can tap a button on a touchscreen. But not everyone is going to be able to wave her left hand while balanced on her right leg! Care should be taken so that anyone who will use the system can follow essential commands. If the users are the general populace on a system they don't use often (such as a public kiosk), the interactive gestures should be simpler than those used by expert teen gamers on their home gaming system. Motor skills decay as we get older, making it more difficult to perform physical tasks, especially those requiring fine motor skills in the hands.

Designers of gestural interfaces need to know the same things Henry Dreyfuss did: the general capabilities of the average human.

Authors Michael Nielsen, Moritz Störring, Thomas B. Moeslund, and Erik Granum, in their 2003 paper "A Procedure for Developing Intuitive and Ergonomic Gesture Interfaces for Man-Machine Interaction,"[22] have compiled a list of ergonomic principles to be aware of when considering motions:

- Avoid "outer positions," those that cause hyperextension or extreme stretches.
- Avoid repetition.
- Relax muscles.
- Utilize relaxed, neutral positions.
- Avoid staying in a static position.
- Avoid internal and external force on joints.

These principles serve designers well when selecting gestures to make interactive.

Since fingers and hands play such an important role in interactive gestures, especially for touchscreens, let's take a close look at them.

Adult fingers typically have a diameter of 16 mm to 20 mm (0.6 inches to 0.8 inches).[23] Children's and teens' fingers may be smaller, whereas disabled, elderly, or obese people may have misshapen or larger fingers.

For pushing buttons or touching screens, usually the pad of the finger is used instead of the tip. Fingertips are narrow, only 8–10 mm (0.3–0.4 inches) wide. Because of this small surface area, humans usually push buttons at an acute angle using the pad of the finger, not straight on using the tip of the finger. Finger pads are wider than fingertips, but narrower than the full finger, typically 10–14 mm (0.4–0.55 inches).

Fingernails are a blessing and a curse. Long fingernails, although unlikely to scratch a touchscreen, can make it difficult to perform certain gestures. Made of keratin, they are hard and smooth enough to mimic a stylus and are thus more accurate than a fingertip or finger pad. However, fingernails may not provide enough contact with some screens to trigger a touch event, especially capacitive touchscreens. This is especially true of fake fingernails.

Gloves, too, can make it difficult to use gestural interfaces. Not only do they usually inhibit hand movement and increase finger size, but they also may not trigger capacitive touchscreens because they do not conduct electricity. In climates where cold weather often necessitates wearing gloves, care should be taken when deploying gestural interfaces.

Although the exact percentage is unknown, studies have suggested that 7% to 10% of adults are left-handed, meaning they favor their left hand for tasks such as writing and, one can surmise, would do so with interactive gestures as well. Although most gestural interfaces should be optimized for the roughly 90% of users who are right-handed, southpaws should not be unduly burdened, for example by having to reach across the screen to the right-hand side constantly to perform actions. A means to flip the controls or adjust the layout for lefties can be a good design practice.
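One simple way to "flip the controls" is to mirror each control's horizontal position across the screen. The following is only a minimal sketch of that idea; the function name, the pixel units, and the example dimensions are hypothetical and do not come from any particular toolkit.

```python
def mirror_x(x, width, screen_width):
    """Return the new left edge of a control after flipping the layout horizontally.

    x is the control's current left edge, width its width, and screen_width
    the width of the screen, all in the same unit (pixels here).
    """
    return screen_width - (x + width)


# A hypothetical 80-pixel-wide button near the right edge of an 800-pixel screen
# moves to the matching spot near the left edge.
left_handed_x = mirror_x(700, 80, 800)
print(left_handed_x)  # 20
```

Mirroring twice returns a control to where it started, so the same function can serve a left-handed or right-handed setting.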

Fingers also have natural oils and/or can get slippery, which can make it tricky, if not impossible, to manipulate things. Touchscreens, too, can get oily and worn, making them difficult to use. Finger oil (and dirt) also means fingerprints and smudges. Patterns or bright backgrounds can help hide them. Black—seemingly the default choice for many manufacturers—makes fingerprints even more noticeable.

Another ergonomic problem with gestural interfaces is that, because there is typically no wrist support, using one for a long period of time can tire the hand and fingers. This is especially true with touchscreens, because significant pressure is often required to engage them, and the screens, being made of glass, don't bend or give.

Fingers are also just messier and more inaccurate than cursors are. As reported in the New York Times Magazine:[24]

"In 2005, the state of California complained that the [touchscreen voting] machines [Diebold AccuVote-TSX] were crashing. In tests, Diebold determined that when voters tapped the final 'cast vote' button, the machine would crash every few hundred ballots. They finally intuited the problem: their voting software runs on top of Windows CE, and if a voter accidentally dragged his finger downward while touching 'cast vote' on the screen, Windows CE interpreted this as a 'drag and drop' command. The programmers hadn't anticipated that Windows CE would do this, so they hadn't programmed a way for the machine to cope with it. The machine just crashed."

Designers and developers thus need to remember that human fingers are an imperfect input device and account for this "flaw."
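One common way to account for a finger that slips slightly during a tap is to treat small movements between touch-down and touch-up as a tap rather than a drag. The sketch below illustrates that idea only; it is not how the voting machines were fixed, and the function name and the 10-pixel tolerance are assumptions chosen for illustration.

```python
import math


def classify_touch(down, up, slop=10.0):
    """Classify a touch as 'tap' or 'drag' from its start and end points.

    down and up are (x, y) positions in pixels. slop is a hypothetical
    tolerance: movement up to this distance still counts as a tap.
    """
    moved = math.hypot(up[0] - down[0], up[1] - down[1])
    return "tap" if moved <= slop else "drag"


print(classify_touch((100, 200), (102, 206)))  # tap: the finger slid only a few pixels
print(classify_touch((100, 200), (100, 240)))  # drag: the finger moved 40 pixels
```

The right tolerance depends on the screen's pixel density and on the users, so it should be tuned by testing with real fingers on the real device.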

Warning

Because of the inaccuracy of our fingers and hands, it is best not to make similar gestures for different actions in the same system for fear of users accidentally triggering one instead of the other.

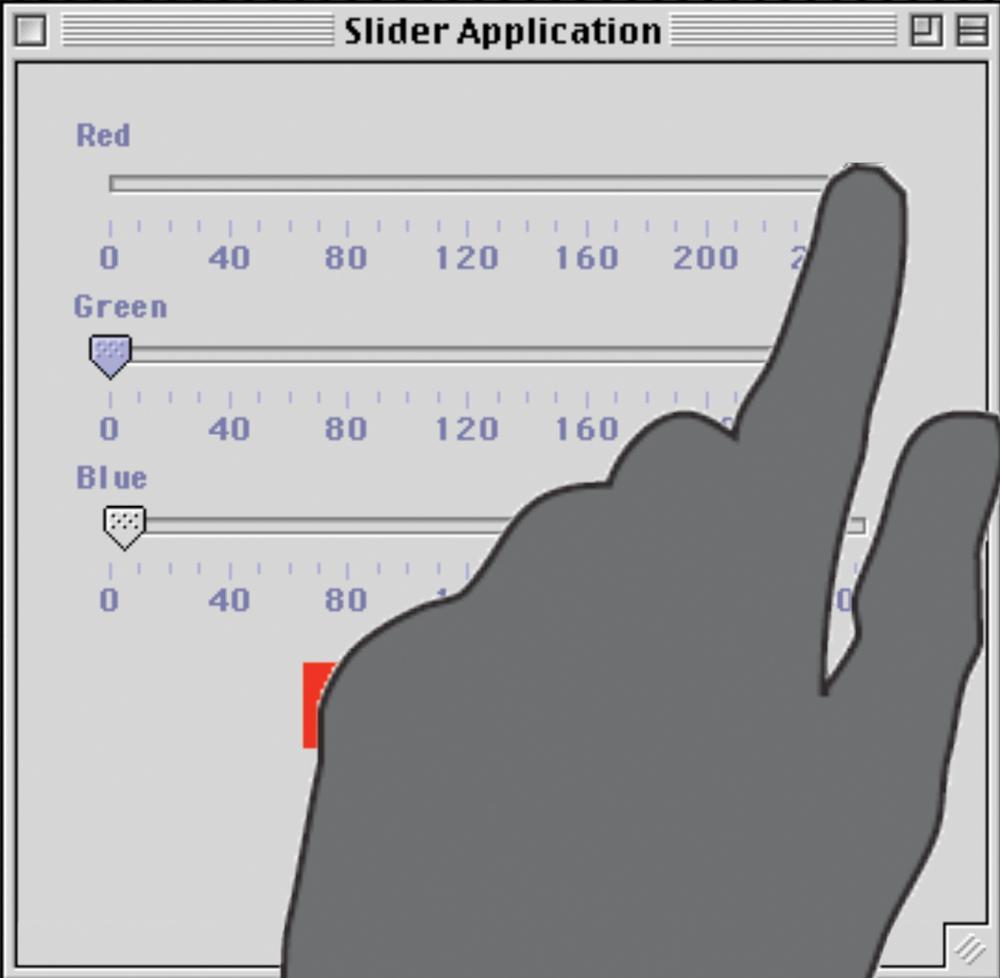

Finally, unlike a mouse cursor, our finger pads don't float transparently in space (unless they're Ghost Fingers; see Chapter 3). The rest of the finger, the hand, and the arm will likely cover up some part of the interface while the user is touching it, especially the part of the screen immediately below what the user is interacting with. Thus, it is good practice to keep the following warning in mind.

Warning

Never put essential information or features such as a label, instructions, or subcontrols below an interface element that can be touched, as it may be hidden by the user's own hand and arm.

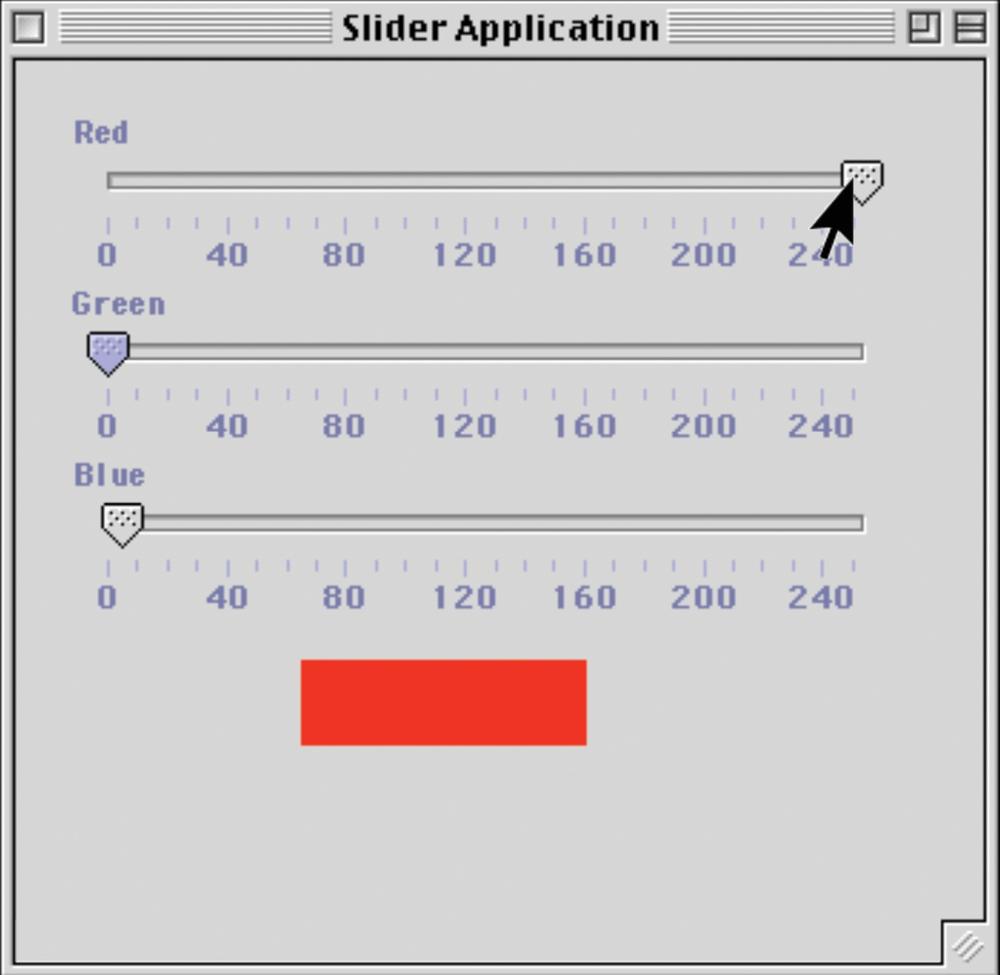

Figure 2-8. An illustration of the difference between designing for a cursor and designing for a hand. The hand covers up not only the labels on the slider, but also the display showing the color that is being created.

Placing menus and controls at the bottom of the screen instead of in their traditional place at the top is helpful to prevent screen coverage.

Hands can also interfere with the technology of touchscreens, causing poor readings and accidental touch events if users rest their palms on the screen while performing a task. For some touchscreens, the solution to this is straightforward: mount the touchscreen vertically so that users cannot rest their palms on its surface. This won't, of course, work with mobile devices, but it is a solution for kiosks and displays.

All of these human factors have some interface implications, especially when designing touch targets.

Since 1954, when it was proposed by psychologist Paul Fitts,[25] Fitts' Law (pronounced "Fittzez Law") has guided computer scientists, engineers, and interaction, interface, and industrial designers when creating products, especially software. Fitts' Law states that the time it takes for a user to reach a target by pointing with a finger or with a device such as a mouse depends on the distance to the target divided by the size of the target; more precisely, the time grows with the logarithm of that ratio. Thus, a large target that is close to the user is easier to point to than a smaller one farther away.

This law still holds true for interactive gestures, perhaps even more so. Touchscreen and other visual targets need to be designed so that important controls are close to the user, minimizing reaching across the interface. Equally important is that the objects being manipulated (buttons, dials, and so on) are large enough for an average human fingertip to touch.
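To make the relationship concrete, the sketch below estimates pointing time using the Shannon formulation of Fitts' Law that is commonly used in human-computer interaction work, MT = a + b × log2(D/W + 1). That formulation is an assumption here rather than something stated in this chapter, and the constants a and b are illustrative placeholders; in practice they are fitted from measurements of a specific device and group of users.

```python
import math


def fitts_movement_time(distance, width, a=0.1, b=0.15):
    """Estimate pointing time in seconds with the Shannon form of Fitts' Law.

    distance (to the target) and width (of the target) must use the same unit.
    a and b are illustrative placeholder constants, not measured values.
    """
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    index_of_difficulty = math.log2(distance / width + 1)
    return a + b * index_of_difficulty


# A large, nearby target versus a small, distant one (units: cm).
near_large = fitts_movement_time(distance=5, width=2)
far_small = fitts_movement_time(distance=20, width=0.5)
print(near_large < far_small)  # True
```

Whatever the constants turn out to be, the comparison holds: shrinking a target or moving it farther away raises the estimated time.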

The range for what counts as an acceptable target varies widely, but one reasonable guideline is that interface elements that a user has to select and manipulate ideally should be no smaller than the smallest average finger pad, which is to say no smaller than 1 cm (0.4 inches) in diameter, or a 1×1 cm square.

However, what 1 cm translates into pixel-wise varies depending on the pixel density or pixels per inch (PPI) of the screen. Pixel density is a measurement of the resolution of a computer display, related to the size of the screen in inches and the number of pixels available (the screen resolution). You determine pixel density by dividing the width (or height) of the display area in pixels by the width (or height) of the display area in inches. The higher the PPI, the more pixels your interface elements will need to span to create suitable touch targets.

Note

To calculate what an ideal size for a target should be (in pixels), use this formula: target size in pixels = (target size in inches) × (screen width in pixels) / (screen width in inches)

There can be a wide variation in size depending on the PPI. For instance, Nokia's N800 has a 4.1-inch screen size, an 800×480-pixel resolution, and therefore a PPI of 225. A 1 cm (0.4-inch) button on it would be 90 pixels. In contrast, a 1 cm (0.4-inch) button on a 15-inch touchscreen with a 1,024×768 resolution and thus a PPI of 85 is only 34 pixels, almost two-thirds smaller.[26] In short, whenever possible, measure and prototype your touch targets using actual dimensions and resolutions to ensure that they are correctly sized (see Chapter 6 for more on prototyping). Ideally, you will want to test the touch targets with users as well.
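The arithmetic above can be sketched in a few lines of code. The function names below are hypothetical. The first function assumes that the quoted screen size is the diagonal and derives PPI from the diagonal resolution, so its result for the N800 comes out slightly above the rounded 225 used in the text; the second applies the target-size formula and reproduces the 90-pixel and 34-pixel figures.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density, assuming the quoted screen size is the diagonal in inches."""
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


def target_size_px(target_in, ppi):
    """Number of pixels needed for a target of the given physical size in inches."""
    return round(target_in * ppi)


# Using the rounded PPI figures quoted in the text:
print(target_size_px(0.4, 225))  # 90
print(target_size_px(0.4, 85))   # 34

# Deriving the PPI of the 15-inch, 1,024x768 screen from its diagonal:
print(round(pixels_per_inch(1024, 768, 15)))  # 85
```

Because the result depends on rounded inputs, treat the output as a starting point and confirm it by measuring the target on the actual screen.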

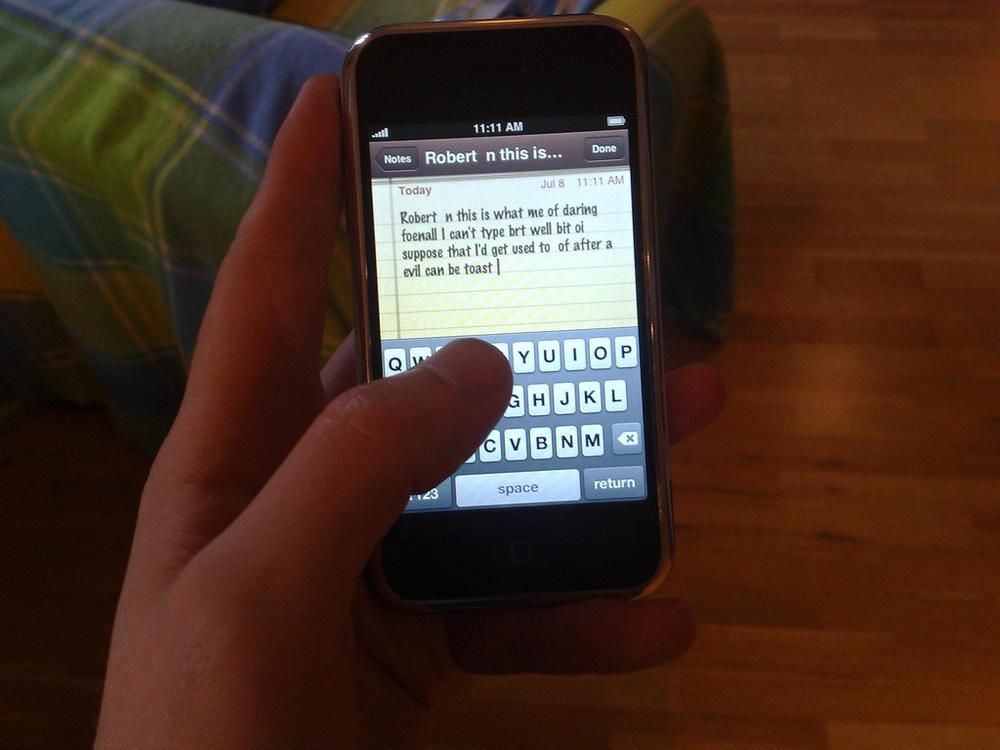

All that being said, many touchscreen devices on the market have much smaller touch targets than this guideline, particularly when it comes to on-screen keyboards.

Figure 2-9. The keyboard on the iPhone contains some of the smallest touch targets (5 mm or 0.2 inches) of any gestural interface. It uses adaptive targets to partially get around this limitation. Courtesy Nick Richards.

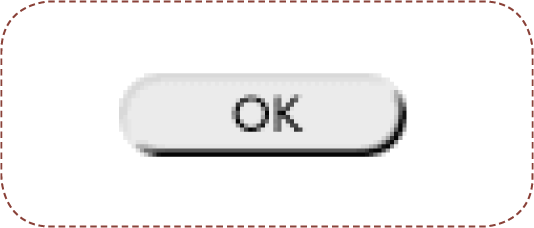

Figure 2-10. An illustration of an iceberg tip. The dotted line represents the invisible edge of the touch target.

There are two ways around the size limitations of touch targets: iceberg tips and adaptive targets. Like icebergs that are mostly underwater, iceberg tips are controls that have a larger target than what is visible. That is, the touch target is larger than the visible icon representing it. Using iceberg tips, designers can increase the size of the touch target without increasing the size of the object itself, which can lead to large, ungainly icons. The implication, however, is that there has to be enough space between objects to create this effect. Objects that are directly adjacent won't be able to use this trick to its best effect, unless they make use of adaptive targets.
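An iceberg tip can be sketched as a hit test that checks a rectangle larger than the visible icon. The class and field names below are hypothetical, and the invisible margin of 10 units is an arbitrary illustrative value.

```python
from dataclasses import dataclass


@dataclass
class Target:
    x: float               # left edge of the visible icon
    y: float               # top edge of the visible icon
    width: float           # visible width
    height: float          # visible height
    padding: float = 0.0   # invisible margin added on every side

    def hit(self, touch_x, touch_y):
        """Return True if the touch lands inside the icon or its invisible margin."""
        return (self.x - self.padding <= touch_x <= self.x + self.width + self.padding
                and self.y - self.padding <= touch_y <= self.y + self.height + self.padding)


icon = Target(x=100, y=100, width=20, height=20, padding=10)
print(icon.hit(95, 105))  # True: outside the visible icon, inside the hidden margin
print(icon.hit(85, 105))  # False: outside the margin as well
```

When two such targets sit close together, their margins can overlap, which is why the spacing caveat above matters.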

Adaptive targets are created algorithmically by guessing the next item the user will touch, then increasing the touch target appropriately. Usually, this increases the hidden part of the "iceberg" as described earlier, not the object itself, although that too is possible. This trick is often used with keyboards, such as on the iPhone. For example, if a user types the letters t and h in a row, the system predicts that the next likely letter the user will type is e (forming the word the), and not the letters r, w, or d or the number 3 (i.e., the keys in the general area surrounding e that a user might accidentally touch). Of course, designers and developers need to be careful not to overpredict users' actions, and when making such predictions to always allow users to undo the guess, via either a Delete key or an undo command.
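The following sketch shows one possible way to implement adaptive targets: each key's invisible hit radius grows in proportion to how likely that key is to be typed next. It is not the iPhone's actual algorithm, which is not described here. The key positions, the probabilities after typing "th", and the radius values are all made up for illustration.

```python
import math


def pick_key(touch, key_centers, next_probs, base_radius=5.0, max_bonus=4.0):
    """Return the key whose adaptively enlarged target best contains the touch.

    Each key's hit radius is base_radius plus a bonus proportional to its
    predicted probability. Returns None if the touch misses every key.
    """
    best_key, best_margin = None, 0.0
    for key, (cx, cy) in key_centers.items():
        radius = base_radius + max_bonus * next_probs.get(key, 0.0)
        margin = radius - math.hypot(touch[0] - cx, touch[1] - cy)
        if margin >= 0 and (best_key is None or margin > best_margin):
            best_key, best_margin = key, margin
    return best_key


# Hypothetical key centers and next-letter probabilities after the user types "th".
keys = {"w": (0, 0), "e": (10, 0), "r": (20, 0)}
after_th = {"e": 0.8, "r": 0.1, "w": 0.05}

sloppy_touch = (15.5, 0)                       # slightly closer to "r" than to "e"
print(pick_key(sloppy_touch, keys, after_th))  # e
print(pick_key(sloppy_touch, keys, {}))        # r (no prediction, nearest key wins)
```

Capping the bonus keeps a confident prediction from swallowing neighboring keys entirely, which is one way to avoid overpredicting users' actions.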

Obviously, some gestural interfaces will be extremely difficult for those with physical challenges to operate, especially for those with limited hand movement. Although no guidelines exist yet, to be accessible to the widest possible user base, touch targets will have to be large (perhaps 150% of the typical size, or 1.5 cm square) and gestures simple and limited (taps, waves, proximity alone). Anything beyond some basic movements (tap, wave, flick, press) that use single fingers or the whole hand as one entity may be challenging for some disabled users. Even patterns such as Pinch to Shrink can be tricky.

On the positive side, some gestural interfaces might be more accessible than most keyboard/mouse systems, requiring only gross motor skills or facial recognition to engage them.

The body is an amazingly flexible tool with a wide variety of gestures. The hands alone have the potential for hundreds of possible configurations that could be used to trigger a system response. It is just up to designers to employ them correctly and not to overstrain users.

The next two chapters examine gestural interfaces that combine some of these physical gestures with system behaviors to form a pattern. Chapter 3 examines those patterns for direct manipulation, and Chapter 4 details those for indirect manipulation.

Designing for People, Henry Dreyfuss (Allworth Press)

Abstracting Craft: The Practiced Digital Hand, Malcolm McCullough (MIT Press)

The Measure of Man and Woman: Human Factors in Design, Alvin R. Tilley and Henry Dreyfuss (Wiley)

[22] You can download the paper from http://citeseer.ist.psu.edu/560798.html

[23] For more, very technical details, see "3-D Finite-Element Models of Human and Monkey Fingertips to Investigate the Mechanics of Tactile Sense," by Kiran Dandekar et al., The Touch Lab, Department of Mechanical Engineering and The Research Laboratory of Electronics, Massachusetts Institute of Technology.

[24] Thompson, Clive. "Can You Count on Voting Machines?", The New York Times Magazine, January 6, 2008.

[25] See Fitts' "The information capacity of the human motor system in controlling the amplitude of movement," in Journal of Experimental Psychology 47(6): 381–391, June 1954.

[26] See https://help.ubuntu.com/community/UMEGuide/DesigningForFingerUIs for a table of some common screen sizes and the corresponding minimum button size.