Chapter 12

Feedback Loop Analysis and Stability

This chapter advances from the very basics to full stability analysis of power converters. Time and frequency domain analyses are first explained along with the s-plane and the underlying concept behind the Laplace transform. Mathematics in the log-plane is described, and passive filter responses are plotted out. The concept of poles and zeros follows. Basic control loop theory is explained, and the plant transfer functions of the fundamental topologies are all derived. Transconductance amplifiers (OTAs) are compared with conventional voltage op-amps, and it is shown how to use them for implementing a feedback section and thereby closing the loop. Types 1–3 compensation techniques are discussed in detail, and ways to carry out pole-zero cancellation to achieve desirable open-loop gain and phase characteristics are explained. Both voltage-mode control and current-mode control are discussed, and detailed numerical examples are included. Extras include a discussion of the RHP zero, subharmonic instability and line feedforward.

Transfer Functions, Time Constant, and the Forcing Function

In converters, we often refer to the steady-state ratio: output divided by input, VO/VIN, as the “DC transfer function” of the converter. We can define transfer functions in many ways. For example, in Chapter 1 we discussed a simple series resistor–capacitor (RC) charging circuit (see top schematic of Figure 1.3). By closing the switch we were, in effect, applying a step voltage to the RC. Let us call the voltage step “vi” (its height).

That was the “input” or “stimulus” to the system. It resulted in an “output” or “response” — which we implicitly defined as the voltage appearing across the terminals of the capacitor, that is, vO(t). So, the ratio of the output to the input was also a “transfer function”:

$$\frac{v_O(t)}{v_i} = 1 - e^{-t/RC}$$

Note that this transfer function depends on time. In general, any output (“response”) divided by input (“stimulus”) is called a “transfer function.”

A transfer function need not be “Volts/Volts” (i.e., dimensionless). In fact, neither the input nor the output of any such two-port network need necessarily even be a voltage. The input and output need not even be two similar quantities. For example, a two-port network can be as simple as a current sense resistor. Its input is the current flowing into it, and its output may be considered as the sensed voltage across it. So, its transfer function has the units of voltage divided by current, that is, resistance. Or we could pass a current through the resistor, but consider the response under study as its temperature. So, that would be the output now. Later, when we analyze a power supply in more detail, we will see that its pulse-width modulator (PWM) section, for example, has an input that is called the “control voltage” (output of error amplifier), but its output is a dimensionless quantity: the duty cycle (of the converter). So, the transfer function in that case has the units of Volts$^{-1}$. We realize that the phrase “transfer function” is a very broad term.

In this chapter, we start analyzing the behavior of the converter to sudden changes in its DC levels, such as those that occur when we apply line and load variations. These changes cause the output to temporarily move away from its set DC regulation level VO, and therefore give its feedback circuitry the job of correcting the output in a manner deemed acceptable. Note that in this “AC analysis,” it is understood that what we are referring to as the output or response is actually the change in VO. The input or stimulus, though certainly a change too, is defined in many different ways as we will soon see. In all cases, we are completely ignoring the DC-bias levels of the converter and focusing only on the changes around those levels. In effect, we are studying the converter’s “AC transfer functions.”

How did we actually arrive at the transfer function of the RC circuit mentioned above? For that, we first use Kirchhoff’s voltage law to generate the following differential equation:

$$v_i = v_{res}(t) + v_{cap}(t) = i(t)\,R + \frac{q(t)}{C}$$

where i(t) is the charging current, q(t) is the charge on the capacitor, vres(t) is the voltage across the resistor, and vcap(t) is the voltage across the capacitor (i.e., vo(t), the output). Further, since charge is related to current by dq(t)/dt=i(t), we can write the above equation as

$$v_i = R\,\frac{dq(t)}{dt} + \frac{q(t)}{C}$$

or

$$\frac{dq(t)}{dt} + \frac{q(t)}{RC} = \frac{v_i}{R}$$

To solve this, we “cheat” a little. Knowing the properties of the exponential function $y(x)=e^{x}$, we do some educated reverse-guessing. And that is how we get the solution:

$$q(t) = C\,v_i\left(1 - e^{-t/RC}\right)$$

Substituting q=C×vcap, we arrive at the required transfer function of the RC-network given earlier.

Note that the differential equation for q(t) above is in general a “first-order” differential equation, because it involves only the first derivative with respect to time.

Later, we will see that there is a better way to solve such equations — it invokes a mathematical technique called the “Laplace transform.” To understand and use that, we have to first learn to work in the “frequency domain” rather than in the “time domain” as we have been doing so far above. We will explain that soon.

Here we note that in a first-order differential equation of the above type, the term that divides q(t) (“RC” in our case) is called the “time constant,” whereas the constant term in the equation (“vi/R” in our case) is called the “forcing function.”
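As a quick sanity check, the first-order equation dq/dt + q/(RC) = vi/R can be integrated numerically and compared against the standard closed-form charging solution q(t) = C×vi×(1 − e^(−t/RC)). The sketch below is only illustrative: the component values (10 V step, 1 kΩ, 1 µF) and the function names are assumptions chosen for this example, and forward Euler is used purely because it is the simplest integration scheme.

```python
import math

def rc_charge_numeric(v_i, R, C, t_end, steps):
    """Integrate dq/dt = v_i/R - q/(R*C) with forward Euler, starting from q(0) = 0."""
    dt = t_end / steps
    q = 0.0
    for _ in range(steps):
        dq_dt = v_i / R - q / (R * C)   # forcing function minus q divided by the time constant
        q += dq_dt * dt
    return q

def rc_charge_exact(v_i, R, C, t):
    """Closed-form solution q(t) = C*v_i*(1 - exp(-t/(R*C)))."""
    return C * v_i * (1.0 - math.exp(-t / (R * C)))

# Hypothetical values: 10 V step, 1 kOhm, 1 uF, so the time constant RC is 1 ms
V_I, R, C = 10.0, 1e3, 1e-6
tau = R * C

q_num = rc_charge_numeric(V_I, R, C, t_end=tau, steps=100_000)
q_exact = rc_charge_exact(V_I, R, C, tau)
v_cap_ratio = q_exact / (C * V_I)       # v_cap/v_i after one time constant

print(f"numeric q = {q_num:.6e} C, exact q = {q_exact:.6e} C")
print(f"v_cap/v_i at t = RC: {v_cap_ratio:.4f}")
```

After one time constant the capacitor has reached about 63% of the applied step, which is why RC is such a convenient yardstick for “how fast” a first-order circuit responds.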

Understanding “e” and Plotting Curves on Log Scales

We can see that the solution to the previous differential equation brought up the exponential constant “e,” where e≈2.718. We can ask: why do circuits like this always seem to lead to exponential-type responses? Part of the reason is that the exponential function $e^{x}$ has some well-known and useful properties that contribute to its ubiquity. For example,

$$\frac{d}{dx}\,e^{x} = e^{x} \qquad \text{and} \qquad \int e^{x}\,dx = e^{x} + \text{constant}$$

But this in turn can be traced back to the observation that the exponential constant e itself happens to be one of the most natural parameters of our world. The following example illustrates this.

Example:

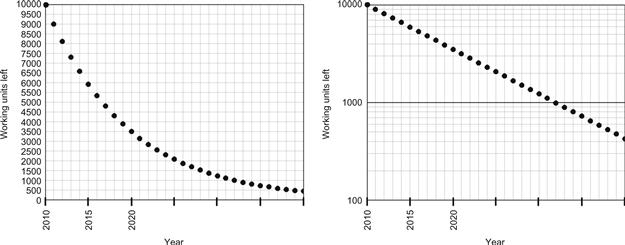

Consider 10,000 power supplies in the field with a failure rate of 10% every year. That means in 2010, if we had 10,000 working units, in 2011 we would have 10,000×0.9=9,000 units. In 2012, we would have 9,000×0.9=8,100 units left. In 2013, we would have 7,290 units left, in 2014, 6,561 units, and so on. If we plot these points (10,000; 9,000; 8,100; 7,290; 6,561; and so on) versus time, we will get the well-known decaying exponential function. See Figure 12.1. We have plotted the same curve twice: the curve on the right has a log scale on the vertical axis. Note how it now looks like a straight line. It cannot, however, ever go to zero! The log scale is explained further below.

Figure 12.1: How a decaying exponential curve is naturally generated.

Note that the simplest and most obvious initial assumption of a constant failure rate has led to an exponential curve. That is because the exponential curve is simply a succession of evenly spaced data points (very close to each other), which are in simple geometric progression — that is, the ratio of any point to its preceding point is a constant (equal intervals). Most natural processes behave similarly, and that is why “e” is encountered so frequently. In Chapter 6, we had introduced Arrhenius’ equation as the basis for failures. That too was based on “e.”
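The failure-rate example is easy to reproduce. The short sketch below (function and variable names are our own, chosen only for illustration) generates the same sequence, confirms that equal time steps produce equal steps in the logarithm (which is why the curve is a straight line on a log vertical axis), and rewrites the decay in terms of “e” to extract an equivalent time constant.

```python
import math

def surviving_units(initial, annual_failure_rate, years):
    """Units still working after each whole year, assuming a constant failure rate."""
    return [initial * (1.0 - annual_failure_rate) ** n for n in range(years + 1)]

units = surviving_units(10_000, 0.10, 4)
print([round(u) for u in units])

# On a log scale, successive points are separated by a constant step: a straight line
log_steps = [math.log10(units[n + 1]) - math.log10(units[n])
             for n in range(len(units) - 1)]
print(log_steps)

# Same curve written with base e: N(t) = N0 * exp(-t / tau), with tau = -1 / ln(0.9)
tau_years = -1.0 / math.log(0.9)
print(f"equivalent time constant: {tau_years:.2f} years")
```

A constant ratio between neighboring points (0.9 here) is all it takes to get an exponential; the base “e” simply gives that ratio its most convenient mathematical form.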

We recall that the logarithm is defined as follows: if $A=B^{C}$, then $\log_B(A)=C$, where $\log_B(A)$ is the “logarithm of A to the base B.” The commonly referred-to “logarithm,” or “log,” has an implied base of 10 (i.e., B=10), whereas the natural logarithm “ln” is an abbreviation for a logarithm with a base “e” (i.e., where B is e=2.718). We will be plotting a whole lot of curves in this chapter on “log scales.”

Remember this: if the log of any number is multiplied by 2.303, we get its natural log. Conversely, if we divide the natural log by 2.303 we get its log. This follows from

$$\ln(x) = \ln(10)\times\log(x) \approx 2.303\times\log(x)$$

Flashback: Complex Representation

Any electrical parameter is thus written as a sum of real and imaginary parts:

$$A = \mathrm{Re}(A) + j\,\mathrm{Im}(A)$$

where we have used “Re” to symbolically denote the real part of the number A and “Im” for its imaginary part. From these components, the actual magnitude and phase of A can be reconstructed as follows:

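$$|A| = \sqrt{\mathrm{Re}(A)^{2} + \mathrm{Im}(A)^{2}}$$

$$\angle A = \tan^{-1}\left(\frac{\mathrm{Im}(A)}{\mathrm{Re}(A)}\right)$$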

Impedance too is broken up into a vector in this complex representation — except that though it is frequency dependent, it is (usually) not a function of time.

The “complex impedances” of reactive components are

$$Z_L = j\omega L$$

$$Z_C = \frac{1}{j\omega C}$$

To find out what happens when a complex voltage is applied to a complex impedance, we need to apply the complex versions of our basic electrical laws. So Ohm’s law, for example, now becomes

$$V(\omega t) = I(\omega t)\times Z(\omega)$$

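We also have the following relationships (Euler’s formula and its consequences) to keep in mind:

$$e^{j\theta} = \cos\theta + j\sin\theta, \qquad \cos\theta = \frac{e^{j\theta}+e^{-j\theta}}{2}, \qquad \sin\theta = \frac{e^{j\theta}-e^{-j\theta}}{2j}$$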

Note that in electrical analysis, we set θ=ωt. Here θ is the angle in radians (180° is π radians). Also, ω=2πf, where ω is the angular frequency in radians/s and f the (conventional) frequency in Hz.

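As an example, using the above equations, we can derive the magnitude and phase of the exponential function $f(\theta)=e^{j\theta}$ as follows:

$$\left|e^{j\theta}\right| = \sqrt{\cos^{2}\theta + \sin^{2}\theta} = 1$$

$$\angle e^{j\theta} = \tan^{-1}\left(\frac{\sin\theta}{\cos\theta}\right) = \theta$$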
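These relationships can be checked numerically with Python’s built-in complex numbers. The sketch below computes magnitude and phase from the real and imaginary parts, applies that to e^(jθ), and then to the reactive impedances jωL and 1/(jωC). The component values and the 1 kHz test frequency are hypothetical, picked only to give readable numbers.

```python
import cmath
import math

def magnitude_and_phase(a):
    """Return (|A|, phase in radians) from the real and imaginary parts of A."""
    mag = math.sqrt(a.real ** 2 + a.imag ** 2)
    phase = math.atan2(a.imag, a.real)   # quadrant-aware version of atan(Im/Re)
    return mag, phase

theta = math.pi / 3                      # 60 degrees, an arbitrary test angle
mag, phase = magnitude_and_phase(cmath.exp(1j * theta))
print(f"|e^(j*theta)| = {mag:.6f}, phase = {math.degrees(phase):.2f} deg")

# Hypothetical component values for the reactive impedances at f = 1 kHz
f, L, C = 1e3, 100e-6, 10e-6
w = 2 * math.pi * f                      # angular frequency in rad/s
z_L = 1j * w * L                         # inductor: voltage leads current by 90 degrees
z_C = 1 / (1j * w * C)                   # capacitor: voltage lags current by 90 degrees

for name, z in (("Z_L", z_L), ("Z_C", z_C)):
    m, p = magnitude_and_phase(z)
    print(f"{name}: {m:.3f} ohm at {math.degrees(p):+.1f} deg")
```

Note the use of `atan2` rather than a plain arctangent of Im/Re: it keeps the correct quadrant and avoids a division by zero for purely imaginary quantities such as these impedances.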

Repetitive and Nonrepetitive Stimuli: Time Domain and Frequency Domain Analyses

Strictly speaking, no stimulus is purely “repetitive” (periodic) in the true sense of the word. “Repetitive” implies that the waveform has been exactly that way, since “time immemorial,” and remains so forever. But in the real world, there is actually a definite moment when we apply a given waveform (and another when we remove it). Even an applied “repetitive” sine wave, for example, is not repetitive at the moment it gets applied. Though, much later, the stimulus can be considered repetitive if sufficient time has elapsed from the moment of application to allow the initial transients to die out completely. This is the implicit assumption we make even when we carry out “steady-state analysis” of any circuit or converter.

But sometimes, we do want to know what happens at the exact moment of application of the stimulus. Like the case of the step voltage applied to our RC-network, we could do the same to a power supply, and we would want to ensure that its output doesn’t “overshoot” (or “undershoot”) too much at the instant of application of this “line transient.” We could also apply sudden changes in load to the power supply, and see what happens to the output rail under a “load transient.”

If we have a circuit (or network) constituted only of resistors, the voltage at any point in it is uniquely and instantaneously defined by the applied voltage. If the input varies, so does this voltage, and proportionally so. In other words, there is no “lag” (delay) or “lead” (advance) between the stimulus and the response. Time is not a variable involved in this transfer function. However, when we include reactive components (capacitors and/or inductors) in any network, it becomes necessary to start looking at how the situation changes over time in response to an applied stimulus. This is called “time-domain analysis.” Proceeding along that path, as we did in the first section of this chapter with the RC circuit, can get very intimidating very quickly as the complexity of the circuit increases. We are therefore searching for simpler analytical techniques.

We know that any repetitive (“periodic”) waveform, of almost arbitrary shape, can be decomposed into a sum of several sine (and cosine) waveforms of different frequencies. That is what Fourier series analysis is (see Chapter 18 for more on this topic). In Fourier series, though we do get an infinite series of terms, the series is a simple summation consisting of terms composed of discrete frequencies (the harmonics) (see Figure 18.1 in particular). When we deal with more arbitrary waveshapes, including those that are not periodic, we need a continuum of frequencies to decompose that waveform, and then understandably, the summation of Fourier series now becomes an integration over frequency. Note that in the new continuum of frequencies, we also have “negative frequencies,” which are clearly not amenable to intuitive visualization. But that is how the Fourier series evolved into the “Fourier transform.” In general, decomposing an applied stimulus (a waveform) into its frequency components, and understanding how the system responds to each frequency component, is called “frequency domain analysis.”

Note: The underlying reason for decomposition into components is that the components can often be considered mutually “independent” (i.e., orthogonal), and therefore tackled separately, and then their effects superimposed. We may have learned in our physics class that we can split a vector, the applied force for example, into x and y components, Fx and Fy. Then we can apply the rule Force=mass×acceleration to each x and y component of the force separately. Finally, we can sum the resulting x and y accelerations to get the final acceleration vector.

As mentioned, to study any nonrepetitive waveform, we can no longer decompose it into components with discrete frequencies as we can do with repetitive waveforms. Now we require a spread (continuum) of frequencies. That leads us to the usual simple definition of “Fourier transform,” which is simply the function f(t), multiplied by $e^{-j\omega t}$ and integrated over all time (minus infinity to plus infinity).

But one condition for using this standard definition of Fourier Transform is that the function f(t) be “absolutely integrable.” This means the magnitude of this function, when integrated over all time, remains finite. That is obviously not true even for a function as simple as f(t)=t for example. In that case, we need to multiply the function f(t) by an exponentially decaying factor $e^{-\sigma t}$ so that f(t) is forced to become integrable for certain values of the real parameter σ. So now, the Fourier transform becomes

$$F(s) = \int_{-\infty}^{\infty} f(t)\,e^{-\sigma t}\,e^{-j\omega t}\,dt = \int_{-\infty}^{\infty} f(t)\,e^{-st}\,dt, \qquad s = \sigma + j\omega$$

In other words, to allow for waveforms (or their frequency components) that can naturally increase or decrease over time, we need to introduce an additional (real) exponential term $e^{\sigma t}$. So, whereas in steady-state analysis we usually represent a sine wave in the form $e^{j\omega t}$, that now becomes $e^{\sigma t}\times e^{j\omega t}=e^{(\sigma+j\omega)t}$. Now we have “a sine wave with an exponentially increasing (σ positive), or decreasing (σ negative), amplitude.” Note that if we are only interested in performing steady-state analysis, we can go back and set σ=0. That takes us back to the case involving only $e^{j\omega t}$ (or sine and cosine terms), that is, repetitive waveforms.

The result of the integral involving “s” above is called the “Laplace transform,” and it is a function of “s” as explained further in the next section.
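As a worked illustration, consider the ramp f(t)=t mentioned above, which is not absolutely integrable on its own. We assume here (an assumption made only for this example) that the stimulus is applied at t=0 and is zero before that, so the integral runs from 0 to infinity. Integrating by parts:

```latex
\begin{aligned}
F(s) &= \int_{0}^{\infty} t\,e^{-st}\,dt
      = \left[-\frac{t\,e^{-st}}{s}\right]_{0}^{\infty}
        + \frac{1}{s}\int_{0}^{\infty} e^{-st}\,dt \\
     &= 0 + \frac{1}{s}\cdot\frac{1}{s}
      = \frac{1}{s^{2}},
      \qquad \text{valid only for } \sigma = \mathrm{Re}(s) > 0
\end{aligned}
```

The boundary term vanishes only because the decaying factor wins over the growing ramp when σ is positive; for σ≤0 the integral diverges. This is the sense in which the extra real exponential “forces” the function to become integrable for certain values of σ.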

The s-Plane

In traditional AC analysis in the complex plane, the voltages and currents were complex numbers. But the frequencies were always real, even though the frequency ω itself may have been prefixed with “j” in a manner of representation. However, now in an effort to include virtually arbitrary waveforms into our analysis, we have in effect, created a “complex-frequency plane” too, that is, s=σ+jω. This is called the s-plane. The imaginary part of this new complex-frequency number “s” is our usual (real and oscillatory) frequency ω, whereas its real part is the one responsible for the observed exponential decay of any typical transient waveform over time. Analysis in this plane is ultimately just a more generalized form of frequency domain analysis.

In this representation, the reactive impedances become

$$Z_L = sL$$

$$Z_C = \frac{1}{sC}$$

Note that resistance still remains just a pure resistance, that is, it has no dependence on frequency or on s.

To calculate the response of complex circuits and stimuli in the s-plane, we need to use the rather obvious s-plane versions of the electrical laws. For example, Ohm’s law is now

$$V(s) = I(s)\times Z(s)$$

The use of s gives us the ability to solve the differential equations arising from an almost arbitrary stimulus, in an elegant way, as opposed to the “brute-force” method in the time domain (using t). This is the Laplace transform method.

Note: Any such decomposition method can be practical, only when we are dealing with “mathematical” waveforms. Real waveforms may need to be approximated by known mathematical functions for further analysis. And very arbitrary waveforms will probably prove intractable.

Laplace Transform Method

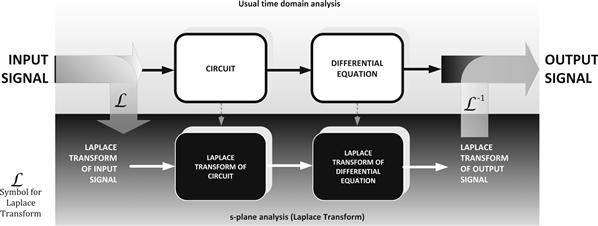

The Laplace transform is used to map a differential equation in the “time domain” (i.e., involving “t”) to the “frequency domain” (involving “s”). The procedure unfolds as explained below.

First, the applied time-dependent stimulus (one-shot or repetitive — voltage or current) is mapped into the complex-frequency domain, that is, the s-plane. Then, by using the s-plane versions of the impedances, we can transform the entire circuit into the s-plane. To this transformed circuit, we apply the s-plane versions of the basic electrical laws and thereby analyze the circuit. We will then need to solve the resultant (transformed) differential equation (now in terms of s rather than t). But as mentioned, we will be happy to discover that the manipulation and solution of such differential equations are much easier to do in the s-plane than in the time domain. In addition, there are also several lookup tables for the Laplace transforms of common functions available, to help along the way. We will thus get the response of the circuit in the frequency domain. Thereafter, if so desired, we can use the “inverse Laplace transform” to recover the result in the time domain. The entire procedure is shown symbolically in Figure 12.2.

Figure 12.2: Symbolic representation of the procedure for working in the s-plane.

A little more math is useful at this point, as it will aid our understanding of the principles of feedback loop stability later.

Suppose the input signal (in the time domain) is u(t) and the output is v(t), and they are connected by a general second-order differential equation of the type

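$$c_2\,\frac{d^{2}u(t)}{dt^{2}} + c_1\,\frac{du(t)}{dt} + c_0\,u(t) = d_2\,\frac{d^{2}v(t)}{dt^{2}} + d_1\,\frac{dv(t)}{dt} + d_0\,v(t)$$

where the coefficients $c_k$ and $d_k$ are constants. It can be shown that if U(s) is the Laplace transform of u(t), and V(s) the transform of v(t), then this equation (in the frequency domain) becomes simply

$$c_2\,s^{2}\,U(s) + c_1\,s\,U(s) + c_0\,U(s) = d_2\,s^{2}\,V(s) + d_1\,s\,V(s) + d_0\,V(s)$$

So,

$$V(s) = \frac{c_2\,s^{2} + c_1\,s + c_0}{d_2\,s^{2} + d_1\,s + d_0}\;U(s)$$

We can therefore define G(s), the transfer function (i.e., output divided by input, now in the s-plane), as

$$G(s) = \frac{c_2\,s^{2} + c_1\,s + c_0}{d_2\,s^{2} + d_1\,s + d_0}$$

Therefore,

$$V(s) = G(s)\times U(s)$$

Note that this is analogous to the time-domain version of a general transfer function f(t):

$$v(t) = f(t)\times u(t)$$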

Since the solutions for the general equation G(s) above are well-researched and documented, we can easily compute the response (V) to the stimulus (U).
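To make this concrete, a transfer function that is a ratio of two polynomials in s can be evaluated at any complex frequency with a few lines of code. The sketch below is only illustrative: the helper names `poly` and `G` are our own, and the coefficients are hypothetical (they happen to describe an LC low-pass filter loaded by a resistor, G(s)=1/(LCs²+(L/R)s+1), chosen simply because the result is easy to check by hand).

```python
import cmath
import math

def poly(coeffs, s):
    """Evaluate coeffs[0]*s^n + ... + coeffs[n] using Horner's rule."""
    result = 0j
    for c in coeffs:
        result = result * s + c
    return result

def G(num, den, s):
    """Transfer function G(s) = V(s)/U(s) as a ratio of two polynomials in s."""
    return poly(num, s) / poly(den, s)

# Hypothetical coefficients, for illustration only:
# G(s) = 1 / (L*C*s^2 + (L/R)*s + 1)
L, C, R = 10e-6, 100e-6, 1.0
num = [0.0, 0.0, 1.0]
den = [L * C, L / R, 1.0]

w0 = 1.0 / math.sqrt(L * C)            # frequency at which the s^2 term cancels the constant
g_low = G(num, den, 1j * 2 * math.pi * 10.0)   # well below w0
g_res = G(num, den, 1j * w0)                   # exactly at w0

for label, g in (("10 Hz", g_low), ("w0", g_res)):
    print(f"{label:>6}: |G| = {abs(g):.4f}, phase = {math.degrees(cmath.phase(g)):+.2f} deg")
```

Here s is set to jω because we are asking for the steady-state (sine wave) response; nothing in `G` itself prevents us from passing a general s=σ+jω.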

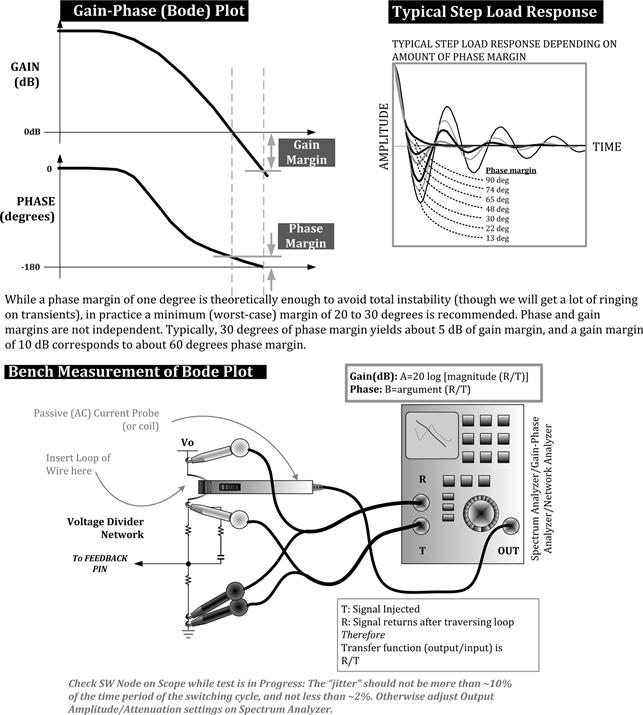

A power supply designer is usually interested in ensuring that his or her power supply operates in a stable manner over its operating range. To that end, a sine wave is injected at a suitable point in the power supply, and the frequency swept, to study the response. This could be done in the lab and/or “on paper” as we will soon see. In effect, what we are looking at closely is the response of the power supply to any frequency component of a repetitive or nonrepetitive impulse. But in doing so, we are, in effect, only dealing with a steady sine wave stimulus (swept). So, we can then put s=jω (i.e., σ=0).

We can ask — why do we need the complex s-plane at all if we are just going to set s=jω anyway at the end? The answer to that is — we don’t always just do that. For example, we may at some later stage want to compute the exact response of the power supply to a specific disturbance (like a step change in line or load). Then we would need the s-plane and the Laplace transform method. So, even though, we may just end up doing steady-state analysis, by having already characterized the system within the framework of s, we retain the option to be able to conduct a more elaborate analysis of the system response to a more general stimulus if required.

A silver lining for the beleaguered power supply designer is that he or she doesn’t usually even need to know how to actually compute the Laplace transform of a function, unless the exact step response (for example, the overshoot or undershoot resulting from a load transient) has to be computed. If the purpose is only to ensure sufficient stability margin is present, steady-state analysis serves the purpose. For that we simply sweep over all possible steady frequencies of input disturbance (either on paper or in the lab), and ensure there is no possibility of ever reinforcing the applied disturbance and making things worse. So, in a full-fledged mathematical analysis, it is convenient to work in the generalized s-plane. At the end, if we just want to calculate the stability margin, we can revert to s=jω. If we want to do more, we have that option too.

Disturbances and the Role of Feedback

In power supplies, we can either change the applied input voltage or increase the load. (This may or may not be done suddenly.) Either way, we always want the output to remain well regulated, and therefore, in effect, to “reject” the disturbance.

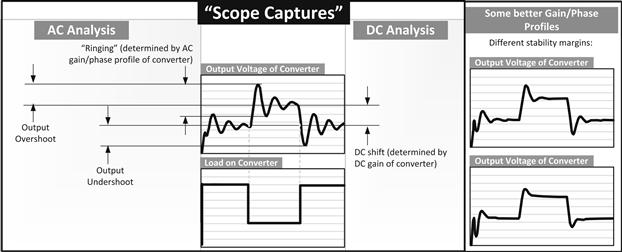

But in practice, that clearly does not happen as perfectly as desired. See Figure 12.3 for typical responses of converters to load transients. If instead of load, we suddenly increase the input voltage to a Buck regulator, the output tends to follow suit initially, since D=VO/VIN (i.e., VO=D×VIN), and D has not immediately changed. This means, very briefly, VO is proportional to VIN.
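A small numerical illustration may help. For an ideal Buck in continuous conduction, D=VO/VIN, so VO=D×VIN. The sketch below uses a hypothetical 12 V to 5 V converter whose input suddenly steps to 15 V; it computes the level the output heads toward while the duty cycle is still uncorrected, and the duty cycle the loop must eventually settle at. The numbers are assumptions for illustration, and the delaying effect of the LC filter is ignored.

```python
def buck_output(v_in, duty):
    """Ideal (lossless, continuous-conduction) Buck: V_O = D * V_IN."""
    return duty * v_in

# Hypothetical operating point: 12 V in, 5 V out
V_IN, V_O = 12.0, 5.0
duty = V_O / V_IN                       # steady-state duty cycle before the line step

v_in_new = 15.0                         # input suddenly steps up
v_o_uncorrected = buck_output(v_in_new, duty)   # where VO heads if D is left unchanged
duty_new = V_O / v_in_new               # duty cycle needed to restore regulation

print(f"D before step: {duty:.4f}")
print(f"VO with old D at new VIN: {v_o_uncorrected:.2f} V")
print(f"D required after step: {duty_new:.4f}")
```

The gap between the uncorrected output and the 5 V target is what the feedback loop has to remove, and how quickly and smoothly it does so is the subject of the rest of this section.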

Figure 12.3: Effect of load transients, typical responses and related terms.

To successfully correct the output and perform regulation, the control section of the IC needs to first sense the change in the output, which may take some time. After that it needs to correct the duty cycle, and that also may take some time. Then we have to wait for the inductor and output capacitor to either give up some of their stored energy or to gather some more — whatever is consistent with the conditions required for the new and final steady state. Eventually, the output will hopefully settle down again to its new DC value. We see that there are several such delays in the circuit before we can get the output to stabilize. Minimizing these delays is clearly of great interest. Therefore, for example, just using smaller filter components (L and C) will often help the circuit respond faster.

Note: A philosophical question: how can the control circuit ever know beforehand, how much correction (in duty cycle) to precisely apply (when it senses that the output has shifted from its set value on account of the disturbance)? In fact, it usually doesn’t! It can only be designed to know the general direction to move in, but it does not know beforehand, by how much it needs to move. Hypothetically speaking, we can do several things at our end. For example, we can command the duty cycle to change slowly and progressively, with the output being continuously monitored, and then immediately stop correcting the duty cycle at the very exact moment when the output equals its required regulation level. The duty cycle will thus never exceed the final level it is supposed to be in. However, clearly this is a slow correction process, and so though the duty cycle itself won’t overshoot or undershoot, the output will certainly remain uncorrected for a rather long time. In effect, that amounts to a relative output droop or overshoot, though it is not oscillatory in nature. Another way is to command the duty cycle to change suddenly by a large arbitrary amount (though, of course, in the right direction). However, now the possibility of output overcorrection arises. The output will start getting “corrected” immediately, but because the duty cycle is far in excess of its final steady value, the output will “go the other way,” before the control realizes it. After that, the control does try to correct it again, but it will likely “overreact” again. And so on. In effect, we now get “ringing” at the output. This ringing reflects a basic cause-effect uncertainty that is present in any feedback loop — the control may never fully know for sure whether the error it is seeing on the output is (a) immediate or delayed and (b) whether it is truly an external disturbance, rather than a result of its own attempted correction (coming back to haunt it, in a sense). 
So, if only after a lot of such avoidable ringing, the output does manage to stabilize, the converter is considered “marginally stable.” In the worst case, this ringing may go on forever, even escalating, before it stabilizes at some constantly oscillating level. In effect, the control loop is now “fully confused,” and the feedback loop is “unstable.”

An “optimum” feedback loop is neither too slow, nor too fast. If it is too slow, the output will exhibit severe overshoot (or undershoot), though the output will not “ring.” If it is too fast (overaggressive), the output will ring severely and even break into full instability (oscillations).
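The trade-off can be illustrated with a deliberately simple toy loop; it is not a converter model. We assume (purely for illustration) that at every step the controller nudges the output by an amount proportional to the error, but that it only sees the output as it was one step earlier. The names `simulate_loop`, `overshoot`, and the three gain values are hypothetical.

```python
def simulate_loop(gain, steps=100, target=1.0):
    """Toy loop: each correction is proportional to the error measured one step
    earlier (a one-sample sensing delay).

        y[n+1] = y[n] + gain * (target - y[n-1])
    """
    y = [0.0, 0.0]                      # output history: y[n-1], y[n]
    for _ in range(steps):
        delayed_error = target - y[-2]  # the controller acts on a stale measurement
        y.append(y[-1] + gain * delayed_error)
    return y

def overshoot(y, target=1.0):
    """Largest excursion above the target (0 if the output never exceeds it)."""
    return max(0.0, max(y) - target)

slow = simulate_loop(0.1)        # cautious correction
fast = simulate_loop(0.8)        # aggressive correction
unstable = simulate_loop(1.2)    # overaggressive correction

for name, y in (("slow", slow), ("fast", fast), ("unstable", unstable)):
    print(f"{name:9s} overshoot = {overshoot(y):12.3f}, final value = {y[-1]:12.3f}")
```

With the low gain the output creeps toward its target without ever crossing it, but stays uncorrected for many steps. With the higher gain it gets there sooner but rings around the target before settling. Past a certain gain the same delay makes each correction larger than the error it was meant to fix, and the oscillation grows instead of dying out.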

The study of how any disturbance propagates, either getting attenuated, or exacerbated in the process, is called “feedback loop analysis.” In practice, we can test the stability margin of a feedback loop by deliberately injecting a small disturbance at an appropriate point inside it (the “cause”), and then seeing at what magnitude and phase it returns to the same point (the “effect”). If, for example, we find that the disturbance reinforces itself (at the right phase), cause–effect separation will be lost, and instability will result. But if the effect manages to kill or suppress the cause, we will achieve stability.

Note: The use of the word “phase” in the previous paragraph implies we are talking of sine waves once again (there is no such thing as “phase” for a nonsinusoidal waveform). However, this turns out to be a valid assumption because, as we know, arbitrary disturbances can be decomposed into a series of sine wave components of varying frequencies. So, the disturbance/signal we “inject” (either on the bench or on paper) can be a sine wave of arbitrary amplitude. By sweeping its frequency over a wide range, we can look for frequencies that have the potential to lead to instability. Because one fine day, we may receive a disturbance containing that particular frequency component, and if the margins are insufficient for that frequency, the system will break up into full-blown instability. But if we find that the system has enough margin over a wide range of (sine wave) frequencies, the system would, in effect, be stable when subjected to an arbitrarily shaped disturbance.

A word on the amplitude of the applied disturbances. In this chapter, we are studying only linear systems. That means, if the input to a two-port network doubles, so does the output. Their ratio is therefore unchanged. In fact, that is why the transfer function was never thought of as say, being a function of the amplitude of the incoming signal. But we do know that in reality, if the disturbance is too severe, parts of the control circuit may “rail” — that means, for example, an internal op-amp’s output may momentarily reach very close to its supply rails, thus affording no further correction for some time. We also do realize that there is no perfectly “linear system.” But any system can be approximated by a linear system if the stimulus (and response) is “small” enough. That is why, when we conduct feedback loop analysis of power converters, we talk in terms of “small-signal analysis” and “small-signal models.”

Note: For the same reason, even in bench testing, when injecting a sine wave to characterize the loop response, we must be careful not to apply too high an amplitude. The switching node voltage waveform must therefore be monitored during the test. Too large a jitter in the switching node waveform during the test can indicate possible “railing” (inside the error amplifier circuit). We must also ensure we are not operating close to the “stops” — for example, the minimum or maximum duty cycle limits of the controller and/or the set current limit. But the amplitude of the injected signal must not be too small either, otherwise switching noise is bound to overwhelm the readings (poor signal-to-noise ratio).

Note: For the same reason, most commercial power supply specifications will only ask for a certain transient response for say, from 80% load to max load, or even from 50% to max load, but not from zero to max load.

Transfer Function of the RC Filter, Gain, and the Bode Plot

We know that in general, vo/vi is a complex number called the transfer function. Its magnitude is defined as the “Gain.” Take the simplest case of pure resistors only. For example, suppose we have two 10-kΩ resistors in series and we apply 10 V across both of them. If we define the output as the voltage at the node between the two resistors, we will get 5 V at that point. The transfer function is a real number in this case: 5/10=0.5. So, we can say the gain is 0.5. That is the gain expressed as a pure ratio. We could, however, also express the gain in decibels, as 20×log(|vo/vi|). In our example, that becomes 20×log(0.5)≈−6 dB. In other words, gain can be expressed either as 0.5 (a ratio) or in terms of decibels (−6 dB in our case).

Note that by definition, a “decibel” or “dB” is dB=20×log (ratio) — when used to express voltage or current ratios. For power ratios, dB is 10×log (ratio).
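The two decibel definitions, and the way back from decibels to a ratio, can be captured in three one-line helpers. The function names are our own; the resistor-divider gain of 0.5 is the example used above.

```python
import math

def voltage_ratio_to_db(ratio):
    """Decibels for a voltage or current ratio: 20*log10(ratio)."""
    return 20.0 * math.log10(abs(ratio))

def power_ratio_to_db(ratio):
    """Decibels for a power ratio: 10*log10(ratio)."""
    return 10.0 * math.log10(abs(ratio))

def db_to_voltage_ratio(db):
    """Inverse of voltage_ratio_to_db."""
    return 10.0 ** (db / 20.0)

divider_gain = 5.0 / 10.0               # two equal resistors in series, output at the midpoint
divider_gain_db = voltage_ratio_to_db(divider_gain)

print(f"gain = {divider_gain} -> {divider_gain_db:.2f} dB")
print(f"10:1 voltage ratio -> {voltage_ratio_to_db(10.0):.1f} dB")
print(f"10:1 power ratio   -> {power_ratio_to_db(10.0):.1f} dB")
```

The factor of two between the definitions is not arbitrary: power goes as the square of voltage, so the same physical change produces the same number of decibels either way.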

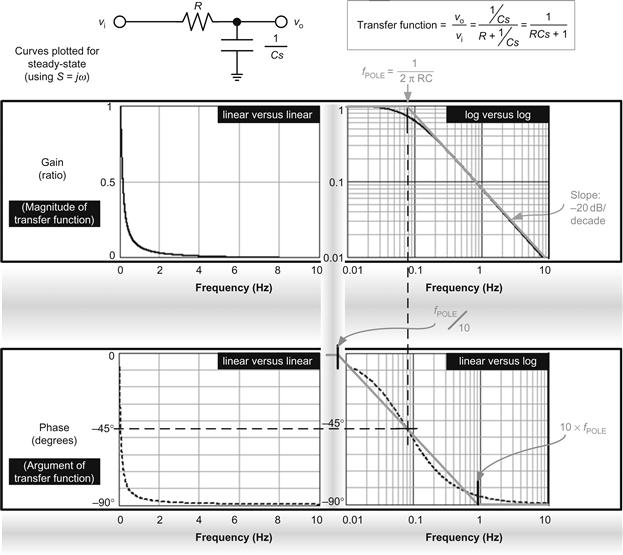

Let us now take our simple series RC-network and transform it into the frequency domain, as shown in Figure 12.4. We can discern that the procedure for deriving its transfer function is based on a simple ratio of impedances, now extended to the s-plane.

Figure 12.4: Analyzing the first-order low-pass RC filter in the frequency domain.
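For reference, the “simple ratio of impedances” can be written out explicitly. The following is the standard voltage-divider derivation for a series resistor with the output taken across the capacitor; the symbol G(s) for the resulting transfer function is our own shorthand and is not taken from the figure.

```latex
\begin{aligned}
G(s) = \frac{v_o(s)}{v_i(s)}
  &= \frac{Z_C}{R + Z_C}
   = \frac{1/(sC)}{R + 1/(sC)}
   = \frac{1}{1 + sRC} \\[4pt]
\text{With } s = j\omega:\qquad
|G(j\omega)| &= \frac{1}{\sqrt{1 + (\omega RC)^{2}}},
\qquad
\angle G(j\omega) = -\tan^{-1}(\omega RC)
\end{aligned}
```

At ω=1/(RC) the magnitude is 1/√2 (about 0.707) and the phase is −45°; far above that frequency the magnitude falls as 1/(ωRC).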

Thereafter, since we are looking at only steady-state excitations (not transient impulses), we can set s=jω, and plot out (a) the magnitude of the transfer function (i.e., its “gain”) and (b) the argument of the transfer function (i.e., its phase) — both in the frequency domain of course. This combined gain-phase plot is called a “Bode plot.”
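A few points of such a Bode plot can be generated directly from the transfer function of the RC low-pass filter, 1/(1+sRC), by setting s=jω. This is a minimal sketch: the values R=1 kΩ and C=1 µF are hypothetical, and the helper names are chosen only for this example.

```python
import cmath
import math

def rc_lowpass(f_hz, r_ohm, c_farad):
    """G(s) = 1 / (1 + s*R*C) evaluated on the imaginary axis, s = j*2*pi*f."""
    s = 1j * 2.0 * math.pi * f_hz
    return 1.0 / (1.0 + s * r_ohm * c_farad)

def bode_point(g):
    """Return (gain as a ratio, gain in dB, phase in degrees) for one frequency."""
    gain = abs(g)
    return gain, 20.0 * math.log10(gain), math.degrees(cmath.phase(g))

R, C = 1e3, 1e-6                                 # hypothetical: 1 kOhm, 1 uF
f_corner = 1.0 / (2.0 * math.pi * R * C)         # about 159 Hz

for f in (f_corner / 100, f_corner, f_corner * 100):
    gain, gain_db, phase_deg = bode_point(rc_lowpass(f, R, C))
    print(f"f = {f:10.2f} Hz: gain = {gain:.4f} ({gain_db:+7.2f} dB), phase = {phase_deg:+7.2f} deg")

corner = bode_point(rc_lowpass(f_corner, R, C))
```

Well below f=1/(2πRC) the filter passes the signal essentially unchanged; at that frequency the gain is down by about 3 dB with a 45° lag; well above it the gain keeps falling and the lag approaches 90°.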

A word on terminology: Note that initially, we will denote the ratio |vo/vi| as “Gain,” and we will distinguish it from 20×log(|vo/vi|) by calling the latter “GaindB.” But these terms are actually often used interchangeably in literature and later in this chapter too. It can get confusing, but with a little experience it should quickly become obvious what is being meant in any particular context. Usually, however, “Gain” is used to refer to its dB version, that is, 20×log (|vo/vi|).

Note that gain and phase are defined only in steady state as they implicitly refer to a sine wave (“phase” has no meaning otherwise!).

Here are a few observations based on Figure 12.4:

• We have converted the phase angle (which was originally in radians, θ=ωt) into degrees. That is because many engineers feel more comfortable visualizing angle in degrees instead of radians. To this end, we have used the following conversion: degrees=(180/π)×radians.

• Gain (on the vertical axis) is a simple ratio (not in decibels, unless stated otherwise).

• We have similarly converted from “angular frequency” (ω in radians/second) to the usual frequency (in Hz). Here we have used the equation: Hz=(radians/second)/(2π).

• By varying the type of scaling on the gain and phase plots, we can see that the gain becomes a straight line if we use log versus log scaling. Note that in Figure 12.1, we had to use log versus linear scaling to get that curve to look like a straight line.

• We will get a straight-line gain plot in either of the two following cases — (a) if the gain is expressed as a simple ratio (i.e., Vout/Vin), and plotted on a log scale (on the y-axis) or (b) if the gain is expressed in decibels (i.e., 20×log Vout/Vin), and we use a linear scale to plot it. Note that in both cases, on the x-axis, we can either use “f” (frequency) and plot it using a log scale, or take 20×log(f) upfront, and plot it on a linear scale.

• In plotting logs, we must remember that the log of 0 is impossible to plot (log 0→−∞), and so we must not let the origin of a log scale ever be 0. We can set it close to zero, say 0.0001, or 0.001, or 0.01, and so on, but certainly not 0.

• We thus confirm by looking at the curves in Figure 12.4 that the gain at high frequencies starts decreasing by a factor of 10 for every 10-fold increase in frequency. Note that by the definition of decibel, a 10:1 voltage ratio is 20 dB (check 20 log(10)=20). Therefore, we can say that the gain falls at the rate of −20 dB per decade at higher frequencies. A circuit with a slope of this magnitude is called a “first-order filter” (in this case a low-pass one).

• Further, since this slope is constant, the signal must also decrease by a factor of 2 for every doubling of frequency. Or by a factor of 4 for every quadrupling of frequency, and so on. But a 2:1 ratio is 6 dB, and an “octave” is a doubling (or halving) of frequency. Therefore, we can also say that the gain of a low-pass first-order filter falls at the rate of −6 dB per octave (at high frequencies).

• If the x and y scales are scaled and proportioned identically, the actual angle the gain plot will make with the x-axis is −45°. The slope, that is, tangent of this angle is then tan(−45°)=−1. Therefore, a slope of −20 dB/decade (or −6 dB/octave) is often simply called a “−1” slope.

• Similarly, when we have filters with two reactive components (i.e., an inductor and a capacitor), we will find the slope is −40 dB/decade (i.e., −12 dB/octave). This is usually called a “−2” slope (the actual angle being about −63° when the axes are proportioned and scaled identically).

• The bold gray straight lines in the right-hand side graphs of Figure 12.4 form the “asymptotic approximation.” We see that the gain asymptotes have a “break frequency” or “corner frequency” at f=1/(2πRC). This point can also be referred to as the “resonant frequency” of the RC filter, or a “pole” as discussed later.

• Note that the error/deviation between the actual curve and its asymptotic approximation is usually very small (only for first-order filters, as discussed later). For example, the worst-case error for the gain of the simple RC-network in Figure 12.4 is only −3 dB, and that occurs at the break frequency. Therefore, the asymptotic approximation is a valid “shortcut” that we will often use from now on to simplify the plots and their analysis.

• With regard to the asymptotes of the phase plot, we see that we get two break frequencies for it: one at one-tenth, and the other at 10 times the break frequency of the gain plot. Between these two points the asymptote falls at 45° per decade, giving a total phase shift of 90° that spans two decades (symmetrically around the break frequency of the gain plot).

• Note that at the frequency where the single-pole lies, the phase shift (measured from its low-frequency value) is always 45°, that is, half the overall shift, whether we are using the asymptotic approximation or the actual curve.

• Since both the gain and the phase fall as frequency increases, we say we have a “pole” present. In our case, the pole is at the break frequency of 1/(2πRC). It is also called a “single-pole” or a first-order pole, since it is associated with a −1 slope.

• Later, we will see that similar to a “pole,” we can also have a “zero,” which is identifiable by the fact that both the gain and the phase start to rise with frequency from that location.

• In Figure 12.4, we see that the output voltage is clearly always less than the input voltage. That is true for a (passive) RC-network (not involving op-amps yet). In other words, the gain is less than 1 (0 dB) at any frequency. Intuitively, that seems right because there seems to be no way to “amplify” a signal without using an active device such as an op-amp or transistor. However, as we will soon see, if we use passive filters involving both types of reactive components (L and C), we can in fact get the output voltage to exceed the input at certain frequencies. We then have “second-order” filters. And their response is what we more commonly refer to as “resonance.”
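The observations above can be checked numerically. The following is a minimal Python sketch, assuming the network of Figure 12.4 is the usual series-R, shunt-C low-pass filter with transfer function 1/(1+sRC). The component values and the helper names `rc_lowpass` and `to_db` are our own, chosen only for illustration.

```python
import cmath
import math

def rc_lowpass(f_hz, r_ohm, c_farad):
    """Return (gain as a plain ratio, phase in degrees) of an RC low-pass filter."""
    s = 1j * 2 * math.pi * f_hz            # steady state: s = j*omega
    h = 1 / (1 + s * r_ohm * c_farad)      # assumed form: H(s) = 1 / (1 + sRC)
    return abs(h), math.degrees(cmath.phase(h))

def to_db(ratio):
    """Convert a plain gain ratio to decibels."""
    return 20 * math.log10(ratio)

R, C = 1e3, 1e-6                           # illustrative values only
f_break = 1 / (2 * math.pi * R * C)        # break (corner) frequency in Hz

gain_b, phase_b = rc_lowpass(f_break, R, C)
print(f"break frequency: {f_break:.1f} Hz")
print(f"at the break: {to_db(gain_b):.2f} dB, {phase_b:.1f} degrees")

# Slope well above the break frequency, per decade and per octave.
g_100, _ = rc_lowpass(100 * f_break, R, C)
g_200, _ = rc_lowpass(200 * f_break, R, C)
g_1000, _ = rc_lowpass(1000 * f_break, R, C)
per_decade = to_db(g_1000) - to_db(g_100)
per_octave = to_db(g_200) - to_db(g_100)
print(f"slope: {per_decade:.2f} dB/decade, {per_octave:.2f} dB/octave")
```

With any R and C, the sketch should report roughly −3 dB and −45° at the break frequency, and slopes close to −20 dB/decade and −6 dB/octave well above it, in line with the bullets above.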

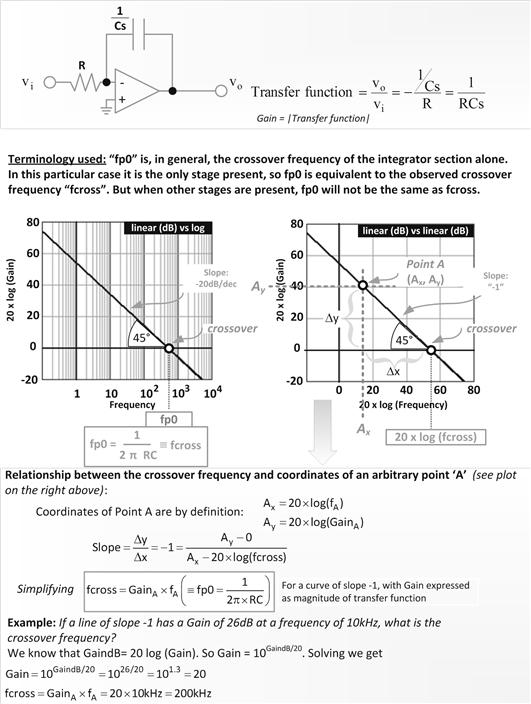

The Integrator Op-amp (“Pole-at-Zero” Filter)

Before we go on to passive networks involving two reactive components, let us look at an interesting active RC-based (first-order) filter. The one chosen for discussion here is the “integrator” because it happens to be the fundamental building block of any “compensation network.”

The inverting op-amp presented in Figure 12.5 has only a capacitor present in its feedback path. We know that under steady DC conditions, all capacitors essentially “go out of the picture.” In our case, we are therefore left with no negative feedback at all at DC — and therefore infinite DC gain (though in practice, real op-amps will limit this to a very high, but finite value). But more surprisingly perhaps, that does not stop us from knowing the precise gain at higher frequencies. If we calculate the transfer function of this circuit, we will see that something “special” once again happens at the point f=1/(2π×RC). However, unlike the passive RC filter, this point is not a break-point, nor a pole or zero location. It happens to be the point where the gain is unity (0 dB). We will denote this frequency as “fp0.”

Figure 12.5: The integrator (pole-at-zero) operational amplifier and some related math.

Note that so far, as indicated in Figure 12.5, the integrator is the only stage present. So, in this particular case, “fp0” is the same as the observed crossover frequency “fcross.” But in general, that will not be so. In general, in this chapter, “fp0” will refer to the crossover frequency the integrator stage would have produced were it present alone.
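A short numerical sketch may help fix the idea of fp0. It assumes an ideal op-amp, so that the inverting integrator has the transfer function −1/(sRC). The values of R and C and the function names are illustrative assumptions, not taken from Figure 12.5.

```python
import math

def integrator_gain(f_hz, r_ohm, c_farad):
    """Magnitude of the ideal inverting integrator, |-1/(sRC)| with s = j*2*pi*f."""
    return 1 / (2 * math.pi * f_hz * r_ohm * c_farad)

def gain_from_crossover(f_hz, f_cross_hz):
    """On a -1 slope line, gain times frequency is constant and equals f_cross."""
    return f_cross_hz / f_hz

R, C = 10e3, 10e-9                        # illustrative values only
f_p0 = 1 / (2 * math.pi * R * C)          # unity-gain frequency of the integrator alone

print(f"fp0 = {f_p0:.1f} Hz, gain there = {integrator_gain(f_p0, R, C):.3f}")
for f in (f_p0 / 100, f_p0 / 10, f_p0 * 10):
    g = integrator_gain(f, R, C)
    print(f"f = {f:10.1f} Hz: gain = {g:8.3f} ({20 * math.log10(g):+.1f} dB)")
```

The gain keeps rising as the frequency falls, with no break-point anywhere, which is the behavior expected of a pole at zero frequency.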

Note that the integrator has a single-pole at “zero frequency,” though 0 cannot be displayed on a log scale. We always strive to introduce this pole-at-zero because without it, the system would have rather poor DC (low-frequency) gain. The integrator is the simplest way to try to get as high a DC gain as possible. Having a high DC gain is the way to achieve good steady-state regulation in any power converter. This is indicated in Figure 12.3 too (labeled the “DC shift”). A high DC gain will reduce the DC shift.

On the right side of Figure 12.5, we have deliberately made the graph geometrically square in shape. To that end, we have assigned an equal number of grid divisions on the two axes, that is, the axes are scaled and proportioned identically. In addition, we have plotted 20×log(f) on the x-axis (instead of just log(f)). Having thus made the x- and y-axes identical in all respects, we realize why the slope is called “−1”: it really does fall at exactly 45° (now we see that visually too).

We take this opportunity to show how to do some simple math in the log-plane. This is shown in the lower part of Figure 12.5. We have derived one particular useful relationship between an arbitrary point “A” and the crossover frequency “fcross.” A numerical example is also included.

![]()

Note that, in general, the transfer function of any “pole-at-zero” function will always have the following form (X being a general real number)

![]()

The crossover frequency is then

![]()

Mathematics in the Log-Plane

As we proceed toward our ultimate objective of control loop analysis and compensation network design, we will be multiplying transfer functions of cascaded blocks to get the overall transfer function. That is because the output of one block forms the input for the next block, and so on. It turns out that the mathematics of gain and phase is actually much easier to perform in the log-plane rather than in a linear plane. The most obvious reason for that is log(AB)=log A+log B. So, we can add rather than multiply if we use logs. We have already had a taste of this in Figure 12.5. Let us summarize some simple rules that will help us later.

(a) If we take the product of two transfer functions A and B (cascaded stages), we know that the combined transfer function is the product of each:

$$C = A \times B$$

But we also know that log(C)=log(AB)=log(A)+log(B). In words, the gain of A in decibels plus the gain of B in decibels gives us the combined gain (C) in decibels. So, when we combine transfer functions, since decibels add up, that route is easier than taking the product of various transfer functions.

(b) The overall phase shift is the sum of the phase shifts produced by each of the cascaded stages. So, phase angles simply add up numerically (even in the log-plane).

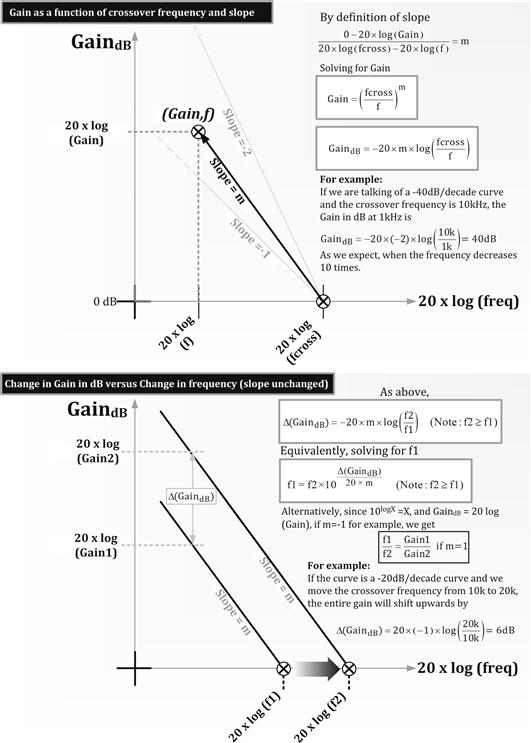

(c) In Figure 12.6, we are using the term GaindB (the Gain expressed in dB), that is, 20 log (Gain), where Gain is the magnitude of the transfer function.

(d) From the upper half of Figure 12.6, we see that if we know the crossover frequency (and the slope of the line), we can find the gain at any frequency.

(e) Suppose we now shift the plotted line vertically (keeping the slope constant) as shown in the lower half of Figure 12.6. Then, by the equation provided therein, we can calculate by what amount the crossover frequency shifts in the process. Or equivalently, if we shift the crossover frequency by a known amount, we can calculate what will be the impact on the DC gain — because we will know by how much the curve has shifted either up or down in decibels.
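Rules (a), (d), and (e) can be sketched in a few lines of Python. This is only an illustration under our own assumptions: the function names are hypothetical, the slope argument uses the chapter's convention (−1 meaning −20 dB/decade), and the line is taken to be a single straight segment crossing 0 dB at the crossover frequency.

```python
import math

def to_db(ratio):
    """Convert a plain gain ratio to decibels (GaindB = 20*log10(Gain))."""
    return 20 * math.log10(ratio)

def gain_db_on_line(f_hz, f_cross_hz, slope=-1):
    """GaindB at f_hz on a straight line that crosses 0 dB at f_cross_hz."""
    return 20 * slope * math.log10(f_hz / f_cross_hz)

def shifted_crossover(f_cross_hz, shift_db, slope=-1):
    """New crossover after moving the line up by shift_db (down if negative)."""
    return f_cross_hz * 10 ** (-shift_db / (20 * slope))

# Rule (a): the decibel gains of cascaded stages add.
stage_a, stage_b = 4.0, 5.0               # illustrative plain-ratio gains
combined_db = to_db(stage_a) + to_db(stage_b)
print(f"cascade: {combined_db:.2f} dB, direct: {to_db(stage_a * stage_b):.2f} dB")

# Rule (d): gain one decade below a 1 kHz crossover on a -1 slope.
print(f"gain at 100 Hz: {gain_db_on_line(100.0, 1000.0):.1f} dB")

# Rule (e): raising the line by 20 dB moves the crossover to the right.
print(f"-1 slope: {shifted_crossover(1000.0, 20.0):.0f} Hz")
print(f"-2 slope: {shifted_crossover(1000.0, 20.0, slope=-2):.0f} Hz")
```

The last two lines show why the slope matters: the same vertical shift moves the crossover by a full decade on a −1 slope, but only by half a decade on a −2 slope.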

Figure 12.6: Some more math in the log-plane.

Transfer Function of the Post-LC Filter

Moving toward power converters, we note that in a Buck, there is a post-LC filter present. Therefore, its filter stage can be treated as a simple “cascaded stage” immediately following the switch. The overall transfer function is very easy to compute as per the rules mentioned in the previous section (a product of cascaded transfer functions). However, when we come to the Boost and Buck-Boost, we don’t have a post-LC filter, because there is a switch/diode connected between the two reactive components. Nevertheless, it can be shown that even the Boost and Buck-Boost can be manipulated into a “canonical model” in which an effective post-LC filter appears at the output (like a Buck), thus making them as easy to treat as a Buck (i.e., cascaded stages). The only difference is that in this canonical model, the actual inductance L (of the Boost and Buck-Boost) gets replaced by an equivalent (or effective) inductance equal to L/(1−D)². The capacitor (the C of the LC) remains the same in the canonical model.
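As a rough illustration of the canonical model, the sketch below computes the effective inductance L/(1−D)² and the resulting LC break frequency, taken here as 1/(2π√(LC)). The component values and function names are hypothetical; for a Buck the inductance is simply left unchanged.

```python
import math

def effective_inductance(l_henry, duty):
    """Equivalent inductance of the canonical model for a Boost or Buck-Boost."""
    return l_henry / (1 - duty) ** 2

def lc_break_frequency(l_henry, c_farad):
    """Break frequency of an LC filter in Hz, ignoring all resistances."""
    return 1 / (2 * math.pi * math.sqrt(l_henry * c_farad))

L, C = 10e-6, 100e-6                      # illustrative values only
for duty in (0.0, 0.25, 0.5, 0.75):
    l_eff = effective_inductance(L, duty)
    f_break = lc_break_frequency(l_eff, C)
    print(f"D = {duty:.2f}: L_eff = {l_eff * 1e6:6.1f} uH, break = {f_break:7.0f} Hz")
```

Note how the break frequency of a Boost or Buck-Boost moves with duty cycle (and therefore with input voltage), whereas that of a Buck does not.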

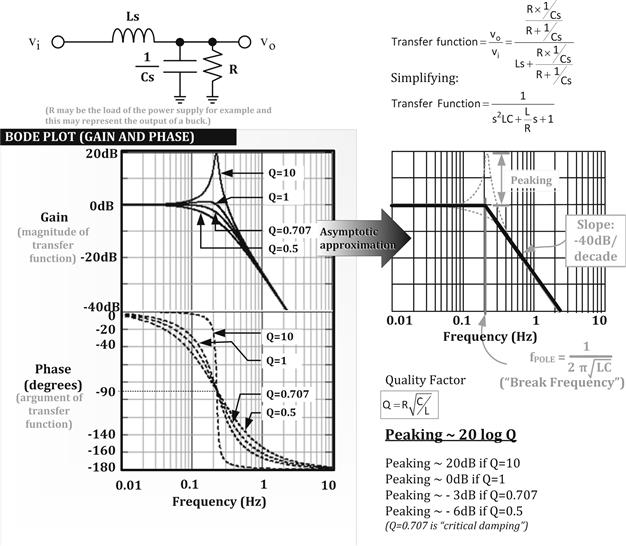

Since the simple LC post-filter now becomes representative of the output section of any typical switching topology, we need to understand it better as shown in Figure 12.7.

• For most practical purposes, we can assume that the break frequency (indicated in Figure 12.7) does not depend on the load or on any associated parasitic resistive elements of the components. In other words, the resonant frequency of the filter-plus-load combination (the break frequency, or “pole” in this case) can be taken to be simply 1/(2π√(LC)), that is, no resistance term is included.

• The LC-filter gain decreases at the rate of “−2” at high frequencies. The phase also decreases providing a total phase shift of 180°. So, we say we have a “double-pole” (or second-order pole) at the break frequency 1/(2π√(LC)).

• Q is the “quality factor” (as defined in the figure). In effect, it quantifies the amount of “peaking” in the response curve at the break frequency point. Very simply put, if, for example, Q=20, then the output voltage at the resonant frequency is 20 times the input voltage. On a log scale, this is written as 20×log Q, as shown in the figure. If Q is very high, the filter is considered “under-damped.” If Q is very small, the filter is “over-damped.” And if Q=0.707, we have “critical damping.” In critical damping, the gain at the resonant frequency is 3 dB below its DC value, that is, the output is 3 dB below the input (similar to an RC filter). Note that −3 dB is a factor of 1/√2=0.707, that is, roughly 30% lower. Similarly, +3 dB is √2=1.414 (i.e., roughly 40% higher).

• As indicated, the effect of resistance on the break frequency is usually minor, and therefore ignored. But the effect of resistance on Q (i.e., on the peaking) is significant (though eventually, that may be ignored too). However, we should keep in mind that the higher the associated series parasitic resistances of L and C, the lower is the Q. On the other hand, if we reduce the load, that is, increase the resistance across the C, Q increases. Remember that a high parallel resistance is in effect a small series resistance, and vice versa. In general, the presence of any significantly large series resistance ends up reducing Q, and any significantly small parallel resistance does just the same.

• As in Figure 12.4, we can use the “asymptotic approximation” for the LC gain plot too. However, the problem with trying to do the same with the phase of the LC is that there can now be a very large error — more so if Q becomes very large. Because if Q is very large, we can get a very abrupt phase shift (full 180°) in the region very close to the resonant frequency — not spread out smoothly over one-tenth to 10 times the break frequency as in Figure 12.4. This sudden phase shift can, in fact, become a real problem in a power supply, since it can induce “conditional stability” (discussed later). Therefore, a certain amount of damping helps from the standpoint of “phase-shift softening,” thereby avoiding any possible conditional stability tendencies.

• Unlike an RC filter, the output voltage can in this case be greater than the input voltage (around the break frequency). But for that to happen, Q must be greater than 1.

• Instead of using Q, engineers often prefer to talk in terms of the “damping factor,” defined as

$$\zeta = \frac{1}{2Q}$$

So a high Q corresponds to a low ζ.

From the equations for Q and resonant frequency, we can conclude that if L is increased, Q tends to decrease, and if C is increased, Q increases.

Note: One of the possible pitfalls of putting too much output capacitance in a power supply is that we may be creating significant peaking (high Q) in its output filter’s response. And we know that when that happens, the phase shift is also more abrupt, and that can induce conditional instability. So generally, if we increase C but simultaneously increase L, we can keep the Q (and the peaking) unchanged. But the break frequency changes significantly and that may not be acceptable.
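The behavior described in this section can be explored with a small Python sketch. It rests on several assumptions of ours that are not spelled out in the text: an ideal (lossless) L and C, a purely resistive load R across the capacitor, the resulting transfer function 1/(1 + sL/R + s²LC), the quality factor Q = R√(C/L), and the damping factor ζ = 1/(2Q). All component values and function names are illustrative.

```python
import cmath
import math

def lc_filter(f_hz, l_henry, c_farad, r_load):
    """Gain (plain ratio) and phase (degrees) of an ideal LC low-pass loaded by r_load."""
    s = 1j * 2 * math.pi * f_hz
    h = 1 / (1 + s * l_henry / r_load + s * s * l_henry * c_farad)
    return abs(h), math.degrees(cmath.phase(h))

def quality_factor(l_henry, c_farad, r_load):
    """Assumed Q for a load resistance placed across the capacitor."""
    return r_load * math.sqrt(c_farad / l_henry)

def damping_factor(q):
    """Assumed definition: zeta = 1 / (2Q)."""
    return 1 / (2 * q)

L, C = 10e-6, 100e-6                      # illustrative values only
f0 = 1 / (2 * math.pi * math.sqrt(L * C)) # break (resonant) frequency in Hz

for r_load in (0.2, 1.0, 5.0):            # heavier to lighter load
    q = quality_factor(L, C, r_load)
    gain, phase = lc_filter(f0, L, C, r_load)
    print(f"R = {r_load:3.1f} ohm: Q = {q:5.2f}, zeta = {damping_factor(q):4.2f}, "
          f"gain at f0 = {gain:5.2f} ({20 * math.log10(gain):+5.1f} dB), "
          f"phase = {phase:6.1f} deg")
```

Under these assumptions the gain at the break frequency equals Q, so the output exceeds the input only when Q is greater than 1, and lightening the load (raising R) increases the peaking.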

Figure 12.7: The LC filter analyzed in the frequency domain.

Summary of Transfer Functions of Passive Filters

The first-order (RC) low-pass filter transfer function (Figure 12.4) can be written in several different ways:

$$G(s) = \frac{1/(RC)}{s + 1/(RC)}$$

$$G(s) = \frac{1}{1 + s/\omega_0}$$

$$G(s) = \frac{K}{s + \omega_0}$$

where ω0=1/(RC). Note that the “K” in the last equation above is a constant multiplier often used by engineers who are more actively involved in the design of filters. And in this case, K=ω0.

For the second-order filter (Figure 12.7), various equivalent forms seen in literature are

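$$G(s) = \frac{1/(LC)}{s^2 + s/(RC) + 1/(LC)}$$

$$G(s) = \frac{1}{(s/\omega_0)^2 + (1/Q)(s/\omega_0) + 1}$$

$$G(s) = \frac{K}{s^2 + (\omega_0/Q)\,s + \omega_0^2}$$

$$G(s) = \frac{K}{s^2 + 2\zeta\omega_0 s + \omega_0^2}$$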

where ω0=1/√(LC). Note that here, K=ω0². Also, Q is the quality factor, and ζ is the damping factor defined earlier.

Finally, note also, that the following two relations are very useful when trying to manipulate the transfer function of the LC-filter into different forms

![]()
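A quick way to gain confidence when moving between such forms is to evaluate them numerically at an arbitrary frequency and confirm that they agree. The sketch below does this for the commonly quoted first- and second-order low-pass forms. The exact forms coded here, the relations Q = R√(C/L) and ζ = 1/(2Q), and all values are our assumptions for illustration.

```python
import math

def first_order_forms(s, r, c):
    """Three equivalent ways of writing the RC low-pass transfer function."""
    w0 = 1 / (r * c)
    k = w0
    return [(1 / (r * c)) / (s + 1 / (r * c)),
            1 / (1 + s / w0),
            k / (s + w0)]

def second_order_forms(s, l, c, r):
    """Four equivalent ways of writing the loaded LC low-pass transfer function."""
    w0 = 1 / math.sqrt(l * c)
    q = r * math.sqrt(c / l)      # assumed Q, load R across the capacitor
    zeta = 1 / (2 * q)            # assumed damping-factor definition
    k = w0 ** 2
    return [(1 / (l * c)) / (s * s + s / (r * c) + 1 / (l * c)),
            1 / ((s / w0) ** 2 + (s / w0) / q + 1),
            k / (s * s + (w0 / q) * s + w0 ** 2),
            k / (s * s + 2 * zeta * w0 * s + w0 ** 2)]

def max_spread(values):
    """Largest difference between any form and the first one."""
    return max(abs(v - values[0]) for v in values)

s = 1j * 2 * math.pi * 3e3                # an arbitrary test frequency of 3 kHz
print("first-order spread: ", max_spread(first_order_forms(s, 1e3, 1e-6)))
print("second-order spread:", max_spread(second_order_forms(s, 10e-6, 100e-6, 1.0)))
```

Both spreads should be at the level of floating-point rounding, which is a useful sanity check before committing to any one form in a spreadsheet.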

Poles and Zeros

Let us try to “connect the dots” now. We had mentioned in the case of both the first- and the second-order filters (Figures 12.4 and 12.7) that something called a “pole” exists. We should recognize that we got poles in both cases only because both the first- and the second-order transfer functions had terms in “s” in the denominators of their respective transfer functions. So, if s takes on specific values, it can force the denominator to become zero, and the transfer function (in the complex plane) then becomes “infinite.” That is actually the point where we get a “pole” by definition. Poles occur wherever the denominator of the transfer function becomes zero. In general, the values of s at which the denominator becomes zero (i.e., the location of the poles) are sometimes called “resonant frequencies.” For example, a hypothetical transfer function “1/s” will give us a pole at zero frequency (the “pole-at-zero” we talked about in the integrator shown in Figure 12.5).

Note that the gain, which is the magnitude of the transfer function (calculated by putting s=jω), won’t necessarily be really “infinite” at the pole location as stated rather intuitively above. For example, in the case of the RC filter, we know that the gain is in fact always less than, or equal to unity, despite a pole being present at the break frequency.

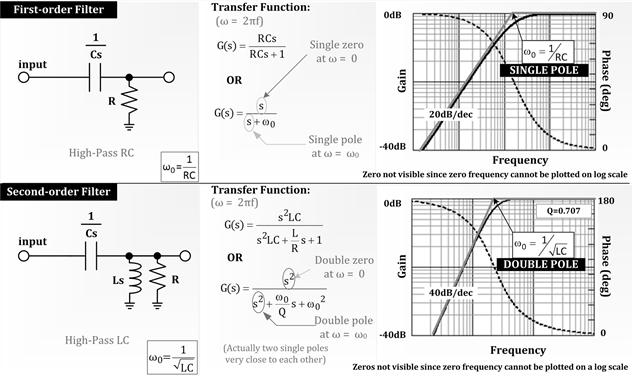

Note that if we interchange the positions of the two primary components of each of the passive low-pass filters we discussed earlier, we will get the corresponding “high-pass” RC and LC-filters, respectively. If we calculate their transfer functions in the usual manner, we will see that besides giving us poles, we also now get single- and double-zeros respectively as indicated in Figure 12.8. Zeros occur wherever the numerator of the transfer function becomes zero. Note that in Figure 12.8, the zeros are not visible, only the poles are. But the presence of the zeros is indicated by the fact that we started from the left of each graph with curves rising upward (rather than being flat with frequency), and for the same reason, the phase started off with 90° for the first-order filter and from 180° for the second-order filter, rather than from 0°.

Figure 12.8: High-pass RC and LC (first-order and second-order) filters.

We had mentioned that gain-phase plots are called Bode plots. In the case of Figure 12.8, we have drawn these on the same graph just for convenience. Here the solid line is the gain, and to read its value, we need to look at the y-axis on the left side of the graph. Similarly, the dashed line is the phase, and for it, we need to look at the y-axis on the right side. Note that for practice, we have reverted to plotting the gain as a simple ratio (not in decibels), but we are now plotting that on a log scale. The reader should hopefully, by now, have learnt to correlate the major grid divisions of this type of plot with the corresponding dB. So a 10-fold increase is equivalent to +20 dB, a 100-fold increase is +40 dB, and so on.

We can now generalize our approach. A network transfer function can be described as a ratio of two polynomials:

$$G(s) = \frac{a_0 + a_1 s + a_2 s^2 + \cdots}{b_0 + b_1 s + b_2 s^2 + \cdots}$$

This can be factored out as

$$G(s) = K\,\frac{(s - z_1)(s - z_2)(s - z_3)\cdots}{(s - p_1)(s - p_2)(s - p_3)\cdots}$$

So, the zeros (i.e., leading to the numerator being zero) occur at the complex frequencies s=z1, z2, z3, …. The poles (denominator zero) occur at s=p1, p2, p3, …

In power supplies, we usually deal with transfer functions of the form:

$$G(s) = K\,\frac{(s + z_1)(s + z_2)(s + z_3)\cdots}{(s + p_1)(s + p_2)(s + p_3)\cdots}$$

So the “well-behaved” poles and zeros that we have been talking about are actually in the left-half of the complex-frequency plane (“LHP” poles and zeros). Their locations are at s=−z1, −z2, −z3, −p1, −p2, −p3, …. We can also, in theory, have right-half plane poles and zeros which have very different behavior to normal poles and zeros, and can cause almost intractable instability. This aspect is discussed later.
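To make the idea of pole locations concrete, the following sketch finds the poles of a second-order low-pass denominator, assumed here to be s² + (ω0/Q)s + ω0², and also shows the difference between evaluating a first-order function near its pole and on the jω axis. The function names and numbers are hypothetical.

```python
import cmath
import math

def second_order_poles(w0, q):
    """Roots of s^2 + (w0/q)*s + w0^2 = 0 (the assumed second-order denominator)."""
    b = w0 / q
    root = cmath.sqrt(b * b - 4 * w0 * w0)
    return (-b + root) / 2, (-b - root) / 2

def first_order_gain(s, w0):
    """Magnitude of w0 / (s + w0) at an arbitrary complex frequency s."""
    return abs(w0 / (s + w0))

w0 = 1000.0                               # rad/s, illustrative
for q in (0.25, 0.5, 2.0):
    p1, p2 = second_order_poles(w0, q)
    print(f"Q = {q:4.2f}: poles at {p1:.1f} and {p2:.1f}")

# The first-order pole sits at s = -w0, in the left-half plane. Close to it
# the function blows up, but on the j*omega axis the gain stays at or below 1.
print("near the pole:    ", first_order_gain(-0.999 * w0, w0))
print("on the axis at w0:", first_order_gain(1j * w0, w0))
```

In every case the real parts are negative, so these are left-half-plane poles. For Q above 0.5 they form a complex-conjugate pair, and below 0.5 they split into two real poles.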

“Interactions” of Poles and Zeros

We will learn that in trying to find the overall transfer function of a converter, we typically add up several of its constituent transfer functions together. As mentioned, the math is easier to do on a log-plane if we are dealing with cascaded stages. The equivalent post-LC filter, which we studied in Figure 12.7, is one of those cascaded stages. However, for now we will still keep things general here, and simply show how to add up several transfer functions together in the log-plane. We just have several poles and zeros, and we must know how to add these up too.

We can break up the full analysis in two parts:

(a) For poles and zeros lying along the same gain plot (i.e., belonging to the same transfer function/stage) — the effect is cumulative in going from left to right. So, suppose we are starting from zero frequency and move right toward a higher frequency, and we first encounter a double-pole. We know that the gain will start falling with a slope of −2 beyond the corresponding break frequency. As we go further to the right, suppose we now encounter a single-zero. This will impart a change in slope of +1. So the net slope of the gain plot will now become −2+1=−1, after the zero location. Note that despite a zero being present, the gain is still falling, though at a lesser rate. In effect, the single-zero canceled half the double-pole, so we are left with the response of a single-pole (to the right of the zero).

The phase angle also cumulates in a similar manner, except that in practice a phase angle plot is harder to analyze. That is because phase shift can take place slowly over two decades around the resonant frequency. We also know that for a double-pole (or double-zero), the change in phase may in fact be very abrupt at the resonant frequency. However, eventually, a good distance away in terms of frequency, the net effect is still predictable. So, for example, the phase angle plot of a double-pole, followed shortly by a single-zero, will start with a phase angle of 0° (at DC) which will then decrease gradually toward −180° on account of the double-pole. But about a decade below the location of the single-zero, the phase angle will then gradually start increasing (though still remaining negative). It will eventually settle down to −180°+90°=−90° at high frequencies, consistent with a single net pole.

(b) For poles and zeros lying along different gain plots (belonging to say several cascaded stages that are being summed up) — we know that the overall gain in decibels is the sum of the gain of each (also in decibels). The effect of this math on the pole-zero interactions is therefore simple to describe. If, for example, at a specific frequency, we have a double-pole in one plot and a single-zero on the other plot, then the overall (combined) gain plot will have a single-pole at this break frequency. So, we see that poles and zeros tend to “destroy” (cancel) each other out. Zeros are considered to be “anti-poles” in that sense. But poles and zeros also add up with their own type. For example, if we have a double-pole on one plot, and a single-pole on the other plot (at the same frequency), the net gain (on the composite transfer function plot) will change slope by “−3” after this frequency. Phase angles also add up similarly. A few examples later will make this much clearer.
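The bookkeeping in (a) and (b) amounts to adding signed integers, as the sketch below suggests. It assumes left-half-plane poles and zeros only, starts from a flat gain plot, and uses a representation of our own choosing: each break is a (frequency, order) pair, with order −2 for a double-pole, +1 for a single-zero, and so on.

```python
def net_slopes(breaks):
    """Accumulate asymptotic gain slopes from left to right.

    breaks: iterable of (frequency_hz, order) pairs; order is negative for
    poles and positive for zeros. Returns [(frequency_hz, slope after it), ...].
    """
    merged = {}
    for freq, order in breaks:
        merged[freq] = merged.get(freq, 0) + order   # same-frequency terms combine
    slope, result = 0, []
    for freq in sorted(merged):
        slope += merged[freq]
        result.append((freq, slope))
    return result

def final_phase_deg(breaks):
    """High-frequency phase, assuming 90 degrees per unit of order (LHP only)."""
    return 90 * sum(order for _, order in breaks)

# Case (a): one stage with a double-pole at 1 kHz and a single-zero at 10 kHz.
one_stage = [(1e3, -2), (1e4, +1)]
print(net_slopes(one_stage), final_phase_deg(one_stage))

# Case (b): two cascaded stages whose breaks coincide at 1 kHz.
stage_a = [(1e3, -2)]
stage_b = [(1e3, +1)]
print(net_slopes(stage_a + stage_b))
print(net_slopes([(1e3, -2), (1e3, -1)]))
```

Concatenating the lists of two stages is the log-plane equivalent of multiplying their transfer functions, which is why the same function serves both cases.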

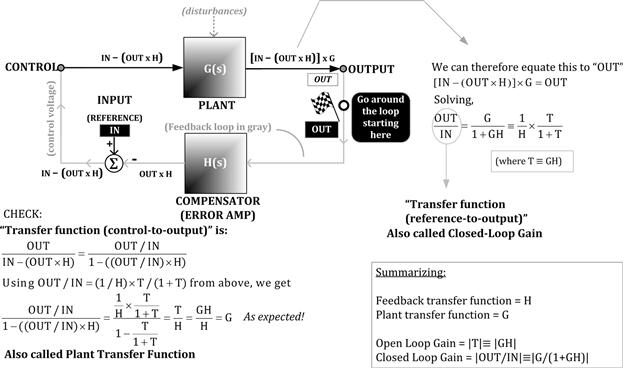

Closed and Open-Loop Gain

Figure 12.9 represents a general feedback controlled system. The “plant” (also sometimes called the “modulator”) has a “forward transfer function” G(s). A part of the output gets fed back through the feedback block, to the control input, so as to produce regulation at the output. Along the way, the feedback signal is compared with a reference level, which tells it what the desired level is for it to regulate to.

Figure 12.9: General feedback loop analysis.

H(s) is the “feedback transfer function,” and we can see this goes to a summing block (or node) — represented by the circle with an enclosed summation sign.

Note: The summing block is sometimes shown in literature as just a simple circle (nothing enclosed), but sometimes rather confusingly as a circle with a multiplication sign (or x) inside it. Nevertheless, it still is a summation block.

One of the inputs to this summation block is the reference level (the “input” from the viewpoint of the control system), and the other is the output of the feedback block (i.e., the part of the output being fed back). The output of the summation node is the “error” signal.

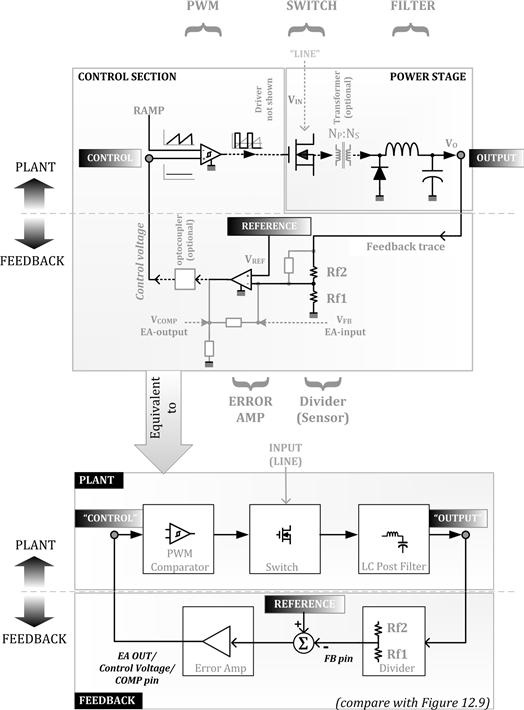

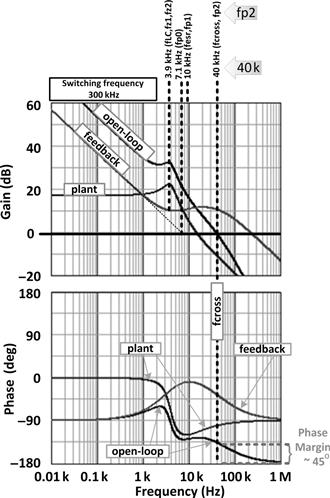

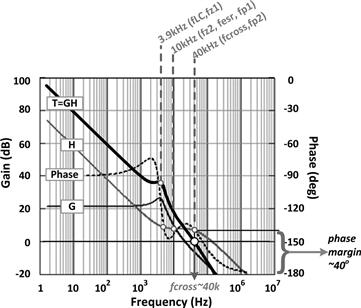

Comparing Figure 12.9 with Figure 12.10, we see that in a power supply, the plant itself can be split into several cascaded blocks. These blocks are — the PWM (not to be confused with the term “modulator” often used in general control loop theory referring to the entire plant), the power stage consisting of the driver-plus-switch, and the LC-filter. The feedback block, on the other hand, consists of the voltage divider (if present) and the compensating error amplifier. Note that we may prefer to visualize the error amplifier block as two cascaded stages — one that just computes the error (summation node) and another which accounts for the gain (and its associated compensation network). But in actual practice, since we apply the feedback signal to the inverting pin of the error amplifier, both functions are combined. Also note that the basic principle behind the PWM stage (which determines the duty cycle of the pulses driving the switch) is explained in the next section and in Figure 12.11.

Figure 12.10: A power converter: its plant and feedback (compensator) blocks.

Figure 12.11: PWM action, transfer function, and line feedforward explained.

In general, the plant can receive various “disturbances” that can affect its output. In a power supply, these are essentially the line and load variations. The basic purpose of feedback is to reduce the effect of these disturbances on the output voltage (see Figure 12.3, for example).

Note that the word “input” in control loop theory is not the physical input power terminal of the converter. Its location is actually marked in Figure 12.9. It happens to be the reference level we are setting the output to. The word “output” in control loop theory, however, is the same as the physical output terminal of the converter.

In Figure 12.9, we have derived the open-loop gain |T|=|GH|, which is simply the magnitude of the product of the forward and feedback transfer functions, that is, obtained by going around the loop fully once. On the other hand, the magnitude of the reference-to-output (i.e., input-to-output) transfer function is called the closed-loop gain. It is |G/(1+GH)|.

Note that the word “closed” has really nothing to do with the feedback loop being literally “open” or “closed” as sometimes thought. Similarly, “GH” is called the “open-loop transfer function,” irrespective of whether the loop is literally “open,” say for the purpose of measurement, or “closed” as in normal operation. In fact, in a typical power supply, we can’t even hope to break the feedback path for the purpose of any measurement, because the gain is typically so high that even a minute change in the feedback voltage will cause the output to swing wildly. So, in fact, we always need to “close” the loop and thereby DC-bias the converter into full regulation, before we can even measure the so-called “open-loop” gain.
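The two gains defined above are easy to compare numerically. The sketch below simply evaluates GH and G/(1+GH) for a few values of plant gain; the numbers are arbitrary and G and H are treated as plain (possibly complex) numbers at a single frequency, which is our simplification.

```python
def open_loop(g, h):
    """Open-loop transfer function T = G*H at one frequency."""
    return g * h

def closed_loop(g, h):
    """Reference-to-output transfer function G / (1 + G*H) at one frequency."""
    return g / (1 + g * h)

H = 0.1                                   # illustrative feedback factor
for G in (10.0, 100.0, 1000.0, 1e6):
    t = abs(open_loop(G, H))
    cl = abs(closed_loop(G, H))
    print(f"G = {G:9.0f}: |T| = {t:8.0f}, closed-loop gain = {cl:.4f}")
```

As |T| grows, the closed-loop gain approaches 1/H (10 in this illustration) and becomes almost independent of G, which is the basic mechanism by which a high loop gain produces good regulation.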

The Voltage Divider

Usually, the output VO of the power supply first goes to a voltage divider. Here it is, in effect, just stepped-down, for subsequent comparison with the reference voltage “VREF.” The comparison takes place at the input of the error amplifier, which is usually just a conventional op-amp (voltage amplifier).

We can visualize an ideal op-amp as a device that varies its output so as to virtually equalize the voltages at its input pins. Therefore, in steady state, the voltage at the node connecting Rf2 and Rf1 (see “divider” block in Figure 12.10) can be assumed to be (almost) equal to VREF. Assuming that no current flows out of (or into) the divider at this node, using Ohm’s law:

$$\frac{V_O - V_{REF}}{R_{f2}} = \frac{V_{REF}}{R_{f1}}$$

Simplifying,

$$\frac{R_{f2}}{R_{f1}} = \frac{V_O}{V_{REF}} - 1$$

So this tells us what ratio of the voltage divider resistors we must have to produce the desired output rail.
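As a worked example, the sketch below picks the upper resistor for a given output rail. It assumes Rf1 is the lower resistor and Rf2 the upper one, that the divider node sits at VREF, and that no current leaves the node; the 5 V rail, 1.25 V reference, and 10 kΩ value are hypothetical.

```python
def upper_resistor(v_out, v_ref, r_f1):
    """Rf2 needed so that the divider node sits at v_ref when the output is v_out."""
    return r_f1 * (v_out / v_ref - 1)

def divider_node_voltage(v_out, r_f1, r_f2):
    """Voltage at the junction of Rf2 (upper) and Rf1 (lower)."""
    return v_out * r_f1 / (r_f1 + r_f2)

V_OUT, V_REF, R_F1 = 5.0, 1.25, 10e3      # illustrative values only
r_f2 = upper_resistor(V_OUT, V_REF, R_F1)
print(f"Rf2 = {r_f2 / 1e3:.1f} kohm")
print(f"node voltage check: {divider_node_voltage(V_OUT, R_F1, r_f2):.3f} V")
```

In practice the computed value would be rounded to a standard resistor value, which shifts the output rail slightly.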

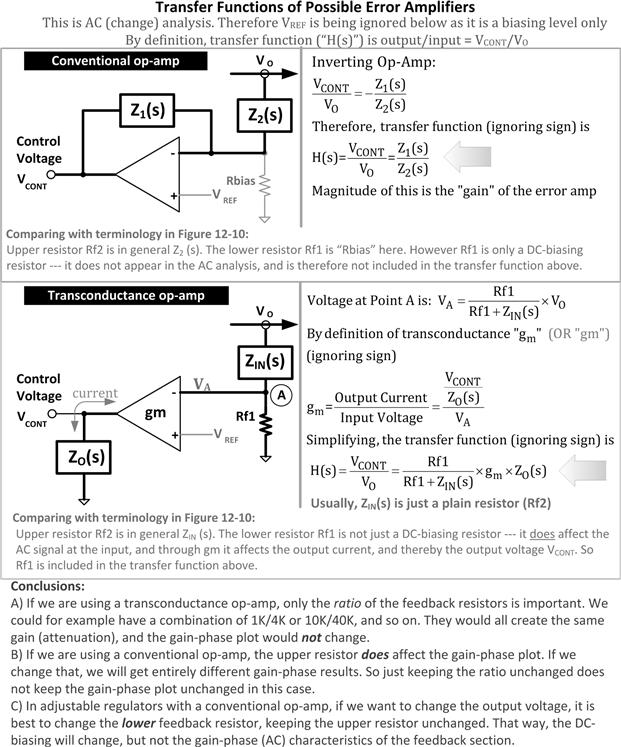

Note, however, that in applying control loop theory to power supplies, we are actually looking only at changes (or perturbations), not the DC values (though this was not made obvious in Figure 12.9). It can also be shown that when the error amplifier is a conventional op-amp, the lower resistor of the divider Rf1 behaves only as a DC biasing resistor and does not play any (direct) part in the AC loop analysis.

Note: The lower resistor of the divider Rf1 does not enter the AC analysis, provided we are considering ideal op-amps. In practice, it does affect the bandwidth of a real op-amp, and therefore may on occasion need to be considered.

Note: If we are using a spreadsheet, we will find that changing Rf1 in a standard op-amp-based error amplifier divider does, in fact, affect the overall loop. But we should be clear that that is only because by changing Rf1, we have changed the duty cycle of the converter (via its output voltage), which thus affects the plant transfer function. Therefore, the effect of Rf1 is indirect. Rf1 does not enter into any of the equations that tell us the locations of the poles and zeros of the system.

Note: We will see that when using a transconductance op-amp as the error amplifier, Rf1 does enter the AC analysis.

Pulse-Width Modulator Transfer Function

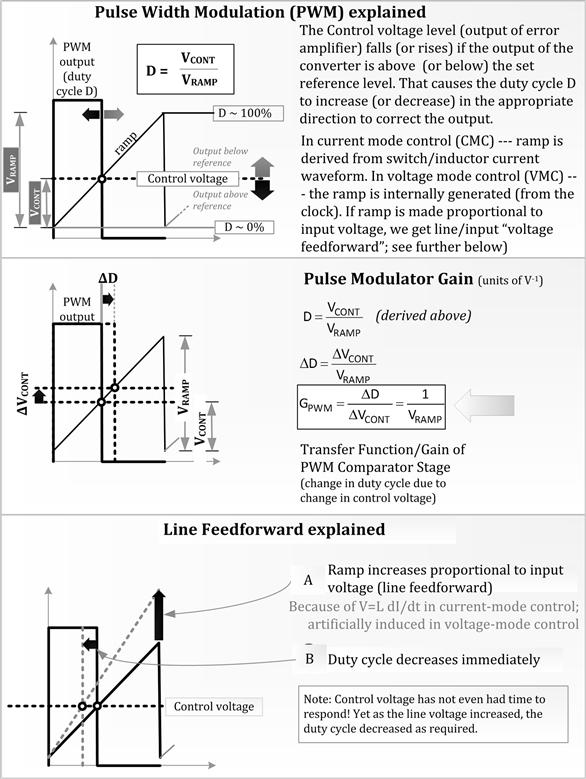

The output of the error amplifier (sometimes called “COMP,” sometimes “EA-out,” sometimes “control voltage”) is applied to one of the inputs of the PWM comparator. This is the terminal marked “Control” in Figures 12.9 and 12.10. On the other input of this PWM comparator, we apply a sawtooth voltage ramp — either internally generated from the clock when using “voltage-mode control,” or derived from the current ramp when using “current-mode control” (explained later). Thereafter, by standard comparator action, we get pulses of desired width with which to drive the switch.

Since the feedback signal coming from the output rail of the power supply goes to the inverting input of the error amplifier, if the output is below the set regulation level, the output of the error amplifier goes high. This causes the PWM to increase the pulse width (duty cycle) and thus try to make the output voltage rise. Similarly, if the output of the power supply goes above its set value, the error amplifier output goes low, causing the duty cycle to decrease (see upper third of Figure 12.11).

As mentioned previously, the output of the PWM stage is duty cycle, and its input is the “control voltage” or the “EA-out.” So, as we said, the gain of this stage is not a dimensionless quantity, but has units of 1/V. From the middle of Figure 12.11, we can see that this gain is equal to 1/VRAMP, where VRAMP is the peak-to-peak amplitude of the ramp sawtooth.
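The PWM gain can be illustrated with a toy model of the comparator. The sketch assumes a sawtooth that rises linearly from 0 V to VRAMP each cycle, with the switch on while the ramp is below the control voltage; real controllers usually add a ramp offset and duty cycle limits that are ignored here. Names and values are hypothetical.

```python
def duty_cycle(v_control, v_ramp):
    """Duty cycle for a sawtooth rising from 0 V to v_ramp, clamped to 0..1."""
    return min(max(v_control / v_ramp, 0.0), 1.0)

def pwm_gain(v_ramp):
    """Small-signal gain of the PWM stage in units of 1/V."""
    return 1.0 / v_ramp

V_RAMP = 2.0                              # illustrative peak-to-peak ramp amplitude
d1 = duty_cycle(1.0, V_RAMP)
d2 = duty_cycle(1.1, V_RAMP)
print(f"D at 1.0 V: {d1:.3f}, D at 1.1 V: {d2:.3f}")
print(f"measured gain: {(d2 - d1) / 0.1:.3f} per volt, 1/VRAMP: {pwm_gain(V_RAMP):.3f} per volt")
```

A smaller ramp amplitude therefore means a higher PWM gain, and hence a higher overall loop gain for the same compensator.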

Voltage (Line) Feedforward

We had also mentioned previously that when there is a disturbance, the control does not usually know beforehand how much duty cycle correction to apply. However, in the lowermost part of Figure 12.11, we have described an increasingly popular technique being used to make that a reality, at least when faced with line disturbances. This is called input-voltage/line feedforward, or simply “feedforward.”

This technique requires the input voltage be sensed and the slope of the comparator sawtooth ramp increased if the input goes up. In the simplest implementation, a doubling of the input causes the slope of the ramp to double. Then, from Figure 12.11, we see that if the slope doubles, the duty cycle is immediately halved. In a Buck, the governing equation is D=VO/VIN. So, if a doubling of input occurs, we know that naturally, the duty cycle will eventually halve anyway. So, rather than wait for the control voltage to decrease by half to lower the duty cycle (keeping the ramp unchanged), we could also change the ramp itself — in this case, double the slope of the ramp and thereby achieve the very same result (i.e., halving of duty cycle) almost instantaneously.

Summarizing: the duty cycle correction afforded by this “automatic” ramp correction is exactly what is required for a Buck, since its duty cycle D=VO/VIN. More importantly, this correction is virtually instantaneous — we didn’t have to wait for the error amplifier to detect the error on the output (through the inherent delays of its RC-based compensation network scheme), and respond by altering the control voltage. So, in effect, by input/line feedforward, we have bypassed all major delays, and therefore line correction is almost immediate — and that amounts to almost “perfect” rejection of the line disturbance.
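The argument can be checked with a few lines of arithmetic. The sketch assumes an ideal Buck (VO = D×VIN), a fixed switching period so that the ramp amplitude scales with its slope, and a ramp amplitude made proportional to the input through a hypothetical constant `k_ff`. All numbers are illustrative.

```python
def duty_fixed_ramp(v_control, v_ramp):
    """Duty cycle with a ramp of constant amplitude (no feedforward)."""
    return v_control / v_ramp

def duty_with_feedforward(v_control, v_in, k_ff):
    """Duty cycle when the ramp amplitude is k_ff * v_in (proportional feedforward)."""
    return v_control / (k_ff * v_in)

def buck_output(duty, v_in):
    """Ideal Buck DC transfer function."""
    return duty * v_in

V_CONTROL, K_FF = 1.0, 0.2                # illustrative values only
for v_in in (12.0, 24.0):
    d_ff = duty_with_feedforward(V_CONTROL, v_in, K_FF)
    d_fixed = duty_fixed_ramp(V_CONTROL, K_FF * 12.0)   # ramp frozen at its 12 V value
    print(f"VIN = {v_in:4.1f} V: feedforward D = {d_ff:.4f}, VO = {buck_output(d_ff, v_in):.2f} V; "
          f"fixed ramp D = {d_fixed:.4f}, VO = {buck_output(d_fixed, v_in):.2f} V")
```

With feedforward the output stays put for an unchanged control voltage, whereas with a fixed ramp the output would double until the error amplifier pulled the control voltage down.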

In Figure 12.11, it is implied that the PWM ramp is created artificially from the fixed internal clock. That is called voltage-mode control. In current-mode control, the PWM ramp is basically an appropriately amplified version of the switch/inductor current. We will discuss that in more detail later. Here we just want to point out that the line feedforward technique described in Figure 12.11 is applicable only to voltage-mode control. However, the original inspiration behind the idea does come from current-mode control, in which the PWM ramp, generated from the inductor current, automatically increases if the line voltage increases. That partly explains why current-mode control seems to respond so much “faster” to line disturbances than traditional voltage-mode control, which is one of its oft-repeated advantages.

However, one question remains: how good is the “built-in” automatic line feedforward in current-mode control? In a Buck topology, the slope of the inductor current up-ramp is equal to (VIN−VO)/L. So, if we double the input voltage, we do not end up doubling the slope of the inductor current. Therefore, neither do we end up automatically halving the duty cycle, as we can do easily in line feedforward applied to voltage-mode control.
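A quick numerical check makes the point. This is only a sketch using the up-ramp slope (VIN−VO)/L stated above; the output voltage and inductance are assumed values, and `buck_up_slope` is a hypothetical helper name.

```python
def buck_up_slope(v_in, v_out, inductance):
    """Slope of the Buck inductor current up-ramp, in A/s: (VIN - VO) / L."""
    return (v_in - v_out) / inductance


# Assumed values, for illustration only
V_OUT = 5.0          # regulated output, volts
INDUCTANCE = 10e-6   # henries

slope_low = buck_up_slope(12.0, V_OUT, INDUCTANCE)
slope_high = buck_up_slope(24.0, V_OUT, INDUCTANCE)
ratio = slope_high / slope_low

# Exact proportional feedforward would need a ratio of exactly 2
print(f"slope at 12 V: {slope_low:.3e} A/s")
print(f"slope at 24 V: {slope_high:.3e} A/s")
print(f"ratio: {ratio:.3f} (proportional feedforward would give 2.000)")
```

Because VO is subtracted before the division by L, the ramp slope is not proportional to VIN, so the inherent correction is not the exact factor the Buck needs.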

In other words, voltage-mode control with proportional line feedforward control, though inspired by current-mode control, provides better line rejection than current-mode control (for a Buck). Voltage-mode control with line feedforward is considered by many to be a far better choice than current-mode control, all things considered.

Power Stage Transfer Function

As per Figure 12.10, the “power stage” formally consists of the switch plus the (equivalent) LC-filter. Note that this is just the plant minus the PWM. Alternatively stated, if we add the PWM comparator section to the power stage, we get the “plant” as per control loop theory, and that was symbolized by the transfer function “G” in Figure 12.9. The rest of the circuit in Figure 12.10 is the feedback block, and this was symbolized by the transfer function H in Figure 12.9.

We had indicated previously that whereas in a Buck, the L and C are really connected to each other at the output (as drawn in Figure 12.10), in the remaining two topologies they are not. However, the small-signal (canonical) model technique can be used to transform these latter topologies into equivalent AC models — in which, for all practical purposes, a regular LC-filter does appear after the switch, just as for a Buck. With this technique, we can then justifiably separate the power stage into a cascade of two separate stages (as for a Buck):

• A stage that effectively converts the duty cycle input (coming from the output of the PWM stage) into an output voltage.

• An equivalent post-LC filter stage that takes in this output and converts it into the output rail of the converter.

With this understanding, we can now build the final transfer functions presented in the next section.

Plant Transfer Functions of All the Topologies

Let us discuss the three topologies separately here. Note that we are assuming voltage-mode control and continuous conduction mode (CCM). Further, the “ESR (effective series resistance) zero” is not included here (a simple modification introduced later).

Buck Converter

(a) Control-to-output (plant) transfer function

The transfer function of the plant is also called the “control-to-output transfer function” (see Figure 12.10). It is the output voltage of the converter, divided by the “control voltage” (i.e., the output of the error amplifier, or “EA-out”). We are, of course, talking only from an AC point of view, and are therefore interested only in the changes from the DC-bias levels.

The control-to-output transfer function is a product of the transfer functions of the PWM modulator, the switch and the LC-filter (since these are cascaded stages). Alternatively, the control-to-output transfer function is a product of the transfer function of the PWM comparator and the transfer function of the “power stage.”

We already know from Figure 12.11 that the transfer function of the PWM stage is equal to the reciprocal of the amplitude of the ramp. And as discussed in the previous section, the power stage itself is a cascade of two stages: a stage that converts the duty cycle into a DC output voltage VO, followed by an equivalent post-LC stage (whose transfer function is the same as the passive low-pass second-order LC filter we discussed previously in Figure 12.7).

We are now interested in finding the transfer function of the first of these, the duty cycle-to-output stage.

The overall question is: what happens to the output when we perturb the duty cycle slightly (keeping the input to the converter, VIN, constant)? Here are the steps for a Buck:

$$V_O = D \times V_{IN}$$

$$\frac{dV_O}{dD} = V_{IN}$$

So, in very simple terms, the required transfer function of the intermediate “duty cycle-to-output stage” is equal to VIN for a Buck.

Finally, the control-to-output (plant) transfer function is the product of three (cascaded) transfer functions, that is, it becomes

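$$G(s) = \frac{1}{V_{RAMP}} \times V_{IN} \times \frac{\frac{1}{LC}}{s^2 + s\frac{1}{RC} + \frac{1}{LC}}$$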

Alternatively, this can be written as

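$$G(s) = \frac{V_{IN}}{V_{RAMP}} \times \frac{1}{\left(\frac{s}{\omega_0}\right)^2 + \frac{1}{Q}\left(\frac{s}{\omega_0}\right) + 1}$$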

where ω0=1/√(LC) and ω0Q=R/L.
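The Buck plant can be evaluated numerically as a sanity check. The sketch below assumes the standard second-order form (VIN/VRAMP)/((s/ω0)²+(s/ω0)/Q+1), with ω0=1/√(LC) and ω0Q=R/L as defined above; the component values and the function name `buck_plant` are hypothetical.

```python
import cmath
import math


def buck_plant(f_hz, v_in, v_ramp, l_henry, c_farad, r_load):
    """Control-to-output response of a voltage-mode CCM Buck (ESR zero ignored)."""
    s = 1j * 2 * math.pi * f_hz
    w0 = 1 / math.sqrt(l_henry * c_farad)   # LC resonance, rad/s
    q = r_load / (w0 * l_henry)             # from w0*Q = R/L
    return (v_in / v_ramp) / ((s / w0) ** 2 + (s / w0) / q + 1)


# Assumed values, for illustration only
V_IN, V_RAMP = 12.0, 2.0
L, C, R = 10e-6, 100e-6, 5.0

f0 = 1 / (2 * math.pi * math.sqrt(L * C))   # resonant frequency, Hz
for f in (f0 / 100, f0, 10 * f0):
    g = buck_plant(f, V_IN, V_RAMP, L, C, R)
    print(f"f={f:10.1f} Hz  gain={20 * math.log10(abs(g)):7.2f} dB  "
          f"phase={math.degrees(cmath.phase(g)):8.2f} deg")
```

Well below resonance the gain settles at VIN/VRAMP; at resonance it peaks by a factor of Q; well above it, the gain falls at the second-order rate and the phase approaches −180°.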

(b) Line-to-output transfer function

Of great importance in any converter design is not what happens to the output when we perturb the reference (which is what the closed-loop transfer function really is), but what happens at the output when there is a line disturbance. This is often referred to as “audio susceptibility” (probably because early converters switching at around 20 kHz would emit audible noise under this condition).

The equation connecting the input and output voltages is simply the DC input-to-output transfer function, that is,

$$V_O = D \times V_{IN}$$

So, D is also the factor by which the input line (VIN) disturbance gets scaled, and thereafter applied at the input of the equivalent LC post-filter for further attenuation as per Figure 12.7. We already know the transfer function of the LC low-pass filter. Therefore, the line-to-output transfer function is the product of the two cascaded transfer functions, that is,

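$$\text{Line-to-output transfer function} = D \times \frac{\frac{1}{LC}}{s^2 + s\frac{1}{RC} + \frac{1}{LC}}$$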

where R is the load resistor (at the output of the converter).

Alternatively, this can be written as

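$$\text{Line-to-output transfer function} = D \times \frac{1}{\left(\frac{s}{\omega_0}\right)^2 + \frac{1}{Q}\left(\frac{s}{\omega_0}\right) + 1}$$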

where ω0=1/√(LC), and ω0Q=R/L.

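Boost Converter

(a) Control-to-output (plant) transfer function

Proceeding as for the Buck, the steps for this topology are

$$V_O = \frac{V_{IN}}{1-D}$$

$$\frac{dV_O}{dD} = \frac{V_{IN}}{(1-D)^2}$$

So the control-to-output transfer function is a product of three transfer functions:

$$G(s) = \frac{1}{V_{RAMP}} \times \frac{V_{IN}}{(1-D)^2} \times \frac{\frac{1}{L_{eq}C}\left(1 - s\frac{L_{eq}}{R}\right)}{s^2 + s\frac{1}{RC} + \frac{1}{L_{eq}C}}$$

where $L_{eq}=L/(1-D)^2$. Note that this is the inductor in the “equivalent post-LC filter” of the canonical model. Also note that C remains unchanged.

Alternatively, the above transfer function can be written as

$$G(s) = \frac{V_{IN}}{V_{RAMP}(1-D)^2} \times \frac{1 - s\frac{L_{eq}}{R}}{\left(\frac{s}{\omega_0}\right)^2 + \frac{1}{Q}\left(\frac{s}{\omega_0}\right) + 1}$$

where $\omega_0=1/\sqrt{L_{eq}C}$ and $\omega_0 Q=R/L_{eq}$.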

Note that we have included a surprise term in the numerator above. By detailed modeling, it can be shown that both the Boost and the Buck-Boost have such a term. This term represents a zero, but a different type to the “well-behaved” zero discussed so far (note the sign in front of the s-term is negative, so it occurs in the positive, i.e., the right-half portion of the s-plane). If we consider its contribution to the gain-phase plot, we will find that as we raise the frequency, the gain will increase (as for a normal zero), but simultaneously, the phase angle will decrease (opposite to a “normal” zero, more like a “well-behaved” pole).
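The difference between the two kinds of zero is easy to see numerically. This sketch compares a left-half-plane factor (1+s/ωz) with a right-half-plane factor (1−s/ωz) at the same corner frequency; the 1 kHz corner and the function name `zero_response` are assumptions for illustration.

```python
import cmath
import math


def zero_response(f_hz, f_zero_hz, right_half_plane):
    """Gain (dB) and phase (deg) of a single zero at f_zero_hz.

    LHP zero: 1 + j*f/fz.  RHP zero: 1 - j*f/fz.
    """
    ratio = 1j * f_hz / f_zero_hz
    h = 1 - ratio if right_half_plane else 1 + ratio
    return 20 * math.log10(abs(h)), math.degrees(cmath.phase(h))


F_ZERO = 1000.0  # assumed corner frequency, Hz

for f in (100.0, 1000.0, 10000.0):
    lhp_gain, lhp_phase = zero_response(f, F_ZERO, right_half_plane=False)
    rhp_gain, rhp_phase = zero_response(f, F_ZERO, right_half_plane=True)
    print(f"f={f:8.0f} Hz  LHP: {lhp_gain:6.2f} dB {lhp_phase:7.2f} deg   "
          f"RHP: {rhp_gain:6.2f} dB {rhp_phase:7.2f} deg")
```

The two gain columns are identical at every frequency, while the phase columns are mirror images: the RHP zero adds gain like a zero but subtracts phase like a pole.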

Why is that a problem? Because, later we will see that if the overall open-loop phase angle drops sufficiently low, the converter can become unstable. That is why this zero is considered undesirable. Unfortunately, it is virtually impossible to compensate for (or “kill”) by normal techniques. The usual method is to literally “push it out” — to higher frequencies where it can’t affect the overall loop significantly. Equivalently, we need to reduce the bandwidth of the open-loop gain plot to a frequency low enough that it just doesn’t “see” this zero. In other words, the crossover frequency must be set much lower than the location of the RHP zero.

The name given to this zero is the “RHP zero,” as indicated earlier, to distinguish it from the “well-behaved” (conventional) left-half-plane zero. For the Boost topology, its location can be found by setting the numerator of the transfer function above (see its first form) to zero, that is, $s \times (L_{eq}/R)=1$, where $L_{eq}=L/(1-D)^2$ is the inductor of the equivalent post-LC filter. So, the frequency location of the Boost RHP zero is

$$f_{RHP} = \frac{R(1-D)^2}{2\pi L}$$

Note that the very existence of the RHP zero in the Boost and Buck-Boost can be traced back to the fact that these are the only topologies where an actual LC post-filter doesn’t exist on the output. Though, by using the canonical modeling technique, we have managed to create an effective LC post-filter, the fact that in reality there is a switch/diode connected between the actual L and C of the topology is what is ultimately responsible for creating the RHP zero.

Note: The RHP zero is often explained intuitively as follows — if we suddenly increase the load, the output dips slightly. This causes the converter to increase its duty cycle in an effort to restore the output. Unfortunately, for both the Boost and the Buck-Boost, energy is delivered to the load only during the switch off-time. So, an increase in the duty cycle decreases the off-time, and there is now, unfortunately, a smaller interval available for the stored inductor energy to get transferred to the output. Therefore, the output voltage, instead of increasing as we were hoping, dips even further for a few cycles. This is the RHP zero in action. Eventually, the current in the inductor does manage to ramp up over several successive switching cycles to the new level consistent with the increased energy demand, and so this strange situation gets corrected — provided full instability has not already occurred!

The RHP zero can occur at any duty cycle. Note that its location is at a lower frequency as D approaches 1 (i.e., at lower input voltages). It also moves to a lower frequency if L is increased. That is one reason why bigger inductances are not preferred in Boost and Buck-Boost topologies.
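These trends can be tabulated with a few lines of code. The sketch assumes the Boost RHP zero location R(1−D)²/(2πL), which follows from s×(Leq/R)=1 with Leq=L/(1−D)²; the load and inductance values and the name `boost_rhp_zero_hz` are hypothetical.

```python
import math


def boost_rhp_zero_hz(r_load, l_henry, duty):
    """Boost RHP zero frequency in Hz: R * (1 - D)^2 / (2 * pi * L)."""
    return r_load * (1 - duty) ** 2 / (2 * math.pi * l_henry)


# Assumed values, for illustration only
R_LOAD = 10.0  # ohms

for l_henry in (10e-6, 22e-6):
    for duty in (0.3, 0.5, 0.7):
        f_rhp = boost_rhp_zero_hz(R_LOAD, l_henry, duty)
        print(f"L={l_henry * 1e6:5.1f} uH  D={duty:.1f}  "
              f"f_RHP={f_rhp / 1e3:8.2f} kHz")
```

Since the crossover frequency must sit well below this zero, the lowest value in the table (highest D, largest L) is the one that constrains the loop bandwidth.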

(b) Line-to-output transfer function

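We know that

$$V_O = \frac{V_{IN}}{1-D}$$

So the line-to-output transfer function is

$$\text{Line-to-output transfer function} = \frac{1}{1-D} \times \frac{\frac{1}{L_{eq}C}}{s^2 + s\frac{1}{RC} + \frac{1}{L_{eq}C}}$$

Alternatively, this can be written as

$$\text{Line-to-output transfer function} = \frac{1}{1-D} \times \frac{1}{\left(\frac{s}{\omega_0}\right)^2 + \frac{1}{Q}\left(\frac{s}{\omega_0}\right) + 1}$$

where $L_{eq}=L/(1-D)^2$, $\omega_0=1/\sqrt{L_{eq}C}$ and $\omega_0 Q=R/L_{eq}$.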

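Buck-Boost Converter

(a) Control-to-output (plant) transfer function

Here are the steps for this topology:

$$V_O = V_{IN} \times \frac{D}{1-D}$$

$$\frac{dV_O}{dD} = \frac{V_{IN}}{(1-D)^2}$$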

(Yes, it is an interesting coincidence — the slope of 1/(1−D) calculated for the Boost is the same as the slope of D/(1−D) calculated for the Buck-Boost!)
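The coincidence is easy to confirm numerically: D/(1−D) equals 1/(1−D) minus 1, so the two curves differ only by a constant and must have the same slope, 1/(1−D)². The sketch below checks this with a central finite difference; the function names are hypothetical.

```python
def boost_dc_gain(duty):
    """Boost DC transfer function, VO/VIN = 1 / (1 - D)."""
    return 1 / (1 - duty)


def buck_boost_dc_gain(duty):
    """Buck-Boost DC transfer function (magnitude), VO/VIN = D / (1 - D)."""
    return duty / (1 - duty)


def slope(func, duty, step=1e-6):
    """Central finite-difference estimate of d(func)/dD."""
    return (func(duty + step) - func(duty - step)) / (2 * step)


for duty in (0.2, 0.5, 0.7):
    analytic = 1 / (1 - duty) ** 2
    print(f"D={duty:.1f}  Boost slope={slope(boost_dc_gain, duty):.4f}  "
          f"Buck-Boost slope={slope(buck_boost_dc_gain, duty):.4f}  "
          f"1/(1-D)^2={analytic:.4f}")
```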

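So, the control-to-output transfer function is

$$G(s) = \frac{1}{V_{RAMP}} \times \frac{V_{IN}}{(1-D)^2} \times \frac{\frac{1}{L_{eq}C}\left(1 - s\frac{L_{eq}D}{R}\right)}{s^2 + s\frac{1}{RC} + \frac{1}{L_{eq}C}}$$

where $L_{eq}=L/(1-D)^2$ is the inductor in the equivalent post-LC filter.

Alternatively, this can be written as

$$G(s) = \frac{V_{IN}}{V_{RAMP}(1-D)^2} \times \frac{1 - s\frac{L_{eq}D}{R}}{\left(\frac{s}{\omega_0}\right)^2 + \frac{1}{Q}\left(\frac{s}{\omega_0}\right) + 1}$$

where $\omega_0=1/\sqrt{L_{eq}C}$ and $\omega_0 Q=R/L_{eq}$.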

Note that, as for the Boost, we have included the RHP zero term in the numerator. Its location is similarly calculated to be

$$f_{RHP} = \frac{R(1-D)^2}{2\pi L D}$$

This also comes in at a lower frequency if D approaches 1 (lower input). Compare with what we got for the Boost:

$$f_{RHP} = \frac{R(1-D)^2}{2\pi L}$$
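To compare the two locations side by side, the sketch below uses the commonly quoted expressions R(1−D)²/(2πL) for the Boost and R(1−D)²/(2πLD) for the Buck-Boost. Treat both formulas, the component values, and the function names as assumptions for illustration.

```python
import math


def boost_rhp_zero_hz(r_load, l_henry, duty):
    """Boost RHP zero in Hz: R * (1 - D)^2 / (2 * pi * L)."""
    return r_load * (1 - duty) ** 2 / (2 * math.pi * l_henry)


def buck_boost_rhp_zero_hz(r_load, l_henry, duty):
    """Buck-Boost RHP zero in Hz: R * (1 - D)^2 / (2 * pi * L * D)."""
    return r_load * (1 - duty) ** 2 / (2 * math.pi * l_henry * duty)


# Assumed values, for illustration only
R_LOAD, INDUCTANCE = 10.0, 10e-6

for duty in (0.3, 0.5, 0.7):
    f_boost = boost_rhp_zero_hz(R_LOAD, INDUCTANCE, duty)
    f_bb = buck_boost_rhp_zero_hz(R_LOAD, INDUCTANCE, duty)
    print(f"D={duty:.1f}  Boost: {f_boost / 1e3:7.2f} kHz  "
          f"Buck-Boost: {f_bb / 1e3:7.2f} kHz  ratio={f_bb / f_boost:.3f}")
```

For the same R, L, and D, the two results differ only by the factor 1/D, so the gap narrows as D approaches 1.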

(b) Line-to-output transfer function

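We know that

$$V_O = V_{IN} \times \frac{D}{1-D}$$

So the line-to-output transfer function is

$$\text{Line-to-output transfer function} = \frac{D}{1-D} \times \frac{\frac{1}{L_{eq}C}}{s^2 + s\frac{1}{RC} + \frac{1}{L_{eq}C}}$$

This is alternatively written as

$$\text{Line-to-output transfer function} = \frac{D}{1-D} \times \frac{1}{\left(\frac{s}{\omega_0}\right)^2 + \frac{1}{Q}\left(\frac{s}{\omega_0}\right) + 1}$$

where $L_{eq}=L/(1-D)^2$, $\omega_0=1/\sqrt{L_{eq}C}$ and $\omega_0 Q=R/L_{eq}$.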

Note that the plant and line transfer functions of all the topologies calculated above do not depend on the load current IO. That is why gain-phase plots (Bode plots) do not change much if we vary the load current (provided we stay in CCM as assumed above).

Note also that so far we have ignored a key element of the transfer functions — the ESR of the output capacitor and its contribution to the “ESR–zero.”

Whereas the DCR (DC resistance) of the inductor usually just ends up decreasing the overall Q (making the response less “peaky” at the second-order (LC) resonance), the ESR actually contributes a zero to the open-loop transfer function. And because it affects the gain and the phase significantly, it usually can’t be ignored, certainly not if it lies below the crossover frequency (i.e., at a lower frequency). We will account for it later by just canceling it out with a pole.
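As a rough illustration of where the ESR zero lands, the sketch below uses the standard expression 1/(2π×ESR×C) for the zero formed by a capacitor and its series resistance. That formula is not derived in this section, and the capacitor values, crossover frequency, and function name are all assumptions.

```python
import math


def esr_zero_hz(esr_ohm, c_farad):
    """Frequency of the zero formed by a capacitor and its ESR, in Hz."""
    return 1 / (2 * math.pi * esr_ohm * c_farad)


# Assumed values, for illustration only
ESR = 0.05           # ohms
C_OUT = 100e-6       # farads
F_CROSSOVER = 10e3   # intended crossover frequency, Hz

f_esr = esr_zero_hz(ESR, C_OUT)
print(f"ESR zero at {f_esr / 1e3:.2f} kHz")
if f_esr < F_CROSSOVER:
    print("ESR zero lies below crossover: it must be accounted for.")
else:
    print("ESR zero lies above crossover: it matters less, but check phase.")
```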

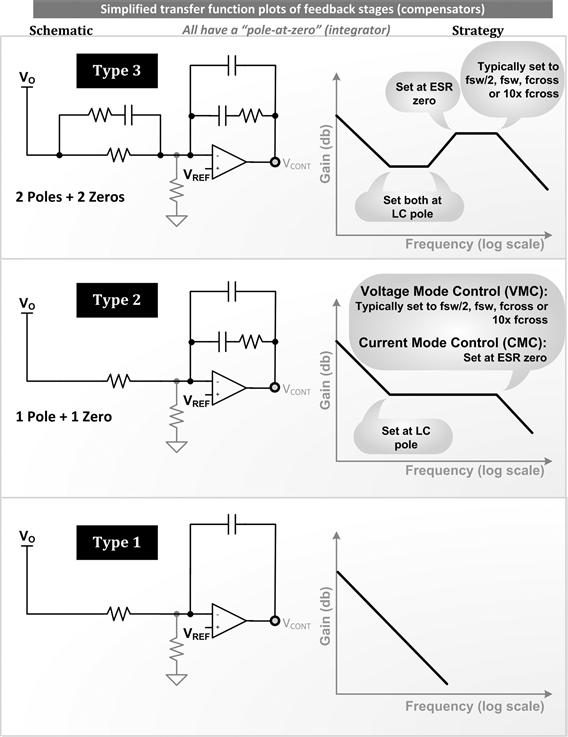

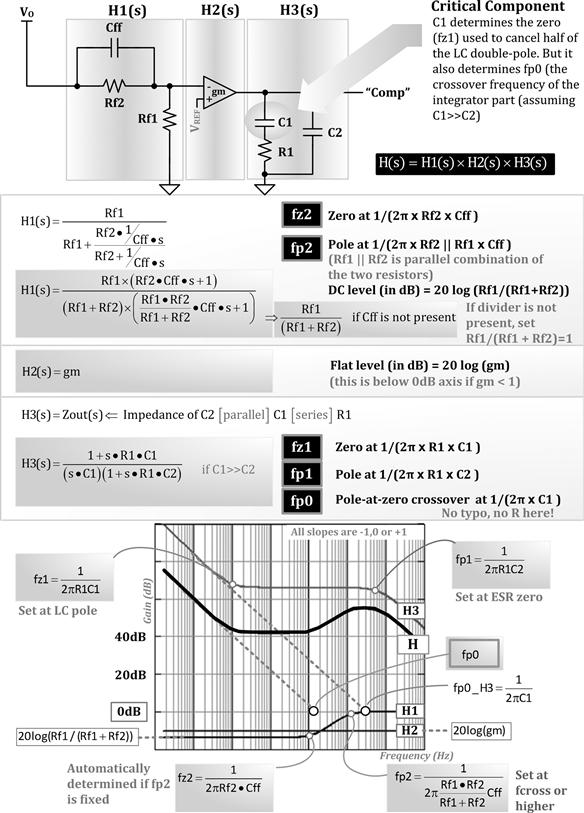

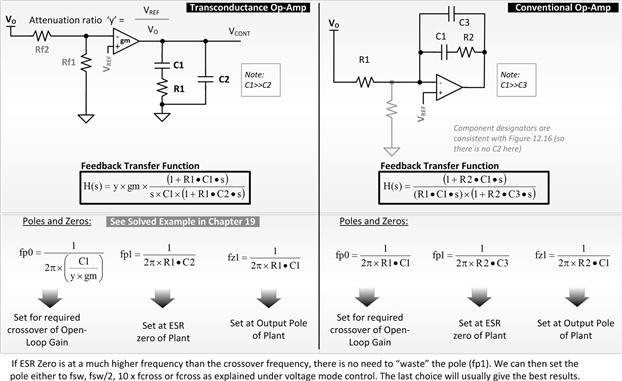

Feedback-Stage Transfer Functions

We can now lump together the entire feedback section: the voltage divider, the error amplifier, and the compensation network. However, depending on the type of error amplifier used, these must be evaluated rather differently. In Figure 12.12, we have shown two possible error amplifiers often used in power converters.

Figure 12.12: Possible feedback stages and some important conclusions in their application.

The analysis is as follows:

• The error amplifier can be a simple voltage-to-voltage amplification device, that is, the traditional “op-amp” (operational amplifier). This type of op-amp requires local feedback (between its output and inputs) to make it stable. Under steady DC conditions, both the input terminals are virtually at the same voltage level. This determines the output voltage setting. But, as discussed previously, though both resistors of the voltage divider affect the DC level of the converter’s output, from the AC point of view, only the upper resistor enters the picture. So the lower resistor is considered just a DC biasing resistor, and therefore we usually ignore it in control loop (AC) analysis.

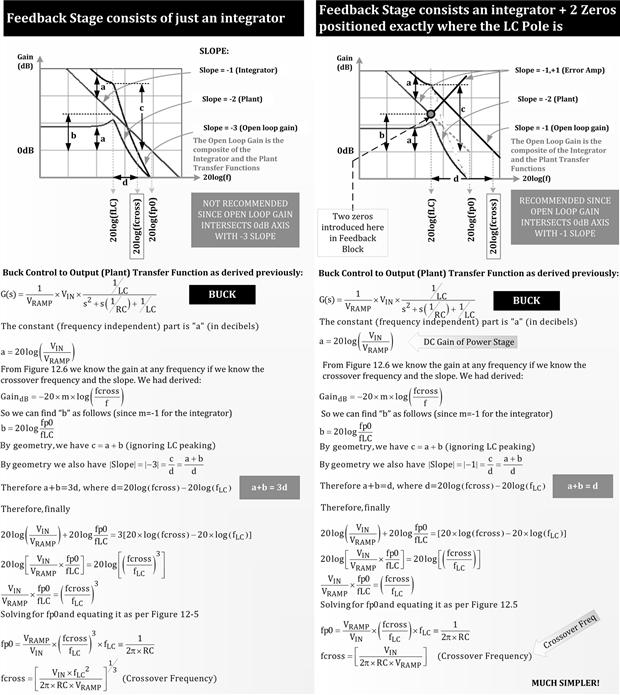

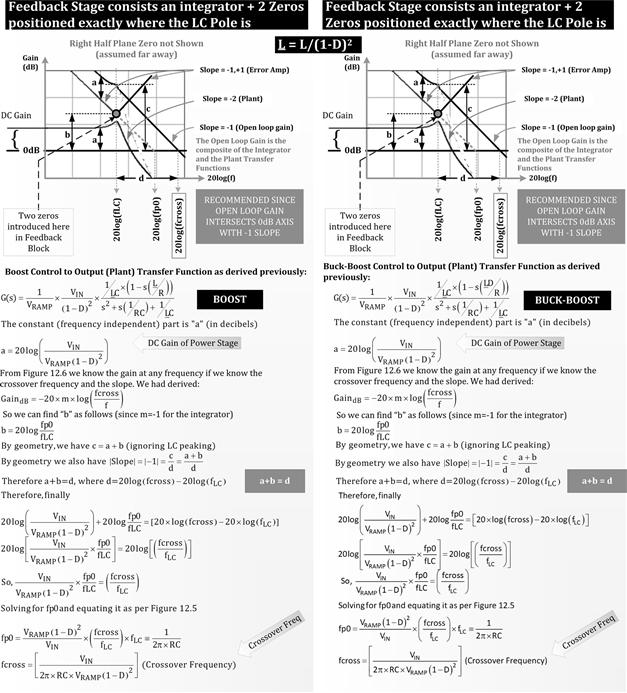

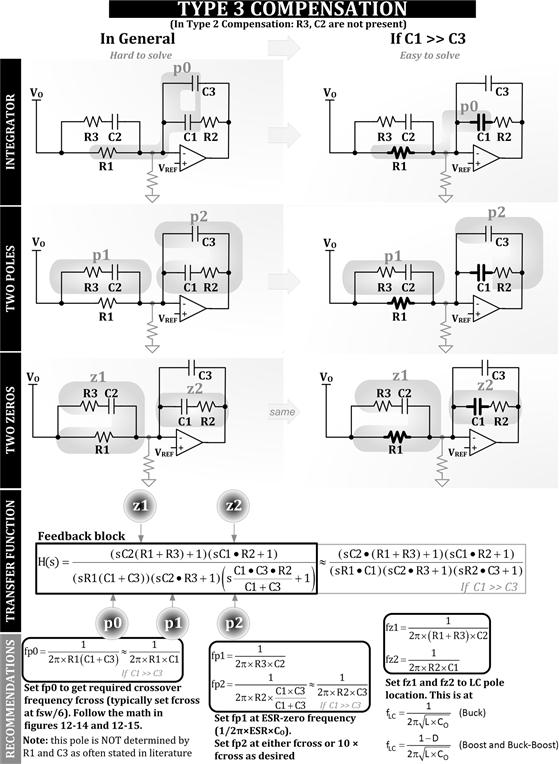

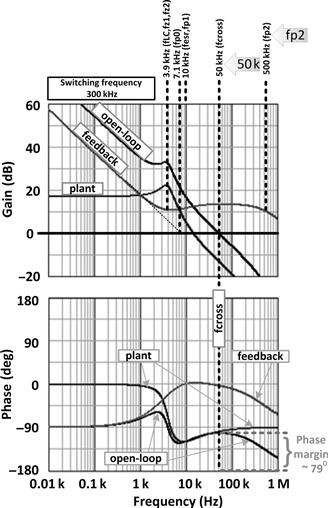

• The error amplifier can also be a voltage-to-current amplification device, that is, the “gm op-amp” (operational transconductance amplifier, or “OTA”). This is an open-loop amplifier stage with no local feedback — the loop is, in effect, completed externally. The end result still is that the voltage at its input terminals returns to the same voltage (just like a regular op-amp). If there is any difference in voltage between its input pins “ΔV,” it converts that into a current ΔI flowing out of its output pin (determined by its transconductance gm=ΔI/ΔV). Thereafter, since there is an impedance ZO connected from the output of this op-amp to ground, the voltage at the output pin of this error amplifier (i.e., the voltage across ZO — also called the control voltage) changes by an amount equal to ΔI×ZO. For the gm op-amp, both Rf2 and Rf1 enter into the AC analysis, because they together determine the error voltage at the pins, and therefore the current at the output of the op-amp. Note that the divider can in this case be treated as a simple (step-down) gain block of Rf1/(Rf1+Rf2) (using the terminology of Figure 12.10), cascaded with the gm op-amp stage that follows.
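The cascade described for the gm op-amp can be sketched in a few lines. The example multiplies the divider gain Rf1/(Rf1+Rf2) by gm and by ZO; the choice of a series resistor-capacitor for ZO, all component values, and the function name are assumptions, and the sign (inversion) of the stage is ignored.

```python
import math


def ota_feedback_gain(f_hz, rf1, rf2, gm, r_comp, c_comp):
    """Feedback-stage response: divider * gm * ZO(s).

    ZO is ASSUMED to be a series R-C from the OTA output to ground.
    """
    s = 1j * 2 * math.pi * f_hz
    z_o = r_comp + 1 / (s * c_comp)   # impedance at the OTA output pin
    divider = rf1 / (rf1 + rf2)       # step-down gain of the divider
    return divider * gm * z_o


# Assumed values, for illustration only
RF1, RF2 = 10e3, 30e3        # divider resistors, ohms
GM = 1e-3                    # transconductance, A/V
R_COMP, C_COMP = 20e3, 10e-9

for f in (10.0, 1e3, 100e3):
    h = ota_feedback_gain(f, RF1, RF2, GM, R_COMP, C_COMP)
    print(f"f={f:9.1f} Hz  |H|={abs(h):10.3f}  "
          f"({20 * math.log10(abs(h)):6.2f} dB)")
```

At low frequency the capacitor dominates ZO and the gain falls with frequency like an integrator; at high frequency ZO flattens to the resistor value, so the gain settles at divider×gm×R.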