Defining a Cross-Domain Maturity Model

Abstract

This chapter discusses the need to establish a Master Data Management (MDM) maturity model, how to define the right maturity model, and how to apply maturity measurement consistently across a multi-domain model. It also provides examples of various maturity milestones that should be tracked and covers the difference between behavioral and functional measurement of MDM maturity.

Common to any MDM domain strategy is the need to transition master data from an insufficiently managed state to a highly managed and controlled state necessary to achieve goals identified in the MDM program plan. Achieving MDM goals requires a well-orchestrated set of milestones involving various disciplines, practices, capabilities, and quality improvement. Capturing the essence of this as a high-level, measurable roadmap over time is the purpose of a maturity model and the reason that it is an important tool for tracking program progress. A multi-domain maturity model provides the ability to consistently define and track progress across all MDM domains in the program scope. Let’s break this down further, and then provide an example of a maturity model.

Maturity States

MDM and data governance maturity models tend to reflect either a behavioral or a functional approach for measuring states of maturity:

• Behavioral: Expressing maturity from a behavioral perspective using maturity phase descriptions such as Unaware, Undisciplined, Reactive, Disciplined, Effective, Proactive, and Advanced

• Functional: Expressing maturity from a functional perspective using maturity phase descriptions such as Unstructured, Structured, Foundational, Managed, Repeatable, and Optimized

The actual number of maturity phases in either approach can vary, although four or five is the most common. Both types of approach have merit and eventually tend to conceptually align at the advanced states, where end-state maturity is commonly expressed as being an advanced or optimized model and process. Where the two approaches differ is in how the milestones in the earlier stages of the maturity model are rationalized and measured.

From a multi-domain program perspective, the functional approach is most effective for measuring the initiation and maturity of MDM practices and capabilities across the domains as the program plan progresses. This approach assumes that behavioral conditions such as reactive and proactive will continue to exist throughout the functional phases and across domains. In reality, there never is a purely proactive state without reactive situations, which is the reason that using the behavioral approach for measuring multi-domain maturity does not provide a truly comprehensive and progressive measurement. Unplanned or unpredictable conditions will always exist, creating reactive situations that must be addressed at any point in an MDM program even when it has reached a highly managed and optimized state. However, as the MDM program and domain areas increase their functional capabilities, there should be shifts to more proactive behavior and less reactive behavior.

How and Where Can Maturity Be Measured?

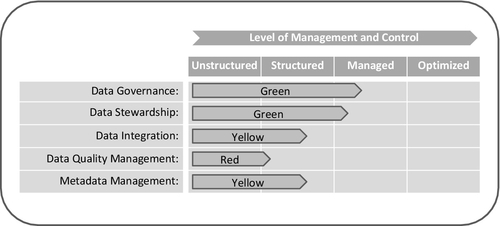

Figure 5.1 is a simple, high-level example of a multi-domain maturity model using the functional approach. This example illustrates the need to gauge maturity across data governance, data stewardship, data integration, data quality management, and metadata management. Defining and measuring the milestones for these practices and capabilities not only helps differentiate where the focus, progress, and issues exist across the multi-domain model, but can also serve as an excellent overall program dashboard for review with a data governance board or an executive steering committee. The actual maturity phases chosen for use in the model can be different from what is shown in Figure 5.1. No matter which phases are chosen, they should reflect maturity states and milestones that are relevant to the program goals and objectives, are consistent and measurable across multiple domains, and will be meaningful to the intended audiences.

Measuring maturity from a functional perspective should not be interpreted as just measuring from a technical or application capability perspective. That interpretation is common, but it overlooks important MDM capabilities that are driven from a business perspective, such as data governance, stewardship, and quality management at the business-process level. MDM maturity should be understood and measured from both a technical and business capability point of view. As shown in Figure 5.1 and later in this chapter in Figure 5.2, measuring maturity at the program and domain levels should reflect milestones that are achieved by improving people, process, and technology capabilities that all contribute to better quality and control of master data.

The dashboard in Figure 5.1 indicates the following conditions:

• The data governance component of the MDM model has been firmly established across the domains in scope, is being well managed and functioning as planned, is achieving its targeted milestones regarding MDM, continues to gain visibility and momentum across the business and information technology (IT) landscapes, and is influential with improving the level of control and data management focus needed for master data.

• The data stewardship discipline and assignments are moving into place well, although a few additional resources still need to be identified or recruited to fill needs for some domain area support roles. The company has recognized the need for establishing formal data steward job roles and is on target for fulfilling these positions.

• In some domains, the plans, architecture, and capability for master data integration are on track and progressing well. In other domains, the master data integration plans are lagging due to delays in the implementation of new enterprise architecture, systems, and transition plans.

• Important data quality profiling and improvement projects are on hold while the IT organization is renegotiating the application licenses and consulting services contracts with its data quality tool vendor.

• With data governance and data stewardship practices firmly established, good headway is being made with some metadata management objectives, but because plans for data integration and data quality improvement capabilities are lagging behind, this has impacted progress with the metadata management plans.
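
A dashboard of this kind can also be held as a small data structure so that lagging disciplines are flagged consistently from one review to the next. The following is a minimal sketch, not a prescribed implementation. It assumes the functional phase names listed earlier in this chapter and treats them as strictly ordered. The phase assigned to each discipline is hypothetical and is not read from Figure 5.1, and the `lagging` helper and its `gap` threshold are illustrative assumptions.

```python
from enum import IntEnum


class Phase(IntEnum):
    """Functional maturity phases; the numeric ordering is an assumption."""
    UNSTRUCTURED = 1
    STRUCTURED = 2
    FOUNDATIONAL = 3
    MANAGED = 4
    REPEATABLE = 5
    OPTIMIZED = 6


# Hypothetical positions, chosen only to illustrate the idea.
dashboard = {
    "data governance": Phase.MANAGED,
    "data stewardship": Phase.FOUNDATIONAL,
    "data integration": Phase.STRUCTURED,
    "data quality management": Phase.UNSTRUCTURED,
    "metadata management": Phase.STRUCTURED,
}


def lagging(dashboard, gap=2):
    """Return disciplines at least `gap` phases behind the most advanced one."""
    lead = max(dashboard.values())
    return sorted(name for name, phase in dashboard.items() if lead - phase >= gap)


behind = lagging(dashboard)
```

With these illustrative values, `behind` names data integration, data quality management, and metadata management, which mirrors the kind of dependency the last bullet describes.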

Data Governance Maturity

The maturity of MDM is highly dependent on the ability of data governance to reach a sufficiently mature state so that it can influence and drive the maturity of the other MDM disciplines and capabilities that this book has been discussing. When and how data governance reaches a mature state will basically come down to a few key elements:

• When ownership, partnership, and cross-functional collaboration are established and continually work well

• When the governance council can repeatedly make timely, actionable decisions

• How effective the governance process is at turning the dials left or right to control its data domain, which involves having control from both a reactive and proactive perspective

The most important part of the MDM process is the ability to recognize and effectively manage business risk and operational issues. This can be accomplished by having established a mature, domain-based data governance dynamic where engagement, review, and decision making are well orchestrated in a timely and fluid process. Domain-based data governance teams aligned with MDM domains will provide timely and effective decision making to support the ongoing MDM goals, objectives, and priorities. Because a domain’s data governance charter can span master data, transactional data, reference data, and metadata, there needs to be clear recognition of the master data and representation from the MDM program office with each domain governance team.

Examples of key MDM milestones for data governance maturity include the following:

• Domain-based data governance charters, owners, teams, and the decision authority have been identified.

• The master data associated with the domains have been identified and approved by data governance from an owning and using perspective.

• Data management policies and standards have been defined and implemented.

• Measurements and dashboards are in place to measure and drive master data quality and control.

Data Stewardship Maturity

For data stewardship to be effective, the concept and practice of data stewardship need to be clearly embedded in the key junction points between the entry and management of the master data. It is at these junctions where governance control and quality control can best be influenced through the use of committed and well-focused data stewards.

Probably the most difficult challenge with data stewardship is getting a sufficiently broad and consistent commitment for the data steward roles. Chapter 7 will cover this topic in more detail. If this challenge isn’t well recognized and addressed early in the MDM and data governance planning stages, the MDM initiative is likely to be slowed. Late emergence of these types of underlying issues can be very disruptive. The concept and expectations for data stewardship must be agreed upon early and applied consistently for MDM practices to be well executed because data stewards will play a large role in the actions associated with MDM.

The ability for data stewardship to reach a mature state requires that the data steward model is well executed and functioning cohesively in alignment with the data governance process. Many milestones targeted in the data governance maturity model need to be executed through the responsibilities and action plans assigned to the data stewards.

Similar to data governance, maturity of data stewardship is gauged by the extent to which this discipline can be orchestrated in a timely and fluid manner, but also by how successfully and consistently the data stewards address these actions. A mature data stewardship model should have a visible, closed-loop process that tracks the major action items and mitigation plans that involve the data stewards. Not having clear or consistent closure from data stewards regarding global and local initiatives usually suggests a problem with accountability or priority and signals that the MDM practice may be too focused on meetings and not focused enough on action.
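
One way to make the closed-loop process visible is to record each steward action item with an owner, a due date, and a closure date, and then list the items that remain open past their due date. The sketch below assumes a hypothetical `ActionItem` record. The field names, steward identifiers, and dates are invented for illustration only.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ActionItem:
    description: str
    steward: str                      # hypothetical steward identifier
    due: date
    closed_on: Optional[date] = None  # None means the item is still open


def overdue(items, today):
    """Open items whose due date has passed: candidates for escalation."""
    return [item for item in items if item.closed_on is None and item.due < today]


items = [
    ActionItem("Resolve duplicate customer records", "steward_a",
               date(2024, 3, 1), closed_on=date(2024, 2, 20)),
    ActionItem("Define supplier naming standard", "steward_b", date(2024, 3, 15)),
    ActionItem("Review product hierarchy changes", "steward_a", date(2024, 6, 30)),
]

late = overdue(items, today=date(2024, 4, 1))
```

Here only the supplier naming item is reported, because it is open and past due. A persistent backlog of such items would be the accountability signal described above.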

Examples of key MDM milestones for data stewardship maturity include the following:

• A data steward model has been identified with processes, tools, and training needs defined for the data steward roles.

• MDM control points and practices have been defined within each domain.

• Data stewards have been identified and assigned to data quality and metadata management control areas.

• Data stewards are engaged, are meeting quality control requirements, and provide regular status updates to their data governance teams.

Data Integration Maturity

As noted in Chapter 1, achieving a single version of the truth (i.e., a golden record) is a primary objective of MDM. While this may not always be entirely possible for all data entities or records due to system or operational reasons, MDM practices should at least minimize occurrences of data duplication or variation by introducing control processes that meet an acceptable level of quality and integrity defined by data governance. Achieving a single version of the truth or minimizing occurrences involves data integration and rules-based entity resolution, as has already been discussed in Chapter 3.

Many master data quality and integrity issues can be traced to data integration activities that lack sufficient standards and rules during integration, or insufficient user inspection and acceptance criteria. The result is that poor data quality is introduced into systems and business operations, which later creates downstream user frustration, process errors, analytical issues, and back-end correction needs—all of which are classic reactions to poorly integrated data. Much of this can be prevented with more upfront data governance engagement, enforcement of quality control standards, and more effective user acceptance requirements. The data governance and data quality teams must be engaged in data integration plans to review and approve the integration standards, rules, results, and acceptance thresholds. The maturity model should include some key milestones that reflect this need.

Examples of key MDM milestones for data integration maturity include the following:

• Data integration projects that affect master data are reviewed by the domain data governance teams.

• Data stewards are assigned to participate in data integration project teams involving master data.

• IT and data governance teams have agreed on the extract, transform, and load (ETL) rules and quality acceptance requirements associated with data integration projects affecting master data.

• Consistent, reusable quality rules and standards are applied to master data integration projects to ensure that data quality is maintained.

Data Quality Maturity

A single version of the truth and data quality management practices go hand in hand. A single version of the truth implies that the available information is unambiguous, accurate, and can be trusted. Those attributes all require that good data quality management practices are occurring. When they are, it indicates that a quality culture exists within the company. Establishing that culture throughout the enterprise is mandatory for achieving maximum benefit from the enterprise data. Data quality is everyone’s responsibility, but that requires organizations to establish the proper foundation through continuous training, clear quality improvement objectives, effective collaboration, and an efficiently adaptive model that can continue to deliver quality results quickly despite constant changes.

To achieve a quality culture and management, there are many programs, mechanisms, roles, and relationships that need to work in harmony. Using the dashboard example presented earlier in Figure 5.1, data quality management cannot reach a mature state unless the data governance, data stewardship, and data integration practices are all enabled, functioning well, and can support data quality requirements. That shouldn’t be a surprise, though, because a quality culture needs people, process, and technology that are all in sync with the recognition, management, and mitigation of quality issues.

Determining the level of cross-functional collaboration for quality management is the first factor in gauging data quality maturity. That collaboration is first established through the maturation of data governance, and then should translate into creating a foundation to drive and mature data quality management. Reaching maturity in data governance should mean that less effort will be needed with data quality management since better governed data needs less correction.

Another key factor in gauging maturity is how well the quality of the data is serving the analytics community. Data quality and integrity issues are the primary cause of the conflict and divide between operational and analytical practices. Solving this gap starts by creating more awareness and connection between data creators and consumers, but ultimately it is about improving the quality, consistency, and context of the master data. Value of the data needs to be measured by how well it is servicing both the operational and analytical process. How valuable the master data is or needs to become should be a well-recognized factor and a key driver in a highly functioning and mature MDM domain model.

Data quality maturity essentially means having reached a state where an acceptable level of quality management and control and a shared view about data value exist between operations and analytics. Reaching this state of acceptability and harmony is, of course, a very tall order. Making significant strides with quality improvement will require time and building out from well-coordinated efforts that are orchestrated from the data governance and data quality forums. This is exactly why MDM and data governance need to be approached as long-term commitments and become well entrenched as priorities in the operational and analytical functions of a company.

Examples of key MDM milestones for data quality maturity include the following:

• A data quality team or cross-functional forum is working in alignment with data governance.

• In each domain, there has been analysis and prioritization of key data quality issues affecting operational and analytical functions.

• Data improvement is occurring, supported by data quality management policies and standards.

• Data quality is being monitored and maintained within governance-approved control targets.
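
The last milestone, monitoring within governance-approved control targets, can be illustrated with a simple comparison of measured quality metrics against agreed minimums. This is a sketch under stated assumptions: the metric names, target values, and measurements below are hypothetical, and a real program would take them from its own data governance decisions and profiling tools.

```python
# Hypothetical governance-approved minimums for one domain.
targets = {"completeness": 0.98, "uniqueness": 0.99, "validity": 0.95}

# Hypothetical measurements from a profiling run.
measured = {"completeness": 0.991, "uniqueness": 0.972, "validity": 0.96}


def out_of_control(measured, targets):
    """Return metrics that are below target; an unmeasured metric counts as below."""
    return sorted(
        metric for metric, floor in targets.items()
        if measured.get(metric, 0.0) < floor
    )


breaches = out_of_control(measured, targets)
```

In this example only uniqueness falls below its target, so it would be the item raised with the domain's data governance team.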

Metadata Management Maturity

Quality management is often discussed in the context of the quality requirements and measurement of the actual data entities and data elements. However, quality of master data assets should include the quality and control of the associated business metadata. Managing quality and control of metadata can be as complex and difficult as the quality and control of the actual data elements. This is because, as with the data itself, the metadata associated with a data element comes in various types and from various sources, which can make consistent control of the metadata very difficult across data domains and models.

Therefore, master data needs to have a metadata management plan that identifies what metadata will be in scope, how it will be managed, and what tools and repositories will be involved. Chapter 2 discussed how metadata needs to be inventoried as part of the domain’s master data plan, and Chapter 10 will provide a deeper exploration of the discipline and practice of metadata management in a multi-domain program. Because many actions are needed to define the metadata plan, organize and execute the control process, and ensure that data governance is involved in this, a few key milestones should be summarized in the maturity model.

Examples of key MDM milestones for metadata maturity include the following:

• The key business metadata associated with a domain’s master data has been identified for quality review.

• Quality management objectives and priorities for the metadata have been established and approved through data governance.

• Enterprise policies and standards for metadata management are in place and being enforced by data governance.

• Key data definitions are distinct, with data steward control processes in place to manage the change control of these definitions.

Domain Maturity Measurement Views

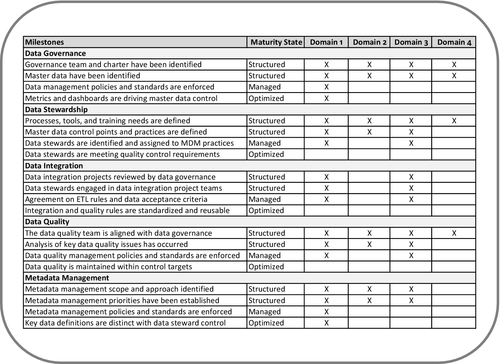

Figure 5.2 provides an example of maturity milestone tracking for each domain in scope. Using this format, many more milestones can be identified and tracked as needed. The achievement of the milestones can also be indicated by using dates and references to the evidence, which can be useful for auditing purposes.
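
To illustrate tracking with dates and evidence references, the sketch below records each milestone per domain and lists any milestone marked as achieved without a supporting reference. The `Milestone` record, the domain names, the dates, and the evidence identifiers are all hypothetical. The milestone wording is abbreviated from the examples given earlier in this chapter.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Milestone:
    discipline: str
    description: str
    achieved_on: Optional[date] = None  # None means not yet achieved
    evidence: Optional[str] = None      # hypothetical document reference


# Hypothetical tracking data for two domains.
tracking = {
    "customer": [
        Milestone("data governance", "Charters, owners, and teams identified",
                  date(2024, 1, 15), "GOV-001"),
        Milestone("data stewardship", "Data steward model identified",
                  date(2024, 2, 10)),
    ],
    "product": [
        Milestone("data governance", "Charters, owners, and teams identified"),
    ],
}


def audit_gaps(tracking):
    """Milestones marked achieved but lacking an evidence reference."""
    return [
        (domain, m.description)
        for domain, milestones in tracking.items()
        for m in milestones
        if m.achieved_on is not None and not m.evidence
    ]


gaps = audit_gaps(tracking)
```

Here the customer domain's stewardship milestone is flagged, since it has an achievement date but nothing an auditor could check.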

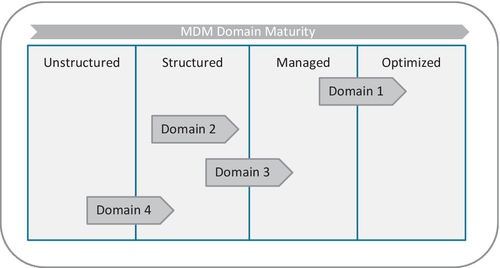

Figure 5.3 expresses the maturity model positioning of each domain based on the milestone tracking and achievements indicated in Figure 5.2. Each maturity level column in Figure 5.3 can be annotated further to show its key milestones as background context for where the domains are being positioned.
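
The positioning step can be made repeatable with an explicit rule. The sketch below assumes one such rule, which is not taken from the figures: a domain sits at the highest phase for which every milestone in that phase and in all earlier phases has been achieved. The phase names follow the functional list given earlier in this chapter. The assignment of milestones to phases and the achievement flags are hypothetical.

```python
PHASES = ["Unstructured", "Structured", "Foundational",
          "Managed", "Repeatable", "Optimized"]


def position(achieved_by_phase):
    """Return the highest phase reached with no gaps below it.

    `achieved_by_phase` maps a phase name to a list of booleans, one per
    milestone assigned to that phase. "Unstructured" is the baseline.
    """
    current = PHASES[0]
    for phase in PHASES[1:]:
        flags = achieved_by_phase.get(phase, [])
        if not flags or not all(flags):
            break  # a missing or incomplete phase stops the progression
        current = phase
    return current


# Hypothetical milestone status per domain.
customer = {"Structured": [True, True], "Foundational": [True], "Managed": [True, False]}
product = {"Structured": [True, False]}

customer_position = position(customer)
product_position = position(product)
```

Under this rule the customer domain is positioned at Foundational and the product domain remains at Unstructured. Other rules, such as weighting milestones or allowing partial credit, are equally possible; what matters is that the same rule is applied to every domain.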

Conclusion

Although reaching a mature state of MDM may not be stated explicitly as a goal or objective in strategy and planning, it certainly is implied in the concept of MDM. In other words, reaching maturity is needed to achieve a successful end state for MDM. It is often overlooked in the MDM planning stages that as much focus needs to go into institutionalizing and maturing these practices as goes into initiating them. First, it is necessary to determine what constitutes a mature MDM model and how this can be gauged.

In this chapter, a methodology has been presented for defining and measuring the overall maturity of the multi-domain MDM model by gauging maturity more discretely across the five key disciplines. Each discipline area needs to develop in relationship to and in coordination with the other areas, with data governance and data stewardship having the most influence.

Getting to a mature state of MDM can be a slow, deliberate process that requires some adjustments and out-of-the-box thinking. Take the time to consider what constitutes a mature MDM model and how this can be measured. This will enable an effective tracking approach that will provide valuable guidance during your investment in MDM.