Performance Measurement

Abstract

This chapter discusses how to establish a robust performance measurement model, including examples of various types of metrics needed to measure Master Data Management (MDM) activity and performance factors consistently across domains and in relation to the disciplines of data governance, data quality, metadata management, reference data management, and process improvement. It also addresses performance measurement from strategic, tactical, and operational perspectives.

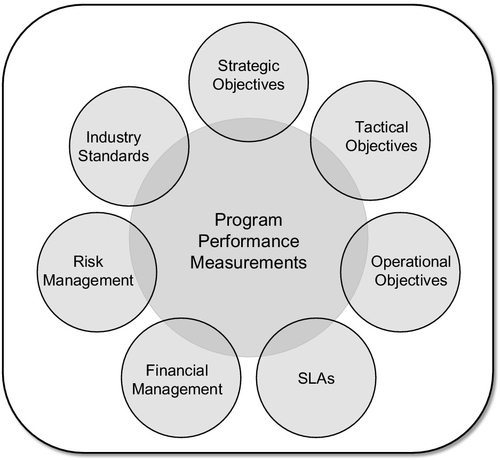

This chapter discusses how to establish a robust performance measurement model across a multi-domain Master Data Management (MDM) program. Many vantage points and factors need to be considered when measuring the program’s performance, including measurement from a strategic, tactical, and operational perspective, from a cross-domain perspective, from a financial and compliance perspective, and in relation to the MDM disciplines of data governance, data quality, metadata management, reference data management, and process improvement. This chapter provides performance measurement examples from each of these perspectives. Figure 11.1 illustrates the various performance measurement areas that will be addressed here.

As was mentioned in Chapter 1, there is no one-size-fits-all plan for multi-domain MDM. There are too many factors that will differ from one company to another, or within a company from one domain to another. However, expanding to a multi-domain model and being able to manage the program consistently require an effective top-down management approach involving strategic, tactical, and operationally focused measurement levels. Specific metrics and status updates are required at each level to enable management actions and decisions.

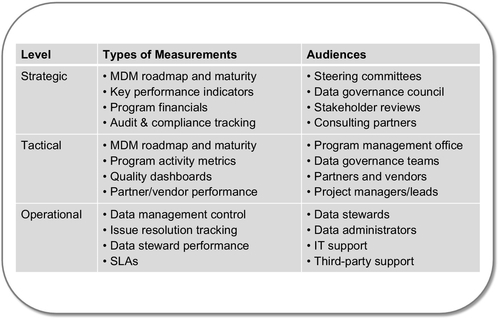

Because of the impact that master data and an MDM program can have on many functional areas, having a variety of well-focused performance measurements defined throughout the program is critical to the effective execution, management, and success of the program. Prior chapters of this book have touched on the importance of program and process area measurements such as those related to maturity, data quality, data integration, data governance, and data stewardship. Specific measurements in each of these areas are needed for the operational and tactical management of the program and contribute to summarized views and executive dashboards needed at the strategic levels. Figure 11.2 provides an example of these types of measurement at the different levels.

Without these types of performance measurements, program management will operate with limited vision. Even if other evidence exists that can reflect areas of program performance, such as meeting minutes, intake log activities, stakeholder testimony, or ad hoc reports that can be pulled together from various MDM and data governance processes, the inability to produce comprehensive, consistent, and well-grounded performance metrics impairs the ability to demonstrate the end-to-end value of the program. The performance of a multi-domain MDM program has many opportunities for measurement at the strategic, tactical, and operational levels. Let’s examine each of these measurement levels more closely.

Strategic-Level Measurements

At the strategic level, steering committees and other executive audiences need to know how the MDM program is tracking to its goals and how this benefits the company, particularly with respect to improving business and information technology (IT) operations and reducing business risk that can result from poor data management. The program goals and objectives should tie back to the MDM value propositions described in the program’s initial business case or return on investment (ROI) analysis (if any) conducted during the program’s planning stages. In order to provide the strategic level of support and ongoing investment needed to sustain a program, a steering committee needs to clearly see progress being made with the program goals and objectives and be informed of any critical path issues that may be affecting this progress. An MDM program will not reach its full potential if the executive sponsors are not well informed and engaged at the strategic level. Let’s take a closer look at important strategic-level performance measurements.

MDM Roadmap and Maturity Tracking

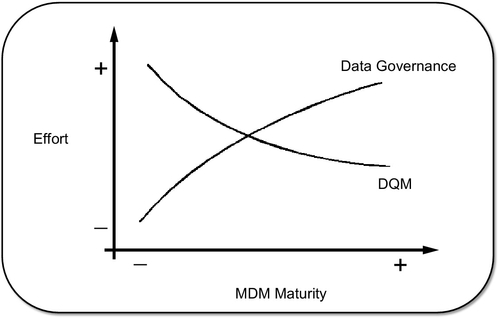

As noted in Chapter 5, MDM domains will likely be at different maturity levels at any particular time depending on the program strategies, priorities, implementation roadmaps, and domain-specific data management capabilities. Data governance and data quality commitment will have the greatest influence on domain maturity. A domain with a high level of data governance focus and Data Quality Management (DQM) capability will enable MDM practices to mature much more rapidly. As data governance practices mature, less effort will be needed for DQM because better-governed data need less correction. Figure 11.3 provides a high-level example of this concept.

Defining and communicating the relationship that data governance and data quality have with MDM maturity will help a program’s steering committee and data governance council recognize the governance and quality investments needed by each domain. Some domains will have more enabling factors to mature than other domains. For example, a Customer domain may have many more opportunities to leverage consultants, best practices, third-party solutions, industry reference data, and other industry standards for managing, maintaining, and measuring customer master data, whereas with Product and Manufacturing domains, MDM has more unique and proprietary aspects and fewer opportunities to leverage external solutions and services. Fewer enabling factors and opportunities can result in slower progress and maturity of the MDM practices in these domains because more internal planning, resources, and effort are required to move these practices forward.

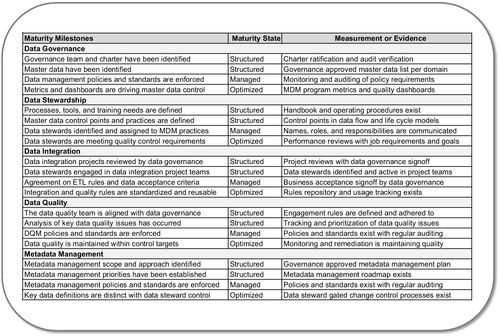

When planning the MDM program roadmap, the Program Management Office (PMO) should quickly identify the information needed to serve as the foundation and baseline data for maturity measurement for each of the major MDM discipline areas: Data Governance, Data Stewardship, Data Integration, Data Quality Management, and Metadata Management. In Chapter 5, Figure 5.2 showed examples of various maturity milestones in each discipline area that can serve as the basis to identify and define more discrete metrics and evidence needed to support the measurement of these maturity milestones. Figure 11.4 provides examples of measurements or evidence supporting the maturity milestones introduced in Figure 5.2.

MDM maturity should not be subjective or hard to articulate. Activity measurements and decisions associated with defining and tracking the program or domain level maturity should be captured as meeting minutes and/or in a program decision log. These will serve as reference points and evidence to support data governance or MDM program audits.

Key Performance Indicators

Key performance indicators (KPIs) should focus on the primary factors that drive the MDM program, improve master data quality, and reduce business risk. Here are some examples of where KPIs should be defined:

Program Performance

• Progress toward the achievement of delivery goals and objectives

• Progress toward the achievement of domain-specific goals and objectives

• Improvement of key capabilities targeted in the program roadmap and maturity model

• Reduction or increase of business risk factors associated with MDM

Data Quality Performance

• The overall state of data quality across each domain area

• Data quality improvement associated with data governance, data stewardship, data integration, or metadata management

• Operational improvement associated with data quality improvements

Program Financials

• Program budget versus program spending/costs

• Running costs of third-party software and data

• Costs related to external consulting services and contingent workforce

• Return on investment (ROI)

Audit and Compliance Tracking

• Performance against MDM program audit requirements

• Performance against regulatory compliance requirements

• Incidences of breaches related to data security and data privacy
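As a rough illustration of the Program Financials indicators listed above, the following minimal sketch derives budget variance, budget utilization, and ROI from three inputs. The class name, field names, and sample figures are all hypothetical, and ROI is computed with the common (benefit minus cost) divided by cost convention, which is an assumption; a program may define it differently in its business case.

```python
from dataclasses import dataclass


@dataclass
class ProgramFinancials:
    """Hypothetical container for financial KPI inputs (illustrative only)."""
    budget: float            # approved program budget for the period
    actual_spend: float      # spending/costs incurred in the period
    realized_benefit: float  # benefit attributed to the program (assumed measurable)

    def budget_variance(self) -> float:
        """Budget minus spend; a negative value signals an overrun."""
        return self.budget - self.actual_spend

    def budget_utilization(self) -> float:
        """Fraction of the budget that has been spent."""
        return self.actual_spend / self.budget

    def roi(self) -> float:
        """(benefit - cost) / cost, one common ROI convention."""
        return (self.realized_benefit - self.actual_spend) / self.actual_spend


# Made-up figures purely for demonstration.
fin = ProgramFinancials(budget=1_000_000, actual_spend=800_000,
                        realized_benefit=1_200_000)
print(fin.budget_variance(), fin.budget_utilization(), fin.roi())
```

Keeping the raw inputs separate from the derived ratios makes it easier to break the same indicators out by domain, vendor contract, or cost category when the steering committee asks for detail.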

Tactical-Level Measurements

At the tactical level, performance measurements will focus more on the program’s key initiatives and processes to produce a more granular set of end-to-end program measurements. These measurements will allow the PMO to have a clear picture of what is driving the program, what is affecting the program goals and objectives, and where adjustments are needed. The results and observations from many of the tactical-level measurements will contribute to the KPIs reported at the strategic level.

MDM Roadmap and Maturity Tracking

The maturity of each domain in relation to the program roadmap and maturity model should be tracked very closely at the tactical level. The maturity milestones noted in Figure 11.4 need to be tracked for each domain in the program scope. Because there will be maturity-enabling or -constraining conditions that will vary with each domain, these conditions must be recognized, monitored, and evaluated at the tactical level to ensure that the MDM program’s plans and priorities can be adjusted when needed to address the variables and maintain program momentum.

For example, each domain can have different types of challenges with executive sponsorship, data steward resources, quality management, data integration, or metadata management. If the PMO does not have sufficient understanding and articulation of the variables, strengths, and weaknesses influencing these domain conditions, the program can struggle with where to focus attention and the tactical plans that are needed to manage the sustainability of the program. Monitoring these conditions across each domain will enable the PMO to best gauge how these conditions are affecting or influencing the program maturity goals and roadmap.
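One possible way to make this domain-by-domain monitoring concrete is to record a maturity level per discipline for each domain and compare it with the roadmap target. The sketch below assumes a 1-to-5 maturity scale and invented target and current levels; the scale, the numbers, and the function name `maturity_gaps` are illustrative assumptions, not values taken from Figure 5.2 or Figure 11.4.

```python
# Hypothetical 1-5 maturity scale; the targets below are invented for illustration.
TARGET_LEVEL = {
    "Data Governance": 4,
    "Data Stewardship": 3,
    "Data Integration": 3,
    "Data Quality Management": 4,
    "Metadata Management": 3,
}

# Invented current assessments for two domains.
current_levels = {
    "Customer": {
        "Data Governance": 4, "Data Stewardship": 3, "Data Integration": 3,
        "Data Quality Management": 3, "Metadata Management": 2,
    },
    "Product": {
        "Data Governance": 2, "Data Stewardship": 1, "Data Integration": 3,
        "Data Quality Management": 2, "Metadata Management": 1,
    },
}


def maturity_gaps(current, target):
    """Return, per domain, the disciplines that are below target and by how much."""
    gaps = {}
    for domain, levels in current.items():
        gaps[domain] = {
            discipline: target[discipline] - levels.get(discipline, 0)
            for discipline in target
            if levels.get(discipline, 0) < target[discipline]
        }
    return gaps


gaps = maturity_gaps(current_levels, TARGET_LEVEL)
for domain, domain_gaps in gaps.items():
    print(domain, domain_gaps, "total gap:", sum(domain_gaps.values()))
```

A view like this only shows where the gaps are; the enabling or constraining conditions behind each gap still need to be recorded alongside it, for example in the program decision log.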

Program Activity Metrics

Program activity metrics are measurements that track important, ongoing program activities that support the fundamental foundation, processes, and disciplines that make up the MDM program. This should include the tracking of key program activities and decisions across data governance, data stewardship, data integration, data quality, and metadata management.

As noted in earlier chapters, the MDM PMO needs to monitor the application and process areas that interact or influence the flow and life cycle of master data. Examining these application and process areas will reveal a significant number of activities associated with the creation, quality, usage, and management of master data. This information can be leveraged to build a program activity measurement model that will support the tactical measurement needs and, in many cases, can be looked at more closely to measure performance specifically at the activity or operational level. For example, at the tactical level, the key quality management initiatives, decisions, and issues across each domain that influence the overall program goals and objectives should be regularly tracked. But to better understand the dynamics that are driving progress or causing issues, there needs to be the ability to closely examine more specific operational and data management activities to understand the process and quality control factors that are influencing positive or negative conditions.

Quality Dashboards

Because of the impact that master data quality has on business operations, customer interactions, and the ability to meet compliance requirements, measuring and managing master data quality will attract the most attention. At the tactical level, the MDM program’s data quality management focus should include not only the quality measurement and management of the master data elements themselves, but also the quality of the business metadata and reference data associated with the master data. Effective tracking and quality control of the master data elements and the associated metadata and reference data will demonstrate a solid DQM and measurement strategy that should be reflected through various metrics and dashboards.

Chapter 9 described quality measurement of the master data elements. A similar approach can be taken with the measurement of the associated metadata and reference data. That is, the quality of the metadata and reference data can also be measured using quality dimensions such as completeness, accuracy, validity, and uniqueness. These types of quality measurements, along with measuring how much of the master data elements and the associated metadata and reference data are under data governance control, should be regularly tracked and reported at the program level. For example, if the most critical master data elements have been identified within each domain, measurements should be established to track the domain performance against the program’s overall quality and improvement goals established by the PMO and data governance teams for these critical data elements and their associated metadata and reference data. A dashboard can be established to compare this DQM performance across each domain.
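To illustrate how some of these quality dimensions might be computed, here is a minimal sketch that scores completeness, validity, and uniqueness for a small set of records. The record layout, field names, and the two-letter country rule are hypothetical. Accuracy is left out because it requires comparison against a trusted reference source, which this sketch does not assume.

```python
import re


def _populated(records, field):
    """Return the non-blank values of a field as stripped strings."""
    values = (str(r.get(field) or "").strip() for r in records)
    return [v for v in values if v]


def completeness(records, field):
    """Share of records in which the field is populated."""
    if not records:
        return 0.0
    return len(_populated(records, field)) / len(records)


def validity(records, field, pattern):
    """Share of populated values that fully match the given regex pattern."""
    values = _populated(records, field)
    if not values:
        return 0.0
    return sum(1 for v in values if re.fullmatch(pattern, v)) / len(values)


def uniqueness(records, field):
    """Share of populated values that are distinct."""
    values = _populated(records, field)
    if not values:
        return 0.0
    return len(set(values)) / len(values)


# Invented sample data for demonstration only.
customers = [
    {"id": "C1", "email": "a@example.com", "country": "US"},
    {"id": "C2", "email": "", "country": "DE"},
    {"id": "C3", "email": "not-an-email", "country": "usa"},
    {"id": "C3", "email": "b@example.com", "country": None},
]

print(completeness(customers, "email"))           # 3 of 4 populated
print(validity(customers, "country", r"[A-Z]{2}"))  # 2 of 3 populated values valid
print(uniqueness(customers, "id"))                # 3 distinct of 4
```

The same functions could be pointed at a metadata or reference data table, for example to check how many critical data elements have a populated business definition, which is one way to put all three kinds of data on a single dashboard.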

In general, being able to track the focus and effectiveness of an MDM program’s quality management performance will require a broad understanding of what aspects of master data are being measured and how the quality is being controlled. Data governance and stewardship will play a large role in the quality management approach, which is an important reason why the PMO and data governance teams will need to work closely together on data-quality priorities and initiatives.

Partner/Vendor Performance

Many aspects of a company’s master data can involve external partnerships and third-party vendors. This is particularly true in a multi-domain model, where various partners and vendors can be engaged at many levels and in many process areas, whether they provide products, consulting services, or third-party data. All partner and vendor engagements should have a contractual arrangement with delivery or performance requirements described through statements of work, service-level agreements (SLAs), or other binding agreements. This performance, along with the budgets and costs associated with the contracts, should be regularly tracked and evaluated, particularly when approaching contract renewal periods and program budget reviews.

Poor partner and vendor management can contribute to MDM program cost overruns, quality management issues, or other conditions that can negatively affect the program roadmap and maturity. For all partner and vendor contracts, the MDM PMO should ensure that there is an account representative closely engaged with the MDM program so that all necessary interactions and performance reviews with the partners and vendors can be effectively and directly managed.

Operational-Level Measurements

Performance measurement at the operational level will focus more on the processes, applications, and data steward activities associated with master data touch points, quality control, and issue management in each domain. The operational level of performance measurement reflects the degree to which the domain teams and operational areas have a good sense of the day-to-day management and control of the master data. The next sections describe some examples of these activity areas.

Data Management Processes

At the heart of MDM are the processes and controls established to manage the quality and integrity of the master data. These processes and controls are embedded in the key discipline areas that have been discussed throughout this book. The performance of these processes and controls at the operational level must be tracked sufficiently within each domain in order for the MDM program and data governance teams to influence them. Let’s break this down further by looking at some of the key areas of MDM performance at the operational level.

Data Quality Monitoring

Master data quality monitoring (such as that described in Chapter 9) and the performance of the surrounding data steward processes associated with these monitoring tasks should be tracked and regularly reviewed at the domain-team level and contribute to overall data quality assessment at the tactical and strategic levels. Because each domain will have different DQM and improvement requirements, it will define and implement quality monitoring and data steward support practices in tune with its specific requirements and operational processes. For example, the business rules required for monitoring and controlling the quality and integrity of master data associated with user customer accounts will be different from the rules for partner or vendor accounts. Similarly, the business rules required for monitoring the quality and integrity of master data associated with medical products will be different from the rules for financial products.

The priorities for data-quality monitoring and the expected quality performance depend on the company’s business model, strategies, and objectives. From an MDM perspective, this will typically resolve to what the PMO and domain data governance processes have identified as the company’s critical data elements (CDEs). As described in Chapter 2, each MDM domain should identify a CDE list that will determine where MDM and quality control should be primarily focused at the operational level.

Metadata Management

Metadata management involves a connected set of processes, roles, and rules involving data modeling, data governance, and metadata administration. There should be data governance-driven policies and standards for how metadata should be handled and maintained across a data life cycle. Governance policies and the governance teams should set the scope and priorities for the control of metadata. As previously noted, the priorities for metadata management should be closely aligned to quality control priorities for MDM, particularly as related to the most critical data elements defined within each domain.

When examining the processes, policies, and rules associated with the creation, use, and documentation of metadata, there should be a number of process points and expectations for how metadata should be managed and controlled. For example, there should be metadata requirements and standards established in solution design processes, with the administration teams supporting metadata repositories, and with the data stewards engaged in change control support of business-oriented metadata. From these process points, activity can be measured against the requirements, rules, standards, and policies. These are all opportunities at the operational level for measuring and tracking metadata management performance.

Reference Data Management

Similar to metadata management, there should be various policies, standards, and processes that influence and control how reference data is managed. Many master data elements will have associated reference data, such as industry or internally defined code sets. Because many applications and processes can share in the use of standard reference data, data governance and stewardship are critical for the consistent, enterprisewide use and control of this reference data.

Reference data requirements will typically be defined during the business requirement and data modeling phases of a solution design process. The deployment and management of reference data usually occur through a reference data application where the registration, change control, and deployment of the reference data are controlled. Where common reference data is widely used across the enterprise and is associated with master data, there should be data governance-driven policies and standards for how it is managed and controlled. It is important that reference data associated with master data is unambiguous and has data steward oversight. Priorities for reference data management should be closely aligned to quality control priorities for master data.

With specific reference data management processes and controls in place, activity can be measured against the requirements, rules, standards, and data governance policies, similar to the approach for metadata management measurement. Again, these are all opportunities at the operational level for measuring and tracking data management performance as it relates to master data.

Data Integration and Entity Resolution

Earlier chapters of this book have addressed the fact that data integration and entity resolution are critical functions of MDM. Correct data integration and entity resolution are vital to the accuracy of many types of master data, such as customer, partner, sales, service, and financial data. The performance and quality results from data integration and entity resolution functions need to be monitored and managed at the operational level, evaluated at the tactical level, and reported appropriately where the quality is driving business improvement or affecting business operations.

The performance of entity resolution is particularly critical where it involves or affects the identity, requirements, or interactions with customers, partners, financial transactions, and regulatory agencies. The accuracy of customers, products, and accounts requires precise processes and rules to correctly resolve data from many sources of transactional data and reference data. These entity resolution processes need to be governed by very specific standards and quality requirements. Any exceptional or unexpected results need to be quickly recognized and addressed to avoid or minimize business impacts and costly remediation efforts.

Issue Resolution Tracking

Chapter 5 discussed the need for the MDM PMO to work with the incident tracking and resolution processes within the company to establish rules and methods that can flag master data issues as they are being reported and progressing through these processes. Being able to characterize these incidents and the actions taken provides valuable insight about how well the company is handling master data issues. How master data issues are being reported, what is being reported, what is not being resolved, and the reasons why issues are not being resolved all provide valuable insight to allow the PMO to better capture, evaluate, and report what is happening.

Being able to assess and categorize master data issues and resolution information in relation to data governance, data stewardship, data integration, DQM, and metadata management helps provide a more granular view of MDM and of any support improvement opportunities for the PMO to pursue. There are many points in the flow of master data where issues are being caught and resolved, but also many points where issues are not. Missed opportunities at the source and during other touch points result in master data quality and integrity issues downstream in the data hubs or enterprise warehouses, where there is more exposure to data issues and where more cost and effort are often required to correct them.
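As a simple illustration of this kind of categorization, the sketch below computes resolution rates for flagged master data issues, grouped either by domain or by MDM discipline. The issue records, their field names, and the function `resolution_rates` are hypothetical; in practice the data would come from whatever incident tracking system the company already uses.

```python
from collections import Counter

# Invented issue log entries; real records would come from the incident tracking process.
issues = [
    {"id": 1, "domain": "Customer", "discipline": "data quality", "resolved": True},
    {"id": 2, "domain": "Customer", "discipline": "data integration", "resolved": False},
    {"id": 3, "domain": "Product", "discipline": "data quality", "resolved": True},
    {"id": 4, "domain": "Product", "discipline": "metadata management", "resolved": False},
]


def resolution_rates(issue_log, key):
    """Return the share of resolved issues for each value of the grouping key."""
    totals = Counter()
    resolved = Counter()
    for issue in issue_log:
        totals[issue[key]] += 1
        if issue["resolved"]:
            resolved[issue[key]] += 1
    return {group: resolved[group] / totals[group] for group in totals}


by_domain = resolution_rates(issues, "domain")
by_discipline = resolution_rates(issues, "discipline")
print(by_domain)
print(by_discipline)
```

Grouping the same log by different keys is what gives the more granular view: a domain can look healthy overall while one discipline within it accounts for most of the unresolved issues.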

Earlier in this chapter, it was mentioned that as data governance practices mature, less effort will be needed for DQM because better-governed data needs less correction (refer to Figure 11.3). Issue resolution tracking and the ability to determine where more monitoring and control can be implemented earlier in the master data flow is a key factor in producing better-governed data.

Data Steward Performance

Chapter 7 indicated that data stewards need to be closely aligned to the touch-point and consumer areas where master data entry, usage, management, and quality control can be most influenced. Being able to achieve and maintain a well-functioning and well-positioned network of data stewards within a master data domain is a maturity factor that needs regular evaluation and attention. At the operational level, data stewards should have clear, measurable data management or quality management responsibilities, such as in support or gatekeeper roles related to master data integration, entity resolution, metadata management, code set change control, incident resolution, quality monitoring, and so on. All of these roles should have clear job descriptions and performance expectations that can regularly be evaluated and adjusted as needed.

Across a multi-domain model, data steward positioning and performance can vary greatly. Some domains can be well advanced with their data steward objectives and practices, whereas other domains may be further behind or even struggling to put data steward resources into place at all. Similar to the ability to track and characterize master data issues and resolution actions, the ability to measure data steward performance across each domain at the operational level and across each MDM discipline will provide valuable insight for the PMO to evaluate and articulate further at the tactical and strategic levels.

Well-positioned data stewards provide a front line of defense for detecting master data issues within operational processes or that flow from one system to another. Operational-level data stewards can help characterize how the source applications and local processes work, their limitations with regard to master data quality control, and the opportunities to better manage this, and they can even take the lead locally to improve awareness and training at the data creation and touch points where quality issues emerge. Even without formal data monitoring and quality controls in place at the source level, data stewards that have a good sense of these process areas can help define and enforce MDM policies and quality standards that can greatly reduce the potential for issues to occur at the operational level. Therefore, the PMO should work closely with these data stewards to define operational level performance measurements.

Service-Level Agreements

Having SLAs is common at the operational level, where there are time-critical requirements associated with data management and corrective action activities. It is important that SLAs reflect reasonable and achievable performance expectations; otherwise, they quickly become an ineffective instrument that may only result in creating contention between parties if expectations cannot be reasonably met. Also, consider that it is far better to allow some latitude and demonstrate patience while getting the support needed than to regularly disparage a support team for not always meeting the SLA terms and conditions.

Support teams generally want to meet their obligations and have happy customers, but backlogs, resource issues, and shifting priorities are all realities when dealing with service delivery and issue resolution. Quality improvement needs typically compete with, and often have a lower priority than, operational support needs. So keep this in mind and be prepared to accept or make adjustments to SLAs from time to time.

By working with the issue resolution teams, with data stewards at the operational level, and with the application support teams in IT, the MDM PMO should be able to determine which SLAs are most important to the quality, support, and timely availability of master data. From that, the PMO can determine where SLA performance should be tracked and adjusted where needed. Here are just a few examples where SLA performance may need to be tracked:

• Refreshing of third-party master data or industry reference data

• Corrective action time for entity resolution process issues or exceptions

• Availability of master data from service partners

• Master data support or data maintenance activity from offshore workers

• Vendor response time related to MDM product issues

In general, SLAs should be tracked in all areas where there are critical data management functions that affect the quality and integrity of master data. These SLAs provide yet another opportunity within each domain for the PMO to examine and understand the many factors that can influence MDM in a multi-domain model.
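A minimal sketch of SLA tracking, assuming each tracked activity is logged with an opened and a closed timestamp, might look like the following. The 24-hour target, the sample timestamps, and the function name `sla_compliance` are illustrative assumptions rather than recommended terms.

```python
from datetime import datetime, timedelta


def sla_compliance(events, target):
    """Return the share of (opened, closed) events completed within the target.

    Returns None when there are no events, so that "no data" is not
    mistaken for 0% compliance.
    """
    if not events:
        return None
    met = sum(1 for opened, closed in events if closed - opened <= target)
    return met / len(events)


# Invented corrective-action records for entity resolution exceptions.
opened = datetime(2024, 1, 1, 9, 0)
corrections = [
    (opened, opened + timedelta(hours=4)),
    (opened, opened + timedelta(hours=24)),  # exactly on target counts as met
    (opened, opened + timedelta(hours=30)),  # missed
    (opened, opened + timedelta(hours=12)),
]

rate = sla_compliance(corrections, timedelta(hours=24))
print(f"SLA compliance: {rate:.0%}")
```

Tracking the rate over time, rather than reacting to individual misses, fits the earlier point that SLAs should stay reasonable and be adjusted when backlogs or priorities shift.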

Other Factors That Can Influence Performance Measurements

Other external or internal conditions can emerge that can influence MDM program performance focus and require additional attention. MDM program priorities and performance goals can be influenced by changes in corporate strategies, mergers and acquisitions, new industry standards, or government regulations. For example, significant changes in a company’s sales numbers, marketing strategies, or financial performance will likely create shifts in data management and analytical strategies. Master data need to be monitored and managed in line with a company’s business priorities. Therefore, the MDM PMO and steering committee need to constantly evaluate these priorities to keep the program focused on providing business value.

Each business unit within a company will have some form of strategic roadmap aligned to the corporate goals and strategies. Often, these are three- to five-year strategic roadmaps. The MDM PMO, steering committees, and data governance council should work together to review and align their data and quality management goals with the strategies and goals at the business unit and corporate levels at least annually.

Customer satisfaction issues that result from master data problems can also have a significant influence on the master data management priorities and performance focus. Customer dissatisfaction can often result from data-quality issues that affect customer identity, customer analytics, and service delivery, or it can stem from data management and information security bugs that create data privacy and regulatory compliance problems. When these types of issues occur, the MDM PMO and data governance teams need to respond quickly to help the mitigation and status reporting efforts.

Also, MDM programs will always have many processes and performance improvement needs that will be reflected in the program’s roadmap and maturity model assessment. As new MDM products, new benchmarks, and industry best practices emerge, an MDM PMO should take opportunities to evaluate the performance of its current products and practices against the latest products and practices, particularly where improvement can lead to competitive advantage. Attending industry conferences, engaging a consulting firm to do a program or process assessment, or simply staying in touch with the many MDM-oriented blogs, forums, user groups, and social media channels will keep you up to date on the latest MDM trends and perspectives that can help you identify whether capability enhancements should be explored to improve program performance.

Conclusion

This chapter discussed how to establish a robust performance measurement model, including examples of various types of metrics needed to measure MDM activity and performance factors consistently across domains and in relation to the disciplines of data governance, data quality, metadata management, reference data management, and process improvement. It also covered performance measurement from the strategic, tactical, and operational perspectives, indicating how measurement at the operational level influences what is reported up to the strategic level and how many KPIs reported at the strategic level need to be studied to identify the performance factors at the domain and operational levels.

Defining performance measurements and KPIs is a necessary step in the MDM planning and readiness process in order to avoid blind alleys at implementation. Through regular MDM program reviews, the PMO needs to keep a watchful eye on these measurements from both a data-management and quality-performance perspective. As with any performance or quality measurement, the requirements, rules, and criteria likely need to be adjusted over time as quality improvement occurs or corporate strategies change, or if unexpected business impacts emerge that must be addressed.

The MDM PMO and data governance council should conduct an annual review of the existing metrics and performance measurement goals to determine the need for any new metrics or to refocus performance measurement to ensure that MDM program maturity and performance continue to be measured effectively across the MDM model and are aligned with corporate strategies. It will be incumbent on the MDM PMO and data governance council to define a robust set of measures to track program performance and quality of the master data across each domain so that they can fully understand how people, process, and system events affect this data. As is often said, it is very hard to control what cannot be seen and measured. Therefore, the PMO should be diligent about the many scenarios that affect MDM and the opportunities to set expectations or goals and measure performance.

Be sure that performance measurements have a purpose, track important aspects of the program aligned to the key goals and objectives, and will influence decisions that improve the program’s ability to manage and control master data. Over the long haul, a multi-domain MDM program risks failure if there are insufficient performance measurements to navigate and manage the program at the strategic, tactical, and operational levels. The next and final chapter will address the topic of continuous improvement, which will be directly influenced by what the program is able to measure and where improvement can best be focused.