In Chapter 2, we talked about the query optimization process and how it uses the indexes, constraints, and other objects within your database in order to figure out how best to satisfy the query. All those objects in combination are how the optimizer arrives at its estimated values. However, one of the single biggest driving factors in what leads the optimizer to choose one method of query behavior over another is row counts. Since SQL Server can’t count all the rows ahead of time, it maintains information about the distribution of data within a column. That information is called statistics. Statistics are used to establish estimates on row counts for the optimizer so that it can make better choices.

Statistics in query optimization

The behavior of statistics on columns with indexes

Statistics on nonindexed columns when used in join and filter criteria

Analysis of single-column and multicolumn statistics, including the computation of selectivity of a column for indexing

Statistics maintenance

Effective evaluation of statistics used in query execution

Statistics in the Query Optimization Process

As you know from Chapter 2, the optimizer uses a cost-based optimization process. The optimizer picks the appropriate data access and join mechanisms by determining the selectivity of the data, meaning, and how unique is it. Columns in the SELECT clause don’t need statistics. It’s the columns used in the filtering mechanisms—WHERE, HAVING, and JOIN—that have to have statistics. When an index is created (we’ll be discussing indexes in detail in Chapter 8), statistics are automatically created based on the data contained within the column or columns that define that index. Statistics will also be automatically created, by default, on columns that are not a part of an index if those columns are used in filtering criteria.

Having the statistics about the data and the distribution of the data in the columns referenced in predicates is a major driving factor in how the optimizer determines the best strategies to satisfy your query. The statistics allow the optimizer to make a fast calculation as to how many rows are likely to be returned by a given value within a column. With the row counts available, the optimizer can make better choices to find the more efficient ways to retrieve and process your data. In general, the default settings for your system and database will provide adequate statistics so that the optimizer can do its job efficiently. Also, the default statistics maintenance will usually give the optimizer the most up-to-date values within the statistics. However, you may have to determine (through the information in the “Analyzing Statistics” section) if your statistics are being adequately created and maintained. In many cases, you may find that you need to manually take control of the creation and/or maintenance of statistics.

Statistics on Rowstore Indexed Columns

The degree to which a given index can help your queries run faster is largely driven by the statistics on the column, or columns, that define the key of the index. The optimizer uses the statistics from the index key columns to make row count estimates, which drive many of the other decisions. SQL Server has two ways of storing indexes: rowstore and columnstore (we’ll be covering them both in Chapter 9). For rowstore indexes, statistics are created automatically along with the index. This behavior cannot be modified. You can add statistics to a columnstore index if you choose. Nonclustered indexes added to columnstore indexes do have statistics because they are still rowstore indexes.

Changes in data can affect index choice. If a table has only one row for a certain column value, then using the index could be a great choice. If the data changes over time and a large number of rows now match that value, it could be that the index becomes less useful. This is why you need to ensure that you have up-to-date statistics.

By default, SQL Server will update statistics on your indexes as the indexed column’s data changes over time. You can disable this behavior if you choose. There is a setting called Auto Update Statistics that can be changed to control this behavior.

Default automatic statistics maintenance

Table Type | Number of Rows in the Table | Update Threshold |

|---|---|---|

Temporary | Less than six | Six updates |

Temporary | Between six and 500 | 500 updates |

Permanent | Less than 500 | 500 updates |

Both | Greater than 500 | MIN(500+(0.2 * n), SQRT(1,000 * n)) |

In the calculation, “n” represents the number of rows in the table. You’re basically getting the MIN, or minimum, of the two calculations. Let’s assume a larger table with 5 million rows. The first calculation results in a value of 1,000,500. The second calculation results in a value of 70,710. This would then mean that data modifications to only 70,710 rows will result in a statistics update.

Old statistics maintenance thresholds

Table Type | Number of Rows in the Table | Update Threshold |

|---|---|---|

Temporary | Less than six | Six updates |

Temporary | Between 6 and 500 | 500 updates |

Permanent | Less than 500 | 500 updates |

Both | Greater than 500 | 500 + (.2 * n) |

With the older method, more than 1,000,500 rows would have to be inserted, updated, or deleted before the statistics would update automatically. As you can see, that would mean a lot fewer updates, therefore, more out-of-date statistics.

You can also make the choice to update statistics asynchronously. Normally, when statistics need to be updated, a query stops and waits, until that update is complete. However, with asynchronous statistics updates, the query will complete, the statistics will update, and then any other queries using those statistics will get the updated values.

You can disable the automatic statistics maintenance using the ALTER DATABASE command. I strongly recommend that you keep this enabled on your systems. Exceedingly few systems suffer from the automatic statistics maintenance, and most systems benefit from it. You can decide to turn on the asynchronous statistics update if you’re seeing timeouts and waits caused by the statistics update.

I’ll explain ALTER DATABASE later in the chapter.

Benefits of Updated Statistics

Creating a table and index

Retrieving a single row from the table

A flow diagram exhibits, from right to left, nonclustered index seek and R I D lookup with 50% costs, nested loops with 0%, and select with 0%.

An Index Seek is used to retrieve data

Creating and starting an Extended Events session

Adding a single row to the Test1 table

A table has name, timestamp, batch text, duration, logical r e, and c p u time columns and 2 rows. The s q l batch completed entry in the second row is highlighted and where an arrowhead points to.

Extended Events output showing no statistics updates

Adding 1,500 rows

A flow diagram exhibits, from right to left, the connection from table scan with 100% cost, 1502 of 1502, to select with 0% cost.

Execution plan showing a table scan

A table has name, timestamp, batch text, duration, logical r e, statistics list, and status columns and 3 rows. The auto stats entry in the first row is highlighted.

Session output including the auto_stats event

Since we updated so many rows, we exceeded the threshold, so the statistics were updated. I captured the column showing the statistics_list and status from the auto_stats event. You can see that auto_stats was called twice: once, to load and update the dbo.Test.i1 statistics and the second time showing that those statistics were successfully updated. Then, the query ran. The statistics were updated, which resulted in a change to the execution plan. It now has a single table scan instead of 1,501 seek operations. This also illustrates why you want to test carefully if asynchronous statistics updates are desirable. This query could have run with the old execution plan.

Drawbacks of Outdated Statistics

In the previous section, the statistics within SQL Server were automatically updated. The updated statistics are then used to create a new, better, execution plan. That illustrates the advantages of up-to-date statistics. Statistics can become outdated. Then, the optimizer may make poor choices that don’t accurately reflect the data within the system, which will lead to bad performance.

Disabling automatic update of statistics

- 3.

Add 1,500 rows to the table using Listing 5-3.

A flow diagram exhibits, from right to left, nonclustered index seek and R I D lookup with 40% and 59% costs, nested loops with 0%, and select with 0%.

Inaccurate execution plan due to out-of-date statistics

Because the automatic statistics maintenance has been disabled, the optimizer chose an execution plan based on bad estimates of row counts. You readily see that in the execution plan in Figure 5-5 where the estimated number of rows is 2 and the actual is 1,501, a 75050% variance.

The number of logical reads and the duration are significantly higher with out-of-date statistics. That performance degradation and the difference in the number of reads are despite the fact that we are running the exact same query with the exact same result sets. The differences in how the optimizer satisfies a query, even with everything else being identical, result in serious performance changes. The benefits of keeping statistics up to date usually far outweigh the cost of performing the updates.

Enabling automatic statistics update

Statistics on Nonindexed Columns

It’s very common to see columns that are not a part of the index key being used in filtering and join criteria. When this happens, the optimizer still needs to understand the cardinality and data distribution of the column, just like one that has an index on it, in order to make better choices for the execution of the query.

While a column not taking part in an index means that no index exists to help retrieve the data, the optimizer can still use the statistics to create a better plan. This is why, by default, SQL Server will automatically create indexes on columns used for filtering. One scenario where you may consider disabling the automatic creation of statistics is where you are executing a series of ad hoc T-SQL queries that will never be executed again. It’s possible that creating statistics on columns in this scenario could cost more than it benefits you. However, even here, testing to validate how performance is positively or negatively impacted is necessary. For most systems, you should keep the automatic creation of statistics enabled unless you have very clear evidence that the creation of those statistics is actively causing your system pain.

Benefits of Statistics on a Nonindexed Column

Two test tables with varying data distributions

Checking database properties using DATABASEPROPERTYEX

Enabling the automatic creation of statistics

Joining the two test tables

A flow diagram exhibits, from right to left, tests 2 and 1 of clustered index scans with 27% costs, nested loops with 46%, and select with 0%.

Execution plan on two tables without indexes

You can see that the pipes estimated data movement correlates with the actual data, very thin for Test2 and much thicker for Test1. After combining the data in the Nested Loops join, the data output pipe is still thick, representing the 10,000 rows being accessed.

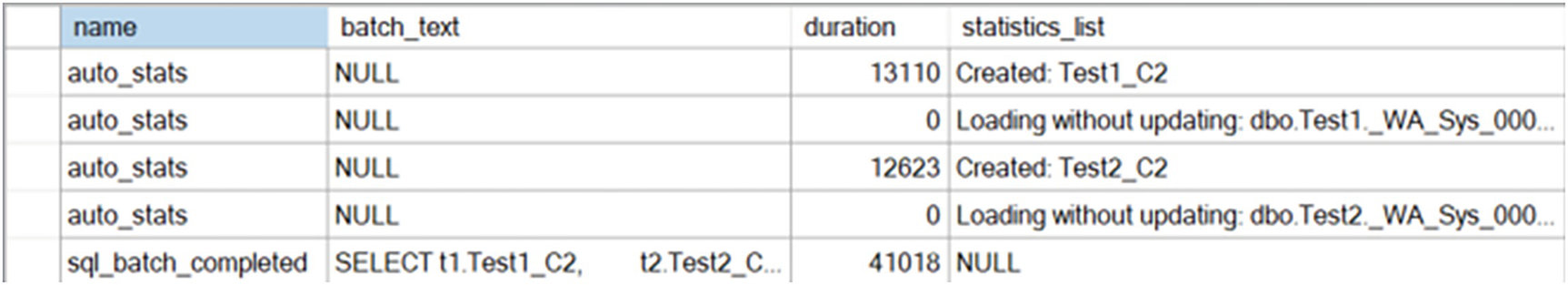

A table has name, batch text, duration, and statistics list columns and row entries of 4 auto stats and 1 s q l batch completed.

Extended Events session showing auto_stats events

Querying the sys.stats table to see table statistics

A zoomed section of an application exhibits a table with name, auto created, and user created columns and 2 rows under the Results tab. The i 1 entry under name is highlighted.

Automatic statistics created for table Test1

The auto_created column lets you know that statistics were automatically created with a value of 1. However, you can also see the standard naming convention with “_WA_Sys*” for all automatically created statistics. Of note here is that the statistics for the index, i1, were automatically created along with the index, but they don’t actually count as being auto_created.

Filtering on different criteria

A flow diagram exhibits, from right to left, tests 1 and 2 of clustered index scans with 27% costs, nested loops with 46%, and select with 0%.

Execution plan changes as the data returned changes

If you were to execute the query, you wouldn’t see any auto_stats events since the statistics are already in place. What we do see is that by changing the query, the execution plan has changed. In Figure 5-6, Test2 was the inner table of the Nested Loops join operation. In Figure 5-9, we’ve swapped to Test1 being the inner table. The optimizer can justify this change based on the difference in the data as reflected in the statistics that were automatically created on the columns in question.

Obviously, if we were concerned with improving performance, we’d likely need to add indexes to the columns in question. However, you can see that the optimizer can use statistics on nonindexed columns that will change plan behaviors.

Comparing Performance with Missing Statistics

Disabling the automatic creation of statistics

A flow diagram exhibits select at 0% cost as an endpoint that test 2 of clustered index scan at 22% cost and test 1 of clustered index scan, with respective warnings, with the same cost via a table spool at 20% connects to via an x marked nested loop at 35% costs.

Execution plan with a number of warnings

Columns With No Statistics: [AdventureWorks].[dbo].[Test2].Test2_C2

This shouldn’t be a surprise since we reset our table and disabled the ability of SQL Server to create statistics. So the optimizer recognizes that these columns are in use in filtering operations, but without statistics.

No Join Predicate

In short, because there is no way for the operator to estimate row counts, it’s effectively assuming that all values will have to be matched to all values. Because of this, it has chosen a Table Spool, a temporary storage mechanism within execution plans, as a way to store the data read from the Clustered Index Scan against table Test1. By doing this, it thinks it can reduce the number of times it has to scan the table.

The number of reads and the duration were much higher on the query without statistics. Because it didn’t have statistics, the optimizer simply made guesses, using mathematical heuristic calculations of course, as to how the data might be distributed. These guesses are simply not as accurate as having actual data for decision-making.

If you do disable the automatic creation of statistics, it might be a very good idea to keep an eye on just how many queries have a need for statistics. Extended Events provides an event called missing_column_statistics.

Analyzing Statistics

Header: Information about the set of statistics you’re looking at

Density Graph: A mathematical construct of the selectivity of the column or columns that make up the statistic

Histogram: A statistical construct that shows how data is distributed across actual values within the first column of the statistic

The most commonly used of these data sets is the histogram. The histogram will show a sample of the data, up to 200 rows worth, and a count of the occurrences of values within each step, one of the 200 rows. If the column allows NULL values, you may see 201 rows, with one added for the NULL value. The steps are generated from the data in question, from a random distribution across the data.

Creating a testing table for statistics analysis

Retrieving statistics using DBCC SHOW_STATISTICS

3 tables with varying numbers of columns and 1, 1, and 2 rows under the Results tab. First Index, 0.5, and 1 as entries in the first row of each table under the name, all density, and range hi key columns are highlighted.

Statistics on index FirstIndex

You get three distinct result sets from the SHOW_STATISTICS command. I outlined what they are at the start of the chapter: Header, Density Graph, and Histogram. Let’s step through these in a little detail and discuss what each means.

Header

The Header contains information about the statistics. Some of the data is straightforward such as the Name and the Updated value. The rest is descriptive about the statistics. The Rows column represents the number of rows in the table at the time that the statistics were created or updated. The Rows Sampled column shows how many of the rows were sampled to create the statistics, in this case, 10,001. The header lists how many Steps are in the histogram, here, only 2. The Density is shown as the average key length for the index. The rest of the columns are just describing the state of the statistics in question and are dependent on other settings, which we’ll cover later in the chapter.

Density

The next data set, visible in the middle of Figure 5-11, is the measure of the density. When creating an execution plan, the optimizer analyzes the statistics of columns used in JOIN, HAVING, and WHERE clauses. A filter with high selectivity limits the number of rows that will be retrieved from a table, which helps the optimizer keep the query cost low. A column with a unique index will have a very high selectivity since it limits the number of matching rows to one.

On the other hand, filters with low selectivity will return larger results from the table. A filter with low selectivity can make a nonclustered index ineffective. Navigating through a nonclustered index to the base table for a large result set is less efficient than simply scanning the clustered index (or the base table in the case of a heap).

Statistics track the selectivity of a column in the form of a density ratio. There are two calculations: one for a single column set of statistics like what we’re dealing with here and a second for compound statistics consisting of more than one column. We’ll cover the compound statistics in a later section. The basic calculation is as follows:

Density = 1/Number of distinct values for a column

Calculating the density value

The results will be the same as those shown in Figure 5-12.

The density is used to estimate the number of rows when the histogram won’t work. The calculation is simple: multiply the density value times the number of rows to get an estimate on the average number of rows for any given distinct value within the table.

Histogram

RANGE_HI_KEY: The top value of each range. There may or may not be values within the range. This value will be an actual value from the data in the column. If it’s a number column, like our INT in the example, it will be a number. If it’s a string, as we’ll show in later examples, it will have some value within the column shown as a string. For example, “London” from a City column.

EQ_ROWS: The number of rows within the range at the point when the statistics were updated, or created, that match the RANGE_HI_KEY value.

RANGE_ROWS: The number of rows between the previous top value and the current top value, not counting either of those two boundary points.

DISTINCT_RANGE_ROWS: The number of distinct values within the range. If all values are unique, then the RANGE_ROWS and DISTINCT_RANGE_ROWS will be equal.

AVG_RANGE_ROWS: The number of rows equal to any potential key value within the range. Basically, RANGE_ROWS/DISTINCT_RANGE_ROWS is the calculation to arrive this value.

Retrieving statistics from the Sales.SalesOrderDetail table

A table has unlabeled, range hi key, range rows, e q rows, distinct range rows, and a v g range columns and 13 rows labeled 81 to 93.

Histogram from the IX_SalesOrderDetail_ProductID statistics

So now, let’s assume we have a value, 827. If we look at the RANGE_HI_KEY values, the closest one, above our value, is 831. If we then look over at the AVG_RANGE_ROWS, we get the value 36.66667. If the optimizer were creating an execution plan, it would estimate that there would be 36.66667 rows that matched the value, 827.

Cardinality

This estimate is predicated on the idea that columns of data are related to one another. By getting the power of 1/2 of the selectivity, then 1/4, 1/8, etc., depending on the number of columns involved, the data is treated as if the columns were interrelated, which they usually are.

Another calculation takes effect when dealing with monotonically increasing values, such as an IDENTITY column. With a fresh set of statistics, which have been created using a FULLSCAN (explained later in the “Statistics Maintenance” section), everything simply works as expected. However, if you have used a sampled method to create the statistics, or there have been additions to the table, then the cardinality estimation assumes an average number of rows from the statistics. Prior to SQL Server 2014, the assumption was that all data only returned one row, whether it did or not. The newer method ensures more accurate execution plans in most cases. However, if you have uneven distribution in your data, referred to as skewed data, it can lead to bad cardinality estimations that can result in badly performing execution plans.

You can use Extended Events to observe how cardinality estimations get made. It’s only useful if you really don’t understand where a particular estimate is coming from. Usually that information is easily correlated between the information in the execution plan and the statistics on the columns or indexes. The event in question is query_optimizer_estimate_cardinality.

The query_optimizer_estimate_cardinality event is part of the Debug channel within Extended Events. The Debug events should be used with care, after careful testing on nonproduction systems. The events in the package are subject to change or removal without notice.

Extended Events session for query_optimizer_estimate_cardinality

SELECT query against several tables

A table with 4 columns and 5 rows with highlighted query optimizer estimate cardinality entry. The details of this event are exhibited as a table with field and value columns and 9 rows.

Session showing output from the query_optimizer_estimate_cardinality event

You can see the auto_stats events firing as they did earlier. Then, highlighted, I have the first query_optimizer_estimate_cardinality event selected, so you can see all the properties of the event at the bottom of the screen. The two areas we have to focus on are the input_relation value and the stats_collected value. Both are XML. The interesting numbers are the Card values. The first is 460.00 (highlighted), showing the overall cardinality of the table, the number of rows. The second value is 1.00, the estimated number of rows from the statistics in question.

A table has 2 columns and 7 entries. The stats collection I D and 38, as entries on the fifth row, are highlighted.

StatsCollectionId matching the operator to the properties

Again, this is only useful in rare circumstances because, as you can see, the cardinality values are included in the operators. Only if you need to look for obscure issues will this be useful.

Another piece of information returned by this event is the Cardinality Estimation Engine version. This is useful when you’re dealing with upgrades or issues caused by the Cardinality Estimator (we’ll discuss this some more later in the chapter).

This event is not available on Azure SQL Database.

Statistics on a Multicolumn Index

When dealing with an index with a single key column, statistics are defined by the histogram and the density value for that column. When an index has a compound key, more than one column defining the key, then the information is a little different. The histogram stays the same. The first column in a compound key is always the one used to create a histogram. This makes choosing the order of your columns in a key important because only that first column will ever get a histogram, so you want to use the column that has the best data distribution, generally, the most selective column, the one with the lowest density. Then density values include the density for the first column and then for each additional column in the index key. Multiple density values ensure that the optimizer can get more accurate row estimates when multiple columns from the index are used in the predicates of a WHERE, HAVING, or JOIN clause. Here again, column order will matter in the Density calculation as described in the earlier section.

Query with two columns in the WHERE clause

Querying the system tables to return a list of statistics

A table has unlabeled, name, auto created, user created, filter definition, column i d, and column name columns and 1 to 8 labeled leftmost row entries under the Results tab. The first entry, P K Product Product I D, under the name column is highlighted.

List of statistics on the Product table

A table has unlabeled, name, auto created, user created, filter definition, column i d, and column name columns and 1 to 10 labeled leftmost row entries under the Results tab. W A sys 0000000 F and 00000006 are added as the last 2 entries.

Added to statistics to the Product table

As you can see, rather than creating a multicolumn set of statistics, one each for each of the columns in question has been added.

Changing the index to have two keys

3 tables with varying numbers of columns and 1, 2, and 2 rows under the Results tab. First Index, 0.5, and 1 as entries in the first row of each table under the name, all density, and range hi key columns are highlighted.

Statistics with a multicolumn density graph

You can now see that the density has changed to show just the density of the first column and then the density with the addition of the second column. Nothing has changed in the histogram because it’s always, only, on the first column.

Statistics on a Filtered Index

Creating an index on the PurchaseOrderNumber column

3 tables with varying numbers of columns and 1, 2, and 152 rows under the Results tab. I X Test, 0.000262674, and NULL as entries in the first row of each table under the name, all density, and range hi key columns are highlighted.

Statistics on an unfiltered index

Altering the index to make it filtered

3 tables with varying numbers of columns and 1, 2, and 151 rows under the Results tab. The filter expression column with open parenthesis open square bracket purchase order number close square bracket is not null close parenthesis entry is highlighted.

New statistics based on the filtered index

The first thing to note between the information in Figure 5-18 and Figure 5-19 is the number of rows, going from 41,465 to 3,806. You can also see that the key length has increased since we no longer have NULL values. You can also see a Filter Expression for the first time in this chapter. I highlighted it in Figure 5-19.

The density measures are an interesting case. The density is close to the same for both values, but the filtered density is slightly lower, meaning fewer unique values. This is because the filtered data, while marginally less selective, is actually more accurate, eliminating all the empty values that won’t contribute to filtering the data. The density of the second value, which is the clustered index pointer (discussed in Chapter 6), is identical with the value of the density previously because each represents the same amount of unique data. Finally, the histogram shows a NULL value in step 1 in Figure 5-18, but not in Figure 5-19.

You can create filtered statistics as well. Doing this lets you create finely tuned histograms. These are especially useful on partitioned tables. This is necessary because statistics are not automatically created on partitioned tables, and you can’t create your own using CREATE STATISTICS. You can create filtered indexes by partition and get statistics or create filtered statistics specially by partition.

Removing the test index

Controlling the Cardinality Estimator

Database Compatibility Level

Trace flag 9481

LEGACY_CARDINALITY_ESTIMATION database setting

FORCE_LEGACY_CARDINALITY_ESTIMATION query hint

Query Store hint for FORCE_LEGACY_CARDINALITY_ESTIMATION

Changing the database compatibility level

That would make the database behave as if it were a SQL Server 2012 database. This means that much of the more modern behaviors of the database are also disabled. This is generally a poor choice.

Controlling the legacy cardinality estimation engine

It’s much easier to understand what you’re doing and easier to query the behaviors you have set through DATABASE SCOPED CONFIGURATION, available in SQL Server 2016 and greater.

Controlling cardinality through a query hint

A zoomed section of a table exhibits two entries in one row under two columns that read cardinality estimation model version 70.

Execution plan property showing the cardinality estimation engine

And finally, you can use the Query Store hint feature if you’re on SQL Server 2022 or in Azure SQL Database and Azure Managed Instance. We’ll cover the Query Store hint feature in Chapter 6.

Statistics Maintenance

Auto Create Statistics: New statistics on columns with no index

Auto Update Statistics: Updating existing statistics automatically

Sampling rate of statistics

Update Statistics Asynchronously: Updating statistics after query execution

You can control these settings across a database, or you can control them on individual indexes and statistics. The Auto Create Statistics is applicable to columns without an index only because SQL Server automatically creates statistics when an index gets created.

Auto Create Statistics

We’ve already discussed how statistics get created on columns without an index when those columns are used as filtering criteria in a JOIN, WHERE, or HAVING clause. This behavior is enabled by default and generally should be left in place.

Auto Update Statistics

We’ve already discussed how statistics get updated. As before, you should leave the defaults in place unless strong testing shows that disabling it on a given system is worth having out-of-date statistics. If you do disable the automatic update of statistics, ensure that you build your own statistics maintenance routines to ensure your statistics are up to date.

Auto Update Statistics Asynchronously

We’ve mentioned this several times in the chapter, but we haven’t yet explained how it works in detail. When Auto Update Statistics Asynchronously is set to on, the basic behavior of statistics in SQL Server isn’t changed radically. When a set of statistics is marked as out of date through the formula established, the statistics update process does not interrupt the execution of the query, like what normally happens. Instead, the query finishes execution using the older set of statistics. Once the query completes, the statistics are updated. This approach can be attractive because when statistics are updated, the current execution plan is removed from cache and a new execution plan is created. Depending on the query in question, creating a new execution plan could be a time-consuming process, delaying the execution of the query. Rather than making a query wait for both the update of the statistics and the generation of the new execution plan, the query completes its run. The next time the same query is called, it will have updated statistics waiting for it, and it will have to recompile only.

Although this functionality does make the steps needed to update statistics and recompile plans faster, it can also cause queries that could benefit immediately from updated statistics and a new execution plan to suffer with the old execution plan. Testing is required before enabling this functionality to ensure it doesn’t cause more harm than good.

If you are attempting to update statistics asynchronously, you must also have AUTO_UPDATE_STATISTICS set to ON.

Manual Maintenance

When experimenting with statistics. Please don’t run the experiments outlined here on your production servers and databases.

After upgrading from a previous version to a new version of SQL Server. Assuming you’re moving to a SQL Server instance of 2016 or greater, you should take advantage of the Query Store as part of your upgrade process. This also means I wouldn’t immediately start updating statistics on your databases. Rather, let them get updated as before. Then, when you change the compatibility mode through your Query Store upgrade process (outlined in Chapter 6), then you may want to also update all statistics.

While executing one-time ad hoc SQL activities. In such cases, you may have to decide between automatic statistics maintenance and a manual process where you control exactly when and how statistics get updated. This is usually only a concern for much larger than normal databases.

Automatic update of statistics is not firing frequently enough. This can be caused by a lot of reasons. You’ll usually identify it when you have slow performance on a query and on looking at the execution plan you have a large disparity between the estimated and actual row counts. In these cases, you may need to manually intervene, either as a one-time activity or as a part of regular maintenance to assist with the automatic maintenance.

Manage Statistics Settings

Turning Auto Create Statistics off

Turning off Auto Update Statistics

Turning on asynchronous statistics update

Turning off automatic update of statistics on a single table

Disabling automatic statistics on a single index

You can also use the UPDATE STATISTICS command’s WITH NORECOMPUTE option to disable this setting for all or individual indexes and statistics on a table in the current database. The sp_createstats stored procedure also has the NORECOMPUTE option. The NORECOMPUTE option will not disable automatic update of statistics for the database, but it will for a given set of statistics.

Avoid disabling the automatic statistics features, unless you have confirmed through testing that this brings a performance benefit. If the automatic statistics features are disabled, then you are responsible for manually identifying and creating missing statistics on the columns that are not indexed and then keeping the existing statistics up to date. In general, you’re only going to want to disable the automatic statistics features for very large tables.

Determining the status of statistics on a table

Resetting statistics on tables and indexes

Create Statistics Manually

CREATE STATISTICS: You can use this command to create statistics on a single or multiple columns of a table or an indexed view. Unlike the CREATE INDEX command, CREATE STATISTICS uses sampling by default.

sys.sp_createstats: This stored procedure creates single-column statistics for all eligible columns for all user tables in the current database. Ineligible columns are excluded: NTEXT, TEXT, GEOMETRY, GEOGRAPHY, IMAGE, and sparse columns, columns that already have statistics or are the first column of an index key. This is a backward compatibility function, and I do not recommend using it.

You will see a statistics object get created for a columnstore index, but the values inside that set of statistics are null. Individual columns on a columnstore index can have system-generated statistics. When dealing with a columnstore index, if you find you’re still referencing individual columns in filtering queries, it’s possible that creating a multicolumn statistic is useful.

Making a statistic incremental to deal with partitioning

UPDATE STATISTICS: You can use this command to update the statistics of an individual index, or all indexes and nonindexed columns of a table or an indexed view.

sys.sp_updatestat: This system stored procedure is used to update statistics of all user tables in the current database. Although, it can only sample statistics and can’t use FULLSCAN. It will also update statistics when only a single action has been performed on a given statistic. In short, this is a rather blunt instrument for maintaining statistics.

You may find that allowing the automatic updating of statistics is not quite adequate in all circumstances. Scheduling UPDATE STATISTICS for the database during off-hours is a perfectly acceptable way to deal with this issue. UPDATE STATISTICS is the preferred mechanism because it offers a greater degree of flexibility and control. It’s possible, because of the distribution of your data, that the sampling method for gathering statistics may not be accurate enough. The sampled method is the default because it’s faster. However, if you are hitting a case where the statistics aren’t accurately reflecting the data, you can force a FULLSCAN so that all the data is used to update the statistics. This is the same as when a statistic is initially created. FULLSCAN can be a costly operation, so it’s best to be selective about which statistics receive this treatment.

When you create your own statistics, they are treated as a permanent object. As such, they can prevent changes to the table. Automatically created statistics are automatically dropped as needed when tables and indexes are modified. Starting in SQL Server 2022, you can modify the creation of your statistics to include the ability to automatically drop. Simply add WITH AUTO_DROP = ON to the end of the statistics creation. You can also modify statistics to automatically drop by adding the same WITH statement during an UPDATE of statistics.

Analyzing the Effectiveness of Statistics for a Query

In order to assist the optimizer in creating the best possible plans for your queries, you must maintain the statistics on your database objects. Issues with old, or incorrect, missing, or inaccurate statistics are quite common. You need to keep your eyes open to the possibility of problems with statistics while analyzing the performance of a query. In fact, checking that the statistics are up to date at the beginning of a query tuning session eliminates an easily fixed problem. In this section, you’ll see what you can do in the event you find statistics to be missing or out of date.

Indexes are available on the columns referred to in the filter and JOIN criteria.

In the case of a missing index, statistics should be available on the columns. It is generally preferable to have the index.

Since outdated statistics are of no use and can even cause harm to your execution plans, it is important that the estimates used by the optimizer from the statistics are up to date.

Resolving a Missing Statistics Issue

Setting up test for missing statistics

Since the index is created on the columns C1 and C2, the statistics on the index contain a histogram for the first column, C1, and density values for the combination of columns (C1 and C1*C2). There are no histograms or density values alone for column C2.

Query demonstrating missing statistics

A flow diagram exhibits, from right to left, the connection from test 1 of table scan with 100% cost, with a warning sign, to select with 0% cost.

Index scan caused by missing statistics

A pop up window labeled Warnings. The text box contains a 1 liner detail for columns with no statistics.

Property values from the warning in the Table Scan operator

You get a warning when there are no statistics on a column used in a filtering operation because the optimizer knows that it’s not going to be able to ensure accurate row estimates. Between this clear warning and the huge disparity between estimated and actual rows, you know that you’re missing statistics. On average, this query ran in 1.4ms with 88 reads.

Using CREATE STATISTICS on a column

Removing a query from the cache

If we then run Listing 5-39 again, Figure 5-23 shows the resulting execution plan.

Reads: 34

A flow diagram exhibits, from right to left, tests 1 of nonclustered index scan and R I D lookup with 75% and 15% costs, nested loops with 10%, and select with 0%.

A different execution plan, thanks to statistics

Because it now has statistics to work with, the optimizer can accurately estimate that only three rows are going to be returned by the query. Because of this, the optimizer also decides that a better approach would be to use the index iFirstIndex to scan for the data. However, because the index only contains some of the data, we have to go to the table and perform a row lookup using the RID Lookup operation. The number of reads went from 88 to 34, a win. However, because of the extra processing needed for the Nested Loops join and the RID Lookup, the duration actually increased from 1.4ms to 4.9ms, a loss. A fix is possible here. Adding the C3 column as an INCLUDE on the index would result in improved performance. We’ll be covering that sort of solution in later chapters in more detail.

Resolving an Outdated Statistics Issue

Outdated or incorrect statistics are just as damaging as missing statistics. The choices the optimizer makes may be highly inappropriate for the current data distribution. Unfortunately, the execution plan won’t show the same glaring warnings for outdated or incorrect statistics as they do for missing statistics. Instead, you’re going to be looking primarily at the comparison of estimated rows to actual rows in the execution plan.

There is a Debug Extended Event that can show you some events when the statistics are wildly off. It’s called inaccurate_cardinality_estimate. However, since it’s a debug event, I’d urge a very cautious approach to its use and only when you have strong filtering in place and only for short periods of time.

Using DBCC SHOW_STATISTICS on iFirstindex

3 tables with varying numbers of columns and 1, 2, and 2 rows. i First Index, 0.5, and 51 as entries in the first row of each table under the name, all density, and range hi key columns are highlighted.

Statistics for iFirstIndex

A SELECT query against the Test1 table

A flow diagram exhibits, from right to left, the connection from test 1 of table scan with 100% cost, 1 out of 5001, to select with 0% cost.

An execution plan with out-of-date statistics

Reads: 88

Duration: 1.4ms

Looking at the execution plan with runtime metrics, we can see that only one row was returned, but that 5,001 were estimated based on our out-of-date statistics. You can also see the estimated number of rows and the actual number of rows in the properties of the operator.

When you see a disparity between estimated and actual where it is several times off, in either direction, it’s possible that the processing strategy chosen by the optimizer may not be cost-effective for the existing data distribution.

Updating the statistics on iFirstIndex

A FULLSCAN might not be necessary in this situation. The sampled method of creating and updating statistics is usually fairly accurate and is much faster, using less resources. However, on systems that aren’t experiencing stress, or during off-hours, I tend to favor using FULLSCAN because of the improved accuracy. Either approach is valid as long as you’re not negatively impacting the system and your statistics are good enough.

Running the query from Listing 5-43 results in a different execution plan shown in Figure 5-26.

Reads: 4

A flow diagram exhibits, from right to left, nonclustered index seek and R I D lookup with 50% costs on the first tests, nested loops with 0%, and select with 0%.

A different execution plan after the statistics are updated

With updated statistics, the optimizer came up with a completely different execution plan. Instead of 5,001 rows, it knows that it’s only got one row to deal with, so retrieving the data from iFirstIndex with an Index Seek operation was chosen. It still had to go and retrieve the rest of the data from the heap.

However, the changes resulted in the reads going from 88 to 4 and the execution time reducing from 1.4ms to 419ms, almost five times faster.

Enabling auto create and update of statistics

Recommendations on Statistics

Throughout the chapter, I’ve made a number of recommendations on how to maintain and manage your statistics. For easy reference, I’ve consolidated these recommendations here along with some extra details.

Backward Compatibility of Statistics

When upgrading your databases between different versions of SQL Server, using the Query Store as a mechanism during the upgrade (outlined in Chapter 6) is generally a good idea. Because of this, the statistics will update naturally over time as the data changes. I recommend letting this happen rather than attempting to update all the statistics after an upgrade. However, this assumes that you’re using Query Store. If not, it’s probably a good idea to update all the statistics after an upgrade to ensure that any changes to the behavior of statistics get incorporated right away.

Auto Create Statistics

This feature should be left on. SQL Server can then create statistics it needs on columns that do not have an index, which will result in better execution plans and usually better performance. You might find that creating compound statistics yourself is beneficial in some circumstances.

Auto Update Statistics

This feature should also be left on, allowing SQL Server to get more accurate execution plans as the data distribution changes over time. Usually, the performance benefit outweighs the overhead cost.

If you do come across issues with the Auto Update Statistics feature and decide to disable it, then you must ensure that you create an automated process to update the statistics on a regular basis. For performance reasons, where possible, run that process during off-peak hours.

You will likely need to supplement the automatic statistics maintenance. Whether you need to do it across your entire database or just for specific indexes or statistics will be driven by the behavior of your systems.

Automatic Update Statistics Asynchronously

Waiting for statistics to be updated before plan generation will be fine in most cases. In cases where the statistics updates, or the recompiles of execution plans from those updates, are more expensive than the cost of working with out-of-date statistics, then enable this functionality. Just understand that it may mean that queries that would benefit from more up-to-date statistics will suffer until the next time they are run. Don’t forget that you need automatic update of statistics enabled in order to enable asynchronous updates.

Amount of Sampling to Collect Statistics

It is generally recommended that you use the default sampling rate. This rate is decided by an efficient algorithm based on the data size and number of modifications. Although the default sampling rate turns out to be best in most cases, if for a particular query you find that the statistics are not very accurate, then you can manually update them with FULLSCAN. You also have the option of setting a specific sample percentage using the SAMPLE number. The number can be either a percentage or a set number of rows.

If this is required repeatedly, then you can add a SQL Server job to take care of it. For performance reasons, ensure that the SQL job is scheduled to run during off-peak hours. To identify cases in which the default sampling rate doesn’t turn out to be the best, analyze the statistics effectiveness for costly queries while troubleshooting the database performance. Remember that FULLSCAN is expensive, so you should run it only on those tables or indexes that you’ve determined will really benefit from it.

Summary

SQL Server’s cost-based optimization process requires accurate statistics on columns used in filtering criteria to determine an efficient processing strategy. Statistics on an index are always created during the creation of the index, and by default, SQL Server also keeps the statistics on indexed and nonindexed columns updated automatically as the data changes over time. This enables the optimizer to determine the best processing strategies based on the current data distribution.

Even though you can disable both the auto create and auto update of statistics, it is recommended that you leave these features enabled. Their benefit to the optimizer is almost always more than their overhead cost. For a costly query, analyze the statistics to ensure that the automatic statistics maintenance lives up to its promise. You can supplement the automatic process with your manual processes as necessary.

Now that we have a firm foundation in how plans are generated from Chapter 4 and how statistics affect that generating from this chapter, we’re going to look at how you can use the Query Store to further understand query and execution plan behavior inside SQL Server in the next chapter.