One of the principal needs in Online Transaction Processing (OLTP) systems is to get as much speed as possible in order to support more transactions. Some systems simply cannot support the transaction loads necessary because of limitations on the speed of disks and disk controllers. Microsoft introduced the in-memory technology in SQL Server and Azure SQL Database to help deal with this situation. While the memory-optimized tools are extremely useful, they are also extremely specialized. As such, they should be approached with some caution. However, on systems with the right amount of memory, in-memory tables and native stored procedures produce lightning-fast speed.

The basics of how in-memory tables work

Improving performance by natively compiling stored procedures

The benefits and drawbacks of natively compiled procedures and in-memory tables

Recommendations for when to use the in-memory technologies

In-Memory OLTP Fundamentals

Disks, disk controllers, and the whole architecture around permanent storage of the information that SQL Server manages have gone through radical changes over the last few years. As such, storage systems are blazingly fast. And yet, it is still possible to have systems that bottleneck on the storage systems.

At the same time, memory operations have also improved equally radically. The amount of memory that can be put on systems has increased in enormous ways, making memory even faster than disks. However, never forget, memory is only, ever, temporary storage. If you want your data persisted, it has to have a way to get to the disk.

Durable: Meaning they get written to disk

Delayed durability: Meaning they get written to disk eventually but could suffer some data loss, the second fastest mechanism

Nondurable: Meaning they’re only ever written to memory with nothing ever written to disk, the fastest, but least safe, mechanism

Microsoft recognized that in order to make transactions faster, they would have to remove the pessimistic locks on data. The in-memory tables use an optimistic locking approach. They’re using a data versioning scheme to allow multiple transactions to write data, with a “last one in wins” kind of approach. That eliminates all sorts of locking and blocking.

Additionally, instead of forcing all transactions to wait until they are written to the log to complete, an assumption is made that most transactions complete. Therefore, log writes occur asynchronously so that they don’t slow down transactions for the in-memory tables.

Yet another step was to make memory management itself optimistic. In-memory tables work off an eventually consistent model. There is a conflict resolution process that could roll back a transaction but won’t allow a transaction to block another. All operations within memory-optimized tables are then subsequently faster.

Additionally, internally, they’ve changed the way that the buffer pool works so that there are optimized scans for systems with extremely large memory pools. Without this, you would simply be moving where the slowdown occurs.

Finally, in addition to all the optimizations to data access, Microsoft added natively compiled stored procedures. This is T-SQL code that is turned into a DLL and run within the SQL Server OS. There is a lengthy, and expensive, compile process, but then, execution is radically enhanced, especially when working with the memory-optimized tables.

Best of all, largely, you can treat memory-optimized tables largely the same way you treat other tables, although you can’t do just anything you want. There are a number of limitations on behaviors and some specific system requirements.

System Requirements

A modern 64-bit processor

Twice the amount of free disk storage for the data you’re putting into memory

A lot of memory

You can, if you choose, run memory-optimized tables on a system with minimal memory. You must have enough memory to load the data, or you’ll get an error. However, to do this well, you have to have a lot of memory. Remember, all the memory needed by SQL Server to support your system as is will likely still be needed. Then you’re adding additional memory to load tables into for the performance enhancements.

Basic Setup

To begin to use in-memory tables, you have to modify your databases, adding a specific new file group that will store and manage your in-memory tables.

After you add this memory-optimized file group to your database, you can never remove it. Do not experiment with memory-optimized tables on a production system.

Creating a test database

You want your in-memory tables to write to disk (at least I assume you do; most people usually like their data) in addition to the memory. Writing to disk is about persisting data; in the event of a power loss, failover, etc., your data is still there. You get a choice with in-memory tables. You can make them durable or nondurable. Durability is part of the ACID properties, explained in Chapter 16. The crux of the issue is with a nondurable table, you may lose data, even though it was written out to memory. If you’re dealing with data such as session state, it might not matter if that data can be lost, so nondurable means just a little faster still, on top of all the rest, but with the potential for data loss.

Adding a MEMORY_OPTIMIZED_DATA file group to a database

DBCC CHECKDB: Consistency checks run as normal, but they skip the memory-optimized tables. If you attempt CHECKTABLE on the memory-optimized table, you’ll generate an error.

AUTO_CLOSE: This is not supported.

Database Snapshot: This is not supported.

ATTACH_REBUILD_LOG: This is not supported

Database Mirroring: You cannot mirror a database with a MEMORY_OPTIMIZED_DATA file group. Availability Groups, however, work perfectly.

Creating Tables

Creating a memory-optimized version of the Address table

The primary magic is in the WITH statement. First, I’m identifying this table as being specifically MEMORY_OPTIMIZED. That will ensure that it goes to the correct file group. Additionally, I’m defining my durability, here a fully durable table supporting both schema and data.

The next thing worth noting is the index. This is not one of the indexes we’ve referenced throughout the book. This index, NOCLUSTERED HASH, is unique to memory-optimized tables. We’ll drill down on indexing a little later in the chapter.

GEOGRAPHY/GEOMETRY

XML

DATETIMEOFFSET

ROWVERSION

HIERARCHYID

SQL_VARIANT

User-defined data types

Otherwise, all the data types are supported. You can also use foreign keys, check constraints, and unique constraints ever since SQL Server 2016. Also, memory-optimized tables support off-row columns, meaning a single row can be greater than 8060 bytes.

Loading the dbo.Address table

Another limitation is that a memory-optimized table can’t take part in a cross-database query. Therefore, in order to load the data, I created a staging table. There may be ways to optimize this process, but it should be noted the cross-database query took 77ms and the query to load the in-memory table only took 18ms, for the same data.

Setting up a more complete database of tables

Querying memory-optimized tables

A diagram begins on the right with 2 non-clustered hash index seek with values of, 1% cost, 0.000 seconds, and 1 of 1, each, and a clustered index seek with values 99% cost, 0.000 seconds, and 1 of 1, given.

Execution plan showing a mix of table types

The best part is that the optimizer thinks that the single row, Clustered Index Seek, against the standard clustered index is 99% of the cost of the query.

However, the important point here is how mundane this is. It’s just an execution plan. Yes, it has seeks against a nonclustered hash table instead of any of the indexes we’ve covered throughout the book, but if you’ve read straight through to this point, you know what an index seek represents. It’s no different because it’s in memory-optimized tables.

Comparing performance for a simple SELECT query

Database | Duration | Reads |

|---|---|---|

InMemoryTest | 198mcs | 2 |

AdventureWorks | 252mcs | 6 |

This is a very small example returning a single row, but approximately 22% faster, and a 66% reduction in reads indicates the possibility of other performance enhancements as other queries get run.

One point I need to make here. The reads that we’ve been using throughout the book are not the same as reads with memory-optimized tables. No data is read off of disk except during the recovery of the database during startup or failover. That means while we still have the value of reads, we can’t do what I just did, a straight comparison on those reads. Fewer reads with memory-optimized tables are better, of course, but it’s not the same values. Duration is your better comparison measurement, at least when comparing traditional behaviors with the in-memory ones.

In-Memory Indexes

Every memory-optimized table must have one index. Since the row is stored as a unit within the in-memory table, an index has to be there in order to make a table. The data is not stored on a page, which is why reads are not the same. Because of this, there is no worry about index fragmentation. An UPDATE consists of a logical DELETE and an INSERT. A process comes along later to clean things up.

Prior to SQL Server 2017, you were limited to eight indexes per table. Now, the limit is 999 (but remember, it’s a limit, not a goal).

The indexes on a memory-optimized table live in memory in the same way that the table does. Their durability matches that of the table they’re created on.

Let’s explore these indexes in more detail.

Hash Index

The hash index is different from the other index types within SQL Server. A hash index uses a calculation to create a hash value of the key. The hash values are stored in buckets, or a table of values. The hash calculation is a constant, so for any given value, the same hash value will always be calculated.

Hash tables are very efficient. A hash value is a good way to retrieve a single row. However, when you start to have a lot of rows with the same hash value, that efficiency begins to drop.

The key to making the hash index efficient is getting the correct distribution across your hash buckets. When you create the index, you supply a bucket count. In the example in Listing 19-3, I supplied a value of 50,000 for the bucket count. When you consider there are currently about 19,000 rows in the table, I’ve made the bucket count more than big enough to hold the existing data, and I left room for growth over time.

The bucket count has to be big enough without being too big. If the bucket count is small, a lot of values are inside a single bucket, making the index less efficient. If you have a lot of empty buckets, when a scan occurs, they are all read, even though they are empty, slowing down the scan.

A diagram on top labeled large bucket count has a row of 6 buckets, where buckets 1, and 3 to 6 contain bricks labeled hash values 1 to 5, and bucket 2 is empty. The diagram below labeled, small bucket count, has a row of 6 buckets.

Hash values in buckets with a shallow and deep distribution

The first set of buckets is what is defined as a shallow distribution. That is to say, a few hash values distributed across a lot of buckets. The second set of buckets at the bottom of Figure 19-2 represents a deep distribution. In that case, fewer buckets with more values in them.

With a shallow distribution, point lookups, individual rows, are going to be extremely efficient, but scans will suffer. Whereas in the deep distribution, point lookups won’t be as fast, but scans will be faster. However, if you are in a situation where your queries are doing a lot of scans of a memory-optimized table, you may want to switch back to a regular table again.

The recommendation for bucket counts completely depends on your data and your data growth. If you don’t expect any data growth at all, set the number of buckets equal to your row count. If your data is going to grow over time, set it somewhere between one and two times the row count. Don’t worry too much about this. Even with too many, or too few, buckets, performance is likely to be great. Just remember, the more buckets you allocate, the more memory you’re using.

You also have to be concerned with how many values can be returned by the hash value. Unique indexes and primary keys are excellent choices for a hash index because their values will be unique. The general recommendation is a ratio of total rows to unique values being less than 10.

The hash value is simply a pointer to the bucket. If there are multiple rows, they are chained together, each row pointing to the next row. When a bucket is found, you’ve found the first value in the chain. You then scan through the chain to find the actual row you’re interested in. This means a point lookup turns into a bit of a scan, hence keeping the number of duplicates in the hash to a minimum.

Querying sys.dm_db_xtp_hash_index_stats

A table has 5 columns and 1 row with headers, index name, total bucket count, empty bucket count, average chain length, and max chain length. The row entries are P K underscore address underscore 091 C 2 A 1 A 604 E D F A 2, 65536, 48652, 1, and 5.

Results from sys.dm_db_xtp_hash_index_stats

There are several imports pieces of information here. First you should note the total_bucket_count value of 65,536. I called for 50,000 buckets, but SQL Server rounded it up to the next power of 2 value. I have about 48,652 empty buckets, which is a little high, but maybe I’m anticipating growth. The average chain length is 1, which is very good. The maximum chain length is 5, which is good enough being below 10.

If there were even more empty buckets, the average chain length was above 5 and max chain length was greater than 10, it might be time to consider a nonclustered index for the memory-optimized table.

Nonclustered Indexes

A nonclustered index on a memory-optimized table is no different than a nonclustered index on a regular table, other than where the data is stored. Durability again will be dictated by the durability of the table.

A query in need of an index

A diagram on top begins with a table scan with values 73% cost, 0.005 seconds, and 19614 of 19614 given, to filter at 8% cost, 2 nested loops at 0% cost each, and select at 0%.

A scan of an in-memory table

Because the WHERE clause is unsupported by any index, we’re getting a scan of the dbo.Address table. While a scan of an in-memory table will be faster than one stored on disk, it’s still a performance issue in the same way it would be on another query.

ALTER a memory-optimized table to add an index

A diagram on top begins with 2 non-clustered index seek with values of 9% cost, 0.000 seconds, 100 of 100, and 7%, 0.000 seconds, 100 to 100, given, respectively, to 2 nested loops at 2% cost each, and select at 0%.

Scan replaced with a seek

The nonclustered index replaces the scan. Because of how the data is stored in a memory-optimized table, no Key Lookup operation is needed. Performance went from 5.9ms to 1.1ms, a considerable gain.

Columnstore Index

There are no major distinctions between a memory-optimized table and a regular table when it comes to a columnstore index. You are limited to only a clustered columnstore. You have to have the memory to support the data stored in the index. Otherwise, it’s just a columnstore index.

Statistics Maintenance

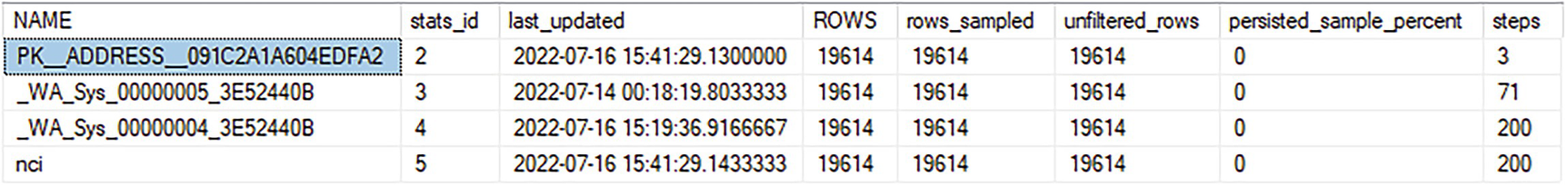

Querying sys.dm_db_stats_properties

A table has 8 columns and 4 rows with headers, name, stats i d, last updated, rows, rows sampled, unfiltered rows, persisted sample percent, and steps. The first entry under the name column is P K underscore address underscore 091 C 2 A 1 A 604 E D F A 2, and is highlighted.

Statistics on the memory-optimized table

Querying sys.dm_db_stats_histogram

A table has 6 columns and 3 rows, with column headers step number, range high key, range rows, equal rows, distinct range rows, and average range rows. The first row entries are 1, 1, 0, 1, 0, 1, where the first entry is highlighted.

Histogram of the hash index

Statistics are automatically maintained in SQL Server 2016 and greater as long as the database compatibility level is set to at least 130. Prior to that, or with the compatibility level set lower, you will have to maintain statistics manually.

Updating statistics in 2014

Otherwise, you will generate an error.

Apart from that exception, statistics are largely the same as described in Chapter 5.

Natively Compiled Stored Procedures

Recreating the CountryRegion table as memory optimized

Creating a natively compiled stored procedure

Executing the natively compiled procedure for the value of ‘Walla Walla’ in the same way as Listing 19-8 results in a query running in 794mcs compared to the 1.1ms from before.

There are other restrictions and requirements visible in the code in Listing 19-13. First, parameters cannot accept NULL values. You have to enforce schema binding to the underlying tables. Finally, the procedure must exist within an ATOMIC BLOCK. Atomic blocks require that all statements within the transaction succeed, or all statements within the transaction get rolled back.

The diagram on top begins with a non-clustered index seek and a non clustered hash index seek at costs of 6% and 3%, respectively, to 2 nested loops, compute scaler, and select.

Execution plan for a natively compiled procedure

A table titled M i s c has two columns and five rows. The entries in the fifth row are in bold fonts and read as, statement, select A, dot, address line 1, comma, A, dot, city, comma.

SELECT operator properties

Because this procedure executes within the OS, most of the properties we’re used to seeing aren’t applicable.

You should use execution plans the same way as you would with a regular query. You’ll see where there may be optimization opportunities looking for scans, etc. One note, all join operations within a natively compiled procedure will be Nested Loops. No other join operations are supported.

Recommendations

While the performance enhancements of memory-optimized tables and natively compiled procedures are simply huge, you should not simply, blindly, start migrating your databases and objects to in-memory. There are serious hardware requirements that you must meet. Here are a number of considerations you should work through prior to implementing this technology.

Baselines

The very first thing you should have in hand is a good understanding of the existing performance and behavior of your system. This means implementing Extended Events and all the other tools to gather performance metrics. With that information in hand, you’ll know if you’re in a situation where locking and latches are leading to performance bottlenecks that memory-optimized tables could solve.

Correct Workload

The name of the technology is In-Memory OLTP. That’s because it is oriented and designed to better support Online Transaction Processing, not analytics or reporting. If your system is primarily read focused, has only intermittent data loads, or simply has a very low level of transaction processing, this technology is not right for you. You may see only marginal improvements in performance for a substantial amount of work. Microsoft outlines a series of possible workloads in more detail in their documentation.

Memory Optimization Advisor

A window has a table with three columns and fourteen rows. The first column contains X, check, and warning symbols.

Results of the Memory Optimization Advisor

Because the Geography data type isn’t allowed in memory-optimized tables, this fails the initial test. You can see that it passed several other tests and received a warning that for a migration scenario, other tables would have to be processed without foreign keys.

Setting up a table for migration to memory optimized

A window has a table with three columns and nine rows. The first column has check marks as entries in all rows, and the second column contains entries like, a table is not partitioned or replicated, and no sparse columns are defined, among others.

All checks are passed

A window has a table with 3 columns and 5 rows. The first column has the information icon as entries in all rows, and the second column contains entries like truncate table and merge statements cannot target a memory-optimized table, among others.

Warnings about migrating a table to in-memory storage

A dialog box contains the following, memory-optimized filegroup, logical file name, file path, rename the original table, and estimated current memory cost in megabytes, among others. A tick mark is next to the text, also copy table data to the new memory optimized table.

Naming the table and migrating data

A dialog box contains the following, a 2 column table with headers column, and type, with the entry, address I D ticked and highlighted, and the selected option, use a non clustered hash index, among others.

Selecting an index for the in-memory table

A table has 3 columns and 5 rows, with the last two columns with headers, action, and result. The first, third, and fifth entries under action are, renaming the original table, creating the memory optimized table in the database.

After migrating the table to in-memory storage

That table has been moved to in-memory storage. While the advisor will help you move a table to in-memory, it doesn’t help you determine which tables should be moved. You’re still on your own for that evaluation.

Native Compilation Advisor

Procedures for testing the Native Compilation Advisor

A window has a table with 3 columns and 1 row with the last two columns with headers, action, and result. The row entries under the first and second columns are, an X symbol, and validating the stored procedure.

Native Compilation Advisor failed this procedure

A window has a table with 3 columns and 1 row with headers, transact S Q L element, occurrences in the stored procedure, and start line. The row entries are N O L O C K, N O L O C K, and 9.

Explanation for the failure from the Native Compiled Advisor

Running the Advisor against the good procedure just reports that it doesn’t have any issues that you need to address. Unlike the Table Advisor, there is no actual migration wizard. Instead, you’d have to write the code yourself.

Summary

You’ve been introduced to the concepts behind memory-optimized tables and natively compiled procedures as a part of the In-Memory OLTP suite of tools. This is a specialized set of functionality that will absolutely help some workloads. Just remember that there are a lot of limitations on which tables can be migrated and what kind of code can be run against them. You also must have the hardware to support this functionality. However, with all that in place, the performance benefits of memory-optimized tables and natively compiled procedures are difficult to overemphasize.

In the next chapter, I’ll introduce Graph tables and the performance issues you may encounter there.