SQL Server ensures that your data is protected even as the data is changed. It does this through a process called locking. However, even as SQL Server is locking within the I/O system in support of your data changes, this is causing what is called blocking, through contention for those resources. As the number of users on your systems increases, performance degrades as they contend for those resources. In order to understand how to deal with blocking, you need to understand how, and why, locking occurs.

The fundamentals of blocking in SQL Server

The ACID properties of a transactional database

Isolation levels within SQL Server

The effects of indexes on locking

Information needed to analyze blocking

Recommendations to minimize blocking

Blocking Fundamentals

Some degree of blocking is inevitable. There is simply no way for modern systems with large numbers of users and vast amounts of data to be set up or maintained in such a way that there will not be some amount of blocking. SQL Server takes control of managing the necessary locks through a process called the lock manager. It’s the locks needed to ensure data integrity and consistency that lead to blocks.

A Short Discussion of Terminology

Locking

Blocking

Deadlocking

Locking is an integral part of the process of SQL Server managing multiple sessions, all attempting to read or modify data. When a session needs access to a piece of data, the appropriate lock will be placed on it.

When one session holds a lock that another session needs, the second session can be said to be blocked. While blocked, the second session will wait until the first session, the blocking session, is complete. At which time the first session will release its lock, and then the blocking will end. These are two very distinct terms referring to two different behaviors within SQL Server.

Finally, a situation can arise where two sessions each have a lock on two different pieces of data. Each session needs the piece of data locked by the other. These sessions are mutually blocking one another in what can be referred to as a deadly embrace. Neither can let go of the lock it has until it gets the other piece of data. Both will have to wait an infinite amount of time. This is what is referred to as a deadlock. We’ll cover deadlocks in detail in Chapter 17.

The important information I want you to understand and remember is that while these three terms are absolutely interrelated, they are not the same. Locks can lead to blocks, and both blocks and locks play a part in deadlocks. However, they are three, completely distinct, processes within SQL Server. Keeping them separate in your head will absolutely assist in your understanding when you’re faced with performance issues. Further, when you need assistance with locking, blocking, or deadlocks, using the right terminology will ensure that those attempting to help understand what the actual problem may be.

Introducing Blocking

A connection to a database that is used to run a query is called a session and comes with an identifier called the session ID. The sessions may be from one or more users in one or more applications. It’s all the same inside SQL Server. When a session needs access to a piece of data, whether it’s writing to that data through an INSERT, UPDATE, or DELETE, or reading from that data in a SELECT, some kind of lock must be taken out on that piece of data, row, page, extent, or table.

When two sessions attempt to modify the same piece of data, well, one of them will get there first. That session takes out a lock. The second session is waiting for that lock to be released. This is the situation we refer to as blocking, one session waiting on another.

Now, when two sessions both wish to read a piece of data, they both read it. While there are still locks taken out for reads, they cooperate with other read locks on the data. Therefore, two sessions reading data won’t block one another.

Blocking and locking are constantly taking place within SQL Server as queries are run. The majority of the time, this isn’t noticeable. However, as the load increases on a server, or there are bad or missing indexes, or the code is written in an inefficient manner, transactions are poorly configured, for any or all of these reasons, locks are held longer. As locks are held longer, blocking occurs and extends. As you get more blocking, other sessions come along, looking to put locks on the same resources. Blocking gets worse and worse.

Because of all this, you need to minimize blocking on your servers in order to be able to support a large number of concurrent users.

Memory-optimized tables, also called in-memory tables, introduce some changes to how locking and blocking occur. I’ll address them separately in Chapter 19.

Transactions and ACID Properties

Atomicity

Consistency

Isolation

Durability

Ensuring that these properties are met is a big part of why we get blocking in a database. Let’s explore each of these properties in more detail.

Atomicity

Adding data in an atomic transaction

The table defined in the CREATE TABLE statement includes a constraint that will only allow a value of 1 to be added to the table. Then, I attempt to INSERT rows to the table that don’t have the value of 1. Of course, there’s an error, so the SELECT statement will return zero rows.

The INSERT operation is atomic, meaning all the data goes, or none of it does. There is one ProductID value in the Production.Product table that has the value of “1”. That means I could add that row. However, since SQL Server insists on an atomic transaction, I don’t get any rows. All must go or none can go.

More than one statement within a transaction

Listing 16-2 explicitly defines a transaction. Now, if we run this query, we’ll still see an error, just like in Listing 16-1. However, the second statement, where we only add a single value, which is within the definition of the constraint, succeeds.

While each of these statements is within a transaction, it’s possible for some parts of a transaction to succeed while other parts fail, and yet still be an atomic operation.

Since the atomicity property requires that all the actions of a logical unit of work are completed, or none of the changes are retained, SQL Server uses a process to isolate the work. Exclusive rights are granted to the affected resources while the atomic operation is being completed. These exclusive rights are what lead directly to blocking all other transactions from access to those resources. While atomicity is necessary to ensure data integrity, it automatically introduces the undesirable side effect of blocking.

SET XACT_ABORT ON

Explicit rollback

We should understand these before we move on.

SET XACT_ABORT ON

Changing transaction behavior

With the setting on, the entire transaction is rolled back with an error, making the whole transaction an atomic operation. The default setting for XACT_ABORT is off, so if you want this type of behavior, you will have to either modify code as shown in Listing 16-3 or change your connection strings.

Explicit Rollback

Catching the error

When the error occurs, the first statement in the CATCH block is a ROLLBACK. This means that all work of the transaction is immediately undone. Further, no attempt at making the second INSERT is done, reducing the time this transaction is held open, possibly, slightly, improving performance. That’s not to say that TRY/CATCH is a performance enhancement, but that it can be a side effect of their use.

Consistency

The next property that affects transactions, and therefore locking and blocking, is the need for your database to be consistent. This means that when a transaction is complete, the data is either written or rolled back. You will not be in an indeterminant state with your data.

SQL Server ensures this through a series of actions. First, when you’re modifying a given data structure, all indexes, constraints, and other dependent objects that can be affected by the change in code are identified. Then, the optimization process assures that any data changes that must be done, such as adding or modifying a row in a nonclustered index after the clustered index is changed are also taken into account.

The actual logical consistency of the data is maintained by SQL Server. However, it’s your database design and the logical needs of the application being supported that drive that design. In order to ensure that all these constraints are maintained appropriately, exclusive rights must again be applied. This also leads to locks and blocking.

Isolation

It’s expected in the multiuser environment of SQL Server for more than one transaction to be executing simultaneously. Isolation is the act of walling each of these transactions off from each other to ensure that one transaction’s set of actions doesn’t directly affect other transactions. Isolation is actually a setting within the database and can be controlled. We’ll discuss it later in the chapter in the “Isolation Levels” section.

As data gets manipulated by a given transaction, SQL Server ensures that this transaction can conclude its operations before another transaction starts changing the data. The idea being two processes simply can’t modify the exact same pieces of data at the same time.

Isolation is one of the factors that can most negatively impact performance. The necessary locks to allow data manipulation to occur, and complete, mean other transactions can’t get to that data during the operation. This happens even if the second transaction is simply trying to read the data.

Durability

At the conclusion of a transaction, all data should be written to disk, or, in short, durable. If you turned a server off, an instant after the conclusion of a transaction, nothing should happen to that transaction. Transactions in flight, ones that are not completed, will of course have to be rolled back (this is a part of the recovery process and goes way beyond topic for a book on query tuning).

Durability in and of itself doesn’t really contribute directly to blocking, unlike the other ACID properties. However, the requirements to maintain the transaction log, along with the data manipulation of the transaction itself, do add to the duration of a given transaction and, therefore, the time that locks are held.

However, you can use a setting called “delayed durability” to reduce the time of some transactions by letting the transaction conclude before it has been written to the transaction log.

Lock Types

To understand blocking, you first have to understand the root of blocking, locks. With the exception of certain data in flight and in-memory tables (which we’ll discuss in Chapter 19), all the data within SQL Server is stored on disk. However, in order to ensure that what is stored on disk is there successfully, SQL Server uses the ACID properties of a transaction, as discussed in the last section.

Row (RID)

Key (KEY)

Page (PAG)

Extent (EXT)

Heap or B-Tree (HoBT)

Rowgroup()

Table (TAB)

File (FIL)

Application (APP)

Metadata (MDT)

Allocation Unit (AU)

Database (DB)

These locks are directly related to how the data is stored within SQL Server. I’m going to detail some of the behaviors on the most important of these lock types.

Row Locks

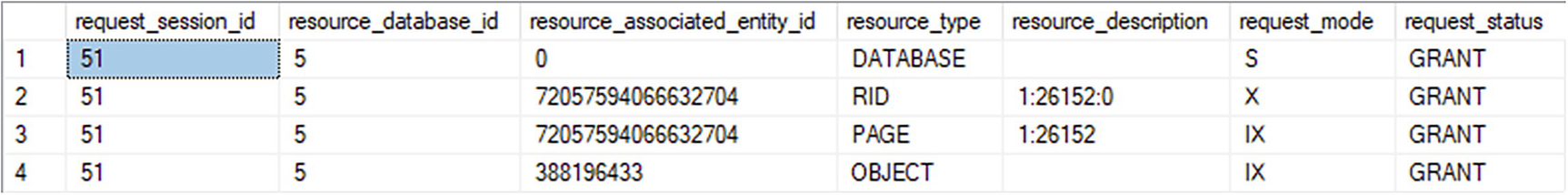

Observing a row-level lock in action

A table has unlabeled, request session i d, resource database i d, resource associated entity i d, resource type, resource description, request mode, and request status. Rid and page under resource type have descriptions of 26152. The request status is Grant.

A row-level lock for the DELETE statement

There is a lot of detail on display here, but I want to focus rather tightly at the moment. I will explore all the information on display in more detail throughout this chapter. If you look at line two (2), you’ll see that the resource_type is “RID” for this session, indicating a row lock. Further, you’ll see the request_mode is a value of “X”, which means it is exclusive. The DELETE statement received an exclusive lock on the row in order to remove it.

Some of the additional information on display is the database identifier in the resource_database_id column. That lets you know where the action is occurring. You can also see the exact resource being locked in the resource_description column: “1:26152:0”. This represents

FileID:PageID:Slot(row)

Retrieving the object name from the object ID value

No lock is superior to another in terms of performance. They all serve a different need. However, in this case, a single-row delete should see a row lock that will provide high concurrency.

Key Locks

A KEY lock is a lock within an index, similar to a row lock on a table. As I outlined in Chapter 9, a clustered index defines data storage, keeping all the data at the leaf level of the index. When you then need to modify a single row, or a limited range or rows, with a clustered index, the key value of the index is used to find the row, or rows, and then a KEY lock is used while the data is being manipulated.

Adding a clustered index to the LockTest table

Deleting rows with a clustered index

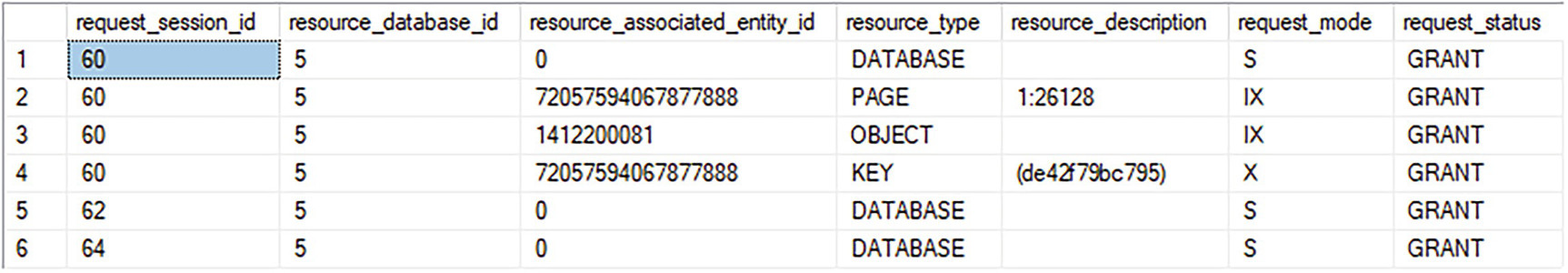

A table has 8 columns and 4 rows. Page and key under resource type have descriptions of 1 colon 26168 and d e 42 f 79 b c 795.

A KEY lock to DELETE data from a clustered index

Not only has the resource_type column changed to the value KEY, but we can see a different page now in the resource_description, 1:26168.

Page Locks

When more than a few rows or key values are needed to be locked, it can be much more efficient for SQL Server to take out a single lock on a page, identified as a PAG lock. The optimizer provides estimates for the number of rows that will be locked, and from that, the engine manages the locks, ensuring as few locks as possible since each one not only locks the resources in question but takes up memory and CPU. So as the lower-level locks increase, it’s more efficient to take a higher-level lock, reducing resource use, even as the granularity of the lock decreases.

While you will see increased performance for a given query due to a broader lock, you will also see a decrease in concurrency since all rows on a given page will be locked.

Extent Locks

An Extent is a group of eight contiguous data or index pages. An extent lock is then a lock of eight pages identified as an EXT lock. Just as excessive row or key locks lead to a page lock, larger number of page locks can lead to an extent lock.

Heap or B-Tree Locks

A heap or B-Tree lock is used to describe when a lock to either type of object could be made. The target object could be an unordered heap table, a table without a clustered index, or a B-Tree object, usually referring to partitions. A setting within the ALTER TABLE function allows you to exercise a level of control over how locking escalation (covered in the “Lock Escalation” section) is affected with the partitions. Because partitions can be across multiple filegroups, each one has to have its own data allocation definition. This is where the HoBT lock comes into play. It acts like a table-level lock but on a partition instead of on the table itself.

Rowgroup Locks

When dealing with columnstore indexes, you still have to take into account locking as data gets manipulated. However, instead of worrying about a row or a page, columnstore focuses on the rowgroup as a locking mechanism. As discussed in Chapter 9, the columnstore uses a rowgroup as its mechanism of storage. When data moves from the deltastore into the pivoted, compressed storage, you’ll see rowgroup locks as that data moves.

Table Locks

It’s possible to lock an entire table using a TAB lock. This reserves not just the table itself but all associated indexes.

As before, when there are too many lower-level locks, it can be much more efficient to take out a single lock on the entire table. You’ll see a single value in the resource_description column for the OBJECT lock, the ID value for the table in question.

While this lock takes up as few resources as a single KEY lock, the concurrency is radically reduced.

Database Locks

Every connection that opens on your system sets a shared database lock on the database being used. This prevents the database from being dropped or overwritten through a restore operation while people are using it.

Lock Operations and Modes

Locks are not just a question of deciding what is being modified and then taking the lowest-level lock. Instead, a fairly complex set of decisions has to be completed within SQL Server. Not only does the appropriate lock have to be placed on the objects being modified, but the type of lock has to be determined and locking escalation has to be accounted for.

Lock Escalation

I’ve already discussed how the locks are arranged from extremely granular at the RID and KEY levels to not granular at all at the TAB or DB level. An initial estimate is made for the kind of locks needed within SQL Server. As the query executes, the lock manager monitors the number of locks requested by a given query. If that number grows beyond internal thresholds, lock escalation can occur, meaning a higher-level, less granular, lock is taken.

Key and page locks can be automatically escalated to a table lock as the internal thresholds are exceeded. At that point, the higher-level lock is taken out, and all the resources for the lower-level locks are released. While this decreases the concurrency on the table, it increases the resources available for other sessions running on the server. The dynamic nature of lock management helps queries to run faster.

Preventing lock escalation on a table

Running that code, supplying an appropriate schema and table name, will eliminate most lock escalations (but not every possible one).

Alternatively, you can set the LOCK_ESCALATION value to TABLE. In that case, every time escalation occurs, it goes straight to a TAB-level lock.

Setting the value to AUTO reenables the dynamic lock management within SQL Server for the table in question. This kind of control over locking should only be done after extensive testing to establish that you’re not doing more harm than good on your system.

You also have the option to disable lock escalation on a wider basis by using trace flag 1224. This disables lock escalation based on the number of locks but leaves intact lock escalation based on memory pressure. You can also disable the memory pressure lock escalation as well as the number of locks by using trace flag 1211, but that’s a dangerous choice and can lead to errors on your systems. I strongly suggest thorough testing before using either of these options.

Lock Modes

Shared (S)

Update (U)

Exclusive (X)

- Intent

Intent Shared (IS)

Intent Exclusive (IX)

Shared with Intent Exclusive (SIX)

- Schema

Schema Modification (Sch-M)

Schema Stability (Sch-S)

Bulk Update (BU)

Key-Range

Shared (S) Mode

Shared mode is used for queries that are going to read data, like a SELECT statement. A shared lock doesn’t prevent other queries that are only reading data from accessing the data simultaneously. This is because the integrity of the data is not affected in any way by concurrent reads. However, concurrent data modification queries are intentionally stopped in order protect data integrity. The (S) lock is released immediately after the data is read.

Even if the SELECT query is part of a transaction, each of the necessary (S) locks is released as soon as the read on that row, or page, whatever, is completed. This is the default behavior under the read_committed isolation level (more on isolation levels later in the chapter). You can change the behavior of the shared (S) lock by using different isolation levels or a lock hint within the query.

Update (U) Mode

The Update (U) mode is actually indicating a read operation. However, the read is being done with the intention to then modify the data. Since the data is going to be modified, only one U lock on a single resource is possible in order to protect data integrity. The (U) lock is a common part of an UPDATE statement, which consists of two actions: reading the data to be modified and then modifying that data.

There are actually multiple lock modes that will take place during an UPDATE of data. As the data is being read in preparation for the possibility of being updated, a (U) lock is taken. If the data is then going to be modified, the (U) lock is converted to an exclusive lock for the actual data modification. If no data is being updated (e.g., it doesn’t match the WHERE criteria), then the (U) lock is immediately released.

Scripts running in multiple T-SQL windows to arrive at a blocked process

Script Order | T-SQL Window 1(Connection 1) | T-SQL Window 2(Connection 2) | T-SQL Window 3(Connection 3) |

|---|---|---|---|

1 | BEGIN TRANSACTION LockTran2; --Retain an (S) lock on the resource SELECT * FROM Sales.Currency AS c WITH (REPEATABLEREAD) WHERE c.CurrencyCode = 'EUR'; --Allow DMVs to be executed before second step of -- UPDATE statement is executed by transaction LockTran1 WAITFOR DELAY '00:00:10'; COMMIT; | ||

2 | BEGIN TRANSACTION LockTran1; UPDATE Sales.Currency SET Name = 'Euro' WHERE CurrencyCode = 'EUR'; -- NOTE: We're not committing yet | ||

3 | SELECT dtl.request_session_id, dtl.resource_database_id, dtl.resource_associated_entity_id, dtl.resource_type, dtl.resource_description, dtl.request_mode, dtl.request_status FROM sys.dm_tran_locks AS dtl ORDER BY dtl.request_session_id; | ||

4 | --wait 10 seconds | ||

5 | SELECT dtl.request_session_id, dtl.resource_database_id, dtl.resource_associated_entity_id, dtl.resource_type, dtl.resource_description, dtl.request_mode, dtl.request_status FROM sys.dm_tran_locks AS dtl ORDER BY dtl.request_session_id; | ||

6 | COMMIT; |

Feel free to edit this code and put in a longer delay if you need more time to switch between windows to make this all work.

Step 1 modifies some data but waits on the commit. Step 2 modifies the same data, so it’s blocked while the first step is waiting on the ten seconds. Step 3 allows you to use sys.dm_tran_locks to see the locks taken out by the two queries. In step 4, we wait out the ten seconds. In step 5, I query sys.dm_tran_locks a second time to see the locks used by the uncommitted code from step 2. Finally, in Step 6, I commit the code.

One note, I’m using the REPEATABLEREAD locking in step 1, running in Connection 1, to ensure that the (S) lock is retained on the code through the transaction.

A table has 8 rows and 11 rows. The key modes are changed from s to u then x via their placements in the second, sixth, and eighth rows.

Locks held by initial UPDATE statements

The focus here is on the U or UPDATE lock taken out by the session_id 78. It’s against the key where the data is stored. However, the plan is, as you can see, to CONVERT to an exclusive, X, lock (visible on line 8, described in the request_status column).

A table has 8 columns and 7 rows. The key and page, under resource type, have descriptions of 0 d 881 dad f c 5 c and 1 colon 12304 with no presence of a U lock.

Locks held only by the second UPDATE statement

The reason a U lock is used is because other resources can continue to read the data until the changes are committed. That means until the exclusive lock is needed, other processes can continue to read data, increasing concurrency in the system. However, once an update lock is taken on a resource, only one of those is allowed. While other resources can read the data, nothing else can modify the data until the first transaction is complete.

Exclusive (X) Mode

I’ve already shown exclusive, X, mode in action in the examples shown previously. The purpose of the exclusive locking mode is to hold a resource within the database while data manipulation is completed. No concurrent transactions are allowed, ensuring ACID compliance. While you saw that UPDATE uses lock conversion as a way to ensure a higher degree of concurrency, INSERT and DELETE statements immediately take exclusive locks on the necessary resources.

Other transactions can’t access the resource while the change is being made, ensuring that they are reading either before, or after, the modification, with no ongoing modifications being read.

Exclusive locks provide the mechanism to roll back transactions safely without modifying data since no other process has access to the data until the transaction is complete.

Intent Shared (IS), Intent Exclusive (IX), and Shared with Intent Exclusive (SIX) Modes

DELETE statement and a query for locks within a transaction

A table has 8 columns and 8 rows. The 2 keys and 2 pages, with different values under the resource description, have x and i x locks, respectively.

Locks taken out for a DELETE include Intent locks

The Intent lock here is IX, Intent Exclusive, at the PAGE level. That means it will lock at the page, row, or key level. There’s a second IX lock as well for a second object, a nonclustered index, on the table where the DELETE is occurring. Again, this means it will be using a page or key lock to deal with the DELETE operation.

The Intent locks are meant to ensure that while a lower-level lock is likely going to be used, no other resource can take out a higher-level lock that would cover the same resource. Without this type of lock, a process intending to take out a higher-level lock would need to scan all the locks in order to determine if it could take out its lock. The Intent lock ensures that high-level locking is optimized.

Only one Shared with Intent Exclusive, SIX, lock is allowed on a given resource. Other processes can place IS locks on a given resource at a lower level while the SIX lock is in place.

Schema Modification (Sch-M) and Schema Stability (Sch-S) Modes

Schema Modification and Schema Stability locks are acquired on a table by SQL statements that depend on the schema of the table. A DDL statement, working on the schema of a table, acquires an (Sch-M) lock on the table and prevents other transactions from accessing the table. An (Sch-S) lock is acquired for database activities that depend on the schema but do not modify the schema, such as a query compilation. It prevents an (Sch-M) lock on the table, but it allows other locks to be granted on the table.

Since, on a production database, schema modifications are infrequent, (Sch-M) locks don’t usually become a blocking issue. And because (Sch-S) locks don’t block other locks except (Sch-M) locks, concurrency is generally not affected by (Sch-S) locks either.

Bulk Update (BU) Mode

The Bulk Update lock mode is unique to bulk load operations. These operations are the older-style bcp (bulk copy), the BULK INSERT statement, and inserts from the OPENROWSET using the BULK option. As a mechanism for speeding up these processes, you can provide a TABLOCK hint or set the option on the table for it to lock on bulk load. The key to (BU) locking mode is that it will allow multiple bulk operations against the table being locked but prevent other operations while the bulk process is running.

Key-Range Mode

The Key-Range mode is applicable only while the isolation level is set to Serializable (you’ll learn more about transaction isolation levels in the “Isolation Levels” section). The Key-Range locks are applied to a series, or range, of key values that will be used repeatedly while the transaction is open. Locking a range during a serializable transaction ensures that other rows are not inserted within the range, possibly changing result sets within the transaction. The range can be locked using the other lock modes, making this more like a combined locking mode rather than a distinctively separate locking mode. For the Key-Range lock mode to work, an index must be used to define the values within the range.

Lock Compatibility

SQL Server provides isolation to a transaction by preventing other transactions from accessing the same resource in an incompatible way. However, if a transaction attempts a compatible task on the same resource, then to increase concurrency, it won’t be blocked by the first transaction. SQL Server ensures this kind of selective blocking by preventing a transaction from acquiring an incompatible lock on a resource held by another transaction. For example, an (S) lock acquired on a resource by a transaction allows other transactions to acquire an (S) lock on the same resource. However, an (Sch-M) lock on a resource by a transaction prevents other transactions from acquiring any lock on that resource.

Isolation Levels

The purpose of the locks and lock modes described in the previous section is very tightly coupled around the simple concept of ensuring data consistency through ACID compliance. However, the locks and modes are not the only driving factors for the behavior of data isolation within transactions. When you need to adjust how some of the locking processes are controlled, you use the isolation levels within SQL Server.

Read Uncommitted

Read Committed

Repeatable Read

Serializable

Snapshot

Read Committed Snapshot (technically a subset of the Read Committed isolation level)

The first four isolation levels are in the order of the degree of isolation. The isolation levels can be set at the database, connection, or query level. You can use the SET TRANSACTION ISOLATION level or the locking hints to affect this behavior at the query level. Connection settings for the isolation level are maintained throughout the connection unless a SET statement is used to change them.

Read Uncommitted

Read Uncommitted is the least restrictive of the four standard isolation levels. Read Uncommitted allows SELECT queries to access data without taking out a shared, S, lock. Since there is no S lock taken, not only do the queries running SELECT statements not interfere with data manipulation queries taking out Exclusive, X, locks, but the reads are not affected by those same X locks. In short, you can read data that is being actively manipulated. This is what is called a dirty read.

The common explanation of a dirty read goes as follows. The column stores the value “Cat”, and it’s actively being updated to the value “Dog”. A dirty read could get the value “Cat” or the value “Dog”. Because there are no locks taken for the reads, either could be returned.

However, that explanation for dirty reads does not go far enough. The data could be getting deleted, but you’ll still retrieve a value. In the event of scans across indexes at the leaf level, it’s possible to get duplicated values as page splits (explained earlier in Chapter 12) can cause rows to be moved before, or after, the point where a scan is occurring. Dirty reads could also miss rows, again, because of page splits moving their location.

Incorrect data

Duplicate data

Missing data

While many people treat Read Uncommitted (primarily through the query hint NOLOCK) as a magic “run faster” switch in SQL Server, this is not true.

In order to apply Read Uncommitted (and by extension NOLOCK), you should be able to ensure that you’re dealing with a system with a low need for accuracy in the data. For example, a banking or retail application would be a poor choice for using Read Uncommitted.

Setting Read Uncommitted for a connection

It bears repeating, using Read Uncommitted (and the NOLOCK query hint) on systems that have a high level of transaction activity can result in radically undependable data. Read Uncommitted is meant for reporting systems that have little to no data changes occurring in real time. While you may see a performance improvement in OLTP systems using Read Uncommitted, few organizations realize that they’re sacrificing data accuracy for that speed improvement.

Read Committed

Setting Read Committed for a connection

Read Committed is the default isolation level within SQL Server, and it will serve well for most databases. Because of the S locks, you may see contention between reads and data manipulation queries.

An option you have for dealing with read contention is to use the Read Committed Snapshot isolation level. Turning this isolation level on means that data manipulation queries will use row versioning in the tempdb. Basically, a copy of the row is created that SELECT queries will use until the data manipulation is complete.

Using Read Committed Snapshot will place additional load on the tempdb, so you’ll need to plan for that. However, you’ll see an improvement in speed since you’re eliminating contention between the reads and writes within the database. Also, unlike using NOLOCK or Read Uncommitted, you will not have an issue with page splits since there are no dirty reads taking place. Unlike SQL Server, the default isolation level in Azure SQL Database is Read Committed Snapshot.

Setting Read Committed Snapshot for a database

Retrieving Color from the Production.Product table

Running this query against my version of AdventureWorks returns the value “Blue”. Please note, I haven’t committed the transaction.

Changing the Color in the Production.Product table

You’ll see, within this transaction, the value returned is “Coyote”, not “Blue”. If I go back to the first connection, where Listing 16-14 is located, I can run the SELECT statement again (but not the BEGIN TRAN again). It won’t be blocked by the uncommitted transaction from Listing 16-15. The value returned will still be “Blue”. If I then commit the transaction in Listing 16-15 and then rerun our original query, the results now come back as “Coyote” because the transaction has been committed.

Disabling Read Committed Snapshot at the database

One point you should know about tempdb and row versioning. If tempdb fills, you won’t see issues with data manipulation queries. However, you may see errors on reads because the row versions will not be available.

Repeatable Read

The next most restrictive isolation level is Repeatable Read. In Read Committed, a shared lock, S, is taken as the data is read, but as soon as the read completes, that lock is dropped, regardless of the state of the transaction (we saw that in action with Read Committed Snapshot). Repeatable Read, on the other hand, maintains the S lock until the transaction is complete. In short, no data updates can be performed until the open transaction reading the data is committed or rolled back. This ensures that multiple queries within a given transaction will have identical results.

For a single Product, I want to change the price if it’s currently greater than ten.

For all products in the table, I want to discount the prices by 40%.

Creating a test table and data

Applying discounts to prices in the MyProduct table

Running each transaction in order from two different query windows results in the Price where ProductID = 1 being -1.0.

The issue here is that the second transaction is allowed to modify the data while the first is still running through its processes, reading the data. In order for Transaction 1 to not be affected in this way, a more stringent isolation level than Read Committed will be required.

Changes to the first transaction

Now if both transactions are run together, the second transaction cannot update the data until the first transaction completes. You could also add a lock hint to the QUERY like this: …FROM dbo.MyProduct AS mp WITH (REPEATABLEREAD).

Serializable

Serializable is the highest of the isolation levels. Instead of acquiring a lock only on the row to be accessed, transactions under the Serializable isolation level will take a lock on the row in question and on the next row in the order of the data set. This does two things. First, transactions cannot insert rows into the data set being locked. Next, it prevents additional rows being discovered after the fact, also known as a phantom read.

Creating the MyEmployee table and data

Applying the bonus and hiring a new employee

Running these two transactions, the result of the final @Fund is -$50. The addition of the new employee meant that the bonus fund was overdrawn. The solution here, like in the previous examples, is to change the locking mechanism to prevent this sort of thing from occurring, using Serializable isolation level.

There are several considerations when working with all these locking levels. On the one hand, reducing the locking as much as possible can help performance. However, it comes at the cost of data consistency and behavioral consistency. On the other hand, increasing locking can prevent these kinds of issues but increases contention, hurting performance. In short, this is all a balancing act.

Snapshot

Snapshot isolation is the second of the row-versioning isolation levels available in SQL Server since SQL Server 2005. Unlike Read Committed Snapshot isolation, Snapshot isolation requires an explicit call to SET TRANSACTION ISOLATION LEVEL at the start of the transaction. It also requires setting the isolation level on the database. Snapshot isolation is meant as a more stringent isolation level than the Read Committed Snapshot isolation. Snapshot isolation will attempt to put an exclusive lock on the data it intends to modify. If that data already has a lock on it, the snapshot transaction will fail. It provides transaction-level read consistency, which makes it more applicable to financial-type systems than Read Committed Snapshot.

Effect of Indexes on Locking

If a table is a heap, meaning no clustered index defining data storage, then it can only have a very limited set of locks: row (RID), page (PAG), and table (TAB). Adding indexes to a table changes what resources can be, and must be, locked in support of the transactions. The effect of an index on locking varies with the index type and that index’s ability to support WHERE, ON, and HAVING clauses that are part of the data manipulation queries. I’m going to explore some of the effects of an index on locking.

Effect of a Nonclustered Index

Creating a nonclustered index on MyEmployees

Running a named transaction, LockBehavior

A table has 8 columns and 6 rows. Resource types database, page, rid, object, and key have a variety of s, i x, i u, x, and u locks assigned.

Locking in a nonclustered index

An (IU) lock on the page containing the nonclustered index row

A (U) lock on the nonclustered index row within the index page

An (IX) lock on the table

An (IX) lock on the page containing the data row

An (X) lock on the data row within the data page

You can then see that additional locking overhead is introduced. This can, possibly, negatively impact performance. Also, in some cases, you may see more contention. That’s not to say we won’t be using nonclustered indexes. Of course, we will.

Changing the locking behavior for an index

This approach is inherently dangerous. You’re taking control away from SQL Server. Using this method, only a table lock will be taken out, radically increasing contention on the table. Only perform this sort of action as a last resort and try changing just one setting at a time.

Effects of a Clustered Index

Replacing the nonclustered index with a clustered index

A table has 8 columns and 6 rows. Resource types database, page, object, and key have a variety of s, i x, and x locks assigned.

Effects of a clustered index on locking behavior

An (IX) lock on the table

An (IX) lock on the page containing the clustered index row

An (X) lock on the clustered index row within the table or clustered index

The locks on the clustered index row and the leaf page are actually the locks on the data row and data page, too, since the data pages and the leaf pages are the same. Thus, the clustered index reduced the locking overhead on the table compared to the nonclustered index.

Reduced locking overhead of a clustered index is another benefit of using a clustered index over a heap.

Capturing Blocking Information

With a good understanding of how locking occurs, the next step is to look at the blocking that occurs between processes caused by those locks. While the locks are entirely necessary to maintain ACID compliance and data consistency, excessive blocking from the locks is a performance concern. Understanding where blocking is occurring will help you improve concurrency and therefore performance.

Connection information about both the blocking and blocked sessions

The lock information about the blocking and blocked sessions

The SQL statements being executed by the blocking and blocked sessions

There are several ways to gather this information. You can use the Activity Monitor within Management Studio. The Process page provides basic blocked processing information including the process identifier (SPID) for blocking processes, the blocked processes (called the block chain), and information about the queries. You can also query directly against the Dynamic Management Objects/Views (DMVs) to pull back detailed information. Finally, Extended Events comes with a blocked process report event that supplies a lot of detail, along with all that you can do in Extended Events (detailed in Chapter 3).

Here, I’m going to cover the most flexible and most powerful methods: T-SQL and the DMVs and Extended Events.

Capturing Blocking Information Using T-SQL

Querying the DMVs for blocking information

Creating the BlockTest table

Two scripts that will result in a blocked process

Since the transaction, User1, is started but not committed, it’s going to hold the locks on the BlockTest table until we stop the script, roll it back, or cancel the execution.

4 tables with varying numbers of columns and 1 row each. The columns are labeled blocking session I D, waiting session I D, resource d e s cm blocking t s q l, waiting t s q l, wait type, waiting request type, wait duration, and much more.

Information about the blocked and blocking processes

You can easily spot the blocking session and the waiting session. You can also see the details about exactly which resource the contention is over. Both queries are available, and then you can see additional information about the objects, database, and more. The duration in this case is in milliseconds.

I included the database name because the DMVs are system wide, so you’ll want to see which database is involved as a part of your investigation.

Before going forward, be sure to commit or roll back the transaction for User1.

Extended Events and the blocked_process_report Event

Changing the blocked process threshold

The default value for the threshold is zero (0), meaning it will never actually fire the blocked process event. Using the script in Listing 16-29, I changed mine to 5. Determining this value is an important part of configuring your systems. There is no single correct answer. For some systems, a one-second blocked process may be too long, while others may not care until minutes have passed. However, setting this value controls the behavior of the blocked_process_report and any emails, Slack messages, tweets, or whatever you use from your alerting system. I would suggest setting a relatively low value to start and then adjusting from there.

Extended Events session for blocked_process_report

Yeah, it really is that easy.

A table has field and value columns and 9 rows for details of the block process report event. The leftmost row has entries such as blocked process and duration.

Data from the blocked_process_report Extended Event

The basic information shown gives you a little bit of an idea what’s happening. There’s a blocked process in the AdventureWorks database, and the duration, in microseconds, is 5770000, or just over five seconds. By the way, every five seconds you’ll get a new copy of the report, with an ever-increasing duration, so, again, as I say, experiment with the appropriate setting.

Reading through XML is certainly not enjoyable. However, all the information we’re looking for, and more, is available in the report. The Session ID values are listed, as the “spid”. You can see the query, lock mode, wait resource, and all the other information we pulled from the DMVs, but this time automatically gathered through Extended Events.

Recommendations to Reduce Blocking

Keep transactions short.

Perform the minimum steps/logic within a transaction.

Do not perform costly external activity within a transaction, such as sending an acknowledgment email or performing activities driven by the end user.

Optimize the queries involved.

Create indexes as required to ensure optimal performance of the queries within the system.

Avoid a clustered index on frequently updated columns. Updates to clustered index key columns require locks on the clustered index and all nonclustered indexes (since their row locator contains the clustered index key).

Consider using a covering index to serve the blocked SELECT statements.

Use query timeouts or a resource governor to control runaway queries. For more on the resource governor, consult Microsoft’s documentation.

Avoid losing control over the scope of the transactions because of poor error-handling routines or application logic.

Use SET XACT_ABORT ON to avoid a transaction being left open on an error condition within the transaction.

Execute the following SQL statement from a client error handler (TRY/CATCH) after executing a SQL batch or stored procedure containing a transaction: IF @@TRANCOUNT > 0 ROLLBACK.

Use the lowest isolation level required.

Consider using row versioning, one of the SNAPSHOT isolation levels, to help reduce contention.

Summary

Blocking may be inevitable, but understanding the root causes through the ACID properties of the database, locks and locking, isolation levels, and all the rest of the information in this chapter can help you reduce them. The goal is having your servers scale as you add more and more users with more and more data. However, some blocking is inevitable. Therefore, learn to use the DMVs and Extended Events to capture blocking information. With that, you can help to alleviate contention and make your databases run faster and scale better.

Blocking not only hurts concurrency but can actually cause a major performance problem known as a deadlock. This is when processes mutually block one another, which we’ll cover in the next chapter.