Chapter 1. Clojure philosophy

- The Clojure way

- Why a(nother) Lisp?

- Functional programming

- Why Clojure isn’t especially object-oriented

Learning a new language generally requires significant investment of thought and effort, and it’s only fair that programmers expect each language they consider learning to justify that investment. Clojure was born out of creator Rich Hickey’s desire to avoid many of the complications, both inherent and incidental, of managing state using traditional object-oriented techniques. Thanks to a thoughtful design based in rigorous programming language research, coupled with a fervent look toward practicality, Clojure has blossomed into a programming language playing an undeniably important role in the current state of the art in language design.

In the grand timeline of programming language history, Clojure is an infant, but its colloquialisms (loosely translated as “best practices” or idioms) are rooted in 50+ years of Lisp, as well as 15+ years of Java history.[1] Additionally, the enthusiastic community that has exploded since its introduction has cultivated its own set of unique idioms.

1 Although it draws on the traditions of Lisps (in general) and Java, Clojure in many ways stands as a direct challenge to them, a call for change.

In this chapter, we’ll discuss the weaknesses in existing languages that Clojure was designed to address, how it provides strength in those areas, and many of the design decisions Clojure embodies. We’ll also look at some of the ways existing languages have influenced Clojure, and define many of the terms used throughout the book. We assume some familiarity with Clojure, although if you feel like you’re getting lost, jumping to chapter 2 for a quick language tutorial will help. This chapter only scratches the surface of the topics that we’ll cover in this book, but it serves as a nice basis for understanding not only the “how” of Clojure, but also the “why.”

1.1. The Clojure way

We’ll start slowly.

Clojure is an opinionated language—it doesn’t try to cover all paradigms or provide every checklist bullet-point feature. Instead, it provides the features needed to solve all kinds of real-world problems the Clojure way. To reap the most benefit from Clojure, you’ll want to write your code with the same vision as the language itself. As we walk through the language features in the rest of the book, we’ll mention not just what a feature does, but also why it’s there and how best to take advantage of it.

But before we get to that, we’ll first take a high-level look at some of Clojure’s most important philosophical underpinnings. Figure 1.1 lists some broad goals that Rich Hickey had in mind while designing Clojure and some of the more specific decisions that are built into the language to support these goals. As the figure illustrates, Clojure’s broad goals are formed from a confluence of supporting goals and functionality, which we’ll touch on in the following subsections.

Figure 1.1. Some of the concepts that underlie the Clojure philosophy, and how they intersect

1.1.1. Simplicity

It’s hard to write simple solutions to complex problems. But every experienced programmer has also stumbled on areas where we’ve made things more complex than necessary—what you might call incidental complexity as opposed to complexity that’s essential to the task at hand (Moseley 2006). Clojure strives to let you tackle complex problems involving a wide variety of data requirements, multiple concurrent threads, independently developed libraries, and so on without adding incidental complexity. It also provides tools to reduce what at first glance may seem like essential complexity. Clojure is built on the premise of providing a key set of simple (consisting of few, orthogonal parts) abstractions and building blocks that you can use to form different and more powerful capabilities. The resulting set of features may not always seem easy (or familiar), but as you read this book, we think you’ll come to see how much complexity Clojure helps strip away.

One example of incidental complexity is the tendency of modern object-oriented languages to require that every piece of runnable code be packaged in layers of class definitions, inheritance, and type declarations. Clojure cuts through all this by championing the pure function, which takes a few arguments and produces a return value based solely on those arguments. An enormous amount of Clojure is built from such functions, and most applications can be, too, which means there’s less to think about when you’re trying to solve the problem at hand.

1.1.2. Freedom to focus

Writing code is often a constant struggle against distraction, and every time a language requires you to think about syntax, operator precedence, or inheritance hierarchies, it exacerbates the problem. Clojure tries to stay out of your way by keeping things as simple as possible, not requiring you to go through a compile-and-run cycle to explore an idea, not requiring type declarations, and so on. It also gives you tools to mold the language itself so that the vocabulary and grammar available to you fit as well as possible to your problem domain. Clojure is expressive. It packs a punch, allowing you to perform highly complicated tasks succinctly without sacrificing comprehensibility.

One key to delivering this freedom is a commitment to dynamic systems. Almost everything defined in a Clojure program can be redefined, even while the program is running: functions, multimethods, types, type hierarchies, and even Java method implementations. Although redefining things on the fly might be scary on a production system, it opens a world of amazing possibilities in how you think about writing programs. It allows for more experimentation and exploration of unfamiliar APIs, and it adds an element of fun that can sometimes be impeded by more static languages and long compilation cycles.
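Here is a small REPL-style sketch of redefinition on the fly. The names `greet` and `welcome` are hypothetical. The behavior shown assumes Clojure's default compilation mode, in which calls go through vars. Direct linking, an opt-in compiler option, would change it.

```clojure
;; A hypothetical function, defined once...
(defn greet [] "Hello")

;; ...and a second function that calls it through the var #'greet.
(defn welcome [] (str (greet) ", Clojure!"))

(welcome) ;;=> "Hello, Clojure!"

;; Redefine greet while the program is running; welcome is not touched.
(defn greet [] "Howdy")

;; welcome picks up the new definition on its next call.
(welcome) ;;=> "Howdy, Clojure!"
```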

But Clojure’s not just about having fun. The fun is a byproduct of giving programmers the power to be more productive than they ever thought imaginable.

1.1.3. Empowerment

Some programming languages have been created primarily to demonstrate a particular nugget of academia or to explore certain theories of computation. Clojure is not one of these. Rich Hickey has said on numerous occasions that Clojure has value to the degree that it lets you build interesting and useful applications.

To serve this goal, Clojure strives to be practical—a tool for getting the job done. If a decision about some design point in Clojure had to weigh the trade-offs between the practical solution and a clever, fancy, or theoretically pure solution, usually the practical solution won out. Clojure could try to shield you from Java by inserting a comprehensive API between the programmer and the libraries, but this could make the use of third-party Java libraries clumsier. So Clojure went the other way: direct, wrapper-free, compiles-to-the-same-bytecode access to Java classes and methods. Clojure strings are Java strings, and ClojureScript strings are JavaScript strings; Clojure and ClojureScript function calls are native method calls—it’s simple, direct, and practical.

The decisions to use the Java Virtual Machine (JVM) and target JavaScript are clear examples of this practicality. The JVM, for instance, is an amazingly practical platform: it’s mature, fast, and widely deployed. It supports a variety of hardware and operating systems and has a staggering number of libraries and support tools available, all of which Clojure can take advantage of right out of the box. Likewise, in targeting JavaScript, ClojureScript can take advantage of its near-ubiquitous reach into the browser, the server, and mobile devices, and even into database scripting.

With direct method calls, proxy, gen-class, gen-interface (see chapter 10), reify, definterface, deftype, and defrecord (see section 9.3), Clojure works hard to provide a bevy of interoperability options, all in the name of helping you get your job done. Practicality is important to Clojure, but many other languages are practical as well. You’ll start to see some ways that Clojure sets itself apart by looking at how it avoids muddles.
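A few of these interop options fit in a handful of lines. The following sketch uses examples we chose ourselves, and the names `task` and `Point` are hypothetical. It is an illustration, not a tour of every form listed above.

```clojure
;; Clojure strings are java.lang.String instances.
(instance? String "joy") ;;=> true

;; A direct method call on a Java object, with no wrapper in between.
(.toUpperCase "joy") ;;=> "JOY"

;; Static method and static field access.
(Math/abs -42)    ;;=> 42
Integer/MAX_VALUE ;;=> 2147483647

;; reify: an anonymous object implementing a Java interface.
(def task (reify Runnable
            (run [_] (println "running"))))
(instance? Runnable task) ;;=> true

;; defrecord: a named type whose instances also behave like maps.
(defrecord Point [x y])
(:x (->Point 1 2)) ;;=> 1
```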

1.1.4. Clarity

When beetles battle beetles in a puddle paddle battle and the beetle battle puddle is a puddle in a bottle ... they call this a tweetle beetle bottle puddle paddle battle muddle.

Dr. Seuss[2]

2 Fox in Socks (Random House, 1965).

Consider what might be described as a simple snippet of code in a language like Python:

# This is Python code
x = [5]
process(x)
x[0] = x[0] + 1

After executing this code, what’s the value of x? If you assume process doesn’t change the contents of x at all, it should be [6], right? But how can you make that assumption? Without knowing exactly what process does, and what any function it calls does, and so on, you can’t be sure.

Even if you’re sure process doesn’t change the contents of x, add multithreading and now you have another set of concerns. What if some other thread changes x between the first and third lines? Worse yet, what if something is setting x at the moment the third line is doing its assignment—are you sure your platform guarantees an atomic write to that variable, or is it possible that the value will be a corrupted mix of multiple writes? We could continue this thought exercise in hopes of gaining some clarity, but the end result would be the same—what you have ends up not being clear, but the opposite: a muddle.

Clojure strives for code clarity by providing tools to ward off several different kinds of muddles. For the one just described, it provides immutable locals and persistent collections, which together eliminate most of the single- and multithreaded issues.
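Here is a minimal Clojure sketch to set against the Python snippet. The original never defines `process`, so we substitute a hypothetical version that appends an element. Whatever `process` does, it cannot alter the vector bound to the local `x`.

```clojure
;; Hypothetical stand-in for the undefined `process` from the Python example.
(defn process [v]
  (conj v 99)) ; returns a new vector; v itself is never modified

(def result
  (let [x [5]
        y (process x)              ; x is handed to process...
        z (assoc x 0 (inc (x 0)))] ; ...then "incremented" at index 0 as a new value
    [x y z]))                      ; x is still [5] at this point

result ;;=> [[5] [5 99] [6]]
```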

You can find yourself in several other kinds of muddles when the language you’re using merges unrelated behavior into a single construct. Clojure fights this by being vigilant about separation of concerns. When things start off separated, it clarifies your thinking and allows you to recombine them only when and to the extent that doing so is useful for a particular problem. Table 1.1 contrasts common approaches that merge concepts in some other languages with separations of similar concepts in Clojure that will be explained in greater detail throughout this book.

Table 1.1. Separation of concerns in Clojure

| Conflated | Separated | Where |
|---|---|---|
| Object with mutable fields | Values from identities | Chapter 4 and section 5.1 |
| Class acts as a namespace for methods | Function namespaces from type namespaces | Sections 8.2 and 8.3 |
| Inheritance hierarchy made of classes | Hierarchy of names from data and functions | Chapter 8 |
| Data and methods bound together lexically | Data objects from functions | Sections 6.1 and 6.2 and chapter 8 |
| Method implementations embedded throughout the class inheritance chain | Interface declarations from function implementations | Sections 8.2 and 8.3 |

It can be hard at times to tease apart these concepts in your mind, but accomplishing it can bring remarkable clarity and a sense of power and flexibility that’s worth the effort. With all these different concepts at your disposal, it’s important that the code and data you work with express this variety in a consistent way.

1.1.5. Consistency

Clojure works to provide consistency in two specific ways: consistency of syntax and of data structures. Consistency of syntax is about the similarity in form between related concepts. One simple but powerful example of this is the shared syntax of the for and doseq macros. They don’t do the same thing—for returns a lazy seq, whereas doseq is for generating side effects—but both support the same mini-language of nested iteration, destructuring, and :when and :while guards.

The similarities stand out when comparing the following examples of Clojure code. Each example shows all possible pairs formed using one of the keywords a or b and a positive odd integer less than 5. The first example uses what’s known as a for comprehension and returns a data structure of the pairs:

(for [x [:a :b], y (range 5) :when (odd? y)] [x y])
;;=> ([:a 1] [:a 3] [:b 1] [:b 3])

The second example uses a doseq to print the pairs:

(doseq [x [:a :b], y (range 5) :when (odd? y)] (prn x y))
; :a 1
; :a 3
; :b 1
; :b 3
;;=> nil

The value of this similarity is having to learn only one basic syntax for both situations, as well as the ease with which you can convert any particular usage of one form to the other if necessary.

Likewise, the consistency of data structures is the deliberate design of all of Clojure’s persistent collection types to provide interfaces as similar to each other as possible, as well as to make them as broadly useful as possible. This is an extension of the classic Lisp “code is data” philosophy. Clojure data structures aren’t used just for holding large amounts of application data, but also to hold the expression elements of the application itself. They’re used to describe destructuring forms and to provide named options to various built-in functions. Where object-oriented languages might encourage applications to define multiple incompatible classes to hold different kinds of application data, Clojure encourages the use of compatible map-like objects.

The benefit of this is that the same set of functions designed to work with Clojure data structures can be applied to all these contexts: large data stores, application code, and application data objects. You can use into to build any of these types, seq to get a lazy seq to walk through them, filter to select elements of any of them that satisfy a particular predicate, and so on. Once you’ve grown accustomed to having the richness of all these functions available everywhere, dealing with a Java or C++ application’s Person or Address class will feel constraining.
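The following sketch applies the same handful of functions to three kinds of data: a vector, a map standing in for a Person-style class, and a quoted list that is also code. The bindings `v`, `m`, and `code` are made up for illustration.

```clojure
(def v    [3 1 2])                ; a vector of application data
(def m    {:name "Ada" :age 36})  ; a map playing the role of a Person object
(def code '(+ 1 2))               ; a list that is also a piece of code

;; into builds one collection type from another
(into #{} v)       ; a set containing 1, 2, and 3 (print order unspecified)
(into {} [[:a 1]]) ;;=> {:a 1}

;; seq gives a uniform sequential view of each
(seq m)    ;;=> ([:name "Ada"] [:age 36])
(seq code) ;;=> (+ 1 2)

;; filter selects elements from any of them
(filter odd? v)                    ;;=> (3 1)
(filter (fn [[k _]] (= k :age)) m) ;;=> ([:age 36])
(filter number? code)              ;;=> (1 2)

;; the "Person" is updated with generic map functions, not custom setters
(update m :age inc) ;;=> {:name "Ada", :age 37}
```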

Simplicity, freedom to focus, empowerment, consistency, and clarity—nearly every element of the Clojure programming language is designed to promote these goals. When writing Clojure code, if you keep in mind the desire to maximize simplicity, empowerment, and the freedom to focus on the real problem at hand, we think you’ll find Clojure provides you the tools you need to succeed.

1.2. Why a(nother) Lisp?

By relieving the brain of all unnecessary work, a good notation sets it free to concentrate on more advanced problems.

Alfred North Whitehead[3]

3 Quoted in Philip J. Davis and Reuben Hersh, The Mathematical Experience (Birkhäuser, 1981).

Go to any open source project hosting site, and perform a search for the term Lisp interpreter. You’ll likely get a cyclopean mountain[4] of results from this seemingly innocuous term. The fact of the matter is that the history of computer science is littered (Fogus 2009) with the abandoned husks of Lisp implementations. Well-intentioned Lisps have come and gone and been ridiculed along the way, and yet tomorrow, search results for hobby and academic Lisps will have grown almost without bounds. Bearing in mind this legacy of brutality, why would anyone want to base their brand-new programming language on the Lisp model?

4 ... of madness.

1.2.1. Beauty

Lisp has attracted some of the brightest minds in the history of computer science. But an argument from authority is insufficient, so you shouldn’t judge Lisp on this alone. The real value in the Lisp family of languages can be directly observed through the activity of using it to write applications. The Lisp style is one of expressivity, empowerment, and, in many cases, outright beauty. Joy awaits the Lisp neophyte. The original Lisp language, as defined by John McCarthy in his earth-shattering essay “Recursive Functions of Symbolic Expressions and Their Computation by Machine, Part I” (McCarthy 1960), defined the entire language in terms of only seven functions and two special forms: atom, car, cdr, cond, cons, eq, quote, lambda, and label.

Through the composition of those nine forms, McCarthy was able to describe the whole of computation in a way that takes our breath away. Computer programmers are perpetually in search of beauty, and, more often than not, this beauty presents itself in the form of simplicity. Seven functions and two special forms—it doesn’t get more beautiful than that.

1.2.2. But what’s with all the parentheses?

Why has Lisp persevered for more than 50 years while countless other languages have come and gone? There are probably complex reasons, but chief among them is likely the fact that Lisp as a language genotype (Tarver 2008) fosters language flexibility in the extreme. Newcomers to Lisp are sometimes unnerved by its pervasive use of parentheses and prefix notation, which differs from the syntax of non-Lisp programming languages. The regularity of this notation not only reduces the number of syntax rules you have to remember, but also makes writing macros trivial. Macros let you introduce new syntax into Lisp to make it easy to handle specific tasks; in other words, you can easily create domain-specific languages (DSLs) to customize Lisp to your immediate needs. We’ll look at macros in more detail in chapter 8; but to whet your appetite, we’ll talk about how Clojure’s seemingly odd syntax makes using them easy.

Clojure looks unlike almost every other popular language in use today. When you first encounter nearly every Lisp-based language, one thing stands out: the parentheses are in the wrong place! If you’re familiar with a language like C, Java, JavaScript, or C#, then function and method calls look like the following:

myFunction(arg1, arg2);
myThing.myMethod(arg1, arg2);

Likewise, arithmetic operations tend to always look like the following:

// THIS IS NOT CLOJURE CODE
1 + 2 * 3; //=> 7

But in Clojure, a function and a method call look like this:

(my-function arg1 arg2)
(.myMethod my-thing arg1 arg2)

Even more astonishing, mathematical operations look the same and likewise evaluate from the innermost nested parentheses to the outer:

(+ 1 (* 2 3)) ;;=> 7

We come from a background in languages with C-like syntax, and the Lispy prefix notation took some getting used to. Clojure’s extreme flexibility allows you to mold it in many ways to suit your personal style. For example, let’s assume that you didn’t want to deal with the Lispy prefix notation and instead wanted something more akin to APL (Iverson 1962), where every mathematical operation is strictly evaluated from right to left. A function that handles right-to-left evaluation of mathematical equations of up to four variables is shown next.

Listing 1.1. Function that solves math equations, evaluated right to left

(defn r->lfix
  ([a op b]              (op a b))
  ([a op1 b op2 c]       (op1 a (op2 b c)))
  ([a op1 b op2 c op3 d] (op1 a (op2 b (op3 c d)))))

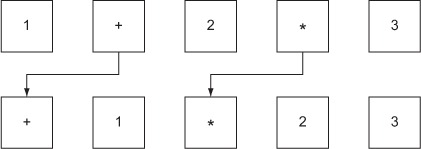

Perhaps you see how this works; but just in case, figure 1.2 shows how the operators are shuffled.

Figure 1.2. Right-to-left shuffle: the r->lfix function shuffles math operators, moving the rightmost infix operations to the innermost nested parentheses to ensure that they execute first.

The operation of r->lfix is as follows:

(r->lfix 1 + 2) ;;=> 3
(r->lfix 1 + 2 + 3) ;;=> 6

As shown, the basic cases using only a single operator type work as expected. Likewise, mixing operator types seems to work fine:

(r->lfix 1 + 2 * 3) ;;=> 7

But changing numbers and shifting operators around shows a potential problem:

(r->lfix 10 * 2 + 3) ;;=> 50

You might’ve hoped for 23 because you, like us, were taught that multiplication has a higher precedence than addition and should be performed first. Indeed, 23 is the answer in most C-like languages with infix math operators:

// THIS IS NOT CLOJURE CODE
10 * 2 + 3 //=> 23

That’s OK, because we can change our function to instead use a more Smalltalk-like evaluation order, where mathematical operations are performed strictly from left to right.

Listing 1.2. Function that solves math equations, evaluated left to right

(defn l->rfix
  ([a op b]              (op a b))
  ([a op1 b op2 c]       (op2 (op1 a b) c))
  ([a op1 b op2 c op3 d] (op3 (op2 (op1 a b) c) d)))

A visualization of how this new operator-shuffling function works is shown in figure 1.3. The multiplication is nested so as to be the first evaluation.

Figure 1.3. Left-to-right shuffle: the l->rfix function shuffles math operators, moving the leftmost infix operations to the innermost nested parentheses to ensure that they execute first.

To be doubly sure that everything seems correct, we can try the new function:

(l->rfix 10 * 2 + 3) ;;=> 23

Looks good. What about some of the other equations? Observe:

(l->rfix 1 + 2 + 3) ;;=> 6

By now you’ve probably realized that we’re being silly. You could easily break our grade-school expectations with the following:

(l->rfix 1 + 2 * 3) ;;=> 9

Just sticking to a strict right-to-left or left-to-right evaluation isn’t going to allow us to observe proper operator precedence. Instead, we’d like to devise a way to describe the correct order of operations. For our purposes, a simple map will suffice:

(def order {+ 0 - 0
            * 1 / 1})

The order map describes what the teachers of our youth tried to explain—the multiplication and division operators are weighted more than addition and subtraction and therefore should be used first. To keep things simple, we’ll write a new function, infix3, that observes the operator weights only for equations of three variables.

Listing 1.3. Function that changes evaluation order depending on operation weights

(defn infix3 [a op1 b op2 c]
  (if (< (get order op1) (get order op2))
    (r->lfix a op1 b op2 c)
    (l->rfix a op1 b op2 c)))

As you may notice, the operators are looked up in the order map and compared. If the leftmost operator is weighted less than the rightmost, we know that the evaluation order should go the other way (right to left). In general terms, the evaluation order should occur starting on the side with the operator with higher precedence.

Checking out the new function shows that everything seems in order:

(infix3 1 + 2 * 3) ;;=> 7
(infix3 10 * 2 + 3) ;;=> 23

The syntax of any Lisp, and Clojure in particular, seems so different from the norm that many people mistakenly assume the language requires a superhuman effort to learn. Certainly it’s possible to expand the infix3 function to handle any number of nested arithmetic operations,[5] and perhaps that would make such math easier to read, but there are good reasons why Clojure has the syntax it does.

5 We created an example infix parser at www.github.com/joyofclojure/unfix that fits in about 20 lines of code.
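The text notes that infix3 could be extended to any number of operations. Below is a hypothetical sketch of one way to do that. It is not the authors’ unfix parser from footnote 5. It reuses the order map and splits the token list at the rightmost operator of lowest weight. That operator becomes the outermost call, so it runs last. The names `infix*` and `infix` are our own.

```clojure
;; Operator weights, as in the order map defined above.
(def order {+ 0 - 0 * 1 / 1})

(defn infix*
  "Hypothetical helper: evaluates a flat vector of alternating numbers and
  operator functions, such as [1 + 2 * 3], honoring the weights in `order`.
  Operators of equal weight associate to the left."
  [tokens]
  (if (= 1 (count tokens))
    (first tokens)
    (let [;; operators sit at the odd indices 1, 3, 5, ...
          op-idxs   (range 1 (count tokens) 2)
          ;; rightmost operator of lowest weight: it is applied last
          split-idx (reduce (fn [best i]
                              (if (<= (order (tokens i)) (order (tokens best)))
                                i
                                best))
                            op-idxs)
          op        (tokens split-idx)]
      ;; evaluate both sides recursively, then apply the split operator
      (op (infix* (subvec tokens 0 split-idx))
          (infix* (subvec tokens (inc split-idx)))))))

(defn infix
  "Hypothetical variadic front end, called the same way as infix3."
  [& tokens]
  (infix* (vec tokens)))

(infix 1 + 2 * 3)         ;;=> 7
(infix 10 * 2 + 3)        ;;=> 23
(infix 10 - 2 - 3)        ;;=> 5
(infix 1 + 2 * 3 - 4 / 2) ;;=> 5
```

The rightmost of the equal-weight operators is chosen so that `10 - 2 - 3` evaluates to 5. Splitting at the leftmost one instead would group it as `10 - (2 - 3)`, giving 11.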

No precedence rules

It seems obvious to us that multiplication has a higher precedence than addition, because that’s what we’ve been taught from time out of mind. But what are the precedence rules for operators like less-than, equals, bitwise-or, and modulo? We personally find it difficult to remember the proper precedence of all the C-like operators and as a result can’t immediately type the correct incantation of grouping parentheses to control the evaluation order. In Clojure, the evaluation ordering is always the same, from innermost to outermost and left to right:

(< (+ 1 (* 2 3)) (* 2 (+ 1 3))) ;;=> true

We frankly don’t buy the precedence argument for why Lisp syntax is great. Certainly it’s nice to not worry about precedence rules, but we don’t write a huge amount of code that requires us to memorize them. We’re personally happy to crack open a Java book or perform a web search to refresh our memories when we forget. But this points to a larger issue. In Clojure, parentheses mean only one thing to the evaluation scheme: aggregating a bunch of things together into a list where the head is a function and the rest of the things are its arguments. In a language like C or Java, the parentheses serve any of the following purposes:

- Aggregating the method, constructor, or function arguments

- A syntax to group method, constructor, or function parameters

- A way to control the evaluation order of a mathematical expression

- The syntax elements of for and while loops

- A way to properly resolve reference casting

- An optional way to group expressions in a return

- A way to group expressions to serve as a method-call target

- General-purpose expression grouping

- Creating capture groups in regular expressions

If you come from a language where parentheses are so overloaded, it’s understandable that you might be leery about a Lisp.

Adaptability is key

As we’ve mentioned, calls to Clojure functions all look the same:

(a-function arg1 arg2)

The mathematical operators in Clojure are also functions. In a language like Java, mathematical operators are special and only exist as members of special math expressions. Because a Clojure mathematical operator is instead a function, it can do anything that functions can do, including take any number of arguments:

(+ 1 2 3 4 5 6 7 8 9 10) ;;=> 55

If all function calls look the same to Clojure, then it’s easy to call functions with any number of arguments, because the call and its arguments are bound by the parentheses. The same expression in C or Java would look as follows:

// THIS IS NOT CLOJURE CODE
1 + 2 + 3 + 4 + 5 + 6 + 7 + 8 + 9 + 10; //=> 55

Honestly, how often do you need to write something like this? Probably not often at all. But because C and Java operators can only take two arguments, a left side and a right side, performing math on an arbitrary number of numbers requires a completely separate looping construct:

int numbers[] = {1,2,3,4,5,6,7,8,9,10};
int sum = 0;
for (int n : numbers) {
    sum += n;
}

In Clojure, you can use the apply function to send a sequence of numbers to a function as if they were sent as arguments:

(def numbers [1 2 3 4 5 6 7 8 9 10])
(apply + numbers) ;;=> 55

This is nice. Certainly you could create a specialized utility class to hold an array-summation function, but what if you needed a method to multiply a bunch of numbers in an array? You’d need to add it as well. Not a big deal, right? Maybe not, but the Lispy syntax that Clojure uses facilitates viewing functions as operating on collections of data by default. An interesting example is the less-than operator, used in many programming languages as follows:

// THIS IS NOT CLOJURE CODE
0 < 42; //=> true

Clojure provides the same kind of behavior:

(< 0 42) ;;=> true

But the fact that Clojure’s < function takes any number of arguments means you can directly express the idea of a strictly increasing sequence, best shown with an example:

(< 0 1 3 9 36 42 108) ;;=> true

If any of the given numbers were not increasing, then the call would be false:

(< 0 1 3 9 36 -1000 42 108) ;;=> false

And this is a key point. The consistency of the function call form allows a great deal of flexibility in the way that functions receive arguments.
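The multiplication case raised earlier also needs no new utility method. Here is a short sketch. The numbers vector is redefined so the block stands alone.

```clojure
(def numbers [1 2 3 4 5 6 7 8 9 10])

;; Multiplying the whole collection: same form, different function.
(apply * numbers) ;;=> 3628800

;; apply works with any variadic function, including <.
(apply < numbers) ;;=> true

;; reduce is an alternative: it folds a two-argument function over a seq.
(reduce + numbers) ;;=> 55
```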

REPL phases

Clojure programs are composed of data structures. For example, Clojure can see the textual form (+ 1 2) in one of two ways:

- The function call to + with the arguments 1 and 2

- The list of the symbol +, number 1, and number 2

What Clojure does with these two different things depends on context. If you open a Clojure REPL and type the following, then an evaluation occurs:

(+ 1 2) ;;=> 3

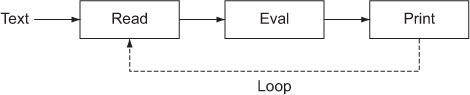

This is probably what you’d expect. But Clojure’s evaluation model has multiple phases, only one of which is the evaluation phase. The word REPL refers to the Read-Eval-Print Loop phases shown in figure 1.4.

Figure 1.4. The word REPL hints at three repeated or looped phases: read, eval, and print.

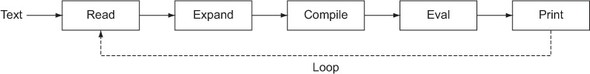

Most Lisps share this high-level phase distinction, separating the reading of text from the eval step, and the eval step from the printing step. But a more realistic picture of Clojure’s evaluation phases looks like figure 1.5.

Figure 1.5. More REPL phases: Clojure also has macro-expansion and compilation phases.

The addition of the expand and compile phases hints at an interesting aspect of most Lisps—macros.

Macros

We’ll talk more about macros in chapter 8, but here we’ll spend a page or two discussing the high-level idea behind Clojure macros. By splitting the evaluation into distinct phases, Clojure allows you to tap into some of these phases to manipulate the way that programs are evaluated. Recall from the previous section that to Clojure, (+ 1 2) can be either a list or a function call; the distinction is a matter of context. When you look at the characters on the screen or in this book, the text (+ 1 2) is just text. As shown in figure 1.6, after the read phase, Clojure doesn’t deal with parentheses, which are just textual demarcations of lists. Instead, after the read phase, Clojure deals exclusively with data structures residing in memory.

Figure 1.6. The reader takes a textual representation of a Clojure program and produces the corresponding data structures.
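You can observe the read phase on its own with `read-string`. It returns the data structure the reader produces, without evaluating it. `eval` then treats that same structure as code. Here is a sketch. For untrusted input, `clojure.edn/read-string` is the safer reader.

```clojure
;; Read the text "(+ 1 2)" into a data structure, without evaluating it.
(def form (read-string "(+ 1 2)"))

form                   ;;=> (+ 1 2)
(list? form)           ;;=> true
(first form)           ;;=> +
(symbol? (first form)) ;;=> true
(rest form)            ;;=> (1 2)

;; Handed to the evaluator, the same list is a function call.
(eval form)            ;;=> 3
```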


But the textual characters work to easily demarcate lists to the reader.[6] Lisps (and Clojure is no exception) have chosen to mirror the list-like textual syntax with the list data structure.[7] By mirroring the syntax with the underlying data representation of code, there’s little conceptual distance between the form of the code on the screen and the code that’s fed into the compiler. This short conceptual gap facilitates the manipulation of the data that represents a program using Clojure.

6 And for that matter, an editor also, as users of Paredit can attest.

7 And as you’ll soon see, Clojure’s textual representation is likewise conceptually tied to the corresponding data structure.

The notion of “code is data” is difficult to grasp at first. Implementing a programming language where code shares the same footing as the data structures it comprises presupposes a fundamental malleability of the language. When your language is represented as the inherent data structures, the language can manipulate its own structure and behavior (Graham 1995). Lisp can be likened to a self-licking lollipop—more formally defined as homoiconicity. Lisp’s homoiconicity requires a great conceptual leap in order for you to fully grasp it, but we’ll lead you toward that understanding throughout this book in hopes that you too will come to realize its inherent power.[8]

8 Chapters 8 and 14 are especially key in understanding macros.
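Here is a small, hypothetical taste of that power. Chapter 8 covers macros properly. The macro below receives an infix list as plain data and rearranges it into an ordinary prefix call before evaluation. The name `infix-2` is ours, and it handles only a single three-element list.

```clojure
(defmacro infix-2
  "Hypothetical macro: receives the unevaluated list (a op b) as data and
  rearranges it into the prefix call (op a b)."
  [[a op b]]
  (list op a b))

;; macroexpand shows the code the macro produces, before evaluation.
(macroexpand '(infix-2 (1 + 2))) ;;=> (+ 1 2)

(infix-2 (10 * 3))      ;;=> 30
(infix-2 ((+ 1 2) * 3)) ;;=> 9
```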

As we hope you understand, Lisp has its syntax for an excellent reason: to simplify the conceptual model needed for direct program manipulation by the programs themselves. There’s a joy in learning Lisp for the first time, and if that’s your experience coming into this book, we welcome you—and envy you.

1.3. Functional programming

Quick, what does functional programming mean? Wrong answer.

Don’t be too discouraged: we don’t really know the answer either. Functional programming is one of those computing terms[9] that has an amorphous definition. If you ask 100 programmers for their definition, you’ll likely receive 100 different answers. Sure, some definitions will be similar, but like snowflakes, no two will be exactly the same. To further muddy the waters, the cognoscenti of computer science will often contradict one another in their own independent definitions. Likewise, the basic structure of any definition of functional programming will be different depending on whether your answer comes from someone who favors writing their programs in Haskell, ML, Factor, Unlambda, Ruby, Shen, or even Clojure. How can any person, book, or language claim authority for functional programming? As it turns out, just as the multitudes of unique snowflakes are all made mostly of water, the many meanings of functional programming share a set of core tenets.

9 Quick, what’s the definition of combinator? How about cloud computing? Enterprise? SOA? Web 2.0? Real-world? Hacker? Often it seems that the only term with a definitive meaning is yak shaving.

1.3.1. A workable definition of functional programming

Whether your own definition of functional programming hinges on the lambda calculus, monadic I/O, delegates, or java.lang.Runnable, your basic unit of currency is likely some form of procedure, function, or method—herein lies the root. Functional programming concerns and facilitates the application and composition of functions. Further, for a language to be considered functional, its notion of function must be first-class. First-class functions can be stored, passed, and returned just like any other piece of data. Beyond this core concept, the definitions branch toward infinity; but, thankfully, it’s enough to start. Of course, we’ll also present a further definition of Clojure’s style of functional programming that includes such topics as purity, immutability, recursion, laziness, and referential transparency, but that will come later, in chapter 7.
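Each capability in that definition takes a line or two of Clojure. The names `ops`, `adder`, and `double-then-square` below are hypothetical.

```clojure
;; Stored: functions as ordinary values inside a map.
(def ops {:double (fn [n] (* 2 n))
          :square (fn [n] (* n n))})

;; Passed: a function handed to another function.
(map (:square ops) [1 2 3]) ;;=> (1 4 9)

;; Returned: a function that builds and returns a new function.
(defn adder [n]
  (fn [x] (+ x n)))
((adder 10) 5) ;;=> 15

;; Composed: comp applies its functions right to left.
(def double-then-square (comp (:square ops) (:double ops)))
(double-then-square 3) ;;=> 36
```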

1.3.2. The implications of functional programming

Object-oriented programmers and functional programmers often see and solve a problem in different ways. Whereas an object-oriented mindset fosters the approach of defining an application domain as a set of nouns (classes), the functional mind sees the solution as the composition of verbs (functions). Although both programmers may generate equivalent results, the functional solution will be more succinct, understandable, and reusable. Grand claims, indeed! We hope that by the end of this book you’ll agree that functional programming fosters elegance in programming. It takes a shift in mindset to go from thinking in nouns to thinking in verbs, but the journey will be worthwhile. In any case, we think there’s much that you can take from Clojure to apply to your chosen language, if only you approach the subject with an open mind.
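As a rough illustration of thinking in verbs, the sketch below builds a new function by composing existing ones with comp, then expresses a similar pipeline with the ->> threading macro. The function name distinct-word-count, the namespace joy.verbs, and the sample sentence are hypothetical; the point is only that the solution is assembled from small, reusable functions rather than from a class hierarchy.

```clojure
(ns joy.verbs
  (:require [clojure.string :as str]))

;; comp applies its functions right to left:
;; lower-case the text, split it into words, drop duplicates, count.
(def distinct-word-count
  (comp count distinct #(re-seq #"\w+" %) str/lower-case))

(distinct-word-count "The cat saw the other cat")
;;=> 4

;; The same verbs read top to bottom with ->>, ending in a different verb
(->> "The cat saw the other cat"
     str/lower-case
     (re-seq #"\w+")
     frequencies)
;;=> {"the" 2, "cat" 2, "saw" 1, "other" 1}
```

Swapping the final verb (count versus frequencies) changes the question being answered without touching any of the other steps.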

1.4. Why Clojure isn’t especially object-oriented

Elegance and familiarity are orthogonal.

Rich Hickey

Clojure was born out of frustration provoked in large part by the complexities of concurrent programming, made worse by the weaknesses of object-oriented programming in facilitating it. This section explores these weaknesses and lays the groundwork for why Clojure is functional and not object-oriented.

1.4.1. Defining terms

Before we begin, it’s useful to define terms.[10]

10 These terms are also defined and elaborated on in Rich Hickey’s presentation “Are We There Yet?” (Hickey 2009).

The first important term to define is time. Simply put, time refers to the relative moments when events occur. Over time, the properties associated with an entity—both static and changing, singular or composite—will form a concrescence (Whitehead 1929), or, in other words, its identity. It follows that at any given time, a snapshot can be taken of an entity’s properties, defining its state. This notion of state is an immutable one because it’s not defined as a mutation in the entity itself, but only as a manifestation of its properties at a given moment in time. Imagine a child’s flip book, as shown in figure 1.7, to understand the terms fully.

Figure 1.7. The Runner. A child’s flip book serves to illustrate Clojure’s notions of state, time, and identity. The book itself represents the identity. Whenever you wish to show a change in the illustration, you draw another picture and add it to the end of your flip book. The act of flipping the pages therefore represents the states over time of the image within. Stopping at any given page and observing the particular picture represents the state of the Runner at that moment in time.

It’s important to note that in the canon of object-oriented programming (OOP), there’s no clear distinction between state and identity. In other words, these two ideas are conflated into what’s commonly referred to as mutable state. The classical object-oriented model allows unrestrained mutation of object properties with no provision for preserving historical states. Clojure’s implementation attempts to draw a clear separation between an object’s state and identity as they relate to time. In terms of the flip book, the mutable state model looks quite different, as shown in figure 1.8.

Figure 1.8. The Mutable Runner. Modeling state change with mutation requires that you stock up on erasers. Your book becomes a single page: in order to model changes, you must physically erase and redraw the parts of the picture requiring change. Using this model, you should see that mutation destroys all notion of time, and state and identity become one.

Immutability is the cornerstone of Clojure, and much of the implementation ensures that immutability is supported efficiently. By focusing on immutability, Clojure eliminates the notion of mutable state (which is an oxymoron) and instead holds that most of what’s meant by objects are really values. Value by definition refers to an object’s constant representative amount,[11] magnitude, or epoch. You might ask yourself: what are the implications of the value-based programming semantics of Clojure?
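A quick way to see value semantics at the REPL is to "change" a collection and then look at the original. In this sketch (v1, v2, m1, and m2 are illustrative names), conj and assoc return new values and leave the originals intact; Clojure's persistent collections keep this cheap by sharing structure between the old and new versions.

```clojure
;; A vector is a value: "changing" it yields a new vector
(def v1 [:a :b :c])
(def v2 (conj v1 :d))

v1 ;;=> [:a :b :c]      (unchanged)
v2 ;;=> [:a :b :c :d]

;; The same holds for maps
(def m1 {:name "Runner" :x 0})
(def m2 (assoc m1 :x 10))

(:x m1) ;;=> 0
(:x m2) ;;=> 10
```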

11 Some entities have no representative value—pi is an example. But in the realm of computing, where we’re ultimately referring to finite things, this is a moot point.

Naturally, a strict model of immutability makes concurrency a simpler (although not simple) problem: if an object’s state can’t change, you can share it promiscuously without fear of concurrent modification. Where change is needed, Clojure isolates it to its reference types, as we’ll show in chapter 11. Clojure’s reference types provide a level of indirection to an identity that can be used to obtain consistent, if not always current, states.
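The flip-book model maps fairly directly onto an atom, one of the reference types mentioned above. In this sketch, which assumes Clojure 1.7 or later for update, the atom runner plays the role of the identity, each value it holds is a state, and a watch registered with add-watch appends every new state to a hypothetical pages vector, much like adding a page to the book. The names runner, pages, and snapshot are illustrative.

```clojure
;; The identity (the flip book); each value it holds is a state (a page)
(def runner (atom {:frame 0 :x 0}))

;; Hypothetical history: every state the runner has had, in order
(def pages (atom [@runner]))

;; Atom watches run after each successful change; this one records the new page
(add-watch runner :flip-book
           (fn [_key _ref _old-state new-state]
             (swap! pages conj new-state)))

;; Dereferencing yields an immutable snapshot of the current state
(def snapshot @runner)

;; Draw two new pages: advance the frame and move the runner
(swap! runner #(-> % (update :frame inc) (update :x + 5)))
(swap! runner #(-> % (update :frame inc) (update :x + 5)))

snapshot ;;=> {:frame 0, :x 0}   (the earlier state is untouched)
@runner  ;;=> {:frame 2, :x 10}
@pages   ;;=> [{:frame 0, :x 0} {:frame 1, :x 5} {:frame 2, :x 10}]
```

The snapshot taken before the swap! calls keeps its original value, because dereferencing an atom yields a value rather than a view into a mutable object.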

1.4.2. Imperative “baked in”

Imperative programming is the dominant programming paradigm today. The most unadulterated definition of an imperative programming language is one where a sequence of statements mutates program state. During the writing of this book (and likely for some time beyond), the preferred flavor of imperative programming is the object-oriented style. This fact isn’t inherently bad, because countless successful software projects have been built using object-oriented imperative programming techniques. But in the context of concurrent programming, the object-oriented imperative model is self-cannibalizing. By allowing (and even promoting) unrestrained mutation via variables, the imperative model doesn’t directly support concurrency. Because it permits a maenadic[12] approach to mutation, it offers no guarantee that any variable contains the expected value. Object-oriented programming takes this one step further by aggregating state in object internals. Although individual methods may be thread-safe through locking schemes, there’s no way to ensure a consistent object state across multiple method calls without expanding the scope of potentially complex locking scheme(s). Clojure instead focuses on functional programming, immutability, and the distinction between state, time, and identity. But OOP isn’t a lost cause. In fact, many of its aspects are conducive to powerful programming practice.
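The consistency problem described above, keeping several related properties in agreement across updates, can be sketched with an atom holding a map. This is a minimal illustration rather than a recipe: the account atom and the deposit! function are hypothetical, and plain Java threads stand in for any concurrent callers. Because swap! applies a pure function to the whole current value and retries if another thread changed it in the meantime, the balance and the history are always updated together, with no explicit locks.

```clojure
;; Hypothetical state: two fields that must always agree
(def account (atom {:balance 100 :history []}))

(defn deposit!
  "Update balance and history together in one atomic step."
  [amount]
  (swap! account
         (fn [{:keys [balance history]}]
           {:balance (+ balance amount)
            :history (conj history amount)})))

;; 100 threads each deposit 1 concurrently
(let [threads (vec (repeatedly 100 #(Thread. (fn [] (deposit! 1)))))]
  (doseq [^Thread t threads] (.start t))
  (doseq [^Thread t threads] (.join t)))   ; wait for all of them

;; Every observer sees a balance that matches the recorded history
(:balance @account)          ;;=> 200
(count (:history @account))  ;;=> 100
```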

12 Wild and unrestrained; from the Greek term for a follower of the god Dionysus, the god of wine and patron saint of party animals.

1.4.3. Most of what OOP gives you, Clojure provides

It should be made clear that we’re not attempting to mark object-oriented programmers as pariahs. Instead, it’s important that we identify the shortcomings of OOP if we’re ever to improve our craft. In the next few subsections, we’ll touch on the powerful aspects of OOP and how they’re adopted and, in some cases, improved by Clojure. To start, we’ll discuss Clojure’s flavor of polymorphism, expressed via its protocol feature, an example of which is shown next.

Listing 1.4. Polymorphic Concatenatable protocol

(defprotocol Concatenatable (cat [this other]))

Protocols are somewhat related to Java interfaces and are a distillation of what are commonly known as mix-ins. The Concatenatable protocol defines a single function cat that takes two arguments: the target object and another object to concatenate to it. But the Concatenatable protocol describes only a sketch of the functions that form a protocol for concatenation; there’s as yet no implementation of this protocol. In the next section, we’ll discuss protocols in more detail, including their extension to new and existing types.

Polymorphism and the expression problem

Polymorphism is the ability of a function or method to perform different actions depending on the type of its arguments or a target object. Clojure provides polymorphism via protocols,[13] which let you attach a set of behaviors to any number of existing types and classes; they’re similar to what are sometimes called mix-ins, traits, or interfaces in other languages and are more open and extensible than polymorphism in many languages.

13 Clojure also provides polymorphic multimethods, covered in depth in section 9.2.

To reiterate, what we’ve done in listing 1.4 is to define a protocol named Concatenatable that groups one or more functions (in this case only one, cat) defining the set of behaviors provided. That means the function cat will work for any object that fully satisfies the protocol Concatenatable. We can then extend this protocol to the String class and define the specific implementation—a function body that concatenates the argument other onto the string this:

(extend-type String
  Concatenatable
  (cat [this other]
    (.concat this other)))
;;=> nil

(cat "House" " of Leaves")
;;=> "House of Leaves"

We can also extend this protocol to the type java.util.List, so that the cat function can be called on either type:

(extend-type java.util.List
  Concatenatable
  (cat [this other]
    (concat this other)))

(cat [1 2 3] [4 5 6])
;;=> (1 2 3 4 5 6)

Now the protocol has been extended to two different types, String and java.util.List, and thus the cat function can be called with either type as its first argument—the appropriate implementation will be invoked.
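When one protocol needs implementations for several existing types, extend-protocol groups them in a single form. The sketch below restates the two extensions above that way and, as a hypothetical extra, extends Concatenatable to nil as well. It assumes a fresh namespace, here called joy.concat, that excludes clojure.core/cat: Clojure 1.7 and later define a cat transducer of their own, which the protocol function would otherwise conflict with.

```clojure
(ns joy.concat
  ;; clojure.core/cat exists since Clojure 1.7; exclude it so the
  ;; protocol function can use the same name without a clash
  (:refer-clojure :exclude [cat]))

(defprotocol Concatenatable
  (cat [this other]))

;; One form, several existing types
(extend-protocol Concatenatable
  String
  (cat [this other] (.concat this other))

  java.util.List
  (cat [this other] (concat this other))

  ;; Hypothetical choice: treat nil like an empty collection
  nil
  (cat [_ other] (seq other)))

(cat "House" " of Leaves") ;;=> "House of Leaves"
(cat [1 2 3] [4 5 6])      ;;=> (1 2 3 4 5 6)
(cat nil [7 8])            ;;=> (7 8)
```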

Note that String was already defined (in this case by Java itself) before we defined the protocol, and yet we were able to successfully extend the new protocol to it. This isn’t possible in many languages. For example, Java requires that you define all the method names and their groupings (known as interfaces) before you can define a class that implements them, a restriction that leaves Java without a clean answer to what’s known as the expression problem.

A Clojure protocol can be extended to any type where it makes sense, even those that were never anticipated by the original implementer of the type or the original designer of the protocol. We’ll dive deeper into Clojure’s flavor of polymorphism in chapter 9, but we hope now you have a basic idea of how it works.

The expression problem refers to the desire to implement an existing set of abstract methods for an existing concrete class without having to change the code that defines either. Object-oriented languages allow you to implement an existing abstract method in a concrete class you control (interface inheritance), but if the concrete class is outside your control, the options for making it implement new or existing abstract methods tend to be sparse. Many popular programming languages such as Ruby, Scala, C#, Groovy, and JavaScript provide partial solutions to this problem by allowing you to add methods to an existing concrete object, a feature sometimes known as monkey-patching; or you can interpose a different object between mismatched types via implicit conversion.
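The expression problem has two sides: adding new types that support existing operations, and adding new operations to existing types. The sketch below touches both using hypothetical names (Area, Circle, Describe, describe). A record type implements an existing protocol when it is defined, and a protocol defined later is extended both to that record and to java.lang.Long, a class we don't control, without editing either definition and without monkey-patching.

```clojure
(ns joy.expression)

;; An existing operation...
(defprotocol Area
  (area [shape]))

;; ...supported by a new type at definition time
(defrecord Circle [r]
  Area
  (area [_] (* Math/PI r r)))

;; A new operation, added after both Circle and Long already exist
(defprotocol Describe
  (describe [x]))

(extend-protocol Describe
  Circle
  (describe [c] (str "circle of radius " (:r c)))

  java.lang.Long
  (describe [n] (str "the number " n)))

(area (->Circle 1))     ;;=> 3.141592653589793
(describe (->Circle 2)) ;;=> "circle of radius 2"
(describe 42)           ;;=> "the number 42"
```

Because protocol functions live in the namespace that defines them, another library could define its own describe for Long without the two colliding, which is the usual hazard of monkey-patching.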

Subtyping and interface-oriented programming

Clojure provides a form of subtyping by allowing the creation of ad hoc hierarchies: inheritance relationships you can define among data types or even among symbols, which let you easily use the principles of polymorphism. We’ll delve into using the ad hoc hierarchy facility later, in section 9.2. As shown before, Clojure provides a capability similar to Java’s interfaces via its protocol mechanism. By defining a logically grouped set of functions, you can begin to define protocols to which data-type abstractions must adhere. This abstraction-oriented programming model is key in building large-scale applications, as you’ll discover in section 9.3 and beyond.
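A small sketch of an ad hoc hierarchy, using chess pieces as illustrative namespace-qualified keywords: derive records the relationships in Clojure's global hierarchy, isa? queries them transitively, and a multimethod dispatches on them. The keyword names and the blockable? multimethod are hypothetical; section 9.2 covers the real machinery in depth.

```clojure
(ns joy.hierarchy)

;; Relationships among plain keywords; no classes involved
(derive ::rook   ::slider)
(derive ::bishop ::slider)
(derive ::queen  ::slider)
(derive ::slider ::piece)
(derive ::knight ::piece)

(isa? ::queen ::piece)   ;;=> true  (queen -> slider -> piece)
(isa? ::knight ::slider) ;;=> false

;; A multimethod that dispatches through the hierarchy
(defmulti blockable? identity)
(defmethod blockable? ::slider [_] true)   ; sliding pieces can be blocked
(defmethod blockable? ::piece  [_] false)  ; a knight jumps over pieces

(blockable? ::bishop) ;;=> true
(blockable? ::knight) ;;=> false
```

Dispatching on ::bishop matches methods for both ::slider and ::piece, but because ::slider itself derives from ::piece, the more specific ::slider method wins without ambiguity.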

Encapsulation

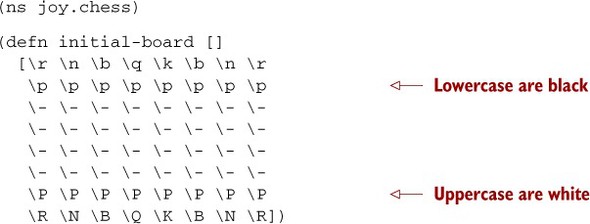

If Clojure isn’t oriented around classes, then how does it provide encapsulation? Imagine that you need a function that, given a representation of a chessboard and a coordinate, returns a basic representation of the piece at the given square. To keep the implementation as simple as possible, we’ll use a vector of characters, one per square, corresponding to the colored chess pieces, as shown in the following listing.

Listing 1.5. Simple chessboard representation in Clojure
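A minimal sketch of such a board, assuming a zero-argument function named initial-board (the name the later calls use) that returns a flat 64-element vector. The squares run left to right from rank 8 down to rank 1, lowercase letters are black pieces, uppercase letters are white, and \- marks an empty square; these layout details are assumptions chosen to agree with the a1 lookups later in this section.

```clojure
(ns joy.chess)

;; Flat vector of 64 characters, rank 8 (black's back row) first,
;; rank 1 (white's back row) last. \- marks an empty square.
(defn initial-board []
  [\r \n \b \q \k \b \n \r
   \p \p \p \p \p \p \p \p
   \- \- \- \- \- \- \- \-
   \- \- \- \- \- \- \- \-
   \- \- \- \- \- \- \- \-
   \- \- \- \- \- \- \- \-
   \P \P \P \P \P \P \P \P
   \R \N \B \Q \K \B \N \R])
```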

There’s no need to complicate matters with the chessboard representation; chess is hard enough. This data structure in the code corresponds directly to an actual chessboard in the starting position, as shown in figure 1.9.

Figure 1.9. The corresponding chessboard layout

From the figure, you can gather that the black pieces are lowercase characters and white pieces are uppercase. You can name any square on the chessboard using a standard rank-and-file notation—using a letter for the column and a number for the row. For example, a1 indicates the square at lower left, containing a white rook. This kind of structure likely isn’t optimal for an enterprise-ready chess application, but it’s a good start. You can ignore the actual implementation details for now and focus on the client interface to query the board for square occupations. This is a perfect opportunity to enforce encapsulation to avoid drowning the client in board-implementation details. Clojure has closures, and closures are an excellent way to group functions (Crockford 2008) with their supporting data.[14]

14 This form of encapsulation is described as the module pattern. But the module pattern as implemented with JavaScript provides some level of data hiding also, whereas in Clojure—not so much.

The code in the next listing provides a function named lookup that returns the contents of a square on the chessboard, given the name of the square in standard rank-and-file notation. It also defines a few supporting functions[15] that are used in the implementation of lookup; these are encapsulated at the level of the namespace joy.chess through the use of the defn- macro, which creates namespace-private functions.

15 And as a nice bonus, these functions can be generalized to project a 2D structure of any size to a 1D representation—which we leave to you as an exercise.

Listing 1.6. Querying the squares of a chessboard
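A sketch of what such a namespace might contain, reconstructed from the names this section mentions (lookup, file-component, rank-component, index, *file-key*, and *rank-key*). Treat the exact definitions as assumptions: only lookup is public, the helpers are created with defn- so they stay private to joy.chess, and the two reference characters are declared ^:dynamic on the assumption that they might be rebound with binding.

```clojure
(ns joy.chess)

;; Reference characters for turning "a1"-style names into numbers
(def ^:dynamic *file-key* \a)
(def ^:dynamic *rank-key* \0)

;; Column offset: \a -> 0, \b -> 1, ... \h -> 7
(defn- file-component [file]
  (- (int file) (int *file-key*)))

;; Row offset into the flat vector: rank 8 -> 0, rank 1 -> 56
(defn- rank-component [rank]
  (->> (int *rank-key*)
       (- (int rank))
       (- 8)
       (* 8)))

;; Project a 2D square name onto a 1D vector index
(defn- index [file rank]
  (+ (file-component file) (rank-component rank)))

;; The only function clients need to see
(defn lookup [board pos]
  (let [[file rank] pos]
    (board (index file rank))))
```

With the board from listing 1.5 loaded into the same namespace, (lookup (initial-board) "a1") should return \R: \a maps to column 0, rank 1 maps to row offset 56, and index 56 holds the white rook.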

Clojure’s namespace encapsulation is the most prevalent form of encapsulation that you’ll encounter in Clojure code. But the use of lexical closures provides another option for encapsulation: block-level encapsulation, as shown in the following listing, and local encapsulation, both of which effectively aggregate implementation details within a smaller scope.

Listing 1.7. Using block-level encapsulation

(letfn [(index [file rank]
          (let [f (- (int file) (int \a))
                r (* 8 (- 8 (- (int rank) (int \0))))]
            (+ f r)))]
  (defn lookup2 [board pos]
    (let [[file rank] pos]
      (board (index file rank)))))

(lookup2 (initial-board) "a1")
;;=> \R

When possible, it’s a good idea to aggregate relevant data, functions, and macros at their most specific scope. You still call lookup2 as before, but now the ancillary functions aren’t readily visible to the larger enclosing scope, in this case the namespace joy.chess. In the preceding code, we take the file-component and rank-component functions and the *file-key* and *rank-key* values out of the namespace proper and roll them into a block-level index function bound by the letfn macro. Within the letfn body, we then define the lookup2 function, thus limiting the client exposure to the chessboard API and hiding the implementation-specific functions and forms. But we can shrink the scope of the encapsulation even further, to a truly function-local context, as shown in the next listing.

Listing 1.8. Local encapsulation

(defn lookup3 [board pos]
  (let [[file rank] (map int pos)
        [fc rc] (map int [\a \0])
        f (- file fc)
        r (* 8 (- 8 (- rank rc)))
        index (+ f r)]
    (board index)))

(lookup3 (initial-board) "a1")
;;=> \R

Finally, we’ve pulled all the implementation-specific details into the body of the lookup3 function. This localizes the index computation and all auxiliary values to the only party that needs them: lookup3. As a nice bonus, lookup3 is simple and compact without sacrificing readability.

Not everything is an object

Another downside to OOP is the tight coupling between function and data. The Java programming language forces you to build programs entirely from class hierarchies, confining all functionality to methods inside classes in a highly restrictive “Kingdom of Nouns” (Yegge 2006). This environment is so restrictive that programmers are often forced to turn a blind eye to awkward attachments of inappropriately grouped methods and classes.[16] It’s because of the proliferation of this stringent object-centric viewpoint that Java code tends toward being verbose and complex (Budd 1995). Clojure functions are data, yet this in no way restricts the decoupling of data and the functions that work on it.[17] Much of what programmers perceive as classes are really data tables, which Clojure provides via maps[18] and records. The final strike against viewing everything as an object is that mathematicians view little (if anything) as objects (Abadi 1996). Instead, mathematics is built on the relationships between one set of elements and another through the application of functions.
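To illustrate decoupling data from the functions that operate on it, the sketch below represents the same chess piece as a plain map and as a record, then uses one ordinary function on both. The names Piece, rook-map, rook-rec, and describe-piece are hypothetical.

```clojure
(ns joy.data)

;; A "class" reduced to its data: a plain map...
(def rook-map {:kind :rook, :color :white, :square "a1"})

;; ...or a record, which behaves like a map but has a named type
(defrecord Piece [kind color square])
(def rook-rec (->Piece :rook :white "a1"))

;; One ordinary function works on both; the data doesn't own the behavior
(defn describe-piece [{:keys [kind color square]}]
  (str (name color) " " (name kind) " on " square))

(describe-piece rook-map) ;;=> "white rook on a1"
(describe-piece rook-rec) ;;=> "white rook on a1"

;; Generic map functions apply to records too
(:square (assoc rook-rec :square "a4")) ;;=> "a4"
```

Because a record behaves like a map, the same destructuring and the same generic functions (such as assoc and keyword lookup) work on either representation, and assoc on a declared field keeps the result a Piece.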

16 We like to call this condition the fallacy of misplaced concretions, taken from Whitehead—be careful using this term in mixed company.

17 Realize that at some point in your program you’ll need to know the keys on a map or the position of an element in a vector; we don’t mean to say that these precise details of structure are never important. Instead, Clojure lets you operate on data abstractions in the aggregate, allowing you to defer fine details until needed.

18 See section 5.6 for more discussion on this idea.

1.5. Summary

We’ve covered a lot of conceptual ground in this chapter, but it was necessary to define the terms used throughout the remainder of the book. Likewise, it’s important to understand Clojure’s terminology (some familiar and some new) to frame our discussion. If you’re still not sure what to make of Clojure, it’s OK—we understand that it may be a lot to take in all at once. Understanding will come gradually as we piece together Clojure’s story. If you’re coming from a functional programming background, you’ll likely have recognized much of the discussion in the previous sections, but perhaps with some surprising twists. Conversely, if your background is more rooted in OOP, you may get the feeling that Clojure is very different from what you’re accustomed to. Although in many ways this is true, in the coming chapters you’ll see how Clojure elegantly solves many of the problems that you deal with on a daily basis. Clojure approaches solving software problems from a different angle than classical object-oriented techniques, but it does so having been motivated by their fundamental strengths and shortcomings.[19]

19 Although it’s wonderful when programming languages can positively influence the creation of new languages, a negative influence has proven much more motivating.

With this conceptual underpinning in place, it’s time to make a brief run through Clojure’s technical basics and syntax. We’ll be moving fairly quickly, but no faster than necessary to get to the deeper topics in following chapters. So hang on to your REPL, here we go ...