We just created nice, clean, pickle files with preprocessed images to train and test our classifier. However, we've ended up with 20 pickle files. There are two problems with this. First, we have too many files to keep track of easily. Secondly, we've only completed part of our pipeline, where we've processed our image sets but have not prepared a TensorFlow consumable file.

Now we will need to create our three major sets—the training set, the validation set, and the test set. The training set will be used to nudge our classifier, while the validation set will be used to gauge progress on each iteration. The test set will be kept secret until the end of the training, at which point, it will be used to test how well we've trained the model.

The code to do all this is long, so we'll leave you to review the Git repository. Pay close attention to the following three functions:

def randomize(dataset, labels):

permutation = np.random.permutation(labels.shape[0])

shuffled_dataset = dataset[permutation, :, :]

shuffled_labels = labels[permutation]

return shuffled_dataset, shuffled_labels

def make_arrays(nb_rows, img_size):

if nb_rows:

dataset = np.ndarray((nb_rows, img_size, img_size),

dtype=np.float32)

labels = np.ndarray(nb_rows, dtype=np.int32)

else:

dataset, labels = None, None

return dataset, labels

def merge_datasets(pickle_files, train_size, valid_size=0):

num_classes = len(pickle_files)

valid_dataset, valid_labels = make_arrays(valid_size,

image_size)

train_dataset, train_labels = make_arrays(train_size,

image_size)

vsize_per_class = valid_size // num_classes

tsize_per_class = train_size // num_classes

start_v, start_t = 0, 0

end_v, end_t = vsize_per_class, tsize_per_class

end_l = vsize_per_class+tsize_per_class

for label, pickle_file in enumerate(pickle_files):

try:

with open(pickle_file, 'rb') as f:

letter_set = pickle.load(f)

np.random.shuffle(letter_set)

if valid_dataset is not None:

valid_letter = letter_set[:vsize_per_class, :, :]

valid_dataset[start_v:end_v, :, :] = valid_letter

valid_labels[start_v:end_v] = label

start_v += vsize_per_class

end_v += vsize_per_class

train_letter = letter_set[vsize_per_class:end_l, :, :]

train_dataset[start_t:end_t, :, :] = train_letter

train_labels[start_t:end_t] = label

start_t += tsize_per_class

end_t += tsize_per_class

except Exception as e:

print('Unable to process data from', pickle_file, ':', e)

raise

return valid_dataset, valid_labels, train_dataset, train_labels

These three complete our pipeline methods. But, we will still need to use the pipeline. To do so, we will first define our training, validation, and test sizes. You can change this, but you should keep it less than the full size available, of course:

train_size = 200000

valid_size = 10000

test_size = 10000

These sizes will then be used to construct merged (that is, combining all our classes) datasets. We will pass in the list of pickle files to source our data from and get back a vector of labels and a matrix stack of images. We will finish by shuffling our datasets, as follows:

valid_dataset, valid_labels, train_dataset, train_labels =

merge_datasets( picklenamesTrn, train_size, valid_size) _, _, test_dataset, test_labels = merge_datasets(picklenamesTst,

test_size) train_dataset, train_labels = randomize(train_dataset,

train_labels) test_dataset, test_labels = randomize(test_dataset, test_labels) valid_dataset, valid_labels = randomize(valid_dataset,

valid_labels)

We can peek into our newly-merged datasets as follows:

print('Training:', train_dataset.shape, train_labels.shape)

print('Validation:', valid_dataset.shape, valid_labels.shape)

print('Testing:', test_dataset.shape, test_labels.shape)

Whew! That was a lot of work we do not want to repeat in the future. Luckily, we won't have to, because we'll re-pickle our three new datasets into a single, giant, pickle file. Going forward, all learning will skip the preceding steps and work straight off the giant pickle:

pickle_file = 'notMNIST.pickle'

try:

f = open(pickle_file, 'wb')

save = {

'datTrn': train_dataset,

'labTrn': train_labels,

'datVal': valid_dataset,

'labVal': valid_labels,

'datTst': test_dataset,

'labTst': test_labels,

}

pickle.dump(save, f, pickle.HIGHEST_PROTOCOL)

f.close()

except Exception as e:

print('Unable to save data to', pickle_file, ':', e)

raise

statinfo = os.stat(pickle_file)

print('Compressed pickle size:', statinfo.st_size)

The ideal way to feed the matrices into TensorFlow is actually as a one-dimensional array; so, we'll reformat our 28x28 matrices into strings of 784 decimals. For that, we'll use the following reformat method:

def reformat(dataset, labels): dataset = dataset.reshape((-1, image_size *

image_size)).astype(np.float32) labels = (np.arange(num_labels) ==

labels[:,None]).astype(np.float32) return dataset, labels

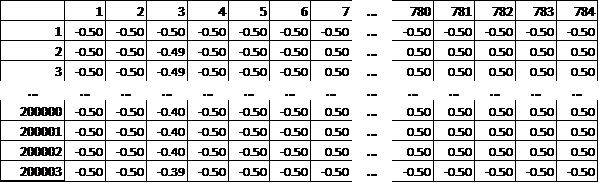

Our images now look like this, with a row for every image in the training, validation, and test sets:

Finally, to open up and work with the contents of the pickle file, we will simply read the variable names chosen earlier and pick off the data like a hashmap:

with open(pickle_file, 'rb') as f: pkl = pickle.load(f) train_dataset, train_labels = reformat(pkl['datTrn'],

pkl['labTrn']) valid_dataset, valid_labels = reformat(pkl['datVal'],

pkl['labVal']) test_dataset, test_labels = reformat(pkl['datTst'],

pkl['labTst'])