We've demonstrated our new tools on the notMNIST dataset, which was helpful as it served to provide a comparison to our earlier simpler network setup. Now, let's progress to a more difficult problem—actual cats and dogs.

We'll utilize the CIFAR-10 dataset. There will be more than just cats and dogs, there are 10 classes—airplanes, automobiles, birds, cats, deer, dogs, frogs, horses, ships, and trucks. Unlike the notMNIST set, there are two major complexities, which are as follows:

- There is far more heterogeneity in the photos, including background scenes

- The photos are color

We have not worked with color datasets before. Luckily, it is not that different from the usual black and white dataset—we will just add another dimension. Recall that our previous 28x28 images were flat matrices. Now, we'll have 32x32x3 matrices - the extra dimension represents a layer for each red, green, and blue channels. This does make visualizing the dataset more difficult, as stacking up images will go into a fourth dimension. So, our training/validation/test sets will now be 32x32x3xSET_SIZE in dimension. We'll just need to get used to having matrices that we cannot visualize in our familiar 3D space.

The mechanics of the color dimension are the same though. Just as we had floating point numbers representing shades of grey earlier, we will now have floating point numbers representing shades of red, green, and blue.

Recall how we loaded the notMNIST dataset:

dataset, image_size, num_of_classes, num_channels =

prepare_not_mnist_dataset()

The num_channels variable dictated the color channels. It was just one until now.

We'll load the CIFAR-10 set similarly, except this time, we'll have three channels returned, as follows:

dataset, image_size, num_of_classes, num_channels =

prepare_cifar_10_dataset()

Not reinventing the wheel.

Recall how we automated the dataset grab, extraction, and preparation for our notMNIST dataset in Chapter 2, Your First Classifier? We put those pipeline functions into the data_utils.py file to separate our pipeline code from our actual machine learning code. Having that clean separation and maintaining clean, generic functions allows us to reuse those for our current project.

In particular, we will reuse nine of those functions, which are as follows:

- download_hook_function

- download_file

- extract_file

- load_class

- make_pickles

- randomize

- make_arrays

- merge_datasets

- pickle_whole

Recall how we used those functions inside an overarching function, prepare_not_mnist_dataset, which ran the entire pipeline for us. We just reused that function earlier, saving ourselves quite a bit of time.

Let's create an analogous function for the CIFAR-10 set. In general, you should save your own pipeline functions, try to generalize them, isolate them into a single module, and reuse them across projects. As you do your own projects, this will help you focus on the key machine learning efforts rather than spending time on rebuilding pipelines.

Notice the revised version of data_utils.py; we have an overarching function called prepare_cifar_10_dataset that isolates the dataset details and pipelines for this new dataset, which is as follows:

def prepare_cifar_10_dataset():

print('Started preparing CIFAR-10 dataset')

image_size = 32

image_depth = 255

cifar_dataset_url = 'https://www.cs.toronto.edu/~kriz/cifar-

10-python.tar.gz'

dataset_size = 170498071

train_size = 45000

valid_size = 5000

test_size = 10000

num_of_classes = 10

num_of_channels = 3

pickle_batch_size = 10000

Here is a quick overview of the preceding code:

- We will grab the dataset from Alex Krizhevsky's site at the University of Toronto using cifar_dataset_url = 'https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz'

- We will use dataset_size = 170498071 to validate whether we've received the file successfully, rather than some truncated half download

- We will also declare some details based on our knowledge of the dataset

- We will segment our set of 60,000 images into training, validation, and test sets of 45000, 5000, and 10000 images respectively

- There are ten classes of images, so we have num_of_classes = 10

- These are color images with red, green, and blue channels, so we have num_of_channels = 3

- We will know the images are 32x32 pixels, so we have image_size = 32 that we'll use for both width and height

- Finally, we will know the images are 8-bit on each channel, so we have image_depth = 255

- The data will end up at /datasets/CIFAR-10/

Much like we did with the notMNIST dataset, we will download the dataset only if we don't already have it. We will unarchive the dataset, do the requisite transformations, and save preprocessed matrices as pickles using pickle_cifar_10. If we find the pickle files, we can reload intermediate data using the load_cifar_10_from_pickles method.

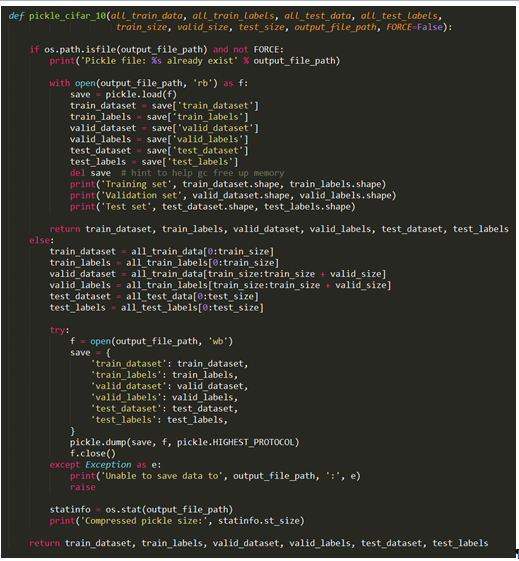

The following are the three helper methods that we will use to keep the complexity of the main method manageable:

- pickle_cifar_10

- load_cifar_10_from_pickles

- load_cifar_10_pickle

The functions are defined as follows:

The load_cifar_10_pickle method allocates numpy arrays to train and test data and labels as well as load existing pickle files into these arrays. As we will need to do everything twice, we will isolate the load_cifar_10_pickle method, which actually loads the data and zero-centers it:

Much like earlier, we will check to see if the pickle files exist already and if so, load them. Only if they don't exist (the else clause), we actually save pickle files with the data we've prepared.