Chapter 6: Vulnerability Assessment and Penetration Testing Methods and Tools

Security professionals must constantly assess the security posture of operating systems, networks, industrial control systems, end user devices, and user behaviors (to name but a few). Vulnerability scanning is one of the main ways we do this, and we should use industry-standard tools and protocols to ensure compatibility across the enterprise. Security professionals should also be aware of the information sources where current threats and vulnerabilities are published. We may need independent verification of our security posture, which involves enlisting third parties to assess our systems; independent audits may be required for regulatory, legal, or industry compliance.

In this chapter, we will cover the following topics:

- Vulnerability scans

- Security Content Automation Protocol (SCAP)

- Information sources

- Testing methods

- Penetration testing

- Security tools

Vulnerability scans

Vulnerability scans are important as they allow security professionals to understand when systems are lacking important security configuration or are missing important patches. Vulnerability scanning will be done by security professionals in the enterprise to discover systems that require remediation. Vulnerability scanning may also be performed by malicious actors who wish to discover systems that are missing critical security patches and configuration. They will then attempt to exploit these weaknesses.

Credentialed versus non-credentialed scans

When performing vulnerability assessments, it is important to understand the capabilities of the assessment tool. Some tools require credentials to return useful, accurate information about the systems being scanned; others do not require credentials, and the scans can be done anonymously. If we want an accurate security assessment of an operating system, we must supply privileged credentials. This will allow the vulnerability report to list settings such as the following:

- Installed software version

- Local services

- Vulnerabilities in registry files

- Local filesystem information

- Patch levels

Tenable Nessus is a popular vulnerability scanner, used by government agencies and commercial organizations. In their configuration requirements, they stipulate the use of an administrator account or root (for non-Windows).

If we run a scan without credentials, we will see the report from an attacker's perspective. It will lack detail, as there is no local logon available.

Agent-based/server-based

Some vulnerability assessment solutions require an agent to be installed on the end devices. This approach is described as pull technology: the scan is performed on the end device, and the agent sends the results back to the server-based management console. Other solutions deploy no agents (agentless scanning); instead, the server must push out requests to gather information from the end devices. In most cases, agent-based assessments take some of the workload off the server and can reduce network traffic.

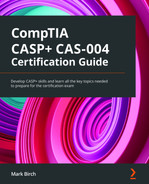

Criticality ranking

When we run a vulnerability assessment, it is important that the output report presents its findings in meaningful terms, so that we can identify the most critical vulnerabilities and respond accordingly. Figure 6.1 shows two vulnerability scans. The first scan reports highly critical vulnerabilities related to a Wireshark installation, which the report indicates can be remediated with a software update. The second scan shows that these vulnerabilities have been remediated:

Figure 6.1 – Critical ranked vulnerabilities

Other vulnerabilities may require configuration workarounds or the removal of applications or services.

Active versus passive scans

Active scanning requires interaction between the reporting/management station and the endpoints. Passive scanning collects logged data or observed traffic and performs analysis on it. On certain networks, such as Operational Technology (OT) networks, we may not want to overwhelm the network or endpoints with additional reporting and the network traffic it generates. With passive scanning, the goal is to connect to a read-only Switched Port Analyzer (SPAN) port and analyze network traffic without injecting any data.

An alternative approach is to use active scanning, in an effort to generate more accurate reporting. A good example of active scanning would be SCAP.

Security Content Automation Protocol (SCAP)

SCAP is an open standard, maintained by the US National Institute of Standards and Technology (NIST), and is used by many vendors within the industry to support a common security reporting language. It is supported by the National Vulnerability Database (NVD), a US government repository maintained by NIST, which produces content that can be used within a SCAP scanner.

It is used extensively within US government departments, including the Department of Defense (DoD), and meets Federal Information Security Management Act (FISMA) requirements.

Extensible Configuration Checklist Description Format (XCCDF)

XCCDF specifies a format for configuration checklists. These are the checklists that the SCAP scanner (the vulnerability assessment tool) will use, and they are written in XML. Within the US government and DoD, these files are more commonly known as Security Technical Implementation Guides (STIGs). More information can be obtained from the following public site: https://public.cyber.mil/stigs/.

Important Note

XCCDF does not contain commands to perform a scan but relies on the Open Vulnerability and Assessment Language (OVAL) to process the requirements.
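To make the XCCDF format more concrete, the following Python sketch parses a small, hand-written XCCDF 1.2 `TestResult` fragment and lists the rules that failed. The sample XML is a simplified illustration (results produced by a real SCAP scanner contain many more elements and attributes), and the rule IDs are hypothetical.

```python
import xml.etree.ElementTree as ET

# XCCDF 1.2 namespace used by the checklist and result documents.
XCCDF = "http://checklists.nist.gov/xccdf/1.2"
NS = {"xccdf": XCCDF}

# Simplified, hypothetical TestResult fragment (rule IDs are illustrative only).
SAMPLE_RESULTS = """<?xml version="1.0"?>
<TestResult xmlns="http://checklists.nist.gov/xccdf/1.2" id="xccdf_org.example_testresult_demo">
  <rule-result idref="xccdf_org.example_rule_password_min_length" severity="medium">
    <result>fail</result>
  </rule-result>
  <rule-result idref="xccdf_org.example_rule_disable_guest_account" severity="high">
    <result>pass</result>
  </rule-result>
  <rule-result idref="xccdf_org.example_rule_audit_logon_events" severity="low">
    <result>fail</result>
  </rule-result>
</TestResult>"""

def failed_rules(xml_text):
    """Return (rule_id, severity) pairs for every rule-result whose result is 'fail'."""
    root = ET.fromstring(xml_text)
    failures = []
    for rr in root.iter(f"{{{XCCDF}}}rule-result"):
        result = rr.find("xccdf:result", NS)
        if result is not None and result.text == "fail":
            failures.append((rr.get("idref"), rr.get("severity")))
    return failures

for rule_id, severity in failed_rules(SAMPLE_RESULTS):
    print(f"{severity:>6}  {rule_id}")
```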

Open Vulnerability and Assessment Language (OVAL)

OVAL is an open international standard and is free for public use. It enables vendors to create output that is consistent: a report produced by the Nessus tool can be compared directly with the output created by another vendor's OVAL-compliant tool.

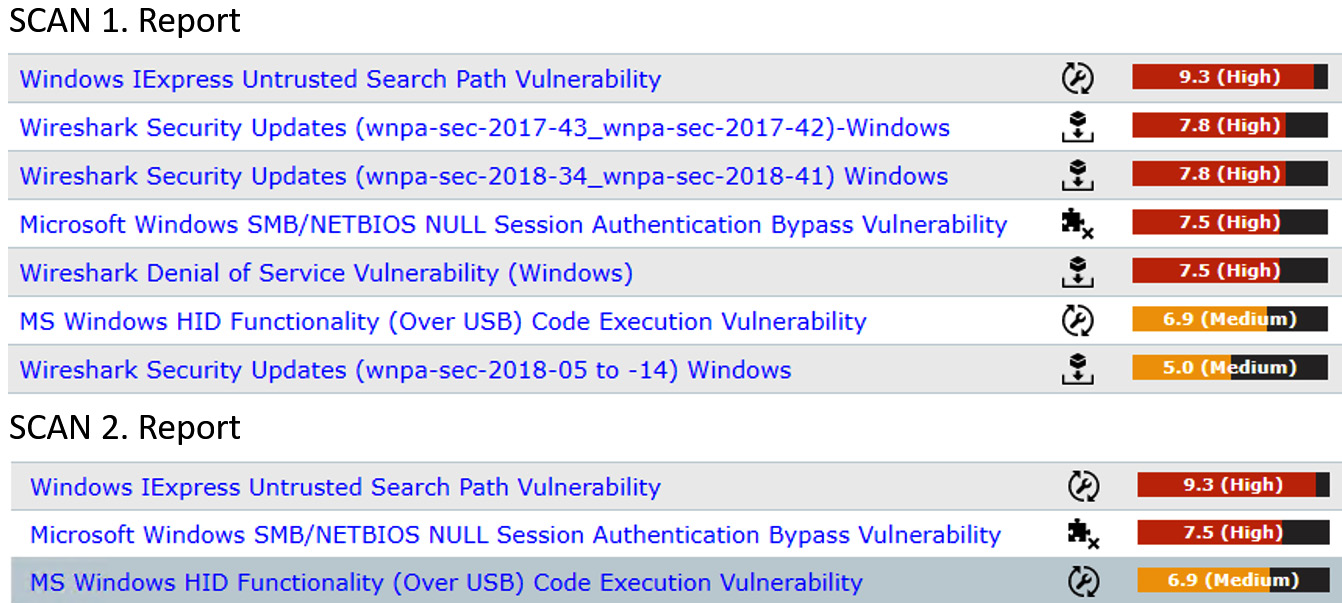

Common Platform Enumeration (CPE)

CPE is used to identify different operating system types, devices, and applications. This allows the vulnerability scanning tool to make automated decisions about which vulnerabilities to search for. For example, if the system is identified as Linux running a common web application service, the tool only needs to check for vulnerabilities affecting those installed products.

Figure 6.2 shows a recent scan that has discovered 16 CPE items:

Figure 6.2 – List of discovered CPE items

When you begin a SCAP scan, the scanner will use this information to apply the correct templates/audit input for the scan. In the Figure 6.2 example, the tool will now look for any vulnerabilities associated with the Windows Server operating system and its installed components, including Remote Desktop Protocol (RDP), Wireshark, and Internet Explorer 11, among others.
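The idea behind CPE-driven scanning can be sketched in Python: split CPE 2.3 formatted strings into their named components, then keep only the discovered items that match a list of vulnerable-product patterns. This sketch deliberately ignores CPE escaping rules (such as `\:` inside a value) and the full CPE name-matching specification, treating only `*` as a wildcard; the discovered inventory and the vulnerable-product list are hypothetical examples.

```python
# CPE 2.3 formatted-string attribute names, in order, after the "cpe:2.3" prefix.
CPE_FIELDS = ["part", "vendor", "product", "version", "update", "edition",
              "language", "sw_edition", "target_sw", "target_hw", "other"]

def parse_cpe23(cpe):
    """Split a CPE 2.3 formatted string into a dict (assumes no escaped colons)."""
    pieces = cpe.split(":")
    if len(pieces) != 13 or pieces[:2] != ["cpe", "2.3"]:
        raise ValueError(f"not a CPE 2.3 formatted string: {cpe!r}")
    return dict(zip(CPE_FIELDS, pieces[2:]))

def matches(candidate, pattern):
    """True if every field of pattern is '*' (any value) or equals the candidate's field."""
    c, p = parse_cpe23(candidate), parse_cpe23(pattern)
    return all(p[f] == "*" or p[f] == c[f] for f in CPE_FIELDS)

def applicable_items(discovered, vulnerable_patterns):
    """Return the discovered CPEs that match at least one vulnerable-product pattern."""
    return [cpe for cpe in discovered
            if any(matches(cpe, pattern) for pattern in vulnerable_patterns)]

# Hypothetical scan inventory and vulnerable-product patterns.
discovered = [
    "cpe:2.3:o:microsoft:windows_server_2016:-:*:*:*:*:*:*:*",
    "cpe:2.3:a:wireshark:wireshark:3.4.0:*:*:*:*:*:*:*",
    "cpe:2.3:a:microsoft:internet_explorer:11:*:*:*:*:*:*:*",
]
vulnerable = [
    "cpe:2.3:a:wireshark:wireshark:3.4.0:*:*:*:*:*:*:*",
    "cpe:2.3:a:microsoft:edge:*:*:*:*:*:*:*:*",
]

for cpe in applicable_items(discovered, vulnerable):
    print("check vulnerabilities for:", cpe)
```

Only the products actually found on the host are carried forward, which is what lets a scanner skip checks for software that is not installed.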

Common Vulnerabilities and Exposures (CVE)

CVE identifiers are assigned through the CVE program run by MITRE, and each entry is also published in the NVD. Together, these records give information about the vulnerability, including its severity (the NVD adds CVSS scores) and how to remediate it. The remediation guidance will often be a link to a vendor site for a patch or resolution.
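CVE identifiers follow a fixed pattern: the prefix `CVE`, the year, and a sequence number of at least four digits. A small Python sketch that validates and splits an identifier, which can help when normalizing identifiers copied from scan reports (where typos are common), might look like this:

```python
import re

# CVE-YYYY-NNNN..., where the sequence number has four or more digits.
CVE_PATTERN = re.compile(r"CVE-(\d{4})-(\d{4,})")

def parse_cve_id(text):
    """Return (year, sequence) for a well-formed CVE ID, or raise ValueError."""
    match = CVE_PATTERN.fullmatch(text.strip().upper())
    if match is None:
        raise ValueError(f"not a valid CVE identifier: {text!r}")
    return int(match.group(1)), int(match.group(2))

print(parse_cve_id("CVE-2012-1516"))  # (2012, 1516)
```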

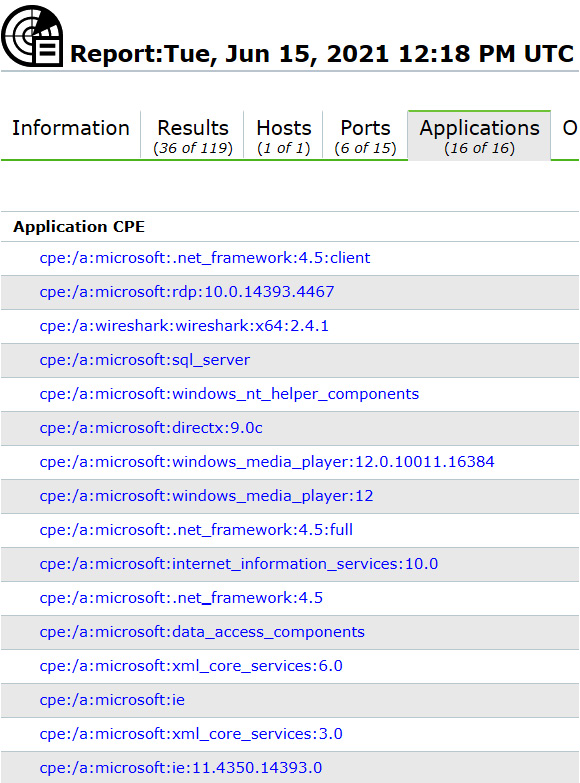

Common Vulnerability Scoring System (CVSS)

CVSS is used to calculate the severity of vulnerabilities: it produces a numerical score from 0.0 to 10.0, which maps to a qualitative rating (None, Low, Medium, High, or Critical). At the time of writing, the current scoring system is version 3.1. There is a CVSS calculator available at the following link, https://www.first.org/cvss/calculator/3.1, to enable security professionals to view the metrics that are used. See Figure 6.3 for an example CVSS calculator:

Figure 6.3 – CVSS calculator
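The calculator applies a published formula to the selected metric values. The following Python sketch implements the CVSS v3.1 base score equations, using the metric weights and the Roundup function given in the FIRST v3.1 specification, and maps the result to the qualitative rating. It covers only the base metrics (not temporal or environmental metrics), so it is best treated as an illustration and cross-checked against the official calculator.

```python
import math

# CVSS v3.1 base metric weights.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}   # PR weights differ when Scope is Changed
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}

def roundup(value):
    """CVSS v3.1 Roundup: smallest number with one decimal place >= value."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0

def base_score(vector):
    """Compute the base score from a vector such as 'CVSS:3.1/AV:N/AC:L/...'."""
    parts = vector.split("/")
    if parts[0] != "CVSS:3.1":
        raise ValueError("expected a CVSS:3.1 vector")
    m = dict(part.split(":") for part in parts[1:])
    changed = m["S"] == "C"
    # Impact sub-score (ISS) combines confidentiality, integrity, and availability.
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    pr = (PR_CHANGED if changed else PR_UNCHANGED)[m["PR"]]
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * pr * UI[m["UI"]]
    if impact <= 0:
        return 0.0
    if changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))

def severity(score):
    """Map a base score to the CVSS v3.x qualitative severity rating."""
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"

score = base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H")
print(score, severity(score))  # 9.8 Critical
```

A network-reachable, low-complexity vulnerability that needs no privileges or user interaction and fully compromises confidentiality, integrity, and availability lands in the Critical band, which is why such findings are remediated first.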

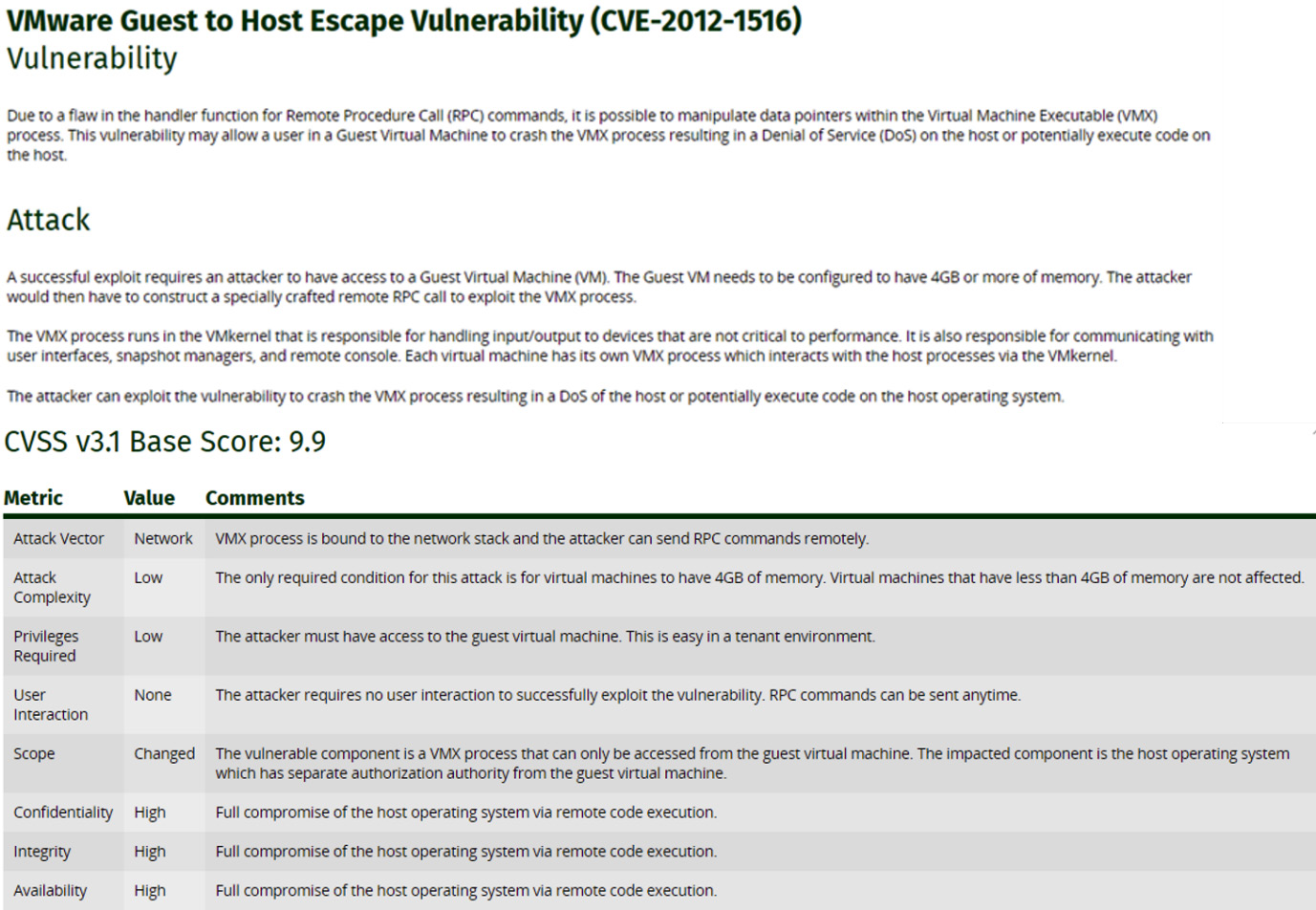

An example of a critically rated vulnerability is shown in Figure 6.4:

Figure 6.4 – Critical vulnerability

Important Note

CVE-2012-1516 documents a VM escape vulnerability, which could severely impact a modern data center. This would be a top priority to remediate.

The accepted approach is to deal with critically rated vulnerabilities first.

Common Configuration Enumeration (CCE)

This is a set of identifiers to allow configuration items to be easily verified. Currently, the standard is designed only for software-configurable items. This standard allows for templates to be created using a standard format. A common set of identifiers is published for the Microsoft Windows operating system. This allows for the creation of template files to be used with SCAP scanners.

Asset Reporting Format (ARF)

ARF is a standard report format, based on XML templates. It allows for the results of a scan to be formatted in a SCAP-compliant way.

Now that we are aware of the standards and tools available, we should consider who will be responsible for the security assessment.

Self-assessment versus third-party vendor assessment

Self-assessments may be acceptable in situations where internal reporting is the goal. In all cases, governance, risk, and compliance should involve continuous assessment, but sometimes we need third-party verification. For example, to operate as a Certificate Authority (CA), you would require third-party audit reports. To operate as a cloud data center, you would typically require third-party verification, as your customers may only be able to work with suppliers who hold the relevant accreditation (US government agencies only work with cloud providers who meet FedRAMP requirements). PCI DSS requires quarterly external vulnerability scans performed by an Approved Scanning Vendor (ASV), and these scans cannot be performed internally; it also requires at least annual penetration testing by a qualified tester who is organizationally independent of the systems being tested.

Patch management

Patch management is critical in an enterprise. End devices, servers, and appliances all require constant updating, as they run operating systems, services, applications, and embedded firmware. Patches can improve functionality and, more importantly, mitigate security vulnerabilities; unpatched vulnerabilities can ultimately cause availability issues for operations. Patches are first tested by the vendor before being made available to customers. Occasionally, a patch can break a working system, in which case the benefit of the patch is outweighed by the need to keep the system functioning. Therefore, an enterprise should thoroughly test patches in a non-production environment before deploying them into a production environment.
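A basic building block of patch compliance checking is comparing each installed version against the first version that contains the fix. The Python sketch below does this for simple dotted numeric version strings; the host names, versions, and fixed release are hypothetical, and real products often use version schemes (pre-release tags, build suffixes) that need a proper version parser.

```python
def parse_version(text):
    """Turn a dotted numeric version such as '3.4.2' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))

def needs_patch(installed, fixed_in):
    """True if the installed version is older than the first fixed version."""
    a, b = parse_version(installed), parse_version(fixed_in)
    # Pad the shorter tuple with zeros so that '3.4' compares equal to '3.4.0'.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a < b

# Hypothetical inventory: host -> installed version of one product.
inventory = {"ws-01": "3.4.0", "ws-02": "3.4.2", "ws-03": "3.6"}
FIXED_IN = "3.4.2"  # hypothetical first patched release

for host, version in sorted(inventory.items()):
    status = "PATCH REQUIRED" if needs_patch(version, FIXED_IN) else "ok"
    print(f"{host}: {version} -> {status}")
```

Comparing integer tuples rather than raw strings avoids the classic mistake of treating "3.10" as older than "3.9".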

There are many other sources for security-related information.

Information sources

While it is important to use automation where possible to produce reports and generate reporting dashboards, it is also important to consider alternative resources to maintain a positive security posture. These resources will provide additional vendor-specific information and industry-related information.

Advisories

It is important for an organization to monitor security advisories, which are published by vendors or third parties. An advisory is posted as an update when a new vulnerability is discovered, such as a zero-day exploit, making advisories an important mechanism for informing security professionals about new threats. We can use advisories to access advice and mitigation for new and existing threats. Some example site URLs are listed as follows:

- Cisco: https://tools.cisco.com/security/center/publicationListing.x

- VMware: https://www.vmware.com/security/advisories.html

- Microsoft: https://www.microsoft.com/en-us/msrc/technical-security-notifications?rtc=1

- Hewlett Packard: https://www.hpe.com/us/en/services/security-vulnerability.html

Tip

This would be a good choice for recent vulnerabilities that may not have a CVE assigned to them, such as a zero-day exploit.

Bulletins

Bulletins are another way for vendors to publish security-related issues and vulnerabilities that may affect their customers. Many vendors support subscriptions through a Really Simple Syndication (RSS) feed. Examples of vendor security bulletins are listed as follows:

- Amazon Web Services (AWS): https://tinyurl.com/awsbulletins

- Google Cloud: https://cloud.google.com/compute/docs/security-bulletins
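Because bulletin feeds are plain XML, they are easy to monitor automatically. The Python sketch below parses an RSS 2.0 document and filters items by a keyword; the feed content is a hypothetical stand-in, and in practice the feed bytes would be downloaded from the vendor's published feed URL (for example, with `urllib.request.urlopen`) before being passed to `bulletin_items`.

```python
import xml.etree.ElementTree as ET

# Hypothetical RSS 2.0 feed standing in for a vendor's security bulletin feed.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example Vendor Security Bulletins</title>
    <item>
      <title>Critical: remote code execution in Example Server</title>
      <link>https://vendor.example/bulletins/0001</link>
    </item>
    <item>
      <title>Moderate: information disclosure in Example Client</title>
      <link>https://vendor.example/bulletins/0002</link>
    </item>
  </channel>
</rss>"""

def bulletin_items(feed_xml):
    """Return (title, link) pairs for each <item> in an RSS 2.0 document."""
    root = ET.fromstring(feed_xml)
    return [(item.findtext("title"), item.findtext("link")) for item in root.iter("item")]

def filter_bulletins(items, keyword):
    """Keep only items whose title contains keyword (case-insensitive)."""
    return [(title, link) for title, link in items if keyword.lower() in title.lower()]

for title, link in filter_bulletins(bulletin_items(SAMPLE_FEED), "critical"):
    print(title, link)
```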

Vendor websites

Vendor websites can be useful resources for security-related information. In addition to the previously mentioned (advisories and bulletins), additional security-related information, tools, whitepapers, and configuration guides may be available. Microsoft is one of many vendors who offer their customers access to extensive security guidance and best practices: https://docs.microsoft.com/en-us/security/.

Information Sharing and Analysis Centers (ISACs)

ISACs enable organizations that provide critical infrastructure to share information about security issues and cyber threats; it is a global initiative. This initiative also allows information to be shared between public and private organizations. For more information concerning this initiative, go to https://www.nationalisacs.org/.

News reports

News reports can be a useful resource to heighten people's awareness of current threat levels and threat actors. In May/June 2021, there were prominent news reports about recent outbreaks of crypto-malware and ransomware. The following cases made headline news:

- 14 May 2021: Colonial Pipeline, the US fuel pipeline operator, reportedly paid criminals $5 million to regain access to its systems and resume fuel deliveries. See details at the following link: https://tinyurl.com/colonialransomware.

- 10 June 2021: JBS, the world's largest meat processing company, paid out $11 million in bitcoin to regain control of their IT services. Follow the story at this link: https://tinyurl.com/jcbransomware.

It is important to be aware that ransomware is still one of the biggest threats facing an organization's information systems.

Testing methods

There are various methods to search for vulnerabilities within an enterprise, depending on the scope of the assignment. Vulnerability assessments are performed by both security professionals, searching for vulnerabilities, and attackers threatening our networks (searching for the same vulnerabilities).

Static analysis

Static analysis is generally used against source code or uncompiled program code. Because it requires access to the source code, it is more difficult for an attacker to perform. During a penetration test, the tester would be given the source code to carry out this type of analysis. Static Application Security Testing (SAST) is an important process for mitigating the risks of vulnerable code.
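As a toy illustration of how a SAST tool inspects code without running it, the Python sketch below parses source text with the standard `ast` module and flags direct calls to `eval` and `exec`, two functions commonly treated as risky when given untrusted input. The rule set is a hypothetical minimum; real SAST tools apply hundreds of rules and track how data flows through the program.

```python
import ast

RISKY_CALLS = {"eval", "exec"}  # illustrative rule set only

def find_risky_calls(source, filename="<source>"):
    """Return sorted (line, name) pairs for each direct call to a function in RISKY_CALLS."""
    tree = ast.parse(source, filename=filename)   # parsed, never executed
    hits = []
    for node in ast.walk(tree):
        if (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
                and node.func.id in RISKY_CALLS):
            hits.append((node.lineno, node.func.id))
    return sorted(hits)

# Code under review (hypothetical); it is analyzed as text, not run.
SAMPLE = '''
user_input = input("value: ")
result = eval(user_input)   # evaluates attacker-controlled text
print(result)
'''

for line, name in find_risky_calls(SAMPLE):
    print(f"line {line}: call to {name}()")
```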

Dynamic analysis

Dynamic analysis is performed against systems that are running. For software, this means the code has already been compiled, and we assess its behavior at runtime using dynamic tools, such as Dynamic Application Security Testing (DAST) tools.

Side-channel analysis

Side-channel analysis is targeted against measurable outputs. It is not an attack against the code itself or the actual encryption technology. It requires the monitoring of signals, such as CPU cycles, power spikes, and noise going across a network. The electromagnetic signals can be analyzed using powerful computing technologies and artificial intelligence. Using these techniques, it may be possible to recover the encryption key that is being used in the transmission. An early example of attacks using side-channel analysis occurred in the 1980s, when IBM equipment was targeted by the Soviet Union. Listening devices were placed inside electronic typewriters. The goal seems to have been to monitor the electrical noise as the carriage struck the paper, with each character having a particular distinctive signature. See the following URL for more information: https://tinyurl.com/ibmgolfball.
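Side channels are not limited to hardware. A common software example is a timing side channel: a comparison that stops at the first mismatched byte takes longer the more leading bytes an attacker guesses correctly, so response times can leak a secret. The Python sketch below contrasts such an early-exit comparison with the standard library's `hmac.compare_digest`, which is designed to avoid content-dependent timing. It illustrates the concept only and does not attempt to measure timings; the secret value is hypothetical.

```python
import hmac

def leaky_equals(secret: bytes, guess: bytes) -> bool:
    """Early-exit comparison: running time depends on how many leading bytes match."""
    if len(secret) != len(guess):
        return False
    for a, b in zip(secret, guess):
        if a != b:
            return False  # returns sooner for a worse guess, leaking information
    return True

def safe_equals(secret: bytes, guess: bytes) -> bool:
    """Comparison designed so its timing does not depend on where the inputs differ."""
    return hmac.compare_digest(secret, guess)

secret = b"example-api-token"  # hypothetical secret
print(leaky_equals(secret, b"example-api-tokex"), safe_equals(secret, b"example-api-tokex"))
```

Both functions return the same answers; the difference lies only in how much their running time reveals, which is exactly what side-channel analysis exploits.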

Reverse engineering may be necessary when we are attempting to understand what a system or piece of code is doing. If we do not have the original source code, it is possible to run an executable in a sandbox environment and monitor its actions. Some code can be decompiled fairly readily; Java bytecode is one example, and there are many online tools available to reverse-engineer Java code, such as https://devtoolzone.com/decompiler/java. Code compiled to native machine code, such as compiled C or C++ programs, is much harder to decompile.

If the code is embedded on a chip, then we may need to monitor activities in a sandbox environment. Monitor inputs and outputs on the network and compile logs of activity.

Wireless vulnerability scan

Wireless vulnerability scanning is very important, as many organizations have a wireless capability. Some vulnerabilities relate to signal strength: a signal that is too strong can make the network visible outside the physical boundary of the organization.

Security professionals need to secure wireless networks to ensure they are not vulnerable to unwanted intruders. Insecure protocols must not be used on our wireless networks, so that credentials cannot be seen in clear text, and administration must not take place across wireless networks in an unsecured format. Older networks may have been configured to use Wired Equivalent Privacy (WEP) encryption, which is easily cracked, so attackers actively look for WEP networks in order to crack the key.

Software Composition Analysis (SCA)

It is estimated that around 90% of developed software contains open source components (compared with around 10% in 2000). Open source software may be included in developed software through code reuse; it can also be included when a company purchases Commercial Off-the-Shelf (COTS) products. Third-party development, third-party libraries, and developers accessing code repositories may be other sources of open source software.

SCA tools analyze the open source code in use in your environment. It is important to have visibility of any third-party code that is included in your production systems, and SCA tools are designed to detect third-party code wherever it is used.

As well as searching for third-party code artifacts, SCA will also report on licensing issues. It is important to understand the restrictions that may affect the distribution or use of software that contains open source code. A license may, for example, allow use only in non-commercial projects, or require you to distribute your own software freely under the same terms.

One of the biggest risks is introducing vulnerabilities through the use of third-party code. SCA can produce reports, allowing for remediation. For more information, see the following link: https://tinyurl.com/scaTools/.
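At its core, an SCA report joins a component inventory against two lookup tables: known-vulnerable versions and licenses that need review. In the Python sketch below, every component name, version, advisory label, and license policy is hypothetical; commercial SCA tools build the inventory automatically from manifests, lock files, and binaries, and draw on curated vulnerability databases.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Component:
    name: str
    version: str
    license: str

# Hypothetical components found in a build.
INVENTORY = [
    Component("examplelib", "1.2.0", "MIT"),
    Component("parserkit", "0.9.1", "GPL-3.0-only"),
    Component("netutils", "2.0.5", "Apache-2.0"),
]
# Hypothetical lookup tables that an SCA tool would maintain.
KNOWN_VULNERABLE = {("examplelib", "1.2.0"): "EXAMPLE-ADVISORY-1"}
LICENSES_NEEDING_REVIEW = {"GPL-3.0-only", "AGPL-3.0-only"}  # e.g. copyleft terms

def sca_report(components):
    """Return (component, issue) findings for vulnerable versions and flagged licenses."""
    findings = []
    for c in components:
        label = f"{c.name} {c.version}"
        advisory = KNOWN_VULNERABLE.get((c.name, c.version))
        if advisory:
            findings.append((label, f"vulnerable ({advisory})"))
        if c.license in LICENSES_NEEDING_REVIEW:
            findings.append((label, f"license review ({c.license})"))
    return findings

for component, issue in sca_report(INVENTORY):
    print(f"{component}: {issue}")
```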

Fuzz testing

Fuzz testing is used to test input validation and error handling in compiled software. Fuzzing sends pseudo-random inputs to the application in an attempt to create errors within the running code. Programming a fuzzer and interpreting the errors it generates requires a certain amount of expertise. It is now common to incorporate automated fuzz testing into the DevOps pipeline. Google uses a tool called ClusterFuzz to automate fuzz testing at scale and log the errors it finds.
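The basic fuzzing loop can be sketched in a few lines of Python: generate pseudo-random inputs, feed them to the code under test, and record any input that triggers an unexpected exception. The target `parse_record` is a hypothetical, deliberately buggy parser; production fuzzers, such as those run by ClusterFuzz, add coverage feedback and input mutation rather than relying on purely random data.

```python
import random

def parse_record(data: bytes) -> tuple:
    """Hypothetical parser: first byte is a length, followed by that many payload bytes."""
    if not data:
        raise ValueError("empty input")      # documented, expected error
    length = data[0]
    payload = data[1:1 + length]
    # Bug: no check that the payload is as long as the declared length.
    checksum = payload[length - 1]           # IndexError on short or zero-length payloads
    return length, payload, checksum

def fuzz(target, iterations=1000, max_len=8, seed=1234):
    """Call target with random byte strings; collect inputs that raise unexpected errors."""
    rng = random.Random(seed)                # fixed seed makes findings reproducible
    crashes = []
    for _ in range(iterations):
        data = bytes(rng.randrange(256) for _ in range(rng.randrange(max_len + 1)))
        try:
            target(data)
        except ValueError:
            pass                             # expected error, not a finding
        except Exception as exc:             # anything else is a potential defect
            crashes.append((data, type(exc).__name__))
    return crashes

crashes = fuzz(parse_record)
if crashes:
    print(f"{len(crashes)} crashing inputs found; first: {crashes[0]}")
```

Separating expected errors from unexpected ones is the key design choice: the fuzzer only reports exceptions that the code's own error handling did not anticipate.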

While it is vitally important to perform regular vulnerability assessments against enterprise networks and systems, we will also need independent verification of the security posture of networks, systems, software, and users.

Penetration testing

Penetration testing can be performed by in-house teams, but regulatory compliance may dictate that independent verification is obtained. It is important to choose a pen testing team that is qualified and trustworthy. The National Cyber Security Centre (NCSC) recommends that United Kingdom government agencies choose a penetration tester that holds one of the following accreditations:

- CREST: https://www.crest-approved.org/

- Tigerscheme: https://www.tigerscheme.org/

- Cyber Scheme: https://www.thecyberscheme.org/

It is important that penetration testers use an industry-standard framework, and there are many to choose from. One very useful resource is the Open Web Application Security Project (OWASP), which offers guidance that allows organizations to select testing criteria to meet regulatory compliance.

One of the popular standards is the Penetration Testing Execution Standard (PTES); more information can be found at the following link: http://www.pentest-standard.org/index.php/Exploitation. This standard covers seven steps:

- Pre-engagement interactions

- Intelligence gathering

- Threat modeling

- Vulnerability analysis

- Exploitation

- Post-exploitation

- Reporting

The first step is pre-engagement, where discussions need to be held between the penetration testing representative and the customer representative.

Requirements

When an organization is preparing for security testing, for the report to have value, it is important to understand the exact reasons for the engagement. Are we looking to prepare for regulatory compliance? Perhaps we are testing the security awareness of our employees?

Box testing

When considering the requirements of the assessment, it is worth considering how realistic the assessment will be in terms of simulating an external attack from a highly skilled and motivated adversary.

White box

White box testing is when the testers have access to all information that is relevant to the engagement. For example, if the penetration testers are testing a web application and the customer wants quick results, the customer would provide the source code and the system design documentation. This is also known as a full-knowledge test.

Gray box

Gray box testing sits between white box and black box testing and may be a good choice when time is limited. For example, providing physical and logical network diagrams allows the testers to skip much of the reconnaissance and footprinting. This is also known as a partial-knowledge test.

Black box

In black box testing, the testers would be given no information, apart from what is publicly available. This is also known as a zero-knowledge test. The customer's goal may be to gain a real insight into how secure the company is with a test that simulates a real-world external attacker.

Scope of work

When preparing for penetration testing, it is important to create a scope of work. This should involve stakeholders in the organization, IT professionals managing the target systems, and representation from the penetration testers. This is all about what will be tested. Some of the issues to be discussed would include the following:

- What is the testing environment?

- Is the test for some type of regulatory compliance?

- How long will the testing take? A clear indication of timelines and costs is important.

- How many systems are involved? It is important to have a clear idea of assets to be tested.

- How should the team proceed after initial exploitation?

- Data handling: Will the team need to actually access sensitive documents (PHI, PII, or IP), or simply prove that it is possible?

- Physical testing: Are we testing physical access?

- What level of access will be required? What permissions are needed?

It is important to agree upon how the testing will proceed.

Rules of engagement

It is important to set clear rules about how the testing will proceed. While the scope is all about what is included in the testing, this is more about the process to deliver the test results. Here are some of the typical issues covered within the rules of engagement:

- Timeline: A clear understanding of when and how long the entire process will take is important.

- Locations: The customer may have multiple physical locations; do we need to gain physical access to all locations, or can we perform remote testing? Is the testing impacted by laws within a specific country?

- Evidence handling: Ensure all customer data is secured.

- Status meetings: Regular meetings to keep the customer informed of progress are important.

- Time of day of testing: It is important to clarify when testing will take place. The customer may want to minimize disruption during regular business hours.

- Invasive versus non-invasive testing: How much disruption is the customer prepared to accept?

- Permission to test: Do not proceed until the documentation is signed off. Permission may also be required from cloud providers that host the target systems.

- Policy: Will the testing violate any corporate or third-party policies?

- Legal: Is the type of testing proposed legal within the country?

Once the rules of engagement are agreed upon and documented, the test can begin. If the rules of engagement are not properly signed off, there could be legal ramifications as you could be considered to be hacking a system.

Post-exploitation

Post-exploitation involves gathering as much information as possible from compromised systems. This phase also involves establishing persistence. It is important to adhere to the rules of engagement already defined with the customer. Common activities could include privilege escalation, data exfiltration, and Denial of Service (DoS). For a comprehensive list of activities, follow this URL: http://www.pentest-standard.org/index.php/Post_Exploitation#Purpose.

Persistence

Persistence is the goal of long-term attacks; for instance, Advanced Persistent Threats (APTs) are long-term compromises of an organization's network. At the time of writing, MITRE lists 19 techniques used for persistence in the ATT&CK Matrix for Enterprise. The full list can be found at the following link: https://attack.mitre.org/tactics/TA0003/. Here are a few of the techniques that are listed:

- Account Manipulation

- Background Intelligent Transfer Service (BITS) Jobs

- Boot or Logon AutoStart Execution

- Boot or Logon Initialization Scripts

- Browser Extensions

- Compromise Client Software Binary

- Create Accounts

Pivoting

Pivoting is when an attacker gains an initial foothold on a compromised system and then moves laterally through the network to larger and more important targets. This is typically achieved by using an existing backdoor, such as a known built-in account. The MITRE ATT&CK Matrix for Enterprise lists nine techniques for lateral movement. The following techniques are typically used for pivoting:

- Exploitation of Remote Services

- Internal Spearphishing

- Lateral Tool Transfer

- Remote Service Session Hijacking

- Remote Services

- Replication Through Removable Media

- Software Deployment Tools

- Taint Shared Content

- Use Alternate Authentication Material

For more detail on these nine methods, follow this URL: https://attack.mitre.org/versions/v9/matrices/enterprise/.

Pivoting allows the tester to move laterally through networks and is an important process for gaining insights into systems on other network segments.

Rescanning for corrections/changes

It is important for management and security professionals to act on the results of penetration testing. Controls should be put in place to remediate the issues, prioritizing those with the highest criticality. We can then rescan or retest to confirm that the controls are effective and that no new issues have been introduced.
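Comparing a rescan with the original results is essentially a set difference. In the Python sketch below, each finding is identified by a (host, finding ID) pair; the hosts and finding IDs are hypothetical, and a real comparison would key on whatever stable identifiers the scanner reports (for example, a plugin ID or CVE).

```python
def compare_scans(before, after):
    """Classify findings as remediated, still open, or newly introduced."""
    before, after = set(before), set(after)
    return {
        "remediated": sorted(before - after),
        "still_open": sorted(before & after),
        "new": sorted(after - before),
    }

# Hypothetical findings as (host, finding ID) pairs.
initial_scan = [("srv01", "VULN-101"), ("srv01", "VULN-102"), ("ws07", "VULN-205")]
rescan = [("srv01", "VULN-102"), ("ws07", "VULN-310")]

for status, items in compare_scans(initial_scan, rescan).items():
    print(status, items)
```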

Security professionals and penetration testing teams will use a variety of tools to test the security posture of networks, systems, and users.

Security tools

There are many tools available to identify and collect security vulnerabilities or to provide a deeper analysis of interactions between systems and services. When penetration testing is being conducted, the scope of the test may mean the team is given zero knowledge of the network. This would be referred to as black box testing. The team would need to deploy tools to enumerate networks and services and use reverse engineering techniques against applications. We will take a look at these tools.

SCAP scanner

A SCAP scanner will be used to report on deviations from a baseline, using input files such as STIGs or other XML baseline configuration files. The SCAP scan will also search for vulnerabilities present, by detecting the operating system and software installed using the CPE standard. Once the information is gathered about installed products, the SCAP scan can now search for known vulnerabilities related to CVEs.

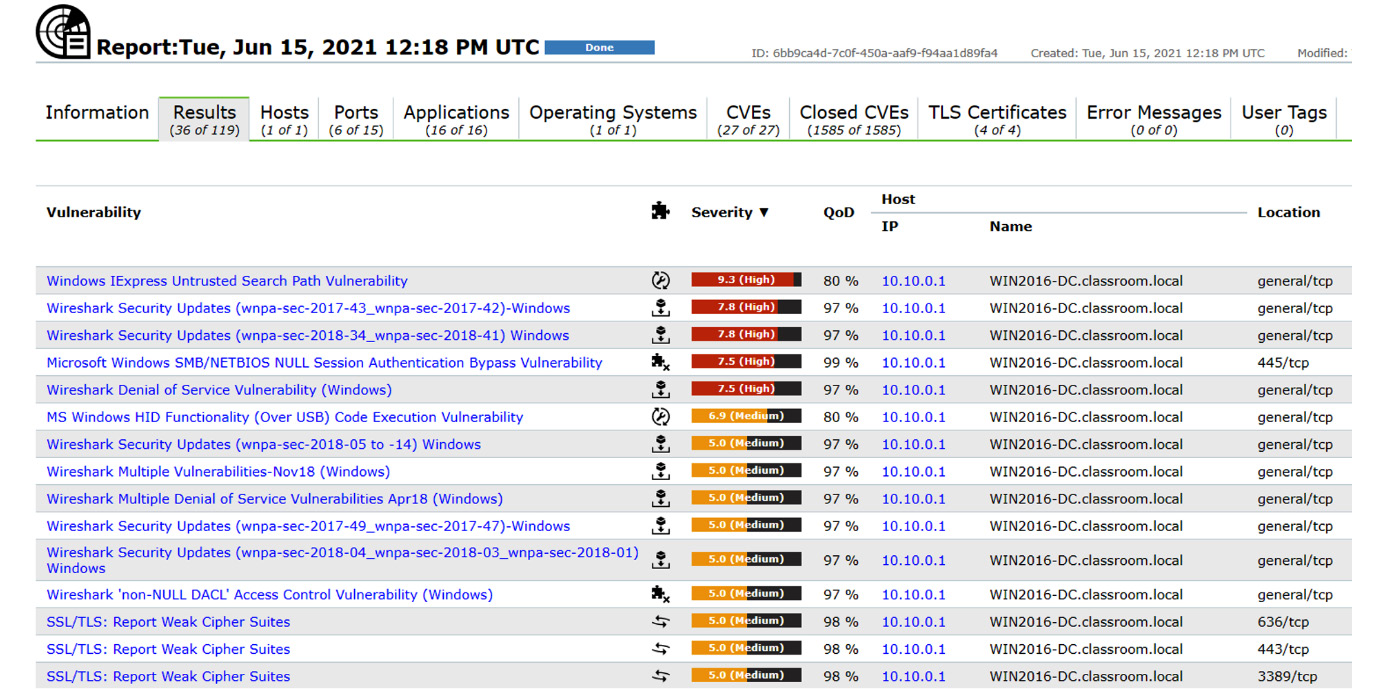

Figure 6.5 shows the results of a SCAP scan with critical vulnerabilities listed first (OpenVAS is an OVAL-compliant SCAP scanner):

Figure 6.5 – OpenVAS SCAP scan

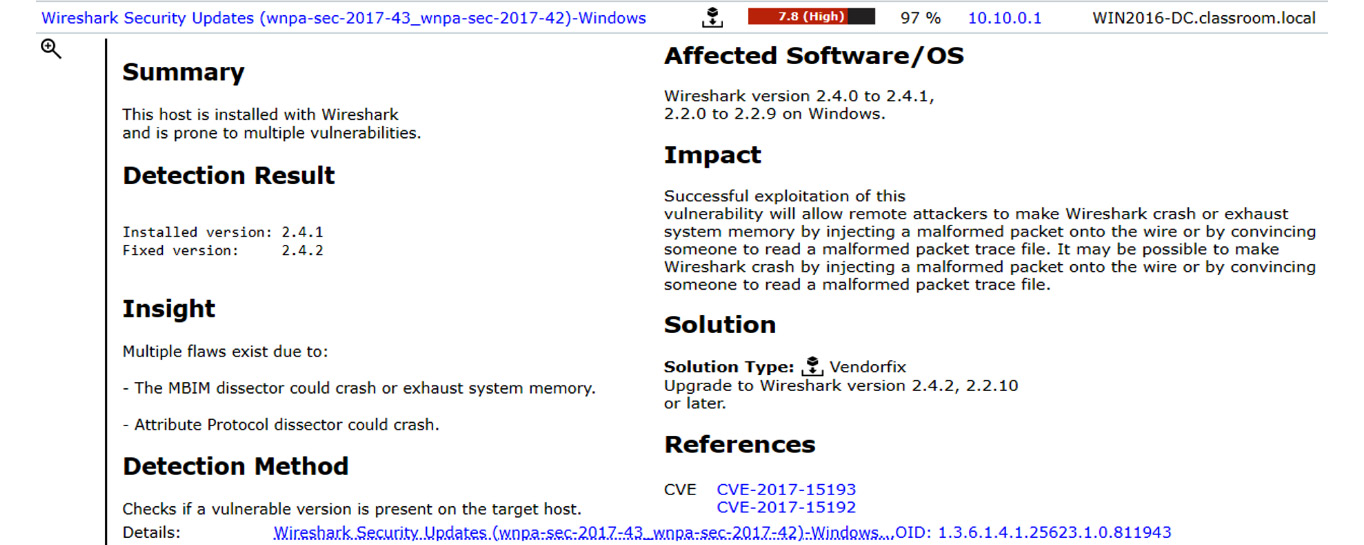

To remediate one of the critically rated vulnerabilities, there is a vendor fix, in the form of a software update; see Figure 6.6:

Figure 6.6 – Vendor fix

For each identified vulnerability, there will be a course of action: a patch to download, a configuration change, a workaround, or uninstallation.

Network traffic analyzer

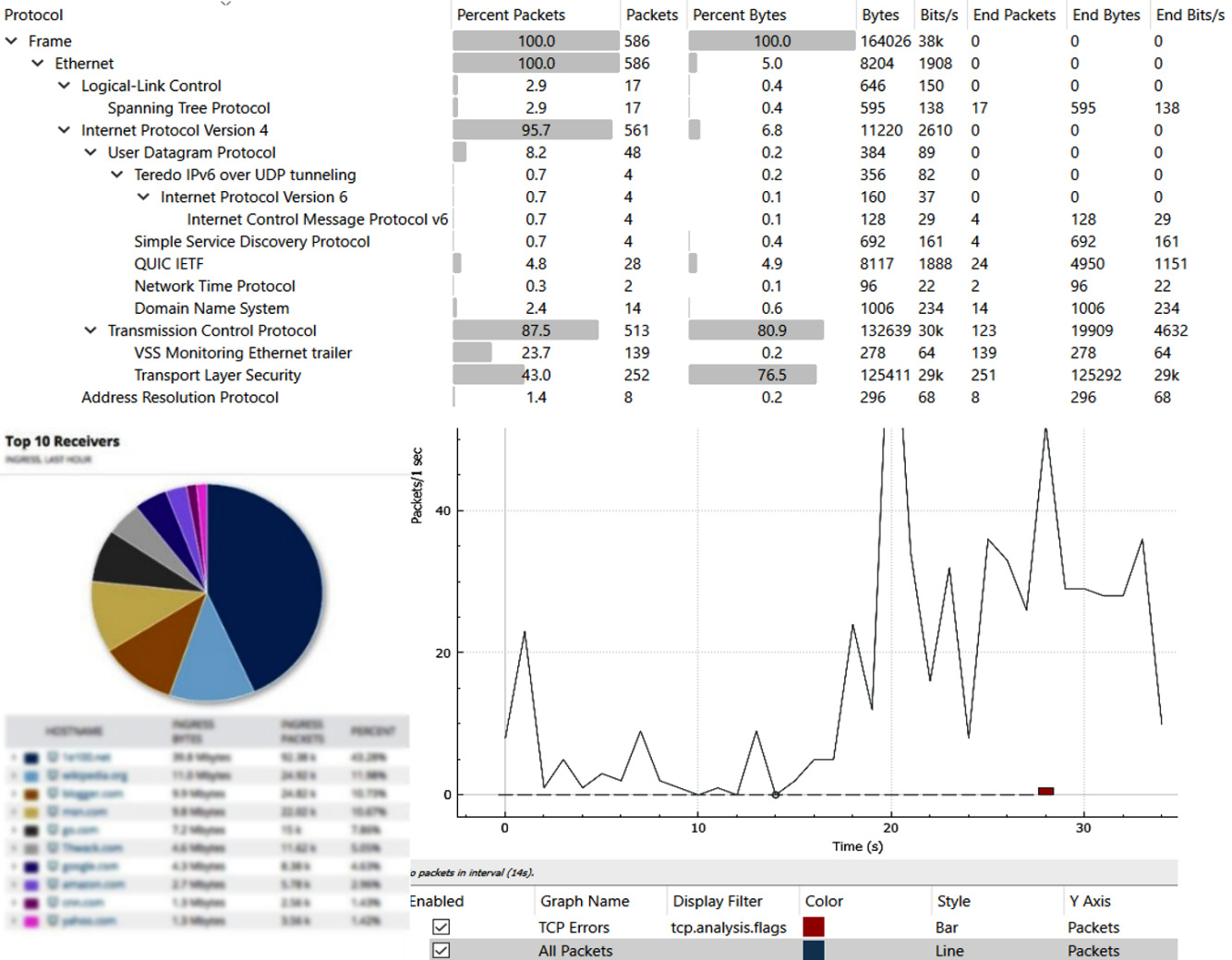

It is important for baseline traffic to be gathered. This allows professionals to understand normal traffic patterns and be alerted to anomalies. These tools can be useful for capacity planning as we can detect trends. Figure 6.7 shows the network traffic flow breakdown:

Figure 6.7 – Network traffic flow
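Baselining can be as simple as learning the normal range of a metric and flagging values that fall far outside it. The Python sketch below computes the mean and standard deviation of hypothetical hourly outbound traffic volumes and flags samples more than three standard deviations from the mean; both the threshold and the data are assumptions, and commercial traffic analyzers use richer models (time of day, per-protocol baselines, and so on).

```python
from statistics import mean, stdev

def build_baseline(samples):
    """Return (mean, standard deviation) of historical observations."""
    return mean(samples), stdev(samples)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the baseline mean."""
    avg, sd = baseline
    return abs(value - avg) > threshold * sd

# Hypothetical hourly outbound traffic volumes (MB) during a normal week.
history = [120, 135, 128, 140, 118, 132, 125, 138, 129, 131]
baseline = build_baseline(history)

for observed in (134, 410):
    flag = "ANOMALY" if is_anomalous(observed, baseline) else "normal"
    print(f"{observed} MB: {flag}")
```

A sudden outbound spike like the second sample is the kind of deviation that could indicate data exfiltration and merits investigation.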

As well as understanding the traffic patterns on the network, it is important to consider the security posture of the devices connected to the network.

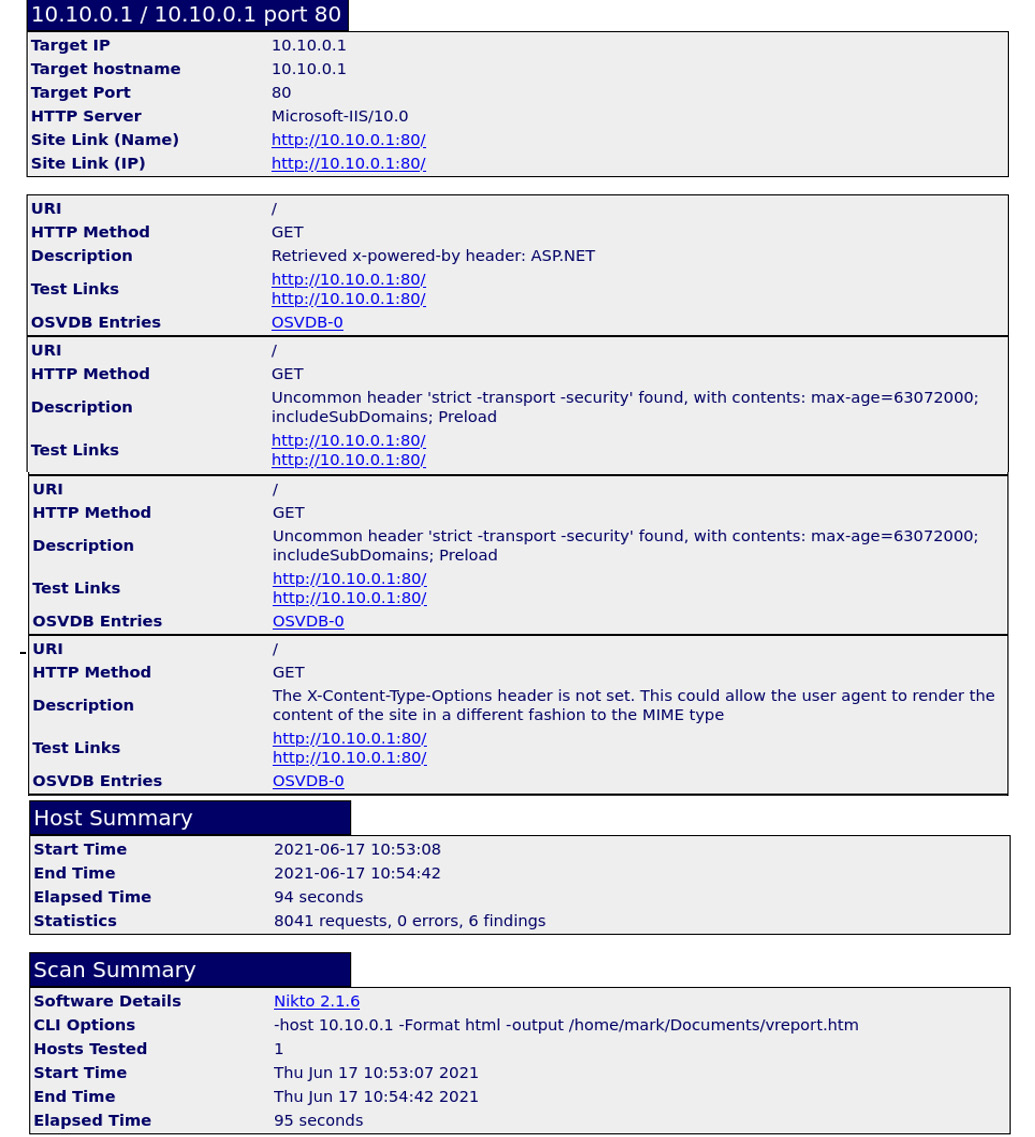

Vulnerability scanner

A vulnerability scanner can be used to detect open listening ports, misconfigured applications, missing security patches, poor security on a web application, and many other types of vulnerability. Figure 6.8 shows a vulnerability scan against a website:

Figure 6.8 – Vulnerability scanner

A vulnerability scan is very important but may not reveal additional information contained within network traffic protocols and payloads.

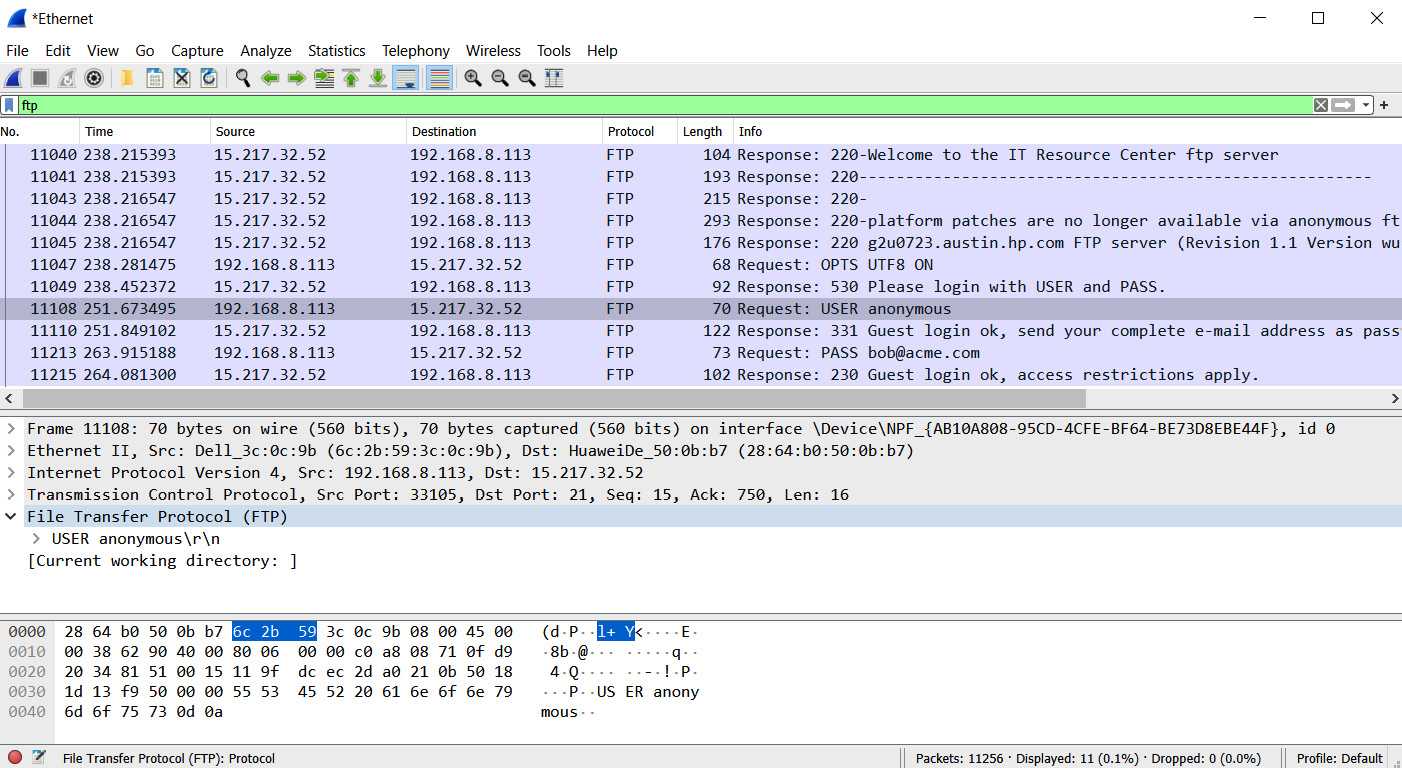

Protocol analyzer

A protocol analyzer is useful when we need to perform Deep Packet Inspection (DPI) on network traffic. tcpdump, TShark, and Wireshark are common implementations of this technology. Network traffic can be captured to a file in a common format (such as pcap) and analyzed within the tool at a later time. We could use this tool to confirm the use of insecure protocols or to detect data exfiltration. Figure 6.9 shows Wireshark in use, with a capture of unsecured FTP traffic:

Figure 6.9 – Wireshark protocol analyzer
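To show what offline analysis of a capture file involves, the Python sketch below reads a classic libpcap capture held in memory and counts the destination TCP ports of IPv4 packets; an unexpected count for port 21 (FTP), for instance, would point to cleartext traffic like that in the figure. It handles only Ethernet captures in the classic pcap format (not pcapng, which is Wireshark's default save format), and `build_sample_pcap` is a hypothetical helper that exists only to generate test traffic. For a real capture, you would pass in the bytes of a file, for example one written with `tcpdump -w`.

```python
import struct
from collections import Counter

ETHERTYPE_IPV4 = 0x0800
IPPROTO_TCP = 6
LINKTYPE_ETHERNET = 1

def read_pcap(data: bytes):
    """Yield the raw bytes of each packet in a classic (libpcap) capture."""
    magic = struct.unpack("<I", data[:4])[0]
    if magic in (0xA1B2C3D4, 0xA1B23C4D):      # microsecond / nanosecond, little-endian
        endian = "<"
    elif magic in (0xD4C3B2A1, 0x4D3CB2A1):    # the same magic numbers, big-endian
        endian = ">"
    else:
        raise ValueError("not a classic pcap file (pcapng is not handled here)")
    if struct.unpack(endian + "I", data[20:24])[0] != LINKTYPE_ETHERNET:
        raise ValueError("only Ethernet captures are handled")
    offset = 24                                 # size of the global header
    while offset + 16 <= len(data):
        _, _, incl_len, _ = struct.unpack(endian + "IIII", data[offset:offset + 16])
        offset += 16                            # skip the per-packet record header
        yield data[offset:offset + incl_len]
        offset += incl_len

def tcp_dst_ports(data: bytes) -> Counter:
    """Count destination TCP ports of IPv4 packets; analysis only, nothing is sent."""
    ports = Counter()
    for frame in read_pcap(data):
        if len(frame) < 34 or struct.unpack("!H", frame[12:14])[0] != ETHERTYPE_IPV4:
            continue                            # too short, or not IPv4
        ip = frame[14:]
        if ip[9] != IPPROTO_TCP:
            continue                            # not TCP
        ihl = (ip[0] & 0x0F) * 4                # IPv4 header length in bytes
        if len(ip) < ihl + 4:
            continue                            # truncated TCP header
        _, dst_port = struct.unpack("!HH", ip[ihl:ihl + 4])
        ports[dst_port] += 1
    return ports

def build_sample_pcap(dst_ports):
    """Hypothetical test data: one minimal Ethernet/IPv4/TCP frame per port."""
    out = struct.pack("<IHHiIII", 0xA1B2C3D4, 2, 4, 0, 0, 65535, LINKTYPE_ETHERNET)
    for port in dst_ports:
        eth = bytes(12) + struct.pack("!H", ETHERTYPE_IPV4)                 # MACs zeroed
        ip = bytes([0x45]) + bytes(8) + bytes([IPPROTO_TCP]) + bytes(10)   # 20-byte header
        tcp = struct.pack("!HH", 50000, port) + bytes(16)                  # ports + rest
        frame = eth + ip + tcp
        out += struct.pack("<IIII", 0, 0, len(frame), len(frame)) + frame
    return out

print(tcp_dst_ports(build_sample_pcap([21, 21, 443])))  # Counter({21: 2, 443: 1})
```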

When the network needs to be enumerated or mapped, a protocol analyzer will not be the most efficient tool. An active scan may be a better choice.

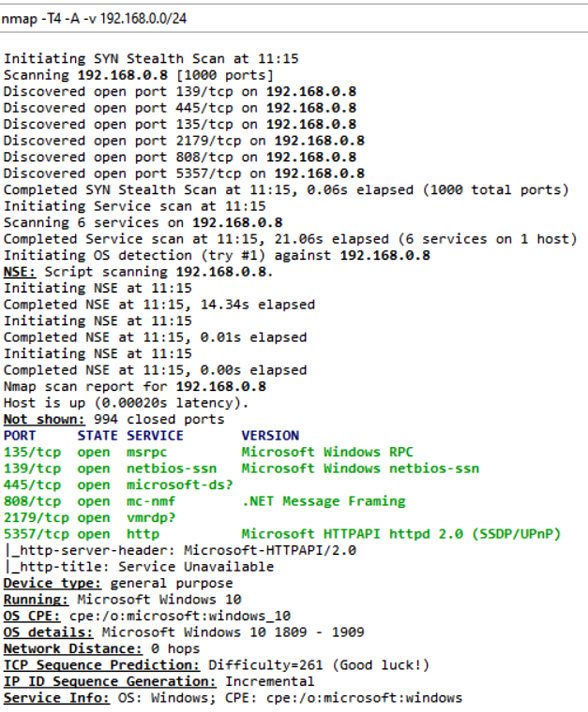

Port scanner

A port scanner will typically perform a ping sweep first, to identify all the live hosts on a network. Each host will then be probed to establish its listening TCP and UDP ports. Port scans can be used to identify unnecessary applications present on a network. They can also be used by hackers to enumerate services on a network segment. A port scan performed by Nmap is shown in Figure 6.10:

Figure 6.10 – NMAP scan
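A TCP connect scan is the simplest form of port probing: attempt a full TCP handshake on each port and record which ones accept. The Python sketch below does only that, with no ping sweep, UDP probing, or service detection, all of which Nmap adds. As with any scan, it should only be run against hosts that fall within the agreed scope and rules of engagement.

```python
import socket

def tcp_connect_scan(host, ports, timeout=0.5):
    """Return the ports on host that accept a full TCP connection."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception.
            if s.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports

if __name__ == "__main__":
    # Scanning your own machine is a safe way to try it out.
    print(tcp_connect_scan("127.0.0.1", [22, 80, 443]))
```

Because it completes the handshake, a connect scan is easy to log on the target; this is one reason dedicated scanners also offer other scan types.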

Once an attacker or penetration tester has identified available hosts and services, they can attempt to gather more information, using additional tools.

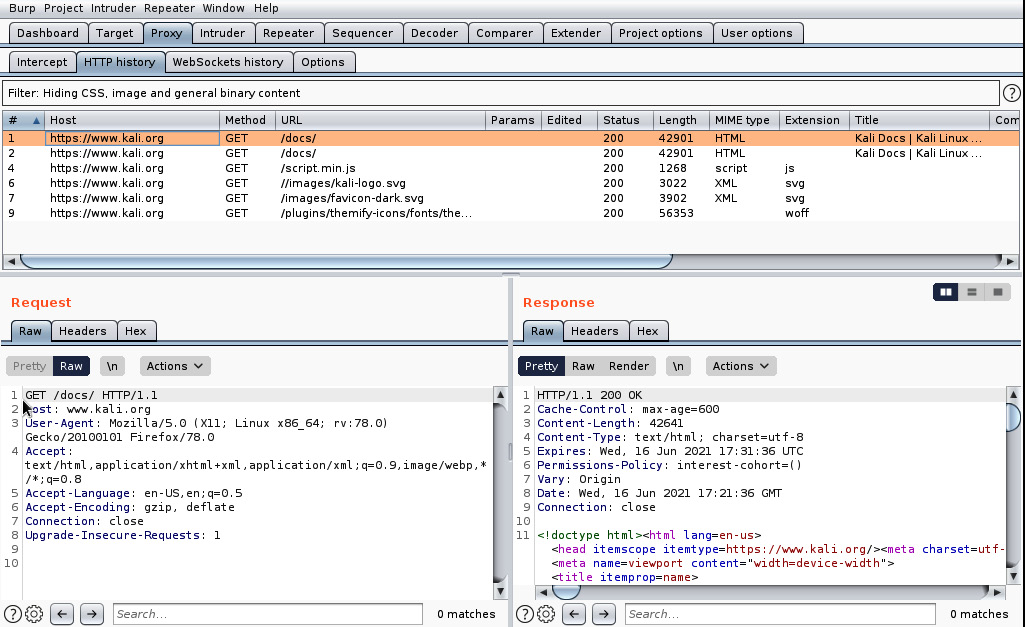

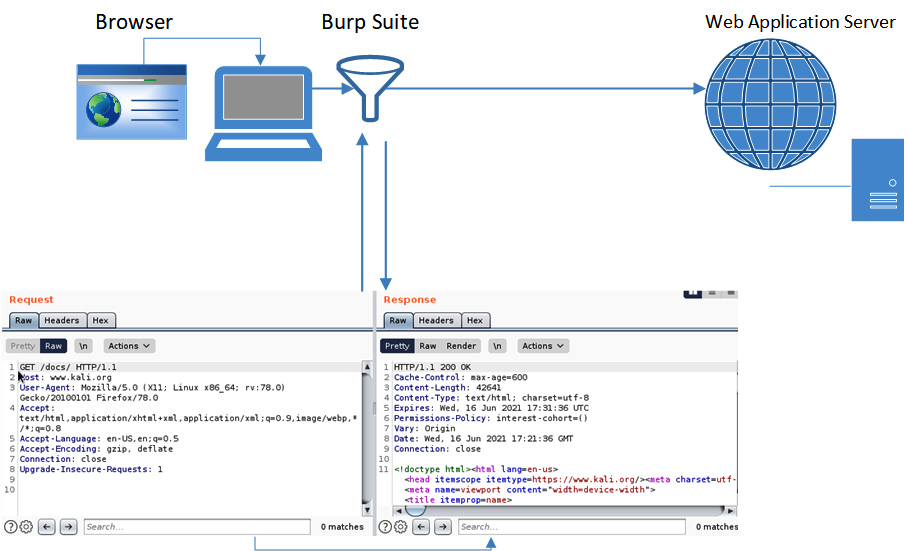

HTTP interceptor

HTTP interceptors are used by security analysts and pen testers. When we need to assess the security posture of a web application without being given access to the source code, this tool is useful. Figure 6.11 shows Burp Suite, a popular HTTP interceptor. It is provided as a default application on Kali Linux:

Figure 6.11 – HTTP interceptor

The HTTP interceptor runs as a proxy on the tester's workstation, and the browser is configured to send its traffic through it. All browser requests and web server responses can be viewed in raw form (HTTP headers and bodies), allowing the tester to gain valuable information about the application. See Figure 6.12 for the interceptor traffic flow:

Figure 6.12 – Burp Suite deployment

The use of this tool would require some developer/coding knowledge, to interpret the captured payloads.
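Any HTTP client, not just a browser, can be pointed at an interceptor. The Python sketch below builds a `urllib` opener that routes HTTP and HTTPS requests through a local proxy. It assumes the interceptor listens on 127.0.0.1:8080 (Burp Suite's default proxy listener; adjust this if your configuration differs), and the target URL in the comment is hypothetical. For HTTPS, the client would also need to trust the interceptor's CA certificate, which is not shown here.

```python
import urllib.request

# Assumed interceptor listener address; change it to match your proxy configuration.
PROXY_URL = "http://127.0.0.1:8080"

def build_intercepting_opener(proxy_url=PROXY_URL):
    """Return a urllib opener that sends HTTP and HTTPS requests via the given proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)

opener = build_intercepting_opener()
# With the interceptor running, a request such as this would appear in its history:
# response = opener.open("http://testsite.example/", timeout=10)
```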

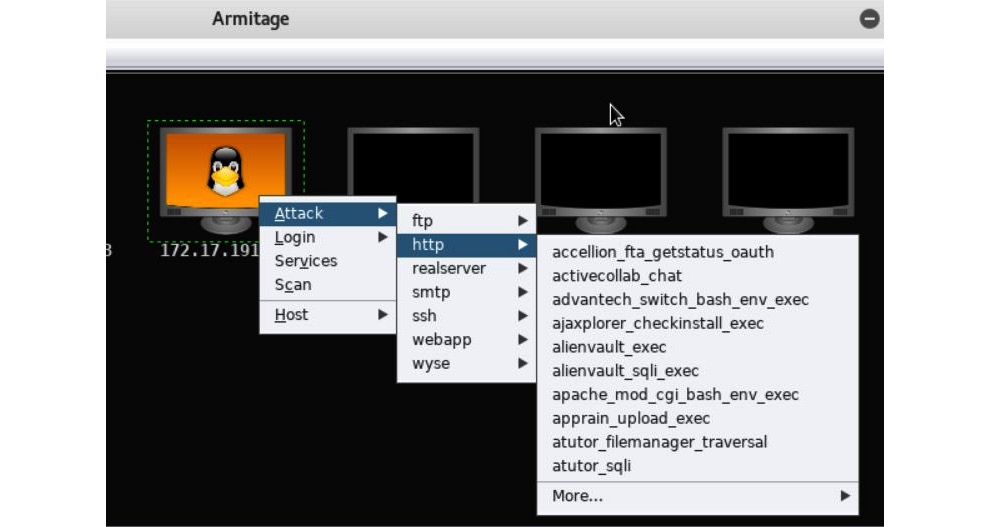

Exploit framework

Penetration testers can use an exploitation framework to deliver payloads to hosts on a network, typically by exploiting vulnerabilities that have already been identified. Popular frameworks include Sn1per, Core Impact, Canvas, and Metasploit. Exploit frameworks must be kept up to date so that recently disclosed vulnerabilities can be tested. Once a scan has detected hosts on the network and identified operating systems, the attack can begin. Figure 6.13 shows a Linux host about to be tested by Metasploit:

Figure 6.13 – Exploitation framework

Exploitation frameworks can be quite complex and require expertise to use effectively in a pen testing environment.
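Frameworks such as Metasploit can be scripted so that the same module runs against every in-scope host found during scanning. As a hedged sketch, the Python function below builds the text of an msfconsole resource script (a file of console commands, run with `msfconsole -r <file>`). The helper `build_resource_script` and the example targets (taken from the 192.0.2.0/24 documentation range) are assumptions for illustration; confirm a module's option names with `show options` before relying on it.

```python
from typing import Dict, Iterable


def build_resource_script(module: str, targets: Iterable[str],
                          options: Dict[str, str]) -> str:
    """Return msfconsole resource-script text that runs one exploit module per target."""
    lines = [f"use {module}"]
    # Options shared by every run (for example LHOST for a reverse payload).
    for name, value in options.items():
        lines.append(f"set {name} {value}")
    for host in targets:
        # Retarget the module at the next in-scope host, then launch it.
        # "-z" stops msfconsole from interacting with a new session, so the script continues.
        lines.append(f"set RHOSTS {host}")
        lines.append("exploit -z")
    # No "exit": the console stays open so results can be reviewed with "sessions -l".
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_resource_script(
        "exploit/unix/ftp/vsftpd_234_backdoor",  # a module commonly used against lab targets
        ["192.0.2.10", "192.0.2.11"],            # hypothetical in-scope hosts
        {},
    ), end="")
```

Generating the script from scan results, rather than typing commands by hand, keeps the run repeatable and makes it easier to show that only hosts listed in the scope of work were targeted.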

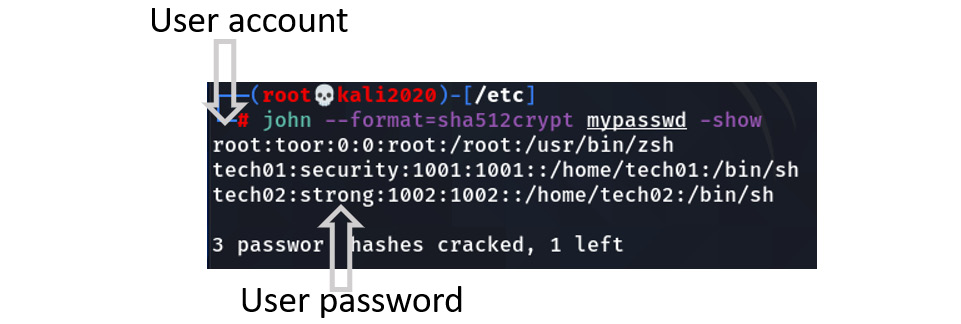

Password crackers

Password crackers are useful to determine whether weak passwords are being used in the organization. Common password crackers include Ophcrack, John the Ripper, and Brutus, among many others. Many online resources are also available, including dictionaries (wordlists) and rainbow tables.

Figure 6.14 shows the use of John the Ripper against a Linux password database:

Figure 6.14 – John the Ripper password cracking

In the output shown in Figure 6.14, the first column is the user account and the second is the cracked password. So, user tech02 has set their password to strong, which is just a plain dictionary word.

Important Note

While toor is not a common dictionary word, it is a poor choice of password for the root account.
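The dictionary attack that tools such as John the Ripper automate can be sketched in a few lines of Python: hash each candidate word with the account's salt and compare the result with the stored value. This is a simplified illustration only. It assumes a hypothetical password store that uses salted PBKDF2-HMAC-SHA256, not the crypt(3) formats found in a real Linux shadow file, and the salts, hashes, and wordlist below are invented to mirror the tech02 and root examples above.

```python
import hashlib
import hmac
from typing import Dict, Iterable, Tuple


def hash_password(password: str, salt: bytes) -> str:
    """Salted PBKDF2-HMAC-SHA256 digest (an assumed scheme, not /etc/shadow's format)."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 10_000).hex()


def dictionary_attack(db: Dict[str, Tuple[bytes, str]],
                      wordlist: Iterable[str]) -> Dict[str, str]:
    """Return {user: cracked_password} for every account whose password is in the wordlist."""
    words = list(wordlist)
    cracked = {}
    for user, (salt, stored) in db.items():
        for word in words:
            # Each guess is hashed with this account's own salt: salting defeats
            # precomputed rainbow tables but not a per-account dictionary attack.
            if hmac.compare_digest(hash_password(word, salt), stored):
                cracked[user] = word
                break
    return cracked


if __name__ == "__main__":
    # Hypothetical password database: user -> (salt, stored digest).
    db = {
        "tech02": (b"salt-a", hash_password("strong", b"salt-a")),
        "root": (b"salt-b", hash_password("toor", b"salt-b")),
        "admin": (b"salt-c", hash_password("q7#Vn!2pXe", b"salt-c")),
    }
    print(dictionary_attack(db, ["password", "letmein", "strong", "toor"]))
```

Only the accounts whose passwords appear in the wordlist are recovered; the randomly generated admin password survives, which is exactly the weakness an administrator running a cracker is looking for.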

Dependency management tools

Dependency management is important when building large and often complex applications. Many software builds incorporate third-party libraries and additional code modules. Dependency management tools ensure we are using the correct version of each library or code module and can help us keep track of known security issues across all of these code bases.

Popular tools include NuGet (for Microsoft .NET development) and Composer (for PHP developers), but there are many more.
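To show the kind of check these tools perform, here is a minimal sketch that reads pinned dependencies in `name==version` form and flags any that appear in an advisory list. The advisory data, the package name `examplelib`, and the pin format are hypothetical; real tools query curated vulnerability databases and understand version ranges rather than exact matches.

```python
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical advisory feed: package name -> versions with known security issues.
KNOWN_VULNERABLE: Dict[str, Set[str]] = {
    "examplelib": {"1.0.0", "1.0.1"},
}


def parse_pins(lines: Iterable[str]) -> Dict[str, str]:
    """Parse 'name==version' lines, ignoring blank lines and '#' comments."""
    pins = {}
    for raw in lines:
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        name, sep, version = line.partition("==")
        if not sep:
            # An unpinned dependency cannot be audited against exact versions.
            raise ValueError(f"unpinned dependency: {line!r}")
        pins[name.strip().lower()] = version.strip()
    return pins


def audit(pins: Dict[str, str], advisories: Dict[str, Set[str]]) -> List[Tuple[str, str]]:
    """Return sorted (name, version) pairs whose pinned version has a known issue."""
    return sorted((name, version) for name, version in pins.items()
                  if version in advisories.get(name, set()))
```

In practice, the ecosystems named above ship similar checks, for example `dotnet list package --vulnerable` for NuGet packages and `composer audit` for Composer projects.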

Summary

In this chapter, we have looked at a variety of tools and frameworks used to assess and protect information systems.

We have identified the appropriate tools used to assess the security posture of operating systems, networks, and end user devices. We have also learned how vulnerability scanning helps us find the systems that require remediation. We have identified industry-standard tools and protocols (such as SCAP, CVE, CPE, and OVAL) that ensure compatibility across the enterprise, and examined information sources where current threats and vulnerabilities are published. We have also looked at the requirements for managing third-party engagements to assess our systems, and learned about the tools used for internal and external penetration testing.

These skills will be important as we learn about incident response and forensic analysis in the next chapter.

Questions

Here are a few questions to test your understanding of the chapter:

- When performing a SCAP scan on a system, which of the following types of scans will be most useful?

- Credentialed

- Non-credentialed

- Agent-based

- Intrusive

- What would be most important when monitoring security on ICS networks, where latency must be minimized?

- Group Policy

- Active scanning

- Passive scanning

- Continuous integration

- What is the protocol that allows for the automation of security compliance scans?

- SCAP

- CVSS

- CVE

- ARF

- What standard would support the creation of XML-format configuration templates?

- XCCDF

- CVE

- CPE

- NMAP

- What standard allows a vulnerability scanner to detect the host operating system and installed applications?

- XCCDF

- CVE

- CPE

- SCAP

- What standard supports a common reporting format for vulnerability scanning?

- XCCDF

- CVE

- OVAL

- STIG

- What information type can be found at MITRE and NIST NVD that describes a known vulnerability and gives information regarding remediation?

- CVE

- CPE

- CVSS

- OVAL

- What is used to calculate the criticality of a known vulnerability?

- CVE

- CPE

- CVSS

- OVAL

- If my organization is preparing to host publicly available SaaS services in the data center, what kind of assessment would be best?

- Self-assessment

- Third-party assessment

- PCI compliance

- Internal assessment

- When we download patches from Microsoft, where should they be tested first?

- Staging network

- Production network

- DMZ network

- IT administration network

- Where can security professionals go to remain aware of vendor-published security updates and guidance? (Choose all that apply.)

- Advisories

- Bulletins

- Vendor websites

- MITRE

- What allows European critical infrastructure providers to share security-related information?

- ISACs

- NIST

- SCAP

- CISA

- What kind of testing would be performed against uncompiled code?

- Static analysis

- Dynamic analysis

- Fuzzing

- Reverse engineering

- What type of analysis would allow researchers to measure power usage to recover the encryption keys used by a crypto-processor?

- Side-channel analysis

- Frequency analysis

- Network analysis

- Hacking

- What type of analysis would most likely be used when researchers need to study third-party compiled code?

- Static analysis

- Side-channel analysis

- Input validation

- Reverse engineering

- What automated tool would developers use to report on any outdated software libraries and licensing requirements?

- Software composition analysis

- Side-channel analysis

- Input validation

- Reverse engineering

- What is it called when we send pseudo-random inputs to an application, in an attempt to find flaws in the code?

- Fuzz testing

- Input validation

- Reverse engineering

- Pivoting

- What is the term for lateral movement from a compromised host system?

- Pivoting

- Reverse engineering

- Persistence

- Requirements

- When would a penetration tester use a privilege escalation exploit?

- Post-exploitation

- OSINT

- Reconnaissance

- Footprinting

- What is the correct term for a penetration tester manipulating the registry in order to launch a binary file during the boot sequence?

- Pivoting

- Reverse engineering

- Persistence

- Requirements

- What tool would allow network analysts to report on network utilization levels?

- Network traffic analyzer

- Vulnerability scanner

- Protocol analyzer

- Port scanner

- What would be the best tool to test the security configuration settings for a web application server?

- Network traffic analyzer

- Vulnerability scanner

- Protocol analyzer

- Port scanner

- With what tool would penetration testers discover live hosts and application services on a network segment?

- Network traffic analyzer

- Vulnerability scanner

- Protocol analyzer

- Port scanner

- What type of tool would perform uncredentialed scans?

- Network traffic analyzer

- Vulnerability scanner

- Protocol analyzer

- Port scanner

- What could be used to reverse engineer a web server API when conducting a zero-knowledge (black box) test?

- Exploitation framework

- Port scanner

- HTTP interceptor

- Password cracker

- What tool could be used by hackers to discover unpatched systems using automated scripts?

- Exploitation framework

- Port scanner

- HTTP interceptor

- Password cracker

- What would allow system administrators to discover weak passwords stored on the server?

- Exploitation framework

- Port scanner

- HTTP interceptor

- Password cracker

- What documentation would mitigate the risk of pen testers testing the security posture of all regional data centers when the requirement was only for the e-commerce operation center?

- Requirements

- Scope of work

- Rules of engagement

- Asset inventory

- What documentation would mitigate the risk of pen testers unintentionally causing an outage on the network during business hours?

- Requirements

- Scope of work

- Rules of engagement

- Asset inventory

- What type of security assessment is taking place if the tester needs to perform badge skimming first?

- Network security assessment

- Corporate policy considerations

- Facility considerations

- Physical security assessment

Answers

- A

- C

- A

- A

- C

- C

- A

- C

- B

- A

- A, B and C

- A

- A

- A

- D

- A

- A

- A

- A

- C

- A

- B

- D

- D

- C

- A

- D

- B

- C

- D