4

Using Machine Learning to Improve Operational Efficiency for Healthcare Providers

Operating an efficient healthcare system is a challenging but highly rewarding endeavor. As discussed in Chapter 3, healthcare systems are recognized for better care quality and saving costs. An efficient healthcare system innovates and focuses its time on improving patient care while reducing the time spent on repeatable operational tasks. It allows providers to focus on their core competency – taking care of patients – and reduce distractions that prevent them from doing this.

Patients in many places today have choices between multiple healthcare providers. The more patients a hospital is able to treat, the better it is so it can meet its revenue goals and remain profitable. However, a high volume of patients doesn’t always equate to a high quality of care, so it’s critical to strike a balance.

There are multiple areas of optimization in a healthcare workflow. Some of them are straightforward and can be implemented easily. Others require the use of advanced technologies such as machine learning (ML).

In this chapter, we will dive deeper into the topic of operational efficiency in healthcare by looking at some examples and the impact that increased efficiency can have on hospital systems. We will then dive deeper into two common applications of ML for reducing operational overhead in healthcare – clinical document processing and dealing with voice-based applications. Both these areas require substantial manual intervention and the use of ML can help automate certain repeatable tasks. We will then build an example application for smart medical transcription analysis using the AWS AI and ML services. The application transcribes a sample audio file containing a clinical dictation and extracts key clinical entities from the transcript for clinical interpretation of the transcript and further downstream processing.

The chapter is divided into the following sections:

- Introducing operational efficiency in healthcare

- Automating clinical document processing in healthcare

- Working with voice-based applications in healthcare

- Building a smart medical transcription analysis application on AWS

Technical requirements

The following are the technical requirements that you need to complete before building the example implementation at the end of this chapter:

- Set up an AWS account and create an administrator user, as described in the Amazon Transcribe Medical getting started guide here: https://docs.aws.amazon.com/transcribe/latest/dg/setting-up-med.html.

- Set up the AWS Command Line Interface (CLI) as described in the Amazon Comprehend Medical getting started guide here: https://docs.aws.amazon.com/comprehend-medical/latest/dev/gettingstarted-awscli.html.

- Install Python 3.7 or later. You can do that by navigating to the Python Downloads page here: https://www.python.org/downloads/.

- Install the Boto3 framework by navigating to the following link: https://boto3.amazonaws.com/v1/documentation/api/latest/guide/quickstart.html.

- Install Git by following the instructions at the following link: https://github.com/git-guides/install-git.

Once you have completed these steps, you should be all set to execute the steps in the example implementation in the last section of this chapter.

Introducing operational efficiency in healthcare

For a business to run efficiently, it needs to spend the majority of its time and energy on its core competency and reduce or optimize the time spent on supporting functions. A healthcare provider’s core competency is patient care. The more time physicians spend with patients providing care, the more efficient or profitable a healthcare system is.

One of the primary areas to optimize in a healthcare system is resource utilization. Resources in a hospital could be assets such as medical equipment or beds. They could be special rooms such as operating rooms (ORs) or intensive care units (ICUs). They could be consumables such as gloves and syringes. Optimal usage of these resources helps hospitals reduce waste and save costs. For example, ORs are the rooms in a hospital that earn the highest revenue, and leaving them underutilized is detrimental to a hospital’s revenue. Using standard scheduling practices may leave out important considerations, such as the type of case before and after a particular case. Having similar cases scheduled back to back can minimize the setup time for the second case. It will also allow surgeons to be appropriately placed so they spend less time moving between cases. All these aspects add up to increase utilization of an OR room.

Another area of optimization is hospital bed utilization. It is a very important metric that helps hospitals plan a number of things. For instance, a low utilization of beds in a certain ward on a particular day could mean the hospital staff in that ward can be routed to other wards instead. Moreover, if the hospital can accurately estimate the length of stay for a patient, they can proactively plan for staffing adequate support staff and consumable resources. It will reduce pressure on employees and help them perform better over extended periods of time.

The concept of appropriate resource utilization also extends to reducing wait times. As a patient moves through their care journey in a hospital, they may be routed to multiple facilities or their case may be transferred to different departments. For example, a patient may come for a regular consultation at a primary care facility. They may then be routed to a lab for sample collection and testing. The case may then be sent to the billing department to generate an invoice and process a payment. The routing and management of the case is much more complicated for inpatient admissions and specialty care. It is important that the case moves seamlessly across all these stages without any bottlenecks. Using appropriate scheduling and the utilization of the underlying resources, hospitals can ensure shorter wait times between the stages of patient care. It also improves patient experience, which is a key metric for hospitals to retain and attract new patients to their facilities. A long wait time in the ER or an outpatient facility results in a bad patient experience and reduces the chances of patient retention. Moreover, waiting for labs or the results of an X-ray can delay critical medical procedures that depend on receiving those scan results. These are sequential steps and a delay in one step delays the whole operation.

The reduction of wait times requires the identification of steps in each area that are bottlenecks, finding ways to minimize those bottlenecks and accelerate the processes as a result. One of the common reasons for these kinds of delays is the result of manual intervention: depending on humans for repeatable tasks that can be automated reduces productivity. Using ML-based automation can help improve throughput and reduce errors. In the next two sections, we will look at two example categories of automation in a healthcare system: document processing and working with voice-based solutions.

Automating clinical document processing in healthcare

Clinical documentation is a required byproduct of patient care and is prevalent in all aspects of care delivery. It is an important part of healthcare and was the sole medium of information exchange in the past. The digital revolution in healthcare has allowed hospitals to move away from paper documentation to digital documentation. However, there are a lot of inefficiencies in the way information is generated, recorded, and shared via clinical documents.

A majority of these inefficiencies lie in the manual processing of these documents. ML-driven automation can help reduce the burden of manual processing. Let us look at some common types of clinical documents and some details about them:

- Discharge summaries: A discharge summary summarizes the hospital admission for the patient. It includes the reason for admission, the tests, and a summary of next steps in their care journey.

- Patient history forms: This form includes historical medical information for the patient. It is updated regularly and is used for the initial triaging of a patient’s condition. It also contains notes from past physicians or specialists to understand the patient’s condition or treatment plan better.

- Medical test reports: Medical tests are key components of patient care and provide information for physicians and specialists to determine the best course of action for the patient. They range from regular tests such as blood and urine to imaging tests such as MRI or X-ray scans and also specialized tests such as genetic testing. The results of the tests are captured in test reports and shared with the patients and their care team.

- Mental health reports: Mental health reports capture behavioral aspects of the patients assessed by psychologists or licensed medical councilors.

- Claim forms: Subscribers to health plans for a health insurance company need to fill out a claim form to be reimbursed for the out-of-pocket expenses they incur as part of their medical treatment. It includes fields for identifying the care provided, along with identifiable information about the patient.

Each of these forms stores information that needs to be extracted and interpreted in a timely manner, which is largely manually done by people. A major side effect of this manual processing is burnout.

Healthcare practitioners spend hours in stressful environments, working at a fast pace to keep up with the demands of delivering high-quality healthcare services. Some studies estimate over 50% of clinicians experiencing burnout in recent years. High rates of physician burnout introduce substantial quality issues and adversely affect patients. For example, stressed physicians are more likely to stop practicing, which puts patients at risk of having gaps in their care. Moreover, clinicians are prone to errors in judgement when making critical decisions about a patient’s care journey, which is a safety concern. Research has also shown burnout causes boredom and loss of interest on the part of clinicians providing care, which does not bode well for patients who are looking for personalized attention. This may lead to patients dropping out of regular visits due to bad experiences. One answer to preventing burnout is to help clinicians spend less of their time on administrative and back-office support work such as organizing documentation and spend more time in providing care. This can be achieved through the ML-driven automation of clinical document processing.

The automation of clinical document processing essentially consists of two broad steps – firstly, extracting information from different modalities of healthcare data. For example, healthcare information can be hidden in images, forms, voice transcripts, or handwritten notes. ML can help extract information from these images using deep learning techniques such as image recognition, optical character recognition (OCR), and speech-to-text. Secondly, the information needs to be interpreted or understood for meaningful action or decisions based on it to be taken. The solution to this can be as simple as a rules engine that carries out the post-processing of extracted information based on human-defined rules. However, for a more complex, human-like interpretation of healthcare information, we need to rely on natural language processing (NLP) algorithms. For example, a rules engine can be used to find a keyword such as “cancer” in a medical transcript generated by a speech-to-text algorithm. However, we need to rely on an NLP algorithm to determine the sentiment (sad, happy, or neutral) in the transcript. The complexity involved in training, deploying, and maintaining deep learning models is a deterrent for automation. In the final section of this chapter, we will see how AWS AI services can help by providing pre-trained models that make it easy to use automation in medical transcription workflows. Before we do that, let’s dive deeper into voice-based applications in healthcare.

Working with voice-based applications in healthcare

Voice plays a big role in healthcare with multiple conversational and dictation-based types of software relying on speech to capture information critical to patient care. Voice applications in healthcare refer to applications that feature speech as a central element in their workflows. These applications can be patient-facing or can be internal applications only accessible to physicians and nurses. The idea behind voice-based applications is to remove or reduce the need for hands-on keyboard so workflows can be optimized and interactions become more intuitive. Let us look at some common examples of voice-based applications in healthcare:

- Medical transcription software: This is by far the most commonly used voice-based application in healthcare. As the name suggests, medical transcription software allows clinicians to dictate medical terminologies and convert them into text for interpretation, and store them in an Electronic Health Record (EHR) system. For example, a physician can summarize a patient’s regular visit by speaking into a dictation device that then converts their voice into text. By using advanced analytics and ML, medical transcription software is becoming smarter and is now able to detect a variety of clinical entities from a transcription for easy interpretation of clinical notes. We will see an example of this in the final section of this chapter, where we build a smart medical transcription solution. Using medical transcription software reduces the time required and errors in processing clinical information.

- Virtual assistants and chatbots: While medical transcription solutions are great for long-form clinical dictations, there are some workflows in healthcare that benefit from short-form voice exchanges. For example, the process of searching for a healthcare specialist or scheduling an appointment for a visit involves actions such as filtering the type of specialization, looking up their ratings, checking their availability, and booking an appointment. You can make these actions voice-enabled to allow users to use their voices to perform the search and scheduling. The application acts as a virtual assistant, listening to commands and performing actions accordingly. These assistants are usually available to the end users in a variety of forms. For example, they can be accessed via chatbots running on a web application. They can also be evoked using a voice command on a mobile device – for instance, Alexa. They can also be packaged into an edge device that can be sitting in your home (such as an Amazon Echo Dot) or a wearable (such as the Apple Watch). At the end of the day, the purpose of virtual assistants is to make regular tasks easy and intuitive through voice, and it is vital to choose the right mode of delivery so the service does not feel like a disruption in regular workflows. In fact, the more invisible the virtual assistant is, the better it is for the end user. Virtual assistants have been successfully implemented throughout the healthcare ecosystem, whether in robotic surgeries, primary care visits, or even bedside assistance for admitted patients.

- Telemedicine and telehealth services: Telehealth and telemedicine visits have become increasingly popular among patients and providers lately. For patients, they provide a convenient way to get access to healthcare consultation from the comfort of their home or anywhere they may be, as long as they have network connectivity. It avoids the travel and wait times often required by in-person visits. For providers, it is easier to consult with patients remotely, especially if the consultation is in connection with an infectious disease. No wonder telehealth became so popular during the peak surge of the Covid-19 pandemic in 2021. As you can imagine, voice interactions are a big part of telemedicine and telehealth. Unlike medical transcriptions, which involve a clinician dictating notes, telehealth involves conversations between multiple parties, such as doctors, patients, and nurses. Hence, the technology used to process and understand such interactions needs to be different from medical transcription software. A smart telehealth and telemedicine solution allows multiple parties in a healthcare visit to converse with each other naturally and convert each of their speech portions to text, assigning labels to each speaker so it’s understandable who said what in the conversation. This technology is known as speaker diarization and is an advanced area of speech-to-text deep learning algorithms. Moreover, NLP algorithms that derive meaning from these interactions should be able to handle conversational data, which is different from deriving meaning from single-person dictations.

- Remote patient monitoring and patient adherence solutions: Remote patient monitoring and patient adherence solutions are a new category of voice-based applications that providers use to ensure patients are adhering to the steps in their care plan, which is an integral part of patient care. Whether it is the adherence to medications as prescribed or adherence to visits per a set schedule, it is vital that patients follow the plan to ensure the healthcare continuum, which essentially means continuous access to healthcare over an extended period of time. It is estimated that about 50% of patients do not take their medications as prescribed and providers have little control over this metric while the patients are at home. Hence, a remote patient monitoring solution allows you to monitor a patient’s adherence to a care plan and also nudge them with proactive alerts when it’s detected that the patient is veering off course. These categories of solutions also provide reminder notifications when it’s time to take a medication or time to leave for an appointment. They can also send recommended readings with references to information about the medications or the clinical conditions that the patient may be experiencing. These actions and notifications can be voice-enabled to make them more natural and easier to interact with, keeping them less disruptive.

As you can see, verbal communication plays a critical role in healthcare, so it’s important that it’s captured and processed efficiently for healthcare providers to be able to operate a high-quality and operationally efficient healthcare organization. Let us now see how we can build a smart medical transcription application using AWS AI services.

Building a smart medical transcription application on AWS

Medical transcripts document a patient’s encounter with a provider. The transcripts contain information about a patient’s medical history, diagnosis, medications, and past medical procedures. They may also contain information about labs or tests and any referral information if applicable. As you may have guessed, processing and extracting medical transcripts is critical to getting a full understanding of a patient’s condition. The medical transcripts are usually generated by healthcare providers who dictate notes that capture this information. Then, medical transcriptions are manually transcribed into notes by medical transcriptionists. These notes are stored in the EHR system to capture a patient’s encounter summary. The manual transcription and understanding of medical information introduce operational challenges in a healthcare system, especially when done at scale. To solve this problem, we will build a smart medical transcription solution that automates the following two steps:

- Automating the conversation of an audio transcript into a clinical note using Amazon Transcribe Medical.

- Automating the extraction of clinical entities such as medical conditions, protected health information (PHI), and medical procedures from the clinical note so they can be stored in an EHR system.

Let us now look at the steps to set up and run this application.

Creating an S3 bucket

Please make sure you have completed the steps mentioned in the Technical requirements section before you attempt the following steps:

- Navigate to the AWS console using the console.aws.amazon.com URL and log in with your IAM username and password.

- In the search bar at the top, type S3 and click on the S3 link to arrive at the S3 console home page.

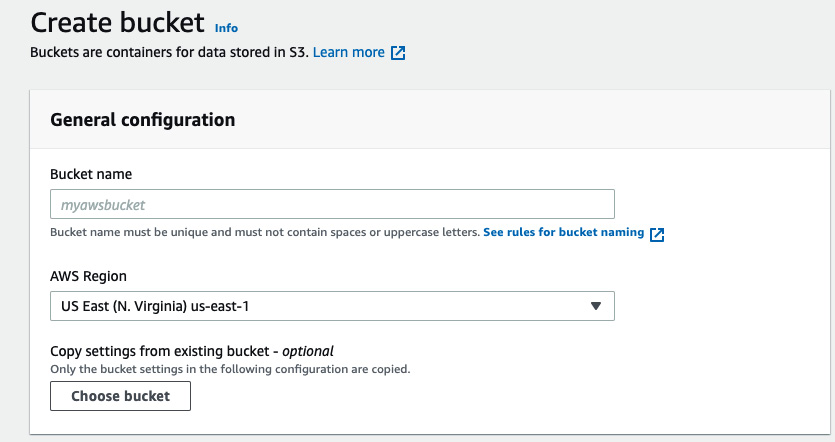

- Click on the Create bucket button.

- On the Create bucket console page, under General configuration, provide the name of your bucket in the Bucket name textbox, as shown in Figure 4.1:

Figure 4.1 – The S3 Create bucket screen in the AWS Management Console

This should create the S3 bucket for our application. This is where we will upload the audio file that will act as an input for Amazon Transcribe Medical.

Downloading the audio file and Python script

After you have created the S3 bucket, it’s time to download the Python script and the audio file from GitHub:

- Open the terminal or command prompt on your computer. Clone the Applied-Machine-Learning-for-Healthcare-and-Life-Sciences-using-AWS repository by typing the following command:

Git clone https://github.com/PacktPublishing/Applied-Machine-Learning-for-Healthcare-and-Life-Sciences-using-AWS.git

You should now see a folder named Applied-Machine-Learning-for-Healthcare-and-Life-Sciences-using-AWS.

- Navigate to the code files for this exercise located at Applied-Machine-Learning-for-Healthcare-and-Life-Sciences-using-AWS/chapter-4/. You should see two files in the directory: audio.flac and transcribe_text.py.

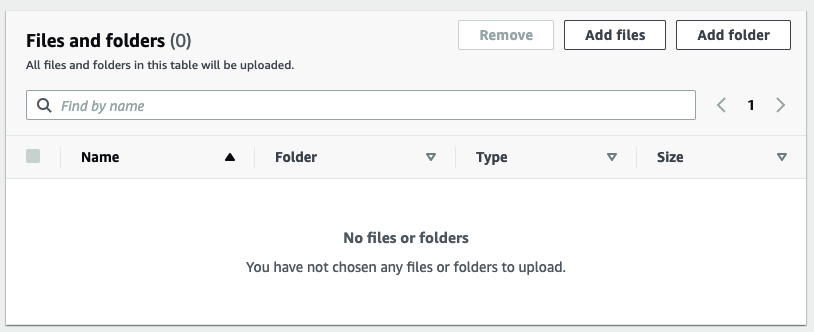

- On the AWS console, navigate to the S3 bucket created in the previous section and click Upload.

- On the next screen, select Add files and select the audio.flac audio file. Click Upload to upload the file to S3:

Figure 4.2 – The Add files screen of the S3 bucket in the AWS Management Console

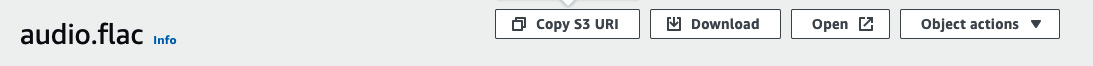

- Once uploaded, you should see the file in your S3 bucket. Click on it and open the audio.flac info screen. Once there, click Copy S3 URI:

Figure 4.3 – The object info screen in S3 with the Copy S3 URI button

- Next, open the transcribe_text.py file on your computer. Scroll to line 10 and change the value of the job_uri variable by pasting the URI copied in the previous step between the double quotations. The S3 URI should be in the following format: s3://bucket-name/key/audio.flac. Also, input the S3 output bucket name on lines 25 and 37. You can have the same bucket name for input and output. Make sure to save the file before exiting.

You are now ready to run the Python script. You can look at the rest of the script to understand the code and read more about the APIs being used. You can also play the audio.flac audio file using an audio player on your computer to hear its contents.

Running the application

Now, we are ready to run this application. To run, simply execute the Python script, transcribe_text.py. Here are the steps:

- Open the terminal or CLI on your computer and navigate to the directory where you have the transcribe_text.py file.

- Run the script by typing the following:

python transcribe_text.py

The preceding script reads the audio file from S3, transcribes it using Transcribe Medical, and then calls Comprehend Medical to detect various clinical entities in the transcription. Here is how the output of the script looks in the terminal:

bash-4.2$ python transcribe_text.py

creating new transcript job med-transcription-job

Not ready yet...

Not ready yet...

Not ready yet...

Not ready yet...

Not ready yet...

Not ready yet...

Not ready yet...

Not ready yet...

Not ready yet...

Not ready yet...

Not ready yet...

transcription complete. Transcription Status: COMPLETED

****Transcription Output***

The patient is an 86 year old female admitted for evaluation of abdominal pain. The patient has colitis and is undergoing treatment during the hospitalization. The patient complained of shortness of breath, which is worsening. The patient underwent an echocardiogram, which shows large pleural effusion. This consultation is for further evaluation in this regard.

Transcription analysis complete. Entities saved in entities.csv

The preceding output shows the transcription output from Transcribe Medical. The script also saves the output of Transcribe Medical in the raw JSON format as a file named transcript.json. You can open and inspect the contents of the file. Lastly, the script saves the entities extracted from Comprehend Medical into a CSV file named entities.csv. You should see the following in the CSV file:

|

Id |

Text |

Category |

Type |

|

7 |

86 |

PROTECTED_HEALTH_INFORMATION |

AGE |

|

8 |

evaluation |

TEST_TREATMENT_PROCEDURE |

TEST_NAME |

|

1 |

abdominal |

ANATOMY |

SYSTEM_ORGAN_SITE |

|

3 |

pain |

MEDICAL_CONDITION |

DX_NAME |

|

4 |

colitis |

MEDICAL_CONDITION |

DX_NAME |

|

9 |

treatment |

TEST_TREATMENT_PROCEDURE |

TREATMENT_NAME |

|

5 |

shortness of breath |

MEDICAL_CONDITION |

DX_NAME |

|

10 |

echocardiogram |

TEST_TREATMENT_PROCEDURE |

TEST_NAME |

|

2 |

pleural |

ANATOMY |

SYSTEM_ORGAN_SITE |

|

6 |

effusion |

MEDICAL_CONDITION |

DX_NAME |

|

12 |

evaluation |

TEST_TREATMENT_PROCEDURE |

TEST_NAME |

Table 4.1 – The contents of the entities.csv file

These are the entities extracted from Comprehend Medical from the transcript. You can see the original text, the category, and the type of entity recognized by Comprehend Medical. As you can see, these entities are structured into rows and columns, which is much easier to process and interpret than a blurb of text. Moreover, you can use this CSV file as a source of your analytical dashboards or even ML models to build more intelligence into this application.

Now that we have seen how to use Amazon Transcribe Medical and Amazon Comprehend medical together, you can also think about combining other AWS AI services with Comprehend Medical for different types of automation use cases. For example, you can combine Amazon Textract and Comprehend Medical to automatically extract clinical entities from medical forms and build a smart document processing solution for healthcare and life sciences. You can combine the output of Comprehend Medical with Kendra, a managed search service from AWS to create a document search application driven by graph neural networks. All these smart solutions reduce manual intervention in healthcare processes, minimize potential errors, and make the overall health system more efficient.

Summary

In this chapter, we looked at the concepts of operational efficiency in healthcare and why is it important for providers to pay attention to it. We then looked into two important areas of automation in healthcare – clinical document processing and voice-based applications. Each of these areas consumes a lot of time, as they require manual intervention for processing and an understanding of the clinical information embedded within them. We looked at some common methods of automating the extraction of clinical information from these unstructured data modalities and processing them to create a longitudinal view of a patient, a vital asset to have for the applications of clinical analytics and ML. Lastly, we built an example application to transcribe a clinical dictation using Amazon Transcribe Medical and then process that transcription using Amazon Comprehend Medical to extract clinical entities into a structured row or column format.

In Chapter 5, Implementing Machine Learning for Healthcare Payors, we will look into some areas of applications of ML in the health insurance industry.

Further reading

- Transcribe Medical documentation: https://docs.aws.amazon.com/transcribe/latest/dg/transcribe-medical.html

- Comprehend Medical documentation: https://docs.aws.amazon.com/comprehend-medical/latest/dev/comprehendmedical-welcome.html

- Textract documentation: https://docs.aws.amazon.com/textract/latest/dg/what-is.html