8

Applying Machine Learning to Molecular Data

Molecular data contains information about the chemical structures of different molecules. Extrapolating this data for analysis helps us determine important properties of a chemical at a molecular level. These properties are key in the discovery of new therapies and drugs. For example, small molecules with specific atomic structures can combine with other molecules to form compounds. These compounds may then become compounds of interest or candidate compounds if they are beneficial in treating diseases. This is done by looking at the interaction of the compound with a protein or gene involved in a disease and understanding how this interaction affects the underlying protein or gene. If the interaction helps to modulate the function or behavior of the gene or the protein, we can say that we have found a biological target for our compound. This process, also known as target discovery, is a key step in discovering new drugs. In some cases, instead of scanning known compounds, researchers can discover drugs by designing compound structures that have an affinity for the target. By bonding with the target, the compounds can change the function of the disease-causing target to render them harmless to humans. This process is known as drug design. Once we identify the compound for a target, the process moves toward determining the compound’s properties such as its solubility or toxicity. This helps us to understand the positive or negative effects it could have on humans and also the exact quantities that need to be administered to make sure it has the desired effects on the target.

However, all this is easier said than done. There are billions of compounds to consider and a growing list of diseases to correlate them with. This introduces a search problem at an insurmountable scale, with the search sometimes taking several months to complete. Traditional computing techniques rely on the brute-force method of target identification, which essentially involves going through a whole list of probable compounds and evaluating them based on their effects on diseases, based on trial and error. This process is not scalable and introduces bottlenecks. As a result, researchers now rely on techniques such as ML that can infer patterns in the chemical composition of known compounds and use that to predict the interactive effects of novel compounds on targets not yet studied or known.

In this chapter, we will look at the various steps in drug discovery. We will see how molecular data is stored and processed during the drug discovery process and also understand different applications of ML in the areas of drug design and discovery. We will look at a feature of SageMaker that allows you to bring your own custom container for training, allowing you to package specific software that can work with molecular data. Lastly, we will build an ML model to predict different molecular properties.

We will cover the following topics:

- Understanding molecular data

- Introducing drug discovery and design

- Applying ML to molecular data

- Introducing custom containers in SageMaker

- Building a molecular property prediction model on SageMaker

Technical requirements

The following are the technical requirements that you need to complete before building the example implementation at the end of this chapter:

- Complete the steps to set up the prerequisites for Amazon SageMaker as described here: https://docs.aws.amazon.com/sagemaker/latest/dg/gs-set-up.html.

- Create a SageMaker notebook instance by following the steps in the following guide: https://docs.aws.amazon.com/sagemaker/latest/dg/howitworks-create-ws.html.

- Create an S3 bucket as described in Chapter 4, in the Building a smart medical transcription application on AWS section under Create an S3 bucket. If you already have an S3 bucket, you can use that instead of creating a new bucket.

- Open the Jupyter notebook interface of the SageMaker notebook instance by clicking on the Open Jupyter link on the Notebook Instances screen.

- In the top-right corner of the Jupyter notebook, click on New and then Terminal.

- Type the following commands on the terminal screen:

$cd SageMaker

$git clone https://github.com/PacktPublishing/Applied-Machine-Learning-for-Healthcare-and-Life-Sciences-using-AWS.git

You should now see a folder named Applied-Machine-Learning-for-Healthcare-and-Life-Sciences-using-AWS.

Note

If you have already cloned the repository in a previous exercise, you should already have this folder. You do not need to do step 6 again.

Once you have completed these steps, you should be all set to execute the steps in the example implementation in the last section of this chapter.

Understanding molecular data

Having a good understanding of molecular properties and structures is extremely critical to determine how they react with each other. These reactions lead to the discovery of new molecules that lead to drug development. Pharmacology is the branch of science that studies such reactions and their impact on the body. Pharmacologists do this by reviewing molecular data stored in a variety of formats. At a very high level, molecules can be divided into two categories, small and large molecules. The distinction between them is not just because of their size. Let’s look at them in more detail.

Small molecules

Small molecules have been the basis of drug development for a very long time. They weigh less than 900 Dalton (Da) (1 Da is equal to 1.66053904x10^-24 grams) and account for more than 90% of drugs on the market today. Drugs based on small molecules are mostly developed through chemical synthesis. Due to their small size, they are easily absorbed into the bloodstream and can reach their biological targets through cell membranes to induce a biological response and can be administered orally as tablets. There are several small molecule databases, such as the small molecule pathway database (SMDB), which contains comprehensive information on more than 600 small molecule metabolic pathways found in humans. Their structures can be determined via well-known techniques such as spectroscopy and X-ray crystallography, which can determine the mean positions of the atoms and the chemical bonds allowing us to infer the structure of the molecule. A common way to represent the structure of the molecule is by using simplified molecular-input line-entry system (SMILES) notation. SMILES allows you to represent molecular structures using a string and is a common feature in small molecule data. SMILES is also useful to create a graph representation of a molecular structure, with atoms representing the nodes and the bonds between them representing the edges of the graph. This type of graph representation of molecular structure allows us to create a chemical graph of the compound and feed that as a feature for downstream analysis of the compound, using graph query language such as Gremlin with data stored in graph databases such as Neo4j.

Large molecules

Large molecules or macromolecules can be anywhere between 3,000 and 150,000 Daltons in size. They include biologics such as proteins that can interact with other proteins and create a therapeutic effect. Large molecules are more complex than their small counterparts and usually need to be injected into the body instead of taken orally. Drug development with large molecules involves engineering cells to bind with site-specific biological targets to induce a more localized response, instead of interfering with the functions of the overall body. This has made biologics more popular for site-specific targeted therapies for diseases such as cancer, where a tumor may be localized to a few areas of the body. The structure of large molecules such as proteins can be determined using technologies such as crystallography and cryo-electron microscopy (cryo-EM), which uses electron beams to capture the image of radiation-sensitive samples in an electron microscope. There are several databases of known protein structures, such as the protein databank (PDB), that researchers can use to study the 3D representations of proteins. More recently, our effort to understand proteins took a huge leap with the announcement of Alphafold by DeepMind in 2021. It is an AI algorithm that can accurately predict the structure of a protein from a sequence of amino acids. This has led to a slew of research targeted toward large molecule therapies that involve structure-based drug design.

With this understanding of molecular data types and their role in drug development, let us now dive into the details of the drug discovery process by looking at its various stages.

Introducing drug discovery and design

The process of drug discovery and design centers around the identification of biological targets. A disease may demonstrate multiple clinical characteristics, and the goal of the drug compound is to modulate the behavior of the biological entity (such as proteins and genes) that can change the clinical behavior of the disease. This is referred to as the target being druggable. The compounds do this by binding to the target and altering its molecular structure. As a result, understanding the physical structure of the molecule is extremely critical in the drug discovery and design process. Cheminformatics is a branch of science that studies the physical structure as well as the properties of molecules, such as absorption, distribution, metabolism, and extraction (ADME). Targets are screened against millions of compounds based on these properties to find the candidates that can be taken through the steps of clinical research and clinical trials. This process is known as target-based drug discovery (TBDD) and has the advantage of researchers being able to target the specific properties of a compound to make it suitable for a target. A contrasting approach to TBDD is phenotypic screening. In this approach, having prior knowledge of the biological target is not necessary. Instead, the process relies on monitoring changes to a specific phenotype. This provides a more natural environment for a drug to interact with a biological molecule to track how it alters the phenotype. However, this method is more difficult as the characteristics of the target are not known. There are multiple methods for target discovery, ranging from simple techniques such as literature survey and competitive analysis to more complex analysis techniques, involving simulations with genomic and proteomic datasets and chromatographic approaches. The interpretation of data generated from these processes requires specialized technology, including high-performance computing clusters with GPUs, image-processing pipelines, and search applications.

In the case of small molecule drug discovery, once a target is identified, it needs to be validated. This is the phase when the target is scrutinized to demonstrate that it does play a critical role in a disease. It is also the step where the drug’s effects on the target are determined. The in-depth analysis during the validation phase involves the creation of biological and compound screening assays. Assays are procedures defined to measure the biological changes as a result of interaction between the compound and the target. The assay should be reproducible and robust to false positives and false negatives by having a balance between selectivity and sensitivity. This helps shortlist a few compounds that are relevant as they demonstrate the desired changes in the drug target. These compounds are referred to as a hit molecule. There could be several hit molecules for a target. The process of screening multiple potential compound libraries for hits is known as high-throughput screening (HTS).

HTS involves scanning through thousands of potential compounds that have the desired effects on a target. HTS uses automation and robotics to speed up this search process by quickly performing millions of assays across entire compound libraries. The assays used in HTS vary in complexity and strategy. The type of assay used in HTS depends on multiple factors, such as the biology of the target, the automation used in the labs, the equipment utilized in the assay, and the infrastructure to support the assay. For example, assays can measure the affinity of a compound to a target (biochemical assays) or use fluorescence-based detection methods to measure the changes in the target (luminescent assays). We are now seeing the increased use of virtual screening, which involves creating simulation models for assays that can screen a large number of compounds. These virtual screening techniques are powered by ML models and have dramatically increased the speed with which new targets can be matched up to potential compounds.

The steps following HTS largely consist of tasks to further refine the hits for potency and test out their efficacy. It involves analyzing ADME properties along with structure analysis using crystallography and molecular modeling. This is typically known as lead optimization and results in a candidate that can be taken through the clinical research phase for drug development.

In contrast to small molecules, therapies involving large molecules are known to target more serious illnesses, such as cancers. As large molecules have a more complicated structure, the process of developing therapies with them involves specialized processes and non-standard equipment. It is also a more costly affair with a larger upfront investment and comes with considerable risks. However, this can be a competitive advantage to a pharmaceutical organization because the steps are not as easy to reproduce by other pharmaceutical organizations. It is also known that cancers can develop resistance to small molecules over a period of time. Such discoveries have led to the formation of a variety of start-ups doing cutting-edge research in CAR T-cell therapy and gene-based therapy. These efforts have made biologics more common in recent years.

While drug discovery has gained momentum with the advancements in recent technology, it is still a costly and time-consuming affair. In contrast, researchers are now able to design a drug based on their knowledge of the biological target. Let’s understand this better.

Understanding structure-based drug design

The process of drug design revolves around creating molecules that have a particular affinity for a target in question and can bind to it structurally. This is done by designing molecules complementary in design to the target molecule. The structure and the knowledge of the target are extremely important in the design process. It involves computer simulation and modeling to synthesize and develop the best candidate compounds that have an affinity for the target. This is largely categorized as computer-aided drug design (CADD) and has become a cost-effective option for designing promising drug candidates in preference to In Vitro methods. With increasing knowledge about the structure of various proteins, more potential drug targets are being identified.

The structure of a target is determined using techniques such as X-ray crystallography or homology modeling. You can also use more advanced techniques such as cryo-EM or AI algorithms such as Alphafold to ascertain the structure of the target. Once the target is identified and its structure is determined, the process aims at determining the possible binding sites on the target. Compounds are then scored based on their ability to bind with the target. The high-scoring ones are developed into assays. This target-compound structure is further optimized with multiple parameters of the compound that determine its effectiveness as a drug. These include parameters such as the stability and toxicity of the compounds. The process is repeated multiple times and aims to determine the structure and optimize the properties of the compound-target complex. After several rounds of optimization, it is highly likely that we will have a compound that will be specific to the target in question.

At the end of the day, drug discovery and design are both iterative processes that try to find the most probable compound that has an affinity to a target. The discovery process does it via search and scan whereas the design process achieves it via optimization of different parameters. Both of them have largely been modernized by the use of ML, and their implementation has become much more common in recent years. Let us now look at various applications of ML on molecular data that have led to advances in drug discovery and design.

Applying ML to molecular data

The discovery of a drug requires a trial-and-error method that involves scanning large libraries of small molecules and proteins using high-performance computing environments. ML can speed up the process by predicting a variety of properties of the molecules and proteins, such as their toxicity and binding affinity. This reduces the search space, thereby allowing scientists to speed up the process. In addition, drug manufacturers are looking at ways to customize drugs to an individual’s biomarkers (also known as precision medicine). ML can speed up these processes by correlating molecular properties to clinical outcomes, which helps in detecting biomarkers from a variety of datasets such as biomedical images, protein sequences, and clinical information. Let us look at a few applications of ML on molecular data.

Molecular reaction prediction

One of the most common applications of ML in drug discovery is the prediction of how two molecules would react with each other. For instance, how would a protein react with a drug compound? These models can be pre-trained on existing data, such as the drug protein interaction (DPI) database, and can be used to predict future unknown reactions in a simulated fashion. We can apply such models for repurposing drugs, a method that encourages using existing drug candidates that were shortlisted for some clinical condition but instead uses them for another condition. Using these reaction simulations, you can get a better idea of how the candidate would react to the new target and, hence, determine whether further testing is needed. This method is also useful for target identification. A target provides binding sites (also known as druggable sites) for candidate molecules to dock. In a typical scenario, the identification of new targets requires the design of complicated assays for multiple proteins. Instead, an in silco approach reduces the time to discover new targets by reducing the number of experiments. A drug target interaction (DTI) model can predict whether a protein and a molecule would react. Another application of this is for biologics design. Such therapies depend on macromolecule reactions such as protein-protein interactions (PPIs). These models attempt to understand the pathogenicity of the host protein and how it interacts with other proteins. An example implementation of this is in the process of vaccine development for known viruses.

Molecular property prediction

ML models can help determine the essential properties of an underlying molecule. These properties help to determine various aspects of a compound as well as a target that could ultimately decide how successful the drug would be. For example, in the case of compounds, you can predict their ADME properties using ML models trained on small molecule data banks. These models can then be used in the virtual screening of millions of compounds during the lead optimization phase. In the initial stages, the models can be used in hit optimization where the molecular properties determine the size, shape, and solubility of the compounds that help to determine compound quality. In the drug design workflow, these properties play the role of parameters in an optimization pipeline powered by ML models. These models can iteratively tune to come up with optimal values of these parameters so that the designed drug has the best chance of being successful. A common application of molecular property prediction is during a quantitative structure- activity relationship (QSAR) analysis. This analysis is typically done at the HTS stage of drug discovery and helps to achieve a good hit rate. The QSAR models help predict the biological activities of a compound based on the chemical properties derived from molecular structure. The models make use of classification and regression models to understand the category of biological activity or its value.

Molecular structure prediction

Another application of ML in drug discovery is in the prediction of structures of both large and small molecules, which is an integral part of structure-based drug design. In the case of macromolecules such as proteins, their structure defines how they function and helps you find which molecules could bind to their binding sites. In the case of small molecules, the structures help determine their kinetic and physical properties, ultimately leading to a hit. Structural properties are also critical in drug design where novel compounds are designed based on their affinity to a target structure. Traditionally, the structure of molecules has been determined using technologies such as mass spectrometry, which aims to determine the molecular mass of a compound. Another approach to determining structure, especially for more complex molecules, is crystallography, which makes use of X-rays to determine the bonding arrangements in a molecule. For macromolecules, a popular technique to determine structure is cryo-electron microscopy (cryo-EM).

While these technologies show a lot of promise, ML has been increasingly used in structure prediction because of its ability to simulate these structures and study them virtually. For example, generative models in ML can generate possible compound structures from known properties that allow researchers to perform bioactivity analysis on a few sets of compounds. Since these compounds are already generated with known characteristics learned by the model, their chances of being successful are higher.

Language models for proteins and compounds

Language models are a type of natural language processing (NLP) technique that allows you to predict whether a given sequence of words is likely to exist in a sentence. They have wide applications in NLP and have been used successfully in applications involving virtual assistants (such as Siri and Alexa), speech-to-text services, translation services, and sentiment analysis. Language models work by generating a probability distribution of the word and picking the ones that have the most validity. The validity is decided by the rules that it learns from text corpora using a variety of techniques. It ranges from approaches such as n-grams for simple sentences to more complex recurrent neural networks (RNNs) that can understand context in natural language. A breakthrough discovery in language models came with the announcement of BERT and GPT models that utilize transformer architecture. They use an attention-based mechanism and can learn context by learning which inputs deserve more attention than others. To know more about transformer architecture, you can refer to the original paper: https://arxiv.org/pdf/1706.03762.pdf.

Just as language models can predict words in a sentence, the same concept has been extended by researchers in the context of proteins and chemicals. Language models can be trained on common public repositories of molecular data such as DrugBank, ChEMBL, and ZINC and learn the sequence of amino acids or compounds. Transformer architecture allows us to easily fine-tune the language models for specific tasks in drug discovery, such as molecule generation, and a variety of classification and regression tasks, such as property prediction and reaction prediction.

The field of drug discovery and design is ripe with innovation, and it will continue to transform the way new therapies and drugs are discovered and designed, fueled by technology and automation. It has the promise to one day find a cure for diseases such as cancer, Alzheimer’s, and HIV/AIDS. Now that we have a good idea of some of the applications of ML in drug discovery and design, let’s dive into the custom container options in SageMaker that allow you to use specialized cheminformatics and bioinformatics libraries for model training.

Introducing custom containers in SageMaker

In Chapter 6 and Chapter 7, we went over various options for training and deploying models on Amazon SageMaker. These options allow you to cover a variety of scenarios that should address most of your ML needs. As you saw, SageMaker makes heavy use of Docker containers to train and host models. By utilizing pre-built SageMaker algorithms and framework containers, you can use SageMaker to train and deploy ML models with ease. Sometimes, however, your need may not be fully addressed by the pre-built containers. This may be because you need specific software or a dependency that cannot be directly addressed by the framework and algorithm containers in SageMaker. This is when you can use the option of bringing your own container to SageMaker. To do this, you need to adapt or create a container that can work with SageMaker. Let’s now dive into the details of how to utilize this option.

Adapting your container for SageMaker training

SageMaker provides a toolkit for training to make it easy to extend pre-built SageMaker Docker images based on your requirements. You can find the toolkit at the following location: https://github.com/aws/sagemaker-training-toolkit.

The toolkit provides wrapper functions and environment variables to train a model using Docker containers and can be easily added to any container to make it work with SageMaker. The Docker file expects two environment variables:

- SAGEMAKER_SUBMIT_DIRECTORY: This is the directory where the Python script for training is located

- SAGEMAKER_PROGRAM: This is the program that will be executed as the entry point for the container

In addition, you can install additional libraries or dependencies into the Docker container using the pip command. You can then build and push the container to the ECR repository in your account. The process for training with the custom container is very similar to that of the built-in containers of SageMaker. To learn more about how to adapt your containers for training with SageMaker, refer to the following link: https://docs.aws.amazon.com/sagemaker/latest/dg/adapt-training-container.html.

Adapting your container for SageMaker inference

As well as a training toolkit, SageMaker provides an inference toolkit. The inference toolkit has the necessary functions to make your inference code work with SageMaker hosting. To learn more about the SageMaker inference toolkit, you can refer to the following documentation: https://github.com/aws/sagemaker-inference-toolkit.

The inference toolkit requires you to create an inference script and a Dockerfile that can import the inference handler, an inference service, and an entry-point script. These are three separate Python files. The job of the inference handler Python script is to provide a function to load the model, pre-process the input data, generate predictions, and process the output data. The inference service is responsible for handling the initialization of the model when the model server starts and is a method to handle all incoming inference requests to the model server. Finally, an entry-point script simply starts the model server by invoking the handler service. In the Dockerfile, we add the model handler script to the /home/model-server/ directory in the container and also specify the entry point to be the entry-point script. You can then build and push the container to the ECR repository in your account. The process for using the custom inference container is very similar to that of the built-in containers of SageMaker. To learn more about how to adapt your containers for inference with SageMaker, refer to the following link: https://docs.aws.amazon.com/sagemaker/latest/dg/adapt-inference-container.html.

With this theoretical understanding of the SageMaker custom containers, let us now utilize these concepts to create a custom container to train a molecular property prediction model on SageMaker.

Building a molecular property prediction model on SageMaker

In the previous chapters, we learned how to use SageMaker training and inference using built-in containers. In this chapter, we will see how we can extend SageMaker to train with custom containers. We will be using a Dockerfile to create a training container for SageMaker using the SageMaker training toolkit. We will then utilize that container to train a molecular property prediction model and see some results of our training job in a Jupyter notebook. We will also see how to test the container locally before submitting a training job to it. This is a handy feature of SageMaker that lets you validate whether your training container is working as expected and helps you debug the errors if needed.

For the purposes of this exercise, we will use a few custom libraries. Here is a list of custom libraries that we will be using:

- RDKit: A collection of cheminformatics and machine-learning software written in C++ and Python: https://github.com/rdkit/rdkit

- Therapeutics Data Commons (TDC): The first unifying framework to systematically access, evaluate, and benchmark ML methods across an entire range of therapeutics: https://pypi.org/project/PyTDC/

- Pandas Flavor: pandas-flavor extends pandas’ extension API in the following ways:

- By adding support for registering methods as well

- By making each of these functions backward compatible with older versions of pandas: https://pypi.org/project/pandas-flavor/

- Deep Purpose: A deep learning-based molecular modeling and prediction toolkit on drug-target interaction prediction, compound property prediction, protein-protein interaction prediction, and protein function prediction (using PyTorch): https://github.com/kexinhuang12345/DeepPurpose

We will install these libraries on a custom training container using pip commands in a Dockerfile. Before we move forward, please familiarize yourselves with these libraries and their usage.

Running the Jupyter Notebook

The notebook for this exercise is saved on GitHub here: https://github.com/PacktPublishing/Applied-Machine-Learning-for-Healthcare-and-Life-Sciences-using-AWS/blob/main/chapter-08/molecular_property_prediction.ipynb:

- Open the Jupyter notebook interface of the SageMaker notebook instance by clicking on the Open Jupyter link on the Notebook Instances screen.

- Open the notebook for this exercise by navigating to Applied-Machine-Learning-for-Healthcare-and-Life-Sciences-using-AWS/chapter-8/ and clicking on molecular_property_prediction.ipynb.

- Follow the instructions in the notebook to complete the exercise.

In this exercise, we will train a molecular property prediction model on SageMaker using a custom training container. We will run the training in two modes:

- Local mode: In this mode, we will test our custom container by running a single model for the Human Intestinal Absorption (HIA) prediction model

- SageMaker training mode: In this mode, we will run multiple ADME models on a GPU on SageMaker

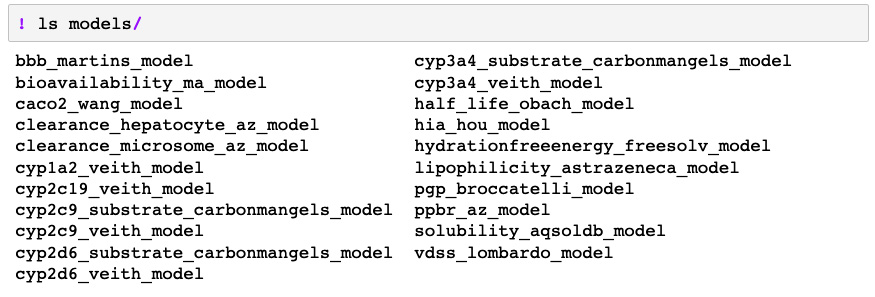

We will then download the trained models locally. At the end of the exercise, you can view the trained models in a directory called models.

Figure 8.1 – Trained models downloaded locally at the end of the SageMaker training job

Once you have concluded the exercise, make sure you stop or delete your SageMaker resources to avoid incurring charges, as described at the following link: https://sagemaker-workshop.com/cleanup/sagemaker.html.

Summary

In this chapter, we summarized the types of molecular data and how they are represented. We understood why it’s important to understand molecular data and its role in the drug discovery and design process. Next, we were introduced to the complex process of drug discovery and design. We looked at a few innovations that have advanced the field over the years. We also looked at some common applications of ML in this field. For the technical portion of this chapter, we learned about the use of custom containers in SageMaker. We saw how this option allows us to run custom packages for bioinformatics and cheminformatics on SageMaker. Lastly, we built an ML model to predict the molecular properties of some compounds.

In Chapter 9, Applying Machine Learning to Clinical Trials and Pharmacovigilance, we will look at how ML can optimize the steps of clinical trials and track the adverse effects of drugs.

Further reading

- Hughes, J.P., Rees, S., Kalindjian, S.B., and Philpott, K.L. Principles of early drug discovery in British Journal of Phamacology (2011): https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3058157/

- Small Molecule: https://www.sciencedirect.com/topics/biochemistry-genetics-and-molecular-biology/small-molecule

- Yasmine S. Al-Hamdani, Péter R. Nagy, Dennis Barton, Mihály Kállay, Jan Gerit Brandenburg, Alexandre Tkatchenko. Interactions between Large Molecules: Puzzle for Reference Quantum-Mechanical Methods: https://arxiv.org/abs/2009.08927

- Dara S, Dhamercherla S, Jadav SS, Babu CM, Ahsan MJ. Machine Learning in Drug Discovery: A Review. Artif Intell Rev. 2022;55(3):1947–1999. doi: 10.1007/s10462-021-10058-4. Epub 2021 Aug 11. PMID: 34393317; PMCID: PMC8356896. https://pubmed.ncbi.nlm.nih.gov/34393317/