Chapter 4. Software testing principles

A crash is when your competitor’s program dies. When your program dies, it is an “idiosyncrasy.” Frequently, crashes are followed with a message like “ID 02.” “ID” is an abbreviation for idiosyncrasy and the number that follows indicates how many more months of testing the product should have had.

Guy Kawasaki

This chapter covers

- The need for software tests

- Types of software tests

- Types of unit tests

Earlier chapters in this book took a pragmatic approach to designing and deploying unit tests. This chapter steps back and looks at the various types of software tests and the roles they play in the application’s lifecycle.

Why would you need to know all this? Because unit testing isn’t something you do out of the blue. In order to become a well-rounded developer, you need to understand unit tests compared to functional, integration, and other types of tests. Once you understand why unit tests are necessary, then you need to know how far to take your tests. Testing in and of itself isn’t the goal.

4.1. The need for unit tests

The main goal of unit testing is to verify that your application works as expected and to catch bugs early. Although functional testing accomplishes the same goal, unit tests are extremely powerful and versatile and offer much more than verifying that the application works. Unit tests

- Allow greater test coverage than functional tests

- Increase team productivity

- Detect regressions and limit the need for debugging

- Give us the confidence to refactor and, in general, make changes

- Improve implementation

- Document expected behavior

- Enable code coverage and other metrics

4.1.1. Allowing greater test coverage

Unit tests are the first type of test any application should have. If you had to choose between writing unit tests and writing functional tests, you should choose the latter. In our experience, functional tests are able to cover about 70 percent of the application code. If you wish to go further and provide more test coverage, then you need to write unit tests.

Unit tests can easily simulate error conditions, which is extremely difficult to do with functional tests (it’s impossible in some instances). Unit tests provide much more than just testing, as explained in the following sections.
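To make the point about error conditions concrete, here is a minimal sketch in plain Java with no test framework. The `RateSource` interface, the `PriceConverter` class, and the fallback rule are hypothetical names invented for this illustration; they aren’t part of any code discussed elsewhere in the book. The stub forces an `IOException` on demand, which would be hard to provoke through the front end of a complete application.

```java
import java.io.IOException;

// Hypothetical collaborator: in production this might call a remote service.
interface RateSource {
    double currentRate() throws IOException;
}

// Hypothetical class under test: falls back to a default rate when the source fails.
class PriceConverter {
    static final double FALLBACK_RATE = 1.0;
    private final RateSource source;

    PriceConverter(RateSource source) {
        this.source = source;
    }

    double convert(double amount) {
        try {
            return amount * source.currentRate();
        } catch (IOException e) {
            // The error path we want to exercise.
            return amount * FALLBACK_RATE;
        }
    }
}

class ErrorConditionDemo {
    public static void main(String[] args) {
        // Stub that always fails, simulating a network outage.
        RateSource failing = () -> { throw new IOException("simulated outage"); };
        // Stub that returns a fixed rate for the normal path.
        RateSource fixed = () -> 2.0;

        System.out.println(new PriceConverter(failing).convert(10.0));
        System.out.println(new PriceConverter(fixed).convert(10.0));
    }
}
```

In a real suite, each of the two calls in `main` would become its own test method with an assertion on the returned value.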

4.1.2. Increasing team productivity

Imagine you’re on a team working on a large application. Unit tests allow you to deliver quality code (tested code) without having to wait for all the other components to be ready. On the other hand, functional tests are more coarse grained and need the full application (or a good part of it) to be ready before you can test it.

4.1.3. Detecting regressions and limiting debugging

A passing unit test suite confirms your code works and gives you the confidence to modify your existing code, whether you’re refactoring or adding and modifying features. As a developer, you’ll get no better feeling than knowing that someone is watching your back and will warn you if you break something.

A suite of unit tests reduces the need to debug an application to find out why something is failing. Whereas a functional test tells you that a bug exists somewhere in the implementation of a use case, a unit test tells you that a specific method is failing for a specific reason. You no longer need to spend hours trying to find the problem.

4.1.4. Refactoring with confidence

Without unit tests, it’s difficult to justify refactoring, because there’s always a relatively high risk that you may break something. Why would you chance spending hours of debugging time (and putting the delivery at risk) only to improve the implementation or change a method name? Unit tests provide the safety net that gives you the confidence to refactor.

Let’s move on with our implementation and try to improve it further.

Throughout the history of computer science, many great teachers have advocated iterative development. Niklaus Wirth, for example, who gave us the now-ancient languages Algol and Pascal, championed techniques like stepwise refinement.

For a time, these techniques seemed difficult to apply to larger, layered applications. Small changes can reverberate throughout a system. Project managers looked to up-front planning as a way to minimize change, but productivity remained low.

The rise of the xUnit framework has fueled the popularity of agile methodologies that once again advocate iterative development. Agile methodologists favor writing code in vertical slices to produce a working use case, as opposed to writing code in horizontal slices to provide services layer by layer.

When you design and write code for a single use case or functional chain, your design may be adequate for this feature, but it may not be adequate for the next feature. To retain a design across features, agile methodologies encourage refactoring to adapt the code base as needed.

But how do you ensure that refactoring, or improving the design of existing code, doesn’t break the existing code? The answer is that unit tests tell you when and where code breaks. In short, unit tests give you the confidence to refactor.

The agile methodologies try to lower project risks by providing the ability to cope with change. They allow and embrace change by standardizing on quick iterations and applying principles like YAGNI (You Ain’t Gonna Need It) and The Simplest Thing That Could Possibly Work. But the foundation on which all these principles rest is a solid bed of unit tests.

4.1.5. Improving implementation

Unit tests are a first-rate client of the code they test. They force the API under test to be flexible and to be unit testable in isolation. You usually have to refactor your code under test to make it unit testable (or use the TDD approach, which by definition spawns code that can be unit tested; see the next chapter).

It’s important to monitor your unit tests as you create and modify them. If a unit test is too long and unwieldy, it usually means the code under test has a design smell and you should refactor it. You may also be testing too many features in one test method. If a test can’t verify a feature in isolation, it usually means the code isn’t flexible enough and you should refactor it. Modifying code to test it is normal.
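As an illustration of modifying code to make it testable, consider a class whose result depends on the time of day. The sketch below is an assumption-laden example, not code from the book: `Greeter` is a hypothetical class, and injecting a `java.time.Clock` is one of several ways to remove the hidden dependency on the system clock so that a test can verify the behavior in isolation.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.LocalTime;
import java.time.ZoneOffset;

// Hypothetical class, refactored so the time source is injected rather than
// read from the system clock inside the method.
class Greeter {
    private final Clock clock;

    Greeter(Clock clock) {
        this.clock = clock;
    }

    String greeting() {
        int hour = LocalTime.now(clock).getHour();
        return hour < 12 ? "Good morning" : "Good afternoon";
    }
}

class GreeterDemo {
    public static void main(String[] args) {
        // A fixed clock makes the result deterministic: 09:00 UTC.
        Clock nineAm = Clock.fixed(Instant.parse("2020-01-01T09:00:00Z"), ZoneOffset.UTC);
        System.out.println(new Greeter(nineAm).greeting());

        // Production code would pass the real clock instead.
        System.out.println(new Greeter(Clock.systemDefaultZone()).greeting());
    }
}
```

Before the refactoring, a test of `greeting()` could pass in the morning and fail in the afternoon; with the injected clock, the test controls the one input that matters.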

4.1.6. Documenting expected behavior

Imagine you need to learn a new API. On one side is a 300-page document describing the API, and on the other are some examples showing how to use it. Which would you choose?

The power of examples is well known. Unit tests are exactly this: examples that show how to use the API. As such, they make excellent developer documentation. Because unit tests match the production code, they must always be up to date, unlike other forms of documentation.

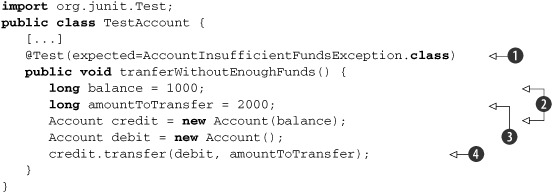

Listing 4.1 illustrates how unit tests help provide documentation. The testTransferWithoutEnoughFunds() method shows that an AccountInsufficientFundsException is thrown when an account transfer is performed without enough funds.

Listing 4.1. Unit tests as automatic documentation

We declare the method as a test method by annotating it with @Test and declare that it must throw the AccountInsufficientFundsException (with the expected parameter). Next, we create a new account with a balance of 1000 and set the amount to transfer. Then we request a transfer of 2000. As expected, the transfer method throws an AccountInsufficientFundsException. If it didn’t, JUnit would fail the test.
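The behavior that this test documents can be sketched in plain Java. The sketch is not the book’s listing: the `Account` class, its constructor, the argument order of `transfer`, and the unchecked exception type are all assumptions made for illustration. The try/catch block plays the role that `@Test(expected = AccountInsufficientFundsException.class)` plays in JUnit 4.

```java
// Hypothetical exception type, named after the one the text mentions.
class AccountInsufficientFundsException extends RuntimeException {
    AccountInsufficientFundsException(String message) {
        super(message);
    }
}

// Hypothetical account class; the real implementation is not shown in the text.
class Account {
    private long balance;

    Account(long balance) {
        this.balance = balance;
    }

    long getBalance() {
        return balance;
    }

    void transfer(long amount, Account target) {
        if (amount > balance) {
            throw new AccountInsufficientFundsException(
                    "balance " + balance + " is less than " + amount);
        }
        balance -= amount;
        target.balance += amount;
    }
}

class TransferCheck {
    // Returns true when the transfer is rejected, which is the outcome the
    // test described above requires.
    static boolean transferWithoutEnoughFundsIsRejected() {
        long balance = 1000;
        long amountToTransfer = 2000;
        Account source = new Account(balance);
        Account target = new Account(0);
        try {
            source.transfer(amountToTransfer, target);
            return false; // no exception: JUnit would fail the test
        } catch (AccountInsufficientFundsException expected) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(transferWithoutEnoughFundsIsRejected());
    }
}
```

Someone reading only the check learns the rule without opening `Account`: a transfer larger than the balance is refused with a specific exception.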

4.1.7. Enabling code coverage and other metrics

Unit tests tell you, at the push of a button, if everything still works. Furthermore, unit tests enable you to gather code-coverage metrics (see the next chapter) showing, statement by statement, what code the tests caused to execute and what code the tests did not touch. You can also use tools to track the progress of passing versus failing tests from one build to the next, and you can monitor performance and cause a test to fail if its performance has degraded compared to a previous build.

4.2. Test types

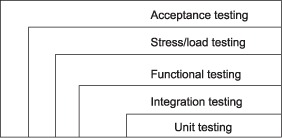

Figure 4.1 outlines our five categories of software tests. There are other ways of categorizing software tests, but we find these most useful for the purposes of this book. Please note that this section is discussing software tests in general, not just the automated unit tests covered elsewhere in the book.

Figure 4.1. The five types of tests

In figure 4.1, the outermost tests are broadest in scope. The innermost tests are narrowest in scope. As you move from the inner boxes to the outer boxes, the software tests get more functional and require that more of the application be present.

Next, we take a look at the general test types. Then, we focus on the types of unit tests.

4.2.1. The four types of software tests

We’ve mentioned that unit tests each focus on a distinct unit of work. What about testing different units of work combined into a workflow? Will the result of the workflow do what you expect? How well will the application work when many people are using it at once? Different kinds of tests answer these questions; we categorize them into four varieties:

- Integration tests

- Functional tests

- Stress and load tests

- Acceptance tests

Let’s look at each of the test types, starting with the innermost after unit testing and working our way out.

Integration software testing

Individual unit tests are essential to quality control, but what happens when different units of work are combined into a workflow? Once you have the tests for a class up and running, the next step is to hook up the class with other methods and services. Examining the interaction between components, possibly running in their target environment, is the job of integration testing. Table 4.1 describes the various cases under which components interact.

Table 4.1. Testing how objects, services, and subsystems interact

| Interaction | Test description |
|---|---|
| Objects | The test instantiates objects and calls methods on these objects. |
| Services | The test runs while a servlet or EJB container hosts the application, which may connect to a database or attach to any other external resource or device. |
| Subsystems | A layered application may have a front end to handle the presentation and a back end to execute the business logic. Tests can verify that a request passes through the front end and returns an appropriate response from the back end. |

Just as more traffic collisions occur at intersections, the points where objects interact are major contributors of bugs. Ideally, you should define integration tests before you write application code. Being able to code to the test dramatically increases a programmer’s ability to write well-behaved objects.

Functional software testing

Functional tests examine the code at the boundary of its public API. In general, this corresponds to testing application use cases.

Developers often combine functional tests with integration tests. For example, a web application contains a secure web page that only authorized clients can access. If the client doesn’t log in, then trying to access the page should result in a redirect to the login page. A functional unit test can examine this case by sending an HTTP request to the page and verifying that a redirect response (HTTP status code 302) comes back.
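The redirect check described above can be sketched with nothing but the JDK. Everything application-specific here is an assumption: the `/secure` and `/login` paths are invented, and the tiny embedded server only stands in for the real web application, which a genuine functional test would target instead. The important detail is that automatic redirect following is switched off, so the test observes the 302 itself rather than the login page.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.InetSocketAddress;
import java.net.URI;

class RedirectCheck {
    // Starts a stand-in server whose /secure page redirects anonymous
    // clients to /login. Port 0 asks the OS for any free port.
    static HttpServer startServer() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/secure", exchange -> {
            exchange.getResponseHeaders().add("Location", "/login");
            exchange.sendResponseHeaders(302, -1); // -1: no response body
            exchange.close();
        });
        server.start();
        return server;
    }

    // Requests the page without logging in and returns the raw status code.
    static int statusOf(String url) throws IOException {
        HttpURLConnection connection =
                (HttpURLConnection) URI.create(url).toURL().openConnection();
        connection.setInstanceFollowRedirects(false); // observe the 302 itself
        try {
            return connection.getResponseCode();
        } finally {
            connection.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        HttpServer server = startServer();
        try {
            int port = server.getAddress().getPort();
            int status = statusOf("http://127.0.0.1:" + port + "/secure");
            System.out.println(status == HttpURLConnection.HTTP_MOVED_TEMP);
        } finally {
            server.stop(0);
        }
    }
}
```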

Depending on the application, you can use several types of functional tests, as shown in table 4.2.

Table 4.2. Testing frameworks, GUIs, and subsystems

| Application type | Functional test description |
|---|---|
| The application uses a framework. | Functional testing within a framework focuses on testing the framework API (from the point of view of end users or service providers). |
| The application has a GUI. | Functional testing of a GUI verifies that all features can be accessed and provide expected results. The tests access the GUI directly, which may in turn call several other components or a back end. |
| The application is made up of subsystems. | A layered system tries to separate systems by roles. There may be a presentation subsystem, a business logic subsystem, and a data subsystem. Layering provides flexibility and the ability to access the back end with several different front ends. Each layer defines an API for other layers to use. Functional tests verify that the API contract is enforced. |

Stress testing

How well will the application perform when many people are using it at once? Most stress tests examine whether the application can process a large number of requests within a given period. Usually, you implement this with software like JMeter,[1] which automatically sends preprogrammed requests and tracks how quickly the application responds. These tests usually don’t verify the validity of responses, which is why we have the other tests. Figure 4.2 shows a JMeter throughput graph.

Figure 4.2. A JMeter throughput graph

You normally perform stress tests in a separate environment, typically more controlled than a development environment. The stress test environment should be as close as possible to the production environment; if not, the results won’t be useful.
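To show the basic mechanics behind such a tool, here is a deliberately small sketch. It is in no way a substitute for JMeter: the `LoadSketch` class and its `runLoad` method are hypothetical, the “request” is just a `Runnable`, and the numbers it prints on a development machine say little about production behavior. It only illustrates the idea of firing many preprogrammed requests concurrently and measuring how long they take.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

class LoadSketch {
    // Fires requestsPerClient calls from each of `clients` concurrent threads
    // and returns how many calls completed without throwing.
    static int runLoad(int clients, int requestsPerClient, Runnable request)
            throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(clients);
        AtomicInteger completed = new AtomicInteger();
        try {
            List<Callable<Void>> tasks = new ArrayList<>();
            for (int c = 0; c < clients; c++) {
                tasks.add(() -> {
                    for (int i = 0; i < requestsPerClient; i++) {
                        try {
                            request.run();
                            completed.incrementAndGet();
                        } catch (RuntimeException e) {
                            // A failed request is simply not counted.
                        }
                    }
                    return null;
                });
            }
            pool.invokeAll(tasks); // blocks until every client has finished
        } finally {
            pool.shutdown();
        }
        return completed.get();
    }

    public static void main(String[] args) throws InterruptedException {
        long start = System.nanoTime();
        // Stand-in for a real request to the application.
        int done = runLoad(8, 1_000, () -> Math.sqrt(42.0));
        double seconds = (System.nanoTime() - start) / 1e9;
        System.out.printf("%d requests in %.3f s%n", done, seconds);
    }
}
```

Like the stress tests described above, this sketch counts completed requests and elapsed time but doesn’t check that any response is correct.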

Let’s prefix our quick look at performance testing with the often-quoted number-one rule of optimization: “Don’t do it.” The point is that before you spend valuable time optimizing code, you must have a specific problem that needs addressing. That said, let’s proceed to performance testing.

Aside from stress tests, you can perform other types of performance tests within the development environment. A profiler can look for bottlenecks in an application, which the developer can try to optimize. You must be able to prove that a specific bottleneck exists and then prove that your changes remove the bottleneck.

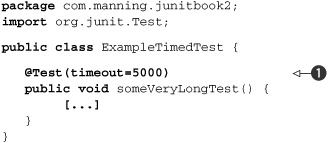

Unit tests can also help you profile an application as a natural part of development. With JUnit, you can create a performance test to match your unit test. You might want to assert that a critical method never takes too long to execute. Listing 4.2 shows a timed test.

Listing 4.2. Enforcing a timeout on a method with JUnit

The example uses the timeout parameter on the @Test annotation to set a timeout in milliseconds on the method. If this method takes more than 5,000 milliseconds to run, the test will fail.

An issue with this kind of test is that you may need to update the timeout value when the underlying hardware changes, the OS changes, or the test environment changes to or from running under virtualization.
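In JUnit 4, the whole mechanism is a single annotation parameter, `@Test(timeout = 5000)`. For readers curious about what such a check involves, the following plain-Java sketch shows roughly the same idea: run the work on another thread and stop waiting once the limit has passed. The `TimedCheck` class and `finishesWithin` method are hypothetical helpers written for this illustration, not part of JUnit.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

class TimedCheck {
    // Returns true if the task finishes within timeoutMillis, false otherwise.
    static boolean finishesWithin(long timeoutMillis, Runnable task)
            throws InterruptedException, ExecutionException {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        Future<?> result = executor.submit(task);
        try {
            result.get(timeoutMillis, TimeUnit.MILLISECONDS);
            return true;
        } catch (TimeoutException e) {
            result.cancel(true); // interrupt the overrunning task
            return false;
        } finally {
            executor.shutdownNow();
        }
    }

    public static void main(String[] args) throws Exception {
        // Uses the 5,000 ms limit from the text; the task is a trivial stand-in.
        System.out.println(finishesWithin(5_000, () -> Math.sqrt(42.0)));
    }
}
```

Passing the limit as a parameter, or reading it from configuration, is one way to soften the maintenance issue mentioned above when the hardware or test environment changes.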

Acceptance software testing

It’s important that an application perform well, but the application must also meet the customer’s needs. Acceptance tests are our final level of testing. The customer or a proxy usually conducts acceptance tests to ensure that the application has met whatever goals the customer or stakeholder defined.

Acceptance tests are a superset of all other tests. Usually they start as functional and performance tests, but they may include subjective criteria like “ease of use” and “look and feel.” Sometimes, the acceptance suite may include a subset of the tests run by the developers, the difference being that this time the customer or QA team runs the tests.

For more about using acceptance tests with an agile software methodology, visit the wiki site regarding Ward Cunningham’s fit framework (http://fit.c2.com/).

4.2.2. The three types of unit tests

Writing unit tests and production code takes place in tandem, ensuring that your application is under test from the beginning. We encourage this process and urge programmers to use their knowledge of implementation details to create and maintain unit tests that can be run automatically in builds. Using your knowledge of implementation details to write tests is also known as white box testing.

Your application should undergo other forms of testing, starting with unit tests and finishing with acceptance tests, as described in the previous section.

As a developer, you want to ensure that each of your subsystems works correctly. As you write code, your first tests will probably be logic unit tests. As you write more tests and more code, you’ll add integration and functional unit tests. At any one time, you may be working on a logic unit test, an integration unit test, or a functional unit test. Table 4.3 summarizes these unit test types.

Table 4.3. Three flavors of unit tests: logic, integration, and functional

| Test type | Description |
|---|---|
| Logic unit test | A test that exercises code by focusing on a single method. You can control the boundaries of a given test method using mock objects or stubs (see part 2 of the book). |
| Integration unit test | A test that focuses on the interaction between components in their real environment (or part of the real environment). For example, code that accesses a database has tests that effectively call the database (see chapters 16 and 17). |
| Functional unit test | A test that extends the boundaries of integration unit testing to confirm a stimulus response. For example, a web application contains a secure web page that only authorized clients can access. If the client doesn’t log in, then trying to access the page should result in a redirect to the login page. A functional unit test can examine this case by sending an HTTP request to the page to verify that a redirect (HTTP status code 302) response code comes back. |

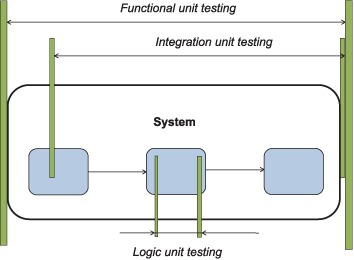

Figure 4.3 illustrates how these three flavors of unit tests interact.

Figure 4.3. Interaction among the three unit test types: functional, integration, and logic

The sliders define the boundaries between the types of unit tests. You need all three types of tests to ensure your code works. Using this type of testing will increase your test code coverage, which will increase your confidence in making changes to the existing code base while minimizing the risk of introducing regression bugs.

Strictly speaking, functional unit tests aren’t pure unit tests, but neither are they pure functional tests. They’re more dependent on an external environment than pure unit tests are, but they don’t test a complete workflow, as expected by pure functional tests. We put functional unit tests in our scope because they’re often useful as part of the battery of tests run in development.

A typical example is the StrutsTestCase framework (http://strutstestcase.sourceforge.net/), which provides functional unit testing of the runtime Struts configuration. These tests tell a developer that the controller is invoking the appropriate software action and forwarding to the expected presentation page, but they don’t confirm that the page is present and renders correctly.

Having examined the various types of unit tests, we now have a complete picture of application testing. We develop with confidence because we’re creating tests as we go, and we’re running existing tests as we go to find regression bugs. When a test fails, we know exactly what failed and where, and we can then focus on fixing each problem directly.

4.3. Black box versus white box testing

Before we close this chapter, we focus on one other categorization of software tests: black box and white box testing. This categorization is intuitive and easy to grasp, but developers often forget about it. We start by exploring black box testing, with a definition.

Definition

Black box test—A black box test has no knowledge of the internal state or behavior of the system. The test relies solely on the external system interface to verify its correctness.

As the name of this methodology suggests, we treat the system as a black box; imagine it with buttons and LEDs. We don’t know what’s inside or how the system operates. All we know is that by providing correct input, the system produces the desired output. All we need to know in order to test the system properly is the system’s functional specification. The early stages of a project typically produce this kind of specification, which means we can start testing early. Anyone can take part in testing the system—a QA engineer, a developer, or even a customer.

The simplest form of black box testing would be to manually mimic user actions on the user interface. Another, more sophisticated approach would be to use a tool for this task, such as HttpUnit, HtmlUnit, or Selenium. We discuss most of these tools in the last part of the book.

At the other end of the spectrum is white box testing, sometimes called glass box testing. In contrast to black box testing, we use detailed knowledge of the implementation to create tests and drive the testing process. Not only is knowledge of a component’s implementation required, but also of how it interacts with other components. For these reasons, the implementers are the best candidates to create white box tests.

Which one of the two approaches should you use? Unfortunately, there’s no correct answer, and we suggest that you use both approaches. In some situations, you’ll need user-centric tests, and in others, you’ll need to test the implementation details of the system. Next, we present pros and cons for both approaches.

User-centric approach

We know that there is tremendous value in customer feedback, and one of our goals from Extreme Programming is to “release early and release often.” But we’re unlikely to get useful feedback if we just tell the customer, “Here it is. Let me know what you think.” It’s far better to get the customer involved by providing a manual test script to run through. Working through the script makes customers think about the application, which also helps them clarify what the system should do.

Testing difficulties

Black box tests are more difficult to write and run[2] because they usually deal with a graphical front end, whether a web browser or desktop application. Another issue is that a valid result on the screen doesn’t always mean the application is correct. White box tests are usually easier to write and run, but the developers must implement them.

[2] Black box testing is getting easier with tools like Selenium and HtmlUnit, which we describe in chapter 12.

Test coverage

White box testing provides better test coverage than black box testing. On the other hand, black box tests can bring more value than white box tests. We focus on test coverage in the next chapter.

Although these test distinctions can seem academic, recall that divide and conquer doesn’t have to apply only to writing production software; it can also apply to testing. We encourage you to use these different types of tests to provide the best code coverage possible, thereby giving you the confidence to refactor and evolve your applications.

4.4. Summary

The pace of change is increasing. Product release cycles are getting shorter, and we need to react to change quickly. In addition, the development process is shifting—development as the art of writing code isn’t enough. Development must be the art of writing complete and tested solutions.

To accommodate rapid change, we must break with the waterfall model where testing follows development. Late-stage testing doesn’t scale when change and swiftness are paramount.

When it comes to unit testing an application, you can use several types of unit tests: logic, integration, and functional unit tests. All are useful during development and complement each other. They also complement the other software tests that are performed by quality assurance personnel and by the customer.

In the next chapter, we continue to explore the world of testing. We present best practices, like measuring test coverage, writing testable code, and practicing test-driven development (TDD).